A Survey on Offloading in Federated Cloud-Edge-Fog Systems with Traditional Optimization and Machine Learning

Abstract

The huge amount of data generated by the Internet of things (IoT) devices needs the computational power and storage capacity provided by cloud, edge, and fog computing paradigms. Each of these computing paradigms has its own pros and cons. Cloud computing provides enhanced data storage and computing power but causes high communication latency. Edge and fog computing provide similar services with lower latency but with limited capacity, capability, and coverage. A single computing paradigm cannot fulfil all the requirements of IoT devices and a federation between them is needed to extend their capacity, capability, and services. This federation is beneficial to both subscribers and providers and also reveals research issues in traffic offloading between clouds, edges, and fogs. Optimization has traditionally been used to solve the problem of traffic offloading. However, in such a complex federated system, traditional optimization cannot keep up with the strict latency requirements of decision making, ranging from milliseconds to sub-seconds. Machine learning approaches, especially reinforcement learning, are consequently becoming popular because they can quickly solve offloading problems in dynamic environments with large amounts of unknown information. This study provides a novel federal classification between cloud, edge, and fog and presents a comprehensive research roadmap on offloading for different federated scenarios. We survey the relevant literature on the various optimization approaches used to solve this offloading problem, and compare their salient features. We then provide a comprehensive survey on offloading in federated systems with machine learning approaches and the lessons learned as a result of these surveys. Finally, we outline several directions for future research and challenges that have to be faced in order to achieve such a federation.

Index Terms:

Offloading, cloud computing, edge computing, fog computing, federation, optimization, machine learning

I Introduction

There are many computing paradigms which provide computational power and storage services for the huge amounts of data generated by an ever-increasing number of heterogeneous devices. Three of the most well-known and widely adopted computing paradigms are cloud, edge, and fog computing. The terms cloud, edge, and fog represent three computing tiers of cloud, edge, and fog computing systems.

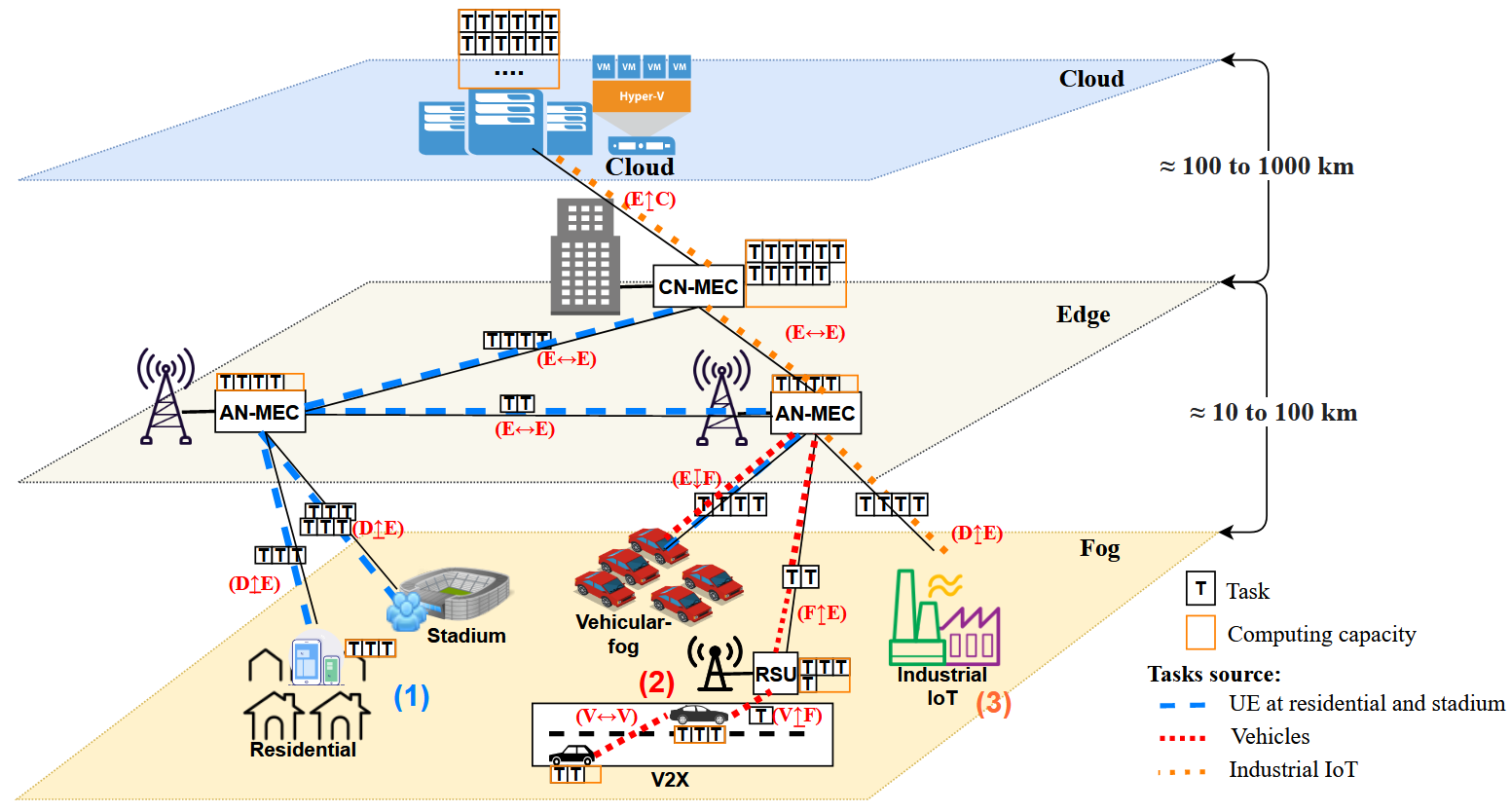

Figure 1 shows that the cloud-edge-fog system consists of three tiers. (a) Cloud tier: the top tier is a cloud system [1] that embodies the cloud computing paradigm, which has been the most well-known and widely adopted computing paradigm for more than a decade because of attractive features such as scalability, rapid elasticity, resource pooling, cost saving, and easy maintenance. This tier consists of different clouds, such as Google and Amazon, which mainly deal with industrial big data, business logic, analytical databases, data warehousing, and so on. (b) Edge tier: the middle tier is an edge system [2] that embodies the edge computing paradigm. Edge computing has its origins with the European Telecommunications Standards Institute (ETSI), which proposed virtualizing cloud computing capabilities within the networks of mobile operators. An edge server can be deployed behind a cellular system’s base station and central office. This tier includes different service providers such as Verizon, T-Mobile, AT&T, Chunghwa Telecom, and so on, and consists of local network assets, micro-data centers, central offices, base stations, etc. (c) Fog tier: the bottom tier is a fog system [3] or IoT system consisting of mobile user devices (e.g., smartphones, tablets, and laptops), smart vehicles forming vehicular-fogs [4], and IoT devices such as industrial actuators, wearable devices, and smart sensors. It covers real-time data processing on industrial PCs, process-specific applications and autonomous equipment, groups of local computing devices, electronic vehicles, etc.

I-A The Federation: Cloud, Edge, and Fog

The Internet of Things (IoT) devices that have taken the world by storm need computational power and storage capacity for the huge amount of data generated by them so as to provide services to their subscribers [5]. Cloud, edge, and fog computing are the potential paradigms that could fulfil the demand of subscribers [6]. Cloud computing is the on-demand availability of computer system resources, especially data storage and computing power, without the need for direct active management by a user [1]. However, cloud computing introduces high communication latency as its servers are far from end-users or subscribers. A cloud computing paradigm is not suitable for some applications with very strict communication latency limitations. This is where edge and fog computing models play a crucial role in providing similar services with lower latency [7][8].

Again, all these computing paradigms, i.e., cloud, edge, and fog, have their own limitations in terms of capacity, capability, coverage, storage, and latency. A single computing paradigm cannot by itself fulfil the diverse requirements of a vast number of traditional and heterogeneous IoT devices. For example, a user might need to use two different applications at the same time, one of which is latency sensitive while the other is computation intensive. In this case, the user would require the services provided by both cloud and edge or fog [9]. Also, if a cloud customer needs some extra service that is not available in that cloud, then the cloud must try to arrange that service for the customer without delay so as to keep the customer satisfied. The cloud may otherwise lose the trust of the customer and, in some cases, may lose the customer, which may affect its business both financially and in reputation. This is where a federation between multiple computing paradigms can play a key role in resolving these issues. Such a federation benefits not only subscribers but also providers. A subscriber will be able to access the services provided by different computing paradigms without having to buy multiple subscriptions. Providers, on the other hand, would be able to extend their capacity, capability, and coverage without losing subscribers to other providers.

I-B Offloading in Federated Systems

A federation between multiple computing paradigms gives rise to many different opportunities and challenges such as authentication, access control, resource sharing, and traffic offloading. In this work, we focus on offloading in a federated environment where cloud, edge, and fog offload traffic to each other. Such offloading is basically a transfer of tasks that are resource intensive to a separate platform in order to perform a task in a better way. Such offloading becomes necessary when a task assigned to a service provider exceeds its computing resources and has to be offloaded to another service provider that can provide the required computing power. Offloading is thus required in order to fulfil different constraints under different situations. Some of the important constraints to be overcome are latency, load balancing, privacy, and storage constraints.

There can be two types of offloading in a federated system, intra-domain and inter-domain offloading, as there are multiple domains in such a federation. Intra-domain offloading involves traffic offloaded between entities belonging to the same tier, i.e., cloud-to-cloud, edge-to-edge, or fog-to-fog, while inter-domain offloading involves entities belonging to different tiers, such as cloud-to-edge, cloud-to-fog, or edge-to-fog.

Optimization has traditionally been used effectively to offload traffic in single networks [10] or in a federation treated as a single network, where it provides the optimal offloading ratio that reduces the overall cost of the network. Although traditional optimization has been used for years, it takes much time to generate decisions because of a network’s complexity and the very large number of variables involved. Algorithms for non-convex problems in traditional optimization perform an exhaustive search to find an optimal solution, which takes much time to converge [11]. Modern applications are latency sensitive and cannot afford such delays in offloading decisions, as the control and data planes need a decision within milliseconds to sub-seconds. In the current era, optimization solutions for quick offloading decisions are becoming obsolete, and machine learning approaches are taking the place of traditional optimization in complex network systems because of their faster response times.

The machine learning approach has an advantage over the traditional optimization approach in such complicated federated systems because, unlike traditional optimization, machine learning does not require complete knowledge of the system, and it can quickly solve offloading problems in which much information is unknown. Among the various machine learning approaches, reinforcement learning (RL) is the most suitable for offloading decisions because RL does not need a data set and can learn directly from the environment [12]. This makes RL suitable for offloading decisions in a dynamic environment with much unknown information. It also gives RL an advantage over the traditional optimization approach, because in such complex systems traditional optimization may not be able to converge to an optimal solution and may instead have to rely on heuristics. Traditional optimization would also take much more time for decision making than RL because of exhaustive searching. When we consider offloading in a complex federated environment together with traditional optimization, machine learning, and reinforcement learning, many research opportunities and challenges arise.
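To make the idea of learning directly from the environment concrete, the following is a minimal sketch of an epsilon-greedy bandit, the simplest reinforcement learning setting. It is not a method from any of the surveyed papers. The target names, the latency values in `HYPOTHETICAL_LATENCY_MS`, the noise level, and the function name `learn_offloading_target` are all assumptions made purely for illustration.

```python
import random


def learn_offloading_target(mean_latency_ms, steps=2000, epsilon=0.1, seed=0):
    """Epsilon-greedy learning of the lowest-latency offloading target.

    mean_latency_ms is hidden from the learner: it is only used to simulate
    the latency observed after each offloading decision (an assumption
    standing in for a real environment).
    """
    rng = random.Random(seed)
    targets = list(mean_latency_ms)
    q = {t: 0.0 for t in targets}  # estimated reward (negative latency)
    n = {t: 0 for t in targets}    # how often each target has been tried

    for _ in range(steps):
        if rng.random() < epsilon:
            target = rng.choice(targets)      # explore a random target
        else:
            target = max(targets, key=q.get)  # exploit the current estimate
        observed = rng.gauss(mean_latency_ms[target], 2.0)  # simulated latency
        reward = -observed                    # lower latency, higher reward
        n[target] += 1
        q[target] += (reward - q[target]) / n[target]  # incremental mean

    return max(targets, key=q.get), q


# Hypothetical mean latencies in milliseconds, chosen only for this sketch.
HYPOTHETICAL_LATENCY_MS = {"fog": 30.0, "edge": 12.0, "cloud": 50.0}

best, estimates = learn_offloading_target(HYPOTHETICAL_LATENCY_MS)
print(best, estimates)
```

The learner never reads the latency table directly; it only sees the outcome of each decision, which is the property the text attributes to RL. A realistic offloading agent would also need a state (load, channel quality, queue length) and therefore a full RL formulation rather than a stateless bandit.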

We summarize these various research opportunities such as V2X, fog-fog federation, mobility in vehicular fog, scaling, resource allocation, centralized vs. distributed federation, etc. below. We also provide some insight into the important challenges that will need to be faced by the operators to deploy this kind of federation such as redundancy, fault tolerance, SLA, reliability, geo-diversity, performance, security, and interoperability between entities of the different domains in a federated environment.

| References | Coverage (Cloud / Edge / Fog / Vehicular-fog / Device) | Federation model | Optimization | Focus of the survey |
|---|---|---|---|---|
| [13] | ✓ | | | Data offloading techniques in cellular networks |
| [14] | ✓ | | | Offload techniques and management in wireless access and core networks |
| [17] | ✓ | | | Traffic offloading in heterogeneous cellular network |
| [15] | ✓ | | | Opportunistic Offloading |
| [16] | ✓ | | | Mobile data offloading technologies |
| [18] | ✓ | | | Computation offloading for mobile systems |
| [19] | ✓ | | | Security and privacy challenges in mobile cloud computing |
| [20] | ✓ | | | Adaptation techniques in computation offloading |
| [21] | | Single | | Architecture and Computation Offloading |
| [23] | | | | Multi-objective decision-making for mobile cloud offloading |
| [25] | ✓ | | | A Survey and taxonomy on task offloading for edge-cloud computing |
| [26] | ✓ | | | Computation offloading for vehicular environments |
| [9] | | | | Computation offloading in edge-cloud systems |
| [22] | ✓ | | | Offloading in fog computing: Enabling technologies |
| [24] | ✓ | | | Data offloading techniques in V2X networks |
| [27] | ✓ | | T | Computation offloading modeling for edge computing |
| [12] | ✓ | | ML | Machine learning-based approaches in mobile edge computing |
| Our | ✓ ✓ ✓ ✓ ✓ | Multiple | T/ML | Offloading in federated cloud-edge-fog systems |

T: Traditional; ML: Machine Learning

I-C Overview of Surveys

In this section, we discuss recent works that survey offloading in federated systems, as well as the importance of our survey. Table I compares these offloading surveys in terms of their coverage, federation model, optimization approach, and focus.

The authors of [13, 14, 17, 15, 16] discussed traffic and data offloading between cellular, WiFi, and opportunistic networks, but did not consider computation offloading in a federated system such as an edge-cloud system. Rebecchi et al. [13] reviewed data offloading approaches in cellular systems with WiFi environments and categorized them based on their latency requirements. Maallawi et al. [14] surveyed offloading and management approaches in wireless access and in core networks. Their objective was to address providers’ problems such as radio access scheduling, decreasing revenue per user, and coverage. Chen et al. [17] surveyed traffic offloading in heterogeneous cellular networks, including small cells, WiFi networks, and opportunistic networks, and [15] focused on the algorithm for selecting the optimal subset of offloading nodes in an opportunistic network, which would allow a node to offload traffic and computation tasks to another node. This kind of D2D offloading is beneficial to cellular operators and users in terms of monetary cost. Huan et al. [16] surveyed mobile data offloading, which involves small cells, WiFi networks, opportunistic networks, and heterogeneous networks. The pros and cons of each of these networks are also detailed.

Computation offloading between mobile devices and the cloud is discussed in [18, 19, 20]. Kumar et al. [18] categorized offloading techniques based on the decision characteristics and applications. The security and privacy challenges in mobile cloud computing are discussed in [19]. Offloading techniques that adapt to environmental variation, including applications, networks, execution platforms, and cloud management, are summarized in [20].

Edge-cloud system offloading was surveyed in [21, 23, 25, 26, 9, 22, 24, 27, 12]. Mach et al. [21] discussed mobile edge-cloud system architectures and considered computation offloading, resource allocation, and mobility management. Wu et al. [23] discussed multi-objective offloading, which was motivated by large heterogeneous systems such as mobile edge computing. Response time and energy consumption were their two main objectives. Offloading criteria were categorized into what, when, where, and how to offload. The taxonomy of edge-cloud offloading was categorized in [25], based on task type, offloading scheme, objectives, device mobility, and multi-hop cooperation. De et al. [26] presented a taxonomy of V2X system offloading, classified by communication standard, problem, and experiment.

Fog-edge-cloud offloading was discussed in [9, 22, 24, 27, 12]. Jiang et al. [9] surveyed and discussed state-of-the-art computational offloading in mobile edge computing. Aazam et al. [22] discussed the offloading technologies in fog computing for IoT. The survey of Zhou et al. [24] focused on vehicular offloading, which included vehicle-to-vehicle, vehicle-to-infrastructure, and vehicle-to-everything, with a brief discussion of the architecture design, algorithm, and problem formulation. Lin et al. [27] focused on offloading modeling, which included communication, computation, energy harvesting, and channel modeling. Shakarami et al. [12] classified machine learning-based offloading into approaches such as supervised ML, unsupervised ML, and reinforcement learning (RL).

None of these surveys details a federation between cloud, edge, fog, and vehicular fog. Each combination of such a federation has different characteristics and offloading directions which leads to complex issues. In most of the surveys traditional optimization was used to optimize the offloading decision in a federation. Traditional optimization takes a long time to converge in such a complex federation system. By contrast, an offloading decision must be rapidly determined by the control plane. ML-based approaches have recently become popular to solve offloading optimization problems in such a complex federation system with fast response times. This survey focuses on edge-cloud federation offloading and covers state-of-the-art offloading approaches that use ML.

I-D Contribution

The major contributions of this paper are as follows. First, we discuss the classification of federations between cloud, edge, and fog systems, followed by a classification of the possible offloading techniques between the entities of a federated system. We discuss the current research status of different federated architectures and offloading techniques, survey offloading based on traditional optimization and machine learning approaches, and make a comparative study of both approaches. Finally, we discuss some key research challenges associated with task offloading and point to possible future research directions.

The rest of this paper is organized as follows. Section II describes the fog-edge-cloud federation and its classification. Section III presents offloading, the classification of offloading, and the current research status of federated architectures and offloading. The survey on offloading is detailed in Section IV, which also classifies the approaches into traditional optimization and machine learning. Lessons learned from the survey are discussed in Section V. The research opportunities and challenges are presented in Section VI, and the conclusions of this survey are given in Section VII.

II A Federation

A federation can be defined as the collection of clouds that cooperate to provide resources requested by users [28]. Stated another way, a cloud can provide computing resources wholesale or rent to another cloud provider [29]. A federation can render the cloud a user and resource provider at the same time [30]. A customer’s request submitted to one cloud can be fulfilled by another cloud. A cloud provides capacity and coverage, but for latency reduction and fault tolerance, a cloud needs edge and fog. Likewise, fog and edge need the service of a cloud to increase their capacity and coverage.

Cloud, edge, and fog computing paradigms provide different services to users or subscribers, depending on their limitations and capacity [31]. Since subscribers have different demands and service requirements, no single paradigm may offer all the services needed to fulfil every user’s needs, because each computing paradigm has its limitations [32]. There is thus a need for a “federation” between different service providers to cope with users’ heterogeneous requirements and to increase the capacity, coverage, capability, and fault tolerance of service providers. For example, in a smart city environment, different users or IoT devices have different requirements that may not be addressed by a single service provider. A federation then comes into the picture to fulfil the various demands. The benefits of the federation are twofold, i.e., they arise from both the subscriber’s and the provider’s perspectives. A subscriber does not have to subscribe to the services of all providers but gets the services of all of them by subscribing to just one. Subscribers do not have to keep multiple accounts and do not have to pay multiple providers. On the other hand, a provider will not lose a customer just because it cannot provide a particular service.

II-A Federation vs. Non-federation

A non-federated scenario is one where a service provider cannot share its resources with other service providers, and it can neither lend nor rent its surplus resources to others. In such a scenario, it is difficult to handle the dynamic demands of users, and the service provider may face issues like lock-in [33][34][35] and a single point of failure [36][37]. Lock-in is one of the most cited and controversial obstacles to the widespread adoption of cloud computing by enterprises [38]. It is also risky for a customer to be tied to a single vendor because that vendor might raise prices, go out of business, become unreliable, or fail to keep up with technological progress.

Different service providers, such as cloud, edge, or fog, provide different services to their subscribers depending on their limitations and capacity [39]. Again, a subscriber of a service provider may have different demands at different times, which the service provider may not always be able to fulfil. This can be understood with the help of an example of a smart city where there would be different types of IoT devices, and each type would have its own requirements that a single IoT service provider cannot fulfil. In such a scenario, the provider will be able to provide all sorts of IoT services after federating with other providers. For IoT deployments, a federated environment is thus more beneficial than a non-federated one.

II-B Classification of a Federation

With federation technology, the users or subscribers of different service providers get different benefits. With this technology, different service providers can federate with each other to provide a better service to their users. A federation between these service providers can be divided into three categories: horizontal, vertical, and hybrid federations. These federations are all based on the integrated cloud, edge, and fog architecture shown in Figure 1; the classification of all possible federation scenarios is shown in Figure 2. To the best of our knowledge, such a classification has not been dealt with in any of the studies we reviewed.

1) Horizontal Federation. A horizontal federation consists of two federated entities in the same tier, such as a cloud-cloud federation [40]. A horizontal federation can be cloud-cloud, edge-edge, or fog-fog.

2) Vertical Federation. A vertical federation is a federation between entities in different tiers [30], as in a cloud-edge federation. Since a cloud-edge-fog system is a three-tier system, we can classify vertical federations into two-tier federations, such as cloud-edge, edge-fog, and cloud-fog federations, and the three-tier cloud-edge-fog federation.

3) Hybrid Federation. A hybrid federation combines both horizontal and vertical federations [54]: an entity can simultaneously federate horizontally with another entity in the same tier and vertically with an entity in another tier. For example, in an edge-edge-cloud federation, an edge is federated with another edge in tier-2 and also federated with a cloud in tier-3. Such a hybrid federation can be classified into two-tier and three-tier federations.

1. A two-tier hybrid federation is any combination of horizontal federations with a two-tier vertical federation. For example, one cloud may federate with another cloud horizontally and with an edge vertically. Similarly, two clouds may be federated with each other horizontally, two edges federated with each other horizontally, and the clouds and edges simultaneously federated vertically. All nine possible two-tier hybrid federation combinations are shown in Figure 2.

2. A three-tier hybrid federation is any combination of horizontal federations with a three-tier vertical federation; all seven possible combinations are also shown in Figure 2. For example, two clouds in tier-3 are federated with each other, two edges in tier-2 are federated with each other, and two fogs in tier-1 are federated with each other. At the same time, the three tiers are also federated with each other vertically.
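The classification above can be sketched in a few lines of code. This is only an illustrative reading of the text, not the authors' formalism: the tier numbers (fog as tier-1, edge as tier-2, cloud as tier-3), the representation of a federation as a list of links, and the function names are assumptions. The counting function assumes that a hybrid federation is one vertical federation combined with a non-empty choice of tiers that are also federated horizontally, which reproduces the nine two-tier and seven three-tier combinations quoted for Figure 2.

```python
from itertools import combinations

# Assumed tier numbering: fog is the bottom tier, cloud the top tier.
TIER_OF = {"fog": 1, "edge": 2, "cloud": 3}


def classify_federation(links):
    """Return (kind, number_of_tiers) for a federation given as links.

    Each link is a pair of entity kinds, e.g. ("cloud", "edge").
    """
    if not links:
        raise ValueError("a federation needs at least one link")
    horizontal = any(TIER_OF[a] == TIER_OF[b] for a, b in links)
    vertical = any(TIER_OF[a] != TIER_OF[b] for a, b in links)
    tiers = {TIER_OF[kind] for link in links for kind in link}
    if horizontal and vertical:
        kind = "hybrid"
    elif horizontal:
        kind = "horizontal"
    else:
        kind = "vertical"
    return kind, len(tiers)


def nonempty_subsets(items):
    """All non-empty subsets of items, as tuples."""
    return [c for r in range(1, len(items) + 1) for c in combinations(items, r)]


def count_hybrid_federations():
    """Count hybrid federations under the assumption stated in the lead-in."""
    kinds = list(TIER_OF)
    two_tier = sum(len(nonempty_subsets(pair)) for pair in combinations(kinds, 2))
    three_tier = len(nonempty_subsets(kinds))
    return two_tier, three_tier


print(classify_federation([("cloud", "cloud")]))
print(classify_federation([("cloud", "edge"), ("edge", "fog")]))
print(classify_federation([("edge", "edge"), ("edge", "cloud")]))
print(count_hybrid_federations())
```

The last example corresponds to the edge-edge-cloud federation mentioned in the text: one horizontal link in tier-2 plus one vertical link to tier-3, which the sketch classifies as a two-tier hybrid federation.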

III Offloading

When an entity or service provider (say SP1) in a federated architecture receives requests from its subscribers or customers and needs another entity (say SP2) to execute tasks on its behalf and return the results, this is called offloading [41]. Various criteria are used when deciding whether or not to offload certain tasks. A few examples are as follows. To meet a resource constraint: when a task requires more computing resources than the local system’s available capacity, it must be offloaded to another system with the required capacity [42]. To address latency: as distance affects time-sensitive applications, the node closest to the receiving node must be involved in the offloading so as to provide the services faster [43]. Load balancing: when a server has reached its capacity for executing tasks, additional tasks need to be distributed between other entities in the service provider’s ecosystem [44]. Storage: small computing devices with limited storage may need to offload to another device that has a large storage capacity [45]. To maintain privacy, confidentiality, and security: depending on the sensitivity of the data, they may be offloaded to more secure cloud storage [46].
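The criteria above can be read as a set of independent checks on a task and the node that received it. The following is a minimal sketch under that reading; the `Task` and `Node` fields, the `load_threshold` value, and the function name `offloading_reasons` are hypothetical and are not taken from any of the cited works.

```python
from dataclasses import dataclass


@dataclass
class Task:
    cpu_cycles: float      # compute the task needs
    storage_mb: float      # storage the task needs
    deadline_ms: float     # latency the application can tolerate
    sensitive: bool = False


@dataclass
class Node:
    free_cpu_cycles: float
    free_storage_mb: float
    expected_latency_ms: float  # latency if the task is served here
    utilization: float          # current load, between 0 and 1
    secure: bool = True


def offloading_reasons(task, local, load_threshold=0.9):
    """List which of the criteria in the text argue for offloading `task`."""
    reasons = []
    if task.cpu_cycles > local.free_cpu_cycles:
        reasons.append("resource")        # not enough local compute
    if local.expected_latency_ms > task.deadline_ms:
        reasons.append("latency")         # a closer node is needed
    if local.utilization >= load_threshold:
        reasons.append("load balancing")  # local server is saturated
    if task.storage_mb > local.free_storage_mb:
        reasons.append("storage")         # not enough local storage
    if task.sensitive and not local.secure:
        reasons.append("privacy")         # data needs a more secure host
    return reasons


task = Task(cpu_cycles=5e9, storage_mb=10, deadline_ms=20)
local = Node(free_cpu_cycles=1e9, free_storage_mb=100,
             expected_latency_ms=5, utilization=0.95)
print(offloading_reasons(task, local))
```

An empty list means the task can stay where it is; a non-empty list says why it should move, which in turn constrains where it can go (for example, a latency reason rules out a distant cloud).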

III-A Renting vs. Scaling vs. Offloading

In a scaling scenario, resources are used based on the requests from customers, which may vary from time to time according to demand. The use of resources can be scaled up or down with the demand, and a customer pays according to use. This is called autoscaling. In renting, by contrast, a customer reserves the required resources for a predetermined duration for future use. The customer may or may not utilize all of the resources that were reserved, but pays according to the reservation. Offloading is a method where a service provider passes a request to another service provider, which then provides the service to the first provider’s customer. For example, a client of Amazon sends a request to Amazon, but Amazon passes the request to Google, and Google provides the service, provided there is a federation agreement between the two service providers.

III-B Classification of Offloading

Based on the federation agreement between entities, one entity can offload its tasks to another entity for service. This offloading can be classified into Horizontal, Vertical, or Hybrid offloading, based on different federation agreements. Our offloading classification focuses on the computation capacity and communication time perspective. However, an offloading classification can also be applied to other criteria such as storage, security, etc.

1) Horizontal Offloading. Horizontal offloading always takes place between two entities in the same tier with a horizontal federation agreement. As with a horizontal federation, the horizontal offloading also comes in three types, shown as #1 to #3 in Figure 3.

1. In cloud-to-cloud horizontal offloading, two federated clouds can offload to each other [47]. Google can offload to Amazon or vice versa.

2. In edge-to-edge horizontal offloading, two service providers in the edge tier can offload to each other [88].

3. In fog-to-fog horizontal offloading, two computing resources in two different fogs can offload to each other [48].

2) Vertical Offloading. Vertical offloading always takes place between two entities in different tiers, for example, edge-to-cloud. There are fifteen different vertical offloading combinations, #4 to #18 in Figure 3, which can be classified into four categories: upward (#4 to #6), downward (#7 to #9), reverse (#10 and #11), and bidirectional (#12 to #18).

1. Upward vertical offloading goes from a lower tier to a higher tier, which is more centralized, covers a bigger area, and has a greater computing capacity than the lower tier [71]. The possible upward offloading scenarios are edge-to-cloud, fog-to-edge, and fog-to-cloud offloading.

2. When an upper tier offloads its task to a lower-tier entity that is closer to the user and has lower network latency than the upper tier, it is known as downward vertical offloading [56, 89]. The possible downward offloading scenarios are cloud-to-edge, cloud-to-fog, and edge-to-fog offloading. These scenarios are triangular, i.e., the user requests are given to an upper-tier entity and then offloaded to a lower-tier entity. For example, in cloud-to-edge offloading, the cloud user gives its request to the cloud, and the cloud then offloads the task to the edge with which it has a federation agreement.

3. Reverse offloading is a special type of downward vertical offloading for cases where the distance between two entities is relatively large: to overcome the latency and data transfer costs associated with highly time-sensitive tasks, an entity in the upper tier can reverse offload its task to a lower-tier entity [51]. These are non-triangular offloading scenarios, i.e., if there is a federation between two entities in two different tiers, and a subscriber of the upper-tier entity is closer to the lower-tier entity, then the user’s requests are given directly to the lower-tier entity instead of to the upper-tier entity. For example, in cloud-to-edge reverse offloading, if there is a federation between a cloud and an edge and a subscriber of the cloud is closer to the edge than to the cloud, the subscriber can send its request directly to the edge instead of to the cloud. Cloud-to-edge and cloud-to-fog are the two reverse offloading scenarios for our system. Since edge and fog are very close to each other, we do not consider the reverse offloading scenario between them.

4. Bidirectional offloading is any combination of upward with downward offloading, upward with reverse offloading, or all three, i.e., upward with both downward and reverse offloading. For example, offloading scenario #12 in Figure 3 is a combination of scenarios #4 and #8; similarly, #14 is a combination of #4, #8, and #10. All possible bidirectional vertical offloading scenarios are shown in Figure 3.

3) Omni-directional Offloading. Omni-directional offloading is the combination of all possible horizontal and bidirectional offloading scenarios. For example, the #22 offloading scenario in Figure 3 is the combination of offloading scenarios #1 and #13 (a combination of #4 and #10). There are twenty-one different omni-directional offloading scenarios from #19 to #39 as shown in Figure 3.

These scenarios are, however, limited to two-tier architectures. They can be extended to a three-tier architecture by combining two two-tier architectures. To the best of our knowledge, such a classification of offloading scenarios has not previously been presented.
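The scenario counts quoted in this section (3 horizontal, 15 vertical, 21 omni-directional, 39 in total) can be checked with a short enumeration. The sketch below is one reading of the classification that reproduces those counts, not a reconstruction of Figure 3: it assumes that a bidirectional scenario pairs upward offloading with a non-empty choice of downward and reverse offloading on the same two tiers, and that an omni-directional scenario adds horizontal offloading in one or both of those tiers. The function names and scenario ordering are illustrative and do not correspond to the # labels in the figure.

```python
from itertools import combinations

TIERS = ["fog", "edge", "cloud"]  # bottom tier first


def nonempty_subsets(items):
    """All non-empty subsets of items, as tuples."""
    return [c for r in range(1, len(items) + 1) for c in combinations(items, r)]


def enumerate_offloading():
    """Count offloading scenarios under the assumptions in the lead-in."""
    horizontal = [(t, t) for t in TIERS]
    pairs = list(combinations(TIERS, 2))  # (lower, upper) tier pairs
    upward = [(lo, up) for lo, up in pairs]
    downward = [(up, lo) for lo, up in pairs]
    # The text excludes reverse offloading between edge and fog.
    reverse = [(up, lo) for lo, up in pairs if up == "cloud"]

    bidirectional = []
    for lo, up in pairs:
        extras = ["downward"] + (["reverse"] if up == "cloud" else [])
        for combo in nonempty_subsets(extras):
            bidirectional.append(((lo, up), ("upward",) + combo))

    # Each bidirectional scenario combined with horizontal offloading in the
    # lower tier, the upper tier, or both.
    omni = [(h, pair, dirs)
            for pair, dirs in bidirectional
            for h in nonempty_subsets(list(pair))]

    core = len(horizontal) + len(upward) + len(downward) + len(reverse)
    vertical = len(upward) + len(downward) + len(reverse) + len(bidirectional)
    return {
        "horizontal": len(horizontal),
        "vertical": vertical,
        "omni-directional": len(omni),
        "core": core,
    }


counts = enumerate_offloading()
print(counts)
print(counts["horizontal"] + counts["vertical"] + counts["omni-directional"])
```

Under these assumptions the one-directional scenarios (horizontal, upward, downward, and reverse) add up to 11, which matches the number of core offloading scenarios used for the categorization in Section III-C.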

| Saturation Level | Federation Types | Offloading Types |

|---|---|---|

| Saturated | ||

| Semi-saturated | , , | , , , |

| New | , , , , , |

III-C Current Research Status of Federated Architectures and Offloading

Before presenting the survey, we consider the current status of different federated architectures and offloading scenarios, which are divided into three categories, as shown in Table II. Figure 3 shows 39 different offloading scenarios. Of these, 11 are core offloading scenarios, and these are the ones considered for this categorization, based on one-to-one federation and offloading. Some scenarios have been addressed in many papers, and we consider them saturated; one example is the cloud-cloud federation. In [52], Mashayekhy et al. proposed a game-theoretical model to reshape the business structure between cloud providers, which could improve their dynamic resource scaling capabilities by establishing cooperation through federation. They proposed a cloud federation mechanism to maximize the profit of cloud providers by reducing the utilization of computing resources. Hassan et al. [53] presented a capacity-sharing mechanism using game theory in a federated cloud environment. This mechanism may lead to a global energy sustainability policy for federated systems and can encourage such systems to cooperate. The main goal of that paper is to minimize the overall energy cost by means of a capacity-sharing technique that promotes the long-term individual profit of cloud providers.

The integration of vertical and horizontal cloud federations is discussed in [54]. In this integration, private clouds, known as secondary clouds, are federated with each other horizontally and are in turn federated vertically with public clouds, termed primary clouds. The objective of [54] is to establish stable cooperative partnerships for the federation so as to improve efficiency. In [55], a distributed resource allocation problem is discussed for a horizontally dynamic cloud federation (HDCF) platform. The authors used a game-theoretical solution to address this problem and to ensure mutual benefits that encourage cloud providers (CPs) to form an HDCF platform.

Similarly, cloud-to-cloud offloading is very rare as the clouds lack capacity, capability, etc. One cloud may not have something that another one can cover, and it is then considered saturated. Some federation architectures and offloading scenarios have been addressed by some researchers but still leave much to address. These scenarios are termed semi-saturated; the rest, on which hardly any research has been done, are called new scenarios. These three categories are shown in Table II. Note that the fog as used in this paper includes any static or dynamic fog, including vehicular-fog that may have mobility.

Figure 4 illustrates offloading in a three-tier fog-edge-cloud federation. The fog system comprises a variety of devices, such as smartphones, laptops, automobiles, and road-side units (RSUs), all of which interact with one another and can even collaborate on some tasks. Between fog and cloud lies a two-tier MEC system with computing capacity behind the base stations (AN-MEC) and in a central office with core network functions (CN-MEC). Cloud computing is the top tier, with massive computing capacity, but it is geographically remote from UEs or data sources.

Figure 4 shows three different offloading scenarios based on task sources. The first scenario (1) involves a heavy task or hotspot traffic at a stadium that is hosting a sporting event or music concert. The task will be offloaded from the UEs to the nearest AN-MEC. Because of the AN-MEC’s limited computational capabilities, the task can be offloaded to a less loaded AN-MEC or to the CN-MEC, thereby minimizing computing delay. In the second scenario (2), a vehicle generates tasks from its sensors or multimedia applications for safety and comfort. Some vehicle tasks, such as those belonging to the navigation, autopilot, accident, or alert systems, are latency-sensitive. Such tasks must be served by a nearby server with low propagation and computing latencies. The tasks can be offloaded either horizontally to other vehicles or vertically to an RSU. If the RSU is overloaded, it will vertically offload the tasks to an AN-MEC, and an overloaded AN-MEC can in turn offload the tasks downward to vehicular fog. The third scenario (3) describes the traffic generated by industrial IoT sensors. Some operations, such as robotic process automation, danger alerts, and suspicious activity alerts, require low latency and can be vertically offloaded to an AN-MEC. Very large amounts of sensor data from industrial IoT can be offloaded to a cloud for future analysis.

IV Survey on Offloading

This section summarizes the literature that deals with federated environments under different offloading scenarios. Some papers discuss current surveys on cloud federation [49][50] with cloud-to-cloud offloading, some edge federation [88], some edge-to-cloud offloading [23], some edge to vehicular-fog offloading [56], and some cloud-to-edge reverse offloading [51]. The major purpose of a federation is to enhance storage and processing capabilities. Many factors influence offloading strategies, such as location [57], energy [60], and different optimization objectives. We classify this work on offloading into two categories: (a) traditional optimization techniques, which mostly focus on management plane decisions, and (b) machine learning techniques, which focus on control plane decisions.

IV-A Traditional Optimization

Table III lists the earlier research on traditional optimization-based offloading, according to the direction and destination of offloading.

1) Device-to-Device (D2D) Offloading. Some research papers focus on device-to-device (D2D) offloading [57, 58, 59]. Wang et al. [57] investigated the mobility-assisted opportunistic computation offloading problem, focusing on the patterns of contacts between mobile devices. They used convex optimization to determine the amount of computation that can be offloaded from one device to another. Pu et al. [58] proposed a D2D fogging framework, in which mobile users can dynamically and beneficially share computation and communication resources among themselves. The objective of D2D fogging is to achieve optimal energy conservation when executing the tasks of network-wide users. Yu et al. [59] proposed a hybrid multicast-based task execution framework for multi-access edge computing (MEC). In this framework, multiple devices can collaborate at the edge of a network for wireless distributed computing (MDC) and outcome sharing. The framework is socially aware in building effective D2D links, with the objective of achieving an energy-efficient task assignment policy for mobile users. They used a Monte Carlo tree search-based algorithm to achieve this objective.

2) Device-to-Fog (D2F), Device-to-Edge (D2E) and Device-to-Cloud (D2C) Offloading. Two papers, [60] and [68], focused on device-to-cloud and device-to-fog offloading, respectively, while device-to-edge offloading was discussed in [61, 62, 63, 64, 65, 66, 67]. Barbera et al. [60] tested the feasibility of mobile computation offloading in real-life scenarios. They considered an architecture in which each real device is associated with a software clone on the cloud. Huang et al. [61] proposed a dynamic offloading algorithm based on Lyapunov optimization that maximizes energy efficiency while preserving the required latency for face recognition applications. Zhang et al. [62] investigated the trade-off between energy consumption and latency for an MEC system with energy harvesting technology. They formulated the minimization of the weighted sum of the mobile device’s energy consumption and computation latency, subject to the stability of the queues and the battery level, and used a Lyapunov function to ensure system stability.

Zhao et al. [63] proposed a multi-mobile-user MEC system, in which multiple smart mobile devices (SMDs) can offload their tasks to an MEC server, with the objective of minimizing the energy consumption of the SMDs. To this end, they jointly optimized the offloading selection, radio resource allocation, and computational resource allocation, and used the branch and bound method to solve the optimization problem. Wang et al. [64] investigated partial computation offloading with dynamic voltage scaling (DVS) technology in mobile edge computing, where devices can partially offload their tasks. They formulated an optimization problem with two objectives: energy consumption minimization of the SMD (ECM) and latency minimization of application execution (LM). They proposed two optimal algorithms, named Energy Optimal Partial Computation Offloading (EPCO) and Latency Optimal Partial Computation Offloading (LPCO), to solve the ECM and LM problems, respectively.

To achieve minimum average delay, Liu et al. [65] adopted a Markov decision model for computational task scheduling and proposed a search algorithm to determine the optimal schedule. This task scheduling is distinctive in that computation tasks are scheduled based on the queueing state of the task buffer, the execution state of the local processing unit, and the state of the transmission unit. Mao et al. [66] developed a Lyapunov Optimization-based Dynamic Computation Offloading (LODCO) algorithm to minimize the execution delay, with task failure also addressed as a performance metric. This algorithm determines the offloading decision, the CPU-cycle frequencies for mobile execution, and the transmission power for computation offloading. These decisions depend only on the system’s current state and require no distribution information about computation task requests, the wireless channel, energy harvesting (EH) processes, etc.
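The idea that a Lyapunov-based controller decides from the current state alone can be illustrated with a generic drift-plus-penalty rule. The following is a minimal sketch under stated assumptions, not the LODCO algorithm of [66]: the action set, the energy and service numbers, the `make_actions` helper, and the trade-off weight `v` are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    name: str
    energy: float  # energy spent in this slot (the penalty term)
    served: float  # amount of work removed from the task queue in this slot


def make_actions(channel_gain):
    """Hypothetical per-slot options; offloading gets cheaper and faster on a good channel."""
    return [
        Action("idle", 0.0, 0.0),
        Action("local", 2.0, 1.0),
        Action("offload", 1.0 / channel_gain, 3.0 * channel_gain),
    ]


def drift_plus_penalty_choice(queue, actions, v):
    """Pick the action minimizing V * energy - Q(t) * served for the current slot only."""
    return min(actions, key=lambda a: v * a.energy - queue * a.served)


def simulate(arrivals, channel_gains, v):
    """Run the per-slot rule; no arrival or channel statistics are needed in advance."""
    queue, history = 0.0, []
    for arrival, gain in zip(arrivals, channel_gains):
        choice = drift_plus_penalty_choice(queue, make_actions(gain), v)
        queue = max(queue - choice.served, 0.0) + arrival
        history.append((choice.name, queue))
    return history
```

A larger `v` favors energy saving at the price of a longer queue, while a smaller `v` drains the queue more aggressively; this is the usual trade-off exposed by drift-plus-penalty methods.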

Chen et al. [67] formulated a multi-user, multi-task computation offloading problem for green Mobile Edge Cloud Computing (MECC) and used the Lyapunov optimization approach to determine an energy harvesting policy. This policy determines how much energy is harvested from each wireless device (WD) and the task offloading schedule, namely the set of computation offloading requests that can be admitted into the mobile edge cloud, the set of WDs that can be assigned to each accepted offloading request, and the amount of workload that can be processed at the assigned WDs. In [68], Hasan et al. presented the Aura architecture, a highly localized and mobile ad-hoc cloud computing model that uses IoT devices present in the ubiquitous environment for task offloading schemes and enhancing applications. They implemented Aura on the Contiki platform, together with a simplified Map-Reduce port, which demonstrates the feasibility of such an architecture.

3) Device-Fog-Cloud and Device-Edge-Cloud Vertical Upward Offloading. The offloading scenarios adopted in papers [69, 70, 71, 73, 74, 75, 76] were vertical upward, covering both offloading from a device to any entity and offloading from one entity to another. Gou et al. [69] presented an architecture for collaborative computation offloading over FiWi networks. They addressed the problem of cloud-MEC collaborative computation offloading to minimize the energy consumption of all the MDs while satisfying the computation execution time constraint. They proposed a distributed collaborative computation offloading scheme by adopting game theory and analyzing the Nash equilibrium.

Sun et al. [70] addressed the latency-aware workload offloading (LEAD) problem, which they formulated as a task offloading problem that minimizes the average response time for mobile users. They designed the LEAD strategy to offload workloads to suitable cloudlets and thereby reduce average response times. Tong et al. [71] proposed a hierarchical edge cloud architecture to improve the performance of mobile computing by leveraging cloud computing and offloading mobile workloads for remote execution at the cloud. For efficient resource utilization and workload placement, they used simulated annealing (SA) [72] to determine which programs are placed on which edge cloud servers and how much computational capacity is made available to execute each program. They implemented the proposed architecture at small scale, conducted a simulation experiment over a larger topology, and evaluated the performance of the proposed workload placement algorithm.

Rodrigues et al. [73] proposed a heuristic offloading algorithm to determine whether tasks should be processed locally, in nearby cloudlets, or in a remote cloud; the processing location is initially determined by a UE. The UE then chooses a different location to process the task and calculates the latency difference between the new location and the previous one. Each UE can make this comparison and use the chosen location when bidding for an offloading decision. The offloading decision with the highest bid is then chosen.

Chekired et al. [74] introduced a new scheduling model for industrial Internet of Things (IIoT) data processing, and proposed a two-tier cloud-fog architecture for IIoT applications that deploys multiple servers at the fog tier. The objective of this architecture was to minimize communication and data processing delays in IIoT systems. Resource allocation and offloading optimization for heterogeneous real-time tasks were carried out by means of an adaptive queueing weight (AQW) resource allocation policy in [75]. A trade-off between throughput and task completion ratio was also achieved by taking laxity and completion times into account when designing the offloading policy. Adhikari et al. [76] designed a novel delay-dependent Priority-Aware Task Offloading (DPTO) algorithm for scheduling and handling IoT device tasks in an appropriate computing server. The computing locations were chosen based on the types of task deadlines, which were classified as soft and hard deadlines.

4) Device-Edge-Cloud Hybrid Offloading. Hybrid offloading, which includes device-edge vertical offloading, was discussed in [77, 78, 79]. Tran and Pompili [77] formulated a mathematical model for the joint optimization of task offloading and resource allocation in MEC. In this work, they accounted not only for the allocation of computing resources but also for the allocation of the transmission power of mobile users.

The two-tier MEC architecture proposed by Yahya et al. [78] comprises an access network (AN) MEC and a core network (CN) MEC. CN-MEC has greater capacity but is less widespread than AN-MEC. Two-phase optimization was used to achieve capacity optimization by iteratively modifying the offloading ratio and capacity. For hot-spot traffic, offloading and scaling were merged into short-term and long-term solutions. They considered both vertical (device-edge) offloading and horizontal offloading between edges. In a comparison between pre-CORD and CORD, shown in Figure 6, a trade-off between computing and communication latency was introduced for different distances of the CN-MEC, which affected the task processing distribution. Thai et al. [79] proposed workload and capacity optimization to minimize computation and communication costs for cloud-edge federated systems, taking into consideration both vertical and horizontal offloading. They designed a branch and bound algorithm with parallel multi-start search points to solve this problem.

Villar et al. [51] introduced osmotic computing, a new paradigm for edge and cloud integration. In their research they developed the concept of reverse offloading, where not only can an edge offload its tasks to the cloud, but the cloud can also reverse offload time-sensitive tasks to edges. A two-tier cloud-edge federated architecture was proposed by Kar et al. [90], who considered edge-to-edge horizontal offloading and edge-to-cloud vertical offloading together with cloud-to-edge reverse offloading. They formulated an optimization problem with the objective of minimizing costs, with latency as the key constraint, and used simulated annealing to solve it. As shown in Figure 5, the simulated annealing technique gathers system information and iteratively searches for the best offloading decision.
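To make the cost-minimization-under-latency idea concrete, the following is a minimal simulated annealing sketch. It is not the formulation of [90]: the site names, per-task costs, latencies, capacities, the penalty weight, and the cooling schedule are all hypothetical, and the latency constraint is handled with a simple penalty term.

```python
import math
import random

# Hypothetical sites: per-task cost, latency in ms, and number of tasks each can host.
SITES = {
    "edge": {"cost": 3.0, "latency": 5.0, "capacity": 2},
    "neighbor_edge": {"cost": 4.0, "latency": 12.0, "capacity": 2},
    "cloud": {"cost": 1.0, "latency": 60.0, "capacity": 100},
}


def objective(assignment, deadlines, penalty=100.0):
    """Total cost plus a penalty for each missed deadline and each task over capacity."""
    total = 0.0
    load = {site: 0 for site in SITES}
    for site, deadline in zip(assignment, deadlines):
        total += SITES[site]["cost"]
        load[site] += 1
        if SITES[site]["latency"] > deadline:
            total += penalty
    for site, count in load.items():
        total += penalty * max(0, count - SITES[site]["capacity"])
    return total


def anneal(deadlines, steps=5000, t0=10.0, cooling=0.999, seed=0):
    """Start with everything in the cloud and move one task per step."""
    rng = random.Random(seed)
    names = list(SITES)
    current = ["cloud"] * len(deadlines)
    cur_cost = objective(current, deadlines)
    best, best_cost = current[:], cur_cost
    temp = t0
    for _ in range(steps):
        cand = current[:]
        cand[rng.randrange(len(cand))] = rng.choice(names)
        cand_cost = objective(cand, deadlines)
        # Always accept improvements; accept worse moves with Boltzmann probability.
        if cand_cost <= cur_cost or rng.random() < math.exp((cur_cost - cand_cost) / temp):
            current, cur_cost = cand, cand_cost
            if cur_cost < best_cost:
                best, best_cost = current[:], cur_cost
        temp = max(temp * cooling, 1e-3)
    return best, best_cost
```

Calling `anneal([10, 10, 20, 20, 100, 100])` returns an assignment and its objective value. Because the search is stochastic, the result depends on the seed and the number of steps, and it is not guaranteed to be the global optimum.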

5) Fog-Edge-Cloud Vertical Upward Offloading. Some papers [80, 82], [84]-[85] focus on entity-to-entity upward offloading, and some adopt hybrid offloading scenarios [87]-[88]. Fantacci and Picano [80] carried out a queueing analysis of a cloud-fog-edge computing infrastructure and proposed a heuristic to determine offloading ratios and computing capacities at the fog, edge, and cloud. Kar et al. [81] considered a federated architecture that combines mobile devices, edge, cloud, and vehicular-fog. They used queueing theory to analyze performance, with the aim of minimizing the QoS violation probability, and used a subgradient search algorithm to determine the optimal probabilities.
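As a rough illustration of how a queueing model can drive the choice of an offloading ratio, the sketch below treats the edge and the cloud as independent M/M/1 queues and picks the ratio by grid search. This is a simplification assumed here for illustration only; it is neither the heuristic of [80] nor the subgradient method of [81], and the parameter names and the fixed cloud round-trip time are hypothetical.

```python
def mm1_delay(arrival_rate, service_rate):
    """Mean sojourn time of an M/M/1 queue; infinite when the queue is unstable."""
    if arrival_rate >= service_rate:
        return float("inf")
    return 1.0 / (service_rate - arrival_rate)


def mean_latency(ratio, lam, mu_edge, mu_cloud, cloud_rtt):
    """Average latency when a fraction `ratio` of the traffic is offloaded to the cloud."""
    edge = mm1_delay((1.0 - ratio) * lam, mu_edge)
    cloud = mm1_delay(ratio * lam, mu_cloud) + cloud_rtt
    return (1.0 - ratio) * edge + ratio * cloud


def best_ratio(lam, mu_edge, mu_cloud, cloud_rtt, steps=100):
    """Grid search over offloading ratios in [0, 1]."""
    candidates = [i / steps for i in range(steps + 1)]
    return min(candidates, key=lambda p: mean_latency(p, lam, mu_edge, mu_cloud, cloud_rtt))
```

With an arrival rate above the edge service rate, keeping all traffic at the edge yields an infinite mean delay, so the search is forced to offload at least enough traffic to stabilize the edge queue.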

An intelligent offloading method (IOM) for smart cities, which conserves privacy, improves offloading efficiency, and promotes edge utility, was proposed by Xu et al. [82] to address privacy disclosure. The authors used the ant colony optimization (ACO) [83] method to achieve trade-offs between minimizing service response time, optimizing energy, and maintaining load balance, while ensuring privacy preservation during service offloading. An energy-efficient computation offloading mechanism for MEC in 5G heterogeneous networks was proposed in [84]. The authors formulated the energy minimization problem of an offloading system, in which both task computing and file transmission energy costs were considered.

Lu et al. [85] addressed the problem of computation offloading by using edge computing. They formulated the problem as a two-stage Stackelberg game and showed that it achieves a Nash equilibrium. Their objective was to maximize the utilities of cloud service operators and edge server owners by obtaining optimal payment and computation offloading strategies with low delay. Ma et al. [86] proposed a cloud-assisted framework in MEC, termed Cloud Assisted Mobile Edge computing (CAME), to minimize resource costs by combining queueing network and convex optimization theories. They solved the convex problem by using the Karush-Kuhn-Tucker (KKT) conditions, and used augmented reality to represent delay-sensitive and computation-intensive mobile applications.

Jiao et al. [87] presented an integrated framework for computation offloading and resource allocation in MEC networks, where both single and multi-cell networks were taken into consideration. To minimize energy consumption and delay, they proposed an energy-aware offloading scheme that considers both computation and communication resource allocation. In [88] a horizontal edge federation was proposed together with UE to edge and edge to cloud vertical offloading scenarios. They experimentally showed that an edge federation model improves the quality of experience (QoE) of end-users and saves on the costs of edge infrastructure providers (EIPs).

6) Vehicular-Fog and V2X Offloading. The single edge to vehicular-fog task offloading problem was addressed in [56] where an iterative greedy algorithm was used to solve the optimization problem. Yen et al. [89] proposed a decentralized offloading configuration protocol (DOCP) for single edge to vehicular-fog offloading, with a matching protocol between multiple edge systems to resolve resource contention when resources from the same vehicular-fog were requested simultaneously.

Offloading optimization for vehicle-to-everything (V2X) systems was addressed in [91, 92]. Zhang et al. [91] considered hybrid offloading between vehicles and fogs, and formulated a mixed-integer nonlinear programming (MINLP) problem for optimizing both user association and radio resource allocation in vehicular networks (VNET). To obtain a globally optimal solution, this MINLP problem was transformed by applying norm theory to non-convex nonlinear fractional optimization and was then shown to be equivalent to convex optimization using weighted minimum mean square error (WMMSE) and Perron-Frobenius theory. Wang et al. [92] proposed a real-time traffic management algorithm for fog-based Internet-of-Vehicle (IoV) systems. This consisted of a three-tier architecture of fog, cloudlet, and cloud for providing computing resources to traffic management systems. They also looked into vertical offloading optimization between fog, cloudlet, and cloud.

| References | Offloading Types | Cost | Energy | Capacity | Latency | Approach | Method | Evaluation | Application |
|---|---|---|---|---|---|---|---|---|---|
| [57] | | | | | | Exact | Convex optimization | Simulation | Offloading in realistic human mobility scenario |
| [58] | | | | | | Analysis | Lyapunov optimization | Simulation | Fogging framework |
| [59] | | | | | | Scheme | Tree search algorithm | Simulation | Face recognition |
| [60] | | | | | | No approach | Real test-bed | Experimental | MCC application |
| [61] | | | | | | Analysis | Lyapunov optimization | Simulation | Face recognition |
| [62] | | | | | | Heuristic | ODLOO | Simulation | Generic user applications |
| [63] | | | | | | Analysis | Branch and bound | Simulation | Smart mobile device (SMD) |
| [64] | | | | | | Analysis | EPCO algorithm, LPCO algorithm | Simulation | Data partitioned in SMD |
| [65] | | | | | | Policy | One-dimensional search algorithm | Simulation | MEC systems |
| [66] | | | | | | Analysis | Lyapunov optimization | Simulation | Energy harvesting for devices |
| [67] | | | | | | Policy | Lyapunov optimization | Simulation | Multi-user multi-tasking |
| [68] | | | | | | Scheme | Aura architecture | Experimental | Prototype design |
| [82] | | | | | | Analysis | Ant colony optimization | Simulation | Smart city |
| [84] | | | | | | Scheme | EECO scheme | Simulation | 5G heterogeneous networks |
| [85] | | | | | | Analysis | Game theory | Simulation | Payment strategy in edge computing |
| [86] | | | | | | Analysis | KKT conditions | Simulation | Augmented reality |
| [56] | | | | | | Heuristic | Iterative greedy | Simulation | Intelligent transportation systems |
| [89] | | | | | | Heuristic | Iterative greedy, DOCP | Simulation | Intelligent transportation systems |
| [69] | | | | | | Scheme | Game theory | Simulation | FiWi networks |
| [70] | | | | | | No approach | LEAD algorithm | Simulation | MCC application |
| [71] | | | | | | Analysis | Simulated annealing | Experimental, Simulation | Traffic engineering |
| [73] | | | | | | Heuristic | Iterative search algorithm | Simulation | Generic user applications |
| [74] | | | | | | Analysis | Simulated annealing | Simulation | Industrial IoT |
| [75] | | | | | | Heuristic | LTS-AQW | Simulation | Real-time applications |
| [76] | | | | | | Heuristic | DPTO | Simulation | IoT applications |
| [80] | | | | | | Analysis | Iterative greedy | Simulation | Generic user applications |
| [81] | | | | | | Analysis | Subgradient iterative method | Simulation | MCC applications and ITS |
| [87] | | | | | | Scheme | Iterative search algorithm | Simulation | Multi-cell MEC networks |
| [91] | | | | | | Heuristic | Iterative searching | Simulation | Multimedia applications |
| [92] | | | | | | Analysis | Branch-and-bound and Edmonds–Karp | Simulation | Traffic management system |
| [77] | | | | | | Heuristic | Bisection method | Simulation | Multi-cell wireless network |
| [78] | | | | | | Heuristic | Two-phases iterative optimization | Simulation | URLLC, eMBB, and MMTC |
| [79] | | | | | | Analysis | Branch and bound | Simulation | Traffic engineering |
| [88] | | | | | | Scheme | Dynamic algorithm | Experimental | Traffic engineering |
| [51] | | | | | | No approach | Architecture | | Osmotic computing |
| [90] | | | | | | Analysis | Simulated annealing | Simulation | Traffic engineering |

: Device; : Device to Device; MCC: Mobile Cloud Computing; MEC: Multi-access Edge Computing; IoT: Internet of Things; EPCO: Energy-Optimal Partial Computation Offloading; LPCO: Latency-Optimal Partial Computation Offloading; LEAD: Latency-Aware workloAd offloaDing; EECO: Energy-Efficient Computing Offloading; KKT: Karush-Kuhn-Tucker; DOCP: Decentralized Offloading Configuration Protocol

Table III summarizes the literature discussed above and is organized as follows. It lists the core offloading types used in the papers, including device-to-device (D2D) and device to other entities. Four standard metrics that are commonly used in the literature, i.e., cost, energy, capacity, and latency, are considered. Other factors, such as QoS, load balance, and computational intensity, are not presented in the table but are addressed in the descriptions above. The table also presents the approach of each paper (exact, analysis, scheme, policy, or heuristic), together with its method, evaluation, and application.

IV-B Machine Learning

| ML Approaches | Paper | Online learning | Supervisor | Learning object | Model dependence | Learning direction | Performance | Adaptability | ||

| Model based | Model free | Value based | Policy based | |||||||

| Supervised ML | [93, 95, 96] | Yes | Dataset | Depend on data and learning algorithm | No | |||||

| DL | [101, 105, 109, 110] | |||||||||

| MAB | [114] | No | Environment | Depend on the experience | Through exploration | |||||

| (RL)Q-Learning | [100, 104] | |||||||||

| (DRL)DQN | [97, 103, 107, 111, 120] | |||||||||

| (DRL)E2D | [98] | |||||||||

| (DRL)DDPG | [94, 96, 99, 102, 113, 108] | |||||||||

In a federation architecture, the offloading module, which distributes tasks from one entity to other entities or tiers, is part of the control plane. Task offloading decisions in an extensive federated system must be made quickly, usually in seconds. Traditional optimization, particularly for non-convex problems, carries out an exhaustive search that takes a long time to converge and violates the delay requirements of tasks [116]. Furthermore, a traditional optimization algorithm needs complete system information to determine offloading, which some federations may not provide. Intensive system monitoring that provides complete information for determining offloading actions in a federation is not trivial, because each provider uses different devices, protocols, and operating systems. Some applications provided by a federation may also have different requirements [93, 94, 96, 102]. Machine learning is a suitable approach to such offloading problems in a highly dynamic system with some unknown information.

Machine learning (ML)-based offloading can automatically improve its actions by learning from collected data (a dataset) or by interacting with the environment. Some ML approaches are compared in Table IV. Supervised ML and Deep Learning (DL) update their model weights by learning from previous data in order to execute the best offloading decision, which is categorized as offline learning. A well-labelled dataset first has to be constructed before being provided to the ML algorithms. Some providers may restrict the details of their datasets for security reasons. Another way to train an offloading model is through online interaction between a learning agent and the environment, which is termed Reinforcement Learning (RL). The learning agent observes the environment’s conditions to determine an offloading action. The environment then gives positive or negative feedback on the action taken, termed reward and punishment. In essence, the agent memorizes this interaction in the form of a table in order to decide the best action to take in the future. In a large system, such as a federation, maintaining agent interactions in a table leads to a scalability problem. Deep Reinforcement Learning replaces the table with a neural network that can predict the reward of an action for a given environment state.
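The table-based interaction described above can be sketched with tabular Q-learning. This is a toy illustration under stated assumptions, not a method from any surveyed paper: the two-level edge load state, the three actions, the latency values in `toy_latency`, and the hyperparameters are all hypothetical.

```python
import random
from collections import defaultdict

ACTIONS = ("local", "edge", "cloud")


def q_update(q, state, action, reward, next_state, alpha=0.1, gamma=0.9):
    """One Q-learning step: move Q(s, a) toward r + gamma * max_a' Q(s', a')."""
    best_next = max(q[(next_state, a)] for a in ACTIONS)
    q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])


def choose_action(q, state, epsilon, rng):
    """Epsilon-greedy selection over the table entries of the current state."""
    if rng.random() < epsilon:
        return rng.choice(ACTIONS)
    return max(ACTIONS, key=lambda a: q[(state, a)])


def toy_latency(state, action):
    """Hypothetical latencies in ms; the state is the edge load, "low" or "high"."""
    if action == "local":
        return 40.0
    if action == "cloud":
        return 30.0
    return 10.0 if state == "low" else 60.0


def train(episodes=2000, epsilon=0.1, seed=0):
    """Interact with the toy environment; the reward is the negative latency."""
    rng = random.Random(seed)
    q = defaultdict(float)
    state = "low"
    for _ in range(episodes):
        action = choose_action(q, state, epsilon, rng)
        reward = -toy_latency(state, action)
        next_state = rng.choice(("low", "high"))
        q_update(q, state, action, reward, next_state)
        state = next_state
    return q
```

The table holds one entry per state-action pair, so it grows with the product of the state and action spaces; this growth is the scalability problem that motivates replacing the table with a neural network.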

These concepts are classified into 11 types in Table V, depending on their offloading direction and destination in the federated fog, edge, and cloud.

1) Device-Edge-Cloud Offloading. Junior et al. [93] considered cloud capacity as a means of providing external computation capacity to UE applications, such as image editing, face detection, and online games. They proposed a device application architecture that consists of middleware, a profiler, and a decision engine to determine the offloading policy. Multiple classifiers were evaluated to find the most accurate one, with the objective of achieving high offloading accuracy, which would result in low latency and energy efficiency.

Studies [94, 95, 96, 97, 98, 99, 100, 101, 102, 103, 104, 105] dealt with device-to-edge offloading. In addition to the offloading policy, Saguil and Azim [94] also considered a caching strategy to locate application codes and data. Q-learning and DQN-based algorithms were used to solve this joint optimization problem.

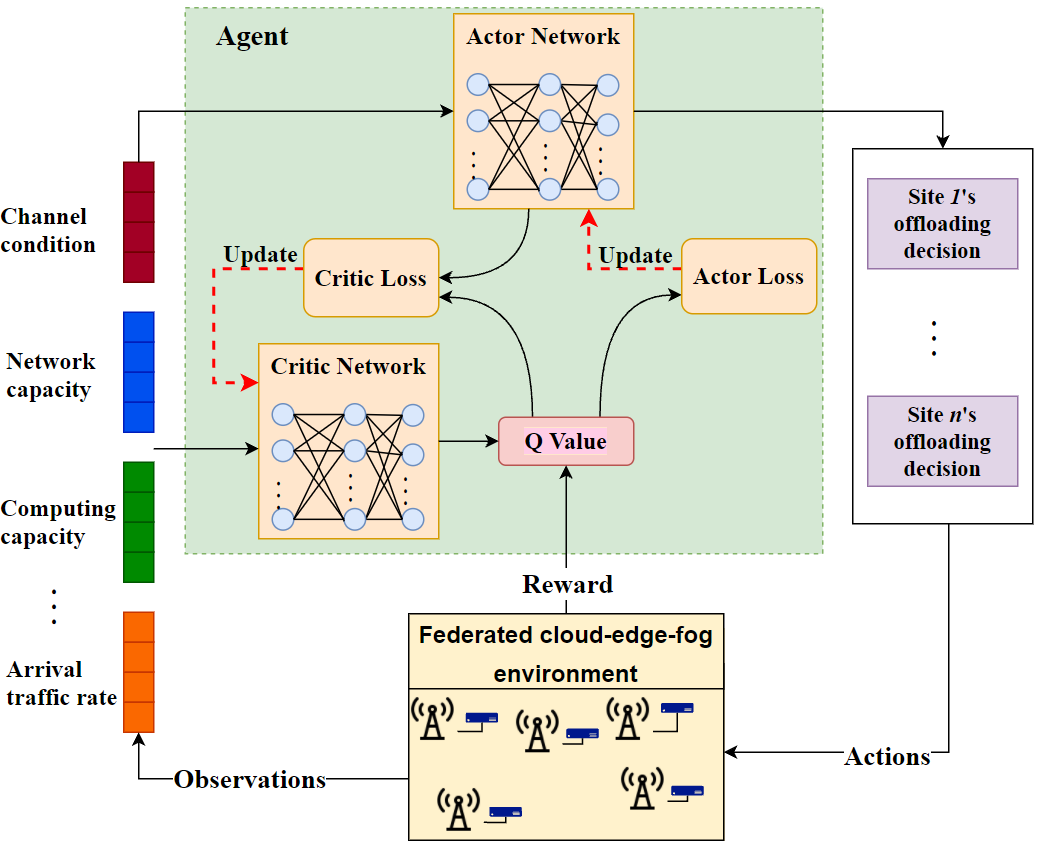

Li et al. [95] considered task deadlines in determining the task offloading policy. They proposed an E2D DRL to derive the best offloading policy and solve the scalability problem of the DQN action space. Wang et al. [96] optimized a UAV trajectory and offloading decision, which included discrete and continuous variables, by using multi-agent reinforcement learning. The DDPG technique was chosen because it solves the overestimation problem of RL and works in high-dimensional action spaces. Figure 7 shows an overview of the DDPG algorithm. It is implemented on an agent that determines the optimal offloading decision based on federated system data such as channel status, arrival traffic information, and computation and networking capacity. DDPG makes use of Actor and Critic neural networks. Actor networks predict the optimal action for a given state, whereas Critic networks predict the value of state-action pairs. The Q-value provides the discounted total future reward for the current state-action pair. The critic network learns this value by satisfying Bellman’s equation.
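How a critic learns the Q-value from Bellman’s equation can be sketched numerically. The snippet below assumes the standard DDPG formulation, in which the target for each sampled transition is the reward plus the discounted value that a target critic assigns to the next state and the target actor’s action. The function names and the plain-list interface are hypothetical, and the neural networks themselves are omitted.

```python
def td_targets(rewards, next_q_values, dones, gamma=0.99):
    """Bellman targets y = r + gamma * Q'(s', mu'(s')), with no bootstrap at terminal steps.

    `next_q_values` are assumed to come from the target critic evaluated at the
    action proposed by the target actor for each next state.
    """
    return [
        r + gamma * q_next * (0.0 if done else 1.0)
        for r, q_next, done in zip(rewards, next_q_values, dones)
    ]


def critic_loss(q_values, targets):
    """Mean squared Bellman error that the critic minimizes over a mini-batch."""
    return sum((y - q) ** 2 for q, y in zip(q_values, targets)) / len(targets)
```

In a full agent, the gradient of this loss updates the critic weights, while the actor is updated in the direction that increases the critic’s estimate for its own actions.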

Joint offloading and resource allocation optimization was carried out by Yang et al. [97], who applied single-agent and multi-agent reinforcement learning to optimize caching and offloading decisions, and used LSTM to predict task popularity in pre-processing. Ale et al. [98] addressed the computation offloading problem of a multi-server MEC system by using DRL. The features were reformatted and stored in a tree-like data structure to accelerate DRL’s convergence.

DRL was used in [99] to group NOMA’s UEs to minimize offloading energy, by minimizing multiple access interference. Chen et al. [100] extended DDPG with a temporal feature extraction network (TFEN) and a rank-based prioritized experience replay (rPER) to achieve training stability and reduce convergence time. Guo et al. [101] used a binary-tree based supervised ML to construct an intelligent offloading task with high accuracy and low complexity. Multichannel access problems arise in multi-user offloading when some mobile users utilize the same channel, which results in longer transmission latency due to interference. Cao et al. [102] used multi-agent reinforcement learning to derive the best offloading policy. The user device plays the role of an agent that observes channel conditions to determine the offloading policy. Yang et al. [103] combined offline learning based on a feed-forward neural network with online inference to derive an offloading strategy in near real-time. Zhang et al. [120] enhanced the DQN algorithm with a heuristic offloading technique in order to reduce both latency and energy consumption. The objective of using a heuristic algorithm was to minimize convergence time. DQN and DDPG were compared in optimizing offloading decisions in [104]. DDPG outperformed DQN in terms of convergence time and performance in minimizing system latency. Li et al. [105] integrated Lyapunov optimization with DDPG to achieve a long-term objective in online offloading.

In addition to vertical offloading from UE devices to the edge, He et al. [106] also considered horizontal offloading between UE devices. They applied QoE in determining the offloading policy, and defined task priority assignment, redundant task elimination, and task scheduling to achieve optimum QoE. Since the offloading decision involved a continuous action space, the DDPG-based method of DRL was used.

The edge and cloud federation was considered in [107, 108, 109]. Sun et al. [107] proposed a machine learning model that is cooperatively trained across the two-tier edge-cloud architecture. Industrial Internet of Things (IIoT) devices used it to determine whether to offload their tasks to an edge or a cloud, depending on which would satisfy the tasks’ latency requirements. This is categorized as upward vertical offloading. If neither edge nor cloud could meet the latency requirements, the IIoT device processed the task locally. Hou et al. [108] applied Cybertwin to coordinate resources between end, edge, and cloud. Cybertwin functions as an intelligent agent that makes the offloading decisions necessary to accomplish the objectives of maximizing processing efficiency and task completion rate. They classified IoT applications as either delay-sensitive or delay-tolerant. To maximize processing efficiency, a joint optimization of hierarchical task offloading and resource allocation based on MADDPG was proposed. The offloading agent was trained in a federated fashion. This approach shares only a trained model during the training process and avoids sharing local data, which could jeopardize privacy. Zhang et al. [109] discussed downward vertical offloading, which was carried out from multi-cloud systems to edge servers or mobile devices. Multiple clouds compete with each other to access network and MEC resources. A distributed offloading problem arises in a system with no centralized control, such as a multi-cloud system. They proposed multi-agent Q-learning to determine the optimum offloading policy, which minimizes system latency.

2) Device-Fog-Cloud Offloading. Device-to-fog offloading was discussed in [110, 111, 112]. Saguil and Azim [110] considered worst-case execution time in determining the offloading policy to a fog node. Their objective was to minimize the execution time of time-consuming ML tasks generated by an embedded system. Li et al. [111] considered time-varying task characteristics and fog node capability in determining the offloading policy of a DQN-based algorithm. Alelaiwi et al. [113] also considered a fog and cloud federation, particularly horizontal offloading between fogs. DL was used to predict response times at multi-tier fog, edge, and cloud, which were the task-offloading destinations. They applied a Deep Belief Network (DBN) and a logistic regression layer, which accepted processing, memory, and link capacity as inputs. Ren et al. [112] used MADRL-based DQN to determine the best fog access point (F-AP) to serve an IIoT node request. Because of the capacity constraints of the F-AP, some IIoT device requests have to be offloaded to the cloud, a decision made using a low-complexity greedy algorithm.

3) V2X Offloading. Federations that included a V2X system were considered in [114, 115, 116, 117, 118, 119]. The papers [114, 115, 116] optimized vertical offloading from vehicles to edge servers. Online and offline learning were used by Fan et al. [114] to maximize user and access network throughput. In the offline stage, Pareto optimization mapped vehicles to access points, and the optimal results were used to construct a data set for DNN model training. The online stage used the output of the trained DNN model to predict the optimal association between vehicles and access points.

Ning et al. [115] jointly optimized offloading decisions and resource allocations in a vehicular edge system, with the objective of maximizing QoE. They proposed DQN-based offloading task scheduling, which also considers user mobility. Sonmez et al. [116] proposed an ML-based task orchestrator for vehicular edge systems, including LAN, MAN, and WAN networks. The orchestrator guarantees that a task is served successfully (in time) and with the lowest service time. Xie et al. [117] considered not only vertical offloading between vehicles and edge but also horizontal offloading between vehicles. Vehicles with tasks to offload learned the environment with the multi-armed bandit (MAB) method to determine the offloading policy, which resulted in lower average latency than the Greedy algorithm.
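To make the MAB idea concrete, the following Python sketch treats each candidate offloading destination as an arm and uses the standard UCB1 rule to balance exploration and exploitation. The surveyed papers do not necessarily use UCB1; the function name `ucb1_offload`, the latency callables, and the reward normalization (one minus latency divided by an assumed upper bound `max_latency`) are hypothetical choices for illustration only.

```python
import math
from typing import Callable, List, Tuple


def ucb1_offload(
    latency_fns: List[Callable[[], float]],
    rounds: int,
    max_latency: float,
) -> Tuple[List[int], List[float]]:
    """Run UCB1 over candidate destinations; return pull counts and mean rewards."""
    n_arms = len(latency_fns)
    counts = [0] * n_arms
    mean_reward = [0.0] * n_arms
    for t in range(1, rounds + 1):
        if t <= n_arms:
            # Try every destination once before using the confidence bound.
            arm = t - 1
        else:
            # UCB1 index: empirical mean plus an exploration bonus.
            arm = max(
                range(n_arms),
                key=lambda i: mean_reward[i]
                + math.sqrt(2.0 * math.log(t) / counts[i]),
            )
        latency = latency_fns[arm]()  # observe latency of the chosen destination
        # Assumed mapping of latency to a reward in [0, 1]; lower latency is better.
        reward = 1.0 - min(latency, max_latency) / max_latency
        counts[arm] += 1
        mean_reward[arm] += (reward - mean_reward[arm]) / counts[arm]
    return counts, mean_reward
```

With three destinations whose latencies are fixed at 0.8, 0.3, and 0.6 (in units of `max_latency = 1.0`), the second destination ends up being selected most often, while the others are still sampled occasionally.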

The papers [118, 119] considered a fog and cloud federation to accommodate offloading tasks from vehicles. Khayyat et al. [118] used deep Q-learning with multiple DNNs that work in parallel to obtain the optimal offloading decision. In their environment, five DNNs outperformed a single DNN. Gao et al. [119] addressed the offloading problem with task dependencies by using multi-agent reinforcement learning. Their objective was to minimize the energy and latency of the offloaded tasks. LSTM was integrated into the RL agent to compensate for incomplete observations of the environment’s state.

| References | Offloading Types | Cost | Energy | Capacity | Latency | Method | Agent | Evaluation | Application |
|---|---|---|---|---|---|---|---|---|---|
| [93] |  |  |  |  |  | ML supervised | 1 | Experimental | Multimedia apps. |
| [94] |  |  |  |  |  | (DRL) DQN | 1 | Simulation | Real-time video analytics |
| [95] |  |  |  |  |  | (DRL) E2D | 1 | Simulation | Video, smart home, and AI apps |
| [96] |  |  |  |  |  | (MADRL) DDPG | n | Simulation | UAV-based applications |
| [97] |  |  |  |  |  | (MARL) Q-Learning | n | Simulation | Generic user applications |
| [98] |  |  |  |  |  | (DRL) DQN | 1 | Simulation | Generic user applications |
| [99] |  |  |  |  |  | (DRL) DQN | 1 | Simulation | Generic user applications |
| [100] |  |  |  |  |  | (DRL) DDPG + TADPG | n | Simulation | Generic user applications |
| [101] |  |  |  |  |  | ML supervised | 1 | Simulation | Resource-hungry IoT apps. |
| [102] |  |  |  |  |  | (MADRL) DDPG | n | Simulation | IIoT |
| [103] |  |  |  |  |  | DL | 1 | Simulation | Generic user applications |
| [104] |  |  |  |  |  | (DRL) DQN & DDPG | 1 | Simulation | Generic user applications |
| [105] |  |  |  |  |  | (DRL) DDPG + Optimization | 1 | Simulation | Generic user applications |
| [106] |  |  |  |  |  | (DRL) DDPG | 1 | Simulation | Resource-hungry applications |
| [120] |  |  |  |  |  | (DRL) DQN | 1 | Simulation | Generic user applications |
| [107] |  |  |  |  |  | DL | 1 | Simulation | IIoT |
| [108] |  |  |  |  |  | (MARL) DDPG | n | Simulation | IoT applications |
| [109] |  |  |  |  |  | (MARL) Q-Learning | n | Simulation | Generic user applications |
| [110] |  |  |  |  |  | ML supervised | 1 | Experimental | IoT with ML jobs |
| [111] |  |  |  |  |  | (DRL) DQN | 1 | Simulation | Generic user applications |
| [112] |  |  |  |  |  | (MADRL) DQN | n | Simulation | IIoT applications |
| [113] |  |  |  |  |  | DL-unsupervised | 1 | Simulation | Mobile applications |
| [114] |  |  |  |  |  | DL + Pareto optimization | 1 | Simulation | V2X applications |
| [115] |  |  |  |  |  | (DRL) DQN | 1 | Simulation | V2X applications |
| [116] |  |  |  |  |  | ML, MAB | 1 | Emulation | V2X applications |
| [117] |  |  |  |  |  | MAB | 1 | Simulation | IoT application |
| [118] |  |  |  |  |  | (DRL) DQN | 1 | Simulation | Generic user applications |
| [119] |  |  |  |  |  | (MARL) DDPG + LSTM | n | Simulation | Payment application |

A summary of ML-based offloading literature is shown in Table V. The comparisons are classified based on the offloading types in the federation. Like traditional optimization-based offloading, the commonly used metrics in the literature are cost, energy, capacity, and latency. The ML-based offloading methods are supervised ML, DL, RL, and DRL. Each ML algorithm has different characteristics, which are discussed in Table IV.

IV-C Traditional Optimization vs. Machine Learning

Three reasons why machine learning is required for offloading in federated MEC systems are summarized in Table VI. First, a control plane module must make an immediate decision about offloading. Traditional optimization, with its high computational complexity and exhaustive searching, cannot meet a control plane’s latency requirement. Second, monitoring dynamic MEC environments is not trivial, so the control plane module that determines the offloading policy may have to act on unknown or incomplete information. Third, modelling a heterogeneous MEC system precisely is challenging. Some researchers carried out traditional optimization in federated offloading using a system snapshot.

The papers [103, 105, 108, 120] employed ML to achieve fast offloading decisions in a complex federated system. These offloading decisions and resource allocations were modelled as mixed-integer nonlinear programming (MINLP) problems, which take a long time to solve with conventional optimization. Yang et al. [103] used DL approaches that solved the MINLP problem in near-real-time. DL also outperformed a conventional branch-and-bound algorithm in terms of system costs. A mobile device in an MEC system must make online offloading decisions in a complex and dynamic system, which forces relaxation-based and local-search-based approaches to rerun after every change to the environment. These traditional optimization algorithms carry out exhaustive searching, which is not suitable for online decisions. Zhang et al. [120] extended a heuristic algorithm to the DQN, resulting in a fast-convergence algorithm suitable for real-time application offloading. Huang et al. [105] proposed a Lyapunov-aided DRL framework to determine the offloading policy in near-real-time, with a near-optimum result compared to exhaustive searching approaches.

Offloading in dynamic federated systems with unknown information was addressed with ML-based approaches in the papers [114, 119, 98, 102, 115]. Fan et al. [114] extended an SDN controller with DL to learn a dynamic V2X system and carry out optimum offloading. This approach outperformed conventional traffic offloading (CTO), which uses heuristic algorithms, in terms of network throughput. Gao et al. [119] modelled the offloading problem of V2X systems as a Multi-Armed Bandit (MAB) problem and solved it with Probability-Based V2X Communication (PBVC) and adaptive learning-based task offloading (ALTO). Ale et al. [98] proposed DRL to address dynamic MEC systems for IoT. Current optimization techniques take only a snapshot of a system and cannot address the dynamic environment. In their previous work, Ale et al. [121] predicted traffic conditions and updated the cache by using DL. However, DL needs a large, labeled data set to train models.

Channel conditions and the available communication and computation resources change dynamically over time. Such changes may render some information unknown to the IIoT agents that determine the offloading policy. Guo et al. [102] used a multi-agent DDPG approach to tackle an offloading problem with some unknown or incomplete information. For a conventional algorithm such as Greedy to work in this scenario, assumptions had to be made, such as agents being aware of the channel and resource conditions in real time. In terms of the success rate in utilizing available channels, the results showed that MADDPG outperforms the Greedy algorithm. Ning et al. [115] addressed offloading and resource allocation problems by using a DRL approach. The proposed DRL approach achieved higher system utility than Greedy and slightly lower utility than Brute-force. However, Brute-force carries out exhaustive searching, which is not suitable for a control plane.
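The trade-off between Greedy and Brute-force baselines can be seen on a toy problem. The Python sketch below is only an illustration under stated assumptions: tasks with known processing times are assigned to identical servers to minimize the makespan, which is a simplification and not the system model of any surveyed paper. The function names are hypothetical.

```python
from itertools import product
from typing import List, Tuple


def greedy_makespan(task_times: List[float], n_servers: int) -> float:
    """Assign each task, in arrival order, to the currently least-loaded server."""
    loads = [0.0] * n_servers
    for t in task_times:
        loads[loads.index(min(loads))] += t
    return max(loads)


def brute_force_makespan(task_times: List[float], n_servers: int) -> Tuple[float, int]:
    """Enumerate every assignment; return the best makespan and the number tried."""
    best = float("inf")
    evaluated = 0
    # n_servers ** len(task_times) candidate assignments: exponential in task count.
    for assignment in product(range(n_servers), repeat=len(task_times)):
        loads = [0.0] * n_servers
        for t, server in zip(task_times, assignment):
            loads[server] += t
        best = min(best, max(loads))
        evaluated += 1
    return best, evaluated
```

For task times `[3, 3, 2, 2, 2]` on two servers, the greedy rule yields a makespan of 7, whereas exhaustive search finds 6 after evaluating 32 assignments. The search space grows as the number of servers raised to the number of tasks, which is why exhaustive search does not fit a control plane's time budget.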

A heterogeneous federated system is difficult to model precisely, which makes traditional offloading optimization difficult to implement. The papers [116, 106] used ML to carry out offloading in such a heterogeneous system. In Sonmez et al. [116], the ML-based approach outperformed game-theory-based optimization in terms of task success. Quality of experience (QoE)-aware task offloading in a Mobile Edge Network (MEN), which has heterogeneous computation and communication resources, is difficult to model for conventional optimization. He et al. [106] therefore proposed Double DDPG, whose learning agents automatically update their model based on their experience of interacting with the environment. The proposed method outperformed Greedy in terms of latency.

| References | ML Approach | Traditional Optimization | Reason for using ML | Conclusion |
|---|---|---|---|---|
| [103] | DL | Branch and bound | Computation complexity | ML-based offloading has lower cost |
| [120] | DRL | Greedy and heuristic | Computation complexity | ML-based offloading has the lowest convergence time with better latency and energy usage |
| [105] | DRL | Relaxation-based and local-search-based approaches | Computation complexity | ML-based offloading has the lowest convergence time with a near-optimum result in terms of computation rate |
| [114] | DL | Heuristic CTO | Unknown information in dynamic environment | ML-based offloading has higher throughput |
| [119] | MAB | Greedy | Unknown information in dynamic environment | ML-based offloading has lower latency |
| [98] | DRL | Greedy | Unknown information in dynamic environment | ML-based offloading completes more tasks |
| [102] | MADDPG | Greedy | Unknown information in dynamic environment | ML-based offloading has lower latency and a higher channel access success rate |
| [115] | DQN | Greedy, Brute-force | Unknown information in dynamic environment | ML-based offloading has higher system utility than Greedy and slightly lower than Brute-force |
| [116] | ML, MAB | Game theory optimization | Heterogeneous environment | ML-based offloading has fewer failed tasks |
| [106] | Double DDPG | Greedy | Heterogeneous environment | ML-based offloading has lower latency |

The references in Table VI do not specifically compare the traditional optimizations with the ML-based approaches. Most of them used model-free reinforcement learning approaches, such as DQN and DDPG, because these learn directly from interaction with the environment and do not require a model of the environment to be provided to the learning agent. The Greedy algorithm is the preferred traditional baseline because, even with incomplete information from the environment, Greedy can still converge, although it may become stuck in local optima. ML-based approaches can converge faster than traditional optimization with near-optimum results.
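What "model-free" means in practice can be sketched with tabular Q-learning, the simplest relative of DQN. The Python example below is a toy under stated assumptions, not a reproduction of any surveyed method: the two-state environment (edge lightly loaded or congested), the latency values in `LATENCY`, and all hyperparameters are hypothetical. The agent only observes sampled rewards and next states and is never given the latency table or the transition probabilities.

```python
import random
from typing import Dict, Tuple

# Hypothetical environment. State 0: edge lightly loaded; state 1: edge congested.
# Action 0: compute locally; action 1: offload to the edge.
LATENCY = {(0, 0): 5.0, (0, 1): 2.0, (1, 0): 5.0, (1, 1): 9.0}


def step(state: int, action: int, rng: random.Random) -> Tuple[float, int]:
    """Return (reward, next_state); the reward is the negative latency."""
    reward = -LATENCY[(state, action)]
    next_state = rng.randint(0, 1)  # edge load evolves independently of the action
    return reward, next_state


def train_q_table(steps: int = 5000, alpha: float = 0.1, gamma: float = 0.5,
                  epsilon: float = 0.1, seed: int = 0) -> Dict[Tuple[int, int], float]:
    """Learn Q(s, a) from sampled transitions with epsilon-greedy exploration."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in (0, 1) for a in (0, 1)}
    state = 0
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randint(0, 1)  # explore
        else:
            action = max((0, 1), key=lambda a: q[(state, a)])  # exploit
        reward, next_state = step(state, action, rng)
        best_next = max(q[(next_state, 0)], q[(next_state, 1)])
        # Q-learning update: move the estimate toward the bootstrapped target.
        q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
        state = next_state
    return q


def greedy_policy(q: Dict[Tuple[int, int], float]) -> Dict[int, int]:
    """Extract the action with the highest learned value in each state."""
    return {s: max((0, 1), key=lambda a: q[(s, a)]) for s in (0, 1)}
```

After training, the extracted policy offloads when the edge is lightly loaded and computes locally when it is congested, which matches the lower-latency choice in each state of this toy environment.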

V Lessons Learned

We categorize the lessons learned from this survey according to the two approaches surveyed: traditional optimization and machine learning.

V-A Traditional Optimization-Based Offloading

The surveyed papers on traditional optimization-based offloading explore the basic ideas of carrying out offloading in a cloud-edge-fog system and yield the following lessons.

1) Traffic offloading is a short-term solution to a dynamic traffic arrival rate, while capacity allocation is a long-term solution. Traffic and task offloading in an MEC system can be categorized into control plane and management plane problems. On the arrival of traffic or a task, the control plane decides on an offloading policy in pursuit of an objective such as minimizing latency. The control plane responds to arriving traffic in a matter of seconds. On the other hand, the management plane forecasts future traffic or task arrival rates based on historical data. The system’s capacity is then scaled to accommodate the predicted offloaded traffic. By integrating the control and management plane modules, it is possible to meet the latency requirements of arriving traffic or tasks while allocating the fewest possible resources.

2) There are two offloading decisions to be made: where to offload and how much to offload. An offloading decision can be a binary decision, which is a decision to offload or not, or a ratio-based offloading decision, which determines how much and where to offload tasks or traffic. Binary offloading is usually carried out by UEs, as UEs lack complete knowledge of external system resources. A UE compares its own capacity to compute a task locally against offloading the task to external resources. Ratio-based offloading is carried out by network devices controlled by an orchestrator, which has global information to determine where and how much to offload.
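The difference between binary and ratio-based decisions can be illustrated with a minimal Python sketch. The latency model is an assumption introduced here, not taken from the surveyed papers: a task is arbitrarily divisible, the local and offloaded parts run in parallel, `t_local` is the time to compute the whole task locally, and `t_offload` is the time to transmit and compute the whole task remotely. All function names are hypothetical.

```python
def binary_decision(t_local: float, t_offload: float) -> bool:
    """Binary offloading: offload the whole task only if that is faster."""
    return t_offload < t_local


def split_latency(ratio: float, t_local: float, t_offload: float) -> float:
    """Completion time when a fraction `ratio` is offloaded and the rest runs locally.

    Both parts are assumed to run in parallel, so the slower part dominates.
    """
    return max((1.0 - ratio) * t_local, ratio * t_offload)


def optimal_ratio(t_local: float, t_offload: float) -> float:
    """Ratio that equalizes both parts: (1 - r) * t_local == r * t_offload."""
    return t_local / (t_local + t_offload)
```

For instance, with `t_local = 1.0` s and `t_offload = 0.2` s, the binary decision is to offload (0.2 s), while offloading a fraction of about 0.83 and computing the rest locally finishes in roughly 0.17 s under this model. Computing such a ratio requires knowledge of both local and remote resources, which is consistent with ratio-based decisions being made by an orchestrator with global information.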