A Survey on Multi-modal Summarization

Abstract.

The new era of technology has brought us to the point where it is convenient for people to share their opinions over an abundance of platforms. These platforms have a provision for the users to express themselves in multiple forms of representations, including text, images, videos, and audio. This, however, makes it difficult for users to obtain all the key information about a topic, making the task of automatic multi-modal summarization (MMS) essential. In this paper, we present a comprehensive survey of the existing research in the area of MMS, covering various modalities like text, image, audio, and video. Apart from highlighting the different evaluation metrics and datasets used for the MMS task, our work also discusses the current challenges and future directions in this field.

1. Introduction

Everyday, the Internet is flooded with tons of new information coming from multiple sources. Due to the technological advancements, people can now share information in multiple formats with various modes of communication to be used at their disposal. This alarmingly increasing amount of content on the Internet makes it difficult for the users to receive useful information from the torrent of sources, necessitating research on the task of multi-modal summarization (MMS). Various studies have shown that including multi-modal data as input can indeed help improve the summary quality (Jangra et al., 2020b; Li et al., 2017). Zhu et al. (2018) claimed that on an average having a pictorial summary can improve the user satisfaction by 12.4% over a plain text summary. The fact that nearly every content sharing platform has a provision to accompany an opinion or fact in multiple media forms, and every mobile phone has the feature to deliver that kind of facility are indicative of the superiority of a multi-modal means of communication in terms of ease in conveying and understanding information.

Information in the form of multi-modal inputs has been leveraged in many tasks other than summarization including multi-modal machine translation (Specia, 2018; Caglayan et al., 2019; Huang et al., 2016; Elliott, 2018; Elliott et al., 2017), multi-modal movement prediction (Wang et al., 2018; Kirchner et al., 2014; Cui et al., 2019), multi-modal question answering (Singh et al., 2021), multi-modal lexico-semantic classification (Jha et al., 2022), multi-modal keyword extraction (Verma et al., 2022), product classification in e-commerce (Zahavy et al., 2016), multi-modal interactive artificial intelligence frameworks (Kim et al., 2018), multi-modal emoji prediction (Barbieri et al., 2018; Coman et al., 2018), multi-modal frame identification (Botschen et al., 2018), multi-modal financial risk forecasting (Sawhney et al., 2020; Li et al., 2020b), multi-modal sentiment analysis (Yadav and Vishwakarma, 2020; Morency et al., 2011; Rosas et al., 2013), multi-modal named identity recognition (Moon et al., 2018b; Arshad et al., 2019; Zhang et al., 2018; Moon et al., 2018a; Yu et al., 2020; Suman et al., 2020), multi-modal video description generation (Ramanishka et al., 2016; Hori et al., 2017, 2018), multi-modal product title compression (Miao et al., 2020) and multi-modal biometric authentication (Snelick et al., 2005; Fierrez-Aguilar et al., 2005; Indovina et al., 2003). The shear number of application possibilities for multi-modal information processing and retrieval tasks are quite impressive. Research on multi-modality can also be utilized in other closely related research problems like image-captioning (Chen and Zhuge, 2019, 2020), image-to-image translation (Huang et al., 2018), seismic pavement testing (Ryden et al., 2004), aesthetic assessment (Zhang et al., 2014; Kostoulas et al., 2017; Liu and Jiang, 2020), and visual question-answering (Kim et al., 2016a).

Text summarization is one of the oldest problems in the fields of natural language processing (NLP) and information retrieval (IR), that has attracted various researchers due to its challenging nature and potential for many applications. Research on text summarization can be traced back to more than six decades in the past (Luhn, 1958). The NLP and IR community have tackled research in text summarization for multiple applications by developing myriad of techniques and model architectures (See et al., 2017; Chen and Bansal, 2018; Jangra et al., 2020a; Liu et al., 2022). As an extension to this, the problem of multi-modal summarization adds another angle by incorporating visual and aural aspects into the mix, making the task more challenging and interesting to tackle. This extension of incorporating multiple modalities into a summarization problem expands the breadth of the problem, leading to wider application range for the task. In recent years, multi-modal summarization has experienced many new developments, including release of new datasets, advancements in techniques to tackle the MMS task, as well as proposals of more appropriate evaluation metrics. The idea of multi-modal summarization is a rather flexible one, embracing a broad range of possibilities for the input and output modalities, and also making it difficult to apprehend existing works on the MMS task with knowledge of uni-modal summarization techniques alone. This necessitates a survey on multi-modal summarization.

The MMS task, just like any uni-modal summarization task, is a demanding one, and existence of multiple correct solutions makes it very challenging. Humans creating a multi-modal summary have to use their prior understanding and external knowledge to produce the content. Establishing computer systems to mimic this behaviour becomes difficult given their inherent lack of human perception and knowledge, making the problem of automatic multi-modal summarization a non-trivial but interesting task.

Although quite a few survey papers were written for uni-modal summarization tasks including surveys on text summarization (Yao et al., 2017; Gambhir and Gupta, 2017; Tas and Kiyani, 2007; Nenkova and McKeown, 2012; Gupta and Lehal, 2010; Jain et al., 2022a) and video summarization (Kini and Pai, 2019; Sebastian and Puthiyidam, 2015; Money and Agius, 2008; Hussain et al., 2020; Basavarajaiah and Sharma, 2019), and a few survey papers covering multi-modal research (Baltrušaitis et al., 2018; Soleymani et al., 2017; Atrey et al., 2010; Jaimes and Sebe, 2007; Ramachandram and Taylor, 2017; Sebe et al., 2005). However, to the best of our knowledge, we are the first to present a survey on multi-modal summarization. The closest work to ours is the work on multi-dimensional summarization by Zhuge (2016), who proposes the method for summarization of things in cyber-physical society through a multi-dimensional lens of semantic computing. However, our survey is distinct from that work as Zhuge (2016) focuses on how understanding human behaviour, psychology, and advances in cognitive sciences can help to improve the current summarization systems in the emerging cyber-physical society while in this manuscript we mostly focus on the direct applications and techniques adopted by the research community to tackle the MMS task. Through this manuscript, we unify and systematize the information presented in related works, including the datasets, methodology, and evaluation techniques. With this survey, we aim to assist researchers familiarize with various techniques and resources available to proceed with research in the area of multi-modal summarization.

The rest of the paper is structured as follows: We formally define the MMS task in Section 2. In Section 3, we provide an extensive organization of existing works. In Section 4, we give an overview about the techniques used for the task of MMS. In Section 5 we introduce the datasets available for the MMS task and evaluation techniques devised for the evaluation of multi-modal summaries, respectively. We discuss about possibilities of future work in Section 7 and conclude our paper in Section 8.

2. Multi-modal Summarization task

In this section we formally define what classifies as a multi-modal summarization task. Before formalizing the multi-modal summarization we broadly define the term summarization111In this paper, summarization stands for automatic summarization unless specified otherwise.. According to Wikipedia222https://en.wikipedia.org/wiki/Automatic_summarization, automatic summarization is “the process of shortening a set of data computationally, to create an abstract that represents the most important or relevant information within the original content.” Formally, summarization is the process of obtaining the set such that , where is the output summary, is the input data, and function is the summarization function.

The multi-modal summarization task can be defined as a summarization task that takes more than one mode of information representation (termed as modality) as input, and depends on information sharing across different modalities to generate the final summary. Mathematically speaking, when the input dataset can be broken down into several partially disjoint sets of different modality information , where and several pairs of for such that the shared latent information between is not , then the task of obtaining the set is known as multi-modal summarization333The reason for restricting for the task definition is limitation of current techniques, that are unable to successfully generate modalities other than text for multi-modal summarization. Even though there have been some recent breakthroughs in text-to-image generation (like Open AI’s DALL-E (Ramesh et al., 2021)), and text-to-speech synthesis (like Google’s Duplex (Leviathan and Matias, 2018)); they still lack the level of integrity and robustness to be used in a real-world application like MMS.. If for , then the output summary is multi-modal; otherwise, the output is a uni-modal summary.

In this survey, we mainly focus on recent works that have natural language as the central modality444We believe that the MMS models that have video as the central modality tend to be closely related to the task of video summarization., where a central modality (or key modality) is selected according to the intuition: ”For any information processing task in multi-modal scenarios, including content summarization, amongst all the modalities, there is often a preferable mode of representation based on the significance and ability to fulfill the task” (Jangra et al., 2021). Other modalities that aid the central modality to convey information are termed as adjacent modalities.

Various aspects of multi-modal summarization: Literature has explored the MMS task for myriad of reasons and motives, and doing so, has lead to different challenges and variants of the task. Some of the most prominent and interesting ones are discussed below:

-

•

Combined complementary-supplementary multi-modal summarization task (CCS-MMS) (Jangra et al., 2021): Jangra et al. (2021) proposed the CCS-MMS task of generating a multi-modal summary that considers text as the central modality, and images, audio and videos as the adjacent modality. The task is to generate the multi-modal summary such that it consists of both supplementary and complementary enhancements, which are defined as follows:

-

–

Supplementary enhancement: When the adjacent modalities reinforce the facts and ideas presented in the central modality, the adjacent modalities are termed as supplementary enhancements.

-

–

Complementary enhancement: When the adjacent modalities complete the information by providing additional but relevant information that is not covered in the central modality, the adjacent modalities are termed as complementary enhancements.

-

–

-

•

Summarization objectives: We can distinguish prior work based on summarization objectives they have used. For instance, Li et al. (2017) uses weighted sum of three sub-modular objective functions to create an extractive text summarization system that is guided by multi-modal inputs. The chosen submodular functions are - salience of input text, image information covered by text summary, and non-redundancy in input text. Jangra et al. (2020b) uses a single objective function for an ILP setup, that is the weighted average of uni-modal salience, and cross-modal correspondence. Jangra et al. (2020c) proposes two different sets of multi-modal objectives for the task of extractive multi-modal summary generation - a) summarization-based objective, and b) clustering-based objectives. For summarization-based objectives, they use the following three objectives - i) Salience(txt) / Redundancy(txt), ii) Salience(img) / Redundancy(img), and iii) cross-modal correspondence; while for clustering-based objectives, they use PBM (Pakhira et al., 2004), a popular cluster validity index (a function of cluster compactness and separation) to evaluate the uni-modal clusters of image and text, giving the following set of objectives - i) PBM(txt), PBM(img), and cross-modal correspondence. Almost all the neural networks based multi-modal summarization frameworks (Li et al., 2018a; Palaskar et al., 2019; Chen and Zhuge, 2018a, b) on the other hand use the standard negative log-likelihood function over the output vocabulary as the training objective. Some works also use textual and visual coverage loss to prevent over-attending the input as well (Li et al., 2018a; Zhu et al., 2018).

-

•

Multi-modal social media event summarization: Various works have been conducted on the social media data that consists of opinions and experiences of a diverse set of population. Tiwari et al. (2018) proposes the problem of summarizing asynchronous information from multiple social media platforms like Twitter, Instagram, and Flickr to generate a summary of event that is widely covered by users of these platforms extensively. Bian et al. (2013) propose multi-modal summarization of trending topics in microblogs. They use Sina Weibo555http://www.weibo.com/ microblogs for the experimentation, which is a very popular microblogging platform in China. Qian et al. (2018) uses the Weibo platform information to summarize disaster events like train crash and earthquakes.

3. Organization of existing work

Different attempts have been made to solve the MMS task, and thus it is important to categorize the existing works to get a better understanding of the task. We categorize the prior works into three broad categories, depending upon encoding the input, the model architecture, and decoding the output. We have also illustrated these categorizations through a generic model diagram in Figure 1. A detailed pictorial representation of the taxonomy is shown in Figure 2 and a comprehensive study is provided in Table 2 (note that if some classifications are not marked in the table, then either the information about that category was not present, or is not applicable.).

3.1. On the basis of encoding the input

A multi-modal summarization task is highly driven by the kind of input it is given. Due to this dependency on diverse input modalities, the feature extraction and encoding strategies also differ for different multi-modal summarization systems. Existing works can be distinguished from others on the basis of the type of input and its encoding strategy in the following categories:

Multi-modal Diversity (MMD): Different combinations of input (text, image,video & audio) involve different preprocessing and encoding strategies. We can classify the existing works depending on the combination of modalities in which the input is represented. Various combinations within the input modalities like text-image (Zhu et al., 2018; Li et al., 2018a; Chen and Zhuge, 2018a), text-video (Fu et al., 2020; Li et al., 2020a), audio-video666Note that audio-video and text-audio-video works are grouped together since in most of the existing works, automatic speech transcription is performed to obtain the textual modality part of data in the pre-processing step. (Erol et al., 2003; Evangelopoulos et al., 2013), and text-image-audio-video (UzZaman et al., 2011; Li et al., 2017; Jangra et al., 2020b, c, 2021) have been explored in the literature of MMS. The different feature extraction strategies for individual modalities are described in Section 3.1.1.

Input Text Multiplicity (ITM): Since a major focus of this survey is on MMS tasks with text as the central modality, the number of text documents in input can also be one way of categorizing the related works. Depending upon whether the textual input is single-document (Chen and Zhuge, 2018b; Li et al., 2018a; Zhu et al., 2018) or multi-document (Li et al., 2017; Jangra et al., 2020b, c, 2021), the input preprocessing and the overall summarization strategies might differ. Having multiple documents makes the task a lot more challenging, since the degree of redundant information in input becomes a lot more prominent, making the data somewhat more noisy (Ma et al., 2020).

Multi-modal Synchronization (MMSy)777Note that the term synchronization is mostly used when there is a continuous media in consideration.: Synchronization refers to the interaction of two or more things at the same time or rate. For multi-modal summarization, having a synchronized input indicates that the multiple modalities have a coordination in terms of information flow, making them convey information in unison. We then classify input as synchronous (Erol et al., 2003; Evangelopoulos et al., 2013) and asynchronous (Li et al., 2017; Jangra et al., 2020b, c; Tjondronegoro et al., 2011; Jangra et al., 2021).

Domain Specificity (DS): Domain can be defined as the specific area of cognition that is covered by any data, and depending upon the extent of domain coverage, we can classify works as domain-specific or generic. The approach to summarize a domain-specific input can differ from the generic input greatly, since feature extraction in the former can be very particular in nature while not so in the latter, impacting the overall techniques immensely. Most of the news summarization tasks (Jangra et al., 2020c; Zhu et al., 2018; Li et al., 2017; Chen and Zhuge, 2018a; Jangra et al., 2021) are generic in nature, since news covers information about almost all the domains; whereas movie summarization (Evangelopoulos et al., 2013), sports event summarization for tennis (Tjondronegoro et al., 2011) and soccer (Sanabria et al., 2019), meetings recording summarization (Erol et al., 2003), tutorial summarization (Libovickỳ et al., 2018), social media event summarization (Tiwari et al., 2018) are examples of domain specific tasks.

3.1.1. Feature Extraction Strategies

In a multi-modal setting, pre-processing & feature extraction becomes vital step, since it involves extracting features from different modalities. Each input modality has been dealt with using modal-specific feature-extraction techniques. Even though some works tend to learn the semantic representation of data using their own proposed models, nearly all follow the same steps for feature extraction. Since the related works have different sets of input modalities, we describe feature extraction techniques for each modality individually.

Text: Traditionally, before the era of deep learning, Term Frequency-Document Inverse Frequency (TF-IDF) (Salton, 1989) was used to identify relevant text segments (Erol et al., 2003; Tjondronegoro et al., 2011; Evangelopoulos et al., 2013). Due to significant advancements in feature extraction, almost all the MMS tasks in the past five years either use pre-trained embeddings like word2vec (Mikolov et al., 2013) or Glove (Pennington et al., 2014). These pre-trained embeddings utilize the fact that the semantic information of a word is related to its contextual neighbors for training. Some works also train similar embeddings on their own datasets (Zhu et al., 2018, 2020a) (refer to Feature Extraction in Section 4.1.1). Some works also adopt different pre-processing steps, depending upon the task specifications. For example, Tiwari et al. (2018) applied a normalizer to handle the concept of expressive lengthening dealing with microblog datasets. Even though current MMS systems have not yet adopted them, it is worth mentioning Transformer-based word representations (Vaswani et al., 2017) like BERT, etc. that have achieved state-of-the-art performance in the vast majority of NLP and vision tasks. This achievement can be credited to their fast training due to parallelization, and ability to pre-train the language models on unlabelled corpora. We even have multi-lingual embeddings like LabSE (Feng et al., 2020), and multi-modal text-image embeddings like UNITER (Chen et al., 2020), VilBERT (Lu et al., 2019), VisualBERT (Li et al., 2019), Pixel-BERT (Huang et al., 2020), etc.

| Pre-trained network | Works using this framework |

|---|---|

| VGGNet (Simonyan and Zisserman, 2015) | Li et al. (2017), Li et al. (2018a), Zhu et al. (2018), Chen and Zhuge (2018a), Zhu et al. (2020a), Jangra et al. (2020b), Jangra et al. (2020c), Chen and Zhuge (2018b), Modani et al. (2016), Jangra et al. (2021) |

| ResNet (He et al., 2016) | Fu et al. (2020), Li et al. (2020a), Li et al. (2020c) |

| GoogleNet (Szegedy et al., 2015) | Sanabria et al. (2019) |

Images: Images, unlike text, are non-sequential and have a two-dimensional contextual span. Convolutional neural network (CNN) based deep neural network models have proven to be very promising in feature extraction tasks, but training these models requires large datasets, making it difficult to train features on MMS datasets. Hence, most of the existing works use pre-trained networks (e.g., ResNet (He et al., 2016), VGGNet (Simonyan and Zisserman, 2015), GoogleNet (Szegedy et al., 2015)) trained on large image classification datasets like ImageNet (Deng et al., 2009). The technique of extracting local features (containing information about a confined patch of image) along with global features has shown promise in the MMS task as well (Zhu et al., 2018). A detailed list of frameworks that use pre-trained deep learning networks can be found in Table 1. Tiwari et al. (2018) uses Speeded-Up Robust Features (SURF) for each image, following a bag-of-word approach to creating a visual vocabulary. Chen and Zhuge (2018b) handle images by first extracting the Scale Invariant Feature Transform (SIFT) features. These SIFT features are fed to a hierarchical quantization module (Qian et al., 2014) to obtain a 10,000-dimensional bag of the visual histogram. Having been inspired by the success of self-attention and Transformers (Vaswani et al., 2017) in effectively modeling textual sequences, researchers in computer vision have adopted the techniques like self-attention, unsupervised pre-training, parallelizability of transformer architecture, etc. to better model the image representations888The readers are encouraged to read the extensive survey provided by Khan et al. (2021).. In order to adopt the self-attention layer dedicated to text sequences, Parmar et al. (2018) proposed a framework that restricts the self-attention to the local neighborhoods, thus significantly increasing the size of images that the model can process, despite maintaining larger receptive fields per layer than a CNN framework. Dosovitskiy et al. (2020) illustrated that usage of self-attention in conjunction with CNNs is not required, and a pure transformer applied to the sequence of image patches can also perform well on image classification tasks. Touvron et al. (2021) developed and optimized deep image transformer frameworks that do not saturate early with more depth.

To the best of our knowledge, none of the existing multi-modal summarization works use image transformers to encode the images. Since these large-scale models have a lot more capability to store more learned patterns from large-scale datasets due to the huge parameter space, they are bound to improve the overall summarization process by aiding in better image understanding.

Audio and video: Audio and video are usually present together as a single synchronized continuous media, and hence we discuss the pre-processing techniques used to extract features from them simultaneously. Continuous media has been processed in many diverse ways. Since audios and videos are susceptible to noise, it becomes of utmost importance to detect relevant segments before proceeding to the training phase999Note that some deep neural models like Fu et al. (2020) or Li et al. (2020a) prefer to encode individual frames using CNNs, and then use trainable RNNs to encode temporal information in videos. This CNN-RNN framework is not part of pre-processing, but instead, it belongs to the main model since these layers are also affected during training.. While some works have adopted a naïve sliding window approach, making equal length cuts and further experimenting on these segments (Erol et al., 2003), quite a few have done a modal conversion, changing the information media using automatic speech transcription to generate speech transcriptions and extracting key-frames from video using techniques like boundary shot-detection (Jangra et al., 2020c, b; Li et al., 2017; Tjondronegoro et al., 2011; Jangra et al., 2021). Some works have also taken into account the nature of the dataset, and performed semantic segmentation, getting better segment slices. For example, Tjondronegoro et al. (2011) worked on a tennis dataset and used the information that the umpire requires the audience to remain quiet during the match point, performing segmentation consisting of a segment that begins with low audio activity followed by high audio energy levels as a result of the cheering and the commentary. If the audio and video are converted into another modality, then their pre-processing follows the same procedure as the new modalities, whereas, in the case of segmentation, various metrics like acoustic confidence, audio magnitude, sound localization for audio, motion detection, and Spatio-temporal features driven by intensity, color, and orientation for video have been explored to determine the salience and relevance of segments depending upon the task at hand (Erol et al., 2003; Li et al., 2017; Evangelopoulos et al., 2013).

Cross-modal correspondence: Although the majority of works train their own shared embedding space for multiple modalities using the information from the target datasets (Li et al., 2018a; Zhu et al., 2018; Libovickỳ et al., 2018), quite a few works (Jangra et al., 2020c, b; Li et al., 2017; Modani et al., 2016; Jangra et al., 2021) also tend to use pre-trained neural network models (Wang et al., 2016; Karpathy et al., 2014) trained on the image-caption datasets like Pascal1k (Rashtchian et al., 2010), Flickr8k (Hodosh et al., 2013), Flick30k (Young et al., 2014) etc. to leverage the information overlap amongst different modalities. This becomes a necessity for small datasets that are mostly used for extractive summarization. However even these pre-trained models cannot process raw data, and hence the text and image inputs are first pre-processed to desired embedding formats and then are fed to these models with pre-trained weights. For example, Wang et al. (2016) required a 6,000-dimensional sentence vector and 4,096-dimensional image vector generated by applying Principal Component Analysis (PCA) (Pearson, 1901) to the 18,000-dimensional output from the Hybrid Gaussian Laplacian mixture model (HGLMM) (Klein et al., 2014) and extracting the weights from the final fully connected layer, , from VGGNet (Simonyan and Zisserman, 2015), respectively. In recent years, various Transformer-based (Vaswani et al., 2017) models have also been developed to correlate semantic information across textual and visual modalities. These BERT (Devlin et al., 2019) inspired models include ViLBERT (Lu et al., 2019), VisualBERT (Li et al., 2019), VideoBERT (Sun et al., 2019), VLP (Zhou et al., 2020), Pixel-BERT (Huang et al., 2020) etc. to name a few. There has also been some video-text representation learning like (Lin et al., 2021) and (Patrick et al., 2020) that can be used to summarize multi-modal content with continuous modalities. However, none of the recent works on multi-modal summarization has utilized these transformer-based techniques in their system pipelines.

Domain specific techniques: Most of the systems proposed to solve the problem of multi-modal summarization are generic and can be adapted to other domains and problem statements as well. But, there do exist some works that benefit from the external knowledge of particular domains and problem settings to create better-performing systems. For instance, Tjondronegoro et al. (2011) utilizes the fact that in tennis, the umpire always requires spectators to be silent before a serve, until the end of the point. The authors also pointed out that the end of the point is usually marked by a loud cheer from the supporters of the players in the audience. They used this fact to perform smooth segmentation of tennis clips using audio energy levels to indicate the start and end positions of a segment. Similar to this, Sanabria et al. (2019) utilized atomic events in a game of soccer like a pass, goal, dribble, etc. to segment the video, which is later connected together to generate the summary. Other than sports, such domain-specific solutions have also been adopted in other domains. For example, Erol et al. (2003), when summarizing meeting recordings of a conference room, seeks out some visual activity like “someone entering the room” or “someone standing up to write something on a whiteboard” to detect some event likely to contain relevant information. In a different domain setting, people have benefited from other data pre-processing strategies, for instance, Li et al. (2020c) extracts various key aspects of products like “environmentally friendly refrigerators” or “energy efficient freezers” to generate a captivating summary for Chinese e-commerce products.

3.2. On the basis of method

A lot of various approaches have been developed to solve the MMS task, and we can organize the existing works on the basis of proposed methodologies as follows:

Learning process (LP): A lot of work has been done in both supervised learning (Zhu et al., 2020a; Libovickỳ et al., 2018; Chen and Zhuge, 2018b; Zhu et al., 2018; Li et al., 2018a) and unsupervised learning (Jangra et al., 2020c, b; Erol et al., 2003; Evangelopoulos et al., 2013; Li et al., 2017; Jangra et al., 2021). It can be observed that a large fraction of supervised techniques adopt deep neural networks to tackle the problem (Li et al., 2018a; Chen and Zhuge, 2018a; Zhu et al., 2018; Libovickỳ et al., 2018), whereas in unsupervised techniques a large diversity of techniques have been adopted including deep neural networks (Chen and Zhuge, 2018b), integer linear programming (Jangra et al., 2020b), differential evolution (Jangra et al., 2020c, 2021), submodular optimization (Li et al., 2017) etc.

Handling of continuous media (HCM): We can also distinguish between works depending upon how the proposed models handle continuous media (audio and video in this case). There are three broad distinctions possible, a) extracting-information, where the model extracts information from continuous media to get a discrete representation (Jangra et al., 2020c, b; Li et al., 2017; Jangra et al., 2021), b) semantic-segmentation, where a logical technique is proposed to slice out the continuous media (Tjondronegoro et al., 2011; Evangelopoulos et al., 2013, 2009), and c) sliding window, when a naïve fixed window based modeling is performed (Erol et al., 2003).

Notion of importance (NI): One of the most significant distinctions would be the notion of importance used to generate the final summary. A diverse set of objectives ranging from interestingness (Tjondronegoro et al., 2011), redundancy (Li et al., 2017), cluster validity index (Jangra et al., 2020c), acoustic energy / visual illumination (Evangelopoulos et al., 2009, 2013), and social popularity (Sahuguet and Huet, 2013) have been explored in attempt to solve the MMS task.

Cross-modal information exchange (CIE): The most important part of a MMS model is the ability to extract and share information across multiple modalities. Most of the works either adopt a proximity-based approach (Xu et al., 2013; Erol et al., 2003), a pre-trained model on image-caption pairs based corpora for information overlap (Jangra et al., 2020c, b; Li et al., 2017; Jangra et al., 2021), or learn the semantic overlap over uni-modal embeddings (Li et al., 2018a; Zhu et al., 2018, 2020a; Chen and Zhuge, 2018b, a).

Algorithms (A): The algorithm for the multimodal summarization task varies from traditional multiobjective optimization strategies to modern deep learning-based approaches. We can classify the existing works based on the different algorithms as Neural models (NN), Integer Linear Programming based models (ILP), Submodular Optimization-based models (SO), Nature-Inspired Algorithm based models (NIA), Graph-based models (G), and other algorithms (Oth). We have discussed these different methods in detail in Section 4.1. The other algorithms comprise different clustering-based, LDA (Blei et al., 2003) - based and audio-video analysis-based techniques which were earlier used for performing multimodal summarization.

3.3. On the basis of decoding the output

The summarization objective decides the desired type of output. For different summarization objectives, the type of output and decoding method vary. Depending on the type of output and the decoding method, we can categorize the existing works on the following basis:

Content intensity (CI): The degree to which an output summary elaborates on a concept can hugely impact the overall modeling. The output summary can either be informative, having detailed information about the input topic (Libovickỳ et al., 2018; Yan et al., 2012), or indicative, only hinting at the most relevant information (Zhu et al., 2018; Chen and Zhuge, 2018a).

Text Summarization Type (TST): The most widely discussed distinction for text summarization works is the distinction of extractive vs abstractive. Abstractive summarization systems generally use a beam search or greedy search mechanism for decoding the output summary. While extractive systems during decoding use some scoring mechanism to identify the salient, non-redundant, and readable elements from the input for the final output. Depending on the nature of an output text summary, we can also classify the works in MMS tasks (containing text in the output) into extractive MMS (Jangra et al., 2020c, b; Chen and Zhuge, 2018b; Li et al., 2017; Jangra et al., 2021) and abstractive MMS (Zhu et al., 2020a; Chen and Zhuge, 2018a; Zhu et al., 2018; Li et al., 2018a)101010Note that other modalities beside text have been so far subject to only extractive approaches in MMS researches..

Multi-modal expressivity (MME): Whether the output is uni-modal (comprising of one modality) (Libovickỳ et al., 2018; Li et al., 2018a; Chen and Zhuge, 2018b; Li et al., 2017; Evangelopoulos et al., 2013) or multi-modal (comprising of multiple modalities) (Jangra et al., 2020c, b; Zhu et al., 2020a; Chen and Zhuge, 2018a; Zhu et al., 2018; Tjondronegoro et al., 2011; Jangra et al., 2021) is a major classification for the existing work. Mostly the systems producing multimodal output involve some post-processing steps for selecting the final output elements from the non-central modalities.

Central modality (CM): Based on central-modality (defined in Section 2), existing works can also be distinguished depending on the base modality around which the final output, as well as the model, are formulated. A large portion of the prior work adopts either a text-centric approach (Jangra et al., 2020b; Libovickỳ et al., 2018; Chen and Zhuge, 2018a; Zhu et al., 2018; Li et al., 2018a, 2017; Jangra et al., 2021) or a video-centric111111Here audio is assumed to be a part of video since in all the existing works video and audio are synchronous to each other. approach (Sahuguet and Huet, 2013; Evangelopoulos et al., 2013; Tjondronegoro et al., 2011; Erol et al., 2003). Few of the decoding methods followed popularly in neural models have been discussed in detail in Section 4.1.1.

| Input Based | Output Based | Method Based | ||||||||||||||||||||||||||||

| Papers | MMD | ITM | MMSy | DS | CI | TST | MME | CM | LP | HCM | NI | CIE | A | |||||||||||||||||

|

Text |

Images |

Audio |

Video |

Single-doc |

Multi-doc |

Sync |

Async |

Domain Specific |

Generic |

Informative |

Indicative |

Abstractive |

Extractive |

Uni-modal |

Multi-modal |

Text |

Video |

Unsupervised |

Supervised |

Extracting info |

Semantic seg |

Sliding window |

Redundancy based |

Interestingness |

Other |

Image Caption |

Proximity |

Uni-modal embedding |

Algorithms |

|

| Erol et al. (2003) | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | Oth | ||||||||||||||||

| Tjondronegoro et al. (2011) | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | Oth | ||||||||||||||||

| UzZaman et al. (2011) | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | Oth | |||||||||||||||||||

| Evangelopoulos et al. (2013) | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | Oth | ||||||||||||||||

| Li et al. (2017) | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | SO, G | ||||||||||||||

| Li et al. (2018a) | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | NN | |||||||||||||||||

| Zhu et al. (2018) | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | NN | |||||||||||||||||

| Chen and Zhuge (2018a) | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | NN | |||||||||||||||||

| Libovickỳ et al. (2018) | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | NN | |||||||||||||||

| Palaskar et al. (2019) | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | NN | |||||||||||||||

| Zhu et al. (2020a) | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | NN | |||||||||||||||||

| Jangra et al. (2020b) | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ILP | ||||||||||||||

| Jangra et al. (2020c) | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | NIA | ||||||||||||||

| Jangra et al. (2021) | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | NIA | ||||||||||||||

| Xu et al. (2013) | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | NIA | |||||||||||||||||

| Sahuguet and Huet (2013) | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | Oth | |||||||||||||||

| Tiwari et al. (2018) | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | SO | |||||||||||||||||

| Bian et al. (2013) | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | Oth | |||||||||||||||||||

| Yan et al. (2012) | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | G | |||||||||||||||||||

| Qian et al. (2019) | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | Oth | ||||||||||||||||||

| Chen and Zhuge (2018b) | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | NN | |||||||||||||||||

| Evangelopoulos et al. (2009) | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | Oth | ||||||||||||||||

| Bian et al. (2014) | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | Oth | |||||||||||||||||||

| Fu et al. (2020) | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | NN | |||||||||||||||

| Li et al. (2020a) | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | NN | |||||||||||||||

| Li et al. (2020c) | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | NN | |||||||||||||||||

| Modani et al. (2016) | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | SO, G | |||||||||||||||||

| Sanabria et al. (2019) | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | NN | |||||||||||||

4. Overview of Methods

A lot of works have attempted to solve the MMS task using supervised and unsupervised techniques. In this section, we attempt to describe the MMS frameworks in a generalized manner, elucidating the nuances of different approaches. Since the variety of inputs, outputs and techniques that were used span a large spectrum of possibilities, we describe each one individually. We have broken down this section into three stages, pre-processing, main model, and post-processing.

4.1. Main Model

A lot of different techniques have been adopted to perform the MMS task using extracted features. Figure 3 illustrates the analysis of techniques adopted by researchers to solve the MMS task. We have tried to cover almost all the recent architectures that mainly focus on text-centric output summaries. In the approaches that have text as the central modality, the adjacent modalities are treated as a supplement to the text summaries, often getting selected at the post-processing step (Section 4.2).

4.1.1. Neural Models

A few extractive summarization models (Chen and Zhuge, 2018b) and almost all of the abstractive text summarization based MMS architectures (Li et al., 2018a; Zhu et al., 2018; Chen and Zhuge, 2018a; Libovickỳ et al., 2018; Zhu et al., 2020a) use Neural Networks (NN) in one form or another. Obtaining annotated dataset with sufficient instances to train these supervised techniques is the most difficult step for any Deep learning based MMS framework. The existing datasets satisfying these conditions belong to news domain, and have text-image type input (refer to datasets #4, #5, #6, #7, #19 in Table 3) or text-audio-video type input (refer to datasets #17, #18 in Table 3). All these frameworks utilize the effectiveness of seq2seq RNN models for language processing and generation, and encoding temporal aspects in videos; CNN networks are also adopted to encode discrete visual information in form of images (Zhu et al., 2018; Chen and Zhuge, 2018a) and video frames (Li et al., 2020a; Fu et al., 2020). All the neural models have an encoder-decoder architecture at their heart, having three key elements: 1) a feature extraction module (encoder), 2) a summary generation module (decoder), and 3) a multi-modal fusion module. Fig. 4 describes a generic neural model to generate text-image121212We formulate text-image summaries in our generic model since the existing neural models only output text (Li et al., 2018a; Chen and Zhuge, 2018b; Fu et al., 2020) or text-image (Li et al., 2020a; Zhu et al., 2020a; Chen and Zhuge, 2018a; Zhu et al., 2018) output. summaries for multi-modal input.

Feature Extraction (Encoder): Encoder is a generic term that entails both textual encoders as well as visual encoders. Various encoders have been explored to encode contextual information in textual modality, ranging from sentence-level encoders (Li et al., 2018a) to hierarchical document level encoders (Chen and Zhuge, 2018a) with Long Short Term Memory (LSTM) units (Hochreiter and Schmidhuber, 1997) or Gated Recurrent Units (GRU) (Cho et al., 2014) as the underlying RNN architecture. Most of the visual encoders do not train the parameter weights from scratch, but rather prefer to use CNN based pre-trained embeddings (refer to Section 3.1.1). However, notably, in order to capture the contextual information of images, Chen and Zhuge (2018a) used a bi-directional GRU unit to encode information from multiple images (encoded using VGGNet (Simonyan and Zisserman, 2015)) into one context vector, which is a unique approach for discrete image inputs. However, this RNN-CNN based encoding strategy is very standard approach adopted to encoding video input. Fu et al. (2020) and Li et al. (2020a) in their respective works use pre-trained CNNs to encode individual frames, and then feed them as input to randomly initialized bi-directional RNNs to capture the temporal dependencies across these frames. Libovickỳ et al. (2018) and Palaskar et al. (2019) use ResNeXt-101 3D Convolutional Neural Network (Hara et al., 2018) trained to recognize 400 diverse human actions on the Kinetics dataset (Kay et al., 2017) to tackle the problem of generating text summaries for tutorial videos from How2 dataset (Sanabria et al., 2018).

Multi-modal fusion strategies: A lot of fusion techniques have been developed in the field of MMS. However, most of the works that take text-image based inputs focus on multi-modal attention to facilitate a smooth information flow across the two modalities. Attention strategies has proven to be a very useful technique to help discard noise and focus on relevant information (Vaswani et al., 2017). The attention mechanism has been adopted by all the neural models that attempt to solve the MMS task. It has been applied to modal-specific information (uni-modal attention), as well as at the information sharing step in form of multi-modal attention to determine the degree of involvement of a specific modality for each input individually. Li et al. (2018a) proposed the hierarchical multi-modal attention for the first time to solve the task of multi-modal summarization of long sentences. The attention module comprises of individual text and image attention layers, followed by a subsequent layer of modality attention layer. Although multi-modal attention has shown great promise in text-image summarization tasks, it itself is not sufficient for text-video-audio summarization tasks (Li et al., 2020a). Hence, to overcome this weakness, Fu et al. (2020) proposed bi-hop attention as an extension of bi-linear attention (Kim et al., 2016b), and Li et al. (2020a) developed a novel conditional self-attention mechanism module to capture local semantic information of video conditioned on the input text information. Both of these techniques were backed empirically, and established state-of-the-art in their respective problems.

Decoder: Depending on the encoding strategy used, the textual decoders also vary from plain unidirectional RNN (Zhu et al., 2018) generating a word at a time to hierarchical RNN decoders (Chen and Zhuge, 2018a) performing this step in multiple levels of granularity. Although a vast majority of neural models focus only on generating textual summary using multi-modal information as input (Li et al., 2018a; Libovickỳ et al., 2018; Chen and Zhuge, 2018b; Palaskar et al., 2019; Li et al., 2020c), some work also output images as an supplement to the generated summary (Zhu et al., 2018; Chen and Zhuge, 2018a; Li et al., 2020a; Zhu et al., 2020a; Fu et al., 2020); reinforcing the textual information and improving the user experience. These works either use a post-processing strategy to select the image(s) to become a part of final multi-modal summary (Zhu et al., 2018; Chen and Zhuge, 2018a), or they incorporate this functionality in their proposed model (Zhu et al., 2020a; Fu et al., 2020; Li et al., 2020a). All the three frameworks that have implicit text-image summary generation characteristic adapt the final loss to be a weighted average of text generation loss together with the image selection loss. Zhu et al. (2020a) treats the image selection as a classification task and adopts cross-entropy loss to train the image selector. Fu et al. (2020) also treats the image selection process as a classification problem, and adopts an unsupervised learning technique that uses RL methods (Zhou et al., 2017). The proposed technique uses representativeness and diversity as the two reward functions for the RL learning. Li et al. (2020a) perceives the proposes a cover frame selector131313Model proposed by Li et al. (2020a) only selects one image per input, chosen from the video frames. that selects one image based on the hierarchical CNN-RNN based video encoding conditioned on article semantics using a conditional self-attention module. Li et al. (2020a) uses pairwise hinge loss to measure the loss during the model training.

Although the encoder-decoder model acts as the basic skeleton for the neural models solving MMS task, a lot of variations have been made, depending upon the input and output specifics. Zhu et al. (2018) proposes a visual coverage mechanism to mitigate the repetition of visual information. Li et al. (2018a) uses two image filters, namely image attention filter and image context filter to avoid noise introduction, filtering out useful information. Zhu et al. (2020a) proposes a multi-modal objective function, that generates multi-modal summary at the end of this step, avoiding any statistical post-processing step for image selection. Fu et al. (2020) utilizes the fact that audio and video are synchronous, and audio can easily be converted to textual format, utilizing these speech transcriptions as the the bridge across the asynchronous modalities of text and video. They also formulate various fusion techniques including early fusion (concatenation of multi-modal embeddings), tensor fusion (Zadeh et al., 2017), and late fusion (Liu et al., 2018) to enhance the information representation in the latent space.

4.1.2. ILP-based Models

Integer linear programming (ILP) has been used for text summarization in the past (Alguliev et al., 2010; Galanis et al., 2012), primarily for extractive summarization. Jangra et al. (2020b) has shown that if properly formulated, ILP can also be used to tackle the MMS task. More specifically, Jangra et al. (2020b) attempt to solve the problem of generating multi-modal summaries from a multi-document multi-modal news dataset by extracting necessary sentences, images and videos. They propose a Joint Integer Linear Programming framework that optimizes weighted average of uni-modal salience and cross-modal correspondence. The model takes pre-trained joint embedding of sentences and images as input, and performs a shared clustering, generating text clusters and image clusters. A recommendation-based setting is used to create the most optimal clusters. The text cluster centers are chosen to be the extractive text summary, and a multi-modal summary containing text, images and videos is generated at the post-processing step.

4.1.3. Submodular Optimization based Models

Sub-modular functions have been quite useful for text summarization tasks (Lin and Bilmes, 2010; Sipos et al., 2012) thanks to their assurance that the local optima is never worse than (63%) of the global optima (Nemhauser et al., 1978). A greedy algorithm having time complexity of is sufficient to optimize the functions. Tiwari et al. (2018), Li et al. (2017), and Modani et al. (2016) have also utilized these properties of submodular functions in order to solve the MMS task. Tiwari et al. (2018) uses coverage, novelty and significance as the submodular functions to extract the most significant documents for the task of timeline generation of a social media event in a multi-modal setting. Li et al. (2017) proposes a linear combination of submodular functions (salience of text, redundancy and visual coverage in this case) under a budget constraint to obtain near-optimal solutions at a sentence level to obtain an extractive text summary using news input comprising of text, images, videos and audio. Modani et al. (Modani et al., 2016) uses a weighted sum of five submodular functions (coverage of input text/images, diversity of text/images in final summary, and coherence of text part and image part of the final summary) to generate a summary comprising of text and images.

4.1.4. Nature Inspired Algorithms

Genetic algorithms (Saini et al., 2019a) and other nature inspired meta-heuristic optimization algorithms like the Grey Wolf Optimizer (Mirjalili et al., 2014) and Water Cycle algorithm (Eskandar et al., 2012) have shown great promise for extractive text summarization (Saini et al., 2019b). Jangra et al. (2020c) has illustrated that such algorithms can also be useful in multi-modal scenarios by experimenting with a multi-objective setting using differential evolution as the underlying guidance strategy. For the multi-objective optimization setup, the authors have proposed two different sets of objectives: one redundancy based (including uni-modal salience, redundancy and cross-modal correspondence) and one using cluster validity indices (PBM index (Pakhira et al., 2004) was used in this case). Both of these settings have performed better than the baselines. The optimization setup outputs the top most suitable sentences and images, which follow similar post-processing procedure as Jangra et al. (2020b). Jangra et al. (2021) on the other hand used Grey Wolf Optimizer (Mirjalili et al., 2014) based multi-objective optimization strategy to obtain the combined complementary-supplementary multi-modal summaries. The proposed approach was split into two key steps: a) global coverage text format (GCTF) - obtaining extractive text summaries using Grey Wolf Optimizer over all the input modalities in a clustering setup, b) visual enhanced text summaries (VETS) - using one-shot population based strategy to enhance the obtained text summaries with visual modalities to obtain the complementary and supplementary enhancements in a data-driven manner. The overall pipeline adopted similar pre-processing and post-processing steps as Jangra et al. (2020c).

4.1.5. Graph based Models

Graph based techniques have been widely adopted in extractive text summarization frameworks (Mihalcea, 2004; Mihalcea and Tarau, 2004; Erkan and Radev, 2004; Modani et al., 2015). These techniques involve graph formulation of text documents where nodes are represented by document sentences and the edge weights are formulated using similarity across two sentences. Extending this idea to a multi-modal set up, Modani et al. (Modani et al., 2016) proposed a graph based approach to generate text-image summaries. A graph was constructed using content segments (representing either sentences or images) as the nodes, and each node is given a weight depending on its information content. For sentences, this weight is computed as the sum of #nouns, #adverbs, #adjectives, #verbs, and half the #pronouns, while an images node’s weight is given by the average similarity score with all other image segments. An edge weight for an edge connecting two sentences is computed as the cosine similarity of sentence embeddings (evaluated using auto-encoders), edge weight connecting two images is computed as the cosine similarity of image embeddings (evaluated using VGGNet (Simonyan and Zisserman, 2015)) and the edge weight connecting a sentence and an image is computed as the cosine similarity of sentence embedding and image embedding projected in a shared vector space (using Deep Fragment embeddings (Karpathy et al., 2014)). After graph construction, an iterative greedy strategy (Modani et al., 2015) is adopted to select appropriate content segments and generate the text-image summary.

Li et al. (Li et al., 2017) also use a graph based technique to evaluate the salience of text to generate an extractive text summary using multi-modal input (containing text documents, images, videos). A guided LexRank (Erkan and Radev, 2004) was proposed to evaluate the salience score of the text unit (comprising of document sentences and speech transcriptions). The guidance strategy proposed by Li et al. (Li et al., 2017) had bidirectional connections for sentences belonging to documents, but only unidirectional connections were made for speech transcriptions with only outward edges to follow on their assumption that speech transcriptions might not always be grammatically correct, and hence should only be used for guidance and not for summary generation. This textual score was then used as a submodular function for the final model (refer to Sec 4.1.3).

4.2. Post-processing

Most of the existing works are not capable of generating multi-modal summaries141414Although, all the surveyed methods are ”multi-modal summarization” approaches, i.e. they all summarize multi-modal information, however, most of them summarize it to generate uni-modal outputs.. The systems that do generate multi-modal summaries either have an inbuilt system capable to generating multi-modal output (mainly by generating text using seq2seq mechanisms and selecting relevant images) (Li et al., 2020a; Zhu et al., 2020a) or they adopt some post-processing steps to obtain the visual and vocal supplements of the generated textual summaries (Jangra et al., 2020b; Zhu et al., 2018). Neural network models that use multi-modal attention mechanisms to determine the relevance of modality for each input case have been used for selecting the most suitable image (Zhu et al., 2018; Chen and Zhuge, 2018a). More precisely, the visual coverage scores (after the last decoding step), i.e. the summation of attention values while generating the text summary, are used to determine the most relevant images. Depending upon the needs of the task, a single image (Zhu et al., 2018) as well as multiple images (Chen and Zhuge, 2018b) can be extracted to supplement the text.

Jangra et al. (2020b) proposes a text-image-video summary generation task, which as the name suggests, outputs all possible modalities in the final summary. Having extracted most important sentences and images (containing video key-frames as well) using the ILP framework, the images are separated from the key-frames, and are supplemented with other images from the input set that have a moderate similarity, with a pre-determined threshold and upper bound to avoid noisy and redundant information. Cosine similarity of global image features is used as the underlying similarity matrix in this case. A weighted average of verbal scores and visual scores is used to determine the most suitable video for the multi-modal summary. verbal score is defined as the information overlap between speech transcriptions and generated text summary, while the visual score is defined as the information overlap between the key-frames of a video with the generated image summary.

5. Datasets and Evaluation Techniques

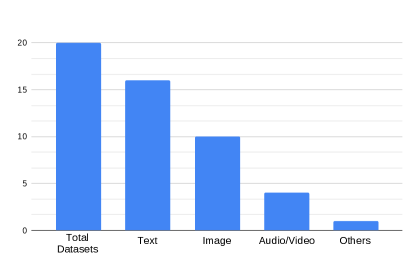

Due to the flexible nature of the MMS task, with a large variety of input-output modalities, the MMS task does not have a standard dataset used as a common evaluation benchmark for all approaches to this date. Nonetheless, we have collected information about datasets used in the previous works, and a comprehensive study of 20 datasets can be found at Table 3. It was found that out of these 21 datasets, 12 datasets are of news-related origin (Jangra et al., 2020b; Li et al., 2018a; Zhu et al., 2018; Fu et al., 2020; Yan et al., 2012), and including the dataset on video tutorials by Sanabria et al. (2018), there are 13 datasets that are domain-independent, thus suitable to test out domain generic models. 6 out of the 21 datasets produce text-only summaries using multi-modal input; out of these six datasets, 2 datasets’ output comprises of extracted text summaries (Chen and Zhuge, 2018b; Li et al., 2017) and 4 datasets’ output contains abstractive summaries (Li et al., 2018a, 2020c; Fu et al., 2020; Sanabria et al., 2018). One the other hand, there are 8 datasets that output text-image summaries, which can further be divided into 6 extractive text-image summary generation datasets (Xu et al., 2013; Tiwari et al., 2018; Bian et al., 2013) and 2 abstractive text-image summary generation datasets (Li et al., 2020a; Chen and Zhuge, 2018a). Datasets #19 ((Jangra et al., 2020b)) and #20 ((Jangra et al., 2021)) are the only two datasets that comprise of text, image, audio and video in the output. However, these datasets are small, and thus limited to extractive summarization techniques. Meanwhile, dataset #20 ((Jangra et al., 2021)) is the only existing dataset that comprises of both complementary and supplementary enhancements in the multi-modal summary (refer to Section 2 for the definition). Out of the 21 datasets, 17 datasets contain text in the multi-modal summary, 11 contain images as well, 3 comprises solely of audio-video outputs (Evangelopoulos et al., 2009, 2013; Sanabria et al., 2019), and 1 dataset has a fixed template151515Tjondronegoro et al. (2011) focusing on summarizing tennis matches, and thus the output has a fixed template comprising of three different summarization tasks: a) summarization of entire tournament, b) summarization of a match and c) summarization of a tennis player. as output (Tjondronegoro et al., 2011). Of these 17 text-containing datasets, 10 datasets contain extractive text summaries (Xu et al., 2013; Tiwari et al., 2018; Bian et al., 2013; Jangra et al., 2020b) and the rest 7 datasets contain abstractive summaries (Li et al., 2020a; Chen and Zhuge, 2018a; Fu et al., 2020; Sanabria et al., 2018). It is interesting to note that 5 out of these 7 abstractive datasets belong to the news-domain (Li et al., 2020a; Chen and Zhuge, 2018a; Fu et al., 2020; Li et al., 2018a; Zhu et al., 2018), while the other two focus on e-commerce product summarization (Li et al., 2020c) and tutorial summarization (Sanabria et al., 2018).Out of the datasets only (Li et al., 2017; Sanabria et al., 2018; Jangra et al., 2021; Zhu et al., 2018) of them are publicly available.

Depending on the input, we can also divide the 20 datasets based on the presence/absence of video in the input. There are 10 datasets that contain videos, whereas the rest 11 mostly work with text-image inputs. Due to the nature of this survey (the main focus on text modality), all 21 datasets in consideration contain text as input. A majority of these text sources are single documents (Li et al., 2020a; Chen and Zhuge, 2018a; Li et al., 2020c; Zhu et al., 2018), but there are 6 datasets that have multiple documents in the input (Li et al., 2017; Jangra et al., 2020b; Xu et al., 2013; Bian et al., 2013, 2014; Jangra et al., 2021). Sanabria et al. (2019), Evangelopoulos et al. (2009) and Evangelopoulos et al. (2013) however do not contain text documents, but the speech transcriptions from corresponding audio inputs. While most of these datasets comprise of multi-sentence summaries generated from input documents, Li et al. (2018a) contains a single sentence as the source as well as the reference summary. Most of these datasets use English-based text and audio, but there are 3 datasets that contain Chinese text (Bian et al., 2013; Li et al., 2017; Bian et al., 2014; Li et al., 2020a).

There are some datasets that have inputs other than text, image, audio and video. For instance, Tiwari et al. (2018) and Yan et al. (2012) contain temporal information about the dataset for the task of multi-modal timelines generation. Qian et al. (2018) also utilize user information including demographics like gender, birthday, user profile (short biography), and other information including user name, nickname, number of followers, number of microblogs posted, profile registration time, and user’s level of interests in different topics for generating summaries of an event based on social media content. Detailed plots for selected statistics on the datasets covered in this study can be found at Figure 5.

| ID & Paper | Used In Paper | Input Modalities | Output Modalities | Data Statistics | Domain |

|---|---|---|---|---|---|

| #1: Li et al. (2018a) (2018) | (Li et al., 2018a) | T, I | TA | 66,000 triplets (sentence, image and summary) | News |

| #2: Zhu et al. (2018)(2018)* | (Zhu et al., 2018, 2020a) | T, I | TA, I | 313k documents, 2.0m images | News |

| #3: Chen and Zhuge (2018a) (2018) | (Chen and Zhuge, 2018a) | T, I | TA, I | 219k documents | News |

| #4: Xu et al. (2013) (2013) | (Xu et al., 2013) | T, I | TE, I | 8 topics (each containing 150+ documents) | News |

| #5: Bian et al. (2013) (2013) | (Bian et al., 2013) | TC, I | TE, I | 10 topics (127k microblogs and 48k images) | Social Media |

| #6: Bian et al. (2014) (2014) | (Bian et al., 2014) | TC, I | TE, I | 20 topics (310k documents, 114k images) | Social Media |

| #7: Li et al. (2020c) (2020) | (Li et al., 2020c) | TC, I | TA | 1,375,453 instances from home appliances, clothing, and cases & bags categories | E-commerce |

| #8: Chen and Zhuge (2018b) (2018) | (Chen and Zhuge, 2018b) | T, A | TE | - | News |

| #9: Tiwari et al. (2018) (2018) | (Tiwari et al., 2018) | T, I, TM | TE, I | 6 topics | Social Media |

| #10: Yan et al. (2012) (2012) | (Yan et al., 2012) | T, I, TM | TE, I | 4 topics (6k documents, 2k images) | News |

| #11: Qian et al. (2019) (2019) | (Qian et al., 2019) | T, I, U | TE, I | 12 topics (9.1m documents, 2.2m users, 15m images) | News (disasters) |

| #12: Tjondronegoro et al. (2011) (2011) | (Tjondronegoro et al., 2011) | T, A, V | TF | 66 hrs video (33 matches), 1,250 articles related to Australian Open 2010 tennis tournament | Sports (Tennis) |

| #13: Sanabria et al. (2018) (2018)* | (Libovickỳ et al., 2018) | T, A, V | TA | 2,000 hrs video | Multiple domains |

| #14: Fu et al. (2020) (2020) | (Fu et al., 2020) | T, A, V | TA | 1970 articles from Daily Mail (avg. video length 81.96 secs), and 203 articles from CNN (avg. video length 368.19 secs) | News |

| #15: Li et al. (2020a) (2020) | (Li et al., 2020a) | T, A, V | TA, I | 184,920 articles (Weibo) with avg. video duration 1 min, avg. article length 96.84 words, avg. summary length 11.19 words | News |

| #16: Sanabria et al. (2019) (2019) | (Sanabria et al., 2019) | T, A, V | A, V | 20 complete soccer games from 2017-2018 season of French Ligue 1 | Sports (Soccer / Football) |

| #17: Evangelopoulos et al. (2009) (2009) | (Evangelopoulos et al., 2009) | T, A, V | A, V | 3 movie segments (5-7 min each) | Movies |

| #18: Evangelopoulos et al. (2013) (2013) | (Evangelopoulos et al., 2013) | T, A, V | A, V | 7 half hour segments of movies | Movies |

| #19: Jangra et al. (2020b) (2020) | (Jangra et al., 2020b, c) | T, I, A, V | TE, I, A, V | 25 topics (500 documents, 151 images, 139 videos) | News |

| #20: Jangra et al. (2021) (2021)* | (Jangra et al., 2021) | T, I, A, V | TE, I, A, V | 25 topics (contains complementary and supplementary multi-modal references) | News |

| #21: Li et al. (2017) (2017)* | (Li et al., 2017) | T, TC, I, A, V | TE | 25 documents in English, 25 documents in Chinese | News |

These datasets span a wide variety of domains, including sports like tennis (Tjondronegoro et al., 2011) and football (Sanabria et al., 2019), movies (Evangelopoulos et al., 2009, 2013), social media-based information (Bian et al., 2013, 2014; Tiwari et al., 2018), e-commerce (Li et al., 2020c). In the coming days, we are likely bound to see more large-scale domain specific datasets to advance this field.

Although there have been a lot of innovative attempts in solving the MMS task, the same does not go for the evaluation techniques used to showcase the quality of generated summaries. Most of the existing works use uni-modal evaluation metrics, including ROUGE scores (Lin, 2004) to evaluate the text summaries, accuracy and precision-recall based metrics to evaluate the image and video parts of generated summaries. A few works have also reported True Positives and False Positives as well (Sahuguet and Huet, 2013). The best way to evaluate the quality of a summary is to perform extensive human evaluations. Various techniques have been used to get the best user performance evaluations including the quiz method (Erol et al., 2003), and user-satisfaction test (Zhu et al., 2018). These manual evaluation techniques are mainly of two kinds: a) simple scoring of summary quality based on input (Zhu et al., 2020a, 2018; Jangra et al., 2021), and b) answering the questions based on input to quantify the information retention of input data instance (Li et al., 2020a). However, one major issue with these manual evaluations is that they cannot be conducted for the entire dataset, and are hence performed on a subset of the test dataset. There are a lot of uncertainties involving this subset, as well as the mental conditions of the human evaluators while performing these quality checks. Hence it can be unreliable to compare two results of separate human evaluation experiments, even for the same task.

5.1. Text Summary Evaluation Techniques

Since the scope of this work is mostly limited to text-centric MMS techniques, it is important to discuss evaluation of text summaries separately and in tandem to other modalities. Even though quite a few MMS works generate uni-modal text summaries from multi-modal inputs (Li et al., 2018a, 2017; Libovickỳ et al., 2018), they still use very basic string based n-gram overlap metrics like ROUGE (Lin, 2004) to conduct the evaluation. Through this survey, we want to direct the researchers to not just focus on ROUGE, but also look at other aspects of text summarization as well. For instance, Fabbri et al. (2021) proposes four key-characteristics that an ideal summary must have:

-

(1)

Coherence the quality of smooth transition between different summary sentences such that sentences are not completely unrelated or completely same.

-

(2)

Consistency the factual correctness of summary with respect to input document.

-

(3)

Fluency the grammatical correctness and readability of sentences.

-

(4)

Relevance the ability of a summary to capture important and relevant information from the input document.

Fabbri et al. (2021) also illustrated how ROUGE is not capable of gauging the quality of generated summaries by doing an n-gram overlap with human written reference summaries. There are other metrics out there that use more advanced strategies to do the evaluation, such as n-gram based metric like WIDAR (Jain et al., 2022b), embedding-based metrics like ROUGE-WE (Ng and Abrecht, 2015), MoverScore (Zhao et al., 2019) and Sentence Mover Similarity (SMS) (Clark et al., 2019) or neural model-based metrics like BERTScore (Zhang* et al., 2020), SUPERT (Gao et al., 2020), BIANC (Vasilyev and Bohannon, 2021) and S3 (Peyrard et al., 2017). These evaluation metrics have been proven to be empirically better at captivating the above-mentioned characteristics of a summary (Fabbri et al., 2021), and hence upcoming research works should also report performance on some of these metrics along with ROUGE to have more accurate analysis of the generated summaries.

5.2. Multi-modal Summary Evaluation Techniques

In an attempt to evaluate the multi-modal summaries, Zhu et al. (2018) proposes a multi-modal automatic evaluation (MMAE) technique that jointly considers uni-modal salience and cross-modal relevance. In their case, the final summary comprises of text and images, and the final objective function is formulated as a mapping of three objective functions: 1) salience of text, 2) salience of images, and 3) text-image relevance. This mapping function is learnt using supervised techniques (Linear Regression, Logistic Regression and Multi-layer Perceptron in their case) to minimize training loss with human judgement scores. Although the metric seems promising, there are a lot of conditions that must be met in order to perform the evaluation.

The MMAE metric does not effectively evaluate the information integrity161616Information integrity is the dependability or trustworthiness of information. In context of a multi-modal summary evaluation task, it refers to the ability to make a judgement that is unbiased towards any modality (i.e. an ideal evaluation metric does not give higher importance to information from one modality (e.g. text) over other modality (e.g. images)). of a multi-modal summary, since it uses uni-modal salience scores as a feature in the overall judgement making process, leading to a cognitive bias. Zhu et al. (2020a) improves upon this by proposing an evaluation metric based on joint multi-modal representation (termed as MMAE++), projecting the generated summaries and the ground truth summaries into a joint semantic space. In contrast to other multi-modal evaluation metrics, they attempt to look at the multi-modal summaries as a whole entity, than a combination of piece-wise significant elements. A neural network based model is used to train this joint representation. Images of two image caption pairs are swapped to obtain two image-text pairs that are semantically close to each other to obtain the training data for joint representation automatically. The evaluation model is trained using a multi-modal attention mechanism (Li et al., 2018a) to fuse the text and image vectors, using max-margin loss as loss function.

Modani et al. (2016) propose a novel evaluation technique termed by them as Multimedia Summary Quality (MuSQ). Just like other multi-modal summarization metrics described above, MuSQ is also limited to text-image summaries. However, unlike the majority of previous evaluation metrics for multi-modal summarization or document summarization techniques (Ermakova et al., 2019), MuSQ does not require a ground truth to evaluate the quality of generated summary. MuSQ is a naive coverage based evaluation metric denoted as , and is defined as:

| (1) | |||

| (2) | |||

| (3) | |||

| (4) |

where denotes the degree of coverage of input text document by text summary , denotes the degree of coverage of input images by the image summary . measures the cohesion across the text sentences and images of final multi-modal summary. and are respectively the individual reward values for each input sentence and input image that denote the extent of information content in each content fragment (a text sentence or an image).

| Metric name & corresponding paper | Pros & Cons |

|---|---|

| Multi-modal Automatic Evaluation (MMAE). Zhu et al. (2018) | Advantages |

| - MMAE shows high correlation with human judgement scores. | |

| Disadvantages | |

| - Requires a substantial manually annotated dataset. | |

| - Might perform ambiguously‡ for evaluation of other new domains. | |

| MMAE++. Zhu et al. (2020a) | Advantages |

| - Utilizes joint multi-modal representation of sentence-image pairs to better improve the correlation scores over MMAE metric (Zhu et al., 2018). | |

| Disadvantages | |

| - Requires a substantial manually annotated dataset. | |

| - Might perform ambiguously1 for evaluation of other new domains. | |

| Multimedia Summary Quality (MuSQ). Modani et al. (2016) | Advantages |

| - Does not require manually created gold summaries. | |

| Disadvantages | |

| - The technique is very naive, and only considers coverage of input information and text-image cohesiveness. | |

| - The metric output is not normalized. Hence the evaluation scores are highly sensitive to the cardinality of input text sentences and input images. |

-

1

Here ”might perform ambiguously” refers to the fact that since model-based metrics are biased towards the training data, it is hard to determine how well would they perform on unseen domains. For instance, if the model is trained on news summarization dataset, and the task is to evaluate medical report summaries, then the model performance cannot be determined without further experiments.

To sum up, only a handful of works have focused on the evaluation of multi-modal summaries. Even the proposed evaluation metrics have a lot of drawbacks. The evaluation metrics proposed by Zhu et al. (2018) and Zhu et al. (2020a) require a large human evaluation score-based training data to learn the parameter weights. Since these metrics are highly dependent on the human-annotated dataset, the quality of this dataset can compromise the evaluation process if the training dataset is restrictive in domain coverage or is of poor quality. It also becomes difficult to generalize these metrics since they depend on the domain of training data. The evaluation technique proposed by Modani et al. (2016), although independent from gold summaries, is too naive, and has its own drawbacks. The evaluation metric is not normalized, and hence shows great variation when comparing the results of two input data instances with different sizes.

Overall, the discussed strategies have their own pros and cons; however, there is a great scope for future improvement in the area of ‘evaluation techniques for multi-modal summaries’ (refer to Section 7).

For population based techniques (Jangra et al., 2020c, 2021), the best score across multiple solutions were reported in this work.

| Paper | Dataset No. | Domain | Text score (ROUGE) | Image score | ME | |||||

|---|---|---|---|---|---|---|---|---|---|---|

| R-1 | R-2 | R-L | R-SU4 | Precision | Recall | MAP | ||||

| Li et al. (2017) (EXT) | Li et al. (2017) (English) | News | 0.442 | 0.133 | N.A | 0.187 | N.A | N.A | N.A | ✓ |

| Li et al. (2017) (Chinese) | 0.414 | 0.125 | N.A | 0.173 | N.A | N.A | N.A | ✓ | ||

| Li et al. (2018a) (ABS) | Li et al. (2018a) | News | 0.472 | 0.248 | 0.444 | N.A | N.A | N.A | N.A | |

| Zhu et al. (2018) (ABS) | Zhu et al. (2018) | News | 0.408 | 0.1827 | 0.377 | N.A | 0.624 | N.A | N.A | ✓ |

| Zhu et al. (2020a) (ABS) | Zhu et al. (2018) | 0.411 | 0.183 | 0.378 | N.A | 0.654 | N.A | N.A | ✓ | |

| Chen and Zhuge (2018b) (EXT) | Chen and Zhuge (2018b) | News | 0.271 | 0.125 | 0.156 | N.A | N.A | N.A | N.A | |

| Chen and Zhuge (2018a) (ABS) | Chen and Zhuge (2018a) | News | 0.326 | 0.120 | 0.238 | N.A | N.A | 0.4978 | N.A | |

| Libovickỳ et al. (2018) (ABS) | Sanabria et al. (2018) | Multi-domain | N.A | N.A | 0.549 | N.A | N.A | N.A | N.A | ✓ |

| Jangra et al. (2020b) (EXT) | Jangra et al. (2020b) | News | 0.260 | 0.074 | 0.226 | N.A | 0.599 | 0.38 | N.A | |

| Jangra et al. (2020c) (EXT) | Jangra et al. (2020b) | 0.420 | 0.167 | 0.390 | N.A | 0.767 | 0.982 | N.A | ||

| Jangra et al. (2021) (EXT) | Jangra et al. (2021) | News | 0.556 | 0.256 | 0.473 | N.A | 0.620 | 0.720 | N.A | ✓ |

| Xu et al. (2013) (EXT) | Xu et al. (2013) | News | 0.369 | 0.097 | N.A | N.A | N.A | N.A | N.A | |

| Bian et al. (2013) (EXT) | Bian et al. (2013) | Social Media | 0.507 | 0.303 | N.A | 0.232 | N.A | N.A | N.A | |

| Yan et al. (2012) (EXT) | Yan et al. (2012) | News | 0.442 | 0.109 | 0.320 | N.A | N.A | N.A | N.A | |

| Bian et al. (2014) (EXT) | Bian et al. (2014) (social trends) | Social Media | 0.504 | 0.307 | N.A | 0.235 | N.A | N.A | N.A | |

| Bian et al. (2014) (product events) | 0.478 | 0.279 | N.A | 0.187 | N.A | N.A | N.A | |||

| Fu et al. (2020) (EXT) | Fu et al. (2020) (DailyMail) | News | 0.417 | 0.186 | 0.317 | N.A | N.A | N.A | N.A | ✓ |

| Fu et al. (2020) (CNN) | 0.278 | 0.088 | 0.187 | N.A | N.A | N.A | N.A | ✓ | ||

| Li et al. (2020a) (ABS) | Li et al. (2020a) | News | 0.251 | 0.096 | 0.232 | N.A | N.A | N.A | 0.654 | ✓ |

| Li et al. (2020c) (ABS) | Li et al. (2020c) (Home Appliances) | E-commerce | 0.344 | 0.125 | 0.224 | N.A | N.A | N.A | N.A | ✓ |

| Li et al. (2020c) (Clothing) | 0.319 | 0.111 | 0.215 | N.A | N.A | N.A | N.A | ✓ | ||

| Li et al. (2020c) (Cases & Bags) | 0.338 | 0.125 | 0.224 | N.A | N.A | N.A | N.A | ✓ | ||

6. Results and Discussion