A Survey on Adversarial Attacks for Malware Analysis

Abstract

The last decade has seen exponential growth in the research and adoption of Machine Learning (ML) and Artificial Intelligence (AI), and in their application to different use cases. Traditional machine learning algorithms have evolved into data-intensive deep learning architectures, which have fostered cutting-edge and unprecedented technological advancements. The capability of these ML algorithms to uncover knowledge and patterns from semi-structured or unstructured data to support automated decision making has revamped domains such as medicine, e-commerce, autonomous cars, and cybersecurity. The growth and adoption of machine learning solutions have lately been slowed by the advent of adversarial attacks. Adversaries are able to modify data at training and testing time, maximizing the classification error of the ML models. They craft minor intentional perturbations in test samples to exploit the blind spots discovered in trained models. The increased data dependency of these algorithms offers adversaries both the opportunity and a strong incentive to deceive ML models. To avert possibly catastrophic consequences, continuous research is required to find vulnerabilities in the form of adversarial attacks and to design resilient autonomous systems.

Machine learning-based malware analysis approaches are widely researched and deployed in critical infrastructures for detecting and classifying evasive and growing malware threats. However, minor perturbations, or the insertion of a few ineffectual bytes, can easily "fool" these trained ML classifiers, making them ineffective against such crafted malicious software. This survey aims to provide an encyclopedic introduction to adversarial evasion attacks carried out specifically against malware detection and classification systems. Since most of the research in the adversarial malware domain is new and has been performed in the last couple of years, our survey covers the relevant literature on malware adversarial evasion attacks published between 2013 and 2021. The paper begins by introducing the machine learning techniques used to generate adversarial examples in malware analysis and by explaining the structures of target files. The survey then models the threat posed by adversaries, followed by brief descriptions of widely used adversarial algorithms. We also provide a taxonomy of adversarial evasion attacks with respect to the attack domains and the adversarial generation techniques that are widely used in malware detection and classification. Adversarial evasion attacks carried out against malware detectors are discussed under each taxonomical heading and compared with related literature. The survey concludes by highlighting open problems, challenges and future research directions.

Index Terms:

Adversarial Evasion Attack, Adversary Modeling, Data Poisoning, Malware Analysis, Machine Learning, Deep Learning, Security, Windows Malware, Android Malware, PDF Malware

1 Introduction

Machine learning has revolutionized the modern world through its ubiquity and its power to generalize over enormous volumes of data. With zettabytes of data stored in the cloud [1], much of modern technology's power lies in extracting knowledge from this unstructured raw data. Machine learning (ML) has made it possible to automate the decision-making process, outperforming humans by a wide margin. ML also yields more robust and representative feature sets than hand-crafted features. The transformation of ML approaches from classical algorithms to modern deep learning technologies is providing major breakthroughs in state-of-the-art research problems. Further, deep learning (DL) has excelled in areas where traditional ML approaches were infeasible or unsuccessful. Evolving deep learning techniques have advanced natural language processing [2, 3, 4], image classification [5, 6, 7, 8], autonomous driving [9, 10, 11, 12], neuroscience [13, 14, 15, 16] and a wide range of other domains. Recent advances in machine learning and artificial intelligence (AI) have brought society high-end products such as Apple Siri (https://www.apple.com/siri/), Amazon Alexa (https://alexa.amazon.com/) and Microsoft Cortana (https://www.microsoft.com/en-us/cortana). Machine learning has also started shaping our daily habits: connecting with people on social media, ordering food and groceries from online stores, listening to music on Spotify (https://www.spotify.com/us/), watching movies on Netflix (https://www.netflix.com/), and reading online news and books are all examples of systems built around recommendation engines powered by deep learning based models. Machine learning based solutions not only shape our lifestyle but have also revolutionized security-critical operations in different domains, including malware analysis [17, 18, 19, 20, 21, 22], spam filtering [23, 24, 25, 26], fraud detection [27, 28, 29, 30], medical analysis [31, 32, 33, 34, 35] and access control [36, 37, 38, 39], among others.

Malware analysis is one of the most critical fields where ML is being significantly employed. Traditional malware detection approaches [40, 41, 42, 43, 44] rely on signatures: unique identifiers of malware files are maintained in a database and compared with signatures extracted from newly encountered suspicious files. However, several techniques are used to rapidly evolve malware so that it avoids detection (more details in Section 4). As security researchers looked for detection techniques that address such sophisticated zero-day and evasive malware, ML based approaches came to their rescue [45]. Most modern anti-malware engines, such as Windows Defender (https://www.microsoft.com/en-us/windows/comprehensive-security), Avast (https://www.avast.com/), Deep Instinct D-Client (https://www.deepinstinct.com/endpoint-security) and Cylance Smart Antivirus (https://shop.cylance.com/us), are powered by machine learning [46], making them robust against emerging variants and polymorphic malware [47]. By some estimates [48], around 12.3 billion devices are connected worldwide, and the spread of malware at this scale can have catastrophic consequences. Economies worth billions of dollars therefore rely, directly or indirectly, on the performance and growth of machine learning to be protected from this rapidly evolving menace. Despite the existence of numerous malware detection approaches, including ones that leverage ML, recent ransomware attacks highlight the vulnerabilities and limitations of current security approaches. Examples include the Colonial Pipeline attack, where operators had to pay around $5 million to recover a 5,500-mile pipeline [49], the MediaMarkt attack, involving around $50 million in bitcoin [50], and the attack on the computer giant Acer [51]. These incidents necessitate more robust, real-time, adaptable and autonomous defense mechanisms powered by AI and ML.

The performance of ML models relies on the basic assumption that training and testing are carried out under similar settings and that samples in the training and testing datasets are independent and identically distributed. This assumption is overly simplified and, in many cases, does not hold in real-world use cases where adversaries deceive ML models into making wrong predictions (i.e., adversarial attacks). In addition to traditional threats such as malware attacks [52, 53, 54], phishing [55, 56, 57], man-in-the-middle attacks [58, 59, 60], denial-of-service [61, 62, 63] and SQL injection [64, 65, 66], adversarial attacks have now emerged as a serious concern, threatening to undermine the progress made in the machine learning domain.

Adversarial attacks are carried out either by poisoning the training data or by manipulating the test data (evasion attacks). Data poisoning attacks [67, 68, 69, 70] have been prevalent for some time but are less scrutinized, because access to training data by attackers is considered unlikely. In contrast, evasion attacks, first introduced by Szegedy et al. [71] against deep learning architectures, are carried out by carefully crafting imperceptible perturbations in test samples, forcing models to misclassify them, as illustrated in Figure 1. Here, the attacker's goal is to drag a test sample across the ML model's decision boundary by adding a minimal perturbation to that sample. Considering the availability of research works and the higher practical risk, this survey focuses entirely on adversarial evasion attacks carried out against malware detectors.
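The idea of dragging a sample across a decision boundary with a minimal perturbation can be made concrete for the simplest case. The following is a minimal sketch, assuming a linear classifier with hypothetical weights `w` and bias `b` (neither comes from the surveyed works); for such a model the smallest L2 perturbation points along the weight vector. Real malware detectors are not linear, so this only illustrates the geometry.

```python
import math

def score(w, b, x):
    """Signed score of a linear classifier; positive means 'malicious'."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def minimal_evasion(w, b, x, overshoot=1e-3):
    """Smallest L2 perturbation that moves x just past the boundary w.x + b = 0."""
    f = score(w, b, x)
    norm_sq = sum(wi * wi for wi in w)
    # Move against the sign of the score along w, slightly beyond the boundary.
    scale = -(1.0 + overshoot) * f / norm_sq
    return [scale * wi for wi in w]

w, b = [2.0, -1.0], -0.5      # hypothetical trained parameters
x = [1.0, 0.5]                # test sample with a positive score
delta = minimal_evasion(w, b, x)
x_adv = [xi + di for xi, di in zip(x, delta)]

print("original score:   ", score(w, b, x))
print("adversarial score:", score(w, b, x_adv))
print("perturbation norm:", math.sqrt(sum(d * d for d in delta)))
```

The perturbed sample lands on the other side of the boundary while staying close to the original, which is exactly the property an evasion attacker looks for.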

Adversarial evasion attacks were initially crafted on images, as the only requirement for a perturbation in an image is that it be imperceptible to the human eye [72, 73]. A very common example of an adversarial attack on images, shown in Figure 2, is due to Goodfellow et al. [74], where GoogLeNet [75] trained on ImageNet [76] classifies a panda as a gibbon after the addition of very small perturbations. This threat is not limited to experimental research labs but has already been demonstrated successfully in real-world environments. For instance, Eykholt et al. applied stickers to road signs, forcing the image recognition system to detect a "STOP" sign as a speed limit sign. Researchers from the Chinese technology company Tencent (https://www.tencent.com/) tricked Tesla's (https://www.tesla.com/) Autopilot in a Model S and forced it to switch lanes by placing a few stickers on the road [77]. Such adversarial attacks on real-world applications force us to rethink our increasing reliance on smart technologies like Tesla Autopilot (https://www.tesla.com/autopilot).
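The panda example from [74] is associated with the fast gradient sign method (FGSM, see the acronym table), which perturbs every input component by a fixed amount in the direction that increases the loss. The sketch below applies one such step to a small logistic-regression model rather than to an image network; the parameters, the input and `eps` are all hypothetical values chosen for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(w, b, x):
    """Probability of class 1 under a logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def sign(g):
    return (g > 0) - (g < 0)

def fgsm(w, b, x, y, eps):
    """One FGSM step: x' = x + eps * sign(dLoss/dx) for cross-entropy loss."""
    p = predict(w, b, x)
    # For logistic regression, d(cross-entropy)/dx_i = (p - y) * w_i.
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * sign(g) for xi, g in zip(x, grad)]

w, b = [1.5, -2.0, 0.5], 0.1      # hypothetical model parameters
x, y = [0.6, 0.2, 0.4], 1         # input whose true label is class 1
x_adv = fgsm(w, b, x, y, eps=0.25)

print("clean prediction:      ", predict(w, b, x))
print("adversarial prediction:", predict(w, b, x_adv))
```

Each component changes by at most `eps`, yet the predicted class flips; on high-dimensional inputs such as images the same effect is reached with a much smaller `eps`.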

| Paper | Year | Application Domain | Taxonomy | Threat Modeling | Adversarial Example |
|---|---|---|---|---|---|
| Barreno et al. [78] | 2008 | Security | Attack Nature | | |
| Gardiner et al. [79] | 2016 | Security | Attack Type/Algorithm | | |
| Kumar et al. [80] | 2017 | General | Attack Type | | |
| Yuan et al. [81] | 2017 | Image | Algorithm | | |
| Chakraborty et al. [82] | 2018 | Image/Intrusion | Attack Phase | | |
| Akhtar et al. [83] | 2018 | Image | Image domains | | |
| Duddu et al. [84] | 2018 | Security | Attack Type | | |
| Li et al. [85] | 2018 | General | Algorithm | | |
| Liu et al. [86] | 2018 | General | Target Phase | | |
| Biggio et al. [87] | 2018 | Image | Attack Type | | |
| Sun et al. [88] | 2018 | Image | Image Type | | |
| Pitropakis et al. [89] | 2019 | Image/Intrusion/Spam | Algorithm | | |
| Wang et al. [90] | 2019 | Image | Algorithm | | |
| Qiu et al. [91] | 2019 | Image | Knowledge | | |
| Xu et al. [92] | 2019 | Image/Graph/Text | Attack Type | | |
| Zhang et al. [93] | 2019 | Natural Language Processing | Knowledge/Algorithm | | |
| Martins et al. [94] | 2019 | Intrusion/Malware | Approach | | |
| Moisejevs [95] | 2019 | Malware Classification | Attack Phase | | |
| Ibitoye et al. [96] | 2020 | Network Security | Approach/Algorithm | | |
| Our Work | 2021 | Malware Analysis | Domain/Algorithm | | |

Year: year of publication. Application Domain: dataset domain on which adversarial examples are crafted. Taxonomy: basis on which the attack taxonomy is built. Threat Modeling: whether threat modeling is present. Adversarial Example: whether actual adversarial attacks crafted in the literature are discussed.

However, adversarial generation is a completely different game in the malware domain than in computer vision, due to the increased number of constraints. Perturbations in malware files must be generated in a way that affects neither their functionality nor their executability. Adversarial evasion attacks on malware are carried out by manipulating or inserting a few ineffectual bytes in the malware executable in a way that does not tamper with its original behavior but changes the classification decision of the ML model. For instance, one early attack against an anti-malware engine was demonstrated by Anderson et al. [97] using reinforcement learning. This black-box attack was able to bypass random forest and gradient boosted decision tree (GBDT) detectors by modifying a few bytes of Windows PE malware files. Kolosnjaji et al. [98] carried out an evasion attack using a gradient-based approach against a convolutional neural network (CNN) based malware detector. Since then, numerous works have tried to optimize these attacks and to discover better approaches for attacking a wide range of malware detectors. The success of Demetrio et al. [99] in crafting adversarial examples by modifying a few header bytes, and the experiments of Suciu et al. [100] on inserting perturbations at different file locations, further increased interest in improving the standard of attacks. The fear of evolving adversarial attacks is growing among the cyber security research community and has provoked an ongoing war between adversarial attackers and defenders. To help researchers better understand the current state of adversarial attacks in the malware domain and infer vulnerabilities in current approaches, this paper provides a comprehensive survey of ongoing research on adversarial evasion attacks against Windows, Android, PDF, Linux and hardware-based malware.

1.1 Motivation and Contribution

1.1.1 Prior Surveys and Limitations

Surveys on adversarial attacks crafted in different domains are summarized in Table I. The majority of surveys on adversarial attacks focus on computer vision and image misclassification. Yuan et al. [81] summarized major adversarial generation methods for images. Chakraborty et al. [82] surveyed adversarial attacks in the form of evasion and poisoning in image and anomaly detection. Like most works, Akhtar et al. [83] restricted their survey to the computer vision domain. Biggio et al. [87] presented a historical timeline of evasion attacks along with works on the security of deep neural networks. Sun et al. [88] surveyed practical adversarial examples on graph data. Many surveys do not focus on a single domain but cover a generalized field across multiple domains, including image, text, graph, intrusion, spam and malware. Kumar et al. [80] classified adversarial attacks into four overlapping classes. Li et al. [101] explained adversarial generation and defense mechanisms through formal representation. Liu et al. [86] reviewed some general security threats and associated defensive techniques. Pitropakis et al. [89] surveyed adversarial attacks in the intrusion detection, spam filtering and image domains. Xu et al. [92] surveyed vulnerabilities, analysed the reasons behind them and also proposed ways to detect adversarial examples. A few works focus on security-related domains such as intrusion, malware, and network security. Barreno et al. [78] produced one of the very first surveys on the security of machine learning, discussing different categories of attacks and defenses against ML systems. Gardiner et al. [79] focused on reviewing command and control detection techniques. They identified vulnerabilities and also pointed out limitations of malware detection systems. Duddu et al. [84] discussed the concern of privacy leakage through the information handled by machine learning algorithms. They also presented a cyber-warfare testbed for evaluating the effectiveness of attack and defense strategies. Martins et al. [94] performed a generalized survey on attacks, focusing on cloud security, malware detection and intrusion detection. Ibitoye et al. [96] surveyed adversarial attacks in the network domain using a risk grid map.

From the surveys discussed, we can draw certain conclusions that reflect the growing attention and concern in the community as the world moves toward automation. First, interest in the adversarial domain has surged in the last three or four years. Second, very few survey papers focus solely on adversarial malware analysis, which is a growing menace. The majority of surveys in the adversarial domain are built around computer vision attacks, and the recent influx of works is spread across wide domains including network, natural language processing, security, and intrusion detection. Being a relatively new domain, adversarial attacks in malware analysis have received limited attention. The few existing surveys that touch on malware are not focused on malware analysis but are spread across multiple domains. Current surveys also do not cover the full range of attacks carried out on malware detection, focusing instead on a small subset. The surge of interest in adversarial attacks, together with the lack of surveys covering the full range of adversarial attacks in the malware domain, motivates us to extensively survey adversarial evasion attacks on malware.

| Acronym | Full Form |
|---|---|
| ACER | Actor Critic model with Experience Replay |
| AE | Adversarial Example |
| AI | Artificial Intelligence |
| AMAO | Adversarial Malware Alignment Obfuscation |
| API | Application Programming Interface |
| ATMPA | Adversarial Texture Malware Perturbation Attack |
| BFA | Benign Features Append |
| BRN | Benign Random Noise |
| CFG | Control Flow Graph |
| CNN | Convolutional Neural Network |
| CRT | Cross Reference Table |
| CW | Carlini-Wagner |
| DCGAN | Deep Convolutional GAN |
| DE | Differential Evolution |
| DL | Deep Learning |
| DNN | Deep Neural Network |
| DRL | Deep Reinforcement Learning |
| FGM | Fast Gradient Method |
| FGSM | Fast Gradient Sign Method |
| GADGET | Generative API Adversarial Generic Example by Transferability |
| GAN | Generative Adversarial Network |
| GAP | Global Average Pooling |
| GBDT | Gradient Boosted Decision Trees |
| GD-KDE | Gradient Descent and Kernel Density Estimation |
| GEA | Graph Embedding and Augmentation |
| GRU | Gated Recurrent Units |
| HDL | Hardware Description Language |
| HMD | Hardware Malware Detectors |
| IoT | Internet of Things |
| KNN | K-Nearest Neighbors |
| LLC | Logical Link Control |
| LR | Logistic Regression |
| LRP | Layer-wise Relevance Propagation |
| LSTM | Long Short Term Memory |
| MEV | Modification Evaluating Value |
| MIM | Momentum Iterative Method |
| ML | Machine Learning |
| MLP | Multi Layer Perceptron |
| MRV | Malware Recomposition Variation |
| OPA | One Pixel Attack |
| PDF | Portable Document Format |
| PE | Portable Executable |
| PGD | Projected Gradient Descent |
| ReLU | Rectified Linear Unit |
| RF | Random Forest |
| RL | Reinforcement Learning |
| RNN | Recurrent Neural Network |
| RTLD | Resource Temporal Locale Dependency |
| SDG | System Dependency Graph |
| SHAP | SHapley Additive exPlanation |
| SR | Success Rate |
| SVM | Support Vector Machine |
| TCD | Trojan-net Concealment Degree |
| TLAMD | A Testing framework for Learning based Android Malware Detection systems for IoT Devices |
| TPR | True Positive Rate |
| VOTE | VOTing based Ensemble |
| ZOO | Zeroth Order Optimization |

1.1.2 Our Contributions

This work contributes to understanding the arms race between attacker and defender by discussing adversarial evasion attacks across different parts of the malware domain. We aim to provide a completely self-contained survey of adversarial attacks carried out against malware detection techniques. To the best of our knowledge, this work is among the first to focus solely on adversarial attacks on malware detection systems. Our contributions cover the following dimensions:

- As our goal is to make the survey as comprehensive as possible, we provide all the related information required to fully comprehend its contents. We discuss the machine learning approaches used, the adversarial generation algorithms used by attackers, the malware detection methods attacked and the structure of the files that have been exploited to insert adversarial perturbations.

- We provide threat modeling for adversarial evasion attacks carried out in the malware domain. The threat model helps to quantify and analyze the attack-specific risk associated with a particular malware target. The threat is modeled in terms of the attack surface of the malware detector, the attacker's knowledge about the malware detector, the attacker's capabilities on malware, and the adversarial goals to be achieved through the malware files. Proper threat modeling also helps in understanding the behavior of malware, allowing the adversarial attacker to craft effective perturbations.

- We systematically analyze adversarial generation algorithms that were proposed in different domains and have since been applied to the malware domain. We then discuss the basics of standard adversarial algorithms and taxonomize adversarial evasion attacks in the malware domain with respect to various attack domains. As Windows malware is the most abundant and also the most exploited area, we further taxonomize attacks on Windows malware based on the attack algorithms used. We also discuss attacks carried out on less frequently targeted file structures such as Android and PDF.

- We discuss real evasion attacks carried out by researchers against anti-malware engines, under each taxonomical heading. We also cover the attack strategies used by researchers to generate adversarial attacks, showing how the attacks evolved over time. Further, we compare the motivation and limitations of each work in tabular form for each taxonomy class.

- We discuss the challenges and limitations of existing adversarial attacks when carried out in real-world environments. We also highlight future research directions for carrying out more practical, robust, efficient and generalized adversarial attacks on malware classifiers.

1.2 Survey Organization

We structure our survey hierarchically, as shown in Figure 3. Section 6 is placed before Section 5 in Figure 3 only to save space; the actual order in the paper follows increasing section number. Section 1 introduces the field of adversarial machine learning along with the motivation for studying adversarial attacks in the malware analysis domain. Note that Table II lists the acronyms that are used frequently in the survey. Section 2 discusses the machine learning and deep learning algorithms that are used in state-of-the-art adversarial research. Understanding these key machine learning concepts gives readers the background needed to grasp the adversarial generation techniques discussed later in the survey. Section 3 explains the structures of Windows, Android and PDF files. File structure plays a key role in understanding adversarial attacks, as perturbation depends on the flow and robustness of the file's structure. Section 4 provides an introduction to the malware detection approaches against which adversarial attacks are designed. Section 5 models the adversarial threat from different dimensions, briefly elaborating on the attack surface, the attacker's knowledge, the attacker's capabilities and the adversarial goals. Section 6 discusses various adversarial algorithms that are considered standard techniques for perturbation generation across different domains. Section 7 taxonomizes existing real adversarial attacks based on the execution domain (Windows, PDF, Android, Hardware, Linux) and the algorithms used to carry out the attack. This section discusses in detail real attacks carried out against malware detection approaches and provides comparisons among related works. Section 8 highlights challenges of current adversarial generation approaches and sheds light on open research areas and future directions for adversarial generation in malware analysis. Finally, Section 9 concludes our survey.

1.3 Literature Search Resources

To discover the relevant state-of-the-art works and publications on adversarial attacks in malware analysis, we relied on different digital libraries for computer science scholarly articles. Our major sources are IEEE Xplore (https://www.ieee.org/), ACM Digital Library (https://dl.acm.org/), DBLP (https://dblp.uni-trier.de/), Semantic Scholar (https://www.semanticscholar.org/) and arXiv (https://arxiv.org/). Apart from these digital libraries, we also searched directly through Google (https://www.google.com/) and Google Scholar (https://scholar.google.com/) to find impactful papers in the domain that were missed in the other libraries. Among the numerous keywords used to fetch papers from public libraries, "Adversarial Malware" and "Adversarial attacks in malware" returned the largest number of relevant papers. After listing all the works on adversarial generation published between 2013 and 2021, we filtered the papers by impact and relevance and prepared the final list for our detailed survey.

2 Machine Learning Preliminaries

We are in the era of Big Data [102, 103, 104, 105, 106, 107], and an unprecedented amount of digital information is generated and flows around us. With more than 2.5 quintillion bytes of data produced every day, around 200 million active web pages, 500 million tweets every day and a few million years of video on YouTube, we can imagine the magnitude of the data around us [108, 109, 110, 111]. Manual extraction of valuable information from raw data is cumbersome, tedious, and infeasible given this volume. Machine learning has the intrinsic capability to process this enormous amount of data: it can learn from raw data, discover patterns and make decisions with minimal human intervention. ML allows automatic detection of patterns in data and uses the learned model to make predictions on future data. Prediction on unseen data helps in probabilistic decision-making under uncertainty. Tom M. Mitchell, chair of Machine Learning at Carnegie Mellon University, in his book Machine Learning (McGraw-Hill, 1997) [112] defines machine learning as follows: "A computer program is said to learn from experience E with respect to some class of tasks T and performance measure P, if its performance at tasks in T, as measured by P, improves with experience E". Simply stated, machine learning is a branch of artificial intelligence that enables learning from the data used for training. Modern literature often confuses the terms "Artificial Intelligence" and "Machine Learning" and uses them interchangeably. Machine learning is the subset of artificial intelligence, as shown in Figure 5, that focuses on learning patterns and improving predictions as experience grows. "Deep Learning" is the current hot topic within machine learning, which we discuss later in this section. A trained system should learn and improve with experience, being able to make predictions based on previous learning. The normal workflow of machine learning is shown in Figure 4, where training data is passed through a learning algorithm and the trained model is used to make predictions.
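Mitchell's definition and the train-then-predict workflow can be illustrated with a toy learner. The sketch below is only an illustration under assumed settings: the task T is classifying points in [0, 1] against a hidden threshold, the experience E is a set of labelled points, and the performance measure P is accuracy on unseen points. The threshold value and all function names are hypothetical.

```python
TRUE_THRESHOLD = 0.37  # hidden concept the learner must recover (assumed for the demo)

def label(x):
    return int(x >= TRUE_THRESHOLD)

def make_training_set(n):
    """Experience E: n labelled points spread evenly over [0, 1]."""
    xs = [(i + 0.5) / n for i in range(n)]
    return [(x, label(x)) for x in xs]

def train(examples):
    """Learning algorithm: place the threshold midway between the two classes."""
    largest_negative = max(x for x, y in examples if y == 0)
    smallest_positive = min(x for x, y in examples if y == 1)
    return (largest_negative + smallest_positive) / 2.0

def accuracy(threshold, n_test=1000):
    """Performance measure P on task T: classifying unseen points."""
    tests = [j / n_test for j in range(n_test)]
    correct = sum(int(x >= threshold) == label(x) for x in tests)
    return correct / n_test

for n in (4, 20, 100):
    model = train(make_training_set(n))
    print(n, round(model, 3), accuracy(model))
```

As the number of training examples grows, the learned threshold approaches the hidden one and accuracy on unseen points rises, which is the sense in which performance "improves with experience".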

Interest in computational approaches to learning dates back to the mid-1950s [113], and since then there has been continuous growth in the development of learning systems. It was only after the 1980s that ML was seen to have real-world potential, and today it continues to grow towards increased intelligence in the form of deep learning. The predictive power of machine learning, the sophistication of its techniques and the breadth of its application areas have all increased rapidly. Originating from data analysis and statistics, it has gained a pioneering position in text recognition, Natural Language Processing (NLP), speech processing, computer vision, computational biology, fraud detection, and many security-critical applications. Classification [114, 115, 116], regression [117, 118, 119], clustering [120, 121, 122], dimensionality reduction [123, 124], and ranking [125, 126, 127] are some examples of the major machine learning tasks applied in different applications. From search engines and online product recommendations to high-end self-driving cars and space missions, the progress of human civilization is already being driven by the progress of machine learning.

Classical machine learning is classified according to how the learner interacts with the environment [128]. The most basic approaches include supervised [129, 130], unsupervised [131, 132], semi-supervised [133, 134] and reinforcement learning [135, 136]. Supervised learning trains on a set of labeled data, while unsupervised learning trains on unlabeled data to find meaningful patterns. With its capability of finding associations in data, machine learning has made it possible to tailor product development to customer demand. The normal workflow of traditional machine learning algorithms is shown in Figure 6. The raw data, presented as an image in the figure, is first passed to a feature extraction phase, which outputs feature vectors in a form suitable for machine learning models. The feature vectors are then used for either training or testing machine learning algorithms. In this section, we discuss the core machine learning terminology that is used frequently later in the survey. This section does not cover traditional machine learning approaches, as they are now rarely used for generating adversarial examples in comparison to modern deep learning based algorithms. The subsections of this section follow no particular hierarchical order, with each subsection being a stand-alone topic.
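The traditional workflow of feature extraction followed by training and testing can be sketched in a few lines. The example below is a hedged illustration, not a method from the surveyed literature: the three hand-crafted byte statistics, the nearest-centroid classifier and the tiny labelled dataset are all hypothetical choices made to keep the sketch self-contained.

```python
def extract_features(raw: bytes):
    """Map raw bytes to a fixed-length vector: fraction of printable,
    zero, and other bytes (a hypothetical hand-crafted feature set)."""
    n = max(len(raw), 1)
    printable = sum(32 <= b < 127 for b in raw)
    zeros = raw.count(0)
    return [printable / n, zeros / n, (len(raw) - printable - zeros) / n]

def centroid(vectors):
    return [sum(col) / len(vectors) for col in zip(*vectors)]

def train(samples):
    """Supervised training: one centroid of feature vectors per label."""
    by_label = {}
    for raw, y in samples:
        by_label.setdefault(y, []).append(extract_features(raw))
    return {y: centroid(vs) for y, vs in by_label.items()}

def predict(model, raw):
    """Testing: assign the label whose centroid is closest to the features."""
    f = extract_features(raw)

    def dist(c):
        return sum((a - b) ** 2 for a, b in zip(f, c))

    return min(model, key=lambda y: dist(model[y]))

training = [
    (b"hello world", "text"),
    (b"plain ascii data", "text"),
    (bytes([0, 0, 0, 200, 255, 0, 1, 2]), "binary"),
    (bytes([0, 144, 0, 0, 3, 0, 250, 0]), "binary"),
]
model = train(training)
print(predict(model, b"another message"))
print(predict(model, bytes([0, 0, 7, 0, 0, 129, 0, 0])))
```

The classifier never sees raw bytes, only the feature vectors, so its quality is bounded by how well the hand-crafted features represent the data. This is the overhead that deep learning replaces with learned representations.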

2.1 Deep Learning

Deep Learning (DL) is a sub-field of machine learning that uses supervised and unsupervised techniques to learn multiple levels of representations and features in hierarchical architectures. Conventional machine learning was very limited in its ability to process raw data. Deep learning has made significant breakthroughs on challenges faced by ML practitioners by showing its ability to find patterns in very high-dimensional data. It has enabled researchers to reach unparalleled success in image recognition [137, 138, 139], speech recognition [140, 141, 142], neuroscience integration [143], malware detection [144, 145] and most ML-powered research areas. From the start, building a conventional machine learning system required careful feature engineering and a high level of domain expertise to extract meaningful features from raw data. The effectiveness of machine learning depends largely on the representation ability of the feature vectors. Representation learning is an approach to ML in which the model is fed raw data and automatically learns the representation required to make decisions.

Deep learning methods are representation learning approaches with multiple levels of representation, obtained by non-linear transformations from lower to higher levels of abstraction. By composing such simple, non-linear transformations, the machine eventually learns a complex function. Raising the representation to a higher level at each step amplifies the aspects of the input that are important for discrimination while suppressing irrelevant features. Figure 6 shows that the feature extraction overhead of traditional learning is replaced by neural networks in deep learning. A standard neural network architecture is made up of connected processors called neurons, each of which outputs a sequence of real-valued activations. Environmental input obtained by sensors activates the input neurons, while deeper neurons are activated through weighted connections from previously active neurons. Deep learning operations are usually composed of a weighted combination of a group of hidden units with a non-linear activation function, arranged according to a model structure. The architecture of a neural network resembles the perception process of the human brain, where a specific set of units is activated if it has a role in influencing the output of the model. Mathematically, deep neural network architectures are usually differentiable, so that the optimal weights of the model can be learned by minimizing a loss function using variants of stochastic gradient descent through back-propagation. For the example in Figure 6, consider an image classification task in which the input image comes in the form of an array of pixel values [146]. In the first layer of representation, the deep learning model learns the presence or absence of edges at particular orientations. The second layer tries to detect arrangements of the detected edges, discarding minute variations in edge position. These arrangements of edges are combined into larger combinations corresponding to parts of familiar objects, and the subsequent layers produce the detection result.
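The claim that composing simple non-linear transformations yields a more complex function can be illustrated with a minimal sketch. The two-layer network below uses hand-picked weights (an assumption made for illustration; in practice they would be learned by gradient descent) and computes XOR, a function that no single linear layer can represent. The names `dense` and `xor_net` are hypothetical.

```python
def relu(v):
    """Rectified linear unit, a common non-linear activation."""
    return max(0.0, v)

def dense(inputs, weights, biases, activation):
    """One layer: a weighted sum of the inputs per unit, then a non-linearity."""
    return [activation(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

# Hand-picked (not learned) weights, for illustration only.
W1, B1 = [[1.0, 1.0], [1.0, 1.0]], [0.0, -1.0]
W2, B2 = [[1.0, -2.0]], [0.0]

def xor_net(x1, x2):
    """Two stacked layers: hidden ReLU units followed by a linear output."""
    hidden = dense([x1, x2], W1, B1, relu)
    return dense(hidden, W2, B2, lambda v: v)[0]

outputs = [xor_net(a, b) for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]]
```

Each hidden unit is only a thresholded weighted sum, yet the composition separates inputs that are not linearly separable.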

2.2 Convolutional Neural Network

Convolutional Neural Network (CNN) is one of the most popular deep learning architectures, inspired by the natural visual perception of living beings [147]. It takes its name from convolution, a linear mathematical operation between matrices. LeNet-5 [148, 149], one of the first multi-layer artificial neural networks (ANN), shown in Figure 7, is considered to have established the modern framework of CNN architecture. CNNs have achieved groundbreaking success in recent years in image processing [150], and this success has been replicated in many other fields. One of the main reasons behind the success of CNNs is their ability to reduce the number of parameters in an ANN.

A CNN is mainly composed of three types of layers, as shown in Figure 7: convolutional layers, pooling layers and fully connected layers. A convolutional layer aims to learn feature representations from the raw input data. Feature maps are computed using convolutional kernels, with each neuron of a feature map connected to a region of neighbouring neurons in the previous layer. A new feature map is obtained by convolving the input with a learned kernel and applying an element-wise non-linear activation function to the convolved result. During feature map generation, the kernel is shared across all spatial locations of the input. The role of a pooling layer is to reduce the number of connections between convolutional layers, which in turn reduces computational complexity. The pooling layer slides over each activation map and scales down its dimensionality using a function such as max or average. The stride and filter size of the pooling operation define the scaling. Fully connected layers in a CNN have the same role as in a standard ANN, producing class scores from the activations. Other common CNN architectures include AlexNet [150], VGG 16 [151], Inception ResNet [152], ResNeXt [153] and DenseNet [154].
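The convolution, activation and pooling steps described above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions: the 5x5 toy image and the 2x2 vertical-edge kernel are made up, the kernel is fixed rather than learned, and, as in most deep learning libraries, the "convolution" is implemented as cross-correlation without kernel flipping.

```python
def conv2d(image, kernel):
    """Valid cross-correlation of a 2-D image with a 2-D kernel (stride 1).
    The same kernel is shared across all spatial locations."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]

def relu_map(fmap):
    """Element-wise non-linear activation applied to a feature map."""
    return [[max(0, v) for v in row] for row in fmap]

def max_pool(fmap, size=2, stride=2):
    """Max pooling: filter size and stride define the down-scaling."""
    return [[max(fmap[i + a][j + b] for a in range(size) for b in range(size))
             for j in range(0, len(fmap[0]) - size + 1, stride)]
            for i in range(0, len(fmap) - size + 1, stride)]

# Toy image: dark left half, bright right half.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
vertical_edge = [[-1, 1], [-1, 1]]  # responds to dark-to-bright transitions

feature_map = relu_map(conv2d(image, vertical_edge))
pooled = max_pool(feature_map)
```

The 4x4 feature map is non-zero only in the column where the edge lies, and pooling reduces it to 2x2 while keeping the edge response.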

2.3 Reinforcement Learning

Reinforcement learning (RL) can be viewed as a learning problem and a sub-field of machine learning [155]. It is about using past experience to improve the future manipulation of a dynamic system, learning by maximizing a numerical value that reflects long-term goals. A supervised learning model learns from data and its labels, whereas an RL model relies entirely on its experience. In RL, a model is trained to make a sequence of decisions through the actions of an agent in a game-like environment. Figure 8 depicts reinforcement learning as the combination of four elements: an agent capable of learning, the current environment state, an action space from which the agent can choose an action, and the reward value that the agent receives in response to each action. The program goes through a trial-and-error process to reach the solution of a problem. An agent acting on an environment receives either a reward or a penalty for each action it performs, and the goal of learning is to maximize the total reward. The programmer sets up the action space, environment and reward policy required for learning, and the model figures out how to perform the task and maximize the reward. Learning starts with random trials and errors and can lead to highly sophisticated tactics and superhuman decision making. Formally, a system governed by a machine learning algorithm observes a state $s_t$ from its environment at time step $t$. The agent performs an action $a_t$ in state $s_t$ to make a transition to a new state $s_{t+1}$. The state is the information about the environment that is sufficient for the agent to take the best possible action. The best sequence of actions is determined by the rewards provided by the environment while the actions are executed. After each action is completed and the environment has moved to the new state, the environment provides a scalar reward $r_{t+1}$ to the agent as feedback. Rewards can be positive, to increase the strength and frequency of an action, or negative, to discourage the action. The goal of the agent is to learn a policy that maximizes the reward. Reinforcement learning faces the challenge of requiring extensive experience before reaching an optimal policy.
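The state, action, reward and policy loop described above can be made concrete with a minimal tabular Q-learning sketch. Everything here is an assumption chosen for illustration: the five-state chain environment, the reward of 1 at the goal, the hyper-parameters, and the purely random exploration policy (Q-learning is off-policy, so it can still recover the greedy policy from random trial and error).

```python
import random

N_STATES = 5                   # states 0..4; state 4 is the terminal goal
ACTIONS = ["left", "right"]    # action space

def step(state, action):
    """Toy environment: returns (next_state, reward, done)."""
    nxt = max(state - 1, 0) if action == "left" else state + 1
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done

def q_learning(episodes=500, alpha=0.5, gamma=0.9, max_steps=100, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state = 0
        for _ in range(max_steps):
            action = rng.choice(ACTIONS)          # pure trial and error
            nxt, reward, done = step(state, action)
            best_next = 0.0 if done else max(q[(nxt, a)] for a in ACTIONS)
            # Move the estimate toward reward + discounted future value.
            q[(state, action)] += alpha * (
                reward + gamma * best_next - q[(state, action)])
            state = nxt
            if done:
                break
    return q

q_table = q_learning()
# Greedy policy: in each non-terminal state pick the highest-valued action.
policy = [max(ACTIONS, key=lambda a: q_table[(s, a)])
          for s in range(N_STATES - 1)]
```

After training, the greedy policy moves right in every state, which is the shortest path to the rewarded goal.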

Exploration and exploitation in all possible directions of a high-dimensional state space lead the learning process through an overwhelming number of states and degrade performance. This limited the earlier successes of reinforcement learning [156, 157, 158] to lower-dimensional problems. Previous sections discussed how deep learning rose over the last decade by learning low-dimensional representations. In addressing the curse of dimensionality, deep learning also enabled reinforcement learning to scale to problems with very high-dimensional states, which were previously considered impractical. The work of Mnih et al. [136], which used deep reinforcement learning to play Atari games and beat human-level experts, greatly advanced the application of reinforcement learning in combination with deep learning. A deep Q-network with experience replay was used to reach this performance. In the deep reinforcement learning framework, an agent acting end-to-end takes raw pixels as input and outputs an estimate of the expected reward for each action. This learned action-value function is the basis of deep Q-learning and keeps being refined with experience. Deep reinforcement learning has already been very successful in fields such as robotics [159, 160, 161, 162] and game playing [163, 164, 165], where learning from experience is very effective and replaces hand-engineered low-dimensional states.

2.4 Recurrent Neural Network

Neural networks have been established as a very powerful tool for many supervised and unsupervised machine learning problems. Their ability to learn from underlying raw features that are not individually interpretable has been unparalleled. Despite their power to learn hierarchical representations, they rely on the assumption of independence among training and test examples [166]. Neural networks function well when test cases are independent, but this assumption fails when data points are correlated in time or space. Recurrent Neural Networks (RNNs), being connectionist models, are able to pass information across sequence steps while processing sequential data one element at a time. This is comparable to understanding the meaning of a word in a text from the preceding context. An RNN is an adaptation of the standard feed-forward neural network that allows it to model sequential data. The basic schema of an RNN is shown in Figure 9, where a hidden unit takes the current input as well as contextual units to produce an output. Unlike in feed-forward neural networks, the decision for the current input depends on activations from previous time steps [167]. The activation values from the previous state are stored inside the hidden layers of the network, which provide the temporal contextual information in place of the fixed contextual windows used in feed-forward neural networks (FFNN). This dynamically changing contextual window makes RNNs better suited for sequence modeling tasks.

The gradients of an RNN can be computed using back-propagation through time [168], and gradient descent is a suitable option for training RNNs. However, the dynamics of RNNs make the gradients highly unstable, resulting in exponentially decaying or exploding gradients. To resolve this issue, an enhanced RNN architecture, Long Short-Term Memory (LSTM), was designed [169]. The LSTM architecture is made up of special units called memory blocks inside the hidden layer of the RNN. Memory blocks are made up of memory cells, which store the temporal state of the network, and gates, which control the information flow. A forget gate adaptively resets the cell state, which allows LSTM models to process continuous input streams. Today RNNs are being extended towards deep RNNs, bidirectional RNNs and recursive neural networks. Among many application areas, language modeling [170, 171, 172], text generation [173, 174, 175], speech recognition [176, 177, 178] and text summarization [179, 180, 181] are the major areas transformed by the use of RNN models.
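Both the recurrence and the gradient decay discussed above can be seen in a minimal sketch of a single-unit recurrent network. The scalar weights `w_xh`, `w_hh` and `b_h` are illustrative assumptions, not values from any cited model; the gradient is obtained by applying the chain rule to the tanh recurrence.

```python
import math

def rnn_step(x_t, h_prev, w_xh, w_hh, b_h):
    """h_t = tanh(w_xh * x_t + w_hh * h_prev + b_h) for one hidden unit."""
    return math.tanh(w_xh * x_t + w_hh * h_prev + b_h)

def run_rnn(sequence, w_xh=1.0, w_hh=0.5, b_h=0.0):
    """Process a sequence one element at a time, carrying the hidden state."""
    h, states = 0.0, []
    for x_t in sequence:
        h = rnn_step(x_t, h, w_xh, w_hh, b_h)
        states.append(h)
    return states

def gradient_wrt_initial_state(sequence, w_xh=1.0, w_hh=0.5, b_h=0.0):
    """d h_T / d h_0: the product over time of w_hh * (1 - h_t ** 2)."""
    h, grad = 0.0, 1.0
    for x_t in sequence:
        h = rnn_step(x_t, h, w_xh, w_hh, b_h)
        grad *= w_hh * (1.0 - h * h)
    return grad

# Same final input (0), different history, different final hidden state.
after_quiet_history = run_rnn([0.0, 0.0])[-1]
after_active_history = run_rnn([1.0, 0.0])[-1]

# With |w_hh| < 1 the gradient shrinks geometrically with sequence length.
long_range_gradient = gradient_wrt_initial_state([1.0] * 20)
```

The two runs end on the same input but produce different states, which is the temporal context a feed-forward network lacks. The gradient over 20 steps is already close to zero, which is the instability that LSTM gates are meant to address.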

2.5 Generative Adversarial Network

Generative Adversarial Networks (GANs) are a generative modeling approach based on deep learning methods. Goodfellow et al. [182] proposed the GAN as a technique for unsupervised and semi-supervised learning. In a GAN model, two networks, a Generator and a Discriminator, are trained together to reach the goal, as presented in Figure 10. Creswell et al. [183] describe the generator as an art forger and the discriminator as an art expert. The forger creates forgeries with the aim of making realistic images, whereas the discriminator tries to distinguish between forgeries and real images. The generator's goal is to produce a model distribution that mimics the target distribution, and the discriminator separates the model distribution from the target [184]. The idea is to train the generator and the discriminator in turn, with the goal of reducing the difference between the model distribution and the target distribution. During GAN training, the discriminator learns its parameters such that its classification accuracy is maximized, and the generator learns its parameters such that it maximally fools the discriminator. The generator and the discriminator must be differentiable, but they need not be invertible.
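The alternating training described above is commonly written as the two-player minimax game from the original GAN formulation of Goodfellow et al. The symbols are introduced here for illustration: $D(x)$ is the discriminator's estimate of the probability that $x$ is real, $G(z)$ is the generator's output for noise $z$, $p_{\text{data}}$ is the target distribution and $p_z$ is the noise prior.

```latex
\min_{G} \max_{D} \; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}(x)} \big[ \log D(x) \big]
  + \mathbb{E}_{z \sim p_{z}(z)} \big[ \log \big( 1 - D(G(z)) \big) \big]
```

The discriminator maximizes $V$ by assigning high probability to real samples and low probability to generated ones, while the generator minimizes $V$ by making $D(G(z))$ large.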

The ability of GANs to train flexible generator functions without explicitly computing likelihoods has made them successful in image generation [185, 186] and image super-resolution [187, 188]. The flexibility of GAN models has allowed them to be extended to structured prediction [189, 190], training energy-based models [191, 192], generating adversarial examples for malware [193, 194], and robust malware detection [45, 195]. GAN models suffer from oscillation during the training process [196], which prevents them from converging to a fixed point. The approaches that have been taken to stabilize the learning process still rely on heuristics that are very sensitive to modifications [197]. Recent research [198, 199] is being carried out to address the stability issues of GANs.

3 File Structure

Executable files are structured differently depending on the target/host OS. In this survey, we briefly cover adversarial attacks on Windows portable executable (PE) files, PDF files and Android files. Although a detailed discussion of file structure is out of scope for this survey, a good understanding of file structure is essential for the successful generation of adversarial examples. The sections of a file can be classified into two groups, mutable and immutable. Mutable sections are those that can be modified for adversarial generation without altering the functionality of the file, whereas modifying immutable sections either breaks the file or alters its functionality. This section provides a brief overview of the three file structures that are discussed in later parts of the survey.

3.1 Windows PE File Structure

The Windows PE file format is an executable file format based on the Common Object File Format (COFF) specification. A PE file is composed of linear streams of data. The structure of a Windows PE file, shown in Figure 11, is derived and confirmed from [200, 201, 202]. The header section consists of the MS-DOS MZ header, the MS-DOS stub program, the PE file signature, the COFF file header and an optional header. The file headers are followed by the body sections, and the file closes with debug information. The first 64 bytes of a PE file are occupied by the MS-DOS header. This header is required to maintain compatibility with files created for Windows version 3.1 or earlier. In the absence of the MZ header, the operating system will fail to load the file [201]. The magic number in the header determines whether the file is of a compatible type. The stub program is run by MS-DOS after loading the executable and is responsible for output messages, including errors and warnings.

The PE file header is located by indexing the e_lfanew field of the MS-DOS header, which gives the file offset of the PE header; adding the memory-mapped base address of the file to this offset yields the actual memory-mapped address. This region of the PE file is one of the target areas for modification when creating adversarial examples. A macro can return the offset of the file signature location regardless of the type of executable file. The 4-byte e_lfanew field sits at offset 0x3c, and the 4-byte signature found at the offset it holds identifies the file as a PE image. After the signature and the 20-byte COFF file header, the optional header follows, occupying 224 bytes in a typical 32-bit image. Even though it may be absent in some types of file, it is not an optional segment for PE files. It contains information such as the initial stack size, program entry point location, preferred base address, operating system version, section alignment information and a few other fields [201]. Section headers are 40 bytes each, with no padding in between. The number of entries in the section table is given by the NumberOfSections field in the file header [203]. A section header contains fields such as Name, PhysicalAddress or VirtualSize, VirtualAddress, SizeOfRawData and PointerToRawData, along with a few more pointers and the section characteristics.
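The header walk described above can be sketched with the Python standard library. This is a minimal illustration, not a full PE parser: `parse_pe_headers` and `build_toy_pe` are hypothetical helper names, and the toy file is a synthetic byte string containing only the fields needed here (the field order of the 20-byte COFF header follows the PE/COFF specification).

```python
import struct

def parse_pe_headers(data):
    """Locate the PE signature via e_lfanew and read the COFF file header."""
    if data[:2] != b"MZ":
        raise ValueError("missing MS-DOS MZ magic number")
    # e_lfanew: 4-byte little-endian file offset stored at 0x3C.
    (e_lfanew,) = struct.unpack_from("<I", data, 0x3C)
    if data[e_lfanew:e_lfanew + 4] != b"PE\x00\x00":
        raise ValueError("missing PE signature")
    # COFF file header (20 bytes) immediately follows the signature.
    (machine, number_of_sections, _timestamp, _symtab, _nsyms,
     size_of_optional_header, _characteristics) = struct.unpack_from(
        "<HHIIIHH", data, e_lfanew + 4)
    return {
        "e_lfanew": e_lfanew,
        "machine": machine,
        "number_of_sections": number_of_sections,
        "size_of_optional_header": size_of_optional_header,
        # The section table starts right after the optional header.
        "section_table_offset": e_lfanew + 4 + 20 + size_of_optional_header,
    }

def build_toy_pe(e_lfanew=0x80, sections=3, optional_size=224):
    """Synthetic, non-executable byte string with just enough header fields."""
    dos_header = b"MZ" + b"\x00" * 58 + struct.pack("<I", e_lfanew)  # 64 bytes
    stub = b"\x00" * (e_lfanew - len(dos_header))
    coff = struct.pack("<HHIIIHH", 0x014C, sections, 0, 0, 0,
                       optional_size, 0x0102)
    return dos_header + stub + b"PE\x00\x00" + coff + b"\x00" * optional_size

info = parse_pe_headers(build_toy_pe())
```

A real analysis pipeline would typically use a dedicated PE library; the sketch only shows which offsets an attacker or defender has to respect when touching header bytes.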

Data is located in data directories inside the data sections. Information from both the section header and the optional header is required to retrieve the data directories. The .text section contains the executable code along with the entry point. Uninitialized data for the application, including variables declared as static, is stored in the .bss section, and the .rdata section holds read-only data such as constants, strings and debug directory information. The .rsrc section contains resource information for the module, and export data for an application is present in the .edata section. Section data is the major area where perturbation takes place to make a file adversarial. Debug information is placed in the .debug section, but the actual debug directories reside in the .rdata section.

3.2 Android File Structure

Android APK files have recently become a target of adversarial attacks [204, 205, 206, 207]. An APK file is basically a ZIP file containing different entries, as shown in Figure 12. The sections of an APK file are described below:

• AndroidManifest.xml: AndroidManifest.xml contains the information that describes the application, such as the application's package name, its components, the permissions it requires and its compatibility features [208]. Because it holds such a large amount of information, AndroidManifest.xml is one of the most heavily exploited sections of an APK file for adversarial attacks.

• classes.dex: Android applications are written in Java, so their source code carries the .java extension. This source code is compiled, optimized and packed into the classes.dex file.

• resources.arsc: This file is an archive of compiled resources. Resources include the design elements of an app such as layouts, strings and images. The file forms the optimized package of these resources.

• res: Resources of the app that are not compiled into resources.arsc stay in the res folder. The XML files inside this folder are compiled to binary XML to boost performance [209]. Each sub-folder inside res stores a different type of resource.

• META-INF: This section is present only in signed APKs and lists all the files in the APK along with their signatures. Signature verification is done by comparing the signature with the uncompressed file in the archive [210].
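Because an APK is a ZIP archive, the entries listed above can be inspected with the standard `zipfile` module. The sketch below builds a toy archive in memory; its contents are placeholders (a real AndroidManifest.xml is stored as binary XML and a real classes.dex is a full Dalvik executable), and `build_toy_apk` and `summarize_apk` are hypothetical helper names.

```python
import io
import zipfile

def build_toy_apk():
    """Create an in-memory ZIP that mimics the entry layout of an APK."""
    buf = io.BytesIO()
    with zipfile.ZipFile(buf, "w") as apk:
        apk.writestr("AndroidManifest.xml", b"<manifest/>")        # placeholder
        apk.writestr("classes.dex", b"dex\n035\x00")               # placeholder
        apk.writestr("resources.arsc", b"")
        apk.writestr("res/layout/main.xml", b"<LinearLayout/>")
        apk.writestr("META-INF/MANIFEST.MF", b"Manifest-Version: 1.0\n")
    return buf.getvalue()

def summarize_apk(apk_bytes):
    """Report which of the standard APK sections are present."""
    with zipfile.ZipFile(io.BytesIO(apk_bytes)) as apk:
        names = apk.namelist()
    return {
        "has_manifest": "AndroidManifest.xml" in names,
        "dex_files": [n for n in names if n.endswith(".dex")],
        "resource_files": [n for n in names if n.startswith("res/")],
        "signed": any(n.startswith("META-INF/") for n in names),
    }

summary = summarize_apk(build_toy_apk())
```

The presence of META-INF entries is used here only as a rough indicator of signing; it does not verify any signature.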

3.3 PDF File Structure

In this section we look into the internal structure of the PDF file format. PDF is a portable document format with a wide range of features, capable of representing documents that include text, images, multimedia and much more. The basic structure of a PDF file is shown in Figure 13, and its parts are discussed below:

• PDF header: The PDF header is the first line of the file and specifies the version of the PDF file format.

• PDF body: The body of a PDF file consists of the objects present in the document. The objects include images, data, fonts, annotations, text streams, etc. [211]. Interactive features such as animation and graphics can also be embedded in the document. This section makes it possible to inject contents and files, which makes it the most favourable avenue for adversarial attackers.

• Cross-reference table: The cross-reference table stores links to all the objects or elements in a file. The table helps in navigating to other pages and contents of the document. The cross-reference table is updated automatically when the PDF file is updated.

• Trailer: The trailer denotes the end of the PDF file and contains a link to the cross-reference table. The last line of the trailer contains the end-of-file marker, %%EOF.
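The four parts listed above can be located in a file with a few regular expressions. The sketch below is an illustration on a hand-written toy document, not a PDF parser: `TOY_PDF` and `inspect_pdf` are hypothetical names, and real files may contain incremental updates, compressed object streams and multiple trailers that this code ignores.

```python
import re

# Minimal hand-written PDF skeleton: header, one object, xref table, trailer.
TOY_PDF = (
    b"%PDF-1.4\n"
    b"1 0 obj\n<< /Type /Catalog >>\nendobj\n"
    b"xref\n0 2\n0000000000 65535 f \n0000000009 00000 n \n"
    b"trailer\n<< /Size 2 /Root 1 0 R >>\n"
    b"startxref\n45\n%%EOF\n"
)

def inspect_pdf(data):
    """Extract the version, object count and cross-reference table offset."""
    header = re.match(rb"%PDF-(\d\.\d)", data)
    startxref = re.search(rb"startxref\s+(\d+)\s+%%EOF\s*$", data)
    return {
        "version": header.group(1).decode() if header else None,
        "object_count": len(re.findall(rb"\d+ \d+ obj\b", data)),
        "xref_offset": int(startxref.group(1)) if startxref else None,
        "has_eof_marker": data.rstrip().endswith(b"%%EOF"),
    }

info = inspect_pdf(TOY_PDF)
# The trailer's startxref value points at the cross-reference table.
xref_keyword = TOY_PDF[info["xref_offset"]:info["xref_offset"] + 4]
```

Content appended to the body changes byte offsets, so an attacker who injects objects must keep the cross-reference table and `startxref` value consistent for strict readers.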

4 Malware Detection

In a globally networked world, malware poses a serious threat to data, devices and users on the internet. From data theft to the disruption of computer operations, malware is a growing menace as reliance on the internet increases. Malware is used as a weapon in the digital world, carrying malicious intent across the internet. Malware attackers try to take advantage of legitimate users to accomplish financial or other goals. Malware can take many forms, such as viruses, trojans, ransomware, rootkits, spyware and so on. The global cost of cybercrime is projected to be around $10.5 trillion in 2025 [212], which shows the urgency of mitigating or limiting the damage from such malicious software. Security researchers around the world are working to combat malware with antivirus software, firewalls and numerous other approaches. However, with big incentives driving malware production, millions of new malware samples (https://www.av-test.org/en/statistics/malware/) are introduced to the cyber world every year. This exponentially growing volume of malware comes with highly capable tools and techniques, and thus requires continuous work on effective and efficient malware detection technologies.

Current malware detection techniques are broadly classified into signature-based and behavior-based approaches, as shown in Figure 14. Traditionally, signature-based approaches were used to detect malware. However, because this approach is unable to detect zero-day attacks, much of the focus has moved to behavior-based approaches (dynamic and online). Modern anti-virus products adopt hybrid approaches that combine signature-based approaches with behavior-based techniques. We now discuss the different types of malware detection approaches.

4.1 Signature Based Malware Detection

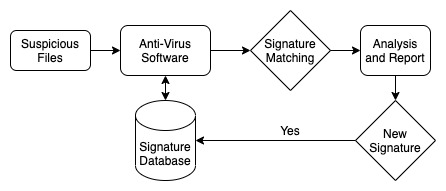

A signature is a short sequence of bytes unique to each malware that helps distinguish the malware from other files. Since this approach works by maintaining a database of malware signatures, it has a very low false positive rate. Signature-based detection has been very effective and fast for malware detection, but it is not able to capture 'unseen' malware. Figure 15 shows the malware detection process using signatures. As shown in the figure, the signature database is a predefined list of all known malware signatures and is solely responsible for the entire detection process. When the anti-malware engine detects a malicious object, its signature is added to the signature database for future detection. The signature database of a good malware detector holds a huge number of signatures that can detect malware [213]. Signature-based malware detection offers fast detection, efficient execution and broad accessibility [214]. However, the inability to detect zero-day malware, whose signature is not available in the database of the anti-malware engine, calls the reliability of signature-based approaches into question. Attackers can easily extract signature patterns and use them to disguise the signature of malware. Current malware comes with polymorphic and metamorphic properties [215, 216], which can easily change its behavior enough to change the signature of the file. Being completely dependent on known malware, signature-based detection can detect neither zero-day attacks nor variations of existing attacks. In addition, the signature database grows exponentially as malware families grow at a rapid pace [217].
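The strength and the weakness of signature matching can both be seen in a minimal sketch. The signature, the sample bytes and the XOR encoding below are toy assumptions: a real polymorphic sample would also carry a decoder stub so that it still runs, and real engines use far richer signature formats than a plain byte substring.

```python
SIGNATURE_DB = [b"EVIL_PAYLOAD"]   # hypothetical byte-sequence signatures

def matches_signature(data, database=SIGNATURE_DB):
    """Flag a file if any known signature occurs anywhere in its bytes."""
    return any(sig in data for sig in database)

def xor_encode(data, key=0x5A):
    """Toy stand-in for polymorphic encoding: every byte is changed."""
    return bytes(b ^ key for b in data)

known_sample = b"header" + b"EVIL_PAYLOAD" + b"footer"
variant = xor_encode(known_sample)

detected_original = matches_signature(known_sample)   # known malware
detected_variant = matches_signature(variant)         # re-encoded variant
detected_benign = matches_signature(b"hello world")   # unrelated file
```

The lookup is fast and produces no false positive on the benign bytes, but the re-encoded variant no longer contains the stored byte sequence and passes undetected.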

4.2 Zero-day Malware Detection

To overcome the limitations of the signature-based approach, zero-day malware detection techniques focus on capturing unseen malware. In modern zero-day detection approaches, suspicious objects are identified based on the behavior or potential behavior of the file [22, 21]. An object's potential behavior is first analyzed for suspicious activity before it is deployed in a real-time production environment. Behavior that is anomalous compared to the actions of benign files indicates the presence of malware. Most zero-day detection approaches are built around machine learning systems, with state-of-the-art works using modern deep learning architectures. The captured behavior of the file under inspection is generalized using machine learning models, which are later used to detect unseen malware families. We discuss the different types of zero-day malware detection below:

• Static Approach: Static malware detection is the closest approach to a signature-based system, as detection is carried out without running the file. Executing an unknown file may not always be possible on a system due to security risks, and this is where static detection comes into play. The anti-malware system captures static attributes such as hashes, header information, file type, file size, presence of API calls, n-grams, etc. from the binary code of the executable using reverse engineering tools. Once the features are extracted, they are pre-processed to keep only the non-redundant and important features. Among the numerous available features, n-grams [218, 219] for byte sequence analysis and opcodes [220], used to analyze how frequently each operation code appears, are the most widely used. As shown in Figure 16, the extracted features are fed to different machine learning algorithms, ranging from classical to deep learning architectures, to train the detection model. The trained model is then used to detect malware from static features. However, static detection alone is not sufficient to detect more sophisticated attacks [221, 222, 223], as static features cannot reflect the exact behavior of malware at run time, which limits its real-world applications [224].

• Dynamic and Online Approach: Dynamic approaches [225, 226, 227] execute a suspicious file inside an isolated virtual environment such as a sandbox [228] and detect malware based on the run-time behavior of the program. The closed environment prevents malware from escaping and attacking the system on which the analysis is being conducted. On execution, malware can maliciously change registry keys and obtain the privileged mode of the operating system [229]. During the execution of malware, properties of the operating system change, and these changes are logged by an agent in the controlled environment. Dynamic analysis enables the system to capture dynamic indicators such as application programming interface (API) calls, registry keys, domain names, file locations and other system metrics. These features are pre-processed and fed to a machine learning model to train a malware detector, following the flow shown in Figure 17. Dynamic analysis is considered more powerful than static analysis due to its ability to capture a larger number of system features. Code obfuscation approaches and polymorphic malware are considered ineffective against dynamic malware detection [230], reflecting its resilience to such sophisticated malware.

The dynamic approach, though it overcomes some limitations of static detection, has its own challenges. Every suspicious file needs to be executed in an isolated environment for a specific time frame, which consumes significant time and resources [231]. A malware file is not guaranteed to exhibit the same behavior in a sandbox and in a live environment [232]. Modern smart malware comes with the ability to detect the presence of a sandbox and stay dormant until it reaches a live system. In most current applications, static and dynamic analysis are combined to detect the presence of malware in a file [233, 234, 235]. To combat evasive polymorphic and metamorphic malware that exhibits its malicious functionality only during particular events, online malware detection approaches are used [236, 237, 238, 20, 19]. Online malware analysis continuously monitors the system for the presence of maliciousness in any file [239]. Continuous monitoring helps to capture malware at any time in a live environment. However, online detection also imposes a continuous monitoring overhead on the system. As most of the adversarial attacks performed so far in the literature target static malware detection approaches, this survey focuses primarily on evasion attacks carried out against static malware detection.
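As an illustration of the static features that these detectors consume, byte n-grams can be extracted without executing the file. The sketch below is a minimal example under stated assumptions: the four-byte input and the two-entry vocabulary are made up, and `byte_ngrams` and `feature_vector` are hypothetical helper names rather than functions from any cited system.

```python
from collections import Counter

def byte_ngrams(data, n=2):
    """Count every overlapping n-byte window in the raw file bytes."""
    return Counter(data[i:i + n] for i in range(len(data) - n + 1))

def feature_vector(data, vocabulary, n=2):
    """Fixed-length vector of n-gram counts for a chosen vocabulary."""
    counts = byte_ngrams(data, n)
    return [counts[gram] for gram in vocabulary]

code_bytes = b"\x90\x90\x90\xc3"               # toy byte sequence
vocabulary = [b"\x90\x90", b"\x90\xc3"]        # hypothetical selected n-grams

counts = byte_ngrams(code_bytes)
features = feature_vector(code_bytes, vocabulary)
```

Because the vector depends only on raw bytes, appending or inserting bytes shifts these counts, which is what many static evasion attacks exploit.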

5 Adversarial Threat Model

Security threats are defined in terms of their goals and capabilities. In this section, we define the adversarial threat model, tailored to evasion attacks on malware detection, in four parts: adversarial knowledge, attack surface, adversarial capabilities and adversarial goals. This section aims to explain the major components of adversarial attacks to the reader.

5.1 Adversarial Knowledge

The adversary’s knowledge is the amount of information about a model under attack that the attacker has, or is assumed to have, to carry out adversarial attacks against the model. An adversarial attack can be classified into two groups based on the attacker’s knowledge:

• White box attack: In a white box approach, the attacker has full knowledge of the underlying model. Such knowledge might include, but is not limited to, the name of the algorithm, the training data, the tuned hyper-parameters and gradient information. It is relatively easy to carry out attacks in the white box setting due to the large amount of available knowledge. Current state-of-the-art works in the white box setting have achieved near-perfect adversarial attacks [100].

• Black box attack: In a black box approach, the attacker only has access to the inputs and outputs of the model. No information is provided about the internal structure of the model. Generally, in a black box attack, a surrogate model is created by guessing the internal structure of the target model from its inputs and outputs [240, 81]. In addition, in a gray box attack [241], a type of black box attack, the attacker knows the output performance of the model in the form of accuracy, a confusion matrix or some other performance metric.

The amount of adversarial knowledge varies widely, from complete access to the actual source code to receiving only the output of the model. In general, black box adversarial attacks are assumed to be more difficult to orchestrate than white box attacks, primarily due to the limited information available about the underlying target model. However, black box attacks better reflect real-world use cases, where in practice an attacker is unlikely to have any knowledge of the model or its parameters.

5.2 Attack Surface

The attack surface includes the different vulnerable points through which an attacker can attack the target model. Machine learning algorithms pass through a pipeline of stages before deployment, and the flow of data through this pipeline introduces vulnerabilities at each stage [242]. From data collection through transformation and processing to output generation, an attacker has different entry points. The attack surface comprises all those points in machine learning models (malware detection models in our case) where adversaries can carry out their attacks. Based on the different ways of carrying out attacks, the attack surface has been classified into the following broad categories [243]:

• Poisoning Attack: This attack is carried out by contaminating the training data during the training process of the model [244, 245, 246]. The training data is poisoned with faulty data, making the machine learning model learn from a wrong dataset. As a result of the poisoned training data, the entire training process is compromised.

- •

- •

5.3 Adversarial Capabilities

Adversarial capabilities denote the abilities of adversaries and depend on their knowledge of the target model. Some adversaries have access to the training data, some have access to the gradient information of the model, while others have no access to the model at all. The capabilities of an attacker vary depending on the information available and on the phase (i.e. training or testing) of the model under attack. The most straightforward attack scenario is one in which the attacker has access to all or part of the training data. For adversarial attacks carried out on malware files, adversarial capabilities can be classified into the following categories:

• Data Injection: Data injection is the ability of an attacker to inject new data. Multiple types of data injection can take place. One type is performed on the training data before the training process. Another type inserts a perturbation that forms a new section or replaces an original section within an existing file. Injected data can corrupt the original model or cause the injected file to evade detection.

• Data Modification: Data modification can likewise be performed on both the training data and the file that is to evade detection. If the attacker has access to the training data, the data can be modified so that the model learns from modified data. The attacker can also modify the input data to introduce a perturbation that leads to evasion.

• Logic Corruption: Logic corruption is the most dangerous capability an attacker can possess, and also the most improbable. Whenever an attacker has complete access to a model, they can modify the learning parameters and other hyper-parameters of the model. Logic corruption can go undetected, which makes it hard to design any remedy.

5.4 Adversarial Goals

An attacker tries to fool the target model, causing it to produce misclassifications. The algorithms used to attack successfully and achieve the adversary's goals are discussed in Section 6. Typically, an attacker's adversarial goals are categorized as follows:

• Untargeted Misclassification: An attacker tries to change the output of the model to a value different from the original prediction. For a malware classification problem, if an ML model predicts a malware file as family A, the goal is to force the model to misclassify it as a family other than A.

• Targeted Misclassification: An attacker tries to change the output of the model to a target value. For example, if an ML model predicts a malware file as family A, the goal is to force the model to misclassify it as family B.

• Confidence Reduction: An attacker's goal is to reduce the confidence of an ML model's prediction. It is not necessary to change the predicted value; a reduction in confidence is enough to meet the goal.

To summarize, Figure 18 gives an overview of adversarial attack difficulty with respect to the attacker's knowledge, capabilities and goals. Moving in the direction of increasing attack complexity, from confidence reduction to targeted misclassification, attack difficulty also increases for the attacker. White box attacks with higher attacker capability, however, have the least attack difficulty.
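The three goals can be expressed as simple success criteria over a classifier's output probabilities. The sketch below assumes a hypothetical three-family malware classifier whose output is a dictionary mapping family names to probabilities; the function names and the probability values are illustrative only.

```python
def predicted_label(probs):
    """Label with the highest predicted probability."""
    return max(probs, key=probs.get)

def untargeted_success(original_label, adversarial_probs):
    """Any prediction other than the original label counts."""
    return predicted_label(adversarial_probs) != original_label

def targeted_success(target_label, adversarial_probs):
    """Only a prediction equal to the chosen target label counts."""
    return predicted_label(adversarial_probs) == target_label

def confidence_reduced(original_label, original_probs, adversarial_probs):
    """The label may stay the same as long as its confidence drops."""
    return adversarial_probs[original_label] < original_probs[original_label]

original = {"A": 0.90, "B": 0.07, "C": 0.03}   # predicted as family A
weakened = {"A": 0.60, "B": 0.30, "C": 0.10}   # still A, lower confidence
flipped = {"A": 0.40, "B": 0.50, "C": 0.10}    # now predicted as family B

results = {
    "weakened_confidence": confidence_reduced("A", original, weakened),
    "weakened_untargeted": untargeted_success("A", weakened),
    "flipped_untargeted": untargeted_success("A", flipped),
    "flipped_targeted_B": targeted_success("B", flipped),
    "flipped_targeted_C": targeted_success("C", flipped),
}
```

The same perturbed output can satisfy a weaker goal while failing a stronger one, which mirrors the ordering of attack difficulty described above.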

6 Adversarial Algorithms

In this section, we explore the most prominent adversarial attack algorithms that have been developed in different domains and applied to generate adversarial malware samples. The algorithms were developed at different times and trade off application domain, performance, computational efficiency and complexity [249]. We discuss the architecture, implementation and challenges of each algorithm. Most of the attack algorithms are gradient-based approaches in which perturbations are obtained by optimizing a distance metric between the original and perturbed samples.

6.1 Limited-memory Broyden-Fletcher-Goldfarb-Shanno (L-BFGS)

Szegedy et al. [71] proposed one of the first gradient-based approaches for adversarial example generation in the imaging domain, using the box-constrained Limited-memory Broyden-Fletcher-Goldfarb-Shanno optimization technique. The authors studied counter-intuitive properties of deep neural networks that allow small perturbations in images to fool deep learning models into misclassification. Adversarial examples crafted for a particular neural network are also able to evade other neural networks trained with completely different hyper-parameters. These results are attributed to non-intuitive characteristics and intrinsic blind spots of deep learning models learned by backpropagation, whose structure is connected to the data distribution in a non-obvious way. Traditionally, for a small enough radius $\epsilon > 0$ around a given training sample $x$, an input $x + r$ satisfying $\|r\| < \epsilon$ is expected to be classified correctly by a model with very high probability. However, many underlying kernels are found not to hold to this kind of smoothness. A simple optimization procedure is able to find adversarial samples using imperceptibly small perturbations, leading to incorrect classifications by the classifier. While adding noise to an original image, the goal is to minimize the perturbation $r$ added to the original image under the $L_2$ distance. A classifier mapping pixel value vectors to a discrete label set is denoted as $f: \mathbb{R}^m \rightarrow \{1, \dots, k\}$ and the associated loss function is given by $\mathrm{loss}_f: \mathbb{R}^m \times \{1, \dots, k\} \rightarrow \mathbb{R}^+$. For a given image $x \in \mathbb{R}^m$ with a target label $l \in \{1, \dots, k\}$, the box-constrained optimization problem is defined as:

$$\min_{r} \; c\,\|r\|_2 + \mathrm{loss}_f(x + r, l) \quad \text{subject to} \quad x + r \in [0, 1]^m \tag{1}$$

where $x$ is the original image, $r$ is the added perturbation, $\mathrm{loss}_f$ is the loss function of the classifier, $l$ is the incorrect label the classifier is driven to predict, and $c > 0$ is a constant weighting the size of the perturbation against the loss. The perturbed sample $x + r$ is an arbitrarily chosen minimizer of the distance. Because computing this minimal distance exactly is hard, it is approximated using box-constrained L-BFGS. This early proposal of L-BFGS for adversarial example generation triggered a great deal of research into the flaws of deep learning.

6.2 Fast Gradient Sign Method (FGSM)

Considering gradient-based optimization the workhorse of modern AI, Goodfellow et al. [74] proposed an efficient approach for generating adversarial perturbations in the image domain. In contrast to earlier works, which attributed the adversarial phenomenon to non-linearity and overfitting, the authors argued that the linear nature of neural networks is what makes them vulnerable. Linear behaviour in high-dimensional space is found to be sufficient to cause adversarial samples. This linearity results from a trade-off made when designing models that are easy to train: LSTMs [169], ReLUs and maxout networks [250] are all intentionally designed to behave linearly for ease of optimization. To define the approach formally, let $\theta$ be the parameters of the model, $x$ the input to the model, $y$ the target associated with $x$ and $J(\theta, x, y)$ the cost function used to train the neural network. Linearizing the cost function around the current value of $\theta$, the perturbation $\eta$ can be obtained by

$$\eta = \epsilon \, \mathrm{sign}\left(\nabla_x J(\theta, x, y)\right) \tag{2}$$

where $\epsilon$ bounds the max norm of the perturbation and the required gradient can be computed using backpropagation. This approach is called the Fast Gradient Sign Method.
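The single-step update can be illustrated on a model small enough to differentiate by hand. The sketch below assumes a toy logistic-regression classifier with cross-entropy loss, for which the input gradient is $(p - y)\,w$; the weights, the sample and the value of `eps` are hypothetical, and no clipping to a valid feature range is applied.

```python
import math

def predict(w, b, x):
    """Probability of class 1 under a toy logistic model (an assumption)."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))

def loss_grad_x(w, b, x, y):
    """Gradient of the cross-entropy cost J(theta, x, y) w.r.t. the input x."""
    p = predict(w, b, x)
    return [(p - y) * wi for wi in w]

def sign(v):
    return (v > 0) - (v < 0)

def fgsm(w, b, x, y, eps):
    """One step of size eps along the sign of the input gradient."""
    grad = loss_grad_x(w, b, x, y)
    return [xi + eps * sign(gi) for xi, gi in zip(x, grad)]

# Hypothetical model parameters and a sample with true label 1.
w, b = [2.0, -3.0, 1.0], 0.0
x, y = [0.5, 0.1, 0.2], 1
x_adv = fgsm(w, b, x, y, eps=0.25)

print(predict(w, b, x))      # confidence in class 1 before the attack
print(predict(w, b, x_adv))  # confidence in class 1 after the attack
```

Every feature moves by exactly `eps`, so the max norm of the perturbation is fixed in advance while the loss is pushed up along every dimension at once.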

Conversion of features from problem space to feature space affects precision. Images are commonly represented with 8 bits per pixel, so all information below 1/255 of the dynamic range is discarded. With limited precision, a classifier may not be able to respond to perturbations smaller than the precision of the features. A classifier with a well-separated decision boundary between classes is expected to assign the same class to the original sample $x$ and the perturbed sample $\tilde{x} = x + \eta$ as long as $\|\eta\|_\infty < \epsilon$, where $\epsilon$ is small enough to be discarded. Taking the dot product of a weight vector $w$ and an adversarial example $\tilde{x}$:

$$w^\top \tilde{x} = w^\top x + w^\top \eta \tag{3}$$

The adversarial perturbation increases the activation by $w^\top \eta$. This increase can be maximized under the max norm constraint on $\eta$ by assigning $\eta = \epsilon\,\mathrm{sign}(w)$. If $w$ has $n$ dimensions and the average magnitude of its elements is $m$, the activation grows by $\epsilon m n$. Although $\|\eta\|_\infty$ does not grow with the dimensionality of the problem, the activation change caused by the adversarial perturbation grows linearly with $n$, so for high-dimensional problems many small input changes add up to a large output change. Given sufficient dimensions, even simple linear models are seen to have adversarial examples. Adversarial examples are found to occur in contiguous regions of the 1-D subspace defined by the fast gradient sign method, rather than in fine pockets as was traditionally believed. This makes adversarial examples abundant and lets them generalize across different machine learning models. Because FGSM generates adversarial samples quickly and efficiently, it is among the most widely used techniques in this field.
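The linear growth of the activation with dimensionality can be checked numerically. The sketch below is illustrative only: it builds hypothetical weight vectors whose elements all have magnitude `m`, applies a perturbation of max norm `eps` aligned with the sign of the weights, and compares the resulting shift with the product of `eps`, `m` and `n`.

```python
def activation_shift(w, eps):
    """Dot product of w with eta = eps * sign(w), i.e. eps * sum(|w_i|)."""
    return sum(wi * eps * ((wi > 0) - (wi < 0)) for wi in w)

eps, m = 0.01, 0.5  # hypothetical perturbation size and weight magnitude
for n in (10, 1000, 100000):
    # Alternating signs, constant magnitude m, n dimensions.
    w = [m if i % 2 == 0 else -m for i in range(n)]
    print(n, activation_shift(w, eps), eps * m * n)
```

The perturbation of each feature stays at 0.01 in all three cases, yet the shift in activation goes from roughly 0.05 to roughly 500 as the number of dimensions increases.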

6.3 Iterative Gradient Sign Method (IGSM)

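Unlike the one-step approach, which takes a single large step in the direction of increasing classifier loss, the Iterative Gradient Sign Method takes small iterative steps and adjusts the direction after each step [72]. This basic iterative method extends FGSM by applying it multiple times with a small step size $\alpha$ and clipping the pixel values after each iteration to keep the perturbation within an $\epsilon$-neighbourhood of the original image:

$$X^{adv}_0 = X, \qquad X^{adv}_{N+1} = \mathrm{Clip}_{X,\epsilon}\left\{ X^{adv}_N + \alpha \, \mathrm{sign}\left(\nabla_X J(X^{adv}_N, y_{true})\right) \right\} \tag{4}$$

where $X^{adv}_N$ is the perturbed image at iteration $N$, $y_{true}$ is the true class of the image, and the function $\mathrm{Clip}_{X,\epsilon}$ performs pixel-wise clipping of the image in order to keep the perturbation inside the $\epsilon$-neighbourhood of the source image $X$. Kurakin et al. [72] extended the basic iterative method to the iterative least-likely class method to produce adversarial examples for targeted misclassification. The desired class $y_{LL}$ for this version of the iterative approach is the least likely class according to the prediction of the trained network, given as:

$$y_{LL} = \arg\min_{y} \; p(y \mid X) \tag{5}$$

To make the adversarial example be classified as $y_{LL}$, $\log p(y_{LL} \mid X)$ is maximized by taking iterative steps in the direction given by $\mathrm{sign}\left(\nabla_X \log p(y_{LL} \mid X)\right)$. For a network trained with cross-entropy loss, this direction equals $\mathrm{sign}\left(-\nabla_X J(X, y_{LL})\right)$, so the adversarial generation procedure can be written as:

$$X^{adv}_0 = X, \qquad X^{adv}_{N+1} = \mathrm{Clip}_{X,\epsilon}\left\{ X^{adv}_N - \alpha \, \mathrm{sign}\left(\nabla_X J(X^{adv}_N, y_{LL})\right) \right\} \tag{6}$$

This iterative algorithm adds finer perturbations without destroying the original sample, even for larger $\epsilon$.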
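The basic iterative update with clipping can be sketched on the same kind of toy model used for single-step attacks. This is a minimal illustration, assuming a hypothetical logistic-regression classifier, features scaled to [0, 1], and arbitrarily chosen values for `eps`, `alpha` and `steps`; it shows the untargeted variant only.

```python
import math

def predict(w, b, x):
    """Probability of class 1 under a toy logistic model (an assumption)."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))

def sign(v):
    return (v > 0) - (v < 0)

def clip(x_adv, x, eps):
    """Keep each feature within eps of the source value and inside [0, 1]."""
    return [min(max(a, xi - eps, 0.0), xi + eps, 1.0)
            for a, xi in zip(x_adv, x)]

def basic_iterative(w, b, x, y, eps, alpha, steps):
    x_adv = list(x)
    for _ in range(steps):
        p = predict(w, b, x_adv)
        grad = [(p - y) * wi for wi in w]  # input gradient of cross-entropy
        x_adv = [a + alpha * sign(g) for a, g in zip(x_adv, grad)]
        x_adv = clip(x_adv, x, eps)        # re-project after every step
    return x_adv

# Hypothetical model parameters and a sample with true label 1.
w, b = [2.0, -3.0, 1.0], 0.0
x, y = [0.5, 0.1, 0.2], 1
x_adv = basic_iterative(w, b, x, y, eps=0.25, alpha=0.05, steps=10)

print(x_adv)
print(predict(w, b, x), predict(w, b, x_adv))
```

Because the gradient is recomputed at every step, the direction can change between iterations for non-linear models, which is what distinguishes this procedure from a single large step.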

6.4 Jacobian Saliency Map Attack (JSMA)

Most adversarial generation techniques observe output variations to generate input perturbations, whereas Papernot et al. [251] crafted adversarial samples by constructing a mapping from input perturbations to output variations. The approach limits the $L_0$-norm of the perturbation, that is, it modifies a minimal number of pixels. The proposed adversarial generation algorithm against feedforward DNNs modifies a small portion of the input features by applying heuristic search. An adversarial sample is constructed by adding a perturbation $\delta_X$ to a benign sample $X$ through the following optimization problem:

$$\arg\min_{\delta_X} \|\delta_X\| \quad \text{s.t.} \quad F(X + \delta_X) = Y^* \tag{7}$$

where $X^* = X + \delta_X$ is the adversarial sample and $Y^*$ is the desired adversarial output. The forward derivative is used to evaluate the changes in the output caused by corresponding modifications of the input, and these changes are presented in a matrix form called the Jacobian of the function. Replacing gradient descent techniques with the forward derivative allows the attacker to generalize the attack to both supervised and unsupervised architectures for broad families of adversaries. The forward derivative is the Jacobian matrix of the function $F$ learned by the neural network during training. For a function with $M$ input features and a single-dimensional output, the Jacobian matrix is given as:

$$\nabla F(X) = \left[ \frac{\partial F(X)}{\partial x_1}, \dots, \frac{\partial F(X)}{\partial x_M} \right] \tag{8}$$

The forward derivative helps to rule out regions that are unlikely to yield adversarial samples and to focus on features with a high forward derivative, giving an efficient search and smaller distortions. JSMA is a white-box attack, since computing the forward derivative requires the network architecture and parameters; its only assumption about the DNN architecture is that it uses differentiable activation functions. The algorithm takes a benign sample $X$, a target output $Y^*$, a feedforward DNN $F$, a maximum distortion parameter $\Upsilon$ and a feature variation parameter $\theta$, and goes through the following steps to produce an adversarial sample $X^*$ such that $F(X^*) = Y^*$:

• Compute the forward derivative $\nabla F(X^*)$

• Construct a saliency map $S$ based on the derivative

• Modify an input feature $i_{max}$ by $\theta$
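The three steps above can be sketched on a model whose Jacobian has a closed form. The example below is a simplification under stated assumptions: the network is a hypothetical softmax over a linear layer, only one feature is modified per iteration (the original attack searches over pairs of features), the distortion budget is expressed as a hypothetical `max_changes` count, and the saliency score follows the increasing-feature rule of Papernot et al. [251].

```python
import math

def softmax(z):
    top = max(z)
    e = [math.exp(v - top) for v in z]
    total = sum(e)
    return [v / total for v in e]

def model(W, x):
    """Toy network F(X) = softmax(W X); W holds one row per class."""
    return softmax([sum(wi * xi for wi, xi in zip(row, x)) for row in W])

def argmax(values):
    return max(range(len(values)), key=values.__getitem__)

def forward_derivative(W, x):
    """Jacobian jac[j][i] = dF_j / dx_i of the softmax-linear model."""
    p = model(W, x)
    avg = [sum(p[k] * W[k][i] for k in range(len(W))) for i in range(len(x))]
    return [[p[j] * (W[j][i] - avg[i]) for i in range(len(x))]
            for j in range(len(W))]

def saliency_map(jac, target):
    """Score features whose increase raises the target and lowers the rest."""
    scores = []
    for i in range(len(jac[0])):
        d_target = jac[target][i]
        d_others = sum(jac[j][i] for j in range(len(jac)) if j != target)
        rejected = d_target < 0 or d_others > 0
        scores.append(0.0 if rejected else d_target * abs(d_others))
    return scores

def jsma(W, x, target, theta=0.2, max_changes=10):
    x_adv = list(x)
    for _ in range(max_changes):
        if argmax(model(W, x_adv)) == target:
            break
        scores = saliency_map(forward_derivative(W, x_adv), target)
        i_max = argmax(scores)
        if scores[i_max] <= 0.0:
            break  # no feature helps the target class any further
        x_adv[i_max] = min(1.0, x_adv[i_max] + theta)
    return x_adv

# Hypothetical weights and sample; class 0 is predicted, class 1 is the target.
W = [[1.0, 0.0, 0.5], [0.0, 2.0, 0.0], [0.5, 0.0, 1.5]]
x = [0.8, 0.1, 0.1]
x_adv = jsma(W, x, target=1)
print(argmax(model(W, x)), argmax(model(W, x_adv)), x_adv)
```

Only the single feature with the highest saliency is changed here, which illustrates why this family of attacks keeps the number of modified features small.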

The forward derivative calculates gradients similar to those computed for backpropagation, but it takes the derivative of the network directly rather than of its cost function, and differentiates with respect to the input features rather than the network parameters. Consequently, gradients are propagated forward, which helps in determining the input components that lead to significant changes in the network outputs. The authors extended the application of saliency maps [252] to construct adversarial saliency maps, which indicate the features with significant impact on the output and are thus a versatile tool for generating a wide range of adversarial examples. Once the saliency map identifies the input feature to be perturbed, the benign sample is perturbed with the total distortion limited by the parameter $\Upsilon$. The limiting parameter depends on human perception of the adversarial sample. The experiment was carried out on the LeNet architecture using the MNIST dataset, and adversarial crafting was done by increasing or decreasing the pixel intensities of images.

Before wrapping up JSMA, we briefly discuss the saliency vector. A saliency vector records, for each block of input data, how significant its features are to the machine learning model. The importance of an input feature given by the saliency vector can be thought of as a function of the network’s sensitivity to changes in that feature [253]. The position of an element in the vector corresponds to a region of the original file, and the value of that element measures the importance of that region. Zhou et al. [254] proposed Class Activation Mapping (CAM) to produce visual interpretations for CNN-based models. The authors used global average pooling to indicate the discriminative image regions used by the CNN to make its decision. Because obtaining CAM requires modifying and retraining the original model, Selvaraju et al. [255] proposed the Grad-CAM method. Gradient-weighted CAM uses the gradient information flowing into the final convolutional layer to produce a localization map highlighting the important regions of the image. The saliency vector makes it possible to observe dataset bias and to improve models based on the training data.

6.5 Carlini & Wagner attack (C&W)

Carlini & Wagner [256] proposed an adversarial generation approach to overcome defensive distillation. Defensive distillation was a then-recent technique for hardening any feedforward neural network against adversarial examples with only a single retraining. The proposed approach can perform three types of attack, the $L_0$ attack, the $L_2$ attack and the $L_\infty$ attack, to evade both defensively distilled and undistilled networks. These attacks are based on different distance metrics:

• $L_0$ distance, measuring the number of pixels modified in an image

• $L_2$ distance, measuring the standard Euclidean distance between the original sample and the perturbed sample

• $L_\infty$ distance, measuring the largest change among any of the perturbed coordinates
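The three distance metrics are straightforward to compute for a pair of samples. The sketch below uses flat lists of feature values; the function names and the example vectors are illustrative assumptions.

```python
import math

def l0_distance(x, x_adv):
    """Number of coordinates that were modified."""
    return sum(1 for a, c in zip(x, x_adv) if a != c)

def l2_distance(x, x_adv):
    """Standard Euclidean distance between the two samples."""
    return math.sqrt(sum((a - c) ** 2 for a, c in zip(x, x_adv)))

def linf_distance(x, x_adv):
    """Largest absolute change over all coordinates."""
    return max(abs(a - c) for a, c in zip(x, x_adv))

# Hypothetical original and perturbed samples with four features each.
x = [0.0, 0.5, 1.0, 0.25]
x_adv = [0.0, 0.5, 0.5, 0.0]

print(l0_distance(x, x_adv))
print(l2_distance(x, x_adv))
print(linf_distance(x, x_adv))
```

Here two coordinates change, the largest change is 0.5, and the Euclidean distance is the square root of 0.3125, so the same perturbation looks small or large depending on which metric the attack is constrained by.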

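The optimization problem for adversarial generation for an input image $x$ is given as:

$$\text{minimize} \;\; D(x, x + \delta) \quad \text{such that} \quad C(x + \delta) = t, \;\; x + \delta \in [0, 1]^n \tag{9}$$

where the input $x$ is fixed and the goal is to find the $\delta$ that minimizes $D(x, x + \delta)$ while the classifier $C$ assigns the perturbed image to the target class $t$. $D$ can be any of the distance metrics $L_0$, $L_2$ or $L_\infty$. Different approaches are taken to enforce the box constraint and generate valid perturbations: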

• Projected gradient descent performs one step of standard gradient descent and then clips all coordinates to lie within the box

• Clipped gradient descent does not clip the perturbed input on each iteration, but incorporates the clipping into the objective function to be minimized
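The difference between the two approaches is where the clipping happens: after the update, or inside the objective. The following sketch illustrates this on a hypothetical quadratic objective with features bounded to [0, 1]; the helper names, the learning rate and the example values are assumptions made for illustration.

```python
def clip01(v):
    """Clip every coordinate into the valid box [0, 1]."""
    return [min(1.0, max(0.0, vi)) for vi in v]

def projected_step(x_adv, grad, lr):
    """One plain gradient step, then clip all coordinates back into the box."""
    return clip01([a - lr * g for a, g in zip(x_adv, grad)])

def clipped_objective(objective):
    """Wrap an objective so that clipping is applied inside it instead."""
    return lambda x_adv: objective(clip01(x_adv))

# Hypothetical objective whose unconstrained minimum lies outside the box.
goal = [1.2, -0.3]

def objective(v):
    return sum((vi - gi) ** 2 for vi, gi in zip(v, goal))

start = [0.9, 0.1]
grad = [2 * (vi - gi) for vi, gi in zip(start, goal)]

print(projected_step(start, grad, lr=0.5))      # lands on the box corner
print(clipped_objective(objective)([1.5, -2.0]))  # evaluated at the clipped point
```

With the projected variant the iterate itself is always valid, whereas with the clipped variant the optimizer may wander outside the box and only the value fed to the objective is valid.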

From their experiments [256], it is observed that the $L_2$ attack has low distortion, while the $L_0$ and $L_\infty$ distance metrics are not fully differentiable and are poorly suited to gradient descent.

6.6 DeepFool

Moosavi-Dezfooli et al. [257] proposed an untargeted white-box adversarial generation technique called DeepFool. DeepFool works by minimizing the Euclidean distance between the perturbed sample and the original sample. The attack begins by generating a linear decision boundary to separate the given classes and then adds a perturbation perpendicular to that decision boundary, as demonstrated in Figure 19. The attacker projects the sample onto the separating hyperplane and pushes it just beyond to cause misclassification. In high-dimensional space, decision boundaries are usually non-linear, so the perturbation is added iteratively, repeating the attack until evasion is achieved. For multiclass classifiers, the attack finds the closest hyperplane, projects the input towards it, and then proceeds to the others. The minimal perturbation required to change the decision of an affine classifier $f(x) = w^\top x + b$ is the orthogonal projection of $x_0$ onto the hyperplane $\mathcal{F} = \{x : w^\top x + b = 0\}$ and is given by the closed-form formula in Equation 10.

$$r_*(x_0) = -\frac{f(x_0)}{\|w\|_2^2}\, w \tag{10}$$

where $r_*(x_0)$ is the minimal perturbation, $f$ is the classifier function, $w$ is its gradient and $x_0$ is the original sample.
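For a linear binary classifier the closed-form projection can be evaluated directly. The sketch below assumes a hypothetical two-feature classifier and adds a small `overshoot` factor so that the perturbed sample lands just past the boundary rather than exactly on it; the weights, bias and sample are illustrative values.

```python
import math

def f(w, b, x):
    """Affine classifier; the sign of the output is the predicted class."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def deepfool_linear(w, b, x0, overshoot=0.02):
    """Minimal L2 perturbation r = -f(x0) / ||w||^2 * w, plus a small overshoot."""
    scale = -f(w, b, x0) / sum(wi * wi for wi in w)
    r = [scale * wi for wi in w]
    x_adv = [xi + (1.0 + overshoot) * ri for xi, ri in zip(x0, r)]
    return r, x_adv

# Hypothetical classifier and sample.
w, b = [3.0, 4.0], -1.0
x0 = [1.0, 1.0]
r, x_adv = deepfool_linear(w, b, x0)

print(f(w, b, x0), f(w, b, x_adv))          # the sign flips
print(math.sqrt(sum(ri * ri for ri in r)))  # distance to the boundary
```

The norm of the perturbation equals the distance from the sample to the hyperplane, here 6 divided by 5. For non-linear classifiers the same projection is repeated on a local linear approximation until the label changes.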

6.7 Zeroth Order Optimization (ZOO)