[1]\fnm \surJiabao Zhao

[1]\orgdivThe School of Computer Science and Technology, \orgnameEast China Normal University, \cityShanghai, \postcode200062, \stateShanghai, \countryChina

[2]\orgdivThe School of Psychology and Cognitive Science, \orgnameEast China Normal University, \cityShanghai, \postcode200062, \stateShanghai, \countryChina

A Survey of Explainable Knowledge Tracing

Abstract

With the long-term accumulation of high-quality educational data, artificial intelligence (AI) has shown excellent performance in knowledge tracing (KT). However, due to the lack of interpretability and transparency of some algorithms, this approach will result in reduced stakeholder trust and a decreased acceptance of intelligent decisions. Therefore, algorithms need to achieve high accuracy, and users need to understand the internal operating mechanism and provide reliable explanations for decisions. This paper thoroughly analyzes the interpretability of KT algorithms. First, the concepts and common methods of explainable artificial intelligence (xAI) and knowledge tracing are introduced. Next, explainable knowledge tracing (xKT) models are classified into two categories: transparent models and “black box” models. Then, the interpretable methods used are reviewed from three stages: ante-hoc interpretable methods, post-hoc interpretable methods, and other dimensions. It is worth noting that current evaluation methods for xKT are lacking. Hence, contrast and deletion experiments are conducted to explain the prediction results of the deep knowledge tracing model on the ASSISTment2009 by using three xAI methods. Moreover, this paper offers some insights into evaluation methods from the perspective of educational stakeholders. This paper provides a detailed and comprehensive review of the research on explainable knowledge tracing, aiming to offer some basis and inspiration for researchers interested in the interpretability of knowledge tracing.

keywords:

Explainable artificial intelligence, Knowledge tracing, Interpretability, Evaluation1 Introduction

The emergence and application of numerous educational tools, such as profiling and prediction [1], intelligent tutoring systems [2, 3], assessment and evaluation [4], adaptive systems and personalization [5, 6], are transforming traditional methods of teaching and learning. Knowledge tracing (KT) is an important research direction in the field of artificial intelligence in education (AIED) that can automatically track the learning status of students at each stage. KT has been widely used in intelligent tutoring systems, adaptive learning systems and educational gaming [7, 8]. Recently, deep learning-based methods have significantly improved the performance in KT tasks; however, this improvement comes at the cost of interpretability [9, 10]. A lack of explainability is not conducive for stakeholders to understand the reasons behind an algorithm’s decisions, which may reduce stakeholders’ trust in these tools. For instance, if a knowledge tracing model yields unrealistic predictions, teachers may fail to understand the actual knowledge level of their students, and students may not receive an accurate assessment of their weaknesses. In addition, it is not easy for users or regulators to find defects in black box applications, which may raise security issues, such as learner resistance [11] or an increase in high-risk students [12]. To solve the above issues, researchers have been attempting to improve the interpretability of AI in various educational tasks, such as explainable learner models [13, 14, 15], explainable recommender systems [16], explainable at-risk student prediction [17, 18], and explainable personalized interventions [19].

To the best of our knowledge, there has not been a comprehensive survey of related research on the interpretability of knowledge tracing. This paper aims to fill this gap. Compared to the existing knowledge tracing surveys [20, 21, 22, 11], this study primarily focuses on explainable algorithms and knowledge tracing interpretability. The motivation behind this paper is threefold. First, it aims to provide a detailed and comprehensive review of the research on explainable knowledge tracing (xKT). Second, different interpretability methods for knowledge tracing should be compared, and evaluation methods should be explored. Finally, this study aims to provide a foundational and inspirational resource for researchers interested in the field of explainable knowledge tracing.

1.1 Contributions

The contributions of this survey are to provide an in-depth examination of the current status of explainable knowledge tracing. By doing so, it aims to establish a solid foundation of understanding and inspire additional research interest in this rapidly growing area.

Inspired by the classification criteria of xAI for complex object models as delineated by Arrieta et al. [9], this paper offers a novel categorization of knowledge tracing models into two distinct types: transparent models and black-box models. This dichotomy is further explored with a detailed examination of interpretable methods tailored to these models across three critical stages: ante hoc, post hoc, and other dimensions.

Moreover, the current evaluation methods for explainable knowledge tracing are still lacking. In this paper, contrast and deletion experiments are conducted to explain the prediction results of the deep knowledge tracing model on the same dataset by using three XAI methods. Furthermore, this work extends an insightful overview into the evaluation of explainable knowledge tracing, tailored to varying target audiences, and delves into the prospective directions for the future development of explainable knowledge tracing.

1.2 Systematic Literature Review (SLR) and Execution

In this paper, the systematic literature review (SLR) methodology was adopted. This methodology was developed by Kitchenham and Charters [23] and is specifically designed for comprehensive analyzes in software engineering and computer science. The SLR methodology is significant because of its systematic approach to collating and synthesizing literature, providing a comprehensive understanding of the interpretability of knowledge tracing. The survey process commenced with the formulation of specific research questions, focusing on the application and implications of xAI in knowledge tracing. The primary questions addressed were as follows: a) How can the interpretability of knowledge tracing algorithms be improved? b) What are the applications and classifications of xAI in knowledge tracing? c) How can explainable knowledge racing models be effectively evaluated?

Our search strategy involved a comprehensive list of keywords, such as “explainable artificial intelligence”, “xAI”, ”explainable”, ”explainability”, ”explanation”, ”interpretable”and “knowledge tracing”. These keywords were chosen based on their prevalence in the current literature and relevance to our research questions. We used the Boolean operators ’AND’ and ’OR’ to construct detailed search strings. We conducted searches in databases such as IEEE Xplore, ACM Digital Library, Science Direct, and SpringerLink. These databases were selected for their extensive coverage of computer science and AI literature. From an initial pool of 1,783 studies, 517 were screened based on their relevance to knowledge tracing. After a full-text review, 57 papers were selected based on our inclusion and exclusion criteria, and the search period ended on November 2023.

Inclusion Criteria: 1) Relevance: Studies must focus on the interpretability of knowledge tracing algorithms, including theoretical analyses, application case studies, and empirical evaluations. 2) Novelty and Contribution: Studies should offer novel insights or approaches in the field of knowledge tracing interpretability. This includes introducing new methodologies, providing unique theoretical perspectives, or presenting novel empirical findings that significantly advance the understanding of the topic. 3) Publication Quality: Studies must be published in peer-reviewed journals or conference proceedings. In cases where no peer-reviewed version is available, but the study is highly relevant to the research topic, non-peer-reviewed versions (e.g., arXiv preprints) will also be considered for inclusion. 4) Language: The study must be written in English. Exclusion Criteria: 1) Relevance: Studies not directly addressing the research questions, specifically those not focusing on the interpretability of knowledge tracing algorithms, will be excluded. 2) Empirical and Methodological Rigor: Studies lacking empirical data support or detailed methodological descriptions will be excluded. This includes opinion pieces or conceptual framework studies without specific empirical analysis.

The number of papers published each year is shown in Fig. 1. Research on the interpretability of knowledge tracing has shown a significant increasing trend since 2019, indicating that researchers have realized that high accuracy alone is insufficient to gain the trust of stakeholders when applying AI models to real-world educational scenarios, and improving the interpretability of model decisions is a crucial issue that needs to be addressed.

1.3 Structure

The remainder of this survey is organized as follows. Section 2 discusses the relevant research on explainable artificial intelligence and knowledge tracing while also emphasizing the importance of interpretability in knowledge tracing algorithms. Section 3 delves further into explainable knowledge tracing, offers insights into its various dimensions and implications, and presents a detailed examination of interpretable methods suitable for explaining knowledge tracing models. The focus of Section 4 is on the methodologies for scientifically evaluating the interpretability of knowledge tracing models. Finally, Section 5 outlines future research directions in explainable knowledge tracing, pinpointing key areas that warrant further investigation and innovation. To enhance readers’ understanding of this paper’s architecture, we depict it in detail in Fig. 2.

2 Background

This section thoroughly examines the developmental background of KT and xAI, presenting the latest frameworks and methodologies in these fields. It delves into the concepts, classifications, and evolution of KT models while revealing the inherent limitations of these models. The section also explores the ongoing challenge of finding a balance between model accuracy and interpretability, discussing how to achieve the optimal compromise between the two. Additionally, it offers an in-depth analysis of xAI’s fundamental principles, methodologies, and evaluation mechanisms, underscoring its crucial role in enhancing transparency and comprehension of complex AI systems. Specifically, the section explores the application of tailored explanation methods in the KT domain, proposing targeted solutions for both professional and lay users. By comprehensively analyzing the theoretical foundations and practical applications of KT and xAI, this chapter aims to provide groundbreaking insights into the interpretability of knowledge tracing.

2.1 About Knowledge Tracing: Concepts, Taxonomies, Evolution, Limitations

Knowledge tracing has become a key component of learner models. A large amount of historical learning trajectory information provided by an intelligent tutoring system (ITS) is used to model learners’ knowledge states and predict their performance in future exercises [24]. Thus, knowledge tracing provides personalized learning strategies [25] and learning path recommendations [26] for education stakeholders and is a crucial element of adaptive education. Specifically, knowledge tracing is a task for predicting students’ performance in future practice according to changes in learners’ knowledge mastery in historical practice [27]; this task involves two main steps: 1) modeling learners’ knowledge state according to their historical practice sequence and 2) predicting learners’ performance in future practice. In other words, this task can be formulated as a supervised time series learning task, where represents a student’s answer pair, represents the exercise ID, and represents the answer result for related exercise , (1 indicates the correct answer and vice versa). Given a student’s exercise sequence and the next exercise, the task objective is to predict the correct probability of the exercise .

| Category | Description | Representative Works |

|---|---|---|

| Markov process-based knowledge tracing | Assuming that a student’s learning process is representable as a Markov process, it can be modeled with probabilistic models. | BKT [28] DBKT [29] |

| Logistic knowledge tracing | The logistic function represents the probability of a student answering an exercise correctly, under the premise that this probability is expressible as a mathematical function involving the student and the KC parameter. The logistic model posits that students’ binary answers (correct or incorrect) adhere to Bernoulli distributions. | LFA [30] PFA [31] KTMs [32] |

| Deep learning-based knowledge tracing | DLKT models can simulate changes in students’ knowledge states and encapsulate a broad spectrum of complex features, which might be challenging to extract through other methods. | DLKT: [27] [33] [34] [35] [36] [37] [38] [39] [40] |

According to the general classification method of knowledge tracing models, existing models can be classified into the following three categories [21]: 1) Markov process-based knowledge tracing, 2) logistic knowledge tracing, and 3) deep learning-based knowledge tracing (DLKT). The taxonomies of knowledge tracing are shown in Table 1. Next, we introduce a series of seminal works on the aforementioned three types of models and outline the timeline of knowledge tracing evolution, as shown in Fig. 3.

In 1994, Corbett et al. proposed Bayesian knowledge tracing (BKT) [28], which is based on a two-state Hidden Markov Model(HMM) that treats student knowledge states as hidden variables [41]. However, since the model uses a shared set of parameters for the same knowledge component (KC), it cannot personalize modeling for students at different levels. To overcome this limitation, researchers have added personalized features to make the model more realistic, leading to the emergence of various variations based on Bayesian knowledge tracing, marking the initial phase of knowledge tracing research. One notable improvement is dynamic BKT (DBKT) [29]. To address the issue of BKT modeling each KC individually, DBKT employs dynamic Bayesian networks to represent multiple KCs jointly in a single model. This approach models the prerequisite hierarchies and relationships within KCs. Both DKT and DBKT are representative models of knowledge tracing based on the Markov process. In 2006, Cen et al. [30] proposed learning factor analysis (LFA), which inherits the Q matrix used in psychometrics to assess cognition and extends the theory of learning curve analysis. An improved LFA model is performance factor analysis (PFA) [31], which was developed in 2009. Additionally, knowledge tracing machines (KTMs) [32] utilize a factorization machine to model all variable interactions. These three methods are representative models of logistic knowledge tracing, and a detailed explanation of each will be provided in Section 3.2. In general, logistic knowledge tracing has achieved better performance than BKT, and knowledge tracing has gradually entered a development period [42]. Since deep knowledge tracing (DKT) [27] was proposed in 2015, deep learning techniques have shown more vital feature extraction ability in knowledge tracing than the other two types of models. Based on this seminal work, much deep learning-based knowledge tracing (DLKT) has emerged. For example, researchers have applied deep learning techniques to knowledge tracing in various ways. Below, we list several categories of representative work: 1) memory-aware knowledge tracing: DKVMN [33]; 2) attention-aware knowledge tracing: SAKT [35] and AKT [36]; 3) graph-based knowledge tracing: GKT [37] and HGKT [43]; 4) relation-aware knowledge tracing: RKT [38]; 5) exercise-aware knowledge tracing: EKT [39]; and 6) interpretable knowledge tracing: TC-MIRT [44], IKT [40], QIKT [45], GCE [46], and stable knowledge tracing using causal inference [47].

As shown in Fig. 3, before the emergence of deep learning in knowledge tracing, Bayesian knowledge tracing and logistic knowledge tracing were widely used because of their relatively simple model structures and powerful interpretability [48]. However, due to the massive and multidimensional nature of online learning data, these two types of models were unable to achieve good performance on big data [49]. Deep learning-based models have a clear advantage in processing large datasets. However, when applied to real-world teaching scenarios, DLKT may face the following challenge: the large number of network layers and parameters in deep networks may limit the interpretability of the generated parameters. Additionally, the lack of interpretability in deep learning-based models can also lead to potential ethical and privacy issues. Stakeholders need to be able to trust the models and understand how the models make decisions.

Overall, DLKT models exhibit strong performance but poor interpretability [11], while simple models with strong interpretability are far weaker than the former, as shown in the upper left corner of Fig. 3. Consequently, the tradeoff between interpretability and performance poses a significant challenge for researchers challenge for researchers. In recent years, researchers have utilized various methods to explain knowledge tracing models and have attempted to maximize transparency while ensuring model performance. In Section 3, we will elaborate on the interpretable methods existing in the above proposed models.

2.2 About Explainable Artificial Intelligence (xAI): A Brief Overview

The goal of explainable artificial intelligence (xAI) is to provide an understanding of the internal workings of a system in a manner that humans can comprehend. XAI aims to answer questions such as “How did the model arrive at this result?”, “Will different inputs yield the same result?”, and “What is the reliability of the model’s outputs?”. In essence, xAI’s purpose is to provide an explanation to the explainees regarding why the model generates the corresponding output based on the input. Based on the diverse needs of explainees, explainers offer appropriate types of explanations. The process of explanation provided by xAI enhances explainees’ degree of trust in the system, thus increasing the system’s utility ratio across various industries. To enhance the readers’ understanding of explainable artificial intelligence, we introduce the xAI framework illustrated in Fig. 4. Improving the interpretability of algorithms is important in AIED, and the benefits can be summarized as follows: 1) Developers can enhance the transparency of models in a more scientific way, which can lead to better model optimization. 2) Transparency can help domain experts discover the cognitive rules in the learning process, leading to deeper insights and better decision-making. 3) Transparency can help users better understand the reasons and logic behind AI-driven decisions, which can increase their trust in the technology. 4) Regulatory authorities can use transparency to achieve effective supervision and ensure the safety of intelligent products used in education while also ensuring compliance with the law.

| [1pt] Methods | Year | Stage | Category | Domain | Description | ||

|---|---|---|---|---|---|---|---|

| Anc-hoc | Post-hoc | Model-specfic | Model-Agnostic | ||||

| Attention[50] | 2014 | ✓ | ✓ | CV/NLP | Attention weight matrix visualization | ||

| Bayes Rule List[51] | 2015 | ✓ | ✓ | - | Trees and Rule-based Models | ||

| Generalized additive models (GAMs)[52] | 2015 | ✓ | ✓ | - | The final decision form is obtained by combining each single feature model with linear function | ||

| Neural Additive Model[53] | 2020 | ✓ | ✓ | CV | Train multiple deep neural networks in an additive fashion such that each neural network attend to a single input feature | ||

| Activation Maximization[54] | 2010 | ✓ | ✓ | CV | Maximize neuronal activation by identifying the optimal input for a neuron at a specific network layer | ||

| Gradient-based Saliency Maps[55] | 2013 | ✓ | ✓ | CV | The back propagation mechanism of DNN is used to propagate the decision importance signal of the model from the output layer neurons to the input of the model layer by layer to deduce the feature importance of the input samples | ||

| DeConvolution Nets[56] | 2014 | ✓ | ✓ | CV | |||

| Guided Backprops[57] | 2015 | ✓ | ✓ | CV | |||

| SmoothGrad[58] | 2017 | ✓ | ✓ | CV | |||

| Layer-wise Relevance BackPropagation (LRP)[59] | 2015 | ✓ | ✓ | CV/NLP | |||

| Salient Relevance (SR) Map[60] | 2019 | ✓ | ✓ | CV | |||

| Class Activation Mapping (CAM)[61] | 2016 | ✓ | ✓ | CV | The neural network’s feature map is utilized to ascertain the significance of each segment of the original image | ||

| Grad-CAM[62] | 2017 | ✓ | ✓ | CV | |||

| Grad-CAM++[63] | 2018 | ✓ | ✓ | CV | |||

| Local Interpretable Model-Agnostic Explanations (LIME)[64] | 2016 | ✓ | ✓ | CV | An interpretable model with simple structure is used to locally approximate the decision result of the model to be explained for an input instance | ||

| SHapley Additive exPlanations (SHAP)[65] | 2017 | ✓ | ✓ | CV | Reflects the influence of each feature in the input sample and shows the positive and negative influence | ||

| Concept Activation Vectors(CAV)[66] | 2018 | ✓ | ✓ | CV | Measures the relatedness of concepts within the model’s output | ||

| [1pt] |

Existing algorithms can be classified as transparent (white box) or black box models, depending on their complexity [67] (details in Section 3.1). Transparent models are characterized by simple internal components and self-interpretability, allowing users to intuitively understand their internal operation mechanism [68]. A black box model refers to a model with a complex, nonlinear relationship between the input and output, with an operating mechanism that is difficult to understand, such as that of a neural network [69]. Based on the characteristics of these two models, xAI methods are typically categorized as ante-hoc interpretable methods or post-hoc interpretable methods [70]. Ante-hoc interpretable methods are mainly applied to models with simple structures and strong interpretability (such as transparent models) or to build interpretable modules in the model to make it intrinsically interpretable. In contrast, post-hoc interpretable methods, such as black box models, develop interpretive techniques to interpret trained machine learning models. Post-hoc interpretable methods are usually subdivided into model-specific approaches and model-agnostic approaches based on their application scope [71]. These methods are introduced in detail in the following sections. Table 2 provides an overview of representative interpretable methods.

There is currently no widely accepted scientific evaluation standard for xAI. Different experts from various disciplines have conducted preliminary investigations based on different evaluation objectives, such as the characteristics of the model being evaluated or the requirements of users and application scenarios [72]. One prominent evaluation method is the three-level approach proposed by Doshi Velez et al. [73], which includes the following steps: 1) application-grounded evaluation, 2) human-grounded evaluation, and 3) functionally-grounded evaluation. These three levels of evaluation provide useful frameworks for evaluating the interpretability of xAI systems in different contexts.

.

[1pt]

Angles

Methods

Limitations

Subjective evaluation

Qualitative evaluation based on open-ended questions[74, 75, 76];

Quantitative evaluation based on closed-ended questions[77], [78];

A mixed-methods approach that combines both qualitative and quantitative evaluation.[79, 80]

This method may be susceptible to bias and variability owing to individual differences among evaluators. Moreover, the requisite for professional human resources can lead to elevated evaluation costs.

Objective evaluation

Fidelity [81]; Consistency[82]; Stability[80]; Sensitivity[80]; Causality[83]; Complexity [84, 85]

Models might rely on particular evaluation methods, and varying metrics could yield disparate results.

[1pt]

Generally, application-level evaluation is considered an effective approach because the interpretation is applied to the appropriate field and evaluated by professionals, resulting in more convincing results. Currently, the popular classification method for xAI evaluation involves dividing it into subjective and objective evaluations based on whether humans are involved[80, 86]. Table 3 shows the evaluation methods, metrics, and current limitations of both subjective and objective evaluation in xAI. It is important to carefully select appropriate evaluation metrics based on the specific features and goals of the evaluated xAI system. Overall, the combination of subjective and objective evaluation methods may be the most effective approach for assessing the interpretability of xAI systems while balancing cost and performance.

2.3 What are good explanations for knowledge tracing?

According to Merriam-Webster, ”interpret” means to present something in understandable terms and explain its meaning [87]. However, what constitutes a good explanation varies across different fields, and experts have attempted to define it in different ways. In computer science, Lipton [88] emphasized the importance of comparative explanations, i.e., whether the predicted outcome will change for different inputs . Physicist Max Tegmark described a good explanation as one that answers more questions than asked [89]. Moreover, psychology researchers have highlighted the significance of explanations in learning and inference and how individuals’ explanatory preferences can impact explanation-based processes in a systematic way [90]. In certain scenarios of adaptive education, researchers have used verbal and visual explanations [91] or interactive interfaces [92] to provide explanations and have achieved positive outcomes. For example, Cristina Conati et al. [93] added an interactive simulation program to the adaptive CSP (ACSP) applet to provide an explanation function. The research results demonstrated that providing explanations can enhance students’ trust in ACPS prompts.

Explanations also play a pivotal role in the process of knowledge tracing, but what are good explanations for knowledge tracing? Research suggests that explanations must be audience-specific and goal-oriented [94, 72].Stakeholders in knowledge tracing are divided into professional users (developers, researchers) and non-professional users (teachers, students), each requiring tailored explanations [95]. For professionals, explainability enhances understanding, system debugging, model optimization, and credibility [96]. For non-professionals, it facilitates comprehension of learning processes, encourages result acceptance, and boosts model satisfaction [97]. The field has developed methods ensuring both accuracy and transparency, making model operations and decisions clear to all users, thereby improving interpretability. Further details on these methods will be provided in the next section.

3 Explainability Techniques for Explainable Knowledge Tracing Models

This section delves into the key aspects of enhancing the interpretability of knowledge tracing models. Initially, we categorize and discuss explainable knowledge tracing models, focusing on the critical distinctions between transparent models and complex black-box models. This discussion lays the groundwork for understanding the internal mechanisms of these models. Subsequently, we shift our focus to exploring methods for augmenting the interpretability of knowledge tracing. These methods encompass both ante-hoc and post-hoc strategies, as well as other dimensions. The aim is to reveal how various approaches can enhance the models’ transparency and comprehensibility. Finally, we examine the practical implementation of explainable knowledge tracing in real-world applications, such as generating diagnostic reports. The section concludes with a critical discussion evaluating the balance between the models’ interpretability, accuracy, and their practical application in educational settings.

3.1 The Concept of Explainable Knowledge tracing

As mentioned in Section 2.2, machine learning models are typically categorized as transparent or black box models according to the complexity of the objects they are intended to explain, based on the criteria of xAI. Transparent models are characterized by high transparency of internal components and self-interpretability, such as, linear/logistic regression [98, 99], bayesian models [100, 101, 102], decision trees [103, 104], k-nearest neighbors [105, 106, 107], rule based learners [108, 109, 110], general additive models [111, 112, 113], etc. For transparent models, interpretability can be understood from three perspectives: algorithmic transparency, decomposability, and simulatability [114]. However, models such as multi–layer neural network [115, 116] and other deep network [117, 115, 118], which have complex internal structures and difficult operation mechanisms, are usually referred to as black box models. XAI algorithms are capable of understanding the architecture and layer configurations of transparent models, but they lack the ability to comprehend the operational mechanisms inherent in black box models[119].

Explainable Knowledge Tracing Model Taxonomy. Inspired by the criteria for classifying model complexity in xAI [9], we propose a novel taxonomy specifically tailored for knowledge tracing models. This framework categorizes models based on their explainability and comprehensibility. We identify models employing Markov processes and logistic regression as ”transparent models” due to their straightforward structures and the ease with which users can understand them. This classification is rooted in the models’ interpretability and the transparency of their decision-making processes, emphasizing the accessibility and interpretability of how decisions are made. For example, state transitions in Markov models and parameter settings in logistic regression models are intuitive, making these models’ decision-making processes easily traceable and explainable. In contrast, knowledge tracing models based on deep learning, especially those involving complex multi-layered network structures, are considered akin to ”black box” by users. The internal mechanisms of these models are difficult to comprehend because of their complexity and rich nonlinear characteristics, obscuring the internal decision-making process. Consequently, these models and their variants are categorized as ”black-box models”. We define explainable knowledge tracing and show its framework in Fig. 5.

Methodologies for Explainable Knowledge Tracing. In the following section, we delve into interpretable methods for the two types of knowledge tracing models mentioned earlier, categorizing them into three phases: 1) Ante-hoc interpretable methods; 2) Post-hoc interpretable methods; and 3) Other dimensions. Ante-hoc interpretable methods that focus on transparent models and model-intrinsic interpretability, which can be achieved by simplifying the model’s structure or incorporating intuitive modules to enhance its interpretability. Post-hoc interpretability methods, on the other hand, center around model-specific and model-agnostic approaches, such as using external tools or techniques to elucidate the model’s decision-making process. Other dimensions include interpretability methods that are specific to knowledge tracing models but have not yet been widely discussed in the current xAI literature. An example would be leveraging the associative relationships between problems, concepts, or users within the context of knowledge tracing. To aid readers in locating various interpretable methods, we present a framework diagram in Section 3 in Fig. 6. Additionally, all the reviewed explainable knowledge tracing methods are summarized in Table LABEL:summary-table.

3.2 Stage 1: Ante-hoc Interpretable Methods

As mentioned in Section 2.2, ante-hoc interpretable methods are primarily used for transparent models or model-intrinsic interpretability [120]. These methods aim to make the model itself capable of interpretation by training a transparent model with a simple structure and strong interpretability or by building interpretable components into a complex model structure [9, 119]. This paper mainly focuses on two types of ante-hoc interpretable methods for xKT: transparent models and model-intrinsic interpretability; these methods are described in the following subsections.

3.2.1 Category 1: Transparent Models

Based on the classification criteria in Section 3.1, knowledge tracing models that use the Markov process and logistic regression are considered knowledge tracing transparent models due to their easy-to-understand internal structure and operational process. The following sections elaborate on how the knowledge tracing transparent model achieves interpretability.

Bayesian Knowledge Tracing (BKT). BKT is a probabilistic model grounded in the Markov process, predicting students’ skill mastery by updating beliefs based on performance. Although not as performant as deep knowledge tracing models, BKT’s use of the HMM framework ensures a satisfactory and comprehensible explanation of the knowledge tracing. Some extended models of BKT are personalized by incorporating individual student characteristics. For example, Pardos et al. [121] set different initial probabilities for different students to achieve partial personalization. However, differentiating the initial probabilities only partially achieves personalization, and Lee et al. [122] used a separate set of personalization parameters for each student to adequately model interindividual differences. Although the personalized parameters are good for conferring variability across individuals, they do not consider the influence of knowledge concepts. Hawkins et al. [123] proposed BKT-ST to calculate the similarity between current knowledge concepts and those learned previously, enhancing the model’s capacity to represent interconnected linkages across concepts. In addition, Wang et al. [124] proposed the use of multigrained-BKT and historical-BKT to model the relationships between different knowledge components (KCs). Moreover, Sun et al. [125] used a genetic algorithm (GA) to optimize the model to solve the exponential explosion problem when tracing multiple concepts simultaneously. Moreover, Moreover, the interpretable knowledge tracing (IKT) model proposed by Minn et al. [40], which is distinct in its use of tree-augmented naive Bayes and focuses on skill mastery, learning transfer, and problem difficulty, offers greater interpretability and adaptability in student performance prediction. Beyond basic parameters and knowledge concepts, the BKT model is also increasingly taking into account students’ emotions and additional behavioral aspects. For examples, Spaulding et al. [126] introduced the concept of affective BKT, integrating students’ emotional states into the model. Furthermore, to accurately capture how students’ memory retention changes over time, Nedungadi et al. [127] developed the PC-BKT model. This adaptation incorporates a temporal decay function to model the process of forgetting, offering a more nuanced understanding of students’ learning and memory retention behaviors. The HMM describes the probabilistic relationship between observable and hidden variables, and the probabilistic relationship varies over time. In Bayesian knowledge tracing, the model estimates students’ learning state by observing the results of students’ responses to questions related to knowledge concepts. The state transfer probability calculation process and the decision process are transparent, and the change process of the model can be effectively observed through the state transfer diagram, as shown in Fig. 7. Here, represents the probability of mastery, indicating a student’s current understanding of the skill, represents the probability of transition, which is the rate at which a student transitions from nonmastery to mastery, is the probability of guessing correctly, accounting for lucky guesses, and refers to the probability of slipping, where a mastered skill is incorrectly applied. In BKT, knowledge mastery is updated along with the learning parameters. When the probability of students mastering the relevant knowledge concept in the initial knowledge state is greater than 0.95 [128], students have mastered the knowledge concept.

In summary, from an interpretable perspective, the Bayesian knowledge tracing model has good transparency in the computational process as a purely probabilistic model. Observing different state transfers of BKT can provide a basis for modeling decisions for students and teachers. From the model perspective, on the one hand, Bayesian knowledge tracing models do not account for the differences in the initial knowledge levels of different students and lack an assessment of the difficulty of the questions. Although many models have been extended based on BKT, they still cannot be applied to knowledge tracing scenarios with large-scale data. On the other hand, these models assume that students do not forget the knowledge they have mastered, which is not consistent with actual cognitive characteristics. In addition, using binary groups to represent recent knowledge states does not match the real cognitive state situation. It is difficult to adequately predict the relationship between each exercise and specific knowledge concepts due to the ambiguous mapping between hidden states and exercises.

Knowledge Tracing Based on Item Response Theory (IRT). Item response theory originates from the field of psychometrics and assumes that an underlying trait represents each candidate’s ability and can be observed through their response to items. The two models underlying the IRT model are the normal ogive model and the logistic model. However, the logistic regression model is the most common in practical applications [129]. The IRT model is based on four assumptions: 1) monotonicity (the probability of a correct response increases as the level of the trait increases). 2) one-dimensionality (it is assumed that a dominant underlying trait is being measured). 3) local independence (responses to separate items in a given test are independent of each other at a given level of ability). 4) invariance (it is assumed that students’ abilities remain constant over time).

Here, is defined as the individual ability parameter of the student, is defined as the discrimination parameter of question , is defined as the difficulty parameter of question , and is defined as the guessing parameter of question . With one-parameter IRT, it is possible to provide students with interpretable parameters in terms of two dimensions, personal ability and difficulty, as shown in Fig. 8. A two-parameter IRT model uses two parameters (difficulty and discrimination) to predict the probability of a successful response. Therefore, the discrimination parameter can vary between items and be plotted with different slopes, thus eliminating the explanatory information. A three-parameter IRT model adds a guessing parameter to the two-parameter model. The items answered by guessing indicate that the student’s ability is less than the difficulty of the question to which he or she is responding. The three-parameter IRT model can provide explanatory information about guessing behavior.

In IRT, the greater the difficulty of the item is, the greater the corresponding competencies needed by the student. By explicitly defining parameters such as item difficulty and student competencies, the model is transparent in its computational process and has good interpretability. However, the static IRT model assumes that students’ abilities remain constant over time, which is particularly unsuitable for long-term knowledge tracing.

Knowledge Tracing Based on Factor Analysis Model. Factor analysis is another critical approach for assessing learners’ knowledge mastery. Cen et al. [30] proposed learning factor analysis (LFA), a theory whose primary purpose is to find a more valid cognitive model from students’ learning data. Moreover, LFAs inherit the Q matrix used in psychometrics to assess cognition and extend the theory of learning curve analysis, as shown in Fig. 9.

LFA allows researchers to evaluate different representations of knowledge concepts by performing a heuristic search of the cognitive model space. Based on LFA theory, Cen et al. proposed the additive factor model (AFM) [130] and performance factor analysis (PFA) [31]. The AFM is a particular case of PFA and is equivalent when equals . The AFM explains how the difficulty of a student’s knowledge points and the number of attempts to solve the problem affect the student’s performance, while PFA explains the student’s performance in terms of the difficulty of the knowledge points, the number of successes, and the number of failures.

| [1pt] Model | Users | Items | Skills | Wins | Fails | Attempts | KC(Time Window) | Items(Time Window) |

| IRT | ✓ | ✓ | ||||||

| MIRT | ✓ | ✓ | ||||||

| AFM | ✓ | ✓ | ||||||

| PFA | ✓ | ✓ | ✓ | |||||

| KTM | ||||||||

| DASH | ✓ | ✓ | ✓ | ✓ | ✓ | |||

| DAS3H | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ||

| Best-LR | ✓ | ✓ | ✓ | ✓ | ✓ | |||

| [1pt] |

Large-scale factor analysis models have been further developed based on earlier factor analysis models. Using a factor decomposition approach, Vie et al. [32] proposed knowledge tracing machines (KTMs). KTMs use a sparse set of weights for all features to model the learner’s correct answer probability. The DASH (difficulty, ability, student interaction history) model is used for memory forgetting and factor analysis [131]. The DAS3H is a newer model that combines IRT and PFA and extends the DASH model by using a time window-based counting function to calculate characteristic factors [131, 132]. With the DAS3H model, the factor analysis method can explain student changes over a continuous time window, thus extending the scope of application of the factor analysis method. Gervet et al. [133] proposed Best-LR based on DAS3H. Unlike DAS3H, Best-LR does not use a window but directly uses the number of successes or failures as an additivity factor. The factors that Best-LR can explain are similar to those that can explain DAS3H. The performance of Best-LR is better than that of DAS3H because Best-LR does not need to calculate window features.

In summary, logistic regression models can explain two main types of features: 1) coded embeddings representing questions and KCs and 2) counting-based features. In Table 4, we compare the factors used by factor analysis models. Counting features summarize the history of students’ interactions with the system, and counting methods vary among different models, with some even introducing the concept of time windows.

3.2.2 Category 2: Model-Intrinsic Interpretability

Due to the invisibility of the internal structure and operation mechanism of the neural network model, model-intrinsic interpretability can only be realized by adding an interpretable module. Deep neural networks have large network layers and large parameter spaces. An end-to-end process is used to obtain the output prediction from the input sample . This process is similar to that of a black box. Therefore, researchers have attempted to embed a simple or an easy-to-interpret module inside the model to achieve model-intrinsic interpretability, thus resembling an interpretable model from the outside to provide explanations for the audience, as shown in Fig. 10. For instance, attention mechanisms can provide explanations by visualizing attention weights. As a result, attention mechanisms have become common intrinsic explainable modules in neural networks and are widely used in computer vision [134, 135], sentiment analysis [136, 137], recommendation systems [138, 139], and other fields.

In xAI, this kind of interpretation method is essentially explained by following strict axioms, rule-based final decisions, granular interpretations of decisions, etc. [119]. It is worth noting that this method can only be used for a specific model, which leads to poor transferability. In the xKT model, as shown in Table 5, researchers have attempted to improve the model interpretability by introducing attention mechanisms, educational psychology, and other theories as interpretable modules. These model-intrinsic interpretability methods aim to make the model more transparent and understandable to stakeholders while maintaining good performance. In the following section, we elaborate on these two methods of model-intrinsic interpretability.

| [1pt] Model-intrinsic module | References |

|---|---|

| Attention mechanism | [36] [140] [38] [141] [142] [143] |

| Educational psychology and other theories | Item response theory (IRT) [144] [145] [146] [147] |

| Multidimensional item response theory (MIRT) [44] | |

| Constructive learning theory [148] [149] | |

| Learning curve theory and forgetting curve theory [150] | |

| Finite state automaton (FSA) [151] | |

| Classical test theory (CTT) [152] | |

| Monotonicity theory [153] | |

| [1pt] |

Integrating attention mechanism module. The self-attentive knowledge tracing (SAKT) model [35] identifies concepts related to a given concept from historical student interaction data and predicts learners’ performance in the next exercise by considering related exercises in past interactions. This process involves sparse data. To address the problem that the attention layer is too shallow to recognize the complex relationships between exercises and responses, separated self-attentive neural knowledge tracing (SAINT) [154], which is based on transformers and stacked two multihead attention layers on the decoder, was proposed to more effectively model the complex relationships between exercises and answers. The above work proved that the introduction of an attention mechanism into knowledge tracing greatly improves the performance of the model. Furthermore, several researchers have studied the construction of an attention mechanism for the knowledge tracing model as an interpretable module to improve the model’s explainability.

For example, Liu et al. [39] proposed explainable exercise-aware knowledge tracing (EKT), which utilizes a novel attention mechanism to deeply capture the focusing information of students on historical exercises. This technique can track students’ knowledge states on multiple concepts and visualize knowledge acquisition tracing and student performance prediction to ensure the interpretability of the model. Context-aware attentive knowledge tracing (AKT) [36] combines interpretable components into a novel monotonic attention mechanism and uses the Rasch model to regularize concepts and exercises; this approach has been proven to have excellent interpretability via experiments. Moreover, Zhao et al. [140] proposed a novel personalized knowledge tracing framework with an attention mechanism that uses learner attributes to explain the prediction of mastery. The relation-aware self-attention model for knowledge tracing (RKT) [38] uses interpretable attention weights to help visualize the relationships between interactions and temporal patterns in the human learning process. Similarly, Zhang et al. [141] introduced a new vector to capture additional information and used attention weights to analyze the importance of input features, making it easier for readers to understand the predicted results. Recently, Li et al. [142] regarded the attention mechanism as an effective interpretability module for constructing a new knowledge tracing model, effectively improving the interpretability and predictive ability of the model. Yue et al. [155] , based on ability attributes and an attention mechanism, provided explanations through an inference path. Zu et al. introduced CAKT[156], an innovative model that merges contrastive learning and attention networks to enable interpretable knowledge tracing.

Attention mechanisms, through visualized attention weights, explain aspects of decision-making in models. However, the interpretability of these tools is contingent upon the complexity of the model and the expertise of the interpreter. While elucidating certain decisions in simpler models, attention weights may become less transparent in more complex architectures, where multiple layers and nonlinear interactions obscure the interpretability of the information. Therefore, despite their utility, attention mechanisms should be integrated with complementary techniques for more holistic interpretability in sophisticated deep learning models.

Integrating educational psychology and other theories. Item response theory [157] is a modern psychometric theory in which “items” refer to the questions in students’ papers and “item responses” refer to students’ answers to specific questions. As the parameters of IRT are interpretable, many scholars have combined IRT with deep learning methods, which have powerful feature extraction capabilities for enhancing interpretability. Deep-IRT [144] integrates dynamic key-value memory networks (DKVMNs) with IRT for knowledge training. The DKVMN captures learners’ trajectories, inferring their abilities and item difficulties via neural networks, which are subsequently utilized in IRT to predict answer correctness. This model combines the predictive strength of the DKVMN model with the interpretability of IRT, enhancing both the performance and insight into learner and item profiles.

Even though IRT can utilize predefined interpretable parameters to describe students’ behavior, students’ ability to solve problems is not limited; therefore, one-dimensional IRT parameters cannot be used to effectively explain students’ complex behaviors in a real-world scenario. To address this issue, enhanced deep multidimensional item response theory (TC-MIRT) [44] integrates the parameters of a multidimensional item response theory into an improved recurrent neural network, which enables the model to predict students’ states and generate interpretable parameters in each specific knowledge field. Inspired by the powerful interpretability of IRTs, many studies have integrated them into model frameworks to improve the model reliability in recent years. For example, knowledge interaction-enhanced knowledge tracing (KIKT) [145] uses the IRT framework to simulate learners’ performance and obtains an interpretable relationship between learners’ proficiency and project characteristics. Geoffrey Converse et al. [146] improved the model interpretability by transforming the representation of high-dimensional student ability from a deep learning model to an interpretable IRT representation at each time step; leveled attentive knowledge tracing (LANA) [147] uses the interpretable Rasch model to cluster students’ ability levels, thus using leveled learning to assign different encoders to different groups of students. Recently, Chen et al. [45] developed QIKT, a question-centric KT model, improved knowledge tracing interpretability using question-centric representations and an interpretable item response theory layer.

In addition, constructivist learning theory [158] is a classical cognitive theory that emphasizes knowledge mastery differences as the result of knowledge internalization. Based on this theory, the ability boosted knowledge tracing (ABKT) model [148] utilizes continuous matrix factorization to simulate the knowledge internalization process for enhancing the model’s interpretability. PKT [149] was designed based on constructivist learning and item response theories and features interpretable and educationally meaningful parameters. The forgetting curve theory [159] indicates that a decrease in students’ memory during learning usually reduces their proficiency in knowledge concepts. The learning curve [160] regards knowledge acquisition as a mathematical expression in the process of human learning; that is, students can acquire knowledge after each practice. According to the above two types of pedagogical research, Zhang et al. [150] constructed learning and forgetting factors at the learner level as additional factors to better trace and explain changes in learners’ knowledge levels. Moreover, some researchers have attempted to integrate a mathematical compression model into the KT model to enhance the interpretability of the model. For example, Wang et al. [161] utilized finite state automation (FSA) to interpret the hidden state transition of DKT when receiving inputs. In addition, MonaCoBERT [152] uses a classical test theory-based (CTT-based) embedding strategy to consider the difficulty of an exercise to improve the performance and interpretability of the model. Recently, the counterfactual monotonic knowledge tracing (CMKT) [153] method enhances interpretability by integrating counterfactual reasoning with the emonotonicity theory in knowledge acquisition, demonstrating superior performance across real-world datasets.

Incorporating educational psychology theories into models offers interpretability through psychological frameworks and parameters. However, the efficacy of these methods in complex real-world educational contexts is limited and often constrained by the specificity and scope of the underlying theories. While providing insights into controlled scenarios, these approaches may struggle to encapsulate the multifaceted and dynamic nature of learning processes. Consequently, their application necessitates a nuanced and broadened perspective, blending theoretical insights with empirical data analysis to enhance the overall interpretability of the model in diverse educational environments.

3.3 Stage 2: Post-hoc Interpretable Methods

Post-hoc interpretability techniques are applied to pretrained machine learning models, especially those considered “black box” models, to explain their decisions, as shown in Fig. 11. Unlike ante-hoc methods, which are built into the model during development for inherent interpretability, post-hoc methods are employed after model creation, mainly to clarify the predictions [162, 163]. However, some post-hoc methods, such as knowledge distillation [164, 165], rule extraction [166], and activation maximization [167], extend beyond explaining outputs; they also attempt to uncover the model’s internal mechanisms. In this paper, we delve into post-hoc interpretable methods for knowledge tracing from two angles: model-specific and model-agnostic methods. Model-specific methods are tailored to particular types of knowledge tracing models, reflecting their unique architectures and learning algorithms. Conversely, model-agnostic methods offer broader applicability, allowing for interpretability across various knowledge tracing models, regardless of their specific designs. This distinction is crucial for developing a comprehensive understanding of how different interpretability methods can be leveraged to demystify the predictions of knowledge tracing models, thereby enhancing their utility and trustworthiness in educational applications.

3.3.1 Category 1: Model-Specific

Most model-specific methods focus on the interpretability of deep learning, mainly for a certain type of model. It is important to note that model-specific approaches are not necessarily model-based but specific to a class of models. At present, model-specific methods are mainly used to explain the following two categories of models: 1) ensemble-based models [168, 169, 170]; and 2) neural networks [171, 172]. Knowledge tracing based on deep learning is a black box that is difficult to understand due to the large parameter space inside the neural network. At present, researchers generally use visualization methods to explain this type of neural network in models.

Layer-wise Relevance BackPropagation (LRP). The LRP technique was proposed by Bach et al. [59] to calculate the correlation scores of single features in the input data by decomposing the output prediction of the deep neural network. It uses a specially designed set of propagation rules to operate a neural network by backpropagating predictions, where the inputs can be images, videos, or texts [59, 173]. In recurrent neural networks (RNNs), correlations are propagated to hidden states and memory units. Several researchers have applied the LRP method to the knowledge tracing model to enhance its interpretability. For example, Lu et al. [174] proposed solving the interpretability of deep DLKT by adopting the LRP method, which backpropagates the relevance from the output layer of the model to its input layer to explain the RNN-based DLKT model. Wang et al. [175] studied whether the same post hoc interpretable method could be applied to the extensive dataset EdNet and achieved particular effectiveness. However, its effectiveness decreases with increasing learner size and learner practice sequence . Subsequently, Lu et al. [176] used the classical LRP method to interpret the output forecast variables of the DLKT model from the ready-made inputs of the DLKT model, which captured skill-level semantic information. The model output is progressively mapped to the input layer using a backward propagation mechanism, and the interpretation method assigns relevant values to each input sky-answer pair.

The LRP provides valuable insights into neural network-based KT models by mapping predictions back to input features. However, its reliance on correlation for attribution measurements raises concerns about fidelity, as it may be influenced by spurious correlations. This limitation, along with scalability issues in handling large datasets and complex learner sequences, as indicated in [175], questions its applicability and relevance in diverse educational settings. The effectiveness of this method in treating KT thus requires careful consideration of these potential drawbacks.

Causal Inference. Causal inference is a method for analyzing causal relationships in observational data, attempting to determine whether different treatments (such as different strategies or methods in an experiment) lead to different outcomes [177, 178]. The focus is on distinguishing true causal effects from mere correlations, especially when dealing with confounding variables [179]. Causal inference enhances the transparency and interpretability of AI models by clarifying the “why” behind AI decisions and distinguishing between direct causal relationships and spurious associations. Zhu et al.[47] focused on applying causal inference to the field of knowledge tracing. By adjusting confounding variables within a causal inference framework, they aimed to enhance the prediction accuracy and stability of knowledge tracing models. This approach takes into account key factors, such as confounding variables, to improve the models’ ability to predict students’ knowledge states accurately. Furthermore, the temporal and causal-enhanced knowledge tracing (TCKT) model[180] integrates causal self-attention with temporal dynamics. This integration not only enhances prediction accuracy and interpretability in educational settings but also effectively mitigates dataset bias by employing causal inference to model the student learning process more accurately.

Causal inference, which is critical in distinguishing between correlation and causation, is invaluable in KT for analyzing the impact of educational interventions. The challenges, as outlined in [177], lie in the need for robust statistical frameworks and the management of confounding variables, which can be daunting in practical educational contexts. Its application in KT requires a careful balance between theoretical robustness and practical feasibility.

Visualization. A classic approach to interpret black box models is visualization, which provides intuitive explanations through analysis of the model’s training process. Based on their previous work [181], Ding et al. [182] tried to open the “box” of the deep knowledge tracing model. First, they used the larger dataset EdNet to visually analyze the behavior of the DKT model in high-dimensional space, tracked the changes in activation over time, and analyzed the influence of each skill relative to other skills, which solved the problem that interpretation methods were not intuitive.

Visualization techniques provide an intuitive means of interpreting complex KT models. However, they necessitate a high level of expertise in both the model’s workings and the data represented. The risk here, particularly with high-dimensional data, is the potential for oversimplification or misinterpretation of the model’s dynamics, leading to incorrect conclusions about the learning process.

Ensembling Approaches. Several researchers have attempted to use ensemble approaches to improve the interpretability of knowledge tracing models on big data. For example, Tirth Shah et al. [183] used a combination of 22 models to predict whether students can answer given questions correctly and discovered that an ensembling approach can improve the prediction performance and interpretability of knowledge tracing tasks. EnKT [184] is based on BKT and DKT and represents student concepts and student questions using learning and performance parameters, respectively, to improve the interpretability of the model.

Ensembling approaches combine multiple models to enhance both predictive accuracy and interpretability in KT. However, the increased complexity of these methods can obscure the contributions of individual models within the ensemble. This complexity poses a significant challenge in KT, where understanding the specific influence of different factors on learning outcomes is crucial.

contfoot-textnormalContinued on the next page

\SetTblrTemplatecontfoot-textnormal

{longtblr}[

caption = A summary of different types of explainable knowledge tracing models.,

label = summary-table

]

colspec=Q[l,m,0.15] Q[c,m,0.05] Q[c,m,0.08] Q[c,m,0.08] Q[c,m,0.08] Q[c,m,0.08] Q[c,m,0.06] Q[c,m,0.065] Q[c,m,0.06],

rowsep=0.6pt, hspan=minimal,

vline2,3=1-Z0.5pt,vline5=1-Z0.5pt,vline7=2-Z0.5pt,vline9=2-Z0.5pt

[1pt]

Models Year Taxonomy Interpretable Methods

Transparent Model Black-box model Ante-hoc Post-hoc Other Dimensions

Self-explanatory Model-Intrinsic Model-Specfic Model-Agnostic

BKT [28] 1994 ✓ ✓

Pardos et al. [121] 2010 ✓ ✓

Lee et al. [122] 2012 ✓ ✓

BKT-ST [123] 2014 ✓ ✓

Wang et al. [124] 2016 ✓ ✓

Sun et al. [125] 2022 ✓ ✓

IKT [40]

2022 ✓

Affective BKT [126] 2015 ✓ ✓

PC-BKT [127] 2014 ✓ ✓

LFA [30] 2006 ✓ ✓

AFM [130] 2008 ✓ ✓

PFA [31] 2009 ✓ ✓

KTM [32] 2019 ✓ ✓

DASH [131] 2014 ✓ ✓

DAS3H [132] 2019 ✓ ✓

Best-LR [133] 2020 ✓ ✓

AKT [36] 2020 ✓ ✓

RKT [38] 2020 ✓ ✓

Zhang et al. [141] 2021 ✓ ✓

Li et al. [142] 2022 ✓ ✓

Yue et al. [155] 2023 ✓ ✓

CAKT [156] 2023 ✓ ✓

MonaCoBERT [152] 2022 ✓ ✓

CMKT [153] 2023 ✓ ✓

deep-IRT [144] 2019 ✓ ✓

TC-MIRT [44] 2011 ✓ ✓

KIKT [145] 2020 ✓ ✓

Geoffrey Converse et al. [146]

2021 ✓ ✓

LANA [147]

2021 ✓ ✓

QIKT [45]

2023 ✓ ✓

ABKT [148]

2022 ✓ ✓

PKT[149]

2023 ✓ ✓

zhang et al. [150]

2021 ✓ ✓

EAKT [185]

2022 ✓ ✓

Zhu et al. [151]

2022 ✓ ✓

Ding et al. [181]

2019 ✓ ✓

Ding et al. [182]

2021 ✓ ✓

Zhu et al.[47]

2023 ✓ ✓

TCKT [180]

2024 ✓ ✓

Tirth Shah et al. [183]

2020 ✓ ✓

EnKT [184]

2022 ✓ ✓

Lu et al. [174]

2020 ✓ ✓

Valero et al. [186]

2023 ✓ ✓

Wang et al. [175]

2021 ✓ ✓

Lu et al. [176]

2022 ✓ ✓

Varun Mandalapu et al. [187]

2021 ✓ ✓

Wang et al. [188]

2022 ✓ ✓

GCE [46]

2023 ✓ ✓

Liu et al. [39]

2019 ✓ ✓

SPDKT [189]

2021 ✓ ✓

CoKT [190]

2021 ✓ ✓

Lee et al. [191]

2019 ✓ ✓

HGKT [43]

2022 ✓ ✓

GKT [37]

2019 ✓ ✓

SKT [192]

2020 ✓ ✓

Zhao et al.[193]

2022 ✓ ✓

JKT [194]

2021 ✓ ✓

[1pt]

3.3.2 Category 2: Model-Agnostic

Model-agnostic techniques separate explanations from model outputs and are applicable to any machine learning model [162]. It only acts on the input and output of the neural network, providing explanations by perturbing the input or simplifying the model. Because model-agnostic techniques are not limited to a specific model, most researchers currently prefer model-agnostic approaches over model-specific approaches.

Local Interpretable Model-Agnostic Explanations (LIME). The LIME was proposed by Ribeiro et al. [64]. This method trains local surrogate models to explain a single prediction a global black-box model gives. LIME partially replaces complex models with simpler models to provide local explanations. Specifically, since the perturbed data will affect the model’s output, LIME trains a local interpretable model to learn the mapping relationship between the perturbed data and the model’s output and uses the similarity between the perturbed input and the original input as the weight. Finally, the essential K features are selected from the local interpretable model for interpretation. This approach can provide a very effective local approximation to the black box model. In xKT, Varun Mandalapu et al. [187] utilized LIME to understand the impact of various features on best-performing model predictions.

LIME provides microlevel insights into specific predictions of KT models by training local interpretable surrogate models. Its major strength lies in revealing the influence of particular features in specific instances. However, LIME’s focus on local explanations may not capture the model’s global behavior, particularly in KT, where diverse learning paths can significantly influence model decisions. Additionally, the dependence of LIME on perturbation strategies and the choice of local models might affect the consistency and accuracy of its interpretations.

Deep SHapley Additive exPlanations (Deep SHAP). By combining DeepLIFT [195] with Shapley values [196], Lundberg and Lee [65] proposed a fast method to approximate Shapley values for CNNs called Deep SHAP. Deep SHAP decomposes the prediction of the deep learning model into the sum of feature contributions through backpropagation and obtains the reference specific contribution of each feature to the prediction through the backpropagation prediction difference. Several studies [197] have concentrated on using the DKT model to predict test scores based on skill mastery and then assessed the influence of each skill on the predicted score using SHAP analysis. Inspired by this idea, Valero-Lea et al. [186] aimed to explain learners’ skill mastery by analyzing past interactions using a SHAP-like method to determine the importance of these interactions. Wang et al. [188] proposed a four-step procedure to interpret the DLKT model and obtained effective explicable results. The four-step procedure is as follows: 1) Given a sample x to be interpreted, reference samples are selected, and predictions are made about the last questions; 2) the difference between each reference sample and sample x is calculated; 3) the prediction is then backpropagated from the output layer to the input layer to calculate the reference-specific feature contribution between each reference sample and the interpreted sample; and 4) the contribution of each question-answer in sample x to the prediction of the DLKT model is obtained.

Deep SHAP, which combines DeepLIFT with Shapley values, elucidates feature contributions to predictions in deep learning models. KT helps us understand the relative importance of various features, such as prior performance or interaction frequencies. While effective at revealing individual feature impacts, Deep SHAP may struggle with high-dimensional feature spaces and overlook complex interfeature interactions, which are crucial in KT with diverse learning trajectories.

Causal Explanation(CE). Li et al. [46] addressed the explainability issue of DLKT models by proposing a genetic causal explainer (GCE) based on genetic algorithms (GAs). The GCE established a causal framework and a specialized coding system, effectively resolving the issues of spurious correlations caused by reliance on gradients or attention scores, thereby enhancing the accuracy and readability of explanations. Additionally, the GCE is a post hoc explanation method that can be applied to various DLKT models without interfering with model training, offering a flexible and effective means of explanation.

GCE based on genetic algorithms addresses explainability in DLKT models by establishing a causal framework. While GCE offers a novel approach to understanding deep causal relationships in KT, establishing causal connections requires precise data modeling and hypothesis validation. The complexity and computational demands of GCE, particularly for large datasets, pose significant challenges.

3.4 Stage 3: Other Dimensions

Beyond the mainstream ante-hoc and post-hoc methods, there are interpretatable approaches specific to the knowledge tracing domain, yet underrepresented in current xAI literature. These approaches exploit unique data features in KT, such as interconnected relationships among questions, concepts, or users. Upcoming sections will provide a detailed exploration of these approaches and their role in enhancing interpretability.

3.4.1 Category 1: Output Format

Visual explanations. To verify the interpretability of the proposed model, many researchers use radar charts or heat maps to provide readers with visual explanations. In the following, several representative works will be introduced. Self-paced deep knowledge tracing (SPDKT) [189] reflects the difficulty of the problem by assigning different weights to the problem, visualizing the difficulty of the problem, and improving the interpretability of the model. Similarly, collaborative embedding method for Knowledge Tracing (CoKT) [190] provided an interpretable question embedding by visualizing the distance between question embeddings that share the same concepts and those that do not. Lee et al. [191] proposed a knowledge query network (KQN) model, which uses the dot product between the knowledge state vector and skill vector to define knowledge interaction and uses a neural network to encode students’ responses and skills into vectors of the same dimension. Moreover, the KQN can query students’ knowledge of different skills and subsequently enhance the interpretability of the model by visualizing the interaction between two types of vectors.

Visual explanations enhance surface-level interpretability in KT but often fail to delve into the internal mechanisms of models. The explanatory power of these models heavily relies on the quality of the data representation; inaccurate representations may lead to misleading interpretations. Moreover, complex visual outputs, such as relationships in high-dimensional spaces, can be challenging for general users to understand, limiting their practical effectiveness. Therefore, these methods require further refinement and development to provide more in-depth and thorough explanations.

3.4.2 Category 2: Data Attribute

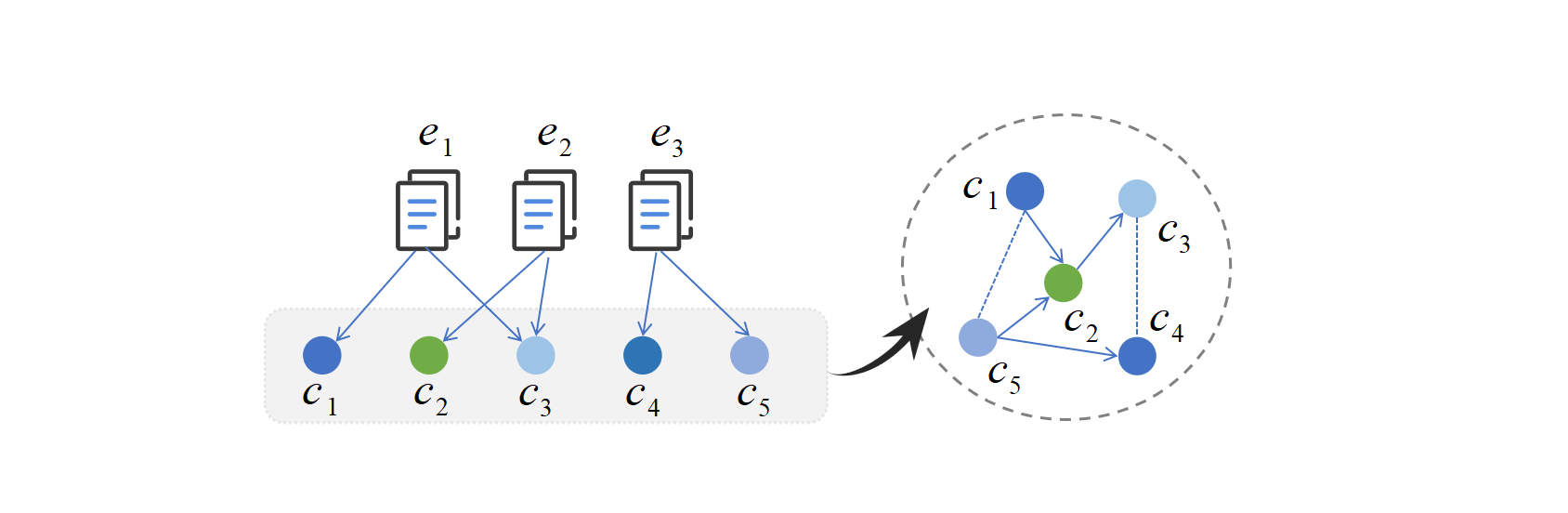

Interaction between exercises and concepts. Several researchers have shown that the relationship between exercises and concepts may be structured into a graph by analyzing learners’ learning data. For example, one exercise may involve multiple concepts, and one concept may also correspond to multiple exercises. In addition, two relationships exist among concepts, prerequisite and similarity, as shown in Fig. 12. The prerequisite is that the mastery of concept requires the mastery of concept , for example, by adding before multiplying; the similarity relationship means that knowledge and knowledge belong to the same category, such as addition. Some studies show that incorporating this graph structure into the knowledge tracing model as a relation induction deviation can enhance interpretability in the prediction process. Generally, there are exercises-to-exercises, concepts-to-concepts, exercises-to-concepts, and other potential relationships in the graph structure constructed from learner learning data. Next, this subsection describes in detail the works that have been done to increase the interpretability of the model by using the underlying graph structure in the data.

At the level of exercise-to-exercise relationships, hierarchical graph knowledge tracing (HGKT) [43] constructs a hierarchical exercise graph according to the latent hierarchical relationships (direct relationships and indirect relationships) between exercises and introduces a problem schema to explore the dependencies of exercise learning, which enhances the interpretability of knowledge tracing. On the level of concept-to-concept relationships, the graph-based knowledge tracing model (GKT) [37] synchronously learns the latent relationships between concepts in the prediction process. The model updates the knowledge state related to the current exercises each time, thus realizing interpretability at the concept level. Educational entities emphasize the significance of knowledge structure. Structure-based knowledge tracing (SKT) [192] utilizes two different propagation models to track the influence of prerequisites or similarity relationships between concepts and has been used in many experiments to prove interpretability. This work visually demonstrated the interpretability of the models in the manner described in 3.1, such as through heatmaps and radar maps. On the level of exercise-to-concept relationships, Zhao et al. [193] used a graph attention network to learn the underlying graph structure between concepts in the answer record and input information from the model containing the relationship information between the exercises and the concept, which enhances the interpretability of the model. To dig deeper into the relationship between exercise-to-exercise and concept-to-concept, a joint graph convolutional network-based deep knowledge tracing (JKT) [194] framework was used to model the multidimensional relationships of the above two factors into a graph and fuse them with “exercise-to-concept” relationships. The model connects exercises under cross-concepts and helps capture high-level semantic information, which increases the interpretability of the model.

Graph-based approaches in knowledge tracing offer intricate insights into the relationships among exercises, concepts, and hierarchical interdependencies. While these methods enhance interpretability by mapping complex educational theories onto graph structures, they also present challenges in terms of complexity and accessibility. Their reliance on sophisticated graph representations and computational models may limit usability for nontechnical users such as teachers and students, hindering their practical application in diverse educational settings. Moreover, the assumptions inherent in these graph-based models about learning processes and relationships might not fully align with the dynamic and varied nature of real-world learning, raising questions about their generalizability and effectiveness.

3.5 Explainable Knowledge Tracing: Application

In this section, we explore the practical application of xKT in educational settings. Our focus is on its role in generating diagnostic reports, personalized learning, resource recommendations, and knowledge structure discovery. This section examines how xKT algorithms are used to track and predict learners’ knowledge states, enabling the creation of dynamic, personalized educational pathways. We discuss the balance between algorithmic complexity and the need for clear, interpretable results in educational settings.

Knowledge tracing in diagnostic reports and visualization. In the realm of educational technology, knowledge tracing primarily manifests in the generation and visualization of learning diagnostic reports. Algorithms such as BKT and DLKT have been pivotal in this regard. BKT utilizes probabilistic modeling to continually update a student’s knowledge state, adjusting the likelihood of concept mastery after each educational interaction. DLKT, leveraging neural network architectures, excels in capturing complex learning patterns, offering nuanced insights into student performance. Despite its interpretability, BKT sometimes struggles with complex learning scenarios, whereas DLKT, though proficient at deciphering intricate behaviors, compromises clarity for the sake of complexity.

To enhance the interpretability of diagnostic reports, models such as KSGKT [198] integrate knowledge structures with graph representations, employing attention mechanisms to accurately predict learner performance. HGKT [43] uses a hierarchical graph neural network to analyze learner interactions, enabling detailed categorization of exercises for a deeper understanding of learner knowledge and problem-solving skills. Both models aim to provide granular diagnostic reports to support personalized learning paths. Additionally, visual explanations, such as intuitive graphs and heatmaps [189], significantly augment the interpretability of KT algorithm outputs, transforming complex data into actionable insights for tailored educational strategies.

Knowledge Tracing in Personalized Learning and Resource Recommendation. The field of personalized learning and resource recommendation has greatly benefited from advancements in knowledge tracing algorithms. These algorithms are adept at tailoring learning pathways and recommending appropriate learning resources based on a student’s current state of knowledge and learning preferences [199]. The introduction of deep learning technologies, such as DKT, has further enhanced the precision and personalization of these recommendations. However, one limitation is the often reduced explainability of these sophisticated models, which can make it challenging for educators to understand the rationale behind specific recommendations.