A Survey of Deep Learning for Low-Shot Object Detection

Abstract.

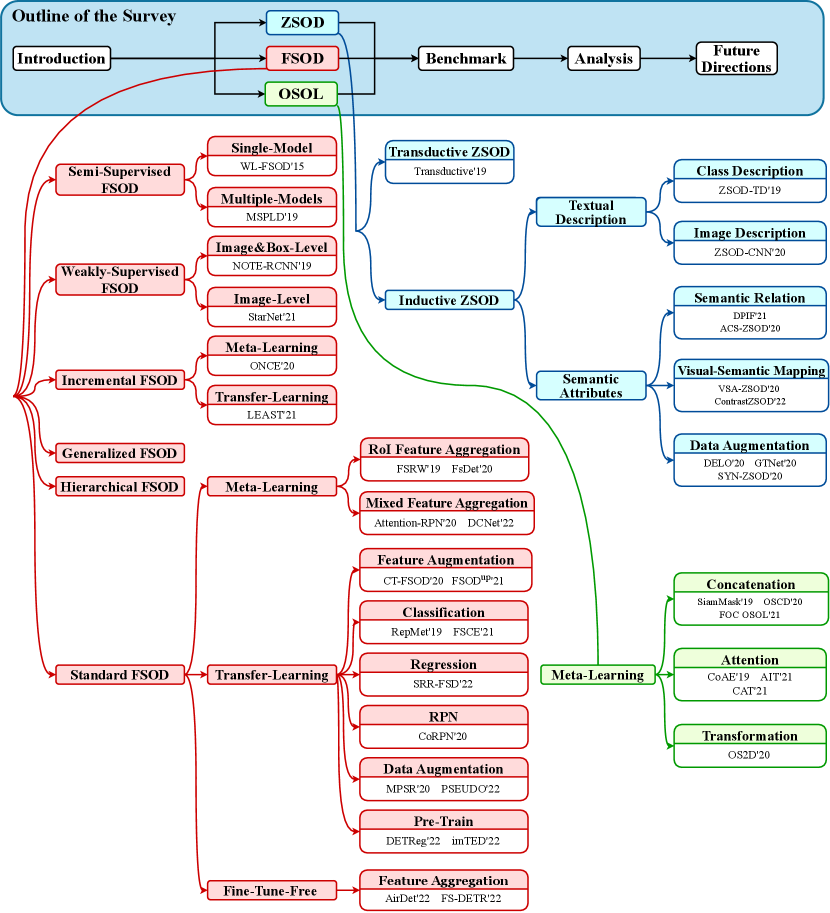

Object detection has achieved a huge breakthrough with deep neural networks and massive annotated data. However, current detection methods cannot be directly transferred to the scenario where the annotated data is scarce due to the severe overfitting problem. Although few-shot learning and zero-shot learning have been extensively explored in the field of image classification, it is indispensable to design new methods for object detection in the data-scarce scenario since object detection has an additional challenging localization task. Low-Shot Object Detection (LSOD) is an emerging research topic of detecting objects from a few or even no annotated samples, consisting of One-Shot Object Localization (OSOL), Few-Shot Object Detection (FSOD), and Zero-Shot Object Detection (ZSOD). This survey provides a comprehensive review of LSOD methods. First, we propose a thorough taxonomy of LSOD methods and analyze them systematically, comprising some extensional topics of LSOD (semi-supervised LSOD, weakly-supervised LSOD, and incremental LSOD). Then, we indicate the pros and cons of current LSOD methods with a comparison of their performance. Finally, we discuss the challenges and promising directions of LSOD to provide guidance for future works.

1. Introduction

Object detection is a fundamental yet challenging task in computer vision, aiming to locate objects of certain classes in images. It has been widely applied to many computer vision tasks like object tracking (Yilmaz et al., 2006; Wang et al., 2019b; Voigtlaender et al., 2019), autonomous driving (Grigorescu et al., 2020; Yurtsever et al., 2020), scene graph generation (Teng and Wang, 2021; Yang et al., 2018; Tang et al., 2020).

The general process of object detection is to predict classes for a set of bounding boxes (imaginary rectangles for reference in the image). Most traditional methods are slow since they generate the bounding boxes using brute force by sliding a window through the whole image. Viola-Jones (VJ) detector (Viola and Jones, 2001) first achieves real-time detection of human faces with three speed-up techniques: integral image, feature selection, and detection cascades. Later, histogram of oriented gradients (HOG) (Dalal and Triggs, 2005) is proposed, and many traditional object detectors adopt it for feature description. Deformable part-based model (DPM) (Felzenszwalb et al., 2008) is a representative traditional method. DPM divides an object detection task into several fine-grained detection tasks, then uses some part-filters to detect parts of an object and aggregates them for final prediction. Although people have made many improvements, traditional methods are restricted by their slow speed and low accuracy.

Compared with these traditional methods, deep-learning-based methods have significantly improved performance. Current deep detectors roughly consist of two-stage detectors and single-stage detectors. Two-stage detectors first generate region proposals (i.e., image regions which are more likely to contain objects) and next make predictions on them, following a similar framework to traditional methods. R-CNN (Girshick et al., 2014) is one of the earliest works of two-stage detectors. It uses selective search to obtain region proposals then extracts their features with a pre-trained CNN model for further classification and regression. Fast R-CNN (Girshick, 2015) improves R-CNN by using a region of interest (RoI) pooling layer to generate feature maps for region proposals from the integral feature map. Faster R-CNN (Ren et al., 2015) further proposes a region proposal network (RPN) to generate region proposals from the whole image feature map using anchors (i.e., pre-defined bounding boxes with specific height and width). However, the generation of region proposals requires high computation cost and storage costs. To mitigate this problem, single-stage detectors are proposed to combine these two stages. YOLO-style object detectors (Redmon and Farhadi, 2018; Bochkovskiy et al., 2020; Ge et al., 2021) are the representative works of single-stage detectors. Given the feature map extracted from the original image, YOLO-style detectors directly pre-define anchors with multiple scales over all locations of the image and predict the class probabilities, regression offsets and object confidence scores of each anchor. Single-stage detectors achieve higher speed, but they generally underperform two-stage detectors. Moreover, some methods like focal loss (Lin et al., 2017b) have been proposed to decrease the performance gap between single-stage and two-stage detectors. Recently, a transformer-based detector named DETR (Carion et al., 2020) has been proposed. DETR achieves end-to-end detection and has comparable performance to many classic detectors. Some extended methods (Zhu et al., 2021b; Dai et al., 2021) are proposed to mitigate the slow convergence problem of DETR.

However, these deep detectors tend to overfit when the training data is scarce and thus require abundant annotated data. In real life, it is hard to collect sufficient annotated data for some object classes due to the scarcity of these classes or special labeling costs, and current deep detectors are not competent in this situation. Therefore, the ability to detect objects from a few or even zero annotated samples is desired for modern detectors. To achieve this goal, Low-Shot Object Detection (LSOD) is introduced into object detection, including One-Shot Object Localization (OSOL), Few-Shot Object Detection (FSOD), Zero-Shot Object Detection (ZSOD). These three settings of LSOD mainly differ in the number of annotated samples for each category. Concretely, OSOL and FSOD tackle the situation that each object category has one or more annotated image samples, while ZSOD differentiates different classes according to the semantic information of each category instead of image samples.

OSOL and FSOD are developed following the mainstream scheme of few-shot learning (FSL). Few-shot learning divides the object classes into base classes with many annotated samples (denoted as base dataset) and novel classes with a few annotated samples (denoted as novel dataset). Note that the annotated samples and the test samples in novel classes are named as support samples and query samples, respectively. Few-shot learning requires to pre-train the model on the base dataset then uses the model to predict novel classes on the novel dataset for evaluation. Current few-shot learning methods are roughly categorized into meta-learning methods and transfer-learning methods. Meta-learning methods adopt a “learning-to-learn” mechanism, which defines multiple few-shot tasks on the base dataset to train the model, and enables the model to adapt to the real few-shot tasks quickly. Moreover, transfer-learning methods learn a good image representation by directly training the model on the base dataset, which is used for the novel dataset. Although meta-learning is a more natural approach to tackle the few-shot problem, Tian et al. (Tian et al., 2020) find that the baseline transfer-learning methods surpass some classic meta-learning methods, especially in the cross-domain few-shot learning. Current few-shot learning methods are mainly explored on the task of image classification. OSOL and FSOD are more challenging than few-shot image classification because object detection requires an extra task to locate the objects. As the branches of few-shot learning, OSOL and FSOD also inherit the core methods (meta-learning & transfer-learning) of it.

OSOL is a few-shot learning setting on object detection which locates objects using only one labeled image of each category in the image. Current OSOL methods all adopt the scheme of meta-learning following few-shot learning, where a large number of one-shot tasks are defined on the base dataset to train the model. OSOL has a strong guarantee that the model precisely knows the object classes contained in each test image. With this strong guarantee, the latest OSOL methods have achieved relatively high performance.

| Notation | Description | Notation | Description |

| Feature map of integral query image | Pool operation | ||

| Feature map of integral support image | Element-wise sum | ||

| The aggregated feature map of and | Channel-wise multiplication | ||

| The RoI feature map in the query image | Convolutional operation | ||

| The RoI feature vector in the query image | FC layer | ||

| The RoI semantic embedding in the query image | Softmax operation | ||

| The pooled feature vector | Sigmoid function | ||

| The aggregated feature vector of and | RELU function | ||

| The semantic embedding of class | The norm of a vector | ||

| The prediction score for class of a RoI | Concatenation operation | ||

| The absolute value of a vector |

However, OSOL setting is not realistic enough since the object classes in the test images are not pre-known in real life. Therefore, another few-shot setting on object detection is adopted by more papers, which is named Few-Shot Object Detection (FSOD). The major differences between FSOD and OSOL are as follows: (1) FSOD needs to predict the correct category of potential objects in the test image. (2) OSOL samples support images independently for each test image, FSOD samples the support images only once for all test images. (3) In FSOD, the number of labeled samples per category can be larger than one. Similar to methods on few-shot image classification, FSOD methods are categorized into two mainstream methods: meta-learning methods and transfer-learning methods. Early FSOD methods mainly adopt the meta-learning scheme. The core operation of meta-learning FSOD methods is to extract the features of a few annotated samples (support features) and aggregate them into the features of query images (query features) for guidance on the prediction of query images. This aggregation operation promotes the model to learn adequate information from a few annotated samples. Early meta-learning FSOD methods simply aggregate the support features with the features of RoIs (RoI features) in the query images. Afterwards, researchers find that the aggregation of integral features is essential for performance improvement since the shallow components in the model also require the information of annotated samples (e.g., the RPN component in Faster R-CNN needs the support features to filter out unmatched region proposals). Therefore, this survey categorizes meta-learning FSOD methods into RoI feature aggregation and mixed feature aggregation methods (“mixed” means using RoI feature aggregation and integral feature aggregation together). Unlike meta-learning methods, transfer-learning FSOD methods directly pre-train the detector on the base dataset and fine-tune it on the novel dataset. Early FSOD methods rarely adopt transfer-learning due to its poor performance. TFA (Wang et al., 2020a) subverts this cognition, which proposes a two-stage fine-tuning strategy to fine-tune the model and achieves better performance than contemporary meta-learning methods. In addition to the standard FSOD discussed above, other extensional settings like semi-supervised FSOD (Misra et al., 2015; Dong et al., 2019), weakly-supervised FSOD (Gao et al., 2019; Karlinsky et al., 2021) and incremental FSOD (Pérez-Rúa et al., 2020; Li et al., 2021a) are also explored by researchers and investigated in this survey.

ZSOD assigns abundant labeled samples to base classes, but it assigns no annotated image samples to novel classes. Instead, mainstream ZSOD allocates semantic attributes to each class (including base and novel classes), and it classifies object proposals according to their semantic similarities with different classes. Mainstream ZSOD methods include visual-semantic mapping methods, semantic relation methods, and data augmentation methods. Most early ZSOD methods belong to visual-semantic mapping methods. These methods aim to learn a visual-semantic function using the annotated samples of the base dataset, which projects visual features into semantic embeddings for comparison with class semantic attributes. Next, semantic relation methods utilize the semantic relation between different classes to make predictions. Moreover, data augmentation methods attempt to generate visual samples for novel classes and re-train the model. Besides the mainstream ZSOD setting described above, this survey discusses some rarely explored settings like transductive ZSOD and textual-description-based inductive ZSOD. Recently, with the emergence of large-scale cross-modal models (e.g., CLIP (Radford et al., 2021)), Open-Vocabulary Object Detection (OVD) attracts more and more research interest, which first trains a stronger visual-semantic mapping function for multiple classes and significantly improves the performance of the further ZSOD task.

The overview of this survey is illustrated in Figure 1. The preliminaries for meta-learning and transfer-learning are given in section 2. The more fine-grained categorization and analysis of methods for LSOD are described in section 3, section 4, section 5, section 6. The two popular datasets (MS COCO dataset (Lin et al., 2014) and PASCAL VOC dataset (Everingham et al., 2010)) and evaluation criteria of LSOD are described in section 7. The performance of current LSOD methods is summarized in section 8. The promising directions LSOD are discussed in section 9. Finally, section 10 concludes the contents of this survey. The key notations used in this survey are summarized in Table 1.

2. Preliminaries

2.1. Meta-Learning

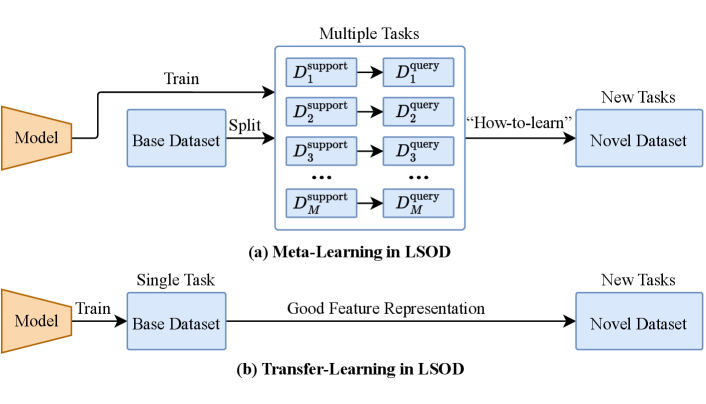

Meta-learning is a “learning-to-learn” (Thrun and Pratt, 1998; Hospedales et al., 2022) paradigm extended from the conventional “learning” paradigm. Conventional learning paradigm directly trains the model from scratch on the whole dataset as a single task. Differently, meta-learning learns the training pattern (e.g., parameter initialization) from multiple tasks, which is capable of generalizing across different tasks and facilitating the learning of new tasks. Therefore, meta-learning is suitable for quick adaptation of the model to the new tasks in few-shot learning. The framework of meta-learning is shown in Figure 2 (a), and a more detailed illustration is in section S1 of the supplementary online-only material.

2.2. Transfer-Learning

Transfer-learning methods aim to transfer the knowledge (good feature representation) from a related domain (named source domain) to the current domain (named target domain), in order to improve the performance of model on the target domain, as shown in Figure 2 (b). Traditional transfer-learning approaches include instance-based methods, feature-based methods, parameter-based methods, and relational-based methods (Zhuang et al., 2021).

For the transfer-learning methods in few-shot learning, the base dataset is viewed as the source domain, and the novel dataset is viewed as the target dataset. Tian et al. (Tian et al., 2020) find that simply transferring a strong feature extractor from the base dataset to the novel dataset outperforms many meta-learning methods on few-shot image classification, and many FSL methods follow this paradigm. Transfer-learning is not suitable for OSOL since the target domain consists of only one image for each task, yet it is widely adopted in FSOD after the emergence of TFA (Wang et al., 2020a).

3. One-Shot Object Localization

Task Settings. One-Shot Object Localization (OSOL) needs to locate objects in a query image according to only one support image for each novel class existing in this query image. The training dataset (base dataset ) of OSOL comprises abundant annotated instances of base classes , and the test dataset (novel dataset ) comprises instances of novel classes ( and are not intersected). Specifically, for each query image in , OSOL randomly samples a support image for each novel class existing in the image. Next, OSOL locates the novel objects in the query image according to the corresponding support image. The main difference from FSOD is that OSOL only requires a binary classification task to discriminate whether the potential object is foreground or background according to the given support image, while FSOD requires a multi-class classification task because FSOD doesn’t pre-know the classes of the existing objects in the query images.

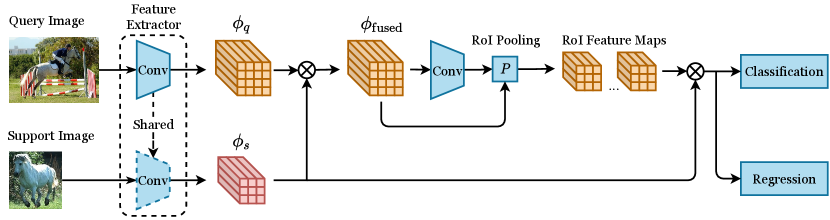

Framework of Current OSOL Methods. Some previous object tracking methods like SiamFC (Cen and Jung, 2018), SiamRPN (Li et al., 2018) are forerunners of OSOL, which are used for comparison with early OSOL methods. Current OSOL methods adopt the meta-learning scheme, and their framework is based on Faster R-CNN, as shown in Figure 3. First, they extract the integral features of the query image and the support image using the same convolutional backbone (named query features and support features, respectively), then conduct “integral feature aggregation” to generate a fused feature map by aggregating the query features with the support features. This fused feature map is fed into RPN and RoI layer to generate category-specific region proposals and the corresponding RoI features, respectively. Finally, these RoI features are used for the final classification and localization tasks. Furthermore, some methods additionally conduct “RoI feature aggregation” to further aggregate the RoI features with the support features.

Current OSOL methods mainly differ in the feature aggregation method, and this survey accordingly categorizes OSOL methods into concatenation-based methods, attention-based methods, and transformation-based methods. In the following sections, , and denote the query feature map, the RoI feature map and the support feature map, respectively. Note that , , , and are the channel and sizes of the feature maps.

3.1. Concatenation-Based Methods

Concatenation-based methods simply adopt the concatenation operation to aggregate and , which are mainly adopted by early OSOL methods (SiamMask (Michaelis et al., 2018), OSCD (Fu et al., 2021), FOC OSOL (Yang et al., 2021a) and OSOLwT (Li et al., 2020c)), as shown in Figure 4.

SiamMask (Michaelis et al., 2018). SiamMask is one of the early deep-learning-based methods for OSOL, which concatenates with the absolute difference between and the pooled embedding vector of to generate the aggregated feature map , as shown in Equation 1. In SiamMask, is directly used for further components (RPN, RoI layer) in Faster R-CNN without other modifications. SiamMask does not achieve satisfying performance since it tackles a segmentation task simultaneously. Nevertheless, as the first method for OSOL, SiamMask proposes a benchmark based on MS COCO dataset for performance comparison, pioneering many future works on OSOL and establishing a baseline for future work.

| (1) |

OSCD (Fu et al., 2021). Different from SiamMask, OSCD directly concatenates with the pooled embedding vector of to generate , as shown in Equation 2. Besides, OSCD further conducts RoI feature aggregation to leverage the information of to facilitate the prediction of RoIs, which concatenates the RoI feature map and in depth. OSCD proposes another OSOL benchmark based on PASCAL VOC dataset for evaluation, and it outperforms SiamFC and SiamRPN by a large margin on this benchmark.

| (2) |

OSOLwT (Li et al., 2020c) and FOC OSOL (Yang et al., 2021a). They add convolutional blocks into the concatenated features, which capture the relation between different feature units for performance improvement, as shown in Equation 3.

| (3) |

Discussion of Concatenation-Based Methods. Concatenation-based methods are mainly adopted by early OSOL methods. SiamMask and OSCD are the earliest concatenation-based methods for feature aggregation, while FOC OSOL and OSOLwT extend SiamMask and OSCD with convolutional blocks and some other elaborated training strategies. However, the limitation of concatenation-based methods is that they simply aggregate features without fully excavating the relation between different local parts of two feature maps, thus impairing the matching between the foreground parts of query feature map with support feature map.

3.2. Attention-Based Methods

Attention-based methods take advantage of the correspondence between different parts of the support features and the query features, as shown in Figure 5.

CoAE (Hsieh et al., 2019). CoAE is the first attention-based OSOL method, which proposes two operations for integral feature aggregation: co-attention (ca) operation and co-excitation (ce) operation. The co-attention operation is implemented using the non-local operation (Wang et al., 2018) (an attention operation), which aggregates two feature maps according to their element-wise attention:

| (4) |

where denotes the non-local operation and . The co-excitation operation generates by aggregating with the pooled embedding vector of with a channel-wise multiplication:

| (5) |

CoAE adopts both these two operations for integral feature aggregation. Besides, CoAE proposes a proposal ranking loss to supervise RPN based on RoI feature aggregation. CoAE outperforms SiamMask on the MS COCO benchmark and OSCD on the PASCAL VOC benchmark, demonstrating the capacity of the attention mechanism on OSOL.

BHRL (Yang et al., 2022), ABA OSOL (Hsieh et al., 2023), ADA OSOL (Zhang et al., 2022a), and AUG OSOL (Du et al., 2022). These later methods follow the co-attention and co-excitation operations in CoAE with some elaborated modifications.

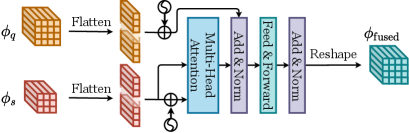

AIT (Chen et al., 2021a), CAT (Lin et al., 2021), SaFT (Zhao et al., 2022a). With the wide usage of transformers (Subakan et al., 2021) in computer vision, some methods (AIT, CAT, SaFT) adopt multi-head attention into OSOL for feature aggregation. These methods flatten the query feature map and the support feature map to be feature sequences and , then generates using multi-head attention to capture bidirectional correspondence between grids of them.

Discussion of Attention-Based Methods. Compared to attention-based methods with transformer, attention-based methods with co-attention require fewer extra parameters and less computation cost. However, CoAE is an early OSOL method, and the simple non-local operation is not enough for feature aggregation of current OSOL methods. Actually, recent methods of this type integrate co-attention with other elaborated operations to improve their performance. On the other hand, methods based on transformer significantly improve performance, and they can easily integrate other elaborated variants of transformer structure into this framework for further performance improvement. However, current transformer-based methods bring too much extra computation cost into model training. Therefore, the efficient transformer structure is expected to be adopted for the trade-off between performance and computation cost.

3.3. Transformation-Based Methods

OS2D (Osokin et al., 2020) proposes a transformation-based method for feature aggregation, which conducts feature map transformation to match query feature map and support feature map. Given the query feature map and the support feature map , OS2D first computes a 4D correlation matrix of shape which represents the correspondence between all pairs of locations from these two feature maps. Then, it uses a pre-trained TransformNet (Rocco et al., 2018) to generate a transformation matrix that spatially aligns the support feature map with the query feature map. Finally, the classification score of each location of the query feature map is obtained from the combination of the correlation matrix and the transformation matrix.

Discussion of OSOL Methods. To sum up, concatenation-based methods are easy to implement, and they require smaller computation cost, but they have poorer performance. Attention-based methods can capture the correspondence between support images and the foreground of query images, thus outperforming concatenation-based methods. The weakness of attention-based methods is that it is more complicated to implement them, and they require larger computation cost. Transformation-based methods make the decision process of OSOL more interpretable, but they require a large pre-trained model to capture the spatial correspondence between query and support images.

4. Standard Few-Shot Object Detection

Task Settings. The previous OSOL setting guarantees that every query image contains objects with the same category as the given support image, i.e., the model knows precisely the object classes contained in each test image. However, this setting is not realistic in the real world, and a more challenging LSOD setting, named Few-Shot Object Detection (FSOD), is adopted by more papers. This section first introduces the standard FSOD, and other FSOD settings (named extensional FSOD) are based on the standard FSOD, which will be analyzed in the later sections. Specifically, the base dataset () of standard FSOD consists of abundant annotated instances of base classes , and the novel dataset () consists of scarce annotated instances of novel classes ( and are not intersected). During testing, the model is evaluated on the test dataset comprising objects of both base classes and novel classes. The differences between FSOD and OSOL are as below:

-

(1)

Since OSOL precisely knows the object categories contained in each test image, it only requires a binary classification task to discriminate whether the potential object is foreground or background according to the given support image. In contrast, FSOD requires a multi-class classification task to predict the category of the potential object.

-

(2)

OSOL samples support images independently for each test image, FSOD samples the support images only once for all test images.

-

(3)

The shot number of support images per category can be larger than one in FSOD.

Method Categorization. Current standard FSOD methods can be categorized into fine-tune-based methods and fine-tune-free methods. Most methods are fine-tune-based methods, which require to fine-tune the model on the novel dataset for the significant improvement of performance. Early fine-tune-based methods adopt the scheme of meta-learning, and they also concentrate on the methods of feature aggregation as OSOL methods. Furthermore, the increased number of annotated samples opens up the possibility for standard FSOD methods to adopt the scheme of transfer-learning, which pre-trains an object detector on the base dataset and fine-tunes this pre-trained model for novel classes on the novel dataset. Early transfer-learning methods like LSTD (Chen et al., 2018) are outperformed by the meta-learning methods in that period until the emergence of TFA (Wang et al., 2020a). On the other hand, fine-tune-free methods aim to remove the fine-tuning step because fine-tuning step is not suitable for FSOD in real life for its nonnegligible computation cost. In this survey, the meta-learning methods are first analyzed since they are highly correlated to OSOL methods, then transfer-learning methods and fine-tune-free methods are analyzed later.

4.1. Meta-Learning Methods

Similar to OSOL, the meta-learning methods for standard FSOD first define a large number of few-shot detection tasks on the base dataset to train the model. The difference is that each few-shot task contains a query image and multiple support images since FSOD requires support images from all base classes for the multi-class classification task. Another difference is that meta-learning methods for standard FSOD have an additional fine-tuning stage that OSOL methods lack, which continues to meta-train the model by sampling support images from both base classes and novel classes for each few-shot task. The meta-learning framework of standard FSOD is similar to that of OSOL, which conducts “integral feature aggregation” and “RoI feature aggregation” to aggregate the query features with support features to incorporate the information of support images into the query image for prediction. Early meta-learning methods only conduct RoI feature aggregation, and later methods conduct both integral and RoI feature aggregation (named “mixed feature aggregation”) for better performance. Therefore, meta-learning methods for standard FSOD are categorized into RoI feature aggregation methods and mixed feature aggregation methods for a more explicit presentation in this survey.

4.1.1. RoI Feature Aggregation Methods

RoI feature aggregation methods aggregate the RoI features with support features to generate class-specific RoI features for the classification and regression tasks. Unlike OSOL methods that almost all adopt Faster R-CNN as the detection framework, early meta-learning methods explore RoI feature aggregation methods on both single-stage and two-stage detectors. These RoI feature aggregation methods can be categorized into two types according to the type of aggregated features: RoI feature-vector aggregation methods (FSRW (Kang et al., 2019), Meta R-CNN (Yan et al., 2019), CME (Li et al., 2021c), TIP (Li and Li, 2021), VFA (Han et al., 2023), FSOD-KT (Kim et al., 2020), GenDet (Liu et al., 2022a), FsDet (Xiao and Marlet, 2020), DRL (Liu et al., 2021b), and AFD-Net (Liu et al., 2021a)) and RoI feature-map aggregation methods (Attention-RPN (Fan et al., 2020), QA-FewDet (Han et al., 2021), KFSOD (Zhang et al., 2022d), PNSD (Zhang et al., 2020a), MM-FSOD (Han et al., 2022c), SQMG-FSOD (Zhang et al., 2021c), ICPE (Lu et al., 2022), DAnA-FasterRCNN (Chen et al., 2021b), TENET (Zhang et al., 2022c), Hierarchy-FasterRCNN (Park and Lee, 2022), IQ-SAM (Lee et al., 2022a), and Meta Faster R-CNN (Han et al., 2022a)).

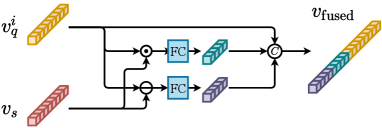

The “RoI feature-vector aggregation methods” can be categorized into two types, which are first proposed by FSRW and FsDet, respectively.

FSRW (Kang et al., 2019) is the first meta-learning method for standard FSOD based on the YOLOv2 detection framework. FSRW simply aggregates each feature vector at each pixel of the query feature map with the pooled embedding of the support feature map, aiming to highlight the important features corresponding to the support image using a simple element-wise multiplication:

| (6) |

The fused feature vector is used to predict the classification score (for the class that is from) and location regression, as shown in 6(a). Meta R-CNN (Yan et al., 2019), CME (Li et al., 2021c), TIP (Li and Li, 2021), VFA (Han et al., 2023), FSOD-KT (Kim et al., 2020) and GenDet (Liu et al., 2022a) follow this simple element-wise multiplication operation with other elaborated extensions.

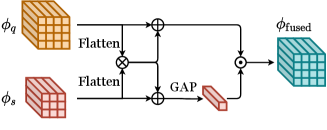

FsDet (Xiao and Marlet, 2020) upgrades this simple element-wise multiplication operation to a more complex yet effective version, as shown in 6(b). Specifically, given the RoI feature vector and the support feature vector , the aggregated feature vector is calculated as the concatenation of their linearly transformed element-wise multiplication, subtraction and the original , as shown in Equation 7 ( denotes a fully-connected layer that reduces the dimension). With this extended aggregation method, FsDet outperforms Meta R-CNN on both MS COCO benchmark and PASCAL VOC benchmark.

| (7) |

AFD-Net (Liu et al., 2021a) and DRL (Liu et al., 2021b). These two methods follow FsDet in this RoI feature-vector aggregation method with some other modifications.

Unlike the above RoI feature-vector aggregation methods which concentrate on the aggregation of feature vectors, RoI feature-map aggregation methods focus on the aggregation of feature maps that preserves spatial information for better excavating the relation between query and support images. Some methods only adopt simple concatenation operation and element-wise operation for the feature map aggregation, while newly proposed methods tend to adopt attention operation for feature map aggregation.

Concatenation operation & element-wise operation for RoI feature-map aggregation. SQMG-FSOD (Zhang et al., 2021c) simply concatenates the RoI feature map with the support feature map for the RoI feature-map aggregation. While some methods (Attention-RPN (Fan et al., 2020), QA-FewDet (Han et al., 2021), KFSOD (Zhang et al., 2022d), PNSD (Zhang et al., 2020a), FCT (Han et al., 2022b), and MM-FSOD (Han et al., 2022c)) utilize a multi-relation head that adopt both concatenation operation and element-wise operation. Specifically, this multi-relation head consists of a global-relation head, a patch-relation head, and a local-relation head. The global-relation head concatenates and in depth with a pooling operation. The patch-relation head concatenates and with several convolutional blocks on it. And the local-relation head aggregates and by calculating the pixel-wise and depth-wise similarities between them. These methods conduct both integral and RoI feature aggregation, which will be specified later.

Attention operation for RoI feature-map aggregation. Some methods (ICPE (Lu et al., 2022), DAnA-FasterRCNN (Chen et al., 2021b), TENET (Zhang et al., 2022c), Hierarchy-FasterRCNN (Park and Lee, 2022), IQ-SAM (Lee et al., 2022a), and Meta Faster R-CNN (Han et al., 2022a)) adopt the attention operation to conduct RoI feature-map aggregation. Specifically, they calculate the aggregated feature map according to the similarity score (attention) between each pair of elements from and . In these methods, ICPE conducts only RoI feature aggregation with some proposed modifications. Specifically, it additionally incorporates the information of query images into support images before the final feature aggregation, and it adjusts the importance of different support images instead of treating them as equals. Other methods conduct both integral and RoI feature aggregation, which will be specified later.

Discussion of RoI Feature Aggregation Methods. RoI Feature Aggregation Methods are categorized into RoI feature-vector aggregation methods and RoI feature-map aggregation methods. RoI feature-vector aggregation methods are early meta-learning methods for FSOD, whose approaches are simple and limit their performance. On the other hand, RoI feature-map aggregation methods preserve spatial information of query and support samples, towards fully extracting the spatial relations between query and support features. Therefore, RoI feature-map aggregation methods can better discriminate features of different objects and achieve higher performance.

4.1.2. Mixed Feature Aggregation Methods

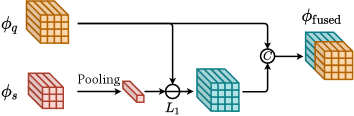

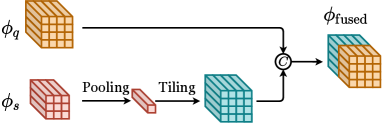

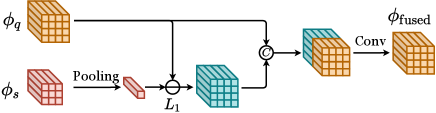

The above section discusses only the RoI feature aggregation, while most newly proposed methods (named “mixed feature aggregation methods”) additionally conduct integral feature aggregation to incorporate class-specific information into the shallow components of the detection model. The integral feature aggregation methods are mainly conducted on the feature-maps (not feature-vectors) and can be categorized into concatenation & element-wise operations (Attention-RPN (Fan et al., 2020), QA-FewDet (Han et al., 2021), KFSOD (Zhang et al., 2022d), PNSD (Zhang et al., 2020a), MM-FSOD (Han et al., 2022c), Meta Faster R-CNN (Han et al., 2022a)), convolutional operation (SQMG-FSOD (Zhang et al., 2021c)), and attention operation (DAnA-FasterRCNN (Chen et al., 2021b), TENET (Zhang et al., 2022c), Hierarchy-FasterRCNN (Park and Lee, 2022), IQ-SAM (Lee et al., 2022a), DCNet (Hu et al., 2021), Meta-DETR (Zhang et al., 2021b), FCT (Han et al., 2022b)).

Concatenation & element-wise operations for integral feature aggregation. Attention-RPN (Fan et al., 2020) conducts integral feature map aggregation by using as a kernel and sliding it across to compute similarities at each location. Specifically, the element at the location of the aggregated feature map is calculated in Equation 8 (note that ). Some methods (QA-FewDet (Han et al., 2021), KFSOD (Zhang et al., 2022d), PNSD (Zhang et al., 2020a), MM-FSOD (Han et al., 2022c), Meta Faster R-CNN (Han et al., 2022a)) follow this integral feature aggregation method with other extensions.

| (8) |

Convolutional operation for integral feature aggregation. SQMG-FSOD (Zhang et al., 2021c) proposes another integral feature aggregation method by generating convolutional kernels from support features and using the generated kernels to enhance query features. Furthermore, SQMG-FSOD not only learns a distance metric to compare RoI features and support features for filtering out irrelevant RoIs but also utilizes this metric to assign weights to support samples by comparing them with query images. Additionally, it proposes a hybrid loss to mitigate the false positive problem (i.e., some background RoIs are misclassified into objects).

Attention operation for integral feature aggregation. Newly proposed methods (DCNet (Hu et al., 2021), DAnA-FasterRCNN (Chen et al., 2021b), TENET (Zhang et al., 2022c), Hierarchy-FasterRCNN (Park and Lee, 2022), IQ-SAM (Lee et al., 2022a), Meta-DETR (Zhang et al., 2021b), and FCT (Han et al., 2022b)) tend to adopt attention operation for integral feature aggregation. Attention operation aggregates two feature-maps using a similar manner as scaled dot-product attention (Subakan et al., 2021). It extracts the key map and the value map from the query image and the support image, respectively, then calculates the pixel-wise similarities between these two key maps and uses them to aggregate two value maps.

-

•

Meta-DETR also adopts attention operation for integral feature aggregation with a significant boost in performance. The major difference is that it adopts Deformable DETR (Zhu et al., 2021b) as the detection framework. DETR is an end-to-end transformer-based detector that eliminates anchor boxes in former detectors. Besides, Meta-DETR proposes a correlational aggregation module (CAM) that uses single-head attention to aggregate the query feature-maps with the support feature-maps. The aggregated features are finally fed into a class-agnostic transformer to predict object categories and locations.

-

•

Most of these methods aggregate the query and support features that are extracted from the backbone independently, while FCT surpasses this limit and instead aggregates the features in each layer of the ViT backbones, which achieves significant performance improvement. First, it splits query images and support images into image tokens and add position & branch embeddings into them (i.e., position embedding discriminates the position of the token, and branch embedding discriminates whether the token is from support image or query image). Next, it concatenates all query and support tokens into a sequence and feeds them into a transformer to generate the aggregated integral features.

Discussion of Mixed Feature Aggregation Methods. Compared to RoI feature aggregation methods, mixed feature aggregation methods additionally conduct integral feature aggregation to incorporate category-specific information into the shallow components (mainly RPN) of the detection model, which extracts more positive region proposals for the further classification & regression tasks and improves the performance. Mixed feature aggregation methods are categorized into three types: concatenation & element-wise operations, convolutional operation, and attention operation. Simple concatenation & element-wise operations are mostly adopted by early FSOD methods, which have poor performance and need to combine other components altogether for performance improvement. Convolutional operation is still simple, which cannot fully incorporate the information of support features into query features. Attention operation captures the relation between local regions in query feature maps and support feature maps, which better discriminates different local regions, and these methods overall achieve better performance.

4.1.3. Other Meta-Learning Methods

There are some other meta-learning methods that focus on issues other than the aggregation method of features, which are weight-prediction-based methods and metric-learning-based methods.

Weight-Prediction-Based Methods. MetaDet (Wang et al., 2019a) proposes a meta-learning method that learns to predict the weights of category-specific components of the model. MetaDet predicts category-specific (e.g., the classification and regression branches) weights for novel classes from few samples and fine-tunes the model on the novel dataset. Meta-RetinaNet (Li et al., 2020b) is another method which adopts RetinaNet as the detection framework and predicts the weights of the whole network.

Metric-Learning-Based Methods. IR-FSOD (Huang et al., 2021) directly learns to compare the similarity between the RoI features with support features from different classes to generate the classification scores. CAReD (Quan et al., 2022) also adds another metric learning branch for classification apart from the main classification branch.

4.2. Transfer-Learning Methods

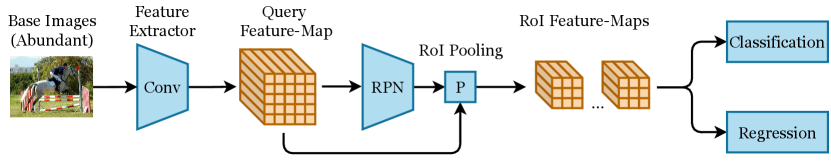

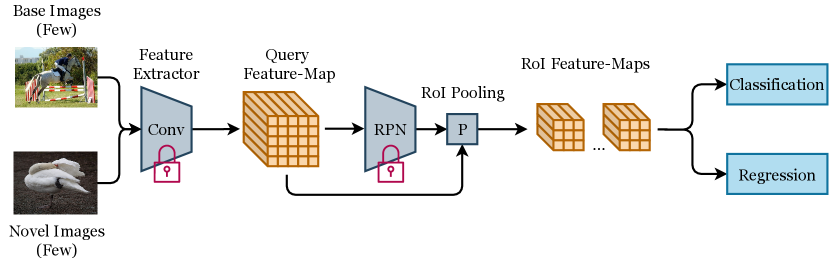

Transfer-learning methods regard FSOD as a transfer-learning problem in which the source domain is the base dataset, and the target domain is the novel dataset. Current transfer-learning methods mainly adopt Faster R-CNN as the detection framework, consisting of two stages: base training and few-shot fine-tuning, as shown in Figure 7. The base training stage trains an object detector on the base dataset. After this stage, the object detector will obtain an effective feature extractor and achieve good performance on base classes. Then, in the few-shot fine-tuning stage, this pre-trained object detector will be fine-tuned on the novel dataset to detect novel classes. In this way, the common knowledge for feature extraction and proposal generation can be transferred from base classes to novel classes.

LSTD (Chen et al., 2018) is the first method to adopt the transfer-learning scheme for FSOD. It adopts Faster R-CNN as the detection framework with two regularization terms in the few-shot fine-tuning stage. Specifically, the first term suppresses background regions in the feature maps, and the second term promotes the fine-tuned model to generate similar predictions with the source model. Regrettably, the performance of LSTD is exceeded by the meta-learning methods during the same period.

TFA (Wang et al., 2020a) (Two-Stage Fine-tuning Approach) significantly improves the performance of transfer-learning methods based on the Faster R-CNN detection framework. In the base training stage, TFA pre-trains the model on the base dataset as previous transfer-learning methods. Then, in the few-shot fine-tuning stage, it freezes the main components of Faster R-CNN and only fine-tunes the last two layers (box classification and regression layers) of Faster R-CNN. The loss function used in the few-shot fine-tuning stage is the same as the base training stage but with a lower learning rate. The dataset used in the few-shot fine-tuning stage is a balanced dataset containing a few training samples of novel classes and a few selected training samples of base classes. This design retains the model’s detection ability for base classes and mitigates the problem that some objects of base classes are misclassified into novel classes. With this simple but effective training strategy, TFA outperforms early meta-learning methods like FSRW, MetaDet, and Meta R-CNN on both MS COCO benchmark and PASCAL VOC benchmark.

DeFRCN (Qiao et al., 2021) significantly improves the performance of TFA with two concise modifications: (1) DeFRCN assigns different importance values to the gradients from RPN module and R-CNN module, which is motivated by the viewpoint that RPN module and R-CNN module may learn paradoxically and the learning of these two modules should be decoupled. (2) DeFRCN utilizes a pre-trained classifier as an auxiliary branch for the classification of region proposals. DeFRCN further validates the effectiveness of transfer-learning methods for FSOD, and many methods are proposed following this transfer-learning paradigm. In this survey, transfer-learning methods are categorized into feature-augmentation-based methods, classification-based methods, regression-based methods, RPN-based methods, data-augmentation-based methods, and pre-train-based methods according to the detection stage they focus on.

4.2.1. Feature-Augmentation-Based Methods

Feature-augmentation-based methods focus on the feature extraction stage of an FSOD model. These methods apply different augmentations to the features, aiming to better transfer the features learned on the base dataset to the novel dataset. Current feature-augmentation-based methods can be categorized into three types: self-attention-based methods (CT-FSOD (Yang et al., 2020a), AttFDNet (Chen et al., 2020)), feature-discretization-based methods (SVD-FSOD (Wu et al., 2021b), KD-FSOD (Pei et al., 2022)), and feature-inheritance-based methods ( (Wu et al., 2021a), FADI (Cao et al., 2021)).

Self-Attention-Based Methods for Feature-Augmentation. Self-attention-based methods (CT-FSOD (Yang et al., 2020a), AttFDNet (Chen et al., 2020)) adopt self-attention to augment the extracted features.

Feature-Discretization-Based Methods for Feature-Augmentation. Feature-discretization-based methods (SVD-FSOD (Wu et al., 2021b), KD-FSOD (Pei et al., 2022)) discretize the feature-map by projecting each pixel of the feature-map into a learned codebook (i.e., replacing each pixel of the feature-map with its nearest code), thus enhancing the discrimination of features from different categories.

Feature-Inheritance-Based Methods for Feature-Augmentation. Feature-inheritance-based methods ( (Wu et al., 2021a), FADI (Cao et al., 2021)) inherit the features of base classes to the features of novel classes for augmentation, which mitigates the data scarcity problem of novel classes.

Discussion of Feature-Augmentation-Based Methods. Self-attention-based methods incorporate interpretability into the decision-making of FSOD through the attention heatmaps. However, self-attention-based methods are early FSOD methods, and the attention operations they adopt are primitive, restricting their performance.

Feature-discretization-based methods utilize feature discretization to enhance the discrimination of features from different categories, but they haven’t demonstrated the visual concepts that the discretized features represent. Besides, KD-FSOD requires an additional step to train an extra visual-word model and needs knowledge distillation to inherit the knowledge of this visual-word model into the few-shot detector, bringing a non-negligible burden into model training.

Feature-inheritance-based methods utilize the knowledge from base classes as “free lunch” to augment the features of novel classes with negligible cost. However, in the scenario that base classes and novel classes are not in the same domain, it is unclear whether these methods still work since base classes and novel classes share less common knowledge.

4.2.2. Classification-Based Methods

Classification-based methods aim to improve the classification branch of the detection model. Early classification-based methods focus on improving the main classification branch with some elaborated metric learning methods (RepMet (Karlinsky et al., 2019), NP-RepMet (Yang et al., 2020b), PNPDet (Zhang et al., 2021a), FSOD-KI (Yang et al., 2023)). New classification-based methods mostly propose another classification branch to assist the main classification branch, including additional-classifier-based methods (FSCN (Li et al., 2021e)), contrastive-learning-based methods (FSCE (Sun et al., 2021), FSRC (Shangguan et al., 2022), CoCo-RCNN (Ma et al., 2022)), knowledge-graph-based methods (KR-FSOD (Wang and Chen, 2022)), and semantic-infor-mation-based methods (SRR-FSOD (Zhu et al., 2021a)).

Metric Learning Methods for Classification. These methods (RepMet (Karlinsky et al., 2019), NP-RepMet (Yang et al., 2020b), PNPDet (Zhang et al., 2021a), FSOD-KI (Yang et al., 2023)) propose elaborated metric learning methods to directly improve the main classification branch.

Additional-Classifier-Based Methods for Classification. FSCN (Li et al., 2021e) proposes a few-shot correction network (FSCN) as an additional classification branch of the model, which makes class predictions for the cropped region proposals with a pre-trained image classifier. These classification scores are used to refine the classification scores from the main branch. Besides, this paper proposes a semi-supervised distractor utilization method to select unlabeled distractor proposals for novel classes and a confidence-guided dataset pruning (CGDP) method for filtering out training images containing unlabeled objects of novel-classes.

Contrastive-Learning-Based Methods for Classification. These methods (FSCE (Sun et al., 2021), CoCo-RCNN (Ma et al., 2022), FSRC (Shangguan et al., 2022)) adopt contrastive learning to assist the classification of region proposals.

-

•

FSCE introduces a contrastive loss to improve the classification performance of the model. FSCE proposes a contrastive loss function to maximize the similarity between objects of the same category and promote the distinctiveness of region proposals from different categories. This work is the first attempt to adopt contrastive learning into transfer-learning-based FSOD, which significantly improves the performance of the baseline TFA.

Knowledge-Graph-Based Methods for Classification. KR-FSOD (Wang and Chen, 2022) proposes an additional classification branch based on an external knowledge graph with potential objects as nodes. The model predicts the category of each potential object according to the information of its nearby objects, which is extracted from this external knowledge graph. KR-FSOD improves the performance by incorporating the external knowledge graph into the FSOD model.

Semantic-Information-Based Methods for Classification. SRR-FSOD (Zhu et al., 2021a) proposes an additional classification branch utilizing class semantic information to promote the classification, which utilizes the external semantic information into the FSOD model for higher performance. Specifically, SRR-FSOD projects the visual features into the semantic space using a linear projection. In this semantic space, multiple word embeddings are used as semantic embeddings to represent all base and novel classes. It generates class probabilities for the projected semantic embeddings by calculating the similarities between the projected visual features and the class semantic embeddings.

Discussion of Classification-Based Methods. Metric learning methods are early methods for FSOD with insufficient performance compared with the latest FSOD methods, indicating that simple modification on the RoI classifier is not enough for FSOD.

Additional-classifier-based method (FSCN) achieves a large performance improvement. However, it requires a pre-trained image classifier, resulting in an unfair comparison with other FSOD methods.

Contrastive-learning-based methods incur minimal additional cost during model training while yielding a substantial improvement in performance. Besides, they can be seamlessly integrated into other FSOD methods.

Knowledge-graph-based method (KR-FSOD) is well motivated, but the performance is currently not promising. Additionally, like FSCN, it cannot be readily applied to novel classes in real-world FSOD applications due to the unavailability of corresponding knowledge graphs.

Semantic-information-based method (SRR-FSOD) serves as a bridge between FSOD and zero-shot learning by incorporating class semantic information into the model. This approach has the potential for enhancing performance with the large-scale cross-modal models. Nevertheless, it may not be suitable for novel classes that haven’t been learned before.

4.2.3. Regression-Based Methods

Regression-based methods focus on improving the regression branch of detection model. SRR-FSD (Kim et al., 2022) proposes a refinement approach to improve the regression of region proposals in RPN. Specifically, SRR-FSD expands the regression branch into multiple successive regression heads. Each regression head receives the region proposals generated from the preceding regression head and continues to refine these region proposals for generating more positive samples.

Discussion of Regression-Based Methods. While the performance of the current regression-based method (SRR-FSD) is currently not ideal, it’s important to note that such methods are still rare, and there is ample opportunity for future exploration and improvement.

4.2.4. RPN-Based Methods

CoRPN (Zhang et al., 2020b) improves the RPN in Faster R-CNN for standard FSOD. CoRPN assumes that the RPN pre-trained on base classes will miss some objects of novel classes. Therefore, it uses multiple foreground-background classifiers in RPN instead of the original single one to mitigate this problem. During testing, a given proposal box is assigned with the score from the most certain RPN. During training, only the most certain RPN will get the gradient from the corresponding bounding box. CoRPN proposes a diversity loss to encourage the diversity of these RPNs and a cooperation loss to mitigate firm rejection of foreground proposals.

Discussion of RPN-Based Methods. RPN-based method (CoRPN) directly devises multiple RPNs to retrieve those missed novel objects, which addresses the problem that novel objects tend to be missed by the RPN trained on the base dataset. However, it is limited in R-CNN-based model, and it is unclear whether it still works when integrated into other FSOD models.

4.2.5. Data-Augmentation-Based Methods

Data-augmentation-methods aim to generate more samples for each novel class, thus directly tackling the data-scarce problem of few-shot setting. Current data augmentation methods can be divided into two categories: sample generation in the input-pixel space and sample generation in the feature space. The former type directly generates samples in the input-pixel space that are understandable and perceivable by humans, which can be further divided into multi-scale augmentation methods and novel-instance-mining methods. The latter type synthesizes more deep features for the novel classes, which can be further divided into distribution inheritance methods and generator-based methods.

Sample Generation In the Input-Pixel Space Multi-Scale Augmentation Methods. MPSR (Wu et al., 2020) and FSSP (Xu et al., 2021) both apply data augmentation to enrich the scales of positive samples.

-

•

MPSR claims that although feature pyramid network (FPN) (Lin et al., 2017a) may mitigate the scale variation issue, it cannot address the sparsity of scale distribution in FSOD. Therefore, MPSR proposes a strategy to directly augment the scales of objects in the input pixel space, which extracts each positive object independently and resizes them to multiple scales. The augmented multi-scale samples are fed into the RPN module and detection heads for training.

Sample Generation In the Input-Pixel Space Novel-Instance-Mining Methods. MINI (Cao et al., 2022), PSEUDO (Kaul et al., 2022), Decoupling (Gao et al., 2022a), and N-PME (Liu et al., 2022b) excavate the unlabeled novel objects in the dataset for data augmentation.

Sample Generation In the Feature Space Distribution Inheritance Methods. FSOD-KD (Zhao et al., 2022b), PDC (Li et al., 2022a), and FSOD-DIS (Wu et al., 2022) generate more novel features by transferring the feature distribution from the base dataset for data augmentation, which stem from the same few-shot learning method (Yang et al., 2021b). Specifically, these methods assume that the feature distribution of a class can be approximated as a Gaussian distribution and similar classes have similar feature distributions. Therefore, they calculate the feature distribution of base classes using their abundant samples and estimate the feature distribution of each novel class according to their nearest base classes. Finally, these methods sample more novel features from the estimated feature distribution and use them for training.

Sample Generation In the Feature Space Generator-Based Methods. Halluc (Zhang and Wang, 2021) aims to synthesize additional RoI features for novel classes. It proposes a simple hallucinator to generate hallucinated RoI features, implemented as a simple two-layer MLP. In the base-training stage, Halluc first trains a Faster R-CNN on the base dataset as regular object detection. Then, it freezes the parameters of the detector and pre-trains the hallucinator with a classification loss for the synthesized samples. Next, in the few-shot fine-tuning stage, Halluc unfreezes the parameters of detection heads (classification head & regression head) and adopts an EM-like algorithm to train the hallucinator and detection heads alternately. It is noted that this method shows impressive performance when the number of training samples is extremely small. However, its superiority over baseline methods such as TFA cannot be guaranteed as the number of training samples increases.

Discussion of Data-Augmentation-Based Methods. Methods for sample generation in the input-pixel space are categorized into multi-scale augmentation methods and novel instance mining methods. Multi-scale augmentation methods are effective data-augmentation methods for FSOD, and they are easy to implement. However, conducting data-augmentation only on the aspect of scale does not tackle the core of data-scarcity problem of FSOD, and they are early FSOD methods with insufficient performance. For the novel instance mining methods, it is true that on current FSOD benchmarks, many objects from novel classes indeed exist in the images without annotation. Capturing these objects effectively mitigates the data-scarcity problem in FSOD and significantly improves the performance. These methods have great potential to be integrated into other FSOD methods. However, this setting is not realistic. In real-life FSOD, it is not guaranteed that the images of the base dataset contain objects from novel classes.

Methods for sample generation in the feature space are categorized into two categories: distribution inheritance methods and generator-based methods. The former type effectively generates more samples for novel classes using the data distribution from the data-abundant base classes. It introduces no extra parameters and can be considered as a “free lunch” from the base dataset. However, it is not applicable in the real-world scenario that there is a significant difference between the data distribution of the base classes and novel classes. The latter type is more suitable for the scenario that base classes and novel classes differ a lot, but it introduces an extra generator which may increase the burden for model training.

4.2.6. Pre-Train-Based Methods

Almost all transfer-learning methods adopt a backbone pre-trained on ImageNet before the base training stage. Some methods (DETReg (Bar et al., 2022), imTED (Zhang et al., 2022b)) focus on improving this pre-training stage.

-

•

DETReg pre-trains a DETR model in an unsupervised manner. On the one hand, it uses Selective Search (Uijlings et al., 2013) to excavate object proposals and uses them to train the object localization branch of the model. On the other hand, it uses another pre-trained self-supervised model to generate object encodings and enforces the DETR model to mimic these object encodings.

-

•

imTED integrally migrates a pre-trained MAE model (He et al., 2022) to be a detection model. Concretely, imTED adds a region proposal network and a detection head into the MAE model following the design of Faster R-CNN. Besides, it proposes a multi-scale feature modulator to fuse multi-scale features extracted from a FPN (Lin et al., 2017a).

Discussion of Pre-Train-Based Methods. These methods explore the current FSOD problem in a new perspective that pursues a stronger backbone before the few-shot training stage, while current FSOD methods most simply adopt a backbone pre-trained with a classification task on ImageNet. Besides, the performance of these methods is significantly superior to other methods. However, these methods require a stronger pre-trained backbone (DETReg requires SwAV, and imTED requires MAE). Besides, these methods never clarify whether these stronger backbones cover the knowledge of novel classes in the FSOD setting, which will bring an unfair comparison with other FSOD methods.

4.3. Fine-Tune-Free Methods

Fine-tune-free methods focus on directly transferring the trained model from the base dataset to the novel dataset without fine-tuning. Existing fine-tune-free methods (AirDet (Li et al., 2022b), FS-DETR (Bulat et al., 2022)) adopt the scheme of meta-learning, and they also focus on the method of feature aggregation. Specifically, AirDet conducts integral feature aggregation with element-wise multiplication and concatenation operations, and it proposes to learn the weights of different support samples instead of treating them as equals. Besides, AirDet aggregates RoI features with support features for the regression branch. FS-DETR concatenates query features with support features into a common sequence and feeds it into the DETR model. FS-DETR proposes the learnable pseudo-class embeddings with the same shape as support features and adds them into support features to facilitate the model training.

Discussion of Fine-Tune-Free Methods. Fine-tune-free method requires less computation cost and are more suitable for real life. However, the performance of these methods is currently not ideal compared to the fine-tune-based methods.

5. Zero-Shot Object Detection

Zero-Shot Object Detection (ZSOD) is an extreme scenario of LSOD that novel classes do not contain any image sample. Concretely, the training dataset (base dataset ) of ZSOD consists of abundant annotated instances of base classes , and the test dataset (novel dataset ) does not consist of annotated instances of novel classes ( and are not intersected). As a substitute, ZSOD utilizes semantic information to assist in detecting objects of novel classes.

According to whether utilizing unlabeled test images for model training, this survey categorizes ZSOD into two domains: “transductive ZSOD” and “inductive ZSOD”. Inductive ZSOD is the mainstream of ZSOD, which does not require accessing the test images in advance. Differently, transductive ZSOD is rarely explored, which utilizes unlabeled test images to assist model training. Furthermore, inductive ZSOD is categorized according to the type of semantic information: semantic attributes and textual description. The former type utilizes the semantic attributes (word vector) as the auxiliary semantic information to represent each class. In contrast, the latter type utilizes the textual description (e.g., a description sentence for an image or a class) as the auxiliary semantic information. This section gives a comprehensive introduction to semantic-attributes-based inductive ZSOD (standard ZSOD). Textual-description-based inductive ZSOD and transductive ZSOD will be discussed in the later sections.

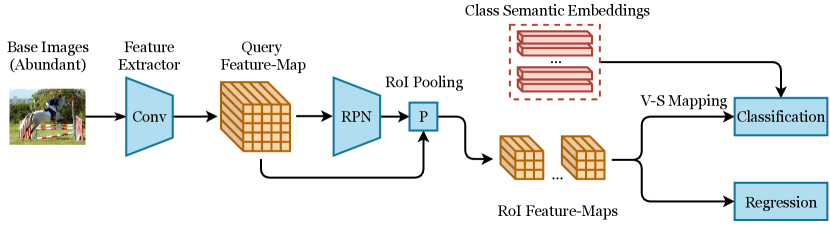

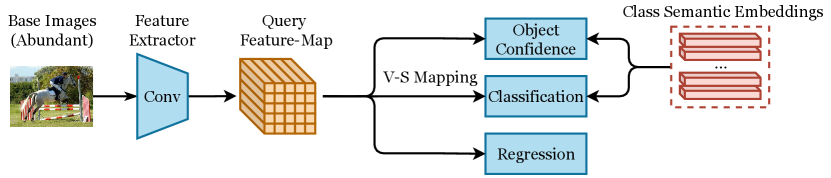

Current semantic-attributes-based inductive ZSOD methods adopt Faster R-CNN or YOLO-style model as the detection framework, as shown in Figure 8. Ankan Bansal et al. (Bansal et al., 2018) propose one of the earliest methods for semantic-attributes-based inductive ZSOD based on Faster R-CNN. This work first establishes a simple baseline built on Faster R-CNN, which uses a simple linear projection to project RoI features into semantic space and calculates the class probabilities of as the cosine similarities between the projected semantic embeddings and the semantic attributes of each class. As one of the earliest methods for ZSOD, this work sets up a benchmark adopted by many future works.

ZS-YOLO (Zhu et al., 2020b) is another early work for semantic-attributes-based inductive ZSOD based on YOLOv2. It projects each cell in the feature map into semantic embeddings for class prediction. Compared to the contemporaneous work (Bansal et al., 2018), ZS-YOLO adopts a different detection framework, and it does not require external training data and semantic embeddings of background class. However, these two methods are evaluated using different dataset settings, making it difficult to directly compare their performance.

As the forerunners of two mainstream detection frameworks for semantic-attributes-based inductive ZSOD, the above two methods (Bansal et al., 2018; Zhu et al., 2020b) are followed by many future works. The later methods mainly follow their framework with some extensions on different components of the framework. According to the modified components they focus on, this survey categorizes semantic-attributes-based inductive ZSOD methods into semantic relation methods, data augmentation methods, and visual-semantic mapping methods.

5.1. Semantic Relation Methods

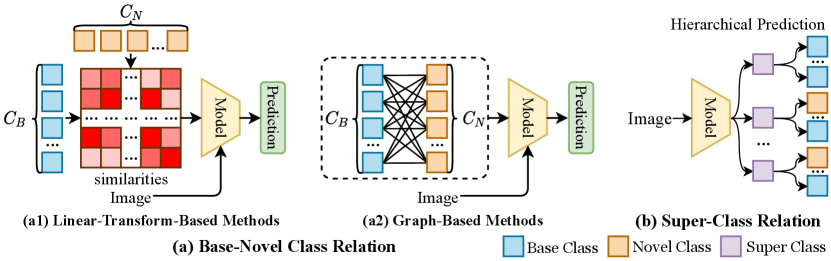

Semantic relation methods utilize the semantic relation between classes to detect objects of novel classes, which are further categorized into base-novel class relation and super-class relation. Methods based on base-novel class relation utilize semantic similarities between base classes and novel classes to transfer knowledge from base classes to novel classes. Methods based on super-class relation assume that there is a hierarchical relationship among categories, i.e., some similar classes can be grouped into a super-class (e.g., bed, sofa, and chair can be grouped into furniture), and they utilize this hierarchical relationship to assist prediction.

5.1.1. Base-Novel Class Relation

Methods based on base-novel class relation can be categorized into two types: linear-transform-based methods (TOPM-ZSOD (Shao et al., 2019), LSA-ZSOD (Wang et al., 2020b), DPIF (Li et al., 2021b)), and graph-based methods (SPGP (Yan et al., 2020), VSRG (Nie et al., 2022), CRF-ZSOD (Luo et al., 2020)). Linear-transform-based methods utilize the base-novel semantic relation to assist prediction through linear transforms of these semantic relations, and graph-based methods construct graphs with each node as a category, towards fully excavating the relation between base classes and novel classes through graph neural networks or conditional random fields.

Base-Novel Class Relation Linear-Transform-Based Methods. TOPM-ZSOD (Shao et al., 2019), LSA-ZSOD (Wang et al., 2020b), and DPIF (Li et al., 2021b) are all linear-transform-based methods to utilize base-novel class relation for ZSOD.

Base-Novel Class Relation Graph-Based Methods. SPGP (Yan et al., 2020), VSRG (Nie et al., 2022), and CRF-ZSOD (Luo et al., 2020) are graph-based methods to better excavate the relation between base and novel classes into the classification branch for higher performance.

Discussion of Methods Based on Base-Novel Class Relation. Linear-transform-based methods are simple approaches to directly utilize the base-novel semantic relation to assist prediction. However, linear transform does not fully excavate the base-novel relation for prediction, and it does not connect RoI features with the class semantic attributes together. Graph-based methods deeply excavate the relation between base classes and novel classes for prediction through graph neural networks or conditional random fields. Although they improve the performance through the graph structure modeling the relation between categories, they haven’t provided a quantitative analysis of whether the trained graph matches human intuition.

5.1.2. Super-Class Relation

Methods based on super-class relation (CG-ZSOD (Li et al., 2020a), JRLNC-ZSOD (Rahman et al., 2020b), ACS-ZSOD (Ma et al., 2020)) define some coarse-grained classes (super-classes) to cluster all classes into several groups, which separate the original classification problem into two sub-problems (coarse-grained classification and fine-grained classification).

Discussion of Methods Based on Super-Class Relation. These methods provide “free lunch” for the performance improvement of ZSOD, but they are unsuitable for situation where there is no hierarchical relationship between categories.

5.2. Visual-Semantic Mapping Methods

Visual-semantic mapping methods aim to find a proper mapping function to align visual features with the class semantic attributes. Visual-semantic mapping methods can be categorized into linear-projection-based methods (e.g., LSA-ZSOD (Wang et al., 2020b), DPIF (Li et al., 2021b), ZSDTR (Zheng and Cui, 2021)), weighted-combination-based methods (HRE-ZSOD (Demirel et al., 2018)), inverse-mapping methods (MS-ZSOD (Gupta et al., 2020), CCFA-ZSOD (Li et al., 2022c), SMFL-ZSOD (Li et al., 2021d)), auxiliary-loss-based methods (ContrastZSOD (Yan et al., 2022), VSA-ZSOD (Rahman et al., 2020a)), external-resource-based methods (CLIP-ZSOD (Xie and Zheng, 2022), BLC (Zheng et al., 2020)).

Linear-Projection-Based Methods. The earliest ZSOD method (Bansal et al., 2018) adopts this simplest visual-semantic mapping method that projects visual features into semantic space through a linear projection, which is followed by many ZSOD methods (e.g., LSA-ZSOD (Wang et al., 2020b), DPIF (Li et al., 2021b), ZSDTR (Zheng and Cui, 2021)). These methods are mostly based on CNN backbones, and only ZSDTR adopts DETR (Carion et al., 2020) (a vision-transformer-based detector) which projects the proposal encodings into semantic space.

Weighted-Combination-Based Methods. HRE-ZSOD (Demirel et al., 2018) calculates the semantic embeddings of the RoI feature as the weighted combination of different semantic attributes from all base classes according to their classification scores, as shown in Equation 9 ( denotes the probability that this RoI is predicted to be the base class ).

| (9) |

Inverse-Mapping Methods. Inverse-mapping methods (MS-ZSOD (Gupta et al., 2020), CCFA-ZSOD (Li et al., 2022c), SMFL-ZSOD (Li et al., 2021d)) conversely project class semantic attributes into visual space to align the class semantic attributes with the visual features.

Auxiliary-Loss-Based methods. Auxiliary-loss-based methods (ContrastZSOD (Yan et al., 2022), VSA-ZSOD (Rahman et al., 2020a)) propose some auxiliary losses to facilitate the visual-semantic mapping.

External-Resource-Based Methods. These methods utilize external resources (CLIP-ZSOD (Xie and Zheng, 2022), BLC (Zheng et al., 2020)) to better project visual features into semantic space. Specifically, CLIP-ZSOD utilizes a strong pre-trained CLIP model (Radford et al., 2021) for visual-semantic mapping, and BLC adopts external vocabulary for visual-semantic mapping.

5.3. Data Augmentation Methods

Data augmentation methods aim to generate multiple visual features for novel classes to mitigate the data-scarcity problem. The generated features are used to re-train the classifier of the detection model. Early data augmentation methods (DELO (Zhu et al., 2020a)) train a conditional generator with some auxiliary losses for data generalization, and later methods (GTNet (Zhao et al., 2020), SYN-ZSOD (Hayat et al., 2020), RSC-ZSOD (Sarma et al., 2022), RRFS-ZSOD (Huang et al., 2022)) all adopt GAN (generative adversarial network).

DELO (Zhu et al., 2020a) adopts a conditional generator to synthesize visual features for novel classes. Specifically, the generator consists of an encoder to extract the latent features of the corresponding semantic embeddings, and a decoder to synthesize the visual features from the latent features. DELO adopts the conditional VAE loss to train this generator, including a KL divergence loss and a reconstruction loss. Besides, it proposes three additional losses to encourage the consistency between the reconstructed visual features and the original visual features.

GTNet (Zhao et al., 2020), SYN-ZSOD (Hayat et al., 2020), RSC-ZSOD (Sarma et al., 2022), and RRFS-ZSOD (Huang et al., 2022). These methods all adopt GAN (generative adversarial network) to generate visual features for novel classes. The GAN consists of a generator to synthesize visual features and a discriminator to determine whether the visual features are synthesized or not. These methods propose some elaborated extensions on this framework respectively.

Discussion of Data Augmentation Methods. Data augmentation methods directly tackle the data-scarcity problem in ZSOD in an intuitive way. Actually, data augmentation methods can be seen as the inverse of visual-semantic mapping methods (i.e., mapping the class semantic attributes back into visual features). An important difference is that data augmentation methods incorporate intra-class variance into this mapping process, i.e., these methods generate different image features from different random noises for the same class. However, these methods can only synthesize visual features instead of visual samples (in the input pixel space), making it hard to interpret or visualize the synthesized samples. Besides, it is possible to substitute these methods by inverting the visual-semantic mapping functions.

6. Extensional Zero-Shot Object Detection

6.1. Open-Vocabulary Object Detection

Conventional ZSOD only learns to align visual features with semantic information for detection from a small set of base classes () and generalizes to the novel classes (), while Open-Vocabulary Object Detection (OVD) first accesses a much larger dataset (consisting of massive image-text pairs from multiple classes ) to train a stronger visual-semantic mapping function for multiple classes (intersecting with the base and novel classes for the later ZSOD task). We provide a detailed analysis of OVD in section S3 of the supplementary online-only material.

6.2. Textual-Description-Based Inductive ZSOD

Previous ZSOD methods use semantic attributes as semantic information to represent each class. Instead, textual-description-based methods use textual description as semantic information. Currently, only a few methods uncover textual-description-based inductive ZSOD, and they use different types of textual-description: class textual description (description text for each class) and image textual description (description text for each image).

Methods Based on Class Textual Description. ZSOD-TD (Li et al., 2019) adopts textual description to represent each class instead of semantic attributes (e.g., “stripe, equid” is used to describe zebra). ZSOD-TD projects the RoI features into semantic embeddings and makes predictions by comparing them with the features extracted from textual description.

Methods Based on Image Textual Description. In addition to the class textual description, ZSOD-CNN (Zhang et al., 2020c) adopts textual description to represent each image (e.g., “A bathroom with a sink and three towels.”), which also adopts Faster R-CNN as the detection framework. It uses a text CNN to extract text features, and concatenates the RoI features with the text features for further predictions. Besides, this method utilizes the OHEM technique to select hard samples for model training. During testing, it predicts the classification scores of novel classes according to those of base classes according to the semantic similarities between base and novel classes.

6.3. Transductive ZSOD

Transductive ZSOD (Rahman et al., 2019). Transductive ZSOD is an extended setting of inductive ZSOD, which incorporates unlabeled test images into model training. Rahman et al. (Rahman et al., 2019) propose the first work to uncover transductive ZSOD, which conducts transductive learning on a pre-trained ZSOD model. For transductive learning, it applies a pseudo-labeling paradigm on the unlabeled data, including a fixed pseudo-labeling step to generate fixed pseudo-labels for base classes using the pre-trained model, and a dynamic pseudo-labeling step to generate pseudo-labels for both base classes and novel classes iteratively. This work is the first to explore transductive learning on ZSOD, which shows promising potential for significant performance improvement, as other transductive methods in few-shot image classification.

7. Popular Benchmarks For Low-Shot Object Detection

7.1. Dataset Overview

In three settings (i.e., OSOL, FSOD, and ZSOD) of LSOD, the classes of the dataset are all split into two types: base classes with large labeled samples and novel classes with few or no labeled samples. The mainstream benchmarks for Low-Shot Object Detection are modified from widely-used object detection datasets like the PASCAL VOC dataset, MS COCO dataset. This survey summarizes the basic information of mainstream benchmarks for LSOD in Table 2 but omits some rarely-used benchmarks since they are not representative. In this table, the number of base classes, the number of novel classes, and the number of labeled samples per category for each benchmark are recorded. Moreover, split number denotes the number of category split schemes for each benchmark.

7.2. Evaluation Criteria

OSOL. OSOL has a guarantee that the model knows precisely the object classes contained in each test image. For each test image in the test stage, OSOL randomly samples one support image for each category existing in this image to locate the objects of this category and average their accuracy scores as the final results.

FSOD. Different from OSOL, FSOD methods randomly sample a small set of support samples for the whole test set instead of only one image. For the K-shot setting, some methods like LSTD (Chen et al., 2018) sample K support images for each novel category. This sampling strategy is not ideal since the number of objects in the images may differ. Current methods mostly sample K bounding boxes for each novel category instead, and this survey records the performance of FSOD methods under this setting. Early FSOD methods mostly adopt the support samples released by FSRW (Kang et al., 2019) for fair performance comparison, which are sampled only once. TFA (Wang et al., 2020a) samples support samples multiple times to obtain the average performance of the model. Currently, newly proposed FSOD methods mostly adopt this multiple sampling strategy to obtain more accurate performance.

ZSOD. ZSOD methods adopt two evaluation criteria for model performance comparison. The first criterion evaluates the model on a subset of test data that contains only objects of novel classes (ZSOD). The second setting, generalized ZSOD (GZSOD), evaluates the model on the complete test data, requiring the model to detect objects of both base classes and novel classes. Generalized ZSOD separately computes the mean average precision and recall of base classes and novel classes and uses a harmonic average to generate the average performance.