11email: [email protected] ✉, [email protected]

22institutetext: Bio-Inspired Robotics Laboratory, Department of Engineering,

University of Cambridge, United Kingdom 22email: [email protected]

A Study of Fitness Gains in Evolving Finite State Machines

Abstract

Among the wide variety of evolutionary computing models, Finite State Machines (FSMs) have several attractions for fundamental research. They are easy to understand in concept and can be visualised clearly in simple cases. They have a ready fitness criterion through their relationship with Regular Languages. They have also been shown to be tractably evolvable, even up to exhibiting evidence of open-ended evolution in specific scenarios. In addition to theoretical attraction, they also have industrial applications, as a paradigm of both automated and user-initiated control. Improving the understanding of the factors affecting FSM evolution has relevance to both computer science and practical optimisation of control. We investigate an evolutionary scenario of FSMs adapting to recognise one of a family of Regular Languages by categorising positive and negative samples, while also being under a counteracting selection pressure that favours fewer states. The results appear to indicate that longer strings provided as samples reduce the speed of fitness gain, when fitness is measured against a fixed number of sample strings. We draw the inference that additional information from longer strings is not sufficient to compensate for sparser coverage of the combinatorial space of positive and negative sample strings.

Keywords:

evolutionary computation finite state machines regular languages.1 Introduction

In all areas of computing inspired by biological evolution, the relationship between the parameters of selection and increases of fitness measures over time is an important factor. This applies to genetic programming, evolutionary computation, evolutionary artificial intelligence, or artificial life. In genetic programming, this relationship has been studied with respect to the speed at which a desired solution can be derived [1]. In artificial life, this relationship is connected to the arising of complexity (being an enabler of fitness) [2]. For constrained optimisation problems, the rate of change of selection parameters over time has been seen to enhance the performance of genetic algorithms [3]. Diversity, which can be seen as another aspect of selection (as a result of the severity of selection) has been extensively studied for its effects on fitness gains [4]. We investigate an example of the relationship between selection parameters and a fitness measure’s increase over generations.

1.1 Background

Understanding the relationship between selection function and adaptive performance benefits from simplified models, such as the constructs of automata theory. For the levels of automata from Finite State Machines (FSMs) up to deterministic Turing Machines, successful evolution to recognise formal languages has been reported [5].

Finite State Machines (FSMs, also known as deterministic finite automata or DFAs) are one of the simplest such classes of entities. They have several advantages as a model of study:

-

•

They are simple in concept;

-

•

their structure can be communicated using clear diagrams (for low numbers of states);

-

•

their complexity can be measured unambiguously; and

-

•

measures of how well an FSM recognises a regular language provide readily comprehensible fitness metrics.

For these reasons, FSMs have been a viable research tool in constructing simplified analogies reflecting aspects of biological evolution. For example, Rasek et al. [6] have proposed a definition of FSM-species and studied their formation. In another study, Moran and Pollack [7] have created simple ecosystem models of lineages of FSMs interacting in competitive and cooperative modes. They found that some results exhibit indications of open-endedness, i.e. the absence of an apparent upper bound to evolving complexity.

However, seemingly unbounded increases in complexity of evolving computational entities is the exception, rather than the rule. The review of the literature by Packard et al. [8] states the realisation that evolutionary simulations and genetic algorithms tend to approach plateaus of complexity. In other words, the complexity of evolved solutions to a problem posed tends to level out as generations succeed each other. Most commonly, a state is reached where few novel solutions of higher complexity are generated by such algorithms.

1.2 Our contribution

On the one hand, the literature documents plateaus of evolution under fixed selection parameters. On the other, there are examples of (at least an impression of) open-endedness with more complex regimes such as co-evolution of three or more interacting lineages. This suggests a need for better understanding of the influence of the selection regime on the evolution of FSMs, especially in the case of several simultaneous selection pressures.

In order to investigate this, we constructed an experimental setup using two simple selection pressures acting in opposite directions:

-

•

maximise the correct recognition of strings in or out of a language, and

-

•

minimise the number of states in the FSMs.

We use this setup to study the effect of an aspect of selection on fitness gain.

A good metric for the complexity of regular languages is their ”state complexity” [9]. Unlike other proposed measures (see [10]), state complexity relates directly to the resources needed for recognition. The family of languages used in this study has one language for each state complexity value. This provides control over the selection pressure promoting the addition of states over generations.

The remainder of the paper is organised as follows: Section 2 provides definitions for the key concepts we have combined in our study, and the way these experiments have applied these ideas. Section 3 presents the algorithms for creation of sample sets, managing the process of evolution, and the mutation operator. Section 4 describes our experiments and their results. Section 5 includes discussion and interpretation of the results. Finally, section 6 presents our conclusions and proposes future work.

2 Preliminaries

We summarise the elements we have drawn on in the experimental configuration: FSMs, the universal witness family of regular languages, and the way we have combined conflicting selection pressures.

2.1 Finite State Machines

A Finite State Machine is a 5-tuple , where

-

•

is a set of states;

-

•

is a set of symbols used as an input alphabet;

-

•

is a transition function from one state to another depending on the next input symbol;

-

•

is the starting state; and

-

•

is the subset of accepting states

The transition function can be extended to describe what state the machine transitions to if given each symbol of a string in succession. Using for the empty string, and to denote the symbol followed by the trailing sub-string , we can define the extended transition function :

| (1) | ||||

| (2) |

An FSM ”accepts” a string if the extended transition function maps it from the starting state to an accepting state, i.e. the following is true:

| (3) |

The set of strings accepted by an FSM is termed its language; the machine is said to ”recognise” the language. For a machine , we define its language as:

| (4) |

The languages recognisable by FSMs are the ”regular” languages, i.e. those that can be described by a regular expression [11]. Each regular language is recognised by a set of FSMs which are equivalent in that for any string, each of them produces the same result of acceptance or rejection. The lowest number of states of any FSM recognising a regular language is termed the ”state complexity” of the language, as explained by Yu [9]. For an algorithm to compute the state complexity from any equivalent FSM, see [12].

2.2 The languages as drivers of complexity

To be able to run experiments with a range of language complexities, we looked for a family of languages that differed primarily in their complexity only, and preferably only included one member language for each state complexity value. Both or these criteria are met by the ”universal witness” languages described by Brzozowski [13], which we use here. This is a family (or ”stream”) of languages , which has one member for any , which are examples (”witnesses”) of the upper bounds of state complexity in a number of theorems concerning the complexity of FSMs. In particular, it has the property that cannot be recognised by an FSM of less than states.

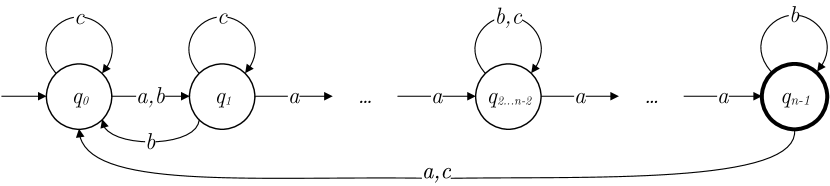

Each of the languages has a corresponding FSM that accepts it. The general structure of these FSMs is shown in Figure 1. The formal definition is as follows:

2.2.1 Definition of

- for , the FSM is a quintuple , where is the set of states, is the alphabet, is the initial state, is the set containing the one accepting state, and is:

;

; ; for ;

; for .

The proportion of strings from the ternary alphabet that are members of the languages decreases with increasing , as shown in Table 1.

| Up to | All strings | |||||||

|---|---|---|---|---|---|---|---|---|

| length | # | % | # | % | # | % | # | % |

| 7 | 3,280 | 100% | 656 | 20% | 490 | 15% | 320 | 10% |

| 8 | 9,841 | 100% | 1,968 | 20% | 1,452 | 15% | 1,122 | 11% |

| 9 | 29,524 | 100% | 5,904 | 20% | 4,280 | 15% | 3,616 | 12% |

| 10 | 88,573 | 100% | 17,714 | 20% | 12,688 | 14% | 11,040 | 13% |

| 11 | 265,720 | 100% | 53,144 | 20% | 37,874 | 14% | 32,640 | 12% |

| 12 | 797,161 | 100% | 159,432 | 20% | 113,548 | 14% | 95,042 | 11% |

| 13 | 2,391,484 | 100% | 478,296 | 20% | 340,992 | 14% | 276,016 | 12% |

| 14 | 7,174,453 | 100% | 1,434,890 | 20% | 1,024,128 | 14% | 806,000 | 11% |

| 15 | 21,523,360 | 100% | 4,304,672 | 20% | 3,074,490 | 14% | 2,375,360 | 11% |

| 16 | 64,570,081 | 100% | 12,914,016 | 20% | 9,225,836 | 14% | 7,065,762 | 11% |

2.3 Conflicting objectives: sample set recognition vs state count

The two objectives we combine in the selection applied in this study are:

-

1.

”linguistic fitness” - maximising the correct classification by FSMs of strings as being in or out of a language; and

-

2.

minimising the number of states of the FSMs.

The first objective favours more states, as more states can embody more complex information about the target language. The second objective selects for fewer states. In this way, the two objectives counteract each other.

To quantify the fitness of an FSM as an acceptor of a language, we use its performance in accepting or rejecting strings in two sample sets, positive (strings in the language) and negative (strings not in the language).

In this study, the ”linguistic fitness” of an FSM against a set of strings to be accepted and a set of strings to be rejected, is the total of correctly accepted and correctly rejected examples:

| (5) |

The maximum value of for an FSM is the total size of the positive and negative reference sets and . The fitness gains we study are specifically the increases of this linguistic fitness over time.

A genetic algorithm selecting for multiple objectives needs to decide which of a set of candidates to favour in the reproductive pool, based on how well they satisfy each of the objectives. As described by Neumann [14], this can be expressed in a ”dominance” relationship combining the two objectives.

In this study, we combine the objectives of maximisation of fitness and minimisation of states. The former is measured by a function ( and being the positive and negative sample sets), the latter by the number of states, .

This paper uses the two relations ”weakly dominates” (denoted by the symbol ) and ”dominates” (denoted by ) between FSMs X and Y:

Informally, X weakly dominates Y if it is no worse than Y on either fitness or complexity, and X dominates Y if it is no worse on either fitness or number of states, but better on at least one of the two.

3 Algorithms

3.1 Sample set generation methods

We use two methods of generating sample sets of positive and negative examples. In both methods, the positive and negative sets contain 500 strings each, for a total of 1,000 strings. The two methods labelled and , are defined below.

The reason for equal numbers of positive and negative examples (regardless of the percentage of strings of a given length that are in versus out of the language) is technical. An FSM of a single state can either accept all strings or rejects all strings. Its fitness score therefore would be the number of positive and negative samples respectively, but its complexity is the same in either case. Using the same number of positive and negative examples provides a common baseline of performance for minimal complexity.

3.1.1 method

The method (for ”balanced short strings”) generates positive and negative sets that have short strings, with the lengths of positive and negative examples being balanced, i.e. neither the positive nor the negative subset favours longer strings. The sample sets are generated by Algorithm 1.

3.1.2 method

To investigate the effect of longer sample strings, we devised the method (for ”Random, less than or equal to ”). This constructs positive and negative sample sets of equal size, from strings drawn uniformly at random from all strings over the alphabet of the target language, of length zero through . The method is implemented by by Algorithm 2.

3.2 The evolution algorithm

The study of complexity in multi-objective genetic programming by Neumann [14] describes the SMO-GP algorithm (Simple Multi-Objective Genetic Programming). It starts with a population of one individual. In each iteration, SMO-GP selects one member of the population, applies a mutation operation, and then either replaces all dominated individuals with the (single) new mutant, or drops the mutant if it does not dominate any existing members.

For this study, we adapted this algorithm is adapted to evolve FSMs starting with the simplest possible FSM, which we call , see Algorithm 3. is an FSM of a single non-accepting state, with the transition function mapping all inputs back to that state. This is in a sense the simplest possible FSM, and corresponds to the empty language, as it rejects all strings. (An FSM accepting all strings, i.e. accepting , would be equally simple.) The formal definition of such an FSM for a ternary alphabet is:

| (6) |

Note that half the 1,000 sample strings will not be elements of the language, so the FSM by rejecting them will achieve a linguistic fitness score of 500. The only way for a new mutant to enter the population is to weakly dominate an existing FSM in the population. This can come about by having higher fitness or fewer states, but no FSM can have less states than . Therefore, all other FSMs that will become part of the population will score at least 500.

In some preliminary work, we also experimented with starting evolution towards not with , but instead with the result of evoluition over a set number of generations toward . This approach did not produce any notable patterns correlating the starting point with improved speed of adaptation to higher fitness, and we did not pursue it further.

3.3 The mutation operation

The mutation operation used on FSMs selects one random state for the source of an arc, and a random input symbol from the alphabet for the label of the arc. A target state is then selected; half the time, this is an existing state of the FSM, otherwise it is a new state which has all outgoing arcs leading back to itself.

The FSM is then modified so that the arc from the source state, labelled with the chosen input symbol, is set to lead to the chosen target state.

Lastly, a random state is chosen and changed from accepting to non-accepting and vice versa. For details, see Algorithm 4.

4 Experimental results

Table 2 shows the mean fitness scores observed after given numbers of generations, under four different methods of generating sample sets for the language, across 30 runs with different randomisation seeds. Figure 2 shows a more detailed set of observations for the four methods.

The relative position of the data series in Figure 2 made us curious about whether a general trend might exist for using longer strings in sample sets to lead to slower evolution of better recognisers. We repeated the experiment for and ; results are shown in Figure 3. Note that the sampling was adjusted for and . In the experiment, we generated 500 positive and 500 negative sample strings, for each of , , and . However, the languages and do not contain 500 strings of at most 7 characters. In order to create comparable sample sets for and , we used , , and .

| Mean score | ||||

|---|---|---|---|---|

| Generation | ||||

| 100,000 | 912.67 | 845.47 | 826.37 | 823.83 |

| 200,000 | 941.03 | 854.90 | 836.57 | 826.33 |

| 500,000 | 960.63 | 898.60 | 841.90 | 833.87 |

| 1,000,000 | 986.80 | 938.73 | 856.80 | 851.03 |

| 2,000,000 | 1000.00 | 969.60 | 871.70 | 894.30 |

| 5,000,000 | 1000.00 | 986.97 | 898.97 | 923.57 |

| 10,000,000 | 1000.00 | 1000.00 | 917.87 | 951.63 |

5 Discussion

A pattern of higher used in sampling leading to slower improvement of recognition fitness is seen in all three Figures summarising the generated data. This observation suggests that selection by using longer strings provides less relevant information towards recognising the language than selection by shorter strings. This is perhaps counter-intuitive. Longer sample strings of necessity embed more information about the language in question, on a per string basis.

A possible explanation may be that as we increase in , a fixed-size sample set (e.g. 500 positive and 500 negative strings) is a smaller percentage of all strings of length up to . For a long string, a larger set of its leading substrings is likely to be missing from the sample set. So, a mutant FSM may randomly be correct in its categorisation of the long string, while not correctly categorising its prefixes. This implies that a gain of fitness under these circumstances is less likely to lead to further gains of fitness, compared to sample sets that are a more comprehensive coverage of shorter strings in the language.

In other words, the information about the language that is embedded in the sample set is less representative at higher values of in . With less comprehensive coverage of examples for higher in , the positive and negative sets of examples may become more akin to random strings rather than providing selection conducive to higher linguistic fitness in the next generation.

Although these experiments only investigated evolution against sample sets of through , for further members of the series, growth in the number of FSM states may be an important factor. As it is known that a perfect recogniser for needs to have at least states, we would expect that the growth in the number of states would be an important factor in enabling the evolution of better recognition for higher values of in .

Whether this finding transfers to other languages, and if so, whether it may be able to be generalised to other automata or evolutionary scenarios, is not answered by our study. Even within the languages, we have only investigated low values of . Further, languages with significantly different structures may behave differently. Algorithm 3 uses very small populations, so the effect of diversity on our observation is also an open question.

A potential application of our observation, if generalised, may be to find better solutions in fewer generations in evolutionary optimisation.

6 Conclusion

Under the experimental scenario we used, extending the sample sets of training strings to longer string lengths tends to slow down the achievement of higher fitness scores. A fixed size of sample set, drawn from pools of strings that increase in size as maximum length is extended provides less directed selection pressure. It appears that this is not counteracted by the additional information embedded in longer sample strings, possibly because gains of fitness may occur with a lower likelihood of leading to further gains of fitness, using the same fitness function.

This work was based on observation of the languages, for low values of the state complexity . It would be interesting to investigate whether these observations hold for: - with higher values of , or - for other languages arranged in a sequence of increasing state complexity, or - when the linguistic fitness function shifts from to .

Another fruitful avenue of further work may be to relax the criteria governing retention of FSMs in the population, and observe the effect of populations with higher diversity interacting with rising string lengths in the sample sets.

7 Acknowledgements

This work was supported by the Commonwealth of Australia. Frank Neumann was supported by the Australian Research Council through grant FT200100536.

References

- [1] Roostapour, V., Neumann, A., Neumann, F.: Single- And Multi-Objective Evolutionary Algorithms For The Knapsack Problem With Dynamically Changing Constraints. Theoretical Computer Science, vol. 924, pp. 129-147 (2022).

- [2] Standish, R.: Open-Ended Artificial Evolution. International Journal of Computational Intelligence and Applications, 3(02), pp. 167–175 (2003).

- [3] Kazarlis, S., Petridis, V.: Varying Fitness Functions In Genetic Algorithms: Studying The Rate Of Increase Of The Dynamic Penalty Terms. In: Eiben, A., Bäck, T., Schoenauer, M., Schwefel, H. (eds.) Parallel Problem Solving from Nature — PPSN V, pp. 211–220. Springer, Berlin/Heidelberg (1998).

- [4] Gabor, T., Phan, T., Linnhoff-Popien, C.: Productive Fitness In Diversity-aware Evolutionary Algorithms. Natural Computing 20, pp. 363–-376 (2021).

- [5] Naidoo, A.: Evolving Automata Using Genetic Programming. Master’s thesis, University of KwaZulu-Natal, Durban, South Africa (2008).

- [6] Rasek, A., Dörwald, W., Hauhs, M., Kastner-Maresch, A.: Species Formation In Evolving Finite State Machines. In: Floreano, D., Nicoud, JD., Mondada, F. (eds.) Advances in Artificial Life. ECAL 1999. LNCS, vol 1674, pp. 139–148. Springer, Berlin/Heidelberg (1999).

- [7] Moran, N., Pollack, J.: Evolving Complexity In Prediction Games. Artificial Life, vol. 25, pp. 74–91 (2019).

- [8] Packard, N., Bedau, M., Channon, A., Ikegami, T., Rasmussen, S., Stanley, K., Taylor, T.: An Overview Of Open-ended Evolution: Editorial introduction to the open-ended evolution II special issue. Artificial Life, vol. 25, pp. 93-–103 (2019).

- [9] Yu, S.: State Complexity Of Regular Languages. Journal of Automata, Languages and Combinatorics, vol. 6, no. 2, pp. 221–234 (2001).

- [10] Ehrenfeucht, A., Zeiger, P.: Complexity Measures For Regular Expressions, Journal of Computer and Systems Sciences, vol. 12, pp. 134–-146 (1976).

- [11] Sipser, M.: Introduction To The Theory Of Computation, pp. 55–76. PWS Publishing Company, Boston, MA (1997).

- [12] Hopcroft, J.: An log Algorithm For Minimizing States In A Finite Automaton, Stanford University, Stanford, CA, (1971).

- [13] Brzozowski, J.: In Search of Most Complex Regular Languages. In: Moreira, N., Reis, R. (eds.) Implementation and Application of Automata, LNCS, vol. 7381, pp. 5–24. Springer, Berlin/Heidelberg (2012).

- [14] Neumann, F.: Computational Complexity Analysis of Multi-Objective Genetic Programming. In: GECCO ’12: Proceedings of the 14th annual conference on Genetic and Evolutionary Computation, pp. 799–806. Philadelphia, Pennsylvania, USA (2012).