A structure preserving numerical scheme for Fokker-Planck equations of structured neural networks with learning rules

Abstract

In this work, we are concerned with a Fokker-Planck equation related to the nonlinear noisy leaky integrate-and-fire model for biological neural networks which are structured by the synaptic weights and equipped with the Hebbian learning rule. The equation contains a small parameter separating the time scales of learning and reacting behavior of the neural system, and an asymptotic limit model can be derived by letting , where the microscopic quasi-static states and the macroscopic evolution equation are coupled through the total firing rate. To handle the endowed flux-shift structure and the multi-scale dynamics in a unified framework, we propose a numerical scheme for this equation that is mass conservative, unconditionally positivity preserving, and asymptotic preserving. We provide extensive numerical tests to verify the schemes’ properties and carry out a set of numerical experiments to investigate the model’s learning ability, and explore the solution’s behavior when the neural network is excitatory.

Key words: Fokker-Planck equation, Nonlinear Noisy Leaky Integrate-and-Fire model, neuron network, structure preserving scheme, asymptotic preserving, multiscale model.

AMS subject classifications: 35M13, 65M08, 92B20.

1 Introduction

In biological neural networks, neurons transmit information in two ways: one is conveying rapid and transient electrical signals on their membranes, and the other is sending neurotransmitters at a synapse. The learning and memory of the network is based on the connection weights of the synapses among the neurons [1]. How a neural network with a given structure reacts to the environment and how the neural network’s infrastructure is modified by the environment in the long run are two of the essential topics in neural science.

Mathematically, there has been plenty of models describing the neural networks’ behavior from all scales and sizes. The most successful single-neuron model is undoubtedly the Hodgkin-Huxley model [2] and its simplification, the Fitzhugh-Nagumo model [3]. However, when modeling large-scale biological neural networks, these models are far too complicated and impractical. Therefore, scientists and mathematicians usually adopt the integrate-and-fire model [4] when modeling the system that consists of a large number of neurons [5, 6, 7, 8, 9, 10]. One of the most widely used version of the integrate-and-fire model is the nonlinear noisy leaky integrate and fire model (NNLIF). In this model, when the firing event does not occur, the membrane potential of a neuron is influenced by a relaxation to a resting potential , an incoming synaptic current from other neurons and the background. The current is approximately decomposed into a drift term and a term of Brownian motion. The SDE, in the simplest form, is given by

| (1.1) |

where the parameters and are determined by the synaptic current . The distinguished feature of this model is the incorporation of the firing event: when a neuron reaches a firing potential , it discharges itself and the potential jumps to a resetting potential immediately [11, 12]. Here, we assume, , and the firing event is expressed by

| (1.2) |

(1.1) and (1.2) constitute the stochastic process for the NNLIF model, which is a SDE coupled with a renewal process. By Itô’s formula, one can derive the time evolution of the density function [13, 14], which represents the probability of finding a neuron at the voltage and given time :

| (1.3) |

where

| (1.4) |

stands for the firing rate of the network, stands for the amplitude of the noise, stands for the Dirac delta function, and

| (1.5) |

stands for the rate of the rising of the neurons’ potential, consisting of a decay term , an external input function and a group reaction term . The coefficient in (1.5) can be either positive or negative. In the simplest scenario, is a constant. We say that the neural network is excitatory when , and inhibitory when .

When it comes to the learning behavior of the biological neural network, the most seminal work is the Hebbian rule [15] stated as a motto “neurons that fire together wire together”. A very simple mathematical setup of this rule is as follows: assuming that the strength of weights between two neurons and increases when the two neurons have high activity simultaneously. For neurons in interactions, the classical Hebbian rule relates the weights to the activity of the neuron as

In [16], the authors designed a model that integrates the NNLIF model and the Hebbian rule stated above. They considered a heterogeneous population of homogeneous neural networks structured by their synaptic weights , negative sign standing for inhibitory neurons and positive sign standing for excitatory neurons. They introduced a learning rule in order to modulate the distribution of synaptic weights and allow the network to recognize some given input signals by choosing an appropriate heterogeneous synaptic weight distribution adapted to the signal . They assumed that the subnetworks interact only via the total firing rate , with synaptic weights described with a single variable . And they gave the following interpretation: all the subnetworks parametrized by may modulate their intrinsic synaptic weight with respect to a function which depends on the intrinsic activity of the network parametrized by and of the total activity of the network . Then, the proposed generalization of the Hebbian rule consists in choosing

| (1.6) |

where represents the learning strength of the subnetwork with synaptic weight . Adding the above choice of the learning rule, they obtained the following equation

| (1.7) |

where stands for a time scale ratio which takes into account that learning is slower than the typical voltage activity of the network.

If we want to study the learning behavior of the model, we may further perform a time rescaling for (1.7) to arrive at

| (1.8) |

where means the left derivative in the direction and the corresponding initial and boundary conditions are

| (1.9) |

Here at a fixed time describes the probability density of finding a neuron with synaptic weight and voltage , henceforth the probability density of finding a single neuron with synaptic weight is defined as

| (1.10) |

and the normalization condition of probability ensures that holds for all . describes the firing rate of the neurons with synaptic weight , at time and describes the entire network’s firing rate at time summing over all the synaptic weights. is a positive noise coefficient. The model is a multi-scale transport equation, where the -wise transport represents the integrate-and-fire dynamics of the neural network, and the -wise transport represents the network’s learning behavior.

Systematic understanding of the model (1.8) is largely lacking, although some of its properties were proved in [16]. Particularly, the numerical studies are far from sufficient for understanding the possible uses and limitations of this model. In this work, we further explore this model from a numerical perspective. Specifically, we aim to design a structure-preserving numerical scheme for (1.8), and further explore this model with extensive numerical experiments to investigate possible solution structures and interpretations.

In our work, we propose a numerical scheme with the positivity preserving and asymptotic preserving properties. There are two key ingredients that make our scheme meet the desired properties. The first one is the Scharfetter-Gummel symmetric reconstruction [17] of the -wise convection and diffusion terms in (1.8). The second one is that we treat the flux shift term in (1.8) implicitly. With the symmetric reconstruction and this implicit treatment, we can prove that implementing our scheme means inverting a so-called -matrix at each time step, and the positivity preserving properties of the scheme is independent of the respective ratio between and or . The only grid ratio requirement is that has to satisfy the -wise CFL condition, which is a natural requirement. Furthermore, when letting without adjusting , we obtain a numerical scheme consistent with the asymptotic behavior of the model, consisting of a -wise convection equation for defined in (1.10) and a -wise quasi-steady equation for with given .

Numerical methods for Fokker-Planck type equations are abundant in the literature. In recent years many works have been proposed to preserve the structure of the solution such as mass conservation, positivity, entropy decay, and steady state preserving. This often relies on delicate choice of numerical fluxes and suitable time discretization. Without being exhaustive, we mention a few relevant works. Regarding spatial discretization, there are mainly two kinds of methods: one is based on formulating the equation into the transport form and using upwind fluxes [18]. This is later extended to general nonlinear aggregation and diffusion equations [19]. The other is based on formulating the equation into the diffusion form (i.e., Scharfetter-Gummel form). Depending on how to choose the fluxes, it bifurcates to several variants, for example, the Chang-Cooper scheme [20] and its generalization [21]; the symmetrized finite difference scheme [17], and more recently the high order finite difference scheme [22]. Regarding time discretization, high order explicit or semi-implicit schemes (c.f. [23]) can be used. However, we mention that to get positivity preserving implicit schemes for Fokker-Planck type equations, one is basically limited to first order scheme [24, 25, 26, 27]. The only exception we are aware of is the second order exponential method in [28] which is applicable to the linear Fokker-Planck equation and hard to generalize to more general cases. For the above reason, we limit ourself to first order method in this paper.

In the numerical experiment part, we verify the scheme’s order of accuracy (first order for , second order for ) and asymptotic preserving properties, test the model’s recognition properties presented in [16] and further explore the model’s behavior when the solution is supported in the region . The numerical results suggest that this model shows great potential in distinguishing a general input by producing distinct and unsymmetrical responses to orthogonal basis functions. When , the solution may either develop to a steady state supported in a region for some positive , or expand its support rapidly towards .

The rest of the paper is organized as follows. In Section 2, we present our schemes in detail, including the equations and their implementations; in Section 3, we give a detailed analysis of our schemes’ properties, including conservation, positivity preserving properties and asymptotic preserving properties; and in Section 4, we present the numerical results including the verification of the schemes’ accuracy, asymptotic preserving properties, numerical exploration of the model’s learning and recognition ability and the behavior of the model with positive synaptic weights.

2 The Schemes

Our work aims to provide a numerical scheme for (1.8). In this section, we will give a detailed description of the schemes we designed.

This section is organized as follows: in Section 2.1 and Section 2.2, we introduce the grid point settings and the numerical scheme for (1.8), including a -wise semi-implicit (-SI) implementation and a -wise fully-implicit (-FI) implementation; and in Section 2.3, we introduce the matrix form of the -SI and -FI schemes, from which we introduce a useful matrix to formulate further analysis.

2.1 Grid point settings and discretizations

Our scheme is a grid-based method, and this subsection gives the basic grid point settings of our scheme.

We assume that vanishes fast enough as or such that we truncate the domain into the bounded region

and suppose that is negligible out of this region. Then we divide the intervals , , into equal sub-intervals with size

| (2.1) |

respectively, so that the grid points are assigned as follows

| (2.2) |

then represents the numerical approximation of . We always assume that there is an index satisfying

| (2.3) |

which naturally treats the derivative’s discontinuity at . Actually it can also be seen from (2.1) and (2.2) that , so the two points related to flux shift are both aligned with the grid point.

For the discretization of , we approximate the derivative with the first-order finite difference

| (2.4) |

(here we have applied the boundary condition ); for the discretization of , we apply the simplest rectangular rule of numerical integration

| (2.5) |

which can also be explained as a trapezoidal rule of numerical integration since the boundary values and are supposed to be negligible (zero); furthermore, we also denote the discrete representation of as

| (2.6) |

2.2 The numerical scheme for the Fokker-Planck equation (1.8)

We design a scheme with a finite volume construction: for each , , , we have the difference equation

| (2.7) |

or, in an equivalent splitting form

| (2.8) |

| (2.9) |

This splitting form is introduced only for convenience when discussing the scheme’s properties in section 3.3. Our scheme is not based on the idea of operator splitting.

For the -wise transport, we take the following explicit flux construction adapted from Godunov’s Method (see, for example, (13.24) in [29]):

| (2.10) |

where

| (2.11) |

For the -wise transport, we need to treat the operator partially implicitly in an appropriate way such that the scheme’s stability is not limited by the grid ratio and the stiffness introduced by the smallness of . We inherit the idea from [30] which implements a Scharfetter-Gummel symmetric reconstruction for the convection-diffusion operator and imposes a flux-shift from the boundary back to , but we treat the flux shift operator implicitly:

| (2.12) |

where is the Heaviside function ( when and when ), and

| (2.13) |

Now to complete the scheme, the only thing that remains is to specify the expression of . As we shall see, the choice of will highly affect the implementations and properties of the scheme. Here we propose two choices of : either or . We call the scheme (2.7)~(2.13) with

the -wise semi-implicit (-SI) scheme, and the scheme (2.7)~(2.13) with

the -wise fully-implicit (-FI) scheme. The advantage of the -SI scheme is that its implementation is free of nonlinear solvers, and thus is the main focus of this work. The -FI scheme is mainly introduced for comparison.

To avoid ambiguity, when discussing the -SI scheme, we add a superscript ”SI” for all the variables, which will be put at the first place and separated with other superscripts by a semicolon:

and a similar set of notations will be introduced for the -FI scheme:

We still use when discussing the properties that the -SI scheme and the -FI scheme have in common (like the conservation and positivity preserving property).

It is worth noting that, in (2.12), the flux-shift part is for both the -SI and -FI construction of the scheme. This is an apparently small but important difference from the scheme proposed in [30]. This difference will be proved to make the scheme (unconditionally) positivity preserving for all and .

2.3 The matrix problem involved in the schemes and the iterative methods for the -FI scheme

In this subsection, we introduce the matrix equation one will face when implementing the proposed schemes. To begin with, we define the class of matrices which will frequently appear throughout our work:

Definition 1

For a given and , we define the matrix as an matrix such that

| (2.14) |

where

| (2.15) |

is tridiagonal except for one entry at , , and its properties will be further discussed in section 3.2. For now, having defined , we can write the matrix form of the proposed schemes.

For the -SI scheme, assume that we have already solved from the explicit convection step (2.8), then one easily checks444The only notable detail here is that in (2.12) should be replaced with according to (2.4). that solving the -wise transport step (2.9) means solving the system

| (2.16) |

for each , where

is the identity matrix and follows the definition given in (2.14). (2.16) is a linear equation for .

For the -FI scheme, solving the -wise transport equation means solving the system

| (2.17) |

for each , where

(2.17) is a nonlinear system since the matrix depends directly on the unsolved variable . Therefore, we have to solve such a system with a nonlinear solver.

We propose a fix point iteration method as follows. We set the initial guess as , hence , then for , let

| (2.18) |

where

We iterate rounds to satisfy the stopping criterion for some small enough , and then take

3 The Properties of the Scheme

Having proposed the scheme in Section 2.2, we discuss how it preserves the properties of the original model.

We show that both the -SI and the -FI schemes proposed in Section 2.2 are mass-conservative and positivity-preserving as long as the -wise CFL condition is satisfied (no matter how small and is). As for the AP property, the -FI scheme and the -SI scheme preserve the asymptotic limit in slightly different forms.

3.1 Conservative properties

Being total mass invariant is one of the most basic properties of our model (1.8), and our scheme satisfies the conservation property naturally thanks to the finite volume construction.

3.2 Properties of the matrix

Before discussing the properties of our schemes, we firstly investigate the matrix defined in (2.14) and (2.15). This matrix has a one dimensional kernel spanned by a strictly positive vector. This property will help us to show the positivity of the schemes.

The main result in this section is

Lemma 1

Proof: We want to study the solution of

| (3.3) |

We left-multiply both sides of (3.3) by the bi-diagonal matrix

obtaining (note that is non-singular, so has the same kernel with ), which can be rearranged into the following system:

| (3.4) |

One can derive from (3.4) recurrently (in a reversed order, from to ) that can all be written as a positive multiple of whose expressions only contains entries of . This implies strict-positiveness and uniqueness (up to a positive number multiplication) of .

3.3 Positivity preserving properties

Solutions being non-negative is another basic property of model (1.8), hence we also expect our scheme to be positivity preserving. In Theorem 2.2 [30], it is proved that the scheme’s positivity preserving property relies on the grid ratio . In this section, we see that the positivity preserving property of our scheme does not rely on or , and this improvement comes from our implicit treatment to the flux-shift operator that is stated in Section 2.

We start with the general matrix form of our problem (both for the -SI scheme and for the -FI scheme):

| (3.5) |

where

A crucial property of system (3.5) is that the to-be-inverted matrix is the so-called M-matrix, which is an important topic in the area of positive operators. In [31], the authors provided forty equivalent statements that ensures a Z-matrix (a matrix with all diagonal entries non-negative and all non-diagonal entries non-positive) to be an M-matrix. We pick out the statement F16 and K33 in this article, forming the following lemma:

Lemma 2 (Equivalence of statements F16 and K33 of Theorem 1 in [31])

A semi-positive Z-matrix is an M-matrix, and henceforth monotone. Specifically, if a matrix satisfies

and there exists a strictly positive (every entry is positive) vector such that , then is a non-singular M-matrix, and henceforth implies . Here

- means that all the entries of are positive, and

- means that all the entries of are non-negative.

With Lemma 2, we can further prove the following Lemma:

Lemma 3

For (3.5), indicates .

Proof: Firstly, it is not hard to check that is a Z-matrix according to the definitions. Secondly, we notice that

since is in the kernel of , according to Lemma 2 (taking as ), the matrix is a non-singular M-Matrix and henceforth indicates .

Applying Lemma 3 and (2.8), we can give out the following sufficient conditions of the positivity preserving property of the proposed schemes

Theorem 2

(Positivity Preserving Properties) holds for all and as long as

| (3.6) |

holds for all .

(3.6) tells us that as long as the grid ratio is not too big, (which is the most basic requirement for almost all numerical schemes for hyperbolic conservation law, and is also known as the CFL condition), the numerical solution will be non-negative. Such a property is unrelated to the grid ratio or the scaling parameter . This means that we can take arbitrarily small and without worrying about violations of the positivity of the solutions.

Especially, as we can take arbitrarily small for a fixed , it becomes possible for us to futher analyze the asymptotic behavior of the scheme when .

3.4 Asymptotic preserving properties

We first discuss the asymptotic properties of the original continuous model. (1.8)

Integrating the first equation of (1.8) over from to and applying the boundary condition, we get

| (3.7) |

where is defined in (1.10). When taking in (1.8), we have

| (3.8) |

According to Theorem 3.1 in [16], the solution of equation (3.8) is graranteed to exist and be unique when is given. Equations (1.10), (3.7) and (3.8) gives the asymptotic limit equation of (1.8) as , where (3.7) can be viewed as the macroscopic equation governing the slow dynamics while (3.8) gives the local microscopic equilibrium of the fast dynamics. We consider our scheme with only time discretized

| (3.9) |

where is and for the -SI scheme and -FI scheme, respectively.

For the -FI scheme, integrating the first equation in (3.9) and applying the boundary conditions, we have

| (3.10) |

which is a discretization of (3.7); and when , (3.9) becomes

| (3.11) |

which is directly the same as (3.8).

For the -SI scheme, Integrating the first equation in (3.9) and applying the boundary conditions, we obtain (3.10) again, while taking in (3.9), we have

| (3.12) |

We remark that the local equilibrium (3.12) can be viewed as a linearization of (3.11) such that the nonlinear coefficient is replaced by ,

The schemes (3.10) and (3.12) can be viewed as the limiting scheme for the following time rescaled maybe “delayed” equation as

| (3.13) |

Therefore, the -SI scheme is asymptotic preserving up to a time delay. In other words, when , it is still a consistent first-order in time discretization to the limit of the original model.

4 Numerical Results

This section gives the numerical results obtained from our schemes, involving both the tests on the schemes’ performance and the explorations of the model by our scheme. In Section 4.1, we numerically test the order of accuracy of our schemes; in Section 4.2, we numerically validate the asymptotic preserving properties of our schemes; in Section 4.3, we test the model’s learning and discrimination abilitites; in Section 4.4, we focus on exploring the model’s behavior when the neural system is excitatory.

4.1 Order of Convergence

In this part, we test the order of accuracy of the -SI schemes. Since the exact solution is unavailable, we estimate the order of the error by

where is the numerical solution with step length . The term above is an approximation for the accuracy order. Errors in both and norms are examined.

We choose , and

throughout this section, and we test the accuracy for both and . We test the numerical results on directions by fixing two of and while adjusting the third one. The results are shown in Table 1, Table 2 and Table 3, respectively.

| 2.0e-1 | 1.1337e-03 | 2.0818 | 3.5479e-03 | 2.0675 |

| 1.0e-1 | 2.6781e-04 | 2.0122 | 8.4643e-04 | 2.0080 |

| 5.0e-2 | 6.6390e-05 | 1.9340 | 2.1044e-04 | 1.8739 |

| 2.5e-2 | 1.7374e-05 | - | 5.7417e-05 | - |

| 2.0e-1 | 1.4571e-01 | 1.8905 | 2.8929e-01 | 1.7984 |

| 1.0e-1 | 3.9299e-02 | 1.7441 | 8.3168e-02 | 1.7309 |

| 5.0e-2 | 1.1731e-02 | 1.7872 | 2.5056e-02 | 1.8471 |

| 2.5e-2 | 3.3990e-03 | - | 6.9641e-03 | - |

| 4.0e-2 | 4.2858e-03 | 0.9550 | 9.9587e-03 | 0.9543 |

| 2.0e-2 | 2.2108e-03 | 1.0038 | 5.1394e-03 | 1.0030 |

| 1.0e-2 | 1.1025e-03 | 0.9849 | 2.5643e-03 | 0.9801 |

| 0.5e-2 | 5.5703e-04 | - | 1.3000e-03 | - |

| 4.0e-2 | 4.5348e-01 | 1.0102 | 3.9149e-01 | 0.7353 |

| 2.0e-2 | 2.2514e-01 | 1.0095 | 2.3516e-01 | 0.7557 |

| 1.0e-2 | 1.1183e-01 | 0.9717 | 1.3928e-01 | 0.6145 |

| 0.5e-2 | 5.7022e-02 | - | 9.0971e-02 | - |

| 2.0e-3 | 3.8523e-04 | 0.9730 | 1.2219e-03 | 0.9647 |

| 1.0e-3 | 1.9625e-04 | 0.9686 | 6.2608e-04 | 0.9626 |

| 5.0e-4 | 1.0028e-04 | 1.0093 | 3.2125e-04 | 1.0089 |

| 2.5e-4 | 4.9819e-05 | - | 1.5963e-04 | - |

| 2.0e-3 | 4.9612e-03 | 0.9473 | 4.5521e-03 | 0.9676 |

| 1.0e-3 | 2.5729e-03 | 0.9884 | 2.3278e-03 | 0.9954 |

| 5.0e-4 | 1.2969e-03 | 0.9781 | 1.1677e-03 | 0.9895 |

| 2.5e-4 | 6.5836e-04 | - | 5.8810e-04 | - |

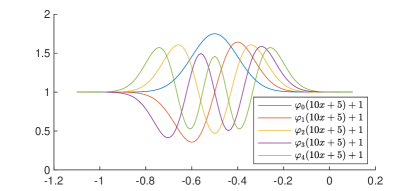

It can be seen that when , the scheme shows the expected second-order convergence rate in the direction, and the first-order convergence in directions. However, when , the - and - wise orders of accuracy decrease a bit. This may be caused by the -wise nonlinearity of the equation (1.8). Indeed, when the solution has already developed into a discontinuous pattern (Figure 1).

4.2 Asymptotic behaviors of the -SI and -FI schemes

In this subsection, we numerically validate the asymptotic preserving properties of both the -SI scheme and the -FI scheme by validating that with fixed grid size, the numerical solution of the scheme converge to the -wise quasi-steady state at the discretized level as .

Firstly we introduce a discretized version of the time-independent quasi-steady state equations (1.10) and (3.8) for a given . This will serve as a reference solution. We denote the solution of the continuous time-independent quasi-steady state equations (1.10) and (3.8) for a given as . Since (3.8) means that should nullify the stiff operator in (1.8), our discretized version can also be directly defined to nullify the stiff terms (order terms) in the main equation (2.7) in our scheme. For a given discretized grid function such that the -wise quasi-steady state in the discretized level and corresponding is defined to satisfy the following equations

| (4.1) |

| (4.2) |

| (4.3) |

| (4.4) |

To generate this discretized -wise quasi-steady state for a given with , one may solve the system

| (4.5) |

iteratively from some initial guess of , and take for sufficiently large that satisfies some stopping criterion like for some small enough .

The numerical experiments are conducted as follows:

-

1.

Fix all the scheme parameters except (these parameters include all the grid lengths, initial/boundary values and coefficients, especially, is fixed), and solve the scheme. The solution for a fixed is denoted as .

- 2.

-

3.

Plot versus for each (the norm is taken at a single time layer, chosen as norm in our numerical test). AP property means that after a short period of time (the length of which decreases with ), the difference between the solution and the corresponding discretized -wise quasi-steady state should drop to a small value which vanishes as , i.e.

(4.6)

In our simulations, we choose ,

| (4.7) |

and

| (4.8) |

We perform the aforementioned AP test for both the -FI scheme and the -SI scheme, choosing ranging from to , and the results are shown in Figure 2 and Figure 3, respectively.

From Figure 2, we can see that for the -FI scheme, the differences between and is decreasing when , we can even assert from the figure.

From Figure 3, we can see that for the -SI scheme, the differences between and is also decreasing when . However, this decreasing trend is halting when for and for , and further decrease of does not uniformly draw and closer. This can be explained by the time-delayed effect of the -SI scheme, which was presented in section 3.4. This time-delay effect introduces an difference between and , which will not further decrease when becomes a small number comparing to . We may say that when and when for the -SI scheme.

4.3 Learning and reacting

From this subsection on, we explore model (1.8) with our scheme. We always use the -SI scheme in the rest of this section.

In this subsection, we test the model’s learning and discrimination abilities. We let the model perform learning-testing tasks that was originally proposed in [16], containing a learning phase and a testing phase:

Learning Phase A heterogeneous input is presented to the system, while the learning process is active. The learning rule is determined for the inhibitory weights by by taking if . After some time, the synaptic weight distribution converges to an equilibrium distribution , which depends on .

Testing Phase A new input is presented to the system. This time, the neural system reacts to but does not learn it. In this stage, there is only -wise transport and no -wise convection. To achieve this goal, we implement the -wise quasi-steady state solver (4.5) with replaced by the testing signal , during which we keep we obtained in the Learning Phase.

The pattern recognition property of the system is that it can detect whether the new input is actually the same one that has been presented during the learning phase, i.e. : indeed, in this case, has a very specific shape that does not depend upon the original input that has been learned in the learning phase.

For the learning phase, We implement the -SI scheme; for the testing phase, we implement the -wise quasi-steady state solver (4.5), replacing the input function with the testing input function .

In our numerical experiments, the learning and testing input functions are chosen from the set

| (4.9) |

where stands for the -th normalized Hermite function defined recursively as

| (4.10) |

This set of functions are well known for forming a set of bases for the Hilbert space . We take the argument so that the input functions are centralized at and almost entirely supported in our truncated solving region; and the extra “” term widens the support of the solution so that it can react to input signals at wider range of . We choose , and is given as (4.7).

For each , we let the model perform the fore-mentioned task with and , and the corresponding network activity is shown in the -th row, -th column in Figure 5. It can be seen that, when the learned input and the testing input are the same (corresponding to the diagonal entries in the figure), the firing pattern is of the nice triangular shape ; but when and are different, the firing pattern is not in a regular shape. It is worth noting that in [16], the authors provided a primitive numerical result for the same learning-testing task, the networks firing pattern after reacting to the same learned input signal, shown in the article’s Figure 3, turned out to be quite zigzag. In comparison, with our numerical method, an almost-perfect triangular-shaped pattern is produced after the model learn and react to the same input (see the subfigures in the diagonal entries in Figure 5).

4.4 Positive synaptic weights

This subsection focus on exploring model’s behavior when the neural system is excitatory, i.e. the solution is supported in the with region with . In [16], the authors’ analytic work focus mainly on inhibitory networks. And for the excitatory neural system, they provided that, under some choice of parameters, the steady-state solution may not exist. We further explore with our numerical scheme the behavior of the solution when the synaptic weights are positive.

Our numerical exploration shows that in the excitatory cases, the network can either develop to a steady state like it did in the inhibitory cases, or exhibit a trend of accelerating expansion unlike it did with inhibitory cases under different parameter settings.

4.4.1 Long-time steady solution

We firstly test some scenarios in which the solutions present behavior similar to it would present with negative synaptic weights .

We choose , , , , , , and initial condition given as (4.7). The solution finally develops to a steady-state with forming a right triangular pattern supported in a single interval for some , just like it did with negative synaptic weights. This is the case that corresponds to the correct biological picture.

,

Indeed, as long as it can develop to a steady state, the excitatory neural network also has the recognition ability shown in subsection 4.3. To show this, we perform a similar learning-testing task as we did in the last subsection. The parameter settings are the same as they were in the last subsection except for that the initial condition is supported in the region with , , and the candidate input functions become

| (4.11) |

so that the input are centered at instead of for the inhibitory cases. The results are given in Figure 7. The arrangement of the figures are the same as that of Figure 5. It can be seen that subfigures at the diagonal entries are in perfect right triangular shape, which is the same as it is in Figure 7.

,

4.4.2 Unsteady solution

Now we turn our attention to a different case, where the solution is not converging to a steady solution. In [16], it was presented that even if we choose the firing function to be bounded

| (4.12) |

we may still face some scenarios that a steady state does not theoretically exist. And we further explore the solution’s behavior in this case.

We choose , , and the rest parameters were same as it was in the first numerical experiment in sub-subsection 4.4.1. The solution turns out to propagate to the region with greater . The -wise right boundary of the support of grows increasingly fast (Figure 8 right), and henceforth grows increasingly big (Figure 8 left). It should be noticed that our result shown in Figure 8 does not necessarily imply that this is a blow up solution, since we are not sure whether will grow to in a finite time.

Even if the solution is not a blow-up one, it still does not practically make sense that the network’s synaptic weight grows increasingly fast to . Therefore, the solution’s fast growing behavior is a non-physical effect of our model.

5 Conclusion

In this work, we have proposed a numerical scheme for the Fokker-Planck equation of structured neural networks (1.8), which is shown to be conservative, positivity-preserving, and asymptotic-preserving.

The key to success of our scheme is the implicit treatment for flux shift operators in (1.8), based on which the numerical scheme has an improved positivity preserving property: there is no -wise grid ratio constraint to guarantee the positivity, which is otherwise a major bottleneck for efficient simulation.

As for numerical exploration, we have conducted the learning-testing numerical experiments and yielded numerical solutions that not only match the theoretical results perfectly, but also fully demonstrate the recognition ability of the model and provide insights for potential uses and future studies. The numerical tests are also extended to the cases of excitatory neural networks. We have also explored with our numerical experiments the behavior of the model when a steady state solution does not exist. These numerical experiments are successful in capturing partial long time trends of the solution but still preliminary. Further numerical experiments can be done to explore, for example, the model’s behavior when the input signal becomes time-dependent, or alternative learning rules that can prevent from growing to infinity for the excitatory cases.

Acknowledgement

J. Hu’s research was partially supported by NSF CAREER grant DMS-1654152. Z. Zhou is supported by the National Key R&D Program of China, Project Number 2020YFA0712000 and NSFC grant No. 11801016, No. 12031013.

References

- [1] Stephen J Martin, Paul D Grimwood, and Richard GM Morris. Synaptic plasticity and memory: an evaluation of the hypothesis. Annual review of neuroscience, 23(1):649–711, 2000.

- [2] Alan L Hodgkin and Andrew F Huxley. A quantitative description of membrane current and its application to conduction and excitation in nerve. The Journal of physiology, 117(4):500, 1952.

- [3] Richard FitzHugh. Impulses and physiological states in theoretical models of nerve membrane. Biophysical Journal, 1(6):445–466, 1961.

- [4] Louis Lapicque. Recherches quantitatives sur l’excitation électrique des nerfs traitée comme une polarisation. J. Physiol. Pathol. Gen, 9(1):620–635, 1907.

- [5] María J Cáceres, José A Carrillo, and Benoît Perthame. Analysis of nonlinear noisy integrate & fire neuron models: blow-up and steady states. The Journal of Mathematical Neuroscience, 1(1):1–33, 2011.

- [6] José A Carrillo, María d M González, Maria P Gualdani, and Maria E Schonbek. Classical solutions for a nonlinear fokker-planck equation arising in computational neuroscience. Communications in Partial Differential Equations, 38(3):385–409, 2013.

- [7] María J. Cáceres, Pierre Roux, Delphine Salort, and Ricarda Schneider. Global-in-time classical solutions and qualitative properties for the nnlif neuron model with synaptic delay. Communications in Partial Differential Equations, 2018.

- [8] Nicolas Brunel. Dynamics of sparsely connected networks of excitatory and inhibitory spiking neurons. Journal of computational neuroscience, 8(3):183–208, 2000.

- [9] Grégory Dumont and Pierre Gabriel. The mean-field equation of a leaky integrate-and-fire neural network: measure solutions and steady states. Nonlinearity, 33, 10 2017.

- [10] Nicolas Brunel and Vincent Hakim. Fast global oscillations in networks of integrate-and-fire neurons with low firing rates. Neural computation, 11(7):1621–1671, 1999.

- [11] François Delarue, James Inglis, Sylvain Rubenthaler, and Etienne Tanré. Global solvability of a networked integrate-and-fire model of mckean–vlasov type. The Annals of Applied Probability, 25(4):2096–2133, 2015.

- [12] François Delarue, James Inglis, Sylvain Rubenthaler, and Etienne Tanré. Particle systems with a singular mean-field self-excitation. application to neuronal networks. Stochastic Processes and their Applications, 125(6):2451–2492, 2015.

- [13] Jian-guo Liu, Ziheng Wang, Yuan Zhang, and Zhennan Zhou. Rigorous justification of the fokker-planck equations of neural networks based on an iteration perspective. arXiv preprint arXiv:2005.08285, 2020.

- [14] Jian-Guo Liu, Ziheng Wang, Yantong Xie, Yuan Zhang, and Zhennan Zhou. Investigating the integrate and fire model as the limit of a random discharge model: a stochastic analysis perspective. Mathematical Neuroscience and Applications, 1, 2021.

- [15] Donald Olding Hebb. The organization of behavior: A neuropsychological approach. John Wiley & Sons, 1949.

- [16] Benoît Perthame, Delphine Salort, and Gilles Wainrib. Distributed synaptic weights in a LIF neural network and learning rules. Physica D: Nonlinear Phenomena, 353-354:20–30, Sep 2017.

- [17] Shi Jin and Bokai Yan. A class of asymptotic-preserving schemes for the fokker-planck-landau equation. J. Comput. Physics, 230:6420–6437, 07 2011.

- [18] Marianne Bessemoulin-Chatard and Francis Filbet. A finite volume scheme for nonlinear degenerate parabolic equations. SIAM Journal on Scientific Computing, 34(5):B559–B583, 2012.

- [19] José A Carrillo, Alina Chertock, and Yanghong Huang. A finite-volume method for nonlinear nonlocal equations with a gradient flow structure. Communications in Computational Physics, 17(1):233–258, 2015.

- [20] JS Chang and G Cooper. A practical difference scheme for fokker-planck equations. Journal of Computational Physics, 6(1):1–16, 1970.

- [21] Lorenzo Pareschi and Mattia Zanella. Structure preserving schemes for nonlinear fokker–planck equations and applications. Journal of Scientific Computing, 74(3):1575–1600, 2018.

- [22] Jingwei Hu and Xiangxiong Zhang. Positivity-preserving and energy-dissipative finite difference schemes for the fokker-planck and keller-segel equations. arXiv preprint arXiv:2103.16790, 2021.

- [23] Sebastiano Boscarino, Francis Filbet, and Giovanni Russo. High order semi-implicit schemes for time dependent partial differential equations. Journal of Scientific Computing, 68(3):975–1001, 2016.

- [24] Jian-Guo Liu, Li Wang, and Zhennan Zhou. Positivity-preserving and asymptotic preserving method for 2d keller-segal equations. Mathematics of Computation, 87(311):1165–1189, 2018.

- [25] Jingwei Hu and Xiaodong Huang. A fully discrete positivity-preserving and energy-dissipative finite difference scheme for poisson–nernst–planck equations. Numerische Mathematik, 145(1):77–115, 2020.

- [26] Rafael Bailo, Jose A Carrillo, and Jingwei Hu. Fully discrete positivity-preserving and energy-dissipating schemes for aggregation-diffusion equations with a gradient flow structure. Commun. Math. Sci., 18:1259–1303, 2020.

- [27] Yong Zhang, Yu Zhao, and Zhennan Zhou. A unified structure preserving scheme for a multispecies model with a gradient flow structure and nonlocal interactions via singular kernels. SIAM Journal on Scientific Computing, 43(3):B539–B569, 2021.

- [28] Jingwei Hu and Ruiwen Shu. A second-order asymptotic-preserving and positivity-preserving exponential runge–kutta method for a class of stiff kinetic equations. Multiscale Modeling & Simulation, 17(4):1123–1146, 2019.

- [29] Randall J. LeVeque. Numerical methods for conservation laws (2. ed.). Lectures in mathematics. Birkhäuser, 1992.

- [30] Jingwei Hu, Jian-Guo Liu, Yantong Xie, and Zhennan Zhou. A structure preserving numerical scheme for fokker-planck equations of neuron networks: Numerical analysis and exploration. Journal of Computational Physics, 433:110195, 2021.

- [31] Robert J Plemmons. M-matrix characterizations. i—nonsingular m-matrices. Linear Algebra and its Applications, 18(2):175–188, 1977.