A Statistical Approach to Estimating Adsorption-Isotherm Parameters in Gradient-Elution Preparative Liquid Chromatography

Abstract

Determining the adsorption isotherms is an issue of significant importance in preparative chromatography. A modern technique for estimating adsorption isotherms is to solve an inverse problem so that the simulated batch separation coincides with actual experimental results. However, due to the ill-posedness, the high non-linearity, and the uncertainty quantification of the corresponding physical model, the existing deterministic inversion methods are usually inefficient in real-world applications. To overcome these difficulties and study the uncertainties of the adsorption-isotherm parameters, in this work, based on the Bayesian sampling framework, we propose a statistical approach for estimating the adsorption isotherms in various chromatography systems. Two modified Markov chain Monte Carlo algorithms are developed for a numerical realization of our statistical approach. Numerical experiments with both synthetic and real data are conducted and described to show the efficiency of the proposed new method.

keywords:

, , and

1 Introduction

The separation and purification of mixtures are important processes in many fields, including the fine-chemical, biomedical, pharmaceutical, food, and environmental industries. A popular technique for high qualified separation and purification is liquid chromatography, whose operating principle can be roughly described as follows: a mixed sample is injected into a fluid stream that is pumped through a pipe filled with small porous beads. These beads slow down the components traveling through the column because the components adsorb on the surface of the beads. Since the components adsorb at different rates, they will travel at different speeds through the column and exit the column at different times, and thereby be separated from each other. The foundational monograph by Guiochon and Lin (2003) provides a detailed theoretical analysis of preparative liquid chromatography.

In preparative chromatography, the Adsorption Isotherm can be considered the most important quantity as it can be used to calculate the specific surface area of materials, mean size of deposited particles, pore size or particle size distribution, etc. Loosely speaking, the adsorption isotherm describes the dependence of the amount of adsorbed substance on the partial pressure of the solution concentration at a given temperature. It can be viewed as an intrinsic physical quantity of a given chromatography system. Various approaches exist for estimating the adsorption isotherm in practice, and can be classified into two categories: experimental methods and computational methods. Most traditional methods, such as frontal analysis (Lisec, Hugo and Seidel-Morgenstern (2001)) and perturbation peak (Dose, Jacobson and Guiochon (1991)), belong to the category of experimental methods. They are usually conducted in a series of programmed concentration steps, each step resulting in a so-called breakthrough front giving one point on the adsorption-isotherm curve. Hence, to reduce the experimental costs, over the last two decades, numerous computational methods have been developed for efficiently estimating the adsorption isotherm in various chromatography systems (see, for example, Felinger, Zhou and Guiochon (2003); Forssén, Arnell and Fornstedt (2006); Zhang et al. (2016a, b); Cheng et al. (2017)). These types of methods are used to numerically estimate adsorption-isotherm parameters so that the simulated batch separation coincides with the actual experimental results. Most of such computational approaches require only a few injections of different sample concentrations, and so solute consumption and time requirements are very modest. The mathematical fundamentals of most computational methods are based on the numerical estimation of adsorption-isotherm parameters by solving an inverse problem in Partial Differential Equations (PDEs). However, to the best of our knowledge, all existing approaches belong to the class of deterministic models. Hence, the main goal of this paper is to develop a probabilistic model to estimate the adsorption-isotherm parameters, which will constitute a methodological contribution to the field of chromatography.

It should be noted that the complex structure (highly non-linear) of parameter-to-measurement mapping makes the corresponding optimization problem highly non-convex. Consequently, the global optimal estimator of the adsorption-isotherm parameters cannot be efficiently obtained through conventional optimization solvers. As with the fitting of Gaussian-mixture models, the estimation of adsorption-isotherm parameters is hindered by multiple global solutions, and optimization algorithms are valid only under certain constraints. Therefore, this paper proposes a hybrid method of optimization and sampling to estimate the adsorption-isotherm parameters, and the algorithm is shown to be valid on the Gaussian-mixture data, Gamma-mixture data and the data for the experimental gradient-elution preparative liquid chromatography. We adopt a Bayesian approach and model the solution path obtained from the numerical solver with additive white noise. The framework provides an uncertainty quantification for the model parameters using draws of model parameters from the Bayesian posterior distribution based on modified Markov chain Monte Carlo (MCMC) algorithms.

Bayesian modeling of PDEs and complex dynamic systems has already been studied in some previous literature, though their focus is mostly on the estimation and uncertainty quantification for the PDE solution instead of the finite-dimensional system parameters. For example, Xun et al. (2013) uses B-splines in a Bayesian hierarchical model to estimate the solution of PDEs. Their model assumption is different from ours since the PDEs in our problem have an existing numerical solver while theirs do not. Chkrebtii et al. (2016) proposes a systematic Bayesian calibration method to estimate both the PDE solution and the model parameters, characterizing the uncertainty from both the discretization error in numerical solvers and the random error in observed data. We refer readers to Cockayne et al. (2019) for a detailed review on Bayesian methods on PDEs. Although the framework considered in Chkrebtii et al. (2016) is very general, their main focus is on using the Gaussian process prior to measure the discretization error in the PDE solution. In contrast, in our chromatography problem, the primary interest is in estimating the finite-dimensional system parameters rather than the PDE solution itself, and the main challenge comes from the lack of identification in these parameters due to the Gaussian-mixture-like solution paths. This unique nonidentification issue cannot be addressed by the generic Bayesian MCMC Algorithm 2 in Chkrebtii et al. (2016). Instead, to decouple the strong dependence between model parameters, we propose a dimension-reduction strategy based on the knowledge of mixture-alike solution paths and then incorporate either a gradient descent or a Langevin dynamics subroutine into the MCMC algorithm, leading to significantly improved mixing of the posterior samples.

Section 2 describes the gradient-elution preparative liquid chromatography model from mathematical and statistical perspectives, while in Section 3 a statistical approach is developed to estimate the adsorption-isotherm parameters. Numerical simulations with synthetic data are presented in Section 4 to demonstrate the robustness of the proposed method. Finally, an experimental gradient data set is tested in Section 5, and a short discussion and concluding remarks are provided in Section 6.

2 Modeling of preparative liquid chromatography

In this section, we briefly review the mathematical models used in this paper for chromatographic processes and provide a parameter-to-measurement mapping of the considered inverse problem of estimating adsorption isotherms. Following this, a statistical model with spatial noise terms and corresponding notations is introduced. Finally, we visualize the structure of the target function in optimization, to illustrate the multi-solution and correlation in parameters intuitively.

2.1 Mathematical background

Without loss of generality, and for the convenience of readers who are interested only in our statistical approach that can be used for other types of real-world problems, we consider the following mass-balance equation of a two-component system for a fixed-bed chromatography column with the Danckwerts boundary condition, which is the most commonly used one for column chromatography (Ruthven (1984); Guiochon and Lin (2003); Horvath (1988); Javeed et al. (2011); Lin et al. (2017); Zhang et al. (2016b)).

| (1) |

where is distance, is time, and refers to the two components. and are the concentrations in the mobile and stationary phases, respectively, is the mobile phase velocity, and is the stationary-to-mobile phase ratio. is the diffusion parameter. is the length of the chromatographic column, and is an appropriate time point slightly larger than the dead time of chromatographic time . In this paper, we set . In addition, is the initial condition and is the boundary condition, which describes the injection profile in the experiment. We adopt a simplified model here for ease of illustration; a more detailed version of gradient-elution liquid chromatography, with further discussion, can be found in Section S1 of the supplementary material.

Throughout this paper, we focus on the case in which the adsorption-energy distribution is bimodal. In this case, the bi-Langmuir adsorption isotherm is usually adopted as follows:

| (2) | |||

where subscripts I and II refer to two adsorption sites with different levels of adsorption energy.

In this paper, the collection of adsorption-isotherm parameters is denoted by

| (3) |

Now, we consider the measurement-data structure. In most laboratory and industry environments, the total response is observed at the column outlet with

| (4) |

where is the solution to problem (1) with the bi-Langmuir adsorption-isotherm model (2), and represents the concentration of the -th component at the outlet . The parameter-to-measurement map : can be expressed as

| (5) |

where the model operator is defined through (4). To be more precise, for a given parameter , a bi-Langmuir adsorption-isotherm model can be constructed according to (2). Then, the concentration in mobile, i.e. , can be obtained by solving PDE (1). Finally, the experimental data can be collected by using the designed sensor with the physical law (4). The aim of this paper is to estimate adsorption-isotherm parameters from the time series database and the integrated mathematical model (5) via a statistical approach.

2.2 A statistical model

In a liquid-chromatography experiment, a sampler brings the sample mixture into the mobile-phase stream, which carries it into a column, and pumps deliver the desired flow and composition of the mobile phase through the column. The detector located at the end of the column records a signal proportional to the amount of sample component emerging from the column, for time period . The signal recorded is the observation of interest.

To build up a statistical model, let be the observation points of the experiment, collected at discrete time points . The liquid-chromatography data measured at time can be modeled by

| (6) |

where represents the measurement noise. The clean liquid-chromatography data are , where is the solution of a system of differential equations with static parameters ; represents the parameter of interest.

Let be the collection of the exact chromatography signals with parameter . Then, the general framework of the chromatography measurement of is represented by

| (7) |

stands for the measurement noise, and for simplicity we assume the noises are uncorrelated between every pair, with zero mean and identical variances .

Throughout this paper, the framework is based on a single observation, but it can be generalized to a case with multiple observations or multiple injection groups. Assuming there is a single observation with fixed parameter , the aim of the paper is to estimate through the posterior distribution as described in the following sections, with all the other parameters apart from assumed to be known.

2.3 Difficulties in optimization

A natural idea is to estimate the adsorption-isotherm parameters with optimization methods. For example, one may think the minimizer of the distance between and with respect to as a good estimator. However, the non-smooth loss function and the non-unique global minimum prevent us from obtaining valid estimates by optimization.

More specifically, let us consider a four-dimensional parameter by setting the parameters related to the second component as . Since all the elements of are positive, we can decompose into a two-dimensional unbounded parameter and a two-dimensional bounded parameter without losing any information. Let , and

be the loss function. While studying the structure of , we notice that it is convex with respect to for any , as shown in Fig. 1(a). The topography suggests that an optimal estimator can be easily obtained via optimization algorithms for each , as presented in Fig. 3(a)(c). However, if we try to optimize both and at the same time, the non-smooth loss function and potential multiple solutions, as illustrated in Fig. 1(b), will make the estimation invalid.

Similar loss functions can be observed when dealing with mixture models. Let us consider the simplest case. Assume the signal is the density function of a two-component Gaussian-mixture model with weights and mean . The corresponding loss function is visualized in Fig. 2. Similar to that of the chromatography system, we can also easily calculate the corresponding optimal means for any fixed weights, but multiple solutions to the problem and saddle points make the optimization unreliable. Unfortunately, this problem will get worse as the number of components grows. Therefore, we usually use Markov chain-based Bayesian methods to make inferences about such parameters of interest. The traversability of these chains and the possible existence of ”label switches” also reduce the impact of multiple solutions.

In summary, the adsorption-isotherm parameters are not likely to be directly estimated via gradient-based optimization algorithms, but such optimization methods might lead to a partial solution under certain restricted conditions. Meanwhile, since the mixture models imitate the chromatography system well in terms of the loss function and the former is more computationally efficient, they can be used as toy examples to verify our proposed methods.

3 Methodology

In this section, we introduce the main methodology of this paper. The section is organized as follows: first, the traditional Bayes sampling framework is constructed, after which a strategy of dimensionality reduction and restoration is presented. Finally, two algorithms based on this strategy and Metropolis-within-Gibbs sampling are explained.

3.1 Bayesian approach

To investigate the parameter of interest, we aim to draw samples from the posterior distribution of given the entire observation . In this section, we adopt a Bayesian framework to sample from the posterior distribution . Throughout this paper, is a generic symbol for continuous probability densities, denotes the prior densities, denotes the posterior densities, and denotes the proposal densities used in Markov chain Monte Carlo (MCMC) algorithms.

For Bayesian inference, we assume that the measurement errors are normally distributed, and impose the following prior distributions on and the error term variance :

where represents the normal distribution with mean and variacne , represents the inverse gamma distribution with shape parameter and scale parameter , and is a tuning parameter. We let , which includes all hyperparameters.

Given this prior specification, the posterior distribution of can be written explicitly as

| (8) | ||||

where stands for the multivariate normal density for the argument with mean and covariance matrix , is the identity matrix, and .

After identifying the posterior distribution, we can use the Metropolis-Hastings algorithm to sample from the posterior. Because and are highly correlated in the posterior, an independent random-walk proposal may not converge quickly, or even get stuck somewhere. For this reason, we consider the Metropolis-within-Gibbs sampler instead. The method is outlined in Algorithm 3.1, in which the acceptance probability is calculated as

The hyperparameters together with the variances of proposal distributions are tuned to make the chain as stable as possible and to have an acceptance rate of approximately to for each element of . Then the sampler will generate a recurrent Markov chain whose stationary distribution is the target posterior distribution .

Algorithm 1 Metropolis-within-Gibbs sampler

3.2 Strategy of dimensionality reduction and restoration

Modeling inverse problems as the posterior distributions will introduce correlation to the parameters of interest. When sampling more than one of the parameters directly from the posterior, the strong correlation might disrupt the motion of the Markov chain. To surmount this problem, we propose a dimension-reduction strategy with two modified MCMC algorithms to stabilize the posterior chains.

We first consider the degenerated case, in which the posterior distribution of is almost singular and only supported on a lower dimensional set the size of observed data tends to infinity. Specifically, suppose that the parameter of interest can be written as , where with , , and is a one-to-one mapping determined with pilot study. Roughly speaking, we first set as the largest dimension that leads to a stable posterior chain of . Then the dimensionality is reduced by combining the elements of according to the pilot study. Please see Section S4 of the supplementary material for a detailed discussion on how to choose and some empirical results.

In the extreme case that and are perfectly dependent in the posterior as the size of observed data tends to infinity, the conditional posterior is degenerate to a Dirac distribution for some unknown function , i.e. it is almost sure that . Then, the support of the posterior of can be expressed as

and the induced posterior distribution of is

In such a scenario, we can find the least squares estimator of in terms of from the following equation:

| (9) |

such that approximates as . In what follows, we assume that the optimization problem in (9) can be settled by the following gradient descent algorithm (GD) without discussing the optimization accuracy.

To incorporate the optimizing step in the MCMC algorithm, we keep the overall structure of Algorithm 3.1 for sampling and . In the extreme case of for some function , instead of sampling , we can calculate a from (9) in each MCMC iteration. Together with the function , can be obtained for any specific . The detailed algorithm is provided in Algorithm 3.3.

Our theoretical justification for this dimension-reduction strategy is as follows. We first introduce a series of technical assumptions, and our theoretical results will depend on different subsets of these assumptions. We define the loss function associated with the solution function , for any .

-

(A.1)

lies in a compact space , where and . There is a continuous one-to-one mapping such that and is also a compact space for . The true parameter is an interior point of . is a compact set, and are independent and identically distributed as for some .

-

(A.2)

Let be the Lebesgue measure of . As ,

(10) -

(A.3)

There exists a function , and positive constants , such that for any ,

(11) -

(A.4)

There exist positive constants , such that, for all that satisfies , .

-

(A.5)

There exist positive constants , such that, for all , .

Assumption A.1 assumes a compact parameter space and normal errors. Assumption A.2 assumes the uniform convergence of the empirical distance from the function to the true function over the compact parameter space . This assumption usually holds when are densely distributed in and is continuous in both and . Assumption A.3 is the identification condition for , where the lower bound guarantees that is the unique minimizer of the loss function over the argument for any given . This assumption is only used for justifying our later Algorithm 3.3 in the degenerate case of . Assumption A.4 imposes a local-continuity condition on the loss function in a small neighborhood of . Since we have already assumed that is an interior point of in A.1, the radius can be made small such that the whole ball is included in . Assumption A.5 imposes an identification condition on the loss function which guarantees that the true parameter is uniquely identified in . This is similar to the identification condition for moment estimation (see, for example, ZE.2 in Belloni and Chernozhukov 2009). The power constants , , and in A.3, A.4, and A.5 are not specified to allow flexibility in the local-continuity property of . In Section 2.2 of the supplementary material, we provide some partial verification for these assumptions using the model of simulation case 1 in Section 4.1.

Theorem 3.1.

- (i)

-

(ii)

Suppose that Assumptions A.1, A.2, A.3, and A.4 hold. Then, for any , and for every and every ,

(13) as , almost surely, where denotes the conditional posterior measure of given .

-

(iii)

Suppose that Assumptions A.1, A.2, A.4, and A.5 hold. Then, for any , and for every and every ,

(14) as , almost surely.

Theorem 3.1 provides justification for our Algorithm 3.3, in the sense that, in each MCMC iteration, we can numerically solve for in terms of from (9). Part (i) shows that, as the sample size of observations increases, the empirical solution becomes increasingly close to , which is the unique minimizer of the loss function . Part (ii) shows that, under the additional continuity condition on , most of the posterior probability mass of is concentrated around . Part (iii) gives the stronger result of posterior consistency of to the truth (Chapter 6, Ghosal and van der Vaart 2017) under the additional identification condition A.5 on , which implies that most of the posterior probability mass will concentrate around the true parameter asymptotically. The detailed proof of Theorem 3.1 can be found in Section S2.1 of the supplementary material. We emphasize that even when the strong assumption of A.3 that assumes does not hold, Part (iii) of Theorem 3.1 on the posterior consistency for the whole parameter vector remains valid as the sample size .

3.3 Main algorithms

After identifying the method of dimensionality reduction and restoration, we propose a new modified Metropolis-embedded gradient-descent-within-Gibbs sampler (MGDG). The detailed algorithm is presented below.

Algorithm 3 Metropolis-embedded gradient-descent-within-Gibbs sampler (MGDG)

The main strategy of this algorithm is to sample only and the error term variance from the posterior distribution, and is treated as a function of in the posterior. We can restore a posterior sample of using the posterior draws of and . The lower dimensionality of helps to improve the mixing of Markov chains and prevent the ”label switching” phenomenon that occurs in mixture models (Jasra, Holmes and Stephens 2005). After reaching the stationary distribution, the samples should be distributed around the truth , as shown in Theorem 3.1.

Now we consider the general case in which is not a function of , but may be highly correlated with in the posterior distribution . In such a scenario, we use the Metropolis-adjusted Langevin algorithm (MALA) (Roberts and Rosenthal, 1998; Roberts and Tweedie, 1996) to sample . We still use the Metropolis-within-Gibbs algorithm to draw and , and then use the MALA to draw using the gradient information from the conditional posterior of . We call this algorithm the Metropolis-adjusted Langevin-dynamics-within-Gibbs sampler (MALG).

Algorithm 4 Metropolis-adjusted Langevin-dynamics-within-Gibbs sampler (MALG)

where the values of the proposal densities, and , are calculated from

Because the log-likelihood term is proportional to , the gradient term is the same gradient as used in Algorithm 3.3. After choosing suitable values for the stepsize and the sub-chain length , this algorithm will return MCMC samples of all parameters, including .

4 Simulation study

In this section, we propose three numerical solvers based on mixture models to confirm the robustness of our algorithm from three aspects: initialization, dimension of and skewness of . To reach this conclusion, repeated sampling is performed in all three simulation cases to account for the effect of the initialization; the second case is designed by adding dimensions to on the basis of the first case; and the third case is constructed by introducing skewness to the first case. Although these numerical solvers have relatively simple structures, their parameters are highly correlated, which mimics the problem with the data for real experimental gradient-elution preparative liquid chromatography. Meanwhile, the calculation can be implemented through vectorization, which makes it possible to have larger sample sizes and more repeated trials. We only present the key plots in this section and leave other plots to Section S6 of the supplementary material.

4.1 Simulation Case 1

For the first case, we considered a Gaussian-mixture model with two components. Let the parameter of interest be , with a single observation with and noise variance from the following numerical solver:

| (15) |

To perform the dimension reduction, we selected and as follows:

These two parameters can be regarded as weights and means in the Gaussian-mixture model. Because , the MGDG algorithm can be initialized by uniformly sampling and setting as the one minimizing the norm between and , i.e.

with defined in (9). The noise variance is initialized with a random value from its prior distribution.

Let stand for the truncated normal density on interval with mean and variance . The other hyperparameters, prior distributions, and proposal distributions are summarized in Table 1. Since the two elements of can always be exchanged in the sampling, we set the smaller one as and the larger one as after each iteration.

| Proposal distribution of for | |

| Prior of | |

| Recording time | |

| Equally spaced time points used in calculation | |

| Number of burn-in samples | |

| Step size and chain length of sampling in MALG |

Fig. 4 shows an example of the samples from our algorithms and from the Metropolis-within-Gibbs sampler. It can be observed that traces of both MGDG and MALG are distributed closely around the ground truth. Such distribution suggests that our method can stably sample from the correlated posterior and that the sample is reliable in inferring . The performance of our method, in this case, differs from that of the Metropolis-within-Gibbs sampler (Hastings (1970)), for which the sample is less stationary and more biased in inferring . A possible reason is that the elements of are highly correlated in the posterior, which makes the Metropolis-within-Gibbs sampler not very reliable.

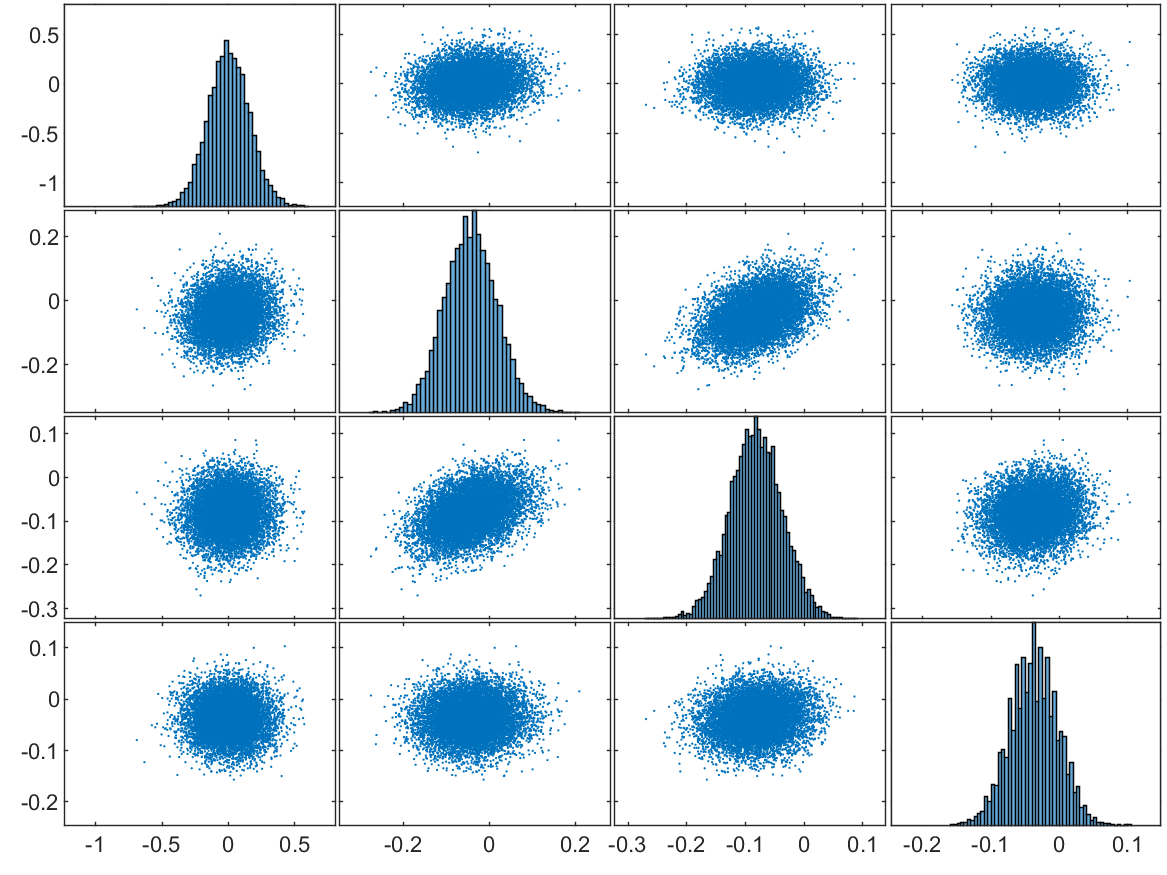

The algorithms are evaluated by the residuals in one sampling trial, i.e. , , and . The corresponding scatter-plot matrices with sample size are presented in Fig. 5. These plots suggest that these residuals are approximately normally distributed around 0, and that the bias is in the same order as the threshold in the gradient descent algorithm. The correlation between is not significant, but the variables in the same group in have a strong linear correlation.

To validate the robustness of our MGDG algorithm, it was run 100 times, and the result is summarized in Fig. 6. The two graphs in the first column show the boxplots of sampled in some iterations. These two panels suggest that the distribution of sampled in multiple repeated trials is very stable, and that the overall error is controlled within an acceptable range. Therefore, the restored should also have a stable distribution that is not far away from , which is consistent with the remaining four panels, where the boxplots of have similar quantiles, and the distance between and the box is in the same order as the gradient descent threshold. In general, the MGDG algorithm can robustly estimate the posterior distribution in multiple repeated experiments. The other algorithm, MALG, performed similarly, and the results can be found in Section S6 of the supplementary material.

Overall, our method is able to deal with the solver defined in (15). The algorithms provide samples of that are stably distributed around . With these samples, can be restored; it is also close to the ground truth . Compared with the Metropolis-within-Gibbs sampler, our MGDG algorithm is more reliable for estimating in this case.

4.2 Simulation Case 2

To test our algorithm with more local minimums on the basis of Case 1, another attempt with a 4-component Gaussian-mixture model and was performed. In this scenario, the observation was with and noise variance from the following numerical solver:

| (16) |

To perform the dimension reduction, we selected and as follows:

where . Similarly, these parameters can be regarded as the weights and means in the Gaussian-mixture model, and the MGDG algorithm was initialized by uniformly sampling and setting as the sample minimizing the norm between and . The noise-term variance was initialized with a random value from its prior distribution. The other hyperparameters, prior distributions, and proposal distributions are summarized in Table 2. Given that each of the elements in plays the same role in (16), we sort them in ascending order after each iteration in sampling.

| Proposal distribution of for | |

| Prior of | |

| Recording time | |

| Equally spaced time points used in calculation | |

| Number of burn-in samples | |

| Step size and chain length of sampling in MALG |

Fig. 7 shows the scatter-plot matrix of the residuals in a trial of MGDG and MALG with sample size . These panels suggest that all of the elements are unimodally distributed around , and that the correlations among the elements of and are not very significant, although some of the adjacent elements in have almost linear correlations. Overall, both and have nearly normal distributions, and the distance from their center to 0 is within an acceptable range. The algorithms were also repeated 100 times to investigate the robustness with the solver defined in (16), and the boxplots can be found in Section S6 of the supplementary material.

In general, our algorithms are still able to effectively estimate the parameters in (16) with acceptable bias. After increasing the dimensions by adding components to the solver, the exchangeable components give more local minima. Our algorithm is not much affected by these local solutions, and is able to provide samples that are normally distributed around the ground truth.

4.3 Simulation Case 3

To complement Case 1, we adopted a Gamma-mixture model to study the performance of our algorithm on skewed observations and steep loss functions. Let the parameter of interest be , and the single observation with and from the following numerical solver:

| (17) |

To perform the dimension reduction, we selected and as follows:

These two parameters can be regarded as the shape and scale parameters in a Gamma-mixture model. With the prior knowledge that , we can still uniformly sample and initialize as the minimizer of /.The noise variance was initialized by sampling from its prior distribution. The other hyperparameters, prior distributions, and proposal distributions are summarized in Table 3. Although the two elements of can always be exchanged in the sampling, we do not sort them in this case.

| Proposal distribution of for | |

| Propose standard derivation for MALG | |

| Propose standard derivation for MGDG | |

| Prior of | |

| Recording time | |

| Equally spaced time points used in calculation | |

| Number of burn-in samples | |

| Step size and chain length of sampling in MALG |

Fig. 8(a) roughly shows the shape and noise level of the input data in this simulation case. With such a skewed observation, the distance between and is no longer as symmetrical as in the previous cases. The huge slope on one side makes the gradient descent more difficult, and the rejection rate during the sampling process increases. As shown in Fig. 8(b), although the trajectory of the sample is still stable, it is far less smooth than in the previous cases.

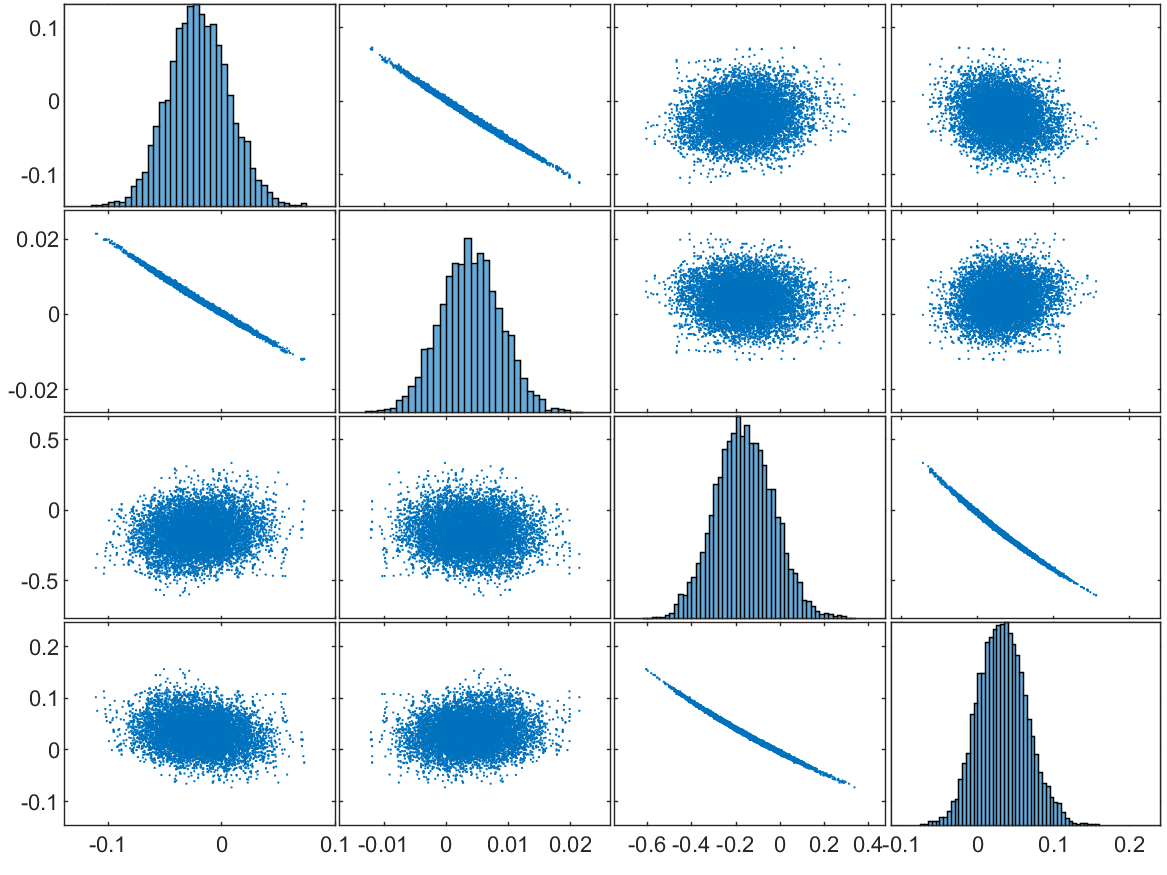

The estimation in one trial is also evaluated by the residuals of samples, and the corresponding scatter-plot matrices with sample size are shown in Fig. 9. According to these scatter plots, the residuals of each parameter are symmetrically distributed with a single peak, and the centers of the peaks are very close to 0, which is also within the range of the threshold of the gradient descent algorithm. The correlation between is not significant, but there seem to be straight lines in the scatter plots, which may be caused by the rejected proposals. As for , we can clearly see that the residuals of each element are also symmetrically and unimodally distributed around 0, and that the two elements belonging to the same component are highly correlated. In general, in a single experiment, samples can be effectively drawn from the posterior distribution.

According to the results from both algorithms, the bias of parameters related to the second component is much larger, which is because the second peak is flatter in signal and such bias does not change the shape of the observation significantly. We also repeated this sampling experiment 100 times, and the results were similar to that of the original (see Section S6 of the supplementary material). Overall, our method is able to deal with the skew-solver from the Gamma-mixture density function.

5 Real data application

In this section, we verify our algorithm with real data. First, we briefly introduce the background of the real experiment, after which the model, parameter settings, and data-generation process are presented in detail. Finally, we evaluate the performance of our method through repeated experiments.

5.1 Experiment background

Our data consist of gradient separations of a cycloheptanone/cyclohexanone mixture. The experiments were conducted on an Agilent 1200 system (Palo Alto, CA, USA), with a 150 mm 4.6 mm Kromasil column (AkzoNobel Eka, Bohus, Sweden) filled with C18-bonded porous silica, with an average particle diameter of 5 m. The system contained a 900 L-injection-loop auto-sampler, a binary pump system, a diode-array UV detector, and a column thermostat. In all experiments, the column was held at a constant temperature of 22 ∘C, and the flow rate was 1.0mL/min.

The solutes adopted in the experiments were cyclohexanone (95%) and cycloheptanone (95%) from Sigma-Aldrich (Steinheim, Germany), while the solvents used in the pycnometer measurements were dichloromethane (99.5%) from VWR International (Paris, France) and isopropanol (HPLC grade) from Fisher Scientific (Loughborough, UK). The mobile phase was composed of HPLC-grade methanol from Fisher Scientific (Loughborough, UK) and deionized water, with a conductivity of 18.2 Mcm, supplied by a Milli-Q Plus 185 water-purification system from Millipore (Merck Millipore, MA, USA).

During the experiments, calibration curves for cyclohexanone and cycloheptanone were recorded at 280 nm for several mobile-phase compositions. The column hold-up volume measured with a pycnometer was 1.38 mL. To match the injected amount of solute, the total area under the peaks in the elution profiles was adjusted. Indistinguishable inlet and outlet effects were included in the injection profile, and the injection profile was recorded and used in the calculations.

5.2 Model setting and data generation

Based on the experiment background, we can consider a chromatography system with a 150 mm 4.6 mm column, a flow rate of 0.7 mL/min, and 9000 theoretical plates. The hold time is 1.5 min and 400 L samples are introduced using rectangular injection profiles. In this section, we focus on a single observation from

where is the solution to the time-dependent convection-diffusion system defined in (1); all the other parameters adopted in that PDE system are summarized in Table 4. In obtaining real data, researchers usually make multiple observations of chromatographic curves with the same parameter settings and average the records to reduce errors. This allows the noise term to have a smaller variance and the averaged noise will be distributed closer to a Gaussian distribution. Overall, the noise level of observation used in this section is similar to the one shown in Fig. 10.

| Parameter | Description |

|---|---|

| Linear velocity [cm/s] | |

| Column length [cm] | |

| Dead time [s] | |

| Phase ratio | |

| Diffusion constant | |

| Initial condition | |

| Injection profile (mM) |

To simplify the problem, we silence part of the parameters. The solid black line in Fig. 10 is an example of a clean bimodal observation from with full parameter set and . Each peak in that signal roughly corresponds to a set of parameters , which also represents a component in the sample. We observe that these peaks can be separated after adjusting injection parameter , and the second one almost has the same shape as that from the observation from , where and , which is shown as Set 2 in Fig. 10. Therefore, can be directly estimated from the signal segment containing only the second peak, and the estimator will help us figure out the remaining parameters. Given the fact that multiple sets of the parameters will only bring additional calculation difficulties, we focus only on the case of one peak by setting the injection profile of the second group as and letting the parameter of interest be in this section.

5.3 Parameter estimation

After identifying the model and parameter settings, we let the observation of interest be from , where the noise level estimated from the flat part (i.e. ) is . To estimate with the MGDG algorithm, we set and as

To ensure the algorithm MALG produces a valid Markov chain, we adopt the element-wise transformation

and sample the elements from the entire real line.

Since are also non-negative and , we still sample and initialize as the one minimizing with respect to . The noise variance is initialized with a sampled value from its prior. Together with the other distributions and hyperparameters summarized in Table 5, posterior samples can be obtained from our algorithms.

| Proposal distribution of for in MGDG | |

| Proposal distribution of for in MALG | |

| Prior of | |

| Recording time | |

| Equally spaced time points used in calculation | |

| Number of burn-in samples | |

| Step size and chain length of sampling in MALG |

The sampling result is summarized in Fig. 11, in which the sampled with MGDG is distributed in a wide range, while the samples from MALG are distributed with some pattern and has a higher rejection rate. The acceptance rate is due to the design of the algorithm - a new proposal of is more easily accepted in one MGDG iteration, as we chose an optimal for it. From the samples, an empirical 95% credible interval (CI) can be constructed from the quantile of for each . Fig. 11(c)11(f) present this 95% CI from one trial of MGDG and MALG, in light blue. The CIs are so narrow that they almost coincide with the clean truth . Since there are multiple solutions, these samples are evaluated by the relative error between the and , that is,

where the lower and upper bound of the 95% CI in Fig. 11(c) have a relative error of approximately and , while itself has a relative error of approximately . Moreover, the two traces of are stationary distributed around . This, on the other hand, confirms the validity of our algorithm with real data.

|

|||||||

|---|---|---|---|---|---|---|---|

| MGDG | 0.4341(0.0944) | 0.4509(0.1313) | 2.9936(0.0000) | 0.1515(0.0000) | 0.0310(0.0020) | ||

| MALG | 0.4144(0.1041) | 0.3348(0.1970) | 3.0012(0.0225) | 0.1510(0.0020) | 0.0676(0.0290) |

To validate the robustness of our algorithms, the sampling was repeated 20 times, and the result is summarized in Table 6. The large standard deviations of can be attributed to multiple solutions from the time-dependent convection-diffusion system in (1). In contrast, the distribution of is very concentrated, and in most cases, the 95% CIs are very close to . Overall, these experiments indicate that our algorithms can robustly infer based on the observations .

6 Conclusion

The primary objective of this study was to develop a probabilistic model and estimate the adsorption-isotherm parameters in gradient-elution preparative liquid chromatography from a statistical perspective.

With the aim of estimating the adsorption-isotherm parameters reliably, a statistical observing model with spatial-correlation noise was proposed. Because the estimation was affected by the correlation between the parameters in the preliminary experiment, we designed two modified MCMC algorithms to reduce this effect. These algorithms were verified on several numerical solvers with highly correlated parameters, and they were able to produce approximately normally distributed estimators with acceptable bias. The verification indicates that our method is reliable with correlated parameters. The real data application revealed that the estimation of the parameters is not unique and that our method leads to reasonable results.

Our method has major implications for estimating parameters that are correlated among their elements. On one hand, these algorithms avoid the limitation of the local optimal solution, but on the other hand, they do not require the objective function to be derivable for all variables. The combination of random sampling and optimization methods expands their scope of application. Under certain conditions, the performance of the proposed approach is guaranteed theoretically.

It should be noted that the estimation procedure here has two limitations. First, the gradient of the objective function is calculated numerically. Although it does not consume too much time when implemented in parallel, thousands of repetitions of gradient descent embedded in sampling iterations still require enormous computing resources. Second, the proposed approach can only result in a group of possible estimators when the real data are processed, and we cannot make further evaluations of these results. Because the signals deduced from these estimates are very close to the initial observations, they have almost the same likelihood from a statistical point of view. It is possible that further research on the forward model could reduce the demand for computing resources and make these parameters identifiable. With such a forward model, the efficiency and accuracy of the proposed approach could be further improved.

Overall, a reliable estimation of the adsorption isotherm requires dealing with the correlation efficiently and describing the signal accurately. Our method enables us to explore the combination of sampling and optimization in a more advanced form, to further estimate the adsorption-isotherm parameters. Besides the considered chromatography application, our statistical approach has potential to be applied in other inverse problems associated with some time-dependent convection-diffusion PDEs such as the hydrogen plasma model in astrophysics (Berryman and Holland (1978)), the autowave model in environmental pollution (Chaikovskii and Zhang (2022)), and the atherosclerosis inflammatory disease model in biology (Hidalgo, Tello and Toro (2014)). We are currently looking into these extensions.

Supplementary material for “A Statistical Approach to Estimating Adsorption-Isotherm Parameters in Gradient-Elution Preparative Liquid Chromatography” \slink[doi]COMPLETED BY THE TYPESETTER \sdatatype.pdf \sdescriptionWe include all materials omitted from the main text.

Acknowledgements

The authors would also like to thank the Fundamental Separation Science Group (FSSG) under the supervision of Professor Torgny Fornstedt at Karlstad University, Sweden for providing the real data (cyclohexanone and cycloheptanone). Z.Y. would like to thank the Singapore MOE Tier 1 grants R-155-000- 196-114, A-0004826-00-00 and Tier 2 grant A-0008520-00-00 at the National University of Singapore. C.L. would like to thank the Singapore MOE Tier 1 grant A-0004822-00-00 at the National University of Singapore

References

- Belloni and Chernozhukov (2009) {barticle}[author] \bauthor\bsnmBelloni, \bfnmA.\binitsA. and \bauthor\bsnmChernozhukov, \bfnmV.\binitsV. (\byear2009). \btitleOn the computational complexity of MCMC-based estimators in large samples. \bjournalThe Annals of Statistics \bvolume37 \bpages2011–2055. \endbibitem

- Berryman and Holland (1978) {barticle}[author] \bauthor\bsnmBerryman, \bfnmJames G\binitsJ. G. and \bauthor\bsnmHolland, \bfnmCharles J\binitsC. J. (\byear1978). \btitleNonlinear diffusion problem arising in plasma physics. \bjournalPhysical Review Letters \bvolume40 \bpages1720. \endbibitem

- Chaikovskii and Zhang (2022) {barticle}[author] \bauthor\bsnmChaikovskii, \bfnmDmitrii\binitsD. and \bauthor\bsnmZhang, \bfnmYe\binitsY. (\byear2022). \btitleConvergence analysis for forward and inverse problems in singularly perturbed time-dependent reaction-advection-diffusion equations. \bjournalJournal of Computational Physics \bvolume470 \bpages111609. \endbibitem

- Cheng et al. (2017) {barticle}[author] \bauthor\bsnmCheng, \bfnmX.\binitsX., \bauthor\bsnmLin, \bfnmG.\binitsG., \bauthor\bsnmZhang, \bfnmY.\binitsY., \bauthor\bsnmGong, \bfnmR.\binitsR. and \bauthor\bsnmGulliksson, \bfnmM.\binitsM. (\byear2017). \btitleA modified coupled complex boundary method for an inverse chromatography problem. \bjournalJ. Inverse Ill-Posed Probl. \bpages1–17. \endbibitem

- Chkrebtii et al. (2016) {barticle}[author] \bauthor\bsnmChkrebtii, \bfnmO. A.\binitsO. A., \bauthor\bsnmCampbell, \bfnmD. A.\binitsD. A., \bauthor\bsnmCalderhead, \bfnmB.\binitsB. and \bauthor\bsnmGirolami, \bfnmM. A.\binitsM. A. (\byear2016). \btitleBayesian solution uncertainty quantification for differential equations. \bjournalBayesian Analysis \bvolume11 \bpages1239–1267. \endbibitem

- Cockayne et al. (2019) {barticle}[author] \bauthor\bsnmCockayne, \bfnmJ.\binitsJ., \bauthor\bsnmOates, \bfnmC. J.\binitsC. J., \bauthor\bsnmSullivan, \bfnmT. J.\binitsT. J. and \bauthor\bsnmGirolami, \bfnmM. A.\binitsM. A. (\byear2019). \btitleBayesian probabilistic numerical methods. \bjournalSIAM Review \bvolume61 \bpages756–789. \endbibitem

- Dose, Jacobson and Guiochon (1991) {barticle}[author] \bauthor\bsnmDose, \bfnmEric V.\binitsE. V., \bauthor\bsnmJacobson, \bfnmStephen\binitsS. and \bauthor\bsnmGuiochon, \bfnmGeorges\binitsG. (\byear1991). \btitleDetermination of isotherms from chromatographic peak shapes. \bjournalAnal. chemi. \bvolume63 \bpages833–839. \endbibitem

- Felinger, Zhou and Guiochon (2003) {barticle}[author] \bauthor\bsnmFelinger, \bfnmAttila\binitsA., \bauthor\bsnmZhou, \bfnmDongmei\binitsD. and \bauthor\bsnmGuiochon, \bfnmGeorges\binitsG. (\byear2003). \btitleDetermination of the single component and competitive adsorption isotherms of the 1-indanol enantiomers by the inverse method. \bjournalJ. Chromatogr. A \bvolume1005 \bpages35–49. \endbibitem

- Forssén, Arnell and Fornstedt (2006) {barticle}[author] \bauthor\bsnmForssén, \bfnmP.\binitsP., \bauthor\bsnmArnell, \bfnmR.\binitsR. and \bauthor\bsnmFornstedt, \bfnmT.\binitsT. (\byear2006). \btitleAn improved algorithm for solving inverse problems in liquid chromatography. \bjournalComput. Chem. Eng. \bvolume30 \bpages1381–1391. \endbibitem

- Ghosal and van der Vaart (2017) {bbook}[author] \bauthor\bsnmGhosal, \bfnmS.\binitsS. and \bauthor\bparticlevan der \bsnmVaart, \bfnmA. W.\binitsA. W. (\byear2017). \btitleFundamentals of Nonparametric Bayesian Inference. \bpublisherCambridge University Press. \endbibitem

- Guiochon and Lin (2003) {bbook}[author] \bauthor\bsnmGuiochon, \bfnmGeorges\binitsG. and \bauthor\bsnmLin, \bfnmBingchang\binitsB. (\byear2003). \btitleModeling for Preparative Chromatography. \bpublisherNew York: Academic Press. \endbibitem

- Hastings (1970) {barticle}[author] \bauthor\bsnmHastings, \bfnmW. K.\binitsW. K. (\byear1970). \btitleMonte Carlo sampling methods using Markov chains and their applications. \bjournalBiometrika \bvolume57 \bpages97-109. \bdoi10.1093/biomet/57.1.97 \endbibitem

- Hidalgo, Tello and Toro (2014) {barticle}[author] \bauthor\bsnmHidalgo, \bfnmA\binitsA., \bauthor\bsnmTello, \bfnmL\binitsL. and \bauthor\bsnmToro, \bfnmEF\binitsE. (\byear2014). \btitleNumerical and analytical study of an atherosclerosis inflammatory disease model. \bjournalJournal of Mathematical Biology \bvolume68 \bpages1785–1814. \endbibitem

- Horvath (1988) {bbook}[author] \bauthor\bsnmHorvath, \bfnmC.\binitsC. (\byear1988). \btitleIn high-performance liquid chromatography: Advances and perspectives (Volume 5). \bpublisherNew York: Academic Press. \endbibitem

- Jasra, Holmes and Stephens (2005) {barticle}[author] \bauthor\bsnmJasra, \bfnmA.\binitsA., \bauthor\bsnmHolmes, \bfnmC. C.\binitsC. C. and \bauthor\bsnmStephens, \bfnmD. A.\binitsD. A. (\byear2005). \btitleMarkov chain Monte Carlo methods and the label switching problem in Bayesian mixture modeling. \bjournalStatistical Science \bvolume20 \bpages50–67. \endbibitem

- Javeed et al. (2011) {barticle}[author] \bauthor\bsnmJaveed, \bfnmS.\binitsS., \bauthor\bsnmQamar, \bfnmS.\binitsS., \bauthor\bsnmSeidel-Morgenstern, \bfnmA.\binitsA. and \bauthor\bsnmWarnecke, \bfnmG.\binitsG. (\byear2011). \btitleEfficient and accurate numerical simulation of nonlinear chromatographic processes. \bjournalComputers and Chemical Engineering \bvolume35 \bpages2294-2305. \endbibitem

- Lin et al. (2017) {barticle}[author] \bauthor\bsnmLin, \bfnmG.\binitsG., \bauthor\bsnmZhang, \bfnmY.\binitsY., \bauthor\bsnmCheng, \bfnmX.\binitsX., \bauthor\bsnmGulliksson, \bfnmM.\binitsM., \bauthor\bsnmForssén, \bfnmP.\binitsP. and \bauthor\bsnmFornstedt, \bfnmT.\binitsT. (\byear2017). \btitleA regularizing Kohn-Vogelius formulation for the model-free adsorption isotherm estimation problem in chromatography. \bjournalApplicable Analysis \bpages1–28. \endbibitem

- Lisec, Hugo and Seidel-Morgenstern (2001) {barticle}[author] \bauthor\bsnmLisec, \bfnmO.\binitsO., \bauthor\bsnmHugo, \bfnmP.\binitsP. and \bauthor\bsnmSeidel-Morgenstern, \bfnmA.\binitsA. (\byear2001). \btitleFrontal analysis method to determine competitive adsorption isotherms. \bjournalJ. Chromatogr. A \bvolume908 \bpages19–34. \endbibitem

- Roberts and Rosenthal (1998) {barticle}[author] \bauthor\bsnmRoberts, \bfnmG. O.\binitsG. O. and \bauthor\bsnmRosenthal, \bfnmJ. S.\binitsJ. S. (\byear1998). \btitleOptimal scaling of discrete approximations to Langevin diffusions. \bjournalJournal of the Royal Statistical Society: Series B (Statistical Methodology) \bvolume60 \bpages255–268. \endbibitem

- Roberts and Tweedie (1996) {barticle}[author] \bauthor\bsnmRoberts, \bfnmG. O.\binitsG. O. and \bauthor\bsnmTweedie, \bfnmR. L.\binitsR. L. (\byear1996). \btitleExponential convergence of Langevin distributions and their discrete approximations. \bjournalBernoulli \bvolume2 \bpages341–363. \endbibitem

- Ruthven (1984) {bbook}[author] \bauthor\bsnmRuthven, \bfnmD. M.\binitsD. M. (\byear1984). \btitlePrinciples of adsorption and adsorption processes. \bpublisherNew York: Wiley. \endbibitem

- Xun et al. (2013) {barticle}[author] \bauthor\bsnmXun, \bfnmX.\binitsX., \bauthor\bsnmCao, \bfnmJ.\binitsJ., \bauthor\bsnmMallick, \bfnmB.\binitsB., \bauthor\bsnmMaity, \bfnmA.\binitsA. and \bauthor\bsnmCarroll, \bfnmR. J.\binitsR. J. (\byear2013). \btitleParameter estimation of partial differential equation models. \bjournalJournal of the American Statistical Association \bvolume108 \bpages1009–1020. \endbibitem

- Zhang et al. (2016a) {barticle}[author] \bauthor\bsnmZhang, \bfnmYe\binitsY., \bauthor\bsnmLin, \bfnmGuangliang\binitsG., \bauthor\bsnmForssén, \bfnmPatrik\binitsP., \bauthor\bsnmGulliksson, \bfnmMårten\binitsM., \bauthor\bsnmFornstedt, \bfnmTorgny\binitsT. and \bauthor\bsnmCheng, \bfnmXiaoliang\binitsX. (\byear2016a). \btitleA regularization method for the reconstruction of adsorption isotherms in liquid chromatography. \bjournalInverse Probl. \bvolume32 \bpages105005. \endbibitem

- Zhang et al. (2016b) {barticle}[author] \bauthor\bsnmZhang, \bfnmY.\binitsY., \bauthor\bsnmLin, \bfnmG.\binitsG., \bauthor\bsnmGulliksson, \bfnmM.\binitsM., \bauthor\bsnmForssén, \bfnmP.\binitsP., \bauthor\bsnmFornstedt, \bfnmT.\binitsT. and \bauthor\bsnmCheng, \bfnmX.\binitsX. (\byear2016b). \btitleAn adjoint method in inverse problems of chromatography. \bjournalInverse Probl. Sci. Eng. \bpages1–26. \endbibitem