A Spectral Approach for Learning Spatiotemporal Neural Differential Equations

Abstract

Rapidly developing machine learning methods has stimulated research interest in computationally reconstructing differential equations (DEs) from observational data which may provide additional insight into underlying causative mechanisms. In this paper, we propose a novel neural-ODE based method that uses spectral expansions in space to learn spatiotemporal DEs. The major advantage of our spectral neural DE learning approach is that it does not rely on spatial discretization, thus allowing the target spatiotemporal equations to contain long-range, nonlocal spatial interactions that act on unbounded spatial domains. Our spectral approach is shown to be as accurate as some of the latest machine learning approaches for learning PDEs operating on bounded domains. By developing a spectral framework for learning both PDEs and integro-differential equations, we extend machine learning methods to apply to unbounded DEs and a larger class of problems.

keywords:

Neural ODE , Spectral method , Inverse problem , Unbounded domains1 Introduction

There has been much recent interest in developing machine-learning-based methods for learning the underlying physics from data. Although machine learning approaches have been proposed for many types of inverse problems [1, 2, 3, 4], most of them make prior assumptions on the specific form of the underlying partial differential equation (PDE) and use discretization i.e., grids or meshes, of a bounded spatial variable to approximate solutions to a presupposed PDE. To our knowledge, there are no methods for learning, without prior assumptions, the “dynamics” of more general spatiotemporal differential equations (DEs) such as

| (1) |

Previous methods either assume a specific form of the operator in order to satisfy e.g., a conservation law [5], or circumvent learning by simply reconstructing the map from the initial condition to the solution at a later time. Moreover, since most prevailing numerical methods for time-dependent DEs rely on spatial discretization, they cannot be applied to problems defined on an unbounded domain [6].

In this paper, we propose a spectral-based DE learning method that extracts the unknown dynamics in Eq. (1) by using a parameterized neural network to express . Throughout this paper, the term “spatiotemporal DE” refers to a differential equation in the form of Eq. (1). The formal solution is then represented by a spectral expansion in space,

| (2) |

where is a set of appropriate basis functions that can be defined on bounded or unbounded domains and are the associated coefficients.

The best choice of basis functions will depend on the spatial domain. In bounded domains, any set of basis functions in the Jacobi polynomial family, including Chebyshev and Legendre polynomials, provides similar performance and convergence rates; for semibounded domains , generalized Laguerre functions are often used; for unbounded domains , generalized Hermite functions are used if the solution is exponentially decaying at infinity, while mapped Jacobi functions are used if the solution is algebraically decaying [7, 8]. By using such spectral expansions, a numerical scheme for Eq. (1) can be expressed as ordinary differential equations (ODEs) in the expansion coefficients

| (3) |

There have been a variety of machine-learning-based methods recently developed for learning PDEs. Long et al. [9, 10] applied neural networks to learning PDEs that contain only constant-coefficient spatial differential operators. Convolutional neural networks were then used to reconstruct these constant coefficients. Xu et al. [11] then studied spatiotemporal PDEs of the form , where is the to-be-learned row vector of coefficients associated with each type of potential term in the PDE. These methods imposed an additive form for . A neural PDE solver that partially relaxes the need for prior assumptions on was proposed in [5]. This approach learns the mapping for time-homogeneous conservation PDEs of the form . Since is the solution on grid points at times , this method also relies on spatial discretization and can only be used to learn bounded-domain local operators. Additionally, a Fourier neural operator (FNO) approach [12] which learns the mapping between the function space of the initial condition and the function space of the solution within a later time range has also been developed [13]. Generalizations that include basis functions other than the Fourier series were developed in [14]. However, such methods treat and in the same way using nonadaptive basis functions, which cannot be efficiently applied to unbounded-domain spatiotemporal problems where basis functions often need to be dynamically adjusted.

Recently, a spectrally adapted PINN (s-PINN) method was proposed to solve specified unbounded-domain PDEs [15]. The method expresses the underlying unknown function in terms of spectral expansions, does not rely on spatial discretization, and can be applied to unbounded domains. However, like many other approaches, the s-PINN approach assumes that the PDE takes the specific form , where all terms in are known and only the -independent source term can be learned because the neural network only accepts times as inputs. Therefore, the s-PINN method is limited to parameter inference and source reconstruction.

The spectral neural DE learning method proposed here differs substantially from the s-PINN framework because it does not make any assumption on the form of the spatiotemporal DE in Eq. (1) other than that the RHS does not contain time-differentials or time-integrals of . It inputs both the solution (in terms of a spectral expansion) and into the neural network and applies a neural ODE model [16] (see Fig. 1 (c)). Thus, general DEs such as Eq. (1) can be learned with little knowledge of the RHS. To summarize, the proposed method presented in this paper

-

(i)

does not require assumptions on the -dependence of other than it should not contain any time-derivatives or time-integrals of . Both spatiotemporal PDEs and integro-differential equations can be learned in a unified way.

-

(ii)

directly learns the dynamics of a spatiotemporal DE by using a parameterized neural network that can time-extrapolate the solutions, and

-

(iii)

does not rely on spatial discretization and can thus learn unbounded-domain DEs. By using adaptive spectral representations, our neural DE learning method also learns the dynamics of the adaptive parameters adjusting the basis functions.

In the next section, we formulate our spectral neural DE learning method. In Section 3, we use our spectral neural DE method to learn the underlying dynamics of DEs, across both bounded and unbounded domains, by carrying out numerical experiments. Although our main focus is to address learning unbounded-domain DEs, we perform benchmarking comparisons with other recent machine-learning based PDE learning methods available for bounded-domain problems. Concluding remarks are given in Section 4. Additional numerical experiments and results are given in the Appendix. Below is a table of the notation used in this paper.

| Symbol | Definition |

| spectral expansion order | |

| neural network hyperparameters | |

| order basis function in a spatial spectral expansion | |

| spatial scaling factor in basis functions: | |

| spatial translation in basis functions: | |

| order spectral expansion approximation: | |

| generalized Hermite function of order |

2 Spectral neural DE learning method

We now develop a spectral neural DE learning method for general spatiotemporal DEs of the general structure of Eq. (1), assuming the operator does not involve time-differentiation or time-integration of . However, unlike in [5], the operator can take any other form including differentiation in space, spatial convolution, and nonlinear terms.

First, consider a bounded spatial domain . Upon choosing proper orthogonal basis functions , we can approximate by the spectral expansion in Eq. (2) and obtain ordinary equations of the coefficients as Eq. (3). We aim to reconstruct the dynamics in Eq. (3) by using a neural network

| (4) |

where is the set of parameters in the neural network. We can then construct the RHS of Eq. (1) using where is the component of the vector . We shall use the neural ODE to learn the dynamics by minimizing the mean loss function between the numerical solution and the observations . When data are provided at discrete time points , we need to minimize

| (5) |

with respect to . Here, is the solution at of the trajectory in the dataset and denotes the spectral expansion solution reconstructed from the coefficients obtained by the neural ODE of the sample at .

To solve unbounded domain DEs in , two additional parameters are needed to scale and translate the spatial argument , a scaling factor , and a shift factor . These factors, particularly in unbounded domains, often need to be dynamically adjusted to obtain accurate spectral approximations of the original function [17, 6, 18]. Note that given the observed , the ground truth coefficients as well as the spectral adjustment parameters and are obtained by minimizing the frequency indicator (introduced in [6])

| (6) |

that measures the error in the numerical representation of the solution [19]. Therefore, minimizing will also minimize the approximation error .

Generalizing the spectral approximation Eq. (2) to higher spatial dimensions, we can write

| (7) |

where is the Hadamard product.

The two parameters , which are also functions of time, can also be learned by the neural ODE. More specifically, we append the scale and displacement variables to the coefficient vector and write for the ODEs satisfied by . The underlying dynamics is approximated as

| (8) |

by minimizing with respect to a loss function that also penalizes the error in and

| (9) | ||||

Similarly, the DE satisfied by is

| (10) |

where

| (11) |

and is the component of .

Here, and are the scaling factor and the displacement of the sample at time , respectively, and is the penalty due to squared mismatches in the scaling and shift parameters and . In this way, the dynamics of the variables are also learned by the neural ODE so they do not need to be manually adjusted as they were in [19, 6, 18, 15].

In case the space is high-dimensional, and the solutions are sufficiently smooth and well-behaved, they can be approximated by restricting the basis functions to those in the hyperbolic cross space of the full tensor product of basis functions. If this projection is performed optimally, the effective dimensionality of the problem can be reduced [20, 21] without significant loss of accuracy. We will show that our method can also easily incorporate hyperbolic spaces to enhance training efficiency in modestly higher-dimensional problems.

3 Numerical experiments

Here, we carry out numerical experiments to test our spectral neural DE method by learning the underlying DE given data in both bounded and unbounded domains. In this section, all numerical experiments are performed using the odeint_adjoint function along with the dopri5 numerical integration method developed in [16] for training the neural network. Both stochastic gradient descent (SGD) and the Adam method are used to optimize parameters of the neural network. In this work, we set to be the relative squared error

| (12) |

in the loss function Eq. (5) used for training.

Since algorithms already exist for learning bounded-domain PDEs, we we first examine a bounded-domain problem in order to benchmark our spectral neural DE method against two other recent representative methods, a convolutional neural PDE learner [5] and a Fourier neural operator PDE learning method [13].

Example 1.

For our first test example, we consider learning a bounded-domain Burgers’ equation that might be used to describe the behavior of viscous fluid flow [22]:

| (13) | ||||

where

| (14) |

This model admits the analytic solution . We then sample the two independent random variables from to generate a class of solutions to Eq. (14) for both training and testing. To approximate in Eq. (4), we use a neural network that has one intermediate layer with 300 neurons and the ELU activation function. The basis functions in Eq. (2) are taken to be Chebyshev polynomials. For training, we use two hundred solutions (each corresponding to a pair of values of Eq. (13), record the expansion coefficients at different times . The test set consists of another solutions, also evaluated at times .

In this bounded-domain problem, we can compare our results with those generated from the Fourier neural operator (FNO) and the convolutional neural PDE learner methods. In the FNO method, four intermediate Fourier convolution layers with 128 neurons in each layer were used to input the initial condition and function values (with ) [13]. In the convolutional neural PDE learner comparison, we used seven convolutional layers with 40 neurons in each layer to input (with ) [5]. Small and were used in the convolutional neural PDE learner method because this method depends on both spatial and temporal discretization, requiring fine discretization in both dimensions. For all three methods, we used the Adam method to perform gradient descent with a learning rate to run 10000 epochs, which was sufficient for the errors in all three methods to converge. We list in Table 2 both the MSE error and the mean relative error

| (15) |

| Training error | Testing error | |||

| MSE | Mean relative | MSE | Mean relative | |

| convolutional | 2.39e-051.82e-05 | 1.68e-027.86e-03 | 1.10e-046.62e-05 | 2.82e-026.62e-03 |

| Fourier | 3.21e-071.53e-07 | 7.43e-031.76e-03 | 4.66e-073.43e-07 | 8.61e-032.86e-03 |

| spectral | 1.15e-069.72e-07 | 9.82e-034.95e-03 | 1.17e-069.73e-07 | 9.99e-034.97e-03 |

For the FNO and spectral PDE learning methods, we aim to minimize the relative squared loss (Eq. (12)), while for the convolution neural PDE method, we must minimize the MSE loss since only partial and local spatial information on the solution is inputted during each training epoch so we cannot properly define a relative squared loss. Thus, as shown in Table 2, the MSE of the FNO method is smaller than the MSEs of the other two methods on the training set while the convolutional neural PDE method performs the worst. Nonetheless, our proposed neural spectral PDE learning approach gives similar performance compared to the FNO method, providing comparable mean relative and MSE errors for learning the dynamics associated with the bounded-domain Burgers’ equation.

Additionally, the run times in this example were hours for the convolutional PDE learning method, 6 hours for the FNO method, and 5 hours for our proposed spectral neural DE learning approach. These computations were implemented on a 4-core Intel® i7-8550U CPU, 1.80 GHz laptop using Python 3.8.10, Torch 1.12.1, and Torchdiffeq 0.2.3. Overall, even in bounded domains, our proposed spectral neural DE learning approach compares well with the recently developed convolutional neural PDE and FNO methods, providing comparable errors and efficiency in learning the dynamics of Eq. (13).

The Fourier neural operator method works well in reconstructing the Burgers’ equation in Example 1, and there could be other even more efficient methods for reconstructing bounded domain spatiotemporal DEs. However, reconstructing unbounded domain spatiotemporal DEs is substantially different from reconstructing bounded domain counterparts. First, discretizing space cannot be directly applied to unbounded domains; second, if we truncate an unbounded domain into a bounded domain, appropriate artificial boundary conditions need to be imposed [23]. Constructing such boundary conditions is usually complex and improper boundary conditions can lead to large errors, resulting erroneous learning of the DE. A simple example of when the FNO will fail when we truncate the unbounded domain into a bounded domain is provided in A.

Since our spectral method uses basis functions, it obviates the need for spatial discretization and can be used to reconstruct unbounded-domain DEs. Dynamics in unbounded domains are intrinsically different from their bounded-domain counterparts because functions can display diffusive and convective behavior leading to, e.g., time-dependent growth at large . This growth poses intrinsic numerical challenges when using prevailing finite element/finite difference methods that truncate the domain.

Although it is difficult for most existing methods to learn the dynamics in unbounded spatial domains, our spectral approach can reconstruct unbounded-domain DEs by simultaneously learning the expansion coefficients and the evolution of the basis functions. To illustrate this, we next consider a one-dimensional unbounded domain inverse problem.

Example 2.

Consider learning the solution

| (16) |

and its associated parabolic PDE

| (17) |

This problem is defined on an unbounded domain and thus neither the FNO nor the convolutional neural PDE methods can be used as they rely on spatial discretization (and thus bounded domains). However, given observational data for different , we can calculate the spectral expansion of via the generalized Hermite functions [8]

| (18) |

and then use the spectral neural DE learning approach to reconstruct the dynamics in Eq. (1) satisfied by . Recall that the scaling factor and the displacement of the basis functions are also to be learned. To penalize misalignment of the spectral expansion coefficients and the scaling and displacement factors and , we use the loss function Eq. (9). Note that taking the derivative of with respect to in the first term of Eq. (9) would involve evaluating ; similarly, taking the derivative of with respect to would involve evaluating . Expressing in terms of the basis functions would involve a dense matrix-vector multiplication of the coefficients of the expansion , which might be computationally expensive during backward propagation in the stochastic gradient descent (SGD) procedure. Alternatively, suppose the parameter set of the neural network is before the training epoch. We can fix ( are fixed values and do not depend on the weights ) and then modify Eq. (9) to

| (19) | ||||

so that backpropagation within each epoch will not involve taking derivatives w.r.t. for in the first term of Eq. (19). The reason is that when are well fitted (e.g. are ground-truth values), Eq. (19) will simply become

| (20) |

so no derivative of w.r.t. will be used and only the gradient of in Eq. (4) w.r.t. arises. Therefore, we can consider separating the fitting of the coefficients and the fitting of .

We use 100 samples for training and another 50 samples for testing with . A neural network with two hidden layers, 200 neurons in each layer, and the ELU activation function is used for training. Both training and testing data are taken from Eq. (16) with sampled parameters .

Setting , we first compare the two different loss functions Eqs. (9) and (19). After running 10 independent training processes using SGD, each containing 2000 epochs and using learning rate , the average relative error when using the loss function Eq. (9) are larger than the average relative errors when using the loss function Eq. (19). This difference arises in both the training and testing sets as shown in Fig. 2(a).

In Fig. 2(b), we plot the average learned (RHS in Eq. (1)) for a randomly select sample at in the testing set. The dynamics learned by using Eq. (19) is a little more accurate than that learned by using Eq. (9). Also, using the loss function Eq. (19) required only hour of computational time compared to 5 days when learning with Eq. (9) (on the 4-core i7-8550U laptop). Therefore, for efficiency and accuracy, we adopt the revised loss function Eq. (19) and separately fit the dynamics of the adaptive spectral parameters () and the spectral coefficients .

We also explore how network architecture and regularization affect the reconstructed dynamics. The results are shown in B, from which we observe that a wider and shallower neural network with 2 or 4 intermediate layers and 200 neurons in each layer yields the smallest errors on both the training and testing sets, and short run times. We also apply a ResNet [24] as well as dropout [25, 26] to regularize the neural network structure. Dropout regularization does not reduce either the training error or the testing error probably because even with a feedforward neural network, the errors from our spectral neural DE learner on the training set are close to those on the testing set and there is no overfitting issue. On the other hand, applying the ResNet technique leads to about a 20% decrease in errors. Results from using ResNets and dropout are shown in B.

Next, we investigate how noise in the observed data and changes in the adaptive parameter penalty coefficient in Eq. (19) impact the results. Noise is incorporated into simulated observational data as

| (21) |

where is the solution to the parabolic equation Eq. (17) given by Eq. (16) and is a Gaussian-distributed noise that is both spatially and temporally uncorrelated (i.e., ). The noise term is assumed to be independent for different samples. We use a neural network with 2 hidden layers, 200 neurons in each layer, to implement 10 independent training processes using SGD and a learning rate , each containing 5000 epochs. Results are shown in Fig. 2(c) and further tabulated in C. For , choosing an intermediate leads the smallest errors and an optimal balance between learning the coefficients and learning the dynamics of . When is increased to nonzero values (), a larger is needed to keep errors small (see Fig. 2(c) and C). If the noise is further increased to, say, (not shown in Fig. 2), an even larger is needed for training to converge. This behavior arises because the independent noise contributes more to high-frequency components in the spectral expansion. In order for training to converge, fitting the shape of the basis functions by learning is more important than fitting noisy high-frequency components via learning . A larger puts more weight on learning the dynamics of and basis function shapes.

We also investigate how the intrinsic noise in the parameters of the solution’s distribution Eq. (16) affect the accuracy of the learned DE by our method. We found that if the intrinsic noise in is larger, then the training errors of the learned DE models are larger. However, compared to models trained on data with lower , training using noisier data leads to lower errors when testing data are also noisy. Training with noisier data leads to better performance when testing data are also noisy. The training and testing errors that show this feature are presented in D.

Finally, we test whether the parameterized (Eq. (8)) learned from the training set can extrapolate well beyond the training set sampling interval . To do this, we generate another 50 trajectories and sample each of then at random times . We then used models trained with and different to test. As shown in Fig. 2(d), our spectral neural DE learner can accurately extrapolate the solution to times beyond the training set sampling time intervals. We also observe that a stronger penalty on and () leads to better extrapolation results.

In the last example, we carry out a numerical experiment on learning the evolution of a Gaussian wave packet (which may depend on nonlocal interactions) across a two-dimensional unbounded domain . We use this case to explore improving training efficiency by using a hyperbolic cross space to reduce the number of coefficients in multidimensional problems.

Example 3.

We solve a 2D unbounded-domain problem of fitting a Gaussian wave packet’s evolution

| (22) |

where is the center of the wave packet and is the minimum positional spread. If , the Gaussian wave packets defined in Eq. (22) solves the stationary zero-potential Wigner equation, an equation often used in quantum mechanics to describe the evolution of the Wigner quasi-distribution function [27, 28]. We set and in Eq. (22) and independently sample to generate data. Thus, the DE satisfied by the highly nonlinear Eq. (22) is unknown and potentially involves nonlocal convolution terms. In fact, there could be infinitely many DEs, including complicated nonlocal DEs, that can describe the dynamics of Eq. (22). An example of such a nonlocal DE is

| (23) | ||||

We wish to learn the underlying dynamics using a parameterized in Eq. (8). Since the Gaussian wave packet Eq. (22) is defined in the unbounded domain , learning its evolution requires information over the entire domain. Thus, methods that depend on discretization of space are not applicable.

Our numerical experiment uses Eq. (22) as both training and testing data. We take and generate 100 trajectories for training. For this example, training with ResNet results in diverging gradients, whereas the use of a feedforward neural network yields convergent results. So we use a feedforward neural network with two hidden layers and 200 neurons in each hidden layer and the ELU activation function. We train across 1000 epochs using SGD with momentum (SGDM), a learning rate , , and . For testing, we generate another 50 trajectories, each with starting time but taken from . We use the spectral expansion

| (24) |

to approximate Eq. (22). We record the coefficients as well as the scaling factors and displacements at different .

Because are defined in a 2-dimensional space, instead of a tensor product, we can use a hyperbolic cross space for the spectral expansion to effectively reduce the total number of basis functions while preserving accuracy [20]. Similar to the use of sparse grids in the finite element method [29, 30], choosing basis functions in the space

| (25) | ||||

can reduce the effective dimensionality of the problem. We explored different hyperbolic spaces with different and . We use the loss function Eq. (19) with for training. The results are listed in E. To show how the loss function Eq. (19) depends on the coefficients in Eq. (24), we plot saliency maps [31] for the quantity 111We take derivatives w.r.t. to only the coefficients of in Eq. (19) and not with w.r.t. the expansion coefficients of the observational data ., the absolute value of the partial derivative of the loss function Eq. (19) w.r.t. averaged over 10 training processes.

As shown in Fig. 3(a,b), using leads to similar errors as the full tensor product , but could greatly reduce the number of coefficients and improve training efficiency. Taking a too large leads to larger errors because useful coefficients are left out. From Fig. 3(c-f), there is a resolution-invariance for the dependence of the loss function on the coefficients though using different hyperbolic spaces with different . We find that an intermediate (e.g., ) can be used to maintain accuracy and reduce the number of inputs/outputs when reconstructing the dynamics of Eq. (22). Overall, the “curse of dimensionality” can be mitigated by adopting a hyperbolic space for the spectral representation.

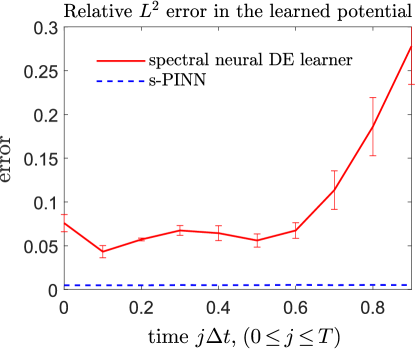

Finally, in F, we consider source reconstruction in a heat equation. Our proposed spectral neural DE learning method achieves an average relative error . On the other hand, if all terms on the RHS of Eq. (1) except an unknown source (which does not depend on the solution) is known, the recently developed s-PINN method [15] achieves a higher accuracy. However, if in addition to the source term, additional terms on on the RHS of Eq. (1) are unknown, s-PINNs cannot be used but our proposed spectral neural DE learning method remains applicable.

4 Conclusion

In this paper, we proposed a novel spectral neural DE learning method that is suitable for learning spatiotemporal DEs from spectral expansion data of the underlying solution. Its main advantages is that it can be applied to learning both spatiotemporal PDEs and integro-differential equations in unbounded domains, while matching the performance of the most recent high-accuracy PDE learning methods applicable to only bounded domains. Moreover, our proposed method has the potential to deal with higher-dimensional problems if a proper hyperbolic cross space can be justified to effectively reduce the dimensionality.

As a future direction, we plan to apply our spectral neural DE learning method to many other inverse-type problems in physics. One possible application is to learn the stochastic dynamics associated with anomalous transport [32] in an unbounded domain, which could be associated with fractional derivative or integral operators in the corresponding term. Finally, since the number of inputs (expansion coefficients) grows exponentially with spatial dimension, very high dimensional problems may not be sufficiently mitigated by even the most optimal cross space hyperbolicity (see Eq. (25)). Thus, higher dimensional problems remain challenging and exploring how prior knowledge on the observed data can be used within our spectral framework to address them may be a fruitful avenue of future investigation.

Acknowledgments

This work was supported in part by the Army Research Office under Grant W911NF-18-1-0345.

References

- [1] L. Bar, N. Sochen, Unsupervised deep learning algorithm for PDE-based forward and inverse problems, arXiv preprint arXiv:1904.05417 (2019).

- [2] J.-T. Hsieh, S. Zhao, S. Eismann, L. Mirabella, S. Ermon, Learning neural PDE solvers with convergence guarantees, in: International Conference on Learning Representations, 2018.

- [3] L. Ruthotto, E. Haber, Deep neural networks motivated by partial differential equations, Journal of Mathematical Imaging and Vision 62 (3) (2020) 352–364.

- [4] J. Sirignano, J. F. MacArt, J. B. Freund, DPM: A deep learning PDE augmentation method with application to large-eddy simulation, Journal of Computational Physics 423 (2020) 109811.

- [5] J. Brandstetter, D. E. Worrall, M. Welling, Message passing neural PDE solvers, in: International Conference on Learning Representations, 2021.

- [6] M. Xia, S. Shao, T. Chou, Efficient scaling and moving techniques for spectral methods in unbounded domains, SIAM Journal on Scientific Computing 43 (5) (2021) A3244–A3268.

- [7] K. J. Burns, G. M. Vasil, J. S. Oishi, D. Lecoanet, B. P. Brown, Dedalus: A flexible framework for numerical simulations with spectral methods, Physical Review Research 2 (2) (2020) 023068.

- [8] J. Shen, T. Tang, Tao, L.-L. Wang, Spectral Methods: Algorithms, Analysis and Applications, Springer Science & Business Media, New York, 2011.

- [9] Z. Long, Y. Lu, X. Ma, B. Dong, PDE-net: Learning PDEs from data, in: International Conference on Machine Learning, PMLR, 2018, pp. 3208–3216.

- [10] Z. Long, Y. Lu, B. Dong, PDE-net 2.0: Learning PDEs from data with a numeric-symbolic hybrid deep network, Journal of Computational Physics 399 (2019) 108925.

- [11] H. Xu, H. Chang, D. Zhang, DL-PDE: Deep-learning based data-driven discovery of partial differential equations from discrete and noisy data, Communications in Computational Physics 29 (3) (2021) 698–728.

- [12] A. Anandkumar, K. Azizzadenesheli, K. Bhattacharya, N. Kovachki, Z. Li, B. Liu, A. Stuart, Neural operator: Graph kernel network for partial differential equations, in: ICLR 2020 Workshop on Integration of Deep Neural Models and Differential Equations, 2020.

- [13] Z. Li, N. B. Kovachki, K. Azizzadenesheli, K. Bhattacharya, A. Stuart, A. Anandkumar, et al., Fourier neural operator for parametric partial differential equations, in: International Conference on Learning Representations, 2020.

- [14] V. Fanaskov, I. Oseledets, Spectral neural operators, arXiv preprint arXiv:2205.10573 (2022).

- [15] M. Xia, L. Böttcher, T. Chou, Spectrally adapted physics-informed neural networks for solving unbounded domain problems, Machine Learning: Science and Technology 4 (2) (2023) 025024.

- [16] R. T. Chen, Y. Rubanova, J. Bettencourt, D. Duvenaud, Neural ordinary differential equations, in: Proceedings of the 32nd International Conference on Neural Information Processing Systems, 2018, pp. 6572–6583.

- [17] T. Tang, The Hermite spectral method for Gaussian-type functions, SIAM Journal on Scientific Computing 14 (3) (1993) 594–606.

- [18] M. Xia, S. Shao, T. Chou, A frequency-dependent p-adaptive technique for spectral methods, Journal of Computational Physics 446 (2021) 110627.

- [19] T. Chou, S. Shao, M. Xia, Adaptive Hermite spectral methods in unbounded domains, Applied Numerical Mathematics 183 (2023) 201–220.

- [20] J. Shen, L.-L. Wang, Sparse spectral approximations of high-dimensional problems based on hyperbolic cross, SIAM Journal on Numerical Analysis 48 (3) (2010) 1087–1109.

- [21] J. Shen, H. Yu, Efficient spectral sparse grid methods and applications to high-dimensional elliptic problems, SIAM Journal on Scientific Computing 32 (6) (2010) 3228–3250.

- [22] H. Bateman, Some recent researches on the motion of fluids, Monthly Weather Review 43 (4) (1915) 163–170.

- [23] X. Antoine, A. Arnold, C. Besse, M. Ehrhardt, A. Schädle, A review of transparent and artificial boundary conditions techniques for linear and nonlinear Schrödinger equations, Communications in Computational Physics 4 (4) (2008) 729–796.

- [24] K. He, X. Zhang, S. Ren, J. Sun, Deep residual learning for image recognition, in: Proceedings of the IEEE conference on computer vision and pattern recognition, 2016, pp. 770–778.

- [25] G. E. Hinton, N. Srivastava, A. Krizhevsky, I. Sutskever, R. R. Salakhutdinov, Improving neural networks by preventing co-adaptation of feature detectors, arXiv preprint arXiv:1207.0580 (2012).

- [26] N. Srivastava, G. E. Hinton, A. Krizhevsky, I. Sutskever, R. Salakhutdinov, Dropout: a simple way to prevent neural networks from overfitting, Journal of Machine Learning Research 15 (2014) 1929–1958.

- [27] Z. Chen, Y. Xiong, S. Shao, Numerical methods for the Wigner equation with unbounded potential, Journal of Scientific Computing 79 (1) (2019) 345–368.

- [28] S. Shao, T. Lu, W. Cai, Adaptive conservative cell average spectral element methods for transient Wigner equation in quantum transport, Communications in Computational Physics 9 (3) (2011) 711–739.

- [29] H.-J. Bungartz, M. Griebel, Sparse grids, Acta Numerica 13 (2004) 147–269.

- [30] C. Zenger, W. Hackbusch, Sparse grids, in: Proceedings of the Research Workshop of the Israel Science Foundation on Multiscale Phenomenon, Modelling and Computation, 1991, p. 86.

- [31] K. Simonyan, A. Vedaldi, A. Zisserman, Deep Inside Convolutional Networks: Visualising Image Classification Models and Saliency Maps, in: Proceedings of the International Conference on Learning Representation (ICLR), 2014, pp. 1–8.

- [32] B. Jin, W. Rundell, A tutorial on inverse problems for anomalous diffusion processes, Inverse problems 31 (3) (2015) 035003.

Appendix A Using Fourier neural operator to reconstruct unbounded domain DEs

We shall show through a simple example that it is usually difficult to apply bounded domain DE reconstructing methods to reconstruct unbounded domain DEs even if we truncate the unbounded domain into a bounded domain, because appropriate boundary conditions must be provided. We wish to use the Fourier neural operator method to reconstruct the unbounded domain DE

| (26) |

If one imposes the initial condition , then

| (27) |

is the analytic solution to Eq. (26). For this problem, we will assume .

Since the Fourier neural operator (FNO) method relies on spatial discretization and grids, and cannot be directly applied to unbounded domain problems, we truncate the unbounded domain. Suppose one is interested in the solution’s behavior for . One approach is to truncate the unbounded domain to and use the FNO method to reconstruct the solution given . However, we show how improper boundary conditions of the truncated domain can leads to large errors.

For example, we assume the training set satisfies the boundary condition , which is not the correct boundary condition since it is inconsistent with the ground truth solution. Therefore, we will not be reconstructing the model in Eq. (26). We generated the testing dataset using the correct initial condition , without boundary conditions. The results are given in Table 3.

| Training error | Testing error | |||

| MSE | Mean relative | MSE | Mean relative | |

| Fourier | 3.78e-05 1.78e-05 | 6.09e-031.47e-03 | 8.95e-046.95e-05 | 3.07e-021.17e-03 |

From Table 3, the testing error is significantly larger than the training error because a different DE (not Eq. (26)) is constructed from the training data, which is not the heat equation we expect. Therefore, even if methods such FNO are efficient in bounded domain DE reconstruction problems, directly using them to reconstruct unbounded domain problems is not feasible if we cannot construct appropriate boundary conditions.

Appendix B Dependence on neural network architecture

The neural network structure of the parameterized may impact learned dynamics. To investigate how the neural network structure influences results, we use neural networks with various configurations to learn the dynamics of Eq. (16) in Example 2 in the noise-free limit. We set the learning rate and apply networks with 2,3,5,8 intermediate layers, and 50, 80, 120, 200 neurons in each layer.

| 2 | 3 | 5 | 8 | |

| 50 | ||||

| 80 | ||||

| 120 | ||||

| 200 |

| 2 | 3 | 5 | 8 | |

| 50 | ||||

| 80 | ||||

| 120 | ||||

| 200 |

From Tables 4 and 5, we see that a shallower and wider neural network yields the smallest error. Runtimes increase with the number of layers and the number of neurons in each layer; however, when the number of layers is small, the increase in runtime with the number of neurons in each layer is not significant. Thus, for the best accuracy and computational efficiency, we recommend a neural network with 2 hidden layers and 200 neurons in each layer.

Regularization of the neural network can also affect the spectral neural PDE learner’s ability to learn the dynamics or to reduce overfitting on the training set. We set in the loss function Eq. (19) and the training rate and train over 5000 epochs using SGD. We applied the ResNet and dropout techniques with a neural network containing 2 intermediate layers, each with 200 neurons. For the ResNet technique, we add the output of the first hidden layer to the output of the second hidden layer as the new output of the second hidden layer. For the dropout technique, each neuron in the second hidden layer is set to 0 with a probability . The results are presented in Table 6 below.

| Method | None | ResNet |

| Dropout | Dropout & ResNet | |

| 0.1 | ||

| 0.5 |

Table 6 shows the relative errors on the training set and testing set for Example 2 when there is no noise in both the training and testing data (). We apply regularization to the neural network, testing both the ResNet and the dropout techniques with different dropout probabilities . The errors are averaged over 10 independent training processes. Applying the ResNet technique leads to approximately a 20% decrease in the errors, whereas applying the dropout technique does not reduce the training error nor the testing error.

Appendix C How data noise and penalty parameter affect learning

We now investigate how different strengths and penalties affect the learning, including the dynamics of and . For each strength of noise , 100 trajectories are generated for training and another 50 are generated for testing according to Eq. (21). The penalty parameter tested are . The mean relative errors on the training set and testing set over 10 independent training processes are shown in Table 7 below and are plotted in Fig. 2(c).

| () | () | () | |

| () | () | () | |

| () | () | () | |

| () | () | () | |

| () | () | () | |

| () | () | () | |

| () | () | () |

Appendix D How noise in the parameter of the solutions affect the learned DE model

Here, we take different distributions of the three parameters in Eq. (16). We shall use and vary . For different , we train 10 independent models and the results are as follows.

| Train | 0 | | | |

| Training error | 4.43e-039.87e-04 | 1.25e-025.34e-04 | 1.64e-028.03e-03 | 1.62e-021.10e-03 |

| 0 | | | ||

| 4.43e-039.87e-04 | 2.81e-023.20e-03 | 3.88e-023.26e-03 | 5.64e-023.03e-03 | |

| 1.11e-026.55e-04 | 1.28e-025.77e-04 | 2.33e-021.40e-03 | 4.55e-022.40e-03 | |

| 1.24e-021.025e-03 | 1.07e-026.23e-04 | 1.65e-029.35e-04 | 2.95e-021.45e-03 | |

| 1.24e-021.07e-03 | 9.22e-038.66e-04 | 1.03e-027.98e-04 | 1.68e-021.24e-03 |

The training and testing error is the same for models with in Table 8 because if there is no uncertainty in the initial condition, all trajectories are the same. Though giving larger training errors, models trained on training sets with larger variances in the parameters of the initial condition could generalize better on testing sets where the variances in the parameters of the initial condition is larger.

Appendix E Choosing the hyperbolic cross space with different and

If the spectral expansion order is sufficiently large, using a hyperbolic cross space Eq. (25) can effectively reduce the required number of basis functions while maintaining accuracy. In our experiments, we set and (full tensor product), . We train the network for 1000 epochs using SGDM with a learning rate , , and . The penalty coefficient in Eq. (19) is .

| 5 | 9 | ||

| 36, 0.0180 (0.0164) | 100, 0.0204, (0.0194) | 225, 0.0110, (0.0105) | |

| 23, 0.0342, (0.0302) | 51, 0.0185, (0.0166) | 92, 0.0102, (0.0093) | |

| 21, 0.0414, (0.0363) | 42, 0.0230, (0.0210) | 70, 0.0309, (0.0279) | |

| 20, 0.0459, (0.0409) | 37, 0.0413, (0.0365) | 55, 0.0336, (0.0295) |

From Table 9, we see that a hyperbolic space with leads to minimal errors on the testing set. Furthermore, the number of basis functions for the hyperbolic space with is smaller than the full tensor product space for when , so the hyperbolic space with could be close to the most appropriate choice. We shall also use the saliency map to investigate the role of different frequencies and plot for different and in Fig. (4), where the loss function is Eq. (19). Even for different choices of , the changes in frequencies on the lower-left part of the saliency map (corresponding to a moderate and a large ) have the largest impact on the loss. This “resolution-invariance” justifies our choices that the proper hyperbolic space should have a larger but a moderate so that the total number of inputs or outputs are reduced to boost efficiency in higher-dimensional problems while accuracy is maintained.

Note that the errors in Table 9 can be larger on the training set than on the testing set, especially for larger training set errors (e.g., for ). This arises because the largest sampling time among the training samples is while for the testing samples the largest time is smaller than 1. If the trained dynamics does not approximate the true dynamics in Eq. (8) well, the error of the training samples with time will be larger than the error of testing samples due to error accumulation.

Appendix F Reconstructing learned dynamics

Table 10 shows that the errors from a new testing set, with randomly sampled time , , do not differ significantly from the errors in the first row of Table 7. This suggests that our PDE learner can accurately extrapolate the solution to times beyond those of the training samples.

| Mean relative error | |||||||

| s.d. of relative error |

To make a comparison with the s-PINN method proposed in [15], we consider the following inverse-type problem of reconstructing the unknown potential in

| (28) |

by approximating . The function is taken to be

| (29) |

where is i.i.d. sampled for different trajectories. Therefore, the true potential in Eq. (5) is

| (30) |

which is independent of . We use 100 trajectories to learn the unknown potential with . In the s-PINN method, since only is inputted, only one reconstructed (which is the same for all trajectories) is outputted in the form of a spectral expansion. However, in our spectral neural DE learning method, will be different for different inputted giving rise to a changing error along the time horizon. The mean and variance of the relative error is plotted in Fig. 5.

When all but the potential on the RHS of Eq. (1) is known, the s-PINN is more preferable because more information is inputted as part of the loss function in [15]. Nevertheless, our spectral neural DE learner can still achieve a relative error , indicating that without any prior information it can still reconstruct the unknown source term with acceptable accuracy. However, the accuracy of our spectral neural DE learner for reconstructing the potential deteriorates as the time horizon increases. Since solutions at later times include errors from earlier times, minimizing Eq. (19) requires more accurate reconstruction of the dynamics (RHS of Eq. (1)) at earlier times than at later times.