A Sparsity Adaptive Algorithm to Recover NB-IoT Signal from Legacy LTE Interference

Abstract

As a forerunner in 5G technologies, Narrowband Internet of Things (NB-IoT) will be inevitably coexisting with the legacy Long-Term Evolution (LTE) system. Thus, it is imperative for NB-IoT to mitigate LTE interference. By virtue of the strong temporal correlation of the NB-IoT signal, this letter develops a sparsity adaptive algorithm to recover the NB-IoT signal from legacy LTE interference, by combining -means clustering and sparsity adaptive matching pursuit (SAMP). In particular, the support of the NB-IoT signal is first estimated coarsely by -means clustering and SAMP algorithm without sparsity limitation. Then, the estimated support is refined by a repeat mechanism. Simulation results demonstrate the effectiveness of the developed algorithm in terms of recovery probability and bit error rate, compared with competing algorithms.

Index Terms:

-means clustering, LTE interference, Narrowband Internet of Things, sparse recovery.I Introduction

With the online convening of the ITU-R WP5D #35 meeting held on July 9th, 2020, Narrowband Internet of Things (NB-IoT) has been formally approved as part of the 5G standard, aiming at massive machine-type communications. As a forerunner in 5G ecosystem construction and industrial applications, NB-IoT will be inevitably coexisting with legacy Long-Term Evolution (LTE) systems. In real-world applications, NB-IoT can operate in three distinct modes: stand-alone, guard-band, or in-band. Among them, the in-band mode impacts NB-IoT and LTE performance as it bundles NB-IoT directly into the LTE’s channels. Thus, eliminating interference from legacy LTE signal benefits the performance of in-band operating NB-IoT.

As the bandwidth of NB-IoT is much smaller than that of LTE, the NB-IoT signal is sparse in the frequency domain. Accordingly, recovering the NB-IoT signal from wideband LTE interference can be modeled as a sparse recovery problem. In recent years, the theory of compressed sensing (CS) [1] was widely used to solve various sparse recovery problems in wireless systems. For instance, in [2] the CS theory was exploited to cancel narrow-band interference (NBI) in orthogonal frequency division multiplexing (OFDM) systems. In [3, 4], several CS-based greedy algorithms were developed for joint data recovery and NBI mitigation in OFDM systems. In [5], the CS theory was applied to achieve both high detection and low false alarm probabilities for wideband spectrum sensing in cognitive radio systems. On the other hand, there have been extensive works on designing CS-based greedy algorithms, such as subspace pursuit [6], sparsity adaptive matching pursuit (SAMP) [7], and iterative reweighted least-squares [8]. However, the CS theory requires that an observation matrix must satisfy the restricted isometry property [9], which does not always hold in practice.

Unlike the statistical schemes described above, machine learning was recently applied in [10] to eliminate NB-IoT interference to LTE system, where the support of NB-IoT subcarriers was located by an initial support distribution vector. In particular, this initial vector was first used to generate candidate supports from which several favorable ones were chosen, then the support distribution vector was updated as per the favorable ones by minimizing their cross-entropy. However, NB-IoT signal was simply modeled as an NBI in [10], without exploiting the signal characteristics. By accounting for the continuous distribution of multiple NB-IoT subcarriers, a recent work [11] developed an intelligent recovery algorithm based on -means clustering, where the support of subcarriers was first estimated based on -means strategy and then located by a sliding window whose length equals the prior sparsity of NB-IoT signals.

For in-band operating NB-IoT, as the coherence time of NB-IoT signal is much larger than that of one OFDM symbol[12], the NB-IoT signal is characterized by strong temporal correlation, implying it has invariant support over one received OFDM frame of interest [10]. On account of the temporal correlation effect, this letter designs a sparsity adaptive recovery algorithm by combining -means clustering and SAMP algorithm. In particular, the support of the NB-IoT signal is first estimated coarsely by -means clustering and SAMP algorithm without sparsity limitation. Then, the estimated support is refined by a repeat mechanism. Compared with state-of-the-art competing algorithms, extensive simulation results demonstrate the effectiveness of the developed algorithm in terms of recovery probability, bit error rate, and convergence.

Notation: Vectors and matrices are denoted by lower- and upper-case letters in bold typeface. The superscripts and indicate the pseudo-inverse and conjugate transpose, respectively. The matrices and with size refer to discrete Fourier transform (DFT) and inverse discrete Fourier transform (IDFT), respectively. The symbol transforms in time domain into frequency domain. The norms and stand for the - and -norm, respectively. The operator gives the cardinality of set and indicates the submatrix of , whose columns are indexed by set .

II System Model

As shown in Fig. 1, the base station (BS) multiplexes the NB-IoT and LTE traffic onto the same frequency spectrum. To be specific, the NB-IoT occupies one physical resource block (PRB) in the LTE system bandwidth while the LTE occupies the other PRBs. Obviously, the mutual interference between NB-IoT and LTE signals is inevitable.

Figure 2 depicts the frame structure of LTE. It is clear that the zero-padding (ZP) is the guard interval between two consecutive OFDM blocks, so as to eliminate the inter-symbol interference (ISI). As a result, each OFDM symbol is composed of an OFDM block consisting of subcarriers and a length- zero sequence.

Suppose the channel between an LTE user equipment (UE) and its associated BS has a channel impulse response (CIR) where is the length of CIR, then, the received LTE signal at the BS can be formulated as

| (1) |

where denotes the transmitted LTE signal in the frequency domain; indicates the zero-padding process; is modeled as a lower triangular Toeplitz matrix whose first column is , where . In view of all-zero submatrix in , can be expressed as a circulant matrix, with first row being .

As specified in Release 13 of the 3GPP specification TS 36.211 [13], a transport data block of NB-IoT is first generated through channel coding, modulation and resource mapping, and then transmitted over resource units (RUs). As illustrated in Fig. 3, there are four distinct RU formats in NPUSCH, with each occupying different subcarriers and slots. For instance, the RU format 1 occupies subcarriers and slots whereas format 4 occupies subcarrier and slots.

Based on the aforementioned discussion, the received NB-IoT signal at the BS can be formulated as a sparse signal:

| (2) |

with

| (3) |

where is the signal amplitude at the subcarrier, and and are the indices of the first and last subcarriers of the NB-IoT signal, respectively. is the support of , namely, the index set of nonzero elements of . Also, it is evident that with , as configured in Fig. 3.

In light of (1) and (2), the received signal in time domain at the BS can be expressed as

| (4) | ||||

| (5) |

where denotes an additive white Gaussian noise (AWGN) with zero mean and covariance matrix . Moreover, the instantaneous received power of the NB-IoT signal and LTE signal can be explicitly computed as

| (6) |

respectively. By definition, the received signal-to-noise ratio (SNR) is given by while the received signal-to-interference ratio (SIR) is .

III Algorithm Development

In this section, we first preprocess the received signal given by (5) so as to eliminate the interference caused by the LTE signal. Then, a sparsity adaptive algorithm combining -means clustering and SAMP algorithm is developed to efficiently recover NB-IoT signal.

III-A Preprocessing the Received Signal

As defined after (1) is a circulant matrix, it can be diagonalized such that (5) can be rewritten as

| (7) |

where is a diagonal matrix. After performing DFT over (7), we obtain the received signal in frequency domain:

| (8) |

Now, we are in a position to mitigate the LTE signal in (8). In light of being an orthogonal matrix and being a diagonal matrix, let , then, multiplying by gives

| (9) |

Next, by recalling the special structure of the ZP-OFDM system illustrated in Fig. 2, we can fully eliminate the LTE signal by using the zero-padding appended at the end of each OFDM block. To be specific, let , then, multiplying by yields

| (10) |

where is known as an observation matrix. Thus, our remaining task is to recover the sparse signal from the postprocessed .

As shown in Fig. 3, when NB-IoT operates in the in-band mode, the NB-IoT signal in frequency domain occupies several consecutive subcarriers. More accurately, the number of subcarriers is allowed to be , , or , i.e., . This feature implies that shown in (10) is a linear combination of several consecutive column vectors of . In other words, the correlation coefficients of and each column vector of will show one or more spikes, which can be located by the -means clustering.

Applying the least squares (LS) principle to (10), the optimization problem can be formulated as

| (11) | ||||

| (12) |

where the objective function is indeed the -norm of the residue error, that is, .

III-B Coarse Estimation of the Support

In principle, finding the support of amounts to choosing a set of consecutive column vectors of such that their linear combination minimizes the residue error in (11). To this end, the correlation coefficient of and each column of is computed as

| (13) |

On account of the continuous distribution of the support of (cf. Fig. 3), we employ the -means clustering algorithm to classify these coefficients by Euclidean distance [14]. Suppose that we obtain clusters, say, , where contains correlation coefficients in the cluster, then, the optimal cluster is determined by

| (14) |

where is the cluster with the largest mean value of the correlation coefficients. As a result, the optimal column index set is given by

| (15) |

Now, since is more likely a linear combination of column vectors whose column indices belong to , the optimization problem can be further simplified as

| (16) | ||||

| (17) |

To deal with , we define a count vector initialized as a null vector, then, the SAMP algorithm is used to solve the minimization problem in (16) without considering the sparsity constraint in (17). With the estimated support denoted , the count vector is updated as per

| (18) |

It is noteworthy that, in practice, the column vectors chosen by shown in (15) may be linearly dependent. If so, this dependence would degrade the recovery probability. To deal with this situation, we randomly disturb the columns of to form a new observation matrix and corresponding measurement vector . According to (13)-(15), a new optimal column index set is generated and in is replaced by . Then, the SAMP algorithm is repeatedly used to solve the problem in (16) again. The estimated support is denoted , and the count vector proceeds with updating by according to (18). The aforementioned operations are repeated times to obtain the final count vector , which is next used to refine the estimate of the support.

III-C Refined Estimation of the Support

To minimize the -norm of the residue error in (16), the estimated support by the SAMP at each repetition described above does not satisfy the constraint in (17). Now, we exploit the final count vector to refine the estimate of the support. It is not hard to understand that, after repetitions, the final contains the distribution information of the real support of NB-IoT signal. Since NB-IoT occupies several continuous subcarriers in the LTE spectrum, finding the real support of the NB-IoT signal is equivalent to finding an index range in where an spike occurs.

The procedure to recover the support of NB-IoT signal from the final count vector is as follows. First, we define a difference vector with entries given by

| (19) |

Then, given the indices of the minimum and maximum values in the difference vector , denoted and , respectively, determined by

| (20) |

the starting point and endpoint of the spike are located. Next, with and , a sample set can be generated as per

| (21) |

If , then it implies that the elements of between indices and shape one spike. Further, if , the estimated optimal support is finally given by

| (22) |

Otherwise, repeat the aforementioned operations until and . Simulation results to be discussed at the end of the next section demonstrate that the algorithm converges after repetitions.

With the optimal support estimated, the transmitted NB-IoT signal can be recovered by using the LS principle. Specifically, let , then can be expressed as

| (23) |

To sum up, the developed algorithm to recover the NB-IoT signal from LTE interference is formalized in Algorithm 1.

IV Simulation Results and Discussions

In this section, we present and discuss the computational complexity and simulation results pertaining to the developed algorithm. In the simulation experiments, to generate the NB-IoT signal, the information bits are encoded by Turbo code with code rate and the coded bits are modulated with quadrature phase shift keying (QPSK) constellation. As for the LTE signal, the OFDM block length is set to with ZP length . The length of CIR is . In the signal recovery algorithm, the maximum iteration number is set to with each the maximum repetition number , and the number of clusters generated by -means clustering is . The number of NB-IoT subcarriers is specified as , which coincides with the real configuration shown in Fig. 3.

IV-A Computational Complexity Analysis

Table I compares the computational complexity of the proposed algorithm with three benchmark ones developed in [7, 10, 11]. It is observed that the proposed algorithm has lower complexity than the classic SAMP [7], as the latter locates directly the support of NB-IoT subcarriers without any pre-estimate. The computational complexity of our algorithm and that in [11] (denoted ‘CWS’ for short) is independent of the number of NB-IoT subcarriers (i.e., ), whereas that in [10] (denoted ‘SCEM’ for short) increases quadratically with . The proposed algorithm has a bit higher complexity than that in [11] because the latter assumes the prior sparsity of NB-IoT signal, which is however unknown in practice.

IV-B Simulation Results and Discussions

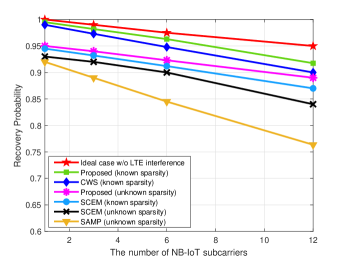

Figure 4 shows the recovery probability versus the number of NB-IoT subcarriers. In the pertaining simulation setup, the SNR is fixed to dB while the SIR is dB, which means that the system is interference dominated. It is seen from Fig. 4 that the recovery probability decreases with the number of NB-IoT subcarriers, as more subcarriers suffer higher interference. With sparsity known, the developed algorithm outperforms the SCEM and CWS algorithm and approaches the performance in the ideal case. On the other hand, in the case of unknown sparsity, the developed algorithm performs much better than the SCEM and SAMP algorithm.

Figure 5 illustrates the bit error rate (BER) versus the SNR of the NB-IoT signal with known sparsity, where the SIR is fixed to dB and in the left panel (or in the right panel). It is seen that the BER decreases with SNR but increases with the number of NB-IoT subcarriers, as expected. In the case of , given the target BER being , the left panel of Fig. 5 shows that the required SNR corresponding to the ideal case is about dB and the SNR required by the proposed algorithm is about dB, which outperforms the CWS algorithm by dB and the SCEM algorithm by dB.

Figure 6 depicts the BER versus the SNR of the NB-IoT signal with unknown sparsity, where the SIR is fixed to dB and in the left panel (or in the right panel). It shows that the BER of the proposed algorithm approaches that in the ideal case without interference whereas the SAMP and SCEM algorithm perform much worse.

Figure 7 shows the recovery probability versus the maximum repetition number in each iteration. In the simulation setup, the number of NB-IoT subcarriers is set to with SNR being dB and SIR being dB. It is observed that all three curves converge after 30 repetitions. In the case of known sparsity, the proposed algorithm obtains recovery probability, which outperforms the CWS algorithm by , albeit a bit slower convergence. In the case of unknown sparsity, the CWS algorithm does not work anymore but the proposed algorithm has a recovery probability about .

V Conclusions

In this letter, a sparsity adaptive algorithm was designed to recover NB-IoT signal from legacy LTE interference. In particular, by using the strong temporal correlation of NB-IoT signal, the support of the NB-IoT signal was first estimated in a coarse way, and then was refined by a repeat mechanism. As the developed two-stage algorithm needs neither the restricted isometry property on the observation matrix nor the sparsity information of the NB-IoT signal, it is promising in real-world applications.

References

- [1] Y. C. Eldar and G. Kutyniok, Compressed Sensing: Theory and Applications. Cambridge University Press, 2012.

- [2] A. Gomaa and N. Al-Dhahir, “A compressive sensing approach to NBI cancellation in mobile OFDM systems,” in Proc. IEEE GLOBECOM’2010, 2010, pp. 1–5.

- [3] H. Al-Tous, I. Barhumi, and N. Al-Dhahir, “Atomic-norm for joint data recovery and narrow-band interference mitigation in OFDM systems,” in Proc. IEEE PIMRC’2016, 2016, pp. 1–5.

- [4] H. Al-Tous, I. Barhumi, A. Kalbat, and N. Al-Dhahir, “ADMM for joint data and off-grid NBI recovery in OFDM systems,” in Proc. IEEE WCNC’2018, 2018, pp. 1–6.

- [5] X. Zhang, Y. Ma, Y. Gao, and S. Cui, “Real-time adaptively regularized compressive sensing in cognitive radio networks,” IEEE Trans. Veh. Technol., vol. 67, no. 2, pp. 1146–1157, Feb. 2018.

- [6] W. Dai and O. Milenkovic, “Subspace pursuit for compressive sensing signal reconstruction,” IEEE Trans. Inf. Theory, vol. 55, no. 5, pp. 2230–2249, May 2009.

- [7] T. T. Do, L. Gan, N. Nguyen, and T. D. Tran, “Sparsity adaptive matching pursuit algorithm for practical compressed sensing,” in Proc. 42nd ACSSC, 2008, pp. 581–587.

- [8] H. Xie, P. Wu, F. Tan, and M. Xia, “Adaptively-regularized compressive sensing with sparsity bound learning,” IEEE Commun. Lett., vol. 25, no. 4, pp. 1283–1287, Apr. 2021.

- [9] I. Daubechies, R. DeVore, M. Fornasier, and C. S. Güntürk, “Iteratively reweighted least squares minimization for sparse recovery,” Commun. Pure and Applied Math., vol. 63, no. 1, pp. 1–38, Jan. 2010.

- [10] S. Liu, L. Xiao, Z. Han, and Y. Tang, “Eliminating NB-IoT interference to LTE system: A sparse machine learning-based approach,” IEEE Internet of Things J., vol. 6, no. 4, pp. 6919–6932, Aug. 2019.

- [11] Y. Guo, P. Wu, and M. Xia, “Recovering NB-IoT signal from legacy LTE interference via K-means clustering,” in Proc. IEEE 93rd VTC’2021-Spring, Helsinki, Finland, Apr. 2021, pp. 1–5.

- [12] S. Liu, F. Yang, J. Song, and Z. Han, “Block sparse Bayesian learning-based NB-IoT interference elimination in LTE-advanced systems,” IEEE Trans. Commun., vol. 65, no. 10, pp. 4559–4571, Oct. 2017.

- [13] 3GPP, TS 36.211: “Evolved Universal Terrestrial Radio Access (E-UTRA); Physical Channels and Modulation (Release 15)”, 2018.

- [14] C. M. Bishop, Pattern Recognition and Machine Learning. Springer-Verlag New York, Inc., 2006, ch. 9, pp. 423–459.