A Sharp Fourier Inequality

and the Epanechnikov Kernel

Abstract.

We consider functions and kernels normalized by , making the convolution a “smoother” local average of . We identify which choice of most effectively smooths the second derivative in the following sense. For each , basic Fourier analysis implies there is a constant so for all . By compactness, there is some that minimizes and in this paper, we find explicit expressions for both this minimal and the minimizing kernel for every . The minimizing kernel is remarkably close to the Epanechnikov kernel in Statistics. This solves a problem of Kravitz-Steinerberger and an extremal problem for polynomials is solved as a byproduct.

1. Introduction

We are interested in the study of functions . These functions appear naturally in many applications (for example as “time series”) and a natural problem that arises frequently is to take local averages at a fixed, given scale. A popular way is to fix a kernel and consider the convolution

A natural question is now which kernel one should pick. We will always assume that the kernel is normalized so that the convolution is indeed a local average. There is no “right choice” of a kernel as different choices of weights are optimal in different ways. For example, in image processing theory [16] one is interested in kernels so that for any , the convolution has fewer local extrema than ; this property together with the other “scale-space axioms” uniquely characterizes the Gaussian kernel [1, 12, 23]. In kernel density estimation, one is interested in a kernel that minimizes least-squared error, which uniquely characterizes the Epanechnikov kernel [7]. In this paper, we build on the work of Kravitz and Steinerberger [15] and take the approach of asking that the convolution be as smooth as possible, which we show uniquely characterizes yet another kernel in the second derivative case.

To make this precise, first define the discrete derivative by and define higher-order derivatives inductively by . Then it follows from basic Fourier analysis (see Section 3.3) that for every kernel and every , there exists a constant so that

We can now ask a natural question.

Question. Given a positive integer , how small can be and which convolution kernels attain the optimal constant?

Such a kernel would then be the “canonical” kernel producing the smallest th derivatives and is a natural candidate for use in practice. The problem has been solved by Kravitz and Steinerberger when .

Theorem (Kravitz-Steinerberger [15]).

For any normalized ,

with equality if and only if is the constant kernel.

That is, averaging by convolving with the characteristic function of an interval best minimizes the first derivative. Kravitz and Steinerberger also studied the case under the assumption has non-negative Fourier transform.

Theorem (Kravitz-Steinerberger [15]).

For any normalized with nonnegative Fourier transform,

with equality if and only if is the triangle function

The main result of this paper resolves the case without additional assumptions on the kernel, providing the optimal constant and optimal kernel for all .

Theorem (Main Result).

A quick computation shows the optimal kernels satisfy, as , the following asymptotic equivalence; notice the asymptotic improvement from to when removing the nonnegative Fourier transform restriction:

| (1) |

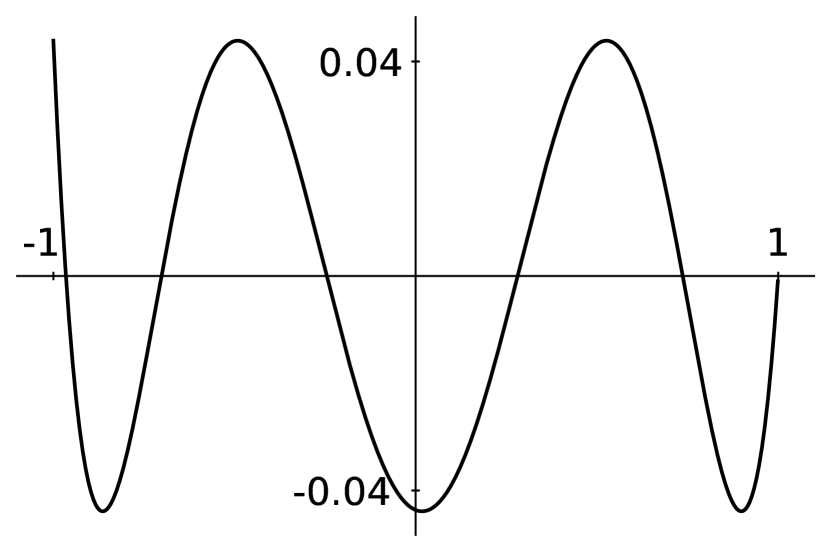

The optimal kernel for each is given as an integral expression by (3) in the following section, and Figure 1 pictures the optimal kernels and . Figure 2 depicts a time series function encoding the water level of Lake Chelan over a two week period as well as the smoothed data . Notice this smoothing reduces noise and clarifies long-term trends.

As seen in Figure 1, the optimal kernel resembles a parabola, but it turns out the points do not quite lie on any parabola. However, choosing weights by sampling from a parabola results in the discrete Epanechnikov kernel for each , which is a simple and effective approximation of the optimal kernel. Indeed, we show the parabolic Epanechnikov kernel has constant within of the optimal constant for large , providing a new reason to use the Epanechnikov kernel.

2. Results

2.1. A Sharp Fourier Inequality

We can rewrite the main result discussed in the Introduction as the following Fourier inequality. As usual, we only consider kernels normalized so that .

Theorem 1 (Main Result, restated).

Note the discrete Laplacian is precisely the discrete second derivative defined in the Introduction. The optimal that yields equality in Theorem 1 for any can be written explicitly by defining to be symmetric , then for setting

| (3) |

where is the th Chebyshev polynomial (as defined in Section 3.1), and

| (4) |

This result extends the work of Kravitz and Steinerberger [15] and adds to the recent research activity on sharp Fourier inequalities. For example, there is current research on sharp Fourier restriction and extension inequalities [5, 4, 17, 19, 20], on sharp Strichartz inequalities [8, 11], on sharp Hausdorff-Young inequalities [13, 14], and other Fourier inequalities [2, 3, 6, 9]

2.2. The Epanechnikov Kernel

The optimal kernel has a complicated expression as given in (3). However, as seen in Figure 1, this optimal resembles a parabola, and conversely we find that choosing weights by sampling from a parabola does extraordinarily well in smoothing the Laplacian of a given function. This choice of weights yields the discrete normalized Epanechnikov kernel defined by

| (5) |

The Epanechnikov kernel is widely used [10, 18, 22] in both theory and applications. This popularity stems from its computational efficiency and from Epanechnikov’s proof [7] that it is the optimal kernel to use in kernel density estimation (KDE) in terms of minimizing expected mean integrated square error. The following theorem reveals the Epanechnikov kernel is less than 2% worse than optimal in smoothing the Laplacian, providing another reason to use the Epanechnikov kernel in practice.

Theorem 2.

Let be as in (6). Then as we get the asymptotic equivalence

The constant in the above theorem is a universal constant defined by

| (6) |

2.3. A Sharp Polynomial Inequality

After taking a Fourier transform (see Section 3.3), Theorem 1 reduces to the following claim about polynomials, which is interesting in its own right.

Theorem 3.

Let be a polynomial of degree at most with . Then

with equality if and only if where is given in (4).

3. Proofs

3.1. A Sharp Polynomial Inequality

This section proves Theorem 3, which is the key to the proof of Theorem 1 and the formula for the optimal kernel given in (3). This proof makes heavy use Chebyshev polynomials, so we first recall some facts about Chebyshev polynomials for the convenience of the reader.

Chebyshev polynomials are a family of polynomials defined by declaring and , then defining the rest with the recurrence

A quick induction argument reveals Chebyshev polynomials also satisfy

| (7) |

The above relation is responsible for many nice properties of Chebyshev polynomials and so we typically consider Chebyshev polynomials over the domain where this formula can apply. This relation also reveals the zeroes of the Chebyshev polynomial are located at for . By taking the derivative of both sides of (7), we find that the derivative of Chebyshev polynomials over can be written for some polynomial that satisfies . These polynomials are called Chebyshev polynomials of the second kind. Finally, due to (7), each Chebyshev polynomial satisfies the equioscillation property, meaning there exists extrema so that .

We are now equipped to prove Theorem 3. The equation defining the optimal polynomial is long, but the idea behind its construction is a simple modification of Chebyshev polynomials. To construct a degree function that stays minimal over , we slightly stretch the Chebyshev polynomial to the function so that and . Then defining provides the minimal degree polynomial that satisfies the necessary conditions.

Proof of Theorem 3.

We start by verifying the claimed optimal polynomial fulfills the restrictions and satisfies the claimed inequality for any positive integer . We denote by and observe is a scaled and stretched Chebyshev polynomial for carefully chosen constant and linear change of variables :

Writing immediately reveals is a polynomial of order . The change of variables is designed so we have

using that is a zero of the Chebyshev polynomial . Thus we have is a well-defined polynomial of order as claimed. Next we show . First observe and now compute

where we used for the Chebyshev polynomial of the second kind with order . Recall and therefore, continuing our computation, we see the constant is chosen so that we get

Therefore fulfills the necessary conditions. To verify satisfies the equality, use and that on to see

This is indeed an equality because

We now show is the unique polynomial of degree at most that achieves this equality by deriving an equioscillation property for and modifying the standard argument for the minimizing property of Chebyshev polynomials. Recall the Chebyshev polynomial has extrema so that the equioscillation property is satisfied. To see has a similar equioscillation property, define inputs for , and observe by strictly increasing. Next, note the second extrema of Chebyshev polynomials is given by and so ; thus by strictly increasing, . Now compute , which implies . Therefore we find the inputs satisfy , and . That is, has maxima satisfying the equioscillation property in . Next suppose is any polynomial of degree so that and

We argue by using our equioscillation property to show the polynomials must intersect sufficiently many times. Formally, we count the zeros of the polynomial . By our equioscillation property and the assumption over , we require for odd and for even. Therefore must have a zero in each interval by the intermediate value theorem. That is, the intervals all contain a zero. Furthermore if any two intervals and share a zero at , then we can show will have a zero of multiplicity at least two at and therefore still has at least zeros counted with multiplicity on as follows. By at , we know has a minimum or maximum at , implying . Similarly, if , then and so we also find that has a minimum or maximum at , so . Therefore and so indeed has a zero of multiplicity at least two at and so has zeros on .

Now note and so is a polynomial of degree with the same roots on . Additionally observe

and therefore, evaluating at reduces to and so has roots. However because is of degree this implies is the zero polynomial. That is, . ∎

3.2. Reduction to symmetric kernels

Our Fourier analysis argument for Theorem 1 given in Section 3.3 only holds for symmetric kernels, satisfying . Luckily, the following lemma demonstrates that it is sufficient to only prove Theorem 1 for the class of symmetric normalized kernels.

Lemma 4.

Suppose for all symmetric and normalized kernels ,

| (8) |

for some . Then (8) holds for all normalized kernels .

Proof.

Let be any kernel normalized by . For any function , define its reflection . Next consider the symmetrization kernel , which will also satisfy . Now take any and compute

Therefore we find that for all , either

Hence we can conclude

∎

3.3. From discrete kernels to polynomial extremizers

Lemma 5 (Kravitz and Steinerberger [15]).

Given a symmetric and normalized kernel , define the polynomial

where is the th Chebyshev polynomial. Then,

For the proof of Lemma 5 we follow the argument given by Kravitz and Steinerberger [15], which uses the Fourier transform and Plancherel’s theorem. Before the proof, first recall that a function on the integers has a continuous Fourier transform defined on the 1-torus given by

This is called the discrete-time Fourier transform, which we will also denote by . This Fourier transform takes convolution to multiplication by

Another useful property is the Plancherel identity, which relates the inner product of functions with that of their Fourier transform by

Note we can express the Fourier transform of a shifted function by

Using the above, we can compute the Fourier transform of the discrete Laplacian.

We are equipped to prove Lemma 5, but first we follow up with a claim made in the Introduction. Indeed, observe that by the first computation in the following proof, which does not yet use the symmetry of , we can conclude quickly that for some constant depending continuously on . Furthermore, because the space of normalized kernels is compact, we conclude that there exists some optimal kernel that minimizes . This argument can be easily generalized to higher derivatives.

Proof of Lemma 5.

Let be a fixed symmetric, normalized kernel and let be any function in . Plancherel’s identity allows us to equate to an easier expression in terms of the Fourier transform by computing

| (9) | ||||

After taking a square root we get

Note that the only inequality in the derivation of the above is in (9). Furthermore, by choosing so that has mass concentrated at the in which the function achieves it’s maximum, we can make the above inequality arbitrary close to an equality. Thus we have shown our expression measuring the smoothing of the second derivative is equivalent to the following simpler expression:

Denote this simpler expression by

Because is symmetric, real-valued, and only supported on , we can rewrite by

Therefore we find

The substitution gives rise to

where denotes the Chebyshev polynomial of degree . Therefore, defining the polynomial by

we have our desired equality

∎

3.4. The Sharp Fourier Inequality and Optimal Kernel

Proof of Theorem 1.

For any symmetric and normalized , define the degree polynomial

as given in Lemma 5. Then note by the normalization of . Therefore combining Lemma 5 and Theorem 3 yields

Because the above inequality holds for symmetric normalized kernels, Lemma 4 implies this in fact holds for all normalized kernels. To see this inequality is sharp, let be the optimal degree polynomial as given in Theorem 3. Because the Chebyshev polynomials form a basis for the space of all degree polynomials, there exists unique coefficients so that

These coefficients define a corresponding kernel by setting for . Using , we find this kernel is properly normalized by computing

Noting , we can rewrite write as

Using the equality condition in Theorem 3, we find indeed satisfies

To find an explicit expression for the minimizing kernel , recall that Chebyshev polynomials are orthogonal and in particular

Therefore for any we have

Thus we can write as

where is the th Chebyshev polynomial, and

∎

3.5. The Epanechnikov Kernel

This section is dedicated to proving Theorem 2, which offers a kernel that is nearly optimal and easy to implement in practice. First we verify the Epanechnikov kernel satisfies the normalization requirement. Indeed, an induction argument gives the relation

Therefore we can compute

Next, note Lemma 5 allows us to reduce the asymptotic relation of Theorem 2 to a claim about the polynomials

In particular, Lemma 5 promises the equivalence

Therefore it suffices to show that as we have the asymptotic equivalence

For simplicity, we remove the normalizing coefficients of and instead consider

Therefore it suffices to show the following asymptotic equivalence as :

| (10) |

The first step in proving this asymptotic relation is to rewrite as follows.

Lemma 6.

Define the polynomial

Then,

| (11) |

Proof.

We first show the equality

| (12) |

This follows quickly by induction and the recurrence . Indeed, for we find Furthermore, if (12) holds for , then we find

Due to (12), the claim follows so long as we can prove

| (13) |

We prove (13) by induction. Indeed, for we can immediately compute the necessary relation Now suppose (13) holds for . First derive the following recurrence for .

Therefore we find (13) also holds for by the following computation, which uses our hypothesis and (12).

∎

With this new form for in hand, we turn back to our objective of showing (10). For each , we will bound the intervals and separately. Notice (11) is useful for bounding because the polynomial stays fairly small away from , which the following claim quantifies.

Lemma 7.

Over we have

Proof.

Write by making the substitution . Then apply trigonometric identities to get the bound

Therefore, substituting back to we find

∎

Now let and use (11) to bound

| (14) | ||||

We can simplify this further by taking the Taylor expansion of cosine about and computing the following limit.

Therefore, returning to the inequality chain (14), we have for the following asymptotic equivalence as :

Where the last inequality follows from by (6). We have shown

Therefore if only we can show the asymptotic equivalence

| (15) |

as , then (10) and hence Theorem 2 follows. Substitute and we claim uniform convergence

If this is true, the result follows immediately because uniform convergence allows the following interchange of maximum and limit.

Thus the following claim is the only remaining barrier to the proof of Theorem 2.

Lemma 8.

We have uniform convergence

as over .

Proof.

Use (11) and trigonometric identities to rewrite the sequence of functions as

Because uniformly, we only must show that

uniformly. We break this into two parts, first using that sine is Lipschitz with constant to compute

Notice the right hand side of the above inequality converges uniformly to as , giving the uniform convergence . Secondly, use Taylor’s theorem to get the following uniform convergence over :

Because and are bounded over , the product of the sequences converges uniformly to the product of the limits and so we get uniform convergence

∎

Acknowledgements

This material is based upon work supported by the National Science Foundation Graduate Research Fellowship under Grant No. DGE-2140004. Additional thanks to Stefan Steinerberger for bringing this problem to the author’s attention and for helpful conversations.

References

- [1] Jean Babaud, Andrew P. Witkin, Michel Baudin, and Richard O. Duda. Uniqueness of the Gaussian kernel for scale-space filtering. IEEE Transactions on Pattern Analysis and Machine Intelligence, PAMI-8(1):26–33, 1986.

- [2] William Beckner. Inequalities in Fourier Analysis. Annals of Mathematics, 102(1):159–182, 1975.

- [3] William Beckner. Pitt’s inequality and the uncertainty principle. Proceedings of the American Mathematical Society, 123(6):1897–1905, 1995.

- [4] Emanuel Carneiro, Damiano Foschi, Diogo Oliveira e Silva, and Christoph Thiele. A sharp trilinear inequality related to Fourier restriction on the circle. Revista Matematica Iberoamericana, 33(4):1463–1486, 2017.

- [5] Emanuel Carneiro, Diogo Oliveira e Silva, and Mateus Sousa. Extremizers for Fourier restriction on hyperboloids. Annales de l’Institut Henri Poincaré C, Analyse non linéaire, 36(2):389–415, 2019.

- [6] Michael G. Cowling and John F. Price. Bandwidth versus time concentration: The Heisenberg–Pauli–Weyl inequality. SIAM Journal on Mathematical Analysis, 15(1):151–165, 1984.

- [7] V. A. Epanechnikov. Non-parametric estimation of a multivariate probability density. Theory of Probability & Its Applications, 14(1):153–158, 1969.

- [8] Damiano Foschi. Maximizers for the Strichartz inequality. Journal of the European Mathematical Society, 9(4):739–774, 2007.

- [9] Felipe Gonçalves, Diogo Oliveira e Silva, and Stefan Steinerberger. Hermite polynomials, linear flows on the torus, and an uncertainty principle for roots. Journal of Mathematical Analysis and Applications, 451(2):678–711, 2017.

- [10] Peter Hall, Michael C. Minnotte, and Chunming Zhang. Bump hunting with non-Gaussian kernels. The Annals of Statistics, 32(5):2124 – 2141, 2004.

- [11] Dirk Hundertmark and Vadim Zharnitsky. On sharp Strichartz inequalities in low dimensions. International Mathematics Research Notices, 2006:34080, 2006.

- [12] Jan J Koenderink. The structure of images. Biological Cybernetics, 50(5):363–370, 1984.

- [13] Vjekoslav Kovač, Diogo Oliveira e Silva, and Jelena Rupčić. A sharp nonlinear Hausdorff–Young inequality for small potentials. Proceedings of the American Mathematical Society, 147(1):239–253, 2019.

- [14] Vjekoslav Kovač, Diogo Oliveira E Silva, and Jelena Rupčić. Asymptotically sharp discrete nonlinear Hausdorff–Young inequalities for the -valued Fourier products. The Quarterly Journal of Mathematics, 73(3):1179–1188, 2022.

- [15] Noah Kravitz and Stefan Steinerberger. The smoothest average: Dirichlet, Fejér and Chebyshev. Bulletin of the London Mathematical Society, 53(6):1801–1815, 2021.

- [16] Tony Lindeberg. Scale-space for discrete signals. IEEE Transactions on Pattern Analysis and Machine Intelligence, 12(3):234–254, 1990.

- [17] Diogo Oliveira e Silva, Christoph Thiele, and Pavel Zorin-Kranich. Band-limited maximizers for a Fourier extension inequality on the circle. Experimental Mathematics, 31(1):192–198, 2022.

- [18] M Samiuddin and GM El-Sayyad. On nonparametric kernel density estimates. Biometrika, 77(4):865–874, 1990.

- [19] Betsy Stovall. Uniform estimates for Fourier restriction to polynomial curves in . American Journal of Mathematics, 138(2):449–471, 2016.

- [20] Betsy Stovall. Extremizability of Fourier restriction to the paraboloid. Advances in Mathematics, 360:106898, 2020.

- [21] United States Geological Survey. Lake Chelan elevation of resevoir water surface. https://waterdata.usgs.gov/monitoring-location/12452000. Data from 2011-07-16 to 2011-07-30. Accessed 2023-05-24.

- [22] Ryoya Yamasaki and Toshiyuki Tanaka. Kernel selection for modal linear regression: Optimal kernel and irls algorithm. In 2019 18th IEEE International Conference On Machine Learning And Applications (ICMLA), pages 595–601. IEEE, 2019.

- [23] Alan L. Yuille and Tomaso A. Poggio. Scaling theorems for zero crossings. IEEE Transactions on Pattern Analysis and Machine Intelligence, PAMI-8(1):15–25, 1986.