2. Radiology, Weill Cornell Medical College

3. Brain and Mind Research Institute, Weill Cornell Medical College

4. Nancy E. & Peter C. Meinig School of Biomedical Engineering, Cornell University

A shared neural encoding model for the prediction of subject-specific fMRI response

Abstract

The increasing popularity of naturalistic paradigms in fMRI (such as movie watching) demands novel strategies for multi-subject data analysis, such as use of neural encoding models. In the present study, we propose a shared convolutional neural encoding method that accounts for individual-level differences. Our method leverages multi-subject data to improve the prediction of subject-specific responses evoked by visual or auditory stimuli. We showcase our approach on high-resolution 7T fMRI data from the Human Connectome Project movie-watching protocol and demonstrate significant improvement over single-subject encoding models. We further demonstrate the ability of the shared encoding model to successfully capture meaningful individual differences in response to traditional task-based face and scene stimuli. Taken together, our findings suggest that inter-subject knowledge transfer can be beneficial to subject-specific predictive models. Our code is available at https://github.com/mk2299/SharedEncoding_MICCAI.

1 Introduction

Naturalistic imaging paradigms, such as movies and stories, emulate the diversity and complexity of real-life sensory experiences, thereby opening a novel window into the brain. The last decade has seen an increased foothold of naturalistic paradigms in cognitive neuroimaging, fueled by the remarkable discovery of inter-subject synchrony during naturalistic viewing [1]. Naturalistic stimuli also demonstrate increased test-retest reliability and more active subject engagement in comparison to alternate paradigms such as resting-state fMRI [2]. Furthermore, experiments have shown that naturalistic stimuli can induce stronger neural response than task-based stimuli [3], suggesting that the brain is intrinsically more attuned to the former. Taken together, these benefits suggest an exciting future for naturalistic stimulation protocols in fMRI.

With large-scale compilation of multi-subject neural data through open-source initiatives such as the Human Connectome Project (HCP) [4], the development of approaches that can handle this enormous data is becoming imperative. Two approaches, namely inter-subject correlation (ISC) analysis [1, 5] and shared response model (SRM) [6], have dominated the analysis of multi-subject fMRI data under naturalistic conditions. The former approach exploits similarity in activation patterns across subjects to isolate stimulus-induced processing. The latter technique, SRM, decomposes neural activity into a shared response component and subject-specific spatial bases, and has been used for inter-subject knowledge transfer through functional alignment. While simple and efficient, both these approaches rely on a common time-locked stimulus across subjects and cannot, by design, model responses to completely unseen stimuli. On the other hand, predictive modelling of neural activity through encoding models is based upon generalization to arbitrary stimuli and can thus offer more holistic descriptions of sensory processing in an individual [7].

Neural encoding models map stimuli to fine-grained voxel-level response patterns via complex feature transformations. Previously, neural encoding models have yielded several novel insights into the functional organization of auditory and visual cortices [8, 9, 10, 11]. Encoding models encapsulating different hypotheses about neural information processing can be pitted against each other to shed new light on how information is represented in the brain. In this manner, neural encoding models have been largely used for making group-level inferences. The potential to extract meaningful individual differences from naturalistic paradigms remains largely untapped. Understanding inter-subject variability in behavior-to-brain representations is of key interest to neuroscience and can potentially even help identify atypical response patterns [12]. Modelling individual brain function in response to naturalistic stimuli is one step in this direction; however, building accurate individual-level models of brain function often requires large amounts of data per subject for good generalization. The problem is further exacerbated by the variability in anatomy and functional topographies across individuals, making inter-subject knowledge transfer difficult. There is limited work on leveraging multi-subject data for more robust and accurate individualized neural encoding. To our knowledge, this problem has been studied only in the context of natural vision with a handful of subjects using a Bayesian framework [13]. Further, the method proposed in [13] transfers knowledge from one subject’s encoding model into another through a two-stage procedure and does not allow simultaneous optimization of encoding models across multiple subjects.

In this paper, we attempt to fill this gap by proposing a deep-learning-based framework that builds more powerful individual-level encoding models by leveraging multi-subject data. Recent studies have revealed that coarse-grained response topographies are highly similar across subjects, suggesting that individual idiosyncrasies manifest in more fine-grained response patterns [14, 6]. This hints at the idea that encoding models could share representational spaces across subjects to overcome the challenges imposed by a limited quantity of per-subject data. We exploit this intuition to develop a neural encoding model with a common backbone architecture for capturing shared response and subject-specific projections that account for individual response biases, as illustrated in Figure 1. Our proposed approach has several merits: (i) It allows us to combine data from multiple subjects watching the same or different movies to build a global model of the brain. At the same time, it can capture meaningful individual-level deviations from the global model which can potentially be related to individual-specific traits. (ii) It is amenable to incremental learning with diverse, varying stimuli across seen or novel subjects with fewer constraints on data collection from single subjects. (iii) It poses minimal memory overhead with additional subjects and can thus handle fMRI datasets with a large number of subjects.

2 Methodology

Our proposed methodology is illustrated in Figure 1. Neural encoding models comprise two components: (a) a feature extractor, which pulls out relevant features from raw images or audio waveforms, and (b) a response model, which maps these stimulus features into brain responses. In contrast to existing works that employ a linear response model [11, 9], we propose a CNN-based response model where the coarse 3D feature maps are shared across subjects and fine-grained feature maps are individual-specific. Previous studies have reported a cortical processing hierarchy where low-level features from early layers of a CNN-based feature extractor best predict responses in early sensory areas while semantically rich deeper layers best predict higher sensory regions [8, 9]. To account for this effect, we employ a hierarchical feature extractor based on feature pyramid networks [15] that combines features from early, intermediate and later layers simultaneously. The output of the feature extractor is fed into the convolutional response model to predict the evoked fMRI activation. This enables us to train both components of the network simultaneously in an end-to-end fashion.

Formally, let $\{(X_i, Y_i)\}_{i=1}^{N}$ denote the training data pairs for $N$ subjects, where $X_i$ denotes the stimuli presented to subject $i$ and $Y_i$ denotes the corresponding fMRI measurements. We represent $X_i$ as RGB images or grayscale spectrograms for the visual and auditory models, respectively. The feature model maps the 2D input into a vector representation $s$ and is parameterized using a deep neural network $F(X; \phi)$ that is common across subjects. In our experiments, this model is a feature pyramid network built upon pre-trained recognition networks, as DNNs optimized for image or sound recognition tasks have proven to provide powerful feature representations for encoding brain response. We define a differentiable function $G(s; \theta)$ that maps the features into a shared latent volumetric space $z$, whose first 3 axes represent the 3D voxel space and whose last axis captures the latent dimensionality. The predicted response for each subject is then defined using subject-specific differentiable functions $H_i(z; \psi_i)$ that project the coarse feature maps $z$ into an individualized brain response. We represent $G$ and the $H_i$'s using convolutional neural networks to have a sufficiently expressive model. Thus, $\theta$ and $\psi_i$ represent a mix of convolutional kernels or dense weight matrices. The number of shared parameters, $|\theta|$, is kept much greater than the cardinality of subject-specific parameters, $|\psi_i|$, to accurately estimate the shared latent space. All parameters are trained jointly to minimize the mean squared error between the predicted and true response. The proposed method allows us to propagate errors through the shared network even if the subjects are not exposed to common stimuli, since we can always backpropagate errors for subjects independently within each batch. Furthermore, using individualized layers to account for subject-specific biases enables the model to weigh gradients coming from losses of each subject differently according to their signal-to-noise ratio. This makes the model less susceptible to noisy measurements when responses for the same stimuli are available from multiple subjects.
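The structure of the joint objective can be sketched in a few lines of NumPy. This is only an illustrative toy under stated assumptions: dense matrices stand in for the convolutional networks, the stimulus features are assumed to be precomputed, and all names and sizes (`theta`, `psi`, `predict`, `joint_loss`, the dimensions) are hypothetical rather than taken from the released code.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy sizes, chosen only for illustration.
N_SUBJECTS, FEAT_DIM, LATENT_DIM, N_VOXELS = 3, 16, 8, 5

# Shared parameters (dense stand-in for the shared network) and
# per-subject parameters (dense stand-ins for the subject-specific heads).
theta = rng.normal(scale=0.1, size=(FEAT_DIM, LATENT_DIM))
psi = [rng.normal(scale=0.1, size=(LATENT_DIM, N_VOXELS))
       for _ in range(N_SUBJECTS)]


def predict(s, subject):
    """Map stimulus features s (batch, FEAT_DIM) to one subject's response."""
    z = np.tanh(s @ theta)       # shared latent representation
    return z @ psi[subject]      # subject-specific projection


def joint_loss(batches):
    """Sum of per-subject mean squared errors.

    `batches` maps subject index -> (features, responses). Subjects may have
    seen entirely different stimuli; a subject absent from the batch simply
    contributes no term, so the shared parameters still receive gradients
    from whichever subjects are present.
    """
    return sum(np.mean((predict(s, i) - y) ** 2)
               for i, (s, y) in batches.items())
```

Because the loss is a plain sum over subjects, each subject's error term can be evaluated on its own stimuli, which is what permits training without a common time-locked stimulus.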

2.1 Implementation details

We employ pre-trained Resnet-50 [16] and VGG-ish [17] architectures in the bottom-up path of Figure 1 to extract multi-scale features from images and audio spectrograms, respectively. The base architectures were selected because pre-trained weights of these networks, optimized for classification on large datasets, namely Imagenet [18] and Youtube-8M [19], were publicly available. For Resnet-50, we use activations of the last residual block of each stage, namely, res2, res3, res4 and res5 (notation from [20]) to construct our stimulus descriptions $s$. From the VGG network, we use the activations of each convolutional block, namely, conv2, conv3, conv4 and the penultimate dense layer fc2 [21]. The first three sets of activations are refined through a top-down path to enhance their semantic content, while the last activation is concatenated into $s$ directly (res5 activations are vectorized using a global average pool). The top-down path comprises three feature maps at different resolutions, obtained by successively up-sampling by a factor of 2 from the deepest layer of the bottom-up path. Each such feature map, comprising 256 channels, is merged with the corresponding feature map in the bottom-up path (reduced to 256 channels by 1x1 convolutions) by element-wise addition. Subsequently, the feature map at each resolution is collapsed into a 256-dimensional feature vector through a global average pool operation and concatenated into $s$. The aggregated features are then passed onto a shared CNN (denoted $G$ above) comprising the following feedforward computation: a fully connected layer maps the features into a vector space, which is reshaped into a 1024-channel cuboid of size 6x7x6, followed by two 3x3x3 transposed convolutions (conv.T) with a stride of 2 that up-sample the cuboid to obtain $z$. Each convolution reduces the channel count by half, resulting in a shared latent $z$ that is a 256-channel cuboid of size 27x31x27. Subject-specific functions $H_i$ are parameterized as a cascade of two 3x3x3 conv.T operations (stride 2) with 128 and 1 output channels, respectively. It is important to emphasize that these operations constitute far fewer parameters than the shared network, thereby favoring the estimation of a shared truth. As we demonstrate empirically, a shared space allows much better generalization. At the same time, we find that even the limited subject-specific parameters can adequately capture meaningful individual differences. All parameters were optimized using Adam [22] with a learning rate of 1e-4. Auditory and visual models were trained for 25 and 50 epochs, respectively, with unit batch size. Validation curves were monitored to ensure convergence.
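The spatial size of the shared latent volume can be checked with a short calculation. The sketch below assumes unpadded transposed convolutions with no output padding, for which the output length along one axis is (input - 1) * stride + kernel; this assumption is not stated in the text but reproduces the reported 27x31x27 size. The function names are hypothetical.

```python
def conv_transpose_out(size, kernel=3, stride=2):
    """Output length of an unpadded transposed convolution along one axis."""
    return (size - 1) * stride + kernel


def upsample_shape(shape, n_layers):
    """Apply the same transposed convolution n_layers times to a 3D shape."""
    for _ in range(n_layers):
        shape = tuple(conv_transpose_out(d) for d in shape)
    return shape


channels = 1024
for _ in range(2):
    channels //= 2  # each transposed convolution halves the channel count

latent_shape = upsample_shape((6, 7, 6), n_layers=2)
print(latent_shape, channels)  # (27, 31, 27) 256
```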

2.2 Data and Preprocessing

We study 7T fMRI data (TR = 1s) from a randomly selected sample of N=10 subjects from the HCP movie-watching protocol [4, 23]. The dataset comprises 4 audiovisual movies, each 15 mins long. Preprocessing protocols are described in detail in [24, 23]. For our experiments, we utilize the 1.6mm MNI-registered volumetric images of size 113 x 136 x 113 per TR. We compute log-mel spectrograms using the same parameters as [17] over every 1 second of audio waveform to obtain a 2D image-like input for the VGG audio feature extractor. We extract the last frame of every second of the video to present to the image recognition network for visual features. We estimate a hemodynamic delay of 4 seconds using regression-based encoding models, as the response latency that yields the highest encoding performance. Thus, all proposed and baseline models are trained to use the above stimuli to predict the fMRI response 4 seconds after the corresponding stimulus presentation. We train and validate our models on three movies using a 9:1 train-val split and leave the fourth movie for independent testing. This yields 2000 training, 265 validation and 699 test stimulus-response pairs per subject.
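The delay described above amounts to shifting the response series against the stimulus series before forming training pairs. A minimal sketch, assuming one stimulus sample per second (matching TR = 1s) and a hypothetical helper name `align_with_delay`:

```python
import numpy as np


def align_with_delay(stimuli, responses, delay=4):
    """Pair the stimulus at second t with the response at second t + delay.

    Both inputs are indexed by time along their first axis and must cover
    the same seconds; the last `delay` stimuli and the first `delay`
    responses have no partner and are dropped.
    """
    if delay < 0:
        raise ValueError("delay must be non-negative")
    if len(stimuli) != len(responses):
        raise ValueError("stimuli and responses must cover the same seconds")
    if delay == 0:
        return stimuli, responses
    return stimuli[:-delay], responses[delay:]
```

For a run of T seconds this leaves T - 4 usable stimulus-response pairs.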

2.3 Baselines

- Linear response model (individual subject): Here, we train independent models for each subject using linear response models. We note that, thus far, this is the dominant approach to neural encoding. To enable a fair comparison, we extract hierarchical features of the same dimensionality as the proposed model to present to the linear regressor. The only difference here is the lack of a top-down pathway (since it is not pre-trained), which prevents the refinement of coarse feature maps before aggregation. We apply regularization on the regression coefficients and select the strength of this penalty through cross-validation over log-spaced values. We report the performance of the best model as ‘Individual (Linear)’.

- CNN response model (individual subject): Here, we employ the same architecture as the proposed model but with only one branch of subject-specific layers. We train this network independently for each subject without weight sharing and denote its performance as ‘Individual model (CNN)’.

-

•

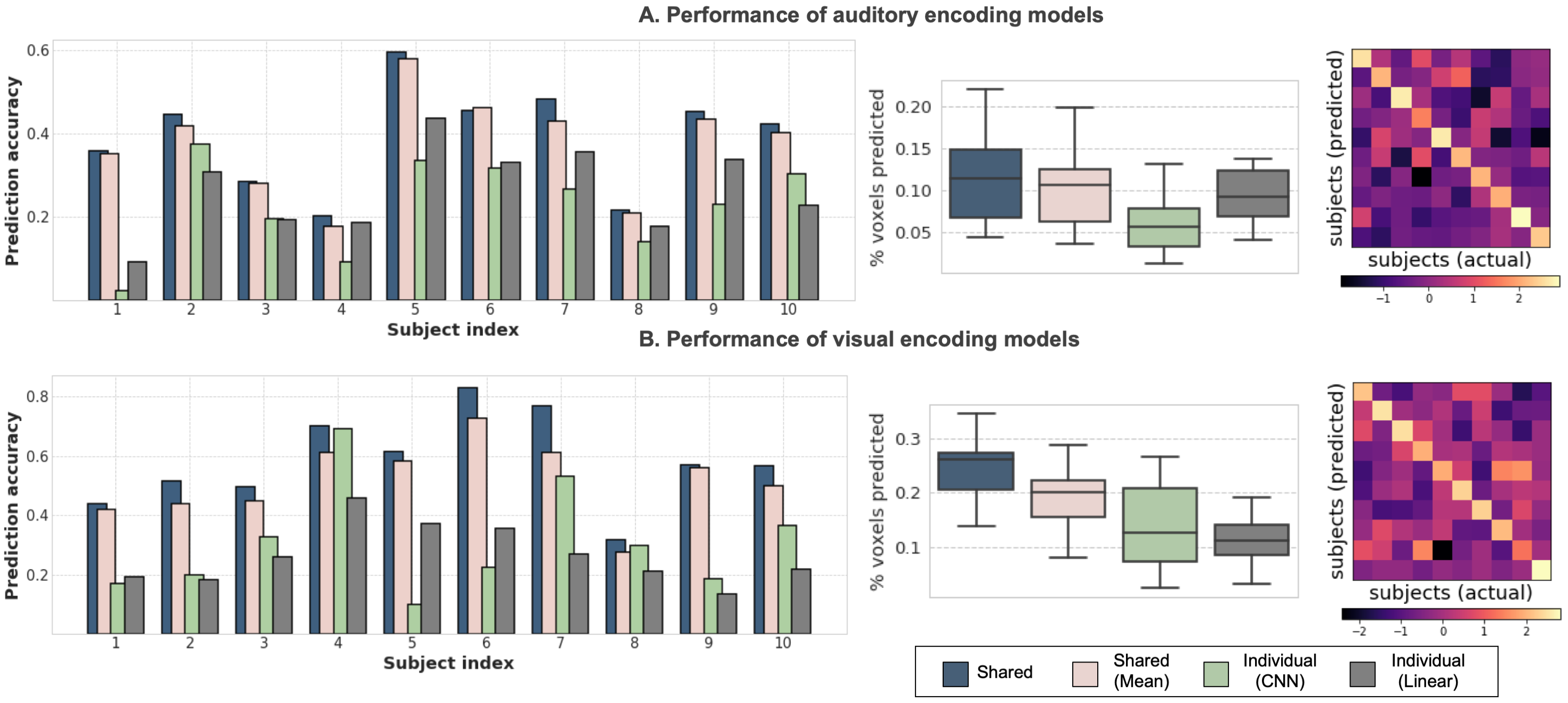

Shared model (mean): Here, we employ the proposed model after training but instead of computing predictions using the same subject’s learned weights, we compute predictions from all subject-specific branches. We compute the mean performance obtained by correlating each of these predictions with the ground truth response of a subject and denote this as ‘Shared (mean)’.
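For the ‘Individual (Linear)’ baseline in the list above, the penalty selection can be sketched as follows. Several choices here are assumptions rather than details from the text: the penalty is taken to be an L2 (ridge) penalty, a single held-out split replaces full cross-validation for brevity, and the grid `lams` is a hypothetical range of log-spaced values.

```python
import numpy as np


def fit_ridge(X, Y, lam):
    """Closed-form ridge weights for features X (time, d) and responses Y (time, voxels)."""
    d = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(d), X.T @ Y)


def select_penalty(X_tr, Y_tr, X_val, Y_val, lams):
    """Return (best_lam, weights) with the lowest validation mean squared error."""
    best = None
    for lam in lams:
        W = fit_ridge(X_tr, Y_tr, lam)
        mse = float(np.mean((X_val @ W - Y_val) ** 2))
        if best is None or mse < best[0]:
            best = (mse, lam, W)
    return best[1], best[2]


# Hypothetical grid of log-spaced penalty strengths.
lams = np.logspace(-2, 4, 7)
```

A single penalty shared by all voxels keeps the sketch short; a per-voxel penalty would require selecting `lam` column by column.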

2.4 Performance evaluation

We measure performance on the test movie by computing the Pearson’s correlation coefficient between the predicted and measured fMRI response at each voxel. Since different subjects have a different signal-to-noise ratio, we normalize each voxel’s correlation by the subject’s noise ceiling for that voxel. We compute the subject-specific noise ceiling by correlating their repeated measurements on a validation clip. Further, since we are only interested in the stimulus-driven response, we measure performance in voxels that exhibit high inter-subject correlations. We randomly split the 10 subjects into two groups of 5 and correlate the mean activity of the two groups. We repeat this process 5 times, and voxels that exhibit a mean correlation greater than 0.1 are identified as synchronous voxels. We compute the mean normalized correlation across all synchronous voxels to obtain a single metric per subject, denoted as ‘Prediction accuracy’. We also correlate the predicted response of each subject against the predicted and true response of every other subject to obtain an $N \times N$ correlation matrix for shared models. To account for higher variability in measured versus predicted response, we normalize the rows and columns of this correlation matrix following [25].
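The evaluation steps above can be sketched with NumPy. This is an illustrative reading of the procedure, not the authors' code: the function names are hypothetical, responses are assumed to be arranged as (time, voxels) arrays, and the noise ceiling is assumed to be supplied as a precomputed per-voxel array.

```python
import numpy as np


def voxelwise_pearson(a, b):
    """Pearson correlation per voxel for two (time, voxels) arrays."""
    ac = a - a.mean(axis=0)
    bc = b - b.mean(axis=0)
    num = (ac * bc).sum(axis=0)
    den = np.sqrt((ac ** 2).sum(axis=0) * (bc ** 2).sum(axis=0))
    return num / den


def synchronous_voxels(data, n_splits=5, threshold=0.1, seed=0):
    """Mask of voxels whose mean split-half inter-subject correlation exceeds threshold.

    data: (subjects, time, voxels) measured responses to a common stimulus.
    """
    rng = np.random.default_rng(seed)
    n_subjects = data.shape[0]
    isc = np.zeros(data.shape[2])
    for _ in range(n_splits):
        perm = rng.permutation(n_subjects)
        g1, g2 = perm[: n_subjects // 2], perm[n_subjects // 2:]
        isc += voxelwise_pearson(data[g1].mean(axis=0), data[g2].mean(axis=0))
    return isc / n_splits > threshold


def prediction_accuracy(pred, true, noise_ceiling, mask):
    """Mean noise-normalized correlation over the voxels selected by mask."""
    r = voxelwise_pearson(pred, true)
    return float(np.mean(r[mask] / noise_ceiling[mask]))
```

Note that voxels with a constant time series would produce a zero denominator in `voxelwise_pearson`; a practical implementation would need to exclude them.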

2.5 Demonstration of application: personalized brain mapping

To investigate whether the proposed model is indeed capturing meaningful individual differences, we use the trained encoding model to predict fMRI activations for distinct visual object categories from the HCP task battery. Specifically, we predict brain response to visual stimuli (comprising faces, places, tools and body parts) from the HCP Working Memory (WM) task and use the predicted response to synthesize face and scene contrasts (FACES-AVG and PLACES-AVG, respectively) for each individual. The predicted and true contrasts are thresholded to keep only a top fraction of the voxels. We compute the Dice overlap between the predicted contrast for each subject and the true contrast of every subject (including self) to produce an $N \times N$ matrix for each contrast.
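The overlap computation can be sketched as below. The names are hypothetical, the retained fraction is left as an explicit `fraction` argument rather than a specific value, and thresholding is assumed to keep the voxels with the highest contrast values.

```python
import numpy as np


def top_fraction_mask(contrast, fraction):
    """Boolean mask of the voxels with the highest contrast values."""
    k = max(1, int(round(fraction * contrast.size)))
    cutoff = np.partition(contrast.ravel(), -k)[-k]  # k-th largest value
    return contrast >= cutoff


def dice(a, b):
    """Dice overlap between two boolean masks."""
    intersection = np.logical_and(a, b).sum()
    return 2.0 * intersection / (a.sum() + b.sum())


def dice_matrix(pred, true, fraction):
    """Entry [i, j] compares subject i's predicted contrast with subject j's true contrast.

    pred, true: (subjects, voxels) contrast maps.
    """
    pred_masks = [top_fraction_mask(p, fraction) for p in pred]
    true_masks = [top_fraction_mask(t, fraction) for t in true]
    return np.array([[dice(p, t) for t in true_masks] for p in pred_masks])
```

A dominant diagonal in the resulting matrix indicates that each subject's predicted contrast overlaps most with that same subject's measured contrast.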

3 Results

Figure 2 shows prediction accuracy of the proposed (‘Shared’) and baseline methods for each subject. The performance improvement is striking between proposed and individual subject models, suggesting that a shared backbone architecture can significantly boost generalization. Comparative boxplots further show that the proposed method predicts a much higher percentage of the synchronous cortex than individual subject models. Further, the difference between ‘Shared’ and ‘Shared (mean)’ as well as the dominant diagonal structure in correlation matrices suggest that the proposed method is indeed capturing subject idiosyncrasies rather than predicting a group-averaged response. Further, while the CNN response model performs slightly better in visual encoding, it incurs a performance drop compared to linear regression in auditory encoding. This perhaps suggests that the boost in accuracy seen for shared models is largely due to inter-subject knowledge transfer rather than the convolutional response model itself.

In Figure 3(A) & 3(B), we visualize the un-normalized correlations between the predicted and measured fMRI response for the proposed models, averaged across subjects. For the auditory model, we see significant correlations in the parabelt auditory cortex, extending into the superior temporal sulcus and some other language areas (55b) as well. For the visual model, while we see significant correlations across the entire visual cortex (V1-V8), the performance is much better in higher-order visual regions, presumably because of the semantically rich features. The lower performance in early visual regions could also result from the dynamic nature of visual stimulation in movies.

Figure 3(C) & 3(D) illustrate the ability of our proposed model to characterize individual differences even beyond the experimental paradigm it was trained on. The diagonal dominance in the Dice matrix for both contrasts suggests that predicted contrasts are most similar to the same subject’s true contrast. No prominent diagonal structure was observed for individual subject models, presumably because of their poor generalization to out-of-domain stimuli from the HCP task battery. Further, predicted contrasts consistently highlight known areas for face and scene processing, namely the fusiform face area [26] and parahippocampal areas [27], respectively.

4 Discussion

In this paper, we presented a framework for utilizing multi-subject fMRI data to improve individual-level neural encoding. We showcased our approach on both auditory and visual stimuli and demonstrated consistent improvement over competing approaches. Our experiments further suggest that a single experiment (free-viewing of movies) can characterize a multitude of brain processes at once. This has important implications for brain mapping which traditionally relies on a battery of carefully-constructed stimuli administered within block-designs. Inter-subject variability in response patterns induced by the complexity of naturalistic viewing can facilitate the development of novel imaging-based biomarkers. Neural encoding models are not constrained to modeling the response to a limited set of experimental stimuli; their good generalization performance suggests that they can capture broad theories of cognitive processing. Accurate, individualized neural encoding models can thus bring us one step closer to achieving the goal of biomarker discovery.

Acknowledgements

This work was supported by NIH grants R01LM012719 (MS), R01AG053949 (MS), R21NS10463401 (AK), R01NS10264601A1 (AK), the NSF NeuroNex grant 1707312 (MS), the NSF CAREER 1748377 grant (MS) and Anna-Maria and Stephen Kellen Foundation Junior Faculty Fellowship (AK).

References

- [1] U. Hasson, Y. Nir, I. Levy, G. Fuhrmann, and R. Malach. Intersubject synchronization of cortical activity during natural vision. Science, 303(5664):1634–1640, Mar 2004.

- [2] S. Sonkusare, M. Breakspear, and C. Guo. Naturalistic Stimuli in Neuroscience: Critically Acclaimed. Trends Cogn. Sci. (Regul. Ed.), 23(8):699–714, Aug 2019.

- [3] J. Schultz and K. S. Pilz. Natural facial motion enhances cortical responses to faces. Exp Brain Res, 194(3):465–475, Apr 2009.

- [4] M. F. Glasser, S. N. Sotiropoulos, J. A. Wilson, T. S. Coalson, B. Fischl, J. L. Andersson, J. Xu, S. Jbabdi, M. Webster, J. R. Polimeni, D. C. Van Essen, and M. Jenkinson. The minimal preprocessing pipelines for the Human Connectome Project. Neuroimage, 80:105–124, Oct 2013.

- [5] U. Hasson, R. Malach, and D. J. Heeger. Reliability of cortical activity during natural stimulation. Trends Cogn. Sci. (Regul. Ed.), 14(1):40–48, Jan 2010.

- [6] Po-Hsuan Cameron Chen, Janice Chen, Yaara Yeshurun, Uri Hasson, James V. Haxby, and Peter J. Ramadge. A reduced-dimension fMRI shared response model. In NIPS, 2015.

- [7] G. Varoquaux and R. A. Poldrack. Predictive models avoid excessive reductionism in cognitive neuroimaging. Curr. Opin. Neurobiol., 55:1–6, 04 2019.

- [8] Alexander J.E. Kell, Daniel L.K. Yamins, Erica N. Shook, Sam V. Norman-Haignere, and Josh H. McDermott. A Task-Optimized Neural Network Replicates Human Auditory Behavior, Predicts Brain Responses, and Reveals a Cortical Processing Hierarchy. Neuron, 98(3):630–644.e16, may 2018.

- [9] U. Guclu and M. A. van Gerven. Deep Neural Networks Reveal a Gradient in the Complexity of Neural Representations across the Ventral Stream. J. Neurosci., 35(27):10005–10014, Jul 2015.

- [10] D. L. Yamins, H. Hong, C. F. Cadieu, E. A. Solomon, D. Seibert, and J. J. DiCarlo. Performance-optimized hierarchical models predict neural responses in higher visual cortex. Proc. Natl. Acad. Sci. U.S.A., 111(23):8619–8624, Jun 2014.

- [11] Haiguang Wen, Junxing Shi, Yizhen Zhang, Kun Han Lu, Jiayue Cao, and Zhongming Liu. Neural encoding and decoding with deep learning for dynamic natural vision. Cerebral Cortex, 28(12):4136–4160, dec 2018.

- [12] J. Dubois and R. Adolphs. Building a Science of Individual Differences from fMRI. Trends Cogn. Sci. (Regul. Ed.), 20(6):425–443, 06 2016.

- [13] Haiguang Wen, Junxing Shi, Wei Chen, and Zhongming Liu. Transferring and generalizing deep-learning-based neural encoding models across subjects. NeuroImage, 176:152–163, aug 2018.

- [14] Umut Güçlü and Marcel A.J. van Gerven. Increasingly complex representations of natural movies across the dorsal stream are shared between subjects. NeuroImage, 145:329–336, jan 2017.

- [15] Tsung-Yi Lin, Piotr Dollár, Ross B. Girshick, Kaiming He, Bharath Hariharan, and Serge J. Belongie. Feature pyramid networks for object detection. 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pages 936–944, 2016.

- [16] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pages 770–778, 2015.

- [17] Shawn Hershey, Sourish Chaudhuri, Daniel P. W. Ellis, Jort F. Gemmeke, Aren Jansen, R. Channing Moore, Manoj Plakal, Devin Platt, Rif A. Saurous, Bryan Seybold, Malcolm Slaney, Ron J. Weiss, and Kevin W. Wilson. Cnn architectures for large-scale audio classification. 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pages 131–135, 2016.

- [18] Jia Deng, Wei Dong, Richard Socher, Li-Jia Li, Kai Li, and Fei-Fei Li. Imagenet: A large-scale hierarchical image database. 2009 IEEE Conference on Computer Vision and Pattern Recognition, pages 248–255, 2009.

- [19] Sami Abu-El-Haija, Nisarg Kothari, Joonseok Lee, Apostol Natsev, George Toderici, Balakrishnan Varadarajan, and Sudheendra Vijayanarasimhan. Youtube-8m: A large-scale video classification benchmark. ArXiv, abs/1609.08675, 2016.

- [20] Ross Girshick, Ilija Radosavovic, Georgia Gkioxari, Piotr Dollár, and Kaiming He. Detectron. https://github.com/facebookresearch/detectron, 2018.

- [21] S. Hershey et al. Models for audioset: A large scale dataset of audio events. https://github.com/tensorflow/models/tree/master/research/audioset/vggish, 2016.

- [22] Diederik P. Kingma and Jimmy Ba. Adam: A method for stochastic optimization. CoRR, abs/1412.6980, 2014.

- [23] D. C. Van Essen, K. Ugurbil, E. Auerbach, D. Barch, T. E. Behrens, R. Bucholz, A. Chang, L. Chen, M. Corbetta, S. W. Curtiss, S. Della Penna, D. Feinberg, M. F. Glasser, N. Harel, A. C. Heath, L. Larson-Prior, D. Marcus, G. Michalareas, S. Moeller, R. Oostenveld, S. E. Petersen, F. Prior, B. L. Schlaggar, S. M. Smith, A. Z. Snyder, J. Xu, and E. Yacoub. The Human Connectome Project: a data acquisition perspective. Neuroimage, 62(4):2222–2231, Oct 2012.

- [24] A. T Vu, K. Jamison, M. F. Glasser, S. M. Smith, T. Coalson, S. Moeller, E. J. Auerbach, K. Ugurbil, and E. Yacoub. Tradeoffs in pushing the spatial resolution of fMRI for the 7T Human Connectome Project. Neuroimage, 154:23–32, 07 2017.

- [25] I. Tavor, O. Parker Jones, R. B. Mars, S. M. Smith, T. E. Behrens, and S. Jbabdi. Task-free MRI predicts individual differences in brain activity during task performance. Science, 352(6282):216–220, Apr 2016.

- [26] N. Kanwisher, J. McDermott, and M. M. Chun. The fusiform face area: a module in human extrastriate cortex specialized for face perception. J. Neurosci., 17(11):4302–4311, Jun 1997.

- [27] S. Nasr, N. Liu, K. J. Devaney, X. Yue, R. Rajimehr, L. G. Ungerleider, and R. B. Tootell. Scene-selective cortical regions in human and nonhuman primates. J. Neurosci., 31(39):13771–13785, Sep 2011.

- [28] M. F. Glasser, T. S. Coalson, E. C. Robinson, C. D. Hacker, J. Harwell, E. Yacoub, K. Ugurbil, J. Andersson, C. F. Beckmann, M. Jenkinson, S. M. Smith, and D. C. Van Essen. A multi-modal parcellation of human cerebral cortex. Nature, 536(7615):171–178, 08 2016.