A Semi-automatic Oriental Ink Painting Framework for Robotic Drawing from 3D Models

Abstract

Creating visually pleasing stylized ink paintings from 3D models is a challenge in robotic manipulation. We propose a semi-automatic framework that extracts expressive strokes from 3D models and draws them in oriental ink painting styles with a robotic arm. The framework consists of a simulation stage and a robotic drawing stage. In the simulation stage, geometrical contours are automatically extracted from a given viewpoint and a neural network is employed to create simplified contours. Expressive digital strokes are then generated through interactive editing according to the user’s aesthetic understanding. In the robotic drawing stage, an optimization method is presented for drawing smooth strokes that are physically consistent with the digital strokes, and two oriental ink painting styles, termed Noutan (shade) and Kasure (scratchiness), are applied to the strokes by robotic control of the brush’s translation, dipping and scraping. Unlike existing methods that concentrate on generating paintings from 2D images, our framework renders stylized ink paintings from 3D models using a consumer-grade robotic arm. We evaluate the proposed framework on 3 standard models and a user-defined model. The results show that our framework is able to draw visually pleasing oriental ink paintings with expressive strokes.

Index Terms:

art and entertainment robotics, robotic drawing, 3D models

I INTRODUCTION

Oriental ink painting, also known as Shuimohua in China and Suibokuga in Japan, is an ancient monochrome painting art that abstracts complex objects into a few expressive strokes and draws the strokes on rice paper by skillfully using black ink, water and a soft brush. The philosophy of oriental ink painting is similar to the minimalist concept of “less is more” [1]. However, it is difficult for common people or robots to draw aesthetically pleasing paintings because even a single stroke can produce considerable variations in shading, and an artist may spend years mastering the drawing skills. Moreover, robotic painting from three-dimensional (3D) models involves several interdisciplinary fields such as robotics, computer graphics, human-computer interaction and art.

With the rapid advancement of Non-Photorealistic Rendering (NPR), a variety of digital arts have been created from images [2, 3]. However, most of these studies focus on generating digital works or imitating masters’ painting styles from two-dimensional (2D) data. Nowadays, it is convenient to reconstruct 3D models of real-world objects through depth sensors or multi-view photos [4]. In comparison with 2D sketches and images, 3D models can better convey the geometrical structure of objects. Thus, Grabli et al. [5] and Liu et al. [6] have studied the creation of stylized line drawings from 3D models. While these methods work well for generating digital paintings, realizing physical paintings with a robotic arm is challenging, since the motion control of the robot and the interaction among the brush, ink, and paper are totally different from digital rendering. Recently, Lindemeier et al. [7], Scalera et al. [8], and Löw et al. [9] have developed painting robots to achieve impressive oil painting, watercolor painting and portrait drawing from 2D images. However, current robotic drawing methods have not considered generating stylized ink paintings from 3D models. In this work, we design an oriental ink painting framework to physically draw stylized strokes from 3D models as shown in Fig. 1. The main contributions of this work include:

• a practical user interface to vectorize and extract expressive digital strokes from 3D models by referring to both the simplified contours and the original models.

• an optimization and mapping method for drawing smooth and physically consistent strokes from the digital strokes.

• a realization of two oriental ink painting styles, termed Noutan and Kasure, to create aesthetic effects of shading and scratchiness on rice paper.

• a novel framework to convert 3D models to oriental ink paintings through interactive editing and robotic drawing.

II RELATED WORK

The publications that are directly related to our work can be categorized into two groups: stylized line drawing methods from 3D models in simulation stage, and robotic drawing methods in real execution stage.

II-A Line Drawings from 3D Models

The problem of extracting and drawing expressive lines from 3D models is equivalent to the problem of how artists create line drawings from real objects, which is one of the challenging tasks in NPR. A detailed survey of line drawing methods from 3D models can be found in Bénard and Hertzmann’s tutorial [10]. Saito and Takahashi [11] first created stylized contour lines and curved hatching from 3D models by using 2D image processing operations. Winkenbach and Salesin [12] extended the work of [11] to generate more realistic line drawings from 3D models by integrating 2D and 3D rendering. Zhang et al. [13] presented a cellular automaton model to simulate the Suibokuga-like painting of 3D trees. DeCarlo et al. [14] proposed Suggestive Contours to improve the quality of line drawings by connecting perception knowledge and differential geometry. Grabli et al. [5] created vivid stylized line drawings such as Japanese big brush from 3D models based on the work of [14]. Judd et al. [15] defined view-dependent curvature to generate Apparent Ridges that captured more detailed features than the Suggestive Contours. Recently, Uchida and Saito [16] introduced two fully convolutional neural networks to determine the intensity of strokes for stylized line drawings. Liu et al. [6] generated impressive stylized strokes for line drawings based on a combination of a differentiable geometric module and an image translation network. Although these methods are able to extract feature lines and generate visually pleasing digital strokes from 3D models, they are unsuitable for creating physical stylized strokes for robotic drawing because the extracted lines are likely to be redundant or oversimplified, and the styles of digital strokes, which are usually implemented by texture mapping, are difficult to realize in physical drawing. Inspired by the work on interactive inking for cleaning rough sketches [17], our work does not pursue a fully automatic realization of line drawings but focuses on extracting a few non-overlapping expressive lines by combining an existing automatic contour extraction method with a human-computer interaction process.

II-B Robotic Drawing

The earliest work on robotic drawing dates back to Harold Cohen’s exhibitions at the Computer Museum in 1995 [18]. Cohen developed a robotic drawing system “AARON” that could color its own paintings using a variety of brushes. Thanks to advances in sensor and AI technologies, the diversity, perception and collaboration skills of robots have improved significantly. Calinon et al. [19] designed a Degrees of Freedom (DOFs) robotic arm to draw a portrait detected from a webcam using traditional image processing and inverse kinematics methods. Löw et al. [9] designed a robotic system “drozBot” to draw artistic portraits from images based on a novel ergodic control algorithm. However, the above-mentioned methods can only create monochrome paintings. Recently, Lindemeier et al. [7] proposed a painting robot to realize colored oil painting styles from 2D images. Luo and Liu [20] realized an interesting colored cartoon-style portrait painting using NPR techniques. Scalera et al. Karimov et al. [21] developed a data-driven model for accurate color mixing and reproduced famous artworks with a robotic arm. More recently, deep learning methods have been developed to solve more complicated robotic drawing problems. Zhang et al. [22] trained a network to identify the type of individual strokes for intelligent calligraphy beautification. Gao et al. [23] developed a robotic system for efficiently drawing portraits from images based on a network combining Neural Style Transfer and a Generative Adversarial Network. Bidgoli et al. [24] used a deep learning method to learn stylized brushstrokes from human artists and reproduce them through robotic painting. Furthermore, the surface of robotic drawing has been extended from 2D to 3D. Song et al. [25] presented an impedance control method that can draw a user’s 2D drawing on a 3D surface without vision support, and extended the drawing to a large and nonplanar surface with a mobile platform [26]. In contrast, Liu et al. [27] developed a robotic system to draw 2D strokes on a 3D surface based on scanned point clouds and a robust motion planning algorithm. While all these robotic drawing methods have varying focuses, such as artistic portrait drawing [19, 20, 23, 9], stylized colored painting from 2D images [7, 20, 8, 28], realistic calligraphy drawing [22], or robust drawing on 3D surfaces [25, 26, 27], they have not considered creating stylized ink paintings from 3D models, which requires understanding both the abstraction technique from 3D models and the motion control of a robotic arm and a brush to generate oriental ink painting styles such as Noutan and Kasure.

III OVERVIEW

In this work, we propose a semi-automatic robotic drawing framework to convert 3D models to physical stylized ink paintings. Fig. 2 illustrates the workflow of our robotic drawing framework. We start off with a shaded 3D model with different viewpoints. To depict shapes with a few feature strokes for ink painting, we extract geometrical contours from the 3D model and then employ a neural network to simplify the contours. Next, the simplified contour image is converted to a vector image by taking account of local and global curvatures, and a user interface is designed to allow users to pick, merge and insert expressive polylines in accordance with personal artistic perception for detailed enrichment. After user interaction, a digital ink painting with the selected strokes is automatically generated in real time. In order to control and draw physical strokes that are consistent with the digital strokes by using a robotic arm, we optimize the stroke trajectory and map the stroke properties from simulation space to physical space. Finally, two typical oriental ink painting styles, termed Noutan and Kasure, are realized to add aesthetic effects of shading and scratchiness to the physical strokes.

IV FEATURE LINE EXTRACTION AND SIMPLIFICATION

In contrast to western paintings, which have usually concentrated on the realism of visual objects since the Renaissance [29], most oriental ink paintings emphasize the inner spiritual essence instead of exact imitation of objects. A typical technique is to draw a few well-organized stylized strokes on rice paper, leaving white space to stimulate the imagination. In order to depict shapes with a few feature strokes, we extract geometry-based contours from 3D models and then utilize a neural network to simplify the contour lines for ink painting.

IV-A Feature Line Extraction from 3D Models

Inspired by the finding that digital line drawings can depict 3D models in a way that even matches artists’ drawings [30], we select three typical geometry-based digital contours, namely occluding contours (OCs), suggestive contours (SCs) [14], and apparent ridges (ARs) [15], as the basis for the creation of ink paintings from 3D models. Given a camera viewpoint and a 3D model, the combination of these contours produces a raster contour image as shown in Fig. 3 (a-d), where the OCs convey the rough shape of the object, and the SCs and ARs add a considerable amount of detail to the 3D model.

IV-B Neural Simplification Network

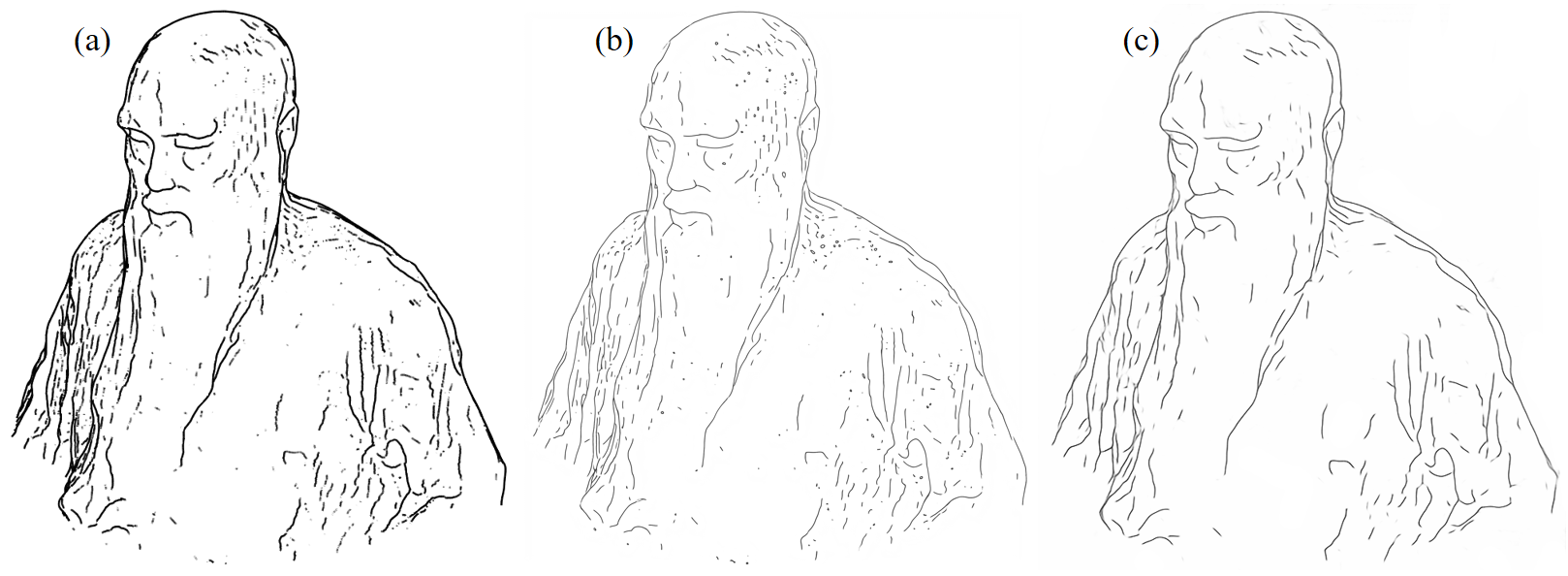

Although the extracted contours are a reasonable description of the 3D shape, they form a large number of dense and detailed lines that are unsuitable for oriental ink paintings, which focus on sparse stylized strokes. Motivated by the CNN-based neural network for sketch simplification [31], we employ a similar network with 3 down-convolution layers, 17 flat-convolution layers and 3 up-convolution layers to simplify the contour image. The loss function is defined as the -norm of the difference between the output image and the target image. However, the dataset of the previous network was built for cleaning rough pencil sketches. Therefore, we construct our own contour simplification dataset through manual annotation.

The dataset consists of two parts: rough contours automatically extracted from 3D models, and the corresponding feature lines selected by users. The 3D models in the dataset are constructed from categories including humans, flowers, animals and furniture, and each category has 3D model as shown in Fig. 4. To make the training robust, we augmented the dataset by applying image blurring, flipping and adding noise. Then, we measured precision by defining the contour pixels as “True” and the remaining pixels as “False”. The dataset was divided into a training set, a validation set and a test set with a ratio of . After epochs of iterative training with batch size of , the precision of the network converged to and on the training set and the validation set when considering only one viewpoint, and the corresponding accuracy converged to and when considering two viewpoints as shown in Fig. 5, which indicates that increasing the number of viewpoints did not change the network accuracy much.
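The pixel-wise precision described above can be sketched as follows. This is a minimal illustration, not the authors' evaluation code; the function name `contour_precision` and the list-of-lists image representation are assumptions made for the example.

```python
def contour_precision(predicted, target):
    """Precision of predicted contour pixels against annotated ones.

    Both inputs are 2D lists of booleans: True marks a contour pixel and
    False marks every other pixel, matching the labelling described above.
    """
    true_pos = 0
    false_pos = 0
    for pred_row, tgt_row in zip(predicted, target):
        for p, t in zip(pred_row, tgt_row):
            if p and t:
                true_pos += 1      # predicted contour pixel that is annotated
            elif p and not t:
                false_pos += 1     # predicted contour pixel that is not annotated
    predicted_total = true_pos + false_pos
    # Precision is undefined when nothing is predicted; 0.0 is returned here
    # as a convention chosen for this sketch.
    return true_pos / predicted_total if predicted_total else 0.0
```

Precision only penalizes spurious contour pixels; missed feature lines would need a recall-style measure in addition.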

V VECTORIZATION AND INTERACTIVE EDITING

Thus far, the simplified contours are represented by a raster image, and it is routine to convert the raster image to a vector image for ink painting. However, vectorization may generate small disconnected or noisy contours. Moreover, the contours might be so over-simplified that some expressive features are lost. Therefore, we developed a user interface to interactively edit the simplified contours.

V-A Vectorization

To generate smooth individual curves without junctions for robotic drawing, locating proper corners is critical because corners have significant variations in curvature. Thus, we use a robust corner detector to remove junctions or intersection points at which curves meet based on local and global curvatures [32]. The local curvature of the th contour pixel in a raster image is denoted by . The pixels with the maxima of absolute curvature are selected as corner candidates. However, round corners which are important for forming smooth curves might be incorrectly marked as candidates. Therefore, a global curvature threshold is defined to remove round corners by considering the mean curvature within a region of support :

| (1) |

where and are the nearest curvature minima on both sides of pixel , and is the splitting ratio to control the number of vectorized curves. If of a pixel is less than , the pixel is classified as a round corner and removed from the corner candidates. Finally, the remaining corner pixels are used as endpoints to form individual polylines, and each polyline is represented by a series of line segments .
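The round-corner test can be sketched in code as below. This is a hedged illustration in the spirit of the cited corner detector [32], not the authors' implementation: the function name, the `ratio` parameter (standing in for the splitting ratio), and the use of an open curve given as a plain list of curvature values are all assumptions.

```python
def remove_round_corners(curvature, ratio):
    """Return indices of corner candidates that survive the adaptive threshold.

    curvature: signed curvature at each contour pixel of an open curve.
    ratio: hypothetical name for the splitting ratio that scales the mean
           absolute curvature inside the region of support.
    """
    k = [abs(c) for c in curvature]
    n = len(k)
    corners = []
    for u in range(1, n - 1):
        # Corner candidate: local maximum of absolute curvature.
        if not (k[u] > k[u - 1] and k[u] >= k[u + 1]):
            continue
        # Region of support: from the nearest curvature minimum on the left
        # to the nearest curvature minimum on the right of the candidate.
        left = u
        while left > 0 and k[left - 1] <= k[left]:
            left -= 1
        right = u
        while right < n - 1 and k[right + 1] <= k[right]:
            right += 1
        support = k[left:right + 1]
        threshold = ratio * sum(support) / len(support)
        # A candidate below the threshold is treated as a round corner.
        if k[u] >= threshold:
            corners.append(u)
    return corners
```

In this sketch a smaller `ratio` lowers the threshold, keeps more corners and therefore produces more, shorter polylines, which agrees with the behaviour of the “Splitting ratio” slider described in Sec. V-B.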

(a) Interactive editing of vectorized contours.

(b) Refined result by referring to the 3D model.

V-B Interactive Editing

To generate appropriate stylized strokes for oriental ink painting which emphasizes sparse and expressive contours, we developed a user interface (UI) as shown in Fig. 6 to allow users to flexibly edit the simplified contours. The left view of the UI is designed for human-computer interaction, and the right view simultaneously shows the completed digital ink painting. The UI consists of several tools including “Split”, “Pick”, “Delete”, “Merge” and “Insert”.

Split. A “Splitting ratio” slider is used to adjust the splitting ratio for vectorization. Small will break up long curves and generate short polylines. In contrast, large will retain long curves. The default value of is set as .

Pick. The “Pick” tool allows users to interactively select expressive polylines. By default, the vectorized outside OCs are automatically selected as the target as shown in Fig. 6(a). However, the inside polylines require manual selection because not all the simplified SCs and ARs are appropriate for stylized painting. Once a polyline is selected, the users can extend the polyline by inserting new points.

Delete. The “Delete” tool is used to remove incorrectly picked polylines.

Merge. For over-simplified or over-segmented curves, the “Merge” tool can be used to re-connect broken polylines and form long curves.

Insert. The “Insert” tool is used to add new polylines. Features may be lost during the feature extraction step or after neural simplification. To ensure that the expressive features are correctly added or selected, our interface refers not only to the vectorized contours but also to the original model as shown in Fig. 6(b).

Moreover, we design two more sliders to control the maximum and minimum width (thickness) of a stroke. While the interaction process is not fully automatic, users can obtain expressive strokes according to their aesthetic understanding by taking advantage of the editing tools.

VI STROKE OPTIMIZATION AND MAPPING

Unlike in digital ink painting, it is difficult to precisely control and draw physical strokes by using a robotic arm with a soft brush. Thus, we optimize the stroke trajectory and map the stroke positions and thicknesses from simulation space to physical space instead of feeding them directly to the robotic arm.

VI-A Stroke Optimization

The extracted polylines may be non-smooth or unevenly sampled, which makes them unsuitable for direct use in robotic drawing. A B-spline curve can generate an evenly distributed smooth curve from several control points and avoids the Runge phenomenon. However, the choice of control points is crucial because the generated curve does not necessarily pass through the sample points, as shown in Fig. 7(a). Consider a cubic B-spline curve , where is control point and is the basis B-spline of degree . To compute a smooth curve that approximates the endpoints of the extracted lines, we employ a nonlinear optimization method [33] to fit using the following objective function:

| (2) |

where is the squared distance error term to measure the distance between the point and the curve , and are smoothness terms, and are constant coefficients. The minimization of the objective function can be solved by a Quasi-Newton iteration method to generate an updated fitting curve as shown in Fig. 7(b-d). After 15 iterations, the updated curve passes through almost all the sample points, as seen in Fig. 7(d).
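To illustrate why fitting is needed, the sketch below evaluates a uniform cubic B-spline and measures a sampled squared-distance error between a set of points and the curve. It is only an illustration under stated assumptions: it uses a uniform knot vector, approximates the point-to-curve distance by dense sampling, omits the smoothness terms, and does not implement the Quasi-Newton solver of [33]. All function names are hypothetical.

```python
def cubic_bspline_point(p0, p1, p2, p3, t):
    """Evaluate one uniform cubic B-spline segment at t in [0, 1]."""
    b0 = (1 - t) ** 3 / 6.0
    b1 = (3 * t ** 3 - 6 * t ** 2 + 4) / 6.0
    b2 = (-3 * t ** 3 + 3 * t ** 2 + 3 * t + 1) / 6.0
    b3 = t ** 3 / 6.0
    return tuple(b0 * a + b1 * b + b2 * c + b3 * d
                 for a, b, c, d in zip(p0, p1, p2, p3))


def sample_curve(control, per_segment=20):
    """Sample the whole curve defined by at least four control points."""
    pts = []
    for i in range(len(control) - 3):
        for j in range(per_segment):
            pts.append(cubic_bspline_point(*control[i:i + 4], j / per_segment))
    pts.append(cubic_bspline_point(*control[-4:], 1.0))
    return pts


def squared_distance_error(control, samples, per_segment=20):
    """Sum of squared distances from each sample to its nearest curve sample."""
    curve = sample_curve(control, per_segment)
    return sum(min((cx - sx) ** 2 + (cy - sy) ** 2 for cx, cy in curve)
               for sx, sy in samples)
```

Using the polyline vertices directly as control points leaves a non-zero error, because the curve is pulled inside the control polygon; an optimizer would adjust the control points to reduce this error while keeping the curve smooth.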

VI-B Thickness Setting

The thickness of a stroke at each of sampling vertices on the optimized B-spline curve is defined by an exponential interpolation of a minimum thickness and a maximum thickness in the middle of the curve:

| (3) |

where is an exponent to control the change rate of the stroke thickness [5]. Fig. 7(e) shows the simulated drawing stroke with thickness controlled by Eq. (3) when the default values of and were set as and respectively.
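A thickness profile with the properties described above can be sketched as follows. The exact form of Eq. (3) is not reproduced here; the expression in the code is an assumed stand-in that only shares its qualitative behaviour (minimum thickness at both ends, maximum thickness in the middle, and an exponent controlling the rate of change). The function and parameter names are hypothetical.

```python
def stroke_thickness(n, w_min, w_max, exponent):
    """Thickness at n sampling vertices along a stroke.

    Assumed profile: w_min at both ends, w_max at the middle, with
    `exponent` controlling how quickly the thickness changes.
    """
    if n < 2:
        return [w_max] * n
    widths = []
    for i in range(n):
        u = i / (n - 1)                 # normalized position in [0, 1]
        s = 1.0 - abs(2.0 * u - 1.0)    # 0 at the ends, 1 at the middle
        widths.append(w_min + (w_max - w_min) * s ** exponent)
    return widths
```

A larger exponent keeps the stroke thin for longer near its ends and concentrates the thick part around the middle.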

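Tab. I: Measured stroke thickness for increasing brush descent.

| Brush descent | 2 | 4 | 6 | 8 | 10 | 12 | 14 | 16 | 18 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Stroke thickness | 3 | 4 | 5 | 6.5 | 10.5 | 12 | 13 | 14 | 14.5 |

Robotic drawing strokes:

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/7104480a-a49f-4364-be3e-e8d53baba796/stroke_mapping.jpg)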

VI-C Stroke Mapping

To physically draw strokes that are consistent with the simulated strokes shown in Fig. 7(e), we first carried out an experiment to derive the relationship between the stroke thickness and the descent of the brush, which is controlled by the robotic arm. Let be the height at which the brush tip just reaches the paper without distortion. The descent step of the brush was set as , and was updated from to . The footprint of the brush resembles a droplet, and increasing the descent bends the brush more severely, which in turn leads to a wider stroke as shown in Tab. I. The thickness of each stroke was measured at its widest part. Then, a standard least-squares fitting approach was applied to estimate the relationship between and . Finally, we obtained , where , , and the coefficient of determination is 0.957, which indicates that the linear fit is appropriate for thickness prediction. Correspondingly, the stroke position for robotic drawing can be mapped by , where is the centroid of the sampling vertices in simulation space, and is the center of the robot Cartesian space. Fig. 7(f) shows the robotic drawing stroke, which is close to the simulated one after stroke mapping.
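The least-squares step can be reproduced from the values in Tab. I with a few lines of code. The sketch below regresses thickness on descent, which is an assumption about the direction of the fit; the coefficient of determination is the same either way. The position mapping is likewise an assumed form (a translation from the simulation centroid to the robot-space center with an optional scale), and all names are hypothetical.

```python
def fit_line(xs, ys):
    """Ordinary least-squares line y = slope * x + intercept, with R^2."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    ss_res = sum((y - (slope * x + intercept)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    return slope, intercept, 1.0 - ss_res / ss_tot


# Values taken from Tab. I.
descent = [2, 4, 6, 8, 10, 12, 14, 16, 18]
thickness = [3, 4, 5, 6.5, 10.5, 12, 13, 14, 14.5]
slope, intercept, r_squared = fit_line(descent, thickness)


def descent_for_thickness(target_thickness):
    """Invert the fitted line to get the brush descent for a desired thickness."""
    return (target_thickness - intercept) / slope


def map_position(point, sim_centroid, robot_center, scale=1.0):
    """Assumed mapping: recenter a simulated vertex around the robot-space center."""
    return tuple(scale * (p - c) + r
                 for p, c, r in zip(point, sim_centroid, robot_center))
```

With the Tab. I data, `r_squared` evaluates to about 0.957, in line with the coefficient of determination reported above.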

VII ROBOTIC DRAWING OF STYLIZED INK PAINTING

Oriental ink paintings use a simple brush, black ink and water to generate strokes with varying painting styles, and thereby depict complex scenery such as bamboos, fish, shrimps and mountains. However, it is extremely difficult for common people to master the painting styles. Therefore, we employ a robotic arm to realize typical ink painting styles, with the vision that even an amateur can draw a fair ink painting by taking advantage of the robotic arm.

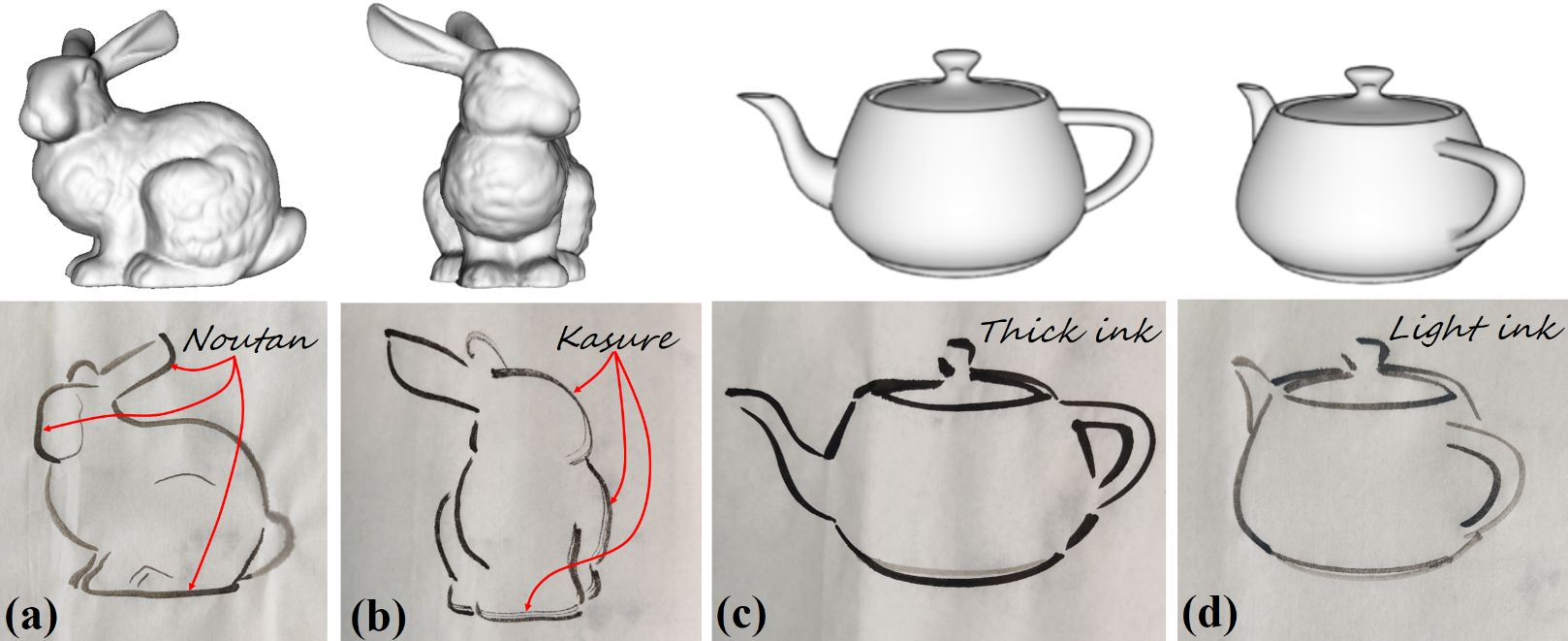

VII-A Oriental Ink Painting Styles

In traditional oriental ink paintings, patterns of light and darkness can be applied to strokes by using two typical ink painting styles, termed Noutan (shade) and Kasure (scratchiness) [13, 34], as shown in Fig. 2 and Fig. 8. Noutan uses water to lighten the ink and creates shades of gray within a stroke. Fig. 8 (a, b) shows the light gray, dark gray and gradient shading strokes drawn by different Noutan shading styles. Kasure is the scratchy break-up of a stroke trajectory caused by an insufficient and uneven supply of ink along the brush as shown in Fig. 8(a). In our work, we explore an automatic approach to achieve the two painting styles using a robotic arm.

VII-B Robotic Drawing of Ink Painting Styles

To create the shading effect, we assume that Noutan is a diffusion process of a brush with varying color of ink. Therefore, two ink stones, which are filled with light ink and thick ink, are prepared for mixing the two kinds of inks using the brush as shown in Fig. 9.

In our experiment, we observed that fully moistening the brush in the light ink and scraping the ink at the edge of the ink stone in advance helps to form a natural Noutan style. Thus, we defined a series of actions for automatically rendering the Noutan style:

| (4) | ||||

where , , and represent the actions of translation, dipping and scraping of the brush; , and are the central positions and radii of the ink stones. Eq. (4) describes that the action Noutan consists of (1) a cross-like translation, dipping and scraping in directions for fully moistening and adjusting the brush in the light ink, and (2) a translation and dipping for mixing in the thick ink. Next, the dipping time and dipping height of the brush in the thick ink can be applied to control the shading degree of Noutan:

| (5) |

where is the length of a brush, and is a constant coefficient. Let which denotes the dipped ratio of a brush. Then, a small and a small will create bright shading effect as shown in Fig. 10(a) because of shallow mixing in the thick ink, and a large and a large will generate dark shading effect as shown in Fig. 10(b) because of sufficient mixing in the thick ink.
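The Noutan action sequence described above can be sketched as a list of brush actions. This is an illustrative assumption rather than the authors' controller: the number of scraping directions defaults to four because the motion is described as cross-like, the dipped ratio is assumed to be the dipping height divided by the brush length, and the action names and tuple layout are hypothetical.

```python
import math


def dipped_ratio(dip_height, brush_length):
    """Assumed definition: fraction of the brush submerged in the thick ink."""
    return dip_height / brush_length


def noutan_actions(light_center, light_radius, thick_center,
                   dip_time, dip_height, directions=4):
    """Build the brush actions for one Noutan preparation.

    Step 1: in the light ink, repeat translate, dip and scrape at the edge of
            the ink stone in each direction (cross-like for four directions).
    Step 2: translate to the thick ink and dip for the given time and height.
    """
    actions = []
    for k in range(directions):
        angle = 2.0 * math.pi * k / directions
        edge = (light_center[0] + light_radius * math.cos(angle),
                light_center[1] + light_radius * math.sin(angle))
        actions.append(("translate", light_center))
        actions.append(("dip", light_center))
        actions.append(("scrape", edge))
    actions.append(("translate", thick_center))
    actions.append(("dip", thick_center, dip_time, dip_height))
    return actions
```

Keeping the sequence as plain data makes it straightforward to append its way-points to a stroke trajectory before inverse kinematics is solved.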

Kasure mainly depends on the property of brush hairs, the quantity of ink absorbed by the brush and the area of drawing strokes. It is difficult to dynamically control the property of each brush hair such as the length, stiffness and the ability of absorbing fluid. However, it is possible to control the amount of ink on the brush and the area of strokes. Therefore, we assume that the degree of scratchiness is proportional to the stroke area which consists of a series of trapezoids and inversely proportional to the absorbed quantity of ink which is related to the dipping time and the dipped ratio :

| (6) |

where and are constant coefficients. In our experiment, the brush remained dry before being dipped into an ink stone. Fig. 10(c) shows the distinguishable effect of scratchiness when setting a small and a small . In contrast, the Kasure effect only happened at the very end of the stroke when setting a large and a large as shown in Fig. 7(f).
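The stroke area used in the Kasure assumption can be computed by summing trapezoids between consecutive sampling vertices, as sketched below. The scratchiness index that follows is only an assumed stand-in for Eq. (6): it is proportional to the area and inversely proportional to an ink quantity taken here as the product of dipping time and dipped ratio. The coefficient names `k_area` and `k_ink` are hypothetical.

```python
import math


def stroke_area(points, widths):
    """Area of a stroke as a chain of trapezoids.

    points: sampling positions (x, y) along the stroke.
    widths: stroke thickness at each sampling position.
    """
    area = 0.0
    for (x0, y0), (x1, y1), w0, w1 in zip(points, points[1:], widths, widths[1:]):
        length = math.hypot(x1 - x0, y1 - y0)
        area += 0.5 * (w0 + w1) * length   # trapezoid with parallel sides w0, w1
    return area


def kasure_degree(area, dip_time, ratio, k_area=1.0, k_ink=1.0):
    """Assumed scratchiness index: grows with area, shrinks with absorbed ink."""
    ink_quantity = k_ink * dip_time * ratio
    return k_area * area / ink_quantity
```

Under this assumed form, halving the dipping time or the dipped ratio doubles the index, which matches the qualitative observation that a briefly and shallowly dipped brush produces a stronger Kasure effect.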

VII-C Trajectory Planning

In order to draw stylized strokes using robotic arms, we first mapped the sampling positions and thicknesses of an optimized stroke in simulation space to in robot Cartesian space as introduced in Sec. VI. Then, we set the painting styles, dipping time and dipped ratio of a brush for stylized drawing. Next, the brush was lifted to an initial safe plane with a height above a drawing plane, waiting for real execution as shown in Fig. 9. After that, a series of actions such as , and were combined to form various painting styles. Finally, the stroke trajectory integrated with the way-points of these actions was automatically converted from Cartesian space to joint rotations in joint space by solving an inverse kinematics problem [35]. An important design choice in trajectory planning is whether to use a joint-space or a task-space trajectory. In our framework, we used a task-space trajectory since it generates physical strokes that are more faithful to the simulated ones. Furthermore, the speed of robotic drawing for each stroke was constrained by a typical trapezoidal velocity model, which ensures piecewise trajectories of constant acceleration, zero acceleration, and constant deceleration.
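The trapezoidal velocity model mentioned above can be sketched as follows. This is a generic textbook formulation with symmetric acceleration and deceleration, not the controller of the robotic arm used in this work; the function names and parameters are assumptions for illustration.

```python
import math


def trapezoid_profile(distance, v_max, accel):
    """Return (t_acc, t_cruise, v_peak) for a symmetric trapezoidal profile.

    If the stroke is too short to reach v_max, the profile degenerates into
    a triangle with a lower peak speed and no cruise phase.
    """
    v_peak = min(v_max, math.sqrt(distance * accel))
    t_acc = v_peak / accel
    d_acc = 0.5 * accel * t_acc ** 2
    t_cruise = max(0.0, (distance - 2.0 * d_acc) / v_peak)
    return t_acc, t_cruise, v_peak


def speed_at(t, distance, v_max, accel):
    """Speed along the stroke at time t."""
    t_acc, t_cruise, v_peak = trapezoid_profile(distance, v_max, accel)
    total = 2.0 * t_acc + t_cruise
    if t <= 0.0 or t >= total:
        return 0.0
    if t < t_acc:
        return accel * t              # constant acceleration
    if t < t_acc + t_cruise:
        return v_peak                 # zero acceleration (cruise)
    return accel * (total - t)        # constant deceleration
```

For example, a 100-unit stroke with `v_max = 10` and `accel = 5` accelerates for 2 time units, cruises for 8 and decelerates for 2.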

VIII RESULTS AND DISCUSSION

Our robotic drawing framework was implemented on a PC configured with a CPU, RAM, and Windows OS. The user interface, optimization and control algorithms were developed in Python and C++. To satisfy the requirements of painting for common people, a consumer-grade robotic arm “Dobot Magician” (less than ) with positioning repeatability is used to draw different painting styles. All motions of the robotic arm are realized in DOFs, and the drawing speed of the robotic arm is set from to for stable painting.

VIII-A Evaluation of Simplification Network

Fig. 11 shows two different simplified contours generated from the input rough contours of a 3D model. We can observe that our updated model with augmented dataset generated cleaner and longer contours than the results of the pre-trained model of Simo-Serra et al. [31].

VIII-B Evaluation of Editing Tools

If all the simplified contours are used directly to generate strokes without interactive editing, many redundant strokes overlap with each other and some expressive strokes may be missing, as shown in Fig. 12(c). Therefore, we designed the interactive editing tools to choose and modify expressive strokes by referring to both the simplified contours and the original 3D models. Fig. 12(d) shows that our editing tools can generate clean and expressive strokes.

VIII-C Evaluation of Stroke Optimization

To evaluate the effectiveness of the stroke optimization algorithm, we conducted an experiment to verify that the physically drawn stroke keeps the shape of a simulated curve when the brush is deformed with various descent heights, as shown in Fig. 13. The first row of Fig. 13 shows the robotic drawing results of strokes before B-spline fitting and optimization. The second row shows the results after B-spline fitting without optimization, and the third row shows the results after B-spline fitting with optimization.

To quantitatively evaluate the stroke optimization method, we used the angle of contingence (the slope change between contiguous straight-line segments at a point) presented by [36] to represent the discrete curvature at the typical turning points of the simulated curve as shown in Fig. 14. The original angles of contingence of the turning points in the simulated curve are , , , , and . The angles of contingence corresponding to the three kinds of physically drawn strokes in Fig. 13 are shown in Tab. II, III and IV. Fig. 15(a-c) show the corresponding changing trends of from Tab. II, III and IV.

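Tab. II: Angles of contingence at the turning points of strokes drawn before B-spline fitting and optimization.

| descent height | 1 | 2 | 3 | 4 | 5 | 6 | average error |
| --- | --- | --- | --- | --- | --- | --- | --- |
| 47.5 mm | 140 | 91 | 140 | 90 | 150 | 170 | 7.33 |
| 46.5 mm | 145 | 95 | 138 | 92 | 151 | 171 | 9.17 |
| 45.5 mm | 149 | 96 | 138 | 93 | 158 | 169 | 11.00 |
| 44.5 mm | 158 | 100 | 140 | 92 | 149 | 175 | 12.83 |
| 43.5 mm | 164 | 102 | 140 | 93 | 150 | 165 | 12.83 |

Tab. III: Angles of contingence at the turning points of strokes drawn after B-spline fitting without optimization.

| descent height | 1 | 2 | 3 | 4 | 5 | 6 | average error |
| --- | --- | --- | --- | --- | --- | --- | --- |
| 47.5 mm | 160 | 115 | 140 | 96.5 | 147 | 164 | 14.25 |
| 46.5 mm | 163 | 122 | 142 | 97 | 145 | 165 | 16.17 |
| 45.5 mm | 165 | 123 | 142 | 98 | 148 | 169 | 16.83 |
| 44.5 mm | 166 | 125 | 144 | 100 | 147 | 175 | 18.00 |
| 43.5 mm | 165 | 127 | 144 | 99 | 152 | 165 | 18.67 |

Tab. IV: Angles of contingence at the turning points of strokes drawn after B-spline fitting with optimization.

| descent height | 1 | 2 | 3 | 4 | 5 | 6 | average error |
| --- | --- | --- | --- | --- | --- | --- | --- |
| 47.5 mm | 140 | 97 | 135 | 95 | 150 | 155 | 5.83 |
| 46.5 mm | 142.5 | 100 | 135 | 93 | 148 | 158 | 6.58 |
| 45.5 mm | 147 | 105 | 136 | 92 | 148 | 158 | 8.16 |
| 44.5 mm | 150 | 113 | 136 | 94 | 150 | 160 | 11.00 |
| 43.5 mm | 151 | 121 | 138 | 95.5 | 147 | 160 | 12.58 |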

From Tab. II and III, we can observe that the total average error of after fitting is , which is much larger than that of the angle of contingence () before fitting, though the fitted curve generated smoother strokes as shown in Fig. 13. From Tab. II and IV, we can conclude that the optimized B-spline curve not only yields the minimal total average error , but also generates smooth strokes. Therefore, the proposed B-spline fitting and optimization method has the merits of retaining the shape of the simulated stroke as well as generating physically smooth strokes.
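The angle-based measure used in Tab. II to IV can be sketched as follows. The code computes the slope change between two contiguous segments at a vertex and a mean absolute error against reference angles. Two points are assumptions: whether the tabulated values are slope changes or the supplementary interior angles is not stated here, and the “average error” is taken to be the mean absolute difference. The function names are hypothetical.

```python
import math


def angle_of_contingence(prev_pt, pt, next_pt):
    """Slope change in degrees between segments prev_pt->pt and pt->next_pt."""
    a1 = math.atan2(pt[1] - prev_pt[1], pt[0] - prev_pt[0])
    a2 = math.atan2(next_pt[1] - pt[1], next_pt[0] - pt[0])
    diff = abs(a2 - a1)
    if diff > math.pi:
        diff = 2.0 * math.pi - diff    # wrap into [0, pi]
    return math.degrees(diff)


def interior_angle(prev_pt, pt, next_pt):
    """Supplement of the slope change: 180 degrees for a straight line."""
    return 180.0 - angle_of_contingence(prev_pt, pt, next_pt)


def average_error(measured, reference):
    """Assumed error measure: mean absolute difference of the angles."""
    return sum(abs(m - r) for m, r in zip(measured, reference)) / len(measured)
```

A right-angle turn gives a slope change of 90 degrees, while three collinear points give 0 degrees (an interior angle of 180 degrees).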

Furthermore, we compared the drawing results of the “Stanford Bunny” before and after using the optimization method. The optimized strokes are smoother and more natural than the original strokes before optimization, as shown in the colored rectangles of Fig. 16.

VIII-D Evaluation of Painting Styles

To test the oriental ink painting styles, we have conducted an experiment to show the effect of Noutan and Kasure on “Stanford Bunny” and “The Utah Teapot” from different viewpoints. Fig. 17(a) shows the gradient shading effect of Noutan style and Fig. 17(b) shows the scratchy effect of Kasure style. The extreme case of Noutan style is using thick ink or light ink only to convey strong or delicate impression as shown in Fig. 17(c, d).

To quantitatively measure the intensity of the two ink painting styles, we set different combinations of dipped ratio and dipping time , drew the paintings with Noutan and Kasure styles on rice paper, and invited experts to score each painting.

Fig. 18 shows the results of Noutan experiment. To observe the different effect of parameters, we changed one parameter while keeping the remaining parameter constant. The first row of Fig. 18 shows the score of Noutan effect when is constant and is changing, and the second row of Fig. 18 shows the score of Noutan effect when is constant and is changing. As the dipping time is gradually increased from to , the Noutan intensity decreases and converges to . On the other hand, when the dipped ratio is increased from to , the Noutan intensity gradually decreases from to .

Fig. 19 shows the results of the Kasure experiment. The effects of the dipping time and the dipped ratio are similar to those in the Noutan experiment. Moreover, we can observe that the dipped ratio has a greater impact on style intensity than the dipping time.

Furthermore, we designed an experiment to demonstrate the effect of the robot’s velocity on the Kasure style of a stroke, and the drawing results are shown in Fig. 20. The experiment was conducted with the dipped ratio and the dipping time . The drawing velocity was set from to with an increasing step . From the results, we can observe that increasing the robot’s velocity results in a more intense Kasure effect at the initial stage from to . However, the Kasure effect converges as the speed increases at the closing stage from to . The results show that the robot’s velocity does affect the final painting styles.

However, many factors may affect the painting style, such as the drawing speed, the properties of the brush hairs, the quantity of ink absorbed by the brush and the area of the drawn strokes. In our work, it is difficult to account for all of these factors when controlling a specific painting style. Inspired by Strassmann’s pioneering work on non-photorealistic rendering, “Hairy Brushes”, we fixed the drawing velocity and mainly considered ink quantity, because the ink supply on each bristle of a brush is assumed to be a reservoir holding a finite quantity of fluid, and that quantity decreases as the brush moves through a stroke [34]. Therefore, our framework generates ink styles by controlling ink quantity rather than the robot’s velocity.
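
The reservoir assumption can be illustrated with a toy model in which each bristle starts with a finite amount of ink and loses a fixed amount per step along the stroke. This is a minimal sketch for intuition only: the function `bristle_coverage`, the constant per-step consumption and the sample values are assumptions made here, and it is neither Strassmann's full model nor the controller used in our framework.

```python
from typing import List


def bristle_coverage(
    initial_ink: List[float],
    use_per_step: float,
    n_steps: int,
) -> List[float]:
    """Fraction of bristles that still deposit ink at each step of a stroke.

    Every bristle is a finite reservoir (assumption): while it holds ink it
    marks the paper and loses `use_per_step`; once empty it leaves a gap.
    """
    remaining = list(initial_ink)
    coverage = []
    for _ in range(n_steps):
        wet = 0
        for i, ink in enumerate(remaining):
            if ink > 0.0:
                wet += 1
                remaining[i] = max(0.0, ink - use_per_step)
        coverage.append(wet / len(remaining))
    return coverage


if __name__ == "__main__":
    # Hypothetical ink loads for four bristles; a smaller load dries out sooner.
    print(bristle_coverage([1.0, 2.0, 3.0, 4.0], use_per_step=1.0, n_steps=5))
    # prints [1.0, 0.75, 0.5, 0.25, 0.0]
```

In this toy model a smaller initial ink load makes bristles run dry earlier in the stroke, which is the qualitative behaviour behind a scratchy Kasure appearance.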

VIII-E Applications

We also examined the ability of our framework to draw a complicated stylized portrait from the standard 3D model “Lucy”, as shown in Fig. 21. The results showed that the physically drawn strokes retained the characteristics of the reference model after feature extraction, simplification, digital simulation and optimization. Note that the Kasure and Noutan styles have been applied to the hair and shoulder of “Lucy”, respectively.

To evaluate the scalability of our robotic drawing framework to an arbitrary user-defined model, we took approximately 40 photos around a sculpture named “Youren” with an ordinary smartphone, and then reconstructed its point cloud and surface using SfM (Structure from Motion) and screened Poisson reconstruction algorithms. Fig. 22 shows that a small number of stylized strokes can depict the facial expression of a character, which indicates that our framework has the potential to draw vivid portraits from varying viewpoints once a 3D model is given.

VIII-F Performance

We measured the time spent on line extraction, stroke optimization and robotic drawing for the tested 3D models, each from 2 viewpoints; the quantitative results of our robotic drawing framework are shown in Tab. V.

| model | viewpoint | number of strokes | line extraction | stroke optim. | robotic drawing |
| --- | --- | --- | --- | --- | --- |
| teapot | V1 | 16 | 86s | 3.9s | 322s |
| teapot | V2 | 14 | 78s | 3.8s | 316s |
| bunny | V1 | 14 | 84s | 3.8s | 303s |
| bunny | V2 | 12 | 75s | 3.6s | 356s |
| Lucy | V1 | 44 | 315s | 14.7s | 723s |
| Lucy | V2 | 29 | 291s | 8.9s | 839s |
| Youren | V1 | 51 | 512s | 16.3s | 450s |
| Youren | V2 | 76 | 762s | 24.1s | 541s |

Although strokes vary in length and thickness, the average execution time of line extraction, stroke optimization and robotic drawing of each stroke is , and . The results show that robotic drawing is the most time-consuming stage because physically drawing a stroke with a brush is inherently slow; expressive line extraction is the second most time-consuming stage because it requires user interaction; and stroke optimization is the most efficient stage because it is fully automatic.
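
As a worked check, per-stroke averages can be derived from Tab. V by dividing the total time of each stage by the total number of strokes. The sketch below assumes this pooled average; the exact averaging procedure is not specified in the text, and the names `ROWS` and `per_stroke_averages` are introduced here for illustration only.

```python
from typing import List, Tuple

# (model, viewpoint, strokes, line extraction [s], stroke optim. [s], robotic drawing [s])
# Values copied from Tab. V.
ROWS: List[Tuple[str, str, int, float, float, float]] = [
    ("teapot", "V1", 16, 86.0, 3.9, 322.0),
    ("teapot", "V2", 14, 78.0, 3.8, 316.0),
    ("bunny", "V1", 14, 84.0, 3.8, 303.0),
    ("bunny", "V2", 12, 75.0, 3.6, 356.0),
    ("Lucy", "V1", 44, 315.0, 14.7, 723.0),
    ("Lucy", "V2", 29, 291.0, 8.9, 839.0),
    ("Youren", "V1", 51, 512.0, 16.3, 450.0),
    ("Youren", "V2", 76, 762.0, 24.1, 541.0),
]


def per_stroke_averages(rows) -> Tuple[float, float, float]:
    """Pooled average seconds per stroke for each stage.

    Assumption: average = total stage time / total number of strokes.
    """
    total_strokes = sum(row[2] for row in rows)
    extraction = sum(row[3] for row in rows) / total_strokes
    optimization = sum(row[4] for row in rows) / total_strokes
    drawing = sum(row[5] for row in rows) / total_strokes
    return extraction, optimization, drawing


if __name__ == "__main__":
    extraction, optimization, drawing = per_stroke_averages(ROWS)
    print(f"line extraction: {extraction:.2f} s/stroke")
    print(f"stroke optimization: {optimization:.2f} s/stroke")
    print(f"robotic drawing: {drawing:.2f} s/stroke")
```

Under this pooled assumption the table yields roughly 8.61 s, 0.31 s and 15.04 s per stroke for line extraction, stroke optimization and robotic drawing, which matches the ordering of the three stages discussed above; a different averaging scheme (for example, a mean of per-model averages) would give different numbers.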

IX Conclusion

We presented a new robotic drawing framework for converting 3D models to oriental ink paintings. By combining automatic contour extraction with a human-computer interaction process, the framework lets users flexibly create personalized expressive digital strokes in the simulation stage. Furthermore, through the proposed stroke optimization, mapping and motion planning methods, the framework is able to draw stylized ink paintings in the real execution stage, which is the key contribution of our work.

However, the current framework has several limitations. First, it is not fully automatic because the selection of expressive contours depends on the individual user, and the manually annotated data is not sufficient for training a comprehensive network for arbitrary 3D models. In the future, we are going to collect annotations from painting experts, train a contour selection network on them, and ultimately achieve a more streamlined end-to-end framework. Second, the current drawing focuses on contours instead of shadows and textures. We intend to learn the diffusion effect of ink paintings by considering advanced NPR techniques such as hatching. Third, the impact of drawing speed is not considered in robotic drawing. We will improve the performance of painting styles with a fine-grained velocity-intensity model. Finally, DynamicFusion [37] and SplitFusion [38] are very promising 3D vision works for generating 3D models in real time. We plan to use these methods to generate 3D models in real time and draw the corresponding artworks in ink painting styles in our future work.

For a better understanding of the results of interactive editing, optimization and robotic drawing, please refer to the supplementary video from the submitted attachment or the link (https://cie.nwsuaf.edu.cn/docs/2023-08/630b0573861443c3ae1366de3cef1263.mp4).

Acknowledgement

We gratefully thank the associate editor and the reviewers for their constructive comments and suggestions. This work was supported in part by the Natural Science Basic Research (NSBR) Plan of Shaanxi under Grant 2022JM-363, the Key Project of Shaanxi Province-City Linkage under Grant 2022GD-TSLD-53 and the National Natural Science Foundation of China under Grant 61303124.

References

- [1] H. Chen. (2020) CGI ink-painting animation in contemporary China, 1989-2019. [Online]. Available: https://acas.world/2020/07/24/cgi-ink-painting-animation-in-contemporary-china-1989-2019/

- [2] D. Chen, L. Yuan, J. Liao, N. Yu, and G. Hua, “Stylebank: An explicit representation for neural image style transfer,” in IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2017, pp. 1897–1906.

- [3] F. Tang, W. Dong, Y. Meng, X. Mei, F. Huang, X. Zhang, and O. Deussen, “Animated construction of Chinese brush paintings,” IEEE Transactions on Visualization and Computer Graphics, vol. 24, no. 12, pp. 3019–3031, 2017.

- [4] M. Berger, A. Tagliasacchi, L. M. Seversky, P. Alliez, G. Guennebaud, J. A. Levine, A. Sharf, and C. T. Silva, “A survey of surface reconstruction from point clouds,” Computer Graphics Forum, vol. 36, no. 1, pp. 301–329, 2017.

- [5] S. Grabli, E. Turquin, F. Durand, and F. X. Sillion, “Programmable rendering of line drawing from 3D scenes,” ACM Transactions on Graphics, vol. 29, no. 2, pp. 1–20, 2010.

- [6] D. Liu, M. Fisher, A. Hertzmann, and E. Kalogerakis, “Neural strokes: Stylized line drawing of 3D shapes,” in IEEE/CVF International Conference on Computer Vision, 2021, pp. 14 184–14 193.

- [7] T. Lindemeier, J. Metzner, L. Pollak, and O. Deussen, “Hardware-based non-photorealistic rendering using a painting robot,” Computer Graphics Forum, vol. 34, no. 2, pp. 311–323, 2015.

- [8] L. Scalera, S. Seriani, A. Gasparetto, and P. Gallina, “Watercolour robotic painting: a novel automatic system for artistic rendering,” Journal of Intelligent & Robotic Systems, vol. 95, pp. 871–886, 2019.

- [9] T. Löw, J. Maceiras, and S. Calinon, “drozbot: Using ergodic control to draw portraits,” IEEE Robotics and Automation Letters, vol. 7, no. 4, pp. 11 728–11 734, 2022.

- [10] P. Bénard and A. Hertzmann, “Line drawings from 3D models: A tutorial,” Foundations and Trends in Computer Graphics and Vision, vol. 11, no. 1–2, pp. 1–159, 2019.

- [11] T. Saito and T. Takahashi, “Comprehensible rendering of 3D shapes,” in ACM SIGGRAPH 1990, 1990, pp. 197–206.

- [12] G. Winkenbach and D. H. Salesin, “Computer-generated pen-and-ink illustration,” in ACM SIGGRAPH 1994, 1994, pp. 91–100.

- [13] Q. Zhang, Y. Sato, J. Takahashi, K. Muraoka, and N. Chiba, “Simple cellular automaton-based simulation of ink behaviour and its application to Suibokuga-like 3D rendering of trees,” Journal of Visualization and Computer Animation, vol. 10, no. 1, pp. 27–37, 1999.

- [14] D. DeCarlo, A. Finkelstein, S. Rusinkiewicz, and A. Santella, “Suggestive contours for conveying shape,” ACM Transactions on Graphics, vol. 22, no. 3, pp. 848–855, 2003.

- [15] T. Judd, F. Durand, and E. Adelson, “Apparent ridges for line drawing,” in ACM SIGGRAPH 2007, 2007, pp. 19–es.

- [16] M. Uchida and S. Saito, “Stylized line-drawing of 3D models using CNN with line property encoding,” Computers & Graphics, vol. 91, pp. 252–264, 2020.

- [17] E. Simo-Serra, S. Iizuka, and H. Ishikawa, “Real-time data-driven interactive rough sketch inking,” ACM Transactions on Graphics, vol. 37, no. 4, 2018.

- [18] H. Cohen. (1995) The robotic artist: AARON in living color. [Online]. Available: https://dam.org/museum/wp-content/uploads/2021/01/Cohen1995.pdf

- [19] S. Calinon, J. Epiney, and A. Billard, “A humanoid robot drawing human portraits,” in IEEE-RAS International Conference on Humanoid Robots, 2005, pp. 161–166.

- [20] R. C. Luo and Y. J. Liu, “Robot artist performs cartoon style facial portrait painting,” in IEEE/RSJ International Conference on Intelligent Robots and Systems, 2018, pp. 7683–7688.

- [21] A. Karimov, E. Kopets, S. Leonov, L. Scalera, and D. Butusov, “A robot for artistic painting in authentic colors,” Journal of Intelligent & Robotic Systems, vol. 107, no. 3, pp. 34–54, 2023.

- [22] X. Zhang, Y. Li, Z. Zhang, K. Konno, and S. Hu, “Intelligent Chinese calligraphy beautification from handwritten characters for robotic writing,” The Visual Computer, vol. 35, no. 6–8, pp. 1193–1205, 2019.

- [23] F. Gao, J. Zhu, Z. Yu, P. Li, and T. Wang, “Making robots draw a vivid portrait in two minutes,” in IEEE/RSJ International Conference on Intelligent Robots and Systems, 2020, pp. 9585–9591.

- [24] A. Bidgoli, M. L. De Guevara, C. Hsiung, J. Oh, and E. Kang, “Artistic style in robotic painting; a machine learning approach to learning brushstroke from human artists,” in IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), 2020, pp. 412–418.

- [25] D. Song, T. Lee, and Y. J. Kim, “Artistic pen drawing on an arbitrary surface using an impedance-controlled robot,” in IEEE International Conference on Robotics and Automation, 2018, pp. 4085–4090.

- [26] D. Song, J. Park, and Y. J. Kim, “Ssk: Robotic pen-art system for large, nonplanar canvas,” IEEE Transactions on Robotics, vol. 39, no. 4, pp. 3106–3119, 2023.

- [27] R. Liu, W. Wan, K. Koyama, and K. Harada, “Robust robotic 3D drawing using closed-loop planning and online picked pens,” IEEE Transactions on Robotics, vol. 38, no. 3, pp. 1773–1792, 2022.

- [28] P. Schaldenbrand, J. McCann, and J. Oh, “Frida: A collaborative robot painter with a differentiable, real2sim2real planning environment,” in 2023 IEEE International Conference on Robotics and Automation (ICRA), 2023, pp. 11 712–11 718.

- [29] Y. Bao, T. Yang, X. Lin, Y. Fang, Y. Wang, E. Pöppel, and Q. Lei, “Aesthetic preferences for eastern and western traditional visual art: Identity matters,” Frontiers in Psychology, vol. 7, pp. 1–8, 2016.

- [30] F. Cole, K. Sanik, D. DeCarlo, A. Finkelstein, T. Funkhouser, S. Rusinkiewicz, and M. Singh, “How well do line drawings depict shape?” in ACM SIGGRAPH 2009, 2009.

- [31] E. Simo-Serra, S. Iizuka, K. Sasaki, and H. Ishikawa, “Learning to simplify: Fully convolutional networks for rough sketch cleanup,” ACM Transactions on Graphics, vol. 35, no. 4, pp. 1–11, 2016.

- [32] X. He and N. H. C. Yung, “Corner detector based on global and local curvature properties,” Optical Engineering, vol. 47, no. 5, 2008.

- [33] W. Wang, H. Pottmann, and Y. Liu, “Fitting B-spline curves to point clouds by curvature-based squared distance minimization,” ACM Transactions on Graphics, vol. 25, no. 2, pp. 214–238, 2006.

- [34] S. Strassmann, “Hairy brushes,” in ACM SIGGRAPH 1986, 1986, pp. 225–232.

- [35] O. Hock and J. Šedo, Forward and Inverse Kinematics Using Pseudoinverse and Transposition Method for Robotic Arm DOBOT. Intech, Dec. 2017.

- [36] E. Bribiesca, “A measure of tortuosity based on chain coding,” Pattern Recognition, vol. 46, no. 3, pp. 716–724, 2013.

- [37] R. A. Newcombe, D. Fox, and S. M. Seitz, “Dynamicfusion: Reconstruction and tracking of non-rigid scenes in real-time,” in 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2015, pp. 343–352.

- [38] Y. Li, T. Zhang, Y. Nakamura, and T. Harada, “Splitfusion: Simultaneous tracking and mapping for non-rigid scenes,” in 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2020, pp. 5128–5134.