A Review of Speaker Diarization: Recent Advances with Deep Learning

Abstract

Speaker diarization is a task to label audio or video recordings with classes that correspond to speaker identity, or in short, a task to identify “who spoke when”. In the early years, speaker diarization algorithms were developed for speech recognition on multispeaker audio recordings to enable speaker adaptive processing. These algorithms also gained their own value as a standalone application over time to provide speaker-specific metainformation for downstream tasks such as audio retrieval. More recently, with the emergence of deep learning technology, which has driven revolutionary changes in research and practices across speech application domains, rapid advancements have been made for speaker diarization. In this paper, we review not only the historical development of speaker diarization technology but also the recent advancements in neural speaker diarization approaches. Furthermore, we discuss how speaker diarization systems have been integrated with speech recognition applications and how the recent surge of deep learning is leading the way of jointly modeling these two components to be complementary to each other. By considering such exciting technical trends, we believe that this paper is a valuable contribution to the community to provide a survey work by consolidating the recent developments with neural methods and thus facilitating further progress toward a more efficient speaker diarization.

keywords:

speaker diarization , automatic speech recognition , deep learning1 Introduction

“Diarize” means making a note or keeping an event in a diary. Speaker diarization, like keeping a record of events in such a diary, addresses the question of “who spoke when” [1, 2, 3] by logging speaker-specific salient events on multiparticipant (or multispeaker) audio data. Throughout the diarization process, the audio data would be divided and clustered into groups of speech segments with the same speaker identity/label. As a result, salient events, such as non-speech/speech transition or speaker turn changes, are automatically detected. In general, this process does not require any prior knowledge of the speakers, such as their real identity or the number of participating speakers in the audio data. Thanks to its feature of separating audio streams by these speaker-specific events, speaker diarization can be effectively employed for indexing or analyzing various types of audio data, e.g., audio/video broadcasts from media stations, conversations in conferences, personal videos from online social media or hand-held devices, court proceedings, business meetings, earnings reports in a financial sector, just to name a few.

Traditionally speaker diarization systems consist of multiple, independent sub-modules as presented in Fig. 1. To mitigate any artifacts in acoustic environments, various front-end processing techniques, for example, speech enhancement, dereverberation, speech separation or target speaker extraction, are employed. Voice or speech activity detection (SAD) is then applied to separate speech from non-speech events. Raw speech signals in the selected speech portion are transformed to acoustic features or embedding vectors. In the clustering stage, the transformed speech portions are grouped and labeled by speaker classes and in the post-processing stage, the clustering results are further refined. Each of these sub-modules is optimized individually in general.

1.1 Historical Development of Speaker Diarization

During the early years of diarization technology (in the 1990s), the research objective was to benefit automatic speech recognition (ASR) on air traffic control dialogues and broadcast news recordings, by separating each speaker’s speech segments and enabling speaker-adaptive training of acoustic models [4, 5, 6, 7, 8, 9, 10]. In this period some fundamental approaches for measuring the distance between speech segments for speaker change detection and clustering, such as generalized likelihood ratio (GLR) [4] and Bayesian information criterion (BIC) [11], were developed and quickly became the golden standard. All these efforts collectively laid out paths to consolidate activities across research groups worldwide, leading to several research consortia and challenges in the early 2000s, among which there were the Augmented Multiparty Interaction (AMI) Consortium [12] supported by the European Commission and the RT Evaluation [13] hosted by the National Institute of Standards and Technology (NIST). These organizations, spanning over from a few years to a decade, fostered further advancements on speaker diarization technologies across different data domains from broadcast news [14, 15, 16, 17, 18] and conversational telephone speech (CTS) [19, 20, 21, 22] to meeting conversations [23, 24, 25, 26, 27]. The new approaches resulting from these advancements include, but not limited to, beamforming [28], information bottleneck clustering (IBC) [27], variational Bayesian (VB) approaches [29], joint factor analysis (JFA) [22].

Speaker specific representation in a total variability space derived from simplified JFA, known as i-vector [30], found great success in speaker recognition and was quickly adopted by speaker diarization systems as feature representation for short speech segments, segmented in an unsupervised fashion. i-Vector successfully replaced its predecessors such as merely mel-frequency cepstral coefficient (MFCC) or speaker factors (or eigenvoices) [31] to bolster clustering performance in speaker diarization, being combined with principal component analysis (PCA) [32, 33], variational Bayesian Gaussian mixture model (VB-GMM) [34], mean shift [35] and probabilistic linear discriminant analysis (PLDA) [36].

Since the advent of deep learning in the 2010s, there has been a considerable amount of research to take advantage of powerful modeling capabilities of the neural networks for speaker diarization. One representative example is the extraction of the speaker embeddings using neural networks, such as the d-vectors [37, 38, 39] or the x-vectors [40], which most often are embedding vector representations based on the bottleneck layer output of a deep neural network (DNN) trained for speaker recognition. The shift from i-vector to these neural embeddings contributed to enhanced performance, easier training with more data [41], and robustness against speaker variability and acoustic conditions. More recently, end-to-end neural diarization (EEND) where individual sub-modules in the traditional speaker diarization systems (c.f., Fig. 1) can be replaced by one neural network gets more attention with promising results [42, 43]. This research direction, although not fully matured yet, could open up unprecedented opportunities to address challenges in the field of speaker diarization, such as, the joint optimization with other speech applications, with overlapping speech, if large-scale data is available for training such powerful neural network-based models.

1.2 Motivation

Till now, there are two well-rounded overview papers in the area of speaker diarization that survey the development of speaker diarization technology with different focuses. In [2], various speaker diarization systems and their subtasks in the context of broadcast news and CTS data are reviewed up to till mid 2000s. Thus, the historical progress of speaker diarization technology development in the 1990s and early 2000s are covered. Contrarily, the focus of [3] was put more on speaker diarization for meeting speech and its respective challenges. This paper thus weighs more in the corresponding technologies to mitigate problems from the perspective of meeting environments, where there are usually more participants than broadcast news or CTS data and multi-modal data is frequently available. Since these two papers were published, speaker diarization systems have gone through a lot of notable changes, especially from the leap-frog advancements in deep learning approaches addressing technical challenges across multiple machine learning domains. We believe that this survey work is a valuable contribution to the community to consolidate the recent developments with neural methods and thus facilitate further progress toward a more efficient diarization.

1.3 Overview and Taxonomy of Speaker Diarization

| Trained based on | Trained based on | |

| Non-diarization Objective | Diarization Objective | |

| Single-module Optimization | Section 2.1–2.6 Front-end [44, 45, 46], speaker embedding [47, 48, 40], SAD [49], etc. | Section 3.1 Affinity matrix refinement [50], IDEC [51], TS-VAD [52], etc. |

| Joint Optimization | Section 2.7 VB-HMM [53], VBx [54] Out of scope Joint front-end & ASR [55, 56, 57, 58, 59, 60], joint speaker identification & speech separation [61, 62], etc. | Section 3.2 UIS-RNN [41], RPN [63], online RSAN [64], EEND [42, 43], etc. Section 4 Joint ASR & speaker diarization. [65, 66, 67, 68], etc. |

Attempting to categorize the existing, most-diverse speaker diarization technologies, both in the space of modularized speaker diarization systems before the deep learning era and those based on neural networks of recent years, a proper grouping would be helpful. The main categorization we adopt in this paper is based on two criteria, resulting total of four categories, as shown in Table 1.3. The first criterion is whether the model is trained based on speaker diarization-oriented objective function or not. Any trainable approaches to optimize models in a multispeaker situation and learn relations between speakers are categorized into the “diarization objective” class. The second criterion is whether multiple modules are jointly optimized toward some objective function. If a single sub-module is replaced into a trainable one, such method is categorized into the “Single-module optimization” class. Conversely, joint modeling of segmentation and clustering [41], joint modeling of speech separation and speaker diarization [64] or fully end-to-end neural diarization system [42, 43] is categorized into the “Joint optimization” class.

Note that our intention of this categorization is to help readers to quickly overview the broad development in the field, and it is not our intention to divide the categories into superior-inferior. Also, while we are aware of many techniques that fall into the category “Non-Diarization Objective” and “Joint Optimization” (e.g., joint front-end and ASR [55, 56, 57, 58, 59, 60] and joint speaker identification and speech separation [61, 62]), we exclude them in the paper to focus on the review of speaker diarization techniques.

1.4 Diarization Evaluation Metrics

1.4.1 Diarization Error Rate

The accuracy of speaker diarization system is measured using diarization error rate (DER) [69] where DER is the sum of three different error types: False alarm (FA) of speech, missed detection of speech and confusion between speaker labels.

| (1) |

To establish a one-to-one mapping between the hypothesis outputs and the reference transcript, Hungarian algorithm [70] is employed. In the 2006 RT evaluation [69], 0.25 s of “no score” collar (also referred to as “score collar”) is set around every boundary of reference segment to mitigate the effect of inconsistent notation and human errors in reference transcript and this evaluation scheme has been most widely used in speaker diarization studies.

1.4.2 Jaccard Error Rate

The Jaccard error rate (JER) was first introduced in the DIHARD II evaluation. The goal of JER is to evaluate each speaker with equal weight. Unlike DER which is estimated for the whole utterance altogether, per-speaker error rates are first computed and then averaged to compute JER. Specifically, JER is computed as follows.

| (2) |

In Eq. (2), is the union of the -th speaker’s speaking time in the reference transcript and the -th speaker’s speaking time in the hypotheses. is the number of speakers in the reference script. Note that the Speaker-Confusion in DER is reflected in the part of in the calculation of JER. Since JER is using union operation between reference and the hypotheses, JER never exceeds 100%, whereas DER can become much larger than 100%. DER and JER are highly correlated but if a subset of speakers are dominant in the given audio recording, JER tends to be higher than the ordinary case.

1.4.3 Word-level Diarization Error Rate

While DER is based on the duration of the speaking time of each speaker, word-level DER (WDER) is designed to measure the error that is caused in the lexical (output transcription) side. The motivation of WDER is the discrepancy between DER and the accuracy of the final transcript output since DER relies on the duration of the speaking time that is not always aligned with the word boundaries. The concept of word-breakage ratio was proposed in Silovsky et al. [71] where word-breakage shares similar idea with WDER. Unlike WDER, word-breakage ratio measures the number of speaker-change points occur inside a word boundary. The work in Park and Georgiou [72] suggested the term WDER, evaluating the diarization output with ground-truth transcription. More recently, the joint ASR and speaker diarization system was evaluated in the WDER format in Shafey et al. [65]. Although the way of calculating WDER would differ over the studies, but the underlying mechanism is that the diarization error is calculated by counting the correctly or incorrectly labeled words.

1.5 Paper Organization

The rest of the paper is organized as follows.

-

•

In Section 2, we overview techniques belonging to the “Non-diarization objective” class in the proposed taxonomy, mostly those used in the traditional, modular speaker diarization systems. While there are some overlaps with the counterpart sections of the aforementioned two survey papers [2, 3] in terms of reviewing notable developments in the past, this section would add more latest schemes in the corresponding components of the speaker diarization systems.

-

•

In Section 3, we discuss advancements mostly leveraging DNNs trained with the diarization objective where single sub-modules are independently optimized (Subsection 3.1) or jointly optimized (Subsection 3.2) toward fully end-to-end speaker diarization.

-

•

In Section 4, we present a perspective of how speaker diarization has been investigated in the context of ASR, reviewing historical interactions between these two domains to peek into the past, present and future of speaker diarization applications.

-

•

Section 5 provides information on speaker diarization challenges and corpora to facilitate research activities and anchor technology advances. We also discuss evaluation metrics such as DER, JER and Word-level DER (WDER) in this section.

-

•

We share a few examples of how speaker diarization systems are employed in both research and industry practices in Section 6 and conclude this work in Section 7, providing summary and future challenges in speaker diarization.

2 Modular Speaker Diarization Systems

This section provides an overview of algorithms for speaker diarization belonging to the “Non-diarization objective” class, as shown in Table 1.3. Each subsection in this section corresponds to the explanation of each module in the traditional speaker diarization system, as shown in Figure 1. In addition to the introductory explanation of each module, this section also summarizes the recent techniques within the module.

2.1 Front-end Processing

This section describes mostly front-end techniques, used for speech enhancement, dereverberation, speech separation, and speech extraction as part of the speaker diarization pipeline. Let be the short-time Fourier Transform (STFT) representation of the source speaker on the frequency bin at frame . The observed noisy signal can be represented by a mixture of the source signals, a room impulse response , and additive noise ,

| (3) |

where denotes the number of speakers present in the audio signal.

The aim of the front-end techniques described in this section is to estimate the original source signal given the observation for the downstream diarization task,

| (4) |

where denotes the -th speaker’s estimated STFT spectrum with frequency bins at frame .

Although there are numerous speech enhancement, dereverberation, and separation algorithms, e.g., [73, 74, 75], herein most of the recent techniques used in the DIHARD challenge series [76, 77, 78], LibriCSS meeting recognition task [79, 80], and the CHiME-6 challenge track 2 [81, 82, 83] are covered.

2.1.1 Speech Enhancement and Denoising

Speech enhancement techniques focus mainly on the suppression of the noise component of the noisy speech, which has shown a significant improvement thanks to deep learning. For example, long short-term memory (LSTM)-based speech enhancement [84, 85] is used as a front-end technique in the DIHARD II baseline [77], i.e.,

| (5) |

where we only consider the single source example (i.e., ) and omit the source index . This is a regression-based approach by minimizing the objective function,

| (6) |

The log power spectrum or ideal ratio mask is often used as the target domain of the output . Also, the speech enhancement used in [86] applies this objective function in each layer based on a progressive manner.

The effectiveness of the speech enhancement techniques can be enhanced via multichannel processing, including minimum variance distortionless response (MVDR) beamforming [73]. [80] demonstrates the significant improvement of the DER from 18.3% to 13.9% in the LibriCSS meeting task based on mask-based MVDR beamforming [87, 88].

2.1.2 Dereverberation

Compared with other front-end techniques, the major dereverberation techniques used in various tasks are based on statistical signal processing methods. One of the most widely used techniques is the weighted prediction error (WPE) based dereverberation [89, 90, 91].

The basic idea of WPE, for the case of single source, i.e., , without noise, is to decompose the original signal model Eq. (3) into the early reflection and late reverberation as follows:

| (7) |

WPE tries to estimate filter coefficients , which maintain the early reflection while suppressing the late reverberation based on the maximum likelihood estimation.

| (8) |

where denotes the number of frames to split the early reflection and late reverberation, and denotes the filter size.

WPE is widely used as one of the gold standard front-end processing methods, e.g., it is part of the DIHARD and CHiME, which are both the baseline and the top-performing systems [76, 77, 78, 81, 82]. Although the performance improvement of WPE-based dereverberation is not significant, it provides solid performance improvement across almost all tasks. Moreover, WPE is based on linear filtering and since it does not introduce signal distortions, it can be safely combined with downstream front-end and back-end processing steps. Similar to the speech enhancement techniques, WPE-based dereverberation demonstrates additional performance improvements when applied on multichannel signals.

2.1.3 Speech Separation

Speech separation is a promising family of techniques when the overlapping speech regions are significant. The effectiveness of multichannel speech separation based on beamforming has been widely confirmed [28, 92, 93]. For example, in the CHiME-6 challenge [81], guided source separation (GSS) [93] based multichannel speech extraction techniques have been used to achieve the top result. On the other hand, single-channel speech separation techniques [44, 45, 46] do not often show any significant effectiveness in realistic multispeaker scenarios, such as the LibriCSS [79] or the CHiME-6 tasks [81], where speech signals are continuous and contain both overlapping and overlap-free speech regions. The single-channel speech separation systems often produce a redundant non-speech or even a duplicated speech signal for the non-overlap regions, and as such the “leakage” of audio causes many false alarms of speech activity. A leakage filtering method was proposed in [94] tackle the problem, where a significant improvement in the diarization performance was observed after including this processing step in the top-ranked system on the VoxCeleb Speaker Recognition Challenge 2020 [95].

2.2 Speech Activity Detection

SAD, also known as voice activity detection (VAD), distinguishes speech from non-speech such as background noise. SAD plays a significant role not only in speaker diarization but also in speaker recognition and speech recognition systems since SAD is a pre-processing step that can create errors that propagate through the whole pipeline. A SAD system consists mostly of two major parts. The first one is a feature extraction front-end, where acoustic features such as zero crossing rate [96], pitch [97], signal energy [98], higher order statistics in the linear predictive coding residual domain [99] or MFCCs are often used. The other part is a classifier, where a model predicts and decides whether the input-frame contains speech or not. A system based on statistical models on spectrum [100], Gaussian mixture models (GMMs) [101] and on Hidden Markov Models (HMMs) [102, 103] has been traditionally used. After the deep learning approaches gained popularity in the speech signal processing field, numerous DNN-based systems, such as the ones based on MLP [104], convolutional neural network (CNN) [105], LSTM [106], have been also proposed with superior performance to the traditional methods.

The performance of SAD largely affects the overall performance of the speaker diarization system as it can create a significant amount of false positive salient events or miss speech segments [107]. A common practice in speaker diarization tasks is to report DER with “oracle SAD” setup which indicates that the system output is using SAD output that is identical to the ground truth. Conversely, the system output with an actual speech activity detector is referred to as “system SAD” output.

2.3 Segmentation

In the context of speaker diarization, speech segmentation is a process of breaking the input audio stream into multiple segments to obtain speaker-uniform segments. Therefore, the output unit of the speaker diarization system is determined via a segmentation process. In general, speech segmentation methods for speaker diarization are divided into two major categories: Segmentation by speaker-change point detection and uniform segmentation.

Segmentation by the speaker-change point detection was the gold standard of the earlier speaker diarization systems, where speaker-change points are detected by comparing two hypotheses: assumes that both the left and right speech windows are from the same speaker, whereas assumes that the two speech windows are from the different speakers. To test these two hypotheses, metric-based approaches [108, 109] were most widely applied. In metric-based approaches, the distribution of the speech feature is assumed to follow a Gaussian distribution with mean and covariance . The two hypotheses and can be then represented as follows:

| (9) | ||||

where is a sequence of speech features in the interest of the hypothesis testing. A slew of criteria for the metric-based approach were proposed to quantify the likelihood of the two hypotheses. The examples include the Kullback Leibler (KL) distance [110], Generalized Likelihood Ratio (GLR) [111, 112, 113] and BIC [108, 114]. Among these criteria, BIC has been the most widely used method followed by numerous variants [115, 116, 117, 118]. Thus, in this section, we introduce BIC as a representative example for a metric-based method. If we apply BIC and to the hypotheses described in Eq. (9), a BIC value between two models from two hypotheses is expressed as follows:

| (10) |

where the sample covariance is from , is from and is from and is the penalty term [108] defined as

| (11) |

where denotes the dimension of the feature; and are frame lengths of each window, respectively and . The penalty weight is generally set to . The change point is set when the following equation becomes true:

| (12) |

In general, if speech segmentation is done using the speaker-change point detection method, the length of each segment is not consistent. Therefore, after the advent of the i-vector [30] and DNN-based embeddings [47, 40], the segmentation based on speaker-change point detection was mostly replaced with uniform segmentation [35, 119, 39], since varying lengths of the segment created an additional variability into the speaker representation and deteriorated the fidelity of the speaker representations.

In uniform segmentation schemes, the given audio stream input is segmented with a fixed window length and overlap length. Thus, the unit duration of the speaker diarization output stays constant. However, the process of uniform segmentation of the input signals for diarization poses some potential problems because it introduces a trade-off error related to the segment length. The segments created from the uniform segmentation need to be sufficiently short to safely assume that they do not contain multiple speakers. However, at the same time it is important to capture sufficient acoustic information to extract reliable speaker representations.

2.4 Speaker Representations and Similarity Measure

Speaker representation plays a crucial role for speaker diarization systems to measure the similarity between speech segments. This section will cover such speaker representation and also the similarity measure because they are tightly connected. We first introduce metric-based approaches for similarity measures which were popular from the late 1990s to early 2000s in Section 2.4.1. We then introduce widely used speaker representations for speaker diarization systems that are usually employed together with the uniform segmentation method described in Section 2.4.2 and Section 2.4.3.

2.4.1 Metric Based Similarity Measure

From the late 1990s to early 2000s, metric-based approaches were most commonly used for the similarity measurement between speech segments for speaker diarization systems. Methods used for speaker segmentation were also applied to measure the similarity between segments, such as KL distance [110], GLR [111, 112, 113], and BIC [108, 114]. As with the case of the segmentation, the BIC-based method, where the similarity between two segments are computed by Eq. (10), was one of the most extensively used metrics due to its effectiveness and ease of implementation. Metric-based approaches are usually employed together with the segmentation approaches based on a speaker-change point detection. The agglomerative hierarchical clustering (AHC) is often applied to obtain the diarization result, which will be detailed in Section 2.5.1.

2.4.2 Joint Factor Analysis, i-vector and PLDA

Before the advent of speaker representations such as i-vector [30] or x-vector [40], Gaussian Mixture Model-based Universal Background Model (GMM-UBM) [120] applied to acoustic features demonstrated success in speaker verification tasks. A UBM consists of a large GMM (typically with 512 to 2048 mixtures) trained to represent the speaker-independent distribution of acoustic features. Thus, a GMM-UBM model can be described with the following quantities: mixture weights, mean values and covariance matrix of the mixtures. The log-likelihood ratio between a speaker-adapted GMM and the speaker-independent GMM-UBM is used for speaker verification. Despite the success on modeling the speaker identity, GMM-UBM based speaker verification systems have suffered from intersession variability [121], which is the variability exhibited by a given speaker from one recording session to another. Such difficulty occurs because the relevance maximum a posteriori (MAP) adaptation step during the speaker enrollment process in the GMM-UBM based speaker verification systems not only captures the speaker-specific characteristics of the speech, but also unwanted channel noise and other nuisances from the acoustic environment.

Joint factor analysis (JFA) [121, 122] was proposed to compensate for the variability issues by separately modeling the inter-speaker variability and the channel or session variability. The JFA approach employs a GMM supervector, which is a concatenated mean of the adapted GMM. For example, suppose a by 1 speaker-independent GMM mean vector , where is the mixture component index and is the dimension of the feature. Then, a supervector has dimension of by 1 by concatenating the F-dimensional mean vector for C mixture components. Thus, the supervector can be described as follows:

| (13) |

In the JFA approach, the given GMM supervector is decomposed into speaker independent, speaker dependent, channel dependent, and residual components. Thus, the ideal speaker supervector can be decomposed as indicated in Eq. (14), where denotes a speaker independent supervector from the UBM, denotes a speaker dependent component matrix, denotes a channel dependent component matrix, and denotes a speaker-dependent residual component matrix. Along with these component matrices, vector is for the speaker factors, vector is for the channel factors, and vector is for the speaker-specific residual factors. All of these vectors have a prior distribution of .

| (14) |

The JFA approach was followed by the study in [30], in which it was discovered that channel factors in the JFA also contain information regarding the speakers. Thus, Dehak et al. [30] proposed a new method combining the channel and speaker spaces into a combined variability space through a total variability matrix. Thus, the total variability matrix models both the channel and the speaker variability, and the latent variable weights the column of the matrix . The variable is referred to as the i-vector and is also considered a speaker representation vector. Each speaker and channel in a GMM supervector can be modeled as follows:

| (15) |

where is a speaker-independent and channel-independent supervector that can be taken as a UBM supervector. The process of extracting an i-vector for the given recording is formulated as a MAP estimation problem [123, 30] using the Baum–Welch statistics extracted using the UBM, mean supervector , and total variability matrix trained from the EM algorithm as parameters. The idea behind a speaker representation was greatly popularized through the use of i-vectors, where the speaker representation vector can contain a numerical feature characterizing the vocal tract of each speaker. The i-vector speaker representations have been employed in not only speaker recognition studies but also in numerous speaker diarization studies [35, 36, 124] and have shown a superior performance compared to metric-based methods such as BIC, GLR, and KL, as mentioned in the previous subsection.

Intersession variability in the i-vector approach has been further compensated using backend procedures, such as a linear discriminant analysis (LDA) [125, 126] and within-class covariance normalization (WCCN) [127, 128], followed by simple cosine similarity scoring. The cosine similarity scoring was later replaced with a probabilistic LDA (PLDA) model in [129]. In the following studies [130, 131], a method applying a Gaussianization of the i-vectors and thus generating Gaussian assumptions in the PLDA, referred to as G-PLDA or simplified PLDA, was proposed for speaker verification. In general, PLDA employs the following modeling for the given speaker representation of the -th speaker in the -th session as indicated below:

| (16) |

Here, is the mean vector, is the speaker variability matrix, is the channel variability matrix, and is a residual component. In addition, and are latent variables specific for the speaker and session, respectively. In G-PLDA, both latent variables, and , are assumed to follow a standard Gaussian prior. During the training process of the PLDA, , , , and are estimated using the expectation maximization (EM) algorithm. Based on the estimated statistics, two hypotheses are tested: hypothesis for a case in which two samples are from the same speaker, and hypothesis for when two samples are from different speakers. Under the hypothesis , the given speaker representations and are modeled as follows with a common latent variable :

| (28) |

On the other hand, under the hypothesis , and are modeled as follows with separate latent variable and :

| (41) |

In G-PLDA, it is assumed that is generated from a Gaussian distribution, which results in the following conditional density function [132].

| (42) |

Using Eq. (16)-(41), the log likelihood ratio can be described as follows:

| (43) |

The log likelihood ratio in the above equation was originally used for speaker verification to choose a hypothesis between and by checking whether is positive or negative. The PLDA for speaker representations also employed in speaker diarization and the log likelihood is used to check the similarity between clusters. Further details regarding the clustering approach using PLDA is described in Section 2.5.1.

2.4.3 Neural Network Based Speaker Representations

Speaker representations for speaker diarization have also been heavily affected by the rise of deep learning approaches. The idea behind DNN-based representation learning was first introduced for face recognition tasks [133, 134]. As the fundamental idea of a neural network-based representation, we can use the deep neural network architecture to map the input signal source (an image or an audio clip) to a dense vector containing floating-point numbers. This is achieved by taking the values from a layer in the neural network model after forward-propagating the input signal to the layer that we take the values from. The mapping process from the input signal to the speaker embedding is based on the nonlinear modeling capability of multiple layers in the DNNs. In so doing, the training process of the DNNs allows the neural networks to learn the mapping without specifying any components or factors, which is in contrast to traditional factor analysis models based on decomposable components. In this sense, the components in JFA are more explainable than the parameters trained in DNN models trained for speaker embedding extraction. In addition, DNN-based speaker representation learning does not involve predefined probabilistic models (e.g., GMM-UBM) for the input acoustic features. In relation to this, DNN-based speaker representation achieves an improved efficiency during the inference phase because the solution used by factor-analysis based methods involves a computationally intensive matrix inversion operation [132], whereas DNN-based embedding extractors involve fewer demanding operations, such as multiple linear transformations, with non-linear function computations for obtaining the speaker representation vector. Thus, the representation learning process has become more straight-forward and the inference speed has been improved compared to the traditional factor-analysis based methods. Among many of the neural-network based speaker representations, d-vector [47] remains one of the most prominent speaker representation extraction frameworks. The stacked filter bank features, which include context frames as an input feature, are employed, and multiple fully connected layers are trained with cross entropy loss. Speaker representation vectors, also referred to as d-vectors, are obtained from the last fully connected layer, as indicated in Fig. 3. The d-vector scheme appears in numerous speaker diarization papers, e.g., in [39, 41].

DNN-based speaker representations are even more improved when using an x-vector [48, 40], which demonstrates a superior performance, winning the NIST speaker recognition challenge 2018 [135] and the first DIHARD challenge [76]. Fig. 3 shows the structure of an x-vector extractor. The time-delay architecture and statistics pooling layer differentiate the x-vector architecture from that of a d-vector. The statistics pooling layer aggregates the frame-level outputs from the previous layer and computes its mean and standard deviation, passing them on to the following layer. Thus, it can allow the extraction of x-vectors from a variable length input. This is advantageous not only for speaker verification but also for speaker diarization because speaker diarization systems are bound to process segments that are shorter than the predetermined uniform segment length when the segment should be truncated at the end of an utterance.

2.5 Clustering

A clustering algorithm is applied to make clusters of the speech segments based on the speaker representation and similarity measure explained in the previous section. Here, we introduce the most commonly used clustering methods for speaker diarization.

2.5.1 Agglomerative Hierarchical Clustering

AHC is a clustering method that has been constantly employed in many speaker diarization systems with different distance metrics such as BIC [108, 136], KL [137] and PLDA [76, 82, 138]. AHC is an iterative process of merging the existing clusters until the clustering process meets a criterion. The AHC process starts with the calculation of the similarity between N singleton clusters. At each step, a pair of clusters that has the highest similarity is merged. The iterative merging process of AHC is illustrated in a dendrogram, which is presented in Fig. 4.

One of the most important aspects of AHC is the stopping criterion. For the speaker diarization task, the AHC process can be stopped using either a similarity threshold or a target number of clusters. Ideally, if PLDA is used as a distance metric, the AHC process should be stopped at in Eq.(43). However, the stopping metric is adjusted to obtain an accurate number of clusters based on a development set. Conversely, if the number of speakers is known or estimated using other methods, the AHC process can be stopped when the clusters created by the AHC process reaches the pre-determined number of speakers .

2.5.2 Spectral Clustering

Spectral clustering is a widely used clustering approach for speaker diarization. While there are many variations, spectral clustering involves the following steps.

-

i.

Affinity matrix calculation: There are many ways to generate affinity matrix depending on the way the affinity value is processed. The raw affinity value is processed by kernels such as , where is a scaling parameter. On the other hand, the raw affinity value could also be masked by zeroing the values below a threshold to only keep the prominent values.

-

ii.

Laplacian matrix calculation [139]: The graph Laplacian can be calculated in two ways: normalized and unnormalized. The degree matrix contains diagonal elements where is the element of the -th row and -th column in an affinity matrix .

-

(a)

Normalized Graph Laplacian:

(44) -

(b)

Unnormalized Graph Laplacian:

(45)

-

(a)

-

iii.

Eigen decomposition: The graph Laplacian matrix is decomposed into the eigenvector matrix and the diagonal matrix that contains eigenvalues. Thus, .

-

iv.

Re-normalization (optional): the rows of is normalized so that where and are the elements of the -th row and -th column in matrices and , respectively.

- v.

-

vi.

Spectral embedding clustering: The -smallest eigenvalues , ,…, and the corresponding eigenvectors , ,…, are stacked to construct a matrix . The row vectors of are referred to as -dimensional spectral embeddings. Finally, the spectral embeddings are clustered using a clustering algorithm. In general k-means clustering [141] is employed for clustering the spectral embeddings.

Among many variations of spectral clustering algorithm, the Ng-Jordan-Weiss (NJW) algorithm [142] is often employed for the speaker diarization task with variation in the kernel for the calculation of the affinity values [143, 144, 33]. Unlike the AHC approach, spectral clustering is mostly used with cosine distance [143, 144, 33, 39, 140]. In addition, the LSTM based similarity measurement with spectral clustering [145] also exhibited competitive performance. Depending on the datasets, the spectral clustering approach with cosine distance measurement outperforms AHC with PLDA [140, 83] while using the same speaker representation for both clustering methods.

2.5.3 Other Clustering Algorithms

The k-means algorithm is often employed in studies on speaker diarization [146, 147, 39, 41, 63] due to its simplicity and ease of implementation. However, the k-means algorithm generally underperforms [39, 41] the well-known clustering algorithms such as spectral clustering and AHC. In addition, there are a few speaker diarization studies employed the mean-shift [148] clustering algorithm, which assigns the given data points to the clusters iteratively by finding the modes in a non-parametric distribution. The Mean-shift clustering algorithm was employed in the speaker diarization task with KL distance in [149], i-vector and cosine distance in [35, 150], and i-vector and PLDA in [151].

2.6 Post-processing

2.6.1 Resegmentation

Resegmentation is a process of refining the speaker boundary that is roughly estimated using the clustering procedure. In [152], the Viterbi resegmentation method based on the Baum-Welch algorithm was introduced. In this method, the estimation of Gaussian mixture model corresponding to each speaker and Viterbi-algorithm-based resgmentation using the estimated speaker GMM are alternately applied.

A method for representing the diarization process based on the variational Bayeian hidden Markov model (VB-HMM) was proposed, and was shown to be superior to Viterbi resegmentation [53, 153]. The VB-HMM-based diarization can be seen as a joint optimization of segmentation and clustering, which will be separately introduced in Section 2.7.

2.6.2 System Fusion

As another direction of post processing, there have been a series of studies on the fusion method of multiple diarization results to improve the diarization accuracy. While it is widely known that the system combination generally yields better result for various systems (e.g., speech recognition [154] or speaker recognition [155]), the combination of multiple diarization hypotheses poses several unique problems. First, speaker labeling is not standardized among different diarization systems. Second, the estimated number of speakers may differ among different diarization systems. Finally, the estimated time boundaries may also be different among multiple diarization systems. System combination methods for speaker diarization systems need to handle these problems during the fusion process of multiple hypotheses.

In [156], a method for selecting the best diarization result among many diarization systems was proposed. In this method, a whole sequence of diarization result for a recording from each diarization system is treated as one object to be clustered. AHC is applied to the set of diarization results, in which the distance of two clusters is measured using the symmetric DER between the diarization results belonging to the two clusters. The iterative merging process of AHC is executed until the number of clusters becomes two. Finally, in the bigger cluster among the two final clusters according to the number of elements in each cluster, the diarization result that has the smallest distance to all other diarization results is selected as the final result. In [157], two diarization systems are combined by finding the matching between two speaker clusters, and then performing resegementation based on the matching result.

More recently, the diarization output voting error reduction (DOVER) method [158] was proposed to combine multiple diarization results based on the voting scheme. In the DOVER method, the speaker labels among different diarization systems are aligned one by one to minimize the DER between the hypotheses (processes 2 and 3 of Fig. 6). After aligning all hypotheses, each system votes its speaker label to each segmented region (each system may have different weights for voting), and the speaker label that gains the highest voting weight is selected for each segmented region (the process 4 of Fig. 6). In case multiple speaker labels get the same voting weight, a heuristic approach is employed to break the ties (such as selecting the result from the first system) is used.

The DOVER method has an implicit assumption that there is no overlapping speech, i.e., at most only one speaker is assigned for each time index. To combine the diarization hypotheses with overlapping speakers, two methods were recently proposed. In [94], the authors proposed the modified DOVER method, in which the speaker labels in different diarization results are first aligned with a root hypothesis, and the speech activity of each speaker is estimated based on the weighted voting score for each speaker for each small segment. Raj et al. [159] proposed a method called DOVER-Lap, in which the speakers of multiple hypotheses are aligned via a weighted k-partite graph matching, and the number of speakers for each small segment is estimated based on the weighted average of multiple systems to select the top- voted speaker labels. Both the modified DOVER and DOVER-Lap showed DER improvement for the speaker diarization result with speaker overlaps.

2.7 Joint Optimization of Segmentation and Clustering

This subsection introduces a VB-HMM-based diarization technique, which can be regarded as a joint optimization of segmentation and clustering, and thus cannot be well categorized in Section 2.1–2.6. The VB-HMM framework was proposed as an extension of the VB-based speaker clustering [160, 161] by introducing HMM to constrain the speaker transitions. In the VB-HMM framework [53], the speech feature is assumed to be generated from HMM where each HMM state corresponds to one of possible speakers. Suppose that we have HMM states, -dimensional variable is introduced where -th element of is 1 if the -th speaker is speaking at the time index , and 0 otherwise. At the same time, the distribution of is modeled based on a hidden variable , where denotes a low dimensional vector for the -th speaker. Given these notations, the joint probability of , , and is decomposed as follows:

| (46) |

where is the emission probability modeled by GMM whose mean vector is represented by , is the transition probability of the HMM, and is the prior distribution of . Because represents the trajectory of speakers, the diarization problem can be expressed as the inference problem of that maximizes the posterior distribution . Since it is intractable to directly solve this problem, the VB method is used to estimate the model parameters that approximate .

Recently, a simplified version of VB-HMM that works on the x-vector, known as VBx, was proposed [54, 162]. In VBx, is calculated using the x-vector based on the PLDA model. While the original VB-HMM works on the granularity of the frame-level feature, VBx works on the granularity of the x-vector, and thus can be seen as a clustering method that jointly models speaker turn and speaker duration.

The VB-HMM diarization was originally designed as a standalone diarization framework. However, it requires parameter initialization to start the VB estimation, and the parameters are usually initialized based on the result of another speaker clustering. In that context, VB-HMM is widely employed as the final step of speaker diarization (e.g., [163, 119, 164]). For example in [164], AHC was first performed to under-cluster the x-vector, and VBx was then applied to obtain a better cluster given the AHC-based result as the initial parameter. Finally, VB-HMM was further applied to refine the boundary obtained by VBx.

3 Recent Advances in Speaker Diarization Using Deep Learning

This section introduces various recent efforts toward deep learning-based speaker diarization techniques. The methods that incorporate deep learning into a single component of speaker diarization, such as clustering or post-processing, are introduced in Section 3.1. The methods that unify several components of speaker diarization into a single neural network are introduced in Section 3.2. For the overview of speaker diarization techniques using deep learning, refer to Table 2.7. It should be noted that there are some works that take additional input of speaker profiles. These methods may not be categorized as a diarization technique in a traditional definition. Nevertheless, we introduce them as they are optimized in a multispeaker situation to learn the relations between speakers and hence categorized as “Trained Based on the Diarization Objective” in Table 1.3.

3.1 Single-module Optimization

3.1.1 Speaker clustering Enhanced by Deep Learning

Enhancing the clustering procedure based on the deep learning is an active research area and several methods have been proposed for speaker diarization. This section will cover the representative works in such a direction.

An approach based on the graph neural network (GNN) was proposed in [50]. As shown in Fig. 7, this method aims at purifying the similarity matrix used in the spectral clustering (Section 2.5.2). Assuming a sequence of speaker embeddings , where is the sequence length, the input to the GNN is . The output of the -th layer of the GNN is now:

| (47) |

where is a normalized affinity matrix added by self-connection, is a trainable weight matrix for the -th layer, and is a nonlinear function. The GNN is trained by minimizing the distance between the reference and the estimated affinity matrices. The distance is calculated using a combination of histogram loss [177] and nuclear norm [178]. The GNN-based speaker diarization method was evaluated on the CALLHOME dataset and an in-house meeting dataset, and significantly outperformed any of the conventional clustering methods.

Besides that, different approaches have been proposed to generate the affinity matrix. In [165], a self-attention-based neural network model was introduced to directly generate a similarity matrix from a sequence of speaker embeddings. In [166], several affinity matrices with different temporal resolutions were fused into a single affinity matrix based on a neural network.

A different approach aiming at improving clustering was proposed in [179], called deep embedded clustering (DEC). The goal of DEC was to transform the input features (herein referred to as speaker embeddings) making them more separable in a given number of clusters/speakers. In order to make cluster differentiable, each embedding is provided with a probability of “belonging” to each of the available speaker clusters, i.e. can be interpreted as the probability of assigning sample to cluster (i.e., a soft assignment):

| (48) |

where is the bottleneck feature, is the degree of freedom of the Student’s distribution, is the centroid of -th cluster and is the soft cluster frequency with . The clusters are iteratively refined based on the target distribution according to the bottleneck features estimated using an autoencoder.

The initial version of DEC had some problems, and refined algorithm called improved DEC (IDEC) was later proposed with better accuracy on speaker diarization [180, 51]. Firstly, there was a potential risk that the neural network is converged to a trivial solution to generates corrupted embeddings. To avoid this risk, Guo et al. [180] proposed to explicitly preserve the local structure of the data by adding a reconstruction loss between the output of the autoencoder and the input feature. Dimitriadis [51] further addressed the issue by introducing the loss function to enforce the distribution of speaker turns being uniform across all speakers, i.e., all speakers contribute equally to the session. This assumption is not always valid for real recordings but it constrains the solution space enough to avoid the empty clusters without affecting the overall performance. Finally, Dimitriadis [51] also proposed an additional loss term that penalizes the distance from the centroid , bringing the behavior of the algorithm closer to k-means.

Overall, the loss function of the IDEC consists of four loss terms, i.e., , the clustering error term that is originally proposed in DEC; , the reconstruction error term [180]; is the uniform “speaker airtime” distribution loss [51]; and , the loss to measure the distance of the bottleneck features from the centroids [51],

| (49) |

where are the weight on the loss functions that is fine-tuned on some held-out data.

3.1.2 Learning the Distance Estimator

In this section a novel approach using a trainable distance function is presented. The basic idea is based on the relational recurrent neural networks (RRNNs). RRNNs were introduced by [181, 182, 183] to address “relational information learning” problems. Such models learn relations between a sequence of input features like the notion of “closer” or “further”, e.g, two points in space are closer than a third one, etc. Speaker diarization can be seen as part of this class of problems, since the final decision depends on the distance between speech segments and speaker profiles or centroids.

There are several issues that potentially limits the accuracy of speaker diarization systems. Firstly, as mentioned in Section 2.3, the duration of segments when extracting speaker embeddings poses a trade-off between the time resolution and the robustness of the extracted speaker representations. Secondly, speaker embedding extractors are not explicitly trained to provide optimal representations for speaker diarization, despite the fact these invariant, discriminative representations are used to separate thousands of speakers [40]. Thirdly, the distance metric is often based on a heuristic approach and/or dependent on certain assumptions that do not necessarily hold, e.g., assuming Gaussianity in the case of PLDA [130]. Finally, the audio chunks are treated independently and any temporal context is simply ignored in conventional clustering methods as described in Section 2.5. These issues can be attributed to the distance metric function, and most of them can be addressed with RRNNs, where a data-driven, memory-based approach bridges the performance gap between the heuristic and the trainable distance estimation approaches.

In this context, an approach for learning the relationship between the speaker cluster centroids (or speaker profiles) and the embeddings is proposed in [167] (Fig. 8). In this work, the diarization process is considered to be a classification task on an already segmented audio, as in Section 2.3, either uniformly [146] or based on estimated speaker-change salient points [184]. The speaker embeddings for each segment, which are assumed to be speaker-homogeneous, are extracted and then compared with all the available speaker profiles or speaker centroids. The most suitable speaker label is assigned to each segment by minimizing the distance-based loss function, i.e., the relationship between embeddings and profiles. As discussed in [167], the RRNN-based distance estimation exhibits consistent improvements in its performance when compared with the more traditional distance estimation approaches such as the cosine distance [30] or the PLDA-based [130] distance. Note that although the task in [167] is speaker identification, an extension to the speaker diarization is rather straightforward when the speaker profiles are pre-estimated, either as centroids using any of the traditional clustering algorithm (Section 2.5 and 3.1.1), or using prior knowledge.

3.1.3 Post Processing Based on Deep Learning

There are a few recent studies on the neural network-based diarization method that is applied on top of the result from a traditional clustering-based speaker diarization. These methods can be categorized as an extension of the post-processing. Medennikov et al. [83, 52] proposed the target-speaker voice activity detection (TS-VAD) to achieve accurate speaker diarization even under noisy conditions with many speaker overlaps. TS-VAD assumes that a set of i-vectors are available for each speaker in the audio, where is the dimension of i-vector and is the number of speakers. As presented in Fig. 9, TS-VAD takes not only a sequence of MFCC, , where is the dimension of MFCC and the length of the sequence, but also a set of i-vectors . Given and , the model outputs a sequence of -dimensional vector where the -th element of represents the probability of the speech activity of the speaker corresponding to at the time frame . In other words, the -th element of is expected to be 1 if the speaker of is speaking at time , and 0 otherwise.

Because TS-VAD requires the i-vectors of speakers, pre-processing to obtain the i-vectors is necessary. The procedure proposed in [83, 52] is as follows:

-

1.

Apply clustering-based diarization.

-

2.

Estimate i-vectors for each speaker given the diarization result.

-

3.

Repeat (a) and (b).

-

(a)

Apply TS-VAD given the estimated i-vectors.

-

(b)

Refine i-vectors given the TS-VAD result.

-

(a)

TS-VAD was proposed as a part of the winning system of CHiME-6 Challenge [81], and showed a significantly better DER compared with the conventional clustering based approach [83]. However, it has a drawback, i.e., the maximum number of speakers that the model can handle is limited by the dimension of the output vector.

As a different approach, Horiguchi et al. proposed the application of the EEND model (detailed in Section 3.2.4) to refine the result of a clustering-based speaker diarization [168]. A clustering-based speaker diarization method can handle a large number of speakers but unable to handle overlapped speech. Conversly, EEND has opposite characteristics. To complementarily use the two methods, The authors in [83] first applied a conventional clustering method. Then, the two-speaker EEND model was iteratively applied for each pair of detected speakers to refine the time boundary of overlapped regions.

3.2 Joint Optimization for Speaker Diarization

3.2.1 Joint Segmentation and Clustering

A model called unbounded interleaved-state recurrent neural networks (UIS-RNN) was proposed, which replaced the segmentation and clustering methods with a trainable model [41]. Given the input sequence of embeddings , UIS-RNN generates the diarization result as a sequence of speaker index for each time frame. The joint probability of and can be decomposed by the chain rule as follows.

| (50) |

To model the distribution of the speaker change, UIS-RNN then introduces a latent variable , where becomes 1 if the speaker indices at time and are different, and 0 otherwise. The joint probability including is then decomposed as follows.

| (51) |

Finally, the term is further decomposed into three components.

| (52) |

Here, denotes the sequence generation probability, and modeled by gated recurrent unit (GRU)-based recurrent neural networks. denotes the speaker assignment probability and modeled by a distance-dependent Chinese restaurant process [185], which can model the distribution of unbounded number of speakers. Finally, represents the speaker change probability and is modeled by the Bernoulli distribution. Since all models are represented by trainable ones, UIS-RNN can be trained in a supervised fashion by finding the parameters that maximize over training data. The inference can be conducted by finding that maximizes given based on the beam search in an online fashion. While UIS-RNN works in an online fashion, it demonstrated better DER than that of the offline system based on spectral clustering.

3.2.2 Joint Segmentation, Embedding Extraction, and Re-segmentation

A speaker diarization method based on the region proposal networks (RPN) was proposed to jointly perform segmentation, speaker embedding extraction, and resegmentation [63]. The RPN was originally proposed to detect multiple objects from a two-dimensional image [186], and one-dimensional variant along with the time-axis is used for speaker diarization.

As can be seen from Fig. 10 (a), the STFT features with a size of time and frequency bin is first converted to the feature map with a size of time, frequency and channels using CNNs. Then, other three types of neural networks are applied on various sizes of sliding windows (named “anchor”) along with the time axis. For each anchor, the three neural networks perform SAD, speaker embedding extraction, and region refinement, respectively. Here, SAD is the task to estimate the probability of speech activity for the anchor region. Speaker embedding extraction is the task to generate an embedding to represent the speaker characteristics of the audio corresponding to the anchor region. Finally, region refinement is the task to estimate the difference between the shape (i.e. duration and center position) of the anchor and that of the corresponding reference region.

The inference procedure by RPN is presented in Fig. 10 (b). RPN is first applied to list the anchors with speech activity probability higher than the pre-determined threshold. The anchors are then clustered using a conventional clustering method (e.g., k-means) based on the speaker embeddings estimated for each anchor. Finally, highly overlapped anchors after region refinement are removed, a method known as the non-maximum suppression.

The RPN-based speaker diarization system has the advantage of handling overlapped speech with possibly any number of speakers. Also, it is much simpler than the conventional speaker diarization system. It was shown in multiple datasets that this system achieved significantly better DER than the conventional clustering-based speaker diarization system [63, 80].

3.2.3 Joint Speech Separation and Diarization

There are also recent researches on the joint modeling of speech separation and speaker diarization. Kounades-Bastian et al. [187, 188] proposed the incorporation of a speech activity model into speech separation based on the spatial covariance model with non-negative matrix factorization. They derived the EM algorithm to estimate separated speech and the speech activity of each speaker from the multichannel overlapped speech. While their method jointly performs speaker diarization and speech separation, it is based on a statistical modeling, and the estimation was conducted solely based on the observation, i.e. without any model training.

Neumann et al. [64, 169] later proposed a trainable model, namely online Recurrent Selective Attention Network (online RSAN), for joint speech separation, speaker counting, and speaker diarization based on a single neural network (Fig. 11). Their neural network takes the input of spectrogram , a residual mask , and a speaker embedding , where is the index of the audio block; , the index of the speaker; , the length of the audio block; and , the maximum frequency bin of the spectrogram. It outputs the speech mask and an updated speaker embedding for the speaker corresponding to . The neural network is applied in an iterative fashion for each audio block , and for each speaker as follows:

-

1.

Repeat (a) and (b) for

( is set to if it was not calculated previously) ii. iii. If , stop iteration.

A separated speech for speaker at audio block can be obtained by where is the element-wise multiplication. The speaker embedding is used to keep track of the speaker of adjacent blocks. Thanks to the iterative approach, this neural network can cope with the variable number of speakers while jointly performing speech separation and speaker diarization. The online RSAN was evaluated by using real meeting dataset with up to six speakers, and showed better results than the clustering-based method [169].

3.2.4 Fully End-to-end Neural Diarization

Recently, the framework called EEND was proposed [42, 43], which performs all the speaker diarization procedures based on a single neural network. The architecture of EEND is shown in Fig. 12. An input to the EEND model is a -length sequence of acoustic features (e.g., log Mel-filterbank), . A neural network then outputs the corresponding speaker label sequence where . Here, represents the speech activity of the speaker at the time frame , and is the maximum number of speakers that the neural network can output. Importantly, and can be both 1 for different speakers and , indicating that these two speakers and are speaking simultaneously (i.e. overlapping speech). The neural network is trained to maximize over the training data by assuming the conditional independence of the output . Because there can be multiple candidates of the reference label by swapping the speaker index , the loss function is calculated for all possible reference labels and the reference label that has the minimum loss is used for the error back-propagation, which is inspired by the permutation free objective used in speech separation [45]. EEND was initially proposed using a bidirectional long short-term memory (BLSTM) network [42], and was soon extended to the self-attention-based network [43] by showing the state-of-the-art DER for two-speaker data such as the two-speaker excerpt from the CALLHOME dataset (LDC2001S97) and the dialogue audio in the corpus of Spontaneous Japanese [189].

EEND has multiple advantages. First, EEND can handle overlapping speech in a sound way. Second, the network is directly optimized toward the maximization of diarization accuracy, by which we can expect a high accuracy. Third, it can be retrained by a real data (i.e. not synthetic data) just by feeding a reference diarization label while it is often not straitforward for the prior works. However, EEND also has several limitations. First, the model architecture limits the maximum number of speakers that the model can cope with. Second, EEND consists of BLSTM or self-attention based neural networks, making it difficult to do online processing. Third, it was empirically suggested that EEND tends to overfit to the distribution of the training data [42].

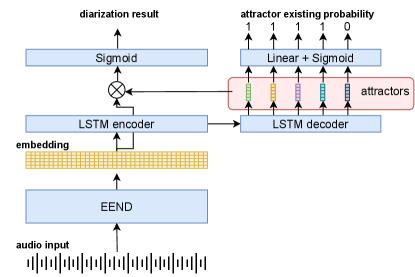

To cope with an unbounded number of speakers, several extensions of EEND have been investigated. Horiguchi et al. [170] proposed an extension of EEND with the encoder-decoder-based attractor (EDA) (Fig. 13). This method applies an LSTM-based encoder-decoder on the output of EEND to generate multiple attractors. Attractors are generated until the attractor existing probability becomes less than the threshold. Then, each attractor is multiplied by the embeddings generated from EEND to calculate the speech activity for each speaker. EEND-EDA was evaluated on CALLHOME (two to six speakers) and DIHARD 2 (one to nine speakers) dataset and showed better performance than the clustering-based baseline system.

On the other hand, Fujita et al. [171] proposed another approach to output the speech activity one after another by using a conditional speaker chain rule. In this method, a neural network is trained to produce a posterior probability , where is the speech activity for the -th speaker. Then, the joint speech activity probability of all speakers can be estimated from the following speaker-wise conditional chain rule as:

| (53) |

During inference, the neural network is repeatedly applied until the speech activity for the last estimated speaker approaches zero. Kinoshita et al. [174] proposed a different approach that combines EEND and speaker clustering. In their method, a neural network is trained to generate speaker embeddings and the speech activity probability. Speaker clustering constrained by the estimated speech activity by EEND is applied to align the estimated speakers among the different processing blocks.

There are also a few recent trials to extend the EEND for online processing. Xue et al. [172] proposed a method using a speaker tracing buffer to better align the speaker labels of adjacent processing blocks. Han et al. [173] proposed a block online version of EEND-EDA [170] by carrying the hidden state of the LSTM-encoder to generate the attractors block by block.

4 Speaker Diarization in the Context of ASR

From a conventional perspective, speaker diarization is considered a pre-processing step for ASR. In the traditional system structures for speaker diarization, presented in Fig. 1, speech inputs are processed sequentially across the diarization components without considering the ASR performance, which is usually measured using the word error rate (WER). WER is the number of misrecognized words (substitution error, insertion error, and deletion error) divided by the number of reference words. One issue is that the tight boundaries of speech segments as the outcomes of speaker diarization have a high chance of causing unexpected word truncation or deletion errors in ASR decoding. In this section we discuss how the speaker diarization systems have been developed in the context of ASR, not only resulting in better WER by preventing speaker diarization from affecting the ASR performance, but also benefiting from ASR artifacts to enhance diarization performance. More recently, there have been a few pioneering proposals made for the joint modeling of speaker diarization and ASR, which will also be introduced in this section.

4.1 Early Works

The lexical information from the ASR output has been employed for the speaker diarization system in a few different ways. First, the earliest approach was the RT03 evaluation [1] which used word boundary information for the purpose of segmentation. In [1], a general ASR system for broadcast news data was built, in which the basic components are segmentation, speaker clustering, speaker adaptation and system combination after ASR decoding from the two sub-systems with the different adaptation methods. The authors used the word boundary information from the ASR system for speech segmentation, and compared it with the BIC-based speech segmentation. While the performance gain by the ASR-based segmentation was insignificant, this was the first attempt to take advantage of ASR output to enhance the diarization performance. In addition, the ASR result was used to refine SAD in IBM’s submission [190] for RT07 evaluation. The system that appeared in [190] incorporates word alignments from the speaker independent ASR module and refines the SAD result to reduce false alarms so that the speaker diarization system can have better clustering quality. The segmentation system in [71] also takes advantage of word alignments from ASR. The authors in [71] focused on the word-breakage problem, in which the words from the ASR output are truncated by segmentation results since the segmentation results and the decoded word sequences are not aligned. Therefore, word-breakage ratio was proposed to measure the rate of change points detected inside intervals corresponding to words. The DER and word-breakage ratio were used to measure the influence of the word truncation problem. While the aforementioned early works of speaker diarization systems that leverage the ASR output focus on the word alignment information to refine the SAD or segmentation result, the speaker diarization system in [191] created a dictionary for the phrases commonly appearing in broadcast news. The phrases in this dictionary provide the identity of who is speaking, who will speak and who spoke in the broadcast news scenario. For example, “This is [name]” indicates who was the speaker of the broadcast news section. Although the early studies on speaker diarization did not fully leverage the lexical information to drastically improve the DER, the idea of integrating the information from ASR output has been adopted by many studies to refine or improve the speaker diarization output.

4.2 Using lexical information from ASR

The more recent speaker diarization systems that take advantage of the ASR transcript have employed a DNN model to capture the linguistic pattern in the given ASR output to enhance the speaker diarization result. The authors in [192] proposed a way of using the linguistic information for the speaker diarization task where participants have distinct roles that are known to the speaker diarization system. Fig. 14 shows the diagram of the speaker diarization system discussed in [192]. In this system, a neural text-based speaker change detector and a text-based role recognizer are employed. By using both linguistic and acoustic information, DER was significantly improved compared with the acoustic only system.

Lexical information from the ASR output was also used for speaker segmentation [72] by employing a sequence-to-sequence model that outputs speaker turn tokens. Based on the estimated speaker turn, the input utterance is segmented accordingly. The experimental results in [72] indicate that using both acoustic and lexical information can be exploited and an extra advantage can be obtained owing to the word boundaries we get from the ASR output.

The authors of [193] presented follow-up research within the above thread. Unlike the system in [72], the lexical information from the ASR module was integrated with the speech segment clustering process by employing an integrated adjacency matrix. The adjacency matrix is obtained from the max operation between the acoustic information created from affinities among audio segments and lexical information matrix created by segmenting the word sequence into word chunks that are likely to be spoken by the same speaker. Fig. 15 presents a diagram that explains how lexical information is integrated in an affinity matrix with acoustic information. The integrated adjacency matrix leads to an improved speaker diarization performance for the CALLHOME American English dataset.

4.3 Joint ASR and Speaker Diarization with Deep Learning

Motivated by the recent success of deep learning and end-to-end modeling, several models have been proposed to jointly perform ASR and speaker diarization. As with the previous section, the ASR results contain a strong cue to improve speaker diarization. On the other hand, speaker diarization results can be used to improve the accuracy of ASR, for example, by adapting the ASR model toward each estimated speaker. Joint modeling can leverage such inter-dependency to improve both ASR and speaker diarization. In the evaluation, a WER metric that counts word hypotheses with speaker-attribution errors as misrecognized words, such as speaker-attributed WER [194] or concatenated minimum-permutation WER (cpWER) [81], is often used. ASR-specific metrics (e.g., speaker-agnostic WER) or diarization-specific metrics (e.g., DER mentioned in Section 1.4.1) are also used complementarily.

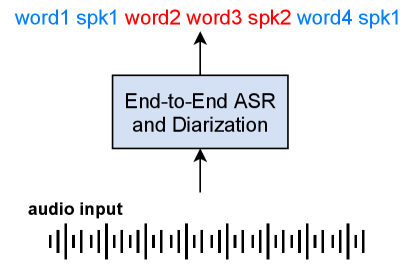

The first approach is the introduction of a speaker tag in the transcription of end-to-end ASR models (Fig. 16). Shafey et al. [65] proposed to insert a speaker role tag (e.g., doctor and patient) into the output of a recurrent neural network-transducer (RNN-T)-based ASR system. This method was evaluated by using doctor-patient conversation, and a significant reduction in WDER was reported with a marginal degradation of WER. Similarly, Mao et al. [66] proposed the insertion of a speaker identity tag into the output of an attention-based encoder-decoder ASR system, and showed an improvement of DER especially when the oracle utterance boundaries were not given. The works by Shafey et al. and Mao et al. showed that the insertion of speaker tags is a simple and promising way to jointly perform ASR and speaker diarization. On the other hand, the speaker roles or speaker identity tags need to be determined and fixed during training. Thus, it is difficult to cope with an arbitrary number of speakers using this approach.

The second approach is a MAP-based joint decoding framework. Kanda et al. [67] formulated the joint decoding of ASR and speaker diarization as follows (see also Fig. 17). Assume that a sequence of observations is represented by , where denotes the number of segments (e.g., generated by applying SAD on a long audio) and denotes the acoustic feature sequence of the -th segment. Further assume that word hypotheses with time boundary information are represented by where is the speech recognition hypothesis corresponding to the segment . Here, contains all the speakers’ hypotheses in the segment where denotes the number of speakers, and represents the speech recognition hypothesis of the -th speaker of the segment . Finally, a tuple of speaker embeddings , where is the -dimensional speaker embedding of the -th speaker, is also assumed. With all these notations, the joint decoding framework of multispeaker ASR and diarization can be formulated as a problem to find most likely as follows:

| (54) | ||||

| (55) | ||||

| (56) |

where the Viterbi approximation is applied to obtain the final equation. This maximization problem is further decomposed into two iterative problems as follows:

| (57) | ||||

| (58) |

where is the iteration index of the procedure. In [67], Eq. (57) is modeled by the target speaker ASR [195, 196, 197, 59] and Eq. (58) is modeled by the overlap-aware speaker embedding estimation. This method obtains a speaker-attributed WER similar to that of the target-speaker ASR with oracle speaker embeddings for two-speaker conversation data of the Corpus of Spontaneous Japanese [189]. On the other hand, it requires an iterative application of the target-speaker ASR and a speaker embedding extraction scheme, which make it challenging to apply the method in online mode.