A response-adaptive multi-arm design for continuous endpoints based on a weighted information measure

Abstract

Multi-arm trials are gaining interest in practice given the statistical and logistical advantages that they can offer. The standard approach is to use a fixed (throughout the trial) allocation ratio, but there is a call for making it adaptive and skewing the allocation of patients towards better performing arms. However, among other challenges, it is well-known that these approaches might suffer from lower statistical power. We present a response-adaptive design for continuous endpoints which explicitly allows to control the trade-off between the number of patients allocated to the “optimal” arm and the statistical power. Such a balance is achieved through the calibration of a tuning parameter, and we explore various strategies to effectively select it. The proposed criterion is based on a context-dependent information measure which gives a greater weight to those treatment arms which have characteristics close to a pre-specified clinical target. We also introduce a simulation-based hypothesis testing procedure which focuses on selecting the target arm, discussing strategies to effectively control the type-I error rate. The potential advantage of the proposed criterion over currently used alternatives is evaluated in simulations, and its practical implementation is illustrated in the context of early Phase IIa proof-of-concept oncology clinical trials.

Keywords information gain best arm identification type-I error control phase II trials patient benefit

1 Introduction

Clinical trials are medical research studies involving patients with the aim of identifying the best treatment for future recommendation. For many rare diseases, they may be the only chance for patients of receiving a new promising treatment promptly. However, the clinical trial dilemma often requires some participants to receive suboptimal treatments in order to be able to estimate the treatment effect and draw reliable conclusions. In other terms, ethical responsibility in clinical trials is based on the balance of two components: the individual ethics, prioritising the allocation of patients to the better treatment to ensure their own well-being, and the collective ethics, focusing on identifying the better treatment with high probability (Antognini and Giovagnoli, 2010; Bartroff and Leung Lai, 2011). Balanced allocation strategies are the most commonly used in practice, due their easy implementation and their even exploration of all the treatments which generally leads to high statistical power (Azriel et al., 2012). Although they comply quite well with the collective objective, their indiscriminate balanced allocation does not allow for a preventive stochastic drop of suboptimal treatments, by often neglecting the individual ethics requirements (Thall et al., 1989). In contrast, response-adaptive designs are able to skew the allocation of patients towards those treatments that are observed to perform better, often without a significant sacrifice in terms of statistical power. In fact, rather than using allocation probabilities fixed throughout the trial, this class of designs sequentially allocates patients to the treatment arms on the basis of the previous patient responses (Rosenberger and Lachin, 1993; Berry and Eick, 1995; Robertson et al., 2023).

We consider the case of a multi-armed clinical trial comparing several alternative treatments and where the primary endpoint is measurable in a continuous scale. These types of measurements are often available in clinical trials, although much of the methodological literature focus on binary endpoints (Rombach et al., 2020) and, in many real applications, continuous endpoints for efficacy tend to be dichotomised (Altman and Royston, 2006). In early phase drug development, common measurements in continuous scale are represented by biomarkers, which consist in measurable indicators of biological conditions and that are often used as primary endpoints in clinical trials to evaluate the efficacy (or its proxy) of treatments (Zhao et al., 2015). While in most applications one targets the highest or the lowest value of the endpoints, in some cases the focus may rather be on an optimal range or a specific target value for the selected biomarker. An example is represented by the levels of glucose in the blood measured through the HbA1c biomarker: values of HbA1c much above a given threshold indicate poor glucose control and risk of complications due to hyperglicemia, while values too low can indicate risk of hypoglycemia (Kaiafa et al., 2021). In this context, we consider clinical trials with a clinical target set by clinicians in advance. In the case of targeting the highest (or lowest) value of an endpoint, can be set to the highest (lowest) value which is clinically attainable.

While it is common in the literature to emphasise comparisons with the control and test hypotheses against it, this approach does not always align with the objectives of some multi-armed trials, such as selecting the best treatment arm. Rather than comparing each arm against the control, we aim at identifying the treatment arm which gives responses on average closer to the target . Under this assumption, competing treatment arms can be ranked from the best to the worst according to the proximity of their true mean responses to the target. In line with this objective, we propose a hypothesis testing procedure which compares the first and second best arms in the ranking, while taking into account the correct identification of these two arms. We also discuss strategies to implement such a hypothesis testing procedure and to control type-I error rate inflation.

In this work, we introduce a class of response-adaptive designs for studies with continuous endpoints aiming at identifying the best treatment arm under ethical constraints (e.g. maximising the number of patients assigned to it). Drawing from the theory of weighted information measures (Kelbert and Mozgunov, 2015; Suhov et al., 2016), we propose to use the information gain - i.e. the difference between the Shannon differential entropy and its weighted version - as a measure for the decision-making in clinical trials. Indeed, the use of suitable parametric weight functions allows to comply with ethical constraints by informing entropy measures about which outcomes are more desirable. In this work, we consider weight functions with a particular interest in arms whose mean responses are in the neighbourhood of . The idea of taking into account the "context" of the experiment directly in the information measure has also been the basis of some recently proposed clinical trial designs with either binary (Kasianova et al., 2021; Mozgunov and Jaki, 2020a; Kasianova et al., 2023) or multinomial responses (Mozgunov and Jaki, 2019, 2020b). In line with these recent developments, we define the information measures based on the Bayesian posterior distribution of the parameter of interest (i.e. the true mean response), which arises from the assumption of objective prior on it. We introduce a parameter in the weight function to control the so-called "exploration vs exploitation" trade-off (Azriel et al., 2011), and we discuss strategies to perform a robust calibration of this tuning parameter according to the objective of the trial.

The current work can be framed within the broader class of response-adaptive designs, relaxing the assumption of independence between competing arms to maximise patient benefit. An exhaustive review of the topic has been recently proposed by Robertson et al. (2023). Multi-Arm Bandit (MAB) approaches are a popular sub-class of response-adaptive designs which can be adopted in multi-armed trials to find a balance between exploration and exploitation. Many MAB designs have been recently proposed both for discrete (Villar et al., 2015; Aziz et al., 2021) and continuous (Smith and Villar, 2018; Williamson and Villar, 2020) responses, but they all focus on the highest (or lowest) response rather than on a specific target . For this reason, we also introduce two extensions of Smith and Villar (2018)’s design that incorporate the information about .

This work is motivated by an early Phase IIa proof-of-concept oncology clinical trial of a novel inhibitor (called A, the name is masked) in combination with one or several currently approved immunotherapy treatments. The working hypothesis is that, due to its mechanism of action, this inhibitor can enhance the effect of an immunotherapy, and several doses of the inhibitor has underwent the Phase I dose-escalation trial. However, the challenge is to select one immunotherapy for the further development in Phase IIb (or Phase III) trial. To answer this question, a multi-arm trial studying the three combination arms of the novel inhibitor with three various approved immunotherapies (called B, C, and D) and one arm of A+B+C was proposed. Due to the expected mechanism of action and to make a rapid decision on the development, the primary endpoint is a PD-marker (at Day 15) that was agreed to be an accurate proxy for the expected interaction effect of the drug on efficacy. A specific value of this PD-marker is being targeted, as lower values would indicate insufficient efficacy, while higher values could lead to adverse effects. The objective of the trial are (i) to find the most promising combination among the four, (ii) check whether the difference between the most promising and the second promising arm is “significant”, and (iii) collect the most information about the most promising arms (due to the constrain on the resources). Taking all three objectives into account, the proposal is to use the response-adaptive design that is presented in this work.

The remainder of the paper is organised as follows. Section 2 illustrates the derivation of the proposed criterion for a particular weight function symmetric around the target , and highlights the central role of the parameter controlling the trade-off between exploration and exploitation. Section 3 introduces a novel simulation-based hypothesis testing procedure which formalises the objective of the trial outlined above, and discusses strategies to control type-I error rates. Section 4 illustrates methods for a robust selection of the tuning parameter . In Section 5, the performance of our criterion is evaluated against several competitors through an extensive simulation study inspired by the motivating setting described above, while Section 6 illustrates the application of our multivariate proposal to a trial with co-primary endpoints. The paper concludes with a discussion in Section 7.

2 Methodology

2.1 Context-dependent information measure

Consider a clinical trial where a continuous endpoint is observed for each of the considered treatment arms. In addition, consider the responses from an arm to be an independent and identically distributed (iid) sequence of realisations of the random variables , with and (). We assume an objective Bayesian setting where is provided with a non-informative prior distribution and is supposed to be known. Notably, we assume for an improper uniform prior on the whole real line, resulting in a posterior distribution , where is the sample mean and . The posterior density of is given by

| (1) |

Assume that , namely that the posterior density in (1) tends to concentrate in the neighbourhood of a certain value as grows. The amount of information needed to estimate is given by the Shannon differential entropy of , namely

| (2) |

However, the previous quantity does not account for the fact that the information about the unknown parameter is not uniformly valuable across the entire parametric space. Indeed, along with the amount of information needed to estimate a parameter, in experimental studies it is also relevant to consider the nature of the outcome itself and how desirable it is. For example, given a pre-specified target mean response , one can consider a context-dependent measure as the weighted Shannon entropy, i.e.

| (3) |

where the weight function, , emphasises the interest on a particular subset of the parametric space (e.g. the neighbourhood of ) rather than on the whole space. Following Mozgunov and Jaki (2020b), the information gain from considering the estimation problem in the sensitive area is given by

| (4) |

This quantity can be interpreted as the amount of additional information needed to estimate the mean response when the degree of interest in the true value of is not uniform across the whole parametric space (i.e. some values of are more desirable than others).

Theorem 1 provides a closed-form expression for the information gain when a family of weight functions having a Gaussian kernel centered around the target is considered, and it offers some insights on its asymptotical behaviour.

Theorem 1.

Let and be the standard and weighted Shannon entropy of the posterior normal distribution in (1) corresponding to arm . Assume a family of weight functions of the form

| (5) |

where , , , is the variance term of the Gaussian kernel and satisfies the normalisation condition . Then,

| (6) |

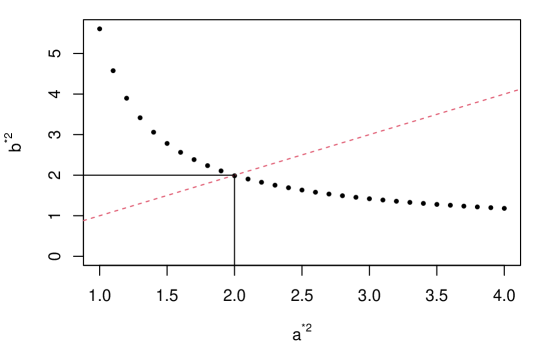

Additionally, if , then: (i) when ; (ii) when ; (iii) when .

The proof is provided in Section A.1 of the Supplementary Material (SM, henceforth). Notice that the function in (5) is symmetric around its maximum , while quantifies how the curve is dispersed around this target mean. Theorem 1 shows that the leading term of the information gain in (6) is always non-positive, with the asymptotic term achieving the maximum value when the sample mean equals the target mean. Notably, as , its asymptotical behaviour varies with . For example, the information gain converges to when , meaning that the context of the study tends to be neglected as more information is collected on a specific arm. For this reason, we focus on values of greater or equal to . When , the information gain diverges to , while, when , the information gain converges to a finite quantity which, for , depends on the squared distance between and and, for , on a standardised version of this distance.

This means that collecting more information about the arm with mean response close to implies a maximisation of the information gain . This property guarantees that the more valuable the learning about , the higher the information gain associated to this arm. In addition, when , is monotonically increasing with (cfr. Figure 1b), reflecting the idea that the more the uncertainty around arm the more the learning value associated with sampling from it. When , as more observations on arm are collected, the value of the information gain decreases, with higher values of leading to a steeper decline of this function (cfr. Figure 1c).

For the desirable properties illustrated above, we suggest the use of the information gain in (6) as patient’s gain criterion to govern patients’ allocation in a sequential experiment.

2.2 Allocation rule

Let be the total number of patients allocated to arm at the end of the trial and the total number of patients treated at the end of the trial. The scheme to allocate the patients to the arms works as follows.

After a burn-in phase where an equal batch of individuals is allocated to each of the arms, the -th patient () is allocated following the rule:

| (7) |

This rule may be seen as a deterministic response-adaptive criterion, where the next patient is almost surely allocated to the arm associated with the highest information gain. The algorithm proceeds until the total number of patients is attained. Finally, the arm whose final sample mean is closest to the target is selected for the final recommendation; namely, . The burn-in phase is necessary to have a first estimate of the sample means of the arms, even more importantly when the sequential design is based on non-informative prior distributions.

The rule in (7) accounts for patient benefit by rewarding arms with mean responses closer to the target, while encouraging the exploration of arms with higher uncertainty. Notably, smaller values of tend to mitigate the impact that different values of the standard deviations have on the information gain, thus can be regarded as a parameter controlling the impact of the response variability on this information measure. Moreover, a too greedy algorithm may lead to an insufficient exploration of the alternative arms, resulting in poor performance in terms of statistical power (Villar et al., 2015). For this reason, a key role in the "exploration vs exploitation" trade-off is played by the parameter which penalises an increasing number of observations on the same arm, offering more chances to other arms to be explored. Notably, a larger corresponds to a higher penalisation of arms with many patients, favouring a more spread allocation. In Section 4, we show some strategies to select in accordance with the trial objectives, while, in Section 5, we offer further insights on how this parameter affects the operating characteristics of the proposed design.

As alternative to (5), a weight function asymmetric around , allowing for a different weighting of values above and below the target, is discussed in Section B of the SM.

2.3 Multivariate generalisation

Let us now consider the case where endpoints are measured for each of the arms of a clinical trial. In addition, consider the responses from arm as an iid sequence of realisations of the random vectors , with mean vector and covariance matrix supposed to be known. Under these assumptions, an improper prior uniform on for will result in a posterior distribution , where is the vector of sample means for the -th arm and . If is the posterior precision matrix, the posterior density of is given by

| (8) |

Theorem 2 provides a closed-form expression for the information gain when a family of weight functions having a multivariate Gaussian kernel centered around the vector of target means is considered.

Theorem 2.

Let and be the standard and weighted Shannon entropy of the density in (8) corresponding to arm . Assume a family of weight functions of the form

| (9) |

where is the precision matrix term of the weight function and satisfying . Then,

| (10) |

The proof is provided in Section A.2 of the SM. Interestingly, for the information gain in (10) coincides with the one in (6) with . The information gain attains higher values in correspondence of vectors of the sample means being close to the vector of the target means, and it is also monotonically increasing with the variances of the endpoints (see section C.1 of the SM). The asymptotical behaviour of (10) is similar to the one of the information gain in (6), thus we consider for the same reasons argued for the univariate case.

In the bivariate case (), the function in (10) can be expressed in scalar terms as

where , and with variances in the diagonal and covariance between the endpoints in the off-diagonal. The behaviour of the information gain as function of the correlation is discussed in Section C.2 of the SM.

We adopt the function (10) as patients’ allocation criterion. As for the univariate case, the next patient of the sequential scheme is assigned to the arm with the highest information gain, while the arm selection is still based on the proximity between the final estimates of the means and the respective target mean values, i.e. the estimated best arm is the one whose final estimated mean vector is closest to the target vector.

3 Simulation-based hypothesis testing procedure

3.1 Hypothesis testing

We assume a generic context where a -armed trial is considered and no control arm is adopted for comparison. Thus, pairwise comparisons may be necessary to test the superiority of an arm over the others, and this can be a computationally demanding task. Here, following the motivating trial and to be aligned with the final recommendation strategy, we propose a testing procedure which only compares the two best performing arms, by also taking into account their correct identification.

Let be the best treatment arm and be the second best treatment arm. In case of tie between two or more treatment arms, the best (or second best) arm is randomly determined among them. Denoting and the true mean responses of arm and arm , respectively, we propose a test procedure which assesses whether is sufficiently much closer to the target than . Formally, we consider the following set of hypotheses:

| (11) |

If is the sample mean of arm at the end of the trial, the estimated best and second best arm are and . The hypotheses in (11) are tested under a Bayesian framework where is rejected at a significance level if the posterior probability that exceeds a fixed cut-off probability . Strategies to calibrate to achieve type-I error control are proposed in Section 3.2.

However, comparing distances between the means of the two estimated best arms is not sufficient to make a claim on the true ones, thus we need to guarantee that the ground truth is correctly identified. For this reason, we propose two definitions of power incorporating their correct identification, under the assumption that .

The first proposal is to consider a conditional power,

| (12) |

where is the posterior probability that . Rejections of the null are counted in (12) only for pseudo-trials whose two best arms are correctly identified. Alternatively, one can consider a two-components power, namely

| (13) |

where correct identification is counted as a "success". Notice that .

3.2 Controlling type-I error: mean and strong control

Let us consider a wide set } of plausible null scenarios, where is characterised by a particular vector of true mean responses. Given a specific design and a maximum tolerated type-I error probability , we define as the individual cut-off value calibrated at level under the null scenario (, namely a threshold value such that . Notice that, for a fixed , this probability can be efficiently evaluated via Monte Carlo simulations.

To guarantee a strong control of the type-I error, one can fix the threshold to the maximum of the individual cut-off probabilities, i.e. . This is a conservative strategy which assures that the probability of incorrectly detecting the presence of a superior arm under the null does not exceed in each of the scenarios in . Ideally, should contain a wide variety of scenarios in order to control as many situations as possible. In practice, there may be scenarios which are thought to be highly unlikely and can be ruled out. As rule of thumb, we suggest to consider null scenarios with not being distant from more than times the largest standard deviation. Remember that for normal responses it is known that the response will be in the interval with probability higher than . Thus, a distance much higher than would imply that values in the neighbourhood of are very unlikely for arm . Furthermore, in some contexts, null scenarios with distances larger than might signify the ineffectiveness of all the treatments and, therefore, having a control on any of them may be out of the trial scope.

In some cases, the strong control of the type-I error may be a too stringent requirement which leads to a loss of power. This is even more evident when there are only few scenarios requiring a substantially higher cut-off value than the rest. Similarly to Daniells et al. (2024), we propose to relax the required type of control, trying to reach an average control of the type-I error probability rather than a strong one. Then the resulting threshold value is such that , where is the individual type-I error probability associated with scenario . In this case, can be regarded as the average type-I error probability for the set of scenarios in . Notice that the simple average can be replaced by a weighted average, giving larger weight to scenarios which are more relevant than others.

4 Robust strategies for selecting

4.1 Strategy

The choice of the penalisation parameter is crucial since to control the trade-off between exploration and exploitation. We consider as "optimal", a value of which maximises a specific operating characteristic of interest (e.g. statistical power, percentage of times the best arm is selected in repeated trials). The optimal value of depends on several parameters, like the vector of true means, the vector of standard deviations, the vector of target means, the total sample size, the number of arms, the burn-in size and the parameter . Apart from the vectors of the true means and true standard deviations, we suppose that all the other parameters are fixed in advance by clinicians. Particular caution must be placed on the vector of standard deviations which may be supposed to be either known (i.e. prior guess used as estimate) or unknown.

Following Mozgunov and Jaki (2020b), we discuss a general approach to select the robust optimal value of which does not require the prior knowledge on the mean responses and the standard deviations. The idea is to consider a large variety of plausible alternative scenarios, each one characterised by a vector of mean responses (and a vector of standard deviations, in case they are assumed to be unknown), and to select the value of associated with the average best performance over all the considered scenarios.

In practice, one considers a set of plausible alternative scenarios, where is characterised by the set of unknown parameters, and a grid of values for . Then, the algorithm to find the robust optimal consists in the following steps:

-

1.

Define an objective function , where is the value assumed by a particular operating characteristic of interest for a fixed and scenario ().

-

2.

Compute , for each scenario and value of in the considered grid.

-

3.

Find the optimal : .

Averaging over a wide set of scenarios offers a robust way to select a suitable value of regardless of the true values of the parameters: although the optimal value of may not be convenient for some particular scenario, this procedure ensures that it is nearly optimal.

4.2 Objective functions

The objective functions should be chosen in accordance with the operating characteristic that one wants to target in the calibration of . If the main objective is to maximise the patient benefit (PB), we propose to target the average percentage of experimentation of the best arm (evaluated over pseudo-trials), namely

| (14) |

where if and only if patient is allocated to the true best arm during the pseudo-trial . Supposing that for any , we adopt the following squared difference as corresponding objective function:

| (15) |

quantifying how far each value is from .

In case the objective is to achieve a pre-specified level of power, than we use

| (16) |

where is either the power defined in (12) or in (13), is the power attained using fixed and equal randomisation (FR) and . The optimal will be then the smallest for which the probability to obtain a power higher than of the power attained by the FR is at least . This avoids a too excessive penalisation of increasing arm sample sizes, once that the requirement in terms of power is met.

5 Simulation study of Phase II clinical trials

5.1 Setting

Let us consider an early Phase IIa proof-of-concept oncology clinical trial such as the one introduced in the motivating example of Section 1. We consider alternative treatments (no control arm) and a total of patients recruited at the end of the trial. We assume that the primary endpoint is PD-marker measured on a continuous scale, and responses from arm closer to the target () are more desirable. For simplicity, we assume for , implying that sample means equal to and () are treated equally by our proposed design. Thus, for a given scenario, the best treatment arm is the one associated with smallest mean response in absolute value.

We investigate the performance of our method over random scenarios under the alternative hypothesis in (11). Each scenario is characterised by a vector of means , where is generated from a , , while variances are assumed to be known and equal to , , for each scenario. Details and a full analysis for the case of unknown variances are presented in Section F of the SM.

Operating characteristics of interest are (i) the type-I error rates in correspondence of specific null scenarios, (ii.a) conditional () and (ii.b) two-components () statistical power (cfr. Section 3.1), (iii) the average percentage of experimentation of the best arm - cfr. (14) - as measure of patient benefit (PB) and (iv.a) the percentage of pseudo-trials with correct identification of the best arm (CS) and of (iv.b) the two best arms (CS). For each given scenario, operating characteristics are assessed over pseudo-trials (Monte Carlo simulations).

In the following, we will denote WE(,) the class of designs proposed in Section 2.2, and we will consider the case of : this will offer insights on how different degrees of discrimination between arm-specific variances affect the resulting operating characteristics.

We compare its performance with several alternative allocation procedures which have good properties either in terms of statistical power or patient benefit. For a fair comparison with our proposal and to narrow down the set of competitors, we restrict to response-adaptive designs based on deterministic allocation rule. Additionally, in line with the objective of the trial, we only consider those adaptive designs that try to skew allocations in favour of arms with means closer to the target. Specifically, we compare with the following designs: (i.) Fixed and equal randomisation (FR), where each patient can be treated in a specific arm with fixed probability ; (ii.) Current belief (CB), where the next patient is treated in the arm with posterior mean closest to the target ; (iii.) Thompson Sampling (TS), where patient is treated in the arm which maximises the adjusted posterior probability of being the best, i.e. where helps to stabilise the resulting allocations; (iv.) Symmetric Gittins Index (SGI) and (v.) Targeted Gittins Index (TGI), two modifications of Smith and Villar (2018)’s proposal involving (full details are provided in Section D of the SM). We use objective priors and fix , in line with the considerations of Wang (1991) and Williamson and Villar (2020) for a trial of size . Notice that, apart from FR, all the alternative designs can be classified as response-adaptive. FR is taken as benchmark for power comparison, due to its even exploration of all the arms and its wide application in real trials. CB and TS are common and intuitive response-adaptive designs, which are generally associated with good performance in terms of patient benefit. Finally, SGI and TGI are modifications of a forward-looking design which is oriented toward a patient benefit objective (Smith and Villar, 2018; Williamson and Villar, 2020).

In absence of prior knowledge about the treatments, the burn-in size is fixed to for all the response-adaptive designs to help sequential algorithms collecting sufficient preliminary information about all the arms. The same burn-in size is chosen for both the case of known and unknown variances, in order to isolate its effect in the comparison between these two settings. Further details are provided in Section E.1 of the SM.

5.2 Calibration of cut-off probabilities

For a given combination of and , we calibrate design-specific cut-off probabilities under the set of null scenarios both considering the strong and the average control of the type-I error at a level . Two types of considerations should be made on this choice of null scenarios: (i) calibration is done under the most challenging situation where there is no sub-optimal arm; (ii) one only considers since, for , values and are treated equally in the definition of best arm. Details on the choice of the null scenarios are presented in Section E.2 of the SM.

Results are reported in Figure 2, where only four WE designs are shown based on the results of Section 5.3.

Response-adaptive designs tend to be associated with higher type-I error probabilities when is small, while FR design is associated with type-I error rates which increase with before reaching a plateau. This different behaviour can be explained by the peculiar nature of response-adaptive designs. Indeed, variability around the arm responses can overly penalise some arms at the beginning of the trial in favour of others. Notably, when is very small (i.e. all true means are very close to the target value), most of the patients are likely to be assigned to the arm which - by chance - performs better at the beginning of the trial: as a result, any other arm may not have the chance to be further explored, thus being falsely identified as inferior at the end of the trial. If a practitioner aims at reducing even more the resulting inflation of the type-I error rate associated with response-adaptive designs in this type of scenarios, a possible solution is to consider higher burn-in sizes (cfr. Section E.1 of the SM), possibly at the expense of a lower patient benefit: although a larger is associated to a more exhaustive exploration of all the arms and to more precise estimates, a too high value may lead to assign a high percentage of patients to sub-optimal treatment arms.

5.3 Robust selection of the parameter

The implementation of the proposed design requires to fix in advance the penalisation parameter . We apply the procedure presented in Section 4 both for the objective function maximising the patient benefit and the one achieving a specific level of statistical power.

We perform two independent analyses for the case of and . After preliminary checks, the sequence of values for is restricted in the interval [, ] with step . For the power objective function, we require that of the power of the FR is achieved with probability higher or equal to . This condition should assure that in most of the scenarios the resulting power is not too below the one achieved by the FR design. As a measure for , we consider the two-components power defined in (13) with design-specific cut-off probabilities guaranteeing an average control of the type-I error rate at over the set of scenarios considered in Section 5.2.

Figure 3 illustrates the result of the optimisation of and .

As anticipated, lower values of are suggested when the criterion optimises the patient benefit, while larger values of are necessary to achieve the power objective. At the same time, higher values of requires higher values of to attain the specific objective to compensate the higher relevance attributed to the variance. Therefore, the value of and will be used when , while and when .

5.4 Numerical results

A wide overview of the operating characteristics of the considered designs is reported in Figure 4, which summarises results across all the randomly generated scenarios.

WE designs achieve good performance in terms of patient benefit and identification of the best arm than their competitors, with WE(, ) and WE(, ) achieving a power almost comparable to FR. The even exploration of all the arms which characterises FR also leads to a higher percentage of correct identification of the two best arms, but with high variability across the scenarios. CB and TS generally perform worse than the other response-adaptive designs: notably, they both achieve high medians in terms of patient benefit but with high variability around them. In contrast, SGI and WE(, ) achieve comparable patient benefit measures, but with much less variability around their medians.

To further investigate the performance of our proposal, we pick two scenarios from the ones considered in Figure 4. Notably, given and , we consider: (i) Scenario I, characterised by , with the worst treatment arm having the highest variability; (ii) Scenario II, characterised by , with the best treatment arm having the highest variability. Table 1 illustrates the operating characteristics of the considered designs under Scenario I and II.

Under Scenario I, TGI, WE(, ), WE(, ) achieve a conditional power higher or equal to FR, while their two-components power drops down due to a higher difficulty in identifying the true second best arm at the end of the trial: indeed, the difference between CS1 and CS2 is higher than in these three response-adaptive designs, while is lower than for FR. Moreover, FR detects the two best arms and claim the superiority of the best one in of the simulated trials, while this percentage is reduced by points when WE(, ) is considered. This moderate sacrifice in terms of power allows WE(, ) to assign - on average - more than out of patients to the best treatment arm (around out with FR), with a large earning in terms of patient benefit. All the other considered response-adaptive designs achieve moderately higher PB at the cost of a much lower two-components power. Notably, the highest PB is achieved by WE(, ), which make this design an appealing alternative whenever one aims at optimising the benefit of the patients, also due to its low variability (s.e. ).

Scenario I: ,

Design

PB (s.e.)

CS

CS

FR

24.99 (0.04)

99.63

97.97

0.89

0.88

CB

81.22 (0.14)

97.10

74.49

0.72

0.53

TS

81.57 (0.13)

96.43

73.90

0.70

0.52

SGI

81.81 (0.07)

99.67

82.75

0.85

0.70

TGI

80.99 (0.08)

94.84

75.17

0.95

0.71

WE(1,0.55)

82.22 (0.06)

99.88

82.49

0.81

0.66

WE(2,0.7)

80.92 (0.07)

99.85

84.46

0.86

0.73

WE(1,0.8)

81.12 (0.06)

99.89

83.36

0.88

0.73

WE(2,1.1)

77.68 (0.08)

99.93

85.57

0.93

0.79

Scenario II: ,

Design

PB (s.e.)

CS

CS

FR

25.05 (0.04)

75.72

75.72

0.07

0.06

CB

38.93 (0.37)

43.31

39.31

0.52

0.21

TS

35.40 (0.37)

47.88

43.89

0.52

0.23

SGI

63.67 (0.28)

76.94

72.49

0.39

0.28

TGI

23.31 (0.30)

78.71

76.33

0.22

0.17

WE(1,0.55)

67.59 (0.26)

82.67

77.86

0.35

0.28

WE(2,0.7)

76.78 (0.14)

91.99

86.67

0.29

0.25

WE(1,0.8)

72.12 (0.17)

88.24

83.81

0.35

0.29

WE(2,1.1)

76.70 (0.11)

91.19

86.51

0.33

0.28

Under Scenario II, WE(, ) and WE(, ) achieve the highest value of PB, but the second one is associated with lower variability around this measure and, thus, can be deemed to be more reliable. CB performs best in terms of conditional power compared to the other designs, but fails in correctly identify the best treatment more than of the times. In contrast, WE designs correctly identifying the two best arms in a high percentage of replicas (i.e. more than ) and are associated with higher values of two-components power. Notice that FR is associated with a much lower power than the other designs, probably due to the relatively lower exploration ( patients on average) of the best arm.

Overall, the considered WE designs performance are comparable to the ones of the other response-adaptive designs, with small differences in terms of patient benefit but larger gain in terms of correct identification of the best arm and two-components power. In addition, WE designs generally tend to achieve a much higher patient benefit than FR due to their ability to skew allocations according to the accrued data, at the cost of moderately lower power. With a selection of targeting the power, WE can result in a statistical power closer to the one of FR, but with remarkably larger values of PB. Finally, when the best arm has also the most variable response, the flexibility of WE designs allows it to achieve an even higher gain over FR in terms of power. Further details on how operating characteristics are affected by different choices of and are given in Section E.3 of the SM.

6 Illustration: co-primary endpoints in Phase II trials

6.1 Setting

In Section 6, we focused on a single quantitative endpoint. However, multiple endpoints are generally collected in clinical trials and we can adopt the design proposed in Section 2.3 to deal with this particular context. In particular, we illustrate the performance of the novel response-adaptive design in the case of an oncology clinical trial with competing drug combinations, a total sample size of and two co-primary endpoints. The first endpoint is a PD-marker (PDM) - e.g., see motivating setting in Section 1 - targeting a specific value , for all , while the second endpoint is the tumour shrinkage rate (TSR, ) whose theoretical target is assumed to be (i.e. full disappearance of the tumour), for all . Variance matrices are assumed to be known and equal to . The goal of the study is to identify the best arm, to claim its superiority and to allocate most of the patients to it during the trial.

As illustration, we consider the design for bivariate endpoints described in Section 2.3 with . We compare its performance to two alternative approaches: (a.) fixed and equal randomisation (FR), (b.) current belief (CB). As already discussed in Section 5, FR is expected to achieve high power due to the even exploration of all the arms, while CB is expected to result in high PB. We choose a burn-in size of patient per arm for response-adaptive designs, and we consider a scenario where and . The four treatment arms can be characterised as follows: the first two treatments have values of the PDM relatively close to the target but associated with a relatively small TSR ( and , respectively); the third has twice the PDM of the first but it is associated with a remarkable average TSR (i.e. ); finally, the fourth is associated with a slightly poorer average PDM (e.g. more distant from the target) than the third treatment, but it has the highest average TSR. Observe that coefficients of variation of the PDM are relatively small (range: 0.5-1.25) if compared with the ones of TSR (1.25-7.5).

The definition of "best" arm in the bivariate case may be more challenging, and it strictly depends on the adopted distance. The means of the two endpoints can vary greatly in magnitude, and the use of a Minkowski distance (e.g. Euclidean, Manhattan) may lead to the endpoint with higher magnitude prevailing over the others, unless some sort of standardisation is applied. Notably, for a given arm , we propose to use a standardised version of the distance between the target vector and the true mean vector, i.e. Therefore, the final recommendation is . Adopting this standardised distance, the fourth treatment arm can be regarded as the best one.

We consider the same operating characteristics as in Section 5 to assess the performance of the considered designs. Hypothesis testing is performed following the same procedure described for the univariate case (cfr. Section 3), with the only exception that is rejected at significance level if the posterior probability that exceeds the cut-off probability , where and are, respectively, the estimated best and second best treatment arms according to distance .

6.2 Type-I error control

Design-specific cut-off probabilities are calibrated under the set of null scenarios , with , to achieve an average control of the type-I error at a level . Figure 5 shows the resulting individual type-I error rates in correspondence of combinations (, ).

The calibration under strong control is available in Section G of the SM. Notably, FR and WE designs are associated with individual type-I error rates which do not exceed , with the exception of WE(0.5) which registers a type-I error rate under the configuration . FR and WE(0.75) have more conservative type-I error rates when the mean of PDM endpoint equals the target in all the treatment arms. On the contrary, there is a large inflation of type-I error rate of CB in correspondence of these scenarios, while elsewhere it is below .

6.3 Results

Table 2 shows the operating characteristics of the considered designs. FR correctly selects the best two arms in a high number of replicas (), but loses power in treating only (on average) patients in these two arms. Both WE(0.5) and CB achieve high patient benefit measure, but the standard error for CB is more than five times larger than for WE(0.5). Such a high standard error suggests an unpredictable behaviour of CB, which highly depends on the response of the first treated patients. Overall, despite of the low burn-in size (), WE designs obtain the best operating characteristics, with WE(0.5) prioritising the patient benefit objective and WE(0.75) achieving the highest power.

| Design | PB (s.e.) | CS | CS | ||

|---|---|---|---|---|---|

| FR | 24.98 (0.04) | 0.82 | 0.82 | 0.41 | 0.34 |

| CB | 64.41 (0.44) | 0.67 | 0.65 | 0.33 | 0.21 |

| WE(0.5) | 77.18 (0.08) | 0.89 | 0.89 | 0.51 | 0.45 |

| WE(0.75) | 49.78 (0.03) | 0.89 | 0.89 | 0.52 | 0.47 |

7 Discussion and future directions

In this work, we proposed a class of design for the selection of arms in a multi-armed setting with continuous outcomes. This is based on a weighted information measure which retains the information about which outcomes are more desirable, a feature of considerable interest in settings with ethical constraints. Particular emphasis was given on a weight function with Gaussian kernel centered around a pre-specified clinical target . The resulting information gain criterion was used to govern the allocation of patients in the arms during the trial, and it showed flexibility in tackling the exploration vs. exploitation trade-off. The careful tuning of a penalisation parameter was of great importance to ensure desirable operating characteristics of the design, and a general procedure to select was illustrated.

In Section 5, we selected the burn-in size based on considerations regarding the need to control type-I error rates, taking into account both the case of known and unknown variances. In Section 6, demonstrate that the proposed methodology can be made fully adaptive. In any case, the burn-in size plays a relevant role in sequential designs since it mitigates the impact of initial random fluctuations that might occur at the start of the trial, though it may also reduce the ethical benefits of full adaptiveness when an optimal treatment truly exists. Future research could explore the joint optimization of and , with the former maximising a patient benefit criterion and the latter targeting a specific power or type-I error rate.

For the criterion presented in Section 2.2, we introduced an additional parameter controlling the impact of different variances on the allocation strategy. We do not recommend a robust selection of , as the simultaneous calibration of multiple tuning parameters (e.g., , and ) could reduce the feasibility of our proposal. In general, we suggest to fix when heteroscedasticity among different arms is thought to be evident. This would mitigate the effect of a too large variance taking the lead over the others.

The choice of the weight function is crucial and should be based on the setting of interest. For example, a multivariate generalisation of the symmetric weight function was presented to evaluate multiple endpoints simultaneously. An asymmetric weight function was also presented in the SM: this type of weight function might be particularly useful in some dose-finding studies, where, for example, overdosing and underdosing may want to be treated in a different way. Although normal (or multinormal) distributions were assumed throughout the work, the general procedure outlined in Section 2 can be adapted to accommodate various types of responses and weight functions.

In line with the primary objective of selecting the best treatment, a hypothesis testing procedure which compares the two best treatments and assesses their correct identification was presented. In this context, two measures of power were defined: the conditional power quantifies the ability of a design to correctly detect the superiority of the best treatment arm over the second best, whenever these two arms are properly identified at the end of the trial; the two-components power takes into account both the ability of identifying the best two arms and correctly detect the superiority of the best one. While the first measure isolates the actual gain in using the best treatment rather than the second best, the second measure provides a more comprehensive assessment decision-making performance of the design. A procedure to calibrate cut-off values was illustrated, along with different strategies to control the type-I error (either achieving a strong or an average control).

We adopted a hybrid approach which combines Bayesian and frequentist methodologies. A Bayesian perspective was employed to define information measures based on the posterior distribution of derived from an objective prior. While non-informative priors allow equal treatment of all therapies and minimise potential subjective bias (Ghosh, 2011), integrating available historical information for some or all treatment arms can be readily implemented within the proposed framework. On the other hand, frequentist properties (such as type-I error rates) of Bayesian hypothesis testing procedures were evaluated. This practice is common, as regulatory bodies often require explicit evidence that frequentist error rates are appropriately controlled (Shi and Yin, 2019). In addition, in the proposed allocation criterion, unknown variances are updated sequentially using their maximum likelihood estimate, similar to the approach adopted in many sequential designs (e.g., Liu et al. (2009)). Although this method has shown good performance in practice, an attempt to make the procedure fully Bayesian is subject of ongoing research.

In this work, we used a deterministic "select the best" rule to allocate patients in the sequential experiments, and we compared our proposed criterion to several allocation procedures which are known to have good properties either in terms of statistical power or patient benefit. For a fair comparison, we only considered response-adaptive designs based on deterministic rules. When appropriately calibrated through a robust selection of the parameter , the proposed class of designs offers a good balance between patient benefit and statistical power, by making the correct decision in a remarkable proportion of replicated trials. In future works, a randomised allocation rule based on the information gain in (4) is worth to be considered. The feasibility of such a criterion is shown in Mozgunov and Jaki (2020b). Other good randomised options can be found in the literature of the "Top-Two" allocation criteria, a class of allocation rules that, at each iteration, randomly assign patients only to one of the two best performing treatments. Top-Two Probability Sampling and Top-Two Thompson Sampling are two common examples (Russo, 2020; Jourdan et al., 2022; Wang and Tiwari, 2023), but more sophisticated criteria based on weighted information measures may be explored.

References

- Antognini and Giovagnoli [2010] Alessandro Baldi Antognini and Alessandra Giovagnoli. Compound optimal allocation for individual and collective ethics in binary clinical trials. Biometrika, 97(4):935–946, 2010.

- Bartroff and Leung Lai [2011] Jay Bartroff and Tze Leung Lai. Incorporating individual and collective ethics into phase i cancer trial designs. Biometrics, 67(2):596–603, 2011.

- Azriel et al. [2012] David Azriel, Micha Mandel, and Yosef Rinott. Optimal allocation to maximize the power of two-sample tests for binary response. Biometrika, 99(1):101–113, 2012.

- Thall et al. [1989] PF Thall, R Simon, and SS Ellenberg. A two-stage design for choosing among several experimental treatments and a control in clinical trials. Biometrics, 45(2):537–547, 1989.

- Rosenberger and Lachin [1993] William F Rosenberger and John M Lachin. The use of response-adaptive designs in clinical trials. Controlled clinical trials, 14(6):471–484, 1993.

- Berry and Eick [1995] Donald A Berry and Stephen G Eick. Adaptive assignment versus balanced randomization in clinical trials: a decision analysis. Statistics in medicine, 14(3):231–246, 1995.

- Robertson et al. [2023] David S Robertson, Kim May Lee, Boryana C López-Kolkovska, and Sofía S Villar. Response-adaptive randomization in clinical trials: from myths to practical considerations. Statistical science: a review journal of the Institute of Mathematical Statistics, 38(2):185, 2023.

- Rombach et al. [2020] Ines Rombach, Ruth Knight, Nicholas Peckham, Jamie R Stokes, and Jonathan A Cook. Current practice in analysing and reporting binary outcome data—a review of randomised controlled trial reports. BMC medicine, 18:1–8, 2020.

- Altman and Royston [2006] Douglas G Altman and Patrick Royston. The cost of dichotomising continuous variables. Bmj, 332(7549):1080, 2006.

- Zhao et al. [2015] Xuemei Zhao, Vijay Modur, Leonidas N Carayannopoulos, and Omar F Laterza. Biomarkers in pharmaceutical research. Clinical chemistry, 61(11):1343–1353, 2015.

- Kaiafa et al. [2021] Georgia Kaiafa, Stavroula Veneti, George Polychronopoulos, Dimitrios Pilalas, Stylianos Daios, Ilias Kanellos, Triantafyllos Didangelos, Stamatina Pagoni, and Christos Savopoulos. Is hba1c an ideal biomarker of well-controlled diabetes? Postgraduate medical journal, 97(1148):380–383, 2021.

- Kelbert and Mozgunov [2015] Mark Yakovlevich Kelbert and Pavel Mozgunov. Asymptotic behaviour of the weighted renyi, tsallis and fisher entropies in a bayesian problem. Eurasian Mathematical Journal, 6(2):6–17, 2015.

- Suhov et al. [2016] Yuri Suhov, Izabella Stuhl, Salimeh Yasaei Sekeh, and Mark Kelbert. Basic inequalities for weighted entropies. Aequationes mathematicae, 90:817–848, 2016.

- Kasianova et al. [2021] Ksenia Kasianova, Mark Kelbert, and Pavel Mozgunov. Response adaptive designs for phase ii trials with binary endpoint based on context-dependent information measures. Computational Statistics & Data Analysis, 158:107187, 2021.

- Mozgunov and Jaki [2020a] Pavel Mozgunov and Thomas Jaki. Improving safety of the continual reassessment method via a modified allocation rule. Statistics in medicine, 39(7):906–922, 2020a.

- Kasianova et al. [2023] Ksenia Kasianova, Mark Kelbert, and Pavel Mozgunov. Response-adaptive randomization for multiarm clinical trials using context-dependent information measures. Biometrical Journal, 65(8):2200301, 2023.

- Mozgunov and Jaki [2019] Pavel Mozgunov and Thomas Jaki. An information theoretic phase i–ii design for molecularly targeted agents that does not require an assumption of monotonicity. Journal of the Royal Statistical Society Series C: Applied Statistics, 68(2):347–367, 2019.

- Mozgunov and Jaki [2020b] Pavel Mozgunov and Thomas Jaki. An information theoretic approach for selecting arms in clinical trials. Journal of the Royal Statistical Society Series B: Statistical Methodology, 82(5):1223–1247, 2020b.

- Azriel et al. [2011] David Azriel, Micha Mandel, and Yosef Rinott. The treatment versus experimentation dilemma in dose finding studies. Journal of Statistical Planning and Inference, 141(8):2759–2768, 2011.

- Villar et al. [2015] Sofia Villar, Jack Bowden, and James Wason. Multi-armed bandit models for the optimal design of clinical trials: Benefits and challenges. Statistical Science, 30:199–215, 2015.

- Aziz et al. [2021] Maryam Aziz, Emilie Kaufmann, and Marie-Karelle Riviere. On multi-armed bandit designs for dose-finding trials. Journal of Machine Learning Research, 22(14):1–38, 2021.

- Smith and Villar [2018] Adam L Smith and Sofía S Villar. Bayesian adaptive bandit-based designs using the gittins index for multi-armed trials with normally distributed endpoints. Journal of applied statistics, 45(6):1052–1076, 2018.

- Williamson and Villar [2020] S Faye Williamson and Sofía S Villar. A response-adaptive randomization procedure for multi-armed clinical trials with normally distributed outcomes. Biometrics, 76(1):197–209, 2020.

- Daniells et al. [2024] Libby Daniells, Pavel Mozgunov, Helen Barnett, Alun Bedding, and Thomas Jaki. How to add baskets to an ongoing basket trial with information borrowing. arXiv preprint arXiv:2407.06069, 2024.

- Wang [1991] You-Gan Wang. Sequential allocation in clinical trials. Communications in Statistics-Theory and Methods, 20(3):791–805, 1991.

- Ghosh [2011] Malay Ghosh. Objective priors: An introduction for frequentists. Statistical Science, 26(2):187–202, 2011.

- Shi and Yin [2019] Haolun Shi and Guosheng Yin. Control of type i error rates in bayesian sequential designs. Bayesian Analysis, 14(2):399–425, 2019.

- Liu et al. [2009] Guohui Liu, William F Rosenberger, and Linda M Haines. Sequential designs for ordinal phase i clinical trials. Biometrical Journal: Journal of Mathematical Methods in Biosciences, 51(2):335–347, 2009.

- Russo [2020] Daniel Russo. Simple bayesian algorithms for best-arm identification. Operations Research, 68(6):1625–1647, 2020.

- Jourdan et al. [2022] Marc Jourdan, Rémy Degenne, Dorian Baudry, Rianne de Heide, and Emilie Kaufmann. Top two algorithms revisited. Advances in Neural Information Processing Systems, 35:26791–26803, 2022.

- Wang and Tiwari [2023] Jixian Wang and Ram Tiwari. Adaptive designs for best treatment identification with top-two thompson sampling and acceleration. Pharmaceutical Statistics, 22(6):1089–1103, 2023.

- Gittins et al. [2011] John Gittins, Kevin Glazebrook, and Richard Weber. Multi-armed bandit allocation indices. John Wiley & Sons, 2011.

Supplementary Material

A Proofs

A.1 Proof of Theorem 1

Proof.

Consider the generic arm . It is well known that the Shannon entropy of a normal distribution with mean and variance is given by

while the weighted Shannon entropy depends on the adopted weight function.

To begin, one can derive the explicit formula of the (normalised) weight function. For this purpose, the following equation is useful:

| (17) |

where and and find that the normalisation condition is satisfied by

| (18) |

Notably,

with being the pdf in of a normal distribution with mean and variance .

As a consequence, the weighted Shannon entropy can be written as

Exploiting the fact that , the weighted Shannon entropy can be finally expressed as

The resulting information gain is then

By observing that , one can easily derive the final expression of the information gain for the weight function in (18), i.e.

If , then the result immediately follows.

∎

A.2 Proof of Theorem 2

Proof.

Consider the generic arm . The Shannon entropy of a multivariate normal distribution with -dimensional mean vector and covariance matrix is given by

Remind that and . To derive the (normalised) weight function, the following result is of particular interest:

| (19) |

where , and .

Then, using the result in (19), the normalisation condition is satisfied by

Notably,

with being the pdf in of a normal distribution with mean vector and precision matrix .

As a consequence, the weighted Shannon entropy can be written as

To solve the integral in the last line of the previous expression, the following three steps are followed:

-

1.

exploiting the properties of the trace of a matrix:

-

2.

adding and subtracting a same quantity :

-

3.

combining the previous results to obtain a sum of three tractable integrals:

where

Then,

and, after replacing the values of and ,

If , the result immediately follows.

∎

B Asymmetric weight function

The allocation rule for single endpoints presented in the main text has the property to give the same importance to values of the sample mean being above or below the target mean of a same constant value . More formally, if and , then and , ceteris paribus. However, the assumption of symmetric behaviour around the target may not be tenable whenever the investigator desires to weight values above and below the target in a different way.

To comply with this different context of the study, we propose the following family of piece-wise asymmetric weight functions:

| (20) |

where both pieces are proportional to truncated normal distributions with mean , whereas the left piece is truncated in with variance while the right piece is truncated in with variance , with , and with such that . Notice that the symmetric weight function can be seen as a special case of the asymmetric one (cfr. (20)) when . In Theorem 3, we illustrate the derivation of the corresponding information gain, .

Theorem 3.

Consider the same assumption as in Theorem 1 (cfr. main text), but assuming a family of weight functions as the one in (20). Then the corresponding expression of the information gain is given by

| (21) |

with

and and being, respectively, the cdf and the pdf of a standard normal distribution.

When , the information gain in 21 does not generally attain its maximum in correspondence of (cfr. Figure B.1).

Therefore, one needs to find combinations of values and that lead to an information gain having this desirable property (henceforth, referred to as optimal combinations).

B.1 Optimal combinations of a and b

Here, we focus on the case where is required. Once we fix , the corresponding value leading to an information gain which attains its maximum in is such that

| (22) |

The closed-form derivation of is cumbersome, thus we solve (22) via numerical optimisation. Figure B.2 suggests that under the constraint , the value does exist and is unique if and only if .

The parameters and can be thus characterised as asymmetry parameters. For example, the larger (analogously, ), the more skewed to the left (right) the resulting information gain and the higher the penalisation of values of higher (lower) than (cfr. Figure 21).

The practical selection of (or , when ) should depend on the objective of the trial.

Finally, Figure B.4 shows that the optimal combination () remains the same regardless of , and .

B.2 Proof of Theorem 3

Proof.

Consider the generic arm . First, one needs to derive the normalisation constant such that

where

-

•

-

•

Exploiting the equation in (17), the first integral can be re-written as

and, similarly,

where is the cdf of a standard normal distribution, , and , .

After some computations, the normalisation constant of the weight function can be expressed as

where and .

Now, let’s derive the weighted Shannon entropy:

where

-

•

-

•

To solve the two integrals, the following four results are particularly useful:

-

1.

Once again, exploiting the equation in (17) and assuming that is the pdf in of a normal distribution with mean and variance (), one obtains that

-

2.

Let’s define two probability density functions:

-

(a)

, which is the pdf of a truncated in and computed in ;

-

(b)

, which is the pdf of a truncated in and computed in .

-

(a)

-

3.

In addition, let’s define

-

(a)

the truncated means:

-

(b)

the truncated variances:

-

(a)

-

4.

For :

Combining the previous four results, one obtains that

and, similarly,

Finally,

The resulting information gain immediately follows.

∎

C Multivariate generalisation: how information gain changes as function of the main parameters (case )

C.1 Means and variances

Figure C.1 and C.2 show the information gain (bivariate case) as function of the vector of means and the vector of variances, respectively. Similarly to the univariate case, the information gain achieves its maximum when sample means are equal to their corresponding target values. Additionally, the larger the variances, the higher the information gain.

C.2 Correlation

The maximum of the information gain as function of - ceteris paribus - is attained in

with the convention that . In other words, for the -th arm, the maximum of the information gain is attained in correspondence of the correlation being equal to the ratio of the standardised differences between the endpoint-specific target and sample mean. If variances of the two endpoints are equal and the sample means are at the same distance from the target, then the maximum is attained in when the two endpoint-specific sample means are equal and when they are unequal (cfr. Figure C.3a and C.3b, respectively). Intuitively, in the first case responses of the two endpoints tend to be on the same side with respect to their target means (e.g. on average, both above or both below the corresponding target): this would suggest that a positive correlation may exist between the two endpoints and, coherently, higher positive correlations lead to a higher information gain. Conversely, when responses of the two endpoints tend to be on different sides with respect to their target means (e.g. on average, one above and one below the corresponding target), higher negative correlations lead to a higher information gain. Finally, when at least one of the two sample means equals the corresponding target, the information gain is symmetric around the null correlation point (cfr. Figure C.3c).

D Target-oriented modified Gittins index rules

Smith and Villar [2018]’s design is oriented toward a patient benefit objective and, thus, it is worth to be considered as competitor to our proposal. In Smith and Villar [2018]’s formulation, the allocation rule proceeds as follows: if larger responses are desirable, the next patient is assigned to the arm () with largest Gittins index (GI), , where the values of the standardised index can be interpolated from the tables printed in Gittins et al. [2011, pp. 261–262] for several possible combinations of current sample size and . Vice versa, if smaller responses are desirable, the next patient is assigned to the arm with smallest quantity . The quantity is a measure of the learning reward associated with keep sampling an arm which has already been sampled times, with being a tuning parameter quantifying the value of learning. However, this design cannot be directly adopted to our setting where desirable values are the ones as close as possible to . Thus, we propose two heuristic extensions of the rule above: the symmetric Gittins index criterion (SGI) and the targeted Gittins index criterion (TGI).

The first proposal is to use an allocation rule which assigns the next patient to the arm which minimises the quantity

| (23) |

which has the property of being symmetrical around its maximum (i.e. it takes the same value in correspondence of and , , ceteris paribus. The basic idea dwells in discounting the learning component from the distance between the current sample mean and the target mean.

The second proposal arises from considering a targeted Gittins index for arm (), namely the Gittins index that would be associated to arm if its response were : as goes to infinity, the value of learning goes to and the targeted Gittins index would tend to by the Law of Large Numbers. For this reason, our proposal is to adopt the following rule which allocates the next patient to the arm which minimised the distance between the current Gittins index and the asymptotical targeted Gittins index (i.e., ):

| (24) |

where the (respectively, ) is adopted when the current sample mean is below (above) the target. Interestingly, as tends to infinity, tends to which is the key quantity of our adopted recommendation rule.

E Additional results for the simulation study with single endpoint

E.1 Burn-in size and type-I error

Figure E.1 shows how the type-I error rate decreases as the burn-in size () increases. We choose the scenario where all true means are equal to the target: this scenario can be considered challenging for our proposed design, as it is generally associated with a high inflation of the Type I error rate. The drop is surprisingly rapid when passing from to , meaning that an equal allocation of of the patients to each arm prior to the adaptive allocation may be useful to stabilise the algorithm and reduce the its dependence from the initial values. This is even more evident in the case of unknown variances since an additional parameter must be estimated in each arm.

For the full simulation study, we adopt which seems to offer a good balance between protecting patients from default allocations to sub-optimal arms and control of type-I error. Notably, in the considered example, the choice leads to a small difference between type-I error rates of the known and unknown cases.

E.2 Choice of the null scenarios used for calibration of cut-off probabilities

Recall that we focus on the set of null scenarios for the calibration of the cut-off probabilities. Our strategy consists in considering values of from to increasing in a quadratic way. This reflects the interest in having a larger number of scenarios where is closer to , which are frequently associated with high inflation or deflation of the type-I error. Indeed, as increases, the type-I error rate tends to stabilise.

In practice, we first consider a grid of evenly spaced values from to ; then, we take the square of these values to obtain the final grid of values for .

E.3 How and affect operating characteristics

Figure E.2 illustrates how the parameter has a key role in regulating the trade-off between patient benefit (individual objective) and power requirements (collective objective). Notably, in Scenario I, the power tends to increase and the patient benefit to decrease with as result of the more spread allocation of patients in the various treatment arms. Moreover, if one compares the operating characteristics under the two different choices of , the case of uniformly leads to higher power but lower patient benefit than the case of . A reasonable explanation dwells in the fact that a high gives more chance to the arm with highest variance to be explored: while this generally helps improving the power, it may slightly reduce the exploitation of the best treatment when the most variable arm is suboptimal as in Scenario I. In contrast, Scenario II tells a partially different story: WE designs with lower are uniformly (across ’s) dominated by the ones with higher in terms of patient benefit since the best treatment is also the one with most variable response; unsurprisingly, the opposite tends to happen in terms of power. The only unexpected behaviour is represented by the patient benefit increasing with when , which is in net contrast with the value optimising the patient benefit criterion. Once again, it is important to remind that this value of was selected by averaging across a large number of possible configurations, and the ones like Scenario II can be regarded as outliers.

F Unknown variance case with single endpoint

When the variance of the response is supposed to be unknown, we rely on the unbiased sample variance ( as plug-in estimate of the true one. This requires a minimum burn-in of patients per arm to obtain a first estimate of the arm-specific variances when response-adaptive designs are used. However, in practice, a higher burn-in may be needed to achieve better operating characteristics when the variances are supposed to be unknown (cfr. Section E.1). Here, we adopt the same setting as in Section 5 of the main text, apart from the scenarios which will be now characterised by the generic set , where is generated from and from , . We run a simulation study over alternative scenarios.

F.1 Type-I error control and cut-off probabilities

We consider the set of null scenarios whose elements are all the possible combinations of values and . Figure F.1 and F.2 show the type-I error rates for each considered design in correspondence of the scenarios in when cut-off probabilities are calibrated - respectively - under strong and average control of the type-I error at level .

Interestingly, most of the design are associated with an inflation of the type-I error in correspondence when arms have different variances, and this is even more evident in the case of . The magnitude of this inflation strongly depends on the particular vector of means: for example, when , most of the response-adaptive designs are associated with higher type-I error rates with respect to the case of or . An interesting exception is represented by WE(, ), which is associated with higher type-I error rates when and all the variances are equal. Finally, FR is associated with higher type-I error rates when becomes large.

F.2 Selection of

Figure F.3 illustrates the result of the optimisation of the patient benefit and power criteria for and . The probability to achieve of FR power does not achieve the threshold , therefore we choose in order to maximise this probability. The proposed values of are for and for .

F.3 Numerical results

Figure F.4 shows the results of the simulation study in the case of unknown variances. As anticipated, the uncertainty about the estimates of the unknown variances is reflected in poorer performance of all the methods. Similarly to the case of known variances (cfr. main text), WE designs with optimising the power criterion - i.e. WE(, ) and WE(, ) - are associated with high percentage of identification of the best arm at the end of the trial. TGI, WE(, ) and WE(, ) exhibit similar distributions of the two-components power, but TGI obtains lower performance in terms of identification of the best arm and patient benefit. Surprisingly, in few random scenarios WE designs may perform even poorer than FR in terms of average percentage of experimentation of the best arm (i.e. patient benefit measure): specifically, this occurs in scenarios for WE(, ) and only in scenarios for WE(, ). However, it is interesting to notice that these scenarios are all characterised by the mean of the best treatment being relatively far from the target and close to the one of the other treatments (the median two-components power under FR is in this subset of scenarios).

Overall, WE(1,1.2) achieves slightly lower power than FR but with more satisfactory performance in terms of correct identification of the best arm and, above all, patient benefit.

Now, we assess the operating characteristics of the considered designs in two specific scenarios:

-

i

Scenario Ibis, characterised by and , with the worst treatment arm having the highest variability but the second worst having the lowest variability;

-

ii

Scenario IIbis, characterised by and , with the best two treatment arms having the highest variability.

Table F.1 illustrates the operating characteristics of the considered designs under Scenario Ibis and IIbis. In both cases, WE(1,1.2) and WE(2,1.45) perform well in terms of correct identification of the best arm at the end of the trial and power: in Scenario I, their two-components power is points lower than FR, but high a remarkable gain in terms of patients benefit, while in Scenario II they both achieve the highest two-components power, along with SGI. Interestingly, SGI results in slightly better operating characteristics than WE(1,0.55) and WE(2,0.75) in the two considered scenarios. TGI achieves relatively high conditional power (higher than FR), but performs poorly in terms of patient benefit measure, with a corresponding high standard error.

Scenario Ibis (unknown variances): ,

Design

PB (s.e.)

CS

CS

FR

24.99 (0.04)

94.49

88.81

0.57

0.50

CB

74.31 (0.24)

90.40

59.71

0.37

0.22

TS

75.10 (0.23)

89.01

58.52

0.37

0.22

SGI

74.42 (0.17)

95.80

66.58

0.50

0.33

TGI

70.33 (0.24)

86.60

59.59

0.58

0.34

WE(1,0.55)

74.23 (0.18)

95.88

66.73

0.44

0.29

WE(2,0.75)

69.93 (0.22)

93.98

66.23

0.39

0.26

WE(1,1.2)

71.95 (0.16)

97.80

71.11

0.54

0.39

WE(2,1.45)

69.84 (0.17)

97.04

71.46

0.54

0.39

Scenario IIbis (unknown variances): ,

Design

PB (s.e.)

CS

CS

FR

25.05 (0.04)

78.25

78.03

0.23

0.18

CB

60.26 (0.35)

71.87

58.59

0.30

0.17

TS

60.09 (0.35)

71.00

57.40

0.30

0.17

SGI

62.85 (0.27)

80.72

67.42

0.35

0.24

TGI

54.69 (0.36)

75.43

64.86

0.32

0.21

WE(1,0.55)

62.67 (0.29)

80.08

66.59

0.31

0.21

WE(2,0.75)

60.95 (0.29)

79.39

66.57

0.24

0.16

WE(1,1.2)

63.60 (0.23)

85.40

73.56

0.34

0.25

WE(2,1.45)

62.69 (0.23)

84.83

73.46

0.33

0.24

G Additional results for illustration with co-primary endpoints

Figure G.1 shows the individual type-I error rates in correspondence of combinations of values and when cut-off probabilities are derived under strong control of type-I error probability. Results are in line with those discussed in the main text for the case of average control of the type-I error.