A Real Time Super Resolution Accelerator with Tilted Layer Fusion

Abstract

Deep learning based superresolution achieves high-quality results, but its heavy computational workload, large buffer, and high external memory bandwidth inhibit its usage in mobile devices. To solve the above issues, this paper proposes a real-time hardware accelerator with the tilted layer fusion method that reduces the external DRAM bandwidth by 92% and just needs 102KB on-chip memory. The design implemented with a 40nm CMOS process achieves 1920x1080@60fps throughput with 544.3K gate count when running at 600MHz; it has higher throughput and lower area cost than previous designs.

Index Terms:

Convolutional Neural Networks (CNNs), deep learning accelerators, layer fusion, real-time, super-resolutionI Introduction

Deep learning based superresolution (SR) has attracted significant attention recently due to its superior reconstructed image over the traditional methods [1, 2, 3]. Many state-of-the-art models [4, 5, 6] have gotten better and better quality, but their large model size and high computational complexity prevent them from real-time hardware implementation. Instead, this paper adopts the hardware-friendly Anchor-based Plain Net (APBN) [7] due to its 8-bit quantized weights, decent PSNR performance (2dB better than the widely used quantized FSRCNN [8]), and regular network structure, when compared to other lightweight models [9, 10, 8].

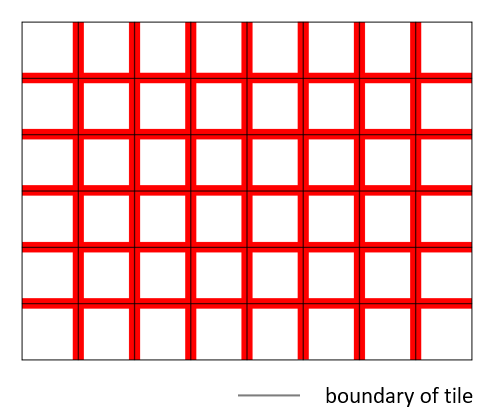

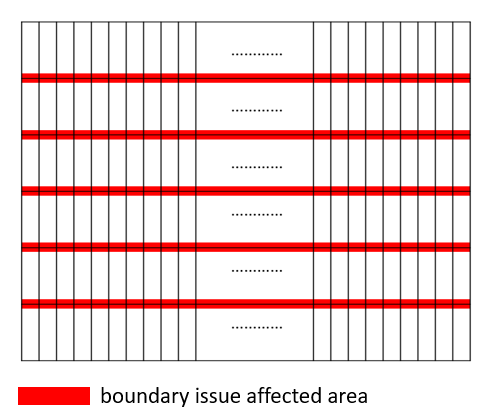

Due to the growing interest in applying superresolution in real-time applications, various SR hardware accelerators have been studied. Reference [11] adopts the depth-wise convolution for small model size and line-by-line processing for small buffer cost. Reference [12] adopts the constant kernel size Winograd convolution for regular hardware design. However, both are designed in a layer-by-layer model execution style which needs to store the layer output to DRAM and load it again for the next layer. It results in high DRAM access amount, especially for high definition image output. Reference [13] proposes a selective caching based layer fusion that partitions the input into tiles and uses layer fusion [14] for processing to avoid intermediate DRAM access. However, it still needs large on-chip memory for tile boundary data in the layer fusion. Block convolution [15] ignores these boundary data but will have significant information loss as shown in Fig. 1(a). Thus, it needs a large tile size and the corresponding large buffer size.

To solve the above problems, we propose an SR accelerator with tilted layer fusion that executes the layer fusion in a tilted addressing between layers. The layer fusion will start its next layer execution as soon as the required input data is ready, which can avoid intermediate data access to the external DRAM due to all these data residing on chip. In practice, the input is usually partitioned into tiles for layer fusion [14], which will need to store the tile boundary data or recompute them to keep the performance intact. The proposed approach will not keep all these boundary data as in [14] or discard them as in [15] to avoid large buffer size or information loss. Instead, we keep the left and right boundary data but with the tilted layer fusion to reduce the buffer size as well as performance loss, as shown in Fig. 1(b). The corresponding hardware uses a simple input broadcasting scheme to reduce control and area overhead. The final results can achieve 1920x1080@60FPS throughput with a much lower area cost compared to previous designs.

II Proposed Tilted Layer Fusion Method

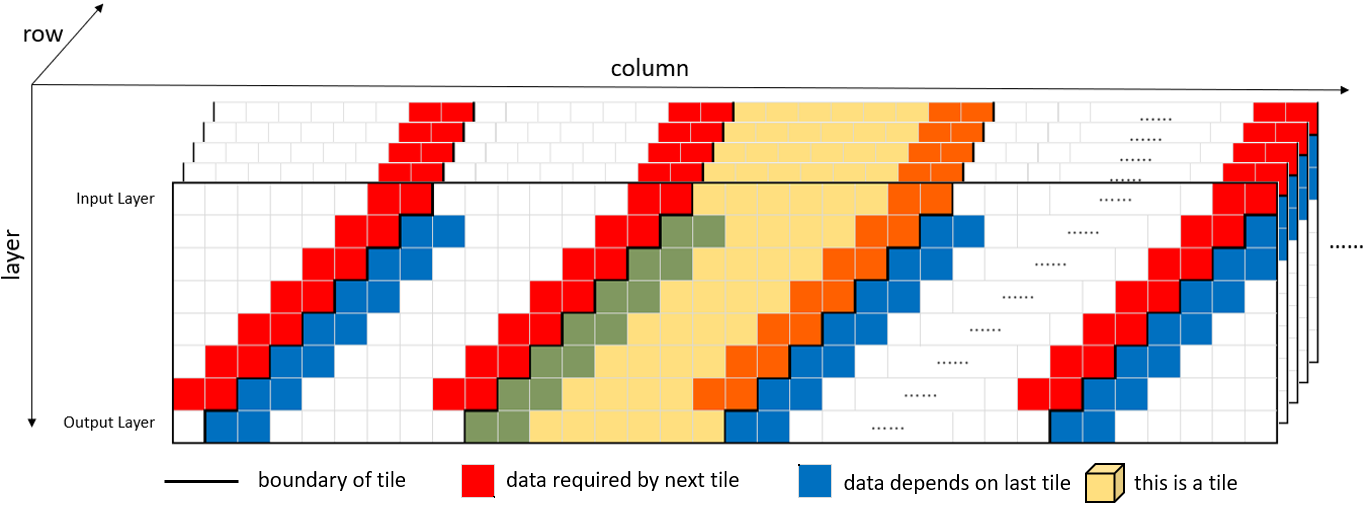

Fig. 2 shows a 3D view for all layer inputs by stacking feature maps along the layer axis to demonstrate how the tilted layer fusion works. In which, every block represents a pixel of the feature map in a corresponding layer. Notice that the vertical axis is the layer axis and the horizontal axis is the column axis in a feature map.

As shown in Fig. 2, the feature map input is partitioned into parallelepipedal tiles along the layer instead of rectangular tiles in [14] so that the area of a tile on the next layer will be shifted one pixel left. With this, the required data for the next layer at the right boundary (red pixels) will be ready for computation without waiting for other boundary data.

For the left boundary of the tile (blue pixels), its computation will need data from the left and top neighboring data (red pixels). However, with layer fusion, the left and top neighboring data are just recently finished, which implies we can preserve these data in a limited size buffer until they are not needed anymore.

With the above approach, we can keep the information at the left and right boundaries while only discard information at the top and bottom boundaries, as shown in Fig. 1(b). The performance penalty is marginal, less than 0.2dB based on our simulation. Due to this small information loss, the required tile size could be much smaller than previous approaches, and thus it has a smaller buffer size. In this paper, the tile size is selected to be 8x60, and the ignored boundary rows are just 5 rows for the target 640x360 input image as shown in Fig. 1(b), which has a negligible impact on the performance penalty.

III System Architecture

III-A Overview

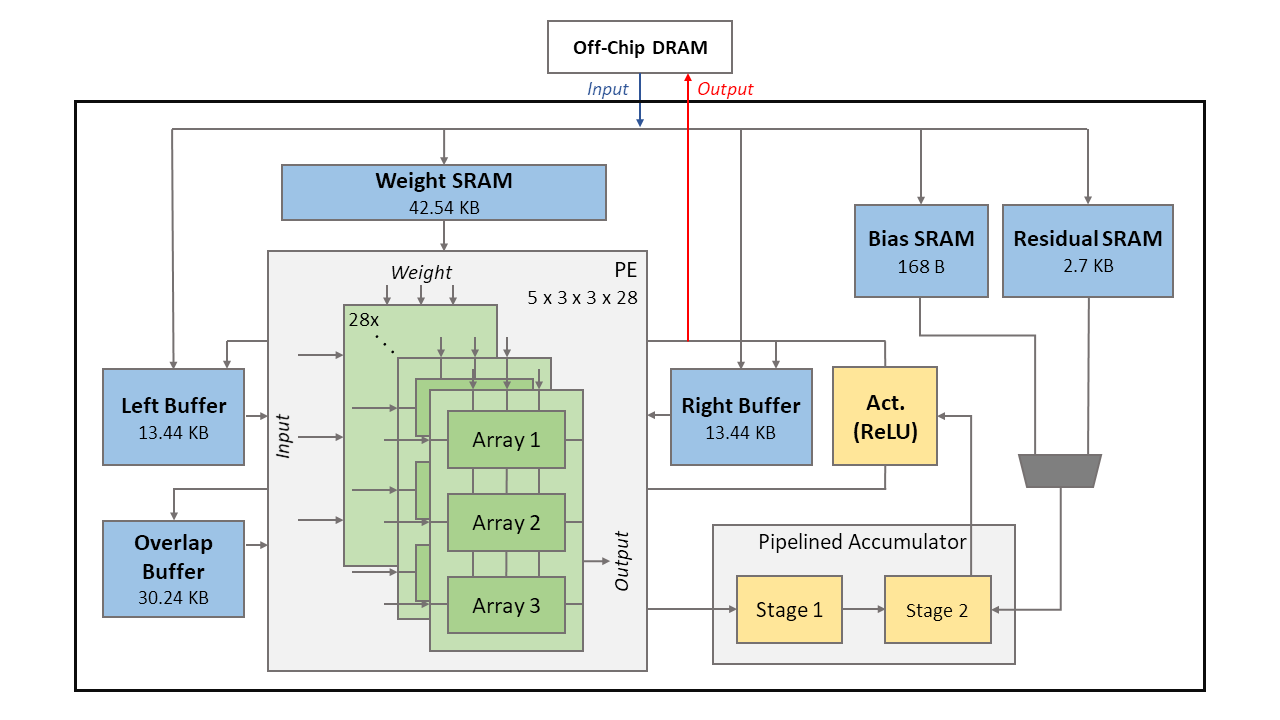

The adopted model [7] has seven layers. The first six layers are 3x3 convolutions with ReLU activation, and the final layer is a 3x3 convolution followed by a residual-like structure. Based on this model, we propose its hardware architecture as shown in Fig. 3. The proposed system architecture consists of 28 PE blocks, 2-stage pipelined accumulator, activation block, ping-pong buffer, overlap buffer, weight SRAM, bias SRAM, and residual SRAM. This design accesses 8-bit input images, weights, and biases from off-chip DRAM and then stores them to the corresponding SRAM buffers.

The whole processing is as follows. First, to process a layer, one of the ping-pong buffers will serve as an input provider to supply input to 28 PE blocks for simultaneously processing 28 channels of input. The output of 28 PE blocks will be sent to the 2-stage pipelined accumulator to complete the convolutions. The results will then go through the activation block for ReLU activation function. Then, the results will be stored in the other ping-pong buffer. Second, to process the next layer, the previous output buffer will serve as input, and the previous input buffer will serve as output. The whole processing is repeated. With this, all intermediate data will be within the chip and save external DRAM access.

III-B PE Blocks

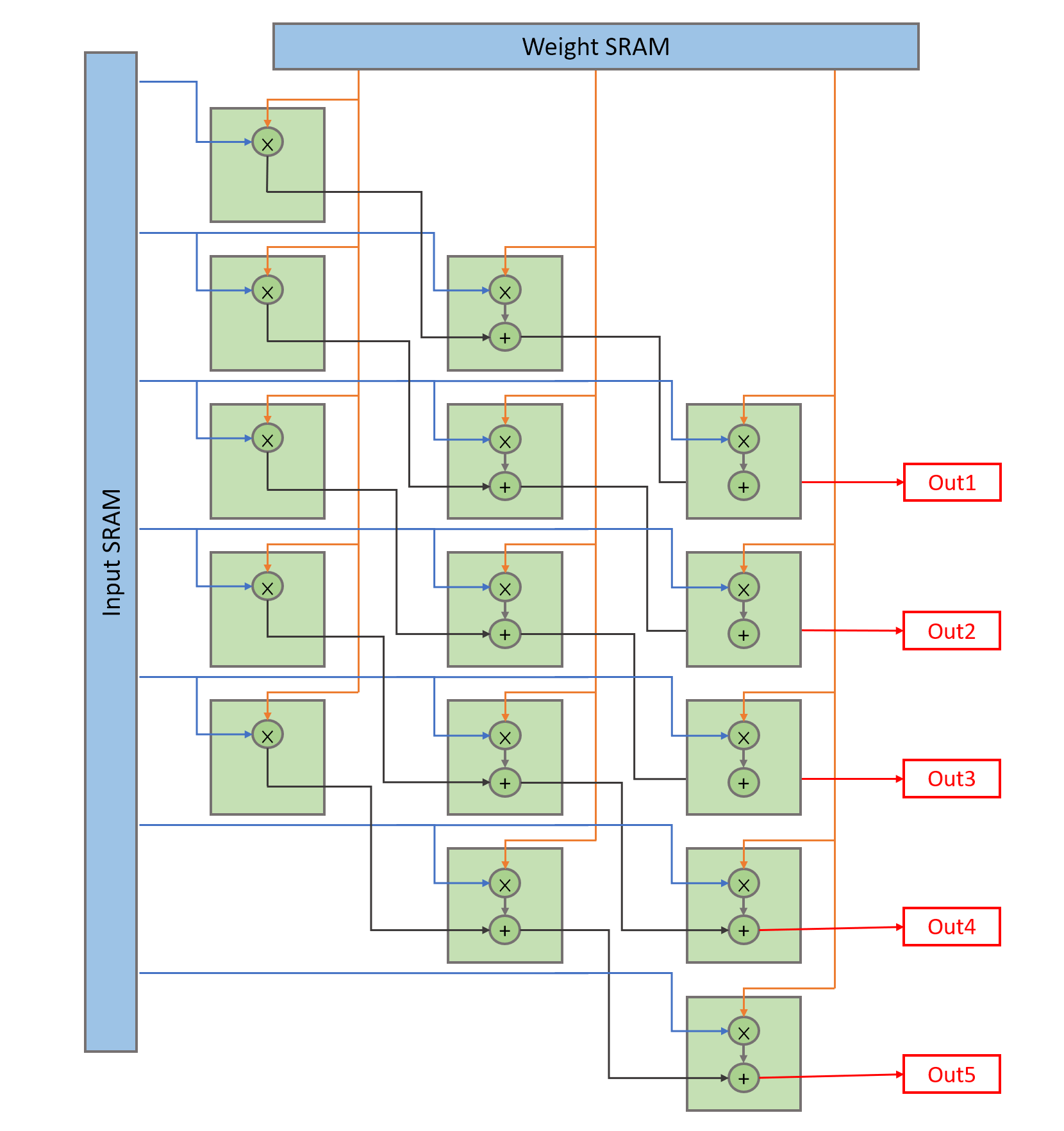

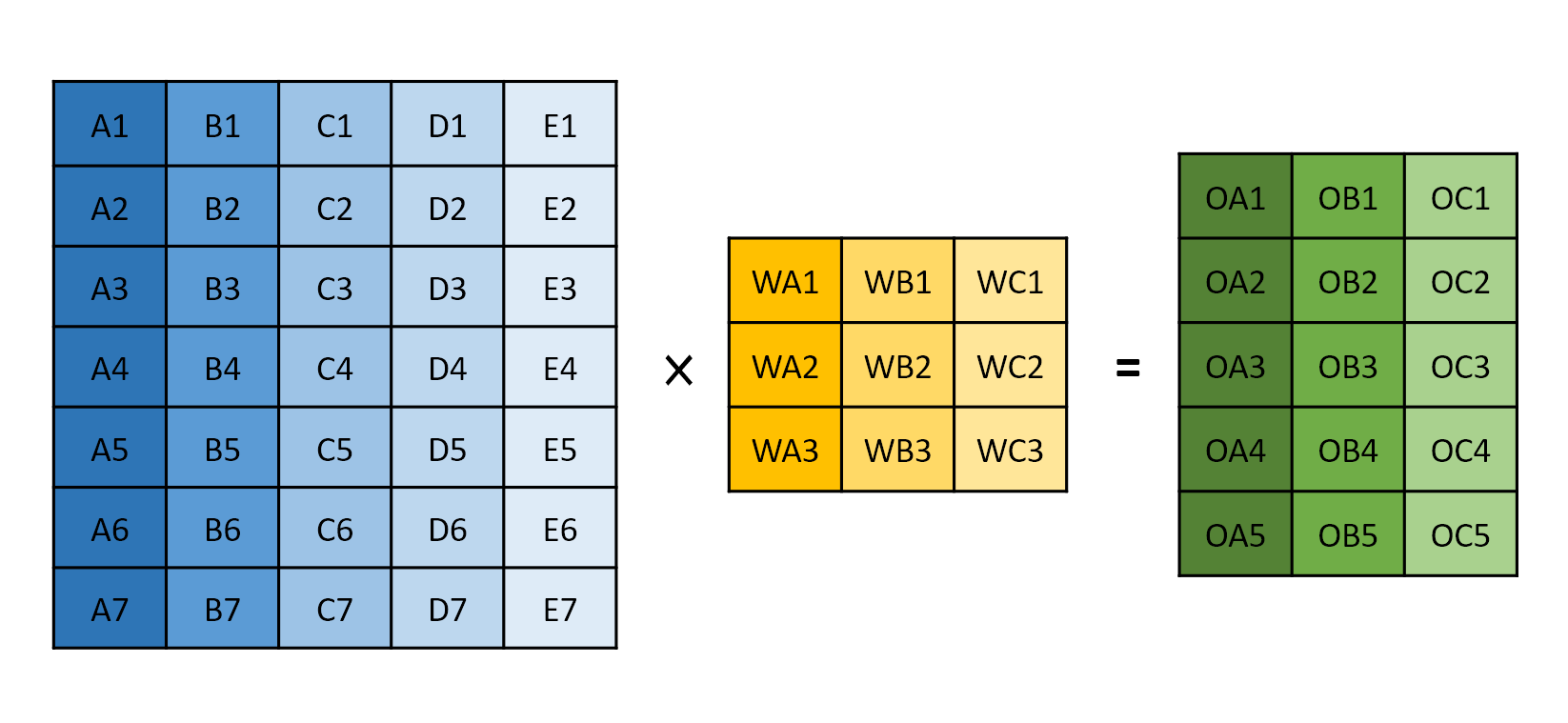

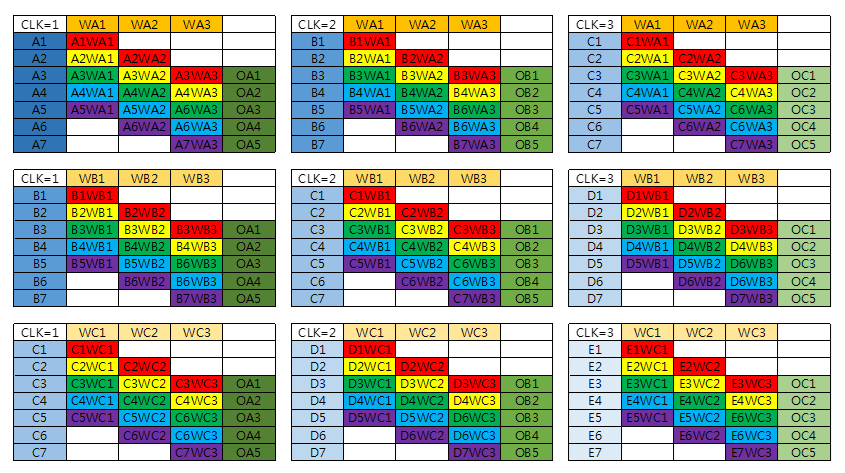

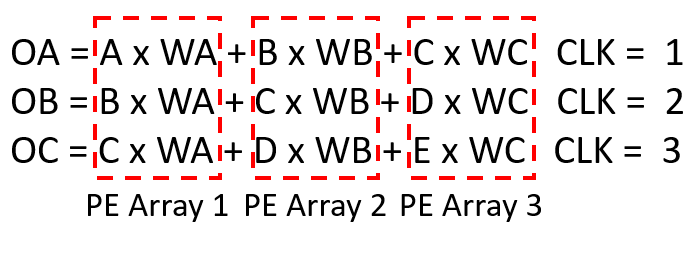

This design has 28 PE blocks, where each PE block consists of three PE arrays, and a PE array has 5x3 MACs. To ease the arrangement of these MACs, we adopt the input broadcasting as shown in Fig. 4(a), where seven input images are broadcasted horizontally, and three weights are broadcasted vertically. These two are multiplied and then added up along the diagonal direction to generate five partial sums for the accumulators.

In the adopted model [7], all the intermediate layers have 28 channels except for the first layer(3 channels) and the final layer(27 channels). By splitting MACs into 28 PE blocks, and letting one PE block process a channel of input at a time, our design can reach an average of hardware utilization with little control overhead. The three PE arrays within one PE block can finish a 3x3 convolution in a cycle, which will be further discussed later.

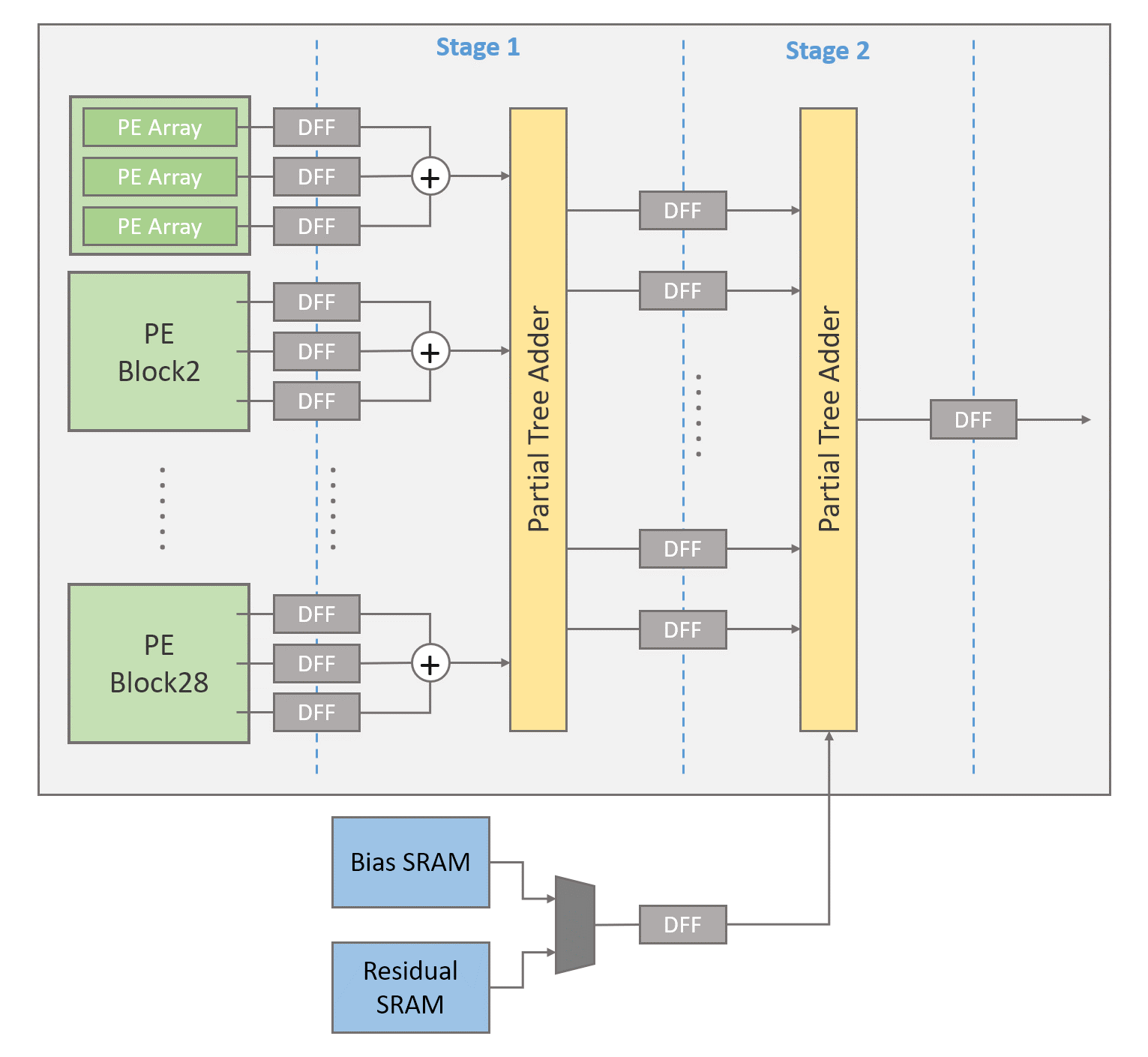

III-C Accumulator

Fig. 4(b) shows the accumulator, which is two-stage pipelined to shorten the critical path. Three partial sums from three PE arrays of the same PE(channel) are added up first, and then the 28 output channels from 28 PE blocks are summed up with a tree adder divided into two partial tree adders. In the second stage of the 2-stage pipelined accumulator, a multiplexer selects whether biases or residuals should be added depending on the current working layer.

III-D Data Flow of Convolution

Fig. 6(a) shows the data flow of a PE block process example from Fig. 5. In our data flow, a column of an input image is broadcasted horizontally while a column of filter weights is broadcasted vertically. With this data flow, the products to be summed up together to complete a convolution are along the diagonal direction. Hence, we use parallelogramical arranged PEs. This design can reduce the control overhead with the expense of allowing two more input images to the PE array.

This vectorwise scheduling is illustrated by the mathematical equations in Fig. 6(b). By sending three consecutive columns of input images and filter weights to three PE arrays, the convolution of one column of output is completed within a cycle. In this case, the example in Fig. 5 only takes three cycles to be completed. To sum up, this data flow achieves high parallelism, simple control, regular structure, and high hardware utilization.

III-E I/O Ping-Pong Buffers

The ping pong buffer stores a tile of data for each layer. When it starts computing a new tile, the system loads the input image data from the external DRAM to the left ping pong buffer. Then, the data are consecutively loaded from the left ping pong buffer to PEs for computation and then stored in the right ping pong buffer after finishing all operations of the current layer. After that, the role of two ping pong buffers is switched to the next layer of computing. This process is repeated layer by layer until finishing the output layer.

III-F Overlap Buffer

The overlap buffer is a queue-style addressing memory for left and right boundary data. For easy addressing, the current computing layer is regarded as the back layer of the queue, and the last layer is the front layer of the queue. When computing, the data required by the first two columns of the tile are loaded from the front layer, and the results of the last two columns are stored in the back layer. After finishing the computation of a layer, it pops the front layer and the next layer becomes the new back of the queue.

We implement the queue data structure on the overlap buffer by saving the address of the front layer. This addressing helps us calculate the corresponding address of each pixel in both front and back layers in the queue.

| [11] | [12] | [16] | SRNPU [13] | Our Work | |||||

| SR Method |

|

Modified IDN |

|

Tile-Based | Anchor-Based | ||||

| Layer Fusion | None | None | Fused-Layer |

|

Tilted Layer Fusion | ||||

| Technology |

|

32 nm |

|

65 nm | 40 nm | ||||

| Frequency [MHz] | 150 | 200 | 100 | 200 | 600 | ||||

| SRAM [KB] | 194 | - | 945 | 572 | 102 | ||||

| Throughput [Mpixels/s] | 600 | 124.4 | 520 | 65.9 | 124.4 | ||||

| Number of Macs | - | 2048 | - | 1152 | 1260 | ||||

| Gate Count | - | 3113.7 k | - | - | 544.3 k | ||||

| Normalized Area [] | - | - | - | 6.06 | 3.11 | ||||

| Target Resolution | 4K UHD (60fps) | FHD (60 fps) | QHD (120fps) | FHD (30fps) | FHD (60fps) | ||||

| *A 2-input NAND gate is counted as one equivalent gate. | |||||||||

| *Normalized area is calculated by scaling design to 40nm process. | |||||||||

IV Analysis and Experimental Results

IV-A Buffer Size Analysis

The buffers in this design serve two purposes. One is for storing the values of feature maps and residuals; the other is for model weights. The following analysis will focus on improving the former since the latter depends on the adopted model. This analysis assumes that the classical layer fusion [15] uses a 60x60 tile, which is similar to the one used in [15] and has the same tile height as our 8x60 tile size.

In the following, , , and represent the memory size of ping-pong buffer, overlap buffer, and residual buffer, respectively. is the number of rows of a tile (length of a tile), is the number of columns of a tile (width of a tile), represents the number of layers, and represents the number of channels of layer ; for example, represents the number of channels of the input layer.

IV-A1 Ping-Pong Buffers

As mentioned before, the classical layer fusion method [15] cannot have a small tile size due to information loss or recomputation. In this work, the information loss in the horizontal direction has been eliminated by tilted layer fusion, which implies that the tile size restriction on the horizontal direction no longer exists. Therefore, we can reduce the width of the tile as we wish, which helps reduce the buffer size significantly. In the extreme case, the width of the tile can be a single column. In this paper, the width of a tile is selected as 8 columns.

The size of each ping pong buffer is

| (1) |

In our case, is 8 while the classical layer fusion needs to be 60. Our design brings a significant advantage on buffer size cost.

IV-A2 Overlap Buffer

In the tilted layer fusion method, the results of one layer have to be reserved for computation of the following layer of the next tile. Therefore, it requires a memory that can contain number of layer+2 (7+2 for our model) layers of data. In each layer, 60x2x28 bytes of memory are required to store all data in the last two columns of the tile. The overlap buffer size is

| (2) |

Although the proposed method requires additional memory for the overlap buffer, it is still a good trade-off due to memory reduction on ping-pong buffers.

IV-A3 Residual Buffer

Because of the tilted layer fusion, the residual buffer has to store more columns of data. Therefore, the residual buffer size is

| (3) |

Table II shows the comparison summary of the buffer cost. The proposed approach can save nearly 60% of the buffer cost.

| Tilted Layer Fusion | Classical Layer Fusion | |

|---|---|---|

| Weight Buffer | 42.54KB | 42.54KB |

| Ping-Pong Buffers | 26.88KB | 201.6KB |

| Overlap Buffer | 30.24KB | - |

| Residual Buffer | 2.7KB | 10.8KB |

| Total | 102.36KB | 254.94KB |

IV-B Implementation Result and Comparison

Table I shows the implementation result of the proposed design and the comparison with other designs. This design is synthesized with Synopsys Design Compiler under the TSMC 40nm CMOS process. It achieves 60 fps for x3 scale FHD image generation while running at the 600 MHz clock frequency. The total chip area only occupies 3.11 with 102 KB on-chip SRAM.

Compared to designs without layer fusion, [11] and [12], the required memory bandwidth is significantly reduced. The amount of off-chip DRAM access of this design is reduced from 5.03 GB/sec to 0.41 GB/sec, a total reduction of . Thus, even DDR2 DRAM can work well with this design. Compared to designs with layer fusion [13], this design has a smaller on-chip SRAM due to the adoption of the tilted layer fusion and has a lower area cost.

V Conclusion

In this paper, we present an area-efficient hardware accelerator for real-time superresolution applications. It can upscale 640x360 input LR images to 1920x1080 (FHD) HR images at 60 fps. The trade-off between the required memory bandwidth and on-chip memory is resolved by the proposed tilted layer fusion. Compared to previous designs, this design is capable of finishing 60 fps FHD image generation with only 102.36 KB on-chip SRAM while requiring external memory bandwidth is as small as DDR2 transfer rate. The architecture adopts regular vectorwise data flow and diagonalized PE structure to reduce the control overhead. Thus, the presented accelerator can operate at 600 MHz clock frequency and only occupy 3.11 area.

References

- [1] C. Dong, C. C. Loy, K. He, and X. Tang, “Image super-resolution using deep convolutional networks,” IEEE transactions on pattern analysis and machine intelligence, vol. 38, no. 2, pp. 295–307, 2015.

- [2] J. Kim, J. K. Lee, and K. M. Lee, “Accurate image super-resolution using very deep convolutional networks,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2016, pp. 1646–1654.

- [3] B. Lim, S. Son, H. Kim, S. Nah, and K. Mu Lee, “Enhanced deep residual networks for single image super-resolution,” in Proceedings of the IEEE conference on computer vision and pattern recognition workshops, 2017, pp. 136–144.

- [4] T. Tong, G. Li, X. Liu, and Q. Gao, “Image super-resolution using dense skip connections,” in Proceedings of the IEEE international conference on computer vision, 2017, pp. 4799–4807.

- [5] Y. Zhang, K. Li, K. Li, L. Wang, B. Zhong, and Y. Fu, “Image super-resolution using very deep residual channel attention networks,” in Proceedings of the European conference on computer vision (ECCV), 2018, pp. 286–301.

- [6] M. Xiao, S. Zheng, C. Liu, Y. Wang, D. He, G. Ke, J. Bian, Z. Lin, and T.-Y. Liu, “Invertible image rescaling,” in European Conference on Computer Vision. Springer, 2020, pp. 126–144.

- [7] Z. Du, J. Liu, J. Tang, and G. Wu, “Anchor-based plain net for mobile image super-resolution,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021, pp. 2494–2502.

- [8] C. Dong, C. C. Loy, and X. Tang, “Accelerating the super-resolution convolutional neural network,” in European conference on computer vision. Springer, 2016, pp. 391–407.

- [9] N. Ahn, B. Kang, and K.-A. Sohn, “Fast, accurate, and lightweight super-resolution with cascading residual network,” in Proceedings of the European Conference on Computer Vision (ECCV), 2018, pp. 252–268.

- [10] Z. Hui, X. Gao, Y. Yang, and X. Wang, “Lightweight image super-resolution with information multi-distillation network,” in Proceedings of the 27th ACM International Conference on Multimedia, 2019, pp. 2024–2032.

- [11] Y. Kim, J.-S. Choi, and M. Kim, “A real-time convolutional neural network for super-resolution on FPGA with applications to 4k UHD 60 fps video services,” IEEE Transactions on Circuits and Systems for Video Technology, vol. 29, no. 8, pp. 2521–2534, 2018.

- [12] P.-W. Yen, Y.-S. Lin, C.-Y. Chang, and S.-Y. Chien, “Real-time super resolution CNN accelerator with constant kernel size Winograd convolution,” in 2020 2nd IEEE International Conference on Artificial Intelligence Circuits and Systems (AICAS). IEEE, 2020, pp. 193–197.

- [13] J. Lee, J. Lee, and H.-J. Yoo, “SRNPU: An energy-efficient CNN-based super-resolution processor with tile-based selective super-resolution in mobile devices,” IEEE Journal on Emerging and Selected Topics in Circuits and Systems, vol. 10, no. 3, pp. 320–334, 2020.

- [14] M. Alwani, H. Chen, M. Ferdman, and P. Milder, “Fused-layer CNN accelerators,” in 2016 49th Annual IEEE/ACM International Symposium on Microarchitecture (MICRO). IEEE, 2016, pp. 1–12.

- [15] G. Li, Z. Liu, F. Li, and J. Cheng, “Block convolution: towards memory-efficient inference of large-scale CNNs on FPGA,” IEEE Transactions on Computer-Aided Design of Integrated Circuits and Systems, 2021.

- [16] J.-W. Chang, K.-W. Kang, and S.-J. Kang, “An energy-efficient FPGA-based deconvolutional neural networks accelerator for single image super-resolution,” IEEE Transactions on Circuits and Systems for Video Technology, vol. 30, no. 1, pp. 281–295, 2018.