A real-time multi-constraints obstacle avoidance method using LiDAR

Abstract

Obstacle avoidance is one of the essential and indispensable functions for autonomous mobile robots. Most of the existing solutions are typically based on single condition constraint and cannot incorporate sensor data in a real-time manner, which often fail to respond to unexpected moving obstacles in dynamic unknown environments. In this paper, a novel real-time multi-constraints obstacle avoidance method using Light Detection and Ranging(LiDAR) is proposed, which is able to, based on the latest estimation of the robot pose and environment, find the sub-goal defined by a multi-constraints function within the explored region and plan a corresponding optimal trajectory at each time step iteratively, so that the robot approaches the goal over time. Meanwhile, at each time step, the improved Ant Colony Optimization(ACO) algorithm is also used to re-plan optimal paths from the latest robot pose to the latest defined sub-goal position. While ensuring convergence, planning in this method is done by repeated local optimizations, so that the latest sensor data from LiDAR and derived environment information can be fully utilized at each step until the robot reaches the desired position. This method facilitates real-time performance, also has little requirement on memory space or computational power due to its nature, thus our method has huge potentials to benefit small low-cost autonomous platforms. The method is evaluated against several existing technologies in both simulation and real-world experiments.

keywords:

real-time obstacle avoidance\sepLiDAR\seponline path planning\sepmulti-constraints\sepmobile robot, and

1 Introduction

In recent years, the autonomous mobile robot technique has made considerable progress and keeps attracting more attention from engineers and researchers around the world. The characteristics of robots, like cost, size, flexibility, safety, etc., drive them to be increasingly popular on various applications from different fields, such as military reconnaissance, surveillance, transportation, traffic monitoring[1, 2].

One of the major challenges robots having is to avoid obstacles and perform path planning in dynamic environments. Robots should be able to perceive the surrounding environment, be prepared for potential threats, identify new obstacles in the scene, and modify or re-plan trajectory with the latest knowledge, ideally also achieving optimality in some measurable sense at a low cost on memory space and computation.

Generally, path planning can be divided into two categories; one is global path planning that generates an optimal off-line path with prior knowledge of the environment. Even if the environment is pre-mapped, this type of path planning algorithms still suffers from unexpected obstacles that are moving or simply weren’t mapped. The other type does not require the environment to be pre-mapped, but rather, assuming a dynamic environment, work with real-time sensor data to perform online planning locally[3]. For this type of planning, characteristics of sensor data should be taken into consideration during the system design stage to best utilize the information available.

Therefore, the challenges for robot obstacle avoidance algorithms are: (1) insufficient environment information in dynamic environments; (2) sensors’ information is often not effectively utilized at each local optimization.

A novel multi-constraints autonomous obstacle avoidance method using LiDAR is proposed in this paper, which enables robots equipped with LiDAR to avoid obstacles autonomously in dynamic situations at a lower cost. It establishes a customized cost function with multi-constraints to analyze effectively the real-time scanning data of the LiDAR, and then extract an optimal sub-goal within the scanning area. Meanwhile, the improved ACO is used to quickly re-plan an optimal sub-path from the current robot position to the sub-goal position. The said process is done iteratively at each step until the pre-specified goal is reached. In our system set up, single-beam 2-dimensional LiDAR, instead of multi-beam 3-dimensional LiDAR, is used because the performance of the former is sufficient for detecting the surroundings and identify obstacles, and it has lower cost and lower requirements on computation and storage. Both simulation and experiments are conducted, to validate our method and demonstrate its effectiveness and availability.

The rest of the paper is organized as follows. Section 2 reviews some important research results related to obstacle avoidance algorithms. Section 3 presents the proposed algorithm. Section 4 demonstrates the simulation and experiment results. Section 5 concludes the paper.

2 Related work

Several research studies focus on obstacle avoidance for robot path planning[4], the methods for solving path planning are as follows: probabilistic, heuristic, and meta-heuristic methods. Probabilistic mainly consists of Rapidly-exploring Random Trees(RRT)[5], Probabilistic Roadmaps (PRM)[6]. In the heuristic and meta-heuristic approaches are the Artificial Neural Networks(ANN)[7], Genetic Algorithms(GA)[8], Simulated Annealing[9], ACO[10], Bacterial Foraging Optimization(BFO)[11]. Each of the methods presented above has its strengths and weaknesses. In many situations, some of them are combined to derive the desired path planner in the most effective mode[12, 13].

The Artificial Potential Field(APF) method was a sophisticated and efficient obstacle avoidance method, first proposed by Oussama Khatib[14] and applied it to obstacle avoidance[11, 15, 16], while it is extremely easy to fall into the local minima. Bence Kovács[17] presented a method from animal motion attributes based on APF for robot path planning, and the Bug algorithm was used to solve the local minima problem of APF. However, it has not solved the disadvantages that the Bug algorithm is incapable of exploiting the sensor’s data and still cannot ensure that the path is optimal. Dieter Fox proposed a Dynamic Window Approach(DWA) in[18, 19], which took into account the inertia factor of the robot and is suitable for robots with high speed and robots with limited motor torque; but, it cannot be used in unstructured dynamic environments. In[11] BFO algorithm produced impressive simulation results for obstacle avoidance on a mobile robot path planning, this method requires many samples for estimation, the more samples, the greater the memory consumption, the higher the complexity of the algorithm[20]; conversely, the fewer the particles, the worse the path smoothness. Reference[21] proposed an obstacle avoidance bubble bug algorithm (BBA), it defined a bubble around the robot, the radius of the bubble indicates the range of the sensor, the strategy was to limit the robot to the maximum distance from the obstacle all the time. Some scholars are working to solve the problem of robot navigation and obstacle avoidance by Simultaneous Localization and Mapping (SLAM)[22, 23] based on LiDAR or vision[24, 25, 26, 27].

In contrast to the mentioned approaches, this paper focuses on building a multi-constraints mathematical model to best utilize the real-time scanning information of LiDAR in a dynamic environment. As a result, the robot is able to, in a real-time manner, computes the optimal path to avoid unexpected threats and uncertain obstacles at a lower cost.

3 Proposed method

In this section, the cost function with multi-constraints is introduced to analyze the local known information, including the current position and orientation (together, the pose) of the robot, the LiDAR scanning area (obstacle areas and collision-free space), and the goal position.

3.1 Environment model

LiDAR is mounted on the top of the robot and used to detect environment information in a 2D scanning plane, with a maximum scanning range. Note that the 2D LiDAR can only scan one plane. In practice, if the height of the obstacle is less than the height of the LiDAR, it cannot be detected. Therefore, the installation height of LiDAR needs to weigh the actual application environment and should not be fixed. At each scanning period, LiDAR gives 360-degree surrounding scanning and generates data points, also called lase-point cloud. Scanning data can be expressed as , where the and respectively denotes the relative distance and orientation centered on the robot, with the LiDAR scanned objects for at the time . As shown in figure 1, the original data of the LiDAR in polar coordinates is shown, where the green dots indicate the obstacles, represents the LiDAR scanning radius, and the white triangle denotes the robot.

Within the scanning area, every object can be simplified as multiple points. If there are obstacles, a series of point sets are generated to represent obstacles; otherwise, there are no data points. It is not convenient to use the original LiDAR data directly to represent surroundings because of the data points are discrete. A single point does not indicate an obstacle, but clusters the data points. There have been several studies focusing on clustering methods for point cloud data. In this paper, the scanning area is equally divided into sectors, and each sector is given a unique number . Generally, that is meaningful to equally divide the scanning area, the smaller the sector division, the more flexible the path planning, while the amount of calculation is increased. All data points will be distributed in the sector corresponding to the within the scanning area. In the way, only need to pay attention to which sectors are distributed with data points; the remaining sectors belong to collision-free space. A local grid map is constructed within the LiDAR scanning area, which is used to analyze the current environment.

The scanning area is further divided into small grid cells that can accurately locate the obstacle in the specific grid units. Because roughly creating a grid map in a circular scanning area of radius may produce some incomplete grid units, making it impossible to assign a corresponding coordinate position to some grid units. In order to solve the problem that the incomplete grid cells cannot be assigned coordinates, in this paper, the largest square space in a circular scanning area is used to replace the LiDAR scanning area, and then the square is divided into several grid cells. So each clustered data points can be allocated in a specific grid.

In this paper, the robot moves on the horizontal ground and obtains real-time pose information by reading the odometry. Its position and orientation can be simplified as in the 2D space at time , and indicates the yaw angle. In fact, given the error of the odometry, the application environment is limited to small indoor scenes. It can be easily calculated that grid units position based on the at time .

| (1) |

In the grid map, an expansion coefficient is set for each obstacle, and if a grid unit is occupied by an obstacle, its adjacent eight grid units are not included in the collision-free space. However, how to logically re-plan an optimal sub-path among these collision-free grids to achieve the avoidance obstacles at a lower cost is the problem to be solved in this paper.

3.2 The cost function

The robot can re-plan an optimal path online toward sub-goal and successfully avoid unexpected obstacles or threats at a lower cost. As a sub-goal selected at a specific moment, it employs the key criterion for convergence to the goal position. On the other hand, the sub-goal is locally optimal and globally convergent, because the cost function based on multi-constraints is capable of analyzing thoroughly the detection information of the LiDAR. Concerning known local environment information, each grid cell in collision-free space is estimated by the cost function, the result of the cost function denotes the cost of the corresponding grid cell as a sub-goal, the smaller the value, the smaller the cost.

The significance of the customized cost function consisting of and is to comprehensively evaluate the real-time information, including the current pose information of the robot, the LiDAR scanning area, and the goal position, to generate the sub-goal. is the goal position information, denotes the robot pose information at the time and indicates the position information of the - grid unit in collision-free space at the time .

is used to calculate the euclidean distance between and at time , an essential contribution is to ensure the global convergence of sub-goal. In the specific implementation, the scanning information is maximized to achieve the local optimal obstacle avoidance result. Only the most marginal grid units in the collision-free space are selected, which can promote the processing efficiency. The higher the distance to the obstacle, the more flexible the path to avoid the obstacle, which means a more substantial area around the robots are unobstructed.

returns the azimuth angle from to at time , which is angle with the yaw of robots. As one of the constraint factors, embodies the angle deviation relationship between the robot and the grid cells in collision-free space.

generates the azimuth angle from to at time , which is used to indicate the magnitude of deviation of the selected grid unit from the goal position. The additional contribution of constraints factors and are to ensure the smoothness of the path.

The proposed cost function is defined as Eq.(2):

| (2) |

and is the weight coefficient respectively.

The above three constraints are not directly added after calculation, but are normalized first, and then added. Since the cost function is composed of multiple constraints, and different constraints represent information in different dimensions, such as distance and different angle. To prevent a certain constraint from being too dominant in the cost function,and the normalized method is used to smooth the constraint in this paper.

| (3) |

Since the latest local known information is different each time, the number of available grids is different each time.

Finally, with the appropriate weight parameters, the normalized constraints are introduced into the cost function to estimate the cost for each available grid cell and choose the minimum cost.

| (4) |

Based on the latest local known information each time, the grid cell corresponding to the minimum cost value is selected as the sub-goal , which satisfies the optimization criterion such as distance, time, and cost in a limited scanning area, so the robot is capable of immediately responding to any moving obstacles within the scanning area of LiDAR. In the grid map of the scanning area, the path of the robot is connected by multiple grid cells. Although the robot is capable of moving in any direction in a 2D plane, the motion model of the robot is further defined in this paper, assuming that the robot is only allowed to move along the front, rear, left, right, and diagonal lines between adjacent grids. The resolution of the grid cell is small enough not to affect the smoothness of the path. Therefore, how to quickly re-plan a sub-path from the current robot position to the sub-goal position is urgently needed. Generally, a straight motion is considered as the primary solution because straight-line motion usually indicates that the path is the shortest, while the shortest path is not necessarily the optimal path in many practical problems.

3.3 Improved ACO algorithm

The improved ACO, in this paper, is used to plan optimal sub-path from the latest robot position to the latest defined sub-goal, The robot moves one step along the sub-path, then sets the current position as the start point, extracts the local sub-goal position based on the latest local known information, and calls ACO to re-plan the sub-path to avoid moving obstacles until it reaches the goal position. The flowchart of the proposed method is shown in figure .

Note that the next sub-goal position is calculated if and only if the robot reaches the sub-goal point, which will cause a delay in updating the environmental information, and the robot will not be able to respond to moving obstacles before reaching the sub-goal position.

The ACO algorithm has the characteristics of global optimization, parallelism, and strong robustness, and has been widely used in offline path planning problems. In this paper, the ACO algorithm is used to online re-plan the optimal sub-path of the robot from the current position to the sub-goal position in the grid map of the scanning area. The fundamental definition of the ACO algorithm is typically given by Eq.(5):

| (5) |

where the is the transition probability of ant moving from to at the time denotes the pheromone from to at the time ; is the relative importance of pheromone, is the relative importance of heuristic factor, is a collection of positions that allows the ant to walk next; and represent the heuristic factor from to at the time ,

| (6) |

is the distance from to .

After all ants complete once iteration, the pheromone update method is as follows:

| (7) |

| (8) |

| (9) |

where the is the rate of pheromone evaporation, and is the residual pheromone factor; is the amount of deposited pheromone from to at time , and is the amount of deposited pheromone from to by ant at time ; is positive constant; and are the set of ants colony and the number of iterations, respectively; indicates the path length by ant in this iteration.

Based on the traditional ACO algorithm, to enhance the stability of robot movement, corner heuristic information is introduced into the path transition probability function to reduce the number of large corners and the number of corners in the path. The improved path transition probability function is as follows:

| (10) |

is the corner heuristic function from to at time t. and are inversely proportional.

| (11) |

| (12) |

In the pheromone update phase, on the one hand, the traditional ACO algorithm updates the pheromone of all ants, including the worst ants, which will be misleading for the next generation. On the other hand, only the length of the path is considered, as shown in Eq. (7), (8) and (9). To coordinate the smoothness of the path and the convergence speed of our method. each ant gets a score based on its path length and number of corners, and ranks the score in descending order. The improved pheromone update rule is that the pheromone increment is determined by weighting the path length and the number of path corners, which can ensure the smoothness of the sub-path. At the same time, only some good ants with higher scores are updated to avoid the impact of the worst ants on the offspring ants, thereby improving the convergence speed of the algorithm.

| (13) |

| (14) |

is the weighted score of the path length and number of corners of ant , is the sort function to sort from large to small, and are the positive weight coefficients, indicates the number of corners of the path generated by ant . is the ant order after reordering

The implementation steps of the improved ACO method are as follows:

Step 1: Algorithm initialization, and are initialized to the ant colony start position and sub-goal position respectively, and the search space is the known grid map of the scanning area.

Step 2: Calculate the path transition probability of each ant according to the and choose the next feasible position according to the roulette rule. If is the sub-goal point, the step 3 is performed; otherwise, still, execute the step 2.

Step 3: After all ants have completed an iteration, records the path trajectory generated by ant in the - iteration. Find the ant in that does not reach the sub-goal point as the set . Randomly select an ant to re-modify its path trajectory in this iteration.

| (15) |

| (16) |

is the optimal path before the - iteration. When set is valid, randomly select one ant in and assign to it ; if all ants reach the target point, randomly select anyone to assign.

Step 4: According to the to calculate the score of each ant, and then update the pheromone of the selected ant according to the .

Step 5: Determine whether the number of iterations reaches the maximum number of iterations . If it does, the step 6 is performed. Otherwise, steps 2 to 5 are executed cyclically.

Step 6: The optimal sub-path described by , represents the position of the grid cell corresponding to , is the position of the grid cell corresponding to sub-goal .

| (17) |

adding to the desired path, the robot moves one step along the sub-path to the next position . Repeat the steps 1 to 6 until the robot reaches the goal position.

The convergence speed of improved ACO algorithm is shown in figure . The black solid line shows the convergence speed of the improved ACO algorithm. The concept of corners is involved in both the transition probability phase and the pheromone update phase to build up the smoothness of the path. Update only some good ants to avoid the impact of the worst ants on the offspring ants. It can be seen that the improved ACO has faster convergence speed. At the same time, it should be pointed out that it is not advisable to assign multiple ants in once iteration, which will increase the probability that the algorithm falls into the local minima.

4 Experimental results

4.1 Simulation results

Before the real experiment of the robot, a simulation of the algorithm is implemented in MATLAB to compare the effect of different parameters on the results. In order to simulate the actual environment more realistically, an obstacle map is loaded by a grid map in the simulation program, which corresponds to a 40m square real area. Black areas indicate the collision-free area, and the white grids denote the obstacles, which also called occupied units. In the simulation of this paper, each grid cell in the grid map corresponds to a 1.5m square area. Eight grid cells adjacent to the occupied unit are excluded from collision-free space as a safe distance.

4.1.1 Simulation 1 - environment test

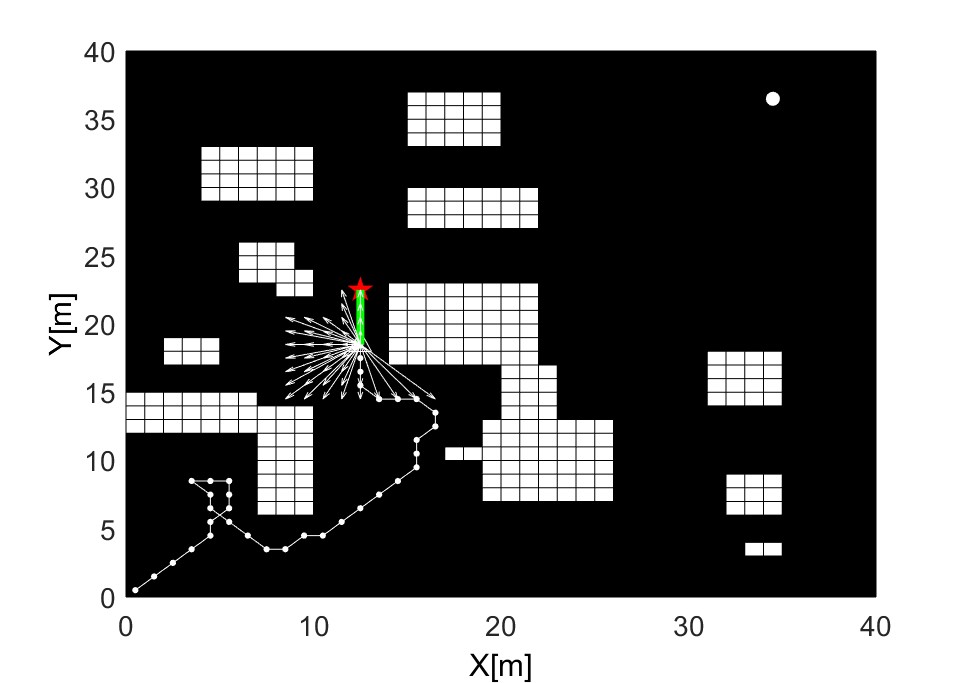

In order to demonstrate the availability and optimality of the proposed algorithm under multiple obstacles situation, figure 4 shows the simulation results of the proposed algorithm in more detail in the form of multiple consecutive instants.

In real application, the robot only knows the information within the LiDAR detection range at a certain moment. The detection radius of the LiDAR is four grid cells centered on the robot, which includes a total of 80 grid cells adjacent to each other. The simulation program window shows that the white vector arrows denote the LiDAR scanning area, and the solid white circle is the goal position. The red pentagram marks the sub-goal position calculated by the cost function proposed in this paper at a specific moment, and the solid green line is the corresponding sub-path that re-planed by the improved ACO algorithm in real-time. Figure 5 shows that the robot adjusts the pose in real-time during the movement to meet the current state based on the latest local information.

Figure 5(a) shows the paths generated by different algorithms, including the mainstream APF and BFO obstacle avoidance algorithms. By comparing the three paths, it is possible to see that the improved obstacle avoidance algorithm generates a solution with fewer fluctuations and shorter lengths.

For the given scenario, by computing the real-time distance between the robot and the goal position to compare the convergence characteristics of the three algorithms, as shown in figure 5(b). Considering path length, smoothness, and convergence properties. It can be concluded that the path generated by the proposed algorithm is not only globally convergent but also locally optimal.

4.1.2 Simulation 2 - tune parameters on the evaluation results

The cost function and the improved ACO algorithm both contain different weight parameters as described in section 3 in this paper. In this part, the effects of different azimuth factors and smoothing factor on robot real-time path are mainly discussed. The distance factor ensures that the sub-goal is globally convergent, while the azimuth factors are responsible for the smoothness of the global path. To compares the effects of different weight parameters on the simulation results. Three sets of parameters are selected for simulation comparison. Finally, a set of optimal weight parameters is determined. Table 1 lists three different sets of parameters.

| groups | Parameters | ||||

|---|---|---|---|---|---|

| First group | 4 | 1.8 | 1 | 1 | 0 |

| Second group | 4 | 1.8 | 1 | 0.7 | 0.3 |

| Third group | 4 | 2 | 3 | 0.7 | 0.3 |

As shown in figure 6, the simulation program generates paths based on three different groups of parameters. It should be noted that this path is only one of the results of the simulation program that runs multiple times. Figure 6 shows that different parameters have a significant influence on the path length and number of turns.

The differences between the first group and the second group of parameters are that the first group does not take into account the influence of the number of turns in the sub-path planning. While, the second group of parameters incorporates the turning factor, with the appropriate parameters, the result is smoother than the other path. In the third group, the azimuth factors are used to increase the smoothness of the path, but if its weight is too large, it will affect the convergence of the algorithm, as shown in figure 6.

In order to quantitatively analyze the different paths generated by the simulation, 10 simulations were performed for each set of parameters. Table 2 lists the path length and the number of turns of the global path generated by the simulation using different parameters, respectively. The simulation results consist of the best, worst, and average value.

| Results | Group 1 | Group 2 | Group 3 | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

|

corners |

|

corners |

|

corners | |||||||

| Best | 78.84 | 28 | 73.8 | 22 | 85.14 | 29 | ||||||

| Worst | 88.68 | 35 | 79.25 | 27 | 98.91 | 51 | ||||||

| Average | 81.2 | 35 | 75.8 | 25 | 89.84 | 41 | ||||||

With appropriate weight parameters, the simulation results have demonstrated that the proposed algorithm is capable of avoiding obstacles successfully and generating paths with a shorter length and few fluctuations.

4.2 Robot experiments

Refer to the above simulation results, the robot experiment platform of this paper is shown in figure 7(a). The proposed algorithm needs real-time information and process data online, including robot state, real-time environment information. So a LiDAR and an onboard computer installed on the EAI robot. EAI is a differential drive mobile robot with a maximum speed of 0.8 m/s and the drive wheel diameter of 125 mm, and this differential drive allows the implementation and measurements of path-planning algorithms. The architecture of the robot platform is illustrated in detail in figure 7(b). According to the actual experiment environment, the installation height of the LiDAR in two experiments is 0.6m. Also, the LiDAR scans the environmental information around the robot in real-time at 5Hz and publishes it. The onboard computer runs complex algorithms at high frequency, including LiDAR data collection and processing, obstacle analysis, and online path planning.

In order to highlight the accuracy and practicality of the proposed algorithm to solve unexpected threats or uncertain obstacles, the first experiment scene was set in a real narrow corridor of 2.1m wide with static obstacles consisting of multiple cubes of . EAI robot radius is 0.203m and a safe distance of 0.2m from the obstacle. Further, the detection distance of the LiDAR is set to 1.2m to reduce the response time of the robot in scene 1. Scenario 2 is selected to verify the availability of the proposed algorithm in an unstructured, dynamic environment. Two real experimental scenarios with different effects are shown in figure 8.

The onboard computer records the trajectory data of the robot, the real experiment environment is displayed in a certain proportion in MATLAB, and the saved robot trajectory is drawn at the same time. The complex U-shaped obstacles and L-shaped obstacles are used to verify the robustness and practicality of the proposed algorithm in figure 8(a), the closest distance between obstacles is about 0.9m, and the maximum width is 1.2m. Due to the extremely narrow corridor, the robot speed is 0.2m/s. In fact, the robot analyzes the LiDAR data in real-time to make the best decision in line with the current state. Similarly, the implementation of the algorithm is presented in the form of multiple consecutive instants in figure 9.

In the real experiment, a camera is mounted on the top of the robot and records the motion of the robot in video form. The robot successfully avoids the obstacle and reaches the goal position with minimal cost and smooth route, as shown in figure 10. However, the other methods fail to work well with LiDAR data, and the path length is long and not smooth enough.

In order to analyze the experiment results quantitatively, table 3 lists the results of each algorithm running five times at a speed of 0.2m/s. The recorded video can be downloaded from the link in Appendix A.

| Algorithm |

|

|

|

||||||

|---|---|---|---|---|---|---|---|---|---|

| Proposed method | 15.5 | 16.2 | 81 | ||||||

|

16 | 17.06 | 85.3 | ||||||

| APF | 18.2 | 18.5 | 92.5 | ||||||

| BFO | 16.6 | 17.6 | 88 |

It can be seen from table 3 that the method proposed in this paper takes less time, which means that the convergence speed is faster. Also, the smooth characteristics of the path have been compared in Table 2. Thus, considering time, path length, smoothness, and convergence properties, that the path generated by the proposed algorithm is not only globally convergent but also locally optimal.

As shown in figure 8(b), experiment 2 aims to verify the feasibility and robustness of the proposed algorithm under an unstructured, dynamic scenario with sudden external disturbances. In real experiments, the robot moves at a speed of 0.4 m/s and 0.6 m/s without external interference. Then, when the robot moves again at a speed of 0.6 m/s, a plurality of unexpected moving obstacles appears around the robot to hinder its movement. The trajectory of the robot is converted to convergence to illustrate the robustness of the algorithm, as shown in figure 11. At the same time, the corresponding video can be downloaded from the link in Appendix B.

In Figure 11, in the absence of external disturbance, the convergence curve is relatively smooth, corresponding to the trajectory generated by the robot moving at a speed of 0.4 m/s and 0.6 m/s, respectively. For external disturbances, although there is jitter in the convergence curve due to sudden external disturbances, the jitter is minimal, which further demonstrate that our method can effectively deal with dynamic obstacles under an unstructured, changing scenario.

5 Conclusions

In this paper, a new multi-constraints obstacle avoidance method using LiDAR was proposed. The main contributions of this method are its cost function for optimization is able to utilize the real-time LiDAR scanning data and the latest state estimation of the robot more comprehensively in dynamic environments. Compared with the traditional method, the advantage is that since the sub-goals and sub-paths are only related to the local regions, the optimal path is achieved at a lower cost in terms of computation and storage, thereby avoiding the construction of a complex global environment model and reducing the complexity.

The real experiments and simulation results also very strongly validated the feasibility and advantages of our method in contrast to other state-of-art solutions for this problem.

The future work related to this proposed method include incorporating more dynamic characteristics of obstacles, finding better ways to fine-tune parameters of the cost function, testing the method to a even more complex environment, and implementing the method on a drone to extend its performance to 3D space. Another potentially interesting research direction is to include the "unseen" area of environments into optimization cost function, by extracting features of the point cloud to better describe the obstacles and then predicting potential threats to the planned path of the robot, based on features of the obstacles.

6 Appendix A

Supplementary video material for experiment 1 related to this article can be found online at https://youtu.be/8hr3ltbr11I

7 Appendix B

moving obstacles video for experiment 2 related to this article can be found online at https://youtu.be/MFt9mqylN8w

References

- [1] Van Brummelen J, O’Brien M, Gruyer D, et al. Autonomous vehicle perception: The technology of today and tomorrow[J]. Transportation research part C: emerging technologies, 2018, 89: 384-406.

- [2] Lu D L. Vision-enhanced lidar odometry and mapping[D]. Carnegie Mellon University Pittsburgh, PA, 2016.

- [3] Montiel O, Orozco-Rosas U, Sepúlveda R. Path planning for mobile robots using Bacterial Potential Field for avoiding static and dynamic obstacles[J]. Expert Systems with Applications, 2015, 42(12): 5177-5191.

- [4] Tajti F, Szayer G, Kovács B, et al. Mobile robot performance analysis for indoor robotics[J]. Periodica Polytechnica Civil Engineering, 2015, 59(2): 123-131.

- [5] Karaman S, Frazzoli E. Sampling-based algorithms for optimal motion planning[J]. The international journal of robotics research, 2011, 30(7): 846-894.

- [6] Ramachandran R K, Wilson S, Berman S. A probabilistic approach to automated construction of topological maps using a stochastic robotic swarm[J]. IEEE Robotics and Automation Letters, 2017, 2(2): 616-623.

- [7] Mac T T, Copot C, Tran D T, et al. Heuristic approaches in robot path planning: A survey[J]. Robotics and Autonomous Systems, 2016, 86: 13-28.

- [8] Senniappan Karuppusamy N, Kang B Y. Minimizing airtime by optimizing tool path in computer numerical control machine tools with application of A* and genetic algorithms[J]. Advances in Mechanical Engineering, 2017, 9(12): 1687814017737448.

- [9] Zhang K, Collins E G, Barbu A. An efficient stochastic clustering auction for heterogeneous robotic collaborative teams[J]. Journal of Intelligent & Robotic Systems, 2013, 72(3-4): 541-558.

- [10] Liu J, Yang J, Liu H, et al. An improved ant colony algorithm for robot path planning[J]. Soft Computing, 2017, 21(19): 5829-5839.

- [11] Hossain M A, Ferdous I. Autonomous robot path planning in dynamic environment using a new optimization technique inspired by bacterial foraging technique[J]. Robotics and Autonomous Systems, 2015, 64: 137-141.

- [12] Zhou C, Li F, Cao W, et al. Design and implementation of a novel obstacle avoidance scheme based on combination of CNN-based deep learning method and liDAR-based image processing approach[J]. Journal of Intelligent & Fuzzy Systems, 2018, 35(2): 1695-1705.

- [13] Mei Y. Study on the application and improvement of ant colony algorithm in terminal tour route planning under Android platform[J]. Journal of Intelligent & Fuzzy Systems, 2018 (Preprint): 1-8.

- [14] Khatib O. Real-time obstacle avoidance for manipulators and mobile robots[M]//Autonomous robot vehicles. Springer, New York, NY, 1986: 396-404.

- [15] Zhang T, Zhu Y, Song J. Real-time motion planning for mobile robots by means of artificial potential field method in unknown environment[J]. Industrial Robot: An International Journal, 2010, 37(4): 384-400.

- [16] Mac T T, Copot C, De Keyser R, et al. The development of an autonomous navigation system with optimal control of an UAV in partly unknown indoor environment[J]. Mechatronics, 2018, 49: 187-196.

- [17] Kovács B, Szayer G, Tajti F, et al. A novel potential field method for path planning of mobile robots by adapting animal motion attributes[J]. Robotics and Autonomous Systems, 2016, 82: 24-34.

- [18] Fox D, Burgard W, Thrun S. The dynamic window approach to collision avoidance[J]. IEEE Robotics & Automation Magazine, 1997, 4(1): 23-33.

- [19] Özdemir A, Sezer V. Follow the Gap with Dynamic Window Approach[J]. International Journal of Semantic Computing, 2018, 12(01): 43-57.

- [20] Mohajer B, Kiani K, Samiei E, et al. A new online random particles optimization algorithm for mobile robot path planning in dynamic environments[J]. Mathematical Problems in Engineering, 2013, 2013.

- [21] Zohaib M, Iqbal J, Pasha S M. A NOVEL GOAL-ORIENTED STRATEGY FOR MOBILE ROBOT NAVIGATION WITHOUT SUB-GOALS CONSTRAINT[J]. REVUE ROUMAINE DES SCIENCES TECHNIQUES-SERIE ELECTROTECHNIQUE ET ENERGETIQUE, 2018, 63(1): 106-111.

- [22] Durrant-Whyte H, Bailey T. Simultaneous localization and mapping: part I[J]. IEEE robotics & automation magazine, 2006, 13(2): 99-110.

- [23] Bailey T, Durrant-Whyte H. Simultaneous localization and mapping (SLAM): Part II[J]. IEEE robotics & automation magazine, 2006, 13(3): 108-117.

- [24] Hess W, Kohler D, Rapp H, et al. Real-time loop closure in 2D LIDAR SLAM[C]//2016 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2016: 1271-1278.

- [25] Harik E H, Korsaeth A. Combining Hector SLAM and Artificial Potential Field for Autonomous Navigation Inside a Greenhouse[J]. Robotics, 2018, 7(2): 22.

- [26] Lowe T, Kim S, Cox M. Complementary perception for handheld slam[J]. IEEE Robotics and Automation Letters, 2018, 3(2): 1104-1111.

- [27] Weng J F, Su K L. Development of a SLAM based automated guided vehicle[J]. Journal of Intelligent & Fuzzy Systems, 2019 (Preprint): 1-13.