222 \jmlryear2023 \jmlrworkshopACML 2023

A Pragmatic Look at Deep Imitation Learning

Abstract

The introduction of the generative adversarial imitation learning (GAIL) algorithm has spurred the development of scalable imitation learning approaches using deep neural networks. Many of the algorithms that followed used a similar procedure, combining on-policy actor-critic algorithms with inverse reinforcement learning. More recently there have been an even larger breadth of approaches, most of which use off-policy algorithms. However, with the breadth of algorithms, everything from datasets to base reinforcement learning algorithms to evaluation settings can vary, making it difficult to fairly compare them. In this work we re-implement 6 different IL algorithms, updating 3 of them to be off-policy, base them on a common off-policy algorithm (SAC), and evaluate them on a widely-used expert trajectory dataset (D4RL) for the most common benchmark (MuJoCo). After giving all algorithms the same hyperparameter optimisation budget, we compare their results for a range of expert trajectories. In summary, GAIL, with all of its improvements, consistently performs well across a range of sample sizes, AdRIL is a simple contender that performs well with one important hyperparameter to tune, and behavioural cloning remains a strong baseline when data is more plentiful.

keywords:

imitation learning; inverse reinforcement learning; benchmarking1 Introduction

Several years ago, Henderson et al. (2018) formally brought attention to the reproducibility crisis in deep reinforcement learning (DRL). Some solutions have been to settle on evaluation protocols for common benchmarks (Machado et al., 2018), improving the statistical tools with which we evaluate results (Agarwal et al., 2021), or simply just providing reliable algorithm implementations (Raffin et al., 2021). Still, sometimes it is necessary to take a step back and evaluate the (claimed) progress within different areas of machine learning (ML; Oliver et al., 2018; Musgrave et al., 2020). In on-policy RL it has already been observed that implementation details can matter more than the algorithmic contributions of novel algorithms (Engstrom et al., 2020; Andrychowicz et al., 2021), and so in turn we have opted to take a pragmatic look at (deep) imitation learning (IL), creating a single, open source codebase to fairly compare algorithms.111https://github.com/Kaixhin/imitation-learning

IL is the branch of ML that is concerned with learning from “demonstration” data (Hussein et al., 2017). In other words, there exists agents, acting in environments, from which we collect data, in order to train our own agent. IL is intimately linked with RL—learning to act optimally in a given environment—and hence is often analysed with respect to concepts that are foundational to RL, such as states, actions, policies, reward functions, value functions, etc. (Sutton and Barto, 2018). Indeed, one of the most prominent approaches to IL is a technique known as inverse reinforcement learning (IRL; Arora and Doshi, 2021).

In the same way that deep learning (DL) has enabled RL to scale to high-dimensional state and action spaces (Arulkumaran et al., 2017), DL has also enabled IL to be applied to more complex domains. The most famous of these algorithms is generative adversarial IL (GAIL; Ho and Ermon, 2016), which uses the generative adversarial framework (Goodfellow et al., 2014) to learn a reward function which can then be used with IRL. In their work, they show strong results against a range of baselines, including the simplest IL method, behavioural cloning (BC; Pomerleau, 1988). Although BC underperformed GAIL, they noted that it “was able to reach satisfactory performance with enough data”—a common observation. However, to “generate the datasets, … [they] subsampled the expert trajectories”. While this reduces the number of samples available, it is not the same as providing fewer trajectories without subsampling, as the former tends to result in better coverage of the state space. Pragmatically, collecting trajectory data is a bottleneck for IL, so subsampling should be avoided—and in this case BC’s performance improves. IL results where trajectory data has been subsampled is not directly comparable to results where no subsampling has occurred, and unsurprisingly practitioners may miss critical details revealed in an appendix.222Never mind implementation details not revealed within a paper.

Another major issue in the reproducibility of IL algorithms is encountered in the GAIL paper—they generated their own expert trajectories using an RL agent. Out of the works that we review herein, nearly all generate their own expert data. And naturally, as time passes, the RL algorithms used within IL algorithms get replaced with more performant versions. One of the most significant of these changes is the move from on-policy RL algorithms, as used within the original work on GAIL, to more data-efficient off-policy algorithms (Kostrikov et al., 2019; Blondé and Kalousis, 2019). Do some of the on-policy IL algorithms that were competitive with GAIL (Kim and Park, 2018; Wang et al., 2019; Brantley et al., 2020) still perform competitively when converted to be off-policy?

In this work, we review 6 different deep IL algorithms: GAIL (Ho and Ermon, 2016), generative moment matching imitation learning (GMMIL; Kim and Park, 2018), random expert distillation (RED; Wang et al., 2019), disagreement-regularised imitation learning (DRIL; Brantley et al., 2020), adversarial reward-moment imitation learning (AdRIL; Swamy et al., 2021), and primal Wasserstein imitation learning (PWIL; Dadashi et al., 2021). To minimise differences between them, we update GMMIL, RED, and DRIL to be off-policy, give them access to absorbing state indicators (Kostrikov et al., 2019), and use soft-actor critic (SAC; Haarnoja et al., 2018a) as the base RL algorithm for all methods. We then evaluate them on the standard MuJoCo continuous control benchmark environments (Todorov et al., 2012; Brockman et al., 2016), using the D4RL expert trajectory datasets (Fu et al., 2020) for reproducibility. For a fairer comparison, we give each algorithm the same hyperparameter optimisation budget, and then run them with the best settings for several seeds, and report results using current best practices (Agarwal et al., 2021). Given the many improvements to GAIL, as well as prior effort performing extensive hyperparameter tuning on adversarial IL methods (Orsini et al., 2021), unsurprisingly it remains one of the best IL algorithms to use. With more trajectories, AdRIL performs similarly to GAIL, whilst remaining simple to implement and tune (unlike GAIL). And, as observed many times before, BC becomes a competitive baseline with enough data.

2 Background

2.1 Imitation Learning

The goal of IL is to train a policy333In our case, a neural network with parameters ., , mapping states to a distribution over actions , to mimic an expert policy , given either the expert policy itself, or more commonly, a fixed dataset , of trajectories generated by the expert, where denotes the number of expert trajectories provided.

A common assumption within IL is that both the expert and our agent inhabit a Markov decision process (MDP), defined by the tuple : and are the state and action spaces, is the state transition dynamics, is the reward function, is the initial state distribution, and is the discount factor (used to weight immediate vs. future rewards). The expert policy is optimal in the sense that , where the return at timestep , , is the discounted sum of rewards following a policy from state until the end of the episode at timestep : . While in RL the goal is to interact with the environment in order to find (Sutton and Barto, 2018), in IL we do not have access to , and must instead find assuming that we have access to optimal trajectories.444In this work we do not consider the more complex settings that include suboptimal demonstrations and/or noisy observations. All following methods, unless specified otherwise, can be implemented using neural networks, providing flexible function approximation that can scale to large state and/or action spaces.

2.2 Reduction to Supervised Learning

The simplest method, BC (Pomerleau, 1988), reduces IL to a supervised learning problem. Using to denote the expert’s actions, BC can be formulated as minimising the 1-step deviation from the expert trajectories:

| (1) |

where can be, as in maximum likelihood estimation, the negative log likelihood.

BC is very simple, and benefits from a fixed objective over a stationary data distribution. However, as is only trained on , it can fail catastrophically when it diverges from the states covered by . In order to mitigate this, must be evaluated on the environment in order to correct for discrepancies between and .

Interactive IL methods solve this issue of compounding errors (Ross et al., 2011) by iterating over running in the environment, calculating on ’s state distribution, and using supervised learning on the new data (Daumé et al., 2009; Ross and Bagnell, 2010; Ross et al., 2011). While these approaches solve the data distribution issue, they require access to an interactive expert during training, which may not be available in many scenarios.

2.3 Inverse Reinforcement Learning

IRL instead overcomes this distribution shift by using RL to train to mimic in the environment. The procedure consists of iterating between the following two steps:

-

1.

Construct a reward function555In the parametric case, parameterised by , but potentially nonparametric. using , and optionally

-

2.

Train using RL

RL is more complicated than the typical supervised learning setting. In particular, as the policy evolves, the data distribution changes. In the case of IRL, changing over time can introduce further non-stationarity.

The basic objective of IRL can be stated as:

| (2) |

where is the return with respect to the learned reward function . However, this is underspecified (Ng et al., 2000); e.g., any policy is trivially optimal for . IRL algorithms therefore incorporate one or several of the following three properties.

Firstly, one can match the state-action distribution under , known as the expert’s occupancy measure (Syed et al., 2008; Ho and Ermon, 2016), or, alternatively, feature expectations (Ng et al., 2000). This is achieved using the learned reward function, and is hence dependent on the expressivity of . In particular, the constant function is underspecified and allows an infinite set of solutions. Secondly, one can “penalise” following trajectories taken by (previous iterations of) (Ng et al., 2000). This allows to focus on relevant parts of the state-action space, but implicitly assumes that current/past versions of are suboptimal. Thirdly, one can use the maximum entropy principle (Jaynes, 1957) to find a unique best solution out of the set of solutions that match the expert’s occupancy measure/feature expectations (Ziebart et al., 2008). Using the Lagrangian multiplier , and denoting as the entropy, this results in the following modified RL objective: . Entropy regularisation is a classic technique in RL (Williams and Peng, 1991).

A simple algorithm that uses these properties is soft Q imitation learning (SQIL; Reddy et al., 2020). SQIL uses the constant reward function:

| (3) |

which encourages not just imitating the expert’s actions, but also visiting the same states. Building upon the maximum entropy model of expert behaviour (Ziebart et al., 2008), Reddy et al. (2020) show that their algorithm can be interpreted as regularised BC with a sparsity penalty on the reward function (Piot et al., 2014).666Due to being +1 at expert state-action pairs, and 0 elsewhere. The full SQIL algorithm (for continuous action spaces) trains a SAC agent on half-half mixed batches of expert and agent data with its constant reward function. While simple, the downside of the constant reward function is that as the agent improves, its transitions still get labelled with zero rewards, potentially leading to a collapse in performance with over-training.

2.4 Adversarial Imitation Learning

Adversarial IL methods instead learn a reward function online using adversarial training, motivated by maximum entropy occupancy measure matching (Ho and Ermon, 2016). In generative adversarial network training (Goodfellow et al., 2014), the “generator” is trained to output samples that fool the “discriminator” , whilst the discriminator is trained to discriminate between samples from the generator and the data distribution. This is a minimax game, in which the equilibrium solution corresponds to minimising the Jensen-Shannon divergence between the generated and real distributions. In GAIL, plays the role of the generator, and the discriminator is trained on state-action pairs from and : . Under this formulation, higher values indicate how “expert” believes its input to be.

There are several options for constructing from . Prominent examples include those introduced in GAIL, adversarial inverse reinforcement learning (AIRL; Fu et al., 2018) (corresponding to the reverse Kullback-Leibler (KL) divergence ), and forward KL AIRL (FAIRL; Ghasemipour et al., 2020) (Table 1). As discussed by Kostrikov et al. (2019) and empirically investigated by Jena et al. (2020), there is a potential reward bias in these functions. They note that positive , i.e., , biases agents towards survival, whereas negative , i.e., biases agents towards early termination. This bias means that even constant reward functions can outperform either of these depending on the type of the environment. We recommend the original works for discussions on the properties of various reward functions (Kostrikov et al., 2019; Jena et al., 2020; Ghasemipour et al., 2020). Kostrikov et al. (2019) also make the observation that many IL algorithms do not correctly handle terminal states, and propose appending an absorbing state indicator to states, which allows IRL algorithms to properly estimate values for terminal states. This requires processing complete trajectories from both the expert and the agent, and allowing both the RL agent and the discriminator to learn from the indicator feature.

| Positive (bounded) | Negative (bounded) | ||

|---|---|---|---|

| GAIL | ✗(-) | ✓(✗) | |

| AIRL | ✓(✗) | ✓(✗) | |

| FAIRL | ✓(✓) | ✓(✗) |

While GAIL implicitly returns a reward function, if trained to optimality then will return 0.5 for state-action pairs from both and . Finn et al. (2016) propose changing the form of the to , allowing the optimal reward function to be recovered as . AIRL makes a practical algorithm from this by changing to operate over state-action pairs, as in GAIL, and also further disentangling the recovered reward function as the sum of a reward approximator and a reward shaping term (Ng et al., 1999) : , where is the successor state.

One of the most significant improvements to adversarial IL methods came from moving to more sample-efficient off-policy RL algorithms (Kostrikov et al., 2019; Blondé and Kalousis, 2019), which perform updates on batches of data stored in an experience replay memory (Lin, 1992). The discriminator can similarly be more efficiently trained on replay data, and although this should include an importance weighting term to account for the change in data distribution, in practice this is not needed (Kostrikov et al., 2019).

There are countless more advances within adversarial IL, making it difficult to know which techniques increase performance robustly. Orsini et al. (2021) performed a large-scale hyperparameter search over many of these methods. The key takeaways were that off-policy RL algorithms help improve sample efficiency, discriminator regularisation is key, and that hyperparameter choices which are optimal for AI-generated trajectories are not always the same for human-generated trajectories—a valuable distinction that lies out of the scope of this work. Their work also shows the importance of large-scale empirical evaluation, as their results overturned theoretical claims about the importance of discriminator regularisation (Blondé et al., 2022).

2.5 Distribution Matching Imitation Learning

One disadvantage of adversarial training is the requirement for the discriminator, which is also undergoing training as part of the minimax game, to provide a useful training signal to the generator. There are several other IRL algorithms that also attempt to match the expert and agent’s state-action distributions, but use non-adversarial methods.

One solution is to replace the discriminator with a nonparametric model (Li et al., 2015; Dziugaite et al., 2015). Specifically, distribution matching can be achieved by minimising the maximum mean discrepancy (MMD; Gretton et al., 2012) defined over a reproducing kernel Hilbert space (RKHS). Given distributions, and , and a mapping from features to an RKHS , the MMD is the distance between the mean embeddings of the features: . Using a kernel function , one can calculate .

GMMIL (Kim and Park, 2018) extends this principle to the IL setting. Dropping terms that are constant with respect to , GMMIL has the reward function:

| (4) |

where and are the number of state-action pairs from and , respectively.

Two disadvantages of GMMIL are that 1) the “discriminator” cannot learn relevant features, and 2) it has complexity. RED (Wang et al., 2019) solves these issues by building upon random network distillation (RND; Burda et al., 2018). In RND, a predictor network is trained to minimise the mean squared error (MSE) against a fixed, randomly initialised network . Empirically, the MSE indicates how out-of-distribution new data is. RED uses a Gaussian function over the MSE, resulting in

| (5) |

where is a bandwidth hyperparameter. Wang et al. (2019) interpret RND as an approximate support estimation method, and hence the RED reward function encourages the agent to have a support over its state-action distribution that matches the expert’s.

Similarly to RED, DRIL (Brantley et al., 2020) constructs a reward function based on the disagreement between models trained on the expert data, and can also be interpreted as a support estimation method. However, unlike the other methods which operate over the joint distribution of state-action pairs, DRIL builds simply upon BC, operating over . DRIL first trains an ensemble of different policies using the BC objective (Equation 1) on the expert data, and then uses a function of the (negative of the) variance between the policies to estimate a reward for the agent:

| (6) |

where the is a top quantile of the uncertainty cost computed over the expert dataset. While deep ensembles are known to produce reasonable uncertainty estimates (i.e., variance in outputs) on out-of-distribution data (Lakshminarayanan et al., 2017), it is also possible to approximate them using sampling with dropout (Srivastava et al., 2014). Brantley et al. (2020) showed empirically that this performed comparatively to using independent models.

The Wasserstein distance is another way of defining a distance between two probability distributions on a given metric space , and minimising it can be interpreted as finding the optimal coupling, , for transporting probability mass from one distribution to other, whilst minimising the transport cost given by a metric on (Villani, 2009).777Note that in this subsection alone we use for couplings, in line with optimal transport literature. PWIL (Dadashi et al., 2021) aims to minimise the Wasserstein-2 distance between the agent and expert’s state-action distributions:

| (7) |

where is the (time-dependent) optimal transport cost.

The optimal coupling for policy , , requires the full trajectory generated by , so Dadashi et al. (2021) define a greedy coupling that transports probability mass at each timestep , allowing the cost to be calculated online as the agent interacts with the environment. The cost with the greedy coupling, , is an upper bound to the Wasserstein distance, and hence optimising it still minimises the distance between the agent and expert’s state-action distribution. The reward can be defined by applying a monotonously decreasing function to the cost:

| (8) |

where and are reward scale hyperparameters, and is set to the Euclidean distance between Z-score normalised state-action pairs.

Another view on distribution matching, known as moment matching888Matching the moments of the model distribution to the empirical target distribution., can be achieved through optimising integral probability metrics (IPM; Müller, 1997). IPMs provide a distance function between two distributions, , for a function class containing functions . Different function classes recover different IPMs; for instance, with RKHS gives the MMD and with bounded Lipschitz constant gives the Wasserstein distance.

Swamy et al. (2021) use this to provide a more general view on IL, arguing that training an agent to match the moments of the expert’s reward or action-value distributions will achieve the same performance. When the agent is able to interact with the environment, Swamy et al. (2021) show that it is possible to do reward moment-matching, with a form that is similar to GMMIL, penalising the difference in moments between the agent and expert’s state-action pairs. However, in their view on IL they also focus on the moment matching happening within a minimax game between the agent and the reward function, which motivates the need to update the reward function. Solving for a closed-form reward function in an RKHS with the indicator kernel function, the AdRIL reward function is:

| (9) |

where the final term assigns a negative reward to state-action pairs from old trajectories, inversely proportional to the number of rounds of updates (a hyperparameter that corresponds to a fixed number of updates), and the current number of agent trajectories, . AdRIL can therefore be considered an improvement upon SQIL’s constant reward function, obviating the need for early stopping (Reddy et al., 2020).

3 Experiments

3.1 Environments + Data

We evaluate all algorithms on the popular MuJoCo simulated robotics benchmarks: Ant, HalfCheetah, Hopper, and Walker2D (Todorov et al., 2012; Brockman et al., 2016). To improve reproducibility and enable fairer comparison against other reported results, we use the D4RL “expert-v2” trajectory datasets (Fu et al., 2020).999Although this benchmark was developed for offline RL, we use it for IL by ignoring the saved rewards. When loading the expert data we process each episode to distinguish between “true” and time-dependent terminations (Pardo et al., 2018), and provide absorbing state indicators (Kostrikov et al., 2019); these are also tracked for agent episodes. By default, we maximise available data by not subsampling expert transitions. We choose 3 different trajectory “budgets” for the IL algorithms to learn from: 5, 10 and 25 expert trajectories.

3.2 Algorithms + Hyperparameter Search + Evaluation

All IL algorithms use SAC (Haarnoja et al., 2018a), with automatic entropy tuning (Haarnoja et al., 2018b), as the base RL agent, as theoretically required by SQIL and AdRIL, and as was empirically shown to be performant for adversarial IL algorithms (Orsini et al., 2021). The actor applies a tanh transformation to scale actions , and dual critics are trained to reduce value overestimation (Fujimoto et al., 2018). We also include BC as a baseline, with the same actor architecture. All algorithms are optimised with AdamW (Loshchilov and Hutter, 2019). We use PyTorch (Paszke et al., 2019) for all of our code.

We group the IL algorithms and their variants into 6 key methods: AdRIL, DRIL, GAIL, GMMIL, PWIL, and RED. Our hyperparameter search spaces were determined based on hyperparameter ranges within the original works, a large subset of options tried by Orsini et al. (2021), and general hyperparameters such as learning rate and batch size, resulting in 7-18 hyperparameters to tune per algorithm. For each trajectory budget, we give each algorithm 30 hyperparameter evaluations using Bayesian optimisation (Balandat et al., 2020), with the minimum of the cumulative reward for each of the 4 environments used as the optimisation objective, which can be seen as minimising regret over a set of environments. We then evaluate agents over 10 seeds with the best hyperparameters found, and report performance according to best practices (Agarwal et al., 2021). We therefore train 2880 agents for the final results, not including the extensive training and testing of agents performed while replicating and augmenting IL algorithms.

DRIL, GAIL and RED include several options for their trained discriminators, including network hidden size, depth, activation function, dropout, and weight decay. The GAIL discriminator has additional options, detailed below.

AdRIL options include balanced sampling (alternating sampling expert and agent data batches vs. mixed batches, Swamy et al., 2021), and the discriminator update frequency , which determines the number of “rounds”. We also include “0” in the search space, which if chosen reverts to using the SQIL reward function.

DRIL options include the quantile cutoff . As per the original work (Brantley et al., 2020), we include an auxiliary BC loss during training. For simplicity we use the dropout ensemble. As using absorbing state indicators requires importance sampling, we adapt the BC loss used within DRIL to account for importance weights.

GAIL options include reward shaping (Fu et al., 2018), subtracting from the predicted reward (Fu et al., 2018), the GAIL, AIRL and FAIRL reward functions (Ho and Ermon, 2016; Fu et al., 2018; Ghasemipour et al., 2020), discriminator gradient penalty (Kostrikov et al., 2019; Blondé and Kalousis, 2019), discriminator spectral normalisation (Blondé et al., 2022), discriminator entropy bonus (Orsini et al., 2021), binary cross-entropy, Mixup, and nn-PUGAIL discriminator loss functions (Ho and Ermon, 2016; Chen et al., 2021; Xu and Denil, 2021), and 3 additional hyperparameters for these loss functions (Mixup alpha , positive class prior , and non-negative margin ).

To adapt GMMIL for absorbing states, we adapt it to use the weighted MMD (Yan et al., 2017). Due to the complexity of MMD, it is prohibitive to use the entire expert dataset per update in the off-policy setting, and hence we randomly sample a batch of expert transitions per update. For this reason we also restrict the maximum batch size of GMMIL’s hyperparameter search space.

PWIL options include the reward scale and reward bandwidth scale . To make PWIL more comparable to the other algorithms, we use the “nofill” variant, which does not prefill the replay buffer with expert transitions (Dadashi et al., 2021).

To adapt RED for absorbing states, we train its discriminator with a weighted MSE loss.

The hyperparameter search spaces and optimised hyperparameters can be found documented in the codebase.

3.3 Results

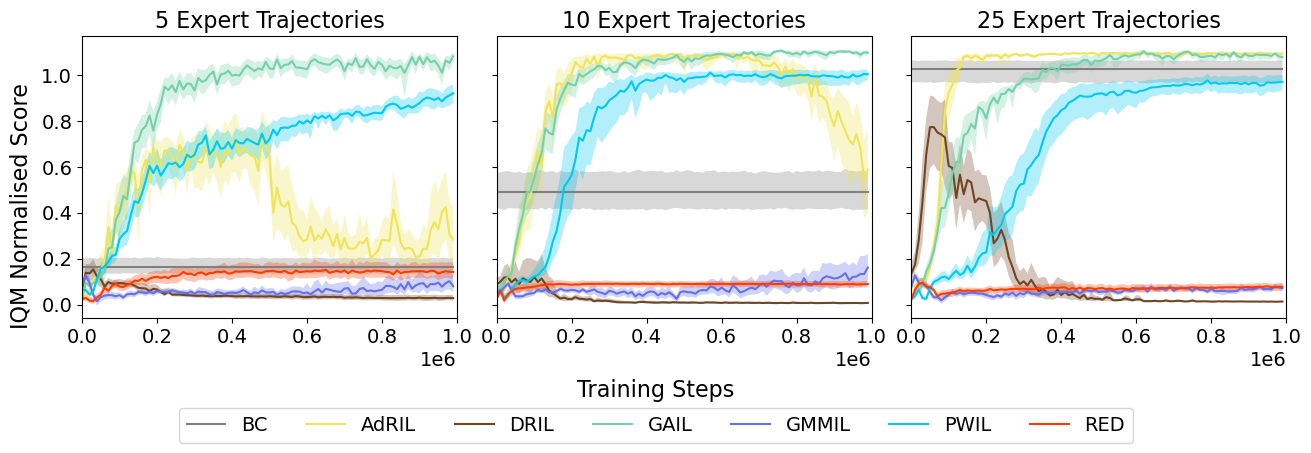

All IL algorithms (except BC) are trained with environment interaction steps, and are evaluated 30 times with a deterministic policy every steps. To aggregate test returns, we calculate the interquartile mean (IQM) over the 30 evaluation episodes, and then once again over seeds, reporting the final IQM ± 95% confidence interval (CI) using 1000 stratified bootstrap samples (Agarwal et al., 2021). The final returns over all environments are reported in Table 2.101010For reference we also include the results of our SAC implementation, trained for steps. We also show performance over time in Figure 1, where the returns are aggregated over environments, normalised by the D4RL environment min and max reference scores: , with the reference scores produced by random and expert agents.

| # Trajectories | Ant | HalfCheetah | Hopper | Walker2D | |

|---|---|---|---|---|---|

| SAC | |||||

| 5 | BC | ||||

| AdRIL | |||||

| DRIL | |||||

| GAIL | |||||

| GMMIL | |||||

| PWIL | |||||

| RED | |||||

| 10 | BC | ||||

| AdRIL | |||||

| DRIL | |||||

| GAIL | |||||

| GMMIL | |||||

| PWIL | |||||

| RED | |||||

| 25 | BC | ||||

| AdRIL | |||||

| DRIL | |||||

| GAIL | |||||

| GMMIL | |||||

| PWIL | |||||

| RED |

Overall, GAIL performs robustly across all trajectory budgets, presumably due to the large amount of research that has gone into improving the performance and robustness of adversarial IL methods. However, AdRIL, which is practically much simpler (and faster), performs the same (with higher sample efficiency) with higher budgets, as near-expert data from the agent is less likely to overpower the effect of the expert data. This can also be seen in the hyperparameter optimisation, with the discriminator update frequency going from 0 (reverting to the SQIL reward function) to 12500 to 25000 as the trajectory budget increased. PWIL also performed well across budgets. Hyperparameter optimisation set the reward () and reward bandwidth () scales to 1 for 5 trajectories, but increased the values for these for 10 and 25 trajectories. However, we note that since each environment step requires computing the reward between the current state-action pair and a subset of the expert data, starting with the entire dataset at the start of each episode, it is computationally expensive for high budgets. BC scales well as the number of trajectories increase.

Unfortunately, we were unable to successfully optimise off-policy versions of DRIL, GMMIL and RED. In an early version of our codebase we were able to optimise their original, on-policy versions successfully, so with considerable effort put into hyperparameter tuning and trying additional regularisation strategies, we believe that their is some fundamental issue caused from going from training on on-policy to off-policy returns. One would expect that for successful training the inferred rewards for the agent’s trajectories should increase over time, but this was observed for runs of these methods as well, and is therefore not predictive of successful imitation. Q-values are a function of the predicted rewards, so did not provide further diagnostic insights. We also created variants of DRIL and RED in which the discriminators were trained online, similarly to GAIL, but were unsuccessful; however, there are many ways to do so, and our attempts do not preclude a successful online discriminator variant from being developed. Finally, we note that DRIL performs better at the start of training, which we can attribute to the BC auxiliary loss; experiments with uncertainty-only DRIL (UO-DRIL; Brantley et al., 2020) did not show this trend, with scores remaining low during the entirety of training.

Some weak trends we noticed from hyperparameter optimisation were that both batch and discriminator sizes increased with the trajectory budget. We hypothesise that the former is due to added stochasticity in optimisation aiding when data is scarce, whilst the latter is due to the need to prevent overfitting in low-data regimes. However, we caution that these trends do not always hold, as, for example, the optimal batch size for GAIL decreased with the trajectory budget.

4 Discussion

In this paper, we took a pragmatic look at deep IL methods, reviewing the relationships between the different approaches, updated older methods to use more data-efficient off-policy RL algorithms, and finally performed a fair comparison between them on a standard benchmark. As BC is simple and does not involve environment interaction, we recommend that it should always be considered as a baseline. AdRIL is an attractive option for deep IL due to its simplicity and strong performance, although it has one critical hyperparameter that needs tuning. And although the myriad of options for GAIL make it more complicated to work with, we have empirical data on what does and doesn’t work (Orsini et al., 2021).

Although we were only able to test extensively on standard environments with expert data, we plan to release our framework to enable further, fair experiments on different environments, datasets, algorithms. Valuable open questions in the field of IL remain in the use of proxy reward functions for evaluating IL (Hussenot et al., 2021), and how best to learn from human demonstration data (Orsini et al., 2021).

This work was supported by JST, Moonshot R&D Grant Number JPMJMS2012.

References

- Agarwal et al. (2021) Rishabh Agarwal, Max Schwarzer, Pablo Samuel Castro, Aaron C Courville, and Marc Bellemare. Deep Reinforcement Learning at the Edge of the Statistical Precipice. In NeurIPS, 2021.

- Andrychowicz et al. (2021) Marcin Andrychowicz, Anton Raichuk, Piotr Stańczyk, Manu Orsini, Sertan Girgin, Raphael Marinier, Léonard Hussenot, Matthieu Geist, Olivier Pietquin, Marcin Michalski, et al. What Matters in On-policy Reinforcement Learning? A Large-scale Empirical Study. In ICLR, 2021.

- Arora and Doshi (2021) Saurabh Arora and Prashant Doshi. A Survey of Inverse Reinforcement Learning: Challenges, Methods and Progress. Artif. Intell., 297:103500, 2021.

- Arulkumaran et al. (2017) Kai Arulkumaran, Marc Peter Deisenroth, Miles Brundage, and Anil Anthony Bharath. Deep Reinforcement Learning: A Brief Survey. IEEE SPM, 34(6):26–38, 2017.

- Balandat et al. (2020) Maximilian Balandat, Brian Karrer, Daniel Jiang, Samuel Daulton, Ben Letham, Andrew G Wilson, and Eytan Bakshy. BoTorch: A Framework for Efficient Monte-Carlo Bayesian Optimization. In NeurIPS, 2020.

- Blondé and Kalousis (2019) Lionel Blondé and Alexandros Kalousis. Sample-efficient Imitation Learning via Generative Adversarial Nets. In AISTATS, 2019.

- Blondé et al. (2022) Lionel Blondé, Pablo Strasser, and Alexandros Kalousis. Lipschitzness is All You Need to Tame Off-policy Generative Adversarial Imitation Learning. Mach. Learn., 111(4):1431–1521, 2022.

- Brantley et al. (2020) Kianté Brantley, Wen Sun, and Mikael Henaff. Disagreement-regularized Imitation Learning. In ICLR, 2020.

- Brockman et al. (2016) Greg Brockman, Vicki Cheung, Ludwig Pettersson, Jonas Schneider, John Schulman, Jie Tang, and Wojciech Zaremba. OpenAI Gym. arXiv:1606.01540, 2016.

- Burda et al. (2018) Yuri Burda, Harrison Edwards, Amos Storkey, and Oleg Klimov. Exploration by Random Network Distillation. In ICLR, 2018.

- Chen et al. (2021) Annie S Chen, HyunJi Nam, Suraj Nair, and Chelsea Finn. Batch Exploration with Examples for Scalable Robotic Reinforcement Learning. IEEE RA-L, 6(3):4401–4408, 2021.

- Dadashi et al. (2021) Robert Dadashi, Leonard Hussenot, Matthieu Geist, and Olivier Pietquin. Primal Wasserstein Imitation Learning. In ICLR, 2021.

- Daumé et al. (2009) Hal Daumé, John Langford, and Daniel Marcu. Search-based Structured Prediction. Mach. Learn., 75(3):297–325, 2009.

- Dziugaite et al. (2015) Gintare Karolina Dziugaite, Daniel M Roy, and Zoubin Ghahramani. Training Generative Neural Networks via Maximum Mean Discrepancy Optimization. In UAI, 2015.

- Engstrom et al. (2020) Logan Engstrom, Andrew Ilyas, Shibani Santurkar, Dimitris Tsipras, Firdaus Janoos, Larry Rudolph, and Aleksander Madry. Implementation Matters in Deep Policy Gradients: A Case Study on PPO and TRPO. In ICLR, 2020.

- Finn et al. (2016) Chelsea Finn, Paul Christiano, Pieter Abbeel, and Sergey Levine. A Connection Between Generative Adversarial Networks, Inverse Reinforcement Learning, and Energy-based Models. arXiv:1611.03852, 2016.

- Fu et al. (2018) Justin Fu, Katie Luo, and Sergey Levine. Learning Robust Rewards with Adversarial Inverse Reinforcement Learning. In ICLR, 2018.

- Fu et al. (2020) Justin Fu, Aviral Kumar, Ofir Nachum, George Tucker, and Sergey Levine. D4RL: Datasets for Deep Data-driven Reinforcement Learning. arXiv:2004.07219, 2020.

- Fujimoto et al. (2018) Scott Fujimoto, Herke Hoof, and David Meger. Addressing Function Approximation Error in Actor-critic Methods. In ICML, 2018.

- Ghasemipour et al. (2020) Seyed Kamyar Seyed Ghasemipour, Richard Zemel, and Shixiang Gu. A Divergence Minimization Perspective on Imitation Learning Methods. In CoRL, 2020.

- Goodfellow et al. (2014) Ian J Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, and Yoshua Bengio. Generative Adversarial Networks. In NeurIPS, 2014.

- Gretton et al. (2012) Arthur Gretton, Karsten M Borgwardt, Malte J Rasch, Bernhard Schölkopf, and Alexander Smola. A Kernel Two-sample Test. JMLR, 13(1):723–773, 2012.

- Haarnoja et al. (2018a) Tuomas Haarnoja, Aurick Zhou, Pieter Abbeel, and Sergey Levine. Soft Actor-critic: Off-policy Maximum Entropy Deep Reinforcement Learning with a Stochastic Actor. In ICML, 2018a.

- Haarnoja et al. (2018b) Tuomas Haarnoja, Aurick Zhou, Kristian Hartikainen, George Tucker, Sehoon Ha, Jie Tan, Vikash Kumar, Henry Zhu, Abhishek Gupta, Pieter Abbeel, et al. Soft Actor-critic Algorithms and Applications. arXiv:1812.05905, 2018b.

- Henderson et al. (2018) Peter Henderson, Riashat Islam, Philip Bachman, Joelle Pineau, Doina Precup, and David Meger. Deep Reinforcement Learning that Matters. In AAAI, 2018.

- Ho and Ermon (2016) Jonathan Ho and Stefano Ermon. Generative Adversarial Imitation Learning. In NeurIPS, 2016.

- Hussein et al. (2017) Ahmed Hussein, Mohamed Medhat Gaber, Eyad Elyan, and Chrisina Jayne. Imitation Learning: A Survey of Learning Methods. ACM CSUR, 50(2):1–35, 2017.

- Hussenot et al. (2021) Leonard Hussenot, Marcin Andrychowicz, Damien Vincent, Robert Dadashi, Anton Raichuk, Lukasz Stafiniak, Sertan Girgin, Raphael Marinier, Nikola Momchev, Sabela Ramos, et al. Hyperparameter Selection for Imitation Learning. In ICML, 2021.

- Jaynes (1957) Edwin T Jaynes. Information Theory and Statistical Mechanics. Phys. Rev., 106(4):620, 1957.

- Jena et al. (2020) Rohit Jena, Siddharth Agrawal, and Katia Sycara. Addressing Reward Bias in Adversarial Imitation Learning with Neutral Reward Functions. In Deep RL Workshop, NeurIPS, 2020.

- Kim and Park (2018) Kee-Eung Kim and Hyun Soo Park. Imitation Learning via Kernel Mean Embedding. In AAAI, 2018.

- Kostrikov et al. (2019) Ilya Kostrikov, Kumar Krishna Agrawal, Debidatta Dwibedi, Sergey Levine, and Jonathan Tompson. Discriminator-actor-critic: Addressing Sample Inefficiency and Reward Bias in Adversarial Imitation Learning. In ICLR, 2019.

- Lakshminarayanan et al. (2017) Balaji Lakshminarayanan, Alexander Pritzel, and Charles Blundell. Simple and Scalable Predictive Uncertainty Estimation using Deep Ensembles. In NeurIPS, 2017.

- Li et al. (2015) Yujia Li, Kevin Swersky, and Rich Zemel. Generative Moment Matching Networks. In ICML, 2015.

- Lin (1992) Long-Ji Lin. Self-improving Reactive Agents Based on Reinforcement Learning, Planning and Teaching. Mach. Learn., 8(3-4):293–321, 1992.

- Loshchilov and Hutter (2019) Ilya Loshchilov and Frank Hutter. Decoupled Weight Decay Regularization. In ICLR, 2019.

- Machado et al. (2018) Marlos C Machado, Marc G Bellemare, Erik Talvitie, Joel Veness, Matthew Hausknecht, and Michael Bowling. Revisiting the Arcade Learning Environment: Evaluation Protocols and Open Problems for General Agents. JAIR, 61:523–562, 2018.

- Müller (1997) Alfred Müller. Integral Probability Metrics and Their Generating Classes of Functions. Adv. Appl. Probab., 29(2):429–443, 1997.

- Musgrave et al. (2020) Kevin Musgrave, Serge Belongie, and Ser-Nam Lim. A Metric Learning Reality Check. In ECCV, 2020.

- Ng et al. (1999) Andrew Y Ng, Daishi Harada, and Stuart Russell. Policy Invariance Under Reward Transformations: Theory and Application to Reward Shaping. In ICML, 1999.

- Ng et al. (2000) Andrew Y Ng, Stuart J Russell, et al. Algorithms for Inverse Reinforcement Learning. In ICML, 2000.

- Oliver et al. (2018) Avital Oliver, Augustus Odena, Colin A Raffel, Ekin Dogus Cubuk, and Ian Goodfellow. Realistic Evaluation of Deep Semi-supervised Learning Algorithms. In NeurIPS, 2018.

- Orsini et al. (2021) Manu Orsini, Anton Raichuk, Léonard Hussenot, Damien Vincent, Robert Dadashi, Sertan Girgin, Matthieu Geist, Olivier Bachem, Olivier Pietquin, and Marcin Andrychowicz. What Matters for Adversarial Imitation Learning? In NeurIPS, 2021.

- Pardo et al. (2018) Fabio Pardo, Arash Tavakoli, Vitaly Levdik, and Petar Kormushev. Time Limits in Reinforcement Learning. In ICML, 2018.

- Paszke et al. (2019) Adam Paszke, Sam Gross, Francisco Massa, Adam Lerer, James Bradbury, Gregory Chanan, Trevor Killeen, Zeming Lin, Natalia Gimelshein, Luca Antiga, et al. PyTorch: An Imperative Style, High-Performance Deep Learning Library. In NeurIPS, 2019.

- Piot et al. (2014) Bilal Piot, Matthieu Geist, and Olivier Pietquin. Boosted and Reward-regularized Classification for Apprenticeship Learning. In AAMAS, 2014.

- Pomerleau (1988) Dean A Pomerleau. ALVINN: An Autonomous Land Vehicle in a Neural Network. In NeurIPS, 1988.

- Raffin et al. (2021) Antonin Raffin, Ashley Hill, Adam Gleave, Anssi Kanervisto, Maximilian Ernestus, and Noah Dormann. Stable-baselines3: Reliable Reinforcement Learning Implementations. JMLR, 22(1):12348–12355, 2021.

- Reddy et al. (2020) Siddharth Reddy, Anca D Dragan, and Sergey Levine. SQIL: Imitation Learning via Reinforcement Learning with Sparse Rewards. In ICLR, 2020.

- Ross and Bagnell (2010) Stéphane Ross and Drew Bagnell. Efficient Reductions for Imitation Learning. In AISTATS, 2010.

- Ross et al. (2011) Stéphane Ross, Geoffrey Gordon, and Drew Bagnell. A Reduction of Imitation Learning and Structured Prediction to No-regret Online Learning. In AISTATS, 2011.

- Srivastava et al. (2014) Nitish Srivastava, Geoffrey Hinton, Alex Krizhevsky, Ilya Sutskever, and Ruslan Salakhutdinov. Dropout: A Simple Way to Prevent Neural Networks from Overfitting. JMLR, 15(1):1929–1958, 2014.

- Sutton and Barto (2018) Richard S Sutton and Andrew G Barto. Reinforcement Learning: An Introduction. MIT Press, 2018.

- Swamy et al. (2021) Gokul Swamy, Sanjiban Choudhury, J Andrew Bagnell, and Steven Wu. Of Moments and Matching: A Game-theoretic Framework for Closing the Imitation Gap. In ICML, 2021.

- Syed et al. (2008) Umar Syed, Michael Bowling, and Robert E Schapire. Apprenticeship Learning using Linear Programming. In ICML, 2008.

- Todorov et al. (2012) Emanuel Todorov, Tom Erez, and Yuval Tassa. MuJoCo: A Physics Engine for Model-based Control. In IROS, 2012.

- Villani (2009) Cédric Villani. Optimal Transport: Old and New. Springer, 2009.

- Wang et al. (2019) Ruohan Wang, Carlo Ciliberto, Pierluigi Vito Amadori, and Yiannis Demiris. Random Expert Distillation: Imitation Learning via Expert Policy Support Estimation. In ICML, 2019.

- Williams and Peng (1991) Ronald J Williams and Jing Peng. Function Optimization using Connectionist Reinforcement Learning Algorithms. Conn. Sci., 3(3):241–268, 1991.

- Xu and Denil (2021) Danfei Xu and Misha Denil. Positive-unlabeled Reward Learning. In CoRL, 2021.

- Yan et al. (2017) Hongliang Yan, Yukang Ding, Peihua Li, Qilong Wang, Yong Xu, and Wangmeng Zuo. Mind the Class Weight Bias: Weighted Maximum Mean Discrepancy for Unsupervised Domain Adaptation. In CVPR, 2017.

- Ziebart et al. (2008) Brian D Ziebart, Andrew L Maas, J Andrew Bagnell, and Anind K Dey. Maximum Entropy Inverse Reinforcement Learning. In AAAI, 2008.