A polynomial-time algorithm for learning nonparametric causal graphs

Abstract

We establish finite-sample guarantees for a polynomial-time algorithm for learning a nonlinear, nonparametric directed acyclic graphical (DAG) model from data. The analysis is model-free and does not assume linearity, additivity, independent noise, or faithfulness. Instead, we impose a condition on the residual variances that is closely related to previous work on linear models with equal variances. Compared to an optimal algorithm with oracle knowledge of the variable ordering, the additional cost of the algorithm is linear in the dimension and the number of samples . Finally, we compare the proposed algorithm to existing approaches in a simulation study.

1 Introduction

Modern machine learning (ML) methods are driven by complex, high-dimensional, and nonparametric models that can capture highly nonlinear phenomena. These models have proven useful in wide-ranging applications including vision, robotics, medicine, and natural language. At the same time, the complexity of these methods often obscure their decisions and in many cases can lead to wrong decisions by failing to properly account for—among other things—spurious correlations, adversarial vulnerability, and invariances (Bottou, 2015; Schölkopf, 2019; Bühlmann, 2018). This has led to a growing literature on correcting these problems in ML systems. A particular example of this that has received widespread attention in recent years is the problem of causal inference, which is closely related to these issues. While substantial methodological progress has been made towards embedding complex methods such as deep neural networks and RKHS embeddings into learning causal graphical models (Huang et al., 2018; Mitrovic et al., 2018; Zheng et al., 2020; Yu et al., 2019; Lachapelle et al., 2019; Zhu and Chen, 2019; Ng et al., 2019), theoretical progress has been slower and typically reserved for particular parametric models such as linear (Chen et al., 2019; Wang and Drton, 2020; Ghoshal and Honorio, 2017, 2018; Loh and Bühlmann, 2014; van de Geer and Bühlmann, 2013; Aragam and Zhou, 2015; Aragam et al., 2015, 2019) and generalized linear models (Park and Raskutti, 2017; Park, 2018).

In this paper, we study the problem of learning directed acyclic graphs (DAGs) from data in a nonparametric setting. Unlike existing work on this problem, we do not require linearity, additivity, independent noise, or faithfulness. Our approach is model-free and nonparametric, and uses nonparametric estimators (kernel smoothers, neural networks, splines, etc.) as “plug-in” estimators. As such, it is agnostic to the choice of nonparametric estimator chosen. Unlike existing consistency theory in the nonparametric setting (Peters et al., 2014; Hoyer et al., 2009; Bühlmann et al., 2014; Rothenhäusler et al., 2018; Huang et al., 2018; Tagasovska et al., 2018; Nowzohour and Bühlmann, 2016), we provide explicit (nonasymptotic) finite sample complexity bounds and show that the resulting method has polynomial time complexity. The method we study is closely related to existing algorithms that first construct a variable ordering (Ghoshal and Honorio, 2017; Chen et al., 2019; Ghoshal and Honorio, 2018; Park and Kim, 2020). Despite this being a well-studied problem, to the best of our knowledge our analysis is the first to provide explicit, simultaneous statistical and computational guarantees for learning nonparametric DAGs.

Contributions

Figure 1(a) illustrates a key motivation for our work: While there exist methods that obtain various statistical guarantees, they lack provably efficient algorithms, or vice versa. As a result, these methods can fail in simple settings. Our focus is on simultaneous computational and statistical guarantees that are explicit and nonasymptotic in a model-free setting. More specifically, our main contributions are as follows:

-

•

We show that the algorithms of Ghoshal and Honorio (2017) and Chen et al. (2019) rigourously extend to a model-free setting, and provide a method-agnostic analysis of the resulting extension (Theorem 4.1). That is, the time and sample complexity bounds depend on the choice of estimator used, and this dependence is made explicit in the bounds (Section 3.2, Section 4).

-

•

We prove that this algorithm runs in at most time and needs at most samples (Corollary 4.2). Moreover, the exponential dependence on can be improved by imposing additional sparsity or smoothness assumptions, and can even be made polynomial (see Section 4 for discussion). This is an expected consequence of our estimator-agnostic approach.

- •

- •

-

•

Finally, we run a simulation study to evaluate the resulting algorithm in a variety of settings against seven state-of-the-art algorithms (Section 5).

Our simulation results can be summarized as follows: When implemented using generalized additive models (Hastie and Tibshirani, 1990), our method outperforms most state-of-the-art methods, particularly on denser graphs with hub nodes. We emphasize here, however, that our main contributions lay in the theoretical analysis, specifically providing a polynomial-time algorithm with sample complexity guarantees.

Related work

The literature on learning DAGs is vast, so we focus only on related work in the nonparametric setting. The most closely related line work considers additive noise models (ANMs) (Peters et al., 2014; Hoyer et al., 2009; Bühlmann et al., 2014; Chicharro et al., 2019), and prove a variety of identifiability and consistency guarantees. Compared to our work, the identifiability results proved in these papers require that the structural equations are (a) nonlinear with (b) additive, independent noise. Crucially, these papers focus on (generally asymptotic) statistical guarantees without any computational or algorithmic guarantees. There is also a closely related line of work for bivariate models (Mooij et al., 2016; Monti et al., 2019; Wu and Fukumizu, 2020; Mitrovic et al., 2018) as well as the post-nonlinear model (Zhang and Hyvärinen, 2009). Huang et al. (2018) proposed a greedy search algorithm using an RKHS-based generalized score, and proves its consistency assuming faithfulness. Rothenhäusler et al. (2018) study identifiability of a general family of partially linear models and prove consistency of a score-based search procedure in finding an equivalence class of structures. There is also a recent line of work on embedding neural networks and other nonparametric estimators into causal search algorithms (Lachapelle et al., 2019; Zheng et al., 2020; Yu et al., 2019; Ng et al., 2019; Zhu and Chen, 2019) without theoretical guarantees. While this work was in preparation, we were made aware of the recent work (Park, 2020) that proposes an algorithm that is similar to ours—also based on (Ghoshal and Honorio, 2017) and (Chen et al., 2019)—and establishes its sample complexity for linear Gaussian models. In comparison to these existing lines of work, our focus is on simultaneous computational and statistical guarantees that are explicit and nonasymptotic (i.e. valid for all finite and ), for the fully nonlinear, nonparametric, and model-free setting.

Notation

Subscripts (e.g. ) will always be used to index random variables and superscripts (e.g. ) to index observations. For a matrix , is the th column of . We denote the indices by , and frequently abuse notation by identifying the indices with the random vector . For example, nodes are interchangeable with their indices (and subsets thereof), so e.g. is the same as .

2 Background

Let be a -dimensional random vector and a DAG where we implicitly assume . The parent set of a node is defined as , or simply for short. A source node is any node such that and an ancestral set is any set such that . The graph is called a Bayesian network (BN) for if it satisfies the Markov condition, i.e. that each variable is conditionally independent of its non-descendants given its parents. Intuitively, a BN for can be interpreted as a representation of the direct and indirect relationships between the , e.g. an edge indicates that depends directly on , and not vice versa. Under additional assumptions such as causal minimality and no unmeasured confounding, these arrows may be interpreted causally; for more details, see the surveys (Bühlmann, 2018; Schölkopf, 2019) or the textbooks (Lauritzen, 1996; Koller and Friedman, 2009; Spirtes et al., 2000; Pearl, 2009; Peters et al., 2017).

The goal of structure learning is to learn a DAG from i.i.d. observations . Throughout this paper, we shall exploit the following well-known fact: To learn , it suffices to learn a topological sort of , i.e. an ordering such that . A brief review of this material can be found in the supplement.

Equal variances

Recently, a new approach has emerged which was originally cast as an approach to learn equal variance DAGs (Ghoshal and Honorio, 2017; Chen et al., 2019), although it has since been generalized beyond the equal variance case (Ghoshal and Honorio, 2018; Park and Kim, 2020; Park, 2020). An equal variance DAG is a linear structural equation model (SEM) that satisfies

| (1) |

for some weights . Under the model (1), a simple algorithm can learn the graph by first learning a topological sort . For these models, we have the following decomposition of the variance:

| (2) |

Thus, as long as , we have . It follows that as long as does not depend on , it is possible to identify a source in by simply minimizing the residual variances. This is the essential idea behind algorithms based on equal variances in the linear setting (Ghoshal and Honorio, 2017; Chen et al., 2019). Alternatively, it is possible to iteratively identify best sinks by minimizing marginal precisions. Moreover, this argument shows that the assumption of linearity is not crucial, and this idea can readily be extended to ANMs, as in (Park, 2020). Indeed, the crucial assumption in this argument is the independence of the noise and the parents ; in the next section we show how these assumptions can be removed altogether.

Layer decomposition of a DAG

Given a DAG , define a collection of sets as follows: , and for , is the set of all source nodes in the subgraph formed by removing the nodes in . So, e.g., is the set of source nodes in and . This decomposes into layers, where each layer consists of nodes that are sources in the subgraph , and is an ancestral set for each . Let denote the number of “layers” in , be the corresponding layers. The quantity effectively measure the depth of a DAG. See Figure 1(b) for an illustration.

Learning is equivalent to learning the sets , since any topological sort of can be determined from , and from any sort , the graph can be recovered via variable selection. Unlike a topological sort of , which may not be unique, the layer decomposition is always unique. Therefore, without loss of generality, in the sequel we consider the problem of identifying and learning .

3 Identifiability and algorithmic consequences

This section sets the stage for our main results on learning nonparametric DAGs: First, we show that existing identifiability results for equal variances generalize to a family of model-free, nonparametric distributions. Second, we show that this motivates an algorithm very similar to existing algorithms in the equal variance case. We emphasize that various incarnations of these ideas have appeared in previous work (Ghoshal and Honorio, 2017; Chen et al., 2019; Ghoshal and Honorio, 2018; Park and Kim, 2020; Park, 2020), and our effort in this section is to unify these ideas and show that the same ideas can be applied in more general settings without linearity or independent noise. Once this has been done, our main sample complexity result is presented in Section 4.

3.1 Nonparametric identifiability

In general, a BN for need not be unique, i.e. is not necessarily identifiable from . A common strategy in the literature to enforce identifiability is to impose structural assumptions on the conditional distributions , for which there is a broad literature on identifiability. Our first result shows that identifiability is guaranteed as long as the residual variances do not depend on . This is a natural generalization of the notion of equality of variances for linear models (Peters and Bühlmann, 2013; Ghoshal and Honorio, 2017; Chen et al., 2019).

Theorem 3.1.

If does not depend on , then is identifiable from .

The proof of Theorem 3.1 can be found in the supplement. This result makes no structural assumptions on the local conditional probabilities . To illustrate, we consider some examples below.

Example 1 (Causal pairs, (Mooij et al., 2016)).

Consider a simple model on two variables: with . Then as long as is nonconstant, Theorem 3.1 implies the causal order is identifiable. No additional assumptions on the noise or functional relationships are necessary.

Example 2 (Binomial models, (Park and Raskutti, 2017)).

Assume and with . Then Theorem 3.1 implies that if does not depend on , then is identifiable.

Example 3 (Generalized linear models).

The previous example can of course be generalized to arbitrary generalized linear models: Assume , where and is the partition function. Then Theorem 3.1 implies that if does not depend on , then is identifiable.

Example 4 (Additive noise models, (Peters et al., 2014)).

Finally, we observe that Theorem 3.1 generalizes existing results for ANMs: In an ANM, we have with . If , then an argument similar to (2) shows that ANMs with equal variances are identifiable. Theorem 3.1 applies to more general additive noise models with heteroskedastic, uncorrelated (i.e. not necessarily independent) noise.

Unequal variances

Early work on this problem focused on the case of equal variances (Ghoshal and Honorio, 2017; Chen et al., 2019), as we have done here. This assumption illustrates the main technical difficulties in proving identifiability, and it is well-known by now that equality of variances is not necessary, and a weaker assumption that allows for heterogeneous residual variances suffices in special cases (Ghoshal and Honorio, 2018; Park and Kim, 2020). Similarly, the extension of Theorem 3.1 to such heterogeneous models is straightforward, and omitted for brevity; see Appendix B.1 in the supplement for additional discussion and simulations. In the sequel, we focus on the case of equality for simplicity and ease of interpretation.

3.2 A polynomial-time algorithm

The basic idea behind the top-down algorithm proposed in (Chen et al., 2019) can easily be extended to the setting of Theorem 3.1, and is outlined in Algorithm 1. The only modification is to replace the error variances from the linear model (1) with the corresponding residual variances (i.e. ), which are well-defined for any with finite second moments.

A natural idea to translate Algorithm 1 into an empirical algorithm is to replace the residual variances with an estimate based on the data. One might then hope to use similar arguments as in the linear setting to establish consistency and bound the sample complexity. Perhaps surprisingly, this does not work unless the topological sort of is unique. When there is more than one topological sort, it becomes necessary to uniformly bound the errors of all possible residual variances—and in the worst case there are exponentially many ( to be precise) possible residual variances. The key issue is that the sets in Algorithm 1 are random (i.e. data-dependent), and hence unknown in advance. This highlights a key difference between our algorithm and existing work for linear models such as (Ghoshal and Honorio, 2017, 2018; Chen et al., 2019; Park, 2020): In our setting, the residual variances cannot be written as simple functions of the covariance matrix , which simplifies the analysis for linear models considerably. Indeed, although the same exponential blowup arises for linear models, in that case consistent estimation of the covariance matrix provides uniform control over all possible residual variances (e.g., see Lemma 6 in (Chen et al., 2019)). In the nonparametric setting, this reduction no longer applies.

To get around this technical issue, we modify Algorithm 1 to learn one layer at a time, as outlined in Algorithm 2 (see Section 2 for details on ). As a result, we need only estimate , which involves regression problems with at most nodes. We use the plug-in estimator (3) for this, although more sophisticated estimators are available (Doksum et al., 1995; Robins et al., 2008). This also necessitates the use of sample splitting in Step 3(a) of Algorithm 2, which is necessary for the theoretical arguments but not needed in practice.

The overall computational complexity of Algorithm 2, which we call NPVAR, is , where is the complexity of computing each nonparametric regression function . For example, if a kernel smoother is used, and thus the overall complexity is . For comparison, an oracle algorithm that knows the true topological order of in advance would still need to compute regression functions, and hence would have complexity . Thus, the extra complexity of learning the topological order is only , which is linear in the dimension and the number of samples. Furthermore, under additional assumptions on the sparsity and/or structure of the DAG, the time complexity can be reduced further, however, our analysis makes no such assumptions.

-

1.

Set and for , let

-

2.

Return the DAG that corresponds to the topological sort .

Input: , .

-

1.

Set , , , .

-

2.

Set .

-

3.

For :

-

(a)

Randomly split the samples in half and let .

-

(b)

For each , use the first half of the sample to estimate via a nonparametric estimator .

-

(c)

For each , use the second half of the sample to estimate the residual variances via the plug-in estimator

(3) -

(d)

Set and .

-

(a)

-

4.

Return .

3.3 Comparison to existing algorithms

Compared to existing algorithms based on order search and equal variances, NPVAR applies to more general models without parametric assumptions, independent noise, or additivity. It is also instructive to make comparisons with greedy score-based algorithms such as causal additive models (CAM, (Bühlmann et al., 2014)) and greedy DAG search (GDS, (Peters and Bühlmann, 2013)). We focus here on CAM since it is more recent and applies in nonparametric settings, however, similar claims apply to GDS as well.

CAM is based around greedily minimizing the log-likelihood score for additive models with Gaussian noise. In particular, it is not guaranteed to find a global minimizer, which is as expected since it is based on a nonconvex program. This is despite the global minimizer—if it can be found—having good statistical properties. The next example shows that, in fact, there are identifiable models for which CAM will find the wrong graph with high probability.

Example 5.

Consider the following three-node additive noise model with :

| (4) |

In the supplement (Appendix D), we show the following: There exist infinitely many nonlinear functions for which the CAM algorithm returns an incorrect order under the model (4). This is illustrated empirically in Figure 1(a) for the nonlinearities and . In each of these examples, the model satisfies the identifiability conditions for CAM as well as the conditions required in our work.

We stress that this example does not contradict the statistical results in Bühlmann et al. (2014): It only shows that the algorithm may not find a global minimizer and as a result, returns an incorrect variable ordering. Correcting this discrepancy between the algorithmic and statistical results is a key motivation behind our work. In the next section, we show that NPVAR provably learns the true ordering—and hence the true DAG—with high probability.

4 Sample complexity

Our main result analyzes the sample complexity of NPVAR (Algorithm 2). Recall the layer decomposition from Section 2 and define . Let .

Condition 1 (Regularity).

For all and all , (a) , (b) , (c) , and (d) .

These are the standard regularity conditions from the literature on nonparametric statistics (Györfi et al., 2006; Tsybakov, 2009), and can be weakened (e.g. if the and are unbounded, see (Kohler et al., 2009)). We impose these stronger assumptions in order to simplify the statements and focus on technical details pertinent to graphical modeling and structure learning. The next assumption is justified by Theorem 3.1, and as we have noted, can also be weakened.

Condition 2 (Identifiability).

does not depend on .

Our final condition imposes some basic finiteness and consistency requirements on the chosen nonparametric estimator , which we view as a function for estimating from an arbitrary distribution over the pair .

Condition 3 (Estimator).

The nonparametric estimator satisfies (a) and (b) .

This is a mild condition that is satisfied by most popular estimators including kernel smoothers, nearest neighbours, and splines, and in particular, Condition 3(a) is only used to simplify the theorem statement and can easily be relaxed.

Once the layer decomposition is known, the graph can be learned via standard nonlinear variable selection methods (see Appendix A in the supplement).

A feature of this result is that it is agnostic to the choice of estimator , as long as it satisfies Condition 3. The dependence on is quantified through , which depends on the sample size and represents the rate of convergence of the chosen nonparametric estimator. Instead of choosing a specific estimator, Theorem 4.1 is stated so that it can be applied to general estimators. As an example, suppose each is Lipschitz continuous and is a standard kernel smoother. Then

Thus we have the following special case:

Corollary 4.2.

Assume each is Lipschitz continuous. Then can be computed in time and as long as .

This is the best possible rate attainable by any algorithm without imposing stronger regularity conditions (see e.g. §5 in (Györfi et al., 2006)). Furthermore, can be replaced with the error of an arbitrary estimator of the residual variance itself (i.e. something besides the plug-in estimator (3)); see Proposition C.4 in Appendix C for details.

To illustrate these results, consider the problem of finding the direction of a Markov chain whose transition functions are each Lipschitz continuous. Then , so Corollary 4.2 implies that samples are sufficient to learn the order—and hence the graph as well as each transition function—with high probability. Since for any Markov chain, this particular example maximizes the dependence on ; at the opposite extreme a bipartite graph with would require only . In these lower bounds, it is not necessary to know the type of graph (e.g. Markov chain, bipartite) or the depth .

Choice of

The lower bound is not strictly necessary, and is only used to simplify the lower bound in (5). In general, taking sufficiently small works well in practice. The main tradeoff in choosing is computational: A smaller may lead to “splitting” one of the layers . In this case, NPVAR still recovers the structure correctly, but the splitting results in redundant estimation steps in Step 3 (i.e. instead of estimating in one iteration, it takes multiple iterations to estimate correctly). The upper bound, however, is important: If is too large, then we may include spurious nodes in the layer , which would cause problems in subsequent iterations.

Nonparametric rates

Theorem 4.1 and Corollary 4.2 make no assumptions on the sparsity of or smoothness of the mean functions . For this reason, the best possible rate for a naïve plug-in estimator of is bounded by the minimax rate for estimating . For practical reasons, we have chosen to focus on an agnostic analysis that does not rely on any particular estimator. Under additional sparsity and smoothness assumptions, these rates can be improved, which we briefly discuss here.

For example, by using adaptive estimators such as RODEO (Lafferty and Wasserman, 2008) or GRID (Giordano et al., 2020), the sample complexity will depend only on the sparsity of , i.e. , where is the th partial derivative. Another approach that does not require adaptive estimation is to assume and define . Then , and the resulting sample complexity depends on instead of . For a Markov chain with this leads to a substantial improvement.

Instead of sparsity, we could impose stronger smoothness assumptions: Let denote the smallest Hölder exponent of any . Then if , then one can use a one-step correction to the plug-in estimator (3) to obtain a root- consistent estimator of (Robins et al., 2008; Kandasamy et al., 2015). Another approach is to use undersmoothing (Doksum et al., 1995). In this case, the exponential sample complexity improves to polynomial sample complexity. For example, in Corollary 4.2, if we replace Lipschitz with the stronger condition that , then the sample complexity improves to .

5 Experiments

Finally, we perform a simulation study to compare the performance of NPVAR against state-of-the-art methods for learning nonparametric DAGs. The algorithms are: RESIT (Peters et al., 2014), CAM (Bühlmann et al., 2014), EqVar (Chen et al., 2019), NOTEARS (Zheng et al., 2020), GSGES (Huang et al., 2018), PC (Spirtes and Glymour, 1991), and GES (Chickering, 2003). In our implementation of NPVAR, we use generalized additive models (GAMs) for both estimating and variable selection. One notable detail is our implementation of EqVar, which we adapted to the nonlinear setting by using GAMs instead of subset selection for variable selection (the order estimation step remains the same). Full details of the implementations used as well as additional experiments can be found in the supplement. Code implementing the NPVAR algorithm is publicly available at https://github.com/MingGao97/NPVAR.

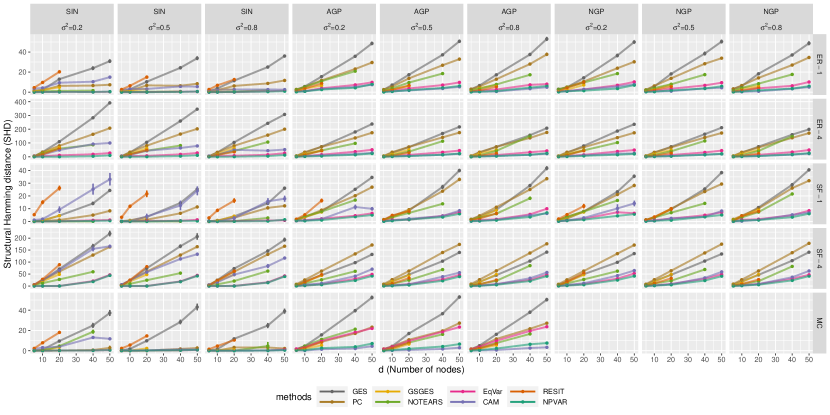

We conducted a series of simulation on different graphs and models, comparing the performance in both order recovery and structure learning. Due to space limitations, only the results for structure learning in the three most difficult settings are highlighted in Figure 2. These experiments correspond to non-sparse graphs with non-additive dependence given by either a Gaussian process (GP) or a generalized linear model (GLM):

-

•

Graph types. We sampled three families of DAGs: Markov chains (MC), Erdös-Rényi graphs (ER), and scale-free graphs (SF). For MC graphs, there are exactly edges, whereas for ER and SF graphs, we sample graphs with edges on average. This is denoted by ER4/SF4 for in Figure 2. Experiments on sparser DAGs can be found in the supplement.

-

•

Probability models. For the Markov chain models, we used two types of transition functions: An additive sine model with and a discrete model (GLM) with and . For the ER and SF graphs, we sampled from both additive GPs (AGP) and non-additive GPs (NGP).

Full details as well as additional experiments on order recovery, additive models, sparse graphs, and misspecified models can be found in the supplement (Appendix E).

Structure learning

To evaluate overall performance, we computed the structural Hamming distance (SHD) between the learned DAG and the true DAG. SHD is a standard metric used for comparison of graphical models. According to this metric, the clear leaders are NPVAR, EqVar, and CAM. Consistent with existing results, existing methods tend to suffer as the edge density and dimension of the graphs increase, however, NPVAR is more robust in these settings. Surprisingly, the CAM algorithm remains quite competitive for non-additive models, although both EqVar and NPVAR clearly outperform CAM. On the GLM model, which illustrates a non-additive model with non-additive noise, EqVar and NPVAR performed the best, although PC showed good performance with samples. Both CAM and RESIT terminated with numerical issues on the GLM model.

These experiments serve to corroborate our theoretical results and highlight the effectiveness of the NPVAR algorithm, but of course there are tradeoffs. For example, algorithms such as CAM which exploit sparse and additive structure perform very well in settings where sparsity and additivity can be exploited, and indeed outperform NPVAR in some cases. Hopefully, these experiments can help to shed some light on when various algorithms are more or less effective.

Misspecification and sensitivity analysis

We also considered two cases of misspecification: In Appendix B.1, we consider an example where Condition 2 fails, but NPVAR still successfully recovers the true ordering. This experiment corroborates our claims that this condition can be relaxed to handle unequal residual variances. We also evaluated the performance of NPVAR on linear models as in (1), and in all cases it was able to recover the correct ordering.

6 Discussion

In this paper, we analyzed the sample complexity of a polynomial-time algorithm for estimating nonparametric causal models represented by a DAG. Notably, our analysis avoids many of the common assumptions made in the literature. Instead, we assume that the residual variances are equal, similar to assuming homoskedastic noise in a standard nonparametric regression model. Our experiments confirm that the algorithm, called NPVAR, is effective at learning identifiable causal models and outperforms many existing methods, including several recent state-of-the-art methods. Nonetheless, existing algorithms such as CAM are quite competitive and apply in settings where NPVAR does not.

We conclude by discussing some limitations and directions for future work. Although we have relaxed many of the common assumptions made in the literature, these assumptions have been replaced by an assumption on the residual variances that may not hold in practice. An interesting question is whether or not there exist provably polynomial-time algorithms for nonparametric models in under less restrictive assumptions. Furthermore, although the proposed algorithm is polynomial-time, the worst-case dependence on the dimension is of course limiting. This can likely be reduced by developing more efficient estimators of the residual variance that do not first estimate the mean function. This idea is common in the statistics literature, however, we are not aware of such estimators specifically for the residual variance (or other nonlinear functionals of ). Furthermore, our general approach can be fruitfully applied to study various parametric models that go beyond linear models, for which both computation and sample efficiency would be expected to improve. These are interesting directions for future work.

Acknowledgements

We thank the anonymous reviewers for valuable feedback, as well as Y. Samuel Wang and Edward H. Kennedy for helpful discussions. B.A. acknowledges the support of the NSF via IIS-1956330 and the Robert H. Topel Faculty Research Fund. Y.D.’s work has been partially supported by the NSF (CCF-1439156, CCF-1823032, CNS-1764039).

Appendix A Reduction to order search

The fact that DAG learning can be reduced to learning a topological sort is well-known. For example, this fact is the basis of exact algorithms for score-based learning based on dynamic programming Silander and Myllymaki (2006); Ott et al. (2004); Perrier et al. (2008); Singh and Moore (2005) as well as recent algorithms for linear models (Chen et al., 2019; Ghoshal and Honorio, 2017, 2018). See also (Shojaie and Michailidis, 2010). This fact has also been exploited in the overdispersion scoring model developed by Park and Raskutti (2017) as well as for nonlinear additive models (Bühlmann et al., 2014). In fact, more can be said: Any ordering defines a minimal I-map of via a simple iterative algorithm (see §3.4.1, Algorithm 3.2 in (Koller and Friedman, 2009)), and this minimal I-map is unique as long as satisfies the intersection property. This is guaranteed, for example, if has a positive density, but holds under weaker conditions (see (Peters, 2015) for necessary and sufficient conditions assuming has a density and (Dawid, 1980), Theorem 7.1, for the general case). This same algorithm can then be used to reconstruct the true DAG from the true ordering . As noted in Section 2, a further reduction can be obtained by considering the layer decomposition , from which all topological orders of can be deduced.

Once the ordering is known, existing nonlinear variable selection methods (Lafferty and Wasserman, 2008; Rosasco et al., 2013; Giordano et al., 2020; Miller and Hall, 2010; Bertin and Lecué, 2008; Comminges and Dalalyan, 2011) suffice to learn the parent sets and hence the graph . More specifically, given an order , to identify , let , where . The parent set of is given by the active variables in this conditional expectation, i.e. , where is the partial derivative of with respect to the th argument.

Appendix B Proof of Theorem 3.1

The key lemma is the following, which is easy to prove for additive noise models via (2), and which we show holds more generally in non-additive models:

Lemma B.1.

Let be an ancestral set in . If does not depend on , then for any ,

Proof of Lemma B.1.

Let , , and be the subgraph of formed by removing the nodes in the ancestral set . Then

There are two cases: (i) , and (ii) . In case (i), it follows that and hence . Since is conditionally independent of its nondescendants (e.g. ancestors) given its parents, it follows that and hence

In case (ii), it follows that

where again we used that is conditionally independent of its nondescendants (e.g. ancestors) given its parents to replace conditioning on with conditioning on .

Now suppose is in case (i) and is in case (ii). We wish to show that . Then

where we have invoked the assumption that does not depend on to conclude . This completes the proof. ∎

Proof of Theorem 3.1.

Let denote the set of sources in and note that Lemma B.1 implies that if , then for any . Thus, is identifiable. Let denote the subgraph of formed by removing the nodes in . Since , is an ancestral set in . After conditioning on , we can thus apply Lemma B.1 once again to identify the sources in , i.e. . By repeating this procedure, we can recursively identify , and hence any topological sort of . ∎

B.1 Generalization to unequal variances

In this appendix, we illustrate how Theorem 3.1 can be extended to the case where residual variances are different, i.e. is not independent of . Let be the descendant of node and . Note also that for any nodes and in the same layer of the graph, if we interchange the position of and in some true ordering consistent with the graph to get and , both and are correct orderings.

The following result is similar to existing results on unequal variances (Ghoshal and Honorio, 2018; Park and Kim, 2020; Park, 2020), with the exception that it applies to general DAGs without linearity, additivity, or independent noise.

Theorem B.2.

Suppose there exists an ordering such that for all and , the following conditions holds:

-

1.

If and are not in the same layer , then

(6) -

2.

If and are in the same layer , then either or (6) holds.

Then the order is identifiable.

In this condition not only do we need to control the descendants of a node, but also the other non-descendants that have not been identified.

Before proving this result, we illustrate it with an example.

Example 6.

Let’s consider a very simple case: A Markov chain with three nodes such that

Here we have unequal residual variances. We now check that this model satisfies the conditions in Theorem B.2. Let and note that the true ordering is . Starting from the first source node , we have

Then for the second source node ,

Thus the condition is satisfied.

If instead we have , the condition would be violated. It is easy to check that nothing changes for . For the second source node , things are different:

Thus, the order of and would be flipped for this model.

This example is easily confirmed in practice. We let range from 50 to 1000, and check if the estimated order is correct for the two models ( and ). We simulated this 50 times and report the averages in Figure 3.

Proof of Theorem B.2.

We first consider the case where every node in the same layer has a different residual variance, i.e. . We proceed with induction on the element of the ordering . Let ,

When and , must be a source node and we have for all ,

Thus the first node to be identified must be , as desired.

Now suppose the the first nodes in the ordering are correctly identified. The parent of node must have been identified in or it is a source node. Then we have for all ,

Then the node to be identified must be . The induction is completed.

Finally, if are in the same layer and , then this procedure may choose either or first. For example, if is chosen first, then will be incorporated into the same layer as . Since both these nodes are in the same layer, swapping and in any ordering still produces a valid ordering of the DAG. The proof is complete. ∎

Appendix C Proof of Theorem 4.1

The proof of Theorem 4.1 will be broken down into several steps. First, we derive an upper bound on the error of the plug-in estimator used in Algorithm 2 (Appendix C.1), and then we derive a uniform upper bound (Appendix C.2). Based on this upper bound, we prove Theorem 4.1 via Proposition C.4 (Appendix C.3). Appendix C.4 collects various technical lemmas that are used throughout.

C.1 A plug-in estimate

Let be a pair of random variables and be a data-dependent estimator of the conditional expectation . Assume we have split the sample into two groups, which we denote by and for clarity. Given these samples, define an estimator of by

| (7) |

Note here that depends on , and is independent of the second sample . We wish to bound the deviation .

Define the target and its plug-in estimator

Letting denote the true marginal distribution with respect to , we have and , where is the empirical distribution. We will also make use of more general targets for general functions .

Finally, as a matter of notation, we adopt the following convention: For a random variable , is the usual -norm of as a random variable, and for a (nonrandom) function , , where is a fixed base measure such as Lebesgue measure. In particular, . The difference of course lies in which measure integrals are taken with respect to. Moreover, we shall always explicitly specify with respect to which variables probabilities and expectations are taken, e.g. to disambiguate , , and .

We first prove the following result:

Proposition C.1.

Assume . Then

| (8) |

Proof.

Finally, we conclude the following:

Corollary C.2.

If , then

C.2 A uniform bound

For any and , define and the corresponding plug-in estimator from (7). By Proposition C.2, we have for ,

| (12) |

Thus we have the following result:

Proposition C.3.

Assume for all and :

-

1.

;

-

2.

;

-

3.

.

Then

| (13) |

C.3 Proof of Theorem 4.1

Recall and is the plug-in estimator defined by (7). Let be such that

| (14) |

For example, Proposition C.3 implies . Recall also , where is the smallest number such that for all .

Theorem 4.1 follows immediately from Proposition C.4 below, combined with Proposition C.3 to bound by .

Proposition C.4.

Define as in (14). Then for any threshold , we have

Proof.

Define . It follows that

Clearly, if then . By definition, we have , i.e. is the smallest “gap” between any source in a subgraph and the rest of the nodes.

Now let and consider :

Now for any ,

and for any and ,

Thus, with probability , we have

Now, as long as , we have , which implies that .

Finally, we have

Recall that . Then by a similar argument

Recalling , we have just proved that . A similar argument proves that . Since , the inequality implies

as desired. ∎

C.4 Technical lemmas

Lemma C.5.

Proof.

Write as a telescoping sum:

Lemma C.6.

Fix and and suppose . Then

The exponent satisfies and as , and the constant as . Thus, if , then

Proof.

Use log-convexity of -norms with . ∎

Lemma C.7.

Assume and . Then

| (15) |

If additionally , then

| (16) |

Proof.

Note that

Thus

This proves the first inequality. The second follows from taking in Lemma C.6 and using for . ∎

Appendix D Comparison to CAM algorithm

In this section, we justify the claim in Example 5 that there exist infinitely many nonlinear functions for which the CAM algorithm returns an incorrect graph under the model (4). To show this, we first construct a linear model on which CAM returns an incorrect ordering. Since CAM focuses on nonlinear models, we then show that this extends to any sufficiently small nonlinear perturbation of this model.

The linear model is

| (17) |

The graph is

which corresponds to the adjacency matrix

The IncEdge step of the CAM algorithm (§5.2, (Bühlmann et al., 2014)) is based on the following score function:

The algorithm starts with the empty DAG (i.e. for all ) and proceeds by greedily adding edges that decrease this score the most in each step. For example, in the first step, CAM searches for the pair that maximizes , and adds the edge to the estimated DAG. The second proceeds similarly until the estimated order is determined. Thus, it suffices to study the log-differences .

The following are straightforward to compute for the model (17):

Then

Now, if is chosen first, then the order is incorrect and we are done. Thus suppose CAM instead chooses , then in the next step it would update the score for to be

Therefore, for the next edge, CAM would choose , which also leads to the wrong order. Thus regardless of which edge is selected first, CAM will return the wrong order.

Thus, when CAM is applied to data generated from (17), it is guaranteed to return an incorrect ordering. Although the model (17) is identifiable, it does not satisfy the identifiability condition for CAM (Lemma 1, (Bühlmann et al., 2014)), namely that the structural equation model is a nonlinear additive model. Thus, we need to extend this example to an identifiable, nonlinear additive model.

Since this depends only on the scores , it suffices to construct a nonlinear model with similar scores. For this, we consider a simple nonlinear extension of (17): Let be an arbitrary bounded, nonlinear function, and define . The nonlinear model is given by

| (18) |

This model satisfies both our identifiability condition (Condition 2) and the identifiability condition for CAM (Lemma 1, (Bühlmann et al., 2014)).

We claim that for sufficiently small , the CAM algorithm will return the wrong ordering (see Proposition D.1 below for a formal statement). It follows that the scores corresponding to the model (18) can be made arbitrarily close to , which implies that CAM will return the wrong ordering for sufficiently small .

In Figure 4, we illustrate this empirically. In addition to the model (18), we also simulated from the following model, which shows that this phenomenon is not peculiar to the construction above:

| (19) |

In all eight examples, NPVAR perfectly recovers the ordering while CAM is guaranteed to return an inconsistent order for sufficiently large (i.e. once the scores are consistently estimated).

Proposition D.1.

Proof sketch of Proposition D.1.

The proof is consequence of the fact that the differences are continuous functions of . We sketch the proof for ; the remaining cases are similar.

We have

Let be the standard normal density. We only need to analyze and compare in two parts:

Since ,

Thus

so that

as claimed. ∎

Appendix E Experiment details

In this appendix we outline the details of our experiments, as well as additional simulations.

E.1 Experiment settings

For graphs, we used

-

•

Markov chain (MC). Graph where there is one edge from to for all nodes .

-

•

Erdős Rényi (ER). Random graphs whose edges are added independently with specified expected number of edges.

-

•

Scale-free networks (SF). Networks simulated according to the Barabasi-Albert model.

For models, we refer to the nonlinear functions in SEM. We specify the nonlinear functions in for all , where with variance

-

•

Additive sine model (SIN): where .

-

•

Additive Gaussian process (AGP): where is a draw from Gaussian process with RBF kernel with length-scale one.

-

•

Non-additive Gaussian process (NGP): is a draw from Gaussian process with RBF kernel with length-scale one.

-

•

Generalized Linear Model (GLM): This is a special case with non-additive model and non-additive noise. We specify the model by a parameter . Given , if is a source node, . For the Markov chain model we define:

or vice versa.

We generated graphs from each of the above models with edges each. These are denoted by the shorthand XX-YYY-, where XX denotes the graph type, YYY denotes the model, and indicates the graphs have edges on average. For example, ER-SIN-1 indicates an ER graph with (expected) edges under the additive sine model. SF-NGP-4 indicates an SF graph with (expected) edges under a non-additive Gaussian process. Note that there is no difference between additive or non-additive GP for Markov chains, so the results for MC-NGP- are omitted from the Figures in Appendix E.5.

Based on these models, we generated random datasets with samples. For each simulation run, we generated samples for graphs with nodes.

E.2 Implementation and baselines

Code implementing NPVAR can be found at https://github.com/MingGao97/NPVAR. We implemented NPVAR (Algorithm 2) with generalized additive models (GAMs) as the nonparametric estimator for . We used the gam function in the R package mgcv with P-splines bs=’ps’ and the default smoothing parameter sp=0.6. In all our implementations, including our own for Algorithm 2, we used default parameters in order to avoid skewing the results in favour of any particular algorithm as a result of hyperparameter tuning.

We compared our method with following approaches as baselines:

-

•

Regression with subsequent independence test (RESIT) identifies and disregards a sink node at each step via independence testing (Peters et al., 2014). The implementation is available at http://people.tuebingen.mpg.de/jpeters/onlineCodeANM.zip. Uses HSIC test for independence testing with alpha=0.05 and gam for nonparametric regression.

-

•

Causal additive models (CAM) estimates the topological order by greedy search over edges after a preliminary neighborhood selection (Bühlmann et al., 2014). The implementation is available at https://cran.r-project.org/src/contrib/Archive/CAM/. By default, CAM applies an extra pre-processing step called preliminary neighborhood search (PNS). Uses gam to compute the scores and for pruning, and mboost for preliminary neighborhood search. The R implementation of CAM does not use the default parameters for gam or mboost, and instead optimizes these parameters at runtime.

-

•

NOTEARS uses an algebraic characterization of DAGs for score-based structure learning of nonparametric models via partial derivatives (zheng2018notears; Zheng et al., 2020). The implementation is available at https://github.com/xunzheng/notears. We used neural networks for the nonlinearities with a single hidden layer of 10 neurons. The training parameters are lambda1=0.01, lambda2=0.01 and the threshold for adjacency matrix is w_threshold=0.3.

-

•

Greedy equivalence search with generalized scores (GSGES) uses generalized scores for greedy search without assuming model class (Huang et al., 2018). The implementation is available at https://github.com/Biwei-Huang/Generalized-Score-Functions-for-Causal-Discovery/. We used cross-validation parameters parameters.kfold = 10 and parameters.lambda = 0.01.

-

•

Equal variance (EqVar) algorithm identifies source node by minimizing conditional variance in linear SEM (Chen et al., 2019). The implementation is available at https://github.com/WY-Chen/EqVarDAG. The original EqVar algorithm estimates the error variances in a linear SEM via the covariance matrix , and then uses linear regression (e.g. best subset selection) to learn the structure of the DAG. We adapted this algorithm to the nonlinear setting in the obivous way by using GAMs (instead of subset selection) for variable selection. The use of the covariance matrix to estimate the order remains the same.

-

•

PC algorithm and greedy equivalence search (GES) are standard baselines for structure learning (Spirtes and Glymour, 1991; Chickering, 2003). The implementation is available from R package pcalg. For PC algorithm, use correlation matrix as sufficient statistic. Independence test is implemented by gaussCItest with significance level alpha=0.01. For GES, set the score to be GaussL0penObsScore with lambda as , which corresponds to the BIC score.

The experiments were conducted on an Intel E5-2680v4 2.4GHz CPU with 64 GB memory.

E.3 Metrics

We evaluated the performance of each algorithm with the following two metrics:

-

•

: The percentage of runs in which the algorithm gives a correct topological ordering, over runs. This metric is only sensible for algorithms that first estimate an ordering or return an adjacency matrix which does not contain undirected edges, including RESIT, CAM, EqVar and NOTEARS.

-

•

Structural Hamming distance (SHD): A standard benchmark in the structure learning literature that counts the total number of edge additions, deletions, and reversals needed to convert the estimated graph into the true graph.

Since there may be multiple topological orderings of a DAG, in our evaluations of order recovery, we check whether or not the order returned is any of the possible valid orderings. For PC, GES, and GSGES, they all return a CPDAG that may contain undirected edges, in which case we evaluate them favourably by assuming correct orientation for undirected edges whenever possible. Since CAM, RESIT, EqVar and NPVAR each first estimate a topological ordering then estimate a DAG. To estimate a DAG from an ordering, we apply the same pruning step to each algorithm for a fair comparison, which is adapted from (Bühlmann et al., 2014). Specifically, given an estimated ordering, we run a gam regression for each node on its ancestors, then determine the parents of the node by the -values with significance level cutoff=0.001 for estimating the DAG.

E.4 Timing

For completeness, runtime comparisons are reported in Tables 1 and 2. Algorithms based on linear models such as EqVar, PC, and GES are by far the fastest. These algorithms are also the most highly optimized. The slowest algorithms are GSGES and RESIT. Timing comparisons against CAM are are difficult to interpret since by default, CAM first performs preliminary neighbourhood search, which can easily be applied to any of the other algorithms tested. The dramatic difference with and without this pre-processing step by comparing Tables 1 and 2: For , with this extra step CAM takes just over 90 seconds, whereas without it, on the same data, it takes over 8.5 hours. For comparison, NPVAR takes around two minutes (i.e. without pre-processing or neighbourhood search).

| Algorithm | Runtime (s) | ||

|---|---|---|---|

| EqVar | 20 | 1000 | |

| PC | 20 | 1000 | |

| GES | 20 | 1000 | |

| NPVAR | 20 | 1000 | |

| NOTEARS | 20 | 1000 | |

| CAM (w/ PNS) | 20 | 1000 | |

| CAM (w/o PNS) | 20 | 1000 | |

| RESIT | 20 | 1000 | |

| GSGES | 20 | 1000 |

| Algorithm | Runtime (s) | ||

|---|---|---|---|

| EqVar | 40 | 1000 | |

| GES | 40 | 1000 | |

| PC | 40 | 1000 | |

| NOTEARS | 40 | 1000 | |

| CAM (w/ PNS) | 40 | 1000 | |

| NPVAR | 40 | 1000 | |

| CAM (w/o PNS) | 40 | 1000 |

E.5 Additional experiments

Here we collect the results of our additional experiments. Since the settings MC-AGP- and MC-NGP- are equivalent (i.e. since there is only parent for each node), the plots for MC-NGP- are omitted. Some algorithms might be skipped due to high computational cost or numerical issue.

-

•

Figure 5: SHD vs. with fixed, across all graphs and models tested.

-

•

Figure 6: SHD vs. with fixed, across all graphs and models tested.

-

•

Figure 7: SHD vs. with fixed, across all graphs and models tested.

-

•

Figure 8: SHD vs. with fixed, across all graphs and models tested with GSGES and RESIT skipped (due to high computational cost).

-

•

Figure 9: SHD vs. with fixed, across all graphs and models tested with GSGES, RESIT and NOTEARS skipped (due to high computational cost).

-

•

Figure 10: SHD vs. with fixed, across all graphs and models tested with GSGES, RESIT and NOTEARS skipped (due to high computational cost).

-

•

Figure 11: SHD vs. with fixed, across all graphs and models tested with GSGES, RESIT and NOTEARS skipped (due to high computational cost).

-

•

Figure 12: SHD vs. with ranging from to on GLM with CAM and RESIT skipped (due to numerical issues).

-

•

Figure 13: SHD vs. with fixed, across all graphs and models tested.

-

•

Figure 14: SHD vs. with fixed, across all graphs and models tested.

-

•

Figure 15: Ordering recovery vs. with fixed, across all graphs and models tested.

-

•

Figure 16: Ordering recovery vs. with fixed, across all graphs and models tested.

-

•

Figure 17: Ordering recovery vs. with fixed, across all graphs and models tested.

-

•

Figure 18: Ordering recovery vs. with fixed, across all graphs and models tested with RESIT skipped (due to high computational cost).

-

•

Figure 19: Ordering recovery vs. with fixed, across all graphs and models tested with RESIT and NOTEARS skipped (due to high computational cost).

-

•

Figure 20: Ordering recovery vs. with fixed, across all graphs and models tested with RESIT and NOTEARS skipped (due to high computational cost).

-

•

Figure 21: Ordering recovery vs. with fixed, across all graphs and models tested with RESIT and NOTEARS skipped (due to high computational cost).

References

- Aragam and Zhou (2015) B. Aragam and Q. Zhou. Concave Penalized Estimation of Sparse Gaussian Bayesian Networks. Journal of Machine Learning Research, 16(69):2273–2328, 2015.

- Aragam et al. (2015) B. Aragam, A. A. Amini, and Q. Zhou. Learning directed acyclic graphs with penalized neighbourhood regression. arXiv:1511.08963, 2015.

- Aragam et al. (2019) B. Aragam, A. Amini, and Q. Zhou. Globally optimal score-based learning of directed acyclic graphs in high-dimensions. In Advances in Neural Information Processing Systems 32, pages 4450–4462. 2019.

- Bertin and Lecué (2008) K. Bertin and G. Lecué. Selection of variables and dimension reduction in high-dimensional non-parametric regression. Electronic Journal of Statistics, 2:1224–1241, 2008.

- Bottou (2015) L. Bottou. Two big challenges in machine learning. ICML 2015 keynote lecture, 2015.

- Bühlmann (2018) P. Bühlmann. Invariance, causality and robustness. arXiv preprint arXiv:1812.08233, 2018.

- Bühlmann et al. (2014) P. Bühlmann, J. Peters, and J. Ernest. CAM: Causal additive models, high-dimensional order search and penalized regression. Annals of Statistics, 42(6):2526–2556, 2014.

- Chen et al. (2019) W. Chen, M. Drton, and Y. S. Wang. On causal discovery with an equal-variance assumption. Biometrika, 106(4):973–980, 09 2019. ISSN 0006-3444. doi: 10.1093/biomet/asz049.

- Chicharro et al. (2019) D. Chicharro, S. Panzeri, and I. Shpitser. Conditionally-additive-noise models for structure learning. arXiv preprint arXiv:1905.08360, 2019.

- Chickering (2003) D. M. Chickering. Optimal structure identification with greedy search. Journal of Machine Learning Research, 3:507–554, 2003.

- Comminges and Dalalyan (2011) L. Comminges and A. S. Dalalyan. Tight conditions for consistent variable selection in high dimensional nonparametric regression. In Proceedings of the 24th Annual Conference on Learning Theory, pages 187–206, 2011.

- Dawid (1980) A. P. Dawid. Conditional independence for statistical operations. Annals of Statistics, pages 598–617, 1980.

- Doksum et al. (1995) K. Doksum, A. Samarov, et al. Nonparametric estimation of global functionals and a measure of the explanatory power of covariates in regression. The Annals of Statistics, 23(5):1443–1473, 1995.

- Ghoshal and Honorio (2017) A. Ghoshal and J. Honorio. Learning identifiable gaussian bayesian networks in polynomial time and sample complexity. In Advances in Neural Information Processing Systems 30, pages 6457–6466. 2017.

- Ghoshal and Honorio (2018) A. Ghoshal and J. Honorio. Learning linear structural equation models in polynomial time and sample complexity. In A. Storkey and F. Perez-Cruz, editors, Proceedings of the Twenty-First International Conference on Artificial Intelligence and Statistics, volume 84 of Proceedings of Machine Learning Research, pages 1466–1475, Playa Blanca, Lanzarote, Canary Islands, 09–11 Apr 2018. PMLR.

- Giordano et al. (2020) F. Giordano, S. N. Lahiri, and M. L. Parrella. GRID: A variable selection and structure discovery method for high dimensional nonparametric regression. Annals of Statistics, to appear, 2020.

- Györfi et al. (2006) L. Györfi, M. Kohler, A. Krzyzak, and H. Walk. A distribution-free theory of nonparametric regression. Springer Science & Business Media, 2006.

- Hastie and Tibshirani (1990) T. J. Hastie and R. J. Tibshirani. Generalized additive models, volume 43. CRC press, 1990.

- Hoyer et al. (2009) P. O. Hoyer, D. Janzing, J. M. Mooij, J. Peters, and B. Schölkopf. Nonlinear causal discovery with additive noise models. In Advances in neural information processing systems, pages 689–696, 2009.

- Huang et al. (2018) B. Huang, K. Zhang, Y. Lin, B. Schölkopf, and C. Glymour. Generalized score functions for causal discovery. In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, pages 1551–1560. ACM, 2018.

- Kandasamy et al. (2015) K. Kandasamy, A. Krishnamurthy, B. Poczos, L. Wasserman, et al. Nonparametric von mises estimators for entropies, divergences and mutual informations. In Advances in Neural Information Processing Systems, pages 397–405, 2015.

- Kohler et al. (2009) M. Kohler, A. Krzyżak, and H. Walk. Optimal global rates of convergence for nonparametric regression with unbounded data. Journal of Statistical Planning and Inference, 139(4):1286–1296, 2009.

- Koller and Friedman (2009) D. Koller and N. Friedman. Probabilistic graphical models: principles and techniques. MIT press, 2009.

- Lachapelle et al. (2019) S. Lachapelle, P. Brouillard, T. Deleu, and S. Lacoste-Julien. Gradient-based neural dag learning. arXiv preprint arXiv:1906.02226, 2019.

- Lafferty and Wasserman (2008) J. Lafferty and L. Wasserman. Rodeo: sparse, greedy nonparametric regression. Annals of Statistics, 36(1):28–63, 2008.

- Lauritzen (1996) S. L. Lauritzen. Graphical models. Oxford University Press, 1996.

- Loh and Bühlmann (2014) P.-L. Loh and P. Bühlmann. High-dimensional learning of linear causal networks via inverse covariance estimation. Journal of Machine Learning Research, 15:3065–3105, 2014.

- Miller and Hall (2010) H. Miller and P. Hall. Local polynomial regression and variable selection. In Borrowing Strength: Theory Powering Applications–A Festschrift for Lawrence D. Brown, pages 216–233. Institute of Mathematical Statistics, 2010.

- Mitrovic et al. (2018) J. Mitrovic, D. Sejdinovic, and Y. W. Teh. Causal inference via kernel deviance measures. In Advances in Neural Information Processing Systems, pages 6986–6994, 2018.

- Monti et al. (2019) R. P. Monti, K. Zhang, and A. Hyvarinen. Causal discovery with general non-linear relationships using non-linear ica. arXiv preprint arXiv:1904.09096, 2019.

- Mooij et al. (2016) J. M. Mooij, J. Peters, D. Janzing, J. Zscheischler, and B. Schölkopf. Distinguishing cause from effect using observational data: methods and benchmarks. The Journal of Machine Learning Research, 17(1):1103–1204, 2016.

- Ng et al. (2019) I. Ng, Z. Fang, S. Zhu, and Z. Chen. Masked gradient-based causal structure learning. arXiv preprint arXiv:1910.08527, 2019.

- Nowzohour and Bühlmann (2016) C. Nowzohour and P. Bühlmann. Score-based causal learning in additive noise models. Statistics, 50(3):471–485, 2016.

- Ott et al. (2004) S. Ott, S. Imoto, and S. Miyano. Finding optimal models for small gene networks. In Pacific symposium on biocomputing, volume 9, pages 557–567. Citeseer, 2004.

- Park (2018) G. Park. Learning generalized hypergeometric distribution (ghd) dag models. arXiv preprint arXiv:1805.02848, 2018.

- Park (2020) G. Park. Identifiability of additive noise models using conditional variances. Journal of Machine Learning Research, 21(75):1–34, 2020.

- Park and Kim (2020) G. Park and Y. Kim. Identifiability of gaussian linear structural equation models with homogeneous and heterogeneous error variances. Journal of the Korean Statistical Society, pages 1–17, 2020.

- Park and Raskutti (2017) G. Park and G. Raskutti. Learning quadratic variance function (QVF) dag models via overdispersion scoring (ODS). The Journal of Machine Learning Research, 18(1):8300–8342, 2017.

- Pearl (2009) J. Pearl. Causality. Cambridge university press, 2009.

- Perrier et al. (2008) E. Perrier, S. Imoto, and S. Miyano. Finding optimal bayesian network given a super-structure. Journal of Machine Learning Research, 9(Oct):2251–2286, 2008.

- Peters (2015) J. Peters. On the intersection property of conditional independence and its application to causal discovery. Journal of Causal Inference, 3(1):97–108, 2015.

- Peters and Bühlmann (2013) J. Peters and P. Bühlmann. Identifiability of Gaussian structural equation models with equal error variances. Biometrika, 101(1):219–228, 2013.

- Peters et al. (2014) J. Peters, J. M. Mooij, D. Janzing, and B. Schölkopf. Causal discovery with continuous additive noise models. Journal of Machine Learning Research, 15(1):2009–2053, 2014.

- Peters et al. (2017) J. Peters, D. Janzing, and B. Schölkopf. Elements of causal inference: foundations and learning algorithms. MIT press, 2017.

- Robins et al. (2008) J. Robins, L. Li, E. Tchetgen, A. van der Vaart, et al. Higher order influence functions and minimax estimation of nonlinear functionals. In Probability and statistics: essays in honor of David A. Freedman, pages 335–421. Institute of Mathematical Statistics, 2008.

- Rosasco et al. (2013) L. Rosasco, S. Villa, S. Mosci, M. Santoro, and A. Verri. Nonparametric sparsity and regularization. The Journal of Machine Learning Research, 14(1):1665–1714, 2013.

- Rothenhäusler et al. (2018) D. Rothenhäusler, J. Ernest, P. Bühlmann, et al. Causal inference in partially linear structural equation models. The Annals of Statistics, 46(6A):2904–2938, 2018.

- Schölkopf (2019) B. Schölkopf. Causality for machine learning. arXiv preprint arXiv:1911.10500, 2019.

- Shojaie and Michailidis (2010) A. Shojaie and G. Michailidis. Penalized likelihood methods for estimation of sparse high-dimensional directed acyclic graphs. Biometrika, 97(3):519–538, 2010.

- Silander and Myllymaki (2006) T. Silander and P. Myllymaki. A simple approach for finding the globally optimal bayesian network structure. In Proceedings of the 22nd Conference on Uncertainty in Artificial Intelligence, 2006.

- Singh and Moore (2005) A. P. Singh and A. W. Moore. Finding optimal bayesian networks by dynamic programming. 2005.

- Spirtes and Glymour (1991) P. Spirtes and C. Glymour. An algorithm for fast recovery of sparse causal graphs. Social Science Computer Review, 9(1):62–72, 1991.

- Spirtes et al. (2000) P. Spirtes, C. Glymour, and R. Scheines. Causation, prediction, and search, volume 81. The MIT Press, 2000.

- Tagasovska et al. (2018) N. Tagasovska, T. Vatter, and V. Chavez-Demoulin. Nonparametric quantile-based causal discovery. arXiv preprint arXiv:1801.10579, 2018.

- Tsybakov (2009) A. Tsybakov. Introduction to nonparametric estimation. Springer Series in Statistics, New York, page 214, 2009. cited By 1.

- van de Geer and Bühlmann (2013) S. van de Geer and P. Bühlmann. -penalized maximum likelihood for sparse directed acyclic graphs. Annals of Statistics, 41(2):536–567, 2013.

- Wang and Drton (2020) Y. S. Wang and M. Drton. High-dimensional causal discovery under non-gaussianity. Biometrika, 107(1):41–59, 2020.

- Wu and Fukumizu (2020) P. Wu and K. Fukumizu. Causal mosaic: Cause-effect inference via nonlinear ica and ensemble method. arXiv preprint arXiv:2001.01894, 2020.

- Yu et al. (2019) Y. Yu, J. Chen, T. Gao, and M. Yu. Dag-gnn: Dag structure learning with graph neural networks. arXiv preprint arXiv:1904.10098, 2019.

- Zhang and Hyvärinen (2009) K. Zhang and A. Hyvärinen. On the identifiability of the post-nonlinear causal model. In Proceedings of the twenty-fifth conference on uncertainty in artificial intelligence, pages 647–655. AUAI Press, 2009.

- Zheng et al. (2020) X. Zheng, C. Dan, B. Aragam, P. Ravikumar, and E. Xing. Learning Sparse Nonparametric DAGs. In Proceedings of the Twenty Third International Conference on Artificial Intelligence and Statistics, volume 108 of Proceedings of Machine Learning Research, pages 3414–3425, 2020.

- Zhu and Chen (2019) S. Zhu and Z. Chen. Causal discovery with reinforcement learning. arXiv preprint arXiv:1906.04477, 2019.