A penalty scheme to solve constrained non-convex optimization problems in

Abstract. We investigate non-convex optimization problems in with two-sided pointwise inequality constraints. We propose a regularization and penalization method to numerically solve the problem. Under certain conditions, weak limit points of iterates are stationary for the original problem. In addition, we prove optimality conditions for the original problem that contain Lagrange multipliers to the inequality constraints. Numerical experiments confirm the theoretical findings.

Keywords. Bounded variation, inequality constraints, optimality conditions, Lagrange multipliers, regularization scheme.

MSC 2020 classification. 49K30, 49M05, 65K10.

1 Introduction

Let be an open bounded set with Lipschitz boundary. We consider the possibly non-convex optimization problem of the form

| (1.1) |

Mostly, we will work with

| (1.2) |

where . The function space setting is , i.e., the space of functions of bounded variation that consists of -functions with weak derivative in the Banach space of real Borel measures on . The term denotes the -seminorm, which is equal to the total variation of the measure , i.e., . The functional is assumed to be smooth and can be non-convex. In particular, we have in mind to choose as the reduced smooth part of an optimal control problem, incorporating the state equation. We will give more details on the assumptions on the optimal control problem in Section 2. Problem (1.1) is solvable, and existence of solutions to (1.1) can be shown by the direct method of the calculus of variations, see Theorem 2.4.

The purpose of the paper is two-fold:

- (A)

- (B)

Both of these goals rely on the same approximation method. The algorithmic scheme consists of the following two parts. First, we approximate the non-differentiable total variation term by a smooth approximation and apply a continuation strategy. Second, we address the box constraints with a classical penalty method. Of course, solutions to (1.1) and appearing subproblems are not unique due to the lack of convexity, which makes the analysis challenging. In general, only stationary points of these non-convex problems can be computed. Under suitable assumptions, limit points of the generated sequences of stationary points of the subproblems and of the associated multipliers satisfy a certain necessary optimality condition for the original problem.

In addition, we apply this regularization and penalization approach to local minima of the original problem. This allows us to prove optimality conditions that contains Lagrange multipliers to the inequality constraints, see Theorem 4.3. Such a result is not available in the literature. Admittedly, we have to make the assumption that the constraints and are constant functions.

Regularization by total variation is nowadays a standard tool in image analysis. Following the seminal contribution [17], much research was devoted to study such kind of optimization problems. We refer to [9, 10] for a general introduction and an overview on total variation in image analysis. Optimal control problems in -spaces were studied for instance in [5, 8, 7]. These control problems are subject to semilinear equations, which results in non-convex control problems. Finite element discretization and convergence analysis for optimization problems in were investigated for instance in [5, 3, 11]. An extensive comparisons of algorithms to solve (1.1) with the choice can be found in [4], see also [16]. In [18], the one-sided obstacle problem in is analyzed under low regularity requirements on the obstacle. Interestingly, we could not find any results, where the existence of Lagrange multipliers to the inequality constraints in is addressed.

One natural idea to regularize (1.1) is to replace the non-differentiable -seminorm by a smooth approximation. This was introduced in the image processing setting in [1] with the functional

which is widely used in the literature. Our regularization method is similar, with the exception that our functional guarantees existence of solutions in . A similar scheme was employed in the recent work [12], where a path-following inexact Newton method for a convex PDE-constrained optimal control problem in is studied.

Let us comment on the structure of this work. In Section 2 we give a brief introduction to the function space and recall some useful facts. Furthermore, we prove existence of solutions and a necessary first-order optimality condition for the optimization problem (1.1). In Section 3, we introduce the regularization scheme for (1.1) and show that limit points of the suggested smoothing and penalty method satisfy a stationary condition for the original problem, see Theorem 3.16. In Section 4, we apply the regularization scheme to derive an optimality condition for locally optimal solutions of (1.1), see Theorem 4.3. These conditions are stronger than the conditions proven in Section 2, since they contain Lagrange multipliers to the inequality constraints. Finally, we provide numerical results and details regarding the implementation of the method in Section 5.

2 Preliminaries and Background

In this section we want to provide some definitions and results regarding the mathematical background of the paper. For details we refer also to, e.g., [2, 11, 8, 5]. First, let us recall that is the dual space of . The noram of a measure is given by

The space of functions of bounded variation is a non-reflexive Banach space when endowed with the norm

where we define the total variation of by

| (2.1) |

Here, denotes the Euclidean norm on . In the definition (2.1), is a linear and continuous map. Functions in are not necessarily continuous, as an example we mention the characteristic functions of a set with sufficient regularity. If , then and

The Banach space and have some useful properties, which are recalled in the following.

Proposition 2.1.

Let be an open bounded set with Lipschitz boundary.

-

1.

The space is continuously embedded in for , while the embedding is compact for .

-

2.

Let be bounded in with in . Then

holds.

-

3.

For : , there is a sequence such that

(2.2) that is, is dense in with respect to the intermediate convergence (2.2).

Proof.

Notation.

Frequently, we will use the following standard notations from convex analysis. The indicator function of a convex set is denoted by . The normal cone of a convex set at a point is denoted by , and denotes the convex subdifferential of a convex function . It is well known that holds for convex sets . Moreover, we introduce the notation

which will be used thoughout the paper. In addition, we will denote the positive and negative part of by and .

2.1 Standing assumption

In order to prove existence of solutions of (1.1) and to analyze the regularization scheme later on, we need some assumptions on the ingredients of the optimal control problem (1.1). Let us start with collecting those in the following paragraph.

Assumption A.

-

(A1)

is bounded from below and weakly lower semicontinuous.

-

(A2)

is weakly coercive in , i.e., for all sequences with and it follows .

-

(A3)

is a convex and closed subset of with .

-

(A4)

is continuously Fréchet differentiable.

-

(A5)

with and .

Here, assumptions (A1)–(A3) will be used to prove existence of solutions of (1.1). Condition (A4) is necessary to derive necessary optimality conditions. The assumption (A5) will be used in Section 3 to prove boundedness of Lagrange multipliers associated to the inequality constraints in .

Example 2.2.

We consider

where is defined as the unique weak solution to the elliptic partial differential equation

Let us assume that is an uniformly elliptic operator with bounded coefficients and are Carathéodory functions, continuously differentiable with respect to such that derivatives are bounded on bounded sets and that is monotonically increasing with respect to . Then it is well known that is covered by Assumption A, see for instance [6].

Example 2.3.

Another example is given by the functional

with as the solution of the parabolic equation

Assuming again an uniformly elliptic operator and measurable functions of class w.r.t. with bounded derivatives, such that is monotonically increasing, the functional satisfies Assumption A. We refer to [20, Chapter 5], [7].

2.2 Existence of solutions of (1.1)

Next, we show that under suitable assumptions on the function , Problem (1.1) has at least one solution in .

Proof.

The proof is standard. We recall it by following the lines of the proof of [5, Theorem 2.1]. Consider a minimizing sequence . Since is bounded from below by (A1), is bounded. By (A2), is bounded in , and hence is bounded in . By Proposition 2.1, there is a subsequence and with in and in . Due to (A3), is weakly closed in , and follows. By weak lower semicontinuity (A1) and Proposition 2.1, we obtain

therefore, is a solution. ∎

2.3 Necessary optimality conditions

Next, we provide a first-order necessary optimality condition for (1.1). A similar result with proof can be found in [5, Theorem 2.3].

Theorem 2.5.

3 Regularization scheme

In this section, we introduce the regularization scheme for (1.1). We will use a smoothing of the -norm as well as a penalization of the constraints . In order to approximate the -norm by smooth functions, we introduce the following family of smooth functions with . For we define by

| (3.1) |

where denotes the Euclidian norm in . As a first direct consequence of the above definition we have:

Lemma 3.1.

For , is a family of twice continuously differentiable functions from to with the following properties:

-

(1)

is convex.

-

(2)

and for all .

-

(3)

For all , is monotonically increasing and as

-

(4)

is coercive, i.e., as .

-

(5)

for all .

Proof.

Properties (1)–(4) are immediate consequences of the definition. (5) can be proven as follows:

∎

The -minimization problem (1.1) is then approximated by

| () |

The choice (3.1) of guarantees the existence of solutions of this problem in . Note that the standard approximation does Lemma 3.1((5)), which ensures that is weakly coercive in . The existence of solutions (and the fact that is a measurable function) is important for the subsequent analysis.

Due to the presence of the inequality constraints, Problem () is difficult to solve. Following existing approaches in the literature, see, e.g., [14, 19], we will use a smooth penalization of these constraints. We define the smooth function by

| (3.2) |

where . Due to the inequalities

| (3.3) |

it can be considered as an approximation of . In addition, one verifies for all and .

Let us introduce

| (3.4) |

Using this function, we define the penalized problem by

| () |

If is a local solution of () then it satisfies

| (3.5) |

where we used the abbreviations

| (3.6) |

Existence of solutions of () and necessity of (3.5) for local optimality can be proven by standard arguments.

We will investigate the behavior of a penalty and smoothing method to solve (1.1). Since () is a non-convex problem, it is unrealistic to assume that one can compute global solutions. Instead, the iterates will be chosen as stationary points of (). Hence, we are interested in the behavior of stationary points and corresponding multipliers , as and .

The resulting method then reads as follows.

Algorithm 3.3.

Choose and .

- 1.

-

2.

Choose

-

3.

If a suitable stopping criterion is satisfied: Stop. Else set and go to Step 1.

In view of Corollary 3.2, the algorithm is well-defined. In the following, we assume that the algorithm generates an infinite sequence of iterates . Here, we are interested to prove that weak limit points are stationary, i.e., they satisfy the optimality condition (2.3) for (1.1). Throughout the subsequent analysis, we assume that assumptions (A1)–(A5) are satisfied

3.1 A-priori bounds

In order to investigate the sequences of iterates and its (weak) limit points, it is reasonable to derive bounds of iterates , i.e., solutions of (3.5), first. To this end, we will study solutions of the nonlinear variational equation

| (3.8) |

for , , and . The functions , are defined in (3.6) as

Let us start with the following lemma. It shows that the supports of the multipliers , do not overlap if the penalty parameter is large enough.

Lemma 3.4.

Let be a solution of (3.8) to . Suppose . Then it holds

Proof.

Let such that . Then it holds and . Consequently, follows. ∎

Under the assumptions that the bounds and are constant, we can prove the following series of helpful results regarding the boundedness of iterates. Let us start with the boundedness of the multiplier sequences. This result is inspired by related results for the -obstacle problem, see, e.g., [13, Lemma 5.1], see also [19, Lemma 2.3]. The results for -obstacle problems require the assumption . It is not clear to us, how the following proof can be generalized to non-constant obstacles .

Lemma 3.5.

Let be a solution of (3.8) to . Suppose . Then it holds

Proof.

To show boundedness of the multipliers in , we test the optimality condition (3.8) with and , respectively. We get for

Due to Lemma 3.4, the last term is zero. It remains to analyze the first term. Here, we find

where we used Lemma 3.1((5)) and . This proves . Similarly, can be proven. ∎

Corollary 3.6.

Let be a solution of (3.8) to . Suppose . Then it holds

Proof.

Due to the definition of , we have for all . This implies

and the claim follows by Lemma 3.5 above. ∎

Corollary 3.7.

Let be a solution of (3.8) to . Suppose . Then it holds

Proof.

Lemma 3.8.

Let be a solution of (3.8) to . Suppose . Then it holds

Proof.

We test the optimality condition (3.5) with and use Lemma 3.1((5)) to get the estimate

The claim follows with the estimate of Lemma 3.5. ∎

3.2 Preliminary convergence results

As next step, we derive convergence properties of solutions of

| (3.10) |

where

| (3.11) |

From the results of the previous section, we immediately obtain that is bounded in , and and are bounded in . Moreover, strong limit points of satisfy the inequality constraints in (1.2) due to Corollary 3.6. In a first result, we need to lift the strong convergence of (a subsequence of) in , which is a consequence of the compact embedding , , to strong convergence in .

Proof.

We are now in the position to prove existence of suitably converging subsequence under assumption (3.11).

Theorem 3.10.

Proof.

By Lemmas 3.5, Corollary 3.7, and Lemma 3.8, is bounded in , and and are bounded in . Then we can choose as a subsequence that converges strongly in by Proposition 2.1. Due to Lemma 3.9 this convergence is strong in . Now, extracting additional weakly converging subsequences from and finishes the proof. ∎

The next result shows that limit points of satisfy the usual complementarity conditions.

Proof.

We will now show that weak limit points of solutions to (3.10) satisfy a stationary condition similar to the one for the original problem (1.1). We will utilize this result twice: first we apply it to iterates of Algorithm 3.3, second we will use it to prove a optimality condition for (1.1) that has a different structure than that of Theorem 2.5.

Theorem 3.12.

Proof.

The system (3.12) is a consequence of Corollary 3.6, Lemma 3.11, and the non-negativity of . In order to pass to the limit in (3.10), we need to analyze the term involving . Here, we argue similar as in the proof of [8, Theorem 10]. Let . Then, we find

Let us define by

Clearly, the sequence is bounded in , and there exists a subsequence converging weak-star in . W.l.o.g. we can assume in . Since is bounded in , we obtain

Then we can pass to the limit in (3.10) to find

which is satisfied for all . This implies

Let now . By convexity of , we have

| (3.13) |

Here, we find and

cf., Proposition 2.1. Here, we used that is bounded in due to Lemma 3.8 and (3.11). Using the equation (3.10), we find

Then we can pass to the limit in (3.13) to obtain

for all . Due to the density result of Proposition 2.1 with respect to intermediate convergence (2.2), the inequality holds for all . Consequently, . Using the chain rule as in Theorem 2.5, we find

which proves the existence of with the claimed properties. ∎

3.3 Convergence of iterates

We are now going to apply the results of the previous two sections to the iterates of Algorithm 3.3. In terms of (3.10), we have to set . As can be seen from, e.g., Theorem 3.12, the boundedness of in will be crucial for any convergence analysis. Unfortunately, this boundedness can only be guaranteed in exceptional cases. Here, we prove it under the assumption that is globally Lipschitz continuous. In Section 3.4 we show that convexity of or global optimality of is sufficient.

Lemma 3.13.

Let be globally Lipschitz continuous with modulus . Assume . Then and are bounded in .

Proof.

Due to the Lipschitz continuity of , we have

By Corollary 3.7, we find for sufficiently large

If is such that , then which proves the claim. ∎

The next observation is a simple consequence of previous results and shows the close relation between boundedness of in and and the boundedness of in .

Lemma 3.14.

Assume . Then the following statements are equivalent:

-

(1)

is bounded in ,

-

(2)

is bounded in and ,

-

(3)

is pre-compact in .

Proof.

Similarly to Theorem 3.10, we have the following result on the existence of converging subsequences.

Theorem 3.15.

Suppose and . Let solve (3.5). Assume that is bounded in . Then there is a subsequence such that , , and in .

Proof.

This result can be proven with similar arguments as Theorem 3.10. ∎

We finally arrive at the following convergence result for iterates of Algorithm 3.3 which is a consequence of Theorem 3.12.

Theorem 3.16.

Proof.

By assumption, we have . The proof is now a direct consequence of Theorem 3.12. ∎

3.4 Global solutions

The next theorem shows that global optimality is sufficient to obtain boundedness of iterates. We note that if is convex, solutions of (3.5) are global solutions to the penalized problem ().

Theorem 3.17.

Proof.

We introduce the notation

Set . Then for large enough. Let be a global minimizer of . This implies

Since is bounded from below, there is such that

This proves that is bounded in by Lemma 3.1((5)). By construction, we have

This implies

and the boundedness of in is now a consequence of identity (3.9). ∎

4 Optimality condition by regularization

Let us assume is locally optimal to (1.1). In this section, we want to show that there is a sequence of solutions of certain regularized problems converging to . This will allow us to prove optimality conditions for that are similar to the systems obtained in Theorems 3.12 and 3.16. Again, we work under the assumptions (A1)–(A5).

The solution satisfies the necessary optimality condition

| (4.1) |

see also Theorem 2.5. It is easy to see that (4.1) implies that is the unique solution to the linearized, strictly convex problem

| (4.2) |

In fact, let be the solution of (4.2). Then we have the following optimality condition

which is satisfied by . Let us approximate (4.2) by the family of unconstrained convex problems

| (4.3) |

The optimality condition for the unique solution to (4.3) is given by

| (4.4) |

for all .

Corollary 4.1.

Suppose and . Suppose is the corresponding sequence of global solutions to the penalized problems (4.3). Then is bounded in .

Proof.

The claim follows by a similar argumentation as in the proof of Theorem 3.17. ∎

Lemma 4.2.

Suppose and . Let be the corresponding sequence of global solutions to the penalized problems (4.3). Then in , and the sequences and are bounded in .

Proof.

Due to Corollary 4.1, is bounded in . Suppose for the moment in and in . By Lemma 3.9 applied to and , we obtain in . By Theorem 3.10, the corresponding sequences and are bounded in . Suppose and in . By Theorem 3.12, we have

Due to the complementarity conditions (3.12) of Theorem 3.12, we get

Since , we have by the monotonicity of the subdifferential

which implies .

With similar arguments, we can show that every subsequence of contains another subsequence that converges in to . Hence, the convergence of the whole sequence follows. ∎

This convergence result enables us to prove that satisfies an optimality condition similar to those of Theorems 3.12 and 3.16.

Proof.

Clearly, the optimality conditions of Theorem 4.3 are stronger than those of Theorem 2.5. However, the proofs above only work on the strong assumptions that the bounds and are constant functions. Here, it is not clear to us, under which assumptions the above techniques carry over to non-constant and .

5 Numerical tests

In this section, the suggested algorithm is tested with selected examples. To this end, we implemented Algorithm 3.3 in python using FEnicCS, [15]. Our examples are carried out in the optimal control setting. In particular, is given by the reduced tracking type functional

where is the weak solution operator of some elliptic partial differential equation (PDE) specified below. To solve the partial differential equation, the domain is divided into a regular triangular mesh, and the PDE as well as the control are discretized with piecewise linear finite elements. If not mentioned otherwise, the computations are done on a 128 by 128 grid, which results in a mesh size of .

Let us define by

with as defined in (3.4). It is given in our tests by the specific choice

let us recall that we use the following function to approximate the -seminorm:

Concerning the continuation strategy for the parameters and in Algorithm 3.3, we set in the initialization and decrease by factor after each iteration. The penalty parameter is increased by factor after every iteration and is initialized with . Algorithm 3.3 is stopped if the following termination criterion is satisfied:

| (5.1) |

where the residuals are given by

and

with as in the proof of Theorem 3.12. Here, the residuum measures the violation of the box-constraints and of the complementarity condition in Theorem 3.16. Let us discuss the choice of the residuum . It can be interpreted as a residual in the subgradient inequality. Since , we have for . Hence, . This implies

Hence, can be interpreted is an element of the -subdifferential to the error level .

5.1 Globalized Newton Method for the subproblems

To solve the variational subproblems of form (), i.e.,

we use a globalized Newton method. Let us recall the notation

and introduce

and

The Newton method with a line search strategy is given as follows:

Algorithm 5.1 (Global Newton method).

Set , , , , . Choose .

-

1.

Compute the search direction by solving

(5.2) where

and

If : set .

-

2.

(line search) Find such that

-

3.

Set .

-

4.

If a suitable stopping criteria is satisfied: Stop.

-

5.

Set and go to step 1.

Let us provide details regarding the implementation of Algorithm (5.1). In the initialization of Algorithm 5.1, we set , , and . In addition, we employed the following termination criterion:

That is, if there is no sufficient change between consecutive iterates, we assume that the method resulted in a stationary (minimal) point of the subproblem.

5.2 Example 1: linear elliptic PDE

First, we consider the optimal control problem

| (5.3) |

subject to

and the box constraints

Note that (5.3) as well as the subproblem

| (5.4) | ||||

are convex and uniquely solvable. Let us introduce the adjoint state as the solution of the partial differential equation

Applying Algorithm 5.1 to the reduced functional of problem (5.2) results in the following system of equations that has to be solved in each Newton step:

where is given by

The equation is the optimality system to problem (5.2). The derivative of in direction is given by

The solution of the Newton step (5.2) is then given by .

We adapt the example problem data from [11]. Here, and

In the computations we set and . This example (without additional box constraints) was also used in [12].

In Table 1, we see the convergence behavior of iterates. Here, the errors

are presented, where is the final iterate after the algorithm terminated at step and . Furthermore, we observe and as and .

| 12 | 1.11 | |||

|---|---|---|---|---|

| 13 | 0.80 | |||

| 14 | 0.56 | |||

| 15 | 0.34 | |||

| 16 | 0.17 | |||

| 17 | 0.07 | |||

| 18 | 0.02 | |||

| 19 | — | — |

Figure 1 shows the optimal control. The result is in agreement with the results obtained in [12]. In Figure 2 the computed optimal controls are depicted for the unconstrained case (left), i.e., constraints are inactive during the computation process. The right plot shows the optimal control , when lower and upper bound are set to and .

5.3 Example 2: Semilinear elliptic optimal control problem

Let us now consider the following problem with semilinear state equation. That is, we study the minimization problem

where is given by the standard tracking type functional , and is the weak solution of the semilinear elliptic state equation

The adjoint state is given now as solution to the equation

For this example, system of equations (5.2) for the state , the adjoint state and the control variable is given by

with

The derivative in direction is given with

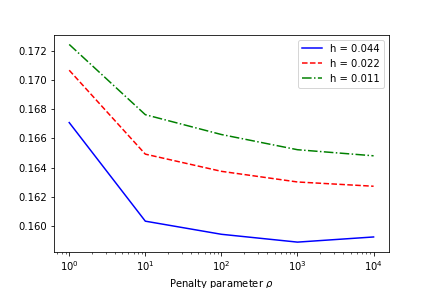

The data is given as in Example 1. The optimal control is depicted in Figure 3. It is close to the solution of Example 1. Let us consider the performance of the algorithm on different levels of discretization for this example. Table 2 shows the number of outer iterations (it), as well as the total number of newton iterations (newt) needed until the stopping criterion (5.1) holds for increasing meshsizes. The last column shows the final objective value . The residuals and behaved as in Example 1.

| it | newt | ||||

|---|---|---|---|---|---|

| 0.088 | 16 | 182 | 0.0596 | ||

| 0.044 | 19 | 201 | 0.0685 | ||

| 0.022 | 19 | 314 | 0.0737 | ||

| 0.011 | 19 | 486 | 0.0767 |

5.4 Experiments with non-constant constraints

So far our analysis and numerical experiments are restricted to the case where are constant functions. This assumption was needed to show the boundedness of multipliers in in Lemma 3.5, which is crucial for the final result Theorem 3.16. For this section we tested Algorithm 3.3 also for non-constant functions .

Here, we consider again the linear optimal control problem and data from Example 1 with different choices for :

| (5.5) | ||||

| (5.6) |

In Figure 4 the behavior of the quantity is plotted along the iterations, i.e., for increasing , for different discretization levels. In Figure 5, the respective solution plots are shown. While the multipliers seem to be bounded for one example, their norm grows with (and thus with ) for the other example. Clearly, more research has to be done to develop necessary and sufficient conditions for the boundedness of the multipliers.

Acknowledgement

The authors are grateful to Gerd Wachsmuth for an inspiring discussion that led to an improvement of Theorem 3.12 and subsequent results.

References

- [1] R. Acar and C. R. Vogel. Analysis of bounded variation penalty methods for ill-posed problems. Inverse Problems, 10(6):1217–1229, 1994.

- [2] H. Attouch, G. Buttazzo, and G. Michaille. Variational analysis in Sobolev and BV spaces, volume 6 of MPS/SIAM Series on Optimization. Society for Industrial and Applied Mathematics (SIAM), Philadelphia, PA; Mathematical Programming Society (MPS), Philadelphia, PA, 2006. Applications to PDEs and optimization.

- [3] S. Bartels. Total variation minimization with finite elements: convergence and iterative solution. SIAM J. Numer. Anal., 50(3):1162–1180, 2012.

- [4] S. Bartels and M. Milicevic. Iterative finite element solution of a constrained total variation regularized model problem. Discrete Contin. Dyn. Syst. Ser. S, 10(6):1207–1232, 2017.

- [5] E. Casas, K. Kunisch, and C. Pola. Regularization by functions of bounded variation and applications to image enhancement. Appl. Math. Optim., 40(2):229–257, 1999.

- [6] E. Casas, R. Herzog, and G. Wachsmuth. Optimality conditions and error analysis of semilinear elliptic control problems with cost functional. SIAM J. Optim., 22(3):795–820, 2012.

- [7] E. Casas, F. Kruse, and K. Kunisch. Optimal control of semilinear parabolic equations by BV-functions. SIAM J. Control Optim., 55(3):1752–1788, 2017.

- [8] E. Casas and K. Kunisch. Analysis of optimal control problems of semilinear elliptic equations by BV-functions. Set-Valued Var. Anal., 27(2):355–379, 2019.

- [9] A. Chambolle, V. Caselles, D. Cremers, M. Novaga, and T. Pock. An introduction to total variation for image analysis. In Theoretical foundations and numerical methods for sparse recovery, volume 9 of Radon Ser. Comput. Appl. Math., pages 263–340. Walter de Gruyter, Berlin, 2010.

- [10] T. F. Chan, S. Esedoglu, F. E. Park, and A. M. Yip. Total variation image restoration: Overview and recent developments. In N. Paragios, Y. Chen, and O. D. Faugeras, editors, Handbook of Mathematical Models in Computer Vision, pages 17–31. Springer, 2006.

- [11] C. Clason and K. Kunisch. A duality-based approach to elliptic control problems in non-reflexive Banach spaces. ESAIM Control Optim. Calc. Var., 17(1):243–266, 2011.

- [12] D. Hafemeyer and F. Mannel. A path-following inexact Newton method for optimal control in BV, 2020.

- [13] D. Kinderlehrer and G. Stampacchia. An introduction to variational inequalities and their applications, volume 88 of Pure and Applied Mathematics. Academic Press, Inc. [Harcourt Brace Jovanovich, Publishers], New York-London, 1980.

- [14] K. Kunisch and D. Wachsmuth. Sufficient optimality conditions and semi-smooth Newton methods for optimal control of stationary variational inequalities. ESAIM Control Optim. Calc. Var., 18(2):520–547, 2012.

- [15] H. P. Langtangen and A. Logg. Solving PDEs in Python, volume 3 of Simula SpringerBriefs on Computing. Springer, Cham, 2016. The FEniCS tutorial I.

- [16] M. Milicevic. Finite Element Discretization and Iterative Solution of Total Variation Regularized Minimization Problems and Application to the Simulation of Rate-Independent Damage Evolutions. PhD thesis, Universität Freiburg, 2018.

- [17] L. I. Rudin, S. Osher, and E. Fatemi. Nonlinear total variation based noise removal algorithms. Phys. D, 60(1-4):259–268, 1992. Experimental mathematics: computational issues in nonlinear science (Los Alamos, NM, 1991).

- [18] C. Scheven and T. Schmidt. On the dual formulation of obstacle problems for the total variation and the area functional. Ann. Inst. H. Poincaré Anal. Non Linéaire, 35(5):1175–1207, 2018.

- [19] A. Schiela and D. Wachsmuth. Convergence analysis of smoothing methods for optimal control of stationary variational inequalities with control constraints. ESAIM Math. Model. Numer. Anal., 47(3):771–787, 2013.

- [20] F. Tröltzsch. Optimal control of partial differential equations, volume 112 of Graduate Studies in Mathematics. American Mathematical Society, Providence, RI, 2010. Theory, methods and applications, Translated from the 2005 German original by Jürgen Sprekels.