A Parameter Setting Heuristic for the Quantum Alternating Operator Ansatz

Abstract

Parameterized quantum circuits are widely studied approaches to tackling challenging optimization problems. A prominent example is the Quantum Alternating Operator Ansatz (QAOA), a generalized approach that builds on the alternating structure of the Quantum Approximate Optimization Algorithm. Finding high-quality parameters efficiently for QAOA remains a major challenge in practice. In this work, we introduce a classical strategy for parameter setting, suitable for cases in which the number of distinct cost values grows only polynomially with the problem size, such as is common for constraint satisfaction problems. The crux of our strategy is that we replace the cost function expectation value step of QAOA with a classical model that also has parameters which play an analogous role to the QAOA parameters, but can be efficiently evaluated classically. This model is based on empirical observations of QAOA, where variable configurations with the same cost have the same amplitudes from step to step, which we define as Perfect Homogeneity. Perfect Homogeneity is known to hold exactly for problems with particular symmetries. More generally, high overlaps between QAOA states and states with Perfect Homogeneity have been empirically observed in a number of settings. Building on this idea, we define a Classical Homogeneous Proxy for QAOA in which this property holds exactly, and which yields information describing both states and expectation values. We classically determine high-quality parameters for this proxy, and then use these parameters in QAOA, an approach we label the Homogeneous Heuristic for Parameter Setting. We numerically examine this heuristic for MaxCut on unweighted Erdős-Rényi random graphs. For up to QAOA levels we demonstrate that the heuristic is easily able to find parameters that match approximation ratios corresponding to previously-found globally optimized approaches. 
For levels up to we obtain parameters using our heuristic with approximation ratios monotonically increasing with depth, while a strategy that uses parameter transfer instead fails to converge with comparable classical resources. These results suggest that our heuristic may find good parameters in regimes that are intractable with noisy intermediate-scale quantum devices. Finally, we outline how our heuristic may be applied to wider classes of problems.

I Introduction

The Quantum Alternating Operator Ansatz (QAOA) [1] is a widely-studied parameterized quantum algorithm for tackling combinatorial optimization problems. It uses the alternating structure of Farhi et al.’s Quantum Approximate Optimization Algorithm [2], a structure that was also studied in special cases in much earlier work [3, 4]. Recent work has explored regimes for which suitable parameters for QAOA are difficult to pre-compute. This includes extensive research viewing QAOA as a variational quantum-classical algorithm (VQA), in which results from runs on quantum hardware are fed into a classical outer loop algorithm for updating the parameters. Optimizing parameters for VQAs can be quite challenging, as one typically needs to search over a large parameter space with a complex cost landscape. Although a successful algorithm does not necessarily require optimal parameters, only parameters that are good enough for a given target outcome, finding such parameters can be difficult due to the number of local optima [5, 6] and, in some cases, barren plateaus [7, 8]. Moreover, the high levels of noise present on current quantum devices can exacerbate these challenges [9].

We propose a novel approach to QAOA parameter optimization that maps the QAOA circuit to a simpler classical model. The crux of our approach is that in the parameter objective function (as introduced below), we replace the cost function expectation value, which is typically evaluated either on quantum hardware or using expensive classical evaluation, with a simpler model whose parameters play an analogous role to the QAOA parameters but which can be efficiently evaluated classically. This approach is based on the observation, originally due to Hogg [3, 4], that one may leverage a classical “mean-field” or “homogeneous” model where variable configurations with the same cost have the same amplitudes from step to step, which we define as Perfect Homogeneity. Extending this idea, we define a Classical Homogeneous Proxy for QAOA (“proxy” for short) in which this property holds exactly, and which yields information describing both states and expectation values. We then classically determine high-quality parameters for this proxy and use these parameters in QAOA, an approach we label the Homogeneous Heuristic for Parameter Setting (“heuristic” for short). This heuristic is visualized in Fig. 1.

The heuristic is appropriate for classes of constraint satisfaction problems (CSPs) in which instances consist of a number of clauses polynomial in the number of variables, with each clause acting on a subset of the variables. For such problems, the number of distinct cost function values, and thus the computation time of the proxy, is polynomially bounded in the number of variables, as desired. We describe the proxy for four such classes of CSPs: random kSAT, MaxCut on unweighted Erdős-Rényi model graphs, random MaxEkLin2, and random Max-k-XOR. For these examples, the proxy leverages information only about the class (as well as the number of variables and clauses), rather than about a particular problem instance, so that the proxy describes states and expectation values for the entire class. We then explore the heuristic for parameter setting on classes of MaxCut problems. This heuristic returns a set of parameters for the entire class, which can then be tested on instances from that class. Our results indicate that for MaxCut, the heuristic on -node graphs is able to – for ,, and – identify parameters yielding approximation ratios comparable to those returned by the commonly-used strategy of transferring globally-optimized parameters [10, 11]. We then demonstrate out to depth that the heuristic identifies parameters yielding monotonically increasing approximation ratios as the depth of the algorithm increases. A parameter setting strategy that uses an identical outer loop parameter update scheme but does not leverage the proxy fails to converge given identical classical resources.

The practical implication of this work is that for classes of optimization problems such as CSPs with a fixed number of randomly selected clauses on a fixed number of variables, the Classical Homogeneous Proxy for QAOA can be computed with solely classical resources, in time scaling polynomially with respect to the problem size as well as the QAOA depth. Thus, the proxy avoids both the exponentially growing resources (with respect to problem size or depth) of exact classical evaluation of expectation values and the noise present on NISQ devices. The Homogeneous Heuristic for Parameter Setting leverages this proxy, so the subroutine evaluating cost expectation values may be much quicker. However, the parameter landscape may still be challenging to optimize over, especially in cases in which the number of free parameters grows with the problem size. Nevertheless, we demonstrate for MaxCut that our heuristic is able to outperform previous results of parameter transfer [10], indicating the potential of our heuristic approach (which may also be combined with other advancements in parameter setting, as discussed briefly later in the paper) to achieve further improvements in practice.

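Quantum Alternating Operator Ansatz: We here briefly describe the structure of QAOA [2, 3, 4, 1]. Given a cost function $c(x)$ to optimize, a QAOA circuit of depth $p$ consists of repeated applications of operators generated by a mixing Hamiltonian $H_M$ and a cost Hamiltonian $H_C$ in alternation, parameterized by $\boldsymbol{\gamma} = (\gamma_1, \ldots, \gamma_p)$ and $\boldsymbol{\beta} = (\beta_1, \ldots, \beta_p)$: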

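$$|\boldsymbol{\gamma},\boldsymbol{\beta}\rangle = e^{-i\beta_p H_M}\, e^{-i\gamma_p H_C} \cdots e^{-i\beta_1 H_M}\, e^{-i\gamma_1 H_C}\, |s\rangle \tag{1}$$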

where $|s\rangle$ is an easily-prepared initial state. There is freedom in choosing the Hamiltonians $H_M$ and $H_C$ and the state $|s\rangle$, although typically (as is followed in this work) $H_C$ is chosen such that $H_C|x\rangle = c(x)|x\rangle$ for all $x$, and $H_M$ is a mixer that is simple to implement on hardware. More general operators and initial states for QAOA are proposed in [1]. In this work we choose the X mixer $H_M = \sum_{j=1}^{n} X_j$, where $n$ represents the total number of qubits in the circuit, and the starting state $|s\rangle = |+\rangle^{\otimes n}$ is chosen to be the uniform superposition over all bitstrings, as originally considered in [2]. The goal of QAOA is then to produce a state $|\boldsymbol{\gamma},\boldsymbol{\beta}\rangle$ such that repeated sampling in the computational basis yields either an optimal or a high-quality approximate solution to the problem. Finding such parameters $(\boldsymbol{\gamma},\boldsymbol{\beta})$ is the parameter setting problem, which may be approached in a number of ways with different tradeoffs, ranging from black-box optimization techniques to application-specific approaches. We refer to a fixed choice of QAOA parameters as a parameter schedule.
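For small $n$, the state of Eq. (1) with the X mixer and uniform initial state can be simulated directly. The following NumPy sketch is illustrative only: the function names (`maxcut_costs`, `qaoa_state`, `expected_cost`), the choice of MaxCut as the cost function, and the bit-ordering convention are assumptions made for the example. It applies $e^{-i\gamma H_C}$ as a diagonal phase and $e^{-i\beta H_M}$ as a product of single-qubit factors $\cos\beta\, I - i\sin\beta\, X_j$.

```python
import numpy as np

def maxcut_costs(n, edges):
    """c(x) = number of cut edges for every x in {0,1}^n (bit j of the index is x_j; assumed convention)."""
    bits = (np.arange(2 ** n)[:, None] >> np.arange(n)) & 1
    costs = np.zeros(2 ** n, dtype=int)
    for i, j in edges:
        costs += bits[:, i] ^ bits[:, j]
    return costs

def apply_x_mixer(psi, beta, n):
    """Apply exp(-i beta sum_j X_j) as a product of (cos(beta) I - i sin(beta) X_j)."""
    psi = psi.reshape([2] * n)
    for axis in range(n):
        # X_j flips qubit j, i.e. reverses the state tensor along that axis
        psi = np.cos(beta) * psi - 1j * np.sin(beta) * np.flip(psi, axis=axis)
    return psi.reshape(-1)

def qaoa_state(gammas, betas, costs, n):
    """|gamma, beta> of Eq. (1), starting from the uniform superposition |+>^n."""
    psi = np.full(2 ** n, 2 ** (-n / 2), dtype=complex)
    for gamma, beta in zip(gammas, betas):
        psi = np.exp(-1j * gamma * costs) * psi   # e^{-i gamma H_C} with H_C|x> = c(x)|x>
        psi = apply_x_mixer(psi, beta, n)         # e^{-i beta H_M}
    return psi

def expected_cost(psi, costs):
    """<gamma, beta| H_C |gamma, beta>, the typical parameter objective function."""
    return float(np.sum(np.abs(psi) ** 2 * costs))

# Usage: a triangle graph at depth p = 2
edges = [(0, 1), (1, 2), (0, 2)]
costs = maxcut_costs(3, edges)
print(expected_cost(qaoa_state([0.4, 0.6], [0.7, 0.3], costs, 3), costs))
```

Because this simulation stores all $2^n$ amplitudes, its cost grows exponentially in $n$, which is the expense the proxy developed below is meant to avoid.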

We now describe QAOA as a VQA: given some classical cost function $c(x)$ on $n$-variable bitstrings $x$ and a quantum circuit ansatz with parameters $\boldsymbol{\theta}$, one defines a parameter objective function $f(\boldsymbol{\theta})$ and optimizes $f$ over $\boldsymbol{\theta}$. In order to optimize over $\boldsymbol{\theta}$, a two-part cycle is typically employed. First, a classical outer loop chooses one or more parameter values $\boldsymbol{\theta}$ for which evaluations of $f$ or its partial derivatives are requested, based on initial or prior cycle information. Then, a subroutine evaluates $f$ and its derivatives for all $\boldsymbol{\theta}$ requested by the outer loop. Given these evaluations, the parameter update scheme can then update the current best $\boldsymbol{\theta}$ and choose a new set of parameters to test. Throughout this work we refer to the outer loop as the parameter update scheme and the inner subroutine as parameter objective function evaluation. Typically in QAOA, $f(\boldsymbol{\gamma},\boldsymbol{\beta})$ is taken to be $\langle \boldsymbol{\gamma},\boldsymbol{\beta} | H_C | \boldsymbol{\gamma},\boldsymbol{\beta} \rangle$. This choice of $f$ measures the expected cost function value obtained from sampling the QAOA state, which we refer to as the typical parameter objective function. In this work, we replace it with what we define as the homogeneous parameter objective function, which utilizes the Classical Homogeneous Proxy for QAOA.

Related Work: There have been numerous parameter setting strategies proposed for QAOA. Most of these strategies focus on improving the parameter update scheme, while keeping the typical parameter objective function. These strategies range from the simplest approach of directly applying classical optimizers to approaches incorporating more sophisticated machine learning techniques [12, 13, 14, 15, 16, 17, 18, 19, 20]. In practice, however, efficiently finding high-quality parameters remains a challenging task. Global optimization strategies often struggle to navigate the parameter optimization landscape due to barren plateaus [7, 9, 8] or multitudes of local optima [5, 6], and the problem becomes especially challenging as the number of parameters grows due to the curse of dimensionality. In some circumstances parameter optimization can even become NP-hard [21]. Multiple methods have been proposed that attempt to alleviate these issues. The first of these is initializing parameters close to values informed by previous information or intuition [22, 23, 24]. One such example is to use parameters that represent a discretized linear quantum annealing schedule [25, 26]. Another example is based on the principle that globally optimal parameters for random QAOA instances drawn from the same distribution tend to concentrate [27, 2, 13, 28]. Thus, if globally optimal parameters are known for one or many instances of a specific class of problems, these exact parameters (or median parameters for more than one instance) empirically perform well on a new, unseen instance from the same class [29, 11]. Another approach for improving parameter setting is re-parameterizing the circuit, which places constraints on the allowed parameter schedules, thereby reducing the number of parameters so that they are optimized more easily. 
In some cases, this re-parameterization can be informed by optimized parameters for typical instances, or by connections to quantum annealing [13, 30, 24, 23, 26].

An alternative paradigm for parameter setting consists of methods that modify the parameter objective function itself. Indeed, one can find closed-form expressions for the expected cost as a function of the parameters in some cases, for example MaxCut at $p = 1$ [31], or for high-girth regular graphs [32]. Moreover, when applicable one can take advantage of problem locality considerations (such as graphs of bounded vertex degree for MaxCut) to compute the necessary expectation values without requiring the full quantum state vector [2, 31]. In these cases, then, one could optimize parameters with respect to these expressions rather than by evaluating the entire dynamics. Other examples of parameter objective function modification include using conditional value at risk [33] and Gibbs-like functions [34], which are closely related to the usual parameter objective function. Similar in spirit to our approach, recent work [35, 36, 37] has proposed the use of surrogate models, which use quantum circuit measurement outcomes to construct an approximate parameter objective function. In contrast, our approach is entirely classical, and the parameters it outputs may be used directly, or possibly improved further, given access to a quantum device. Additionally, a related perspective was recently proposed in [38], wherein the authors study the connection between single-layer QAOA states and pseudo-Boltzmann states, where computational basis amplitudes are also expressed as functions of the corresponding costs, i.e., perfectly homogeneous in our terminology. While [38] provides additional motivation for our approach, the authors there do not consider cases beyond $p = 1$, and so their results do not immediately apply to parameter setting in the same way.

Outline of paper: In Sec. II we define the Classical Homogeneous Proxy for QAOA. In Sec. III, we demonstrate how to implement the proxy for classes of CSPs with a fixed, polynomial number of randomly drawn clauses. In Sec. IV we introduce the Homogeneous Heuristic for Parameter Setting. In Sec. V we provide numerical evidence for the efficacy of the proxy and the heuristic applied to MaxCut on unweighted Erdős-Rényi model graphs. Finally, in Sec. VI we present the results of the heuristic for MaxCut on the same class of graphs. We conclude in Sec. VII with a discussion of our results and several future research directions.

II Classical Homogeneous Proxy for QAOA

This section summarizes and generalizes the approach of [3], updating the notation to match current notation for QAOA and allowing for easier extension to other CSPs. In this section, we describe the Classical Homogeneous Proxy for QAOA from a sum-of-paths perspective [3, 39] using a set of classical equations that ensure what we presently define as Perfect Homogeneity.

Definition 1.

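Perfect Homogeneity: For a given state $|\psi\rangle = \sum_{x} q(x)|x\rangle$, where $q(x)$ is the amplitude of bitstring $x$ when written in the computational basis, $|\psi\rangle$ has Perfect Homogeneity if and only if the amplitude of every $x$ can be written solely as a function of its cost $c(x)$, i.e., $q(x) = Q(c(x))$. Then $|\psi\rangle = \sum_{c} Q(c) \sum_{x:\, c(x) = c} |x\rangle$.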

Perfect Homogeneity trivially holds for QAOA states with non-degenerate cost functions, where each bitstring $x$ has a unique cost $c(x)$, as well as in the maximally degenerate case where the cost function is constant. A less trivial example is the class of cost functions that depend only on the Hamming weight $|x|$ of each bitstring, such as the Hamming ramp $c(x) = |x|$. For this class, symmetry arguments [23] imply that the QAOA state retains Perfect Homogeneity for any choice of QAOA circuit depth and parameters. Indeed, useful intuition for homogeneity can be gained from the symmetry perspective; in [23, Lem. 1] it is shown that for a classical cost function with symmetries compatible with the QAOA mixer and initial state, bitstrings that are connected by these symmetries will have identical QAOA amplitudes. In this case the corresponding QAOA states belong to a Hilbert space of reduced dimension. Perfect Homogeneity is an even stronger condition: all bitstrings with the same cost have identical amplitudes, not just those connected by mixer-compatible symmetries. Hence, QAOA states can be expected to be Perfectly Homogeneous only in limited cases, though these states may be close to Perfectly Homogeneous states in some settings [3]. We consider QAOA applied to cost functions with polynomially many distinct cost values, for which QAOA states generally need not have Perfect Homogeneity. Classically, however, we may impose Perfect Homogeneity to construct our proxy.

Additional intuition comes from the case of strictly $k$-local problem Hamiltonians (such as, for instance, MaxCut, with $k = 2$), where the QAOA state is easily shown to be Perfectly Homogeneous to leading order in $\gamma$, independent of $\beta$: in the $p = 1$ case, the amplitude of bitstring $x$ is, to first order in $\gamma$, an affine function of $c(x)$. Similar analysis for the higher-$p$ case also yields Perfect Homogeneity to first order in the parameters. This connection is considered further in Sec. V.2.
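The first-order claim can be checked numerically on a small instance. In this hedged sketch (the function names and the particular 5-node graph are hypothetical choices for illustration), we build the $p = 1$ QAOA state for MaxCut, group amplitudes by cost, and measure the largest deviation of any amplitude from the mean amplitude of its cost class. This spread is zero for a Perfectly Homogeneous state (Def. 1) and, per the argument above, should be $O(\gamma^2)$ for small $\gamma$.

```python
import numpy as np

def maxcut_costs(n, edges):
    """c(x) = number of cut edges for every x in {0,1}^n."""
    bits = (np.arange(2 ** n)[:, None] >> np.arange(n)) & 1
    costs = np.zeros(2 ** n, dtype=int)
    for i, j in edges:
        costs += bits[:, i] ^ bits[:, j]
    return costs

def qaoa_p1_state(gamma, beta, costs, n):
    """exp(-i beta sum_j X_j) exp(-i gamma H_C) |+>^n."""
    psi = (np.exp(-1j * gamma * costs) * 2 ** (-n / 2)).reshape([2] * n)
    for axis in range(n):
        psi = np.cos(beta) * psi - 1j * np.sin(beta) * np.flip(psi, axis=axis)
    return psi.reshape(-1)

def homogeneity_spread(psi, costs):
    """Max over costs c of max_{x: c(x)=c} |q(x) - mean_{y: c(y)=c} q(y)|; zero iff Perfectly Homogeneous."""
    spread = 0.0
    for c in np.unique(costs):
        amps = psi[costs == c]
        spread = max(spread, float(np.max(np.abs(amps - amps.mean()))))
    return spread

n = 5
edges = [(0, 1), (1, 2), (2, 3), (3, 4), (4, 0), (0, 2)]
costs = maxcut_costs(n, edges)
for gamma in [1e-3, 1e-2, 1e-1]:
    print(gamma, homogeneity_spread(qaoa_p1_state(gamma, 0.7, costs, n), costs))
```

For a generic graph one would expect the printed spread to shrink roughly quadratically as $\gamma$ decreases, since the zeroth- and first-order terms in $\gamma$ are both exactly homogeneous.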

Given this intuition, we begin from the sum-of-paths perspective [39] for QAOA to provide preliminary analysis for our approach.

II.1 Classical Homogeneous Proxy for QAOA from the Sum-of-Paths Perspective for QAOA

The amplitude $q_{\ell+1}(x)$ of a bitstring $x$ at step $\ell+1$, induced by applying a layer of QAOA with parameters $(\gamma_{\ell+1}, \beta_{\ell+1})$ to a QAOA state with $\ell$ layers, can be expressed succinctly as [39]

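$$q_{\ell+1}(x) = \sum_{y \in \{0,1\}^n} u_{d(x,y)}(\beta_{\ell+1})\, e^{-i\gamma_{\ell+1} c(y)}\, q_\ell(y) \tag{2}$$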

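where $d(x,y)$ is the Hamming distance between bitstrings $x$ and $y$, $u_d(\beta) = \cos^{n-d}(\beta)\,(-i\sin\beta)^{d}$ are the mixing operator matrix elements $\langle x| e^{-i\beta H_M} |y\rangle$ for the X mixer, $c(y)$ is the cost of bitstring $y$, and the sum is over all $2^n$ bitstrings $y$ in the computational basis.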
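Eq. (2), and the grouped form of the same sum given next in Eq. (3), can be checked against a direct statevector update for small $n$. The sketch below (hypothetical helper names, NumPy assumed) evaluates one QAOA layer three ways: by the explicit sum over all $y$, by grouping the terms by distance $d$ and cost $c$, and by applying the phase and mixer operators directly.

```python
import numpy as np

def hamming_distances(n):
    """Matrix of d(x, y) over all pairs of n-bit strings."""
    idx = np.arange(2 ** n)
    xor = idx[:, None] ^ idx[None, :]
    return ((xor[..., None] >> np.arange(n)) & 1).sum(axis=-1)

def mixer_amplitude(beta, d, n):
    """u_d(beta) = <x| exp(-i beta sum_j X_j) |y> for d(x, y) = d."""
    return np.cos(beta) ** (n - d) * (-1j * np.sin(beta)) ** d

def sum_of_paths_layer(q, costs, gamma, beta, n):
    """Eq. (2): q_{l+1}(x) = sum_y u_{d(x,y)}(beta) e^{-i gamma c(y)} q_l(y)."""
    u = mixer_amplitude(beta, hamming_distances(n), n)
    return u @ (np.exp(-1j * gamma * costs) * q)

def grouped_layer(q, costs, gamma, beta, n):
    """The same sum, grouped by distance d and cost c (Eq. (3))."""
    dist = hamming_distances(n)
    out = np.zeros(2 ** n, dtype=complex)
    for d in range(n + 1):
        for c in np.unique(costs):
            mask = ((dist == d) & (costs[None, :] == c)).astype(float)  # y with d(x,y)=d and c(y)=c
            out += mixer_amplitude(beta, d, n) * np.exp(-1j * gamma * c) * (mask @ q)
    return out

def direct_layer(q, costs, gamma, beta, n):
    """Reference: phase separator followed by the X mixer on every qubit."""
    psi = (np.exp(-1j * gamma * costs) * q).reshape([2] * n)
    for axis in range(n):
        psi = np.cos(beta) * psi - 1j * np.sin(beta) * np.flip(psi, axis=axis)
    return psi.reshape(-1)

rng = np.random.default_rng(0)
n = 4
costs = rng.integers(0, 5, size=2 ** n)   # arbitrary integer costs, just for the check
q = rng.normal(size=2 ** n) + 1j * rng.normal(size=2 ** n)
ref = direct_layer(q, costs, 0.3, 0.8, n)
print(np.allclose(sum_of_paths_layer(q, costs, 0.3, 0.8, n), ref),
      np.allclose(grouped_layer(q, costs, 0.3, 0.8, n), ref))
```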

Grouping the terms in Eq. (2), we can rewrite the sum as

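$$q_{\ell+1}(x) = \sum_{d=0}^{n} u_d(\beta_{\ell+1}) \sum_{c \in \mathcal{C}} e^{-i\gamma_{\ell+1} c} \sum_{\substack{y:\, d(x,y) = d \\ c(y) = c}} q_\ell(y) \tag{3}$$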

where the sum over $d$ runs from $0$ to $n$ and the sum over $c$ runs over the set $\mathcal{C}$ of unique costs allowed by the cost function, which we describe in Sec. II.2. If the amplitudes of all bitstrings with cost $c$ are identical, then we can substitute $q_\ell(y)$ with $Q_\ell(c)$ for all $y$ with $c(y) = c$. This is exactly Perfect Homogeneity as described in Def. 1. For the rest of this text we use upper case to distinguish a function of cost rather than of the bitstring itself. This substitution yields

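$$q_{\ell+1}(x) = \sum_{d=0}^{n} u_d(\beta_{\ell+1}) \sum_{c \in \mathcal{C}} e^{-i\gamma_{\ell+1} c}\, n_x(d,c)\, Q_\ell(c) \tag{4}$$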

where $n_x(d,c)$ represents the number of bitstrings with cost $c$ that are at Hamming distance $d$ from $x$. We note that for this equation, and all following equations, the evolution is no longer guaranteed to be unitary. As such, the values $q_\ell(x)$ and $Q_\ell(c)$ no longer represent strictly quantum amplitudes, but rather track an analogue of the quantum amplitude. We now introduce the major modification to the sum-of-paths equations: we replace each distribution $n_x(d,c)$ with some distribution $N_{c'}(d,c)$, where $c' = c(x)$, again adopting upper case to distinguish a new distribution that depends solely on the cost of the bitstring. This distribution will generally differ from the original, but in this work we exhibit cases where $N_{c'}(d,c)$ is efficiently computable and can adequately replace $n_x(d,c)$ for the purpose of parameter setting. These cases are discussed in Sec. III, and the effectiveness of the replacement is numerically explored in Sec. V.

Using the distributions $N_{c(x)}(d,c)$ to replace $n_x(d,c)$, then, we can further rewrite the sum as

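$$Q_{\ell+1}(c') = \sum_{d=0}^{n} u_d(\beta_{\ell+1}) \sum_{c \in \mathcal{C}} e^{-i\gamma_{\ell+1} c}\, N_{c'}(d,c)\, Q_\ell(c) \tag{5}$$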

where $Q_{\ell+1}(c')$ is used to make explicit that the substitutions yield an expression for $q_{\ell+1}(x)$ that depends solely on the cost $c' = c(x)$ of bitstring $x$. This leads to a crucial point for our analysis: if we start in a state satisfying Perfect Homogeneity, and if the distributions $n_x(d,c)$ can be replaced by distributions $N_{c(x)}(d,c)$, which depend solely on the cost of $x$, then subsequent layers retain the Perfect Homogeneity of the state, yielding analogues of amplitudes $Q_\ell(c)$. Thus, Eq. (5) is a recursive equation yielding Perfectly Homogeneous analogues of quantum states, which we label the Classical Homogeneous Proxy for QAOA. We note that, importantly, the proxy does not return the analogue of the amplitude of some bitstring $x$, but rather the analogue of the amplitude of bitstrings with cost $c$, since knowledge of which bitstrings have cost $c$ would solve the underlying optimization problem. In Sec. V we argue that there are cases where these analogues of amplitudes $Q_\ell(c)$ are close to $q_\ell(x)$ for all $x$ with $c(x) = c$.

II.2 Cost Distributions

In order to evaluate Eq. (5), we note that the set $\mathcal{C}$ of costs to which indices $c$ and $c'$ belong must be defined. Ideally, this set represents exactly the unique objective function values allowed by the underlying cost function. For optimization problems, however, this set is unknown, and is precisely what we would like to determine. Instead, we can form a reasonable estimate by determining upper and lower bounds on the cost function value, as well as by excluding values that can be efficiently determined to be impossible for the cost function. In the remainder of this work, $\mathcal{C}$ denotes this estimated set of unique costs. As an example, for CSPs with binary-valued clauses, the cost function counts the number of satisfied clauses, trivially yielding $\mathcal{C} = \{0, 1, \ldots, m\}$, where $m$ is the number of clauses. The set $\mathcal{C}$ also allows us to define the initial state for the algorithm. QAOA typically begins with the uniform superposition over all bitstrings $|+\rangle^{\otimes n}$, such that $q_0(x) = 2^{-n/2}$ for all $x$. Thus we can set $Q_0(c) = 2^{-n/2}$ for all $c$ in $\mathcal{C}$.

While Eq. (5) yields analogues of amplitudes $Q_\ell(c)$, one may also wish to use the Classical Homogeneous Proxy for QAOA to return an estimate of the expected value of the cost function. This requires computing a distribution $P(c)$ for all $c$ in $\mathcal{C}$, representing the probability that a randomly chosen bitstring has cost $c$, averaged over the entire class. Much like in the case of computing $N_{c'}(d,c)$, this computation is approximate, as a perfect computation of this distribution would solve the underlying optimization problem. Examples of estimates of $P(c)$ are shown in Sec. III. To compute our estimate of the expected value of the cost, then, we simply sum over costs $c \in \mathcal{C}$, weighting by the expected number of bitstrings with cost $c$ ($2^n P(c)$) and the squared analogue of the amplitude $|Q_p(c)|^2$,

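$$\tilde{E}(\boldsymbol{\gamma},\boldsymbol{\beta}) = \sum_{c \in \mathcal{C}} 2^n P(c)\, |Q_p(c)|^2\, c \tag{6}$$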

with the tilde indicating the use of the proxy and that this is no longer a strictly normalized quantum expectation value. This is exactly the function we use as the homogeneous parameter objective function described in the introduction. Note that $2^n P(c)\,|Q_\ell(c)|^2$ does not give a bona fide probability distribution unless it is re-normalized by dividing by $\sum_{c \in \mathcal{C}} 2^n P(c)\,|Q_\ell(c)|^2$ at each step; nevertheless, we have found these factors remain close to unity for the parameter setting experiments considered below, and so we neglect them going forward, which yields further computational savings. It is straightforward to introduce these factors into Eq. (6) if desired in other applications.

The set $\mathcal{C}$ and the estimated distributions $N_{c'}(d,c)$ and $P(c)$ are critically determined by the class of problems one is working with. Examples of these quantities for a sample of classes are given in Sec. III.

II.3 Algorithm for Computing the Classical Homogeneous Proxy for QAOA

Formalizing the results in this section, we present Algorithm 1, which describes how, given QAOA parameters $(\boldsymbol{\gamma},\boldsymbol{\beta})$, a set of possible costs $\mathcal{C}$, and assumed cost distributions $N_{c'}(d,c)$ and $P(c)$, we can compute the Classical Homogeneous Proxy for QAOA using Eq. (5) and Eq. (6).
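Algorithm 1 itself is not reproduced in this text; the following is a minimal NumPy sketch of the recursion in Eq. (5) and the objective in Eq. (6), not the authors' implementation. It assumes costs are indexed as $\mathcal{C} = \{0, \ldots, m\}$ and that the distributions are supplied as an array `N[c', d, c]` and a vector `P[c]` (a hypothetical layout). As a sanity check it uses the Hamming-ramp cost $c(x) = |x|$ from Sec. II, for which $n_x(d,c)$ depends only on $|x|$, so the proxy with these exact tables should reproduce QAOA exactly.

```python
from math import comb
import numpy as np

def homogeneous_proxy(gammas, betas, N, P, n, normalize=False):
    """Eq. (5) recursion from Q_0(c) = 2^{-n/2}, then Eq. (6).
    N[cp, d, c] ~ N_{c'}(d, c), P[c] ~ P(c), with costs C = {0, ..., len(P) - 1}."""
    costs = np.arange(len(P))
    d = np.arange(n + 1)
    weight = 2.0 ** n * P                                  # expected number of bitstrings of each cost
    Q = np.full(len(P), 2.0 ** (-n / 2), dtype=complex)
    for gamma, beta in zip(gammas, betas):
        u = np.cos(beta) ** (n - d) * (-1j * np.sin(beta)) ** d    # u_d(beta)
        # Q_{l+1}(c') = sum_d u_d sum_c e^{-i gamma c} N_{c'}(d, c) Q_l(c): O(n |C|^2) per layer
        Q = np.einsum("d,pdc,c->p", u, N, np.exp(-1j * gamma * costs) * Q)
        if normalize:                                      # optional re-normalization noted after Eq. (6)
            Q /= np.sqrt(np.sum(weight * np.abs(Q) ** 2))
    return Q, float(np.sum(weight * np.abs(Q) ** 2 * costs))

def hamming_ramp_tables(n):
    """Exact N_{c'}(d, c) and P(c) for c(x) = |x|: flip a of the ones and b of the zeros of x."""
    N = np.zeros((n + 1, n + 1, n + 1))
    for cp in range(n + 1):
        for a in range(cp + 1):
            for b in range(n - cp + 1):
                N[cp, a + b, cp - a + b] += comb(cp, a) * comb(n - cp, b)
    P = np.array([comb(n, c) for c in range(n + 1)]) / 2 ** n
    return N, P

n = 6
N, P = hamming_ramp_tables(n)
Q, energy = homogeneous_proxy([0.3, 0.5], [0.4, 0.2], N, P, n)
print(energy)
```

For the Hamming ramp, QAOA factorizes over qubits, so the printed value can be compared with $n$ times the probability of measuring $1$ on a single qubit evolved under the same schedule.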

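Time Complexity: Given $u_d(\beta_\ell)$ as well as $N_{c'}(d,c)$ for all $c, c'$ in $\mathcal{C}$ and all $d \in \{0, \ldots, n\}$, the calculation of each amplitude analogue $Q_{\ell+1}(c')$ using Eq. (5) is $O(n|\mathcal{C}|)$. Thus, the calculation of all amplitudes at one layer is $O(n|\mathcal{C}|^2)$. Computing all $p$ layers then is $O(pn|\mathcal{C}|^2)$. If desired, the evaluation of the cost function, given in Eq. (6), involves a sum over $|\mathcal{C}|$ elements, and thus is $O(|\mathcal{C}|)$.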

Thus, if $|\mathcal{C}|$ is polynomial in $n$, then Algorithm 1 is efficient. Indeed, we show such cases in the following section, demonstrating how to calculate the necessary pre-computations of $\mathcal{C}$, as well as $N_{c'}(d,c)$ and $P(c)$ for all $c, c'$ in $\mathcal{C}$.

III Cost distributions for Randomly Drawn CSPs

In this section we demonstrate how to compute $N_{c'}(d,c)$ and $P(c)$ for a number of random CSPs. For the Classical Homogeneous Proxy of Sec. II.1, we assumed that for all $c'$ in $\mathcal{C}$ we are given distributions $N_{c'}(d,c)$ that suitably estimate $n_x(d,c)$ for all $x$ with cost $c'$. Obtaining these distributions can be viewed as a pre-computation step, and when derived from the properties of a particular problem class, they may then be applied to any instance within it. Here, we identify common random classes of optimization problems where $N_{c'}(d,c)$ can be efficiently computed for the entire class. In particular, we focus on CSPs with a fixed number $m$ of Boolean clauses, each acting on $k$ variables selected at random from the set of $n$ variables. The types of allowed clauses are determined by the problem; for example, SAT problems consider disjunctive clauses. We note that the analysis here generalizes a similar method in [3] applied to 3-SAT, allowing for easy extension to any CSP matching the above criteria. For these problems, the procedure is as follows, noting that all calculations done here are averaged over the entire class. We first rewrite the expected number of bitstrings with cost $c$ at distance $d$ from a random bitstring of cost $c'$ as

$$N_{c'}(d,c) = \binom{n}{d}\, P(c \mid c', d) \tag{7}$$

where $P(c \mid c', d)$ represents the probability that a bitstring at distance $d$ from a bitstring with cost $c'$ has cost $c$. This probability can then be rewritten as

$$P(c \mid c', d) = \frac{P_d(c, c')}{P(c')} \tag{8}$$

where $P_d(c, c')$ represents the probability that two bitstrings separated by Hamming distance $d$ have costs $c$ and $c'$, and $P(c')$ represents the probability that a randomly chosen bitstring has cost $c'$. The numerator can be calculated as follows:

$$P_d(c, c') = \sum_{i=\max(0,\, c+c'-m)}^{\min(c,\, c')} P_d(i, c, c') \tag{9}$$

where $P_d(i, c, c')$ represents the probability that two randomly chosen bitstrings with Hamming distance $d$ have $i$ satisfied clauses in common, along with costs $c$ and $c'$. This expression can be evaluated via the multinomial distribution

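$$P_d(i, c, c') = \frac{m!}{i!\,(c-i)!\,(c'-i)!\,(m-c-c'+i)!}\; p_{11}^{\,i}\; p_{10}^{\,c+c'-2i}\; p_{00}^{\,m-c-c'+i} \tag{10}$$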

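where $p_{11}$, $p_{10}$, and $p_{00}$ represent the probabilities that a randomly selected clause is satisfied by both bitstrings, by one particular bitstring but not the other (by symmetry, the same for either ordering), or by neither of the two bitstrings separated by Hamming distance $d$, respectively. Since $p_{11} + 2p_{10} + p_{00} = 1$, one only needs to calculate two of these three quantities. The previous equations then allow computing $N_{c'}(d,c)$ for any value of $d$ as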

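$$N_{c'}(d,c) = \frac{\binom{n}{d}}{P(c')} \sum_{i=\max(0,\, c+c'-m)}^{\min(c,\, c')} \frac{m!}{i!\,(c-i)!\,(c'-i)!\,(m-c-c'+i)!}\; p_{11}^{\,i}\; p_{10}^{\,c+c'-2i}\; p_{00}^{\,m-c-c'+i} \tag{11}$$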

We summarize this approach in Algorithm 2.
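Algorithm 2 is likewise not reproduced here; a hedged NumPy sketch of Eqs. (7)-(11) follows. The function names and the `pair_probs(d)` interface returning $(p_{11}, p_{10}, p_{00})$ are assumptions for illustration. The multinomial coefficient of Eq. (10) is computed as a product of binomials in exact integer arithmetic; converting it to a float limits this sketch to moderate $m$ (a few hundred at most). The usage example plugs in the MaxCut probabilities of Sec. III.1.

```python
from math import comb
import numpy as np

def pair_joint_prob(m, i, c, cp, p11, p10, p00):
    """Eq. (10): i clauses satisfied by both, c - i only by the first, cp - i only by the second."""
    ways = comb(m, i) * comb(m - i, c - i) * comb(m - c, cp - i)   # = m! / (i! (c-i)! (cp-i)! (m-c-cp+i)!)
    return ways * p11 ** i * p10 ** (c + cp - 2 * i) * p00 ** (m - c - cp + i)

def cost_distance_tables(n, m, pair_probs, P):
    """N[cp, d, c] ~ N_{c'}(d, c) via Eqs. (7)-(9) and (11)."""
    N = np.zeros((m + 1, n + 1, m + 1))
    for d in range(n + 1):
        p11, p10, p00 = pair_probs(d)
        for cp in range(m + 1):
            for c in range(m + 1):
                joint = sum(pair_joint_prob(m, i, c, cp, p11, p10, p00)
                            for i in range(max(0, c + cp - m), min(c, cp) + 1))   # Eq. (9)
                N[cp, d, c] = comb(n, d) * joint / P[cp]                              # Eqs. (7), (8)
    return N

# Usage with the MaxCut quantities of Sec. III.1 (Eqs. (13)-(17))
n, m = 6, 7

def maxcut_pair_probs(d):
    same = (comb(n - d, 2) + comb(d, 2)) / comb(n, 2)
    return same / 2, (1 - same) / 2, same / 2

P = np.array([comb(m, c) for c in range(m + 1)]) / 2 ** m
N = cost_distance_tables(n, m, maxcut_pair_probs, P)
```

Two simple consistency checks follow from the definitions: at $d = 0$ the only bitstring is $x$ itself, so $N_{c'}(0,c) = \delta_{c,c'}$, and summing $N_{c'}(d,c)$ over $c$ should give $\binom{n}{d}$ whenever $P$ matches the single-clause probability $p_{11} + p_{10}$.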

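Time Complexity: There are $|\mathcal{C}|$ distributions $N_{c'}(d,c)$, with $(n+1)|\mathcal{C}|$ elements each. For fixed $c'$, $d$, and $c$, we must sum over at most $|\mathcal{C}|$ terms, each of which can be evaluated in $O(m)$ steps, given by the time complexity of evaluating factorials of $m$. Thus the evaluation of all distributions is $O(nm|\mathcal{C}|^3)$. We once again note that if $|\mathcal{C}|$ and $m$ are polynomial in $n$, Algorithm 2 runs in polynomial time.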

We now demonstrate Algorithm 2 for several common problem classes.

III.1 MaxCut

We first analyze MaxCut on Erdős-Rényi random graphs $G(n, q)$. In this model, a graph is generated by scanning over all $\binom{n}{2}$ possible edges in an $n$-node graph and including each edge with independent probability $q$. The MaxCut problem on a graph $G$ is to partition the nodes into two sets such that the number of edges crossing the partition (cut edges) is maximized. With respect to the class of graphs $G(n, q)$, the cost function to be maximized over configurations $x \in \{0,1\}^n$ is

$$c(x) = \sum_{i<j} e_{ij}\, (x_i \oplus x_j) \tag{12}$$

where the $e_{ij}$ are independent binary random variables that take on value $1$ with probability $q$ and $0$ with probability $1-q$. We use this cost function to evaluate the relevant distributions in Eq. (11). We start by noting that for a fixed graph with $|E|$ edges we have $\mathcal{C} = \{0, 1, \ldots, |E|\}$, and that the expected number of edges in graphs drawn from $G(n, q)$ is $q\binom{n}{2}$, with a standard deviation proportional to the square root of this value, as determined by the binomial distribution. Hence, as $n$ becomes large the number of edges concentrates around $q\binom{n}{2}$, and so for simplicity in the remainder of this work we set $m = q\binom{n}{2}$. Note that in practice, to accommodate the possibility of a given instance with maximum cut greater than this quantity, one may increase $m$ by several standard deviations as desired.

We can then easily calculate $P(c)$, the probability that a random bitstring has cost $c$ under Eq. (12). For a bitstring drawn uniformly at random, the probability of satisfying any given clause is $1/2$, as there are two satisfying assignments ($01$ and $10$) and two non-satisfying assignments ($00$ and $11$). Thus, $P(c)$ follows a binomial distribution and is simply

$$P(c) = \binom{m}{c}\, 2^{-m} \tag{13}$$

We can then calculate $p_{11}$, $p_{10}$, and $p_{00}$, where we show all three for didactic purposes (since $p_{11} + 2p_{10} + p_{00} = 1$). To see this, consider two randomly chosen bitstrings $x$ and $y$ separated by Hamming distance $d$, and a random clause (edge) $(i, j)$. We note that there are $n-d$ bits in common between $x$ and $y$ and $d$ bits that differ. Thus $x_i \oplus x_j = y_i \oplus y_j$ requires that $i$ and $j$ are either both from the set of common bits or both from the set of differing bits, which has probability

$$P_{\text{same}}(d) = \frac{\binom{n-d}{2} + \binom{d}{2}}{\binom{n}{2}} \tag{14}$$

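From this expression, we can easily see that $p_{11} + p_{00} = P_{\text{same}}(d)$. Here $p_{11}$ represents the probability that $x_i \oplus x_j = y_i \oplus y_j = 1$; for a random clause and a random bitstring, the probability that $x_i \oplus x_j = 1$ is $1/2$, and the same is true for $x_i \oplus x_j = 0$ by symmetry. Thus we have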

$$p_{11} = p_{00} = \frac{1}{2}\, \frac{\binom{n-d}{2} + \binom{d}{2}}{\binom{n}{2}} \tag{15}$$

For completeness, we can also calculate $p_{10}$, which equals $(1 - p_{11} - p_{00})/2$ by the normalization condition above. Directly, this term requires $x_i \oplus x_j \neq y_i \oplus y_j$, which occurs if one of $i$ or $j$ is chosen from the bits in common and the other is chosen from the differing bits. This probability is

$$P_{\text{diff}}(d) = \frac{d\,(n-d)}{\binom{n}{2}} \tag{16}$$

Thus, $p_{10}$, which is the probability of specifically satisfying $x$ and not satisfying $y$ (or vice versa), is half of $P_{\text{diff}}(d)$, so

$$p_{10} = \frac{1}{2}\, \frac{d\,(n-d)}{\binom{n}{2}} \tag{17}$$

Using these quantities, $N_{c'}(d,c)$ is then obtained from Eq. (11).
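The MaxCut expressions in Eqs. (13)-(17) can be cross-checked by brute-force enumeration over all bitstrings $x$, all $y$ at distance $d$, and all candidate edges, which mirrors how $p_{11}$, $p_{10}$, and $p_{00}$ are defined (averages over a random clause and random bitstrings). The sketch below is illustrative, and its function names are hypothetical.

```python
from itertools import combinations
from math import comb
import numpy as np

def maxcut_pair_probs(n, d):
    """(p11, p10, p00) from Eqs. (14)-(17)."""
    same = (comb(n - d, 2) + comb(d, 2)) / comb(n, 2)    # Eq. (14)
    diff = d * (n - d) / comb(n, 2)                      # Eq. (16)
    return same / 2, diff / 2, same / 2                  # Eqs. (15), (17)

def maxcut_cost_distribution(m):
    """Eq. (13): P(c) = C(m, c) 2^{-m}."""
    return np.array([comb(m, c) for c in range(m + 1)]) / 2 ** m

def brute_force_pair_probs(n, d):
    """Fractions of (x, y, edge) triples with d(x, y) = d where the edge is cut by both x and y,
    by x only, or by neither."""
    both = x_only = neither = total = 0
    for x in range(2 ** n):
        for flipped in combinations(range(n), d):
            y = x
            for f in flipped:
                y ^= 1 << f
            for i, j in combinations(range(n), 2):
                cut_x = ((x >> i) ^ (x >> j)) & 1
                cut_y = ((y >> i) ^ (y >> j)) & 1
                both += cut_x & cut_y
                x_only += cut_x & (1 - cut_y)
                neither += (1 - cut_x) & (1 - cut_y)
                total += 1
    return both / total, x_only / total, neither / total

n = 6
for d in range(n + 1):
    print(d, maxcut_pair_probs(n, d), brute_force_pair_probs(n, d))
```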

III.2 MaxE3Lin2/Max-3-XOR

We next consider the MaxE3Lin2 problem which generalizes MaxCut. QAOA for MaxE3Lin2 was analyzed by Farhi et al. [2], who showed an advantage over classical approximation algorithms, only to inspire better classical approaches [40, 41]. The analogous random class of MaxE3Lin2 problems has cost function

$$c(x) = \sum_{\{i,j,k\} \in E} \frac{1 + b_{ijk}\, z_i z_j z_k}{2}, \qquad z_i = (-1)^{x_i} \tag{18}$$

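where $E$ is a set of $m$ randomly chosen triples of variables (hyperedges of size 3), and the $b_{ijk}$ are independent random variables with equal probability of being $+1$ or $-1$.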

Using Eq. (18) we can again calculate the relevant probability distributions. First note that a random bitstring satisfies an individual clause (i.e., a term in the sum) with probability $1/2$, as $z_i z_j z_k$ has an equal probability of being $+1$ or $-1$. Thus,

$$P(c) = \binom{m}{c}\, 2^{-m} \tag{19}$$

using the binomial distribution. Then we note that, as in Sec. III.1, the probability that $z_i z_j z_k$ takes the same value for two random bitstrings with $d(x,y) = d$ is given by

$$P_{\text{same}}(d) = \frac{\binom{n-d}{3} + \binom{n-d}{1}\binom{d}{2}}{\binom{n}{3}} \tag{20}$$

since the value of $z_i z_j z_k$ is preserved if none or two of $i, j, k$ are flipped, which is satisfied if $i, j, k$ are all from the common bits, or if exactly two of $i, j, k$ are chosen from the differing bits. Thus, we can easily compute $p_{11} = p_{00} = P_{\text{same}}(d)/2$, since a preserved clause is equally likely to be satisfied or unsatisfied. $p_{10}$ can then be calculated by a similar argument or by taking $p_{10} = (1 - p_{11} - p_{00})/2$. We note that the probability distributions calculated here are exactly equivalent to those for the Max-3-XOR problem, where all $b_{ijk}$ in Eq. (18) are fixed to the same value.

III.3 MaxEkLin2/Max-k-XOR

The MaxEkLin2 problem is a further generalization of MaxE3Lin2 for each fixed $k$, where we replace $z_i z_j z_k$ with $z_{i_1} z_{i_2} \cdots z_{i_k}$ in the cost function and the sum is taken over hyperedges of size $k$. This class of problems was previously studied for QAOA in [42, 43]. For each $k$, $P(c)$ is the same as for MaxE3Lin2 above. However, $P_{\text{same}}(d)$ is given by

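$$P_{\text{same}}(d) = \frac{1}{\binom{n}{k}} \sum_{\substack{j = 0 \\ j\ \text{even}}}^{k} \binom{d}{j} \binom{n-d}{k-j} \tag{21}$$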

where the terms in the sum represent all possible ways to choose an even number $j$ of the $k$ bits in the clause from the $d$ differing bits (with the remaining $k-j$ bits chosen from the $n-d$ common bits). Then, as before, $p_{11} = p_{00} = P_{\text{same}}(d)/2$ and $p_{10} = (1 - P_{\text{same}}(d))/2$. A similar calculation for the Max-k-XOR problem again yields identical probability distributions.
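Eq. (21) reduces to Eq. (20) at $k = 3$ and to Eq. (14) at $k = 2$, and it can be checked by directly counting $k$-subsets of variables that contain an even number of flipped positions. A small hedged sketch (hypothetical function names):

```python
from itertools import combinations
from math import comb

def xor_same_prob(n, d, k):
    """Eq. (21): probability that a random k-variable parity is unchanged between x and y with d(x, y) = d."""
    return sum(comb(d, j) * comb(n - d, k - j) for j in range(0, k + 1, 2)) / comb(n, k)

def xor_pair_probs(n, d, k):
    """(p11, p10, p00): an unchanged parity clause is satisfied by both or by neither with equal
    probability; a changed one is satisfied by exactly one of x, y."""
    same = xor_same_prob(n, d, k)
    return same / 2, (1 - same) / 2, same / 2

def brute_force_same_prob(n, d, k):
    """Fraction of k-subsets meeting the flipped set {0, ..., d-1} in an even number of variables."""
    flipped = set(range(d))   # by symmetry, which d positions are flipped does not matter
    subsets = list(combinations(range(n), k))
    return sum(len(flipped & set(s)) % 2 == 0 for s in subsets) / len(subsets)

n = 7
print([round(xor_same_prob(n, d, 3), 4) for d in range(n + 1)])
```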

III.4 Rand-k-SAT

The case of random k-SAT is analyzed by Hogg in [3]. This cost function is defined as the sum over $m$ clauses, each a logical OR of $k$ variables randomly drawn from a set of $n$ variables, each of which may be negated. In the notation used in this paper, the distributions of interest are

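$$P(c) = \binom{m}{c} \left(1 - 2^{-k}\right)^{c} \left(2^{-k}\right)^{m-c}, \quad p_{00} = 2^{-k}\, \frac{\binom{n-d}{k}}{\binom{n}{k}}, \quad p_{10} = 2^{-k} \left(1 - \frac{\binom{n-d}{k}}{\binom{n}{k}}\right), \quad p_{11} = 1 - 2p_{10} - p_{00} \tag{22}$$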
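These expressions follow because a $k$-SAT clause is falsified by exactly one of the $2^k$ assignments to its variables, and two bitstrings at distance $d$ agree on all variables of a random clause with probability $\binom{n-d}{k}/\binom{n}{k}$. The following hedged sketch (hypothetical names; literal negations assumed uniformly random) compares these formulas with brute-force enumeration on a small instance.

```python
from itertools import combinations
from math import comb
import numpy as np

def ksat_pair_probs(n, d, k):
    """(p11, p10, p00) for a random k-SAT clause and bitstrings x, y with d(x, y) = d."""
    untouched = comb(n - d, k) / comb(n, k)   # clause variables avoid all d flipped positions
    p00 = 2.0 ** -k * untouched               # x falsifies, and y agrees with x on the clause
    p10 = 2.0 ** -k - p00                     # y falsifies but x satisfies (same as the reverse)
    return 1 - 2 * p10 - p00, p10, p00

def ksat_cost_distribution(m, k):
    """P(c): binomial with per-clause satisfaction probability 1 - 2^{-k}."""
    s = 1 - 2.0 ** -k
    return np.array([comb(m, c) * s ** c * (1 - s) ** (m - c) for c in range(m + 1)])

def brute_force_ksat(n, d, k):
    """Average over all x, all flipped sets of size d, all variable subsets, and all negation patterns."""
    counts = np.zeros(3)   # both satisfy, only x satisfies, neither satisfies
    total = 0
    for x in range(2 ** n):
        for flipped in combinations(range(n), d):
            y = x ^ sum(1 << f for f in flipped)
            for s in combinations(range(n), k):
                for neg in range(2 ** k):
                    # the clause is falsified exactly when each variable equals its negation bit
                    fx = all(((x >> v) & 1) == ((neg >> t) & 1) for t, v in enumerate(s))
                    fy = all(((y >> v) & 1) == ((neg >> t) & 1) for t, v in enumerate(s))
                    counts += [not fx and not fy, not fx and fy, fx and fy]
                    total += 1
    return tuple(counts / total)

n, k = 5, 3
for d in range(n + 1):
    print(d, ksat_pair_probs(n, d, k), brute_force_ksat(n, d, k))
```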

IV Homogeneous Heuristic for Parameter Setting

Leveraging the Classical Homogeneous Proxy for QAOA, here we propose a strategy for finding good algorithm parameters, which we call the Homogeneous Heuristic for Parameter Setting, as pictured in Fig. 1 and formalized in Algorithm 3.

Here, a “suitable parameter update scheme” is intended to encapsulate a wide variety of general or specific approaches proposed in the literature, “Desired” denotes that the while loop can be iterated until the update scheme terminates or some desired convergence criterion is met, and “Constraints on $(\boldsymbol{\gamma},\boldsymbol{\beta})$” denotes any restrictions on the domain of values allowed for $(\boldsymbol{\gamma},\boldsymbol{\beta})$, including restrictions to schedules of a prescribed form such as the linear ramps introduced in Sec. V.2.

With the heuristic, we replace the typical cost expectation value with the homogeneous parameter objective function, where each function evaluation takes polynomial time, as determined by Algorithms 1 and 2. This heuristic is purposefully defined in broad terms, in order to maintain complete freedom in the choice of parameter update schemes. Thus, one can still apply a myriad of approaches explored in the parameter setting literature, such as parameter initialization, re-parameterization, and the use of different global or local optimization algorithms.
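As an illustration of Algorithm 3, the sketch below maximizes the homogeneous parameter objective function for MaxCut with $m$ clauses on $n$ variables. It is a hedged example, not the authors' implementation: the update scheme is a plain central-difference gradient ascent that only accepts improving steps (a stand-in for any suitable parameter update scheme), the linear-ramp initialization and all function names are assumptions, and the distributions are recomputed here from the MaxCut expressions of Sec. III.1 so that the block runs on its own.

```python
from math import comb
import numpy as np

def maxcut_tables(n, m):
    """N[cp, d, c] and P[c] for MaxCut via Eqs. (11) and (13)-(17)."""
    P = np.array([comb(m, c) for c in range(m + 1)]) / 2 ** m
    N = np.zeros((m + 1, n + 1, m + 1))
    for d in range(n + 1):
        same = (comb(n - d, 2) + comb(d, 2)) / comb(n, 2)
        p11, p10, p00 = same / 2, (1 - same) / 2, same / 2
        for cp in range(m + 1):
            for c in range(m + 1):
                joint = sum(comb(m, i) * comb(m - i, c - i) * comb(m - c, cp - i)
                            * p11 ** i * p10 ** (c + cp - 2 * i) * p00 ** (m - c - cp + i)
                            for i in range(max(0, c + cp - m), min(c, cp) + 1))
                N[cp, d, c] = comb(n, d) * joint / P[cp]
    return N, P

def proxy_objective(params, N, P, n):
    """Homogeneous parameter objective, Eq. (6), via the Eq. (5) recursion; params = (gammas, betas)."""
    p = len(params) // 2
    costs, d = np.arange(len(P)), np.arange(n + 1)
    Q = np.full(len(P), 2.0 ** (-n / 2), dtype=complex)
    for gamma, beta in zip(params[:p], params[p:]):
        u = np.cos(beta) ** (n - d) * (-1j * np.sin(beta)) ** d
        Q = np.einsum("d,pdc,c->p", u, N, np.exp(-1j * gamma * costs) * Q)
    return float(np.sum(2.0 ** n * P * np.abs(Q) ** 2 * costs))

def linear_ramp(p):
    """Assumed initial schedule: gammas ramp up while betas ramp down."""
    return np.concatenate([np.linspace(0.1, 0.4, p), np.linspace(0.4, 0.1, p)])

def homogeneous_heuristic(n, m, p, steps=100, lr=0.01, h=1e-5):
    """Algorithm 3 sketch: maximize the proxy objective; the result is then handed to QAOA."""
    N, P = maxcut_tables(n, m)
    f = lambda th: proxy_objective(th, N, P, n)
    params = linear_ramp(p)
    best = f(params)
    for _ in range(steps):
        grad = np.array([(f(params + h * e) - f(params - h * e)) / (2 * h) for e in np.eye(2 * p)])
        trial = params + lr * grad
        value = f(trial)
        if value > best:
            params, best = trial, value   # accept improving steps only
        else:
            lr /= 2                       # otherwise shrink the step size
    return params, best

params, value = homogeneous_heuristic(n=8, m=14, p=2)
print(params, value)
```

Because only improving steps are accepted, the returned proxy value is never below that of the initial schedule; any other update scheme could be substituted without changing the proxy itself.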

On the other hand, we emphasize that while our approach can significantly speed up the parameter setting task, it is by no means a panacea. Indeed, in cases where the number of parameters to optimize grows with the problem size (e.g., when ), parameter setting generically suffers from the curse of dimensionality, as well as other potential difficulties such as barren plateaus or plentiful local optima. Hence it is well-motivated to incorporate into our approach a variety of parameter setting strategies or approximations that seek to ameliorate these difficulties.

V Numerically Investigating the Classical Homogeneous Proxy for QAOA for MaxCut

In this section, we explore the application of the Classical Homogeneous Proxy for QAOA to MaxCut on Erdős-Rényi model graphs as considered in Sec. III.1. We first numerically study the accuracy of replacing distributions with distributions as calculated via the methods presented in Sec. III. We then numerically show that the proxy maintains large overlaps with full classical statevector simulation of QAOA for certain parameter schedules. Finally, we provide a toy example for a small graph at , empirically showing that the homogeneous and typical parameter objective functions correlate significantly for important parameter regimes.

V.1 Viability of Replacement Distance and Cost Distributions

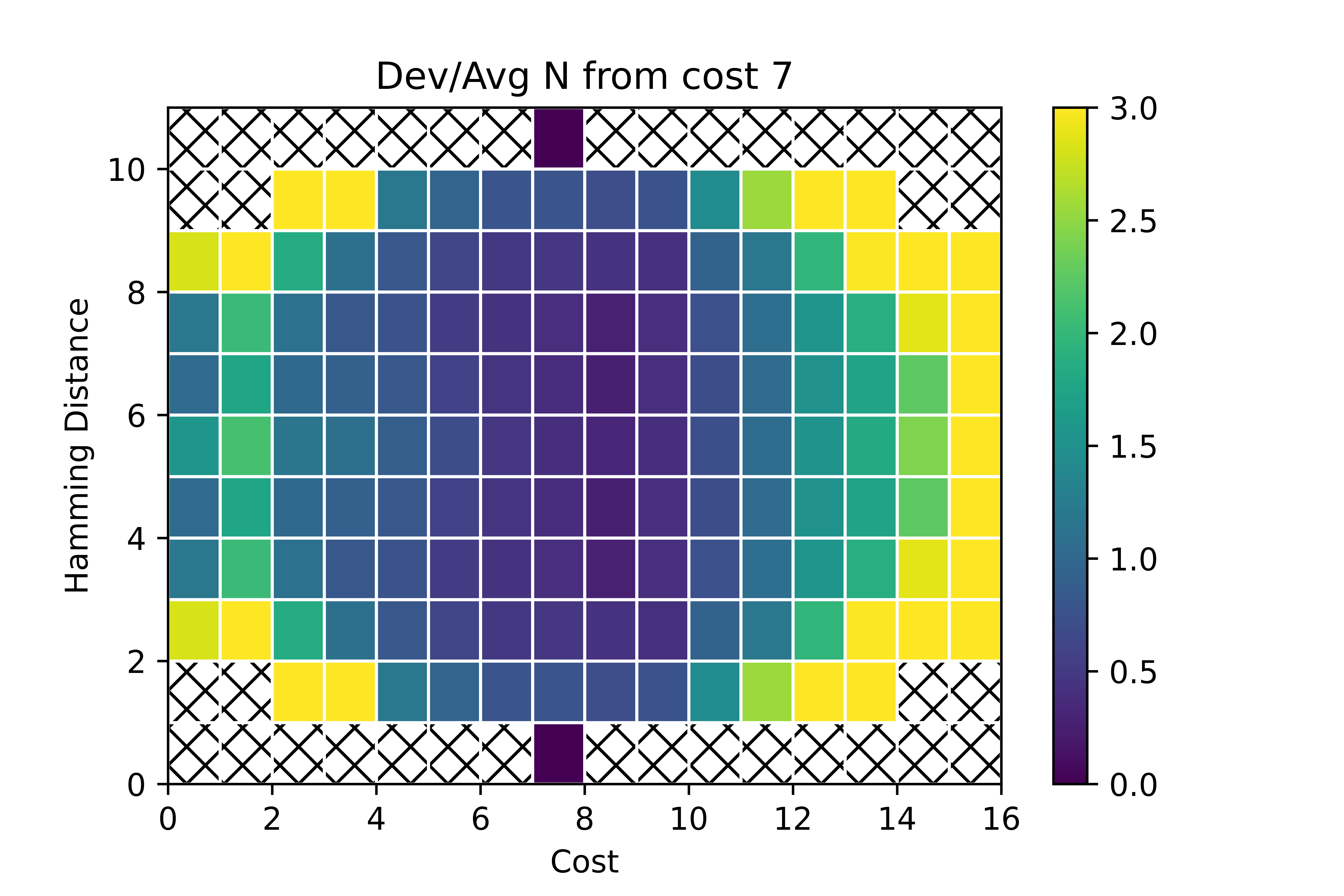

For these experiments, we first choose ten Erdős-Rényi model graphs and calculate the for all bitstrings in , all d in and all in , with being an upper bound on the maximum cost (here we use , as described in Sec. III.1, in this case ). For each cost , we consider for all states with cost across all graphs. In order to evaluate the viability of replacing with , we present the following intuition: the better that estimates the average of over all with , and the less that deviates over with , the better should estimate for all with . We thus aim to demonstrate numerically both the extent to which the analytically derived estimate the average and the extent to which deviates from its average. We first address the latter. To do this, we take the element-wise averages of these distributions; this average is one way of computing the distributions , as described in Sec. II.1. We also take the element-wise standard deviations of these distributions. In Fig. 2 we display the element-wise ratio of standard deviation to mean of these distributions for , chosen because is maximal near , such that this term has large weight in Eq. (5).

From the figure, we see that for costs near () and distances near (), there is minimal deviation in the distributions among multiple instances of Erdős-Rényi model graphs and multiple bitstrings with cost . The relative deviation is highest at the edges of the plot, where , , , and . At these points, however, the expected value of is smaller, so the contribution of these deviations to the sum in Eq. (4) is less than that of terms with distance and cost near the center of the distribution. As an example, there are bitstrings at distance from a given bitstring , as opposed to bitstrings at distance . A similar argument can be made using the values of derived in Sec. III. This numerical evidence thus suggests that replacing with determined via an averaging over all with cost over multiple instances may introduce deviations from Eq. (5) precisely in the cases that contribute less to Eq. (2), allowing for near-homogeneous evolution.
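The averaging behind Fig. 2 can be sketched in Python as follows. The sketch rests on these illustrative assumptions:

- Graphs are sampled from G(n, p) with our own choice of n, p, and seed.
- The per-bitstring distribution is the count of bitstrings at Hamming distance d with cut value c'.
- The cost axis runs up to the trivial bound n(n-1)/2.
- Bitstrings with the chosen cost are pooled across all graphs.
- The relative deviation is the element-wise standard deviation divided by the element-wise mean, reported as NaN where the mean is zero.

```python
import numpy as np


def gnp_edges(n, p, rng):
    """Edge list of one Erdős-Rényi G(n, p) sample."""
    return [(i, j) for i in range(n) for j in range(i + 1, n) if rng.random() < p]


def per_bitstring_counts(n, edges, max_cost):
    """Return costs[x] and T[x, d, c'] = number of bitstrings at Hamming
    distance d from x whose cut value is c'."""
    z = np.arange(2**n)
    bits = (z[:, None] >> np.arange(n)) & 1
    costs = np.zeros(2**n, dtype=int)
    for i, j in edges:
        costs += bits[:, i] != bits[:, j]
    popcount = np.array([bin(v).count("1") for v in z])
    dist = popcount[z[:, None] ^ z[None, :]]
    rows, cols = np.broadcast_arrays(z[:, None], costs[None, :])
    T = np.zeros((2**n, n + 1, max_cost + 1))
    np.add.at(T, (rows, dist, cols), 1.0)
    return costs, T


def relative_deviation(n, p, num_graphs, cost, seed=0):
    """Element-wise mean and std/mean of T[x] over all x with the given cost,
    pooled across num_graphs random graphs (NaN where the mean is zero)."""
    rng = np.random.default_rng(seed)
    max_cost = n * (n - 1) // 2  # trivial upper bound on any cut value
    pooled = []
    for _ in range(num_graphs):
        costs, T = per_bitstring_counts(n, gnp_edges(n, p, rng), max_cost)
        pooled.append(T[costs == cost])
    samples = np.concatenate(pooled)  # (#bitstrings with this cost, n + 1, max_cost + 1)
    mean, std = samples.mean(axis=0), samples.std(axis=0)
    with np.errstate(invalid="ignore", divide="ignore"):
        ratio = np.where(mean > 0, std / mean, np.nan)
    return mean, ratio


if __name__ == "__main__":
    n = 8
    mean, ratio = relative_deviation(n=n, p=0.5, num_graphs=10, cost=6)
    print(np.round(ratio[n // 2], 2))  # relative deviation at distance n/2
```

Summing `mean[d]` over the cost axis always returns C(n, d). This is one way to see the weighting argument above: distances in the bulk carry far more bitstrings than those near 0 or n.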

This result provides evidence that the deviation in is small for the “bulk” of contributions to the sum in Eq. (2), such that we can replace with , the average over with for the entire class of problems. Next, we examine how well the analytically derived expressions for in Sec. III estimate these averaged distributions. We compare computed by averaging against the methods of Sec. III for MaxCut on Erdős-Rényi model graphs in Fig. 3, showing the Pearson correlation coefficient between the two distributions for each in . We likewise display , in order to elucidate the dominant distributions in the sum of Eq. (5).

From the figure we see that for dominant terms (with high ), the two distributions align well visually, corresponding to a high correlation coefficient. For less important terms, the analytical distributions do not match the average over many instances, but the effect of this mismatch may be reduced due to the lesser weight on these terms as determined by in the sum of Eq. (5).

Combined, Figs. 2 and 3 show that for dominant terms, there is little deviation in distributions from their average , and the analytically computed distributions match these average distributions well. Together, they indicate that the analytical methods for calculating should approximate with for terms that dominate the sum in Eq. (5).

V.2 Numerical Overlaps

To further investigate the viability of the Classical Homogeneous Proxy for QAOA, we perform numerical simulations of the proxy on MaxCut on Erdős-Rényi graphs , and display the squared overlap between classical full statevector simulation and the proxy as a function of for various parameter schedules motivated by the QAOA literature. For this analysis, we choose linear ramp parameter schedules, inspired by quantum annealing. In particular, we fix a starting and ending point for and , which are kept constant regardless of , and the schedule is defined as

| (23) |

for each layer . Given these linear ramp schedules, the squared overlaps between the replaced and original quantities are calculated as follows. First, we perform statevector simulation using HybridQ, an open-source package for large-scale quantum circuit simulation [44]. Next, we compute the distributions according to Sec. III. Then, for the purposes of comparison, we compute the proxy slightly differently from above, by starting with the uniform superposition over all bitstrings and simply plugging in for all in Eq. (4), keeping all amplitudes at each step . This allows us to compute the overlap between the proxy and the true state using the standard quantum state overlap, although it forgoes the complexity savings of evolving the proxy over only the set of unique costs, since we are working with bitstrings rather than costs. Using this method, we plot the squared overlaps between the replaced and original quantities as a function of with varying values of and in Fig. 4.
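The two ingredients of this comparison can be sketched in Python. The exact form of Eq. (23) may differ. The schedule below assumes one common linear-ramp form, with values spaced evenly from a fixed start to a fixed end (so γ_1 = γ_start and γ_p = γ_end, and likewise for β). The endpoint values in the usage lines are hypothetical. The overlap is the standard squared overlap |⟨ψ|φ⟩|^2 between normalized state vectors, which is insensitive to a global phase.

```python
import numpy as np


def linear_ramp(start, end, p):
    """Assumed linear-ramp schedule: p values spaced evenly from start to end
    (inclusive), so the same two endpoints define the schedule for every p."""
    return np.linspace(start, end, num=p)


def squared_overlap(psi, phi):
    """|<psi|phi>|^2 for two state vectors, normalizing both first."""
    psi = np.asarray(psi, dtype=complex)
    phi = np.asarray(phi, dtype=complex)
    inner = np.vdot(psi, phi)  # np.vdot conjugates its first argument
    return float(abs(inner) ** 2 / (np.vdot(psi, psi).real * np.vdot(phi, phi).real))


if __name__ == "__main__":
    p = 5
    gammas = linear_ramp(0.1, 0.8, p)  # hypothetical endpoints
    betas = linear_ramp(0.7, 0.05, p)
    print(gammas, betas)
    print(squared_overlap([1, 1j], [1j, -1]))  # same state up to a global phase: 1.0
```

Because only the two endpoints per angle are free, this form also gives the reduction to four parameters used in Sec. VI.2.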

From the figure, we can see that the overlap gradually decreases as the number of QAOA layers increases. However, the decline is less dramatic when and are lower in magnitude. Thus, we see that as we move towards the regime for these problems (or, more precisely, [39]), the true QAOA state remains closer to the proxy even as the algorithm progresses, as remarked in Sec. II. We stress that this behavior is empirical and the numerics are limited to the MaxCut examples analyzed presently. This behavior does, however, align with the analytical fact mentioned in Sec. II that to first order in , QAOA states are Perfectly Homogeneous for strictly k-local Hamiltonians.

V.3 Parameter Objective Function Landscapes at Low Depth

To provide an explicit illustration of the efficacy of the Homogeneous Heuristic for Parameter Setting in Alg. 3, we depict both the typical and homogeneous parameter objective functions as functions of and for a randomly drawn graph and QAOA with . In this example, our aim is to visualize similarities between the two parameter objective functions rather than to exhaustively find optimal parameters for the graph. As such, we borrow , , and , optimized from a node instance in Sec. VI.1. These landscapes are shown in Fig. 5.

It is visually clear that the two landscapes have significant differences. For the typical parameter objective function, there exist a clearly defined, Gaussian-like peak (yellow) and valley (blue). For the homogeneous parameter objective function, there exists a similarly located peak, albeit vertically compressed, and the corresponding valley comprises almost the entire rest of the landscape. However, we can see that in the small regime in particular, the landscapes look qualitatively very similar. This is consistent with Fig. 4, which shows quantitatively that the extent to which the Classical Homogeneous Proxy for QAOA overlaps with the true QAOA evolution grows as decreases. Additionally, as seen in previous studies of QAOA parameters [13, 14, 11, 10], optimal values of tend to remain relatively small (exact values are not described as they depend on , , and the cost function being used), especially at the beginning of the algorithm. This suggests that, while the homogeneous and typical parameter objective functions may deviate significantly in general, they maintain significant correlations in relevant parameter regimes, specifically those near the maximum in the landscape. Indeed, for the task of parameter setting, we expect qualitative feature mapping of the landscape to be much more important than a precise matching of objective function values.

It is also worthwhile to consider the difference in computational resources needed to produce the two plots. For the typical parameter objective function, in order to classically evaluate the evolution of the algorithm in each cell of the presented 30 by 30 landscapes, we perform full statevector simulation. Farhi et al. show in [2] that in order to compute Pauli observable expectation values for the typical parameter objective function, one only needs to include qubits that are within the reverse causal cone generated by the qubits involved in the observable and the quantum circuit implementing QAOA. However, for the example analyzed here, at , this reverse causal cone includes all qubits for each observable, so a full-state simulation is required to compute the evolution classically. In this setting, deriving the evolution of the proxy took roughly one-fiftieth of the time required for simulating full QAOA. We note that it is possible to efficiently evaluate each cell on an actual quantum computer, and that if one only wants expectation values given parameters rather than the full state evolution, there are more efficient classical methods (e.g., [2, 31]). However, evaluation on current quantum hardware is hampered by noise arising both from finite sampling error and from imperfect quantum hardware.

VI Results

In this section we present numerical evidence supporting the Homogeneous Heuristic for Parameter Setting, again using MaxCut on Erdős-Rényi model graphs as a target application. Due to the array of possible techniques implementing the parameter update scheme as mentioned in Sec. IV, we do not wish to provide an exhaustive comparison of the heuristic to previous literature, but rather demonstrate regimes where the heuristic provides parameters that are either comparable with previous results, or that yield increasing performance out to larger values of where we are not aware of prior methods in the literature successfully returning well-performing parameter schedules.

VI.1 Global Optimization at Low-Depth

Here we present results of the Homogeneous Heuristic for Parameter Setting, as well as comparisons to the transfer of parameters method outlined in Lotshaw et al. [10], implemented using the QAOAKit software package [11]. Lotshaw et al. show that using one set of median (over the entire dataset at a given and ) parameters performs similarly to optimized parameters for each instance. Thus, we directly pull the obtained parameters from QAOAKit, which first calculates optimal parameters for all connected non-isomorphic graphs up to size at ,, and . For each , the median over all parameters is calculated, and these median parameters are directly applied to ten Erdős-Rényi graphs from , yielding average and standard deviation of expectation values for these median parameters over the ten graphs. To compare with these transferred parameters, we display the approximation ratio achieved by parameters that are optimized with the heuristic, as described in Sec. IV, over the same ten graphs. Here the approximation ratio is defined as follows,

| (24) |

i.e., the expected cost value returned by true QAOA, divided by the true optimal value , as determined via brute force search over all bitstrings. For this experiment, as well as all experiments below, the state throughout the algorithm and are computed via full statevector simulation, even for parameters returned via the heuristic. The comparison between the heuristic and parameter transfer is shown in Fig. 6.
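As a small worked check of this definition, the Python sketch below computes the approximation ratio from a probability distribution over bitstrings and the brute-force optimum. The triangle example is ours. Under the uniform superposition (the p = 0 QAOA state), six of the eight bitstrings cut two edges and two cut none, so the expected cost is 12/8 = 1.5 and the ratio is 1.5/2 = 0.75.

```python
import numpy as np


def maxcut_costs(n, edges):
    """Cut value of every bitstring z in 0..2**n - 1 (bit j of z is x_j)."""
    bits = (np.arange(2**n)[:, None] >> np.arange(n)) & 1
    costs = np.zeros(2**n, dtype=int)
    for i, j in edges:
        costs += bits[:, i] != bits[:, j]
    return costs


def approximation_ratio(probs, costs):
    """Expected cost under `probs` divided by the brute-force optimum max(costs)."""
    probs = np.asarray(probs, dtype=float)
    return float(probs @ costs / costs.max())


if __name__ == "__main__":
    n, edges = 3, [(0, 1), (1, 2), (0, 2)]  # triangle
    costs = maxcut_costs(n, edges)
    uniform = np.full(2**n, 1 / 2**n)  # output distribution of the p = 0 state
    print(approximation_ratio(uniform, costs))  # 0.75
```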

As we can see in Fig. 6, for low depth, the heuristic performs comparably to parameter transfer. On an instance-by-instance basis, the approximation ratio achieved by homogeneous parameter setting minus that achieved by parameter transfer was , , and for , , and , respectively. We do not see statistically significant differences between the two methods for any of the three depths analyzed, although the average performance of the heuristic is slightly higher in the latter two cases. This numerical evidence indicates that the method is competitive. Furthermore, the optimal parameters in the transfer case require the optimization of smaller QAOA instances, which may incur a tradeoff between the size of problem one wishes to train parameters on and the accuracy of the parameter transfer onto larger and larger instances. The parameters for comparison were pulled directly from QAOAKit data tables, so our purpose here is not to provide a full timing comparison between the two methods. Nevertheless, this demonstrates that our polynomially-scaling heuristic performs comparably with other techniques used in the literature.

VI.2 Parameter Optimization at Higher Depth

To elucidate how the Homogeneous Heuristic for Parameter Setting scales with QAOA depth , we further depict box plots of the approximation ratio for a new set of ten Erdős-Rényi graphs at up to . For these experiments, we further restrict to linear ramp parameter schedules as described in Eq. (23), to reduce the number of parameters from to . We introduce this re-parameterization because having free parameters, even for this moderately sized , results in optimization routines that do not converge in a reasonable time on the device specified below. The results for these runs are shown in Fig. 7.

From this figure, we see that the heuristic, when implemented with linear ramp schedules, results in monotonic improvement of approximation ratios as increases. Notably, for this regime of , , we were not able to find previous works that efficiently returned optimized parameter schedules, even when restricted to linear ramps. Thus, these results demonstrate a regime in which the heuristic returns parameters that appear out of reach for other current devices and strategies, whether quantum, classical, or hybrid.

Numerical details: For our simulations in this section, all calculations (excluding those pulled from the QAOAKit database) were performed using a laptop with an Intel i7-10510U CPU @ 1.80 GHz and 16 GB of RAM, with no parallelization utilized. For the node graphs, all experiments clocked in under hours, where the longest times were for fully parameterized circuits (6 parameters). Parameters were seeded using linear ramp schedules from [13] and parameter optimization was performed using the standard Broyden–Fletcher–Goldfarb–Shanno algorithm [45] from the SciPy package [46].

VII Discussion

In this work we formalized the concepts of Perfect Homogeneity and the Classical Homogeneous Proxy for QAOA. We demonstrated how to derive the necessary quantities and efficiently evaluate the proxy for constraint satisfaction problems with a fixed, polynomial number of randomly chosen clauses. We then provided numerical evidence to support the use of the proxy for estimating the evolution and cost expectation value of QAOA. Finally, we applied these results to construct the Homogeneous Heuristic for Parameter Setting, and implemented this strategy for a class of MaxCut instances on graphs up to and . Our results show that the heuristic on this class easily yields parameters at , , and that are comparable to those returned by parameter transfer. We further demonstrated that we are able to optimize parameters out to by restricting to a linear ramp schedule, obtaining the desirable property of monotonically increasing approximation ratios as the number of QAOA layers is increased. Notably, we found that the proxy appears to estimate both the state and the cost expectation of QAOA well when remains relatively small throughout the algorithm, as well as for quantum annealing-inspired linear ramp schedules. These ramp schedules have been frequently proposed as empirically well-performing schedules [13, 10, 26], which suggests that the proxy may estimate QAOA expectation values more accurately for important parameter regimes and schedules of interest, even if these estimates may diverge somewhat for arbitrarily chosen parameters.

Several interesting research questions and future directions directly follow from our results. An immediate question is to better understand the relationship between the problem class specified, the resulting distributions and used for the proxy, and the effect on the parameters returned by the Homogeneous Heuristic for Parameter Setting, especially with respect to a given problem instance to be solved. For example, a fixed instance can be drawn from a number of different possible classes, so changing the class considered can have a significant effect on the parameters returned and resulting performance. One approach to address this issue would be to extend the derivations of and to incorporate instance-specific information beyond just the problem class. A naive example in this vein would be to estimate the distributions via Monte Carlo sampling of bitstrings and their costs for the given instance. Furthermore, including instance-specific information appears to be a promising route to explicitly extending the heuristic beyond random problem classes, which could be used to study parameter schedules and performance in the worst-case setting. Finally, it is worthwhile to explore adaptations of our approach to cases where the number of unique possible costs may become large. In this case, one could imagine binning together costs close in value to produce an effective cost function with far fewer possible costs, to which the proxy may then be applied.

In terms of generalizing both the methods and scope of our approach, we first re-emphasize that parameter optimization for parameterized quantum circuits consists of two primary components: a parameter update scheme outer loop, and a parameter objective function evaluation subroutine. The inner subroutine is typically evaluated using the quantum computer. The key idea of our approach is to replace the inner subroutine with an efficiently computable classical strategy based on the assumption of Perfect Homogeneity. Hence a natural extension is to consider other efficiently computable proxies for the inner loop. For example, in cases where the problem instance has a high degree of classical symmetry, the dimension of the effective Hilbert space can be drastically reduced, and so the evaluation and optimization of the typical parameter objective can be sped up significantly [23]. Similarly, different proxies may follow from related ideas and results in the literature such as the small-parameter analysis of [39], the pseudo-Boltzmann approximation of [38], and classical or quantum surrogate models [35, 36]. We remark that a promising direction that appears relatively straightforward in light of our results is to extend the analysis of [38] to QAOA levels beyond . Finally, an important direction is to explicitly generalize our approach to algorithms beyond QAOA and, more generally, problems beyond combinatorial optimization, such as the parameter setting problem for Variational Quantum Eigensolvers. Generally, it is important to better understand and characterize regimes where such classical proxies are most advantageous, such as when the noisy computation and measurements of real-world quantum devices are taken into account, as well as to what degree undesirable effects such as barren plateaus may apply when such proxies are utilized for parameter setting.

Acknowledgements

We are grateful for support from NASA Ames Research Center. We acknowledge funding from the NASA ARMD Transformational Tools and Technology (TTT) Project. Part of the project was funded by the Defense Advanced Research Projects Agency (DARPA) via IAA8839 annex 114. JS, SH, TH are thankful for support from NASA Academic Mission Services, Contract No. NNA16BD14C. We also acknowledge XSEDE computational Project No. TG-MCA93S030 on Bridges-2 at the Pittsburgh Supercomputing Center.

References

- [1] Stuart Hadfield, Zhihui Wang, Bryan O’Gorman, Eleanor G. Rieffel, Davide Venturelli, and Rupak Biswas. From the Quantum Approximate Optimization Algorithm to a Quantum Alternating Operator Ansatz. Algorithms, 12(2):34, February 2019.

- [2] Edward Farhi, Jeffrey Goldstone, and Sam Gutmann. A Quantum Approximate Optimization Algorithm. arXiv:1411.4028 [quant-ph], November 2014.

- [3] Tad Hogg. Quantum search heuristics. Physical Review A, 61(5):052311, April 2000.

- [4] Tad Hogg and Dmitriy Portnov. Quantum optimization. Information Sciences, 128(3):181–197, October 2000.

- [5] M. Cerezo, Andrew Arrasmith, Ryan Babbush, Simon C. Benjamin, Suguru Endo, Keisuke Fujii, Jarrod R. McClean, Kosuke Mitarai, Xiao Yuan, Lukasz Cincio, and Patrick J. Coles. Variational quantum algorithms. Nature Reviews Physics, 3(9):625–644, September 2021.

- [6] David Wierichs, Christian Gogolin, and Michael Kastoryano. Avoiding local minima in variational quantum eigensolvers with the natural gradient optimizer. Physical Review Research, 2(4):043246, November 2020. arXiv:2004.14666 [quant-ph].

- [7] Jarrod R. McClean, Sergio Boixo, Vadim N. Smelyanskiy, Ryan Babbush, and Hartmut Neven. Barren plateaus in quantum neural network training landscapes. Nature Communications, 9(1):4812, November 2018.

- [8] Martin Larocca, Piotr Czarnik, Kunal Sharma, Gopikrishnan Muraleedharan, Patrick J. Coles, and M. Cerezo. Diagnosing barren plateaus with tools from quantum optimal control. arXiv:2105.14377 [quant-ph], March 2022.

- [9] Samson Wang, Enrico Fontana, M. Cerezo, Kunal Sharma, Akira Sone, Lukasz Cincio, and Patrick J. Coles. Noise-induced barren plateaus in variational quantum algorithms. Nature Communications, 12(1):6961, November 2021.

- [10] Phillip C. Lotshaw, Travis S. Humble, Rebekah Herrman, James Ostrowski, and George Siopsis. Empirical performance bounds for quantum approximate optimization. arXiv:2102.06813 [quant-ph], February 2021.

- [11] Ruslan Shaydulin, Kunal Marwaha, Jonathan Wurtz, and Phillip C. Lotshaw. QAOAKit: A Toolkit for Reproducible Study, Application, and Verification of the QAOA. arXiv:2110.05555 [quant-ph], November 2021.

- [12] Sami Khairy, Ruslan Shaydulin, Lukasz Cincio, Yuri Alexeev, and Prasanna Balaprakash. Learning to Optimize Variational Quantum Circuits to Solve Combinatorial Problems. Proceedings of the AAAI Conference on Artificial Intelligence, 34(03):2367–2375, April 2020.

- [13] Leo Zhou, Sheng-Tao Wang, Soonwon Choi, Hannes Pichler, and Mikhail D. Lukin. Quantum Approximate Optimization Algorithm: Performance, Mechanism, and Implementation on Near-Term Devices. Physical Review X, 10(2):021067, June 2020.

- [14] Gavin E. Crooks. Performance of the Quantum Approximate Optimization Algorithm on the Maximum Cut Problem. arXiv:1811.08419 [quant-ph], November 2018.

- [15] Ruslan Shaydulin, Ilya Safro, and Jeffrey Larson. Multistart Methods for Quantum Approximate optimization. In 2019 IEEE High Performance Extreme Computing Conference (HPEC), pages 1–8, September 2019.

- [16] Mahabubul Alam, Abdullah Ash-Saki, and Swaroop Ghosh. Accelerating quantum approximate optimization algorithm using machine learning. In 2020 Design, Automation & Test in Europe Conference & Exhibition (DATE), pages 686–689. IEEE, 2020.

- [17] Guillaume Verdon, Michael Broughton, Jarrod R. McClean, Kevin J. Sung, Ryan Babbush, Zhang Jiang, Hartmut Neven, and Masoud Mohseni. Learning to learn with quantum neural networks via classical neural networks. arXiv:1907.05415 [quant-ph], July 2019.

- [18] Max Wilson, Rachel Stromswold, Filip Wudarski, Stuart Hadfield, Norm M. Tubman, and Eleanor G. Rieffel. Optimizing quantum heuristics with meta-learning. Quantum Machine Intelligence, 3(1):13, April 2021.

- [19] Andrea Skolik, Jarrod R McClean, Masoud Mohseni, Patrick van der Smagt, and Martin Leib. Layerwise learning for quantum neural networks. Quantum Machine Intelligence, 3(1):1–11, 2021.

- [20] Javier Rivera-Dean, Patrick Huembeli, Antonio Acín, and Joseph Bowles. Avoiding local minima in variational quantum algorithms with neural networks. arXiv preprint arXiv:2104.02955, 2021.

- [21] Lennart Bittel and Martin Kliesch. Training variational quantum algorithms is NP-hard. Physical Review Letters, 127(12):120502, 2021.

- [22] Michael Streif and Martin Leib. Training the quantum approximate optimization algorithm without access to a quantum processing unit. Quantum Science and Technology, 5(3):034008, May 2020.

- [23] Ruslan Shaydulin, Stuart Hadfield, Tad Hogg, and Ilya Safro. Classical symmetries and the Quantum Approximate Optimization Algorithm. Quantum Information Processing, 20(11):359, November 2021. arXiv:2012.04713.

- [24] Lucas T. Brady, Christopher L. Baldwin, Aniruddha Bapat, Yaroslav Kharkov, and Alexey V. Gorshkov. Optimal Protocols in Quantum Annealing and Quantum Approximate Optimization Algorithm Problems. Physical Review Letters, 126(7):070505, February 2021.

- [25] Stefan H. Sack and Maksym Serbyn. Quantum annealing initialization of the quantum approximate optimization algorithm. Quantum, 5:491, July 2021.

- [26] Jonathan Wurtz and Peter J. Love. Counterdiabaticity and the quantum approximate optimization algorithm. arXiv:2106.15645 [quant-ph], July 2021.

- [27] Fernando G. S. L. Brandao, Michael Broughton, Edward Farhi, Sam Gutmann, and Hartmut Neven. For Fixed Control Parameters the Quantum Approximate Optimization Algorithm’s Objective Function Value Concentrates for Typical Instances. arXiv:1812.04170 [quant-ph], December 2018.

- [28] Xinwei Lee, Yoshiyuki Saito, Dongsheng Cai, and Nobuyoshi Asai. Parameters Fixing Strategy for Quantum Approximate Optimization Algorithm. arXiv:2108.05288 [quant-ph], August 2021.

- [29] Alexey Galda, Xiaoyuan Liu, Danylo Lykov, Yuri Alexeev, and Ilya Safro. Transferability of optimal QAOA parameters between random graphs. arXiv:2106.07531 [quant-ph], June 2021.

- [30] Lucas T Brady, Lucas Kocia, Przemyslaw Bienias, Aniruddha Bapat, Yaroslav Kharkov, and Alexey V Gorshkov. Behavior of analog quantum algorithms. arXiv preprint arXiv:2107.01218, 2021.

- [31] Zhihui Wang, Stuart Hadfield, Zhang Jiang, and Eleanor G. Rieffel. Quantum approximate optimization algorithm for MaxCut: A fermionic view. Physical Review A, 97(2):022304, February 2018.

- [32] Kunal Marwaha. Local classical MAX-CUT algorithm outperforms p=2 QAOA on high-girth regular graphs. Quantum, 5:437, April 2021.

- [33] Panagiotis Kl Barkoutsos, Giacomo Nannicini, Anton Robert, Ivano Tavernelli, and Stefan Woerner. Improving Variational Quantum Optimization using CVaR. Quantum, 4:256, April 2020. arXiv:1907.04769 [quant-ph].

- [34] Li Li, Minjie Fan, Marc Coram, Patrick Riley, and Stefan Leichenauer. Quantum optimization with a novel Gibbs objective function and ansatz architecture search. Physical Review Research, 2(2):023074, April 2020.

- [35] Kevin J Sung, Jiahao Yao, Matthew P Harrigan, Nicholas C Rubin, Zhang Jiang, Lin Lin, Ryan Babbush, and Jarrod R McClean. Using models to improve optimizers for variational quantum algorithms. Quantum Science and Technology, 5(4):044008, 2020.

- [36] Ryan Shaffer, Lucas Kocia, and Mohan Sarovar. Surrogate-based optimization for variational quantum algorithms. arXiv:2204.05451 [quant-ph], April 2022.

- [37] Juliane Mueller, Wim Lavrijsen, Costin Iancu, et al. Accelerating noisy VQE optimization with gaussian processes. arXiv preprint arXiv:2204.07331, 2022.

- [38] Pablo Díez-Valle, Diego Porras, and Juan José García-Ripoll. QAOA pseudo-Boltzmann states. arXiv:2201.03358 [quant-ph], March 2022.

- [39] Stuart Hadfield, Tad Hogg, and Eleanor G Rieffel. Analytical framework for quantum alternating operator ansätze. arXiv preprint arXiv:2105.06996, 2021.

- [40] Boaz Barak, Ankur Moitra, Ryan O’Donnell, Prasad Raghavendra, Oded Regev, David Steurer, Luca Trevisan, Aravindan Vijayaraghavan, David Witmer, and John Wright. Beating the random assignment on constraint satisfaction problems of bounded degree. arXiv preprint arXiv:1505.03424, 2015.

- [41] Matthew Hastings. Classical and quantum bounded depth approximation algorithms. Quantum Information and Computation, 19:1116–1140, November 2019.

- [42] Kunal Marwaha and Stuart Hadfield. Bounds on approximating MaxXOR with quantum and classical local algorithms. Quantum, 6:757, 2022.

- [43] Chi-Ning Chou, Peter J Love, Juspreet Singh Sandhu, and Jonathan Shi. Limitations of local quantum algorithms on random Max-k-XOR and beyond. In 49th International Colloquium on Automata, Languages, and Programming (ICALP 2022), 2022.

- [44] Salvatore Mandrà, Jeffrey Marshall, Eleanor G. Rieffel, and Rupak Biswas. HybridQ: A Hybrid Simulator for Quantum Circuits. arXiv:2111.06868 [quant-ph], November 2021.

- [45] Roger Fletcher. Practical Methods of Optimization. Wiley, Chichester; New York, 1987.

- [46] Pauli Virtanen, Ralf Gommers, Travis E. Oliphant, Matt Haberland, Tyler Reddy, David Cournapeau, Evgeni Burovski, Pearu Peterson, Warren Weckesser, Jonathan Bright, Stéfan J. van der Walt, Matthew Brett, Joshua Wilson, K. Jarrod Millman, Nikolay Mayorov, Andrew R. J. Nelson, Eric Jones, Robert Kern, Eric Larson, C. J. Carey, İlhan Polat, Yu Feng, Eric W. Moore, Jake VanderPlas, Denis Laxalde, Josef Perktold, Robert Cimrman, Ian Henriksen, E. A. Quintero, Charles R. Harris, Anne M. Archibald, Antônio H. Ribeiro, Fabian Pedregosa, and Paul van Mulbregt. SciPy 1.0: fundamental algorithms for scientific computing in Python. Nature Methods, 17(3):261–272, March 2020.