A Novel Variational Lower Bound For

Inverse Reinforcement Learning

Abstract

Inverse reinforcement learning (IRL) seeks to learn the reward function from expert trajectories, to understand the task for imitation or collaboration thereby removing the need for manual reward engineering. However, IRL in the context of large, high-dimensional problems with unknown dynamics has been particularly challenging. In this paper, we present a new Variational Lower Bound for IRL (VLB-IRL), which is derived under the framework of a probabilistic graphical model with an optimality node. Our method simultaneously learns the reward function and policy under the learned reward function by maximizing the lower bound, which is equivalent to minimizing the reverse Kullback-Leibler divergence between an approximated distribution of optimality given the reward function and the true distribution of optimality given trajectories. This leads to a new IRL method that learns a valid reward function such that the policy under the learned reward achieves expert-level performance on several known domains. Importantly, the method outperforms the existing state-of-the-art IRL algorithms on these domains by demonstrating better reward from the learned policy.

1 Introduction

Reinforcement learning (RL) is a popular method for automating decision making and control. However, to achieve practical effectiveness, significant engineering of reward features and reward functions has traditionally been necessary. Recently, the advent of deep reinforcement learning has eased the need for feature engineering for policies and value functions, and demonstrated encouraging outcomes for various complex tasks such as vision-based robotic control and video games like Atari and Minecraft. Despite these advancements, reward engineering continues to be a significant hurdle to the application of reinforcement learning in practical contexts.

The problem at hand is to learn a reward function that explains the task performed by the expert, where the learner only has access to a limited number of expert trajectories and cannot ask for more data. While imitation learning is a popular technique for training agents to mimic expert behavior, conventional methods such as behavior cloning and generative adversarial imitation learning Ho & Ermon (2016) often do not explicitly learn the underlying reward function, which is essential for a deeper understanding of the task. To overcome this limitation, researchers have developed inverse reinforcement learning (IRL) to infer the reward function from expert trajectories. IRL offers several advantages over direct policy imitation, including the ability to analyze imitation learning (IL) algorithms, deduce agents’ intentions, and optimize rewards in new environments.

In this paper, we present a new probabilistic graphical model involving both the reward function and optimality as key random variables, which can serve as a framework for representing IRL as a probabilistic inference problem. Within the context provided by this model, our main contributions are:

-

1.

A lower bound on the likelihood of the reward model given the expert trajectories as data, which is derived from first principles, and involves minimizing the reverse Kullback–Leibler divergence between an approximated distribution of optimality given the reward function and the true distribution of optimality given trajectories.

-

2.

A novel IRL algorithm which learns the reward function as variational inference that optimizes the lower bound in domains where the state and action spaces can be continuous.

-

3.

Improved learning performance of the algorithm compared to state of the art techniques as seen through the policy given the learned reward, which is demonstrated on multiple well-known continuous domains of various complexity.

Our novel lower bound can not only serve as a point of departure for the formulation of other principled bounds but also stimulate discussions and design of new IRL methods that utilize such bounds.

2 A Novel Variational Lower Bound

Inspired by the significant impact of variational lower bounds such as ELBO in RL, we present a variational lower bound on the likelihood of the reward function. To derive this lower bound from first principles, we first introduce a graphical model to represent the problem in Section 2.1 followed by the derivation of the lower bound in Section 2.2. We discuss the appropriateness of an inherent approximation in Section 2.3. All proof can be found in Appendix A.

2.1 A Probabilistic Graphical Model of IRL

Inspired by the graphical model for forward RL as shown in Levine (2018) and a general lack of such modeling of IRL, we aim to fill this gap in this section. Recall that we are now interested in learning the reward function given the state and action trajectories from the expert. Therefore, it is necessary to embed the reward function explicitly in the graphical model, as shown in Figure 1. The reward value is conditioned on state and action respectively denoting the state and action in the trajectory. We introduce an optimality random variable , whose value is conditioned on the reward value . The optimality follows the definition of Levine (2018) in that the optimality variable is a binary random variable, where denoting that the observed action at time step is optimal given the state, and denotes that it is not optimal. We will discuss the reward function in more detail in Section 2.3.

The choice of a probabilistic graphical model to represent the relationships between the state, action, reward, and optimality variables in IRL is motivated by the desire to capture the complex dependencies between these variables in a lucid manner. Indeed, the graphical model has a natural property when it comes to analyzing optimality. Specifically, the graphical model represents the conditional independence of the optimality from the state and action variables, given the reward variable. In other words, when given the state-action pair , we sum over the reward value to get the marginal conditional distribution of the optimality , that is . However, if the reward value is given, then the state-action pair is of course unnecessary in order to get the marginal distribution of the optimality , that is .

Given the conditional independence in a probabilistic graphical model, which is similar to a Bayesian network, we may write:

| (1) |

which leads to the following marginal:

| (2) | ||||

2.2 Variational Lower Bound for IRL

We use the graphical models of Fig. 1 to formulate the log-likelihood of the observed trajectories. 111In practice, using full trajectories to estimate the reward function in IRL may lead to high variance especially when the trajectories are long. On the other hand, using single state-action pairs to infer the reward function can lead to more stable and efficient estimates, because each observed pair provides a separate estimate of the reward function that can be combined to reduce variance. Here, we are aided by Eqs. 1 and 2 in our derivation, which we detail below.

Here, we use variational distribution to approximate the true distribution . We may think of as an approximation of the true distribution of optimality given the trajectories, and we will discuss in more detail in Section 2.3. We may rewrite the equation above by introducing the expectation and applying Jensen’s inequality:

| (3) |

We end up with the following Evidence Lower BOund (ELBO):

| (4) |

The motivation for using to approximate is quite intuitive that both state-action pair and reward value can explain the optimality. An interpretation of is that the probability can be viewed as the confidence with which the state-action pair comes from the expert. Analogously, is the confidence with which the reward value belongs to the expert. Additionally, to optimize the reward function, we have to incorporate the reward function into the log-likelihood of trajectories.

Note that if we take the partial derivative of Eq. 4 with respect to , we obtain,

| (5) |

because the remaining terms in equation 3 are constant w.r.t. . The derivation from first principles above leads to the following theorem.

Theorem 1.

Optimizing the evidence lower bound of the log-likelihood of trajectories w.r.t is equivalent to optimizing the reverse KL divergence between and w.r.t .

2.3 Approximated Distribution of Optimality

Next, we discuss the approximation of the distribution over optimality under the framework of the probabilistic graphical model and offer insights.

Given a state-action pair obtained from some trajectory , informs whether the action is optimal at state , or in other words, whether the state-action pair comes from the expert’s trajectory. This term represents the true distribution in the reverse KL divergence contained in the lower bound. To make this classification, we may simply use binary logistic regression, . In algorithms such as GAIL and AIRL, the input to the classifier consists of trajectories from both the expert and the learner. The classifier utilizes these trajectories as input to make predictions about the optimality of the state-action pair.

| (6) |

Similarly, given reward value , the approximation informs whether the reward value leads to optimal behavior, i.e., whether it is induced by the expert or not.

Recall that the reward value is the feedback from the environment. Therefore, we propose this reward value as our first approach to estimate the optimality as defined in Eq. 7.

| (7) |

Note that the left hand side of Eq. 7 is conditioned on reward value , which is distributed according to the distribution . Thus, we may apply a parameterization trick to sample a specific reward value without losing track of the gradient propagation under the probabilistic graphical model. The dashed line in Fig. 1 denotes the reparameterization trick and represents the sampled specific reward value from the distribution of . To illustrate this, consider the simplistic case where the reward value distribution is a univariate Gaussian: and let . In this case, a valid reparameterization is (reward values are distributed around the mean), where is an auxiliary noise variable, .

However, it is not sufficient to use the reward values to represent the optimality because, in an optimal trajectory, not every state-action pair has the highest reward value at each time step; often we may perform an action that obtains longer term gain while forgoing greedy rewards. Hence, a more robust way of computing the optimality given the distribution over reward value is needed. Here, the advantage function is a candidate for the solution:

| (8) |

In practice, we can use actor-critic-based policy to retrieve the value function estimation, such as PPO, SAC, or TD3. . As the reward value is a component of the advantage function, we can continue to keep track of the reward function. Therefore, the optimality of reward value can be expressed as:

| (9) |

where and is the Sigmoid function to normalize the advantage function.

2.4 Understanding the Relationship between Two Distributions over Optimality

Notice that the two distributions over optimality and have different definitions according to Eqs. 1 and 2. In this subsection, we demonstrate the validity of using to approximate . We begin with Lemma 1 which establishes an upper bound that is utilized later, and whose proof is in the Appendix.

Lemma 1.

, where and denotes the variance of the distribution of .

We directly use Lemma 1 to arrive at the theorem below and present its proof in the appendix.

Theorem 2.

If , then the approximation distribution , where and , approximates the true distribution with an approximation error that is bounded by Var.

Note that the approximation error is bounded by the variance of instead of and the motivation of using reward to approximate state-action pair comes from the Figure.1, in which holds the connectivity that node is connected to node and node . Without the connectivity, even if the variance of is zero, the approximation error cannot be bounded. More detail can be found in Appendix A.2. It is also the connectivity that holds so that we can derive Eq. 1 and Eq. 2.

From Theorem 2, we know that we can use to approximate with a bounded approximation error w.r.t . Additionally, if the variance of is small enough, then we can use to estimate . Subsequently, the objective function of VLB-IRL is defined in the following:

| (10) |

where is the Sigmoid function to normalize the advantage function and is the hyperparameter to control the contribution of variance in the objective function.

2.5 The Reward Ambiguity Problem

In this section, we discuss the reward ambiguity problem. As deduced in Ho & Ermon (2016), IRL is a dual of an occupancy measure matching problem and the induced optimal policy is the primal optimum. In the following, we further draw the conclusion that IRL is a dual of the optimality matching problem and the reward function induced by the optimality best explains the expert .

Lemma 2.

is maximal if and only if for any given , the action from the expert among all actions has the highest probability, .

The lemma follows from the definition of the classifier as discussed in Section 2.3, which classifies whether the state-action pairs are from the expert or not.

Theorem 3.

The reward function best explains the expert policy if is maximal and is identical to , where .

2.6 The Noisy Expert Trajectories Problem

Recall that the objective function of VLB-IRL consists of a reverse KL divergence between and . Compared to the mean-seeking behavior induced by minimizing forward KL divergence, minimizing reverse KL divergence has the behavior that it is mode-seeking, which tends to be more robust to overfitting and can provide better estimates of the true posterior distribution if the posterior distribution is noisy. This is beneficial for IRL because trajectories could be noisy and may contain suboptimal actions, which challenges the learning. By emphasizing the modes of the data distribution, the reverse KL divergence helps identify the most likely explanations for the observed behavior, even in the presence of noise or uncertainty.

Compared to existing state-of-the-art algorithms, most of them use a single neural network to estimate the reward function. However, in VLB-IRL, we use two separate neural networks to update the reward function. The first neural network is the classifier, defined in Eq. 7. The second neural network is the approximation optimality, defined in Eq. 7 and Eq. 9. The architecture of two separate neural networks has a natural property that is robust to overfitting and has better generalization. It is essential for the IRL algorithm with the presence of noise in expert trajectories.

Another major difference is that most prior algorithms focus on learning identical state-action marginal distributions and consequently end up learning the expert’s noisy state-action representation as well. However, in VLB-IRL, since the true distribution represents the optimality conditioned on the state-action pair, it has the ability to distinguish and generalize the noisy trajectories.

3 VLB-IRL Algorithm

We present an algorithm, which we call variational lower bound for IRL, VLB-IRL (Algorithm 1), for optimizing Eq. 10. To do so, we introduce function approximations for and : we fit a parameterized policy with weights , a parameterized classifier with weights and a parameterized reward function with weights . From line 4 to Line 5, we collect learner policy trajectories and save them into the buffer. From line 8 to line 9, we update the classifier and reward function defined in Section 2.3. At line 10, we update the learner policy based on the learned reward function.

4 Experiments

To ascertain the efficiency of our method VLB-IRL, we use multiple Mujoco benchmark domains (Todorov et al., 2012), such as LunarLander, Hopper, Walker2d, HalfCheetah, and Ant. We also use another more realistic, robot-centered, and goal-based environment, Assistive Gym (Erickson et al., 2020), to further analyze the performance of VLB-IRL. In our experiments, we compare the average true reward accrued over 50 episodes by our method versus the current state-of-the-art methods such as AIRL (Fu et al., 2018a), -IRL (Ni et al., 2020), EBIL (Liu et al., 2021), IQ-Learn (Garg et al., 2021) and GAIL (Ho & Ermon, 2016). Through these experiments, we examine two main scenarios: VLB-IRL’s ability to learn an optimal reward function as compared to the baselines when noise-free optimal expert trajectories are available. VLB-IRL’s capacity to generalize and learn a robust reward function as compared to the baselines, when noisy expert trajectories are provided.

4.1 Mujoco domains

As part of our generalizability analysis, we consider both continuous and discrete control environments from the Mujoco library and use twin-delayed DDPG (TD3) (Fujimoto et al., 2018) and proximal policy optimization (PPO) (Schulman et al., 2017) for continuous and discrete domains respectively 222The same RL algorithms are used for expert trajectory generation.. We readily obtain the expertly trained RL models from the popular RL library RL-Baselines3 Zoo (Raffin, 2020) and generate several expert trajectories employing them. Using the generated expert trajectories, each method was trained with 5 random seeds and for each seed, the model with the highest return was saved. At the end of the training, we evaluate these models on 50 test episodes. We use MLP for the reward function approximation. The hyperparameters and other relevant details needed for reproducibility are provided in the Appendix B. and our code is openly available on GitHub333Double blind review note: we will share our code once our paper gets accepted.

Performance on optimal trajectories For our first set of experiments, we directly use the optimal expert trajectories obtained from the aforementioned pretrained models. Table 1 shows the summary of results obtained and the statistically best average reward accrued per domain is highlighted in bold. Our method VLB-IRL improves upon the baselines indicating that VLB-IRL’s learned reward function successfully explains the expert’s policy.

| Algorithm | LunarLander | Hopper | Walker2d | HalfCheetah | Ant |

|---|---|---|---|---|---|

| Random | |||||

| GAIL | |||||

| AIRL | |||||

| -IRL | - | ||||

| IQ-Learn | - | ||||

| VLB-IRL(ours) | |||||

| Expert |

Learning performance We find that VLB-IRL continues to perform well in a limited data regime. The empirical result demonstrates that VLB-IRL exhibits fairly good sample efficiency. With increasing amount of data, the standard deviation of the return from the learned policy becomes lower as shown in Figure 2, indicating better stability in the learned policy, which is a direct result of a better reward function. To further analyze the training progress of VLB-IRL, we use Inverse Learning Error (ILE) from Arora & Doshi (2021a), as where is the expert policy and is the learned policy. Here we use the norm and the result is presented in Figure 2. The decreasing ILE shows that both the learned reward function is getting closer to the true reward function and the learned policy is more similar to the expert policy.

Performance on noisy trajectories In order to further test VLB-IRL’s generalizability, in our second set of experiments, we provide noisy trajectories and examine if the learned reward function is robust enough to learn the noisy expert’s preferences and perhaps outperform them. The noisy trajectories are generated by using A2C for discrete environments and PPO for continuous environments in the library RL-Baselines3 Zoo. Compared to PPO for discrete environments and TD3 for continuous environments, they are less optimal and contain noisy actions in the trajectories. Compare the expert performance in Table 1 and Table 2 for detail. The results presented in Table 2 show that VLB-IRL’s accrues comparable or higher reward on average as compared to the noisy expert.

| Algorithm | LunarLander | Hopper | Walker2d | HalfCheetah | Ant |

|---|---|---|---|---|---|

| Random | |||||

| GAIL | |||||

| -IRL | - | ||||

| EBIL | |||||

| IQ-Learn | - | ||||

| VLB-IRL(ours) | |||||

| Noisy Expert |

For Hopper, HalfCheetah, and Ant environments, the VLB-IRL’s learned policy outperforms the noisy expert by , , and respectively. However, previous IRL techniques fail to generalize well when noisy trajectories are provided and we suspect that they fall short due to their optimization objective and divergence metric listed in Table 4.

4.2 Realistic Scenario

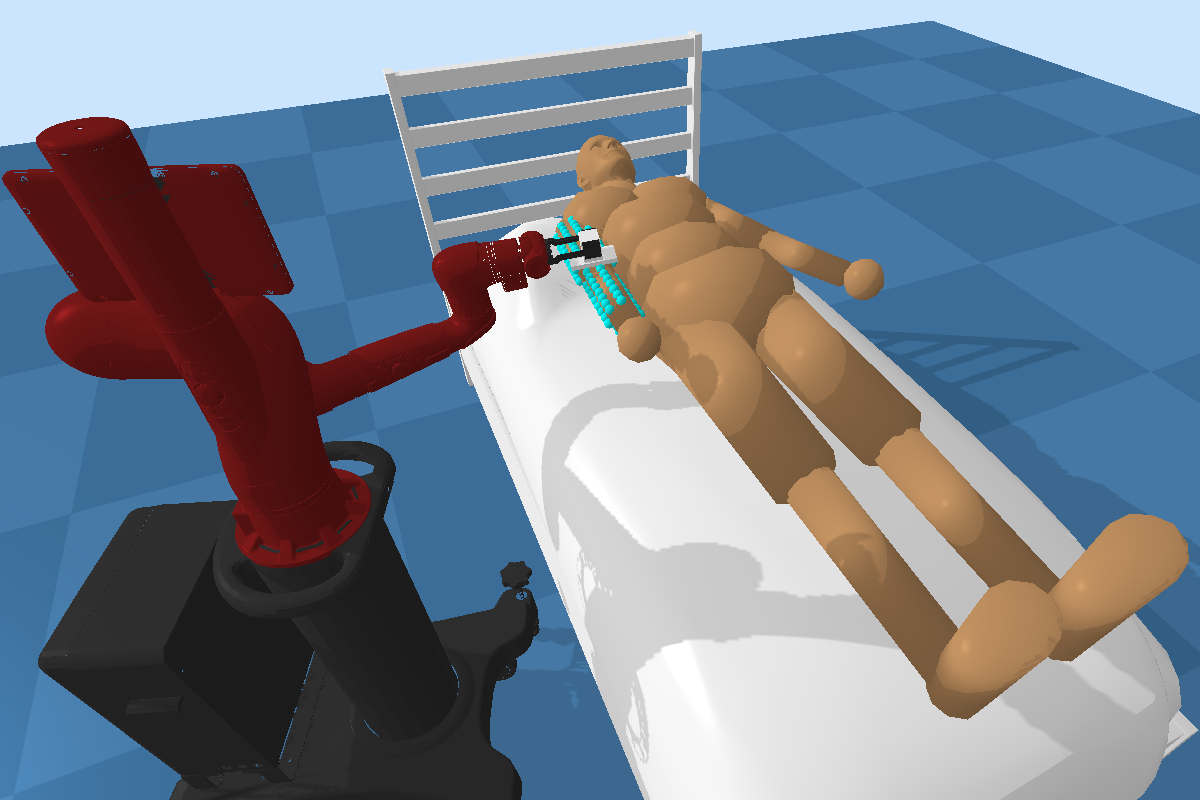

To further examine the performance of VLB-IRL, we use Assistive Gym (Erickson et al., 2020), a more realistic, robot-centered, and goal-based environment. For our experiments, we use environments with an active robot and a static human, such as FeedingSaywer, BedBathingSawyer, and ScratchItchSawyer. A description of the environments can be found in Figure.3. We use SAC to train an expert policy and use the trained expert policy to generate 50 expert episodes. The detailed hyperparameter can be found in Appendix B.

The challenge in Assistive Gym compared to Mujoco benchmark is that it is a goal-based environment and the agent only receives a positive reward after the completion of the goal, unlike Mujoco where the agent can receive positive rewards at every timestep. This goal-based environment will generate much more noisy expert trajectories. Table 3 shows that VLB-IRL outperforms all other baselines and VLB-IRL is the only method that can successfully achieve the goal.

| Algorithm | FeedingSawyer | BedBathingSawyer | ScratchItchSawyer | |

|---|---|---|---|---|

| Random | ||||

| GAIL | ||||

| AIRL | ||||

| -IRL | ||||

| IQ-Learn | ||||

| VLB-IRL(ours) | ||||

| Expert |

5 Related Work

IRL was introduced more than two decades ago and Abbeel & Ng (2004)’s Apprenticeship Learning, which sought to learn the reward function with the maximum margin, provided significant early impetus to IRL’s development. Subsequently, Bayesian methods for IRL, which viewed the trajectories as observations, were introduced Ramachandran & Amir (2007). Subsequently, Choi & Kim (2011) searches for the maximum-a-posteriori reward function instead of settling for the posterior mean. As it is hard to integrate over the entire reward space, the posterior mean may not be an ideal proposal for the reward inference. However, Bayesian methods are now known to be severely impacted by the issue of being unable to scale to large domains. Toward this challenge, recently Chan & van der Schaar (2021) scales Bayesian IRL to learn in the context of complex state spaces and mitigates reward uncertainty by using a variational approximation of the posterior distribution over reward and can be executed entirely offline.

Fu et al. (2018a) presents adversarial IRL (AIRL), a practical and scalable IRL algorithm based on an adversarial reward learning formulation, which has received significant attention and is one of our baseline techniques in this paper. More recently, Ghasemipour et al. (2019) demonstrated that the objective in AIRL is equivalent to minimizing the reverse KL divergence between the joint state-action distribution induced by the learned policy and that of the expert. However, this has been disputed by Ni et al. (2020), which claims that AIRL is not optimizing reverse KL divergence in the state-action marginal. Ni et al. (2020) also introduces -IRL that optimizes the -divergence measure, and is one of our baseline methods as well.

Although VLB-IRL and AIRL can be identified as adversarial method, VLB-IRL and AIRL come from different frameworks. AIRL comes from generative adversarial network guided cost learning (GAN-GCL) and the discriminator is interpreted as . By training via binary logistic regression and placing a special structure, i.e. advantage function, on the discriminator, the reward can be recovered. VLB-IRL comes from the graphical model and the graphical model naturally hold the conditional independence, leading to two proposed interpretation of optimality, i.e. and . By optimizing the reverse KL divergence in ELBO of log-likelihood of trajectory , i.e. , the reward can be recovered. In practice, VLB-IRL is more flexible. AIRL only accepts stochastic policy, while VLB-IRL is compatible with both stochastic and deterministic policy.

More recently, IQ-Learn has been proposed by Garg et al. (2021). IQ-Learn is a method for dynamics-aware IL that avoids adversarial training by learning a single Q-function, implicitly representing both reward and policy. However, it is not an IRL method. It sacrifices reward estimation accuracy by indirectly recovering rewards through a soft Q-function approximator, which relies heavily on dynamic environmental factors and doesn’t strictly adhere to the soft-Bellman equation.

| Algorithm | Optimization Space | Optimization Objective | Divergence measure | Type |

|---|---|---|---|---|

| GAIL | Jensen-Shannon | IL | ||

| AIRL | Forward KL | IRL | ||

| -IRL | -divergence | IRL | ||

| EBIL | Reverse KL | IRL | ||

| IQ-Learn | -divergence | IL | ||

| VLB-IRL(ours) | Reverse KL | IRL |

6 Conclusion

In this paper, we derive a novel variational lower bound on the log-likelihood of the trajectories for IRL and present an algorithm that maximizes this lower bound. By posting IRL through the framework of a probabilistic graphical model with an optimality node, the optimization dual problem becomes minimizing the reverse KL divergence between an approximate distribution of optimality given the reward function and the true distribution of optimality given the trajectories. In particular, it models IRL as an inference problem within the framework of PGMs. Our experiments demonstrate that VLB-IRL has better generalization in the presence of noisy expert trajectories compared to popular baselines. In addition, when (optimal) expert trajectories are available, VLB-IRL maintains a performance advantage compared to existing algorithms. The formulation of the graphical model opens up the possibility for a wide variety of new IRL techniques that can interpret the framework in various ways. Extending VLB-IRL to multi-agent environments and extending the interpretation of the optimality node for multi-agent learning is an intriguing direction.

A limitation of the VLB-IRL is that the tightness of its lower bound to the true log-likelihood is yet to be established, and VLB-IRL is not guaranteed to converge to the optimum, which is a limitation shared by many other adversarial IRL algorithms as well. Another common limitation of the adversarial algorithm is the unstable training progress.

References

- Abbeel & Ng (2004) Pieter Abbeel and Andrew Y Ng. Apprenticeship learning via inverse reinforcement learning. In Proceedings of the twenty-first international conference on Machine learning, pp. 1, 2004.

- Arora & Doshi (2021a) Saurabh Arora and Prashant Doshi. A survey of inverse reinforcement learning: Challenges, methods and progress. Artificial Intelligence, 297:103500, 2021a. ISSN 0004-3702. doi: https://doi.org/10.1016/j.artint.2021.103500.

- Arora & Doshi (2021b) Saurabh Arora and Prashant Doshi. A survey of inverse reinforcement learning: Challenges, methods and progress. Artificial Intelligence, 297:103500, 2021b.

- Bengio & LeCun (2007) Yoshua Bengio and Yann LeCun. Scaling learning algorithms towards AI. In Large Scale Kernel Machines. MIT Press, 2007.

- Chan & van der Schaar (2021) Alex James Chan and Mihaela van der Schaar. Scalable bayesian inverse reinforcement learning. In 9th International Conference on Learning Representations, ICLR 2021, Virtual Event, Austria, May 3-7, 2021. OpenReview.net, 2021.

- Choi & Kim (2011) Jaedeug Choi and Kee-Eung Kim. Map inference for bayesian inverse reinforcement learning. Advances in neural information processing systems, 24, 2011.

- Choi & Kim (2012) Jaedeug Choi and Kee-Eung Kim. Nonparametric bayesian inverse reinforcement learning for multiple reward functions. Advances in neural information processing systems, 25, 2012.

- Erickson et al. (2020) Zackory Erickson, Vamsee Gangaram, Ariel Kapusta, C. Karen Liu, and Charles C. Kemp. Assistive gym: A physics simulation framework for assistive robotics. IEEE International Conference on Robotics and Automation (ICRA), 2020.

- Finn et al. (2016) Chelsea Finn, Sergey Levine, and Pieter Abbeel. Guided cost learning: Deep inverse optimal control via policy optimization. In International conference on machine learning, pp. 49–58. PMLR, 2016.

- Fu et al. (2018a) Justin Fu, Katie Luo, and Sergey Levine. Learning robust rewards with adverserial inverse reinforcement learning. In 6th International Conference on Learning Representations, ICLR 2018, Vancouver, BC, Canada, April 30 - May 3, 2018, Conference Track Proceedings. OpenReview.net, 2018a.

- Fu et al. (2018b) Justin Fu, Avi Singh, Dibya Ghosh, Larry Yang, and Sergey Levine. Variational inverse control with events: A general framework for data-driven reward definition. In S. Bengio, H. Wallach, H. Larochelle, K. Grauman, N. Cesa-Bianchi, and R. Garnett (eds.), Advances in Neural Information Processing Systems, volume 31. Curran Associates, Inc., 2018b.

- Fujimoto et al. (2018) Scott Fujimoto, Herke Hoof, and David Meger. Addressing function approximation error in actor-critic methods. In International conference on machine learning, pp. 1587–1596. PMLR, 2018.

- Garg et al. (2021) Divyansh Garg, Shuvam Chakraborty, Chris Cundy, Jiaming Song, and Stefano Ermon. IQ-learn: Inverse soft-q learning for imitation. In A. Beygelzimer, Y. Dauphin, P. Liang, and J. Wortman Vaughan (eds.), Advances in Neural Information Processing Systems, 2021.

- Ghasemipour et al. (2019) Seyed Kamyar Seyed Ghasemipour, Shane Gu, and Richard Zemel. Understanding the relation between maximum-entropy inverse reinforcement learning and behaviour cloning, 2019.

- Ghasemipour et al. (2020) Seyed Kamyar Seyed Ghasemipour, Richard Zemel, and Shixiang Gu. A divergence minimization perspective on imitation learning methods. In Conference on Robot Learning, pp. 1259–1277. PMLR, 2020.

- Goodfellow et al. (2016) Ian Goodfellow, Yoshua Bengio, Aaron Courville, and Yoshua Bengio. Deep learning, volume 1. MIT Press, 2016.

- Guan et al. (2021) Ziwei Guan, Tengyu Xu, and Yingbin Liang. When will generative adversarial imitation learning algorithms attain global convergence. In Arindam Banerjee and Kenji Fukumizu (eds.), The 24th International Conference on Artificial Intelligence and Statistics, AISTATS 2021, April 13-15, 2021, Virtual Event, volume 130 of Proceedings of Machine Learning Research, pp. 1117–1125. PMLR, 2021.

- Haarnoja et al. (2018) Tuomas Haarnoja, Aurick Zhou, Pieter Abbeel, and Sergey Levine. Soft actor-critic: Off-policy maximum entropy deep reinforcement learning with a stochastic actor. In Jennifer G. Dy and Andreas Krause (eds.), Proceedings of the 35th International Conference on Machine Learning, ICML 2018, Stockholmsmässan, Stockholm, Sweden, July 10-15, 2018, volume 80 of Proceedings of Machine Learning Research, pp. 1856–1865. PMLR, 2018.

- Hinton et al. (2006) Geoffrey E. Hinton, Simon Osindero, and Yee Whye Teh. A fast learning algorithm for deep belief nets. Neural Computation, 18:1527–1554, 2006.

- Ho & Ermon (2016) Jonathan Ho and Stefano Ermon. Generative adversarial imitation learning. Advances in neural information processing systems, 29, 2016.

- Jain et al. (2019) Vinamra Jain, Prashant Doshi, and Bikramjit Banerjee. Model-free IRL using maximum likelihood estimation. In The Thirty-Third AAAI Conference on Artificial Intelligence, AAAI 2019, The Thirty-First Innovative Applications of Artificial Intelligence Conference, IAAI 2019, The Ninth AAAI Symposium on Educational Advances in Artificial Intelligence, EAAI 2019, Honolulu, Hawaii, USA, January 27 - February 1, 2019, pp. 3951–3958. AAAI Press, 2019. doi: 10.1609/aaai.v33i01.33013951.

- Kim et al. (2021) Kuno Kim, Shivam Garg, Kirankumar Shiragur, and Stefano Ermon. Reward identification in inverse reinforcement learning. In Marina Meila and Tong Zhang (eds.), Proceedings of the 38th International Conference on Machine Learning, ICML 2021, 18-24 July 2021, Virtual Event, volume 139 of Proceedings of Machine Learning Research, pp. 5496–5505. PMLR, 2021.

- Levine (2018) Sergey Levine. Reinforcement learning and control as probabilistic inference: Tutorial and review. arXiv preprint arXiv:1805.00909, 2018.

- Liu et al. (2021) Minghuan Liu, Tairan He, Minkai Xu, and Weinan Zhang. Energy-based imitation learning. In Proceedings of the 20th International Conference on Autonomous Agents and MultiAgent Systems, AAMAS ’21, pp. 809–817. International Foundation for Autonomous Agents and Multiagent Systems, 2021.

- Mnih et al. (2013) Volodymyr Mnih, Koray Kavukcuoglu, David Silver, Alex Graves, Ioannis Antonoglou, Daan Wierstra, and Martin Riedmiller. Playing atari with deep reinforcement learning, 2013.

- Ni et al. (2020) Tianwei Ni, Harshit Sikchi, Yufei Wang, Tejus Gupta, Lisa Lee, and Ben Eysenbach. f-irl: Inverse reinforcement learning via state marginal matching. In Conference on Robot Learning, 2020.

- Qureshi et al. (2019) Ahmed Hussain Qureshi, Byron Boots, and Michael C. Yip. Adversarial imitation via variational inverse reinforcement learning. In 7th International Conference on Learning Representations, ICLR 2019, New Orleans, LA, USA, May 6-9, 2019. OpenReview.net, 2019.

- Raffin (2020) Antonin Raffin. Rl baselines3 zoo. https://github.com/DLR-RM/rl-baselines3-zoo, 2020.

- Ramachandran & Amir (2007) Deepak Ramachandran and Eyal Amir. Bayesian inverse reinforcement learning. In Proceedings of the 20th International Joint Conference on Artifical Intelligence, IJCAI’07, pp. 2586–2591. Morgan Kaufmann Publishers Inc., 2007.

- Schlaginhaufen & Kamgarpour (2023) Andreas Schlaginhaufen and Maryam Kamgarpour. Identifiability and generalizability in constrained inverse reinforcement learning. In Andreas Krause, Emma Brunskill, Kyunghyun Cho, Barbara Engelhardt, Sivan Sabato, and Jonathan Scarlett (eds.), International Conference on Machine Learning, ICML 2023, 23-29 July 2023, Honolulu, Hawaii, USA, volume 202 of Proceedings of Machine Learning Research, pp. 30224–30251. PMLR, 2023.

- Schulman et al. (2017) John Schulman, Filip Wolski, Prafulla Dhariwal, Alec Radford, and Oleg Klimov. Proximal policy optimization algorithms. arXiv preprint arXiv:1707.06347, 2017.

- Todorov et al. (2012) Emanuel Todorov, Tom Erez, and Yuval Tassa. Mujoco: A physics engine for model-based control. In 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems, pp. 5026–5033. IEEE, 2012. doi: 10.1109/IROS.2012.6386109.

- Viano et al. (2021) Luca Viano, Yu-Ting Huang, Parameswaran Kamalaruban, Adrian Weller, and Volkan Cevher. Robust inverse reinforcement learning under transition dynamics mismatch. In Marc’Aurelio Ranzato, Alina Beygelzimer, Yann N. Dauphin, Percy Liang, and Jennifer Wortman Vaughan (eds.), Advances in Neural Information Processing Systems 34: Annual Conference on Neural Information Processing Systems 2021, NeurIPS 2021, December 6-14, 2021, virtual, pp. 25917–25931, 2021.

- Ziebart et al. (2008) Brian D. Ziebart, Andrew L. Maas, J. Andrew Bagnell, and Anind K. Dey. Maximum entropy inverse reinforcement learning. In Dieter Fox and Carla P. Gomes (eds.), Proceedings of the Twenty-Third AAAI Conference on Artificial Intelligence, AAAI 2008, Chicago, Illinois, USA, July 13-17, 2008, pp. 1433–1438. AAAI Press, 2008.

Appendix A Proof

A.1 Proof for Lemma 1

Proof.

Let and we have the following Taylor expansion:

| (11) |

where . Replacing with the random variable and taking the expectation results in,

If function has a bounded second derivative, for all , then we have

∎

A.2 Proof for Theorem 2

A.3 Proof for Theorem 3

Proof.

If is maximal, from Lemma 2, we obtain that

| (17) |

In addition, if is identical to ,

| (18) |

Here, parameterized by has the highest value given for any , which best explains the expert policy . ∎

Appendix B Implementation and Hyperparameter

B.1 Implementation

In this section, we discuss several implementation details for making training stable and getting a better reward function and learner policy.

Iterative updates. Many algorithms for IRL exhibit a nested structure, involving an inner loop and an outer loop. The inner loop focuses on finding the optimal policy given parameterized rewards. However, this step is costly and we can avoid this by applying the iterative updates. We borrow the idea from Finn et al. (2016), updating the reward function and learning policy in parallel without finding the optimal policy given parameterized rewards.

Learner Policy Rollouts Buffer. There are two reasons to use the learner policy rollouts buffer. First, recall that in Section 2.3, we get the approximation of the distribution of optimality given trajectory, by using a classifier classifying whether a trajectory comes from an expert or learner. In supervised learning with deep neural networks, it is common practice to compute gradient estimates based on a minibatch of samples rather than individual samples. It is essential to ensure that the samples are independently and identically distributed (i.i.d) from a fixed distribution. However, if we sample minibatches from the most recent learner policy, the i.i.d assumption cannot be held anymore because they are correlated. This is similar to the scenario described in Mnih et al. (2013). By applying the learner policy rollouts buffer, we can alleviate the problems of correlated data and non-stationary distributions. Second, to avoid catastrophic forgetting, which impedes the training process heavily, the simplest solution is to store past experiences. By including previous experiences in the training set, the neural network benefits from a diverse range of samples, leading to a reduced risk of catastrophic forgetting.

B.2 Hyperparameter and Other Implementation Details

Environment: We use the LunarLander-v2, Hopper-v3, Ant-v3, HalfCheetah-v3, Walker2d-v3 from OpenAI Gym. We use FeedingSawyer-v1, BedBathingSawyer-v1, ScratchItchSawyer-v1 from Assistive Gym.

Expert Policy: For OpenAI Gym environments, we readily obtain the expertly trained RL models from the popular RL library RL-Baselines3 Zoo (Raffin, 2020). We use TD3 and PPO for continuous and discrete action environments for optimal trajectories collection respectively. We use PPO and A2C for continuous and discrete action environments for sub-optimal trajectories collection respectively. For Assistive Gym environments, we train the expert policy using SAC from Stable-Baselines3. We use policy network as and action noise with . The remaining hyperparameter remains the default value.

Training Details: We train 6 algorithms namely VLB-IRL, IQ-Learn, GAIL, AIRL, -IRL, and EBIL to recover the expert reward function and imitate the expert behavior using the given expert trajectories.

For OpenAI Gym environments, we train VLB-IRL using Algorithm 1. We use TD3 and PPO for continuous and discrete action environments from the popular RL library Stable-Baselines3 respectively. The hyperparameter for learner policy is listed in Table 5 and Table 7 and the unmentioned hyperparameters are the default value in the Stable-Baselines3 package. The hyperparameters for learner policy are borrowed from the RL-Baselines3 Zoo, which includes a collection of hyperparameters for various kinds of algorithms and environments. The hyperparameter for the reward function network and the classifier are listed in Table 8 and Table 10 respectively.

For Assistive Gym environments, we train VLB-IRL using Algorithm 1. We use SAC from the popular RL library Stable-Baselines3. The hyperparameter for learner policy is listed in Table 6

For GAIL and AIRL, we use the Python package Imitation, which provides clean implementations of imitation and reward learning algorithms. For IQ-Learn, EBIL, and -IRL, we refer to their authors’ official implementation. We use the same hyperparameter setting for learner policy and reward function network. as VLB-IRL except for AIRL. AIRL only accepts stochastic policy, which is not true for TD3. Therefore, we use SAC as the underlying RL algorithm. The hyperparameter for SAC is borrowed from Ni et al. (2020).

| TD3 | Hopper | Ant | HalfCheetah | Walker2d |

|---|---|---|---|---|

| Learning rate | ||||

| Gamma | 0.99 | 0.98 | 0.98 | 0.98 |

| Batch size | 256 | 256 | 256 | 256 |

| Gradient steps | 1000 | 1000 | 1000 | 1000 |

| Net arch | [400, 300] | [400, 300] | [400, 300] | [400, 300] |

| Buffer size | 2000000 | 200000 | 200000 | 200000 |

| Action noise |

| SAC | FeedingSawyer | BedBathingSawyer | ScratchItchSawyer |

|---|---|---|---|

| Learning rate | |||

| Gamma | 0.97 | 0.97 | 0.97 |

| Batch size | 256 | 256 | 256 |

| Net arch | [512, 512] | [512, 512] | [512, 512] |

| Action noise |

| PPO | LunarLander |

|---|---|

| Learning rate | |

| Gamma | 0.99 |

| Batch size | 100 |

| Entropy coefficient |

| MLP | LunarLander | Hopper | Ant | HalfCheetah | Walker2d |

|---|---|---|---|---|---|

| Learning rate | |||||

| Net arch | [16, 16] | [16, 16] | [32, 32] | [32, 32] | [32, 32] |

| Optimizer | Adam | Adam | Adam | Adam | Adam |

| Batch size | 64 | 256 | 256 | 256 | 256 |

| Activation | LeakyReLU | LeakyReLU | LeakyReLU | LeakyReLU | LeakyReLU |

| MLP | FeedingSawyer | BedBathingSawyer | ScratchItchSawyer |

|---|---|---|---|

| Learning rate | |||

| Net arch | [64, 64] | [64, 64] | [64, 64] |

| Optimizer | Adam | Adam | Adam |

| Batch size | 256 | 256 | 256 |

| Activation | LeakyReLU | LeakyReLU | LeakyReLU |

| MLP | LunarLander | Hopper | Ant | HalfCheetah | Walker2d |

|---|---|---|---|---|---|

| Learning rate | |||||

| Net arch | [16, 16] | [16, 16] | [32, 32] | [32, 32] | [32, 32] |

| Optimizer | Adam | Adam | Adam | Adam | Adam |

| Batch size | 64 | 256 | 256 | 256 | 256 |

| Activation | LeakyReLU | LeakyReLU | LeakyReLU | LeakyReLU | LeakyReLU |

| MLP | FeedingSawyer | BedBathingSawyer | ScratchItchSawyer |

|---|---|---|---|

| Learning rate | |||

| Net arch | [64, 64] | [64, 64] | [64, 64] |

| Optimizer | Adam | Adam | Adam |

| Batch size | 256 | 256 | 256 |

| Activation | LeakyReLU | LeakyReLU | LeakyReLU |