A Novel Multi-Teacher Knowledge Distillation for Real-Time Object Detection using 4D Radar

Abstract

Accurate 3D object detection is crucial for safe autonomous navigation, requiring reliable performance across diverse weather conditions. While LiDAR performance deteriorates in challenging weather, Radar systems maintain their reliability. Traditional Radars have limitations due to their lack of elevation data, but the recent 4D Radars overcome this by measuring elevation alongside range, azimuth, and Doppler velocity, making them invaluable for autonomous vehicles. The primary challenge in utilizing 4D Radars is the sparsity of their point clouds. Previous works address this by developing architectures that better capture semantics and context in sparse point cloud, largely drawing from LiDAR-based approaches. However, these methods often overlook a unique advantage of 4D Radars: the dense Radar tensor, which encapsulates power measurements across three spatial dimensions and the Doppler dimension. Our paper leverages this tensor to tackle the sparsity issue. We introduce a novel knowledge distillation framework that enables a student model to densify its sparse input in the latent space by emulating an ensemble of teacher models. Our experiments demonstrate a 25% performance improvement over the state-of-the-art RTNH model on the K-Radar dataset. Notably, this improvement is achieved while still maintaining a real-time inference speed.

Index Terms:

4D Radar, 3D object detection, Knowledge distillation, Radar preprocessingI Introduction

3D Object detection is vital for enabling accurate autonomous navigation by ensuring precise positioning of surrounding road users, and robust performance in all weather conditions is essential for overall safety [1, 2, 3]. While LiDARs have traditionally been the primary sensing modality for this application due to their ability to measure distances accurately in 3D, they struggle to function properly in adverse conditions like snow, rain, and fog due to their reliance on lights that are not harmful to human eyes [4]. In contrast, Radars remain unaffected by these conditions as they utilize electromagnetic waves. However, conventional Radars have a significant limitation: their measurements are confined to a single plane, rendering them incapable of detecting objects in 3D [5]. Recent advancements in the Radar technology have led to the development of 4D Radars, which add elevation measurements to the conventional range, azimuth, and Doppler velocity data. This addition lifts Radar measurements to full 3D space, making 4D Radars a promising solution for robust 3D object detection [5, 6, 7].

The major limitation of 4D Radars is the sparsity of their point clouds. Prior Radar-based object detection methods address this issue through devising architectures that are better at capturing semantic and context information in sparse point clouds. RPFA-Net [8] builds upon the Pillar Feature Net of PointPillar [9], incorporating a self-attention module [10] to improve the modeling of relationships among Radar points within each pillar. Similarly, SMURF [11] extends PointPillar by complementing pillar-based features with density-based features extracted through Kernel Density Estimation, mitigating the impact of point cloud sparsity. RTNH [7] utilizes 4D Radar tensor data to demonstrate that the density of the Radar point cloud significantly affects model performance[12], and employs a sparse 3D convolution-based architecture to integrate height-related information into Radar features. MVFAN [13] introduces a multi-branch architecture that extracts features from both Bird’s-Eye View (BEV) and cylindrical view representations of the Radar point cloud. Taking this multi-view approach further, SMIFormer [14] extracts features from BEV, Front View (FV), and Side View (SV) representations, fusing them using multi-view interaction transformers. Leverage the Doppler velocity of Radar points to compensate for the motion of dynamic objects, RadarMFNet [15] aggregates multiple point clouds to obtain a denser input.

These approaches are heavily influenced by LiDAR-based object detection techniques, overlooking a unique feature of 4D Radars. Unlike LiDARs that directly produce point clouds, 4D Radars generate a dense 4D tensor containing power measurements across three spatial dimensions (range, azimuth, and elevation) and the Doppler dimension. We refer to this tensor as 4DRT throughout this paper. To create Radar point clouds, the dense 4DRT undergoes a preprocessing step to retain only high-power measurements.

A straightforward solution to the sparsity challenge would be to relax the definition of ”high-power measurement,” resulting in denser point clouds. As shown in Fig.1, this increased density directly improves detection performance. However, this approach comes at the cost of higher memory consumption and, more critically, longer inference times. The resulting performance-efficiency trade-off renders this straightforward solution impractical, especially for applications with strict real-time requirements and resource constraints, such as autonomous driving.

In this paper, we leverage 4DRT to address the sparsity of Radar point clouds without sacrificing efficiency for performance. We make the following contributions:

-

•

the first multi-teacher knowledge distillation framework using 4D Radar, that enables a student model to densify its sparse input in the latent space,

-

•

enabling Radar-based detection models that operate directly on highly sparse point clouds, ensuring low memory consumption and high inference speed without compromising detection performance,

-

•

eliminating of the need for extrinsic calibration between Radars and other modalities for the knowledge distillation,

-

•

comprehensive experiments on the large-scale K-Radar dataset [7], results show a 25% improvement in detection performance over the state-of-the-art RTNH model when operating on sparse point clouds, while maintaining a real-time inference speed of 30 frames per second (FPS) on an NVIDIA RTX 3090 GPU.

II Related Works

II-A Radar Processing

To provide the context of our work, we briefly outline the Radar processing procedure illustrated in Fig.2, which transforms electromagnetic wave responses into Radar point clouds. The process begins by converting the received signal from analog to digital, followed by two Fast Fourier Transforms (FFT) to generate a 4-dimensional tensor (4DRT) containing range, azimuth, elevation, and Doppler measurements, as introduced in Sec.I. Finally, preprocessing (Alg.1) averages the Doppler dimension and retains a high-percentile subset of measurements, reducing noise while balancing sparsity, as shown in Fig.3.

II-B Methods Using Raw Radar Data

As we exploit the dense 4D Radar tensor that have not undergone the preprocessing, our method belongs to the category of methods using raw Radar data. Since Radar data undergoes a multi-stage processing before yielding sparse point clouds, methods in this category take the ADC signal, or the Range-Doppler Map (RDM), or the 4DRT, as their input. The early work FFT-RadNet [16] directly detect objects in the RDM. Since this tensor lacks the explicit azimuth, which is crucial for 3D object detection, FFTRadNet implicitly estimate the azimuth in its latent space by forcing an axis of its feature map to represent the azimuth value. ADCNet [17] and T-FFTRadNet [18] go one step further by using the ADC signal. Based on a learnable form of the discrete Fourier transform, they devise a learnable signal processing module that transforms the input ADC signal into a latent representation of the range-azimuth-Doppler map. DPFT [19] takes the 4DRT as its input and project this tensor to the range-azimuth plane and the azimuth-elevation plane for feature extraction. The two resulting feature maps are fused with the camera’s through the deformable attention mechanism [20].

While the methods above use the raw Radar data for both training and inference, our method only requires the raw data for training. Specifically, we vary the percentile of the preprocessing to obtain high-density point clouds for training teacher models. During inference, the student model uses directly the preprocessed sparse point cloud. This ensures a low memory consumption and a high inference speed of the student model.

II-C Distillation Methods

The idea of the knowledge distillation framework [21] is to use the feature map of a model, referred to as the teacher, to guide the feature computation of another model, referred to as the student. This is implemented by complemeting the task-specific loss function of the student with addtional terms measuring the similarity between its feature maps and the teacher’s. Prior works on knowledge distillation for Radar-based object detection distill LiDAR-based or camera-based models to leverage their relatively dense representation. RadarDistill [22] align the representation of a Radar and a LiDAR in BEV. Then, the Radar model is forced to emulate the representation of the LiDAR model in key regions. CRKD [23] take the similar key-region-based approach to transfer knowledge from a camera-based model. A development made by CRKD is the enforcement of the similarity at the global level through the application of the L1 loss on the affinity matrix of the representation of camera and of Radar.

Unlike cross-modal distillation methods, our approach requires no extrinsic calibration between Radar and other modalities, enhancing practicality given the complexity of Radar calibration [24, 25]. Moreover, differences in sensing capabilities, such as Radar’s ability to measure radial velocity versus RGB cameras’ light intensity perception, create unobservable features for the student, complicating distillation.

III Proposed Approach

We name our distillation framework 4D Multi Teachers (4DMT). This section begins with an overview on different blocks of our method and how data flows through them. Then, the detail of each block is presented.

III-A Overview of 4DMT

Our knowledge distillation scheme named 4DMT, shown in Fig.4, consists of teacher models and a student model. These models take Radar point clouds as their input. It worths noticing that each model (teachers and the student) use point clouds having a different density level. Teacher models and the student model use an identical (but not shared) backbone to compute the feature maps of their input Radar point cloud in BEV. Their backbone has a multi-stage architecture that progressively increases the semantic level of the feature map at each stage while reducing its spatial resolution. This is done through a chain of sparse 3D convolutions [26] similar to RTNH. An anchor-based detection head [27] detects objects using the feature map of the last stage.

To make input point clouds for each teacher, we choose a different percentile of power that the preprocessing module extracts from the 4DRT. Teacher models are trained using the object detection loss of RTNH. During the training of the student model, teacher models are not subjected to the weight update. An Aggregation Module, detailed in Sec.III-B, combines the output of the backbone of every teacher into a single feature map that plays the role of the target feature map of the student.

Similar to teacher models, we use a different percentile to make input point clouds for the student model. As the student model is intended to be deployed on resource-constrained hardware and has to satisfy a strict real-time requirement, we choose a high percentile, resulting in very sparse point clouds. Beside the backbone and the detection head, the student has a Densify Module, detailed in Sec.III-C, that upsamples the output of its backbone to alleviate the impact of the sparsity of its input. The loss function of the student is the weighted sum of the distillation loss, presented in Sec.III-D, and the same detection loss that used to train teacher models. During inference, the teachers and the aggregation module are removed.

III-B Aggregation Module

The use of multiple teachers, each of which operates on a different level of point cloud densities, results in a diverse set of features for distillation. On the other hand, the sparsity of the student’s point clouds make certain features to be distilled uncomputable as certain regions of the environment are unobservable to the students. Therefore, it is necessary to select appropriate teacher features for distillation based on the student’s input. We achieve this with the Aggregation Module.

For a teacher -th, its feature map at every stage is upsampled to obtain the same spatial dimension, then concanated along the channel dimension to make the tensor . Aggregation Module adaptively selects the useful features for distillation at each location in BEV from the set of all through a series of 1-by-1 convolutions and channel concatenation operation, resulting a unified tensor .

III-C Densify Module

The backbone of our models (teachers and the student) use a mix of two implementations of the sparse convolution: [28] and [29]. The former allows occupancy leaking from one layer to another by performing the convolution on any location whose neighborhood (defined by the convolution filter) has at least one active (non-zero value) sites. The result of the occupancy leaking is a reduction in the sparsity level of the feature map in deeper stages of the backbone.

Thanks to the occupancy leaking and a relatively high density of their input point clouds, the last feature map of the backbone of each teacher has a relatively high density. On the other hand, input point clouds of the student have so few points that the last feature map of its backbone is still sparse, despite the occupancy leaking. As these feature maps are used for knowledge distillation, their density mismatch makes the optimization of the distillation loss more challenging. Inspired by prior works [30, 31], we propose a densify module that upsample the output of the student’s backbone.

Our densify module first reduces the resolution of its input using a deformable convolution [32] and a ConvNeXt layer [33], where the deformable convolution adapts to irregular inputs, and the ConvNeXt layer efficiently refines the extracted features. It then restores the resolution with a transposed convolution, producing a denser feature map better aligned with the teacher’s representation for improved knowledge distillation and object detection. The output of this module, referred to as , is used to compute the distillation loss and to detect objects.

III-D Loss Function

The loss function used to train the student model is a weighted sum of the object detection loss and the knowledge distillation loss

| (1) |

Here, and are hyperparameters that adjust the balance between the object detection loss and the knowledge distillation loss. They are set to 1 in our experiments.

is the Mean Squared Error (MSE) between the aggregation of teachers’ feature map and the student’s densified feature map . Since features in regions where objects exist are more important for detecting objects than background, we mask both and using ground truth when calculating the distillation loss. Specifically, we transform each ground truth object into a 2D Gaussian and splat it to the BEV to obtain a heatmap. This heatmap is multiplied element-wise with and to realize the masking operation.

| (2) |

We use the loss function defined by RTNH [7] as the detection loss .

IV Experiments

IV-A Experimental Setup

IV-A1 Implementation Details

Due to the limitation of our hardware, we set the number of teachers to 3. To generate point clouds for training teacher models, we set the percentile of Alg.1 to 3 following values: 95, 90, and 80. These choices of the percentile is to make the average number of points in point clouds of teachers have the order of , resulting in a high precision. In contrast, the student uses a 99.9 percentile, reducing the point count by an order of magnitude.

We use the same architecture for the backbone of teacher models and the student model. The backbone has three stages. Each stage halves the spatial dimensions (width and height) while doubling the number of channels. The number of channels of the feature map at the output of each stage is respectively 64, 128, and 256.

To make the output of the backbone of a teacher model, the outputs from its backbone’s second and third stages are upsampled by the transposed convolution with 256 filters to match the spatial dimensions of the first stage’s output. The number of channels in the first stage’s output is also increased to 256 using a 1-by-1 convolution. Finally, these three feature maps are concatenated along the channel dimensions to obtain .

To make the output of the student model, the Densify Module first increases the number of channels of the output of the student’s backbone to 256 while reducing its spatial dimension by 4 times. The resulting feature map is passed through a stack of three ConvNeXt [33] blocks. The number of convolution filters of these ConvNeXt blocks are 256. Finally, we use transposed convolutions to upsample the output of the ConvNeXt stack to the same size as the output of the backbone.

Teacher models and the student model are trained in the same setting as the baseline RTNH [7]. The training epoch, learning rate, and batch size are 30, 0.001, and 8, respectively. All experiments were conducted on an NVIDIA RTX 3090 GPU. In the tables presented in this section, the best results are marked by bold font.

IV-A2 Datasets and Evaluation Metrics

We conduct our experiments on the K-Radar dataset [7], a multi-modal large-scale dataset collected under various driving environments, including adverse weather conditions. It provides access to dense 4DRT, which is crucial for our approach. K-Radar contains a total of 35,000 frames, equally split into training and testing sets, and includes 100,000 3D bounding box annotations. The annotations cover five categories: sedan, bus or truck, pedestrian, motorcycle, and bicycle. Considering the object distribution within the dataset, we focus on two categories: sedan and bus or truck. For the region of interest (RoI), we use ranges of 0 to 72 m along the x-axis, -16 to 16 m along the y-axis, and -2 to 7.6 m along the z-axis. We compute Average Precision (AP) following the protocol provided by the K-Radar benchmark, and evaluate inference speed in FPS to assess the resource efficiency of our model.

IV-B Impact of Knowledge Distillation

To showcase the impact of knowledge distillation on the student model, we compare the detection performance and the resource efficiency of our 4DMT, which is an RTNH model enhanced by our knowledge distillation framework, and two original RTNH. Our 4DMT and one RTNH use point clouds produced at the 99.9th percentile while while the other RTNH baseline uses a lower percentile of 95. The lower percentile results in point clouds that are, on average, 50 times denser. The result presented in Tab.I shows that our 4DMT achieves the highest 3D AP in the Sedan category, especially 25% improvement compared to the RTNH baseline with the same density level.

| Networks | Sedan | Bus or Truck | FPS | ||

| BEV | 3D | BEV | 3D | ||

| RTNH99.9 | 44.36 | 36.11 | 26.78 | 23.09 | 40 |

| RTNH95 | 48.08 | 44.08 | 43.51 | 30.80 | 25 |

| 4DMT99.9 | 48.33 | 45.48 | 36.72 | 27.83 | 30 |

Furthermore, our 4DMT has the second highest inference speed which is 30 FPS on NVIDIA RTX 3090. These results show that our distillation framework strikes a good balance between precision and efficiency. The underperformance of our 4DMT compared to RTNH using denser point clouds (95-th percentile) in the ”Bus or Truck” category is explained by the misclassification of large vehicles as multiple smaller vehicles due to the sparsity of point clouds at 99.9-th percentile. This is illustrated in Fig.5,

| Networks | Sedan | ||

|---|---|---|---|

| BEV | 3D | ||

| One- teacher | Teacher 1 | 47.46 | 44.96 |

| Teacher 2 | 46.95 | 43.53 | |

| Teacher 3 | 47.37 | 44.84 | |

| Multi-teacher (1, 2, 3) | 48.33 | 45.48 | |

IV-C Ablation Study

IV-C1 The Impact of Using Multiple Teachers

The purpose of this study is to showcase the effectiveness of disilling multiple teachers compared to the conventional single-teacher distillation. The result in Tab.II confirms that the performance of the multi-teacher model is higher than that of the single-teacher model. We hypothesize that the diversity of features for distillation thanks to the available of multiple teachers enables the Aggregation Module to choose features that are feasible for the student to compute. Therefore, the optimization of the distillation loss is less challenging.

IV-C2 Analysis of qualitative results

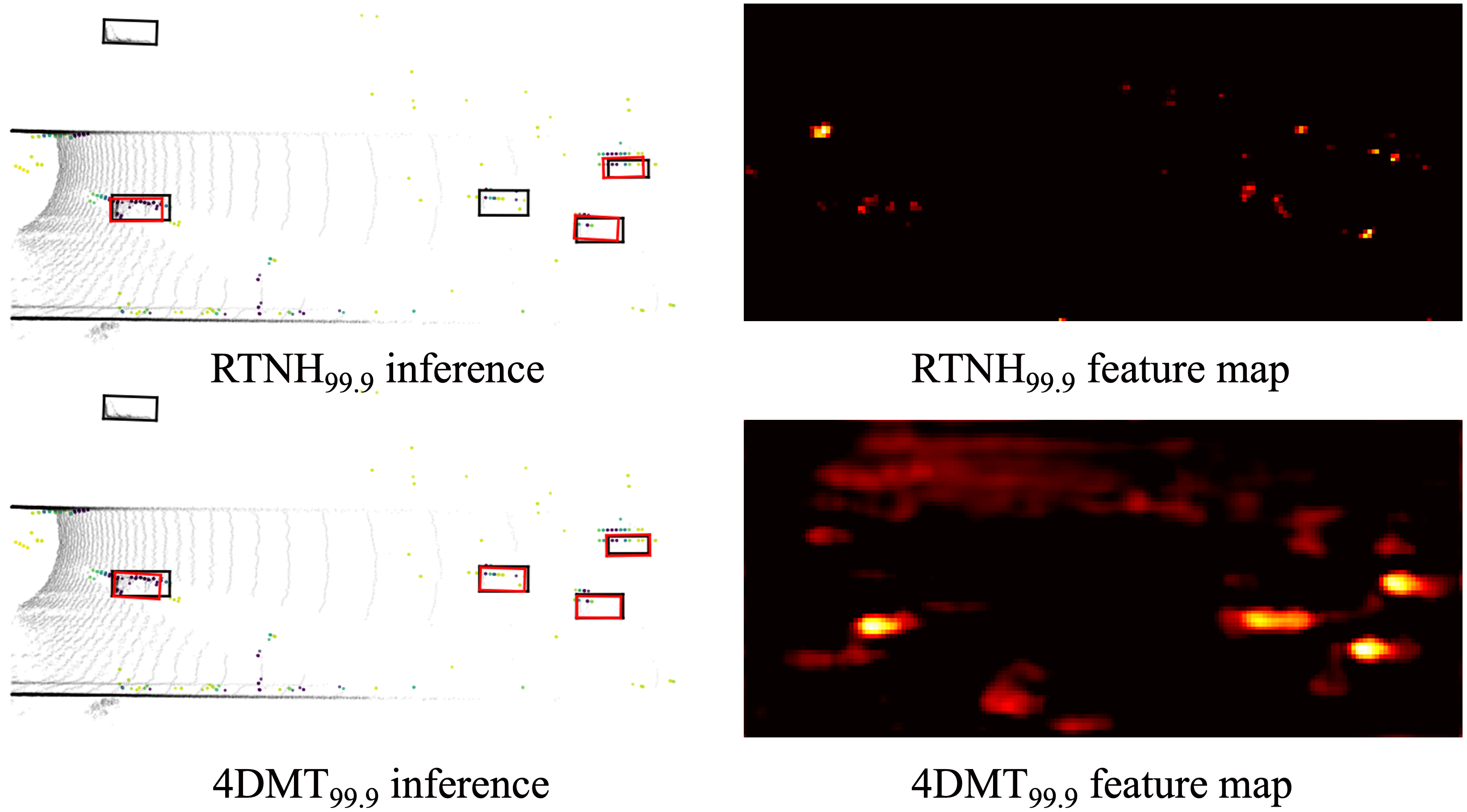

To qualitatively prove the capacity of densifying sparse point clouds in the latent space of models trained by our knowledge distillation framework, we compared the BEV feature map extracted from the RTNH basic model with the BEV feature map extracted from 4DMT. As shown in Fig.6, the BEV feature map extracted from the RTNH model trained with sparse data does not capture information about the object well. In contrast, the BEV feature map of our 4DMT exhibits information about the object well despite its sparse input.

By conducting a comparative evaluation of RTNH and 4DMT across diverse driving scenarios (e.g., weather conditions, road environments, lighting), we demonstrate that the proposed framework leverages the characteristics of 4D Radar for more robust object detection under various driving conditions. As shown in Fig.7, (a), (c), and (d), conventional RTNH fails to extract sufficient feature information from sparse data, leading to a failure in detecting objects in inference results. On the other hand, 4DMT utilizes knowledge transferred from multiple teachers to extract object feature information from sparse data, being able to detect objects at a higher precision. In Fig.7, (b), (e), and (f), it can be seen that RTNH produces more false positives than 4DMT because it fails to distinguish 4D Radar noise (i.e., side lobe) from measurements corresponding to objects. Through quantitative comparisons, we validate that the proposed multi-teacher knowledge distillation framework effectively leverages 4D Radar data to perform robust and accurate object detection in various environments.

IV-C3 Analysis of 4DMT Performance Based on 4D Radar Data Density

In this study, we conduct experiments comparing the performance of 4DMT99.9, 4DMT99, and 4DMT95, which use preprocessed data with the percentile of 99.9, 99, and 95, respectively, against RTNH. Through this comparison, we demonstrate the effectiveness of the proposed multi-teacher knowledge distillation framework across various data densities. As shown in Tab. III, 4DMT consistently outperforms RTNH across all data densities in both BEV and 3D AP of Sedan class. This performance gap suggests that the multi-teacher knowledge distillation framework in 4DMT effectively leverages sparse Radar data, leading to more accurate object detection, especially as data density decreases. This demonstrates the robustness and versatility of 4DMT when handling data at various levels of sparsity.

| Networks | 99.9 Percentile | 99 Percentile | 95 Percentile | |||

|---|---|---|---|---|---|---|

| BEV | 3D | BEV | 3D | BEV | 3D | |

| RTNH | 44.36 | 36.11 | 52.43 | 43.20 | 48.08 | 44.08 |

| 4DMT | 48.33 | 45.48 | 55.00 | 46.96 | 55.64 | 47.48 |

V Conclusions

In conclusion, we have proposed a novel 4D Radar-based multi-teacher knowledge distillation framework that effectively addresses the challenges of data sparsity and real-time performance in 3D object detection. By utilizing three teacher models with varying point cloud densities and introducing aggregation and densify modules, our student model learns rich feature representations from sparse input data. The experimental results on the K-Radar benchmark demonstrate a significant 25% improvement in 3D Average Precision for the Sedan class, showcasing the efficacy of our approach.

While our framework has shown promising results, we employed a simple preprocessing method to extract point clouds from the dense 4DRT. This may limit the potential performance of the model. Future work should focus on developing more sophisticated preprocessing techniques that take into account various characteristics of 4D Radars to more effectively select data point from the 4DRT.

References

- [1] A. Geiger, P. Lenz, and R. Urtasun, “Are we ready for autonomous driving? the kitti vision benchmark suite,” in 2012 IEEE conference on computer vision and pattern recognition. IEEE, 2012, pp. 3354–3361.

- [2] H. Caesar, V. Bankiti, A. H. Lang, S. Vora, V. E. Liong, Q. Xu, A. Krishnan, Y. Pan, G. Baldan, and O. Beijbom, “nuscenes: A multimodal dataset for autonomous driving,” in Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2020, pp. 11 621–11 631.

- [3] M. J. Mirza, C. Buerkle, J. Jarquin, M. Opitz, F. Oboril, K.-U. Scholl, and H. Bischof, “Robustness of object detectors in degrading weather conditions,” in 2021 IEEE International Intelligent Transportation Systems Conference (ITSC). IEEE, 2021, pp. 2719–2724.

- [4] M. Bijelic, T. Gruber, and W. Ritter, “A benchmark for lidar sensors in fog: Is detection breaking down?” in 2018 IEEE intelligent vehicles symposium (IV). IEEE, 2018, pp. 760–767.

- [5] A. Palffy, E. Pool, S. Baratam, J. F. Kooij, and D. M. Gavrila, “Multi-class road user detection with 3+ 1d radar in the view-of-delft dataset,” IEEE Robotics and Automation Letters, vol. 7, no. 2, pp. 4961–4968, 2022.

- [6] L. Zheng, Z. Ma, X. Zhu, B. Tan, S. Li, K. Long, W. Sun, S. Chen, L. Zhang, M. Wan et al., “Tj4dradset: A 4d radar dataset for autonomous driving,” in 2022 IEEE 25th International Conference on Intelligent Transportation Systems (ITSC). IEEE, 2022, pp. 493–498.

- [7] D.-H. Paek, S.-H. Kong, and K. T. Wijaya, “K-radar: 4d radar object detection for autonomous driving in various weather conditions,” Advances in Neural Information Processing Systems, vol. 35, pp. 3819–3829, 2022.

- [8] B. Xu, X. Zhang, L. Wang, X. Hu, Z. Li, S. Pan, J. Li, and Y. Deng, “Rpfa-net: A 4d radar pillar feature attention network for 3d object detection,” in 2021 IEEE International Intelligent Transportation Systems Conference (ITSC). IEEE, 2021, pp. 3061–3066.

- [9] A. H. Lang, S. Vora, H. Caesar, L. Zhou, J. Yang, and O. Beijbom, “Pointpillars: Fast encoders for object detection from point clouds,” in Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2019, pp. 12 697–12 705.

- [10] A. Vaswani, “Attention is all you need,” Advances in Neural Information Processing Systems, 2017.

- [11] J. Liu, Q. Zhao, W. Xiong, T. Huang, Q.-L. Han, and B. Zhu, “Smurf: Spatial multi-representation fusion for 3d object detection with 4d imaging radar,” IEEE Transactions on Intelligent Vehicles, 2023.

- [12] D.-H. Paek, S.-H. Kong, and K. T. Wijaya, “Enhanced k-radar: Optimal density reduction to improve detection performance and accessibility of 4d radar tensor-based object detection,” in 2023 IEEE Intelligent Vehicles Symposium (IV). IEEE, 2023, pp. 1–6.

- [13] Q. Yan and Y. Wang, “Mvfan: Multi-view feature assisted network for 4d radar object detection,” in International Conference on Neural Information Processing. Springer, 2023, pp. 493–511.

- [14] W. Shi, Z. Zhu, K. Zhang, H. Chen, Z. Yu, and Y. Zhu, “Smiformer: Learning spatial feature representation for 3d object detection from 4d imaging radar via multi-view interactive transformers,” Sensors, vol. 23, no. 23, p. 9429, 2023.

- [15] B. Tan, Z. Ma, X. Zhu, S. Li, L. Zheng, S. Chen, L. Huang, and J. Bai, “3-d object detection for multiframe 4-d automotive millimeter-wave radar point cloud,” IEEE Sensors Journal, vol. 23, no. 11, pp. 11 125–11 138, 2022.

- [16] J. Rebut, A. Ouaknine, W. Malik, and P. Pérez, “Raw high-definition radar for multi-task learning,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 17 021–17 030.

- [17] B. Yang, I. Khatri, M. Happold, and C. Chen, “Adcnet: Learning from raw radar data via distillation,” arXiv preprint arXiv:2303.11420, 2023.

- [18] J. Giroux, M. Bouchard, and R. Laganiere, “T-fftradnet: Object detection with swin vision transformers from raw adc radar signals,” in Proceedings of the IEEE/CVF International Conference on Computer Vision, 2023, pp. 4030–4039.

- [19] F. Fent, A. Palffy, and H. Caesar, “Dpft: Dual perspective fusion transformer for camera-radar-based object detection,” IEEE TRANSACTIONS ON INTELLIGENT VEHICLES, 2024.

- [20] X. Zhu, W. Su, L. Lu, B. Li, X. Wang, and J. Dai, “Deformable {detr}: Deformable transformers for end-to-end object detection,” in International Conference on Learning Representations, 2021.

- [21] G. Hinton, “Distilling the knowledge in a neural network,” arXiv preprint arXiv:1503.02531, 2015.

- [22] G. Bang, K. Choi, J. Kim, D. Kum, and J. W. Choi, “Radardistill: Boosting radar-based object detection performance via knowledge distillation from lidar features,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2024, pp. 15 491–15 500.

- [23] L. Zhao, J. Song, and K. A. Skinner, “Crkd: Enhanced camera-radar object detection with cross-modality knowledge distillation,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2024, pp. 15 470–15 480.

- [24] J. Domhof, J. F. Kooij, and D. M. Gavrila, “A joint extrinsic calibration tool for radar, camera and lidar,” IEEE Transactions on Intelligent Vehicles, vol. 6, no. 3, pp. 571–582, 2021.

- [25] X. Li, Y. Liu, V. Lakshminarasimhan, H. Cao, F. Zhang, and A. Knoll, “Globally optimal robust radar calibration in intelligent transportation systems,” IEEE Transactions on Intelligent Transportation Systems, vol. 24, no. 6, pp. 6082–6095, 2023.

- [26] B. Liu, M. Wang, H. Foroosh, M. Tappen, and M. Pensky, “Sparse convolutional neural networks,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2015, pp. 806–814.

- [27] Y. Yan, Y. Mao, and B. Li, “Second: Sparsely embedded convolutional detection,” Sensors, vol. 18, no. 10, p. 3337, 2018.

- [28] B. Graham, “Sparse 3d convolutional neural networks,” in British Machine Vision Conference, 2015.

- [29] B. Graham, M. Engelcke, and L. Van Der Maaten, “3d semantic segmentation with submanifold sparse convolutional networks,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2018, pp. 9224–9232.

- [30] T. Wang, X. Hu, Z. Liu, and C.-W. Fu, “Sparse2dense: Learning to densify 3d features for 3d object detection,” Advances in Neural Information Processing Systems, vol. 35, pp. 38 533–38 545, 2022.

- [31] G. Bang, K. Choi, J. Kim, D. Kum, and J. W. Choi, “Radardistill: Boosting radar-based object detection performance via knowledge distillation from lidar features,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2024, pp. 15 491–15 500.

- [32] J. Dai, H. Qi, Y. Xiong, Y. Li, G. Zhang, H. Hu, and Y. Wei, “Deformable convolutional networks,” in Proceedings of the IEEE international conference on computer vision, 2017, pp. 764–773.

- [33] Z. Liu, H. Mao, C.-Y. Wu, C. Feichtenhofer, T. Darrell, and S. Xie, “A convnet for the 2020s,” in Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2022, pp. 11 976–11 986.