A Novel Approach to Curiosity and Explainable Reinforcement Learning via Interpretable Sub-Goals

Abstract

Two key challenges within Reinforcement Learning involve improving (a) agent learning within environments with sparse extrinsic rewards and (b) the explainability of agent actions. We describe a curious, subgoal-focused agent that addresses both challenges. Curiosity is produced by a novel method based on a Generative Adversarial Network (GAN) model of environment transitions that is robust to stochastic transitions. Additionally, we use a subgoal generating network to guide navigation. The explainability of the agent’s behavior is increased by decomposing complex tasks into a sequence of interpretable subgoals that do not require any manual design. We show that this method also enables the agent to solve challenging procedurally generated tasks that contain stochastic transitions, outperforming other state-of-the-art methods.

I Introduction

Deep Reinforcement Learning (DRL) has been tremendously successful. Agents have been trained to defeat a champion Go player [1], outperform humans in Atari games [2, 3] and perform a variety of difficult robotic tasks [4, 5]. Two of the major challenges in DRL are the problem of sparse rewards and the lack of explainability provided by the algorithms. Random exploration, which is the core exploration method in many RL approaches, is unlikely to receive a sufficient reward signal to successfully train the agent in environments with extremely sparse rewards [6]. The Deep Neural Networks (DNNs) utilized by DRL also lack explainability in their decision making. Several works have investigated methods for local interpretability, most notably attribution methods, primarily in supervised learning [7, 8, 9]. These methods generally show which inputs were most influential in producing the DNN’s output according to the algorithm’s criteria. Similar methods have been utilized in DRL, in addition to reward decomposition [10] and action influence [11].

To improve the tools available for exploration and explainability in DRL, we present a novel method for goal-oriented environments in which the agent learns to propose subgoals. The agent is composed of a subgoal generating network, a navigator network that learns to move towards the current subgoal, and a Generative Adversarial Network (GAN) tasked with next state generation. The GAN serves to build an understanding of object interaction and the effects of actions within the environment. Subgoals are set relative to the observation space, where a goal value for one element of the space is selected by the subgoal generator. The navigator network takes actions within the environment with the intent of fulfilling the chosen subgoal. The decomposition of a complex task into a sequence of simpler subgoals increases the human interpretability of the agent’s policies and decisions. Exploration efficiency and performance are also increased because of the additional feedback the subgoals provide.

Many authors have pointed out that agents that quantify novelty through prediction errors tend to be attracted to transitions that are stochastic in nature [12, 13, 14], decreasing the effectiveness of curiosity-driven exploration. We address this by implementing a modification of BicycleGAN [15] as our prediction network. BicycleGAN is a multimodal image generator, able to generate a variety of possible images from the same input. With BicycleGAN, our agent predicts possible next states and determines which transitions are impossible, removing the need to make a single deterministic prediction.

We evaluate our model on procedurally generated environments, which are naturally challenging as agents need to deal with a parameterized family of tasks, resulting in large observation spaces and making memorizing trajectories infeasible. It is in these environments that the agent must learn to generalize its policies.

II Related Work

Intrinsic motivation has been a popular method to improve the exploration abilities of agents within environments yielding sparse rewards. Novelty and curiosity are two of the most prominent sources of intrinsic motivation. Where novelty is primarily driven by reaching unfamiliar states, curiosity seeks to better understand or predict environment dynamics. One method of powering curiosity is next state prediction, where future states with greater prediction errors receive greater rewards, encouraging the agent to act in states whose outcomes it cannot yet predict accurately.

Multi-Goal Hierarchical Reinforcement learning (MGHL) [16] by Xing utilizes a manager network, which sets auxiliary control tasks as subgoals that direct a worker network. The manager primarily sets subgoals where the worker is rewarded for either changing the selected pixel blocks or changing high level features where the magnitude of the reward is proportional to the magnitude of the change irrespective of the direction. We contrast this to our work where our agent generates subgoals by selecting one observation element and one particular value where the resulting subgoal is to change the chosen observation element to the chosen value.

Another similar sub-task-oriented work is Modular Multitask Reinforcement Learning with Policy Sketches [17]. The approach uses a high-level policy to select sub-tasks that a worker network learns to carry out. In contrast to our work, the high-level policy requires hand-crafted sub-tasks for each unique environment and does not include any predictive models of the environment.

In Adversarially Motivated Intrinsic Goals (AMIGo) [18], a teacher network generates goal positions for a student network to learn to reach. The teacher network is rewarded for generating goals that the student is able to reach, but not for those reached too quickly. In contrast to our model, which only uses the agent’s partial view, the teacher network sets goals relative to the full observation space. Additionally, the primary purpose of the teacher network is to drive exploration with a single goal of increasing difficulty, rather than to increase the explainability of the agent through a sequence of subgoals.

III Curious Sub-Goal focused Agent

Our Curious Sub-Goal focused agent (CSG) is composed of three subsystems: a next state generator powered by a GAN, the agent navigation network, and a subgoal generating network.

III-A Next State Generation

Previous works on next state prediction primarily focus on producing a deterministic prediction and comparing it to the actual next state to produce a loss, which an optimizer uses to improve future predictions. This method of prediction does poorly in environments with substantial noise or stochastic elements. By using a GAN to generate the next state, the network does not predict the next state but instead produces one possible next state. With this approach the agent is able to learn which transitions are possible and which are impossible, and in doing so gains robustness to noise and stochasticity.
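The weakness of a single deterministic prediction can be seen in a toy calculation. The sketch below is illustrative only: the two-outcome transition, the function names, and the set-membership check standing in for a learned notion of "possible transition" are all assumptions, not the GAN described in this paper.

```python
# Toy transition: a tile ends up as 0.0 or 1.0 with equal probability.
# Assumption: a set of known-possible outcomes stands in for a learned model.

def prediction_error_reward(prediction, observed):
    """Curiosity as squared error of one deterministic prediction."""
    return (prediction - observed) ** 2


def possibility_reward(observed, possible_outcomes):
    """Curiosity only for transitions outside the set believed possible."""
    return 0.0 if observed in possible_outcomes else 1.0


outcomes = [0.0, 1.0]
# The best single prediction under squared error is the mean outcome.
best_prediction = sum(outcomes) / len(outcomes)

# The error never vanishes, so the agent keeps being rewarded for noise.
error_rewards = [prediction_error_reward(best_prediction, o) for o in outcomes]

# Once both outcomes are known to be possible, neither is rewarded.
possibility_rewards = [possibility_reward(o, set(outcomes)) for o in outcomes]
```

Here the prediction-error reward stays at 0.25 for every outcome no matter how long the agent trains, whereas a model that only asks whether a transition is possible stops rewarding the noisy tile.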

In order to predict a variety of possible transitions, we learn a low-dimensional latent space, with latent vector $z$, which encapsulates the stochastic aspects of the transition. We then learn a deterministic mapping $G: (s_t, a_t, z) \rightarrow \hat{s}_{t+1}$, where $a_t$ is the action at time step $t$, $s_t$ is the state at time step $t$, and $\hat{s}_{t+1}$ is a generated configuration of what the state could be at time step $t+1$. We draw the latent vector $z$ from a prior distribution $p(z)$; in this work we use a standard Gaussian distribution $\mathcal{N}(0, I)$. A discriminator network, $D$, is trained to detect synthetic transitions generated by $G$; its output lies in $[0, 1]$ and corresponds to the probability that the transition it is shown is synthetic.

We use the generator and discriminator as a source for an intrinsic curiosity reward similar to those produced from next state prediction error. To produce an informative curiosity reward, we force the generator to attempt a prediction that matches the true next state $s_{t+1}$. We map $s_{t+1}$ directly to the latent space using an encoding function, $E$. The generator then uses a sample $z$ from the encoded latent space, the state $s_t$ and the action $a_t$ to synthesize the desired output $\hat{s}_{t+1}$. This process can be likened to the reconstruction of $s_{t+1}$ using an auto-encoder. The reconstruction error of $\hat{s}_{t+1}$ with respect to $s_{t+1}$ is used as a component of the curiosity reward function.
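A minimal sketch of how a deterministic mapping combined with a sampled latent vector yields several candidate next states. The dynamics in `toy_generator`, the state layout `(position, tile)` and all names are hypothetical stand-ins for the trained generator; only the sampling of the latent vector from a standard Gaussian follows the text.

```python
import random


def sample_latent(dim, rng):
    """Draw a latent vector from a standard Gaussian prior."""
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]


def toy_generator(state, action, z):
    """Deterministic mapping (state, action, latent) -> candidate next state.

    Hypothetical dynamics: the position moves by `action`, and a noisy tile
    takes the value selected by the sign of the first latent component.
    """
    position, _tile = state
    return (position + action, 1 if z[0] > 0 else 0)


rng = random.Random(0)
state, action = (3, 0), 1

# Different latent samples give different, equally valid, candidates.
candidates = {
    toy_generator(state, action, sample_latent(2, rng)) for _ in range(100)
}
```

For a fixed latent vector the mapping always returns the same state; the variety in `candidates` comes entirely from resampling the latent vector.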

We define the curiosity based reward utilizing the reconstruction error and the discriminator’s output predicting a synthetic transition:

| (1) |

where is a scaling hyper parameter.

III-B Navigation Network

We consider the traditional RL framework of a Markov Decision Process with a state space $\mathcal{S}$, a set of actions $\mathcal{A}$ and a transition function $p(s_{t+1} \mid s_t, a_t)$ which specifies the distribution over next states given a current state and action. At each time step $t$, the agent in state $s_t \in \mathcal{S}$ takes an action $a_t \in \mathcal{A}$ by sampling from a goal-conditioned stochastic policy $\pi(a_t \mid s_t, g; \theta)$ represented as a neural network with parameters $\theta$, where $g$ is the goal. We assume that some goal verification function $v$ can be specified such that $v(s_t, g) = 1$ if the state $s_t$ satisfies the goal $g$, and 0 otherwise. The undiscounted intrinsic goal reward is defined to be:

| (2) |

where is a hyper-parameter. The navigation network is trained to maximize the discounted expected reward, :

| (3) |

where is the discount factor and is the curiosity reward.
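As an illustration of the discounted objective, the sketch below computes a discounted return from per-step rewards. Summing the goal reward and the curiosity reward at each step is an assumption made for the example, and the function names are hypothetical.

```python
def discounted_return(rewards, gamma):
    """Return sum_t gamma**t * rewards[t], accumulated from the last step."""
    total = 0.0
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


def navigator_return(goal_rewards, curiosity_rewards, gamma):
    """Discounted return of the per-step sum of goal and curiosity rewards.

    Assumption: the two reward streams are simply added at each step.
    """
    combined = [g + c for g, c in zip(goal_rewards, curiosity_rewards)]
    return discounted_return(combined, gamma)


# Goal reached on the third step, curiosity reward on the first step.
example = navigator_return([0.0, 0.0, 1.0], [0.5, 0.0, 0.0], gamma=0.5)
```

With a discount factor of 0.5 the goal reward at the third step contributes 0.25, so `example` evaluates to 0.75.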

III-C Sub-Goal Generation

The subgoal generator neural network is defined as , where is the current goal, and is the initial goal set by the environment. Possible goals are set relative to the agent’s observation space. It selects an input position and a goal value for that position. The navigator network is then tasked with altering the selected input to be the goal value. A new subgoal can be proposed at the start of an episode, once the previous one is reached or the previous one is abandoned. The agent abandons subgoals if it exceeds a hyper-parameter that sets the step limit to attempt to reach the goal. Finally, the network can also choose to set no subgoal, which is taken when the network selects the current goal as the subgoal.
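The subgoal representation and lifecycle described above can be sketched as follows. This is a minimal illustration under stated assumptions: the observation is treated as a flat sequence of object indices, reaching the step limit counts as exceeding it, and the names `Subgoal`, `verify` and `SubgoalTracker` are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Subgoal:
    position: int  # index of the selected observation element (assumed flat)
    value: int     # object index that element should take


def verify(observation: Sequence[int], goal: Subgoal) -> int:
    """Goal verification: 1 if the selected element holds the goal value."""
    return int(observation[goal.position] == goal.value)


class SubgoalTracker:
    """Tracks when a new subgoal may be proposed."""

    def __init__(self, step_limit: int):
        self.step_limit = step_limit
        self.goal: Optional[Subgoal] = None
        self.steps = 0

    def needs_new_subgoal(self) -> bool:
        # True at the start of an episode and after a reach or an abandon.
        return self.goal is None

    def propose(self, goal: Subgoal) -> None:
        self.goal = goal
        self.steps = 0

    def step(self, observation: Sequence[int]) -> str:
        """Advance one step; return 'reached', 'abandoned' or 'active'."""
        self.steps += 1
        if verify(observation, self.goal):
            self.goal = None
            return "reached"
        if self.steps >= self.step_limit:  # assumption: limit is inclusive
            self.goal = None
            return "abandoned"
        return "active"
```

A training loop would call `needs_new_subgoal()` before each step and query the subgoal generator whenever it returns true.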

The subgoal generator network is trained to maximize the discounted expected reward:

| (4) |

where is the discount factor, is the curiosity reward, is the extrinsic reward within the environment, is the goal verification function, and is a scaling parameter which determines the penalty applied to the extrinsic reward when the goal does not align with the reward.

IV Experiments

We use a modified version of the TorchBeast implementation of IMPALA [19] (https://github.com/facebookresearch/torchbeast) as the framework for our code.

We establish our proof of concept within the challenging and scalable MiniGrid environment (https://github.com/maximecb/gym-minigrid). This provides a good test bed for the agent, as the observations are symbolic rather than high-dimensional, allowing for clear and interpretable subgoals.

IV-A Environments

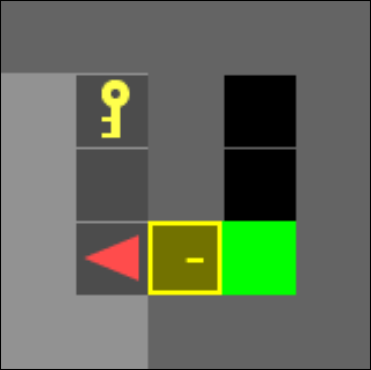

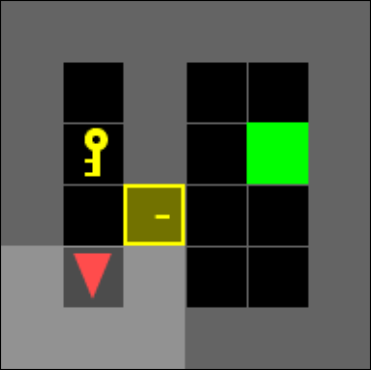

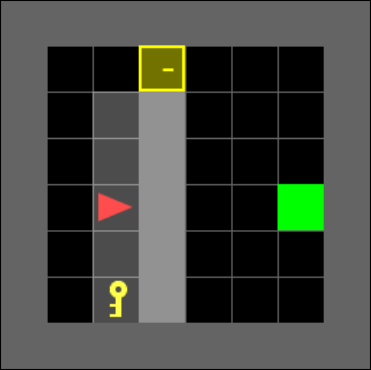

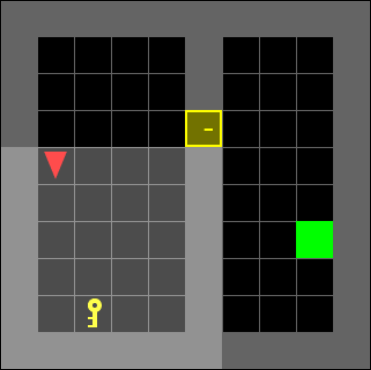

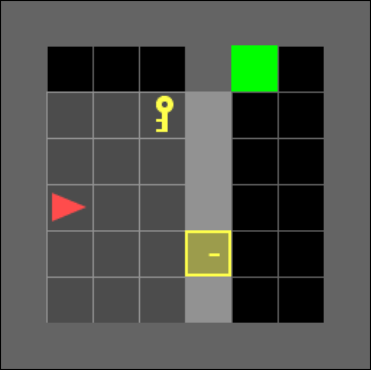

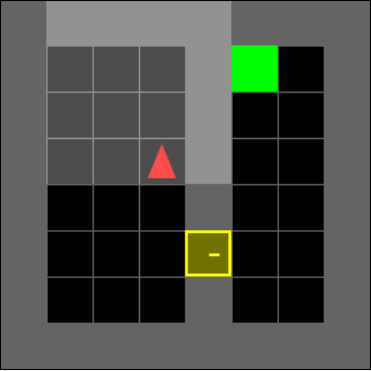

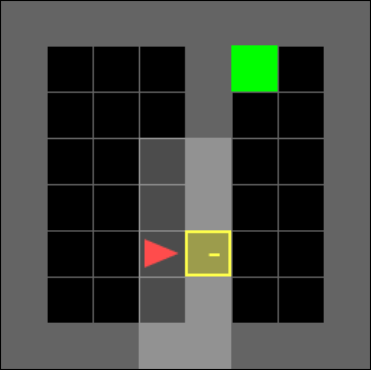

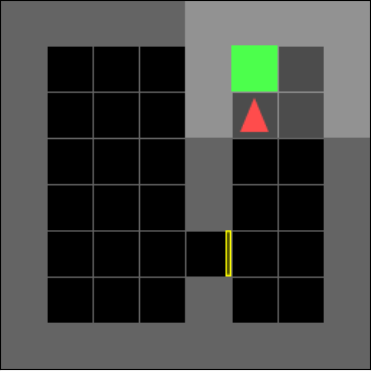

We use a modified version of the Door & Key environments within the MiniGrid package (Figure 1). The environment randomizes the position of the dividing wall, door, and key, as well as the agent’s starting position and orientation. We alter the environment to also randomize the location of the agent’s target position within the second room. The randomization increases difficulty by increasing the number of possible trajectories, and it adds stochastic transitions to the environment.

The environments are comprised of tiles, where each tile contains at most one of the following objects: door, key, wall, goal. If it contains none, it is the ‘empty’ object. The agent has limited vision, seeing within a square with the agent positioned on a center edge of the square. The agent cannot see behind walls or closed doors even if they fall within the vision range. Such objects are assigned as ‘unseen’ rather than their true values. All objects and their attributes are transformed using an embedding layer unique for each network before being fed into the remainder of the network. The navigator is able to perform the following actions: turn left, turn right, move forward, pick up an object, drop an object, or toggle (interact with objects such as opening a door).

IV-B Implementations

The navigator and subgoal generator have a network architecture consisting of a fully connected layer with rectified linear units, two LSTM layers, followed by a final fully connected layer. They are trained using the TorchBeast [20] implementation of IMPALA [19].

The GAN’s encoder consists of three fully connected layers interleaved with hyperbolic tangent activation functions. Similarly, both the generator and discriminator consist of four fully connected layers interleaved with hyperbolic tangent activation functions. In addition, the single output from the final layer of the discriminator is activated by a sigmoid function. We train the GAN using the same methods as BicycleGAN [15], with one exception. As BicycleGAN is designed for continuous data sets, we replace the standard generator loss function with the MaliGAN [21] gradient estimator.
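For readers unfamiliar with MaliGAN, the sketch below shows the per-sample weights that estimator places on the log-probability gradients of generated samples, as we understand it from [21]: each sample is weighted by the ratio D/(1 - D), normalized over the batch, minus a baseline. In [21] the discriminator output is the probability that a sample is real; since the discriminator here outputs the probability of being synthetic, converting with one minus that output is an assumption. The function name and the `eps` guard are hypothetical.

```python
def maligan_weights(p_real, baseline=0.0, eps=1e-8):
    """Batch-normalized importance weights for generated samples.

    p_real: discriminator probabilities that each generated sample is real.
            (Assumption: for a discriminator that outputs the probability of
            being synthetic, pass 1 - output.)
    """
    # Density-ratio estimate D / (1 - D), guarded against division by zero.
    ratios = [p / max(1.0 - p, eps) for p in p_real]
    total = sum(ratios)
    # Normalize over the batch and subtract the variance-reducing baseline.
    return [r / total - baseline for r in ratios]


weights = maligan_weights([0.75, 0.25])
```

Each weight would multiply the gradient of the log-probability of the corresponding generated sample, so samples the discriminator finds more realistic are reinforced more strongly.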

For comparison, we implement three alternative methods. We use IMPALA without any intrinsic motivation as a standard deep RL baseline. Additionally, we include two alternative methods utilizing intrinsic motivation. The first is Random Network Distillation (RND) [22], which uses next state feature prediction error as a curiosity-based form of intrinsic motivation, where the features are produced by a random neural network. The second is AMIGo, where the teacher and student network architectures match those described in the AMIGo paper [18] and accompanying code (https://github.com/facebookresearch/adversarially-motivated-intrinsic-goals). The AMIGo networks receive the full absolute view of the environment, as opposed to the agent-centric partial view the other methods receive. Both RND and IMPALA share the same network architecture as our navigator and subgoal generator networks. All three alternative methods are trained using the TorchBeast framework.
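The idea behind the RND baseline can be sketched in a few lines. This is not the implementation used in the experiments: linear maps stand in for the neural networks, and the class name, dimensions and learning rate are assumptions chosen for illustration.

```python
import random


class TinyRND:
    """Linear stand-in for RND: fixed random target, trained predictor."""

    def __init__(self, in_dim, feat_dim, lr=0.1, seed=0):
        rng = random.Random(seed)
        # The target is random and never trained.
        self.target = [
            [rng.uniform(-1.0, 1.0) for _ in range(in_dim)]
            for _ in range(feat_dim)
        ]
        self.predictor = [[0.0] * in_dim for _ in range(feat_dim)]
        self.lr = lr

    @staticmethod
    def _apply(weights, x):
        return [sum(w * xi for w, xi in zip(row, x)) for row in weights]

    def reward(self, x):
        """Intrinsic reward: squared feature prediction error."""
        target = self._apply(self.target, x)
        pred = self._apply(self.predictor, x)
        return sum((p - t) ** 2 for p, t in zip(pred, target))

    def update(self, x):
        """One gradient step on the squared error for observation x."""
        target = self._apply(self.target, x)
        pred = self._apply(self.predictor, x)
        for i, (p, t) in enumerate(zip(pred, target)):
            err = p - t
            for j, xj in enumerate(x):
                self.predictor[i][j] -= self.lr * 2.0 * err * xj


rnd = TinyRND(in_dim=3, feat_dim=2)
seen, novel = [1.0, 0.0, 0.0], [0.0, 1.0, 0.0]
reward_before = rnd.reward(seen)
for _ in range(20):
    rnd.update(seen)
reward_after = rnd.reward(seen)
```

After repeated visits the reward for `seen` shrinks towards zero while the reward for `novel` is unchanged, which is the signal that drives exploration towards unfamiliar states.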

| Model | Door & Key Mean Extrinsic Reward ( size) | | | |
|---|---|---|---|---|
| CSG | | | | |
| IMPALA | | | | |
| RND | | | | |
| AMIGo | | | | |

IV-C Results and Discussion

We use the mean extrinsic reward at the end of training to evaluate the performance of each agent. Where the extrinsic reward defined in the environment is . We summarize our results in Table I.

IMPALA is unable to reach the goal once the environment space becomes too large, given its lack of intrinsic motivation for exploration. However, it performs better in the low-dimensional spaces because its reward is free of the noise that the intrinsic rewards of the other methods introduce. RND and AMIGo perform comparably across all environment dimensions. Our agent, CSG, performs better than both RND and AMIGo in the highest-dimensional environment.

Figure 2: Example sequence of subgoals. (a) Subgoal: pick up key. (b) New subgoal: go to door. (c) New subgoal: go to goal. (d) Final step before episode ends.

The novel subgoal generation has the added benefit of increasing the agent’s explainability. Figure 2 highlights an example set of goals used to direct the navigator network in order to solve the environment. The subgoals listed are the human interpretation of the subgoal generator output. In Figure 2 the agent receives a field of view:

Each element of the field of view is an object. The field of view moves with the agent such that the agent always occupies the same position within it, with a fixed element directly in front. In Figure 2(a), the subgoal generator selects an element to be changed to the object index value corresponding to the yellow key, which can be interpreted as the agent holding the key, and hence the subgoal is to pick up the key. Similarly, in Figure 2(b), the subgoal generator selects an element to be changed to the object index value corresponding to the locked yellow door, which we interpret as the agent setting the door as the place to go to. Finally, in Figure 2(c), an element is selected to be changed to the object index value corresponding to the green goal zone, which is also the overarching goal set by the environment.

The ability to generate explicit subgoals has two benefits: the first is to developers, as it gives a window into the training process, showing stages along the way to the agent’s final functionality, that are accessible to human language, logic and reasoning. Therefore, more interventions are possible, giving the ability to craft and debug the training process at a finer grain than conventional methods. The second benefit is to end users of the agent. These users will be able to understand in human terms how and why the agent made the decisions it did, leading to greater trust in the agent. Along this track there is a future long-term benefit to end users in that their feedback can be integrated as reward to train the agent, giving them the ability to teach the agent according to their preferences. Users will get a greater measure of control and insight into the agent’s reasoning and decision criteria.

V Conclusion

In this work we propose a method for developing a curious, subgoal-focused agent that has a robust method for driving curiosity and that decomposes complex tasks using interpretable subgoals, increasing both exploration and explainability. In future work we would like to expand the testing environments to establish CSG as a new state-of-the-art hard-exploration method.

The current implementation of CSG has limited applications: it is restricted to discrete observation spaces with basic dynamics. However, it may be easily incorporated into any modern DRL agent operating within these constraints, as it needs no hand-crafted features or demonstrations. In future work we would also like to include abstract concepts and representations as possible subgoals. For example, if the pixel input version of gridworld were used, we would like the agent to be able to use concepts it has created for ‘key’ and ‘door’ rather than any specific pixel.

References

- [1] D. Silver, J. Schrittwieser, K. Simonyan, I. Antonoglou, A. Huang, A. Guez, T. Hubert, L. Baker, M. Lai, A. Bolton, Y. Chen, T. Lillicrap, F. Hui, L. Sifre, G. van den Driessche, T. Graepel, and D. Hassabis, “Mastering the game of go without human knowledge,” Nature, vol. 550, no. 7676, pp. 354–359, Oct 2017. [Online]. Available: http://www.nature.com/articles/nature24270

- [2] J. Schrittwieser, I. Antonoglou, T. Hubert, K. Simonyan, L. Sifre, S. Schmitt, A. Guez, E. Lockhart, D. Hassabis, T. Graepel, T. Lillicrap, and D. Silver, “Mastering atari, go, chess and shogi by planning with a learned model,” arXiv, pp. 1–21, 2019. [Online]. Available: https://arxiv.org/pdf/1911.08265v2.pdf

- [3] A. P. Badia, B. Piot, S. Kapturowski, P. Sprechmann, A. Vitvitskyi, D. Guo, and C. Blundell, “Agent57: Outperforming the atari human benchmark,” arXiv, 2020. [Online]. Available: https://arxiv.org/pdf/2003.13350v1.pdf

- [4] Y. Duan, X. Chen, R. Houthooft, J. Schulman, and P. Abbeel, “Benchmarking deep reinforcement learning for continuous control,” 33rd International Conference on Machine Learning, ICML 2016, vol. 3, pp. 2001–2014, 2016. [Online]. Available: https://arxiv.org/pdf/1604.06778v3.pdf

- [5] A. Raffin and F. Stulp, “Generalized State-Dependent Exploration for Deep Reinforcement Learning in Robotics,” arXiv, pp. 1–21, 2020. [Online]. Available: https://arxiv.org/pdf/2005.05719v1.pdf

- [6] M. J. Mataric, “Reward Functions for Accelerated Learning,” in Machine Learning Proceedings 1994, W. W. Cohen and H. Hirsh, Eds. San Francisco (CA): Morgan Kaufmann, 1994, pp. 181–189. [Online]. Available: http://www.sciencedirect.com/science/article/pii/B9781558603356500301

- [7] M. T. Ribeiro, S. Singh, and C. Guestrin, “‘Why should I trust you?’ Explaining the predictions of any classifier,” Proceedings of the ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, vol. 13-17-August, pp. 1135–1144, 2016. [Online]. Available: https://arxiv.org/pdf/1602.04938.pdf

- [8] M. Ancona, C. Öztireli, and M. Gross, “Explaining deep neural networks with a polynomial time algorithm for Shapley values approximation,” 36th International Conference on Machine Learning, ICML 2019, vol. 2019-June, pp. 400–409, 2019. [Online]. Available: https://arxiv.org/pdf/1903.10992.pdf

- [9] M. Sundararajan, A. Taly, and Q. Yan, “Axiomatic attribution for deep networks,” 34th International Conference on Machine Learning, ICML 2017, vol. 7, pp. 5109–5118, 2017. [Online]. Available: https://arxiv.org/pdf/1703.01365.pdf

- [10] Z. Juozapaitis, A. Koul, A. Fern, M. Erwig, and F. Doshi-Velez, “Explainable Reinforcement Learning via Reward Decomposition,” Proceedings of the IJCAI 2019 Workshop on Explainable Artificial Intelligence, pp. 47–53, 2019. [Online]. Available: https://web.engr.oregonstate.edu/~erwig/papers/ExplainableRL_XAI19.pdf

- [11] P. Madumal, T. Miller, L. Sonenberg, and F. Vetere, “Explainable Reinforcement Learning Through a Causal Lens,” 2019. [Online]. Available: http://arxiv.org/abs/1905.10958

- [12] D. Pathak, P. Agrawal, A. A. Efros, and T. Darrell, “Curiosity-driven exploration by self-supervised prediction,” 34th International Conference on Machine Learning, ICML 2017, vol. 6, pp. 4261–4270, 2017. [Online]. Available: https://arxiv.org/pdf/1705.05363.pdf

- [13] N. Bougie and R. Ichise, “Skill-based curiosity for intrinsically motivated reinforcement learning,” Machine Learning, vol. 109, no. 3, pp. 493–512, Mar 2020. [Online]. Available: http://link.springer.com/10.1007/s10994-019-05845-8

- [14] Y. Burda, H. Edwards, D. Pathak, A. Storkey, T. Darrell, and A. A. Efros, “Large-scale study of curiosity-driven learning,” arXiv, 2018. [Online]. Available: https://arxiv.org/pdf/1808.04355.pdf

- [15] J. Y. Zhu, R. Zhang, D. Pathak, T. Darrell, A. A. Efros, O. Wang, and E. Shechtman, “Toward multimodal image-to-image translation,” Advances in Neural Information Processing Systems, vol. 2017-December, no. 1, pp. 466–477, 2017. [Online]. Available: https://arxiv.org/pdf/1711.11586.pdf

- [16] L. Xing, “Learning and Exploiting Multiple Subgoals for Fast Exploration in Hierarchical Reinforcement Learning,” arXiv, 2019. [Online]. Available: https://arxiv.org/pdf/1905.05180.pdf

- [17] J. Andreas, D. Klein, and S. Levine, “Modular multitask reinforcement learning with policy sketches,” 34th International Conference on Machine Learning, ICML 2017, vol. 1, pp. 229–239, 2017. [Online]. Available: https://arxiv.org/pdf/1611.01796.pdf

- [18] A. Campero, R. Raileanu, H. Küttler, J. B. Tenenbaum, T. Rocktäschel, and E. Grefenstette, “Learning with AMIGo: Adversarially Motivated Intrinsic Goals,” 2020. [Online]. Available: http://arxiv.org/abs/2006.12122

- [19] L. Espeholt, H. Soyer, R. Munos, K. Simonyan, V. Mnih, T. Ward, Y. Doron, V. Firoiu, T. Harley, I. Dunning, S. Legg, and K. Kavukcuoglu, “IMPALA: Scalable Distributed Deep-RL with Importance Weighted Actor-Learner Architectures,” 35th International Conference on Machine Learning, ICML 2018, vol. 4, pp. 2263–2284, 2018. [Online]. Available: https://arxiv.org/pdf/1802.01561.pdf

- [20] H. Küttler, N. Nardelli, T. Lavril, M. Selvatici, V. Sivakumar, T. Rocktäschel, and E. Grefenstette, “TorchBeast: A PyTorch platform for distributed RL,” arXiv, pp. 1–10, 2019. [Online]. Available: https://arxiv.org/pdf/1910.03552.pdf

- [21] T. Che, Y. Li, R. Zhang, R. Devon Hjelm, W. Li, Y. Song, and Y. Bengio, “Maximum-likelihood augmented discrete generative adversarial networks,” arXiv, 2017. [Online]. Available: https://arxiv.org/pdf/1702.07983.pdf

- [22] Y. Burda, H. Edwards, A. Storkey, and O. Klimov, “Exploration by random network distillation,” arXiv, pp. 1–17, 2018. [Online]. Available: https://arxiv.org/pdf/1810.12894.pdf