A novel algorithm for optimizing bundle adjustment in image sequence alignment

Abstract

The Bundle Adjustment (BA) model is commonly optimized using a nonlinear least squares method, with the Levenberg-Marquardt (L-M) algorithm being a typical choice. However, despite the L-M algorithm’s effectiveness, its sensitivity to initial conditions often results in slower convergence when applied to poorly conditioned datasets, motivating the exploration of alternative optimization strategies. This paper introduces a novel algorithm for optimizing the BA model in the context of image sequence alignment for cryo-electron tomography, utilizing optimal control theory to directly optimize general nonlinear functions. The proposed Optimal Control Algorithm (OCA) exhibits superior convergence rates and effectively mitigates the oscillatory behavior frequently observed in L-M algorithm. Extensive experiments on both synthetic and real-world datasets were conducted to evaluate the algorithm’s performance. The results demonstrate that the OCA achieves faster convergence compared to the L-M algorithm. Moreover, the incorporation of a bisection-based update procedure significantly enhances the OCA’s performance, particularly in poorly initialized datasets. These findings indicate that the OCA can substantially improve the efficiency of 3D reconstructions in cryo-electron tomography.

Key words: Bundle adjustment, Electron tomography, Image sequence alignment, Optimal control algorithm.

1 Introduction

Electron tomography (ET) techniques is an important branch of cryo-electron microscopy. It utilizes the 3D reconstruction method in simultaneous localization and mapping (SLAM) to reconstruct the 3D structure of organisms by photographing biological samples at different oblique angles. ††footnotetext: This work was supported by the Original Exploratory Program Project of National Natural Science Foundation of China (62450004), the Joint Funds of the National Natural Science Foundation of China (U23A20325), and the Natural Science Foundation of Shandong Province (ZR2021ZD14, ZR2024MF045). (Corresponding author: Huanshui Zhang.) H. Zhang is with the College of Electrical Engineering and Automation, Shandong University of Science and Technology, Qingdao 266590, China, and also with the School of Control Science and Engineering, Shandong University, Jinan, Shandong 250061, China (e-mail: [email protected]). H. Wang is with the College of Electrical Engineering and Automation, Shandong University of Science and Technology, Qingdao 266590, China (e-mail: [email protected];). H. Xu is with the College of Mathematics and Systems Science, Shandong University of Science and Technology, Qingdao 266590, China (e-mail: [email protected];)This method enables the acquisition of relatively accurate biological structural information. The most critical step is the alignment of image sequence, which has a direct impact on the final 3D structure restoration accuracy. Image sequence alignment can be divided into two main methods: marker-free alignment [1] and marker-based alignment [2, 3]. In the marker-based alignment , the bundle adjustment is the core, and the nonlinear least square method is usually used to minimize the reprojection error and optimize the target parameters.

Up to now, there exists a variety of alternative algorithms for solving nonlinear least squares problems, including the Newton method, Gauss-Newton method, and L-M algorithm, among others. The L-M algorithm is commonly employed in many practical applications. Recently, an optimization algorithm based on optimal control has been proposed [4]. This novel approach transforms the optimization problem into an optimal control problem by designing a cost function closely related to the objective function. An optimal controller adjusts a first-order difference equation to minimize the objective function. This method offers several advantages, such as relatively flexible initial value selection, rapid convergence rates, and the avoidance of oscillatory behavior commonly associated with gradient methods. Notably, when the initial value is appropriately chosen, the OCA can yield different local minima by adjusting the input weight matrix.

At present, the OCA remains primarily at the theoretical level and has not yet seen widespread application. This paper explores the practical application of the OCA by optimizing the BA model in cryo-ET and comparing the results with those obtained using the L-M algorithm. The remainder of this paper is organized as follows. Section 2 provides a review of recent research on BA and offers a brief introduction to the steps of the L-M algorithm. Section 3 discusses the projection model employed in BA within the context of cryo-ET, along with the underlying principles of the OCA. In Section 4, we present a comparative experiment between the L-M algorithm and OCA, demonstrating that the latter exhibits faster convergence. Finally, Section 5 outlines future directions for the development of OCA and explores its potential applications.

2 Related work and L-M algorithm

Bundle adjustment refers to the extraction of optimal 3D models and camera parameters (both intrinsic and extrinsic) from visual reconstruction data. It involves adjusting the camera orientation and the spatial positions of feature points such that the optimal alignment of light rays, reflected from each feature point, is achieved. These rays are eventually projected onto the camera’s optical center[5].

The BA was first introduced by Brown and Duane[6], with its cost function formulated as the sum of squares of multiple nonlinear functions. Given that the minimization process is carried out using nonlinear least squares, the L-M algorithm is the most commonly employed optimization technique. Recent studies have primarily concentrated on expanding the application scenarios of the BA model. For example, Liu et al.[7] propose a new cost function that extends the applicability of BA to lidar mapping. However, relatively few works have addressed the fundamental aspects of the problem by exploring alternative algorithmic structures to replace the L-M algorithm. In this regard, Li et al.[8] introduce a Broyden–Fletcher–Goldfarb–Shanno algorithm to approximate the cost function. Similarly, Zhou et al. [9] propose a point-to-plane cost function aimed at jointly optimizing depth camera poses and plane parameters for 3D reconstruction.

The aforementioned approaches, including the L-M algorithm, can be regarded as variations of the Gauss-Newton method. Consequently, the linearization of the cost function is an inherent aspect of these methods, which leads to increased errors and a higher number of iterations. In contrast, the OCA, introduced in the subsequent section, does not rely on such linearization techniques.

2.1 Levenberg-Marquardt algorithm

The L-M algorithm is a widely used algorithm for solving non-linear least squares problems, which combines the concepts of gradient descent and the Gauss-Newton algorithm to provide a robust approach for parameter estimation.

Let , the objective is to minimize the sum of squared residuals

| (1) |

The update rule for the parameters is given by

| (2) | ||||

where is the Jacobian matrix of partial derivatives, is the identity matrix and is referred to as damping parameter, which controls the behavior of the algorithm.

A larger makes the update resemble gradient descent while a smaller approximates the Gauss-Newton method. This adaptability allows the L-M algorithm to efficiently handle a wide range of problem conditions.

In this approach, we employ the trust-region method to adjust . First, we define a quadratic function at the current iteration point to represent the predicted value

| (3) |

The step is then computed based on the current value of , and the ratio of the actual decrease to the predicted decrease is evaluated as

| (4) |

Equation (4) reflects the degree to which the linearized model aligns with the nonlinear objective function.

-

•

When approaches 1, the quadratic model provides a good approximation of the objective function at , suggesting that should be reduced.

-

•

When approaches 0, the quadratic model poorly approximates the objective function at , indicating that should be increased.

-

•

When is neither close to 0 nor 1, is considered to be appropriately chosen, and no adjustment is necessary.

Typically, the threshold values for are set to 0.25 and 0.75. Based on this, the update rule for in the L-M method is as follows

| (5) |

In summary, the complete flow of the L-M algorithm is as follows

3 Method

This section is divided into two parts. The first part introduces the projection model of cryo-ET, a crucial component in BA. The second part explains the principles of the OCA and extends the algorithm by incorporating a parameter update mechanism.

3.1 Projection model

An essential component of BA is the camera projection model, which maps the 3D coordinates of objects in the real world onto 2D coordinates in an image. In this paper, we focus on the application of a projection model specifically for cryo-ET image sequence[10]

| (6) |

where is the 3D coordinates of the fiducial markers, is the 2D projection of the fiducial markers in the image, is the image scale change, is the translation during projection and P represents the orthogonal projection matrix. R denotes the rotation matrix of the projection process, the details of R and P as follows

is called as camera parameters and is called as point parameters.

When sufficient projection data for a single 3D point is available, triangulation can be used to approximate the coordinates of that point. However, due to various uncertainties, the initial estimates of the 3D coordinates may not be entirely accurate. When this estimated 3D point is reprojected onto the 2D image, a discrepancy, or error, arises in comparison to the observed point.

Let the observed point be denoted as and the point obtained by reprojection can be expressed as follows

| (7) |

where represents the initial estimation of parameters. Thus the reprojection error can be written as

| (8) |

To account for all observations, we can introduce a subscript to represent the error for each observation. Let denotes the data generated by the -th marker in the -th image. The overall cost function can be expressed as

| (9) |

During the sample collection process, some marker points may become obscured at certain angles and thus will not be visible. To represent the visibility of these points, we introduce a visibility indicator . If the projection of the -th marker is visible in the -th image, ; otherwise, .

Solving this least-squares problem involves jointly optimizing both the camera parameters and the point parameters , a process known as Bundle Adjustment.

The effectiveness of the adjustments is evaluated using the following average residual formula

| (10) |

where denotes the 1-norm, and are the adjusted parameters. A smaller value indicates a lower reprojection error.

3.2 Optimal control algorithm

Suppose is a nonlinear function with a continuous second derivative. Consider the following optimization problem

| (11) |

Generally it can be solved by the following iteration

| (12) |

where is step size.

If we interpret as a control input in a control system, the iteration (12) exhibits a structure analogous to that of the following discrete-time linear time-invariant system

| (13) |

By designing a performance index related to the cost function [4], the optimization problem (11) can be reformulated as the following optimal control problem,

| (14) | ||||

where represents the terminal cost function, is the terminal time, and is given. The positive definite matrix denotes the control weight.

Based on the Pontryagin Maximum Principle, the optimal trajectory satisfies a set of forward and backward equations. Solving these equations yields the optimal controller for the problem (14)

| (15) |

Thus, we can derive the following iterative algorithm

| (16) |

Although the above iteration is implicit, we can obtain an approximate explicit solution through Taylor expansion. First, take the first-order Taylor expansion of at

| (17) |

| (18) |

| (19) |

where , and is the Hessian matrix of the objective function . By replacing all with for , we obtain the following optimal control algorithm to address BA

| (20) | |||

The optimal control algorithm has been demonstrated to exhibit a superlinear convergence property[11], and the algorithm operates through the following stages

The selection of an appropriate weight matrix is critical for the effective operation of the OCA. For convenience, is generally defined as , where is a constant. Under the condition that , smaller values of lead to faster convergence of the OCA. In the simulation experiments presented in Section 4.2, a set of poorly initialized data was generated, with initial points positioned far from the extremum points. In such cases, a larger is typically required to ensure algorithmic convergence; however, this also results in a slower convergence rate for the OCA. To resolve this issue, we propose an update procedure based on the bisection method, which allows the weight matrix to progressively decrease as the number of iterations increases. This approach ensures algorithmic convergence while simultaneously enhancing convergence speed. The detailed update strategy is presented as follows.

It is important to note that this update procedure does not affect the convergence of OCA. Given that is non-increasing, it follows that the spectral radius is also non-increasing. As decreases, the convergence rate of OCA improves. For a detailed proof, the reader is referred to [11].

4 Result

In this section, we apply the OCA and the L-M algorithm to the collected datasets and compare the convergence speed and accuracy of the two methods based on the experimental results. The algorithms and data used in this study were implemented in MATLAB R2023b, with the integration of the third-party CasADi[12] software tool.

4.1 Real datasets experiment

We selected three real-world cryo-electron microscopy datasets, Centriole††http://bio3d.colorado.edu/imod/files/tutorialData-1K.tar.gz, VEEV††https://doi.org/10.5281/zenodo.11172321 and Vibrio ††https://doi.org/10.5281/zenodo.11172858 to evaluate our algorithms. The Centriole dataset consists of a tilted sequence of 64 projections, with the projected images tilted between -61° and +65° at 2° intervals. Each projection is a 1024x1024-pixel image, with each pixel corresponding to 1.01 nm. The VEEV dataset consists of a tilted sequence of 21 projections, with the projected images tilted between -50° and +50° at 5° intervals. Each projection is a 1536x2048-pixel image, with each pixel corresponding to 0.2 nm. The Vibrio dataset[13] consists of a tilted sequence of 41 projections. The tomographic reconstructions of these samples were performed using the incline program from the MarkerAuto software suite[3].

Table 1 presents the experimental results of the real datasets, indicating that the Optimal Control algorithm has a faster convergence speed compared to the L-M algorithm.

| Data | Method | R/ | Iteration | Initial residual | Final residual |

|---|---|---|---|---|---|

| Centriole | OCA | 4 | 1.729723 | 1.196488 | |

| L-M | 9 | 1.196488 | |||

| VEEV | OCA | 28 | 10.412596 | 1.202426 | |

| L-M | 236 | 1.202426 | |||

| Vibrio | OCA | 5 | 10.431754 | 1.123689 | |

| L-M | 7 | 1.123689 |

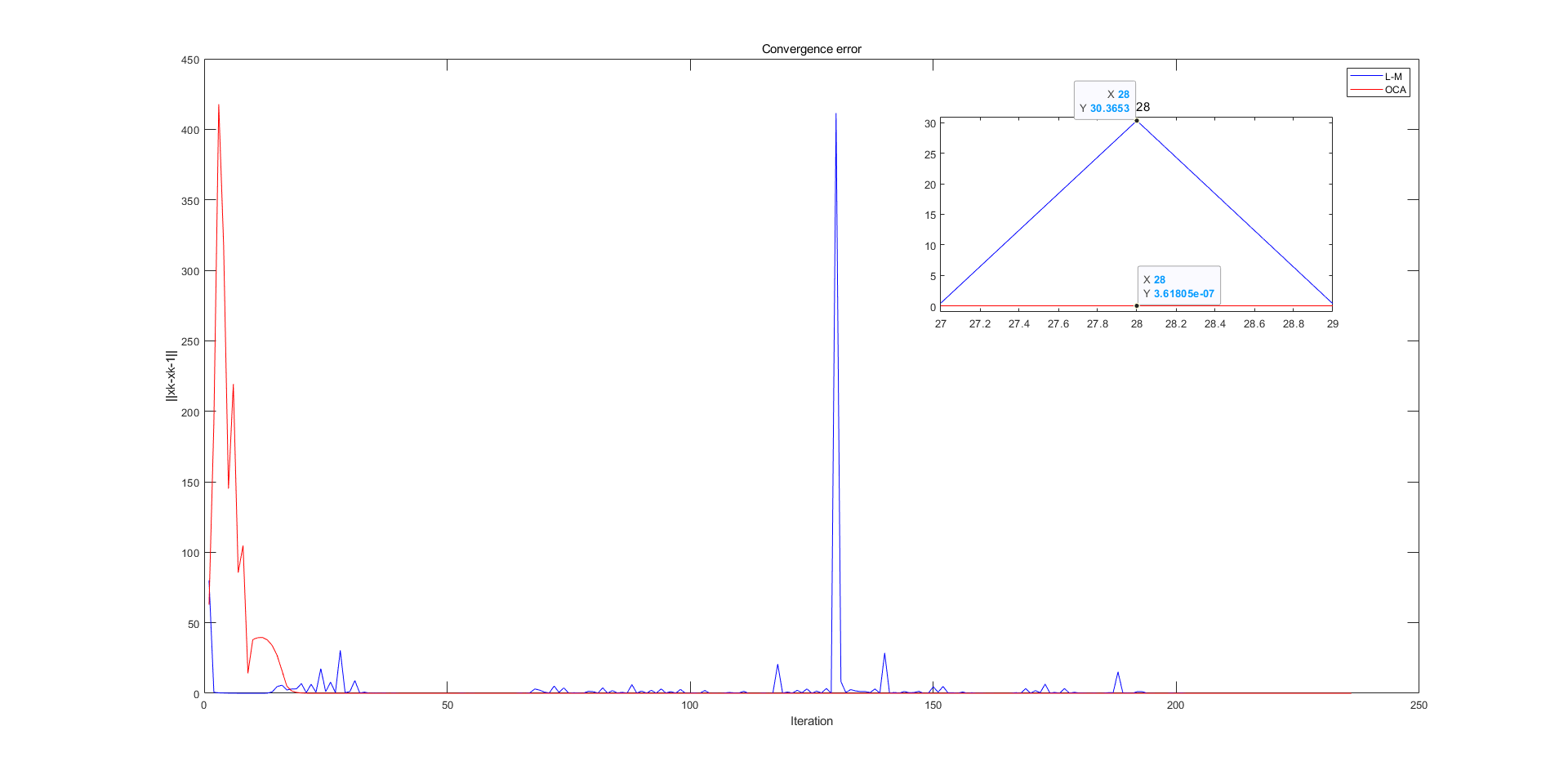

Using the VEEV dataset as an illustrative example, Figure 1 presents the error in the parameters to be optimized at each iteration, represented as . Notably, when , the OCA satisfied the convergence criterion of . In contrast, the L-M algorithm exhibited oscillatory behavior, which led to an increased number of iterations. Consequently, it only met the convergence criterion at .

4.2 Simulated datasets experiment

To obtain a broader range of experimental results, we randomly constructed 3D points and projection images at various angles for simulated dataset experiments, where and . The projection images are sized pixels, and the 3D points are distributed within an space. To enhance the realism of the simulated dataset, we processed the constructed data as follows

-

•

Step 1 Randomly select 5%–30% of the 2D projection points as invisible.

-

•

Step 2 Add Gaussian noise with a standard deviation of to the 2D projection points, where .

-

•

Step 3 Add noise to the camera parameters, with noise magnitude based on the average of each parameter (avg), uniformly distributed in the range , where .

-

•

Step 4 Select 5% of the 2D projection points as outliers and add Gaussian noise with a mean of and a standard deviation of .

For convenience, we denote the dataset with parameters as “21c_5%_20p_0.2%.” To ensure a fair comparison, the weight matrix in the OCA and the damping factor in the L-M algorithm were iteratively adjusted to promote faster convergence. The results of this experiment are presented in Table 2.

| Noise | Method | Iteration | Initial residual | Final residual | |

|---|---|---|---|---|---|

| 21c_5% | OCA | 6 | 11.178429 | 2.051380 | |

| _20p_0.2% | L-M | 8 | 2.051380 | ||

| 21c_5% | OCA | 6 | 14.683220 | 12.377970 | |

| _20p_2% | L-M | 24 | 12.377970 | ||

| 41c_5% | OCA | 5 | 8.411878 | 1.920106 | |

| _20p_0.2% | L-M | 6 | 1.920106 | ||

| 41c_5% | OCA | 5 | 15.658034 | 12.106996 | |

| _20p_2% | L-M | 17 | 12.106996 | ||

| 21c_5% | OCA | 6 | 8.085499 | 1.992593 | |

| _40p_0.2% | L-M | 6 | 1.992593 | ||

| 21c_5% | OCA | 8 | 14.191696 | 11.834401 | |

| _40p_2% | L-M | 16 | 11.834401 | ||

| 41c_5% | OCA | 5 | 7.968274 | 1.860539 | |

| _40p_0.2% | L-M | 6 | 1.860539 | ||

| 41c_5% | OCA | 5 | 16.647453 | 13.511510 | |

| _40p_2% | L-M | 9 | 13.511510 |

When large noise is introduced into the simulated dataset, the initial estimates of the camera parameters and the 2D point coordinates exhibit significant deviations. This suggests that the initial parameters are considerably distant from the extremum, necessitating a greater number of iterations for both the OCA and L-M algorithm. It is worth noting that, in the L-M algorithm, the damping factor is continuously updated, whereas the weight matrix in the OCA remains constant throughout the iterations. If we allow the matrix in the OCA to be updated gradually similar to in the L-M algorithm, the OCA could potentially achieve faster convergence. The detailed experimental results are displayed in the Table 3.

| Noise | Method | Iteration | Initial residual | Final residual | |

|---|---|---|---|---|---|

| 21c_10% | OCA | 8 | 75.677900 | 67.390553 | |

| _20p_10% | L-M | 108 | 67.390553 | ||

| 41c_10% | OCA | 9 | 68.691760 | 58.815270 | |

| _20p_10% | L-M | 146 | 58.815270 | ||

| 21c_10% | OCA | 8 | 70.903339 | 65.472015 | |

| _40p_10% | L-M | 168 | 65.472015 | ||

| 41c_10% | OCA | 8 | 79.020956 | 73.499860 | |

| _40p_10% | L-M | 26 | 73.499860 |

5 Discussion

In this paper, we introduce a novel algorithm, OCA, to optimize the BA model for the image sequence alignment of cryo-ET . We extended the OCA from a purely theoretical framework to practical applications. Through experiments on real-world datasets, the OCA exhibited a notably faster convergence rate compared to the widely adopted L-M algorithm, underscoring its practical significance. Furthermore, in experiments with simulated datasets, the advantages of the OCA became even more pronounced as the noise level increased. This indicates that, in real-world scenarios with poor initial estimates, the OCA may offer substantial benefits.

The BA model used for cryo-ET image sequence alignment involves parameters of relatively low magnitude, with matrices of only a few hundred dimensions. However, the successful application of the OCA in this context suggests its broader potential within the field of computer vision. While the implicit iterative formula of the OCA theoretically guarantees the optimal solution, as described in (16), it is technically challenging to implement. At present, several explicit iterative formulas have been proposed [11], and many explicit solutions remain to be explored. This presents a promising avenue for future research.

References

- [1] R. Guckenberger. Determination of a common origin in the micrographs of tilt series in three-dimensional electron microscopy. Ultramicroscopy, 9(1):167–173, 1982.

- [2] Michael C. Lawrence. Least-Squares Method of Alignment Using Markers, pages 197–204. Springer US, Boston, MA, 1992.

- [3] Renmin Han, Liansan Wang, Zhiyong Liu, Fei Sun, and Fa Zhang. A novel fully automatic scheme for fiducial marker-based alignment in electron tomography. Journal of Structural Biology, 192(3):403–417, 2015.

- [4] Huanshui Zhang and Hongxia Wang. Optimization methods rooting in optimal control. arXiv preprint arXiv:2312.01334, 2023.

- [5] Bill Triggs, Philip F. McLauchlan, Richard I. Hartley, and Andrew W. Fitzgibbon. Bundle adjustment — a modern synthesis. In Bill Triggs, Andrew Zisserman, and Richard Szeliski, editors, Vision Algorithms: Theory and Practice, pages 298–372. Springer Berlin Heidelberg, 2000.

- [6] Duane Brown. The bundle adjustment-progress and prospect. In XIII Congress of the ISPRS, Helsinki, 1976.

- [7] Zheng Liu and Fu Zhang. Balm: Bundle adjustment for lidar mapping. IEEE Robotics and Automation Letters, 6(2):3184–3191, 2021.

- [8] Yanyan Li, Qiang Wang, and Yanbiao Sun. Bundle adjustment method using sparse bfgs solution. Remote Sensing Letters, 9, 05 2018.

- [9] Lipu Zhou, Daniel Koppel, Hul Ju, Frank Steinbruecker, and Michael Kaess. An efficient planar bundle adjustment algorithm. In 2020 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), pages 136–145. IEEE, 2020.

- [10] Renmin Han, Xiaohua Wan, Zihao Wang, Yu Hao, Jingrong Zhang, Yu Chen, Xin Gao, Zhiyong Liu, Fei Ren, Fei Sun, and Fa Zhang. Autom: A novel automatic platform for electron tomography reconstruction. Journal of Structural Biology, 199(3):196–208, 2017.

- [11] Hongxia Wang, Yeming Xu, Ziyuan Guo, and Huanshui Zhang. Superlinear optimization algorithms, 2024.

- [12] Joel AE Andersson, Joris Gillis, Greg Horn, James B Rawlings, and Moritz Diehl. Casadi: a software framework for nonlinear optimization and optimal control. Mathematical Programming Computation, 11:1–36, 2019.

- [13] Davi R. Ortega, Catherine M. Oikonomou, H. Jane Ding, Prudence Rees-Lee, Alexandria, and Grant J. Jensen. Etdb-caltech: A blockchain-based distributed public database for electron tomography. PLOS ONE, 14(4):1–15, 04 2019.