A note on parallel preconditioning for the all-at-once solution of Riesz fractional diffusion equations

Abstract

The -step backward difference formula (BDF) for solving systems of ODEs can be formulated as all-at-once linear systems that are solved by parallel-in-time preconditioned Krylov subspace solvers (see McDonald, Pestana, and Wathen [SIAM J. Sci. Comput., 40(2) (2018): A1012-A1033] and Lin and Ng [arXiv:2002.01108, 2020, 17 pages]). However, when the BDF () method is used to solve time-dependent PDEs, the generalization of these studies is not straightforward as -step BDF is not selfstarting for . In this note, we focus on the 2-step BDF which is often superior to the trapezoidal rule for solving the Riesz fractional diffusion equations, and show that it results into an all-at-once discretized system that is a low-rank perturbation of a block triangular Toeplitz system. We first give an estimation of the condition number of the all-at-once systems and then, capitalizing on previous work, we propose two block circulant (BC) preconditioners. Both the invertibility of these two BC preconditioners and the eigenvalue distributions of preconditioned matrices are discussed in details. An efficient implementation of these BC preconditioners is also presented, including the fast computation of dense structured Jacobi matrices. Finally, numerical experiments involving both the one- and two-dimensional Riesz fractional diffusion equations are reported to support our theoretical findings.

keywords:

Backwards difference formula, all-at-once discretization, parallel-in-time preconditioning, Krylov subsp-ace solver, fractional diffusion equation.1 Introduction

In this paper, we are particularly interested in the efficient numerical solution of evolutionary partial differential equations (PDEs) with both first order temporal derivative and space fractional-order derivative(s). These models arise in various scientific applications in different fields including physics [1], bioengineering [2], hydrology [3], and finance [4], etc., owing to the potential of fractional calculus to describe rather accurately natural processes which maintain long-memory and hereditary properties in complex systems [5, 2]. In particular, fractional diffusion equations can provide an adequate and accurate description of transport processes that exhibit anomalous diffusion, for example subdiffusive phenomena and Lévy fights [6], which cannot be modelled properly by second-order diffusion equations. As most fractional diffusion equations can not be solved analytically, approximate numerical solutions are sought by using efficient numerical methods such as, e.g., (compact) finite difference [3, 8, 7, 9, 10, 11], finite element [12] and spectral (Galerkin) methods [13, 14, 15].

Many numerical techniques proposed in the literature for solving this class of problems are the common time-stepping schemes. They solve the underlying evolutionary PDEs with space fractional derivative(s) by marching in time sequentially, one level after the other. As many time steps may be usually necessary to balance the numerical errors arising from the spatial discretization, these conventional time-stepping schemes can be very time-consuming. This concern motivates the recent development of parallel-in-time (PinT) numerical solutions for evolutionary PDEs (especially with space fractional derivative(s)) including, e.g., the inverse Laplace transform method [16], the MGRIT method [17, 18, 12, 19], the exponential integrator [20] and the parareal method [21]. A class of PinT methods, i.e., the space-time methods, solves the evolutionary PDEs at all the time levels simultaneously by performing an all-at-once discretization that results into a large-scale linear system that is typically solved by preconditioned Krylov subspace methods; refer e.g., to [22, 24, 29, 27, 23, 25, 28, 26, 30, 31] for details. However, most of them only focus on the numerical solutions of one-dimensional space fractional diffusion equations [23, 25, 30, 32] due to the huge computational cost required for high-dimensional problems.

Recently, McDonald, Pestana and Wathen proposed in [33] a block circulant (BC) preconditioner to accelerate the convergence of Krylov subspace methods for solving the all-at-once linear system arising from -step BDF temporal discretization of evolutionary PDEs. Parallel experiments with the BC preconditioner in [33] are reported by Goddard and Wathen in [34]. In [35], a generalized version of the BC preconditioner has been proposed by Lin and Ng who introduced a parameter into the top-right block of the BC preconditioner that can be fine-tuned to handle efficiently the case of very small diffusion coefficients. Both the BC and the generalized BC preconditioners use a modified diagonalization technique that is originally proposed by Maday and Rønquist [22, 24]. The investigations in [33, 34, 35, 36] mainly focus on the 1-step BDF (i.e. the backward Euler method) for solving the underlying evolutionary PDEs, which results in an exact block lower triangular Toeplitz (BLTT) all-at-once system. On the other hand, when the BDF () method is used to discretize the evolutionary PDEs, the complete BLTT all-at-once systems cannot be obtained as implicit schemes based on the BDF () for evolutionary PDEs are not selfstarting [13, 39, 40, 37, 41]. For example, when we establish the fully discretized scheme based on popular BDF2 for evolutionary PDEs, we often need to use the backward Euler method to compute the solution at the first time level [42, 43, 44, 10].

In this study, we particularly consider the second-order accurate implicit difference BDF2 scheme for solving the one- and two-dimensional Riesz fractional diffusion equations (RFDEs). Although the Crank-Nicolson (C-N) method [8] is a very popular solution option for solving such RFDEs, it is still only -stable rather than -stable [40, 45]. By contrast, the BDF2 scheme with stiff decay can be more “stable” and slightly cheaper because it is always unconditionally stable and the numerical solution is often guaranteed to be positive and physically more reliable near initial time for the numerical solutions of evolutionary PDEs with either integral or fractional order spatial derivatives; see [10, 37, 42, 46] for details. After the spatial discretization of Riesz fractional derivative(s), we reformulate the BDF2 scheme for the semi-discretized system of ODEs into an all-at-once system, where its coefficient matrix is a BLTT matrix with a low-rank perturbation. Then, we tightly estimate the condition number of the all-at-once systems and adapt the generalized BC (also including the standard BC) preconditioner for such an all-at-once system. Meanwhile, the invertibility of the generalized BC preconditioner is discussed apart from the work in [33, 35], and the eigenvalue distributions of preconditioned matrices dictating the convergence rate of Krylov subspace methods is preliminarily investigated. From these discussions, we derive clear arguments explaining the better performance of the generalized BC preconditioner against the BC preconditioner, especially for very small diffusion coefficient.

The practical implementation of the generalized BC preconditioner requires to solve a sequence of dense complex-shifted linear systems with real symmetric negative definite (block) Toeplitz coefficient matrices, which arise from the numerical discretization of Riesz fractional derivatives. Because solving such Toeplitz systems is often very time-consuming, classical circulant preconditioners have been adapted to accelerate the convergence of iterative solutions, especially for level-1 Toeplitz systems. However, circulant preconditioners are often less efficient to extend for solving high-dimensional model problems (i.e., block Toeplitz discretized systems) [28, 48]. In this work, by approximating the real symmetric (block) Toeplitz matrix by the (block) -matrix [47, 48], that can be efficiently diagonalized using the discrete sine transforms (DSTs), we present an efficient implementation for the generalized BC preconditioner that does avoid any dense matrix storage and only needs to solve the first half of the sequence of complex-shifted systems. Estimates of the computational cost and of the memory requirements of the associated generalized BC preconditioner are given. Our numerical experiments suggest that the BDF2 all-at-once system utilized the preconditioned Krylov subspace solvers can be a competitive solution method for RFDEs.

The rest of this paper is organized as follows. In the next section, the all-at-once linear system derived from the BDF2 scheme for solving the RFDEs is presented. Meanwhile, the invertibility of the pertinent all-at-once system is proved and its condition number is estimated. In Section 3, the generalized BC preconditioner is adapted and discussed, both the properties and the efficient implementation of the preconditioned system are analyzed. In Section 4, numerical results are reported to support our findings and the effectiveness of the proposed preconditioner. Finally, the paper closes with conclusions in Section 5.

2 The all-at-once system of Riesz fractional diffusion equations

In this section, we present the development of a numerical scheme for initial-boundary problem of Riesz fractional diffusion equations that preserves the positivity of the solutions. Then, in the next section, we discuss its efficient parallel implementation.

2.1 The all-at-once discretization of Riesz fractional diffusion equation

The governing Riesz fractional diffusion equations 111Here it is worth noting that if the Riesz fractional derivative is replaced with the fractional Laplacian, we can just follow the work in [50] for the spatial discretization of one- or two-dimensional model problem and our proposed numerical techniques in the next sections are easy and simple to be adapted for such an extension. of the anomalous diffusion process can be written as

| (2.1) |

where may represent, for example, a solute concentration, and constant the diffusion coefficient. This equations is a superdiffusive model largely used in fluid flow analysis, financial modelling and other applications. The Riesz fractional derivative is defined by [51]

| (2.2) |

in which and are the left and right Riemann-Liouville fractional derivatives of order given, respectively, by [5]

and

As , it notes that Eq. (2.1) degenerates into the classical diffusion equation [51].

Next, we focus on the numerical solution of Eq. (2.1). We consider a rectangle discretized on the mesh , where , and . We denote by any grid function.

A considerable amount of work has been devoted in the past years to the development of fast methods for the approximation of the Riesz and Riemann-Liouville (R-L) fractional derivatives, such as the first-order accurate shifted Grünwald approximation [3, 51] and the second-order accurate weighted-shifted Grünwald difference (WSGD) approximation [9, 52]. Due to the relationship between the two kinds of fractional derivatives, all the numerical schemes proposed for the R-L fractional derivatives can be easily adapted to approximate the Riesz fractional derivative. Although the solution approach described in this work can accomodate any spatial discretized method, we choose the so-called fractional centred difference formula [8] of the Riesz fractional derivatives for clarity.

For any function , we denote

| (2.3) |

where the -dependent weight coefficient is defined as

| (2.4) |

As noted in [8], and the fractional centred difference formula exists for any . Some properties of the coefficient and the operator are presented in the following lemmas.

Lemma 2.2.

At this stage, let be a solution to the problem (2.1) and consider Eq. (2.1) at the set of grid points with . The first-order time derivative at the point is approximated by the second-order backward difference formula, i.e.,

| (2.6) |

then we define and to obtain

| (2.7) |

where are small and satisfy the inequality

Note that we cannot omit the small term in the derivation of a two-level difference scheme that is not selfstarting from Eq. (2.7) due to the unknown information of . One of the most popular strategies to compute the first time step solution is to use a first-order backward Euler scheme (which is only used once). This yields the following implicit difference scheme [37, 10] for Eq. (2.1):

| (2.8) |

where

In order to implement the proposed scheme, here we define and ; then can be written into the matrix-vector product form , where

| (2.9) |

It is easy to prove that is a symmetric positive definite (SPD) Toeplitz matrix (see [8]). Therefore, it can be stored with only entries and the fast Fourier transforms (FFTs) can be applied to carry out the matrix-vector product in operations. The matrix-vector form of the 2-step BDF method with start-up backward Euler method for solving the model problem (2.1) can be formulated as follows:

| (2.10a) | ||||

| (2.10b) | ||||

We refer to matrix as the Jacobian matrix. In particular, we note that the numerical scheme (2.10) has been proved to be unconditionally stable and second-order convergent in the discrete norm [10]. Note that it is computationally more efficient than the C-N scheme presented in [8] as it requires one less matrix-vector multiplication per time step.

Instead of computing the solution of (2.10) step-by-step, we try to get an all-at-once approximation by solving the following linear system:

| (2.11) |

where and are two identity matrices of order and , respectively. We denote the above linear system as follows:

| (2.12) |

where with

| (2.13) |

and it is clear that is invertible, because its all diagonal blocks (i.e, either or ) are invertible [33, 34].

2.2 Properties of the all-at-once system

In this subsection, we investigate the properties of the discrete all-at-once formulation (2.11). This will guide us to discuss the design of an efficient solver for such a large linear system.

Lemma 2.3.

For the matrix in (2.13), we have the following estimates,

-

1)

;

-

2)

.

Proof. Consider the following matrix splitting and then perform the matrix factorization,

| (2.14) |

where the vector . According to the Sherman-Morrison formula [53], we can write

| (2.15) |

On the other hand, we can compute the inverse of as follows,

| (2.16) |

Therefore, we know that

| (2.17) |

and

| (2.18) |

The explicit expression of has the following form:

| (2.19) |

Since is a lower triangular Toeplitz matrix, is the absolute sum of elements of its last row, i.e.,

| (2.20) |

Analogously, is the absolute sum of elements of its first column, i.e.,

| (2.21) |

Therefore, the above estimates are proved.

Theorem 2.1.

Suppose that . Then, the following bounds hold for the critical singular values of in (2.11):

| (2.22) |

so that .

Proof. Since , we may claim that for any vector . In particular, using the properties of the Kronecker product, we may estimate . The latter is computed using the spectral norm of , which can be bounded by the following inequality:

| (2.23) |

According to Lemma 2.3, we have

| (2.24) |

which proves the second bound.

The first estimate is derived similarly. By applying the triangle inequality, we can write

| (2.25) |

whereas

| (2.26) |

so the spectral norms are not greater than 4. Finally, the proof is completed by recalling that .

The condition number can be expected to vary linearly with the main properties of the system, such as number of time steps, length of time interval, and norm of the Jacobian matrix [39, 40, 54]. More refined estimates could be provided by taking into account particular properties of . In Theorem 2.1, the time interval may not necessarily be equal to the whole observation range of an application. The global time scheme (2.11) could be applied by splitting the desired interval into a sequence of subintervals for “large” time steps of size each, solving by (2.11) for , extracting the last snapshot and restarting the method using as the initial state for the next interval. The optimal value of should provide the fastest computation [39]. Another way to accelerate the solution is to reduce the condition number of the linear system by preconditioning. This standard computational aspect will be considered in the next section.

3 Parallel-in-time (PinT) preconditioners

According to Theorem 2.1, when an iterative method, namely a Krylov subspace method, is used for solving the all-at-once system (2.11), it can converge very slowly or even stagnate. Therefore, in this section we look at preconditioners that can be efficiently implemented in the PinT framework.

3.1 The structuring-circulant preconditioners

Since the matrix defined in (2.13) can be viewed as the sum of a Toeplitz matrix and a rank-1 matrix, it is natural to define our first structuring-circulant preconditioner as

| (3.1) |

where

| (3.2) |

is a -circulant matrix of Strang type that can be diagonalized as

| (3.3) |

with

| (3.4a) | |||

| where , ‘’ denotes the conjugate transpose of a matrix, is the first column of , and | |||

| (3.4b) | |||

The matrix in (3.4b) is the discrete Fourier matrix, and is unitary. In addition, it is worth noting that if we choose very small, then the condition number of increases quickly and therefore large roundoff errors may be introduced in our proposed method; see [36, 19] for a discussion of these issues. Using the property of the Kronecker product, we can factorize as

| (3.5) |

and this implies that we can compute via the following three steps:

where (with ) and is the -th eigenvalue of . The first and third steps only involve matrix-vector multiplications with subvectors, and these can be computed simultaneously by the FFTs222It notes that there are also parallel FFTs available at http://www.fftw.org/parallel/parallel-fftw.html. in CPUs [54]. The major computational cost is spent in the second step, however this step is naturally parallel for all the time steps.

Next, we study the invertibility of the matrix . According to Eq. (2.13) and because matrix is negative definite, the following result assures the invertibility of 333It is unnecessary to keep that , which is introduced in [35, Remark 4]..

Proposition 3.1.

For and , it holds that , where the equality is true if and only if .

Proof. Set , then , we have

| (3.6) |

where the penultimate inequality holds due to . Moreover, if , then it should hold that , i.e., and , which completes the proof.

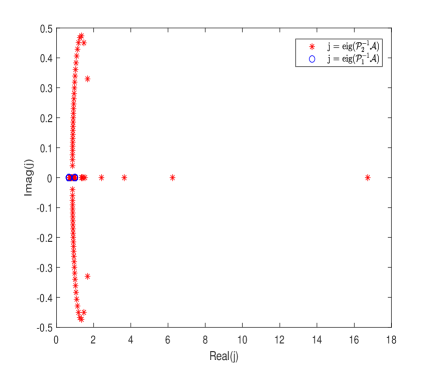

In practice, we often choose [35]; then it holds that according to Proposition 3.1. In Fig. 1, we plot the complex quantities on the complex plane. We see that for , it holds and consequently all the linear systems involved at Step-(b) are positive definite, thus not difficult to solve [31]. As shown in [36], a multigrid method using Richardson iterations as a smoother with an optimized choice of the damping parameter is very effective. By the way, a detailed convergence analysis with multigrid precondioning in the context of the algebra considered in this paper is presented in [38].

In addition, it is not hard to derive the following result.

Theorem 3.1.

Let and , where is an identity matrix of order , then it follows that .

Proof. We consider that

| (3.7) |

where , which completes the proof.

Theorem 3.1 implies that the generalized minimal residual (GMRES) method by Saad and Schultz [56] can compute the exact solution of the preconditioned system (if it is diagonalizable) in at most iterations [56, 23, 33]. Although it should be noted that such a convergence behavior of the preconditioned GMRES is not sharp when is not small, the result can give useful clues on the effectiveness of the preconditioner to approximate the all-at-once matrix . Moreover, in Section 4 we will show numerically that most of the eigenvalues of the preconditioned matrix actually cluster at point 1 of the spectrum, supporting the theoretical findings of this section on the good potential of the proposed preconditioner to accelerate the iterative solution of .

Remark 3.1.

Remark 3.2.

We remark that the invertibility of (or ) depends completely on the matrix . However, if we choose , the real parts of will be very close to zero, cf. Proposition 3.1. Meanwhile, if the diffusion coefficient is very small, the eigenvalues of will be increasingly close to zero. This fact will make the matrices potentially very ill-conditioned (even singular444If the model problem (2.1) incorporates Neumann boundary condition and we set , the matrices will be singular because of the singular matrix .). Under this circumstance, the BC preconditioner should be a bad preconditioner (see [35] and Section 4) while the preconditioner with should be preferred in a practical implementation;

Remark 3.3.

If one wants to improve the proposed preconditioner further, then the polynomial preconditioner that has potential to reduce communication costs in Krylov subspace solvers [56] can be derived:

| (3.8) |

where and assume that , then the -step polynomial preconditioner will be available and inherently parallel. Moreover, such a preconditioner can be easily adapted for the all-at-once systems arising from the given non-uniform temporal discretizations; refer to [34] for a short discussion.

3.2 Efficient implementation of the PinT preconditioner

In this subsection, we discuss on how to implement the Krylov subspace method more efficiently for solving the left (or right) preconditioned system (or ), . We recall that the kernel operations of a Krylov subspace algorithm are the matrix-vector products and for some given vector . First, we present a fast implementation for computing the second matrix-vector product.

| (3.9) |

where the operation and denote the vectorization of the matrix obtained by stacking the columns of into a single column vector. The first term can be calculated directly in storage and operations due to the sparse matrix . Owing to the Toeplitz structure of matrix , the second term can be evaluated in operations and storage. This means that the computation of requires the same operations and storage of . Clearly, the algorithm complexity of can be further alleviated by a parallel implementation.

Next, we focus on the fast computation of for a given vector . According to Step-(a)–Step-(c) described in Section 3.1, the first and third steps of the implementation of require operations and storage. If the matrix can be diagonalized (or approximated) via fast DSTs, Step-(b) can be directly solved with operations and storage, except for the different complex shifts.

Similar to [35, pp. 10-11], it is worth noting that only half of shifted linear systems in Step-(b) need to be solved. From Step-(a), we know that the right hand sides in Step-(b) can be expressed as

| (3.10) |

We recall the expression of coefficient matrices in Step-(b):

| (3.11) |

Let “” denote conjugate of a matrix or a vector. Then,

| (3.12) |

That means the unknowns in Step-(b) hold equalities: for . Therefore, only the first multi-shifted linear systems in Step-(b) need to be solved, thus the number of core processors required in the practical parallel implementation is significantly reduced.

Finally, we discuss the efficient solution of the sequence of shifted linear systems in Step-(b). Since is a real negative definite Toeplitz matrix, it can be approximated efficiently by a -matrix [47] that is a real symmetric matrix and can be diagonalized as follows:

| (3.13) |

From the relations and , we have

| (3.14) |

where the vector is the first column of . The set of shifted linear systems at Step-(b) is reformulated as the following sequence:

| (3.15) |

that can be solved by fast DSTs without storing any dense matrix. In addition, since the DST only involves real operations, so the above preconditioner matrix diagonalization does not affect Eqs. (3.10)–(3.12) at all. Overall, the fast computation of using Eq. (3.15) requires operations and memory units.

Remark 3.4.

It is worthwhile noting that such an implementation is also suitable for solving the all-at-once system that arises from the spatial discretizations using the compact finite difference, finite element and spectral methods by only substituting in Eq. (3.15) the identity matrix with the mass matrix. Fortunately, for one-dimensional problems, the mass matrix is a SPD Toeplitz tridiagonal matrix [25, 30, 13], which can be naturally diagonalized by fast DSTs. For high-dimensional models, the mass matrix will be a SPD Toeplitz block tridiagonal matrix in the Kronecker product form, thus it can be still diagonalized via fast DSTs; refer, e.g., to [11];

Remark 3.5.

Finally, it is essential to mention that although the algorithm and theoretical analyses are presented for one-dimensional model (2.1), they can be naturally extended for two-dimensional model (refer to the next section for a discussion) because the above algorithm and theoretical analyses are mainly based on the temporal discretization.

4 Numerical experiments

In this section, we present the performance of the proposed -circulant-based preconditioners for solving some examples of one- and two-dimensional model problems (2.1). In all our numerical experiments reported in this section, following the guidelines given in [35] we set for the preconditioners and . All our experiments are performed in MATLAB R2017a on a Gigabyte workstation equipped with Intel(R) Core(TM) i9-7900X CPU @3.3GHz, 32GB RAM running the Windows 10 Education operating system (64bit version) using double precision floating point arithmetic (with machine epsilon equal to ). The adaptive Simpler GMRES (shortly referred to as Ad-SGMRES) method [55] is employed to solve the right-preconditioned systems in Example 1, while the BiCGSTAB method [56, pp. 244-247] method is applied to the left-preconditioned systems in Example 2 using the built-in function available in MATLAB555In this case, since Ad-SGMRES still requires large amounts of storage due to the orthogonalization process, we have also used the BiCGSTAB method as an alternative iterative method for solving non-symmetric systems.. The tolerance for the stopping criterion in both algorithms is set as , where is the residual vector at th Ad-SGMRES or BiCGSTAB iteration. The iterations are always started from the zero vector. All timings shown in the tables are obtained by averaging over 20 runs666The code in MATLAB will be available at https://github.com/Hsien-Ming-Ku/Group-of-FDEs..

In the tables, the quantity ‘Iter’ represents the iteration number of Ad-SGMRES or BiCGSTAB, ‘DoF’ is the number of degrees of freedom, or unknowns, and ‘CPU’ is the computational time expressed in seconds. The 2-norm of the true relative residual (called in the tables TRR) is defined as , and the numerical error (Err) between the approximate and the exact solutions at the final time level reads , where is the approximate solution when the preconditioned iterative solvers terminate and is the exact solution on the mesh. These notations are adopted throughout this section.

Example 1. The first example is a Riesz fractional diffusion equation (2.1) with coefficients , , and . The source term is given by

The exact solution is known and it reads as . The results of our numerical experiments with the Ad-SGMRES method preconditioned by and for solving the all-at-once discretized systems (2.12) are reported in Tables 1–3.

| DoF | Iter | CPU | TRR | Err | Iter | CPU | TRR | Err | ||

|---|---|---|---|---|---|---|---|---|---|---|

| 1/128 | 8,128 | 7 | 0.033 | -9.794 | 9.7599e-5 | 19 | 0.085 | -9.412 | 9.7599e-5 | |

| 1/256 | 16,320 | 7 | 0.054 | -9.257 | 9.4838e-5 | 19 | 0.117 | -9.347 | 9.4838e-5 | |

| 1/512 | 32,704 | 8 | 0.116 | -10.017 | 9.4147e-5 | 19 | 0.247 | -9.535 | 9.4147e-5 | |

| 1/1024 | 65,472 | 8 | 0.156 | -9.537 | 9.3974e-5 | 19 | 0.359 | -9.163 | 9.3975e-5 | |

| 1/128 | 32,512 | 7 | 0.107 | -10.366 | 9.5721e-6 | 19 | 0.258 | -9.378 | 9.5722e-6 | |

| 1/256 | 65,280 | 7 | 0.158 | -9.580 | 6.8110e-6 | 19 | 0.385 | -9.318 | 6.8111e-6 | |

| 1/512 | 130,816 | 7 | 0.445 | -9.020 | 6.1205e-6 | 19 | 1.236 | -9.248 | 6.1208e-6 | |

| 1/1024 | 261,888 | 8 | 0.787 | -9.763 | 5.9481e-6 | 19 | 1.958 | -9.156 | 5.9482e-6 | |

| 1/128 | 130,048 | 6 | 0.323 | -9.020 | 5.0121e-6 | 19 | 0.964 | -9.368 | 5.0138e-6 | |

| 1/256 | 261,120 | 7 | 0.756 | -9.895 | 1.2888e-6 | 19 | 2.123 | -9.309 | 1.2890e-6 | |

| 1/512 | 523,264 | 7 | 1.715 | -9.326 | 5.9821e-7 | 19 | 4.749 | -9.242 | 5.9870e-7 | |

| 1/1024 | 1,047,552 | 8 | 3.245 | -10.056 | 4.2607e-7 | 19 | 8.103 | -9.153 | 4.2613e-7 | |

| DoF | Iter | CPU | TRR | Err | Iter | CPU | TRR | Err | ||

|---|---|---|---|---|---|---|---|---|---|---|

| 1/128 | 8,128 | 8 | 0.038 | -9.892 | 1.0514e-4 | 15 | 0.085 | -9.044 | 1.0515e-4 | |

| 1/256 | 16,320 | 8 | 0.054 | -9.755 | 9.8789e-5 | 15 | 0.095 | -9.176 | 9.8789e-5 | |

| 1/512 | 32,704 | 8 | 0.112 | -9.697 | 9.7199e-5 | 15 | 0.198 | -9.513 | 9.7199e-5 | |

| 1/1024 | 65,472 | 8 | 0.157 | -9.349 | 9.6802e-5 | 16 | 0.308 | -9.563 | 9.6802e-5 | |

| 1/128 | 32,512 | 7 | 0.112 | -9.545 | 1.4536e-5 | 16 | 0.225 | -9.831 | 1.4536e-5 | |

| 1/256 | 65,280 | 7 | 0.158 | -9.103 | 8.1809e-6 | 15 | 0.319 | -9.323 | 8.1810e-6 | |

| 1/512 | 130,816 | 8 | 0.515 | -9.925 | 6.5922e-6 | 15 | 0.979 | -9.265 | 6.5922e-6 | |

| 1/1024 | 261,888 | 8 | 0.829 | -9.587 | 6.1950e-6 | 16 | 1.649 | -9.605 | 6.1950e-6 | |

| 1/128 | 130,048 | 7 | 0.392 | -9.982 | 1.3161e-5 | 15 | 0.827 | -9.008 | 1.3162e-5 | |

| 1/256 | 261,120 | 7 | 0.779 | -9.423 | 3.2696e-6 | 15 | 1.637 | -9.184 | 3.2692e-6 | |

| 1/512 | 523,264 | 7 | 1.744 | -9.049 | 9.0813e-7 | 15 | 3.769 | -9.381 | 9.0882e-7 | |

| 1/1024 | 1,047,552 | 8 | 3.221 | -9.878 | 5.1171e-7 | 16 | 6.658 | -9.631 | 5.1160e-7 | |

| DoF | Iter | CPU | TRR | Err | Iter | CPU | TRR | Err | ||

|---|---|---|---|---|---|---|---|---|---|---|

| 1/128 | 8,128 | 7 | 0.034 | -9.255 | 1.2052e-4 | 11 | 0.052 | -9.305 | 1.2052e-4 | |

| 1/256 | 16,320 | 7 | 0.047 | -9.101 | 1.0303e-4 | 11 | 0.070 | -9.195 | 1.0303e-4 | |

| 1/512 | 32,704 | 8 | 0.113 | -10.012 | 9.8653e-5 | 11 | 0.151 | -9.123 | 9.8653e-5 | |

| 1/1024 | 65,472 | 8 | 0.159 | -10.024 | 9.7559e-5 | 11 | 0.225 | -9.058 | 9.7559e-5 | |

| 1/128 | 32,512 | 7 | 0.112 | -9.831 | 3.8671e-5 | 11 | 0.153 | -9.408 | 3.8671e-5 | |

| 1/256 | 65,280 | 7 | 0.155 | -9.500 | 1.1924e-5 | 11 | 0.235 | -9.313 | 1.1924e-5 | |

| 1/512 | 130,816 | 7 | 0.456 | -9.287 | 7.5514e-6 | 11 | 0.690 | -9.255 | 7.5518e-6 | |

| 1/1024 | 261,888 | 7 | 0.696 | -9.261 | 6.4585e-6 | 11 | 1.093 | -9.212 | 6.4587e-6 | |

| 1/128 | 130,048 | 6 | 0.331 | -9.416 | 3.9387e-5 | 11 | 0.588 | -9.433 | 3.9387e-5 | |

| 1/256 | 261,120 | 6 | 0.666 | -9.216 | 9.8111e-6 | 11 | 1.187 | -9.368 | 9.8118e-6 | |

| 1/512 | 523,264 | 7 | 1.736 | -9.936 | 2.4178e-6 | 11 | 2.669 | -9.317 | 2.4178e-6 | |

| 1/1024 | 1,047,552 | 7 | 2.850 | -9.941 | 7.4549e-7 | 11 | 4.396 | -9.300 | 7.4557e-7 | |

According to Tables 1–3, we note that the used method with preconditioner converges much faster than with in terms of both CPU and Iter on this Example 1, with different values of ’s. In terms of accuracy (the values TRR and Err), the two preconditioned Ad-SGMRES methods are almost comparable. The results indicate that introducing the adaptive parameter indeed helps improve the performance of . Moreover, the -matrix approximation of the Jacobian matrix in (3.5) is numerically effective. The iteration number of Ad-SGMRES- varies only slightly when increases (or decreases), showing almost matrix-size independent convergence rate. Such favourable numerical scalability property of Ad-SGMRES- is completely in line with our theoretical analysis presented in Section 3.1, where the eigenvalues distribution of the preconditioned matrices (also partly shown in Fig. 2) is independent of the space-discretization matrix . On the other hand, if the diffusion coefficient becomes smaller than , the difference of performance between the preconditioners and will be more remarkable; refer to Remark 4, however we do not investigate these circumstance in the present study.

Example 2. (the 2D problem [52, 13]) Consider the following Riesz fractional diffusion equation

| (4.1) |

where , , , such that the source term is exactly defined as

with and . The exact solution is .

By a similar derivation to the technique described in Section 2.1, we can establish an implicit difference scheme for solving this model problem. For simplicity, we set ; then, it is easy to derive the Jacobian matrix in the following Kronecker product form

| (4.2) |

where the two SPD Toeplitz matrices are defined by

Then, it is not hard to know that is a SPD matrix, which has no impact on the algorithm and theoretical analyses described in Sections 2–3. The only difference is that the direct application of for Krylov subspace solvers must be prohibited, because it requires the huge computational cost and memory storage for solving a sequence of dense shifted linear systems in Step-(b).

In order to reduce the computational expense, we can follow Eq. (3.13) to approximate the Jacobian matrix in (4.2) by the following -matrix

| (4.3) |

where and [47, 48, 49]. Again, instead of solving the shifted linear systems in Step-(b), we solve the following sequence of shifted linear systems,

| (4.4) | |||

| (4.5) |

where , efficiently by fast discrete sine transforms avoiding the storage of any dense matrices. The numerical results reported in Tables 4–6 with illustrate the effectiveness and robustness of the PinT preconditioner for solving Eq. (4.5).

| DoF | Iter | CPU | TRR | Err | Iter | CPU | TRR | Err | ||

|---|---|---|---|---|---|---|---|---|---|---|

| 1/64 | 254,016 | 4.0 | 1.006 | -9.195 | 1.2627e-4 | 12.0 | 2.544 | -9.290 | 1.2628e-4 | |

| 1/128 | 1,032,256 | 4.5 | 5.325 | -10.066 | 8.0645e-5 | 12.0 | 12.312 | -9.132 | 8.0646e-5 | |

| 1/256 | 4,161,600 | 4.5 | 17.597 | -9.219 | 7.8998e-5 | 12.0 | 42.376 | -9.134 | 7.8999e-5 | |

| 1/512 | 16,711,744 | 5.0 | 115.94 | -9.814 | 7.8611e-5 | 12.5 | 262.03 | -9.151 | 7.8612e-5 | |

| 1/64 | 1,016,064 | 4.0 | 4.027 | -9.544 | 7.4633e-5 | 12.0 | 10.355 | -9.264 | 7.4633e-5 | |

| 1/128 | 4,129,024 | 4.0 | 19.419 | -9.134 | 2.1246e-5 | 12.0 | 49.827 | -9.102 | 2.1246e-5 | |

| 1/256 | 16,646,400 | 5.0 | 79.796 | -9.446 | 7.8953e-6 | 12.0 | 173.68 | -9.092 | 7.8954e-6 | |

| 1/512 | 66,846,976 | 4.5 | 426.03 | -9.174 | 5.0729e-6 | 12.5 | 1050.3 | -9.204 | 5.0732e-6 | |

| 1/64 | 4,064,256 | 4.0 | 16.246 | -10.084 | 7.1404e-5 | 12.0 | 41.638 | -9.244 | 7.1404e-5 | |

| 1/128 | 16,516,096 | 4.0 | 78.194 | -9.861 | 1.8016e-5 | 12.0 | 203.61 | -9.084 | 1.8017e-5 | |

| 1/256 | 66,585,600 | 4.0 | 265.24 | -9.567 | 4.6657e-6 | 12.0 | 753.81 | -9.072 | 4.6661e-6 | |

| 1/512 | 267,387,904 | 4.0 | 2520.7 | -9.157 | 1.3276e-6 | 12.5 | 6989.3 | -9.209 | 1.3282e-6 | |

| DoF | Iter | CPU | TRR | Err | Iter | CPU | TRR | Err | ||

|---|---|---|---|---|---|---|---|---|---|---|

| 1/64 | 254,016 | 4.0 | 1.004 | -9.297 | 1.5758e-4 | 11.0 | 2.487 | -9.255 | 1.5758e-4 | |

| 1/128 | 1,032,256 | 4.5 | 5.322 | -9.978 | 8.1963e-5 | 11.0 | 11.416 | -9.227 | 8.1963e-5 | |

| 1/256 | 4,161,600 | 5.0 | 19.756 | -10.122 | 7.9075e-5 | 11.5 | 41.569 | -9.379 | 7.9075e-5 | |

| 1/512 | 16,711,744 | 5.0 | 115.82 | -9.676 | 7.8490e-5 | 11.5 | 240.18 | -9.345 | 7.8490e-5 | |

| 1/64 | 1,016,064 | 4.0 | 4.058 | -9.585 | 1.0778e-4 | 11.0 | 9.745 | -9.127 | 1.0778e-4 | |

| 1/128 | 4,129,024 | 4.0 | 19.609 | -9.106 | 2.9441e-5 | 11.0 | 46.275 | -9.162 | 2.9441e-5 | |

| 1/256 | 16,646,400 | 4.5 | 73.342 | -9.454 | 9.8515e-6 | 11.5 | 168.30 | -9.259 | 9.8515e-6 | |

| 1/512 | 66,846,976 | 4.5 | 424.74 | -9.300 | 5.1188e-6 | 11.5 | 965.63 | -9.245 | 5.1183e-6 | |

| 1/64 | 4,064,256 | 4.0 | 16.343 | -10.060 | 1.0466e-4 | 11.0 | 39.182 | -9.100 | 1.0466e-4 | |

| 1/128 | 16,516,096 | 4.0 | 78.225 | -9.813 | 2.6328e-5 | 11.0 | 188.46 | -9.126 | 2.6328e-5 | |

| 1/256 | 66,585,600 | 4.0 | 265.75 | -9.582 | 6.7380e-6 | 11.5 | 733.89 | -9.256 | 6.7382e-6 | |

| 1/512 | 267,387,904 | 4.0 | 2517.9 | -9.282 | 1.8400e-6 | 11.5 | 6278.1 | -9.224 | 1.8401e-6 | |

| DoF | Iter | CPU | TRR | Err | Iter | CPU | TRR | Err | ||

|---|---|---|---|---|---|---|---|---|---|---|

| 1/64 | 254,016 | 4.0 | 1.047 | -9.492 | 2.3321e-4 | 11.5 | 2.513 | -10.177 | 2.3321e-4 | |

| 1/128 | 1,032,256 | 4.0 | 4.976 | -9.058 | 9.5250e-5 | 11.5 | 12.175 | -10.188 | 9.5251e-5 | |

| 1/256 | 4,161,600 | 4.5 | 17.826 | -9.887 | 7.9355e-5 | 11.5 | 41.627 | -10.208 | 7.9355e-5 | |

| 1/512 | 16,711,744 | 4.5 | 114.13 | -9.473 | 7.8240e-5 | 11.0 | 231.76 | -9.154 | 7.8240e-5 | |

| 1/64 | 1,016,064 | 4.0 | 4.035 | -10.062 | 1.8715e-4 | 11.0 | 9.673 | -9.339 | 1.8715e-4 | |

| 1/128 | 4,129,024 | 4.0 | 19.567 | -9.425 | 4.9102e-5 | 11.5 | 48.305 | -9.977 | 4.9102e-5 | |

| 1/256 | 16,646,400 | 4.0 | 66.497 | -9.150 | 1.4579e-5 | 11.0 | 161.38 | -9.064 | 1.4578e-5 | |

| 1/1024 | 66,846,976 | 4.0 | 380.78 | -9.011 | 5.9522e-6 | 11.0 | 921.53 | -9.115 | 5.9518e-6 | |

| 1/64 | 4,064,256 | 4.0 | 16.225 | -10.092 | 1.8428e-4 | 11.0 | 38.684 | -9.027 | 1.8428e-4 | |

| 1/128 | 16,516,096 | 4.0 | 78.158 | -9.905 | 4.6224e-5 | 11.5 | 198.25 | -10.077 | 4.6224e-5 | |

| 1/256 | 66,585,600 | 4.0 | 266.02 | -9.760 | 1.1700e-5 | 11.5 | 734.78 | -10.020 | 1.1701e-5 | |

| 1/512 | 267,387,904 | 4.0 | 2519.5 | -9.551 | 3.0690e-6 | 11.0 | 6117.6 | -9.100 | 3.0691e-6 | |

Similar to Example 1, Tables 4–6 show that the preconditioner converges much faster in terms of both CPU and Iter than on this example, with different values of ’s. The accuracy of two preconditioned BiCGSTAB methods is almost comparable with respect to TRR and Err. Once again, the results indicate that introducing the adaptive parameter indeed helps improve the performance of ; the faster convergence rate of is computational attractive especially when the diffusion coefficients become smaller than – cf. Remark 4. The -matrix approximation to the Jacobian matrix in (3.5) remains very effective for this two-dimensional model problem (4.1). For more details, one can attempt to play it by our accessible MATLAB codes. The iteration number of BiCGSTAB- varies only slightly when increases (or decreases), showing also for this problem almost matrix-size independent convergence rate. Such favourable numerical scalability property confirms our theoretical analysis since in Section 3.1 the eigenvalue distribution of the preconditioned matrices is independent of the space-discretization matrix .

5 Conclusions

In this note, we revisit the all-at-once linear system arising from the BDF temporal discretization for evolutionary PDEs. In particular, we present the BDF2 scheme for the RFDEs model as our case study, where the resultant all-at-once system is a BLTT linear system with a low-rank matrix perturbation. The conditioning of the all-at-once coefficient matrix is studied and tight bounds are provided. Then, we adapt the generalized BC preconditioner for such all-at-once systems, proving the invertibility of the preconditioner matrix unlike in previous studies. Our analysis demonstrates the superiority of the generalized BC preconditioner to the BC preconditioner. Moreover, the spectral properties of the preconditioned system and the convergence behavior of the preconditioned Krylov subspace solver have been investigated. By the -matrix approximation of the dense Jacobian matrix , we derive a memory-effective implementation of the generalized BC preconditioner for solving the one- and two-dimensional RFDEs model problems. Numerical results have been reported to show the effectiveness of the generalized BC preconditioner.

On the other hand, according to the analysis and implementation of the generalized BC preconditioner, the BDF2 scheme implemented via PinT preconditioned Krylov solvers can be easily extended to other spatial discretizations schemes for RFDEs (with other boundary conditions). Furthermore, our study may inspire the development of new parallel numerical methods preserving the positivity for certain evolutionary PDEs. As an outlook for the future, the extension of the generalized BC preconditioner and of its parallel implementation for solving nonlinear RFDEs [57, 32] (even in cases when non-uniform temporal steps are chosen in combination with the BDF2 scheme; refer to [34, 18] for a short discussion) remains an interesting topic of further research.

Acknowledgments

The authors would like to thank Dr. Xin Liang and Prof. Shu-Lin Wu for their insightful suggestions and encouragements. This research is supported by NSFC (11801463 and 61876203) and the Applied Basic Research Project of Sichuan Province (2020YJ0007). Meanwhile, the first author is grateful to Prof. Hai-Wei Sun for his helpful discussions during the visiting to University of Macau.

References

- [1] S.Ş. Bayın, Definition of the Riesz derivative and its application to space fractional quantum mechanics, J. Math. Phys., 57(12) (2016), Article No. 123501, 12 pages. DOI: 10.1063/1.4968819.

- [2] R.L. Magin, Fractional Calculus in Bioengineering, Begell House Publishers, Danbury, CT (2006).

- [3] M.M. Meerschaert, C. Tadjeran, Finite difference approximations for two-sided space-fractional partial differential equations, Appl. Numer. Math., 56(1) (2006), pp. 80-90.

- [4] E. Scalas, R. Gorenflo, F. Mainardi Fractional calculus and continuous-time finance, Physica A, 284(1-4) (2000), pp. 376-384.

- [5] A.A. Kilbas, H.M. Srivastava, J.J. Trujillo, Theory and applications of fractional differential equations, Elsevier, Amsterdam, Netherlands (2006).

- [6] R. Metzler, J. Klafter, The random walk’s guide to anomalous diffusion: a fractional dynamics approach, Phys. Rep., 339(1) (2000), pp. 1-77.

- [7] M. Chen, Y. Wang, X. Cheng, W. Deng, Second-order LOD multigrid method for multidimensional Riesz fractional diffusion equation, BIT, 54(3) (2014), pp. 623-647.

- [8] C. Çelik, M. Duman, Crank-Nicolson method for the fractional diffusion equation with the Riesz fractional derivative, J. Comput. Phys., 231(4) (2012), pp. 1743-1750.

- [9] W.Y. Tian, H. Zhou, W. Deng, A class of second order difference approximations for solving space fractional diffusion equations, Math. Comp., 84(294) (2015), pp. 1703-1727.

- [10] H.-L. Liao, P. Lyu, S. Vong, Second-order BDF time approximation for Riesz space-fractional diffusion equations, Int. J. Comput. Math., 95(1) (2018), pp. 144-158.

- [11] D. Hu, X. Cao, A fourth-order compact ADI scheme for two-dimensional Riesz space fractional nonlinear reaction-diffusion equation, Int. J. Comput. Math., 97(9) (2020), pp. 1928-1948. DOI: 10.1080/00207160.2019.1671587.

- [12] X. Yue, S. Shu, X. Xu, W. Bu, K. Pan, Parallel-in-time multigrid for space-time finite element approximations of two-dimensional space-fractional diffusion equations, Comput. Math. Appl., 78(11) (2019), pp. 3471-3484.

- [13] Y. Xu, Y. Zhang, J. Zhao, Backward difference formulae and spectral Galerkin methods for the Riesz space fractional diffusion equation, Math. Comput. Simul., 166 (2019), pp. 494-507.

- [14] Y. Xu, Y. Zhang, J. Zhao, General linear and spectral Galerkin methods for the Riesz space fractional diffusion equation, Appl. Math. Comput., 364 (2020), Article No. 124664, 12 pages. DOI: 10.1016/j.amc.2019.124664.

- [15] J. Zhao, Y. Zhang, Y. Xu, Implicit Runge-Kutta and spectral Galerkin methods for Riesz space fractional/distributed-order diffusion equation, Comput. Appl. Math., 39(2) (2020), Article No. 47, 27 pages. DOI: 10.1007/s40314-020-1102-3.

- [16] H.-K. Pang, H.-W. Sun, Fast numerical contour integral method for fractional diffusion equations, J. Sci. Comput., 66(1) (2016), pp. 41-66.

- [17] M.J. Gander, M. Neumüller, Analysis of a new space-time parallel multigrid algorithm for parabolic problems, SIAM J. Sci. Comput., 38(4) (2016), pp. A2173-A2208.

- [18] R.D. Falgout, M. Lecouvez, C.S. Woodward, A parallel-in-time algorithm for variable step multistep methods, J. Comput. Sci., 37 (2019), Article No. 101029, 12 pages. DOI: 10.1016/j.jocs.2019.101029.

- [19] S.-L. Wu, T. Zhou, Acceleration of the MGRiT algorithm via the diagonalization technique, SIAM J. Sci. Comput., 41(5) (2019), pp. A3421-A3448.

- [20] M.E. Farquhar, T.J. Moroney, Q. Yang, I.W. Turner, GPU accelerated algorithms for computing matrix function vector products with applications to exponential integrators and fractional diffusion, SIAM J. Sci. Comput., 38(3) (2016), pp. C127-C149.

- [21] S.-L. Wu, T. Zhou, Fast parareal iterations for fractional diffusion equations, J. Comput. Phys., 329 (2017), pp. 210-226.

- [22] Y. Maday, E.M. Rønquist, Parallelization in time through tensor-product space-time solvers, C. R. Math., 346(1-2) (2008), pp. 113-118.

- [23] X.-M. Gu, T.-Z. Huang, X.-L. Zhao, H.-B. Li, L. Li, Strang-type preconditioners for solving fractional diffusion equations by boundary value methods, J. Comput. Appl. Math., 277 (2015), pp. 73-86.

- [24] M.J. Gander, 50 Years of Time Parallel Time Integration, in Multiple Shooting and Time Domain Decomposition Methods (T. Carraro, M. Geiger, S. Körkel, et al., eds.), Contributions in Mathematical and Computational Sciences, vol 9. Springer, Cham, Switzerland (2017), pp. 69-113. DOI: 10.1007/978-3-319-23321-5_3.

- [25] S.-L. Lei, Y.-C. Huang, Fast algorithms for high-order numerical methods for space-fractional diffusion equations, Int. J. Comput. Math., 94(5) (2017), pp. 1062-1078.

- [26] T. Breiten, V. Simoncini, M. Stoll, Low-rank solvers for fractional differential equations, Electron. Trans. Numer. Anal., 45 (2016), pp. 107-132.

- [27] A.T. Weaver, S. Gürol, J. Tshimanga, M. Chrust, A. Piacentini, “Time”-parallel diffusion-based correlation operators, Q. J. R. Meteorol. Soc., 144(716) (2018), pp. 2067-2088.

- [28] D. Bertaccini, F. Durastante, Block structured preconditioners in tensor form for the all-at-once solution of a finite volume fractional diffusion equation, Appl. Math. Lett., 95 (2019), pp. 92-97.

- [29] I. Podlubny, A. Chechkin, T. Skovranek, Y.Q. Chen, B.M.V. Jara, Matrix approach to discrete fractional calculus II: Partial fractional differential equations, J. Comput. Phys., 228(8) (2009), pp. 3137-3153.

- [30] M. Ran, C. Zhang, A high-order accuracy method for solving the fractional diffusion equations, J. Comp. Math., 38(2) (2020), pp. 239-253.

- [31] L. Banjai, D. Peterseim, Parallel multistep methods for linear evolution problems, IMA J. Numer. Anal., 32(3) (2012), pp. 1217-1240.

- [32] Y.-L. Zhao, P.-Y. Zhu, X.-M. Gu, X.-L. Zhao, H.-Y. Jian, A preconditioning technique for all-at-once system from the nonlinear tempered fractional diffusion equation, J. Sci. Comput., 83(1) (2020), Article No. 10, 27 pages. DOI: 10.1007/s10915-020-01193-1.

- [33] E. McDonald, J. Pestana, A. Wathen, Preconditioning and iterative solution of all-at-once systems for evolutionary partial differential equations, SIAM J. Sci. Comput., 40(2) (2018), pp. A1012-A1033.

- [34] A. Goddard, A. Wathen, A note on parallel preconditioning for all-at-once evolutionary PDEs, Electron. Trans. Numer. Anal., 51 (2019), pp. 135-150.

- [35] X.-L. Lin, M. Ng, An all-at-once preconditioner for evolutionary partial differential equations, arXiv preprint, arXiv:2002.01108, submitted to SIAM J. Sci. Comput., 4 Feb. 2020, 17 pages.

- [36] S.-L. Wu, H. Zhang, T. Zhou, Solving time-periodic fractional diffusion equations via diagonalization technique and multigrid, Numer. Linear Algebra Appl., 25(5) (2018), Article No. e2178, 24 pages. DOI: 10.1002/nla.2178.

- [37] O. Bokanowski, K. Debrabant, Backward differentiation formula finite difference schemes for diffusion equations with an obstacle term, IMA J. Numer. Anal., to appear, 6 Jul. 2020, 29 pages. DOI: 10.1093/imanum/draa014.

- [38] M. Donatelli, M. Mazza, S. Serra-Capizzano, Spectral analysis and structure preserving preconditioners for fractional diffusion equations, J. Comput. Phys., 307 (2016), pp. 262-279.

- [39] K. Burrage, Parallel and Sequential Methods for Ordinary Differential Equations, Oxford University Press, New York, NY (1995).

- [40] U.M. Ascher, L.R. Petzold, Computer Methods for Ordinary Differential Equations and Differential-Algebraic Equations, SIAM, Philadelphia, PA (1998).

- [41] C. Lubich, D. Mansour, C. Venkataraman, Backward difference time discretization of parabolic differential equations on evolving surfaces, IMA J. Numer. Anal., 33(4) (2013), pp. 1365-1385.

- [42] E. Emmrich, Error of the two-step BDF for the incompressible Navier-Stokes problem, ESAIM-Math. Model. Numer. Anal., 38(5) (2004), 757-764.

- [43] V. Kučera, M. Vlasák, A priori diffusion-uniform error estimates for nonlinear singularly perturbed problems: BDF2, midpoint and time DG, ESAIM-Math. Model. Numer. Anal., 51(2) (2017), pp. 537-563.

- [44] H. Nishikawa, On large start-up error of BDF2, J. Comput. Phys., 392 (2019), pp. 456-461.

- [45] Q. Zhang, Y. Ren, X. Lin, Y. Xu, Uniform convergence of compact and BDF methods for the space fractional semilinear delay reaction-diffusion equations. Appl. Math. Comput., 358 (2019), pp. 91-110.

- [46] C. Li, M.-M. Li, X. Sun, Finite difference/Galerkin finite element methods for a fractional heat conduction-transfer equation, Math. Meth. Appl. Sci., to appear, 30 Oct. 2019, 20 pages. DOI: 10.1002/mma.5984.

- [47] D. Bini, F. Di Benedetto, A new preconditioner for the parallel solution of positive definite Toeplitz systems, Proceedings of the Second Annual ACM Symposium on Parallel Algorithms and Architectures (SPAA ’90), ACM, New York, NY (1990), pp. 220-223. DOI: 10.1145/97444.97688.

- [48] F. Di Benedetto, Preconditioning of block Toeplitz matrices by sine transforms, SIAM J. Sci. Comput., 18(2) (1997), pp. 499-515.

- [49] S. Serra, Superlinear PCG methods for symmetric Toeplitz systems, Math. Comp., 68(226) (1999), pp. 793-803.

- [50] Z. Hao, Z. Zhang, R. Du, Fractional centered difference scheme for high-dimensional integral fractional Laplacian, J. Comput. Phys., 424 (2021), Article No. 109851, 17 pages. DOI: 10.1016/j.jcp.2020.109851.

- [51] Q. Yang, F. Liu, I. Turner, Numerical methods for fractional partial differential equations with Riesz space fractional derivatives, Appl. Math. Model., 34(1) (2010), pp. 200-218.

- [52] Z.-P. Hao, Z.-Z. Sun, W.-R. Cao, A fourth-order approximation of fractional derivatives with its applications, J. Comput. Phys., 281 (2015), pp. 787-805.

- [53] W.W. Hager, Updating the inverse of a matrix, SIAM Rev. 31(2) (1989), pp. 221-239.

- [54] X.-M. Gu, S.-L. Wu, A parallel-in-time iterative algorithm for Volterra partial integro-differential problems with weakly singular kernel, J. Comput. Phys., 417 (2020), Article No. 109576, 17 pages. DOI: 10.1016/j.jcp.2020.109576.

- [55] P. Jiránek, M. Rozložník, Adaptive version of Simpler GMRES, Numer. Algorithms, 53(1) (2010), pp. 93-112.

- [56] Y. Saad, Iterative Methods for Sparse Linear Systems, 2nd ed., SIAM, Philadelphia, PA (2003).

- [57] M.J. Gander, L. Halpern, Time parallelization for nonlinear problems based on diagonalization, in Domain Decomposition Methods in Science and Engineering XXIII (C.-O. Lee, X.-C. Cai, D.E. Keyes, et al., eds.), Lecture Notes in Computational Science and Engineering, vol. 116, Springer, Cham, Switzerland (2017), pp. 163-170. DOI: 10.1007/978-3-319-52389-7_15.