A Non-Stationary Bandit-Learning Approach to Energy-Efficient Femto-Caching with Rateless-Coded Transmission

Abstract

The ever-increasing demand for media streaming, together with limited backhaul capacity, makes efficient file-delivery methods imperative. One such method is femto-caching, which, despite its great potential, poses several challenges such as efficient resource management. We study a resource allocation problem for joint caching and transmission in small cell networks, where the system operates in two consecutive phases: (i) cache placement, and (ii) joint file and transmit power selection followed by broadcasting. We define the utility of every small base station in terms of the number of successful reconstructions per unit of transmission power. In the second phase, the problem is to select, in every broadcast round, a file from the cache together with a transmission power level so that the accumulated utility over the horizon is maximized. The cache placement problem boils down to a stochastic knapsack problem, and we cast the file and power selection as a multi-armed bandit problem. We develop a solution to each problem and provide theoretical and numerical evaluations. In contrast to the state-of-the-art research, the proposed approach is especially suitable for networks with time-variant statistical properties. Moreover, it is applicable and operates well even when no initial information is available about the statistical characteristics of the random parameters, such as file popularity and channel quality.

Keywords: Change detection, femto-caching, mortal and piece-wise stationary multi-armed bandits, rateless coding, stochastic knapsack problem.

I Introduction

To cope with the ever-increasing demand for mobile services, future wireless networks deploy dense small cells to underlay the legacy macro cellular networks [2], where small base stations (SBSs) connect to the core network via a backhaul link. This concept takes advantage of low-power short-range base stations that potentially offload the macrocell traffic [3], improve the cell edge performance, and increase the uplink capacity. Moreover, transmission over short-length links enhances power efficiency and mitigates security concerns. However, these advantages come at some cost, and system designers face several challenges in realizing the concept of dense small cell networks. On the one hand, a massive number of devices sharing limited wireless resources, in combination with a dense deployment of SBSs, results in an excessive cost of information acquisition and computation, rendering centralized control mechanisms infeasible. On the other hand, in a dense small cell network, not all SBSs can access a power grid. Consequently, the SBSs store or harvest the energy required for providing wireless services. Naturally, the SBSs must consume energy efficiently to reduce the cost and the need for frequent battery recharging, and to remain environmentally friendly.

Besides, the significant growth in the demand for media streaming in combination with limited backhaul capacity renders developing efficient file-delivery approaches imperative [4]. The mobile data traffic caused by on-demand multimedia transmission exhibits the asynchronous content reuse property [5]; that is, the users request a few popular files at different times, but at relatively small intervals. The concept of wireless caching takes advantage of this property: instead of frequently fetching the popular files from the core network, different network entities store and re-transmit the files upon demand. The three major strategies for wireless caching are as follows: (i) femto-caching, (ii) D2D-caching, and (iii) coded-caching (or, coded-multicast) [6]. In femto-caching, SBSs save popular files to reduce the dependency on the high-speed backhaul. In D2D-caching, small devices store different files locally and provide each other with the files on-demand. Thus, in D2D-caching, the nearby devices share the burdens. The third method combines caching of files on the users’ devices with the common multicast transmission of network-coded data. Despite the great potential to gain resource efficiency while improving the users’ satisfaction levels, there are various challenges associated with caching. A challenging issue is the cache placement problem, which refers to selecting a subset of available files to store.

I-A Related Works

In the past few years, different methods and aspects of wireless caching have attracted a great deal of attention from the research community. In the following, we briefly review the cutting-edge research. In [6], the authors provide an overview of the basics as well as the state-of-the-art of D2D-caching and compare various methods from a practical point of view. In [7], Zhang et al. investigate the problem of joint scheduling and power allocation in D2D-caching. They decompose the problem into three sub-problems and solve each sub-problem in a centralized manner using convex optimization. D2D-caching is also the topic of [8]. Assuming that the knowledge of the network’s topology and files’ popularity is available, the authors use stochastic geometry to design communication protocols. Probabilistic cache placement for D2D-caching is discussed in [9]. Similar to [8], the authors assume that a central controller knows the network’s topology and files’ popularity. Based on this assumption, they propose a solution to the formulated problem. In [10], the authors consider the bandwidth allocation problem in proxy-caching. They propose a centralized solution based on auction theory that requires information about the files’ popularity. Similarly, [11] proposes a centralized heuristic for the joint cache placement and server selection problem in proxy-caching. The developed solution requires knowledge of the files’ popularity. Physical layer caching in wireless ad-hoc networks is the focus of [12]. The authors propose a caching scheme that potentially enhances the capacity of wireless ad-hoc networks by changing the underlying topology. They also analyze asymptotic scaling laws. Caching methods based on network coding (coded-multicast) are investigated in [13] and [14], as examples. The former considers femto-caching in conjunction with opportunistic network coding and derives the optimal cache placement. The latter introduces the concept of global caching gain against the conventional local caching gain. The authors suggest a coded-caching scheme that exploits both gains. The focus of this paper is on femto-caching; thus, in what follows, we confine our attention to research works that study this caching method.

Reference [15] introduces femto-caching comprehensively. In [16], the authors formulate the cache placement as an integer program and solve it by developing a centralized method. Assuming that the files’ popularity is known, [17] studies offline D2D- and femto-caching. In both scenarios, the authors investigate the joint design of the transmission and caching policies by formulating a continuous-time optimization problem. The topic of [18] is dynamic femto-caching for mobile users in a network with a time-varying topology. It proposes two algorithms for sub-optimal cache placement. One of the algorithms is centralized. The other one, despite being decentralized, requires knowledge of the popularity profile, the current network topology, and the caches of other SBSs. Resource allocation for a small cell caching system is investigated in [19], using stochastic geometry to perform the analysis. In [20], the authors investigate the trade-off between two crucial performance metrics of caching systems, namely, delivery delay and power consumption. They formulate a joint optimization problem involving power control, user association, and file placement. They solve the problem by decomposing it into two smaller problems. The focus of [21] is on energy efficiency in cache-enabled small cell networks. The authors discuss how factors such as interference level, backhaul capacity, content popularity, and cache capacity affect energy efficiency. They then investigate the conditions under which caching contributes to energy efficiency.

While a great majority of research studies assume that SBSs are provided with some prior knowledge, for instance, about the files’ popularity, there are only a few papers that do not rely on such assumptions when approaching different aspects of wireless caching. For example, [22] defines the offloading time as the time overhead incurred due to the unavailability of the file requested by a typical user. Then, for an unknown popularity profile, a learning approach is proposed to minimize the offloading time. As another example, in [23], the authors study the learning of a distributed caching scheme in small cell networks. Reference [24] develops a learning-based cache placement scheme in small cell networks, where the SBS observes only the number of requests for the cached files (i.e., the cache hits), and not the requests for the other files (i.e., cache misses). Using the features of the requested content, the SBS learns which files to cache to maximize the cache hits in the future. In [25], the authors use an online approach to learn the files’ popularity. Learning-based cache placement is also studied in [26] and [27]. Although the aforementioned papers deal with unknown popularity, the developed methods do not include the power consumption, the time-variations in the files’ popularity, and the possible gain of broadcast transmission. Table I summarizes the contribution of some research papers.

| Reference | Problem | Approach | Information | Concentration |
|---|---|---|---|---|
| [16] | Cache placement | Convex optimization | Local CSI, Popularity | Centralized |
| [28] | Cache placement, Multicast | Randomized rounding | Global CSI, Popularity | Centralized |
| [29] | Cache placement, Overlapping helpers | Heuristics | Popularity | Centralized |
| [17] | Transmission and caching policy | Optimization | Popularity | Centralized |
| [18] | Cache placement | Heuristics | Popularity, Topology | Centralized |
| [19] | Resource allocation | Stochastic geometry | Popularity, Topology | Distributed |
| [30] | Energy efficiency | Heuristics | Popularity, Topology | Centralized |
| Our Work | Cache placement, Resource allocation | MAB, Statistics | None | Distributed |

I-B Our Contribution

A large body of cutting-edge research models file popularity by a known Zipf-like distribution. Such an assumption is, however, unrealistic for the following reasons: (i) To a large extent, file popularity is dynamic and often vanishes over time; (ii) In general, the popularity of a file is an outcome of the decisions of a large population. Even if prediction is possible, such a variable is very costly to predict, especially because content is produced in massive volumes at a rapid pace.

Moreover, the great majority of current research assumes that SBSs have access to an unlimited power resource; nevertheless, in dense small cell networks, many SBSs rely on a limited power supply. The scarcity of supply renders the power efficiency crucial. Therefore, it is essential to serve as many users as possible with a specific amount of power. This goal is difficult to achieve particularly in dense small cell networks, where due to a large number of users, acquiring global channel state information (CSI) at SBSs is remarkably expensive. In addition to efficiency, sophisticated power control has a significant impact on mitigating transmission impairments such as interference; nonetheless, most previous works exclude such physical-layer issues from the caching problem, disregarding the fact that the transmission policy of SBSs impacts the realized benefits of caching. More precisely, such exclusion might prevent developing optimal caching strategies: On the one hand, transmission power impacts the coverage area of every SBS and the number of successful downloads of a cached file. On the other hand, due to the dense deployment, even SBSs close to each other share the spectrum resources, so that the inter-cell interference in every small cell depends on the transmission power of the neighboring SBSs.

We enhance the state-of-the-art as summarized in the following. Firstly, in comparison to the existing research, we employ a more general and realistic system model. In particular,

- We take the limited file and power storage capacities of SBSs into account.
- In our setting, the SBSs do not have any prior information about the statistical characteristics of the files’ popularity or channel quality.
- We consider a dynamic network model where the number of users associated with every SBS is a random variable.

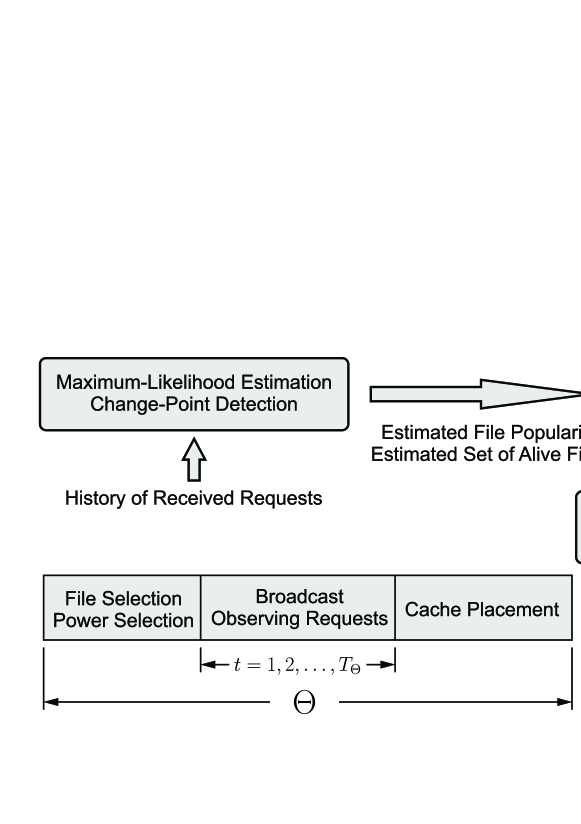

Every SBS uses rateless coding to broadcast a file. When exploiting rateless codes, the receiver accumulates mutual information to decode the message [31]. While the required time for decoding different blocks varies with channel conditions, rateless coding can guarantee zero outage probability, which makes it suitable for applications such as delay-constrained multimedia transmission over fading channels [32], [33]. We analyze the outage probability when rateless coding is applied. Afterward, we formulate the joint cache placement and power control problem in cache-enabled small cell networks. To deal with the NP-hardness of the formulated problem, we exploit the fact that caching systems usually work in two consecutive phases: (i) placement phase, and (ii) delivery phase [27], [13]. Therefore we decompose the challenge into two problems: (i) cache placement, and (ii) broadcast-file and -power selection, as described below:

- To efficiently solve the online cache placement problem, we concentrate on the dynamics of file popularity, noting the fact that the popularity of every file is time-varying. We propose an algorithmic solution using a stochastic knapsack problem in combination with time-series analysis and statistical methods such as maximum likelihood estimation and change detection. We design the procedure and select the parameters carefully to achieve a good performance in terms of detecting variations in file popularity and fast reaction to changes.
- In the delivery phase, to efficiently exploit the limited power supply at every SBS, we cast the selection of the optimal (file, transmit power) pair as a multi-armed bandit (MAB) problem with mortal arms. We adjust the algorithm and parameters so that it exhibits low regret, implying efficient performance in terms of quality of service (QoS) satisfaction and energy consumption.

We further establish the applicability of our approach through extensive numerical analysis.

I-C Paper Organization

In Section II, we introduce the network model. Afterward, in Section III, we design and analyze our rateless-coded transmission protocol. Section IV includes the description and analysis of the caching and files’ popularity models. In Section V, we formulate the joint cache placement and power control problem, which is then decomposed into two problems: (i) cache placement in the placement phase, and (ii) joint file- and transmit power selection in the delivery phase. In Section VI, we solve the former problem by developing an algorithm based on the stochastic knapsack problem and some statistical methods. In Section VII, we cast and solve the latter problem as a multi-armed bandit game. We dedicate Section VIII to the numerical analysis. Section IX concludes the paper.

I-D Notation

Throughout the paper, random variables are shown by uppercase letters while any realization is shown by lowercase. For example, and represent a random variable and its realization, respectively. The probability density or mass function (pdf) and the cumulative distribution function (cdf) of a random variable are shown by and , respectively. By we denote the probability of some event . Moreover, represents the expected value of . We show a set and its cardinality by a unique letter, and distinguish them by using calligraphic and italic fonts, such as and , respectively. For any variable , represents its estimated value, which is calculated, for example, using maximum likelihood estimation. Matrices are shown by bold uppercase letters, for instance ; thus denotes a matrix with rows and columns. Moreover, denotes the -th row of matrix . stands for the element of matrix located at -th row and -th column. Unit vectors are shown by bold lowercase letters, for example, a. The Hadamard product of two matrices and is shown as . By , we mean that matrix is the element-wise square root of matrix . Table II summarizes the variables that appear frequently in this paper.

| Symbol | Description |
|---|---|
|  | Set of SBSs (Deterministically deployed) |
|  | Small cell radius |
|  | Set of power levels for SBSs |
|  | Transmit power of SBS |
|  | Set of users (Poisson-distributed) |
|  | Channel coefficient of link (Rayleigh-distributed) |
|  | Distance between SBS and user |
|  | Set of users assigned to SBS (Distribution by (1)) |
|  | Set of files to be potentially cached |
|  | Number of data blocks of file |
|  | Minimum required number of successfully-received packets to recover the file with probability |
|  | Probability of successful file recovery if at least packets are successfully received |
|  | Maximum number of rateless-coded packets produced by SBS to broadcast file |
|  | SINR of user assigned to SBS (Expression and distribution by (2) and (3)) |
|  | Outage probability of the link |
|  | Minimum required transmission rate of user (Deterministic) |
|  | Probability of successful recovery of file transmitted by SBS at user |
|  | Time required by user to recover file transmitted by SBS at power |
|  | Maximum order statistic of random variables , (Distribution by (12)) |
|  | Total energy spent by SBS for broadcasting file at power |
| ; | Cache size of SBS ; Size of file in bits |
|  | Number of requests for file from SBS at time instant (Distribution by (19)) |
|  | Set of alive files at broadcast round |
| ; | The broadcast file and transmit power of SBS at round |
|  | Energy spent by SBS at round |
|  | Number of users that recover the file successfully at broadcast round |
|  | Utility of SBS at round |
|  | Log-likelihood ratio for observations from time up to time |
| ; | Alarm time, Change time |
|  | Action set of SBS at broadcast round |
|  | Action of SBS at broadcast round |
| ; | Optimal action and Maximum achievable reward of SBS at broadcast round |
|  | Regret of SBS at broadcast round |
|  | The index of arm at broadcast round |
|  | Number of times arm is played up to round |
|  | Average reward of arm up to round |
|  | Number of requests for file from SBS at broadcast round |

II Network Model

We study the downlink operation in an underlay cache-enabled small cell network with a set of SBSs. The service area consists of multiple grids with a radius of . The deployment of SBSs is deterministic such that there exists only one SBS in every area with a radius of . All SBSs are connected to a macro base station (MBS), which has access to the core network. Each SBS selects its transmission power from a finite set of power-levels including elements belonging to . As is conventional in underlay networks, SBSs use the MBS’s licensed channels if they are idle; thus, provided that the sensing is perfect, no interference is caused by or to the MBS. The SBSs, however, might cause inter-cell interference to each other as they do not coordinate before transmissions.

There exists a random set of users in the network. Users are quasi-static and their geographical arrangement follows a spatial homogeneous Poisson point process (HPPP) with density , which is unknown to the SBSs. We consider a frequency non-selective fading channel model. For a link connecting user and SBS , we denote the random channel coefficient by . The channel coefficient follows a Rayleigh distribution with unknown parameter . Therefore the channel gain is exponentially-distributed with unknown parameter . The MBS, the SBSs, and the users do not have any prior knowledge, even statistical, about the channel quality.¹ Throughout the paper, we regard interference as noise. For the simplicity of notation and calculus, we neglect the large-scale fading (path loss), assuming that there is an inner power-control loop that compensates for the path loss. This simplification does not affect our analysis, and the entire analysis can be performed in the same way when the path loss is included.

¹ Rayleigh fading is a reasonable and widely applied statistical model for propagation in an urban environment. Therefore, we use the Rayleigh distribution to develop the mathematical formulation and to enable the analysis. Note that this does not contradict our claim of the absence of prior statistical information at SBSs since we do not allow knowledge about the statistical characteristics of the distribution such as the expected value. It is worth noting that the analysis can be performed for any other fading model by following the same lines. Besides, the proposed joint cache placement and resource allocation method is applicable even if there is no mathematical model and analysis.

Let denote the distance between SBS and user . Then user is associated with SBS if . In words, every user is assigned to the nearest SBS. The distance-based user association is a widely applied association method (see [34] and the references therein) since it is simple and practical. The set of users assigned to every SBS is denoted by . Recall that (i) users are distributed according to an HPPP with density , and (ii) there is only one SBS in the area of radius . Consequently, the number of users assigned to SBS follows the distribution

| (1) |
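As an illustration of the argument above, the number of points of a homogeneous Poisson point process that fall inside a disk is Poisson-distributed with mean equal to the density times the disk area. The following is a minimal Python sketch under that standard fact; the names `users_pmf`, `density`, and `radius` are hypothetical and are not the paper's notation.

```python
import math


def users_pmf(k: int, density: float, radius: float) -> float:
    """P[K = k] for an HPPP of the given density on a disk of the given radius.

    Assumption: the cell is a disk, so the mean user count is density * pi * radius^2.
    """
    mean = density * math.pi * radius ** 2  # expected number of users in the cell
    return math.exp(-mean) * mean ** k / math.factorial(k)


if __name__ == "__main__":
    # Example: density 0.01 users per unit area, cell radius 10.
    for k in range(6):
        print(k, round(users_pmf(k, 0.01, 10.0), 4))
```

The SBS itself does not know the density; the sketch only shows the shape of the distribution that the analysis relies on.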

It is worth mentioning that in ultra-dense small cell networks, a user might be located inside the intersection of the coverage areas of multiple SBSs. Hence, if the SBSs transmit the same file with specific coding constraints, the user benefits from multiple useful signals for rapid file reconstruction. However, we consider a scenario where each user is associated with only one SBS and receives recoverable signals only from that SBS. Therefore, each SBS decides on the files to cache independent of others. Also, note that the channel impairments such as interference can sometimes yield a packet delivery failure for some of the users assigned to an SBS.

III Transmission Model

Let be the finite set of files to cache. Every file consists of data blocks of the same size.² All SBSs apply rateless codes to transmit any file , as described below.

² Depending on the applied standard for wireless transmission, the size of each data block might range from a few Kilobytes (e.g., 1.5 Kilobytes for IPv4) to dozens of Kilobytes (e.g., 64 Kilobytes for IPv6). The exact size of the data block does not play a role in our proposed caching and transmission scheme since, if required, a data block of any size can be further segmented into blocks of a different (often smaller) size that is appropriate for rateless coding and transmission. Naturally, the receiver then reassembles the blocks as necessary to rebuild the original one.

Every SBS first encodes the message at the packet-level using a Luby-Transform (LT) rateless code [35]. LT codes are the first practical realization of fountain codes that allow successful recovery of any message with very small overhead. In LT codes, every coded packet is the exclusive-or of neighbors. The number is called the degree. It is selected according to some specific probability distribution. After being appended with a cyclic redundancy check (CRC) sequence, each LT-coded packet is encoded by a physical-layer channel code of rate bits/channel-use and is transmitted over the channel in a time slot. The receiver attempts to decode the packets at the physical layer first. If the CRC check fails, the corresponding packet is discarded. We assume that CRC bits are sufficient so that every error can be detected.³ The accumulation of correctly received packets is used by the decoder to recover the source file. Assume that the receiver desires to successfully reconstruct the message with probability . Then it needs to accumulate (at least) any LT-coded packets, provided that the number (i.e., the degree) is selected appropriately [35]. Here, is the discrete random decoding overhead, rendering a random variable as well; nonetheless, for point-to-point transmission, it can be deterministic. A common value is so that [36]. The receiver then recovers the original message using, for instance, the belief propagation algorithm. It then informs the SBS via an acknowledgment (Ack) packet.⁴

³ The overhead of CRC depends on the type being used, or, in other words, the number of CRC checksum bits. The checksum usually consists of 8, 16, or 32 bits and is appended to the message. In general, taking both overhead and error detection performance into account, CRC is considered superior to many other error detection codes such as parity check.

⁴ To decode the rateless-coded transmission, a receiver needs channel state information. In our setting, each user receives a signal from only one SBS, whereas the SBS transmits to multiple users; as such, it is plausible to assume that the receiver can obtain the CSI whereas the SBS incurs a high cost for CSI acquisition. Also, some other articles such as [37] suggest utilizing rateless codes to overcome the channel uncertainty at the transmitter. Moreover, some researchers address the problem of decoding rateless-coded data under imperfect CSI at the receiver. For example, in [38], the authors discuss rateless codes for quasi-static fading channels in conjunction with imperfect CSI at the receiver.

If the time and energy are unconstrained, rateless coding results in zero file-reconstruction failure even in fading channels. However, in a realistic scenario, either the energy is limited or the application is delay-sensitive. For example, in [33], the authors apply rateless codes to wireless streaming with a sequence of playback deadlines. We also take such restrictions into account by assuming the following: In every broadcast round, SBS generates at most rateless-coded packets to broadcast any file . Every SBS selects as a deterministic value. In essence, is a deadline for the users interested in file to accumulate rateless-coded packets while SBS is broadcasting file . The users with a higher channel quality are more likely to succeed in accumulating rateless-coded packets out of transmitted ones. Naturally, shall be larger for larger files that consist of more packets. By selecting , SBS balances the trade-off between the energy consumption for packet transmission on the one hand and the number of successful file reconstructions at the users’ side on the other hand. Thus, the SBS stops transmitting as soon as one of the following events occurs:

- All assigned users that have requested file recover it.
- The per-round maximum number of data packets has been transmitted.
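The stopping rule above can be illustrated by a small Monte Carlo sketch. It assumes that packet deliveries are independent across users and time slots and that a user recovers the file as soon as it has accumulated the required number of packets; the residual decoding-failure probability is ignored. The function name `simulate_round` and its parameters are hypothetical.

```python
import random


def simulate_round(success_probs, packets_needed, max_packets, rng):
    """Simulate one broadcast round and return (packets_sent, recovered_users).

    success_probs[k] is the assumed per-packet delivery probability of user k
    (one minus its outage probability).
    """
    received = [0] * len(success_probs)
    sent = 0
    while sent < max_packets:
        # Event 1: every requesting user has acknowledged recovery.
        if all(r >= packets_needed for r in received):
            break
        sent += 1
        for k, q in enumerate(success_probs):
            if received[k] < packets_needed and rng.random() < q:
                received[k] += 1
    # Event 2 (the per-round packet limit) ends the loop otherwise.
    recovered = sum(r >= packets_needed for r in received)
    return sent, recovered


if __name__ == "__main__":
    rng = random.Random(1)
    print(simulate_round([0.9, 0.6, 0.3], packets_needed=10, max_packets=25, rng=rng))
```

Users with a low delivery probability are the ones most likely to miss the deadline, which is the trade-off the SBS balances when it selects the packet limit.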

Let user be assigned to some SBS , which transmits at some power level . Then the signal-to-interference-plus-noise ratio (SINR) is given by

| (2) |

where is the power of additive white Gaussian noise. Note that attains a lower-bound in the worst-case scenario, where every SBS , transmits with the maximum power, i.e., . The lower-bound, , is useful when SBS is not aware of the transmit power of other SBSs. The pdf of , denoted by , is given by Proposition 1.
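To make the SINR and its worst-case lower bound concrete, the following Python sketch assumes the usual form: received signal power divided by the sum of interference from the other SBSs and the noise power, with path loss neglected as stated in Section II. The function and parameter names are hypothetical, and the gains are the squared magnitudes of the channel coefficients.

```python
def sinr(own_power, own_gain, interferer_powers, interferer_gains, noise_power):
    """SINR of a user: serving-link power over interference plus noise."""
    interference = sum(p * g for p, g in zip(interferer_powers, interferer_gains))
    return own_power * own_gain / (interference + noise_power)


def sinr_lower_bound(own_power, own_gain, interferer_gains, max_power, noise_power):
    """Worst case: every interfering SBS transmits at the maximum power level."""
    worst_powers = [max_power] * len(interferer_gains)
    return sinr(own_power, own_gain, worst_powers, interferer_gains, noise_power)


if __name__ == "__main__":
    gains = [0.1, 0.2]  # hypothetical interference channel gains
    print(sinr(2.0, 0.5, [1.0, 1.0], gains, 0.2))
    print(sinr_lower_bound(2.0, 0.5, gains, max_power=2.0, noise_power=0.2))
```

The lower bound requires no knowledge of the neighbors' actual power choices, only of the largest element of the power-level set.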

Proposition 1.

The pdf of SINR, , is given by

| (3) | ||||

where for all we define

| (4) |

and

| (5) |

Proof:

See Appendix X-A. ∎

For a sufficiently long block length, the outage probability is a good approximation for the packet error probability. Let denote the minimum transmission rate required by user . The outage probability yields

| (6) |

where . In the general case, the solution of this integral at every desired point can be calculated numerically. For special cases, one can also calculate the closed-form solution. For example, for the interference-limited region where the noise power is negligible compared to the interference power, by using (3), we can write (6) as

| (7) |

where .⁵

⁵ Depending on some factors such as the density of small base stations, small cell networks operate in different regions [39].

Naturally, the user experiences the maximum outage probability at . A receiver can reconstruct a file with probability if it successfully receives at least rateless-coded packets out of packets transmitted by SBS . Hence, for a user , the probability of successful decoding (file reconstruction) yields

| (8) |
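The two quantities above can be sketched numerically under explicit assumptions. For the interference-limited case, the sketch uses the standard closed form for the probability that an exponentially distributed signal power falls below a threshold times a sum of independent exponentially distributed interference powers; this is not necessarily the same algebraic form as (7). The threshold is assumed to follow from the Shannon formula, and the decoding probability is assumed to be the recovery probability times a binomial tail with independent packet outcomes. All names are hypothetical.

```python
import math


def rate_threshold(min_rate):
    """Assumed SINR threshold for a minimum rate in bits per channel use."""
    return 2.0 ** min_rate - 1.0


def outage_interference_limited(threshold, own_power, own_rate,
                                interferer_powers, interferer_rates):
    """P[SIR < threshold] with noise ignored.

    Each channel gain is exponential with the given rate parameter, and all
    gains are assumed independent.
    """
    s = threshold * own_rate / own_power
    no_outage = 1.0
    for p, mu in zip(interferer_powers, interferer_rates):
        no_outage *= mu / (mu + s * p)  # Laplace transform of one interferer
    return 1.0 - no_outage


def decoding_success(outage, packets_needed, packets_sent, recovery_prob):
    """recovery_prob times P[at least packets_needed of packets_sent arrive]."""
    q = 1.0 - outage
    tail = sum(math.comb(packets_sent, n) * q ** n * outage ** (packets_sent - n)
               for n in range(packets_needed, packets_sent + 1))
    return recovery_prob * tail


if __name__ == "__main__":
    p_out = outage_interference_limited(rate_threshold(1.0), 1.0, 1.0, [0.5], [1.0])
    print(p_out, decoding_success(p_out, 10, 15, 0.99))
```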

The quality of the wireless channel is random. Therefore, for each user, the required time until receiving enough packets for file reconstruction is a random variable. Moreover, when broadcasting a file , each SBS transmits only a limited number of rateless-coded packets. Such restriction, in combination with random channel quality, renders the number of successful file reconstructions at the users’ side as random. Consequently, the total power required by an SBS to transmit a file is also a random variable. In the following sections, we analyze these random variables.

III-A Characterization of Energy Consumption

Let be the set of users that are associated with SBS and are interested in receiving a file . Moreover, denotes the number of rateless-coded packets transmitted by SBS with power until some user accumulates packets. Then is a random variable following a negative binomial distribution, with the pdf

| (9) |
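For reference, a negative binomial law of this kind can be evaluated directly. The sketch below assumes that each packet is delivered independently with probability one minus the outage probability and that at least one packet is required; the names `packets_pmf` and `packets_cdf` are hypothetical.

```python
import math


def packets_pmf(t, packets_needed, outage):
    """P[T = t]: the packets_needed-th successful delivery occurs on packet t.

    Assumes packets_needed >= 1 and independent packet outcomes.
    """
    if t < packets_needed:
        return 0.0
    q = 1.0 - outage
    return (math.comb(t - 1, packets_needed - 1)
            * q ** packets_needed * outage ** (t - packets_needed))


def packets_cdf(t, packets_needed, outage):
    """P[T <= t], obtained by summing the pmf."""
    return sum(packets_pmf(i, packets_needed, outage)
               for i in range(packets_needed, t + 1))


if __name__ == "__main__":
    print(packets_pmf(12, 10, 0.2), packets_cdf(15, 10, 0.2))
```

The cdf at `t` equals the probability that a binomial variable with `t` trials yields at least `packets_needed` successes, which gives a quick consistency check.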

Thus the cdf of yields

| (10) | ||||

where is the regularized incomplete beta function. Assume that SBS continuously transmits rateless-coded packets until every user receives packets successfully, i.e., there is no limit such as . The number of rateless-coded packets required for that event is the maximum order statistic of the random variables , . For a deterministic value , the cdf of the maximum order statistic of independent and non-identically distributed (i.n.i.d.) random variables, denoted by , , is given by [40]

| (11) |

where the summation extends over all permutations of random variables for which and . In our formulation, however, (corresponding to here) is itself a random variable following a distribution given by (1); thus at every broadcast round, the largest reconstruction time, denoted by , has a pdf as

| (12) | ||||
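For a fixed set of users, the cdf of the maximum of independent random variables is the product of the individual cdfs, which is the special case of the general order-statistics formula that matters here. The sketch below evaluates it for users with different outage probabilities; it assumes independence across users and does not average over the random number of users as (12) does. The names are hypothetical.

```python
import math


def nb_cdf(t, packets_needed, outage):
    """P[a user needs at most t packets] (negative binomial cdf)."""
    q = 1.0 - outage
    return sum(math.comb(i - 1, packets_needed - 1)
               * q ** packets_needed * outage ** (i - packets_needed)
               for i in range(packets_needed, t + 1))


def max_cdf(t, packets_needed, outages):
    """P[all users have recovered the file after t packets]."""
    prob = 1.0
    for outage in outages:
        prob *= nb_cdf(t, packets_needed, outage)
    return prob


def max_pmf(t, packets_needed, outages):
    """P[the slowest user finishes exactly at packet t]."""
    return max_cdf(t, packets_needed, outages) - max_cdf(t - 1, packets_needed, outages)


if __name__ == "__main__":
    print(max_cdf(15, 10, [0.1, 0.2, 0.3]), max_pmf(15, 10, [0.1, 0.2, 0.3]))
```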

In practice, time and energy are constrained. Thus, every SBS transmits at most rateless-coded packets of any file , unless all users send the Ack signal to the SBS before , implying that no further transmission is required. Using (12), we calculate the probability of this event as

| (13) | ||||

Therefore, the duration of a broadcast round in which an SBS transmits a file with power is a random variable defined as

| (14) | ||||

In (14), the first case corresponds to transmitting packets, whereas the second case occurs if the transmission ends sooner than that. By , we denote the duration of some . The total energy spent by SBS is a random variable defined as

| (15) |

Note that the maximum total energy is given by

| (16) |
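The expected duration and energy of a truncated broadcast round can be sketched with the tail-sum identity for nonnegative integer random variables: the mean of the minimum of the completion time and the packet limit equals the sum of the survival probabilities over the slots before the limit. The sketch assumes that energy is transmit power times the number of slots times a slot duration; the slot duration parameter and all names are hypothetical.

```python
def expected_duration(all_done_cdf, max_packets):
    """E[min(T_all, max_packets)] where all_done_cdf(t) = P[T_all <= t]."""
    return sum(1.0 - all_done_cdf(t) for t in range(max_packets))


def expected_energy(all_done_cdf, max_packets, power, slot_duration=1.0):
    """Expected energy of one round: power times expected transmission time."""
    return power * slot_duration * expected_duration(all_done_cdf, max_packets)


def max_energy(max_packets, power, slot_duration=1.0):
    """Energy spent when the SBS transmits up to the packet limit."""
    return power * slot_duration * max_packets


if __name__ == "__main__":
    # Hypothetical cdf: all users finish after exactly 12 packets.
    cdf = lambda t: 1.0 if t >= 12 else 0.0
    print(expected_energy(cdf, 20, power=0.5), max_energy(20, power=0.5))
```

Any cdf of the slowest user's completion time can be passed in, for instance one built from per-user negative binomial cdfs.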

III-B Characterization of the Number of File Reconstructions

Let denote the set of interested users that recover the file successfully, i.e.,

| (17) |

Since the channel quality is random, is a random variable. As discussed in Section III, one of the following events occurs:

- All of the requesting users reconstruct the broadcast file by some time , or
- A subset of users , where , reconstruct the file by the time .

The first event implies that the maximum order statistic of random variables is equal to some , which happens with probability . In the second event, the -th order statistic of random variables is equal to , which happens with probability . Based on the argument above, can be characterized as

| (18) |
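The broadcast-round mechanics described in this section can be illustrated with a small simulation. The sketch below is only a hedged illustration, not the paper's analytical model: it assumes that each user receives every packet independently with a fixed per-round success probability and decodes after collecting `packets_needed` packets. All names (`simulate_broadcast_round`, `success_probs`, `packet_time`) are hypothetical.

```python
import random

def simulate_broadcast_round(success_probs, packets_needed, max_packets,
                             power, packet_time=1.0, rng=None):
    """Simulate one broadcast round; return (packets_sent, energy, users_done).

    Assumption: user u receives each packet independently with probability
    success_probs[u] and decodes once it holds packets_needed packets.
    """
    rng = rng or random.Random()
    received = [0] * len(success_probs)
    sent = 0
    # Transmit until every user has decoded (all Acks) or the packet cap is hit.
    while sent < max_packets and any(r < packets_needed for r in received):
        sent += 1
        for user, p in enumerate(success_probs):
            if received[user] < packets_needed and rng.random() < p:
                received[user] += 1
    users_done = sum(r >= packets_needed for r in received)
    energy = power * packet_time * sent  # energy grows with the round duration
    return sent, energy, users_done
```

With perfect channels the round ends as soon as `packets_needed` packets are sent; with a hopeless user it runs to the cap, which wastes energy without adding reconstructions.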

IV File Popularity and Caching Model

Every SBS has a finite cache size, denoted by . Moreover, every file has a finite size . Files are labeled in a way that . To gain access to a file that is not already stored in its cache, every SBS has to fetch it from the MBS. To avoid the energy cost of fetching, to reduce the backhaul traffic, and to improve the users’ satisfaction level by reducing the delay, the SBSs try to avoid repetitive fetching by storing the most popular files in their cache. The vast majority of previous works model the file popularity by a Zipf-like distribution with a time-invariant skewness parameter. However, such a model stands in contrast to the common experience that the popularity of most data files changes over time. For example, as a familiar pattern, the number of requests for a video clip tends to increase initially, remain static for a while, and then decrease. Therefore, in our work, we model the file popularity as a piece-wise stationary random variable. Details follow.

For an SBS that serves users, the number of requests for a file follows a Poisson distribution with parameter ; that is, the total number of requests for each file is time-variant and depends on the number of users in the small cell. Every user can request multiple files. The SBSs have no prior information about the popularity of each file. (Footnote 6: Traditionally, the Poisson distribution describes random arrival processes, e.g., user arrival or service demand in a telecommunication network. Therefore, we use it here to model the request arrival for each file, although we consider the general setting in which the parameter of this distribution, i.e., the intensity, is unknown. Moreover, it should be emphasized that, depending on the intensity, the Poisson distribution produces values in .) Let be the total number of requests for file submitted to SBS at time instant . Then

| (19) | ||||

where the equality follows by (1) and the definition of Poisson distribution.

Assumption 1.

The average file popularity is piece-wise constant so that is a step function that remains fixed over intervals of unknown length and suffers ruptures at change points. The values of before and after change times, as well as the change points, are unknown a priori.
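To make Assumption 1 concrete, the following sketch generates a request sequence whose Poisson intensity is piece-wise constant. It is an illustration under stated assumptions: the function names and the `(length, intensity)` segment format are hypothetical, and the sampler is Knuth's textbook multiplication method, which is adequate for the small intensities considered here.

```python
import math
import random

def poisson_sample(lam, rng):
    """Draw one Poisson(lam) variate with Knuth's multiplication method."""
    threshold = math.exp(-lam)
    count, product = 0, rng.random()
    while product > threshold:
        count += 1
        product *= rng.random()
    return count

def piecewise_requests(segments, rng=None):
    """Generate request counts for a piece-wise stationary intensity.

    segments: list of (length, intensity) pairs; the intensity is fixed
    inside a segment and jumps at the segment boundaries (change points).
    """
    rng = rng or random.Random(0)
    requests = []
    for length, lam in segments:
        requests.extend(poisson_sample(lam, rng) for _ in range(length))
    return requests
```

For example, `piecewise_requests([(100, 6.0), (100, 0.1)])` mimics a file whose popularity collapses after 100 time instants.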

The time-variations in the files’ popularity correspond to the notion of concept drift in machine learning [41], which describes the changes in the statistical characteristics or distribution of the problem instances over time. For example, [42] considers this notion. In essence, big data applications are often characterized by concept drift, in which trending topics change over time. Moreover, [43] analyzes and illustrates some examples of concept drift in video streaming. Through data analysis, the authors show that the popularity of a video decreases by moving away from the posting date, or in other words, as the content’s age increases.

If and are intensities before and after the change, we have , with being a sufficiently large constant. Note that the changes in the popularity can be continuous; however, by assuming a step-wise model, we neglect small changes in popularity and tune our algorithm to detect abrupt changes rather than small ones. In such a setting, the change detection performs better, since the tradeoff between false alarm and misdetection can be balanced more easily. Moreover, the complexity of caching reduces as well. More precisely, if even small changes are taken into account, the cache placement problem (knapsack problem) has to be solved unnecessarily often, which results in excessive complexity. Based on this analytical model, in the following, we define the file type.

Definition 1 (File Type).

At any time instant , a file is alive (i.e., still popular) if . Otherwise, it is dead; that is, it is not popular anymore.

We use to denote the set of files that are considered to be alive by SBS , at the beginning of a broadcast round . We assume that there always exists at least one alive file, i.e., for all . Naturally, as we later explain in Section VI, every SBS selects the set of files for storage from the set of alive files, meaning that . Thus, is selected by SBS based on its cache size . Intuitively, an SBS with larger storage capacity would have smaller , implying that it is able to store also some files with low popularity.

V Problem Formulation

Let and denote the broadcast file and transmit power of SBS at broadcast round . Then, is the total energy spent during the broadcast round . Moreover, let be the number of successful file deliveries. We define the utility of SBS at round as

| (20) |

i.e., in terms of the number of successful reconstructions per unit of consumed energy. Ideally, every SBS performs the cache placement and power allocation jointly. That is, it selects a file to cache, and at the same time it assigns some power to broadcast that file (in the future, based on the users’ requests). Then the optimization problem of every SBS can be formulated as follows:

| (21) |

subject to the following constraint

| (22) |

for all . Solving the optimization problem in (21) is, however, not feasible, since the objective function is not available for the following reasons: (i) The files’ popularity, the network’s structure (including the number of users), and the CSI are not known a priori; (ii) Each SBS is only aware of its own transmit power level as well as the file being broadcast, and not those of other SBSs. In such a scenario, a natural solution would be to incorporate online learning methods that maximize the expected utility, that is,

| (23) |

subject to (22), where the expectation is taken with respect to random utility, as well as any other possible randomness in the decision-making strategy.

In general, efficient learning of the optimal decision involves finding a balance between gathering information about every possible strategy (exploration) on the one hand and accumulating utility (exploitation) on the other hand. In other words, it is desired to learn as quickly and efficiently as possible, to minimize the cost of information shortage. In this sense, the problem formulated in (23) is inefficient to solve for the following reasons: (i) Each (file, power level) pair, i.e., , is defined as an action (strategy), yielding actions in total; thus, from the computational point of view, such a formulation imposes excessive complexity due to a large number of actions; (ii) To estimate the potential gain of each action, every file has to be fetched from the core network and transmitted at various power levels. It is evident that such exploration is very costly in terms of power, not only since fetching is power-consuming, but also since a substantial amount of limited power is wasted during exploration. Moreover, frequent fetching by densely deployed SBSs yields large backhaul traffic; (iii) The dynamic file popularity is not taken into account.

Based on the discussion above and in agreement with many previous works such as [27] and [13], we consider the caching system to work in two consecutive phases: (i) cache placement phase, and (ii) delivery phase. Accordingly, we decompose the cache placement and power control problem into two sub-problems listed below, which are solved repeatedly and in parallel to converge to the optimal solution:

- Cache Placement: During every broadcast round , every SBS observes the requests for every file . Then, at the end of each broadcast round, the SBS updates its cache, if necessary. Later in Section VI, we explain that the necessity is defined based on the variations in the files’ popularity.

- Joint Broadcast File Selection and Power Allocation: At every broadcast round , SBS selects a file to be transmitted, together with a power for broadcast.

A summary of the cache placement and delivery protocol is provided in Algorithm 1. The approach is also illustrated in Fig. 1. The two problems are described in detail and are solved in Section VI and Section VII, respectively. In Section VII-A, we discuss the effect of decoupling the cache placement and power control problems on the performance. (Footnote 7: Note that the cache, if it needs updating at all, is not necessarily updated as frequently as files are broadcast. In other words, an SBS can decide to update the cache at specific intervals, for example, every rounds of broadcasting. Our proposed algorithm is also applicable in this case.)

VI Cache Placement

As described in Section V, we perform the cache placement based on file popularity, or in other words, the requests. By (23), at every broadcast round, a file is selected from the cache; this implies that the dynamic cache placement is performed, if necessary, at broadcast (delivery) rounds and not at every single transmission round. Let be the popularity of file at the beginning of the broadcast round . For an SBS , the cache placement problem is stated as follows: Given the set of files , where each file has a size and a value , the goal is to decide whether to include the file in the cache () or not, so that the total size of the selected files does not exceed the cache capacity , while the total value is maximized. Note that at every cache updating round, the saved files are selected from the set of alive files. Such a strategy reduces the complexity without affecting the performance adversely. Formally, for , the optimization problem yields

| (24) |

Considering each file as an item whose value is defined in terms of popularity, the cache placement problem is a 0-1 knapsack problem, which is a combinatorial optimization problem. Note that in formulating the knapsack problem, the value of every item (file) can also be defined in terms of the number of requests; Nonetheless, according to our model, the number of requests is stochastic, which renders the knapsack problem stochastic; Hence, to simplify the problem, we use the intensity of requests for each file , , as the item’s value, keeping in mind that is deterministic, despite changing over time.
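As an illustration of the cache placement step, the sketch below solves a 0-1 knapsack instance by standard dynamic programming over integer capacities, after discarding files that are not alive. It is a minimal sketch and not necessarily the solver used in the paper; the function name, the dictionary-based inputs, and the `threshold` rule (a file is treated as alive if its intensity is at least the threshold) are assumptions made for the example.

```python
def cache_placement(sizes, values, capacity, threshold=0.0):
    """Pick files maximizing total value subject to the cache capacity.

    sizes:    file -> positive integer size
    values:   file -> (estimated) request intensity, used as item value
    capacity: integer cache capacity
    Returns (set of cached files, total value).
    """
    # Only alive files are candidates (assumed rule: intensity >= threshold).
    alive = [f for f in sizes if values[f] >= threshold]
    # best[c] holds the best (value, chosen files) using capacity at most c.
    best = [(0.0, frozenset())] * (capacity + 1)
    for f in alive:
        size, value = sizes[f], values[f]
        # Iterate capacities downward so each file is used at most once.
        for c in range(capacity, size - 1, -1):
            candidate = best[c - size][0] + value
            if candidate > best[c][0]:
                best[c] = (candidate, best[c - size][1] | {f})
    return set(best[capacity][1]), best[capacity][0]
```

The running time is proportional to the number of alive files times the capacity, which is why restricting the candidates to alive files keeps each re-optimization cheap.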

The knapsack problem has been under intensive investigation, and a variety of efficient algorithmic solutions have been developed for it [44]. The cache placement problem stated by (24) is, however, more challenging than the conventional knapsack problem. In particular, according to our system model (see Section IV), (i) the SBSs do not have any prior knowledge of the files’ popularity indicator , and (ii) the files’ popularity is time-varying, so that (24) has to be solved sequentially. To cope with these difficulties, we note that at every time , every SBS receives the requests for every file . This side-information, which every SBS obtains at almost no cost, can be used to estimate the file popularity, i.e., to predict how many users would attempt to recover the file if it is broadcast. Let be the estimated value of at the end of broadcast round . (Footnote 8: It is worth noting that some previous works, for example, [27] and [24], assume the following: Every SBS observes the cache hits, i.e., the number of requests for the files in the cache, whereas the number of cache drops, i.e., the number of requests for the files that do not belong to the cache, is not observable. However, such an assumption is unnecessary in many cases, where the users submit the requests for files to the corresponding SBS without knowing the cache status of the SBS. Even in the setting where the requests are submitted to some entity other than the SBS, such information can be transferred to the SBS at negligible cost.) To update the cache for the broadcast round , the SBS uses the historical data. Hence the optimization problem (24) is restated as

| (25) |

For cache placement, we use the SBS’s observations in combination with some statistical tests, as described below.

VI-A Maximum Likelihood Estimation

Consider a time period of length , with its time instances being labeled as . Let be a set of samples of the popularity of file , where shows the number of requests for file submitted to SBS at time . Assume that the popularity is stationary during the time interval . Then the maximum likelihood estimate of yields

| (26) |
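As a worked step, under the Poisson model of Section IV the maximum likelihood estimate reduces to the sample mean. The notation below is placeholder notation chosen for this illustration and may differ from the paper's symbols: $x_t$ is the number of requests observed at time $t$, $\tau$ is the window length, and $\lambda$ is the intensity.

```latex
\begin{align}
\ell(\lambda) &= \sum_{t=1}^{\tau} \left( x_t \ln \lambda - \lambda - \ln x_t! \right), \\
\frac{\partial \ell}{\partial \lambda} &= \frac{1}{\lambda} \sum_{t=1}^{\tau} x_t - \tau = 0
\quad \Longrightarrow \quad
\hat{\lambda} = \frac{1}{\tau} \sum_{t=1}^{\tau} x_t .
\end{align}
```

The second derivative, $-\lambda^{-2} \sum_t x_t$, is non-positive, so the stationary point is indeed a maximum.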

VI-B Change Point Detection

As described before, for any file , the number of requests is a random variable following a Poisson distribution with parameter . Therefore, the sequence of requests , , are i.ni.d random variables. Assume that the value of the parameter is initially at some reference time , and at some unknown point of time, , its value changes to . Change point detection basically detects the change and estimates the change time. Generally, it is assumed that is known, while might be known or unknown; in the latter case, the change magnitude or basically can be estimated as well. Formally, after each sampling, change point detection tests the following hypotheses about the parameter :

| (27) | ||||

In this paper, we utilize the generalized likelihood ratio (GLR) test for change detection [45], as described in the following. (Footnote 9: In addition to the GLR test, there exist several other methods for change-point detection, including the Page-Hinkley test (P-H) [46], the cumulative sum control chart (CUSUM) [47], the sequential probability ratio test (SPRT), and the like. In this paper, we opt for the GLR test since it applies to a wide range of probability distributions for the random variable under analysis. Moreover, it is suitable when the parameter’s value before the change point as well as the change magnitude are unknown.) For unknown , the log-likelihood ratio for the observations from time up to time is given by

| (28) |

where is the probability of which depends on the scalar parameter . For Poisson distribution, (28) yields

| (29) |

In (29), two unknown variables are present. The first one is the change time and the second one is the change magnitude (value of ). These values can be calculated by using the maximum likelihood estimation; that is, we perform the following double maximization [45]:

| (30) |

Then the alarm time, , is defined as

| (31) |

where is an appropriately-selected constant. (Footnote 10: The constant addresses the tradeoff between false alarm and detection delay, and should be determined so that a balance is achieved.) Then the change point, , and the mean value after the change, , are estimated as [45]

| (32) |
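The double maximization and the alarm rule can be sketched as follows. This is the textbook GLR test for a change in the mean of a Poisson sequence with a known pre-change intensity, written under the assumption that it matches the structure of (28)-(32); the function name, the 0-based time indices, and the argument names `lam0` (pre-change intensity, must be positive) and `h` (alarm threshold) are hypothetical.

```python
import math

def glr_poisson(samples, lam0, h):
    """Run a GLR change detector on Poisson counts.

    Returns (alarm_time, change_point, post_change_mean) at the first time
    the GLR statistic reaches h, or None if no alarm is raised.
    """
    for k in range(len(samples)):
        best = (-math.inf, None, None)
        total = 0
        # Inner maximization over the candidate change point j (newest first).
        for j in range(k, -1, -1):
            total += samples[j]
            n = k - j + 1
            lam1 = total / n  # ML estimate of the post-change intensity
            llr = -n * (lam1 - lam0)
            if total > 0:  # the term total * log(lam1 / lam0) vanishes otherwise
                llr += total * math.log(lam1 / lam0)
            if llr > best[0]:
                best = (llr, j, lam1)
        if best[0] >= h:
            return k, best[1], best[2]
    return None
```

A larger `h` suppresses false alarms at the price of a longer detection delay. The nested loops cost quadratic time in the number of samples; a windowed variant would reduce this, at some loss of sensitivity to old change points.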

VI-C The Algorithm

During the initialization period of length , the SBS gathers observations of the requests for each file as statistical samples. Using this sample set and the maximum likelihood estimation described in Section VI-A, the SBS estimates an initial popularity for every file , denoted by . The SBS then solves the knapsack problem (25) to initialize the cache for . In the course of each broadcast round , and at every transmission trial, the SBS observes the requests for every file, which serve as new samples of popularity. (Footnote 11: Note that, similar to the Ack signals, requests can also be submitted to each SBS during transmission, for instance through a control channel.) The new samples are used to detect any changes in , and to estimate the change magnitude (i.e., the value of after the change), as explained in Section VI-B. At the end of each broadcast round , the final value of represents ; Formally, . Afterward, if necessary (i.e., upon the occurrence of some change), the SBS again solves the knapsack problem (25) and updates the cache. Algorithm 2 provides a summary of the procedure.

- Select the length of initial observation .

- For every file , the request vector is initialized with zero.

- Let the initial set of alive files . Moreover, let the total run time so far be .

- Let denote the total number of requests for each file during broadcast round .

- Select the threshold for the popularity index to determine alive files, i.e., .

- Let denote the length of broadcast round (unknown a priori, see Algorithm 3).

- For every file : (i) The request vector is initialized with zero vector; (ii) Let the change detection flag ; (iii) Let the change time .

- Let the estimated set of alive files .

| (33) |

It is worth mentioning that our proposed scheme to update the cache is event-triggered. More precisely, detecting a change in files’ popularity results in an attempt to re-optimize the cache, i.e., to solve the knapsack problem (25). Based on the magnitude of the change(s), the re-optimization might create the need to fetch some new files from the core network via the backhaul link. Although an SBS incurs some cost for fetching new files, for example, to pay for the resulting backhaul traffic, the situation is inevitable when the popularity of files is time-variant; Nevertheless, every SBS (or an MBS controlling the backhaul link) can reduce the burden of fetching by adapting its responsiveness to the changes in files’ popularity. In other words, the SBS might decide to react only to a change whose magnitude is larger than some threshold value of . Naturally, larger values of result in less sensitivity and hence the cache is updated less frequently. In the limit case, i.e., for very large values of , our approach becomes similar to conventional caching schemes in which the cache update is performed at specific time-intervals (time-organized rather than event-based). Finally, it should be noted that the knapsack problem (25) regards the size of every file as a cost factor; as fetching large files naturally yields more backhaul traffic, one can conclude that the backhaul limitation is also taken into consideration in the optimization problem for cache placement.
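The responsiveness rule discussed above can be sketched in a few lines. The function name and the dictionary inputs are hypothetical; the sketch only illustrates the idea of ignoring changes whose magnitude stays below a chosen threshold.

```python
def files_needing_update(old_intensity, new_intensity, min_change):
    """Return the files whose estimated intensity moved by more than min_change.

    An empty result means that the cache re-optimization can be skipped.
    Files without a previous estimate are compared against intensity zero.
    """
    return {f for f, lam in new_intensity.items()
            if abs(lam - old_intensity.get(f, 0.0)) > min_change}
```

A non-empty return value would then trigger a new solution of the knapsack problem (25); raising `min_change` makes the SBS fetch less often.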

VII Broadcast-File Selection and Power Control

Multi-armed bandit is a class of sequential optimization problems with incomplete information. The seminal problem involves an agent that is given a finite set of arms (actions, interchangeably), each producing some finite reward upon being pulled. Given no prior information, the agent selects arms sequentially, one at every round, and observes the reward of the played arm. Provided with this limited feedback, the agent aims at satisfying some optimality condition which is usually designed based on the problem’s specific characteristics, such as the random nature of the reward generating process, duration of arms’ availability, and so on. As a result of a lack of information, at each trial, the player may choose some inferior arm in terms of average reward, thus experiencing some regret. The regret is the difference between the reward that would have been achieved had the agent selected the best arm and the actually achieved reward.

In this paper, we model the joint file and power level selection as a multi-armed bandit problem, where every (file, power level) pair is regarded as one action. At every broadcast round , a file is selected from the cache . The transmit power is selected from the set of power levels . Thus the agent has a set of actions , which includes elements. For simplicity, at every broadcast round , we denote the action as . The utility of this action is then given by (20). Ideally, every SBS desires to select the broadcast file and power level so as to maximize its expected accumulated utility through the entire horizon. Formally,

| (34) |

However, due to the lack of prior information and also by the time-varying nature of the problem, (34) is infeasible. As a result, every SBS would opt for a less ambitious goal, as we describe below.

At every broadcast round , we define the optimal action as

| (35) |

which results in a reward

| (36) |

The regret of playing action at round is given by

| (37) |

Let be the set of selection policies. Moreover, assume that SBS uses some policy to select some action at successive rounds . Also, let denote the regret caused by using policy at round . Then the goal of every SBS is to minimize its expected accumulated regret over the entire transmission horizon by using an appropriate decision-making strategy. Formally,

| (38) |

Recall that after some broadcast rounds, the cache is updated based on the new estimations of the time-varying files’ popularity. Consequently, the bandit’s action set might vary over the broadcast rounds as well, since the cache, together with the set of power levels, determines the set of available actions; In other words, while some action might be included in the action set for some broadcast round , there is a chance that it does not exist anymore in round , simply since the file is omitted from the cache as a result of losing its popularity. This type of bandit problem is referred to as multi-armed bandit with mortal arms, i.e., a setting in which some arms might disappear and some other arms might appear with time. To solve the selection problem, we use the algorithm summarized in Algorithm 3, which is an adapted version of a policy that appears in [48].

The algorithm is based on the upper-confidence bound policy (UCB) [49]. Basically, in UCB, at every selection round, an index is calculated for every action, which corresponds to an upper-bound of a confidence interval for the expected reward of that arm. At every round, the index of an arm depends on the number of rounds in which that arm has been selected so far, together with its achieved rewards. Formally, assume that the action space is not time-varying and let be the number of times that an action has been played so far, i.e.,

| (39) |

where is the indicator function that returns one if the condition holds and zero otherwise. Then, the average reward from pulling any action yields

| (40) |

The UCB policy associates an index to each action at round [49]

| (41) |

with and being appropriate constants. The second term on the right-hand side of (41) is a padding function that guarantees enough exploration. The action with the largest index is selected to be played in the next round, i.e.,

| (42) |
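The index computation and the selection rule can be sketched as follows. The padding term used here, `scale * sqrt(xi * ln(t) / pulls)`, is the common UCB form and is an assumption about the exact shape of (41); `xi` and `scale` stand in for the two constants mentioned above, and the function names are hypothetical.

```python
import math

def ucb_index(mean_reward, pulls, t, xi=2.0, scale=1.0):
    """Upper confidence index of one action at round t (t >= 1)."""
    if pulls == 0:
        return math.inf  # unplayed actions are tried first
    return mean_reward + scale * math.sqrt(xi * math.log(t) / pulls)

def select_action(means, pulls, t, **constants):
    """Return the action with the largest index."""
    return max(means, key=lambda a: ucb_index(means[a], pulls[a], t, **constants))
```

Here `means` and `pulls` map each action, e.g., a (file, power level) pair, to its average reward and play count.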

The time-variation of the action set and the mortality of arms render the described UCB policy insufficient; Hence, based on [48], we introduce the following adaptation into the procedure:

- If the cache is changed, play each new action (i.e., broadcast the new files with the available power levels);

- Set for all actions (old and new) but keep the average reward of the old files;

- Continue by the UCB procedure.

The following proposition states the regret bound of the decision-making policy described in Algorithm 3.

Proposition 2.

The expected regret of Algorithm 3 is , where is the number of times a new file has appeared in the cache during the broadcast rounds .

Proof:

See Appendix X-B. ∎
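The adaptation of UCB to a changing cache can be sketched as a small class. This is a hedged illustration rather than a transcription of Algorithm 3: the value to which the play counters are reset is not recoverable from the text, so the sketch assumes a reset to one; the round counter is taken as the sum of the play counts; and the class and method names are hypothetical.

```python
import math

class MortalUCB:
    """UCB over (file, power level) pairs with a time-varying cache."""

    def __init__(self, power_levels, xi=2.0):
        self.power_levels = list(power_levels)
        self.xi = xi
        self.mean = {}     # action -> running average reward
        self.pulls = {}    # action -> plays counted since the last cache change
        self.pending = []  # new actions that still need their first play

    def set_cache(self, cache):
        """Rebuild the action set after a cache update."""
        actions = [(f, p) for f in cache for p in self.power_levels]
        self.pending = [a for a in actions if a not in self.mean]
        # Dead arms disappear; surviving arms keep their average reward.
        self.mean = {a: self.mean[a] for a in actions if a in self.mean}
        # Assumed reset value: every surviving counter restarts at one.
        self.pulls = {a: 1 for a in self.mean}

    def select(self):
        if self.pending:  # play each new action once first
            return self.pending[0]
        t = sum(self.pulls.values())
        return max(self.mean, key=lambda a: self.mean[a]
                   + math.sqrt(self.xi * math.log(t) / self.pulls[a]))

    def update(self, action, reward):
        if action in self.pending:
            self.pending.remove(action)
            self.mean[action], self.pulls[action] = reward, 1
        else:
            self.pulls[action] += 1
            self.mean[action] += (reward - self.mean[action]) / self.pulls[action]
```

In use, the reward passed to `update` would be the observed utility of the round, i.e., the number of reconstructions divided by the energy spent.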

VII-A Discussion on Optimality and Complexity

As a final remark, we discuss the effect of decoupling the cache placement and power control. As described in Section V, to solve the joint cache placement and the pair (broadcast file, power level) selection, we decompose the cache placement and power control problems. Decoupling the optimization of the cache placement and the delivery phases reduces the complexity significantly and boosts the power efficiency. Despite these benefits, and although such an approach is also used by some previous works, such decomposition might raise some concerns with respect to the optimality of the solution in terms of utility. Naturally, approximation and probabilistic analysis introduce a possible reduction in optimality which cannot be avoided; however, the following proposition describes the conditions under which the decomposition does not affect the optimality.

Proposition 3.

A decoupling of the optimization of cache placement and delivery phases does not introduce any adverse effect on the optimality in terms of utility if

| (43) |

Proof:

See Appendix X-C. ∎

Computationally, the proposed scheme consists of three main blocks: (i) The knapsack problem (25), which is an integer program that admits a fully polynomial-time approximation scheme in the number of alive files () and the cache capacity [50]; (ii) The change detection, which is performed through maximum likelihood estimation that has a polynomial complexity in terms of the samples [51]; and (iii) The multi-armed bandit problem, whose solution involves calculating the arms’ indexes and finding the largest index at every round, which, similar to the other blocks, has linear complexity in the number of actions, i.e., (file, power) pairs [52].

VIII Numerical Results

We consider a small cell with one SBS where the users dynamically join and leave the network. At each time, the randomly-distributed users are drawn from a Poisson process with a density of . As an example, a snapshot is depicted in Fig. 2. The SBS transmits using a power selected from the set . The SBS has a cache capacity of . There are files to be cached potentially. There are two change points for popularity, and . The files’ characteristics, including the size, popularity density before the change and popularity density after the change points, are summarized in Table III. As an example, for File A, File B and File I, i.e., two files whose popularity vanishes and one file whose popularity increases, the requests are shown in Fig. 3 as a function of time.

| File’s Label | A | B | C | D | E | F | G | H | I | J |
|---|---|---|---|---|---|---|---|---|---|---|
| Size | 1 | 1 | 2 | 5 | 6 | 3 | 5 | 4 | 3 | 7 |
| Popularity Before Change | 5 | 6 | 3 | 4 | 6 | 0.1 | 1 | 4 | 7 | 5 |
| Popularity After First Change | 5 | 0.1 | 3 | 4 | 6 | 0.1 | 1 | 4 | 7 | 5 |
| Popularity After Second Change | 0.1 | 0.1 | 3 | 4 | 6 | 0.1 | 1 | 4 | 12 | 5 |

The set of alive files, as well as the cache, are shown in Fig. 4, both before and after the popularity change. From the figure, we can conclude the following: After a change occurs, the proposed cache placement method detects the change; Consequently, it replaces the file that has lost its popularity with a popular file. In this way, a cache update is triggered to adapt the cache to the change.

Fig. 5 shows the utility of the proposed approach (mortal bandits with change-point detection), compared to the following methods: (i) Optimal: Given the full statistical information, the best option is selected using exhaustive search; (ii) Greedy: After some initialization rounds, which take place in a round-robin manner, the best arm so far is selected for the rest of the horizon; (iii) Greedy with Fixed Exploration: At every round, the best arm so far is selected with probability , and an arm is selected uniformly at random with probability . The value of remains fixed over the entire horizon, which implies that the probability of exploration does not change; (iv) Greedy with Decreasing Exploration: This method is similar to the previous one, except that tends to zero with rate , meaning that the probability of exploration decreases over time. From the figure, it is clear that the proposed approach detects the changes with an acceptable delay. Moreover, it adapts the set of alive files and, following that, the cache. The algorithm then finds the optimal (file, power level) pair so that the average utility is almost equal to that of the optimal solution. Although the optimal solution performs slightly better than the bandit approach in terms of the average utility, it imposes excessive computational complexity and cost for information acquisition. Moreover, Fig. 6 shows the actions taken by the proposed algorithm (bandit) compared to those taken by the exhaustive search (optimal) approach. Most of the rounds in which the bandit algorithm does not select the optimal action belong to a short time interval right after the change, i.e., before the algorithm detects the change and adjusts the action.
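The two exploration-based baselines can be sketched as below. The decay schedule `epsilon / round_idx` is an assumption, since the exact rate is not recoverable from the text, and the function names are hypothetical.

```python
import random

def exploration_prob(epsilon, round_idx, decreasing=False):
    """Probability of exploring at round round_idx (round_idx >= 1)."""
    return min(1.0, epsilon / round_idx) if decreasing else epsilon

def epsilon_greedy(means, round_idx, epsilon, decreasing=False, rng=None):
    """Pick a random arm with the exploration probability, else the best arm so far."""
    rng = rng or random.Random()
    if rng.random() < exploration_prob(epsilon, round_idx, decreasing):
        return rng.choice(sorted(means))   # explore uniformly at random
    return max(means, key=means.get)       # exploit the best empirical mean
```

Setting `epsilon=0.0` after a round-robin initialization recovers the plain greedy baseline.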

Finally, we discuss the applicability and performance of rateless coding in delay-sensitive transmission, after the SBS selects a file and a power level for transmission. Consider a large file with video content including segments, where each segment consists of data blocks. Due to the large size of the file , the video player does not wait for the entire video, i.e., all the blocks, to arrive before playback; instead, each segment has some playback deadline . The blocks belonging to each segment are transmitted using rateless coding over the fading channel, where we regard interference as noise. Therefore, for each segment, at least packets must arrive by the deadline; otherwise, an outage occurs. In [53] and [54], the authors show that the outage performance has a strong relationship with the SNR or SINR (or, the transmission power and the channel quality) as well as the playback deadline (or, indirectly ). More precisely, a transition phase can be observed after which the outage of rateless transmission reduces abruptly to zero. Fig. 7 illustrates this effect, where we simulate the outage probability for delay-sensitive transmission of a video content with segments through a fading channel as a function of SINR. Each segment consists of data blocks. Selecting with , any segment should be decoded correctly after at most channel uses (transmission rounds); otherwise, an outage occurs. From the figure, one can conclude that for different levels of delay-tolerance, different signal strengths are necessary to ensure zero outage.
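The sharp transition in the outage behavior can be reproduced with a simplified calculation. The sketch below assumes that every channel use delivers a packet independently with a fixed probability `p_success`, which stands in for the effect of the SINR; this i.i.d. erasure model is an assumption and is simpler than the fading-channel simulation behind Fig. 7. The function name is hypothetical.

```python
from math import comb

def outage_probability(blocks, deadline, p_success):
    """Probability that fewer than `blocks` packets arrive in `deadline` channel uses."""
    return sum(comb(deadline, i) * p_success**i * (1 - p_success)**(deadline - i)
               for i in range(blocks))
```

Sweeping `p_success` from 0 to 1 for fixed `blocks` and `deadline` shows the outage dropping from one to zero within a narrow range, and a longer deadline shifts that range toward lower success probabilities.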

IX Summary and Conclusion

We considered a femto-caching scenario in a dynamic small cell network and studied a joint cache placement, broadcast file selection, and power control problem. We developed a solution for the formulated problem based on combinatorial optimization and multi-armed bandit theory. Theoretical and numerical results show that, in contrast to the conventional strategies, the proposed approach performs well under uncertainty, i.e., when the information about several variables such as files’ popularity and channel characteristics is not available a priori. Moreover, it successfully adapts to time-varying popularity. The exact level of performance improvement depends on several factors such as the speed of variations in the files’ popularity. Future research directions include an extension of the model and solution to the game-theoretical case where every user is served by multiple SBSs, implying that the cache placement and transmission can be done more efficiently.

X Appendix

X-A Proof of Proposition 1

Let user be associated to SBS . There is one desired signal, say , which is exponentially-distributed with parameter . Moreover, there are interference signals , where . Hence the total interference power yields . Since is an exponentially-distributed random variable with parameter , is the sum of i.ni.d exponential random variables. The pdf of is given by [55]

| (44) |

with given by (5). Let . Then by using the relation , the pdf of can be calculated as

| (45) | ||||

For , we have . Therefore the proposition follows by (44).

X-B Proof of Proposition 2

We follow the same line as in [48]. As long as the cache does not change, the algorithm behaves like the standard UCB policy, which has a regret equal to . Removing a file from the cache does not affect this regret bound. Including a new file in the cache is equivalent to introducing new actions, since each action is defined as a (file, power level) pair. However, all of these new actions remain in the action set as long as the file remains in the cache, and disappear afterward. For broadcast rounds, the maximum number of rounds a file remains in the cache is ; In other words, if a file remains in the cache for some duration , the incurred regret is . Therefore, the regret is bounded as .

X-C Proof of Proposition 3

By decoupling the cache placement and delivery phases, the set of files is divided into two parts: (i) The files that are stored in the cache, i.e., all ; and (ii) The files that are not stored in the cache, i.e., all . Assuming that the distance between the estimated file’s popularity and the true file popularity tends to zero, solving the knapsack problem (25) returns the optimal cache placement. Also, by Proposition 2, the bandit strategy finds the most efficient pair (broadcast file, power level) that maximizes the expected utility in terms of (20). Therefore, a sub-optimality occurs only if one of the files that are not included in the cache produces an expected utility larger than that of the pair found by the MAB policy. Formally, to guarantee the optimality, one needs to have

| (46) |

for all and . The right-hand side of (46) can be upper-bounded as

| (47) | ||||

where the second inequality follows from the approximation with and denoting the covariance and variance respectively, together with the fact that and are independent random variables with positive expected values. The third inequality follows from the prerequisite of decoding a rateless-coded message (see Section III) and transmission with minimum power level.
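For reference, the approximation mentioned above is presumably the standard second-order Taylor (delta-method) expansion of the expectation of a ratio. The block below states it for generic random variables $X$ and $Y$ with $\mathbb{E}[Y] \neq 0$; these symbols are placeholders and not the paper's notation.

```latex
\mathbb{E}\!\left[\frac{X}{Y}\right]
  \approx \frac{\mathbb{E}[X]}{\mathbb{E}[Y]}
  - \frac{\operatorname{Cov}(X,Y)}{\mathbb{E}[Y]^{2}}
  + \frac{\operatorname{Var}(Y)\,\mathbb{E}[X]}{\mathbb{E}[Y]^{3}},
\qquad
\text{and, for independent } X \text{ and } Y,\quad
\mathbb{E}\!\left[\frac{X}{Y}\right]
  \approx \frac{\mathbb{E}[X]}{\mathbb{E}[Y]}
  \left(1 + \frac{\operatorname{Var}(Y)}{\mathbb{E}[Y]^{2}}\right).
```

Under independence the covariance term vanishes, which is why only the variance term and the positivity of the expected values matter in the bound.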

References

- [1] S. Maghsudi and M. van der Schaar, “A bandit learning approach to energy-efficient femto-caching under uncertainty,” in IEEE Global Communications Conference, 2019, pp. 1–6.

- [2] S. Maghsudi and D. Niyato, “On transmission mode selection in D2D-enhanced small cell networks,” IEEE Wireless Communications Letters, vol. 6, no. 5, pp. 618–621, October 2017.

- [3] S. Maghsudi and D. Niyato, “On power-efficient planning in dynamic small cell networks,” IEEE Wireless Communications Letters, vol. 7, no. 3, pp. 304–307, June 2018.

- [4] M. van der Schaar, D. S. Turaga, and R. Wong, “Classification-based system for cross-layer optimized wireless video transmission,” IEEE Transactions on Multimedia, vol. 8, no. 5, pp. 1082–1095, October 2006.

- [5] M. Zink, K. Suh, Y. Gu, and J. Kurose, “Characteristics of YouTube network traffic at a campus network - measurements, models, and implications,” Computer Networks, vol. 53, no. 4, pp. 501–514, March 2009.

- [6] M. Ji, G. Caire, and A. F. Molisch, “Wireless device-to-device caching networks: Basic principles and system performance,” IEEE Journal on Selected Areas in Communications, vol. 34, no. 1, pp. 176–189, January 2016.

- [7] L. Zhang, M. Xiao, G. Wu, and S. Li, “Efficient scheduling and power allocation for D2D-assisted wireless caching networks,” IEEE Transactions on Communications, vol. 64, no. 6, pp. 2438–2452, June 2016.

- [8] A. Altieri, P. Piantanida, L. R. Vega, and C. G. Galarza, “A stochastic geometry approach to distributed caching in large wireless networks,” in International Symposium on Wireless Communications Systems, August 2014, pp. 863–867.

- [9] H. J. Kang and C. G. Kang, “Mobile device-to-device (D2D) content delivery networking: A design and optimization framework,” Journal of Communications and Networks, vol. 16, no. 5, pp. 568–577, October 2014.

- [10] J. Dai, F. Liu, B. Li, and J. Liu, “Collaborative caching in wireless video streaming through resource auctions,” IEEE Journal on Selected Areas in Communications, vol. 30, no. 2, pp. 458–466, February 2012.

- [11] Q. Zhang, Z. Xiang, W. Zhu, and L. Gao, “Cost-based cache replacement and server selection for multimedia proxy across wireless internet,” IEEE Transactions on Multimedia, vol. 6, no. 4, pp. 587–598, August 2004.

- [12] A. Liu and V. K. N. Lau, “Asymptotic scaling laws of wireless Ad Hoc network with physical layer caching,” IEEE Transactions on Wireless Communications, vol. 15, no. 3, pp. 1657–1664, March 2016.

- [13] M. A. Maddah-Ali and U. Niesen, “Fundamental limits of caching,” IEEE Transactions on Information Theory, vol. 60, no. 5, pp. 2856–2867, May 2014.

- [14] Y. N. Shnaiwer, S. Sorour, N. Aboutorab, P. Sadeghi, and T. Y. Al-Naffouri, “Network-coded content delivery in femtocaching-assisted cellular networks,” in IEEE Global Communications Conference, December 2015, pp. 1–6.

- [15] N. Golrezaei, K. Shanmugam, A. G. Dimakis, A. F. Molisch, and G. Caire, “Femtocaching: Wireless video content delivery through distributed caching helpers,” in IEEE International Conference on Computer Communications, March 2012, pp. 1107–1115.

- [16] X. Peng, J. C. Shen, J. Zhang, and K. B. Letaief, “Backhaul-aware caching placement for wireless networks,” in IEEE Global Communications Conference, December 2015, pp. 1–6.

- [17] M. Gregori, J. Gomez-Vilardebo, J. Matamoros, and D. Gunduz, “Wireless content caching for small cell and D2D networks,” IEEE Journal on Selected Areas in Communications, vol. 34, no. 5, pp. 1222–1234, May 2016.

- [18] T. Wang, L. Song, and Z. Han, “Dynamic femtocaching for mobile users,” in IEEE Wireless Communications and Networking Conference, March 2015, pp. 861–865.

- [19] J. Li, W. Chen, M. Xiao, F. Shu, and X. Liu, “Efficient video pricing and caching in heterogeneous networks,” IEEE Transactions on Vehicular Technology, vol. 65, no. 10, pp. 8744–8751, October 2016.

- [20] H. Wu and H. Lu, “Delay and power tradeoff with consideration of caching capabilities in dense wireless networks,” IEEE Transactions on Wireless Communications, vol. 18, no. 10, pp. 5011–5025, October 2019.

- [21] D. Liu and C. Yang, “Energy efficiency of downlink networks with caching at base stations,” IEEE Journal on Selected Areas in Communications, vol. 34, no. 4, pp. 907–922, April 2016.

- [22] B. N. Bharath, K. G. Nagananda, and H. V. Poor, “A learning-based approach to caching in heterogenous small cell networks,” IEEE Transactions on Communications, vol. 64, no. 4, pp. 1674–1686, April 2016.

- [23] A. Sengupta, S. Amuru, R. Tandon, R. M. Buehrer, and T. C. Clancy, “Learning distributed caching strategies in small cell networks,” in International Symposium on Wireless Communications Systems, August 2014, pp. 917–921.

- [24] S. Muller, O. Atan, M. van der Schaar, and A. Klein, “Context-aware proactive content caching with service differentiation in wireless networks,” IEEE Transactions on Wireless Communications, vol. 16, no. 2, pp. 1024–1036, February 2017.

- [25] S. Li, J. Xu, M. van der Schaar, and W. Li, “Trend-aware video caching through online learning,” IEEE Transactions on Multimedia, vol. 18, no. 12, pp. 2503–2516, December 2016.

- [26] P. Blasco and D. Gunduz, “Learning-based optimization of cache content in a small cell base station,” in IEEE International Conference on Communications, June 2014, pp. 1897–1903.

- [27] S. Muller, O. Atan, M. van der Schaar, and A. Klein, “Smart caching in wireless small cell networks via contextual multi-armed bandits,” in IEEE International Conference on Communications, May 2016, pp. 1–7.

- [28] K. Poularakis, G. Iosifidis, V. Sourlas, and L. Tassiulas, “Exploiting caching and multicast for 5G wireless networks,” IEEE Transactions on Wireless Communications, vol. 15, no. 4, pp. 2995–3007, April 2016.

- [29] J. N. Shim, B. Y. Min, K. Kim, J. Jang, and D. K. Kim, “Advanced femto-caching file placement technique for overlapped helper coverage,” in Vehicular Technology Conference, May 2014, pp. 1–5.

- [30] C. Yang, Z. Chen, Y. Yao, B. Xia, and H. Liu, “Energy efficiency in wireless cooperative caching networks,” in IEEE International Conference on Communications, June 2014, pp. 4975–4980.

- [31] S. Maghsudi and S. Stanczak, “A delay-constrained rateless coded incremental relaying protocol for two-hop transmission,” in IEEE Wireless Communications and Networking Conference, April 2012, pp. 168–172.

- [32] S. Maghsudi and S. Stanczak, “On network-coded rateless transmission: Protocol design, clustering and cooperator assignment,” in International Symposium on Wireless Communication Systems, August 2012, pp. 306–310.

- [33] J. Castura, Y. Mao, and S. Draper, “On rateless coding over fading channels with delay constraints,” in IEEE International Symposium on Information Theory, July 2006, pp. 1124–1128.

- [34] S. Maghsudi and E. Hossain, “Distributed user association in energy harvesting small cell networks: A probabilistic bandit model,” IEEE Transactions on Wireless Communications, vol. 16, no. 3, pp. 1549–1563, March 2017.

- [35] M. Luby, “LT codes,” in IEEE Symposium on Foundations of Computer Science, November 2002, pp. 271–280.

- [36] D. Willkomm, J. Gross, and A. Wolisz, “Reliable link maintenance in cognitive radio systems,” in IEEE International Symposium on New Frontiers in Dynamic Spectrum Access Networks, November 2005, pp. 371–378.

- [37] Y. Sun, C. E. Koksal, S. Lee, and N. B. Shroff, “Network control without CSI using rateless codes for downlink cellular systems,” in IEEE International Conference on Computer Communications, April 2013, pp. 1016–1024.

- [38] A. Venkiah, P. Piantanida, C. Poullia, P. Duhamel, and D. Declercq, “Rateless coding for quasi-static fading channels using channel estimation accuracy,” in IEEE International Symposium on Information Theory, July 2008, pp. 2257–2261.

- [39] B. Yang, G. Mao, M. Ding, X. Ge, and X. Tao, “Dense small cell networks: From noise-limited to dense interference-limited,” IEEE Transactions on Vehicular Technology, vol. 67, no. 5, pp. 4262–4277, May 2018.

- [40] H. A. David and H. N. Nagaraja, Order Statistics, John Wiley & Sons, 2003.

- [41] L. L. Minku, A. P. White, and X. Yao, “The impact of diversity on online ensemble learning in the presence of concept drift,” IEEE Transactions on Knowledge and Data Engineering, vol. 22, no. 5, pp. 730–742, May 2010.

- [42] C. Tekin and M. van der Schaar, “Distributed online big data classification using context information,” in Annual Allerton Conference on Communication, Control, and Computing, 2013, pp. 1435–1442.

- [43] G. Tyson, Y. Elkhatib, N. Sastry, and S. Uhlig, “Measurements and analysis of a major adult video portal,” ACM Transactions on Multimedia Computing Communications and Applications, vol. 12, no. 2, pp. 1–25, January 2016.

- [44] S. Maghsudi and E. Hossain, “Distributed user association in energy harvesting small cell networks: An exchange economy with uncertainty,” IEEE Transactions on Green Communications and Networking, vol. 1, no. 3, pp. 294–308, September 2017.

- [45] M. Basseville and I. V. Nikiforov, Detection of Abrupt Changes: Theory and Application, Prentice-Hall, 1993.

- [46] E. S. Page, “Continuous inspection schemes,” Biometrika, vol. 41, no. 1-2, pp. 100–115, June 1954.

- [47] G. A. Barnard, “Control charts and stochastic processes,” Journal of the Royal Statistical Society, Series B (Methodological), vol. 21, no. 2, pp. 239–271, June 1959.

- [48] Z. Bnaya, R. Puzis, R. Stern, and A. Felner, “Volatile multi-armed bandits for guaranteed targeted social crawling,” in Proceedings of the AAAI Conference on Late-Breaking Developments in the Field of Artificial Intelligence, June 2013, pp. 8–10.

- [49] P. Auer, N. Cesa-Bianchi, and P. Fischer, “Finite-time analysis of the multiarmed bandit problem,” Machine Learning, vol. 47, no. 2-3, pp. 235–256, May 2002.

- [50] H. Kellerer, U. Pferschy, and D. Pisinger, Knapsack Problems, Springer, 2004.

- [51] D. Ciuonzo, P. S. Rossi, and P. Willett, “Generalized Rao test for decentralized detection of an uncooperative target,” IEEE Signal Processing Letters, vol. 24, no. 5, pp. 678–682, May 2017.

- [52] D. E. Knuth, The Art of Computer Programming, Volume 3: Sorting and Searching, Addison Wesley Longman Publishing Co., 2nd edition, 1998.

- [53] J. Castura and Y. Mao, “Rateless coding over fading channels,” IEEE Communications Letters, vol. 10, no. 1, pp. 46–48, 2006.

- [54] S. Maghsudi and S. Stanczak, “On channel selection for energy-constrained rateless-coded D2D communications,” in European Signal Processing Conference, August 2015, pp. 1028–1032.

- [55] Y. Yao and A. U. H. Sheikh, “Outage probability analysis for microcell mobile radio systems with cochannel interferers in Rician/Rayleigh fading environment,” Electronics Letters, vol. 26, no. 13, pp. 864–866, June 1990.