A Non-parametric Skill Representation with Soft Null Space Projectors for Fast Generalization

Abstract

Over the last two decades, the robotics community witnessed the emergence of various motion representations that have been used extensively, particularly in behavorial cloning, to compactly encode and generalize skills. Among these, probabilistic approaches have earned a relevant place, owing to their encoding of variations, correlations and adaptability to new task conditions. Modulating such primitives, however, is often cumbersome due to the need for parameter re-optimization which frequently entails computationally costly operations. In this paper we derive a non-parametric movement primitive formulation that contains a null space projector. We show that such formulation allows for fast and efficient motion generation and adaptation with computational complexity without involving matrix inversions, whose complexity is . This is achieved by using the null space to track secondary targets, with a precision determined by the training dataset. Using a 2D example associated with time input we show that our non-parametric solution compares favourably with a state-of-the-art parametric approach. For demonstrated skills with high-dimensional inputs we show that it permits on-the-fly adaptation as well.

I Introduction

Generalization is a fundamental challenge in machine learning, which in robot skill learning translates into modifying a behavior when new conditions arise. Since their inception with dynamic movement primitives (DMP) [1], skill representations in robotics have targeted generalization. In DMPs this is done by design, by representing motion dynamics with a second-order linear system that ensures convergence to a pre-specified, alterable end-goal. Alternative approaches using mixture models and hidden Markov models [2] are also endowed with strong generalization capabilities particularly under the framework of task-parameterized movement models [3, 4]. Despite their popularity, these approaches lack analytical solutions to the adaptation problem, especially when via-points are not located at the start or end of the movement. In these situations, re-optimization of model parameters is required which can be prohibitively costly, e.g., reinforcement learning was used to optimize the parameters of DMP [5] and GMM [6]. In [7] DMPs were combined in sequence to address the via-point issue, demanding several DMPs for a single skill, as opposed to traditional solutions requiring only one DMP.

A second line of approaches leverages probability theory to provide increased adaptation capabilities. Paraschos et al. introduced probabilistic movement primitives (ProMP) [8]. ProMP assumes that demonstrations of a task are approximated by a parametric trajectory amounting to a weighted sum of basis functions and uses Gaussian conditioning to compute optimal weights to pass through new, desired via-points. ProMP has been extended to handle obstacle avoidance [9, 10], where in [9] a signed distance between a robot arm and an obstacle was used similarly to CHOMP [11], and in [10] a random exploration and update scheme, initialized by ProMP weight distributions, was provided similarly to STOMP [12]. In [13] constrained ProMP was studied by formulating external constraints into a nonlinear optimization problem. In [14], we introduced a non-parametric, kernelized movement primitives (KMP) formulation which uses kernel functions to mitigate the need to carefully define basis functions, a well-known issue in ProMP and related techniques relying on fixed basis functions. This is the case especially if the trajectory is not driven by time but by a multi-dimensional input, such as the position or posture of a human in the robot workspace, where the number of required basis functions grows quickly with the number of input variables [15]. We argue that formulations that allow to seamlessly encode dependencies on human motions, and not only time, have an important role to play in the near future as human-robot interaction gains traction [16, 17, 18, 19].

Despite providing an elegant solution to adapt a (potentially multi-dimensional-input) trajectory to pass through regions that were not previously shown to the robot, the computational cost of dealing with such adaptations by KMP is non-negligible. This is because it relies on a kernel matrix that, depending on factors like the task and the features being encoded (e.g. robot end-effector poses, joint angles, velocities), may be costly to invert. It should be noted that this is an intrinsic limitation of many non-parametric methods, including Gaussian processes [20].

In this paper we address the fast adaptation problem by deriving a variant of KMP that contains a null space projector. We start from the original problem formulation, described in Section II, and modify the optimization objective such that a null space projector appears naturally (Section III-A). Null space projectors play an important role in robot control [21, 22, 23, 24, 25] and we re-use some of those core ideas here in a different domain. Subsequently we show that the projector can be kernelized too, providing an elegant and computationally efficient solution to probabilistic trajectory adaptation (Section III-B), including in problems with multi-dimensional inputs. We also discuss some of its properties (Section IV), particularly the fact that idempotence of the projector is not guaranteed and depends on the training data (thus we refer to it as a soft null space projector). While on the surface this might appear to be a limitation, it allows for covariance-weighted exploration trivially. Finally we compare our approach to two baselines in 2D and 3D examples (Sections V–VI).

II Kernelized Movement Primitives

In the KMP framework we are interested in estimating a model that predicts the value of an output variable given observations of an input from a set of datapoints typically, but not necessarily, collected from human demonstrations. Examples of include time or human hand positions, while is often the robot end-effector pose and its derivatives [14]. Similarly to ProMP, KMP assumes a parametric approximation of a trajectory by a weighted sum of basis functions, i.e., , where are weights and is a vector of basis functions whose values depend on . Moreover, . ProMP finds using maximum likelihood estimation (MLE) with samples of obtained from demonstrations. KMP departs from ProMP by further assuming that a reference trajectory distribution is available to model , where are given inputs. It then finds by minimizing the objective . In this paper we focus on finding the optimal values of , which is done by solving the regularized weighted least squares problem (see [14] for the detailed derivation):

| (1) |

where is a scalar. Differentiating the objective in (1) and equating to zero results in

| (2) |

where we have

| (3) |

The underscored term in Eq. (2) can be interpreted as a regularized right pseudo-inverse of with regularization term .

For a test input , the expectation of under the assumption of a parametric trajectory is given by . By replacing (2) and applying the kernel trick [15], we obtain

| (4) | ||||

| (5) |

where , , and is a kernel function from hereon assumed to be the squared-exponential .

From (1), (5) it follows that if, for a certain , the covariance is small, the expectation at will be close to . This provides a principled way for trajectory modulation. Indeed, if, for a new input , one wants to ensure that the expected trajectory passes through a desired it suffices to manually add the pair to the reference distribution provided that is small enough. While this solves the adaptation problem trivially, it comes at the cost of having to invert the term every time a new point is added. This hinders the applicability of KMP in scenarios that require a fast evaluation of variations of the demonstrated trajectories. We propose to alleviate this issue by formulating trajectory modulation as acting on the null space of the movement primitive.

III A null space formulation of KMP

III-A KMP with null space as the solution to a least squares problem

We take inspiration from the robotics literature on null space projectors [24, 23, 22] and extend the original problem (1) with an additional cost term to keep the solution close to a desired one denoted by , i.e.,

| (6) |

The solution can be obtained similarly to (1) by solving , leading to

| (7) |

By assuming , (7) is re-written as

| (8) |

Moreover, by using the Woodbury identity111 the inverse in the second term of (8) takes the form

| (9) |

which plugged back into (8) results in

| (10) |

Equation (10) corresponds to a typical least squares solution with null space where for a linear system we have as solution where satisfies a secondary goal and .

III-B Kernelizing the null space solution

As for the original solution of KMP, the optimal solution is applied to a new test point to predict the expectation of the output as

| (11) |

Using the kernel trick we can write

| (12) |

For the sake of the derivation, we convert the null space desired weights into a desired set of points in trajectory space. From the assumption of a parametric trajectory we define an arbitrary number of desired secondary target points as or, in matrix form,

| (13) |

Next we approximate the optimal values of given the desired secondary target points using the right pseudo-inverse of , i.e.

| (14) |

Plugging (14) back in (12) yields

with , , and .

Finally, for a single desired secondary target and a squared-exponential kernel we have and (III-B) becomes222To contrast our solution with the ‘classical’ KMP [14], throughout the rest of the paper we refer to (15) as the null space KMP (NS-KMP). In addition, we refer to as the null space reference.

| (15) |

Notice that (15) corresponds to (5) with the additional null space term . Equation (15) thus modulates the expectation (5) in a computationally efficient manner. We leverage this property by modifying such that variations of the original trajectory are achieved, similarly to what is done in robot null space exploration [26, 27, 28]. However, unlike the null spaces found in robot control which are often strict (see [24] for an overview) the KMP null space possesses a set of properties derived from its probabilistic foundations that dictate how modulates (5).

IV Properties of the kernelized null space projector

IV-A Idempotence and ‘soft’ null space

A general property of null space projectors is idempotence, i.e. for a null space projector we have [25]. The projector derived in Section III-A is not guaranteed to have this property since, generally, . It should be noted, however, that as idempotence is fulfilled, which can be verified trivially. In practice, this means that the larger , the more the null space reference is allowed to deform the original trajectory. For this reason we refer to it as a soft null space projector. In robotics, this is known to happen when computing null space projectors using the regularized pseudo-inverse of the Jacobian matrix. Indeed, in those cases, the null space tasks are known to affect the precision of the main one [24].

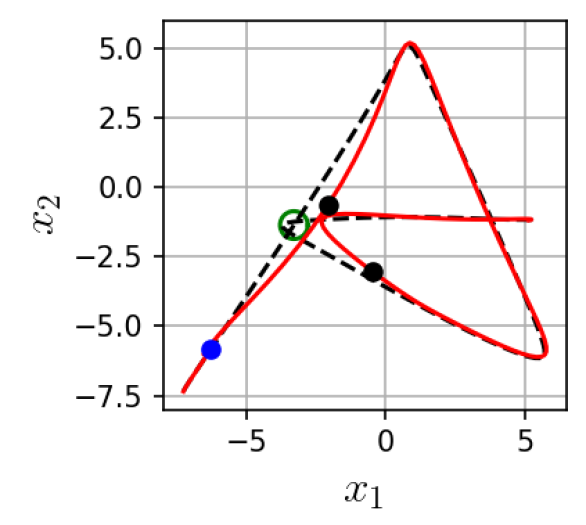

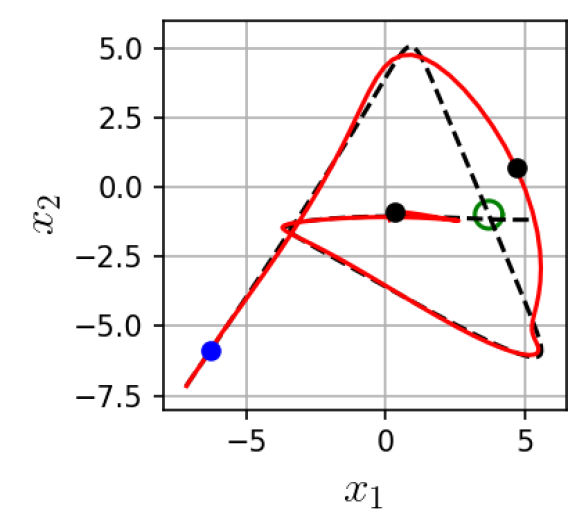

IV-B Covariance-weighted null space modulation

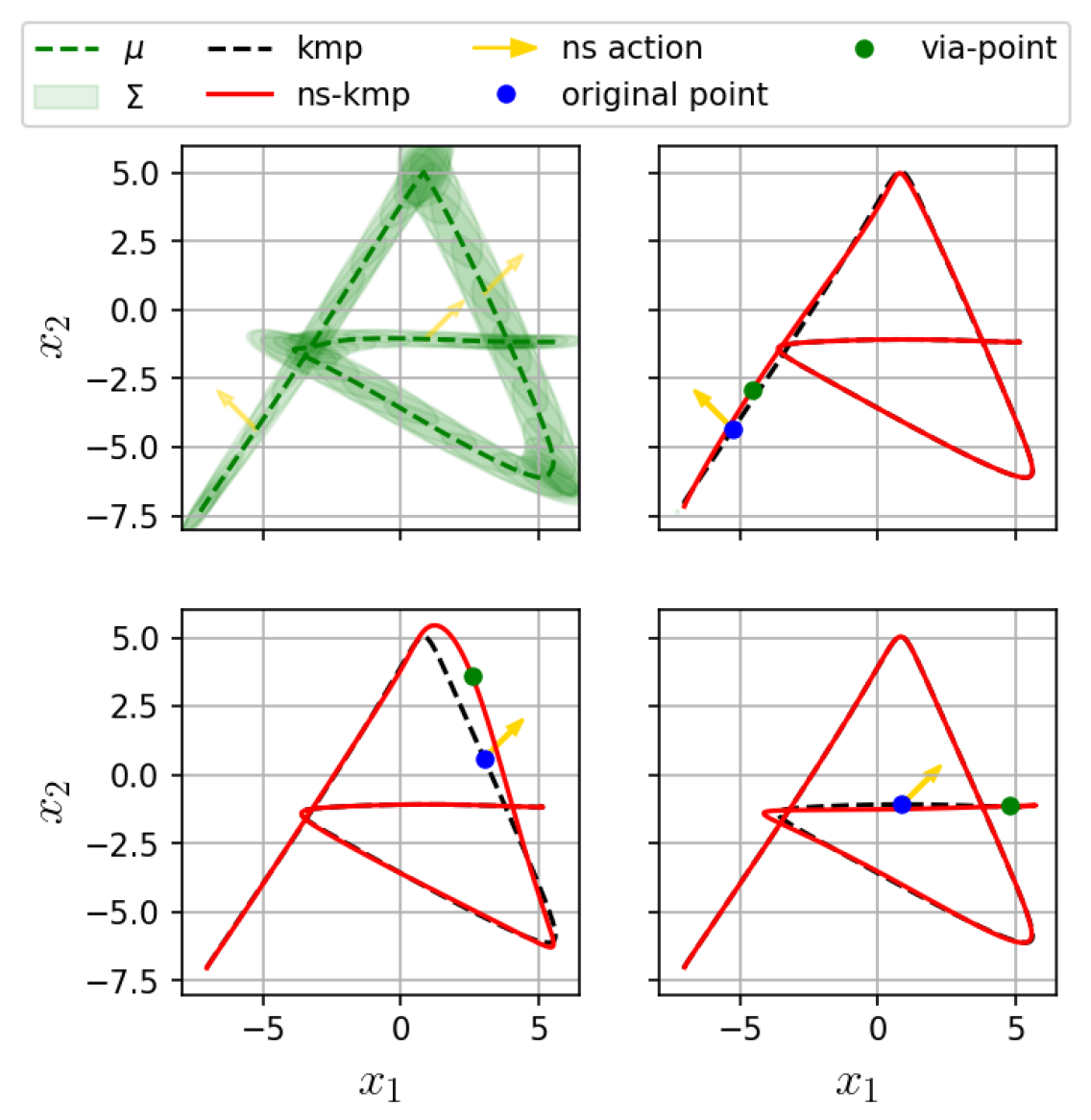

Unlike the robot control case, where regularization typically appears as a diagonal matrix, are full covariance matrices. As a consequence, a null space reference generally does not affect all dimensions of the trajectory equally. The covariance at a given point influences both the magnitude and the directions along which a null space reference can modulate the trajectory. Figure 1 illustrates this property. The top-left image shows a trajectory distribution in 2D computed from examples of drawing a letter ‘A’ [29]. That distribution was used as a reference trajectory distribution in a KMP. Notice how the covariance changes throughout the trajectory. In the top-right and bottom images, we show the effect of applying a null space reference (yellow arrow), with the same magnitude, at different points along the trajectory. The dashed line is computed from (5) and the solid, red one from (15). The covariance in the null space projector constrains the modulated trajectory to deform less (more) along the directions where the variance is lower (higher). Additionally the modulation is applied along the axes of . This provides a principled way to use the variations in the original dataset to design optimal exploration strategies. Moreover, unlike typical exploration strategies that require the re-computation of model parameters or computationally costly operations to include new points in the trajectory, here adding new points is done inexpensively with (15). Indeed, the parameter , that contains a potentially large matrix inverse operation, is computed only once from the reference trajectory distribution.

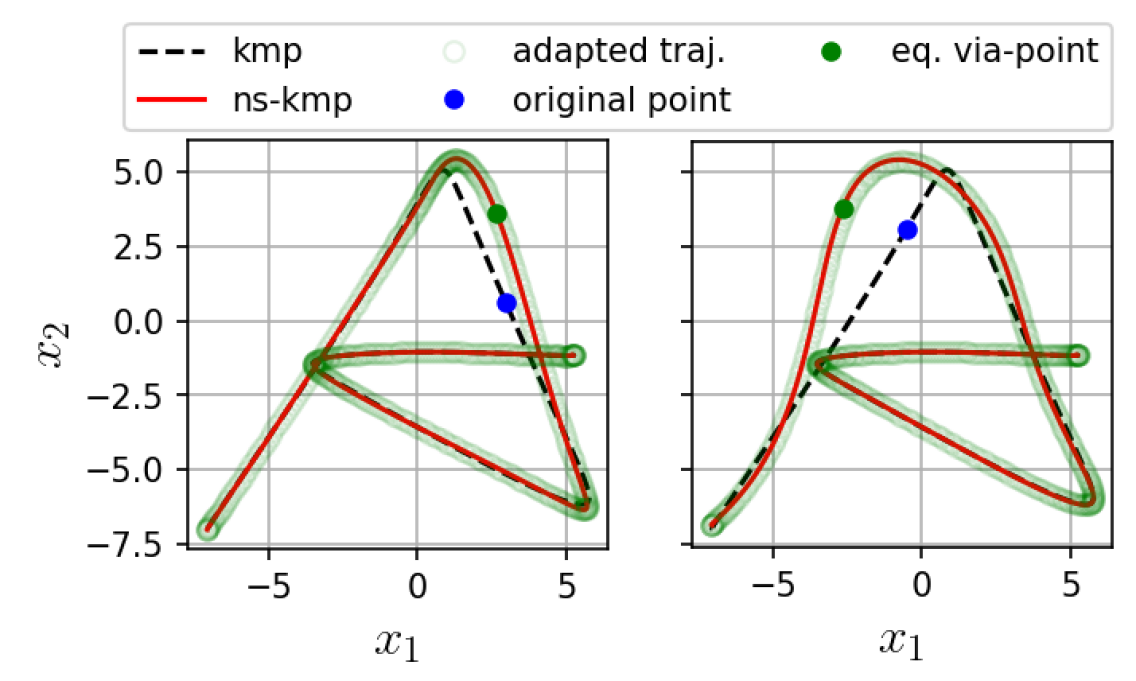

IV-C Equivalence to classical KMP adaptation

An important final property of NS-KMP is that null space modulation is equivalent to classical KMP modulation [14] given the appropriate via-point. For the sake of the explanation let us consider an input where a null space reference was applied, resulting in an observation after the null space projector. We have concluded empirically that by treating the pair as a via-point in the classical KMP framework [14], and adding it to the reference trajectory distribution with a low covariance, generates the same trajectory as the null-space-generated one. Figure 2 illustrates this property. This result suggests that NS-KMP is, indeed, a computationally-efficient tool for via-point-based motion adaptation.

V Experiment I

We first report results on the re-planning of a 2D trajectory, learned from demonstrations, after an obstacle appears. We tackle this problem using null space exploration (NS-KMP) or by adding via-points (KMP, ProMP) to the demonstrated trajectory distribution. We compare the time performance of KMP, NS-KMP and ProMP.

V-A Setup

We use the handwritten letter dataset available in [29], particularly the dataset for letter ‘A’, to generate a trajectory distribution from demonstrations. It contains 10 demonstrations with time and 2D position , which we use to train a mixture model with 8 components and retrieve a reference trajectory distribution using Gaussian mixture regression. Moreover and . In both KMP and NS-KMP implementations we use hyperparameters , while for ProMP we use 20 basis functions. The implementation was done in Python 3.8 running on an 8-core 11-Gen Intel Core i7-11850H @ 2.50GHz processor.

We use the learned models to simulate a robot planning a trajectory within a finite-horizon. At time , the robot uses KMP, NS-KMP or ProMP to compute a trajectory for the horizon . We use with increments between time steps. At a randomly chosen time instant in the horizon, an obstacle (a circle with radius 0.5) with position uniformly sampled from appears (only once) along the planned path. The robot then tests alternative paths looking for one that does not collide with the obstacle. In NS-KMP, this is done by sampling values of input and null space reference and computing the corresponding new trajectory using (15). The sampling interval for the input is and for the two dimensions of (we discuss this range of values in Section VII). In KMP and ProMP, finding alternative paths is done by sampling via-points in the neighborhood of the obstacle and inputs in the same range as for NS-KMP. To efficiently perform the exploration, including sampling null space references and via-points we used TPE [30], particularly the implementation available in the Optuna package [31]. We define a simple objective function to minimize where is the sequence of 2D points in the planned trajectory and and are collision and continuity terms that receive values of 1000 if a collision happens in or the norm between any two consecutive points in exceeds the radius of the obstacle and 0 otherwise. The optimizer returns the first solution with zero cost.

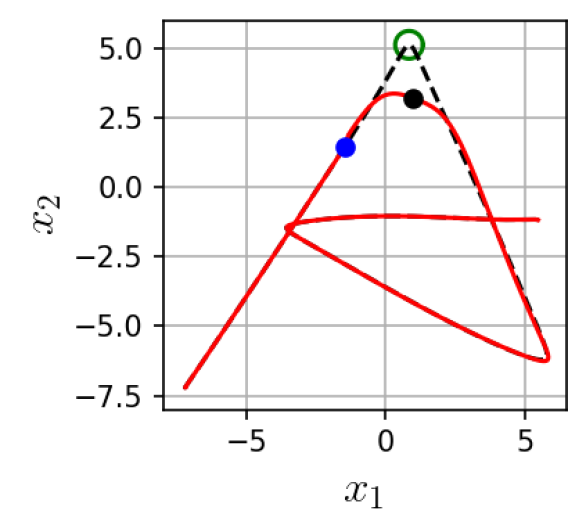

V-B Results using one via-point

Figure 3(a) shows example trajectories obtained from NS-KMP. We observe that the obstacle is avoided correctly in different segments of the trajectory. Table I summarizes the time required by KMP, NS-KMP and ProMP to predict a trajectory from one null space reference or via-point (first row) and to find a solution to avoid the obstacle (second row). Each entry corresponds to the mean and standard deviation of 40 points. In the case of NS-KMP one prediction corresponds to computing , and (15). For KMP it is the time of computing new matrices , and (5), including the inversion. For ProMP it is the time to evaluate the basis functions at the new input, recompute the weight distribution and use it to compute a new trajectory (see [8]). Note that in this experiment we did not allow obstacles to appear at points where the trajectory intersects with itself (see these cases in Fig. 3(b)) since they require two via-points. The prediction times show that the parametric model ProMP was the fastest, closely followed by our non-parametric NS-KMP. Moreover, we observed comparable times between NS-KMP and ProMP to find a good trajectory (second row, 1 via-point (s)), with KMP (first column) taking two orders of magnitude longer.

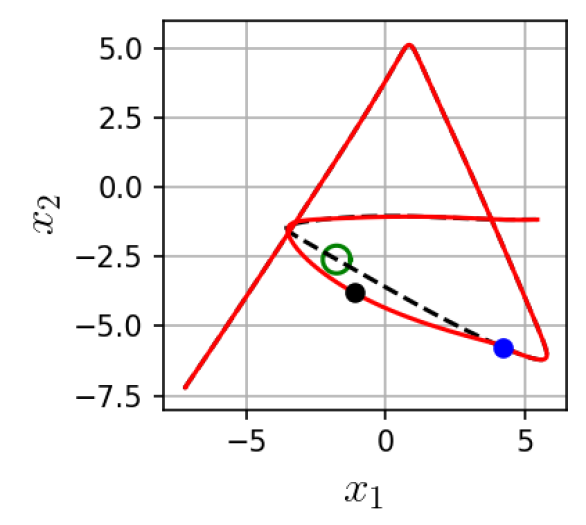

V-C Results for collision avoidance with two via-points

Finally we investigated modulation by more than one via-point. For this we focused on the cases where the obstacle appears at places where the trajectory intersects with itself (Fig. 3(b)). Given the high computational cost of KMP shown in V-B we compare NS-KMP and ProMP only. Figure 3(b) shows that NS-KMP is capable of avoiding obstacles by modulating the planned trajectory with two via-points. As seen in Table I, last row, NS-KMP and ProMP achieved times within the same order of magnitude, with a slight disadvantage to ProMP.

| KMP | NS-KMP (ours) | ProMP | |

|---|---|---|---|

| Prediction (ms) | |||

| 1 via-point (s) | |||

| 2 via-point (s) | - |

VI Experiment II

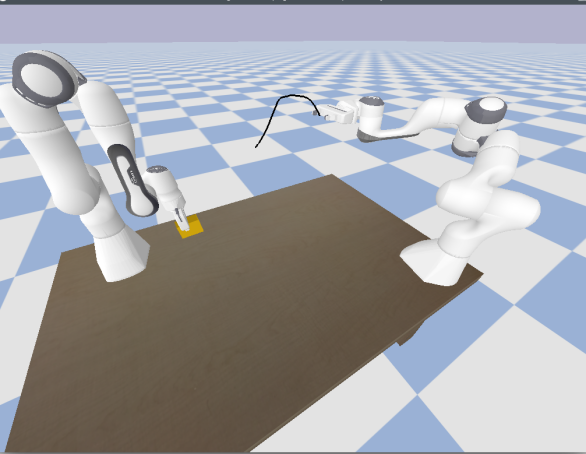

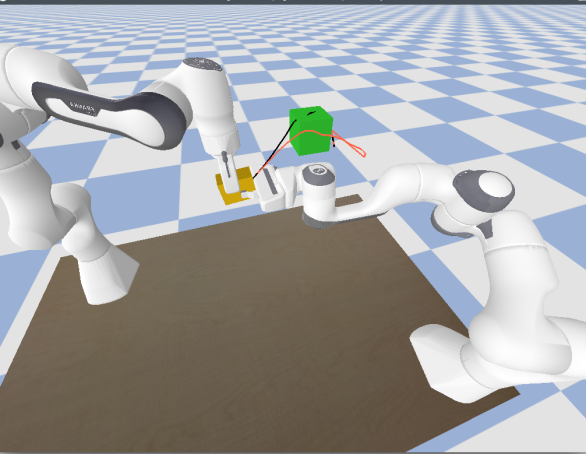

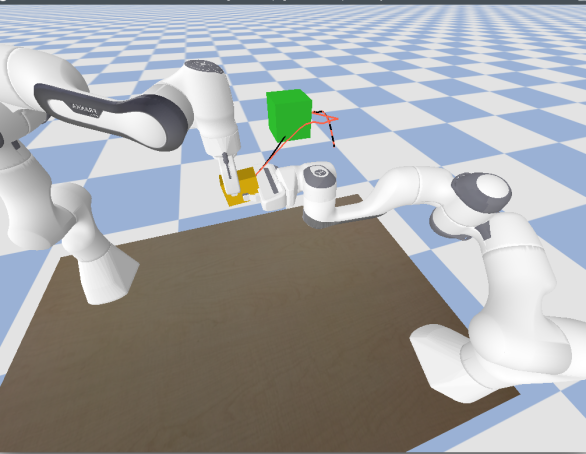

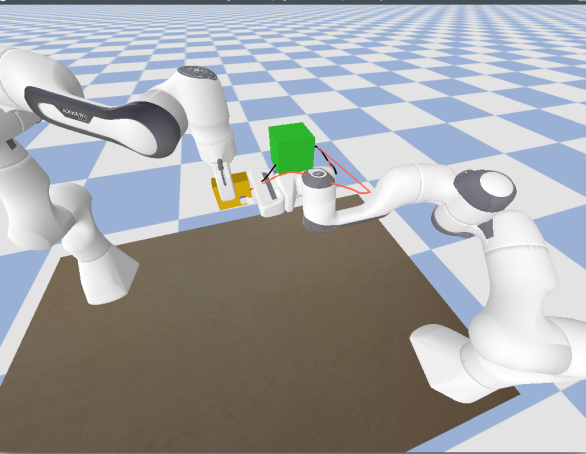

In a second experiment we investigate the ability of NS-KMP to modulate trajectories generated from multi-dimensional inputs. We consider a handover task where the end-effector position of one robot is computed from the one of another robot and adapted after an obstacle appears.

VI-A Setup

In order to train a NS-KMP we used the dataset collected from handover demonstrations in [32], with 5 demonstrations in the form of human hand and robot end-effector positions and . The NS-KMP thus uses and . In order to simulate the human-robot handover task, the data was rescaled to fit the robot workspaces in the pybullet setup depicted in Fig. 4. As illustrated in Fig. 4, the left robot plays the role that the human played in the demonstrations by picking up an object (yellow box) and handing it over to the robot on the right. The latter starts with a planned motion (black line) computed using as input the trajectory planned by the left robot (unavailable in a real scenario with a human, only shown for illustration). In each trial the motion is interrupted by a cube-shaped obstacle with an axial diagonal of that appears randomly (both in time and location) once along the black line. All the NS-KMP parameters were the same as in V. The optimization framework was also the same, however was sampled from the range and the inputs (i.e. the left robot end-effector position) were sampled uniformly from the ones in the reference trajectory distribution. As in [14], we do not use ProMP as baseline here due to the difficulty in parameterizing basis functions for multi-dimensional inputs. Instead, we consider the optimization of Gaussian components in GMM as a baseline [6]. Specifically, we optimize the mean of one randomly chosen Gaussian until the collision cost is zero. We do not evaluate classical KMP in this section as the arguments that caused it to underperform in V-B are still valid (see also the analysis of computational complexity in Section VII-B).

VI-B Results

In 20 trials, the average time to compute a solution with NS-KMP after the obstacle appears was . Fig. 4, top-right and bottom, shows original and adapted trajectories. The GMM baseline yielded .

VII Discussion

VII-A Analysis of experimental results

The results in Section V revealed that our solution, despite not outperforming ProMP ( faster at predicting), computes trajectories given a new desired point at the millisecond level (Table I, first row) with a non-optimized Python implementation. We believe this to be a noteworthy result for a non-parametric approach. Moreover, ProMP and NS-KMP performed at a similar level in finding a 1-via-point solution. However, ProMP is only faster, against for the individual prediction, suggesting that ProMP needs to try more via-points than NS-KMP to find a solution. This could be related to our choice of sampling via-points for ProMP around the obstacle, which may not be as efficient as the covariance-weighted exploration by NS-KMP. The reason for such choice is that we observed that the trajectories generated by ProMP often had arbitrary shapes (seriously violating the structure of the training data) if the search space of the via-point is not bounded or is large. The same reasoning applies to the 2-via-point case where NS-KMP took less time to find successful via-points.

Adaptation of learned multi-dimensional-input skills was a novelty introduced by KMP [14] and the results in Section VI show that NS-KMP provides a fast solution to adapting this type of skills. The GMM baseline was slower and the resulting trajectories were prone to distortions since only one Gaussian was being optimized at a time. The presented times for GMM can thus be seen as a lower bound since optimizing further parameters would require higher amounts of time. Despite the successful adaptation with NS-KMP we verified that, for our choice of hyperparameters, the time to compute a successful trajectory increases when the object appears closer to the handover location due to the decrease in variance (the null space ‘hardens’).

Some additional practical aspects of NS-KMP are worth discussing. As described in V–VI the magnitude of could be as high as 1000 or 2000 depending on the problem. Due to the probabilistic nature of the soft null space projector, one does not know a priori the required magnitude of . It should thus be treated as a hyperparameter to be tuned depending on the problem. Additionally, while covariance-weighted null space exploration seems to alleviate the need to manually define exploration strategies, in some scenarios one might need to violate the priors imposed by the data, e.g. if the object has a much larger size. In other words it may be desirable to ‘soften’ the null space. This can be achieved by manually increasing , particularly in the neighborhood of the concerned region.

VII-B Analysis of computational complexity

Classical KMP [14] predicts the expectation of using (5). When a new desired point described by the mean and covariance is needed, the reference trajectory distribution is concatenated with ,, leading to an extended reference trajectory with length . Consequently, KMP needs to recompute the term since the updated includes , see (3). Note that the dimension of and is and therefore the computational complexity of classical KMP is . Recall that in (15) we only search in the null space, where remains the same and therefore can be computed beforehand in an offline fashion. Therefore, the computation complexity of (15) is , which is significantly faster than classical KMP.

VIII Conclusion

We presented a formulation of kernelized movement primitives with a soft null space projector (NS-KMP). Such projector allows a secondary goal to modulate a primary one, in this case a learned trajectory. This modulation occurs in proportion to the covariance in the data. We leveraged the theoretical properties of this projector, as well as its computational efficiency, in letter-writing and handover tasks to quickly discover demonstration-guided, collision-free motions. NS-KMP, as a non-parametric approach, outperformed classical KMP in terms of computational complexity and performed competitively against the parametric approach ProMP. Moreover, we showed that NS-KMP provides fast adaptation in a fraction of a second in tasks where multi-dimensional input signals drive the robot behavior – an important part of human-robot interaction.

In future work we will leverage the scalability of NS-KMP to solve adaptation problems in higher-dimensional settings, such as humanoid robot control. We will also investigate how to integrate more diversified types of multi-dimensional inputs into the NS-KMP framework such as EMG signals [33]. Finally, we will investigate similar null space projectors for Gaussian processes.

Acknowledgements

This work has received funding from the European Union’s Horizon 2020 Research and Innovation Programme under Grant Numbers 951992 (project VeriDream) and 101070600 (project SoftEnable), as well as under the Marie Skłodowska-Curie grant agreement No 101018395. We would also like to thank Alexander Dietrich for the discussions on the algebraic properties of null space projectors and Antonin Raffin for assisting with Optuna.

References

- [1] A. J. Ijspeert, J. Nakanishi, and S. Schaal, “Movement imitation with nonlinear dynamical systems in humanoid robots,” in Proc. IEEE Intl Conf. on Robotics and Automation (ICRA), 2002, pp. 1398–1403.

- [2] S. Calinon, F. Guenter, and A. Billard, “On learning, representing and generalizing a task in a humanoid robot,” IEEE Transactions on Systems, Man and Cybernetics, Part B. Special issue on robot learning by observation, demonstration and imitation, vol. 37, no. 2, pp. 286–298, 2007.

- [3] S. Calinon, “A tutorial on task-parameterized movement learning and retrieval,” Intelligent Service Robotics, vol. 9, no. 1, pp. 1–29, January 2016.

- [4] Y. Huang, J. Silvério, L. Rozo, and D. G. Caldwell, “Generalized task-parameterized skill learning,” in Proc. IEEE Intl Conf. on Robotics and Automation (ICRA), 2018, pp. 5667–5474.

- [5] F. Stulp and O. Sigaud, “Robot skill learning: From reinforcement learning to evolution strategies,” Paladyn, Journal of Behavioral Robotics, vol. 4, no. 1, pp. 49–61, 2013.

- [6] F. Guenter, M. Hersch, S. Calinon, and A. Billard, “Reinforcement learning for imitating constrained reaching movements,” Advanced Robotics, vol. 21, no. 13, pp. 1521–1544, 2007.

- [7] M. Saveriano, F. Franzel, and D. Lee, “Merging position and orientation motion primitives,” in Proc. IEEE Intl Conf. on Robotics and Automation (ICRA), 2019, pp. 7041–7047.

- [8] A. Paraschos, C. Daniel, J. Peters, and G. Neumann, “Probabilistic movement primitives,” in Advances in Neural Information Processing Systems (NIPS), 2013, pp. 2616–2624.

- [9] R. A. Shyam, P. Lightbody, G. Das, P. Liu, S. Gomez-Gonzalez, and G. Neumann, “Improving local trajectory optimisation using probabilistic movement primitives,” in Proc. IEEE/RSJ Intl Conf. on Intelligent Robots and Systems (IROS), 2019, pp. 2666–2671.

- [10] T. Löw, T. Bandyopadhyay, J. Williams, and P. V. Borges, “PROMPT: Probabilistic motion primitives based trajectory planning.” in Proc. Robotics: Science and Systems (R:SS), Virtual, July 2021.

- [11] M. Zucker, N. Ratliff, A. D. Dragan, M. Pivtoraiko, M. Klingensmith, C. M. Dellin, J. A. Bagnell, and S. S. Srinivasa, “CHOMP: Covariant hamiltonian optimization for motion planning,” The International Journal of Robotics Research, vol. 32, no. 9-10, pp. 1164–1193, 2013.

- [12] M. Kalakrishnan, S. Chitta, E. Theodorou, P. Pastor, and S. Schaal, “STOMP: Stochastic trajectory optimization for motion planning,” in Proc. IEEE Intl Conf. on Robotics and Automation (ICRA), 2011, pp. 4569–4574.

- [13] F. Frank, A. Paraschos, P. van der Smagt, and B. Cseke, “Constrained probabilistic movement primitives for robot trajectory adaptation,” IEEE Trans. on Robotics, vol. 38, no. 4, pp. 2276–2294, 2022.

- [14] Y. Huang, L. Rozo, J. Silvério, and D. G. Caldwell, “Kernelized movement primitives,” International Journal of Robotics Research, vol. 38, no. 7, pp. 833–852, 2019.

- [15] C. M. Bishop, Pattern Recognition and Machine Learning (Information Science and Statistics). Secaucus, NJ, USA: Springer, 2006.

- [16] G. Maeda, M. Ewerton, G. Neumann, R. Lioutikov, and J. Peters, “Phase estimation for fast action recognition and trajectory generation in human–robot collaboration,” The International Journal of Robotics Research, vol. 36, no. 13-14, pp. 1579–1594, 2017.

- [17] Y. Cui, J. Poon, J. V. Miro, K. Yamazaki, K. Sugimoto, and T. Matsubara, “Environment-adaptive interaction primitives through visual context for human–robot motor skill learning,” Autonomous Robots, vol. 43, no. 5, pp. 1225–1240, 2019.

- [18] J. Silvério, Y. Huang, L. Rozo, S. Calinon, and D. G. Caldwell, “Probabilistic learning of torque controllers from kinematic and force constraints,” in Proc. IEEE/RSJ Intl Conf. on Intelligent Robots and Systems (IROS), 2018, pp. 1–8.

- [19] J. Silvério, Y. Huang, L. Rozo, and D. G. Caldwell, “An uncertainty-aware minimal intervention control strategy learned from demonstrations,” in Proc. IEEE/RSJ Intl Conf. on Intelligent Robots and Systems (IROS), 2018, pp. 6065–6071.

- [20] C. E. Rasmussen and C. K. I. Williams, Gaussian processes for machine learning. Cambridge, MA, USA: MIT Press, 2006.

- [21] B. Siciliano and J.-J. Slotine, “A general framework for managing multiple tasks in highly redundant robotic systems,” in Fifth International Conference on Advanced Robotics ‘Robots in Unstructured Environments’, 1991, pp. 1211–1216.

- [22] C. W. Wampler, “Manipulator inverse kinematic solutions based on vector formulations and damped least-squares methods,” IEEE Transactions on Systems, Man, and Cybernetics, vol. 16, no. 1, pp. 93–101, 1986.

- [23] R. Mayorga, K. Ma, and A. Wong, “A robust local approach for the obstacle avoidance of redundant robot manipulators,” in Proc. IEEE/RSJ Intl Conf. on Intelligent Robots and Systems (IROS), vol. 3, 1992, pp. 1727–1734.

- [24] A. S. Deo and I. D. Walker, “Overview of damped least-squares methods for inverse kinematics of robot manipulators,” Journal of Intelligent and Robotic Systems, vol. 14, no. 1, pp. 43–68, 1995.

- [25] A. Dietrich, C. Ott, and A. Albu-Schäffer, “An overview of null space projections for redundant, torque-controlled robots,” The International Journal of Robotics Research, vol. 34, no. 11, pp. 1385–1400, 2015.

- [26] D.-H. Park, H. Hoffmann, P. Pastor, and S. Schaal, “Movement reproduction and obstacle avoidance with dynamic movement primitives and potential fields,” in Proc. IEEE Intl Conf. on Humanoid Robots (Humanoids), 2008, pp. 91–98.

- [27] Y. Huang, J. Silvério, L. Rozo, and D. G. Caldwell, “Hybrid probabilistic trajectory optimization using null-space exploration,” in Proc. IEEE Intl Conf. on Robotics and Automation (ICRA), Brisbane, Australia, May 2018, pp. 7226–7232.

- [28] M. Schneider and W. Ertel, “Robot learning by demonstration with local Gaussian process regression,” in Proc. IEEE/RSJ Intl Conf. on Intelligent Robots and Systems (IROS), 2010, pp. 255–260.

- [29] S. Calinon and D. Lee, Learning Control. Dordrecht: Springer Netherlands, 2019, pp. 1261–1312.

- [30] J. Bergstra, R. Bardenet, Y. Bengio, and B. Kégl, “Algorithms for hyper-parameter optimization,” in Advances in Neural Information Processing Systems (NIPS), J. Shawe-Taylor, R. Zemel, P. Bartlett, F. Pereira, and K. Weinberger, Eds., vol. 24. Curran Associates, Inc., 2011.

- [31] T. Akiba, S. Sano, T. Yanase, T. Ohta, and M. Koyama, “Optuna: A next-generation hyperparameter optimization framework,” in Proceedings of the 25rd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, 2019.

- [32] J. Silvério, Y. Huang, F. J. Abu-Dakka, L. Rozo, and D. G. Caldwell, “Uncertainty-aware imitation learning using kernelized movement primitives,” in Proc. IEEE/RSJ Intl Conf. on Intelligent Robots and Systems (IROS), 2019, pp. 90–97.

- [33] J. Vogel, A. Hagengruber, M. Iskandar, G. Quere, U. Leipscher, S. Bustamante, A. Dietrich, H. Hoppner, D. Leidner, and A. Albu-Schaffer, “EDAN: An EMG-controlled daily assistant to help people with physical disabilities,” in Proc. IEEE/RSJ Intl Conf. on Intelligent Robots and Systems (IROS), 2020, pp. 4183–4190.