T. Cui, X. Xiang

A Neural Network with Plane Wave Activation for Helmholtz Equation

Abstract

This paper proposes a plane wave activation based neural network (PWNN) for solving the Helmholtz equation, the basic partial differential equation describing wave propagation, e.g. acoustic, electromagnetic and seismic waves. Unlike traditional activation based neural networks (TANN) or sine activation based neural networks (SIREN), which are used for general partial differential equations, we introduce a complex activation function, the plane wave, which is the basic component of the solution of the Helmholtz equation. A simple derivation further shows that PWNN is a generalization of the plane wave partition of unity method (PWPUM) that additionally learns a basis with both amplitude and direction to better characterize the potential solution. We first investigate the performance on a problem whose solution is an integral of plane waves over all known directions. The experiments demonstrate that PWNN works much better than TANN and SIREN across architectures and numbers of training samples, i.e. the plane wave activation indeed enhances the ability of the network to represent solutions of the Helmholtz equation, and that PWNN is competitive with PWPUM, e.g. it has the same convergence order but smaller relative errors. Furthermore, we focus on a more practical problem whose solution consists only of plane waves in a few unknown directions, and find that PWNN works much better than PWPUM in this case. Unlike PWPUM, which uses plane wave basis functions with fixed directions, PWNN learns a group of optimized plane wave basis functions that better predict the unknown directions of the solution. The proposed approach may provide new insights into applying deep learning to the Helmholtz equation.

keywords:

Helmholtz equation, deep learning, finite element method, plane wave method

MSC: 65D15, 65M12, 65M15

1 Introduction

We propose and study a plane wave activation based neural network (PWNN) for solving the Helmholtz boundary value problem

$$\Delta u + k^2 u = 0 \quad \text{in } \Omega, \qquad u = g \quad \text{on } \partial\Omega, \tag{1}$$

where $k > 0$ is the wavenumber, $g$ is the boundary data and $\Omega$ is a Lipschitz domain. We remark that the proposed approach is a basic technique for the Helmholtz equation. To keep the discussion clear, we only consider this simple case, i.e. the Helmholtz boundary value problem (1). We will develop PWNN for more general Helmholtz problems, e.g. unbounded Helmholtz problems and Helmholtz scattering problems, in the future.

As a fundamental partial differential equation (PDE), the Helmholtz equation (1) appears in diverse scientific and engineering applications, since its solutions represent wave propagation phenomena, e.g. acoustic, electromagnetic and seismic waves. Numerical methods for the Helmholtz equation have attracted great interest from computational mathematicians, and many of them, such as the finite element method [24, 18], the finite difference method [21], the boundary element method [23, 27] and the plane wave method [2, 6, 13, 15], have been developed.

Since deep learning has achieved great success in engineering, researchers have become increasingly interested in using neural networks to solve PDEs, and many papers on different aspects of this topic have appeared in recent years. The deep Ritz method [11] uses the variational formulation of elliptic PDEs and takes the resulting energy as the objective function for optimizing the network parameters. [32] converts the problem of finding the weak solution of a PDE into an operator norm minimization problem induced by the weak formulation, and parameterizes the weak solution and the test function as the primal and adversarial networks, respectively; this method is called the weak adversarial network (WAN). Physics-informed neural networks (PINN) [25] include the initial and boundary conditions as penalty terms in the loss function. Unlike the variational approaches, PINN needs high-order derivatives of the network, which are computed in TensorFlow by backpropagation and automatic differentiation; it gives highly accurate solutions for both forward and inverse problems. To deal with noisy data, [31] proposes Bayesian physics-informed neural networks (B-PINN), which use Hamiltonian Monte Carlo or variational inference as the posterior estimator; compared with PINN, B-PINN obtains more accurate predictions when the noise is large. [28] proposes the deep Galerkin method, which avoids the excessive cost of computing high-order derivatives of the network by a Monte Carlo approximation. [29] proposes sinusoidal representation networks (SIREN), which use sin(x) as the activation function and perform better than other activation functions such as Sigmoid, Tanh or ReLU on the Poisson, Helmholtz and wave equations.
In addition, deep learning tools have recently been applied to various practical PDE-related problems [26, 14, 5]. In summary, these existing works all focus on designing the objective for solving general PDEs with neural networks, rather than on developing a new network for a specific PDE.

Motivated by the traditional activation based neural network (TANN), which uses Sigmoid, Tanh or ReLU as the activation function [25], we introduce PWNN, which uses the plane wave function as the activation function, since the plane wave is the basic component of the solution of the Helmholtz equation. In addition, we find that PWNN with one hidden layer is a generalized form of the plane wave partition of unity method (PWPUM) [2, 6, 13, 15]. In plane wave methods, the directions of the basis functions strongly affect the accuracy of the solution, yet in PWPUM they can only be chosen uniformly. [4, 3] propose numerical microlocal analysis (NMLA) to estimate the local directions of the true solution. The ray-FEM method [12] first solves a low-frequency problem with the standard finite element method, then uses NMLA to estimate the local directions of the low-frequency numerical solution, and finally uses them as the directions of the plane wave basis functions to solve the high-frequency problem. [20] investigates a least-squares method (LSM) that approximates the Helmholtz equation by minimizing an energy functional, using either plane waves or Bessel functions as the basis. [1] proposes a wave tracking strategy (LSM-WT): in each element, the same rotation angle is added to all uniformly distributed plane wave directions, and the error is effectively reduced by solving the resulting double minimization problem for the energy functional of [20]. Based on this idea, we construct this double minimization problem with a neural network, replacing the integrals by Monte Carlo estimates, and call the resulting method PWPUM-WT. Unlike PWPUM, which uses plane wave basis functions with fixed directions, PWNN combines several plane waves with learned amplitudes and directions through the plane wave activation. Since the direction of each basis function can be rotated by a different angle, PWNN is more flexible than PWPUM-WT.
Our experiments show that PWNN is more efficient than TANN and SIREN and more accurate than PWPUM and PWPUM-WT.

2 From TANN to PWNN

This section introduces the plane wave activation based neural network (PWNN) from the viewpoint of the traditional activation based neural network (TANN). We first introduce a well-known neural network framework for solving PDEs, the physics-informed neural network [25]. The network consists of $L+1$ layers, where layer $0$ is the input layer and layer $L$ is the output layer; layers $1, \dots, L-1$ are the hidden layers. Every hidden layer applies an activation function, while the output layer does not. As is well known, the activation function can be Sigmoid, Tanh (hyperbolic tangent) or ReLU (rectified linear unit).

We denote by $(n_0, n_1, \dots, n_L)$ a list of integers, where $n_0$ and $n_L$ are the lengths of the input and output signals of the network. Define the affine maps $T_l : \mathbb{R}^{n_{l-1}} \to \mathbb{R}^{n_l}$, $1 \le l \le L$,

$$T_l(x) = W_l x + b_l,$$

where $W_l \in \mathbb{R}^{n_l \times n_{l-1}}$ and $b_l \in \mathbb{R}^{n_l}$. A deep fully connected feedforward neural network can then be written as the composite function $u_\theta : \mathbb{R}^{n_0} \to \mathbb{R}^{n_L}$,

$$u_\theta(x) = T_L \circ \sigma \circ T_{L-1} \circ \cdots \circ \sigma \circ T_1(x),$$

where $\sigma$ is the activation function and $\theta = \{W_l, b_l\}_{l=1}^{L}$ collects all parameters. We only consider $n_0 = 2$ and $n_L = 1$, i.e. a two-dimensional scalar problem for the Helmholtz equation (1).

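Solving a general PDE

$$\mathcal{L}u = f \quad \text{in } \Omega, \qquad \mathcal{B}u = g \quad \text{on } \partial\Omega, \tag{2}$$

with a DNN $u_\theta$ leads to a physics-informed minimization problem whose objective consists of two terms:

$$\min_{\theta}\ \frac{1}{N_\Omega}\sum_{i=1}^{N_\Omega}\big|\mathcal{L}u_\theta(x_i) - f(x_i)\big|^2 + \lambda\,\frac{1}{N_{\partial\Omega}}\sum_{j=1}^{N_{\partial\Omega}}\big|\mathcal{B}u_\theta(y_j) - g(y_j)\big|^2, \tag{3}$$

where $\{x_i\}_{i=1}^{N_\Omega} \subset \Omega$ and $\{y_j\}_{j=1}^{N_{\partial\Omega}} \subset \partial\Omega$ are the collocation points inside the domain and on its boundary, respectively. $\lambda > 0$ is a hyperparameter; choosing $\lambda$ so that the interior and boundary errors at initialization are of the same order usually gives better results. The domain term and the boundary term enforce that the optimized network satisfies $\mathcal{L}u_\theta = f$ and $\mathcal{B}u_\theta = g$, respectively. Stochastic gradient descent [19] with minibatches is an effective and efficient choice for solving the optimization problem (3).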

For the Helmholtz equation, since the solution is complex-valued, we slightly modify the network architecture by letting the output layer $T_L$ have complex weights and biases; complex-valued neural networks [30] could also be used directly in the future. With this definition, $u_\theta : \mathbb{R}^2 \to \mathbb{C}$. Now we let $\mathcal{L}u = \Delta u + k^2 u$, $f = 0$ and $\mathcal{B}u = u$, and let the activation function $\sigma$ be Tanh or the plane wave function in the minimization problem (3), which yields a TANN- or PWNN-based numerical method for the Helmholtz equation (1), respectively.
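The composite map $u_\theta = T_L \circ \sigma \circ \cdots \circ \sigma \circ T_1$ with a complex output layer can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' code: the names `init_params` and `forward` and the Gaussian initialization are assumptions. Only Tanh (TANN) is shown, because how the plane wave activation is chained through several hidden layers is not spelled out here.

```python
import numpy as np

def init_params(layer_sizes, rng):
    """Illustrative Gaussian initialization for layer sizes (n_0, ..., n_L).

    Hidden layers are real; the last affine map T_L gets complex weights and
    bias so that u_theta maps R^{n_0} into C^{n_L}.
    """
    params = []
    num_maps = len(layer_sizes) - 1
    for l in range(1, len(layer_sizes)):
        fan_in = layer_sizes[l - 1]
        shape = (layer_sizes[l], fan_in)
        W = rng.standard_normal(shape) / np.sqrt(fan_in)
        b = np.zeros(layer_sizes[l])
        if l == num_maps:  # complex output layer T_L
            W = W + 1j * rng.standard_normal(shape) / np.sqrt(fan_in)
            b = b.astype(complex)
        params.append((W, b))
    return params

def forward(params, x, sigma=np.tanh):
    """Evaluate u_theta(x) = T_L(sigma(T_{L-1}(... sigma(T_1(x))))) row-wise.

    x has shape (batch, n_0); the result has shape (batch, n_L).
    """
    h = x
    for W, b in params[:-1]:
        h = sigma(h @ W.T + b)  # hidden layer: affine map followed by activation
    W_out, b_out = params[-1]
    return h @ W_out.T + b_out  # output layer: affine map only, no activation
```

For example, `forward(init_params([2, 20, 20, 1], np.random.default_rng(0)), x)` evaluates a TANN with two hidden layers of 20 units at points `x` of shape `(batch, 2)` and returns complex values.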

A basic and important question for numerically solving PDEs is whether the network $u_\theta$ can approximate the solution of the PDE. A well-known answer is the universal approximation property (UAP): if the solution is bounded and continuous, then $u_\theta$ can approximate it to any desired accuracy, given a sufficiently large number of hidden neurons [17, 9, 16]. Note that this result requires an assumption on the activation function, namely that $\sigma$ is bounded and monotonically increasing. However, it is easy to check that the plane wave activation does not satisfy this assumption. Whether PWNN has the UAP is left as an open theoretical problem for future research.

3 From PWNN to PWPUM

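In this section, we bridge PWNN and the plane wave partition of unity method (PWPUM), a classical numerical method with well-established complexity and approximation analysis. Consider a simpler PWNN with one hidden layer of $n$ units, where the $j$-th hidden unit has weight $w_j \in \mathbb{R}^2$ and bias $\beta_j \in \mathbb{R}$ and the output layer has complex weights $c_j$ and no bias. With the plane wave activation, the output function has the form

$$u_\theta(x) = \sum_{j=1}^{n} c_j\, e^{\,i k (w_j \cdot x + \beta_j)}. \tag{4}$$

Since the factor $e^{ik\beta_j}$ can be absorbed into $c_j$, we can drop $\beta_j$ in (4) and obtain the equivalent output formulation

$$u_\theta(x) = \sum_{j=1}^{n} c_j\, e^{\,i k\, w_j \cdot x}, \tag{5}$$

with $\theta = \{c_j, w_j\}_{j=1}^{n}$. Thus the parameters $\theta$ of a PWNN with one hidden layer are determined by solving

$$\min_{\theta}\ \int_\Omega \big|\Delta u_\theta + k^2 u_\theta\big|^2\,dx + \lambda \int_{\partial\Omega} \big|u_\theta - g\big|^2\,ds. \tag{6}$$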

Here we write the loss function in integral form; in the neural network, the integrals are actually replaced by Monte Carlo estimates. The standard finite element method and other methods also incur numerical integration errors, and this part of the error is small unless very high accuracy is pursued, e.g. a relative error below 1e-8.
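For the one-hidden-layer output (5), the Laplacian is available in closed form: $\Delta e^{ik w\cdot x} = -k^2|w|^2 e^{ik w\cdot x}$, so each plane wave contributes $k^2(1-|w_j|^2)\,c_j e^{ik w_j\cdot x}$ to the residual of (6), which vanishes exactly when $|w_j| = 1$. The sketch below evaluates (5) and a sample-mean (Monte Carlo) estimate of (6). It is an illustration under assumptions: the function names are hypothetical, and a PINN implementation would normally obtain the Laplacian by automatic differentiation instead.

```python
import numpy as np

def pwnn_one_layer(c, W, k, x):
    """Output (5): u(x) = sum_j c_j exp(i k w_j . x).

    c: (n,) complex amplitudes, W: (n, 2) real weights w_j, x: (m, 2) points.
    """
    return np.exp(1j * k * (x @ W.T)) @ c

def helmholtz_residual(c, W, k, x):
    """Delta u + k^2 u for the output (5), computed analytically.

    Delta exp(i k w.x) = -k^2 |w|^2 exp(i k w.x), so each plane wave
    contributes k^2 (1 - |w_j|^2) c_j exp(i k w_j . x).
    """
    factor = k**2 * (1.0 - np.sum(W**2, axis=1))
    return np.exp(1j * k * (x @ W.T)) @ (c * factor)

def monte_carlo_loss(c, W, k, x_in, x_bd, g_bd, lam=1.0):
    """Sample-mean estimate of (6).

    The measures |Omega| and |dOmega| only rescale the two terms, so they are
    absorbed into lam and an overall constant.
    """
    r_in = helmholtz_residual(c, W, k, x_in)
    r_bd = pwnn_one_layer(c, W, k, x_bd) - g_bd
    return np.mean(np.abs(r_in) ** 2) + lam * np.mean(np.abs(r_bd) ** 2)
```

Using the closed-form residual avoids second derivatives entirely for the one-hidden-layer case; the test below checks it against a five-point finite-difference Laplacian.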

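We now introduce the plane wave partition of unity method (PWPUM) [2, 6, 13, 15]. For the Helmholtz boundary value problem (1) with wavenumber $k$ and $\Omega \subset \mathbb{R}^2$, let

$$\varphi_j(x) = e^{\,i k\, d_j \cdot x}, \qquad d_j = (\cos\vartheta_j, \sin\vartheta_j), \qquad \vartheta_j = \frac{2\pi (j-1)}{n}, \qquad j = 1, \dots, n. \tag{7}$$

PWPUM seeks a numerical solution of the form

$$u_h(x) = \sum_{j=1}^{n} c_j\, \varphi_j(x), \tag{8}$$

where $c = (c_1, \dots, c_n)^T \in \mathbb{C}^n$ are the parameters to be optimized. We denote by $V_n$ the discrete function space of the form (8). Instead of directly solving a physics-informed optimization problem (3) as in PWNN, PWPUM finds $u_h \in V_n$ such that, for all $v \in V_n$,

$$\int_\Omega (\Delta u_h + k^2 u_h)\,\overline{(\Delta v + k^2 v)}\,dx + \lambda \int_{\partial\Omega} (u_h - g)\,\overline{v}\,ds = 0. \tag{9}$$

By the form of $u_h$ in (8) and the definition of $\varphi_j$ in (7), we have $\Delta u_h + k^2 u_h = 0$, so the first (volume) integral in (9) vanishes. Since the Helmholtz operator and the discrete space $V_n$ are both linear, we can take the test functions $v = \varphi_l$, $l = 1, \dots, n$. Finding $u_h$ satisfying (9) then amounts to solving the linear system $A c = b$ with $A = (a_{lj}) \in \mathbb{C}^{n \times n}$ and

$$a_{lj} = \int_{\partial\Omega} \varphi_j\,\overline{\varphi_l}\,ds, \qquad b_l = \int_{\partial\Omega} g\,\overline{\varphi_l}\,ds.$$

One may check that this linear system is exactly the normal equation of the problem

$$\min_{c \in \mathbb{C}^n}\ \int_{\partial\Omega} |u_h - g|^2\,ds. \tag{10}$$

Since the basis functions of PWPUM are plane waves whose wavenumber is exactly $k$, the interior residual is identically zero, so this optimization problem is independent of $\lambda$.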
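A minimal NumPy sketch of PWPUM on a single element follows. It assumes that the boundary integrals in (10) are replaced by sums over boundary sample points, so `np.linalg.lstsq` solves the sampled counterpart of the normal equation $Ac = b$. The helper names are hypothetical.

```python
import numpy as np

def plane_wave_matrix(k, angles, x):
    """Phi[i, j] = exp(i k d_j . x_i) with d_j = (cos a_j, sin a_j), cf. (7)."""
    d = np.stack([np.cos(angles), np.sin(angles)], axis=1)
    return np.exp(1j * k * (x @ d.T))

def pwpum_fit(k, n, x_bd, g_bd):
    """Discrete version of (10): least-squares fit of g on boundary samples.

    Uses n uniformly spaced directions 2*pi*(j-1)/n, j = 1..n, and returns
    (c, angles) so that u_h(x) = plane_wave_matrix(k, angles, x) @ c.
    """
    angles = 2 * np.pi * np.arange(n) / n
    Phi = plane_wave_matrix(k, angles, x_bd)
    c, *_ = np.linalg.lstsq(Phi, g_bd, rcond=None)
    return c, angles
```

Only boundary samples are needed, which mirrors the observation above that the interior term drops out; if the exact solution is itself one of the basis plane waves, the fit recovers it to rounding error.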

In PWPUM, the directions of the plane wave basis functions are chosen uniformly because no prior information about the solution is available. Since any direction may occur in the exact solution, PWPUM may need many basis functions to reach the required accuracy. To let PWPUM adapt its directions, [1] proposes a wave tracking strategy (LSM-WT): in each element, the same rotation angle is added to all uniformly distributed plane wave directions. Applying this wave tracking strategy on a single element, the solution form (8) becomes

$$u_h(x) = \sum_{j=1}^{n} c_j\, e^{\,i k\, d_j(\alpha) \cdot x}, \tag{11}$$

where

$$d_j(\alpha) = \big(\cos(\vartheta_j + \alpha),\ \sin(\vartheta_j + \alpha)\big), \qquad j = 1, \dots, n,$$

and the optimization problem (10) becomes

$$\min_{c \in \mathbb{C}^n,\ \alpha}\ \int_{\partial\Omega} |u_h - g|^2\,ds. \tag{12}$$

Moreover, if we restrict $w_j$ in (5) to $d_j(\alpha)$, we obtain exactly PWPUM-WT: the output (5) then coincides with $u_h$ in (11), with $\theta = \{c, \alpha\}$.
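PWPUM-WT adds a single shared rotation angle $\alpha$ to PWPUM. How the outer minimization over $\alpha$ in (12) is carried out is not detailed here (the text only says a neural network with Monte Carlo sampling is used), so the sketch below substitutes a plain grid search over $\alpha$ with an inner least-squares solve for $c$, purely as an illustration. The function names and the grid size `num_alpha` are hypothetical.

```python
import numpy as np

def plane_wave_matrix(k, angles, x):
    """Phi[i, j] = exp(i k d_j . x_i) with d_j = (cos a_j, sin a_j)."""
    d = np.stack([np.cos(angles), np.sin(angles)], axis=1)
    return np.exp(1j * k * (x @ d.T))

def pwpum_wt_fit(k, n, x_bd, g_bd, num_alpha=64):
    """Sketch of (12): minimize the boundary misfit over c and one rotation alpha.

    Assumption: alpha is found by brute-force search on a grid of
    [0, 2*pi/n); rotating all n uniform directions by 2*pi/n only permutes
    them, so this interval covers every distinct configuration. For each
    alpha the inner problem in c is the linear least-squares problem (10).
    """
    base = 2 * np.pi * np.arange(n) / n
    best = (np.inf, None, None)
    for alpha in np.linspace(0.0, 2 * np.pi / n, num_alpha, endpoint=False):
        Phi = plane_wave_matrix(k, base + alpha, x_bd)
        c, *_ = np.linalg.lstsq(Phi, g_bd, rcond=None)
        misfit = np.linalg.norm(Phi @ c - g_bd)
        if misfit < best[0]:
            best = (misfit, alpha, c)
    return best[1], best[2]
```

The single extra degree of freedom is shared by all basis functions, which is exactly why PWNN, with one direction per unit, is the more flexible model.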

Comparing PWNN (5), PWPUM (8) and PWPUM-WT (11) shows that PWPUM-WT is a generalization of PWPUM, and PWNN is in turn a generalization of PWPUM-WT. Unlike PWPUM, which uses plane wave basis functions with the fixed directions (7), PWNN learns a group of optimized plane waves in (5) with both the amplitude $|w_j|$ and the direction $w_j/|w_j|$ learned. The approximation analysis of PWPUM is well established (see Theorem 3.9 of [15]), and the optimization problem (6) of PWNN is a generalization of that of PWPUM (10): every PWPUM solution is also an admissible PWNN output. Hence, in theory, the approximation error of PWNN with one hidden layer is no larger than that of PWPUM. We could also learn only the directions by adding a regularization term that keeps $|w_j|$ close to 1 to the minimization objective (3). However, motivated by the dispersion correction technique [7, 8], i.e. using a different wavenumber in the discretization scheme may reduce the pollution effect [10] of Helmholtz problems with high wavenumber, we do not add this regularization term.

4 Experiments

We experimentally investigate the performance of PWNN in two settings: the exact solution of equation (1) is a combination of plane waves with either all known directions (KD) or unknown directions (UD). The UD problem is more common in practice. For instance, for a Helmholtz scattering problem with complicated scatterers, i.e. acoustic wave propagation in a complicated medium, one cannot expect to predict the directions of multiply reflected waves in advance. Since the sine activation based neural network (SIREN) performs better than the traditional activation based neural network (TANN) in some cases, we also compare PWNN with SIREN. The experimental results on problems KD and UD show that: PWNN works much better than TANN and SIREN, i.e. the plane wave activation indeed helps for the Helmholtz equation; PWPUM-WT has limited advantages over PWPUM, i.e. adding a single rotation-angle degree of freedom is not enough; and PWNN is competitive with PWPUM on problem KD but outperforms it on problem UD, i.e. PWNN generalizes PWPUM and is more practical. We first introduce the experimental setting.

Experimental setting. For simplicity, all hidden layers of a network have the same number of units, so each architecture below is described by the number of hidden layers and the number of units per layer. We use Tanh as the activation function in TANN. In both TANN and PWNN, we use the limited-memory BFGS (L-BFGS) algorithm [22] to update the parameters. L-BFGS is a variant of the quasi-Newton method BFGS: BFGS stores an approximation of the Hessian matrix at every step, which requires a lot of memory in high-dimensional problems, whereas L-BFGS keeps only the iteration information of the most recent steps to reduce storage. Here we set . The stopping criterion of the inner iteration is , or exceeding the maximum number of allowed iterations, set to 50000 here. $N_\Omega$ and $N_{\partial\Omega}$ denote the numbers of training points inside the domain and on the boundary. The interior training points are selected randomly, while the boundary training points are uniformly spaced. The test points used to estimate the relative errors lie on a uniform 100 × 100 grid (10000 points in total). We remark that this paper does not discuss the computational cost in detail, since every numerical example takes at most several minutes to reach a satisfactory result. A comparison of the computational cost of TANN, PWNN and PWPUM for a 3D Helmholtz problem is left for future work.
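The limited-memory idea can be made concrete with the textbook two-loop recursion, which applies the inverse-Hessian approximation built from the last $m$ stored pairs $(s_i, y_i)$ without ever forming a matrix. The sketch below is a minimal stand-in, not the library used by the authors: it uses simple Armijo backtracking rather than the Wolfe line search typical of production implementations, and the memory size `m` and tolerance `tol` are hypothetical (the paper's values are not given here).

```python
import numpy as np

def lbfgs_direction(grad, s_list, y_list):
    """Two-loop recursion: return -H grad, where H is the L-BFGS inverse-Hessian
    approximation built only from the stored pairs (oldest first)."""
    q = grad.copy()
    history = []
    for s, y in zip(reversed(s_list), reversed(y_list)):  # newest to oldest
        rho = 1.0 / y.dot(s)
        a = rho * s.dot(q)
        q -= a * y
        history.append((rho, a))
    if s_list:  # scaled identity as the initial inverse Hessian
        q *= s_list[-1].dot(y_list[-1]) / y_list[-1].dot(y_list[-1])
    for (s, y), (rho, a) in zip(zip(s_list, y_list), reversed(history)):  # oldest to newest
        b = rho * y.dot(q)
        q += (a - b) * s
    return -q

def lbfgs_minimize(f, grad_f, x0, m=10, max_iter=1000, tol=1e-6):
    """Minimal L-BFGS with Armijo backtracking, keeping only the last m pairs."""
    x = np.asarray(x0, dtype=float).copy()
    g = grad_f(x)
    s_list, y_list = [], []
    for _ in range(max_iter):
        if np.linalg.norm(g) < tol:
            break
        p = lbfgs_direction(g, s_list, y_list)
        t, fx, slope = 1.0, f(x), g.dot(p)
        while f(x + t * p) > fx + 1e-4 * t * slope and t > 1e-12:
            t *= 0.5  # backtrack until sufficient decrease
        x_new = x + t * p
        g_new = grad_f(x_new)
        s, y = x_new - x, g_new - g
        if y.dot(s) > 1e-10 * np.linalg.norm(s) * np.linalg.norm(y):
            # curvature condition keeps H positive definite
            s_list.append(s)
            y_list.append(y)
            if len(s_list) > m:  # discard the oldest pair: limited memory
                s_list.pop(0)
                y_list.pop(0)
        x, g = x_new, g_new
    return x
```

Storage is $O(mN)$ for $N$ parameters instead of the $O(N^2)$ dense matrix of BFGS, which is the saving described above.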

4.1 Problem with known directions (KD)

We consider a square domain $\Omega$. Let the analytical solutions be the circular waves given in polar coordinates by

| (13) |

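where denotes the Bessel function of the first kind of order , and we choose . One can check that $\Delta u + k^2 u = 0$ in $\Omega$; substituting $u$ into (1) gives the boundary data $g$. We denote the numerical solution by $u_\theta$ and define

$$e = \frac{\|u_\theta - u\|_{L^2(\Omega)}}{\|u\|_{L^2(\Omega)}},$$

the relative $L^2$ error between the prediction and the exact solution. We then define the accuracy of a method relative to a baseline method as

$$\mathrm{acc} = \log_{10}\frac{e_{\mathrm{base}}}{e},$$

i.e. the number of orders of magnitude by which the method improves on the baseline. The values in parentheses in Tables 1, 2 and 3 use TANN as the baseline, and those in Tables 6 to 9 use PWPUM.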
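Both error measures are easy to compute on the 100 × 100 test grid. The parenthesized values in Tables 1, 2 and 3 are consistent with $\log_{10}(e_{\mathrm{TANN}}/e)$ (e.g. TANN 4.5e-3 against PWNN 6.9e-6 gives 2.81), and those in Tables 6 to 9 with the same ratio against PWPUM; this formula is inferred from the table entries. The unit-square grid and the test function below are assumptions for illustration only.

```python
import numpy as np

def relative_l2_error(u_pred, u_exact):
    """Relative L2 error on a uniform grid; the equal quadrature weights cancel."""
    return np.linalg.norm(u_pred - u_exact) / np.linalg.norm(u_exact)

def accuracy_gain(err_baseline, err_method):
    """Orders of magnitude gained over the baseline: log10(e_base / e)."""
    return float(np.log10(err_baseline / err_method))

# 100 x 100 uniform test grid on the unit square (the domain is an assumption)
grid = np.linspace(0.0, 1.0, 100)
X, Y = np.meshgrid(grid, grid)
u_exact = np.exp(1j * 10.0 * X)   # a hypothetical exact solution
u_pred = u_exact * (1 + 1e-3)     # a prediction off by 0.1 %
```

A negative accuracy simply means the method is less accurate than the baseline, as happens for SIREN in a few entries of Tables 1 to 3.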

We present the results of solving the Helmholtz equation (1) by TANN, SIREN and PWNN. Tables 1, 2 and 3 show the relative errors between the prediction and the exact solution for different network architectures, with the total numbers of training points fixed; $k$ denotes the wavenumber of the exact solution, and one wavenumber is used per table for all networks. The number of units per layer increases with $k$ (5, 10, 20 in Table 1; 10, 20, 40 in Table 2; 20, 40, 80 in Table 3) to improve the approximation ability of the model and reduce the generalization error at higher $k$. As expected, for a fixed number of hidden layers, more units generally lead to smaller relative errors, and for a fixed number of units, the relative errors tend to decrease as the number of hidden layers increases. PWNN is more accurate than SIREN and TANN with the same architecture, especially with one hidden layer, and its advantage grows with the wavenumber. With the fewest units, a multi-layer PWNN is more accurate than a PWNN with one hidden layer, but with more units one hidden layer performs better. This suggests that a one-hidden-layer PWNN already has sufficient approximation ability in this case, while more hidden layers make the network parameters harder to optimize.

| network | layers \ units | 5 | 10 | 20 |
|---|---|---|---|---|
| TANN | 1 | 1.8e-1 | 8.2e-3 | 4.5e-3 |
| | 2 | 9.5e-2 | 3.4e-3 | 1.8e-3 |
| | 3 | 2.0e-2 | 2.3e-3 | 2.0e-3 |
| | 4 | 1.6e-2 | 2.8e-3 | 3.0e-3 |
| SIREN | 1 | 8.5e-2(0.33) | 6.5e-3(0.10) | 8.3e-3(-0.27) |
| | 2 | 1.4e-2(0.83) | 1.7e-3(0.30) | 3.2e-3(-0.25) |
| | 3 | 6.9e-3(0.46) | 3.0e-3(-0.12) | 1.7e-3(0.07) |
| | 4 | 5.6e-3(0.46) | 2.0e-3(0.15) | 1.1e-3(0.44) |
| PWNN | 1 | 1.8e-1(0.00) | 4.1e-4(1.30) | 6.9e-6(2.81) |
| | 2 | 1.8e-3(1.72) | 4.7e-4(0.86) | 4.4e-4(0.61) |
| | 3 | 2.8e-3(0.85) | 2.9e-4(0.90) | 5.9e-4(0.53) |
| | 4 | 1.8e-3(0.95) | 4.1e-4(0.83) | 6.7e-4(0.65) |

| network | layers \ units | 10 | 20 | 40 |
|---|---|---|---|---|
| TANN | 1 | 4.3e-1 | 8.2e-2 | 2.6e-2 |
| | 2 | 3.9e-2 | 7.1e-3 | 4.0e-3 |
| | 3 | 2.4e-2 | 4.7e-3 | 3.9e-3 |
| | 4 | 2.1e-2 | 4.3e-3 | 2.9e-3 |
| SIREN | 1 | 1.0e-1(0.63) | 5.7e-2(0.16) | 3.1e-2(-0.08) |
| | 2 | 5.7e-3(0.84) | 4.7e-3(0.09) | 4.1e-3(-0.01) |
| | 3 | 3.1e-3(0.89) | 2.6e-3(0.26) | 3.4e-3(0.06) |
| | 4 | 5.0e-3(0.62) | 2.5e-3(0.24) | 1.2e-3(0.38) |
| PWNN | 1 | 1.5e-1(0.46) | 1.7e-5(3.68) | 5.4e-6(3.68) |
| | 2 | 1.1e-3(1.55) | 7.3e-4(0.99) | 4.0e-4(1.00) |
| | 3 | 9.3e-4(1.41) | 2.4e-4(1.29) | 1.2e-3(0.51) |
| | 4 | 4.2e-3(0.70) | 1.4e-4(1.49) | 9.1e-4(0.50) |

| network | layers \ units | 20 | 40 | 80 |
|---|---|---|---|---|
| TANN | 1 | 6.0e-1 | 3.2e-1 | 1.4e-1 |
| | 2 | 6.8e-2 | 3.8e-2 | 3.3e-2 |
| | 3 | 3.5e-2 | 9.5e-3 | 1.0e-2 |
| | 4 | 1.9e-2 | 1.4e-2 | 9.2e-3 |
| SIREN | 1 | 5.2e-1(0.06) | 2.5e-2(1.10) | 2.8e-1(-0.30) |
| | 2 | 2.4e-1(-0.55) | 1.2e-2(0.50) | 2.5e-2(0.12) |
| | 3 | 1.2e-2(0.46) | 9.4e-3(0.00) | 1.5e-2(-0.18) |
| | 4 | 9.6e-3(0.30) | 1.2e-2(0.07) | 5.0e-3(0.26) |
| PWNN | 1 | 1.8e-1(0.52) | 7.9e-7(5.60) | 4.7e-6(4.47) |
| | 2 | 1.4e-2(0.69) | 1.2e-3(1.50) | 1.0e-3(1.52) |
| | 3 | 1.7e-3(1.31) | 5.2e-4(1.26) | 1.5e-3(0.82) |
| | 4 | 1.7e-3(1.04) | 2.2e-4(1.80) | 1.0e-3(0.96) |

| network | boundary \ interior points | 200 | 500 | 1000 | 2000 |
|---|---|---|---|---|---|
| TANN | 204 | 4.5e-1(5.1e-2) | 4.2e-1(7.5e-2) | 3.6e-1(8.0e-2) | 3.9e-1(6.7e-2) |
| | 504 | 4.5e-1(5.4e-2) | 4.0e-1(6.7e-2) | 3.8e-1(8.6e-2) | 3.2e-1(8.6e-2) |
| | 1004 | 4.4e-1(6.1e-2) | 3.9e-1(6.6e-2) | 3.4e-1(7.4e-2) | 3.2e-1(8.3e-2) |
| | 2004 | 4.5e-1(5.7e-2) | 3.8e-1(8.1e-2) | 3.4e-1(9.0e-2) | 3.1e-1(9.4e-2) |
| SIREN | 204 | 5.3e-1(8.1e-2) | 5.2e-1(9.5e-2) | 5.2e-1(1.3e-1) | 4.8e-1(1.3e-1) |
| | 504 | 5.0e-1(1.0e-1) | 4.8e-1(1.3e-1) | 4.7e-1(1.4e-1) | 4.8e-1(1.4e-1) |
| | 1004 | 4.9e-1(1.3e-1) | 4.6e-1(1.4e-1) | 4.7e-1(1.4e-1) | 4.3e-1(1.5e-1) |
| | 2004 | 5.1e-1(6.8e-2) | 5.0e-1(9.2e-2) | 4.9e-1(1.0e-1) | 4.0e-1(1.7e-1) |
| PWNN | 204 | 7.7e-7(2.2e-7) | 7.4e-7(2.5e-7) | 6.9e-7(1.6e-7) | 6.8e-7(1.8e-7) |
| | 504 | 7.4e-7(2.1e-7) | 6.6e-7(2.1e-7) | 6.6e-7(1.8e-7) | 6.5e-7(2.2e-7) |
| | 1004 | 7.9e-7(2.6e-7) | 6.7e-7(1.9e-7) | 6.5e-7(2.2e-7) | 6.3e-7(1.8e-7) |
| | 2004 | 7.7e-7(2.6e-7) | 7.0e-7(1.6e-7) | 6.5e-7(1.9e-7) | 6.7e-7(1.9e-7) |

| network | boundary \ interior points | 200 | 500 | 1000 | 2000 |
|---|---|---|---|---|---|
| TANN | 204 | 1.6e-1(8.2e-2) | 6.6e-2(2.2e-2) | 4.6e-2(1.2e-2) | 4.1e-2(1.2e-2) |
| | 504 | 1.5e-1(7.8e-2) | 5.7e-2(1.6e-2) | 4.5e-2(1.2e-2) | 3.5e-2(9.7e-3) |
| | 1004 | 1.6e-1(1.0e-1) | 5.7e-2(1.7e-2) | 3.8e-2(1.2e-2) | 3.3e-2(1.0e-2) |
| | 2004 | 1.4e-1(5.3e-2) | 5.4e-2(2.0e-2) | 4.2e-2(1.9e-2) | 3.3e-2(9.5e-3) |
| SIREN | 204 | 6.9e-2(3.7e-2) | 3.7e-2(1.2e-2) | 2.8e-2(7.0e-3) | 2.7e-2(9.0e-3) |
| | 504 | 6.0e-2(3.5e-2) | 3.4e-2(1.0e-2) | 2.3e-2(6.7e-3) | 2.4e-2(7.0e-3) |
| | 1004 | 5.7e-2(2.8e-2) | 3.0e-2(8.0e-3) | 2.2e-2(5.7e-3) | 1.9e-2(6.6e-3) |
| | 2004 | 5.5e-2(2.1e-2) | 2.8e-2(8.7e-3) | 2.2e-2(5.7e-3) | 1.9e-2(5.8e-3) |
| PWNN | 204 | 2.1e-1(2.3e-1) | 2.5e-3(5.7e-4) | 1.8e-3(5.0e-4) | 1.4e-3(7.6e-4) |
| | 504 | 2.2e-1(2.4e-1) | 2.5e-3(5.5e-4) | 1.7e-3(4.0e-4) | 1.4e-3(3.3e-4) |
| | 1004 | 2.2e-1(2.2e-1) | 2.5e-3(4.9e-4) | 1.8e-3(4.0e-4) | 1.3e-3(2.9e-4) |
| | 2004 | 2.3e-1(2.5e-1) | 2.5e-3(4.7e-4) | 1.8e-3(3.5e-4) | 1.4e-3(4.1e-4) |

Single-layer TANN and SIREN perform poorly, while single-layer PWNN outperforms multi-layer PWNN unless the number of units is very small. Next, we fix the architectures of TANN, SIREN and PWNN and compare them under different numbers of training points.

In Tables 4 and 5, we fix the architectures of TANN, SIREN and PWNN and report the relative errors for different numbers of training points. For each setting, we randomly generate 50 groups of interior sample points and report the mean and, in parentheses, the standard deviation of the relative error. In most cases, the influence of the random selection of interior sample points on the error is acceptable. The errors of TANN and SIREN are sensitive to the number of sample points and do not necessarily decrease as it increases, although the overall trend is downward; this makes choosing the number of sample points more difficult. Single-layer PWNN is insensitive to the number of sample points, whereas multi-layer PWNN is sensitive to it: with few interior sample points, the standard deviation of its relative error is large. This is because multi-layer PWNN is more difficult to optimize than single-layer PWNN. Until a more suitable optimization method is found, single-layer PWNN is a better choice than multi-layer PWNN. In the standard finite element method, resolving the wave character of the solution requires a number of DOFs that grows with the wavenumber. Likewise, TANN, SIREN and multi-layer PWNN need a correspondingly large number of interior sample points. In Table 5, with 200 interior sample points, the interior wave propagation is not sufficiently represented, which makes the errors of both TANN and SIREN larger. For single-layer PWNN, however, since it takes the form of a plane wave basis, far fewer interior points suffice for good accuracy. This greatly reduces the computational cost, because the second derivatives must be evaluated at every interior sample point. In fact, in a later example we use single-layer PWNN to solve a problem with a large wavenumber using only a small number of training points, which is difficult for the other networks.
As for multi-layer PWNN, although it can also represent plane wave basis functions, it needs more interior sample points because of the increased optimization difficulty.
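The sampling described in the experimental setting (random interior points, uniformly spaced boundary points) can be sketched as follows. The unit square and the per-side count are assumptions: 51 points per side gives 204 boundary points, the smallest count in Tables 4 and 5, but how the authors actually placed their boundary points is not stated.

```python
import numpy as np

def sample_training_points(n_interior, n_per_side, rng, lo=0.0, hi=1.0):
    """Interior points uniformly at random in (lo, hi)^2 and boundary points
    uniformly spaced along the four sides (each corner appears exactly once)."""
    x_in = rng.uniform(lo, hi, size=(n_interior, 2))
    t = lo + (hi - lo) * np.arange(n_per_side) / n_per_side  # n_per_side points per side
    rev = lo + hi - t                                         # same points, reversed direction
    x_bd = np.concatenate([
        np.stack([t, np.full_like(t, lo)], axis=1),    # bottom: (lo, lo) -> (hi, lo)
        np.stack([np.full_like(t, hi), t], axis=1),    # right:  (hi, lo) -> (hi, hi)
        np.stack([rev, np.full_like(t, hi)], axis=1),  # top:    (hi, hi) -> (lo, hi)
        np.stack([np.full_like(t, lo), rev], axis=1),  # left:   (lo, hi) -> (lo, lo)
    ])
    return x_in, x_bd
```

Redrawing only `x_in` with different seeds while keeping `x_bd` fixed reproduces the "50 groups of interior sample points" protocol used for the means and standard deviations.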

The relative errors of TANN, SIREN and PWNN for different network sizes and wavenumbers $k$ are shown in Figures 1 and 2, with networks of 1 and 2 hidden layers. The x-axis is chosen to observe whether an increased wavenumber can be handled by enlarging the network accordingly. In Figure 1, where $k$ is fixed in each subfigure, PWNN is generally more accurate than TANN and SIREN under the same conditions. In Figure 2, where the activation function is fixed in each subfigure, the accuracy of single-layer PWNN increases with $k$ at the same x-axis value, while that of TANN and SIREN decreases. This indicates that PWNN scales better than TANN and SIREN for Helmholtz equations, and that single-layer PWNN has greater advantages for high-frequency problems.

Figure 4 shows the training process of TANN, SIREN and PWNN. are respectively taken as 1, 2, and for TANN, SIREN and PWNN. It is obvious that the loss of PWNN decreases faster and converges faster than that of TANN and SIREN.

Based on the above experimental results, we conclude that PWNN works much better than TANN and SIREN for solving the Helmholtz equation, across architectures and numbers of training samples.

We now compare the one-hidden-layer PWNN with PWPUM and PWPUM-WT. Here $n$ denotes both the number of units in the hidden layer of PWNN and the number of plane wave basis functions in PWPUM. The relative errors of PWPUM, PWPUM-WT and PWNN for different $n$ are shown in Tables 6 and 7, with the numbers of PWNN training points fixed separately for each of the two wavenumbers of the exact solution (13). As expected, a larger $n$ gives smaller relative errors for PWPUM, PWPUM-WT and PWNN; PWPUM-WT performs better than PWPUM, and PWNN gives the best results among the three methods for the same $n$.

The difference between PWPUM and PWNN is that PWNN learns a group of optimized plane waves. After training PWNN, we can fix the directions in PWPUM (8) to the learned directions and then test with PWPUM whether these directions give smaller relative errors than the uniformly distributed ones; we call this method 'PWPUM with o.d. (optimized directions)'. We compute the numerical solutions in two cases of the exact solution (13). The relative errors of PWPUM, PWPUM-WT, PWNN and 'PWPUM with o.d.' for growing $n$ in these two cases are shown in Figure 5. PWNN has the same convergence order as PWPUM and PWPUM-WT but smaller relative errors. Since 'PWPUM with o.d.' is more accurate than PWPUM and PWPUM-WT, we conclude that the optimized directions are better than uniformly distributed directions, with or without an added rotation angle. These results support that PWNN is competitive with PWPUM on the KD problem.
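'PWPUM with o.d.' reuses the directions of a trained PWNN inside PWPUM. A minimal sketch, assuming the learned weights $w_j$ are normalized to unit directions before reuse, so that every basis function solves the Helmholtz equation exactly with wavenumber $k$ (the text does not say how the amplitudes $|w_j|$ are handled). The helper names are hypothetical, and the "learned" weights in the test are hand-made stand-ins, not trained values.

```python
import numpy as np

def plane_wave_matrix(k, angles, x):
    """Phi[i, j] = exp(i k d_j . x_i) with d_j = (cos a_j, sin a_j)."""
    d = np.stack([np.cos(angles), np.sin(angles)], axis=1)
    return np.exp(1j * k * (x @ d.T))

def directions_from_weights(W):
    """Angles of the learned PWNN weights w_j (rows of W); the amplitudes |w_j|
    are dropped by assumption."""
    return np.arctan2(W[:, 1], W[:, 0])

def pwpum_fixed_directions(k, angles, x_bd, g_bd):
    """PWPUM (8)/(10) with a given set of directions: boundary least squares for c."""
    Phi = plane_wave_matrix(k, angles, x_bd)
    c, *_ = np.linalg.lstsq(Phi, g_bd, rcond=None)
    return c
```

When the learned directions match those of the exact solution, the refit is exact up to rounding, while uniformly spaced directions with the same $n$ are not.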

| units / basis functions | PWPUM | PWPUM-WT | PWNN |
|---|---|---|---|
| 5 | 6.1e-1 | 5.8e-1(0.02) | 4.8e-1(0.10) |
| 7 | 5.9e-1 | 5.3e-1(0.05) | 4.3e-1(0.14) |
| 9 | 5.3e-1 | 4.3e-1(0.09) | 2.6e-1(0.31) |
| 11 | 3.5e-1 | 1.8e-1(0.29) | 1.1e-1(0.50) |
| 13 | 2.4e-1 | 6.0e-2(0.60) | 1.6e-2(1.18) |
| 15 | 3.7e-2 | 1.3e-2(0.45) | 1.4e-3(1.42) |
| 17 | 5.8e-3 | 2.0e-3(0.46) | 9.6e-5(1.78) |
| 19 | 2.3e-4 | 2.2e-4(0.02) | 3.1e-5(0.87) |

| units / basis functions | PWPUM | PWPUM-WT | PWNN |
|---|---|---|---|
| 100 | 7.8e-1 | 3.4e-1(0.36) | 1.2e-1(0.81) |
| 105 | 7.8e-1 | 3.3e-1(0.37) | 5.3e-2(1.17) |
| 110 | 4.6e-1 | 1.5e-1(0.49) | 4.0e-4(3.06) |
| 115 | 1.2e-1 | 4.7e-2(0.41) | 3.7e-4(2.51) |
| 120 | 1.9e-2 | 5.4e-3(0.55) | 1.0e-4(2.28) |
| 125 | 3.3e-3 | 5.8e-4(0.76) | 2.7e-5(2.09) |

4.2 Problem with unknown directions (UD)

In practice, the exact solution of (1) usually does not contain all directions. We focus on a more practical problem whose solution consists only of plane waves in a few unknown directions. Consider a square domain $\Omega$ and let the exact solution be

$$u(x) = \sum_{j=1}^{m} e^{\,i k\, d_j \cdot x}, \tag{14}$$

where each $d_j$ is a randomly selected two-dimensional vector satisfying $|d_j| = 1$, $m$ is the number of directions in the exact solution, and the coefficient of each direction is 1. Our training dataset consists of interior and boundary collocation points.
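A UD test problem of the form (14) can be generated as follows. Drawing the angles uniformly in $[0, 2\pi)$ is an assumption (the text only says the unit vectors are random), and the function names are hypothetical; the boundary data $g$ is then just `ud_exact_solution` evaluated at boundary points.

```python
import numpy as np

def random_unit_directions(m, rng):
    """m random unit vectors d_j; uniform angles in [0, 2*pi) are an assumption."""
    theta = rng.uniform(0.0, 2 * np.pi, size=m)
    return np.stack([np.cos(theta), np.sin(theta)], axis=1)

def ud_exact_solution(k, D, x):
    """u(x) = sum_j exp(i k d_j . x) with unit coefficients, cf. (14)."""
    return np.exp(1j * k * (x @ D.T)).sum(axis=1)
```

Because each term is a plane wave with $|d_j| = 1$, the function satisfies $\Delta u + k^2 u = 0$ exactly; the test confirms this with a finite-difference Laplacian.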

To assess our approach, we consider several groups $(m, n)$, where $m$ is the number of directions in the exact solution and $n$ is both the number of units in the hidden layer of PWNN and the number of plane wave basis functions in PWPUM. We consider two wavenumbers $k$ and choose four groups $(m, n)$ for each. In each group, we randomly generate 50 exact solutions with $m$ directions and solve the corresponding Helmholtz problems with PWPUM, PWPUM-WT and PWNN using the corresponding $n$.

| | PWPUM | PWPUM-WT | PWNN |
|---|---|---|---|
| Average | 2.9e-1 | 1.2e-1(0.38) | 3.3e-5(3.94) |
| Max in PWNN | 2.6e-1 | 9.3e-2(0.45) | 3.9e-4(2.82) |
| Min in PWNN | 4.3e-1 | 1.2e-1(0.55) | 1.1e-8(7.59) |

| | PWPUM | PWPUM-WT | PWNN |
|---|---|---|---|
| Average | 1.3e-1 | 1.3e-2(1.00) | 4.4e-6(4.47) |
| Max in PWNN | 7.6e-2 | 7.7e-3(0.99) | 2.7e-5(3.45) |
| Min in PWNN | 4.2e-2 | 1.8e-2(0.37) | 2.5e-8(6.23) |

| | PWPUM | PWPUM-WT | PWNN |
|---|---|---|---|
| Average | 2.4e-1 | 9.5e-2(0.40) | 1.0e-3(2.38) |
| Max in PWNN | 7.0e-2 | 5.2e-2(0.13) | 4.3e-3(1.21) |
| Min in PWNN | 3.0e-1 | 1.6e-1(0.27) | 3.7e-5(3.91) |

| | PWPUM | PWPUM-WT | PWNN |
|---|---|---|---|
| Average | 1.1e-1 | 1.2e-2(0.96) | 4.7e-6(4.37) |
| Max in PWNN | 2.8e-2 | 9.5e-3(0.47) | 4.2e-5(2.82) |
| Min in PWNN | 3.4e-2 | 1.5e-2(0.36) | 9.8e-8(5.54) |

| | PWPUM | PWPUM-WT | PWNN |
|---|---|---|---|
| Average | 5.8e-1 | 4.8e-1(0.08) | 3.0e-1(0.29) |
| Max in PWNN | 6.4e-1 | 4.4e-1(0.16) | 4.8e-1(0.12) |
| Min in PWNN | 7.0e-1 | 4.4e-1(0.20) | 1.7e-3(2.61) |

| | PWPUM | PWPUM-WT | PWNN |
|---|---|---|---|
| Average | 4.4e-1 | 2.8e-1(0.20) | 8.8e-4(2.70) |
| Max in PWNN | 3.8e-1 | 3.0e-1(0.10) | 2.1e-2(0.26) |
| Min in PWNN | 4.9e-1 | 2.6e-1(0.28) | 1.7e-9(8.46) |

| | PWPUM | PWPUM-WT | PWNN |
|---|---|---|---|
| Average | 5.9e-1 | 4.9e-1(0.08) | 3.0e-1(0.29) |
| Max in PWNN | 6.5e-1 | 4.6e-1(0.15) | 6.3e-1(0.01) |
| Min in PWNN | 6.1e-1 | 5.2e-1(0.07) | 7.0e-2(0.94) |

| | PWPUM | PWPUM-WT | PWNN |
|---|---|---|---|
| Average | 4.0e-1 | 2.6e-1(0.20) | 4.3e-3(1.97) |
| Max in PWNN | 4.3e-1 | 2.0e-1(0.33) | 2.4e-2(1.25) |
| Min in PWNN | 4.5e-1 | 2.4e-1(0.27) | 1.8e-7(6.40) |

The relative errors for each group are summarized in Tables 8 and 9. 'Average' is the mean of the 50 relative errors. 'Max in PWNN' (resp. 'Min in PWNN') gives the relative errors of all three methods on the exact solution for which the PWNN error is the largest (resp. smallest) in the group.
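Because 'Max in PWNN' and 'Min in PWNN' pick one run by the PWNN error and then report every method on that run, a PWPUM entry in those rows can be smaller than the PWPUM average (as in the first group of Table 8). The sketch below makes this bookkeeping explicit; the function name and the toy numbers are hypothetical.

```python
import numpy as np

def summarize_group(errors):
    """errors maps method name -> relative errors over the same runs.

    'Average' is each method's mean error. 'Max in PWNN' / 'Min in PWNN'
    report every method's error on the single run where PWNN's error is
    largest / smallest, so they are not per-method maxima or minima.
    """
    errs = {name: np.asarray(e, dtype=float) for name, e in errors.items()}
    i_max = int(np.argmax(errs["PWNN"]))
    i_min = int(np.argmin(errs["PWNN"]))
    return {
        "Average": {name: float(e.mean()) for name, e in errs.items()},
        "Max in PWNN": {name: float(e[i_max]) for name, e in errs.items()},
        "Min in PWNN": {name: float(e[i_min]) for name, e in errs.items()},
    }
```

For example, with toy errors `{"PWPUM": [0.3, 0.5, 0.2], "PWPUM-WT": [0.1, 0.2, 0.05], "PWNN": [1e-4, 1e-6, 1e-3]}`, the 'Max in PWNN' row reports PWPUM as 0.2, below its average of about 0.33.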

For the smaller wavenumber (Table 8), PWNN works much better than PWPUM and PWPUM-WT in every group. For the larger wavenumber (Table 9), the errors of all methods increase, because $k$ grows while the approximation space stays unchanged. PWNN still keeps good accuracy in some groups (average relative errors of 8.8e-4 and 4.3e-3), while PWPUM and PWPUM-WT cannot reach a relative error below 0.1. How to learn the directions of the exact solution with as few basis functions as possible at large wavenumbers will be our future work.

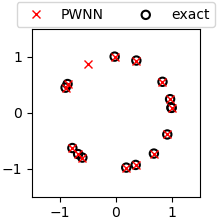

Figure 6 plots the directions of the exact solutions and of the PWNN solutions in the ‘Max’ (‘Max in PWNN’) and ‘Min’ (‘Min in PWNN’) cases of , ,, and . In the ‘Min’ case, the optimized directions nearly coincide with the directions of the exact solution. In the ‘Max’ case, the optimized directions still agree with most of the exact directions, except where several exact directions lie very close to one another.
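The agreement between learned and exact directions in Figure 6 is judged visually. One hypothetical way to quantify it, not taken from the paper, is to measure for each exact direction the angular distance to the closest learned direction on the unit circle. The sketch assumes both sets are given as angles θ in radians (direction d = (cos θ, sin θ)); the angle values below are made up for illustration.

```python
import numpy as np


def angular_gap(a, b):
    """Smallest absolute difference between angles a and b on the circle (radians)."""
    d = np.mod(np.asarray(a) - np.asarray(b), 2 * np.pi)
    return np.minimum(d, 2 * np.pi - d)


def nearest_direction_errors(exact_theta, learned_theta):
    """For each exact direction, the angular distance to the closest learned direction."""
    exact = np.asarray(exact_theta)[:, None]      # shape (m, 1)
    learned = np.asarray(learned_theta)[None, :]  # shape (1, n)
    return angular_gap(exact, learned).min(axis=1)


# Hypothetical angles: two exact directions (2.0 and 2.05) are very close.
exact = np.array([0.1, 2.0, 2.05, 4.0])
learned = np.array([0.1, 2.02, 4.0, 5.5])
print(nearest_direction_errors(exact, learned))
```

Nearest-neighbour matching lets two exact directions share one learned direction, as the pair 2.0 and 2.05 does here; a one-to-one assignment (e.g. the Hungarian algorithm) would be a stricter alternative.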

Next, we randomly generate exact solutions in two cases: and . The total numbers of training points in PWNN are fixed to and , respectively. The of PWPUM, PWPUM-WT and the one-hidden-layer PWNN for a growing number of is shown in Figure 7. When is not very large, e.g. , PWNN clearly gives a much better solution than PWPUM. Since the upper bound of the relative error of PWPUM is theoretically guaranteed to decrease as increases, whereas PWNN additionally incurs generalization and optimization errors, PWPUM performs better than PWNN when is large, e.g. . However, to reach an acceptable relative error, e.g. 1e-3, PWNN needs a much smaller than PWPUM. In the UD problem, PWPUM-WT does not perform much better than PWPUM, which indicates that in some cases adding a single rotation-angle degree of freedom is not enough.
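To make the difference between the three kinds of basis concrete, the sketch below fits a randomly generated sum of plane waves on the unit square by discrete least squares using (a) p fixed, equally spaced directions, (b) the same directions rotated by one global angle chosen by grid search, mimicking the single extra degree of freedom of PWPUM-WT, and (c) a basis containing exactly the true directions, which stands in for what learned directions aim at (it is not PWNN itself). This is a single-patch simplification with no partition of unity, no PDE solve and no training; the wave number k, the counts m and p, the sample size and the rotation grid are hypothetical choices, not the paper's settings.

```python
import numpy as np

rng = np.random.default_rng(0)
k = 20.0                                     # hypothetical wave number
m, p = 4, 12                                 # exact directions, basis directions
pts = rng.uniform(0.0, 1.0, size=(2000, 2))  # sample points in [0, 1]^2


def plane_waves(theta, x):
    """Columns exp(i k d_j . x) with d_j = (cos theta_j, sin theta_j)."""
    d = np.stack([np.cos(theta), np.sin(theta)], axis=1)  # (n, 2)
    return np.exp(1j * k * (x @ d.T))                     # (N, n)


def rel_error(theta, x, u):
    """Relative l2 error of the least-squares fit of u by the plane waves in theta."""
    A = plane_waves(theta, x)
    c, *_ = np.linalg.lstsq(A, u, rcond=None)
    return np.linalg.norm(A @ c - u) / np.linalg.norm(u)


# Exact solution: a sum of m plane waves with random (unknown) directions.
theta_exact = rng.uniform(0.0, 2 * np.pi, size=m)
u = plane_waves(theta_exact, pts).sum(axis=1)

# (a) p fixed, equally spaced directions.
theta_fixed = 2 * np.pi * np.arange(p) / p
err_fixed = rel_error(theta_fixed, pts, u)

# (b) the same directions rotated by one global angle (grid search);
# rotating by 2*pi/p maps the set onto itself, so [0, 2*pi/p) suffices.
shifts = np.linspace(0.0, 2 * np.pi / p, 60, endpoint=False)
err_rot = min(rel_error(theta_fixed + s, pts, u) for s in shifts)

# (c) a basis made of the m true directions only.
err_exact = rel_error(theta_exact, pts, u)

print(f"fixed: {err_fixed:.2e}  rotated: {err_rot:.2e}  exact dirs: {err_exact:.2e}")
```

Because the zero shift is on the grid, (b) is never worse than (a); rerunning with different seeds gives a feel for how much a single rotation angle can recover when the true directions are not equally spaced, compared with placing basis directions where the solution actually has them.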

Based on these experimental results, we conclude that, unlike PWPUM, which uses a plane wave basis with fixed directions, PWNN can find nearly optimal directions. PWNN is therefore a generalization of PWPUM that is also more practical.

5 Conclusion

This paper proposed a plane wave activation based neural network (PWNN) for the Helmholtz equation. We compared PWNN with a traditional activation based neural network (TANN), a sine-activated neural network (SIREN) and the plane wave partition of unity method (PWPUM). Drawing on LSM-WT, we also proposed PWPUM-WT, which can be regarded as a simplified PWNN. The experimental results demonstrate the benefit of introducing the plane wave activation for the Helmholtz equation and the advantage of PWNN over PWPUM on more practical problems. This paper provides only a basic technique for the Helmholtz equation; in future work we will extend PWNN to more general Helmholtz equations.

Acknowledgements

This research was partially supported by the National Natural Science Foundation of China under grant 11831016 and by the Science Challenge Project, China (TZZT2019-B1.1).

References

- [1] M. Amara, S. Chaudhry, J. Diaz, R. Djellouli, and S. L. Fiedler. A local wave tracking strategy for efficiently solving mid- and high-frequency Helmholtz problems. Computer Methods in Applied Mechanics and Engineering, 276:473–508, 2014.

- [2] Ivo M Babuska and J. M. Melenk. The partition of unity method. International Journal for Numerical Methods in Engineering, 40(4):727–758, 1997.

- [3] Jean-David Benamou, Francis Collino, and Simon Marmorat. Numerical microlocal analysis revisited. 2011.

- [4] Jean-David Benamou, Francis Collino, and Olof Runborg. Numerical microlocal analysis of harmonic wavefields. Journal of Computational Physics, 199(2):717–741, 2004.

- [5] Jens Berg and Kaj Nyström. A unified deep artificial neural network approach to partial differential equations in complex geometries. Neurocomputing, 317:28–41, 2018.

- [6] Annalisa Buffa and Peter Monk. Error estimates for the ultra weak variational formulation of the Helmholtz equation. Mathematical Modelling and Numerical Analysis, 42(6):925–940, 2008.

- [7] Pierre-Henri Cocquet, Martin J. Gander, and Xueshuang Xiang. A finite difference method with optimized dispersion correction for the Helmholtz equation. International Conference on Domain Decomposition Methods, pages 205–213, 2017.

- [8] Pierre-Henri Cocquet, Martin J. Gander, and Xueshuang Xiang. Dispersion correction for Helmholtz in 1D with piecewise constant wavenumber. International Conference on Domain Decomposition Methods, 2019.

- [9] George Cybenko. Approximation by superpositions of a sigmoidal function. Mathematics of Control, Signals and Systems, 2(4):303–314, 1989.

- [10] Arnaud Deraemaeker, Ivo M Babuska, and Philippe Bouillard. Dispersion and pollution of the FEM solution for the Helmholtz equation in one, two and three dimensions. International Journal for Numerical Methods in Engineering, 46(4):471–499, 1999.

- [11] Weinan E, Jiequn Han, and Arnulf Jentzen. Deep learning-based numerical methods for high-dimensional parabolic partial differential equations and backward stochastic differential equations. Communications in Mathematics and Statistics, 5(4):349–380, 2017.

- [12] Jun Fang, Jianliang Qian, and Leonardo Zepeda-Núñez. Learning dominant wave directions for plane wave methods for high-frequency Helmholtz equations. Research in the Mathematical Sciences, 4(1), 2017.

- [13] Claude J. Gittelson, Ralf Hiptmair, and Ilaria Perugia. Plane wave discontinuous Galerkin methods: Analysis of the h-version. Mathematical Modelling and Numerical Analysis, 43(2):297–331, 2009.

- [14] Sam Greydanus, Misko Dzamba, and Jason Yosinski. Hamiltonian neural networks. arXiv: Neural and Evolutionary Computing, 2019.

- [15] R. Hiptmair, A. Moiola, and I. Perugia. Plane wave discontinuous Galerkin methods for the 2D Helmholtz equation: Analysis of the p-version. SIAM Journal on Numerical Analysis, 49(1):264–284, 2011.

- [16] Kurt Hornik. Approximation capabilities of multilayer feedforward networks. Neural Networks, 4(2):251–257, 1991.

- [17] Kurt Hornik, Maxwell Stinchcombe, and Halbert White. Multilayer feedforward networks are universal approximators. Neural Networks, 2(5):359–366, 1989.

- [18] Frank Ihlenburg and Ivo Babuska. Finite element solution of the Helmholtz equation with high wave number Part I: The h-version of the FEM. Computers & Mathematics with Applications, 30(9):9–37, 1995.

- [19] Yann A LeCun, Léon Bottou, Genevieve B Orr, and Klaus-Robert Müller. Efficient backprop. In Neural networks: Tricks of the trade, pages 9–48. Springer, 2012.

- [20] Peter Monk and Daqing Wang. A least-squares method for the Helmholtz equation. Computer Methods in Applied Mechanics and Engineering, 175:121–136, 1999.

- [21] Gregory A Newman and David L Alumbaugh. Frequency-domain modelling of airborne electromagnetic responses using staggered finite differences. Geophysical Prospecting, 43(8):1021–1042, 1995.

- [22] Jorge Nocedal. Updating quasi-Newton matrices with limited storage. Mathematics of Computation, 35(151):773–782, 1980.

- [23] P. W. Partridge, C. A. Brebbia, and L. C. Wrobel. The Dual Reciprocity Boundary Element Method. Elsevier Applied Science, 1992.

- [24] T. Preston. Finite elements for electrical engineers. Computer Aided Engineering Journal, 1(5):164, 1984.

- [25] M Raissi, P Perdikaris, and GE Karniadakis. Physics-informed neural networks: A deep learning framework for solving forward and inverse problems involving nonlinear partial differential equations. Journal of Computational Physics, 378:686–707, 2019.

- [26] Keith Rudd, Gianluca Di Muro, and Silvia Ferrari. A constrained backpropagation approach for the adaptive solution of partial differential equations. IEEE Transactions on Neural Networks and Learning Systems, 25(3):571–584, 2013.

- [27] Liang Shen and Yijun Liu. An adaptive fast multipole boundary element method for three-dimensional acoustic wave problems based on the Burton-Miller formulation. Computational Mechanics, 40(3):461–472, 2007.

- [28] Justin Sirignano and Konstantinos Spiliopoulos. DGM: A deep learning algorithm for solving partial differential equations. Journal of Computational Physics, 375:1339–1364, 2018.

- [29] Vincent Sitzmann, Julien N. P Martel, Alexander W Bergman, David B Lindell, and Gordon Wetzstein. Implicit neural representations with periodic activation functions. 2020.

- [30] Chiheb Trabelsi, Olexa Bilaniuk, Ying Zhang, Dmitriy Serdyuk, Sandeep Subramanian, Joao Felipe Santos, Soroush Mehri, Negar Rostamzadeh, Yoshua Bengio, and Christopher J Pal. Deep complex networks. In International Conference on Learning Representations (ICLR), 2018.

- [31] Liu Yang, Xuhui Meng, and George Em Karniadakis. B-PINNs: Bayesian physics-informed neural networks for forward and inverse PDE problems with noisy data. arXiv: Machine Learning, 2020.

- [32] Yaohua Zang, Gang Bao, Xiaojing Ye, and Haomin Zhou. Weak adversarial networks for high-dimensional partial differential equations. arXiv: Numerical Analysis, 2019.