This work has been submitted to the IEEE for possible publication. Copyright may be transferred without notice, after which this version may no longer be accessible.

A Neural Network Model of the Entorhinal Cortex and Hippocampus for Event-order Memory Processing

Abstract

To solve a navigation task based on experiences, we need a mechanism to associate places with objects and to recall them along the course of action. In a reward-oriented task, if the route to a reward location is simulated in mind after experiencing it once, it might be possible that the reward is gained efficiently. One way to solve it is to incorporate a biologically plausible mechanism. In this study, we propose a neural network that stores a sequence of events associated with a reward. The proposed network recalls the reward location by tracing them in its mind in order. We simulated a virtual mouse that explores a figure-eight maze and recalls the route to the reward location. During the learning period, a sequence of events relating to firing along a passage was temporarily stored in the heteroassociative network, and the sequence of events is consolidated in the synaptic weight matrix when a reward is fed. For retrieval, the sequential activation of conjunctive cue-place cells toward the reward location is internally generated by an impetus input. In the figure-eight maze task, the location of the reward was estimated by mind travel, irrespective of whether the reward is in the counterclockwise or distant clockwise route. The mechanism of efficiently reaching the goal by mind travel in the brain based on experiences is beneficial for mobile service robots that perform autonomous navigation.

Keywords Entorhinal cortex, hippocampus, long-term memory

1 Introduction

Robot technology has made remarkable progress, but it has yet to reach the point where robots can interact with humans on an equal footing. Home service robots are expected to learn about an event and accumulate experiences as an episode. An environment is considered different from house to house and from person to person. A circumstance may be less likely to be repeated. Currently, artificial intelligence is mainly aimed at generalizing and processing a large amount of information and an individual preference is unconsidered. Several studies relating to neural networks for robots that perform services in human living spaces have emerged [1, 2, 3].

Humans tend to memorize impressive events they encountered in the past, and they tend to retrieve a series of experienced events in a certain circumstance. Storage of information expressing when, where, and what we did is called episodic memory. Each series of episodes is considered to be linked intermittently [4]. The medial temporal lobe contributes to episodic memory. Any damage to the medial temporal lobe causes severe impairment of episodic memory formation [5].

Episodic memory-related neurons, such as place cells [6] and time cells [7], have been found in the medial temporal lobe of mice and rats. Place cells in the hippocampus fire at a specific location [8]. Tolman [9] introduced a cognitive map: the idea that mice or rats form maps in their brain as they move through space. In discovering place cells, O’Keefe et al. [6] proposed that a cognitive map exists in the hippocampus. Place cells are considered a component of the cognitive map. Speed cells [10] and head-direction cells [11] are also found in the medial temporal lobe. Speed cells in the medial entorhinal cortex represent movement speed. The self-position can be estimated by performing path integration based on the input from these cells [12].

Synaptic plasticity, a function that enhances or attenuates synapses by cell activity, is deeply involved in episode memory. Information transmission in the brain is performed via synapses, and various learning rules have been proposed based on this idea of synaptic plasticity. Hebb’s rule is a typical learning rule of synaptic plasticity [13]. Synaptic plasticity that depends on the relative timing between presynaptic and postsynaptic neurons is called spike-timing-dependent plasticity (STDP). However, the time window for the Hebb’s rule is narrow. The interval between presynaptic and postsynaptic spikes must be within a few tens of milliseconds. Memorizing episodes involves remembering events that happened at the same time and those that happened in sequence over time. Events that occur more than a few tens of seconds apart are also linked by the hippocampus as episodic memories. For learning, there is also a distant reward problem. Typically, there is a couple of minutes of the temporal gap between the neural activity that determined the action and reward. To solve the distal reward problem, we need a mechanism that ties events together.

In this study, we propose a neural network model that consolidates a temporal order of cue-place association. A physiology-based simplified model assuming the entorhinal cortex and hippocampus is used to associate cues with places. Additionally, as a part other than the hippocampus, we propose a long-term memory matrix in which a sequential relationship of event occurrence is preserved over time. Furthermore, we use computer simulations to verify whether the proposed model retrieves the order of the association information of places with objects.

2 Neural network model

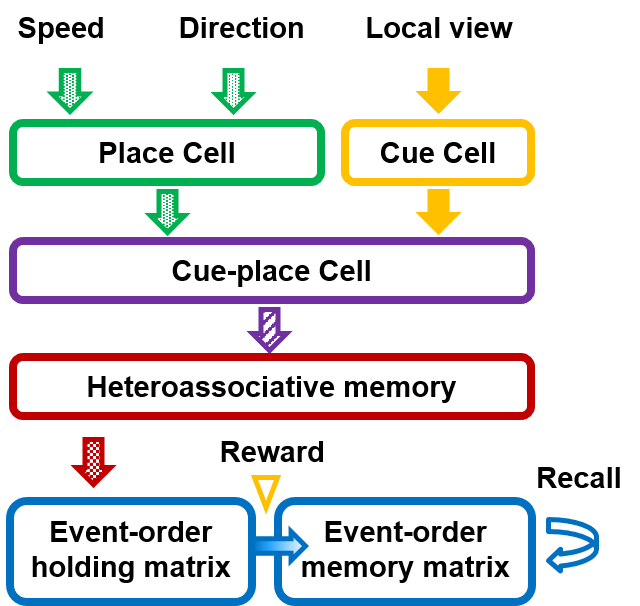

Fig. 1 depicts a connection diagram of the proposed neural network model. Signals of own speed and head orientation and an external cue signal are inputted into the neural network. The speed signal and head-direction signal are integrated into place cells. The cue signal triggers cue cells. The outputs of the place and cue cells are integrated into cue-place cells. In this study, the integrated cue-place information was treated as an event. The events are stored along with the movement of the passage. Neuronal activities of proximal cue-place cells are associated with a sequence of events in the heteroassociative network.

The output of the heteroassociative memory network is temporarily held in the event-order holding (EH) matrix within a certain time after the event occurs. When a reward is given, associated event information in the EH matrix is fixed in the event-order memory (EM) matrix. Fig. 2 depicts the timing chart that illustrates the reinforcement of firing patterns occurring on a millisecond timescale, even if the reward is delayed by a few seconds. This property is known as slow synaptic processes, which act as synaptic eligibility traces [14, 15] or synaptic tags [16]. A synaptic eligibility trace set a flag between pre- and postsynaptic neurons at a synapse that leads to a weight change only if an additional gating signal, such as a reward, is present. It is found in the hippocampus [17], cortex [18], and striatum [19, 20]. We introduce the EH matrix in which each matrix component works as eligibility trace. The cue-place cells fire at the timing of the corresponding events and , and a temporal difference of these firing patterns is converted into event-order information by weighting eligibility variables between spikes in the EH matrix, as shown by the middle traces in Fig. 2. These eligibility variables exponentially decay over time. When a reward is fed, the eligibility variables are sampled, and they are converted into a variable representing the ordinal relationship between events in the EM matrix, as shown by bottom traces in Fig. 2.

2.1 Place cells

Place cells fire when a mouse enters a particular place in an environment. In this study, the figure-eight maze includes place cells, and their receptive fields were predetermined. , where and are the maximum values in the -axis and -axis directions, respectively. A receptive field of a place cell is expressed by an activity bump, which is approximated by a 2-dimensional Gaussian distribution of firing rate.

| (1) |

where is the centroid of -th place cell (), and is the variance of the distribution. The velocity and the head direction drives the location of the activity bump;

| (2) | |||

| (3) |

where is the unit time.

2.2 Cue cells

Cue cells fire in response to a non-spatial input derived by the signal of local view, which is called an environmental cue. Once an environmental cue signal is recognized, the firing rate of a -th cue cell () is made, and this rate at a constant frequency.

| (4) |

where is the total number of an environmental cue. For simplification, one cue cell is associated with one environmental cue information.

2.3 Cue-place cells

Cue-place cells integrate the neuronal activity of place and cue cells. The outputs of the place and cue cell corresponding to the -th position and -th cue stimulus, respectively, are combined in the cue-place cells. The firing rate of the cue-place cells is clipped at the certain value as follows:

| (5) |

where is the total number of events, and . This means that the cue-place cell fires where the cue signal occurs.

2.4 Heteroassociative network

The heteroassociative network is a matrix in which components are modulated between related cue-place cells. Firings of cue-place cells are seconds apart, even in related events. The STDP rule is only tied together limited to a postsynaptic firing that occurs within a few tens of milliseconds after a presynaptic firing [21, 22]. If a presynaptic firing is held several seconds, two temporarily distant firings can be linked. Neural activity is potentially held several tens of seconds by cue-holding neurons [23] or time cells [7]. Here we assume that the neural activity of the cue-place cell is sustained by the cue-holding neuron-like mechanism. Thus, even if the postsynaptic cell fires a few seconds after the presynaptic cell fires, the synaptic connections are strengthened. However, we introduce an exponential decay function for synaptic potentiation. The synaptic weight modulation between presynaptic and postsynaptic cells is as follows:

| (6) |

where is the time difference between presynaptic and postsynaptic firing timings, and is the learning rate.

It would be mismanagement of resources if all neuron firing times were recorded to store the order in which events occur. So, we propose a method to extract event-order information. The synaptic weight matrix of the heteroassociative memory network is modulated based on correlations between presynaptic and postsynaptic neurons. The temporal proximity between a featured event (post) and events that precede it (pre) is retained as the magnitude of the synaptic weight (). The component of is described below:

| (11) |

| (12) | |||

| (13) |

where is the period for seeking event pairs. is the neural activity of the post event, and is the neural activity of the pre event.

2.5 Event-order holding matrix

All the synaptic weights expressed by the matrix of the heteroassociative memory network are fixed in the EM matrix via the EH matrix. If all events throughout the learning period are stored, a huge amount of computational resources are consumed to express the order of the events. To solve this problem, we proposed the EH matrix using a synaptic eligibility trace [24].

The sequence information for each event, which decays over time, represented by a heteroassociative memory network, is summed up in the EH matrix. The matrix of the eligibility variable is stimulated by .

| (14) |

The eligibility variable exponentially decays with the time constant . The initial value is an zero matrix.

2.6 Event-order memory matrix

The order information of past events is transferred from the EH matrix to the EM matrix . We introduce a mechanism to store events synchronized with the reward timing in the memory. The component represents the strength of the relationship between the events and .

| (15) |

When a reward is given at , the eligibility variable allows a change of . The initial value is an zero matrix. This change is gated by the reward-associated value of dopamine.

| (16) |

where exponentially reduces with time constant , and is the delta function. If , ; otherwise .

2.7 Recall scheme using synaptic weights stored in the EM matrix

Herein, we describe how to recall event-order information from the EM matrix . Let us consider recall procedures to extract events associated with impetus inputs event according to the order of stored events. A recall matrix is prepared to recall the event-sequence information stored in the EM matrix , where is an iteration number of recall procedures. The recall matrix is formed by operating from the right on the initial matrix . By repeatedly applying the EM matrix to the recall matrix, the events are recalled in the order starting from the impetus input . Recall procedures are shown in the following equations:

| (17) | |||

| (22) | |||

| (23) | |||

| (24) |

where ) is a component of the impetus input to recall . If the input value exceeds the threshold value , equals the input value; otherwise, equals . Repeating this recall process starting from the impetus input , temporally distant events can be recalled.

3 Experimental results

3.1 Experimental setup

We conducted computer simulations in which a virtual mouse traveled in the figure-eight maze, as shown in Fig. 3. Each aisle is numbered in the order in which it moves. The mouse moves counterclockwise from the center aisle, returns to its initial position, and then moves clockwise. Since the center aisle was visited twice, two numbers were assigned.

a. Route recall task

b. Reward recognition task

c. Constructed-route retrieval task

The map size to be simulated was . In the maze, 2500 place cells were assigned. = 10. A moving speed of 8 – 10 cm/s was set. Movable directions were RIGHT, UP, LEFT and DOWN. The head direction was considered as an environmental cue since any object seen when the head is in an orientation is a cue. Also, the reward was used as an environmental cue for the same reason. The number of cues is 5. The number of events prepared was 12,500. The unit time was set at s. The time constant is set at 4 s. Although it takes time to move a virtual mouse, if the time window of the learning function is long enough, the activities of the cue-place cells are connected along the route of the excursion. We note that is 2 s throughout the simulation. The time constant for the EH matrix is 8 s and the time constant for the EM matrix is 3 s. Table 1 shows the number of neurons and synapses in the network in our experiments.

| Block name | Number |

|---|---|

| Speed cell | 1 |

| Head direction cell | 1 |

| Place cell | 2,500 () |

| Cue cell | 5 |

| Cue-place cell | 12,500 () |

| Heteroassociative memory network | 250,000 () |

| Event-order holding matrix | 156,250,000 () |

| Event-order memory matrix | 156,250,000 () |

* = 20

In this study, three tasks were conducted. The first experiment was a path recall task. We verified whether it was possible to recall the moved routes in the order in which they were experienced using the proposed model. The second experiment was a reward recognition task. We verified whether the rewarded route could be recognized if the reward was given differently depending on the route traveled. The third task was a constructed-route retrieval task. We tested whether the mouse could integrate the traveled routes to reach the reward when it traveled the first route, took a break, and then traveled the next route.

3.1.1 Route recall task

During the learning phase, the virtual mouse proceeds in the aisle number order , and then . The initial location was the center aisle. The reward was given when the mouse returns to the initial location. In the recall phase, which is after the learning phase, an impetus input to recall was set, and the recall procedure was executed. is defined by , and , where , and .

3.1.2 Reward recognition task

In the route recall task, the virtual mouse traveled counterclockwise and clockwise along the aisle numbers during the learning phase. The initial location was . We prepared two different timings for giving rewards. In the first timing, the virtual mouse was rewarded in the center aisle after the counterclockwise excursion. In the second timing, the reward was given after the clockwise excursion.

3.1.3 Constructed-route retrieval after two single-loop tasks

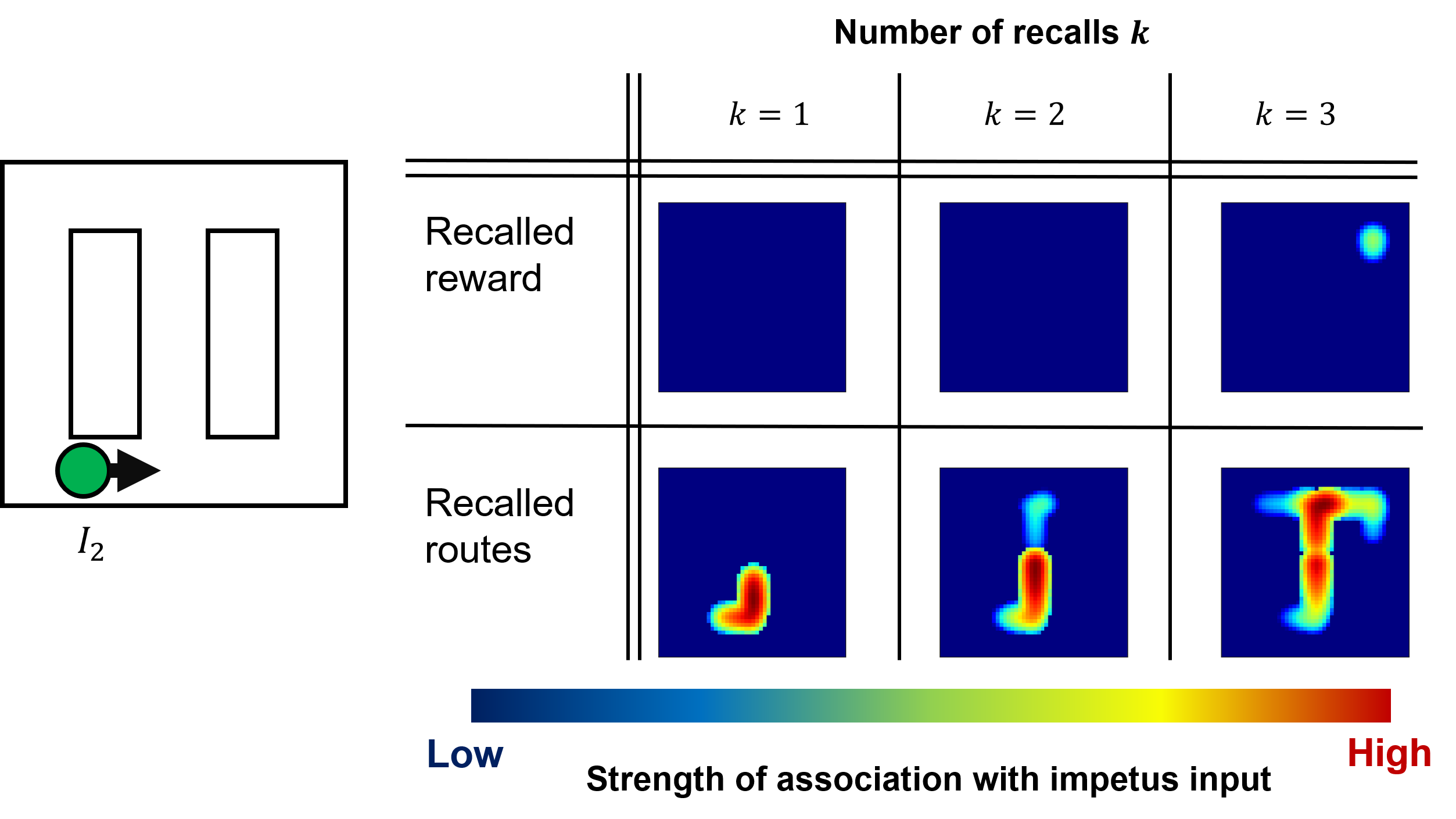

Fig. 3c depicts an overview of the route used in the constructed-route retrieval task. The virtual mouse took 100 s break after completing the counterclockwise route, and then it traveled the right aisle clockwise. No reward is fed in the counterclockwise movement, but it is fed only when the point in the clockwise movement is passed.

In the recall phase, the impetus input was given to the lower-left point () in the counterclockwise route.

Numerical simulations were performed by a multi-core CPU (Intel Core i7 6700K) with a graphic processing unit (NVIDIA, GTX 1050Ti ).

3.2 Route recall task

Fig. 4 depicts the value of displayed as a heat map. The matrix is obtained by repeatedly applying the recall procedures starting from the impetus input , which is or . The heat map represents the recalled spatial receptive field. The recalled procedure was iterated up to three times at each event. The number of events acquired by recalls increased with an increase in the number of recalls. Starting from the impetus input , the routes taken by the associated events were recalled. The length of the recalled routes increases with an increase in the number of recalls . The target event location was recalled by tracing the events in the order of temporal proximity to the trigger event.

When the impetus input was , as shown in Fig. 4, the counterclockwise and clockwise routes were equally recalled at the 3-way junction in the upper center. Since the sequence information of the learned routes was degraded in the EH matrix with time, the relationship strength obtained by recall in the clockwise route should be higher than that in the counterclockwise route. However, the same relationship strength was obtained for both routes. The equality in relationship strength is because the reward was given when the head direction changed in the route recall task, and the learning result was fixed in the EM matrix before the time decay of the learned route became noticeable.

3.3 Reward recognition task

In the reward recognition task, a reward was given only during either the counterclockwise or clockwise excursion. For recalling the reward location, the recall steps were repeated after the excursions. The following two inputs: and , were prepared as trigger events, as shown by the green and yellow circles in Figs. 5a and 5b, respectively. Further, occurred in a counterclockwise route, whereas occurred in a clockwise route. Although the reward was not located near , by repeating the recall procedures from the trigger event, the reward location ans route to the reward appeared. Thus, when is given, the reward and route to the reward were successfully retrieved after a couple of recall steps and , as shown in Fig. 5a. However, when , the reward was not recalled.

When is given, both the reward and the route to the reward were not recalled, as shown in Fig. 5b. So neither the reward nor route to the reward was recalled at any number of recalls . This is because no reward is given in the clockwise excursion. Therefore, in the EM matrix, the reward is neither directly nor indirectly associated with on the clockwise path. The virtual mouse reaches the reward location in the central stem during mind travel only if an impetus input to remember is on the counterclockwise excursion in which the reward was gained.

a

b

Fig. 6 depicts the heat map in the second reward recognition task in which the reward is fed only after moving clockwise. Figs. 6a and 6b show the experimental results triggered by and , respectively. In both figures, the reward was recalled when the number of recalls . However, there is a color difference in the heat map. This color difference is thought to be caused by the time difference between learning the route and being rewarded. More specifically, it is because the counterclockwise route information was time-attenuated by the EH matrix, and it is accumulated in the EM matrix more than the clockwise route information. Additionally, in the 3-way junction route in the upper center, which was recalled when the number of recalls , the heat map of the route to the left is smaller than that to the right. This difference in the heat map size is because the information on the movement order of the counterclockwise route is accumulated in the EM matrix after time attenuation. Accordingly, it is thought that the clockwise route has a stronger relevance to the reward than the counterclockwise route.

a

b

3.4 Constructed-route retrieval task

Fig. 7 depicts the heat map in the constructed-route retrieval task. This heat map was obtained by repeatedly applying the recall procedures starting from the impetus input . The clockwise route was learned with a break after the counterclockwise excursion was conducted. For , the central aisle has a bright color. Due to the waiting time of 100 s, the sequence information of the events related to the counterclockwise route expressed on the EH matrix was attenuated with time and then accumulated in the EM matrix. This process is considered to have caused this color difference in the heat map. This result indicates that the experience can be integrated to indirectly associate the trigger event with the reward, even if there is a time lag before obtaining the reward.

A virtual mouse could reach the point where the reward was given in the clockwise route when , even when recalling from the impetus input in the counterclockwise route. For , the heat map of the right route at the T-junction on the central stem is brighter than that of the left route. The difference in brightness is because the reward was given at the right corner on the clockwise route, and the event order during the clockwise route was less attenuated. It is considered that this phenomenon occurred because it was judged that the behavior that was close in time to the timing of receiving the reward in this model was more valuable.

We can expect to integrate the routes that the virtual mouse had traveled so far and associate it with the position where the reward was given, even if the virtual mouse recalls from positions on counterclockwise route that was not rewarded.

4 Discussion

For temporally close events, the Hebb’s and STDP rules allow to potentiate synaptic connections between neural assemblies of the events. However, if there is a relationship between events A and B, but with a gap in time, it will be difficult to link that relationship by directly enhancing the synaptic connections between the neuronal ensembles representing the events A and B. Also, it will be difficult to augment the causal relationship between place cells that determine action and neural activity representing the action. This difficulty is because if a reward is obtained as a result of the action, the neural activity that represents the action is likely to have ended before the reward is obtained. Therefore, those temporally distant neural ensembles can be linked together because of learning. To address this problem, we implemented two stages of learning: one for temporally distant events and the other for reward-related learning.

In the route recall task, the recall mechanism was devised based on the idea that the target event is indirectly reached by tracing the events that are directly related to the recall trigger event in order. The time series information of the events is converted into the event-order information, and it is held in the event-order memory matrix. Since the relationship between directly related events is maintained in the EM matrix, it is reasonable to recall both routes when the rat passes through both the counterclockwise and clockwise routes.

In the constructed-route retrieval task, it was still possible to find a route from the counterclockwise route to the rewarded route, even when the counterclockwise route was followed by the clockwise route with a 100 s waiting period in between. By incorporating an event holding function, it is possible to link trigger events and reward locations that are separated in time.

We employed a heteroassociative network that encodes the sequential memory of events into a matrix. This memory of events is then transferred to the matrix of long-term memory. The hippocampal CA3 is considered an autoassociative memory because of its recurrent connections. However, the hippocampal CA3 has been also considered a heteroassociative memory because of the phase precession of place cells [25] [26]. Several network models have been proposed for heteroassociative memory [27, 28]. de Camargo et al. [29] have proposed the hippocampal CA3 network model for pattern sequence recall based on the heteroassociative network. In our model, the pattern sequences are given according to the joint information about the place and associated cue when exploring the maze. Thus, the events are recalled in the order in which they occurred, and the path from a certain point in the maze to the reward can be stored.

In the heteroassociative network, the relatively long time constant of synaptic potentiation is assumed. The physiological time constant of the STDP function is several tens of milliseconds. In the medial temporal lobe, persistent firing neurons, cue-holding cells [23, 30] and time cells [7] have been found. In a pair association memory task, neural activity of a cue-holding cell induced by a cue stimulus sustains during a couple of seconds of the delay period [23, 30]. Time cells fires after an elapsed period from a cue input [7]. Moreover, all of these cells continue to fire for several tens of seconds after stimulation. Such sustaining firings potentially contribute to linking temporally distant events and informing the timing of a series of events. There is a physiological contradiction in synchronization between two distant events, but it can be resolved by introducing time or cue-holding cells.

5 Conclusion

We proposed an entorhinal cortex and hippocampus neural network model with a long-term memory matrix to encode event-order information and to consolidate that event sequences in the matrix. The route to the reward is recalled by successive repetition of the recall steps in the mind travel. Such recall mechanisms for goal-oriented behavior are beneficial for mobile service robots that perform autonomous navigation. Further, a neural network that remembers the sequential relationships of related events in a single trial leads to a system that easily learns from experience.

Acknowledgment

This research is supported by JSPS KAKENHI Grant Numbers 20K21819. This research is also based on results obtained from a project, JPNP16007, commissioned by the New Energy and Industrial Technology Development Organization (NEDO).

References

- [1] C. Rizzi, C. G. Johnson, F. Fabris, and P. A. Vargas, “A situation-aware fear learning (safel) model for robots,” Neurocomputing, vol. 221, pp. 32–47, 2017.

- [2] N. Cazin, M. Llofriu Alonso, P. Scleidorovich Chiodi, T. Pelc, B. Harland, A. Weitzenfeld, J. M. Fellous, and P. F. Dominey, “Reservoir computing model of prefrontal cortex creates novel combinations of previous navigation sequences from hippocampal place-cell replay with spatial reward propagation.” PLoS Computational Biology, vol. 15, no. 7, p. e1006624, 2019.

- [3] Y. Tanaka, T. Morie, and H. Tamukoh, “An amygdala-inspired classical conditioning model implemented on an fpga for home service robots,” IEEE Access, vol. 8, pp. 212 066–212 078, 2020.

- [4] E. Tulving et al., “Episodic and semantic memory,” Organization of memory, vol. 1, pp. 381–403, 1972.

- [5] W. B. Scoville and B. Milner, “Loss of recent memory after bilateral hippocampal lesions,” Journal of neurology, neurosurgery, and psychiatry, vol. 20, no. 1, pp. 11–21, 1957.

- [6] J. O’keefe and L. Nadel, The hippocampus as a cognitive map. Oxford: Clarendon Press, 1978.

- [7] C. J. MacDonald, K. Q. Lepage, U. T. Eden, and H. Eichenbaum, “Hippocampal “time cells” bridge the gap in memory for discontiguous events,” Neuron, vol. 71, no. 4, pp. 737–749, 2011.

- [8] J. O’Keefe and J. Dostrovsky, “The hippocampus as a spatial map: Preliminary evidence from unit activity in the freely-moving rat.” Brain research, vol. 34, no. 1, pp. 171–175, 1971.

- [9] E. C. Tolman, “Cognitive maps in rats and men.” Psychological Review, vol. 55, no. 4, pp. 189–208, 1948.

- [10] E. Kropff, J. E. Carmichael, M.-B. Moser, and E. I. Moser, “Speed cells in the medial entorhinal cortex,” Nature, vol. 523, no. 7561, pp. 419–424, 2015.

- [11] J. S. Taube, R. U. Muller, and J. B. Ranck, “Head-direction cells recorded from the postsubiculum in freely moving rats. i. description and quantitative analysis,” The Journal of neuroscience, vol. 10, no. 2, pp. 420–435, 1990.

- [12] K. M. Gothard, W. E. Skaggs, and B. L. McNaughton, “Dynamics of mismatch correction in the hippocampal ensemble code for space: interaction between path integration and environmental cues,” The Journal of Neuroscience, vol. 16, no. 24, pp. 8027–8040, 1996.

- [13] D. O. Hebb, The organization of behavior: A neuropsychological theory. Psychology Press, 2005.

- [14] A. H. Klopf, The hedonistic neuron: A theory of memory, learning, and intelligence. Hemisphere Pub, 1982.

- [15] R. S. Sutton and A. G. Barto, Reinforcement learning: an introduction. Cambridge (MA): The MIT Press, 1998.

- [16] U. Frey and R. Morris, “Synaptic tagging and long-term potentiation,” Nature, vol. 385, p. 533–536, 1997.

- [17] Z. Brzosko, W. Schultz, and O. Paulsen, “Retroactive modulation of spike timing-dependent plasticity by dopamine,” eLife, vol. 4, p. e09685, 2015.

- [18] K. He, M. Huertas, S. Z. Hong, X. Tie, J. W. Hell, H. Shouval, and A. Kirkwood, “Distinct Eligibility Traces for LTP and LTD in Cortical Synapses,” Neuron, vol. 88, no. 3, pp. 528 – 538, 2015.

- [19] S. Yagishita, A. Hayashi-Takagi, G. C. Ellis-Davies, H. Urakubo, S. Ishii, and H. Kasai, “A critical time window for dopamine actions on the structural plasticity of dendritic spines,” Science, vol. 345, no. 6204, pp. 1616–1620, 2014.

- [20] T. Shindou, M. Shindou, S. Watanabe, and J. Wickens, “A silent eligibility trace enables dopamine-dependent synaptic plasticity for reinforcement learning in the mouse striatum,” The European journal of neuroscience, vol. 49, no. 5, p. 726–736, 2019.

- [21] G.-q. Bi and M.-m. Poo, “Synaptic modifications in cultured hippocampal neurons: dependence on spike timing, synaptic strength, and postsynaptic cell type,” The Journal of neuroscience, vol. 18, no. 24, pp. 10 464–10 472, 1998.

- [22] R. K. Mishra, S. Kim, S. J. Guzman, and P. Jonas, “Symmetric spike timing-dependent plasticity at ca3–ca3 synapses optimizes storage and recall in autoassociative networks,” Nature communications, vol. 7, p. 11552, 2016.

- [23] T. Hirabayashi, D. Takeuchi, K. Tamura, and Y. Miyashita, “Functional microcircuit recruited during retrieval of object association memory in monkey perirhinal cortex,” Neuron, vol. 77, no. 1, pp. 192–203, 2013.

- [24] E. M. Izhikevich, “Solving the distal reward problem through linkage of STDP and dopamine signaling,” Cerebral cortex, vol. 17, no. 10, pp. 2443–2452, 2007.

- [25] O. Jensen and J. E. Lisman, “Hippocampal ca3 region predicts memory sequences: accounting for the phase precession of place cells,” Learning & Memory, vol. 3, no. 2-3, pp. 279–287, 1996.

- [26] J. E. Lisman, “Relating hippocampal circuitry to function: recall of memory sequences by reciprocal dentate-ca3 interactions,” Neuron, vol. 22, no. 2, pp. 233–242, 1999.

- [27] H. Sompolinsky and I. Kanter, “Temporal association in asymmetric neural networks,” Physical review letters, vol. 57, pp. 2861–2864, 1986.

- [28] W. B. Levy, “A sequence predicting ca3 is a flexible associator that learns and uses context to solve hippocampal-like tasks,” Hippocampus, vol. 6, no. 6, pp. 579–590, 1996.

- [29] R. Y. de Camargo, R. S. Recio, and M. B. Reyes, “Heteroassociative storage of hippocampal pattern sequences in the ca3 subregion,” PeerJ, vol. 6, p. e4203, 2018.

- [30] Y. Naya, M. Yoshida, M. Takeda, R. Fujimichi, and Y. Miyashita, “Delay-period activities in two subdivisions of monkey inferotemporal cortex during pair association memory task,” European Journal of Neuroscience, vol. 18, no. 10, pp. 2915–2918, 2003.