A Multi-objective Economic Statistical Design of CUSUM Chart: NSGA II Approach

Abstract

This paper presents an approach for the economic statistical design of the Cumulative Sum (CUSUM) control chart in a multi-objective optimization framework. The proposed methodology integrates economic considerations with statistical aspects to optimize the design parameters of the CUSUM chart, namely the sample size ($n$), the sampling interval ($h$), and the decision interval ($H$). The Non-dominated Sorting Genetic Algorithm II (NSGA II) is employed to solve the multi-objective optimization problem, aiming to minimize both the average cost per cycle and the out-of-control Average Run Length ($ARL_1$) simultaneously. The effectiveness of the proposed approach is demonstrated through a numerical example by determining the optimized CUSUM chart parameters using NSGA II. Additionally, sensitivity analysis is conducted to assess the impact of variations in input parameters. The results indicate that the proposed methodology reduces the expected cost per cycle by about 43% when compared to the findings of Lee (2011). No comparison of $ARL_1$ could be made because no reference values are available. This highlights the practical relevance and potential of this study for applying the CUSUM chart to process control in industry.

Keywords: CUSUM chart, multi-objective design, NSGA II, optimal parameters

1 Introduction

Control charts are known to be a pivotal tool for exercising control over a process in statistical process control, with their origins dating back to Shewhart’s pioneering work in the 1920s. The design of the control chart encompasses the critical task of selecting parameters such as sample size, sampling interval, and control limits’ multiplier. These design parameters are crucial as they directly influence the quality of the control over the underlying process being monitored.

The cumulative sum (CUSUM) control chart, pioneered by Page in 1954, is a widely adopted method for exercising control over the mean of a quality characteristic in production processes. In practical industrial applications, the CUSUM control chart is useful for identifying the trend of the process. If the trend is found to worsen, the concerned quality characteristic needs to be analyzed for possible assignable or special causes, with or without halting the process. One can refer to Mukhopadhyay, (2001), where the CUSUM chart has been used for routine bias correction on the shop floor for measuring and controlling moisture content in tobacco. When compared with the $\bar{X}$-chart, the CUSUM chart demonstrates superior efficiency in detecting small to moderate shifts in the process mean. Similar to the Shewhart control chart, the CUSUM control chart also needs to be implemented by collecting samples at regular intervals. The chart depicts a cumulative sum of deviations between sample means and a target value over time. As long as the computed CUSUM statistic remains within a predefined decision interval, the process is said to be in control. However, if the CUSUM statistic exceeds the decision interval, it serves as an indication that the process has gone out of control. Consequently, further investigation is required to find the potential causes (assignable causes) of this change. However, there are costs associated with finding the assignable causes, such as the costs of sampling, the costs of eliminating the assignable causes, and the costs of producing nonconforming units. It is interesting to note that the cost of producing nonconforming units increases with less sampling and with less effort, or lower cost, spent on eliminating assignable causes. This underscores the trade-off between the sampling cost together with the cost of eliminating assignable causes on one side and the cost of producing nonconforming units on the other. From an economic viewpoint, it is therefore always better to consider the economic statistical design of the control chart.

The economic design of control charts has always been a significant area to focus on in statistical process control. The objective of economic design is to determine optimal design parameters that minimize the expected cost per hour, thereby achieving control over a process in a cost-effective manner. Duncan, (1956) introduced the first cost model for the $\bar{X}$ chart, paving the way for subsequent research in the economic design of other control charts. A few of these are mentioned here. Lorenzen and Vance, (1986) proposed the unified economic design of control charts. The economic design of the control chart for sustainable operations under the gamma shock model was proposed by Wang and Li, (2020). Yeganeh et al., (2021) proposed a novel run rules-based MEWMA scheme for monitoring general linear profiles. Detection of intermittent faults based on moving average control charts with multiple window lengths was studied by Zhao et al., (2020). The optimal design of -EWMA control chart for short production runs is given by Ong et al., (2023). Zhang et al., (2024) proposed the joint design of VSI control chart. Pour et al., (2024) designed a log-logistic-based EWMA control chart using MOPSO and VIKOR approaches for monitoring cardiac surgery performance. Hu et al., (2024) proposed combined Shewhart-EWMA and Shewhart-CUSUM monitoring schemes for time between events.

Four parameters are of paramount importance in designing a CUSUM chart. These parameters are the optimal sample size ($n$), the optimal sampling interval ($h$), the reference value ($k$), and the decision interval ($H$). Taylor, (1968) was the first researcher to explore the economic design of the CUSUM chart, laying the foundation for subsequent investigations in this area. Since then, various methodologies have been proposed for the economic design of the CUSUM chart. For instance, Goel and Wu, (1973) developed a procedure specifically tailored for controlling the mean of a process that follows a normal distribution using a CUSUM chart.

Chiu, (1974) introduced a production model centered on quality surveillance, employing the CUSUM chart with decision interval criteria. Chung, (1992) devised a search algorithm utilizing the one-dimensional -pattern search technique given by Hooke and Jeeves, (1961) for the economic design of the CUSUM chart. Simpson and Keats, (1995) utilized two-level fractional factorial designs to find the optimal parameters using the economic model for control charts given by Lorenzen and Vance, (1986) under CUSUM conditions.

Pan and Su-Tsu, (2005) proposed an innovative approach for monitoring and evaluating environmental performance through the economic design of the CUSUM chart. Additionally, an economic model of the CUSUM chart for controlling the process mean in short production runs was proposed by Nenes and Tagaras, (2006). Lee, (2011) proposed the economic design of a CUSUM control chart for non-normally correlated data. Overall, the economic design of the CUSUM chart has drawn significant attention and continues to be a subject of active research, with various methodologies and applications contributing to its advancement in the field of statistical process control.

Along with the economic aspects associated with control charts, it is sometimes necessary to consider statistical aspects such as detecting the shift as early as possible. The multi-objective economic statistical design of control charts is one way to study the economic aspects along with the statistical aspects. In the literature, there are several papers dealing with the multi-objective economic design of other control charts, some of which are included here. Celano and Fichera, (1999) studied the multi-objective economic design of an $\bar{X}$ control chart. Yang et al., (2012) used a multi-objective particle swarm optimization algorithm to develop a multi-objective model for the optimal design of $\bar{X}$ and S control charts. Safaei et al., (2012) studied the multi-objective economic statistical design of the $\bar{X}$ control chart considering the Taguchi loss function. Faraz and Saniga, (2013) examined a bi-objective optimization model for the economic-statistical design of control charts. Lupo, (2014) developed a multi-objective optimization model for the optimal design of a c-chart. Bashiri et al., (2014) proposed a multi-objective economic-statistical design for the cumulative count of conforming control chart. Morabi et al., (2015) presented a multi-objective optimization model for designing an $\bar{X}$ control chart with fuzzy parameters to monitor the process mean.

However, the multi-objective economic statistical design of the CUSUM chart is yet to be explored. So, in this article, a multi-objective economic statistical design of the CUSUM chart is proposed using the cost model given by Lorenzen and Vance, (1986). In this model, we minimize the expected cost per cycle as well as the out-of-control average run length ($ARL_1$) while maintaining a reasonably large in-control average run length ($ARL_0$). Along with minimizing the two objectives, we determine the optimal parameters $n$, $h$, and $H$. The optimal value of $k$ is half of the magnitude of the shift given in $\sigma$ units, as mentioned in Section 2. The proposed multi-objective model has been solved with the help of the Non-dominated Sorting Genetic Algorithm II (NSGA II), which was introduced by Deb, (2011). NSGA II has been chosen for this study because it was designed specifically to handle multi-objective problems. It uses a genetic algorithm framework to seek out Pareto-optimal solutions, which are characterized by the inability to improve one objective without compromising another. This enables decision-makers to navigate a spectrum of alternatives while weighing the trade-offs between different objectives. By using evolutionary search operators such as crossover and mutation, NSGA II efficiently traverses the solution space, which makes it suitable for optimization problems with many decision variables and constraints, such as the proposed model. Furthermore, NSGA II can accommodate changes in problem formulations or objective functions with minimal alterations to the algorithm, so additional objectives or constraints can be incorporated as the problem evolves over time. Amiri et al., (2014) considered a multi-objective economical-statistical design of the EWMA chart and solved it using NSGA II and MOGA algorithms. Mobin et al., (2015) used the NSGA II algorithm to solve the multi-objective design of the $\bar{X}$ control chart. Zandieh et al., (2019) proposed the economic-statistical design of the c-chart with multiple assignable causes and solved it using a hybrid NSGA II approach.

NSGA II is preferred here over goal programming because it is designed for multiple objectives, handles many decision variables and constraints, and accommodates changes in the formulation with little modification. In contrast, goal programming primarily minimizes deviations from predefined goals and may not be as effective in handling multiple conflicting objectives. It typically produces a single solution that minimizes deviations from goals but may not provide a comprehensive view of the problem’s trade-offs. It may face challenges in dealing with intricate constraints, and it may require adjustments in the formulation or constraints when objectives or priorities change. Moreover, solving a bi-objective problem by goal programming requires the user to provide a weight factor for each goal, so the resulting solution depends on the chosen set of weight factors. Goal programming also has difficulty in finding solutions for problems with a non-convex feasible decision space, as shown in Deb, (1999).

The rest of the paper is organized as follows. The sample statistic of the CUSUM chart is given in Section 2. The formulation of the multi-objective economic statistical design of the CUSUM chart is given in Section 3. The pseudocode for using NSGA II is given in Section 4. The applicability of the proposed approach is demonstrated by a numerical example in Section 5. The results of the sensitivity analysis are provided in Section 6. Benefits and practical applications are discussed in Section 7. The conclusion for the proposed approach and the scope for future work are given in Section 8.

2 The Statistic of CUSUM chart

Consider a scenario where a quality characteristic follows the normal distribution with mean $\mu_0$ and standard deviation $\sigma$ in the in-control state, denoted as $N(\mu_0, \sigma^2)$, where both $\mu_0$ and $\sigma$ are known. However, over time, the process may transit to an out-of-control state, resulting in a shift in the mean of the quality characteristic from $\mu_0$ to $\mu_1 = \mu_0 + \delta\sigma$, where the in-control mean and standard deviation are represented by the sample mean and the sample standard deviation, respectively. Here, $\delta$ signifies the magnitude of the shift in the mean in units of $\sigma$, while the standard deviation is assumed to remain constant. Samples of size $n$ are taken after every $h$ hours of production, with reference value ($k$) and decision interval ($H$), both expressed in units of $\sigma$. Subsequently, the gathered sample information is plotted on a CUSUM chart. If the CUSUM statistic exceeds the decision interval, an investigation to identify and eliminate the assignable cause is initiated. The CUSUM statistic can be calculated as

$$
C_i^{+} = \max\left[0,\ \bar{x}_i - (\mu_0 + k\sigma) + C_{i-1}^{+}\right], \qquad
C_i^{-} = \max\left[0,\ (\mu_0 - k\sigma) - \bar{x}_i + C_{i-1}^{-}\right] \tag{1}
$$

where $i$ denotes the sample number, $\bar{x}_i$ is the mean of the $i$-th sample, and $C_0^{+} = C_0^{-} = 0$.
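The recursion for the CUSUM statistic can be illustrated with a short Python sketch. It assumes the standard two-sided tabular form, with the reference value `k` and decision interval `H` given in units of `sigma`; the function name `tabular_cusum` and the sample data are hypothetical and serve only as an illustration.

```python
from typing import List, Optional, Tuple


def tabular_cusum(
    sample_means: List[float], mu0: float, sigma: float, k: float, H: float
) -> Tuple[List[float], List[float], Optional[int]]:
    """Two-sided tabular CUSUM (assumed standard form).

    Returns the upper and lower CUSUM paths and the 1-based index of the
    first sample at which either statistic exceeds H * sigma (None if never).
    """
    c_plus, c_minus = 0.0, 0.0           # C_0^+ = C_0^- = 0
    plus_path, minus_path = [], []
    signal_at = None
    for i, xbar in enumerate(sample_means, start=1):
        # Accumulate deviations above (mu0 + k*sigma) and below (mu0 - k*sigma).
        c_plus = max(0.0, xbar - (mu0 + k * sigma) + c_plus)
        c_minus = max(0.0, (mu0 - k * sigma) - xbar + c_minus)
        plus_path.append(c_plus)
        minus_path.append(c_minus)
        if signal_at is None and (c_plus > H * sigma or c_minus > H * sigma):
            signal_at = i
    return plus_path, minus_path, signal_at


if __name__ == "__main__":
    means = [10.0, 10.2, 11.5, 11.8, 12.1]   # hypothetical sample means
    up, low, first = tabular_cusum(means, mu0=10.0, sigma=1.0, k=0.5, H=2.0)
    print(up, low, first)
```

In this toy data set the upper statistic accumulates once the sample means drift above the target, while the lower statistic stays at zero.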

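The effectiveness of a control chart can be assessed using the average run length ($ARL$), which represents the average number of samples needed to detect an out-of-control condition or trigger a false alarm. The in-control $ARL$ ($ARL_0$) is used for calculating the false alarm rate, whereas the out-of-control $ARL$ ($ARL_1$) is an indicator of the power (or effectiveness) of the control chart. One of the major difficulties in the economic design of the CUSUM chart is the evaluation of average run lengths. In this paper, we use the approximation for calculating $ARL$ given by Siegmund, (1985). The main reason for using Siegmund’s approximation is its simplicity. Woodall and Adams, (1993) also recommended the approximation given by Siegmund, (1985). For a one-sided CUSUM (i.e., $C_i^{+}$ or $C_i^{-}$), Siegmund’s approximation for $ARL$ is

$$
ARL = \frac{\exp(-2\Delta b) + 2\Delta b - 1}{2\Delta^{2}} \tag{2}
$$

for $\Delta \neq 0$, where $\Delta = -\delta^{*} - k$ for the lower one-sided CUSUM $C_i^{-}$, $\Delta = \delta^{*} - k$ for the upper one-sided CUSUM $C_i^{+}$, $\delta^{*}$ being the magnitude of process shift in $\sigma$ units for which $ARL$ needs to be calculated, $b = H + 1.166$, and $\delta^{*} = (\mu_1 - \mu_0)/\sigma$. For $\Delta = 0$, $ARL$ can be calculated by $ARL = b^{2}$. Hence, the formula given in equation (2) can be used to determine $ARL_0$ when $\delta^{*} = 0$, and it can be used to calculate $ARL_1$ when $\delta^{*} \neq 0$.

For $\delta^{*} = 0$, equation (2) can be used for calculating the one-sided in-control $ARL$ (i.e., $ARL_0^{+}$ and $ARL_0^{-}$):

$$
ARL_0^{+} = ARL_0^{-} = \frac{\exp(2kb) - 2kb - 1}{2k^{2}} \tag{3}
$$

Whereas for $\delta^{*} \neq 0$, equation (2) can be used for calculating the one-sided out-of-control $ARL$ (i.e., $ARL_1^{+}$ and $ARL_1^{-}$):

$$
\begin{aligned}
ARL_1^{+} &= \frac{\exp\left(-2(\delta^{*} - k)b\right) + 2(\delta^{*} - k)b - 1}{2(\delta^{*} - k)^{2}}, \\
ARL_1^{-} &= \frac{\exp\left(2(\delta^{*} + k)b\right) - 2(\delta^{*} + k)b - 1}{2(\delta^{*} + k)^{2}}
\end{aligned} \tag{4}
$$

For calculating the $ARL$ of a two-sided CUSUM, one can use the formula given in equation (5), which combines the two one-sided values (i.e., $ARL^{+}$ and $ARL^{-}$):

$$
\frac{1}{ARL} = \frac{1}{ARL^{+}} + \frac{1}{ARL^{-}} \tag{5}
$$

The above values of $ARL_0$ and $ARL_1$ are used in the multi-objective economic statistical design of the CUSUM chart defined in Section 3.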
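Siegmund’s approximation is simple enough to evaluate directly. The sketch below assumes the usual textbook form of the approximation, with $b = H + 1.166$ and the reference value and decision interval in $\sigma$ units; the function names `siegmund_arl` and `two_sided_arl` are hypothetical.

```python
import math


def siegmund_arl(delta_star: float, k: float, H: float, side: str = "upper") -> float:
    """One-sided ARL from Siegmund's approximation (assumed textbook form)."""
    b = H + 1.166
    # Delta = delta* - k for the upper CUSUM, -delta* - k for the lower CUSUM.
    d = (delta_star - k) if side == "upper" else (-delta_star - k)
    if abs(d) < 1e-12:
        return b * b                      # limiting case Delta = 0
    return (math.exp(-2.0 * d * b) + 2.0 * d * b - 1.0) / (2.0 * d * d)


def two_sided_arl(delta_star: float, k: float, H: float) -> float:
    """Combine the two one-sided ARLs through their reciprocals."""
    upper = siegmund_arl(delta_star, k, H, "upper")
    lower = siegmund_arl(delta_star, k, H, "lower")
    return 1.0 / (1.0 / upper + 1.0 / lower)


if __name__ == "__main__":
    # Assumed inputs: shift of one sigma, k = 0.5, H = 4.19.
    print(round(two_sided_arl(1.0, 0.5, 4.19), 2))   # out-of-control ARL
    print(round(two_sided_arl(0.0, 0.5, 4.19), 1))   # in-control ARL
```

Under these assumed inputs (a shift of one $\sigma$, $k = 0.5$, $H = 4.19$) the out-of-control value evaluates to about 8.72, which agrees with the first row of the numerical results reported in Section 5.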

3 A Multi-objective Economic Statistical Design of CUSUM chart

In this section, based on certain assumptions the expected cost per cycle has been considered for proposing a multi-objective economic statistical design of the CUSUM chart.

3.1 Assumptions for the Model

The following assumptions are deemed valid for the proposed model:

(1) The mean of the quality characteristic is assumed to follow a normal distribution.

(2) The mean of the quality characteristic shifts from $\mu_0$ to $\mu_1 = \mu_0 + \delta\sigma$.

(3) The time until the occurrence of an assignable cause follows an exponential distribution with a mean of $1/\lambda$.

3.2 Expected Cost Per Cycle

In this article, the cost model of Lorenzen and Vance, (1986) has been extended to a multi-objective economic statistical design of a CUSUM chart, because it is the most widely used statistically constrained economic model. The expected cost per cycle, $E(C)$, is:

$$
E(C) = \frac{\dfrac{C_0}{\lambda} + C_1\left(-\tau + nE + h\,ARL_1 + \gamma_1 T_1 + \gamma_2 T_2\right) + \dfrac{sY}{ARL_0} + W + \dfrac{a + bn}{h}\left(\dfrac{1}{\lambda} - \tau + nE + h\,ARL_1 + \gamma_1 T_1 + \gamma_2 T_2\right)}{\dfrac{1}{\lambda} + \dfrac{(1 - \gamma_1)\,s\,T_0}{ARL_0} - \tau + nE + h\,ARL_1 + T_1 + T_2} \tag{6}
$$

The parameters given in equation (6) are defined below:

$C_0$: Quality cost per hour for the in-control process

$C_1$: Quality cost per hour for the out-of-control process

$\tau$: Average time of occurrence of the assignable cause within the sampling interval in which it occurs, which can be determined by:

$$
\tau = \frac{1 - (1 + \lambda h)\,e^{-\lambda h}}{\lambda\left(1 - e^{-\lambda h}\right)} \tag{7}
$$

$E$: Average time to take a sample and obtain the results

$ARL_0$: Average in-control run length

$ARL_1$: Average out-of-control run length

$T_0$: Average time associated with a false alarm

$T_1$: The average time required to discover an assignable cause

$T_2$: The average time required to eliminate an assignable cause

$\gamma_1$: A binary variable that takes the value 1 if the production continues during the search for an assignable cause and 0 otherwise

$\gamma_2$: A binary variable that takes the value 1 if the production continues during the elimination of an assignable cause through intervening in the process and 0 otherwise

$s$: Average number of samples taken while the process is in control. It can be determined by:

$$
s = \frac{e^{-\lambda h}}{1 - e^{-\lambda h}} \tag{8}
$$

$Y$: The average cost for searching an assignable cause when there is none

$W$: The average cost of identifying and eliminating an assignable cause

$a$: The cost per sample for maintaining the CUSUM chart in a process

$b$: The variable cost of sampling an inspection unit
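To make the structure of the cost model concrete, the following Python sketch evaluates the commonly cited form of the Lorenzen and Vance cost function. All argument names (`C0`, `C1`, `lam`, `E`, `T0`, `T1`, `T2`, `g1`, `g2`, `Y`, `W`, `a`, `b`) follow the usual Lorenzen and Vance notation and are assumptions, as is the function name `expected_cost`. It is a sketch of the textbook model, not the authors' implementation, and it need not reproduce the values tabulated later in the paper.

```python
import math


def tau_and_s(lam: float, h: float):
    """Expected in-interval occurrence time (tau) and in-control sample count (s)."""
    e = math.exp(-lam * h)
    tau = (1.0 - (1.0 + lam * h) * e) / (lam * (1.0 - e))
    s = e / (1.0 - e)
    return tau, s


def expected_cost(n, h, arl0, arl1, *, C0, C1, lam, E, T0, T1, T2, g1, g2, Y, W, a, b):
    """Expected cost in the commonly cited Lorenzen-Vance form (assumed)."""
    tau, s = tau_and_s(lam, h)
    # Out-of-control time during which quality cost C1 accrues.
    oc_time = -tau + n * E + h * arl1 + g1 * T1 + g2 * T2
    numerator = (
        C0 / lam                                        # in-control quality cost
        + C1 * oc_time                                  # out-of-control quality cost
        + s * Y / arl0                                  # false-alarm cost
        + W                                             # search and repair cost
        + ((a + b * n) / h) * (1.0 / lam + oc_time)     # sampling cost
    )
    denominator = (
        1.0 / lam + (1.0 - g1) * s * T0 / arl0
        - tau + n * E + h * arl1 + T1 + T2
    )
    return numerator / denominator


if __name__ == "__main__":
    params = dict(C0=10, C1=100, lam=0.01, E=0.05, T0=2, T1=2, T2=2,
                  g1=1, g2=1, Y=50, W=25, a=0.5, b=0.1)
    print(expected_cost(2, 0.36, 205.5, 8.72, **params))
```

When production continues during the search (`g1 = 1`), the false-alarm time `T0` drops out of the denominator, which is why changing `T0` has no effect in that setting.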

3.3 Multi-objective Economic Statistical Design

A multi-objective design of a CUSUM chart with two objectives has been proposed in this article. Our aim is to minimize the expected cost per cycle, denoted $E(C)$, as well as the out-of-control Average Run Length ($ARL_1$). The multi-objective design is given as follows:

$$
\begin{aligned}
\text{Minimize} \quad & E(C) \\
\text{Minimize} \quad & ARL_1 \\
\text{subject to} \quad & ARL_0 \geq ARL_L, \\
& ARL_1 \leq ARL_U
\end{aligned} \tag{9}
$$

$ARL_L$ and $ARL_U$ are the respective lower and upper bounds of $ARL_0$ and $ARL_1$.

It has already been mentioned that to arrive at the economic statistical design of a CUSUM chart, one requires three decision variables, namely, $n$, $h$, and $H$. This article uses the NSGA II method to determine the optimal parameters of the multi-objective design for the CUSUM chart given in equation (9).

4 Pseudo Code for Using NSGA II

At iteration $t$, NSGA II starts with a population $P_t$ consisting of $N$ candidate solutions. It then proceeds to a loop where $N$ children are generated. Each child is created by selecting a pair of parents through binary tournament selection, with the criterion being the Crowded-comparison Operator (CCO). The CCO compares two solutions based on their Pareto rank and crowding distance, which are described below.

Pareto Rank: A solution with a lower rank (i.e., it belongs to a better Pareto front) is considered superior.

Crowding Distance: If two solutions have the same rank, the one with the higher crowding distance (i.e., it is more isolated from others) is preferred to maintain diversity.
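The two rules above can be written as a small comparison function. The following Python sketch is a minimal illustration; the `Individual` class and the function names are hypothetical and are not taken from the cited pseudocode.

```python
import random
from dataclasses import dataclass


@dataclass
class Individual:
    rank: int          # Pareto rank (1 = best front)
    crowding: float    # crowding distance within its front


def crowded_better(a: Individual, b: Individual) -> bool:
    """Return True if a is preferred to b under the crowded-comparison rule."""
    if a.rank != b.rank:
        return a.rank < b.rank          # lower rank wins
    return a.crowding > b.crowding      # same rank: more isolated solution wins


def binary_tournament(population, rng: random.Random) -> Individual:
    """Pick two distinct individuals at random and return the preferred one."""
    a, b = rng.sample(population, 2)
    return a if crowded_better(a, b) else b


if __name__ == "__main__":
    pop = [Individual(1, 0.4), Individual(2, 3.0), Individual(1, 1.2)]
    print(binary_tournament(pop, random.Random(0)))
```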

The parents chosen using the CCO criterion then undergo crossover to produce offspring, which subsequently undergo mutation. All children are stored in a matrix $Q_t$.

A combined population $R_t = P_t \cup Q_t$ is formed. Utilizing fast non-dominated sorting, the Pareto fronts $F_1, F_2, \ldots$ of $R_t$ are determined. Subsequently, the population for the next generation, $P_{t+1}$, is constructed.

$P_{t+1}$ is initially an empty set. The algorithm then sets $P_{t+1} = P_{t+1} \cup F_1$. If the cardinality of $P_{t+1}$, denoted as $|P_{t+1}|$, equals $N$, the creation of $P_{t+1}$ is concluded, and the algorithm proceeds to the next iteration. However, if $|P_{t+1}| < N$, the creation process continues. In this case, fronts are added to $P_{t+1}$ in the order of their ranking until $|P_{t+1}| = N$.

While incorporating fronts into $P_{t+1}$, it is possible that one front, denoted as $F_l$, may not entirely fit into $P_{t+1}$. In such instances, the solutions of $F_l$ are arranged in descending order of the CCO, and these ordered solutions are sequentially inserted into $P_{t+1}$ until $|P_{t+1}| = N$. Concluding or continuing the formation of $P_{t+1}$ based on its cardinality relative to $N$ keeps the population fixed in size while preserving its quality and diversity.
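The construction of the next generation described above can be sketched in Python as follows. This is a minimal illustration of fast non-dominated sorting, crowding distance, and front-by-front filling for minimization problems; it is not the cited pseudocode, and all function names are hypothetical. Solutions are represented only by their objective vectors.

```python
import math


def dominates(p, q):
    """p dominates q if it is no worse in every objective and better in one."""
    return all(a <= b for a, b in zip(p, q)) and any(a < b for a, b in zip(p, q))


def fast_non_dominated_sort(objs):
    """Return a list of fronts, each a list of indices into objs."""
    n = len(objs)
    dominated_by = [[] for _ in range(n)]   # solutions that i dominates
    counts = [0] * n                        # how many solutions dominate i
    fronts = [[]]
    for i in range(n):
        for j in range(n):
            if i == j:
                continue
            if dominates(objs[i], objs[j]):
                dominated_by[i].append(j)
            elif dominates(objs[j], objs[i]):
                counts[i] += 1
        if counts[i] == 0:
            fronts[0].append(i)
    k = 0
    while fronts[k]:
        nxt = []
        for i in fronts[k]:
            for j in dominated_by[i]:
                counts[j] -= 1
                if counts[j] == 0:
                    nxt.append(j)
        fronts.append(nxt)
        k += 1
    return fronts[:-1]                      # drop the trailing empty front


def crowding_distance(front, objs):
    """Crowding distance of each index in one front."""
    dist = {i: 0.0 for i in front}
    for d in range(len(objs[front[0]])):
        ordered = sorted(front, key=lambda i: objs[i][d])
        lo, hi = objs[ordered[0]][d], objs[ordered[-1]][d]
        dist[ordered[0]] = dist[ordered[-1]] = math.inf   # keep boundary points
        if hi == lo:
            continue
        for pos in range(1, len(ordered) - 1):
            gap = objs[ordered[pos + 1]][d] - objs[ordered[pos - 1]][d]
            dist[ordered[pos]] += gap / (hi - lo)
    return dist


def next_generation(objs, N):
    """Fill the next population front by front; split the last front by crowding."""
    selected = []
    for front in fast_non_dominated_sort(objs):
        if len(selected) + len(front) <= N:
            selected.extend(front)
        else:
            dist = crowding_distance(front, objs)
            ordered = sorted(front, key=lambda i: dist[i], reverse=True)
            selected.extend(ordered[: N - len(selected)])
        if len(selected) == N:
            break
    return selected


if __name__ == "__main__":
    combined = [(1.0, 5.0), (2.0, 3.0), (4.0, 1.0), (3.0, 4.0), (5.0, 5.0)]
    print(fast_non_dominated_sort(combined))
    print(next_generation(combined, 4))
```

Assigning an infinite distance to the boundary solutions of each front ensures that the extremes of the trade-off curve are retained when a front has to be split.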

The pseudocode for implementing NSGA-II is given in Algorithm 1. This algorithm was given by Scardua, (2021). Following this algorithm, the code to implement NSGA II has been appropriately modified for our proposed model. In order to write the code for implementing NSGA II in the realm of the CUSUM chart, certain pseudocodes are required. These pseudocodes pertaining to Pareto Ranking, Fast Non-dominated Sorting, and Crowded-comparison Operator are also given in Scardua, (2021).

It is worthwhile to mention here that NSGA II generates a set of optimal solutions that are represented as Pareto fronts. These fronts are a key concept in multi-objective optimization, particularly in evolutionary algorithms like NSGA-II. These fronts are a visual and conceptual representation of the trade-offs between multiple conflicting objectives. Each front contains solutions that are optimal in the sense that no other solution is strictly better in all objectives. The shape and distribution of solutions on the Pareto fronts provide valuable insights for decision-making in multi-objective optimization problems.

5 Numerical Example

In this section, an application of the economic design of the CUSUM chart is shown with the help of an example taken from Lee, (2011) where he considered a hypothetical set of process and cost parameters.

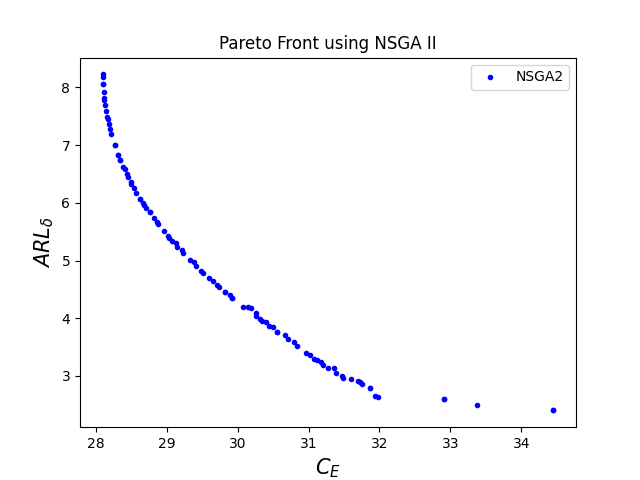

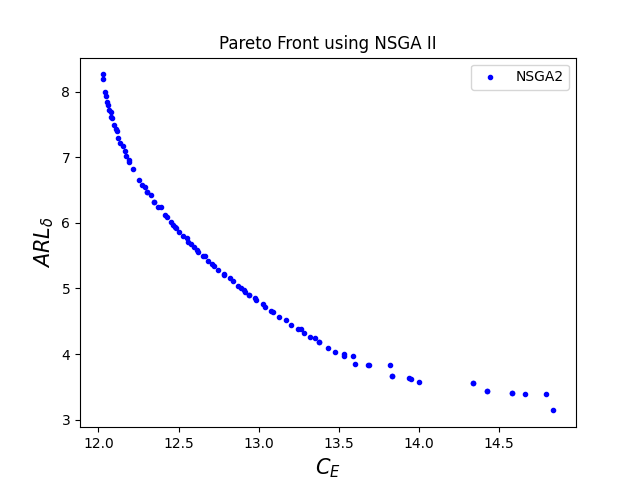

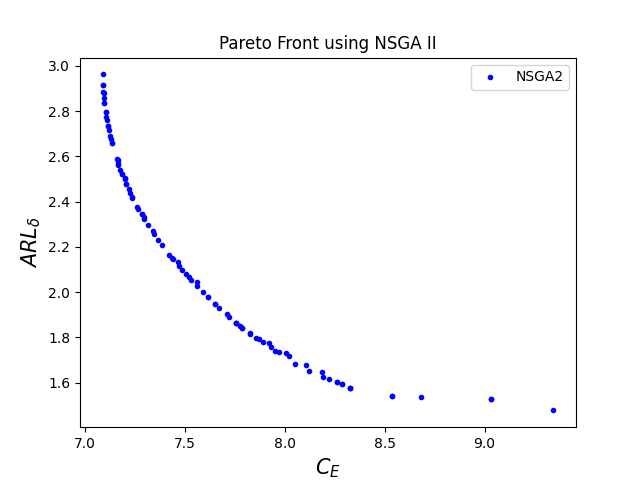

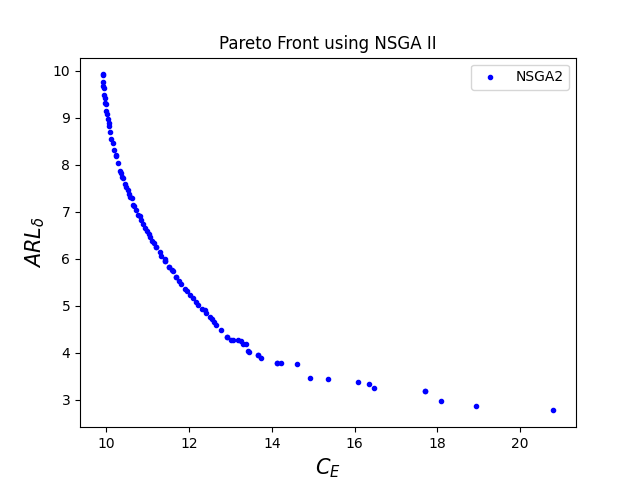

He considered a factory in which yogurt drinks are produced and filled into bottles. The target quantity of yogurt drink for each bottle is 0.02 liters. The yogurt drink is filled into fifteen bottles at a time, and those fifteen bottles are later packed in a box. Suppose that the hourly in-control quality cost is $C_0 = \$10$ and that of an out-of-control state is $C_1 = \$100$. Since the inter-occurrence time of the assignable causes was assumed to follow an exponential distribution, let us assume that assignable causes occur with a frequency of about one every hundred hours of operation. Thus, $\lambda = 0.01$. The cost per sample for maintaining the CUSUM chart and the variable cost of sampling are $a = \$0.5$ and $b = \$0.1$, respectively. The cost of investigating a false alarm is $Y = \$50$. The average cost of identifying and eliminating an assignable cause is $W = \$25$. It takes on average three minutes ($E = 0.05$ hours) to take a sample and obtain the results. It requires about $T_1 = 2$ hours to discover an assignable cause, and it requires about $T_2 = 2$ hours to eliminate the assignable cause. The lower limit on $ARL_0$ and the upper limit on $ARL_1$ are 200 and 14 units, respectively, and the constraints on $n$, $h$, and $H$ range from 2 to 20, 0.01 to 2, and 0.0001 to 5, respectively. Further, it is assumed that the process continues to operate while the search for and elimination of an assignable cause are going on (i.e., $\gamma_1 = \gamma_2 = 1$). There are 82 non-dominated solutions, so it is impractical to give all of them in a table. For this reason, Table 1 reports the solutions by percentile, beginning with the 1st percentile and then in increments of 5 percentiles (i.e., 5th, 10th, 15th, ..., 100th). The corresponding optimal Pareto front is shown in Figure 1.

| Expected cost per cycle (\$) | $ARL_1$ | $n$ | $h$ (hours) | $H$ |
|---|---|---|---|---|
| 9.50 | 8.72 | 2 | 0.36 | 4.19 |
| 9.50 | 8.49 | 2 | 0.37 | 4.07 |
| 9.52 | 8.10 | 2 | 0.40 | 3.88 |
| 9.56 | 7.69 | 2 | 0.43 | 3.67 |
| 9.61 | 7.37 | 2 | 0.44 | 3.51 |
| 9.69 | 6.94 | 2 | 0.48 | 3.29 |
| 9.75 | 6.71 | 2 | 0.53 | 3.18 |
| 9.75 | 6.71 | 2 | 0.53 | 3.18 |
| 9.84 | 6.39 | 2 | 0.55 | 3.02 |
| 9.92 | 6.15 | 2 | 0.56 | 2.89 |
| 10.04 | 5.84 | 2 | 0.65 | 2.74 |
| 10.13 | 5.63 | 2 | 0.64 | 2.63 |
| 10.24 | 5.38 | 2 | 0.71 | 2.50 |
| 10.41 | 5.05 | 2 | 0.78 | 2.33 |
| 10.55 | 4.80 | 2 | 0.82 | 2.20 |
| 10.69 | 4.58 | 2 | 0.94 | 2.09 |
| 10.92 | 4.23 | 2 | 1.07 | 1.90 |
| 11.05 | 4.13 | 2 | 0.94 | 1.85 |
| 11.16 | 3.88 | 2 | 1.15 | 1.73 |
| 11.40 | 3.62 | 2 | 1.19 | 1.59 |
| 12.05 | 3.37 | 2 | 1.03 | 1.46 |
| 13.10 | 2.92 | 2 | 1.07 | 1.22 |

The minimum value of the expected cost per cycle is \$9.50, and the pertinent optimal parameters are $n = 2$, $h = 0.36$ hours, and $H = 4.19$ units. Lee, (2011) obtained the corresponding optimal values of the expected cost, $n$, $h$, and $H$ under the normality condition as \$16.78, 2, 0.85 hours, and 1.69 units, respectively, where he used the Markov chain-based approach given by Prabhu et al., (1997) to find $ARL_0$ and $ARL_1$. If one uses the proposed multi-objective economic statistical approach, the expected cost per cycle decreases by 43.39%.
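As a quick check of the reported reduction, the relative decrease can be recomputed from the two rounded cost figures quoted above (\$16.78 and \$9.50):

$$
\frac{16.78 - 9.50}{16.78} \times 100\% = \frac{7.28}{16.78} \times 100\% \approx 43.4\%
$$

This agrees with the reported 43.39% up to rounding of the tabulated values.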

6 Sensitivity Analysis

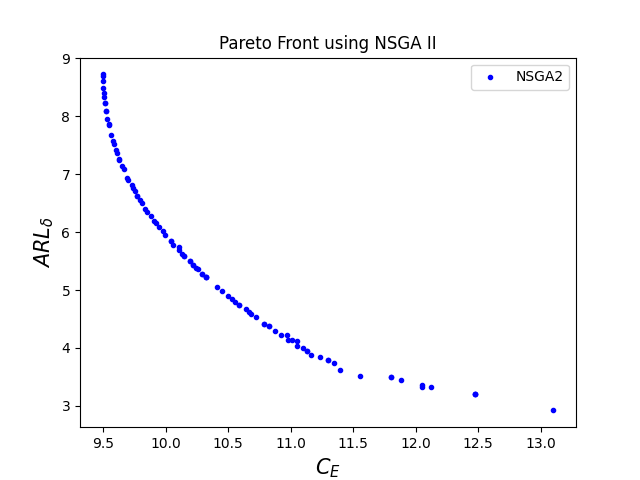

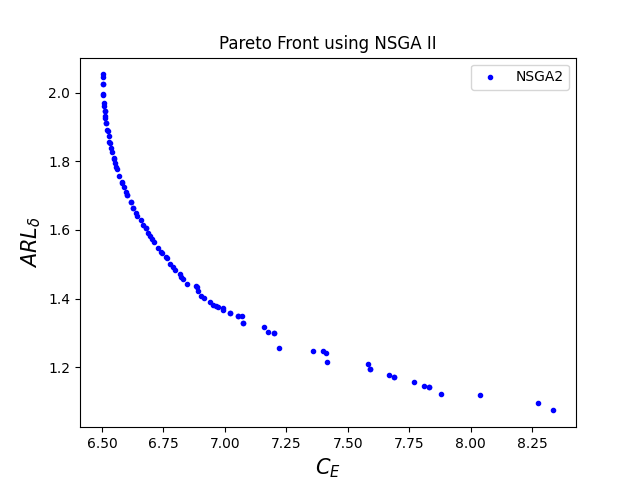

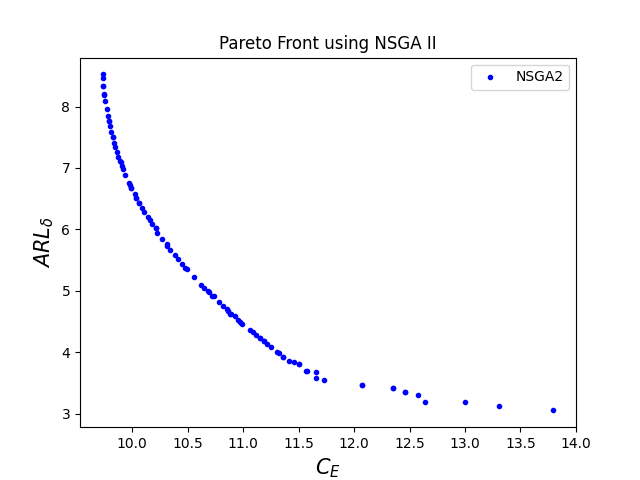

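Table 2 contains two sets of values along with the optimal parameters $n$, $h$, and $H$ for a given shift $\delta$. These are (i) the solution for which the expected cost per cycle is minimum and $ARL_1$ is maximum, and (ii) the solution for which the expected cost per cycle is maximum and $ARL_1$ is minimum. As the magnitude of the shift ($\delta$) increases, both the expected cost per cycle and $ARL_1$ decrease. However, $n$ remains the same. As the magnitude of the shift increases, $H$ decreases and $h$ increases.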

| Case | Expected cost per cycle (\$) | $ARL_1$ | $n$ | $h$ (hours) | $H$ |
|---|---|---|---|---|---|
| (i) | 9.50 | 8.72 | 2 | 0.36 | 4.19 |
| (ii) | 13.10 | 2.92 | 2 | 1.07 | 1.22 |
| (i) | 7.97 | 4.78 | 2 | 0.48 | 3.08 |
| (ii) | 10.91 | 2.03 | 2 | 1.09 | 1.00 |
| (i) | 7.09 | 2.96 | 2 | 0.64 | 2.30 |
| (ii) | 9.34 | 1.48 | 2 | 1.19 | 0.80 |
| (i) | 6.50 | 2.05 | 2 | 0.79 | 1.80 |
| (ii) | 8.34 | 1.08 | 2 | 1.46 | 0.57 |

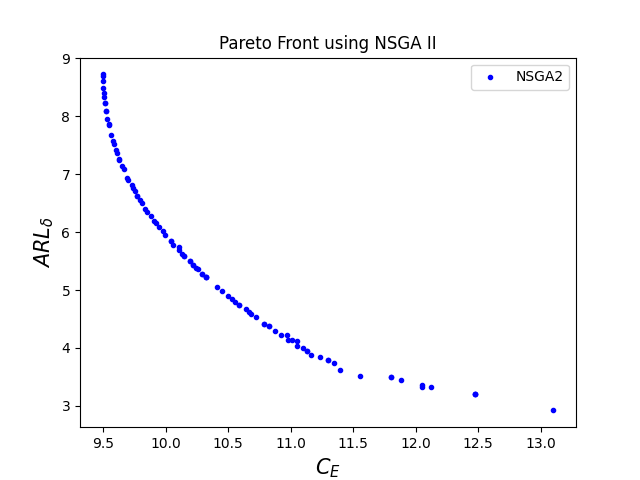

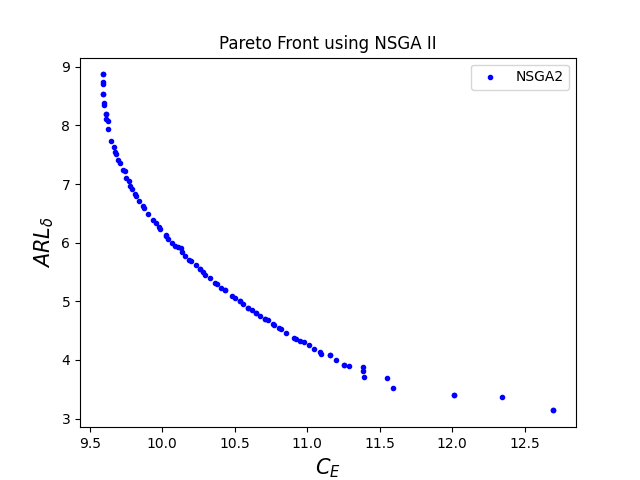

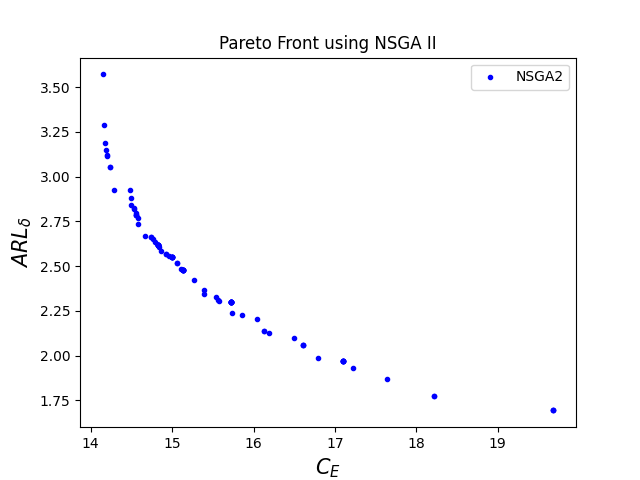

Table 3 also contains two sets of values along with the optimal parameters $n$, $h$, and $H$ when the cost parameters are increased from their respective low levels to high levels, one factor at a time. These are (i) the solution for which the expected cost per cycle is minimum and $ARL_1$ is maximum, and (ii) the solution for which the expected cost per cycle is maximum and $ARL_1$ is minimum. All the low levels of input parameters are kept the same as given in the numerical example section, and the high levels are chosen at random, since this is a common practice in research articles that deal with the economic design of control charts. For $\delta = 1.0$, Table 2 presents the corresponding values of the optimal parameters when each input variable is at its pertinent low level. The minimum change in the minimum expected cost is obtained corresponding to the parameter $C_0$, and the maximum change in the minimum expected cost is obtained corresponding to the parameter $C_1$. Similarly, the minimum change in the maximum expected cost is also obtained corresponding to the parameter $C_0$, and the maximum change in the maximum expected cost is obtained corresponding to the parameter $C_1$. Also, the minimum change in the minimum $ARL_1$ is obtained corresponding to the parameter $W$, and the maximum change in the minimum $ARL_1$ is obtained corresponding to the parameter $a$. Similarly, the minimum change in the maximum $ARL_1$ is obtained corresponding to the parameter $C_0$, and the maximum change in the maximum $ARL_1$ is obtained corresponding to the parameter $a$.

| Input parameter | Low level | High level | Expected cost per cycle (\$) | $ARL_1$ | $n$ | $h$ (hours) | $H$ |
|---|---|---|---|---|---|---|---|
| $C_0$ | 10 | 20 | 9.59 | 8.83 | 2 | 0.36 | 4.25 |
| | | | 13.02 | 3.12 | 2 | 0.96 | 1.32 |
| $C_1$ | 100 | 200 | 15.64 | 8.92 | 2 | 0.24 | 4.29 |
| | | | 20.96 | 3.42 | 2 | 0.52 | 1.48 |
| $Y$ | 50 | 100 | 9.91 | 9.92 | 2 | 0.34 | 4.79 |
| | | | 20.77 | 3.18 | 2 | 0.77 | 1.36 |
| $W$ | 25 | 50 | 9.73 | 8.52 | 2 | 0.37 | 4.09 |
| | | | 13.80 | 3.05 | 2 | 0.89 | 1.29 |
| $a$ | 0.5 | 5 | 14.15 | 3.57 | 2 | 1.87 | 1.56 |
| | | | 19.68 | 1.69 | 2 | 1.60 | 0.54 |
| $b$ | 0.1 | 1 | 12.38 | 5.17 | 2 | 1.02 | 2.39 |
| | | | 15.74 | 2.17 | 2 | 1.45 | 0.81 |
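The one-factor-at-a-time scheme used for the sensitivity analysis can be sketched as follows. The low and high levels are those listed in Table 3; the parameter names (`C0`, `C1`, `Y`, `W`, `a`, `b`) are assumed labels matched to the numerical example, and the function name `ofat_scenarios` is hypothetical. Each scenario would then be passed to the optimizer in place of the baseline inputs.

```python
from typing import Dict, Iterator, Tuple

# Low (baseline) and high levels of the cost parameters, as listed in Table 3.
LOW: Dict[str, float] = {"C0": 10, "C1": 100, "Y": 50, "W": 25, "a": 0.5, "b": 0.1}
HIGH: Dict[str, float] = {"C0": 20, "C1": 200, "Y": 100, "W": 50, "a": 5, "b": 1}


def ofat_scenarios(
    low: Dict[str, float], high: Dict[str, float]
) -> Iterator[Tuple[str, Dict[str, float]]]:
    """Yield (changed parameter, scenario) with exactly one factor at its high level."""
    for name, value in high.items():
        scenario = dict(low)        # start from the baseline every time
        scenario[name] = value      # raise only this factor
        yield name, scenario


if __name__ == "__main__":
    for name, scenario in ofat_scenarios(LOW, HIGH):
        print(name, scenario)
```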

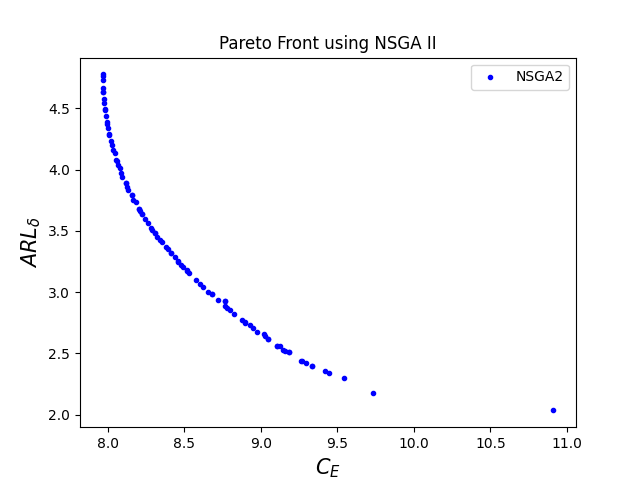

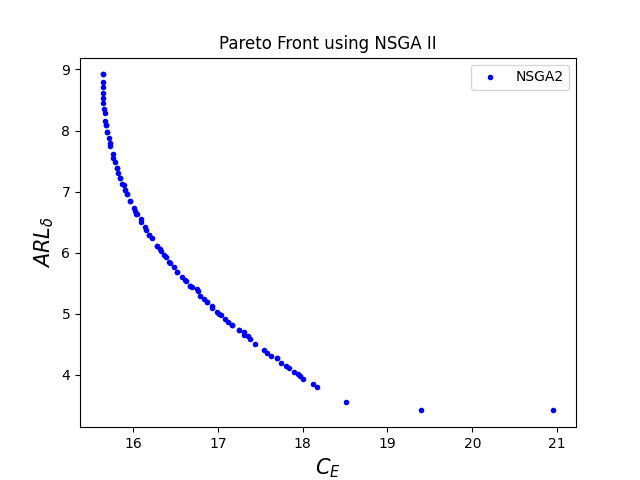

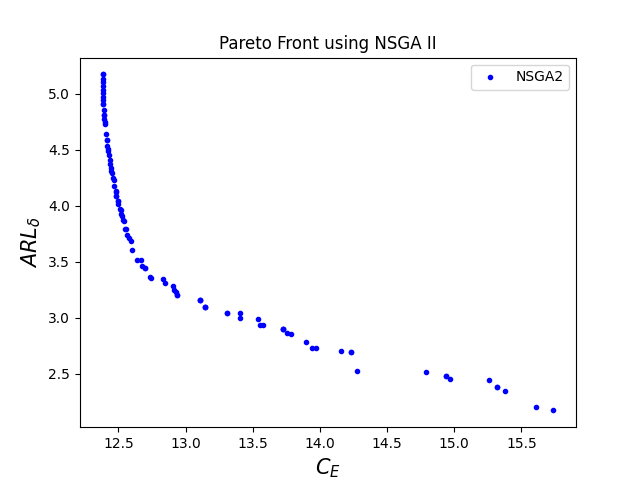

Table 4 contains two sets of values along with the optimal parameters $n$, $h$, and $H$ when the time-related parameters are increased from their respective low levels to high levels, one factor at a time. The minimum change in the minimum expected cost is obtained corresponding to the parameter $E$, and the maximum change in the minimum expected cost is obtained corresponding to the parameter $\lambda$. Similarly, the minimum change in the maximum expected cost is also obtained corresponding to the parameter $E$, and the maximum change in the maximum expected cost is obtained corresponding to the parameter $\lambda$. Also, the minimum change in the minimum $ARL_1$ is obtained corresponding to the parameters $T_1$ and $T_2$, and the maximum change in the minimum $ARL_1$ is obtained corresponding to the parameter $\lambda$. Similarly, the minimum change in the maximum $ARL_1$ is obtained corresponding to the parameter $E$, and the maximum change in the maximum $ARL_1$ is obtained corresponding to the parameter $\lambda$. The optimum values remain the same when $T_0$ is changed from its low to its high level, since the process is assumed to operate continuously while the search for and elimination of an assignable cause are going on. All of the corresponding optimal Pareto fronts are given in the Appendix.

| Input parameter | Low level | High level | Expected cost per cycle (\$) | $ARL_1$ | $n$ | $h$ (hours) | $H$ |
|---|---|---|---|---|---|---|---|
| $\lambda$ | 0.01 | 0.05 | 28.10 | 8.23 | 2 | 0.20 | 3.94 |
| | | | 34.45 | 2.40 | 2 | 0.71 | 0.94 |
| $E$ | 0.05 | 0.25 | 9.84 | 8.82 | 2 | 0.36 | 4.24 |
| | | | 12.27 | 3.26 | 2 | 1.18 | 1.40 |
| $T_0$ | 2 | 5 | 9.50 | 8.72 | 2 | 0.36 | 4.19 |
| | | | 13.10 | 2.92 | 2 | 1.07 | 1.22 |
| $T_1$ | 2 | 5 | 12.03 | 8.26 | 2 | 0.40 | 3.96 |
| | | | 14.84 | 3.14 | 2 | 1.08 | 1.34 |
| $T_2$ | 2 | 5 | 12.03 | 8.26 | 2 | 0.40 | 3.96 |
| | | | 14.84 | 3.14 | 2 | 1.08 | 1.34 |

7 Benefits and Practical Applications

The multi-objective economic statistical design of the CUSUM chart focuses on optimizing the control chart’s parameters by balancing both economic and statistical aspects simultaneously. This allows for effective monitoring and detection of small shifts, often referred to as drifts, in a process. Here are a few potential practical areas of application of this approach:

1. Manufacturing Process Control:- The CUSUM chart can be used for exercising control in production processes to detect small shifts or drifts in product quality characteristics such as weight, thickness, or other dimensions. The early detection of process deviations ensures minimal waste, reduced rework costs, and better product quality. The multi-objective economic statistical design of the CUSUM chart helps in balancing the cost of inspections with the cost of defective products.

2. Chemical and Pharmaceutical Industry:- The CUSUM chart can also be used for monitoring the concentration of chemicals or ingredients in continuous processes such as pharmaceutical and chemical manufacturing. The multi-objective economic statistical design of the CUSUM chart helps in maintaining product consistency by detecting small shifts in the chemical mixture or reaction parameters and also helps in reducing the risk of costly recalls.

3. Healthcare and Medical Processes:- The CUSUM chart has the potential to be used for monitoring vital signs of patients, such as blood pressure, blood sugar levels, or other health metrics, to detect abnormal trends. The early detection of health-related abnormalities reduces the risk of aggravation, and the multi-objective economic statistical design of the CUSUM chart can help healthcare providers balance the cost of frequent monitoring with timely interventions, ensuring efficient use of resources.

The application of the multi-objective economic statistical design of the CUSUM chart can be extended to other arenas like Food & Beverage Industry, Automotive Industry, Supply Chain & Inventory Management, Financial Market Monitoring, Telecommunications & Network Monitoring, Environmental Monitoring, and Energy & Utility Sector.

8 Conclusions

In this paper, a multi-objective economic statistical design of the CUSUM chart is proposed. The expected cost per cycle and the out-of-control Average Run Length ($ARL_1$) are considered as the two objectives. This multi-objective problem is then solved with the help of the Non-dominated Sorting Genetic Algorithm II (NSGA II). Since there is no research article on the multi-objective design of the CUSUM chart, the results are compared with those of the single-objective design proposed by Lee, (2011). The minimum value of the expected cost per cycle obtained by the proposed approach is \$9.50, and the corresponding optimal parameters are $n = 2$, $h = 0.36$ hours, and $H = 4.19$ units. The respective optimal values of the expected cost, $n$, $h$, and $H$ under the normality condition obtained by Lee, (2011) are \$16.78, 2, 0.85 hours, and 1.69 units. The proposed multi-objective economic statistical approach reduces the expected cost per cycle by 43.39%. In a practical industrial situation, if the cost and time parameters are adequately estimated, the demonstrated algorithm and pseudocode can be used for the effective implementation of the CUSUM control chart.

References

- Amiri et al., (2014) Amiri, A., Bashiri, M., Maleki, M. R., and Moghaddam, A. S. (2014). Multi-objective Markov-based economic-statistical design of EWMA control chart using NSGA-II and MOGA algorithms. International Journal of Multicriteria Decision Making, 4(4):332–347.

- Bashiri et al., (2014) Bashiri, M., Amiri, A., et al. (2014). Multi-objective economic-statistical design of cumulative count of conforming control chart. International Journal of Engineering Transactions B: Applications, 27(10):1591–1600.

- Celano and Fichera, (1999) Celano, G. and Fichera, S. (1999). Multiobjective economic design of an X control chart. Computers & Industrial Engineering, 37(1-2):129–132.

- Chiu, (1974) Chiu, W. (1974). The economic design of CUSUM charts for controlling normal means. Journal of the Royal Statistical Society Series C: Applied Statistics, 23(3):420–433.

- Chung, (1992) Chung, K.-J. (1992). Economically optimal determination of the parameters of CUSUM charts. International Journal of Quality & Reliability Management, 9(6).

- Deb, (1999) Deb, K. (1999). Solving goal programming problems using multi-objective genetic algorithms. In Proceedings of the 1999 Congress on Evolutionary Computation-CEC99 (Cat. No. 99TH8406), volume 1, pages 77–84. IEEE.

- Deb, (2011) Deb, K. (2011). Multi-objective optimization using evolutionary algorithms: an introduction. Springer.

- Duncan, (1956) Duncan, A. J. (1956). The economic design of $\bar{X}$-charts used to maintain current control of a process. Journal of the American Statistical Association, 51:228–242.

- Faraz and Saniga, (2013) Faraz, A. and Saniga, E. (2013). Multiobjective genetic algorithm approach to the economic statistical design of control charts with an application to $\bar{X}$ and $S^2$ charts. Quality and Reliability Engineering International, 29(3):407–415.

- Goel and Wu, (1973) Goel, A. L. and Wu, S. (1973). Economically optimum design of CUSUM charts. Management Science, 19(11):1271–1282.

- Hooke and Jeeves, (1961) Hooke, R. and Jeeves, T. A. (1961). “Direct search” solution of numerical and statistical problems. Journal of the ACM (JACM), 8(2):212–229.

- Hu et al., (2024) Hu, X., Xia, F., Zhang, J., and Song, Z. (2024). Combined Shewhart–EWMA and Shewhart–CUSUM monitoring schemes for time between events. Quality and Reliability Engineering International.

- Lee, (2011) Lee, M. (2011). Economic design of cumulative sum control chart for non-normally correlated data. MATEMATIKA: Malaysian Journal of Industrial and Applied Mathematics, pages 79–96.

- Lorenzen and Vance, (1986) Lorenzen, T. J. and Vance, L. C. (1986). The economic design of control charts: a unified approach. Technometrics, 28(1):3–10.

- Lupo, (2014) Lupo, T. (2014). A multi-objective design approach for the c chart considering Taguchi loss function. Quality and Reliability Engineering International, 30(8):1179–1190.

- Mobin et al., (2015) Mobin, M., Li, Z., and Khoraskani, M. M. (2015). Multi-objective X-bar control chart design by integrating NSGA-II and data envelopment analysis. In IIE Annual Conference. Proceedings, page 164. Institute of Industrial and Systems Engineers (IISE).

- Morabi et al., (2015) Morabi, Z. S., Owlia, M. S., Bashiri, M., and Doroudyan, M. H. (2015). Multi-objective design of $\bar{X}$ control charts with fuzzy process parameters using the hybrid epsilon constraint PSO. Applied Soft Computing, 30:390–399.

- Mukhopadhyay, (2001) Mukhopadhyay, A. R. (2001). Statistical process control procedure for controlling moisture content in tobacco. Total Quality Management, 12(3):299–306.

- Nenes and Tagaras, (2006) Nenes, G. and Tagaras, G. (2006). The economically designed CUSUM chart for monitoring short production runs. International Journal of Production Research, 44(8):1569–1587.

- Ong et al., (2023) Ong, K., Teh, S., Saha, S., Khoo, M., and Soh, K. (2023). Optimal design of $S^2$-EWMA control chart for short production runs. Quality and Reliability Engineering International, 39(7):2881–2904.

- Pan and Su-Tsu, (2005) Pan, J.-N. and Su-Tsu, C. (2005). The economic design of CUSUM chart for monitoring environmental performance. Asia Pacific Management Review, 10(2).

- Pour et al., (2024) Pour, A. N., Azizi, A., Rahimzadeh, A., Ershadi, M. J., and Zeinalnezhad, M. (2024). Designing a log-logistic-based EWMA control chart using MOPSO and VIKOR approaches for monitoring cardiac surgery performance. Decision Making: Applications in Management and Engineering, 7(1):342–363.

- Prabhu et al., (1997) Prabhu, S. S., Runger, G. C., and Montgomery, D. C. (1997). Selection of the subgroup size and sampling interval for a CUSUM control chart. IIE Transactions, 29(6):451–457.

- Safaei et al., (2012) Safaei, A. S., Kazemzadeh, R. B., and Niaki, S. T. A. (2012). Multi-objective economic statistical design of control chart considering Taguchi loss function. The International Journal of Advanced Manufacturing Technology, 59:1091–1101.

- Scardua, (2021) Scardua, L. A. (2021). Applied Evolutionary Algorithms for Engineers Using Python. CRC Press.

- Siegmund, (1985) Siegmund, D. (1985). Sequential analysis: tests and confidence intervals. Springer Science & Business Media.

- Simpson and Keats, (1995) Simpson, J. R. and Keats, J. B. (1995). Sensitivity study of the CUSUM control chart with an economic model. International Journal of Production Economics, 40(1):1–19.

- Taylor, (1968) Taylor, H. M. (1968). The economic design of cumulative sum control charts. Technometrics, 10(3):479–488.

- Wang and Li, (2020) Wang, C.-H. and Li, F.-C. (2020). Economic design under gamma shock model of the control chart for sustainable operations. Annals of Operations Research, 290(1):169–190.

- Woodall and Adams, (1993) Woodall, W. H. and Adams, B. M. (1993). The statistical design of CUSUM charts. Quality Engineering, 5(4):559–570.

- Yang et al., (2012) Yang, W.-a., Guo, Y., and Liao, W. (2012). Economic and statistical design of $\bar{X}$ and S control charts using an improved multi-objective particle swarm optimisation algorithm. International Journal of Production Research, 50(1):97–117.

- Yeganeh et al., (2021) Yeganeh, A., Shadman, A., and Amiri, A. (2021). A novel run rules based MEWMA scheme for monitoring general linear profiles. Computers & Industrial Engineering, 152:107031.

- Zandieh et al., (2019) Zandieh, M., Hosseinian, A. H., and Derakhshani, R. (2019). A hybrid NSGA-II-DEA method for the economic-statistical design of the c-control charts with multiple assignable causes. International Journal of Quality Engineering and Technology, 7(3):222–255.

- Zhang et al., (2024) Zhang, Y., Yan, M., and Ke, C. (2024). Joint design of VSI Tr control chart and equipment maintenance in high quality process. Journal of Process Control, 136:103177.

- Zhao et al., (2020) Zhao, Y., He, X., Pecht, M. G., Zhang, J., and Zhou, D. (2020). Detection and detectability of intermittent faults based on moving average $T^2$ control charts with multiple window lengths. Journal of Process Control, 92:296–309.

9 Appendix

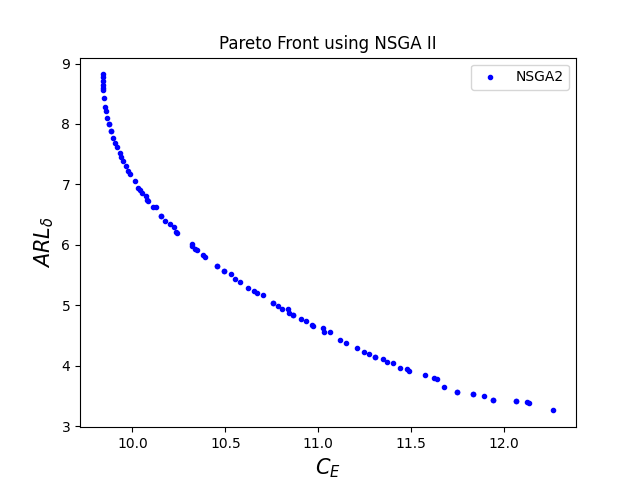

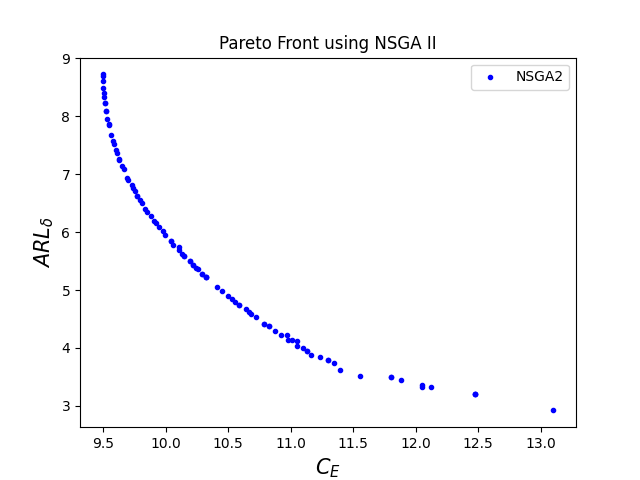

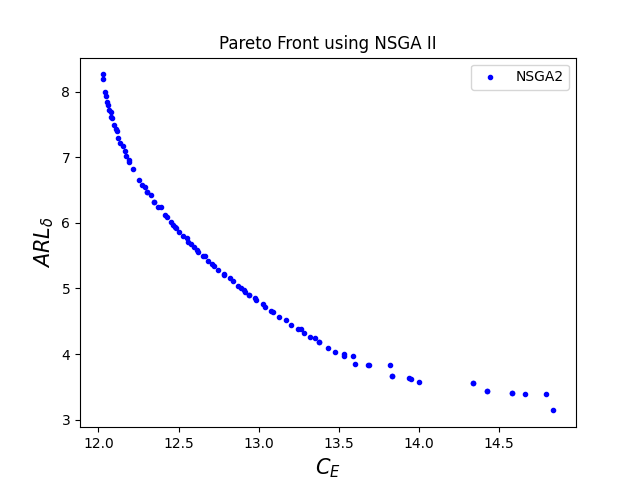

The corresponding optimal Pareto fronts for different values of are given in figure 2.

The corresponding optimal Pareto fronts for high levels of cost parameters are given in figure 3.

The corresponding optimal Pareto fronts for high levels of time-related parameters are given in figure 4.