A Modular Framework for

Centrality and Clustering in Complex Networks

Abstract

Abstract: The structure of many complex networks includes edge directionality and weights on top of their topology. Network analysis that can seamlessly consider combination of these properties are desirable. In this paper, we study two important such network analysis techniques, namely, centrality and clustering. An information-flow based model is adopted for clustering, which itself builds upon an information theoretic measure for computing centrality. Our principal contributions include a generalized model of Markov entropic centrality with the flexibility to tune the importance of node degrees, edge weights and directions, with a closed-form asymptotic analysis. It leads to a novel two-stage graph clustering algorithm. The centrality analysis helps reason about the suitability of our approach to cluster a given graph, and determine ‘query’ nodes, around which to explore local community structures, leading to an agglomerative clustering mechanism. The entropic centrality computations are amortized by our clustering algorithm, making it computationally efficient: compared to prior approaches using Markov entropic centrality for clustering, our experiments demonstrate multiple orders of magnitude of speed-up. Our clustering algorithm naturally inherits the flexibility to accommodate edge directionality, as well as different interpretations and interplay between edge weights and node degrees. Overall, this paper thus not only makes significant theoretical and conceptual contributions, but also translates the findings into artifacts of practical relevance, yielding new, effective and scalable centrality computations and graph clustering algorithms, whose efficacy has been validated through extensive benchmarking experiments.

Index Terms:

Directed Weighted Graphs, Entropy, Centrality, Graph Clustering, Random Walkers.1 Introduction

Complex networks [9] are a form of structured data that model interactions among individuals in diverse real-life applications. New instances of complex networks may be studied with existing techniques, or may motivate the introduction of new approaches. An example of a relatively recent and fast evolving complex network is the Bitcoin network, where individuals or nodes are Bitcoin addresses, and edges represent transactions from one address to another; they are thus directed and weighted. There are many reasons to study the Bitcoin network and other cryptocurrency networks as complex networks: understanding their topology and their evolution over time in turn offers economic or societal insights. Centrality analysis and clustering (also known as community detection) are two popular techniques that are used to study network structures and could be applied.

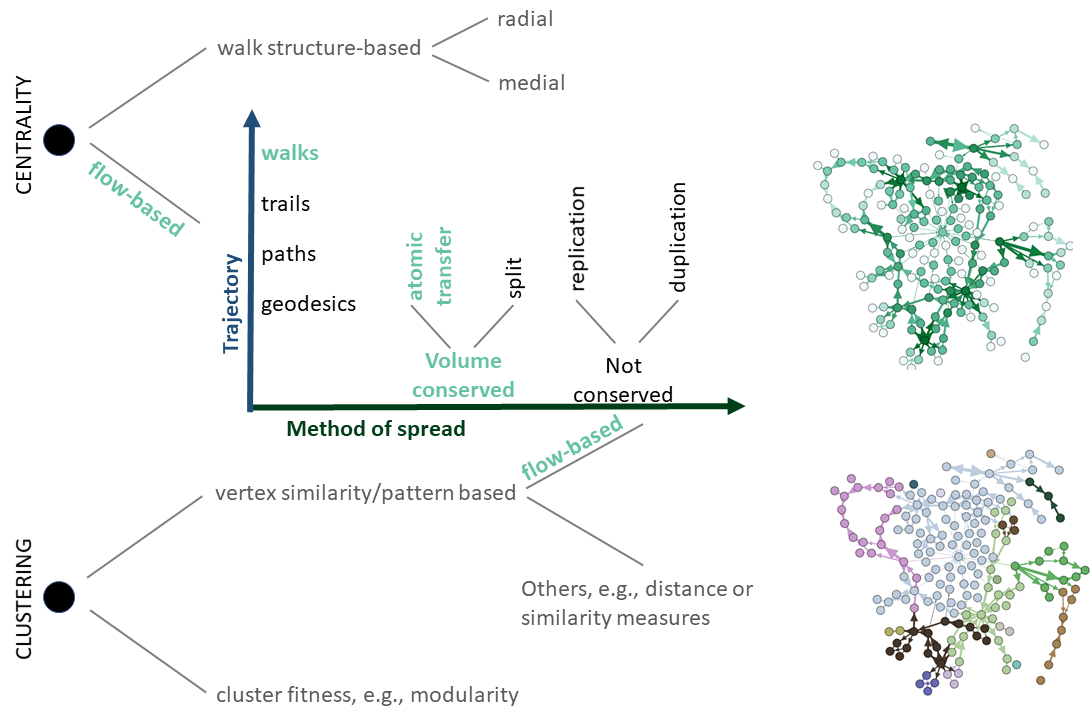

Centrality: The identification of key individuals in a complex network is often done using centrality, a family of measures that captures the importance of nodes in a graph. Centrality measures are often categorized (see Figure 1) by either flow or walk structure. (i) Flow-based measures come with a 2-dimensional typology [6]: the trajectory of the flow (categorized as - geodesics, paths where neither nodes nor edges are repeated, trails where nodes are repeated but edges are not, and walks, where both nodes and edges can be repeated) and the method of spread (which includes - transfer of an atomic flow, duplication or replication of the flow or a split of a volume conserved flow). (ii) Walk structure-based measures are partitioned into radial centralities (counting walks which start/end from a given vertex, e.g. the degree and the eigenvalue centralities) and medial centralities (counting walks which pass through a given vertex, e.g. betweenness centrality).

Our focus is on flow-based measures, motivated by the study of phenomena such as the movement of cryptocurrencies across a network from one sender to a receiver, where the volume of the flow is conserved. In [36], it was argued that the existing flow-based centrality measures back then left a void in the typology because they did not capture well the idea of path-transfer flow. Accordingly entropy, as a measure of uncertainty of dispersal, was advanced as a new centrality measure. The idea was modified in [23], where the path restriction was relaxed from the original idea of [36], allowing for the study of the flow as a random walker using a Markov model.

In order to distinguish senders from receivers, and consider equivalent or distinct (as the need of a specific analysis might be) the users involved in transactions involving large amounts from users carrying out large number of transactions of small amounts, and other such nuances, it is desirable to have the flexibility to consider the directionality and weights of the edges, which could carry their own semantics. While a notion of weighted degree centrality has been proposed [28], this paper proposes a notion of flow-based centrality which is seamlessly adjustable to undirected, directed and/or weighted graphs. Akin to [23], our work uses a random walker starting its walk at any given node, whose centrality is captured by the spread of the walk, measured in terms of entropy, and an absorption probability is used to model the walker stopping at any destination. While [23] rendered the usage of entropic centrality more practical than [36] by introducing the idea of a random walker, it also has limitations both in the theoretical exploration of the concepts as well as the resulting practical implications. These limitations, together with the potential of the ideas themselves, serve as motivations for our current work. Particularly, we provide, among other improvements, closed form expressions to characterize the behaviour of the walker. The specific details are elaborated when we discuss our contributions below.

Graph clustering: A set of nodes that share common or similar features are considered to comprise a cluster in a network. A general understanding of a good cluster is that it is dense (nodes within are well connected), and have few connections with the rest of the network [35, 22]. Beyond that, there is no unique criterion that necessarily captures or reflects the quality of the clusters. Depending on the particular aspects being captured, different approaches may be relevant, and likewise, different outcomes may be expected and desirable. In [35], two broad families of graph clustering methods have been identified (see Figure 1) - (i) those based on vertex similarity in some manner, e.g., distance or similarity measure which may or not take into account structural information (for instance, web-page similarity may consider only content similarity, or may also consider the hyperlinks to determine similarity), adjacency or connectivity based measures; and (ii) those based on cluster fitness measures, including density and cut-based measures, e.g., modularity. In [22], the former are referred to as pattern based, since these methods go beyond basic edge density characteristics. They include algorithms relying on random walkers [1, 4, 16], where the basic premise is that random walks originating at a given ‘query’ node is more likely to stay confined within a subgraph which forms a local community containing the query node, than to visit nodes outside said community. The probability distribution of a random walker’s visit (and absorption) at different nodes in the graph is used as a signal to identify cluster boundaries.

It is observed in [22] that while numerous clustering algorithms exist for undirected (weighted) graphs (see [35] for a survey of well known clustering techniques for undirected graphs), clustering algorithms for directed graphs are fewer. Most algorithms rely on creating an “undirected” representation of the directed graph considered, which may result in the loss of semantics and information contained in the directionalities. Examples of notable exceptions where the clustering algorithm is designed specifically for directed graphs are the information flow approaches of [34], where clusters are identified as subgraphs where the information flow within is larger compared to outside node groups, and [16], where an information flow-based cluster is a group of nodes where a random walker (referred as surfer in the work) is more likely to get trapped, rather than moving outside the group. Tellingly, the other random walker based approaches mentioned above, namely [1, 4], because of the additional constraints imposed on the walkers in those algorithms, are only suitable for undirected unweighted graphs.

Since directionality is of importance in many set-ups such as the example of a cryptocurrency network, we will focus on clustering algorithms that can handle both (un)directed and/or (un)weighted graphs, and thus we explore flow circulation based algorithms, which falls within the pattern based (vertex similarity) family of graph clustering algorithms.

This choice has a natural coupling with our proposed entropic centrality measure. Under the premise of confinement of flows within a subset of nodes as a way to interpret community structures, one may further consider the flow using any of the many characterization of flows described above in the context of centrality measures, including those supporting the treatment of directed and weighted graphs, each in turn capturing distinct real world phenomena. We would like to emphasize that these distinct approaches of interpreting the flows are not meant to compete with each other (let alone, compete with other clustering algorithms such as those based on local density), and are instead targeted at modelling and capturing distinct behaviors. Given a complex network which is inherently both directed and weighted, e.g., the Bitcoin network, one may need to study its properties considering its directionality, with or without the influence of weights, considering possibly different interpretation of weights, or just its bare topology. It is thus important to have a single clustering method with enough versatility to accommodate and instantiate the spectrum of combinations. Our proposed approach is designed to meet this particular objective.

Organization. The paper is logically organized in two halves: the first part (Sections 2 and 3) is dedicated to the centrality analysis, while the second (Section 4), which relies on the first part, delves into the clustering algorithm. Relevant experiments are reported within the corresponding sections.

In Section 2, we carry out a study of the proposed Markov entropic centrality for unweighted (un)directed graphs. We coin the term ‘Markov’ entropic centrality, as opposed to the original path based model studied in [36], because our model leverages the random walks being absorbed in an abstraction called auxiliary nodes with certain probability, and the future steps of the random walker depends solely on its current location, irrespective of its past. We analyze the behavior of this centrality as a function of (a) the probability that the walker stops at given nodes, and (b) time (or number of steps in the walk). Results are analytically proven in terms of bounds and a closed form expression captures the asymptotic behavior of the random walk probability distribution for unweighted directed graphs (undirected graphs can be readily captured by introducing directed edges for both directions), in contrast to the numerical computations carried out in [23]. An immediate practical implication of such a closed form solution instead of reliance on numerical computations is computational efficiency and scalability of the model. Our analysis provides a thorough understanding of the role of an associated parameter termed as the absorption probability, as well as the flexibility to explore the probability being a constant at all nodes, or being dependent on the individual nodes’ degrees — which in turn makes the model versatile to capture different situations, and enables to reason about the choice of the parameter. This is in contrast to the ad-hoc choices used for experiments in [23]. Finally, we introduce the notion of Markov entropic centralization which we study analytically in the asymptotic time regime as a way to provide further insights regarding the relative importance of the nodes.

In Section 3, we generalize the theory of entropic centrality for weighted graphs, taking into account the need to accommodate different interpretations of edge weights, and its competition with number of edges, in determining node centrality, e.g., tune the model as per the need of an analysis to let an edge with weight 3 have either more or less or equal contribution to a node’s centrality as three edges of weight 1. We refer to [28] for a more elaborate discussion on interpreting centrality for weighted graphs. This is furthermore in contrast to the treatment in [23], where weighted graphs are dealt with by changing the transition probabilities of the random walker as per edge weights, which is one specific instance within our tunable model.

Overall, Sections 2 and 3 result in our first set of contributions, which is vis-à-vis entropic centrality. We provide (i) a generalization of the entropic centrality model, which can be tuned to capture a spectrum of combinations in terms of the role of the edges’ weights and directionality, accompanied with (ii) a mathematically rigorous analysis which helps understand the role of the model parameters and choose them judiciously, and ultimately resulting in (iii) a computationally, significantly efficient and thus scalable model with respect to prior work.

Section 4 contains the proposed clustering algorithm. The above analysis of the random walker probability distributions carried out in the context of entropic centrality, accompanied with an analytical study of the graph centralization measure which captures how tightly the graph is organized around high centrality nodes, directly lends itself to the design of a novel graph clustering (community detection) algorithm, which amortizes the computations carried out for computing the node centralities. Foremost, from the study of the (absorption) probability distributions of the random walkers and the resulting entropic centrality scores of the nodes, we observed that: (i) nodes with a low entropic centrality have local clusters where ties are relatively strong, while nodes tend to have a relatively high entropic centrality when they either are embedded within a large cluster, or when they are at the boundary of two clusters (and could arguably belong to either), and moreover, (ii) sharp changes in the random walker absorption probability distribution signal boundaries of local community structures.

This leads to a two-stage clustering algorithm: First, observing that nodes at the boundary of clusters often act as hubs and have high centrality, we determine meaningful node centric local communities around nodes with low entropic centrality. This approach inherits the flexibility of the underlying centrality model, and is applicable to the spectrum of combinations (un/directed, un/weighted) of graphs. Furthermore, the strong coupling of the algorithm’s design with the aforementioned centrality measure allows to initiate the random walkers in an informed manner, in contrast to previous works based on random walks, such as [1, 4], which, in absence of any guidance on where to initiate random walks needed more complex (computationally, as well as in terms of implementation and analysis), constrained random walkers. Second, the process is reapplied on the created clusters (instead of the nodes) to effectuate a bottom-up, scalable, hierarchical clustering. Working principles behind the heuristic algorithms are supported by formal derivations, and the performance of the algorithms have been tested with experiments in terms of sensitivity and correctness, as well as through rigorous benchmarking using synthetic and real world networks with ground truth.

Finally, the existing flow based clustering approaches (and most clustering approaches, in general) do not have an inherent way to reason about the quality of clusters they may yield, given any graph. In contrast the distribution of the relative values of entropic centrality of nodes and graph centralization also inform us on whether and how much a given graph is amenable to the use of our approach for carrying out clustering.

In [23], a radically different approach (adapting [11]) for community detection is applied, where edges are iteratively removed, and in each iteration one needs to identify the edge such that the average entropic centrality over all the nodes is reduced the most. This requires the computation of entropic centrality of all the nodes multiple times for the different resulting graph snapshots from edge removals, and do so numerically over and over again. Naturally, the approach is computationally intensive and not scalable. For a specific undirected unweighted graph, our algorithm took 1.072 seconds to compute the communities, which is multiple orders of magnitude faster than the 3196.07 seconds needed by the edge removal algorithm [23] (more details on the specific experiments can be found in Subsection 4.4). The communities detected by our approach were also qualitatively better for the network where the two approaches were compared head-to-head. Moreover, the authors in [23] noted that their approach was not useful for community detection in directed graphs. We demonstrate with experiments that our approach works also with directed graphs.

To summarize, our second set of contributions are vis-à-vis graph clustering. The salient aspects of our work comprise (i) the use of entropic centrality (and underlying analysis) to inform on whether our approach will yield good quality of clusters given a graph, and if so, (ii) how to identify good candidate ‘query nodes’ around which initial sets of local community structures should be explored, which in turn yields (iii) an effective novel two-stage clustering algorithm even while using a single random walker (as opposed to prior studies, where random walkers initiated at random query nodes were shown not to be effective, necessitating more complex and constrained walks by multiple random walkers). Our clustering algorithm (iv) amortizes the previous computation of entropic centralities in computing these communities, and (v) inherits (from the underlying centrality model) the flexibility of interpreting the roles of edge weights and directionality, allowing for capturing different behaviors of a weighted directed graph as per application needs. Our approach is thus one of the few graph clustering approaches in the literature that handles directed graphs naturally, and additionally, it allows for flexible incorporation and interpretation of edge weights.

Experiments and Benchmarking: Emerging complex networks such as the Bitcoin network motivated the introduction of a framework for centrality and clustering that handles a variety of angles from which the network structures can be studied, considering in combination the direction and weight of edges. The principal objective of the proposed clustering algorithm is to seamlessly accommodate the various combinations in which the network can be interpreted. A second objective is to ensure that our approach also yields meaningful clusters in general, and in particular, that the obtained results are meaningful when applied to standard graphs used for graph clustering benchmarking. Experiments to support our work are designed accordingly.

Clustering of Bitcoin transaction subgraphs [24, 25] is performed to explore the versatility of our model vis-à-vis the spectrum of choices in interpreting the directionality and weights of edges. Since no ground truth is known, we compare the obtained results with an existing Bitcoin forensics study [32].

Evaluating in general the quality of a clustering algorithm is a debated topic [37, 30]. Complications arise because of multiple reasons, including that different clustering algorithms may identify communities which may reflect different aspects of relationships and thus different results may not necessarily be wrong or worse; there may be multiple interpretations of relationships, resulting in different ‘ground truths’ being valid. We thus report results from a variety of networks, falling into two categories: (a) synthetic networks, and (b) real world graphs with meta-information used to assign a ground truth.

We apply our algorithm on synthetically generated networks, which eliminates the ambiguity of ‘ground truth’ since it is determined by design. We refer to [17, 18] for more details advocating the use of synthetic graphs for benchmarking community detection algorithms. A caveat with using synthetic benchmark graphs is that, they may themselves be created following certain rules, e.g., [18] is generated guided by local density measures. That in turn may favor specific families of clustering algorithms. Ranging from small graphs (of 100 to 500 nodes) to larger graphs (of 1000 and 4000 nodes), the proposed algorithm fares well and is comparative with Louvain [5], with our results showing neither algorithm clearly outperforming the other in determining the clusters, but rather, each algorithm outperforming the other depending on individual instances.

We carry out comparative studies with classical networks and connect these experimental studies back to the conceptual foundations of our approach, which allow us to understand whether our approach is expected to yield good clustering results for a given graph depending on the distribution of the relative values of entropic centralities. This includes the classical karate club [38] and the small cocaine dealing network [8] to interpret and explain the meaning of our model and validate the corresponding findings guided by the well understood narrative associated with these graphs, but also the dolphin network [21] and the American football network [11], for which the performance of our clustering algorithm is compared with well known algorithms, namely Louvain [5], Infomap [34] and label propagation [2]. Remark: All experiments and implementations presented in this paper were done in Python, using in particular the libraries NetworkX [14] and Scikit-Learn [29]. All graphs were drawn using either NetworkX or Gephi [3].

2 Entropic Centrality for Unweighted Graphs

A right stochastic matrix is a square matrix, where every coefficient is a non-negative real number and each row sums to 1. A right stochastic matrix describes a Markov chain , , over states. The coefficient of is the probability of moving from state to state , and since the total of transition probabilities from state to all other states must be 1 by definition of probability, the matrix is indeed a right stochastic matrix, called a transition matrix. To show that is again a right stochastic matrix for any positive integer , take the all 1 column vector and notice that for a right stochastic matrix. Then . Let be the th row of , and be its th column. Their product determines the probability of going from the state to the state in two steps, where the intermediate state is , for any possible state . In general, gives the probability transition of going from any state to any another state in steps.

Consider now a connected directed graph with nodes labeled from to and directed edges, and a random walker on , which starts a random walk at any given node according to an initial probability distribution. If the walker decides on which node to walk to, based only on the current node at which it currently is ( does not have to be distinct from ), the random walk is a Markov chain, which can be modeled by a stochastic matrix where is the probability to go to node from node . We will assume that every node has a self-loop (a self-loop is needed for the model to encapsulate the case of nodes with zero out-degree.) Let denote the out-degree of , which includes the self-loop as per our model. A typical choice that we consider in this section is for every node belonging to the set of out-neighbors of (this is saying that each neighbor is chosen uniformly at random). Other choices are possible, and indeed, subsequently in this paper, we shall consider the case of weighted graphs where the transition probabilities depend on the edge weights.

2.1 Markov Entropic Centrality

The notion of entropic centrality for a node in an undirected graph was originally defined in [36] as

| (1) |

where denotes the probability of reaching from following any path. This formalizes the idea that a node is central if there is a high uncertainty about the destination of a random walker starting at it, assuming that at each hop, the random walker is equally likely to continue to any unvisited node, or to stop there. This is because the Shannon entropy of a random variable measures the amount of uncertainty contained in . Entropic centrality given in (1) was then extended to non-atomic flows in [26, 27] and to a Markov model in [23] by relaxing the assumption (in [36]) that a random walker cannot revisit previously traversed nodes. In this paper we generalize the Markov entropic centrality model. This notion of centrality captures the influence of “friends”, and of friends of friends (see e.g. [15] for a discussion of the role of friends in considering centralities).

To define a notion of entropic centrality similar to (1), the random walker actually needs to have the choice of stopping at any given node. This is not well captured by the transition matrix described above, even if there is a self-loop at every node. Thus for every node in , an auxiliary node is added [23], together with a single directed edge , and a probability to be chosen (the original probabilities are adjusted to the probabilities so that the overall matrix remains stochastic). Once the flow reaches an auxiliary node, it is absorbed because the auxiliary nodes have no outgoing edges. This gives a notion of Markov entropic centrality as defined in [23].

Definition 1

The Markov entropic centrality of a node at time is defined to be

| (2) |

where is the probability to reach at time from , for a node in where is the probability to reach an auxiliary node from .

A node is central if is high: if is high, using the underlying entropy interpretation, this means that for a random walker starting at node , the uncertainty about its destination after steps is high, thus is well connected.

The time parameter can also be interpreted as a notion of locality. It describes a diameter around the node , of length steps. Thus the entropic centrality at emphasizes a node’s degree, being the graph diameter implies that we are considering a period of time by when the whole graph can be first reached, and describes the asymptotic behavior over time. Thus can be regarded as a measure of influence of over its close neighborhood for small values of , and over the whole graph asymptotically.

Given an stochastic matrix describing the moves of a random walker on a directed graph, let us then introduce auxiliary nodes, one for each node , , with corresponding probabilities to walk from node to node . This means the out-degree of increases by 1 because of the addition of . Nevertheless, by , we will actually refer to the degree of before the addition of . This creates the following right stochastic matrix

where is a diagonal matrix, and is such that . We assume that has for -th row . Then for every . This is alternatively written as . The identity matrix represents the stoppage of the flow at the auxiliary nodes (an absorption of the flow arriving at these nodes). To determine the centrality of a specific node , we assume an initial distribution that gives a probability of 1 to start at , and elsewhere.

The definition of Markov entropic centrality was used as part of a clustering algorithm in [23], and the probabilities were explored numerically to optimize the clustering results. Our first contribution is the following closed-form expression for the asymptotic behavior of the transition matrix.

Lemma 1

For an integer and , we have

| (3) |

In particular

| (4) |

when .

Proof:

Formula (3) follows from an immediate computation. Since have non-negative real coefficients, so has , thus for any , and equality holds if and only if for some . But this must exist when , because is a stochastic matrix, meaning that the sum of every row must remain 1, while the coefficients of increases at every increment of . Then

but since , the matrix is invertible, , yielding (4). ∎

The matrix in (3) contains a first block whose coefficients are the probabilities to go from to in steps. The second block contains as coefficients the probabilities to go from to in steps, which we denote by . Therefore the probabilities in (2) are obtained from the th row of the matrix , by summing the coefficients in the columns and . When , the term in column becomes , and we are left with the term in column ,

of the form

This means that we are looking at the probability to start at and reach in steps, and then to get absorbed at (that is reaching , and then not leaving ). Asymptotically, the entropic centrality defined in (2) becomes

We discuss next how the choice of the probabilities to reach an auxiliary node from influences the random walker at time .

Lemma 2

The probability to start a walk at and to reach in steps is bounded as follows:

Proof:

Since , we have

and we observe that the minimization is taken over all walks from to in steps, or more precisely over all nodes involved in such walks, except and . Certainly for in such a walk, . The other inequality can be established identically, and thus

For small or for an isolated node, better bounds are obtained by replacing the minimum/maximum over all nodes by those in the different walks from to , but for large, starting from , it is likely that for most well connected nodes, the bounds are getting tight. ∎

Corollary 1

In particular:

-

1.

If for all , we have .

-

2.

If and , then , and

Proof:

-

1.

All inequalities in the proof of the lemma are equalities in this case.

-

2.

By definition, .

∎

The case when is reminiscent of the notion of Katz centrality. A factor is introduced so that the longer the path, the lower the probability. If is chosen close to 1, e.g. , then (e.g. ) becomes quickly negligible. If instead is chosen close to , e.g. , then it takes longer for probabilities to become negligible (e.g. ).

The case instead uses (inverse) proportionality to the number of outgoing edges. Both the upper and lower bounds given in the proof of the above corollary depend on the degree of the nodes included in walks from to , and when the walk length grows, the number of distinct nodes is likely to increase, giving the bounds stated in the corollary. These bounds depend on the function , which closely converges to 1, in fact for , we already get . Therefore, is mostly behaving as , except if is small enough (say less than 8).

In summary, the emphasis of the case is on the length of the walk, not on the walk itself, while for it is actually on the nodes traversed during the walk, with the ability to separate the nodes of low entropic centrality from the others.

The bounds of Lemma 2 can be applied to the asymptotic case.

Lemma 3

The probability to start at and to be absorbed at over time is bounded as follows:

Proof:

Recall that is the probability to start at and to be absorbed at over time. Then

| (5) |

and use Lemma 2:

to get

Therefore

| (6) | |||||

for an intermediate node between and .

The bound can be made coarser, using that :

∎

The bounds are well matching the intuition: three components mostly influence : the likelihood of leaving (the term ), that of reaching from , and that of getting trapped at (the term ). When for all , the bounds on are met with equality and we have that

Therefore is weighted by and irrespectively of the choice of , and so is the corresponding entropic centrality: when grows, grows, thus for must decrease since probabilities sum up to 1 and

since the second sum contains terms whose sum is at most , and whose maximum is reached when all probabilities are . Also the function is concave and has a global maximum at such that , that is , for which . Thus

| (7) |

and for a given , this upper bound becomes small when grows. The same argument repeated for shows (part of) the role of the out-degree of in its centrality.

In what follows, we will provide some experiments to illustrate the consequences of choosing , but we will then focus on the case for the reasons just discussed: (1) choosing is influenced by the choice of the walk rather than its length, and (2) depends on the degrees of and rather than on the constant .

Lemma 4

Set . The asymptotic Markov entropic centralization (see [10]) defined by

where is the node with the highest Markov entropic centrality in is given by

when .

Proof:

The maximum at the denominator is taken over all possible graphs with the same number of vertices. A graph that maximizes the denominator would have one node with the maximum centrality, and all the other nodes with a centrality 0 (thus minimizing the terms contributing negatively). This graph is the star graph on vertices, since the middle node has outgoing edges, and the leaves have none. Therefore all leaves have an entropic centrality of 0, and the center of the star has maximum entropic centrality. Formally, with and putting in the first row:

Then

and using Schur complement:

so

Lemma 1 tells us that for all and thus its Markov entropic centrality is , while is 0 for . ∎

Definition 2

Given a graph , label its vertices in increasing order with respect to their Markov entropic centralities, that is for and . We define the centralization sequence of to be the ordered sequence

It is an increasing sequence, with values ranging from -1 to 1. We already know that the maximum is 1 by Lemma 4. The minimum is achieved when has centrality 0, and every other node has centrality . This is the case if we consider a graph on vertices defined as follows: build a complete graph on vertices, that is each of the vertices have outgoing edges (including to themselves). Then add one additional vertex (), and an outgoing edge from every of the previous vertices of the complete graph to this new vertex. The advantage of the centralization sequence with say considering the sequences of ordered centralities is that it captures for every node how central it is with respect to other nodes, normalized by a factor that takes into account the size of the graph, which allows comparisons among different graphs.

2.2 A Markov Entropic Centrality Analysis of the Karate Club Network

Consider the karate club network [38] (used as an example in [23]) shown in Figure 2. We use this small social network comprising 34 members (nodes) as a toy example to illustrate and validate some of the ideas explored in this paper. The 78 edges represent the interactions between members outside the club, which eventually led to a split of the club into two, and are used to predict which members will join which group. This is an undirected unweighted graph, which is treated as a particular case of directed graph ( is the degree of ). Let denote the transition matrix such that for every and every neighbor of . The work [23] explores the choice of (and ) in the context of clustering. Here, we start by investigating the role of in terms of the resulting Markov entropic centrality, for finite ( since the karate club network has a diameter of 5) and asymptotic (using Lemma 1).

Influence of .

Figure 3 illustrates the Markov entropic centrality of the nodes 111We choose these specific nodes, since these were studied explicitly in [23]. for different values of : for ,222Note that represents the identity matrix of dimension . with 333We cannot use since this means no absorption probability. Also the matrix in Lemma 1 would have no reason to be invertible., , we observe that is decreasing and flattening, for all nodes and for every value of . This is expected, since when the probabilities at the auxiliary nodes are increasing as a constant, the overall uncertainty about the random walk is reducing (as computed in (7)). Thus the higher the absorption probability, the greater the attenuation of the entropic centralities for all nodes. More precisely, (7) upper bounds by

which is consistent with the numerical values obtained (, , ).

For such that (shown on the lower right subfigure), the Markov entropic centralities are more separated than previously: indeed, a node with small degree then has a high absorption probability, which induces a large attenuation on its entropic centrality (as discussed after (7)). The net effect is a wide gulf in the centrality scores between nodes with low and high degrees.

Influence of .

We notice how the centrality of a node is influenced over time by its neighbors. Consider for example the upper left corner figure for nodes 12 and 5. Node 12 starts (at ) with an entropic centrality significantly lower than node 5 - indeed node 5 has three neighbors ( neighbors and the self-loop), more than 12 which has only one (thus including the self-loop). Yet node 12 reaches a “hub” (a node of itself high centrality), namely node 1, at , and thus for , its entropic centrality grows, and eventually ends up being almost as high as that of node 1 itself. In contrast, even though node 5 reaches the same hub, it also belongs to a local community within the graph, inside which a significant amount of its influence (flow) stays confined. This explains why node 5 ends up having a lower entropic centrality than node 12 in particular. In the upper right and lower left plots, we have assigned a significant volume of the flow to be absorbed at the auxiliary nodes, which has a net effect of attenuating the absolute values of entropic centrality for all nodes, i.e., a downward shift and flattening. This happens for nodes 5, 12, 29 on the lower right plot, since is significant if the node has a low degree like 5, 12, and 29. Taken together with the initial gap among the centralities of nodes 5 and 12, we thus do not observe the overtake in these experiments, unlike in the case where the absorption probability was negligible.

Another less prominent behavior that we notice for node 34, and to a certain extent for node 1, is that there is a dip in their entropic centralities at the beginning, before they rise up to their long term values. We hypothesize that this is because some of the immediate neighbors of these nodes form local groupings (for instance, 34’s neighbor node 15 has only 33 and 34 as neighbors, node 27 has only nodes 30 and 34 as neighbors), and thus after the initial dispersal of influence among a relatively large number of these neighbors (hence the initial high centrality), their influence gets concentrated within the local cluster with the initiating node itself for a while (thus the dip), before reaching out more evenly to the rest of the network, leading to another rise in entropic centrality.

Centralization sequence.

The min and the max of the centralities are 3.0859 and 4.7216, while the mean and the median are 4.07636 and 3.9111. The min, median, mean and max of the centralization sequence as defined in Definition 2 are [-0.19467,-0.03248,0,0.12682]. The values for the above studied nodes of interest are 0.1226 for 1, 0.1241 for 34, 0.10195 for 33, 0.03485 for 29, -0.17471 for 12 and -0.06289 for 5.

Hypothesis.

The above analysis suggests that changes in the Markov entropic centralities over time are indicative of local communities in the graph, with changes in gradient corresponding to traversal of boundaries from one community to another. Nodes with low centralization have a low centrality with respect to both other nodes in the graph and graphs of the same size, since they are likely to be either isolated or to belong to a small community (e.g. node 5). While the reverse argument suggests that nodes with high centralization (e.g. nodes 1, 33 and 34) are likely to be either hubs or close to hubs. We will explore and exploit this observation to design a clustering algorithm in Section 4.

Comparison with known centralities.

Table I compares different centralities. In addition to the previously mentioned prominent centrality measures, we also consider load centrality [12] in our experiments, because it captures the fraction of traffic that flows through individual nodes (load), considering all pairwise communications to be through corresponding shortest paths. Both the path and the asymptotic Markov centralities give the same ranking, it is similar to the ranking given by the degree centrality (with some difference regarding node 5 and 12, which are explained by the above discussion). The betweenness and load centrality agree on their ranking, which is different from the other ones. These results are not surprising: for a small graph, the degree heavily influences short paths/walks, explaining the agreement among and , but it has little to do with the betweenness and the load. The same ranking observed between betweenness and load centrality is expected, since the latter was proposed to be a different interpretation of betweenness centrality [12], even though some minor differences have been identified subsequently [7].

| node | |||||

| node 34 | 4.83992 | 4.82504 | 0.5151 | 0.3040 | 0.2984 |

| node 1 | 4.83041 | 4.81999 | 0.4848 | 0.4376 | 0.4346 |

| node 33 | 4.76892 | 4.72539 | 0.3636 | 0.1452 | 0.1476 |

| node 29 | 4.50092 | 4.34323 | 0.0909 | 0.0017 | 0.0017 |

| node 5 | 4.07244 | 3.90674 | 0.0909 | 0.0006 | 0.0006 |

| node 12 | 3.39469 | 3.26763 | 0.0303 | 0 | 0 |

3 Entropic Centrality for Weighted Graphs

Consider now a weighted directed graph , where the weight function attaches a non-negative weight to every edge . For a node , let be the set of out-neighbors of , be the out-degree of , and be the weighted out-degree of .

A natural way to define a transition matrix to describe a random walk over taking into account the weight function would be to set . Now at , a node whose out-degree is 2 with outgoing edges of weight 1 is considered less important than a node with out-degree 6 and all 6 outgoing edges of weight 1, because contributes a probability instead of for , therefore reducing the uncertainty from to . But if a node has two outgoing edges, one with weight 2 and one with weight 3, then its entropic centrality is . Thus the node with weighted outgoing edges has lower entropic centrality than the node with unweighted outgoing edges. This is meaningful from an entropy view point, since it captures uncertainty, and the uniform distribution over the outgoing edges gives the most uncertainty about the random walk. However this may not be desired if the given scenario requires nodes with high weighted out-degree to be more central than others, e.g., when using the centrality measure as a proxy for determining influence. It is well known that centrality measures for unweighted graphs can be adapted to weighted graphs, but at the risk of changing their meaning, and that one way to remedy this is by the introduction of a weight parameter, see e.g. [28].

3.1 Weighted Markov Entropic Centrality

In order to capture the weights of the outgoing edges in the current framework of Markov entropic centrality, we introduce two tuning parameters, a conversion function and a node weight function .

The conversion function adjusts the transition matrix such that (with ) depending on the importance that weights are supposed to play compared to edges. The two obvious choices for are for all edges , which treats the weighted graph as unweighted, and which considers the graph with its given weights, more generally one could define for some parameter . This formulation has the flexibility to give more or less importance to weights with respect to edges. It is enough here to consider the case where weighted edges are more important than unweighted ones.

The conversion function allows the weights to play a role in the transition matrix , based on the scenario needs, but it does not address the issue of defining entropic centrality for weighted graphs. To do so, we need a tuning parameter (e.g., [28]) within the definition of entropic centrality, that maintains the semantics and meaning of the notion of entropy. We use the node weight function and propose the notion of weighted Markov entropic centrality, which is inspired by the concept of weighted entropy [13].

Definition 3

The weighted Markov entropic centrality of a node at time is defined to be

| (8) |

where is the probability to reach at time from , for in , taking into account the weights for every edge in . Auxiliary nodes defined for the unweighted case are still present and is the probability to reach an auxiliary node from at time , which depends on the choice of the absorption probability matrix , as in the unweighted case. The probabilities are obtained from the matrix (use instead of in Lemma 1).

Before discussing the choice of , we recall that when , as for the unweighted case, the terms in column of becomes , and we are left with the term in column , of the form

asymptotically yielding the entropic centrality

Lemma 3 holds, therefore similarly to the unweighted case, we have that if the probability of absorption is , then repeating the arguments leading to (7) yields:

| (9) | |||||

which shows that while may increase the overall centrality, it remains true, as for the unweighted case, that increasing just reduces the overall centrality. Therefore we will continue to use as absorption probability.

The computation of involves a sum over all nodes , including , and the probability to go from to every . The weight captures the influence of the reached node : if we reach influential nodes of weight in time, then should be more central than if the only nodes we reach from it have no influence themselves. We define to be if . When , and we set . The weighted Markov entropic centrality (8) gives an influence which is proportional to the ratio , while gives a way to amplify or reduce this influence.

If all weights are 1, then the ratio simplifies to 1, and we are back to the non-weighted definition of Markov entropic centrality (as also with ). Other possible choices for could be explored, such as . Indeed, would be the influence that would have had over its neighbors in terms of entropic centrality if it had neighbors of weight 1, while is the entropic centrality over the neighbors of , ignoring the weights.

If the weights are normalized such that the lowest weight is mapped to 1, the entropic centrality values obtained when using a non-trivial function for will necessarily be at least as much as obtained with .

The centralization as computed in Lemma 4 can be generalized to the weighted case. In the unweighted case, comparison was done among graphs with the same number of vertices. In the weighted case, comparison is done among graphs with the same number of vertices, with given weights . A graph that would give the maximum centrality consists again of a star graph, with center , assuming that the leaves have a self-loop which can be weighted, in which case for , and is any weight. The centrality of the leaves will be zero, but that of will be . However maximizing this quantity over does not have a closed form expression. Loose bounds can be computed though: (see before (7) for a bound on ).

3.2 A Weighted Markov Entropic Centrality Analysis of a Cocaine Dealing Network

We consider the cocaine dealing network [8], a small directed weighted graph coming from an investigation into a large cocaine traffic in New York City. It involves 28 persons (nodes), and 40 directed weighted edges indicate communication exchanges obtained from police wiretapping.

The (weighted) Markov entropic centrality depends on the choice of the absorption matrix , we thus start by looking at how a change in influences the entropic centralities. We keep the same choices for as for the unweighted case, namely , , and such that , since when for all edges, then for all nodes.

In Figure 5, we plot the (weighted) Markov entropic centrality for for , for the 4 choices of absorption probabilities . For , 4 variations of Markov entropic centralities are considered: and (straight lines), corresponding to the unweighted case, and (long dash lines) for the case where the transition matrix is used, and (short dash lines) to show how centrality is computed based purely on , and finally with (dotted lines), for which is used for the transition matrix, together with the weighted entropic centrality. Nodes 27 and 14 are different in nature, in that node 14 is a hub, with high degree compared to the rest of the other nodes, while node 27 has only one incoming edge and two outgoing edges. They are chosen as representatives of nodes of respectively high and low degree (even though the degree is not the only contributing factor in the node centrality, it still plays an important role for small values of ). For each of the 4 plots, the 4 upper lines characterize the behavior of node 14, and the 4 lower lines the one of node 27. We observe that the behavior of the entropic centralities when are similar to what was already observed for the karate club network: for and , the centralities are flattened because the path uncertainty is reduced when the absorption probabilities are increasing (as shown in (9)), while for such that , the gap among the centralities is (slightly) increasing. When , both centralities are amplified. Since node 14 has many outgoing edges, with weights including 10, 11, 14 18, 19, the introduction of creates a higher amplification for node 14 than for node 27, whose edge weights are all less than 5. Zooming at time for node 14 and , we notice that the short dashed line has a peak, before going down. This is explained by the fact that when and , the entropic centrality is that of a node with degree amplified by the weights, thus creating an initial jump in the entropy. However at the next time, several reached nodes have in turn very few (or no) neighbors, and edges of low weights, and this leads to a saturation effect. This behavior is less prominent for other choices of , where the absorption probability is high, and hence the effect of the edge weights is attenuated.

We then focus on the case where , which depends only on the network setting, instead of other choices of which are parameters whose range can be explored one by one. With this choice of , we consider the (weighted) Markov entropic centrality for , as displayed in Figure 6. These nodes have outgoing edges with weights , for , weights for , and weights for . These nodes are chosen because they have similar out-degrees, but different weighted out-degrees. The same 4 scenarios as above are considered. For node 2, its edge to 10 stops at 10, its edge to 5 has only one connection to node 23 which has weight 1, and its last edge goes to 25, which has no connection either, therefore the 4 centralities are actually of the same quantity, and there all the 4 lines are coincident. For node 27, since it has the same out-degree as node 2, in the unweighted case, it starts at the same centrality as node 2. However it is even less influential since none of its own neighbors have neighbors. In the 3rd scenario, its entropic centrality is even lower than in the unweighted case, which is normal, since in this case, the walk distribution is not uniform anymore. In the two cases where , we see a jump in the centrality, explained by the weights of the outgoing edges which are higher than for node 2. However, the centrality of node 27 remains below that of 20, whose weighted out-degree is less than that of 27, but its out-degree is actually more.

3.3 Interpreting Entropic Centrality with a Bitcoin Subgraph

We next consider a small subgraph extracted from the bitcoin network comprising 178 nodes and 250 edges. Nodes are bitcoin addresses, and there is an edge between one node to another if a bitcoin payment has been made. Since the proposed Markov entropic centrality measure is suitable for undirected, directed and weighted graphs, we look at the chosen bitcoin subgraph as an undirected unweighted graph by ignoring the direction of transactions, as a directed unweighted graph by just considering whether any transactions exists, and as a directed weighted graph (with , ) to capture the effect of transaction amount.

In Figure 7 we show the three variations with nodes colored according to their Markov entropic centrality at (asymptotic). The darker the node color, the higher the Markov entropic centrality. In subfigure 7a, one hub stands apart, with the highest entropic centrality, which is a node which is highly connected to other nodes. If we look at the same node in subfigure 7b, we see that it is not central anymore: this is because in the directed graph, this node is actually seen to receive bitcoin amounts from many other nodes, so as far as sending money is concerned, it has very little actual influence (in the same graph but with edge directions reversed, this node would have had the highest centrality). On the other hand, nodes that were not so prominent in the undirected graph appear now as important. The graph in subfigure 7b highlights nodes which are effectuating many bitcoin transactions. Now one node may do several transactions with little bitcoin amount, we may want a node that does few but high amount transactions to be more visible, which is illustrated in subfigure 7c. It turns out that for the subgraph we studied, high centrality nodes in the unweighted case remain high centrality nodes in the weighted case, meaning that nodes performing many bitcoin transactions are also those sending high amount transactions.

For the sake of completeness, we provide Kendall- rank correlation coefficients among different variations of (weighted) Markov entropic centralities for the directed graph, for : for (shown on subfigure 7b), for (shown on subfigure 7c), but also for , and for . The out-degree centrality is also tested. A Kendall- coefficient is a measure of rank correlation: the higher the measure, the higher the pairwise mismatch of ranks across two lists. Results are shown in Table II. Above the diagonal, coefficients are obtained using all 178 nodes. Below the diagonal, only 67 nodes are used, which are in the union of the top 20% nodes for each of the 5 aforementioned centrality measures. In the given graph, many (peripheral) nodes have the same (low) entropic centrality, so when all nodes are considered for ranking correlation, the average is misleadingly low. Since high centrality nodes are often of interest, ranking variations among the top nodes is pertinent, and we observe that (i) out-degree is not a good proxy for most entropic centrality variants, and (ii) the different variations yield significantly distinct sets of high centrality nodes, corroborating the need for our flexible entropic centrality framework.

| , | 1,1 | ,1 | 1, | , | |

| 1,1 | 0 | 0.159 | 0.075 | 0.084 | 0.086 |

| 0.419 | 0 | 0.193 | 0.214 | 0.218 | |

| ,1 | 0.315 | 0.60 | 0 | 0.120 | 0.050 |

| 1, | 0.186 | 0.538 | 0.305 | 0 | 0.089 |

| , | 0.346 | 0.671 | 0.097 | 0.283 | 0 |

4 Entropic Centrality Based Clustering

We next explore whether and how the local communities inferred from the gradients observed in the evolution of the entropic centralities as a function of (see Section 2) can be exploited to realize effective clustering.

The formulas (2) and (8) for the (weighted) Markov entropic centralities involve the sum of probabilities and respectively. We will use to denote the matrix whose th row contains the coefficient in the unweighted case, and in the weighted case, in column . Since the algorithm that we describe next uses in the same manner, irrespectively of (though we will focus on the case ) and of whether there is a weight function, we keep the same notation .

The proposed algorithm works in two stages: first, we create local clusters around ‘query nodes’, and then, we carry out a hierarchical agglomeration. The choice of query nodes in our approach is informed by the entropic centrality, which we describe first (Subsection 4.1), before we elaborate the algorithm (in Section 4.2).

4.1 Query Node Selection

In the initial step of the algorithm, we look for a cluster around and inclusive of a specific query node, similarly to [4]. In [4] though, it was not possible a priori to determine suitable query nodes (e.g., a node could be at the boundary of two clusters, and thus yield the union of subsets of these clusters as a resultant cluster), and hence multiple constrained random walkers were deployed to increase the confinement probability of the random walks within a single cluster. In contrast, our choice of query nodes is informed by their Markov entropic centrality.

Let us first formalize some of the information captured by the notion of entropy.

Proposition 1

Given the probabilities , , , and such that , we have:

-

1.

, and for ,

-

2.

.

Proof:

Since we ordered the probabilities by increasing order, we write

and for some certainly implies . Thus there must be probabilities of the form (in particular, if , , and likewise, when , ). In turn, we must have which is at least probabilities shared between and , and they must sum up to .

It is not possible that all probabilities belong to the group (i.e., ), because if all of them were strictly less than , we would have at most (a similar argument holds for not having all the probabilities in the group ). More generally, this is saying that deficiency from among the has to be compensated by the :

where , and accordingly varies from being strictly less than to strictly more than .

We can refine these ranges using the number of probabilities we are allowed in each sum. Suppose that among the probabilities that are left to be assigned, are the probabilities for some , and the rest, namely are the probabilities .

For at least probabilities strictly more than , we have:

∎

We apply this proposition to , for a given node , and for ranging over possible nodes in . Let be a parameter that decides the proportion of probabilities less than (the larger , the smaller the proportion), and let be a parameter that characterizes how small the Markov entropic centrality of a node is (the larger , the lower the entropic centrality). The result says that given , there is a tension between agglomeration of small probabilities on the one hand and agglomeration of large probabilities on the other hand: the larger the agglomeration of large probabilities (as a function of and ), the lower the agglomeration of small probabilities, and conversely. Furthermore, as grows, it creates an agglomeration of large probabilities which is detached from that with small probabilities. This suggests that nodes with a low entropic centrality have local clusters where ties are relatively strong, while

nodes tend to have a relatively high entropic centrality when they either are embedded within a large cluster, or when they are at the boundary of two clusters (and could arguably belong to either). Accordingly, we choose to start identifying local communities by choosing the low entropic centrality nodes as query nodes.

This is illustrated in Figure 8 for the 178 node Bitcoin subgraph, treated as unweighted and undirected. We consider a node with high centrality on the left hand-side, it has centrality , and one with low centrality on the right hand-side (with centrality ). By high and low, we mean that both belong to the top 10 nodes in terms of respective high/low centrality. The histograms show the probability that a random walker is (asymptotically) absorbed at a node. The -axis is in logscale. The bins are placed at 0, , , and then at half the maximum probability (as observed across the two cases) and the maximum probability. In the first bin, for both cases, there are more than 100 nodes which have a negligible probability of the random walker being absorbed. As predicted by Proposition 1, for the node with high entropic centrality, they are less spread apart (and there are more probabilities close to ) than for the low entropic centrality node, which has, furthermore one high probability (between 0.3 and 0.6). Such higher probabilities of absorption at a few nodes in the graph are precisely what we use as a signal for a local community structure.

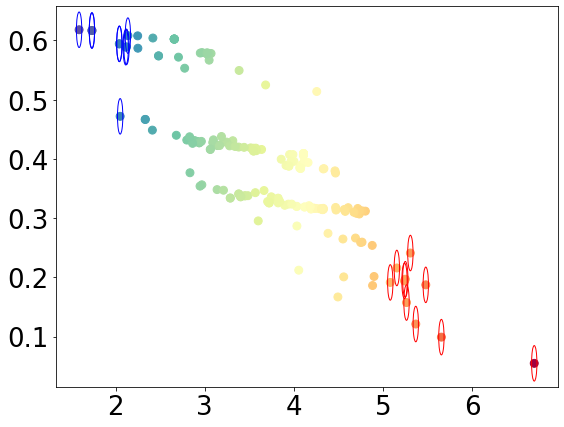

This finding is consistent with the hypothesis formulated at the end of Section 2.2, namely that if there is no significant change in the entropic centrality over time, then the node belongs to a small community, while larger entropic centralities indicate nodes that are well connected (possibly indirectly) to many nodes rather than being strongly embedded in a particular well defined community. In Figure 9 we show a scatter diagram illustrating, again for the 178 Bitcoin subgraph, the relations between the entropic centrality of a node and the highest probability of absorption at any node for a random walker starting at the respective node. We see that nodes with high centralities have as highest absorption probability, values below 0.3, while low entropic centrality nodes have highest absorption probability of more than 0.4. For this subgraph, the centralization sequence has a minimum of -0.27962, a maximum of 0.40350, a mean of 0 and a median of 0.00560. The nodes that are “circled” are those with highest centralization in red (centralization values more than 0.18), and those with lowest centralization in blue (centralization values less than -0.2).

The Markov entropic centrality score acts as a good summary of the detailed probability distributions of absorption for a random walker starting at different query nodes. Specifically, we see that using low entropic centrality nodes as query nodes would yield meaningful local clusters precisely by identifying the nodes at which the random walker is absorbed with high probability to constitute a local community. Such communities can then be further coalesced into larger (and fewer) clusters or re-clustered into smaller (and more numerous) communities, as deemed suitable, in a hierarchical manner. We will see, in our experiments, what is a suitable ‘low’ or ‘high’ entropic centrality, particularly, that this would depend on the distribution of the centrality scores. The distribution of the relative Markov centrality scores for a given graph is thus also a good indicator of whether the proposed approach would be effective for clustering that graph instance, and helps us reason about whether to use the proposed approach. This will be illustrated in our experiments, in particular in Subsection 4.6.

4.2 Entropic Centrality Based Clustering Algorithms

Keeping in mind the above discussion, we describe the first stage (without the iterative hierarchical process) of clustering in Algorithms 1 and 2.

In Algorithm 1, we maintain as a current global view of clusters. We start from the lowest entropic centrality node as a query node, and repeat the process as long as there are nodes that do not already belong to some existing cluster (listed in ).

We consider the transition probabilities from a query node to all the other nodes as per , and carry out a clustering of these (one-dimensional, scalar) probability values. Lemma 1 shows the existence of (up to) “three clusters”, formed by probabilities of values around , and of values away from , either by being smaller or larger enough. How clearly defined these clusters are depends on : if is small, close to 1, probability values can still be close to uniform, on the other extreme, if is large, there may be very few or no value around , resulting in mostly two clusters. In our implementation, we use the Python scikit-learn agglomerative clustering to look for this initial set of (up to) three clusters . Among these clusters, we choose the cluster with the highest average transition probability from . We define to be the nodes corresponding to this cluster along with itself since, (i) the comprising nodes have similar probabilities for random walks to end up there when originating from (this follows from the clustering of the probability values), and crucially, these nodes are considered to comprise the immediate local community because (ii) this is the largest (in expectation) such probability.

We consider the absorption probabilities for a random walker starting at to be absorbed at any of the nodes in , and define the minimum of these values as i.e. . Thus, can be understood as an effective threshold (deduced a posteriori) above which the probability of being absorbed in the local cluster of is high enough. Proposition 2 and its corollary below show that if belongs to the local cluster of , but should also belong to the local cluster of , then provided that the absorption probability at is not too large (), will also belong to , together with .

Proposition 2

The probability to start at and to be absorbed at over time is lower bounded by:

Proof:

We have that is the probability to start at and to be absorbed at over time. We start again with (5):

since we recognize a Cauchy product, and we invoked Merten’s Theorem where , , and both sequences are absolutely converging to 0. ∎

Corollary 2

Given that the probability to start at and to be absorbed at is more than a threshold , and that the probability to start at and to be absorbed at is also more than , then if , .

Proof:

Suppose , then by the above proposition:

so implies . Note that this reasoning is iterative, namely if now if we consider that from , we got absorbed at , but also from , we got absorbed at , with , then

and again implies . ∎

If the query node is a low entropic centrality node, it is expected that once a cluster is formed, nodes belonging to are unlikely to be high centrality nodes themselves (otherwise would likely have inherited the influence and would not be a low centrality node itself). However, if the relative difference between what are considered low or high entropic centrality is not high, then several well connected nodes may get clubbed together in the clustering process, further bringing in many other nodes, coalescing a large group of nodes in the early stage of the clustering. Likewise, if the query node belongs to a pre-designated group of high entropic centrality nodes , then there is a risk that it may inadvertently merge multiple clusters which one may want to be separate. These motivate the addition of a pruning algorithm described in Algorithm 2, which works as follows.

If the query node is a high entropic centrality node, then we check the intersection of against existing clusters from , and in case there are non-trivial intersections, and yet there is no unique cluster with which there is a largest intersection, we retain only one of the largest clusters as chosen randomly, since it otherwise risks merging clusters which ought to be distinct. Otherwise, we retain as the nodes from the largest intersection, as well as nodes that were in no intersection, but discard the rest. Irrespective of the query node, if there are multiple pre-designated high entropic centrality nodes in (other than ), we retain among these only the ones to which the transition probability from is highest. This pruned list is given to Algorithm 1, where it is checked against existing clusters in , and if there is any intersection, then they are merged, otherwise, is added as a new cluster in . Note that the pruning mechanism introduces an element of randomization in our algorithm. As such, all the reported results in this paper are based on ten experiment runs. For all but one of the graphs used in our experiments, the conditional statement which introduces the randomization was in fact never triggered, and thus the results are produced in a consistent fashion. Only some variations of the 178 nodes Bitcoin subgraph (results shown in Figure 12) triggered the conditional statement: When it was treated as undirected and unweighted, the conditional statement was triggered but nevertheless resulted in same clusters across the ten experiment runs. For the same graph treated as directed but unweighted, the randomization yielded distinct but very similar cluster results (F-score 0.982 among the distinct results), and one of these result instances is shown.

Having identified localized query-node centric community structures, in the second stage, we agglomerate these to identify clusters at different degrees of granularity. A single stage of agglomeration is almost identical to the initial clustering process described above, with the following subtle changes. The cluster results from the previous step are considered as the new nodes. We still only use the matrix , and hence we do not (need to) explicitly define edges connecting the clustered nodes. The new coalesced nodes are assigned an entropic centrality value corresponding to the average of the entropic centrality of their constituent nodes. For transition probabilities across clustered nodes (say and ), we considered the minimum, mean and maximum transition probabilities amongst all node pairs as per . Finally, we discard a specific agglomeration in case the resulting agglomerated cluster would not result in a (weakly) connected graph. Our experiments indicate that the best clustering results are obtained using the minimum transition probabilities, we only report the corresponding results next.

4.3 Clustering of the Karate Club Network

We consider the karate club network [38] (see Figure 10 for the cluster results), to illustrate and analyze the workings of the clustering algorithm with a toy example with known baseline, before studying larger graphs. In Figure 10b, the initial set of clusters is shown along with the dendrogram for agglomeration, and Figure 10c shows the final clusters. Clustering obtained using the edge removal technique (20 iterations) from [23] is shown in Figure 10a for comparison. We also show the time evolution of some of the nodes with highest asymptotic entropic centrality, and the node with the lowest asymptotic entropic centrality in Figure 11 (this is in addition to Figure 3 where we demonstrated the time evolution of certain nodes too), to illustrate the behavior of nodes which are hubs, at the interface of the clusters and at the periphery of the network. This top-down clustering approach follows the idea of edge removal from [11], but using the reduction of average Markov entropic centrality to determine which edge to remove.

While it is visually apparent that our approach yields better clustering, we quantify this based on the ground truth [38] using F-score [31]. The result obtained with our approach has a F-score of 0.884 while the one obtained with [23] has a F-score of 0.737.

We furthermore benchmark the computation time: the edge removal based clustering approach [23] took 14.154 seconds, while our final result of 2-clusters were computed in 0.026 seconds. These experiments were run on a 64-bit PC with x64-based Intel(R) Xeon(R) CPU E5-1650 0 @3.20GHz processor and 16 GB RAM. This is easily explained: in our algorithm, the transition probability matrix needs to be computed only once. In contrast, even within a single iteration, the edge removal algorithm [23] needs to recompute the said matrix for every graph instance created by removal of each possible edge, to determine which edge to remove, and this exercise is then repeated in every iteration. That accounts for the huge discrepancy in the computation time, and demonstrates the computational efficacy of our approach.

4.4 Clustering of Bitcoin Subgraphs

We apply our proposed clustering algorithm to variations (un/directed, un/weighted) of the 178 node Bitcoin network subgraph [24] and report the results in Figure 12. The results obtained using the edge removal algorithm [23] on the undirected unweighted graph variant is shown on Figure 13. Unless otherwise stated, we use as a parameter , essentially considering to comprise the top 30% entropic centrality nodes (see the sensitivity analysis below).

While it is visually clear from Figure 12 that we obtain different clusters depending on the scenario considered, Table III confirms this by reporting F-scores [31] across the graph variants. Also, the effect of the parameter (without the transition probabilities being altered by edge weights) is rather low. This reinforces an underlying motivation of our work, namely that which graph variant (un/directed and/or weighted) to study is application dependent, and hence having one graph clustering algorithm that works across all variants is beneficial.

The clusterings of the undirected unweighted graph found by our algorithm in 1.072 seconds (Figure 12) and by the edge removal algorithm in 3196.07 seconds (Figure 13) are very different (F-score of 0.125). Our algorithm visually yields (more) meaningful results, though in the absence of a ground truth, this assertion remains subjective. The agglomeration process in our algorithm could have been continued beyond the final result shown here, as indicated by the associated dendrogram. The edge removal based clustering was stopped at the 50th iteration, after which no further average entropy reduction was observed with any single edge removal.

| graph | ||||||

| 1 | 0.416 | 0.506 | 0.372 | 0.271 | 0.125 | |

| 0.416 | 1 | 0.473 | 0.806 | 0.364 | 0.146 | |

| 0.506 | 0.473 | 1 | 0.408 | 0.416 | 0.122 | |

| 0.372 | 0.806 | 0.408 | 1 | 0.413 | 0.145 | |

| 0.271 | 0.346 | 0.416 | 0.413 | 1 | 0.131 | |

| 0.125 | 0.146 | 0.122 | 0.145 | 0.131 | 1 |

When to stop agglomerating is typically purpose dependent. The second row of Figure 12 shows various stages of agglomeration, the dendrogram for agglomeration is given for the 1st and 3rd row. There are many community structures that repeat across the considered scenarios, and most of the cluster boundaries can be traced back to the high entropy nodes (Figure 7), yet there are also subtle differences, e.g., in the weighted directed graph, there are instances of single isolated nodes, which stay isolated for several interactions of agglomeration because of weak (low weight) connections.

Sensitivity analysis. For each graph variant, the size of was varied to comprise 10%, 20%, 30% and 40% of the top entropic centrality nodes. For the unweighted and weighted directed graph, the F-score between clusterings obtained with 10% and the others are 0.824 and 0.989 respectively, while all the other pairs have F-score of 1. This suggests very consistent results in these cases, irrespectively of the choice of . However for the unweighted undirected graph, has a significant impact, with the F-score between 20% and 40% being the lowest at 0.626, while the best score of 0.912 is obtained between 30% and 40%. This justifies the use of the top 30% entropic centrality nodes for the reported results. Looking back at Figure 9, we observe that considering only 20% for the size of means including a number of query nodes with relatively low highest probabilities, while between 30% and 40%, these highest probabilities are not changing significantly, which is consistent with the observed variations in sensitivity.

We next consider another Bitcoin subgraph, but this time, we use a specific subgraph [25] of 4571 nodes, constructed around Bitcoin addresses suspected to be involved in the Ashley-Madison extortion scam [32]. The result of the clustering algorithm is shown on Figure 14 and Figure 15: (1) the graph was considered once unweighted (the emphasis is thus on node connections), once weighted (to capture the amount of Bitcoins involved), (2) the asymptotic Markov entropic centrality was used (we have no specific diameter of interest), (3) the top 30% nodes with the highest centrality are chosen as high centrality nodes , (4) five agglomeration iterations were performed for clustering the unweighted graph, and one for the weighted variant.

On Figure 14, showing the overall unweighted graph, there are three visually obvious main clusters: the upper green cluster, the purple cluster on the right, and the grey cluster on the left. The first observation is that the grey color here only represents nodes whose cluster size is too small to be significant (only 5 iterations were performed), they are thus kept in grey so as to make the other clusters more visible. The green and purple clusters are easily interpreted: each contains one Bitcoin address that is a hub for all its neighbors.

We then zoom into the central clusters, shown on Figure 15a. The actual relationship among the constituent wallet addresses in a cluster can be determined e.g. by using blockchain.com/explorer. We observe a green cluster near the middle (boxed). In our layout, we have isolated one of the constituent nodes (on the right, encircled), to show that the nodes in this collection have multiple mutual connections, as expected among nodes within a cluster. We see that the encircled node above the boxed group has also been assigned to the same cluster. This node is in fact connected to several of the other clusters that have been identified with our algorithm, and is one of the high centrality nodes, which lies at the interface of clusters. It happens to have been assigned to the green cluster, since each node is assigned to at most a single cluster. Some of the nodes in the (boxed) cluster were previously identified to be suspect addresses involved in the Ashley-Madison data breach extortion scam [32]. The resulting clusters thus help draw our attention to the other nodes which have been grouped together, since it indicates that Bitcoin flows have circulated among them, for their relationship with the already known suspected nodes to be investigated further.