A Mathematical Introduction to Generative Adversarial Nets (GAN)

Abstract.

Generative Adversarial Nets (GAN) have received considerable attention since the 2014 groundbreaking work by Goodfellow et al [4]. Such attention has led to an explosion in new ideas, techniques and applications of GANs. To better understand GANs we need to understand the mathematical foundation behind them. This paper attempts to provide an overview of GANs from a mathematical point of view. Many students in mathematics may find the papers on GANs more difficulty to fully understand because most of them are written from computer science and engineer point of view. The aim of this paper is to give more mathematically oriented students an introduction to GANs in a language that is more familiar to them.

Key words and phrases:

Deep Learning, GAN, Neural Network2010 Mathematics Subject Classification:

Primary 42C151. Introduction

1.1. Background

Generative Adversarial Nets (GAN) have received considerable attention since the 2014 groundbreaking work by Goodfellow et al [4]. Such attention has led to an explosion in new ideas, techniques and applications of GANs. Yann LeCun has called “this (GAN) and the variations that are now being proposed is the most interesting idea in the last 10 years in ML, in my opinion.” In this note I will attempt to provide a beginner’s introduction to GAN from a more mathematical point of view, intended for students in mathematics. Of course there is much more to GANs than just the mathematical principle. To fully understand GANs one must also look into their algorithms and applications. Nevertheless I believe that understanding the mathematical principle is a crucial first step towards understanding GANs, and with it the other aspects of GANs will be considerably easier to master.

The original GAN, which we shall refer to as the vanilla GAN in this paper, was introduced in [4] as a new generative framework from training data sets. Its goal was to address the following question: Suppose we are given a data set of objects with certain degree of consistency, for example, a collection of images of cats, or handwritten Chinese characters, or Van Gogh painting etc., can we artificially generate similar objects?

This question is quite vague so we need to make it more mathematically specific. We need to clarify what do we mean by “objects with certain degree of consistency” or “similar objects”, before we can move on.

First we shall assume that our objects are points in . For example, a grayscale digital image of 1 megapixel can be viewed as a point in with . Our data set (training data set) is simply a collection of points in , which we denote by . When we say that the objects in the data set have certain degree of consistency we mean that they are samples generated from a common probability distribution on , which is often assumed to have a density function . Of course by assuming to have a density function mathematically we are assuming that is absolutely continuous. Some mathematicians may question the wisdom of this assumption by pointing out that it is possible (in fact even likely) that the objects of interest lie on a lower dimensional manifold, making a singular probability distribution. For example, consider the MNIST data set of handwritten digits. While they are images (so ), the actual dimension of these data points may lie on a manifold with much smaller dimension (say the actual dimension may only be 20 or so). This is a valid criticism. Indeed when the actual dimension of the distribution is far smaller than the ambient dimension various problems can arise, such as failure to converge or the so-called mode collapsing, leading to poor results in some cases. Still, in most applications this assumption does seem to work well. Furthermore, we shall show that the requirement of absolute continuity is not critical to the GAN framework and can in fact be relaxed.

Quantifying “similar objects” is a bit trickier and holds the key to GANs. There are many ways in mathematics to measure similarity. For example, we may define a distance function and call two pints “similar” if the distance between them is small. But this idea is not useful here. Our objective is not to generate objects that have small distances to some existing objects in . Rather we want to generate new objects that may not be so close in whatever distance measure we use to any existing objects in the training data set , but we feel they belong to the same class. A good analogy is we have a data set of Van Gogh paintings. We do not care to generate a painting that is a perturbation of Van Gogh’s Starry Night. Instead we would like to generate a painting that a Van Gogh expert will see as a new Van Gogh painting she has never seen before.

A better angle, at least from the perspective of GANs, is to define similarity in the sense of probability distribution. Two data sets are considered similar if they are samples from the same (or approximately same) probability distribution. Thus more specifically we have our training data set consisting of samples from a probability distribution (with density ), and we would like to find a probability distribution (with density ) such that is a good approximation of . By taking samples from the distribution we obtain generated objects that are “similar” to the objects in .

One may wonder why don’t we just simply set and take samples from . Wouldn’t that give us a perfect solution? Indeed — if we know what is. Unfortunately that is exactly our main problem: we don’t know. All we know is a finite set of samples drawn from the distribution . Hence our real challenge is to learn the distribution from only a finite set of samples drawn over it. We should view finding as the process of approximating . GANs do seem to provide a novel and highly effective way for achieving this goal. In general the success of a GAN will depend on the complexity of the distribution and the size of the training data set . In some cases the cardinality can be quite large, e.g. for ImageNet data set is well over . But in some other cases, such as Van Gogh paintings, the size is rather small, in the order of 100 only.

1.2. The Basic Approach of GAN

To approximate , the vanilla GAN and subsequently other GANs start with an initial probability distribution defined on , where may or may not be the same as . For the time being we shall set to be the standard normal distribution , although we certainly can choose to be other distributions. The technique GANs employ is to find a mapping (function) such that if a random variable has distribution then has distribution . Note that the distribution of is , where maps subsets of to subsets of . Thus we are looking for a such that , or at least is a good approximation of . Sounds simple, right?

Actually several key issues remain to be addressed. One issue is that we only have samples from , and if we know we can have samples where is drawn from the distribution . How do we know from these samples that our distribution is the same or a good approximation of ? Assuming we have ways to do so, we still have the issue of finding .

The approach taken by the vanilla GAN is to form an adversarial system from which continues to receive updates to improve its performance. More precisely it introduces a “discriminator function” , which tries to dismiss the samples generated by as fakes. The discriminator is simply a classifier that tries to distinguish samples in the training set (real samples) from the generated samples (fake samples). It assigns to each sample a probability for its likelihood to be from the same distribution as the training samples. When samples are generated by , the discriminator tries to reject them as fakes. In the beginning this shouldn’t be hard because the generator is not very good. But each time fails to generate samples to fool , it will learn and adjust with an improvement update. The improved will perform better, and now it is the discriminator ’s turn to update itself for improvement. Through this adversarial iterative process an equilibrium is eventually reached, so that even with the best discriminator it can do no better than random guess. At such point, the generated samples should be very similar in distribution to the training samples .

So one may ask: where do neural networks and deep learning have to do with all this? The answer is that we basically have the fundamental faith that deep neural networks can be used to approximate just about any function, through proper tuning of the network parameters using the training data sets. In particular neural networks excel in classification problems. Not surprisingly, for GAN we shall model both the discriminator function and the generator function as neural networks with parameters and , respectively. Thus we shall more precisely write as and as , and denote . Our objective is to find the desired by properly tuning .

2. Mathematical Formulation of the Vanilla GAN

The adversarial game described in the previous section can be formulated mathematically by minimax of a target function between the discriminator function and the generator function . The generator turns random samples from distribution into generated samples . The discriminator tries to tell them apart from the training samples coming from the distribution , while tries to make the generated samples as similar in distribution to the training samples. In [4] a target loss function is proposed to be

| (2.1) |

where denotes the expectation with respect to a distribution specified in the subscript. When there is no confusion we may drop the subscript. The vanilla GAN solves the minimax problem

| (2.2) |

Intuitively, for a given generator , optimizes the discriminator to reject generated samples by attempting to assign high values to samples from the distribution and low values to generated samples . Conversely, for a given discriminator , optimizes so that the generated samples will attempt to “fool” the discriminator into assigning high values.

Now set , which has distribution as has distribution . We can now rewrite in terms of and as

| (2.3) |

The minimax problem (2.2) becomes

| (2.4) |

Assume that has density and has density function (which of course can only happen if ). Then

| (2.5) |

The minimax problem (2.2) can now be written as

| (2.6) |

Observe that the above is equivalent to under the constraint that for some . But to better understand the minimax problem it helps to examine without this constraint. For the case where have densities [4] has established the following results:

Proposition 2.1 ([4]).

Given probability distributions and on with densities and respectively,

is attained by for .

The above proposition leads to

Theorem 2.2 ([4]).

Let be a probability density function on . For probability distribution with density function and consider the minimax problem

| (2.7) |

Then the solution is attained with and for all .

Theorem 2.2 says the solution to the minimax problem (2.7) is exactly what we are looking for, under the assumption that the distributions have densities. We discussed earlier that this assumption ignores that the distribution of interest may lie on a lower dimensional manifold and thus without a density function. Fortunately, the theorem actually holds in the general setting for any distributions. We have:

Theorem 2.3.

Let be a given probability distribution on . For probability distribution and function consider the minimax problem

| (2.8) |

Then the solution is attained with and -almost everywhere.

Proof. The proof follows directly from Theorem 3.7 and the discussion in Subsection 3.5, Example 2.

Like many minimax problems, one may use the alternating optimization algorithm to solve (2.7), which alternates the updating of and (hence ). An updating cycle consists of first updating for a given , and then updating with the new . This cycle is repeated until we reach an equilibrium. The following is given in [4]:

Proposition 2.4 ([4]).

If in each cycle, the discriminator is allowed to reach its optimum given , followed by an update of so as to improve the minimization criterion

Then converges to .

Here I have changed the wording a little bit from the original statement, but have kept its essence intact. From a pure mathematical angle this proposition is not rigorous. However, it provides a practical framework for solving the vanilla GAN minimax problem, namely in each cycle we may first optimize the discriminator all the way for the current , and then update given the new just a little bit. Repeating this cycle will lead us to the desire solution. In practice, however, we rarely optimize all the way for a given ; instead we usually update a little bit before switching to updating .

Note that the unconstrained minimax problem (2.7) and (2.8) are not the same as the original minimax problem (2.2) or the equivalent formulation (2.3), where is constrained to be of the form . Nevertheless it is reasonable in practice to assume that (2.2) and (2.3) will exhibit similar properties as those being shown in Theorem 2.3 and Proposition 2.4. In fact, we shall assume the same even after we further restrict the discriminator and generator functions to be neural networks and as intended. Set . Under this model our minimax problem has become where

| (2.9) | ||||

| (2.10) |

Equation (2.9) is the key to carrying out the actual optimization: since we do not have the explicit expression for the target distribution , we shall approximate the expectations through sample averages. Thus (2.9) allows us to approximate using samples. More specifically, let be a subset of samples from the training data set (a minibatch) and be a minibatch of samples in drawn from the distribution . Then we do the approximation

| (2.11) | ||||

| (2.12) |

The following algorithm for the vanilla GAN was presented in [4]:

Vanilla GAN Algorithm Minibatch stochastic gradient descent training of generative adversarial nets. The number of

steps to apply to the discriminator, , is a hyperparameter. , the least expensive option, was used in the experiments in [4].

for number of training iterations do

for k steps do

-

•

Sample minibatch of samples in from the distribution .

-

•

Sample minibatch of samples from the training set .

-

•

Update the discriminator by ascending its stochastic gradient with respect to :

end for

-

•

Sample minibatch of samples in from the distribution .

-

•

Update the generator by descending its stochastic gradient with respect to :

end for

The gradient-based updates can use any standard gradient-based learning rule. The paper used momentum in their experiments.

Proposition 2.4 serves as a heuristic justification for the convergence of the algorithm. One problem often encountered with the Vanilla GAN Algorithm is that the updating of from minimization of may saturate early. So instead the authors substituted it with minimizing . This is the well-known “ trick”, and it seems to offer superior performance. We shall examine this more closely later on.

3. -Divergence and -GAN

Recall that the motivating problem for GAN is that we have a probability distribution known only in the form of a finite set of samples (training samples). We would like to learn this target distribution through iterative improvement. Starting with a probability distribution we iteratively update so it gets closer and closer to the target distribution . Of course to do so we will first need a way to measure the discrepancy between two probability distributions. The vanilla GAN has employed a discriminator for this purpose. But there are other ways.

3.1. -Divergence

One way to measure the discrepancy between two probability distributions and is through the Kullback-Leibler divergence, or KL divergence. Let and be two probability density functions defined on . The KL-divergence of and is defined as

Note that is finite only if on almost everywhere. While KL-divergence is widely used, there are other divergences such as the Jensen-Shannon divergence

where . One advantage of the Jensen-Shannon divergence is that it is well defined for any probability density functions and , and is symmetric . In fact, following from Proposition 2.1 the minimization part of the minimax problem in the vanilla GAN is precisely the minimization over of for a given density function . As it turns out, both and are special cases of the more general -divergence, introduced by Ali and Silvey [1].

Let be a strictly convex function with domain such that . Throughout this paper we shall adopt the convention that for all .

Definition 3.1.

Let and be two probability density functions on . Then the -divergence of and is defined as

| (3.1) |

where we adopt the convention that if .

Remark. Because the -divergence is not symmetric in the sense that in general, there might be some confusion as to which divides which in the fraction. If we follow the original Ali and Silvey paper [1] then the definition of would be our . Here we adopt the same definition as in the paper [9], which first introduced the concept of -GAN.

Proposition 3.1.

Let be a strictly convex function on domain such that . Assume either (equivalent to ) or for . Then , and if and only if .

Proof. By the convexity of and Jensen’s Inequality

where the equality holds if and only if is a constant or is linear on the range of . Since is strictly convex, it can only be the former. Thus for the equality to hold we must have on .

Now clearly . If then , and we have . The equality holds if and only if . If for all then we also have . For we will have . Thus if we must have and .

It should be noted that -divergence can be defined for two arbitrary probability measures and on a probability space . Let be another probability measure such that , namely both are absolutely continuous with respect to . For example, we may take . Let and be their Radon-Nikodym derivatives. We define the -divergence of and as

| (3.2) |

Again we adopt the convention that if . It is not hard to show that this definition is independent of the choice for the probability measure , and Proposition 3.1 holds for the more general as well.

With -divergence measuring the discrepancy between two measures, we can now consider applying it to GANs. The biggest challenge here is that we don’t have an explicit expression for the target distribution . As with the vanilla GAN, to compute we must express it in terms of sample averages. Fortunately earlier work by Nguyen, Wainwright and Jordan [8] has already tackled this problem using the convex conjugate of a convex function.

3.2. Convex Conjugate of a Convex Function

The convex conjugate of a convex function is also known as the Fenchel transform or Fenchel-Legendre transform of , which is a generalization of the well known Legendre transform. Let be a convex function defined on an interval . Then its convex conjugate is defined to be

| (3.3) |

As mentioned earlier we extend to the whole real line by adopting the convention that for . Below is a more explicit expression for .

Lemma 3.2.

Assume that is strictly convex and continuously differentiable on its domain , where with . Then

| (3.4) |

Proof. Let . The on , which is strictly decreasing by the convexity of . Hence is strictly concave on . If for some then is a critical point of so it must be its global maximum. Thus attains its maximum at . Now assume is not in the range of then or on . Consider the case for all . Clearly the supreme of is achieved as since is monotonously increasing. The case for for all is similarly derived.

Remark. Note that is a possible value for . The domain for is defined as the set on which is finite.

A consequence of Lemma 3.2 is that under the assumption that is continuously differentiable, is attained for some if and only if is in the range of . This is clear if , but it can also be argued rather easily for finite boundary points of . More generally without the assumption of differentiability, is attained if and only if for some , where is the set of sub-derivatives. The following proposition summarizes some important properties of convex conjugate:

Proposition 3.3.

Let be a convex function on with range in . Then is convex and is lower-semi continuous. Furthermore if is lower-semi continuous then it satisfies the Fenchel Duality .

Proof. This is a well known result. We omit the proof here.

The table below lists the convex dual of some common convex functions:

3.3. Estimating -Divergence Using Convex Dual

To estimate -divergence from samples, Nguyen, Wainwright and Jordan [8] has proposed the use of the convex dual of . Let be probability measures such that for some probability measure , with and . In the nice case of , by we have

| (3.5) | ||||

| (3.6) | ||||

where is any Borel function. Thus taking over all Borel functions we have

| (3.7) |

On the other hand, note that for each , is attained for some as long as is in the range of sub-derivatives of . Thus if this holds for all we have

In fact, equality holds in general under mild conditions.

Theorem 3.4.

Let be strictly convex and continuously differentiable on . Let be Borel probability measures on such that . Then

| (3.8) |

where is taken over all Borel functions . Furthermore assume that for all . Then is an optimizer of (3.8).

Proof. We have already establish the upper bound part (3.7). Now we establish the lower bound part. Let . We examine (3.5) with and for each . Denote . Let and assume where . We now construct a sequence as follows: If is in the range of , say , we set . If for all then is strictly increasing. The supreme of is attained at the boundary point , and we will set where . If for all then is strictly decreasing. The supreme of is attained at the boundary point , and we will set where . By Lemma 3.2 and its proof we know that

Thus

To establish the last part of the theorem, assume that . By Lemma 3.2, set for in the range of so we have

Thus . It follows that attains its maximum at . This proves that is an optimizer for (3.8).

The above theorem requires that . What if this does not hold? We have

Theorem 3.5.

Let be convex such that the domain of contains for some . Let be Borel probability measures on such that . Then

| (3.9) |

where is taken over all Borel functions .

Proof. Take . Then . Let and be their Radon-Nikodym derivatives. Since there exists a set with on which . Fix a in the domain of . Let for and otherwise. Then

This proves the theorem.

As one can see, we clearly have a problem in the above case. If the domain of is not bounded from above, (3.8) does not hold unless . In many practical applications the target distribution might be singular, as the training data we are given may lie on a lower dimensional manifold. Fortunately there is still hope as given by the next theorem:

Theorem 3.6.

Let be a lower semi-continuous convex function such that the domain of has . Let be Borel probability measures on such that , where and . Then

| (3.10) |

where is taken over all Borel functions .

Proof. Again, take . Then . The decomposition where and is unique and guaranteed by the Lebesgue Decomposition Theorem. Let , and be their Radon-Nikodym derivatives. Since , we may divide into where . Clearly we have for . Thus

This proves the theorem.

3.4. -GAN: Variational Divergence Minimization (VDM)

We can formulate a generalization of the vanilla GAN using -divergence. For a given probability distribution , the -GAN objective is to minimize the -divergence with respect to the probability distribution . Carried out in the sample space, -GAN solves the following minimax problem

| (3.11) |

The -GAN framework is first introduced in [9], and the optimization problem (3.11) is referred to as the Variational Divergence Minimization (VDM). Note VDM looks similar to the minimax problem in vanilla GAN. The Borel function here is called a critic function, or just a critic. Under the assumption of , by Theorem 3.4 this is equivalent to . One potential problem of -GAN is that by Theorem 3.5 if then (3.11) is in general not equivalent to . Fortunately for specially chosen this is not a problem.

Theorem 3.7.

Let be a lower semi-continuous strictly convex function such that the domain of has . Assume further that is continuously differentiable on its domain and for . Let be Borel probability measures on . Then is the unique optimizer of

where is taken over all Borel functions and is taken over all Borel probability measures.

3.5. Examples

We shall now look at some examples of -GAN for different choices of the convex function .

Example 1: .

This is the KL-divergence. We have with domain . satisfies all conditions of Theorem 3.7. The corresponding -GAN objective is

| (3.12) |

where . If we ignore the constant term and set then we obtain the equivalent minimax problem

Example 2: .

This is the Jensen-Shannon divergence. We have with domain . Again satisfies all conditions of Theorem 3.7. The corresponding -GAN objective is

| (3.13) |

where . Set , and so . Substituting in (3.13) yields

Ignoring the constant , the vanilla GAN is a special case of -GAN with being the Jensen-Shannon divergence.

Example 3: where .

Here we have with domain . While is not strictly convex and continuously differentiable, it does satisfy the two important conditions of Theorem 3.7 of and for . The corresponding -GAN objective is

| (3.14) |

For , if we require to be continuous then the supremum part of (3.14) is precisely the total variation (also known as the Radon metric) between and , which is closely related to the Wasserstein distance between and .

Example 4: (the “log D Trick”).

Here so is strictly convex. It satisfies all conditions of Theorem 3.7. The explicit expression for the convex dual is complicated to write down, however we do know the domain for is the range of , which is . The -GAN objective is

By Theorem 3.6 and the fact ,

| (3.15) |

Take so . Let and . Observe that

Denote . Then the outer minimization of the minimax problem is

| (3.16) |

This is precisely the “ ” trick used in the original GAN paper [4] for the vanilla GAN to address the saturation problem. Now we can see it is equivalent to the -GAN with the above . It is interesting to note that directly optimizing (3.15) is hard because it is hard to find the explicit formula for in this case. Thus the vanilla GAN with the “ trick” is an indirect way to realize this -GAN.

To implement an -GAN VDM we resort to the same approach as the vanilla GAN, using neural networks to approximate both and to solve the minimax problem (3.11)

We assume the critic function comes from a neural network. [9] proposes , where is a neural network with parameters taking input from and is an output activation function to force the output from onto the domain of . For we again consider its approximation by probability distributions of the form , where is an initially chosen probability distribution on (usually Gaussian, where may or may not be ), and is a neural network with parameters , with input from and output in . Under this model the -GAN VMD minimax problem (3.11) becomes

| (3.17) |

Like the vanilla GAN, since we do not have the explicit expression for the target distribution , we shall approximate the expectations through sample averages. More specifically, let be a minibatch of samples from the training data set (a minibatch) and be a minibatch of samples in drawn from the distribution . Then we employ the approximations

| (3.18) | ||||

| (3.19) |

The following algorithm for -GAN VDM is almost a verbatim repeat of the Vanilla GAN Algorithm [4] stated earlier:

VDM Algorithm Minibatch stochastic gradient descent training of generative adversarial nets. Here and are hyperparameters.

for number of training iterations do

for k steps do

-

•

Sample minibatch of samples in from the distribution .

-

•

Sample minibatch of samples from the training set .

-

•

Update by ascending its stochastic gradient with respect to :

end for

-

•

Sample minibatch of samples in from the distribution .

-

•

Update the discriminator by descending its stochastic gradient with respect to :

end for

The gradient-based updates can use any standard gradient-based learning rule.

4. Examples of Well-Known GANs

Since inception GANS have become one of the hottest research topics in machine learning. Many specially trained GANS tailor made for particular applications have been developed. Modifications and improvements have been proposed to address some of the shortcomings of vanilla GAN. Here we review some of the best known efforts in these directions.

4.1. Wasserstein GAN (WGAN)

Training a GAN can be difficult, which frequently encounters several failure modes. This has been a subject of many discussions. Some of the best known failure modes are:

- Vanishing Gradients:

-

This occurs quite often, especially when the discriminator is too good, which can stymie the improvement of the generator. With an optimal discriminator generator training can fail due to vanishing gradients, thus not providing enough information for the generator to improve.

- Mode Collapse:

-

This refers to the phenomenon where the generator starts to produce the same output (or a small set of outputs) over and over again. If the discriminator gets stuck in a local minimum, then it’s too easy for the next generator iteration to find the most plausible output for the current discriminator. Being stuck the discriminator never manages to learn its way out of the trap. As a result the generators rotate through a small set of output types.

- Failure to Converge:

-

GANs frequently fail to converge, due to a number of factors (known and unknown).

The WGAN [2] makes a simple modification where it replaces the Jensen-Shannon divergence loss function in vanilla GAN with the Wasserstein distance, also known as the Earth Mover (EM) distance. Don’t overlook the significance of this modification: It is one of the most important developments in the topic since the inception of GAN, as the use of EM distance effectively addresses some glaring shortcomings of divergence based GAN, allowing one to mitigate those common failure modes in the training of GANs.

Let be two probability distributions on (or more generally any metric space). Denote by the set of all probability distributions on such that the marginals of are and respectively. Then the EM distance (Wasserstein-1 distance) between and is

Intuitively is called the earth mover distance because it denotes the least amount of work one needs to do to move mass to mass .

In WGAN the objective is to minimize the loss function as opposed to the loss function in -GAN. The advantage of is illustrated by [2] through the following example. Let be the uniform distribution on in . Define and on . Then are singular distributions with disjoint support if . It is easy to check that

However, visually for small , even though and have disjoint support, they look very close. In fact if a GAN can approximate by for a very small we would be very happy with the result. But no matter how close is to 0 we will have . If we train the vanilla GAN with the initial source distribution we would be stuck with a flat gradient so it will not converge. More generally, let be probability measures such that . Then we always have . By Theorem 3.6 and (3.10)

Thus gradient descend will fail to update . In more practical setting, if our target distribution is a Gaussian mixture with well separated means, then starting with the standard Gaussian as initial source distribution will likely miss those Gaussian distributions in the mixture whose means are far away from 0, resulting in mode collapse and possibly failure to converge. Note that by the same Theorem 3.6 and (3.10), things wouldn’t improve by changing the convex function in the -GAN. As we seen from the examples, this pitfall can be avoided in WGAN.

The next question is how to evaluate using only samples from the distributions, since we do not have explicit expression for the target distribution . This is where Kantorovich-Rubenstein Duality [11] comes in, which states that

| (4.1) |

where denotes the set of all Lipschitz functions on with Lipschitz constant 1. Here the critic serves the role of a discriminator. With the duality WGAN solves the minimax problem

| (4.2) |

The rest follows the same script as vanilla GAN and -GAN. We write where is a prior source distribution (usually the standard normal) in and maps to . It follows that

Finally we approximate and by neural networks with parameters and respectively, and . Stochastic gradient descend is used to train WGAN just like all other GANs. Indeed, replacing the JS-divergence with the EM distance , the algorithm for vanilla GAN can be copied verbatim to become the algorithm for WGAN.

Actually almost verbatim. There is one last issue to be worked out, namely how does one enforce the condition ? This is difficult. The authors have proposed a technique called weight clipping, where the parameter (weights) is artificially restricted to the region . In other words, all parameters in are clipped so they fall into the box . Obviously this is not the same as restricting the Lipschitz constant to 1. However, since is compact, so will be . This means the Lipschitz constant will be bounded by some . The hope is that

where the latter is just .

Weight clipping may not be the best way to approximate the Lipschitz condition. Alternatives such as gradient restriction [5] can be more effective. There might be other better ways.

4.2. Deep Convolutional GAN (DCGAN)

DCGAN refers to a set of architectural guidelines for GAN, developed in [10]. Empirically the guidelines help GANs to attain more stable training and good performance. According to the paper, these guidelines are

-

•

Replace any pooling layers with strided convolutions (discriminator) and fractional-strided convolutions (generator).

-

•

Use batch normalization in both the generator and the discriminator.

-

•

Remove fully connected hidden layers for deeper architectures.

-

•

Use ReLU activation in generator for all layers except for the output, which uses Tanh.

-

•

Use LeakyReLU activation in the discriminator for all layers.

Here strided convolution refers to shifting the convolution window by more than 1 unit, which amounts to downsampling. Fractional strided convolution refers to shifting the convolution window by a fractional unit, say of a unit, which is often used for upsampling. This obviously cannot be done in the literal sense. To realize this we pad the input by zeros and then take the appropriate strided convolution. For example, suppose our input data is and the convolution window is . For strided convolution we would first pad to become and then execute . Nowadays, strided convolution is just one of the several ways for up and down sampling.

4.3. Progressive Growing of GANs (PGGAN)

Generating high resolution images from GANs is a very challenging problem. Progressive Growing of GANs developed in [6] is a technique that addresses this challenge.

PGGAN actually refers to a training methodology for GANs. The key idea is to grow both the generator and discriminator progressively: starting from a low resolution image, one adds new layers that model increasingly fine details as training progresses. Since low resolution images are much more stable and easier to train, the training is very stable in the beginning. Once training at a lower resolution is done, it gradually transit to training at a higher resolution. This process continues until the desired resolution is reached. In the paper [6], very high quality facial images have been generated by starting off the training at resolution and gradually increasing the resolution to , etc, until it reaches .

It would be interesting to provide a mathematical foundation for PGGAN. From earlier analysis there are pitfalls with GANs when the target distribution is singular, especially if it and the initial source distribution have disjoint supports. PGGAN may have provided an effective way to mitigate this problem.

4.4. Cycle-Consistent Adversarial Networks (Cycle-GAN)

Cycle-GAN is an image-to-image translation technique developed in [12]. Before this work the goal of image-to-image translation is to learn the mapping between an input image and an output image using a training set of aligned image pairs. However, often we may still want to do a similar task without the benefit of having a training set consisting of aligned image pairs . For example, while we have many paintings by Claude Monet, we don’t have photographs of the scenes, and may wonder what those scenes would look like in a photo. We may wonder how a Monet painting of Mt. Everest would look like even though Claude Monet had never been to Mt. Everest. One way to achieve these tasks is through neural style transfer [3], but this technique only transfers the style of a single painting to a target image. Cycle-GAN offers a different approach that allows for style transfer (tanslation) more broadly.

In a Cycle-GAN, we start with two training sets and . For example, could be a corpus of Monet scenery paintings and could be a set of landscape photographs. The training objective of a Cycle-GAN is to transfer styles from to and vice versa in a “cycle-consistent” way, as described below.

Precisely speaking, a Cycle-GAN consists of three components, each given by a tailored loss function. The first component is a GAN (vanilla GAN, but can also be any other GAN) that tries to generate the distribution of , with one notable deviation: instead of sampling initially from a random source distribution such as a standard normal distribution, Cycle-GAN samples from the training data set . Assume that are samples drawn from the distribution and are samples drawn from the distribution . The loss function for this component is

| (4.3) |

where is the discriminator network and is the generator network. Clearly this is the same loss used by the vanilla GAN, except the source distribution is replaced by the distribution of . The second component is the mirror of the first component, namely it is a GAN to learn the distribution of with the initial source distribution set as the distribution of . The corresponding loss function is thus

| (4.4) |

where is the discriminator network and is the generator network. The third component of the Cycle-GAN is the “cycle-consistent” loss function given by

| (4.5) |

The overall loss function is

| (4.6) |

where is a parameter. Intuitively, translates a sample from the distribution into a sample from , while translates a sample from the distribution into a sample from . The loss function encourages “consistency” in the sense that is not too far off and is not too far off . Finally Cycle-GAN is trained by solving the minimax problem

| (4.7) |

5. Alternative to GAN: Variational Autoencoder (VAE)

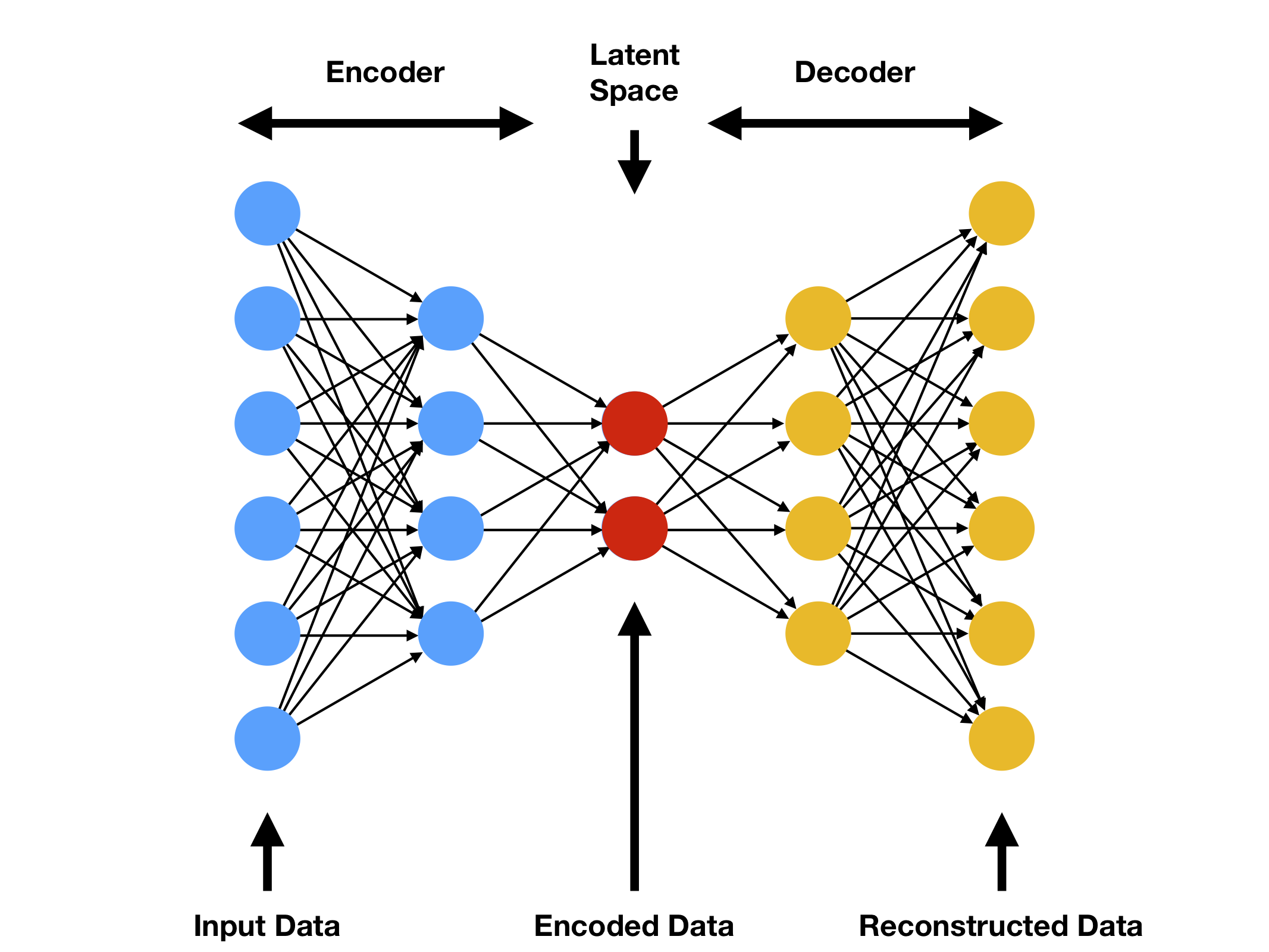

Variational Autoencoder (VAE) is an alternative generative model to GAN. To understand VAE one needs to first understand what is an autoencoder. In a nutshell an autoencoder consists of an encoder neural network that maps a dataset to , where is typically much smaller than , together with a decoder neural network that “decodes” elements of back to . In other words, it encodes the -dimensional features of the dataset to the -dimensional latents, along with a way to convert the latents back to the features. Autoencoders can be viewed as data compressors that compress a higher dimensional dataset to a much lower dimensional data set without losing too much information. For example, the MNIST dataset consists of images of size , which is in . An autoencoder can easily compress it to a data set in using only 10 latents without losing much information. A typical autoencoder has a “bottleneck” architecture, which is shown in Figure 1.

The loss function for training an autoencoder is typically the mean square error (MSE)

where is the size of . For binary data we may use the Bernoulli Cross Entropy (BCE) loss function. Furthermore, we may also add a regularization term to force desirable properties, e.g. a LASSO style loss to gain sparsity.

First developed in [7], VAEs are also neural networks having similar architectures as autoencoders, with stochasticity added into the networks. Autoencoders are deterministic networks in the sense that output is completely determined by the input. To make generative models out of autoencoders we will need to add randomness to latents. In an autoencoder, input data are encoded to the latents , which are then decoded to . A VAE deviates from an autoencoder in the following sense: the input is encoded into a diagonal Gaussian random variable in with mean and variance . Here and the variance is actually . Another way to look at this setup is that instead of having just one encoder in an autoencoder, a VAE neural network has two encoders and for both the mean and the variance of the latent variable . As in an autoencoder, it also has a decoder . With randomness in place we now have a generative model.

Of course we will need some constraints on and . Here VAEs employ the following heuristics for training:

-

•

The decoder decodes the latent random variables to that are close to .

-

•

The random variable with sampled uniformly from is close to the standard normal distribution .

The heuristics will be realized through a loss function consisting of two components. The first component is simply the mean square error between and given by

| (5.1) | ||||

| (5.2) |

where denotes entry-wise product. Here going from (5.1) to (5.2) is a very useful technique called re-parametrization. The second component of the loss function is the KL-divergence (or other -divergences) between and . For two Gaussian random variables their KL-divergence has an explicit expression, given by

| (5.3) |

The loss function for a VAE is thus

| (5.4) |

where is a parameter. To generate new data from a VAE, one inputs random samples into the decoder network . A typical VAE architecture is shown in Figure 2.

This short introduction of VAE has just touched on the mathematical foundation of VAE. There have been a wealth of research focused on improving the performance of VAE and applications. We encourage interested readers to further study the subject by reading recent papers.

References

- [1] S. M. Ali and S. D. Silvey. A general class of coefficients of divergence of one distribution from another. Journal of the Royal Statistical Society: Series B (Methodological), 28(1):131–142, 1966.

- [2] M. Arjovsky, S. Chintala, and L. Bottou. Wasserstein generative adversarial networks. In ICML, 2017.

- [3] L. A. Gatys, A. S. Ecker, and M. Bethge. Image style transfer using convolutional neural networks. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 2414–2423, 2016.

- [4] I. Goodfellow, J. Pouget-Abadie, M. Mirza, B. Xu, D. Warde-Farley, S. Ozair, A. Courville, and Y. Bengio. Generative adversarial nets. In NIPS, 2014.

- [5] I. Gulrajani, F. Ahmed, M. Arjovsky, V. Dumoulin, and A. C. Courville. Improved training of wasserstein gans. In Advances in neural information processing systems, pages 5767–5777, 2017.

- [6] T. Karras, T. Aila, S. Laine, and J. Lehtinen. Progressive growing of gans for improved quality, stability, and variation. In International Conference on Learning Representations, 2018.

- [7] D. P. Kingma and M. Welling. Auto-encoding variational bayes. arXiv preprint arXiv:1312.6114, 2013.

- [8] X. Nguyen, M. J. Wainwright, and M. I. Jordan. Estimating divergence functionals and the likelihood ratio by convex risk minimization. IEEE Transactions on Information Theory, 56(11):5847–5861, 2010.

- [9] S. Nowozin, B. Cseke, and R. Tomioka. f-gan: Training generative neural samplers using variational divergence minimization. In Advances in neural information processing systems, pages 271–279, 2016.

- [10] A. Radford, L. Metz, and S. Chintala. Unsupervised representation learning with deep convolutional generative adversarial networks. arXiv preprint arXiv:1511.06434, 2015.

- [11] C. Villani. Optimal transport: old and new, volume 338. Springer Science & Business Media, 2008.

- [12] J.-Y. Zhu, T. Park, P. Isola, and A. A. Efros. Unpaired image-to-image translation using cycle-consistent adversarial networks. In Proceedings of the IEEE international conference on computer vision, pages 2223–2232, 2017.