A Mathematical Framework for Learning Probability Distributions

Abstract

The modeling of probability distributions, specifically generative modeling and density estimation, has become an immensely popular subject in recent years by virtue of its outstanding performance on sophisticated data such as images and texts. Nevertheless, a theoretical understanding of its success is still incomplete. One mystery is the paradox between memorization and generalization: In theory, the model is trained to be exactly the same as the empirical distribution of the finite samples, whereas in practice, the trained model can generate new samples or estimate the likelihood of unseen samples. Likewise, the overwhelming diversity of distribution learning models calls for a unified perspective on this subject. This paper provides a mathematical framework such that all the well-known models can be derived based on simple principles. To demonstrate its efficacy, we present a survey of our results on the approximation error, training error and generalization error of these models, which can all be established based on this framework. In particular, the aforementioned paradox is resolved by proving that these models enjoy implicit regularization during training, so that the generalization error at early-stopping avoids the curse of dimensionality. Furthermore, we provide some new results on landscape analysis and the mode collapse phenomenon.

1 Introduction

The popularity of machine learning models in recent years is largely attributable to their remarkable versatility in solving highly diverse tasks with good generalization power. Underlying this diversity is the ability of the models to learn various mathematical objects such as functions, probability distributions, dynamical systems, actions and policies, and often a sophisticated architecture or training scheme is a composition of these modules. Besides fitting functions, learning probability distributions is arguably the most widely adopted task and constitutes a great portion of the field of unsupervised learning. Its applications range from classical density estimation [119, 115], which is important for scientific computing [13, 118, 70], to generative modeling with superb performance in image synthesis and text composition [15, 94, 16, 96], and to pretraining tasks such as masked reconstruction that are crucial for large-scale models [28, 16, 25].

Despite the impressive performance of machine learning models in learning probability measures, this subject is less understood than the learning of functions or supervised learning. Specifically, there are several mysteries:

1. Unified framework. There are numerous types of models for representing and estimating distributions, making it difficult to gain a unified perspective for model design and comparison. One traditional categorization includes five model classes: the generative adversarial networks (GAN) [39, 7], variational autoencoders (VAE) [64], normalizing flows (NF) [112, 95], autoregressive models [92, 85], and diffusion models [104, 107]. Within each class, there are further variations that complicate the picture, such as the choice of integral probability metrics for GANs and the choice of architectures for normalizing flows that enable likelihood computations. Ideally, instead of a phenomenological categorization, one would prefer a simple theoretical framework that can derive all these models in a straightforward manner based on a few principles.

2. Memorization and curse of dimensionality. Perhaps the greatest difference between learning functions and learning probability distributions is that, conceptually, the solution of the latter problem must be trivial. On one hand, since the target distribution $p_*$ can be arbitrarily complicated, any useful model must satisfy the property of universal convergence, namely the modeled distribution $p_\tau$ can be trained to converge to any given distribution (e.g. Section 5 will show that this property holds for several models). On the other hand, the target $p_*$ is unknown in practice and only a finite sample set $\{x_i\}_{i=1}^n$ is given (with the empirical distribution denoted by $p_*^{(n)}$). As a result, the modeled distribution can only be trained with $p_*^{(n)}$ and inevitably exhibits memorization, i.e.

$$\lim_{\tau\to\infty} p_\tau = p_*^{(n)}.$$

Hence, training results in a trivial solution and does not provide us with anything beyond the samples we already have. This is different from regression where the global minimizer (interpolating solution) can still generalize well [36].

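One related problem is the curse of dimensionality, which becomes more severe when estimating distributions instead of functions. In general, the distance between the hidden target $p_*$ and the empirical distribution $p_*^{(n)}$ scales badly with the dimension $d$: for any absolutely continuous $p_* \in \mathcal{P}_{ac}(\mathbb{R}^d)$ and any $\delta > 0$ [120],

$$W_2\big(p_*, p_*^{(n)}\big) \gtrsim n^{-\frac{1}{d-\delta}},$$

where $W_2$ is the 2-Wasserstein metric. This slow convergence sets a limit on the performance of all possible models: for instance, consider the following worst-case lower bound [103]

$$\inf_{A}\ \sup_{p_*}\ \mathbb{E}\Big[W_2\big(p_*,\, A(\{x_i\}_{i=1}^n)\big)\Big] \gtrsim n^{-\frac{1}{d}},$$

where $p_*$ is any distribution supported on $[0,1]^d$ and $A$ is any estimator, i.e. a mapping from every sample set $\{x_i\}_{i=1}^n$ to an estimated distribution $A(\{x_i\}_{i=1}^n)$. Hence, to achieve a generalization error of $\epsilon$, an astronomical sample size $n \gtrsim \epsilon^{-d}$ could be necessary in high dimensions.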
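The slow rate can be probed numerically. The sketch below is our own illustration, not the setting of [120, 103]: it assumes a uniform target on $[0,1]^d$ and arbitrary sample sizes, and estimates the average distance from a fresh point to its nearest training sample. Any coupling of $p_*$ with $p_*^{(n)}$ must move each point onto some sample, so this average is a lower bound on $W_1(p_*, p_*^{(n)})$, which in turn is at most $W_2(p_*, p_*^{(n)})$.

```python
import math
import random


def mean_nn_distance(n, d, n_queries=100, seed=0):
    """Monte Carlo estimate of E_x[min_i |x - x_i|] with x, x_1..x_n ~ Uniform([0,1]^d).

    Any coupling between p_* and the empirical measure must move each point x
    onto some sample x_i, so this quantity lower-bounds W_1(p_*, p_*^(n)),
    which is itself at most W_2(p_*, p_*^(n)).
    """
    rng = random.Random(seed)
    samples = [[rng.random() for _ in range(d)] for _ in range(n)]
    total = 0.0
    for _ in range(n_queries):
        x = [rng.random() for _ in range(d)]
        total += min(math.dist(x, s) for s in samples)
    return total / n_queries


# Multiplying n by 16 shrinks the bound by roughly 16 ** (1 / d).
for d in (1, 16):
    ratio = mean_nn_distance(100, d) / mean_nn_distance(1600, d)
    print(f"d = {d:2d}: bound shrinks by a factor of about {ratio:.2f}")
```

With 16 times more samples, the estimate should shrink by a factor near 16 in one dimension, but only by a factor close to $16^{1/16} \approx 1.19$ in sixteen dimensions.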

These theoretical difficulties form a seeming paradox with the empirical success of distribution learning models, for instance, models that can generate novel and highly-realistic images [15, 62, 94] and texts [92, 16].

3. Training and mode collapse. The training of distribution learning models is known to be more delicate than the training of supervised learning models, and exhibits several novel forms of failure. For instance, for the GAN model, one common issue is mode collapse [100, 66, 77, 90], when a positive amount of mass in $p_\tau$ becomes concentrated at a single point, e.g. an image generator could consistently output the same image. Another issue is mode dropping [129], when $p_\tau$ fails to cover some of the modes of $p_*$. In addition, training may suffer from oscillation and divergence [91, 19]. These problems are the main obstacles to global convergence, but the underlying mechanism remains largely obscure.

The goal of this paper is to provide some insights into these mysteries from a mathematical point of view. Specifically,

-

1.

We establish a unified theoretical framework from which all the major distribution learning models can be derived. The diversity of these models is largely determined by two simple factors, the distribution representation and loss type. This formulation greatly facilitates our analysis of the approximation error, training error and generalization error of these models.

-

2.

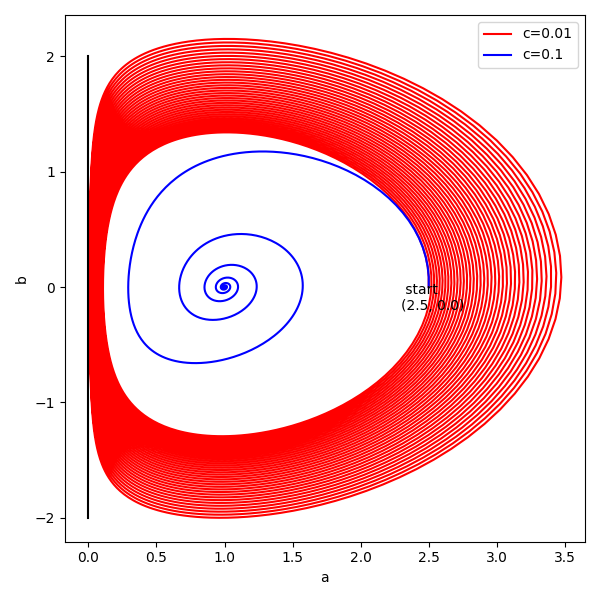

We survey our previous results on generalization error, and resolve the paradox between memorization and generalization. As illustrated in Figure 1, despite that the model eventually converges to the global minimizer, which is the memorization solution, the training trajectory comes very close to the hidden target distribution. With early-stopping or regularized loss, the generalization error scales as

for some constant instead of dimension-dependent terms such as . Thereby, the model escapes from the curse of dimensionality.

-

3.

We discuss our previous results on the rates of global convergence for some of the models. For the other models, we establish new results on landscape and critical points, and identify two mechanisms that can lead to mode collapse.

This paper is structured as follows. Section 2 presents a sketch of the popular distribution learning models. Section 3 introduces our theoretical framework and the derivations of the models. Section 4 establishes the universal approximation theorems. Section 5 analyzes the memorization phenomenon. Section 6 discusses the generalization error of several representative distribution learning models. Section 7 analyzes the training process, loss landscape and mode collapse. All proofs are contained in Section 9. Section 8 concludes this paper with discussion on the remaining mysteries.

Here is a list of related works.

Mathematical framework: A framework for supervised learning has been proposed by [35, 32] with a focus on function representations, namely function spaces that can be discretized into neural networks. This framework is helpful for the analysis of supervised learning models, in particular, for estimating generalization errors [33, 31, 36] that avoid the curse of dimensionality, and for establishing global convergence [24, 97]. Similarly, our framework for distribution learning emphasizes the function representation, as well as the new factor of distribution representation, and bounds the generalization error through analogous arguments. Meanwhile, there are frameworks that characterize distribution learning from other perspectives, for instance, statistical inference [54, 108], graphical models and rewards [130, 12], energy functions [134] and biological neurons [88].

Generalization ability: Section 6 reviews our previous generalization error estimates for the potential-based model [126], GAN [127] and the normalizing flow with stochastic interpolants [125]. The mechanism is that function representations defined by integral transforms or expectations [34, 35] enjoy small Rademacher complexity and thus escape from the curse of dimensionality. Earlier works [33, 31, 36, 37] used this mechanism to bound the generalization error of supervised learning models. Our analysis combines this mechanism with the training process to show that early-stopping solutions generalize well, and is related to concepts from the supervised learning literature such as the frequency principle [124, 123] and slow deterioration [76].

Training and convergence: The additional factor of distribution representation further complicates the loss landscape, and makes training more difficult to analyze, especially for the class of “free generators” that will be discussed later. The model that has attracted the most attention is GAN, and its convergence has only been established in simplified settings [79, 121, 38, 10, 127] or for local saddle points [81, 47, 73]. In practice, GAN training is known to suffer from failures such as mode collapse and divergence [9, 20, 79]. Although these issues can be fixed using regularization and various tricks [44, 66, 77, 90, 50], the mechanism underlying this training instability is not well understood.

2 Model Overview

This section offers a quick sketch of the prominent models for learning probability distributions, while their derivations will be presented in Section 3. These models are commonly grouped into five categories: the generative adversarial networks (GAN), autoregressive models, variational autoencoders (VAE), normalizing flows (NF), and diffusion models. We assume access to samples $\{x_i\}_{i=1}^n$ drawn from the target distribution $p_*$, and the task is to train a model to be able to generate more samples from the distribution (generative modeling) or compute its density function (density estimation).

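1. GAN. The generative adversarial networks [39] model a distribution by transport

$$x = G(z), \qquad z \sim \mathbb{P}. \tag{1}$$

The map $G$ is known as the generator and $\mathbb{P}$ is a base distribution that is easy to sample (e.g. unit Gaussian). To solve for a generator such that $G(z) \sim p_*$, the earliest GAN model considers the following optimization problem [39]

$$\min_G \max_D\ \mathbb{E}_{x\sim p_*}\big[\log D(x)\big] + \mathbb{E}_{z\sim\mathbb{P}}\big[\log\big(1 - D(G(z))\big)\big],$$

where $D:\mathbb{R}^d \to (0,1)$ is known as the discriminator, and min-max losses of this type are known as adversarial losses. A well-known variant is the WGAN [7] defined by

$$\min_G \max_{\|\theta\|_\infty \le 1}\ \mathbb{E}_{x\sim p_*}\big[D_\theta(x)\big] - \mathbb{E}_{z\sim\mathbb{P}}\big[D_\theta(G(z))\big],$$

where the discriminator $D_\theta$ is a neural network whose parameter $\theta$ is bounded in norm. For the other variants, a survey on the GAN models is given by [43].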

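2. VAE. The variational autoencoder proposed by [64] uses a randomized generator and its approximate inverse, known as the decoder and encoder, which we denote by the conditional distributions $P(x|z)$ and $Q(z|x)$. Similar to (1), the distribution is modeled by $p(x) = \int P(x|z)\,d\mathbb{P}(z)$ and can be sampled by drawing $z \sim \mathbb{P}$ and then $x \sim P(\cdot|z)$. VAE considers the following optimization problem

$$\min_{P,Q}\ \mathbb{E}_{x\sim p_*}\Big[\mathrm{KL}\big(Q(\cdot|x)\,\|\,\mathbb{P}\big) - \mathbb{E}_{z\sim Q(\cdot|x)}\log P(x|z)\Big],$$

where KL is the Kullback–Leibler divergence. To simplify computation, $\mathbb{P}$ is usually set to be the unit Gaussian $\mathcal{N}(0, I_k)$, and the decoder and encoder are parametrized as diagonal Gaussians [64]. For instance, consider

$$P(x|z) = \mathcal{N}\big(G(z),\, \lambda^2 I_d\big), \qquad Q(z|x) = \mathcal{N}\big(F(x),\, \gamma^2 I_k\big),$$

where $G, F$ are parametrized functions and $\lambda, \gamma > 0$ are scalars. Then, we have

$$\mathrm{KL}\big(Q(\cdot|x)\,\|\,\mathbb{P}\big) = \frac{1}{2}\big(|F(x)|^2 + k\gamma^2 - k - k\log\gamma^2\big), \qquad -\log P(x|z) = \frac{1}{2\lambda^2}|x - G(z)|^2 + d\log\lambda + \frac{d}{2}\log 2\pi.$$

Up to constant, the VAE loss becomes

$$\mathbb{E}_{x\sim p_*}\mathbb{E}_{\epsilon\sim\mathcal{N}(0,I_k)}\Big[\frac{1}{2\lambda^2}\big|x - G\big(F(x) + \gamma\epsilon\big)\big|^2 + \frac{1}{2}|F(x)|^2\Big] + \frac{k}{2}\big(\gamma^2 - \log\gamma^2\big) + d\log\lambda.$$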

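3. Autoregressive. Consider sequential data $x = (x_1, \dots, x_L)$ such as text and audio. The autoregressive models represent a distribution through the factorization $p(x) = \prod_{l=1}^L p(x_l|x_{<l})$, where $x_{<l} = (x_1, \dots, x_{l-1})$, and can be sampled by sampling iteratively $x_l \sim p(\cdot|x_{<l})$ for $l = 1, \dots, L$. These models minimize the loss

$$\mathbb{E}_{x\sim p_*}\Big[-\sum_{l=1}^L \log p(x_l|x_{<l})\Big].$$

There are several approaches to the parametrization of the conditional distributions $p(x_l|x_{<l})$, depending on how to process the variable-length input $x_{<l}$. The common options are the Transformer networks [116, 92], recurrent networks [87], autoregressive networks [68, 87] and causal convolution [86]. For instance, consider Gaussian distributions parametrized by a recurrent network

$$p(x_l|x_{<l}) = \mathcal{N}\big(\mu(h_l),\, I\big), \qquad h_l = f(h_{l-1}, x_{l-1}),$$

where $f, \mu$ are parametrized functions, and $h_l$ is the hidden feature. Since

$$-\log p(x_l|x_{<l}) = \frac{1}{2}\big|x_l - \mu(h_l)\big|^2 + \text{const},$$

the loss is equal, up to constant, to

$$\mathbb{E}_{x\sim p_*}\sum_{l=1}^L \frac{1}{2}\big|x_l - \mu(h_l)\big|^2.$$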
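The "up to constant" step can be checked directly. The sketch below assumes a hypothetical scalar recurrent model with $f(h, x) = \tanh(wh + ux)$, $\mu(h) = h$, zero initial values and unit variance; these choices are ours, for illustration only. It confirms that the exact negative log-likelihood differs from the squared-error loss by $\frac{L}{2}\log 2\pi$, which does not depend on the parameters.

```python
import math


def autoregressive_nll(seq, w=0.5, u=1.0):
    """Negative log-likelihood of a scalar sequence under a toy Gaussian RNN.

    Hypothetical model: h_0 = 0, x_0 = 0, h_l = tanh(w * h_{l-1} + u * x_{l-1}),
    and p(x_l | x_<l) = N(mu(h_l), 1) with mu(h) = h.
    Returns (exact NLL, squared-error part sum_l |x_l - mu(h_l)|^2 / 2).
    """
    h, x_prev = 0.0, 0.0
    nll, sq = 0.0, 0.0
    for x in seq:
        h = math.tanh(w * h + u * x_prev)  # hidden feature summarizing x_<l
        mu = h
        density = math.exp(-0.5 * (x - mu) ** 2) / math.sqrt(2 * math.pi)
        nll -= math.log(density)
        sq += 0.5 * (x - mu) ** 2
        x_prev = x
    return nll, sq


nll, sq = autoregressive_nll([0.3, -1.2, 0.8, 0.05])
print(nll - sq)  # equals 4/2 * log(2*pi), independent of w and u
```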

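4. NF. The normalizing flows proposed by [112, 111] use a generator (1) similar to GAN and VAE. The optimization problem is given by

$$\min_G\ \mathbb{E}_{x\sim p_*}\Big[-\log\mathbb{P}\big(G^{-1}(x)\big) - \log\big|\det\nabla G^{-1}(x)\big|\Big],$$

where $\mathbb{P}$ is set to be the unit Gaussian and $\det\nabla G^{-1}$ is the Jacobian determinant of $G^{-1}$. To enable the calculation of these terms, the earliest approach [112, 111, 95] considers only the inverse $G^{-1}$ and models it by a concatenation of simple maps $G^{-1} = f_K \circ \cdots \circ f_1$ such that each $\det\nabla f_k$ is easy to compute. The modeled distribution (1) cannot be sampled, but can serve as a density estimator, with the density given by

$$p(x) = \mathbb{P}(x_K)\prod_{k=1}^K \big|\det\nabla f_k(x_{k-1})\big|, \qquad x_0 = x,\ \ x_k = f_k(x_{k-1}).$$

Up to constant, the loss becomes

$$\mathbb{E}_{x\sim p_*}\Big[\frac{1}{2}|x_K|^2 - \sum_{k=1}^K \log\big|\det\nabla f_k(x_{k-1})\big|\Big].$$

A later approach [29, 63, 89, 55] models the generator by $G = g_K \circ \cdots \circ g_1$ such that each $g_k$ is designed to be easily invertible with $\nabla g_k$ being a triangular matrix. Then, the loss becomes

$$\mathbb{E}_{x\sim p_*}\Big[\frac{1}{2}|z_0|^2 + \sum_{k=1}^K \log\big|\det\nabla g_k(z_{k-1})\big|\Big], \qquad z_K = x,\ \ z_{k-1} = g_k^{-1}(z_k),$$

where each determinant is simply the product of the diagonal entries of the triangular matrix $\nabla g_k$.

Another approach [30, 21, 40] defines $G$ as a continuous-time flow, i.e. the solution to an ordinary differential equation (ODE)

$$\frac{d}{dt}X_t = V(X_t, t), \qquad X_0 = z, \qquad G(z) = X_1, \tag{2}$$

for some time-dependent velocity field $V$. Then, the loss becomes

$$\mathbb{E}_{x\sim p_*}\Big[\frac{1}{2}|X_0|^2 + \int_0^1 \nabla\cdot V(X_t, t)\,dt\Big],$$

where $X_t$ solves (2) backwards in time from $X_1 = x$.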

A survey on normalizing flows is given by [65].
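To make the triangular-Jacobian idea concrete, here is a minimal two-dimensional sketch of one such map, an affine coupling layer. This specific construction and the conditioner functions `scale` and `shift` (stand-ins for neural networks) are our illustrative assumptions, not necessarily the architectures of [29, 63, 89, 55]. The inverse is explicit, and the log-determinant is the log of the single non-trivial diagonal entry.

```python
import math


# Hypothetical conditioner functions; in practice these would be neural networks.
def scale(z1):
    return 0.5 * math.tanh(z1)


def shift(z1):
    return z1 ** 2 - 1.0


def g(z):
    """Affine coupling map g(z1, z2) = (z1, z2 * exp(scale(z1)) + shift(z1)).

    Its Jacobian [[1, 0], [*, exp(scale(z1))]] is lower triangular.
    """
    z1, z2 = z
    return (z1, z2 * math.exp(scale(z1)) + shift(z1))


def g_inverse(x):
    x1, x2 = x
    return (x1, (x2 - shift(x1)) * math.exp(-scale(x1)))


def log_abs_det_jacobian(z):
    # Product of the diagonal entries 1 and exp(scale(z1)).
    return scale(z[0])


def log_density(x):
    """log p(x) for p = g_# N(0, I_2), by the change of variables formula."""
    z = g_inverse(x)
    log_base = -0.5 * (z[0] ** 2 + z[1] ** 2) - math.log(2 * math.pi)
    return log_base - log_abs_det_jacobian(z)


print(log_density(g((0.7, -0.4))))
```

Stacking several such layers (alternating which coordinate is left unchanged) gives the composition $g_K \circ \cdots \circ g_1$, whose log-determinant is the sum of the per-layer terms appearing in the loss.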

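5. Monge-Ampère flow. A model that is closely related to the normalizing flows is the Monge-Ampère flow [131]. It is parametrized by a time-dependent potential function $\phi(x, t)$, and defines a generator by the ODE

$$\frac{d}{dt}X_t = \nabla\phi(X_t, t), \qquad X_0 = z, \qquad G(z) = X_1,$$

such that the flow is driven by a gradient field. The model minimizes the following loss

$$\mathbb{E}_{x\sim p_*}\Big[\frac{1}{2}|X_0|^2 + \int_0^1 \Delta\phi(X_t, t)\,dt\Big],$$

where $\Delta$ is the Laplacian and $X_t$ is again traced backwards in time from $X_1 = x$.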

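6. Diffusion. The diffusion models [104, 106, 49] define the generator by a reverse-time SDE (stochastic differential equation)

$$dX_t = -\dot\beta(t)\big[X_t + 2V(X_t, t)\big]\,dt + \sqrt{2\dot\beta(t)}\,d\bar W_t,$$

where $V$ is a time-dependent velocity field known as the score function [56], $\beta(t)$ is some noise scale, and $\bar W_t$ is a reverse-time Wiener process. The modeled distribution is sampled by solving this SDE backwards in time from $t = T$ to $t = 0$, starting from $X_T \sim \mathbb{P}$. The score function is learned from the optimization problem

$$\min_V \int_0^T \omega(t)\,\mathbb{E}_{x\sim p_*,\,\epsilon\sim\mathbb{P}}\bigg|V\Big(e^{-\beta(t)}x + \sqrt{1 - e^{-2\beta(t)}}\,\epsilon,\ t\Big) + \frac{\epsilon}{\sqrt{1 - e^{-2\beta(t)}}}\bigg|^2\,dt,$$

where $\mathbb{P} = \mathcal{N}(0, I_d)$ is the unit Gaussian and $\omega(t) \ge 0$ is any weight. Besides the reverse-time SDE, another way to sample from the model is to solve the following reverse-time ODE, which yields the same distribution [107]

$$\frac{d}{dt}X_t = -\dot\beta(t)\big[X_t + V(X_t, t)\big].$$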

A survey on diffusion models is given by [128].

7. NF interpolant. Finally, we introduce a model called normalizing flow with stochastic interpolants [2, 75]. It is analogous to the diffusion models, and yet is conceptually simpler. This model learns a velocity field $V$ from the optimization problem

$$\min_V \int_0^1 \mathbb{E}_{z\sim\mathbb{P},\,x\sim p_*}\big|V\big((1-t)z + tx,\ t\big) - (x - z)\big|^2\,dt.$$

Then, the generator is defined through the ODE (2) and the modeled distribution is sampled by (1).

3 Framework

Previously, a mathematical framework for supervised learning was proposed by [35], which was effective for estimating the approximation and generalization errors of supervised learning models [34, 33, 31]. In particular, it helped to understand how neural network-based models manage to avoid the curse of dimensionality. The framework characterizes the models by four factors: the function representation (abstract function spaces built from integral transformations), the loss, the training scheme, and the discretization (e.g. how the continuous representations are discretized into neural networks with finite neurons).

This section presents a similar framework that unifies models for learning probability distributions. We focus on two factors: the distribution representation, which is a new factor and determines how distributions are parametrized by abstract functions, and the loss type, which specifies which metric or topology is imposed upon the distributions. A sketch of the categorization is given in Table 1. We show that the diverse families of distribution learning models can be simultaneously derived from this framework. The theoretical results in the latter sections, in particular the generalization error estimates, are also built upon this mathematical foundation.

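Table 1: Distribution learning models categorized by distribution representation (rows) and loss type (columns).

| Representation | Density | Expectation | Regression |
|---|---|---|---|
| Potential | bias potential model | feasible | unknown |
| Free generator | NF, VAE, autoregressive | GAN | unknown |
| Fixed generator | upper bound | feasible | diffusion, NF interpolant, OT |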

3.1 Background

The basic task is to estimate a probability distribution given i.i.d. samples $\{x_i\}_{i=1}^n$. We denote this unknown target distribution by $p_*$ and the empirical distribution by

$$p_*^{(n)} = \frac{1}{n}\sum_{i=1}^n \delta_{x_i}.$$

The underlying space is assumed to be Euclidean, $\mathbb{R}^d$. Estimating a distribution may have several meanings depending on its usage: e.g. obtaining a random variable $X \sim p_*$, estimating the density function $p_*(x)$, or computing expectations $\mathbb{E}_{x\sim p_*}[f(x)]$. The first task is known as generative modeling and the second as density estimation; these two problems are the focus of this paper, while the third task can be solved by them.

There are two general approaches to modeling a distribution, which can be figuratively termed “vertical” and “horizontal”; an illustration is given by Figure 2. Given a base distribution $\mathbb{P}$, the vertical approach reweights the density of $\mathbb{P}$ to approximate the density of the target $p_*$, while the horizontal approach transports the mass of $\mathbb{P}$ towards the location of $p_*$. When a modeled distribution $p$ is obtained and a distance is needed to compute either the training loss or the test error, the vertical approach measures the difference between the densities of $p$ and $p_*$ at each location, and is exemplified by the KL divergence

$$\mathrm{KL}(p_*\,\|\,p) = \int \log\frac{p_*(x)}{p(x)}\,dp_*(x), \tag{3}$$

while the horizontal approach measures the distance between the “particles” of $p$ and $p_*$, and is exemplified by the 2-Wasserstein metric [61, 117]

$$W_2(p_*, p) = \Big(\inf_{\pi\in\Pi(p_*, p)} \int |x - y|^2\,d\pi(x, y)\Big)^{1/2}, \tag{4}$$

where $\pi$ is any coupling between $p_*$ and $p$ (i.e. a joint distribution in $\mathcal{P}(\mathbb{R}^d\times\mathbb{R}^d)$ whose marginal distributions are $p_*$ and $p$), and $\Pi(p_*, p)$ denotes the set of all such couplings. We will see that the vertical and horizontal approaches largely determine the distribution representation and loss type.
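A small example contrasts the two viewpoints. Assuming two uniform distributions on three points each, one shifted by $0.5$ relative to the other (our toy choice), the vertical KL divergence (3) is infinite because the supports are disjoint, while the horizontal $W_2$ metric (4) simply equals the shift. The $W_2$ helper relies on the one-dimensional fact that matching particles in sorted order is optimal.

```python
import math


def kl_discrete(p, q):
    """KL(p || q) for probability vectors on a common finite grid (vertical view)."""
    total = 0.0
    for pi, qi in zip(p, q):
        if pi > 0:
            if qi == 0:
                return math.inf  # p puts mass where q has none
            total += pi * math.log(pi / qi)
    return total


def w2_particles_1d(xs, ys):
    """W_2 between equal-size, equally weighted particle sets in 1D (horizontal view).

    In one dimension, matching particles in sorted order is optimal.
    """
    xs, ys = sorted(xs), sorted(ys)
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(xs, ys)) / len(xs))


# Uniform on {0, 1, 2} versus uniform on {0.5, 1.5, 2.5}, on the grid 0, 0.5, ..., 2.5.
p = [1 / 3, 0, 1 / 3, 0, 1 / 3, 0]
q = [0, 1 / 3, 0, 1 / 3, 0, 1 / 3]
print(kl_discrete(p, q))                                   # inf
print(w2_particles_1d([0.0, 1.0, 2.0], [0.5, 1.5, 2.5]))  # 0.5
```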

Finally, consider the operator

$$\mathrm{law}: X \mapsto \mathrm{law}(X),$$

which maps a random variable $X$ to its distribution. Similarly, given a random path $X_t$, we obtain a path $\mathrm{law}(X_t)$ in the distribution space. In general, there can be infinitely many random variables that are mapped to the same distribution. For example, let $\Omega = [0,1]$ and let $\omega$ be uniform over $\Omega$; for any $a \in [0,1]$, we can define the random variable $X_a = (\omega + a) \bmod 1$, and these all satisfy $\mathrm{law}(X_a) = \mathrm{Unif}[0,1]$. One drawback of this non-uniqueness is that, for many generative models, there can be plenty of global minima, which make the loss landscape non-convex and may lead to training failures; as we will show, this non-uniqueness is inherent in mode collapse. One benefit is that, if the task is to learn some time-dependent distribution $p_t$, one can select, from the infinitely many possible random paths $X_t$ with $\mathrm{law}(X_t) = p_t$, the one that is the easiest to compute, and therefore define a convenient loss.

For notation, for any measurable subset $\Omega$ of $\mathbb{R}^d$, denote by $\mathcal{P}(\Omega)$ the space of probability measures over $\Omega$, by $\mathcal{P}_2(\Omega)$ the subspace of measures with finite second moments, and by $\mathcal{P}_{ac}(\Omega)$ the subspace of absolutely continuous measures (i.e. those with density functions). Denote by $\mathrm{supp}(p)$ the support of a distribution $p$. Given any two measures $\mu, \nu$, we denote by $\mu\times\nu$ the product measure over the product space. We denote by $\tau$ the training time and by $t$ the time that parametrizes flows.

3.2 Distribution representation

Since machine learning models at the basic level are good at learning functions, the common approach to learning distributions is to parametrize distributions by functions. There are three common approaches:

1. Potential function. Given any base distribution $\mathbb{P}$, define the modeled distribution by

$$p(x) = \frac{1}{Z}e^{-V(x)}\,\mathbb{P}(x), \qquad Z = \int e^{-V}\,d\mathbb{P}, \tag{5}$$

where $V$ is a potential function and $Z$ is for normalization. This parametrization is sometimes known as the Boltzmann distribution or exponential family.

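2. Free generator. Given any measurable function $G$, define the modeled distribution by

$$p = \mathrm{law}\big(G(Z)\big), \qquad Z \sim \mathbb{P}.$$

This $p$ is known as the transported measure or pushforward measure and is denoted by $G_\#\mathbb{P}$, while $G$ is called the generator or transport map. Equivalently, $G_\#\mathbb{P}$ is defined as the measure that satisfies

$$G_\#\mathbb{P}(A) = \mathbb{P}\big(G^{-1}(A)\big) \tag{6}$$

for all measurable sets $A$.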

The name “free generator” is used to emphasize that the task only specifies the target distribution $p_*$ to estimate, and we are free to choose any generator from the possibly infinite set of solutions $\{G : G_\#\mathbb{P} = p_*\}$.

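There are several common extensions to the generator. First, $G$ can be modeled as a random function, such that $G(z) \sim P(\cdot|z)$ for some conditional distribution $P$. Second, $G$ can be induced by a flow. Let $V(x, t)$ be a Lipschitz velocity field, and define $G$ as the unique solution to the ODE

$$\frac{d}{dt}G_t(z) = V\big(G_t(z), t\big), \qquad G_0(z) = z, \qquad G = G_1, \tag{7}$$

where $G_t$ is the flow map. Furthermore, if we define the interpolant distributions $p_t = (G_t)_\#\mathbb{P}$, then they form a (weak) solution to the continuity equation

$$\partial_t p_t + \nabla\cdot(p_t V_t) = 0, \qquad V_t = V(\cdot, t). \tag{8}$$

Specifically, for any smooth test function $f$,

$$\frac{d}{dt}\int f\,dp_t = \int \nabla f(x)\cdot V(x, t)\,dp_t(x).$$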

Third, one can restrict $G$ to a subset of the possibly infinite set of solutions $\{G : G_\#\mathbb{P} = p_*\}$, specifically generators that are gradients of potential functions, $G = \nabla\psi$. By Brenier’s theorem [14, 117], such a potential function exists under very general conditions. Similarly, one can restrict the velocity fields in (7) to time-dependent gradient fields

$$V(x, t) = \nabla\phi(x, t). \tag{9}$$

By Theorem 5.51 of [117], in general there exists a potential function $\phi$ such that the flow induced by $\nabla\phi$ satisfies $(G_1)_\#\mathbb{P} = p_*$. Specifically, the interpolant distribution $p_t$ is the Wasserstein geodesic that goes from $\mathbb{P}$ to $p_*$. Finally, it is interesting to note that there is also a heuristic argument from [2] that justifies the restriction to gradient fields: given any velocity field $V$ with the interpolant distributions $p_t$ generated by (7), consider the equation

$$\nabla\cdot\big(p_t\nabla\phi_t\big) = \nabla\cdot\big(p_t V_t\big).$$

By the theory of elliptic PDEs, a solution $\phi_t$ exists. It follows from (8) that we can always replace a velocity field $V$ by a gradient field $\nabla\phi$ that induces the same interpolant distributions $p_t$.

3. Fixed generator. Contrary to the free generator, another approach is to choose a specific coupling $\pi$ between the base and target distributions $\mathbb{P}, p_*$, where

$$\pi \in \Pi(\mathbb{P}, p_*),$$

and the generator is represented as the conditional distribution $G(z) \sim \pi(\cdot|z)$.

One can further extend $\pi$ into a random path $X_t$, $t \in [0,1]$, such that $\mathrm{law}(X_0, X_1) = \pi$. Then, analogous to the construction (7), $G$ can be represented as the ODE or SDE that drives the trajectories $X_t$. Thanks to the non-uniqueness of random paths with prescribed marginals, one can instead consider only the interpolant distributions $p_t = \mathrm{law}(X_t)$ and solve for the velocity field $V$ in the continuity equation (8). Then, $G$ can be represented as the solution to the ODE (7) with velocity $V$.

Currently, models of this category belong to either of the two extremes:

Fully deterministic: For some measurable function $G$,

$$\pi = (\mathrm{id}\times G)_\#\mathbb{P}, \quad\text{i.e.}\quad X_1 = G(X_0). \tag{10}$$

The generator $G$ is usually set to be the optimal transport map from $\mathbb{P}$ to $p_*$. The idea is simple in one dimension: we sort the “particles” of $\mathbb{P}$ and $p_*$ and match them according to this ordering. This monotonicity in one dimension generalizes to cyclical monotonicity in higher dimensions [117]. Couplings that are cyclically monotone are exactly the optimal transport plans with respect to the squared Euclidean cost [117], namely the minimizers of (4). Then, Brenier’s theorem [14, 117] implies that, under general conditions, problem (4) has a unique solution, which has the form (10), and furthermore the generator is the gradient of a convex function, $G = \nabla\psi$.
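The one-dimensional claim can be checked by brute force. The sketch below is our own illustration (sizes and distributions are arbitrary): it compares the monotone matching, which sends the $k$-th smallest particle of one set to the $k$-th smallest particle of the other, against all $n!$ matchings under the squared Euclidean cost, i.e. the discrete analogue of (4) between two equal-size empirical measures.

```python
import itertools
import random


def matching_cost(xs, ys, perm):
    """Sum of squared distances when xs[i] is matched to ys[perm[i]]."""
    return sum((xs[i] - ys[j]) ** 2 for i, j in enumerate(perm))


def sorted_matching(xs, ys):
    """Monotone matching: the k-th smallest x goes to the k-th smallest y."""
    order_x = sorted(range(len(xs)), key=lambda i: xs[i])
    order_y = sorted(range(len(ys)), key=lambda j: ys[j])
    perm = [0] * len(xs)
    for i, j in zip(order_x, order_y):
        perm[i] = j
    return perm


def brute_force_matching(xs, ys):
    """Optimal matching by enumerating all n! permutations (small n only)."""
    return min(itertools.permutations(range(len(ys))),
               key=lambda perm: matching_cost(xs, ys, perm))


rng = random.Random(0)
xs = [rng.gauss(0.0, 1.0) for _ in range(6)]
ys = [rng.uniform(-2.0, 3.0) for _ in range(6)]
print(matching_cost(xs, ys, sorted_matching(xs, ys)),
      matching_cost(xs, ys, brute_force_matching(xs, ys)))  # the two costs should coincide
```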

Fully random: The coupling is simply the product measure

$$\pi = \mathbb{P}\times p_*. \tag{11}$$

At first sight, this choice is trivial and intractable, but the trick is to choose an appropriate random path such that either the dynamics of or the continuity equation (8) is easy to solve.

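One of the simplest constructions, proposed by [75, 2], is to use the linear interpolation

$$X_t = (1-t)X_0 + tX_1, \qquad (X_0, X_1) \sim \mathbb{P}\times p_*. \tag{12}$$

Then, to solve for the target velocity field in (8), define a joint distribution $\rho$ over $(x, t) \in \mathbb{R}^d\times[0,1]$ by

$$\int f(x, t)\,d\rho(x, t) = \int_0^1 \mathbb{E}\big[f(X_t, t)\big]\,dt \tag{13}$$

for any test function $f$. Similarly, define the current density $J$, a vector-valued measure, by

$$\int \mathbf{f}(x, t)\cdot dJ(x, t) = \int_0^1 \mathbb{E}\big[\mathbf{f}(X_t, t)\cdot(X_1 - X_0)\big]\,dt \tag{14}$$

for any vector-valued test function $\mathbf{f}$. Then, we can define a velocity field by the Radon-Nikodym derivative

$$V_* = \frac{dJ}{d\rho}. \tag{15}$$

Each $V_*(x, t)$ is the weighted average of the velocities of the random lines (12) that pass through the point $(x, t)$ in spacetime. As shown in [2, 125], under general assumptions, $V_*$ is the solution to the continuity equation (8) and satisfies

$$G_\#\mathbb{P} = p_*,$$

where $G$ is the generator defined by the flow (7) of $V_*$.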
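The definition (15) becomes explicit when the target is an empirical distribution. Assuming, for illustration only, $\mathbb{P} = \mathcal{N}(0,1)$ in one dimension and $p_* = p_*^{(n)}$, the line from sample $x_i$ that passes through $(x, t)$ has velocity $(x_i - x)/(1-t)$ and posterior weight proportional to $\exp\big(-|x - t x_i|^2/(2(1-t)^2)\big)$, so $V_*(x, t)$ is their weighted average. Integrating (7) with the forward Euler method (our choice of integrator) sends every starting point onto a training sample, which is the memorization phenomenon of Section 1 in miniature.

```python
import math


def velocity(x, t, samples):
    """V_*(x, t) = E[X_1 - X_0 | X_t = x] for X_t = (1 - t) X_0 + t X_1,
    with X_0 ~ N(0, 1) and X_1 uniform over `samples` (1D empirical target)."""
    s = 1.0 - t
    log_w = [-(x - t * xi) ** 2 / (2 * s * s) for xi in samples]
    m = max(log_w)
    w = [math.exp(lw - m) for lw in log_w]  # shift by the max to avoid underflow
    x_bar = sum(wi * xi for wi, xi in zip(w, samples)) / sum(w)
    # The line from sample x_i through (x, t) has velocity (x_i - x) / (1 - t).
    return (x_bar - x) / s


def transport(z, samples, n_steps=1000, t_end=0.999):
    """Forward Euler for dX/dt = V_*(X, t) on [0, t_end], starting from X_0 = z."""
    x, t = z, 0.0
    dt = t_end / n_steps
    for _ in range(n_steps):
        x += dt * velocity(x, t, samples)
        t += dt
    return x


samples = [-2.0, 1.0, 3.0]
print([round(transport(z, samples), 3) for z in (-1.5, -0.1, 0.1, 1.5)])
```

In one dimension the flow preserves order, so a starting point $z$ should end near $-2$, $1$ or $3$ according to whether it lies below, between or above the Gaussian tertiles $\pm 0.43$.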

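A more popular construction by [104, 106, 49] uses the diffusion process

$$dX_t = -\dot\beta(t)\,X_t\,dt + \sqrt{2\dot\beta(t)}\,dW_t, \qquad X_0 \sim p_*, \tag{16}$$

where $\beta(t)$ is a non-decreasing function with $\beta(0) = 0$ that represents the noise scale. Consider the coupling $\pi_t = \mathrm{law}(X_0, X_t)$. The conditional distribution is an isotropic Gaussian [107]

$$X_t \,|\, X_0 = y \ \sim\ \mathcal{N}\big(e^{-\beta(t)}y,\ (1 - e^{-2\beta(t)})I_d\big), \tag{17}$$

and the interpolant distributions are given by

$$p_t = \mathrm{law}(X_t) = \int \mathcal{N}\big(e^{-\beta(t)}y,\ (1 - e^{-2\beta(t)})I_d\big)\,dp_*(y). \tag{18}$$

Then, letting $\mathbb{P}$ be the unit Gaussian $\mathcal{N}(0, I_d)$, we have

$$\begin{aligned}
\mathrm{KL}\big(\pi_t\,\|\,p_*\times\mathbb{P}\big) &= \mathbb{E}_{y\sim p_*}\Big[\mathrm{KL}\Big(\mathcal{N}\big(e^{-\beta(t)}y,\ (1 - e^{-2\beta(t)})I_d\big)\,\Big\|\,\mathcal{N}(0, I_d)\Big)\Big]\\
&= \frac{1}{2}\Big[e^{-2\beta(t)}\big(\mathbb{E}_{y\sim p_*}|y|^2 - d\big) - d\log\big(1 - e^{-2\beta(t)}\big)\Big],
\end{aligned}$$

where the last line follows from the formula for the KL divergence between multivariate Gaussians. It follows that if $p_*$ has finite second moments, then the coupling $\pi_t$ converges to the product measure $p_*\times\mathbb{P}$ exponentially fast, with $\mathrm{KL}(\pi_t\,\|\,p_*\times\mathbb{P}) = O(e^{-2\beta(t)})$.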
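The closed form above is easy to evaluate. The helper below implements the last line of the derivation, with `beta` standing for $\beta(t)$ and `second_moment` for $\mathbb{E}_{y\sim p_*}|y|^2$ (parameter names and the sample values are ours). The successive ratios approach $e^{-2}$ per unit increase of $\beta$, matching the claimed exponential rate.

```python
import math


def kl_coupling_vs_product(beta, second_moment, d):
    """KL(pi_t || p_* x N(0, I_d)) from the closed form above.

    beta: value of beta(t); second_moment: E_{p_*}|y|^2; d: dimension.
    """
    a = math.exp(-2.0 * beta)
    return 0.5 * (a * (second_moment - d) - d * math.log1p(-a))


kls = [kl_coupling_vs_product(b, second_moment=3.0, d=2) for b in (1.0, 2.0, 3.0, 4.0, 5.0)]
print([round(k2 / k1, 4) for k1, k2 in zip(kls, kls[1:])])  # ratios approach e^{-2} ~ 0.1353
```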

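By choosing $T$ sufficiently large, we have $p_T \approx \mathbb{P}$. Then, a generative model can be defined by sampling from $\mathbb{P}$ and then going through a reverse-time process to approximate the target $p_*$. One approach is to implement the following reverse-time SDE [6]

$$dX_t = -\dot\beta(t)\big[X_t + 2\nabla\log p_t(X_t)\big]\,dt + \sqrt{2\dot\beta(t)}\,d\bar W_t,$$

which is solved from time $T$ to $0$, where $\bar W_t$ is the reverse-time Wiener process. This backward SDE is equivalent to the forward SDE (16) in the sense that, if $X_T \sim p_T$, then they induce the same distribution of paths [6], and in particular $X_0 \sim p_*$. (An analysis that accounts for the approximation error between $p_T$ and $\mathbb{P}$ is given by [105].) The gradient field $\nabla\log p_t$ is known as the score function [56], which is modeled by a velocity field $V(x, t)$, and then the generator can be defined as the following random function

$$G(z) = X_0, \quad\text{where}\quad dX_t = -\dot\beta(t)\big[X_t + 2V(X_t, t)\big]\,dt + \sqrt{2\dot\beta(t)}\,d\bar W_t,\ \ X_T = z. \tag{19}$$

Another approach is to implement the following reverse-time ODE [107]

$$\frac{d}{dt}X_t = -\dot\beta(t)\big[X_t + \nabla\log p_t(X_t)\big].$$

Its velocity field solves the continuity equation (8) for the interpolant distributions (18), and we similarly have $X_0 \sim p_*$ if $X_T \sim p_T$. Then, the generator can be defined as a deterministic function

$$G(z) = X_0, \quad\text{where}\quad \frac{d}{dt}X_t = -\dot\beta(t)\big[X_t + V(X_t, t)\big],\ \ X_T = z. \tag{20}$$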
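Both samplers can be sketched in a setting where the score is known exactly. We assume, for illustration only, $\beta(t) = t$, a one-dimensional Gaussian target $p_* = \mathcal{N}(2, 0.5^2)$, and the exact score $\nabla\log p_t$ in place of a learned $V$. The reverse-time SDE is discretized with the Euler-Maruyama method and the reverse-time ODE with the explicit Euler method, both stepping backwards from $T = 5$ to $0$ starting from $\mathbb{P} = \mathcal{N}(0,1)$.

```python
import math
import random

M, S0 = 2.0, 0.5  # hypothetical target p_* = N(M, S0^2) in one dimension


def score(x, t):
    """Exact score grad log p_t(x) for beta(t) = t, where
    p_t = N(e^{-t} M, e^{-2t} S0^2 + 1 - e^{-2t})."""
    mean = math.exp(-t) * M
    var = math.exp(-2 * t) * S0 ** 2 + 1 - math.exp(-2 * t)
    return -(x - mean) / var


def sample(n, T=5.0, n_steps=500, stochastic=True, seed=0):
    """Integrate the reverse-time SDE (stochastic=True) or ODE from t = T to t = 0."""
    rng = random.Random(seed)
    h = T / n_steps
    out = []
    for _ in range(n):
        x = rng.gauss(0.0, 1.0)  # X_T ~ P = N(0, 1), which approximates p_T
        t = T
        for _ in range(n_steps):
            if stochastic:
                # Euler-Maruyama step of dX = -[X + 2 score] dt + sqrt(2) dW, backwards by h
                x += h * (x + 2 * score(x, t)) + math.sqrt(2 * h) * rng.gauss(0.0, 1.0)
            else:
                # Euler step of dX/dt = -[X + score], backwards by h
                x += h * (x + score(x, t))
            t -= h
        out.append(x)
    return out


results = {}
for stochastic in (True, False):
    xs = sample(1000, stochastic=stochastic)
    mean = sum(xs) / len(xs)
    var = sum((x - mean) ** 2 for x in xs) / len(xs)
    results["SDE" if stochastic else "ODE"] = (mean, var)
    print("SDE" if stochastic else "ODE", round(mean, 3), round(var, 3))  # near 2.0 and 0.25
```

Replacing `score` by a trained approximation $V$ turns these loops into the generators (19) and (20).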

4. Mixture. Finally, we remark that it is possible to use a combination of these representations. For instance, [82] uses a normalizing flow model reweighted by a Boltzmann distribution, which is helpful for sampling from distributions with multiple modes while maintaining accurate density estimation. Another possibility is to first train a model with the fixed generator representation as a stable initialization, and then finetune the trained generator as a free generator (e.g. using the GAN loss) to improve sample quality.

3.3 Loss type

There are numerous ways to define a metric or divergence on the space of probability measures, which greatly contributes to the diversity of distribution learning models. One requirement, however, is that since the target distribution $p_*$ is replaced by its samples during training, $p_*$ almost always appears in the loss through an expectation.

The commonly used losses belong to three categories:

1. Density-based loss. The modeled distribution $p$ participates in the loss as a density function. The default choice is the KL divergence (3), which is equivalent up to constant to the negative log-likelihood (NLL)

$$L(p) = -\mathbb{E}_{x\sim p_*}\big[\log p(x)\big]. \tag{21}$$

In fact, we can show that NLL is in a sense the only possible density-based loss.

Proposition 3.1.

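Let $L$ be any loss function on $\mathcal{P}_{ac}(\mathbb{R}^d)$ that has the form

$$L(p) = \mathbb{E}_{x\sim p_*}\big[f\big(p(x)\big)\big],$$

where $f$ is some function on $(0, \infty)$. If for any $p_* \in \mathcal{P}_{ac}(\mathbb{R}^d)$, the loss is minimized by $p = p_*$, then

$$f(s) = c_1\log s + c_0$$

for some $c_1 \le 0$ and $c_0 \in \mathbb{R}$. The converse is obvious.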

Besides the KL divergence, there are several other well-known divergences in statistics such as the Jensen–Shannon divergence and the $\chi^2$ divergence. Although they are infeasible as density-based losses by Proposition 3.1, certain weakened versions of these divergences can still be used, as will be discussed later.

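2. Expectation-based loss. The modeled distribution participates in the loss through expectations. Since both $p$ and $p_*$ can be seen as linear operators over test functions, it is natural to define the loss as a dual norm

$$\|p_* - p\|_* = \sup_{\|D\|\le 1}\ \mathbb{E}_{x\sim p_*}\big[D(x)\big] - \mathbb{E}_{x\sim p}\big[D(x)\big], \tag{22}$$

where $\|\cdot\|$ is some user-specified functional norm. The test function $D$ is often called the discriminator, and such a loss is called an adversarial loss. If $\|\cdot\|$ is a Hilbert space norm, then the loss can also be defined by

$$\sup_D\ \mathbb{E}_{x\sim p_*}\big[D(x)\big] - \mathbb{E}_{x\sim p}\big[D(x)\big] - \frac{1}{4}\|D\|^2 = \|p_* - p\|_*^2. \tag{23}$$

There are several classical examples of adversarial losses: If $\|\cdot\|$ is the $L^\infty$ norm, then (22) becomes the total variation norm $\|p_* - p\|_{TV}$. If $\|\cdot\|$ is the Lipschitz semi-norm, then (22) becomes the 1-Wasserstein metric $W_1(p_*, p)$ by the Kantorovich-Rubinstein theorem [60, 117]. If $\|\cdot\|$ is the RKHS norm $\|\cdot\|_{\mathcal{H}_k}$ with some kernel $k$, then (22) becomes the maximum mean discrepancy (MMD) [41], and (23) is the squared MMD:

$$\mathrm{MMD}^2(p_*, p) = \mathbb{E}_{x,x'\sim p_*}\big[k(x, x')\big] + \mathbb{E}_{y,y'\sim p}\big[k(y, y')\big] - 2\,\mathbb{E}_{x\sim p_*,\,y\sim p}\big[k(x, y)\big],$$

which gives rise to the moment matching network [72].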
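The squared MMD can be estimated directly from two sample sets by replacing each expectation with an average over pairs. The sketch below assumes a Gaussian kernel $k(x, y) = \exp\big(-|x-y|^2/(2h^2)\big)$ with bandwidth $h$ (our choice of kernel) and uses the V-statistic, which is exactly the squared MMD between the two empirical distributions.

```python
import math


def gaussian_kernel(x, y, bandwidth=1.0):
    """k(x, y) = exp(-|x - y|^2 / (2 * bandwidth^2))."""
    return math.exp(-math.dist(x, y) ** 2 / (2 * bandwidth ** 2))


def mmd_squared(xs, ys, bandwidth=1.0):
    """Squared MMD between the empirical distributions of xs and ys (V-statistic)."""
    def mean_kernel(a, b):
        return sum(gaussian_kernel(u, v, bandwidth) for u in a for v in b) / (len(a) * len(b))
    return mean_kernel(xs, xs) + mean_kernel(ys, ys) - 2 * mean_kernel(xs, ys)


xs = [(0.0,), (1.0,), (2.0,)]
ys = [(3.0,), (4.0,), (5.0,)]
print(mmd_squared(xs, xs), mmd_squared(xs, ys))  # zero for identical sets, positive otherwise
```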

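In practice, the discriminator is usually parametrized by a neural network, denoted by $D_\theta$ with parameter $\theta$. One common choice of the norm is simply the $\ell^\infty$ norm on $\theta$

$$\sup_{\|\theta\|_\infty\le 1}\ \mathbb{E}_{x\sim p_*}\big[D_\theta(x)\big] - \mathbb{E}_{x\sim p}\big[D_\theta(x)\big]. \tag{24}$$

This formulation gives rise to the WGAN model [7], and the bound $\|\theta\|_\infty \le 1$ can be conveniently implemented by weight clipping. The loss (24) and its variants are generally known as the neural network distances [8, 133, 45, 37].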

The strength of the metric $\|\cdot\|_*$ (e.g. the fineness of its topology) increases with the size of the normed space of discriminators $D$, i.e. it decreases as the norm $\|\cdot\|$ becomes stronger. Once some global regularity such as the Lipschitz norm is imposed on the space, the dual norm $\|\cdot\|_*$ or the neural network distance (24) becomes continuous with respect to the underlying geometry (e.g. the Wasserstein metric), and is no longer permutation invariant like NLL (21) or total variation. If we further restrict $D$ to certain sparse subspaces of the Lipschitz functions, in particular neural networks, then $\|p_* - p\|_*$ becomes insensitive to the “high frequency” parts of $p_* - p$. As we will demonstrate in Section 6, this property is the source of good generalization.

Note that there are some variants of the GAN loss that resemble (23) but whose norms are so weak that the dual norms are no longer well-defined, for instance, the loss with the penalty term of [122], or the loss with the Lipschitz penalty of [44]. By [127, Proposition 5], in general such losses blow up to $+\infty$. Nevertheless, if we consider one-time-scale training such that the generator and the discriminator $D$ are trained with similar learning rates, then this blow-up can be avoided [127].

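Beyond the dual norms (22), one can also consider divergences. Although Proposition 3.1 rules out density-based losses built on divergences other than the KL divergence, one can consider weakened versions of the dual formulations of these divergences. For instance, given any parametrized discriminator $D_\theta:\mathbb{R}^d \to (0,1)$, the Jensen–Shannon divergence can be bounded below by [39]

$$\mathrm{JS}(p_*, p) \ge \frac{1}{2}\Big(\mathbb{E}_{x\sim p_*}\big[\log D_\theta(x)\big] + \mathbb{E}_{x\sim p}\big[\log\big(1 - D_\theta(x)\big)\big]\Big) + \log 2. \tag{25}$$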

This lower bound gives rise to the earliest version of GAN [39]. GANs based on other divergences have been studied in [83, 78].
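For discrete distributions one can check that the bound (25) is tight: the discriminator $D = p_*/(p_* + p)$ attains equality, while any other $D$ gives a smaller value. The toy distributions below are our own choice.

```python
import math


def gan_objective(p_star, p, D):
    """E_{p_*}[log D] + E_p[log(1 - D)] for discrete distributions, D(x) in [0, 1]."""
    value = sum(a * math.log(d) for a, d in zip(p_star, D) if a > 0)
    value += sum(b * math.log(1 - d) for b, d in zip(p, D) if b > 0)
    return value


def js_divergence(p_star, p):
    m = [(a + b) / 2 for a, b in zip(p_star, p)]

    def kl(u, v):
        return sum(x * math.log(x / y) for x, y in zip(u, v) if x > 0)

    return 0.5 * kl(p_star, m) + 0.5 * kl(p, m)


p_star = [0.5, 0.3, 0.2, 0.0]
p = [0.1, 0.2, 0.3, 0.4]
# Optimal discriminator D = p_* / (p_* + p); its value is irrelevant where both vanish.
D_opt = [a / (a + b) if a + b > 0 else 0.5 for a, b in zip(p_star, p)]
lower_bound = 0.5 * gan_objective(p_star, p, D_opt) + math.log(2)
print(lower_bound, js_divergence(p_star, p))  # equal up to rounding
```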

3. Regression loss. The regression loss is used exclusively by the fixed generator representation discussed in Section 3.2. If a target generator $G_*$ has been specified, then we simply use the $L^2$ loss over the base distribution

$$L(G) = \mathbb{E}_{z\sim\mathbb{P}}\big|G(z) - G_*(z)\big|^2. \tag{26}$$

If a target velocity field $V_*$ has been specified, then the loss is integrated over the interpolant distributions

$$L(V) = \int_0^1 \mathbb{E}_{x\sim p_t}\big|V(x, t) - V_*(x, t)\big|^2\,dt, \tag{27}$$

or equivalently, we use the $L^2(\rho)$ loss with the joint distribution $\rho$ defined by (13).

3.4 Combination

Having discussed the distribution representations and loss types, we can now combine them to derive the distribution learning models in Table 1. Our focus will be on the highlighted four classes in the table.

Density + Potential. Since Proposition 3.1 indicates that the negative log-likelihood (NLL) (21) is the only feasible density-based loss, we simply insert the potential-based representation (5) into NLL, and obtain, up to a constant independent of $V$, a loss in the potential function $V$,

$$L(V) = \mathbb{E}_{x\sim p_*}\big[V(x)\big] + \log\int e^{-V}\,d\mathbb{P}. \tag{28}$$

This formulation gives rise to the bias-potential model [115, 13], also known as variationally enhanced sampling.
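On a finite state space the loss (28) and its minimizer can be checked directly. Writing $p_V \propto e^{-V}\mathbb{P}$, one has $L(V) = \mathrm{KL}(p_*\,\|\,p_V) - \mathrm{KL}(p_*\,\|\,\mathbb{P})$, so $L$ is minimized exactly when $p_V = p_*$, i.e. $V = -\log(p_*/\mathbb{P})$ up to an additive constant. The distributions below are toy choices of ours.

```python
import math


def bias_potential_loss(V, p_star, base):
    """Loss (28) on a finite state space: E_{p_*}[V] + log E_P[exp(-V)]."""
    expectation = sum(a * v for a, v in zip(p_star, V))
    log_partition = math.log(sum(b * math.exp(-v) for b, v in zip(base, V)))
    return expectation + log_partition


p_star = [0.1, 0.6, 0.3]
base = [1 / 3, 1 / 3, 1 / 3]                                 # P: uniform base distribution
V_opt = [-math.log(a / b) for a, b in zip(p_star, base)]     # makes e^{-V} P proportional to p_*
kl = sum(a * math.log(a / b) for a, b in zip(p_star, base))  # KL(p_* || P)
print(bias_potential_loss(V_opt, p_star, base), -kl)         # equal up to rounding
```

Adding a constant to $V$ leaves the loss unchanged, reflecting that the normalization $Z$ in (5) absorbs it.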

Density + Free generator. In order to insert the transport representation $p = G_\#\mathbb{P}$ into NLL (21), we need to be able to compute the density $p(x)$. For simple cases such as when $\mathbb{P}$ is Gaussian and $G$ is affine, the density has a closed-form expression. Yet, in realistic scenarios when $G$ needs to satisfy the universal approximation property and thus has complicated forms, one has to rely on indirect calculations. There are three common approaches:

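1. Change of variables (for normalizing flows): If $G$ is a diffeomorphism, the density of $p = G_\#\mathbb{P}$ is given by the change of variables formula

$$p(x) = \mathbb{P}\big(G^{-1}(x)\big)\,\big|\det\nabla G^{-1}(x)\big|.$$

Usually $\mathbb{P}$ is set to the unit Gaussian $\mathcal{N}(0, I_d)$. Then, up to constant, the NLL loss (21) becomes

$$L(G) = \mathbb{E}_{x\sim p_*}\Big[\frac{1}{2}\big|G^{-1}(x)\big|^2 - \log\big|\det\nabla G^{-1}(x)\big|\Big].$$

If $G$ is modeled by a flow (7) with velocity field $V$, then its Jacobian satisfies

$$\frac{d}{dt}\nabla G_t(z) = \nabla V\big(G_t(z), t\big)\,\nabla G_t(z).$$

It follows that

$$\frac{d}{dt}\log\big|\det\nabla G_t(z)\big| = \mathrm{tr}\,\nabla V\big(G_t(z), t\big) = \nabla\cdot V\big(G_t(z), t\big),$$

and this is known as Abel’s formula [113]. Hence, we obtain the loss of the normalizing flow model [112, 21]

$$L(V) = \mathbb{E}_{x\sim p_*}\Big[\frac{1}{2}|X_0|^2 + \int_0^1 \nabla\cdot V(X_t, t)\,dt\Big], \quad\text{where}\quad \frac{d}{dt}X_t = V(X_t, t),\ \ X_1 = x. \tag{29}$$

Moreover, if the velocity field is defined by a gradient field as discussed in (9), then the loss has the simpler form

$$L(\phi) = \mathbb{E}_{x\sim p_*}\Big[\frac{1}{2}|X_0|^2 + \int_0^1 \Delta\phi(X_t, t)\,dt\Big],$$

which leads to the Monge-Ampère flow model [131].

One potential shortcoming of NF is that diffeomorphisms might not be suitable for the generator when the target distribution $p_*$ is singular, e.g. concentrated on low-dimensional manifolds, which is expected for real data such as images. To approximate $p_*$, the generator needs to shrink the mass of $\mathbb{P}$ onto negligible sets, and thus $\log|\det\nabla G^{-1}|$ blows up. As this term is involved in the loss, it can cause the training process to be unstable.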

2. Variational lower bound (for VAE): Unlike NF, the variational autoencoders do not require the generator to be invertible, and instead use its posterior distribution. The generator can be generalized to allow for random output, and we define the conditional distribution

The generalized inverse can be defined as the conditional distribution that satisfies

in the distribution sense. If the generator is deterministic, i.e. , and invertible, then is simply . It follows that the KL divergence (3) can be written as

This is an example of the variational lower bound [64], and the NLL loss (21) now becomes

which is the loss of VAE. To make the problem more tractable, the decoder and encoder are usually parametrized by diagonal Gaussian distributions [64]:

where are parametrized functions such as neural networks, and is taken entry-wise. Using the formula for KL divergence between Gaussians

we can show that, up to constant, the VAE loss equals

where is entry-wise product. This loss resembles the classical autoencoder [1, 102]

and thus are addressed by the decoder and encoder.

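3. Factorization (for autoregressive model): To model a distribution over sequential data $x = (x_1, \dots, x_L)$, one can choose a generator $G$ that is capable of processing variable-length inputs $(x_1, \dots, x_{i-1})$, such as the Transformer network [116] or recurrent networks [99], and define the distribution by

$$P(x) = \prod_{i=1}^{L} P(x_i \mid x_{<i}),$$

where each conditional distribution $P(\cdot \mid x_{<i})$ is specified by the output $G(x_1, \dots, x_{i-1})$. Then, NLL (21) is reduced to

$$L(G) = -\mathbb{E}_{x \sim P_*}\Big[\sum_{i=1}^{L} \log P(x_i \mid x_{<i})\Big].$$

Usually, each $P(\cdot \mid x_{<i})$ has a simple parametrization such as Gaussian or Softmax so that $\log P(x_i \mid x_{<i})$ is tractable [86, 92].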
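The three routes above can be illustrated on toy models. The sketch below assumes a one-dimensional affine generator for item 1, the closed-form KL divergence between a diagonal Gaussian and the unit Gaussian for the KL term in item 2, and a hypothetical two-token softmax model for item 3; none of these concrete choices come from the paper.

```python
import math
from itertools import product

def affine_flow_nll(x, a, b):
    # Route 1 (change of variables): G(z) = a*z + b with z ~ N(0, 1), so that
    # -log p(x) = 0.5*z^2 - log|dG^{-1}/dx| + 0.5*log(2*pi) with z = G^{-1}(x)
    z = (x - b) / a
    return 0.5 * z * z + math.log(abs(a)) + 0.5 * math.log(2 * math.pi)

def gaussian_kl_to_standard(mean, std):
    # Route 2 (VAE): KL(N(mean, diag(std^2)) || N(0, I)), summed over coordinates
    return sum(0.5 * (s * s + m * m - 1.0) - math.log(s) for m, s in zip(mean, std))

def autoregressive_nll(seq, logits_fn):
    # Route 3 (factorization): -log P(x) = -sum_i log P(x_i | x_<i) with softmax conditionals
    total = 0.0
    for i, token in enumerate(seq):
        logits = logits_fn(seq[:i])
        log_z = math.log(sum(math.exp(v) for v in logits))
        total -= logits[token] - log_z
    return total

def sticky_logits(prefix):
    # Hypothetical conditional model on the vocabulary {0, 1}: favors repeating the last token
    if not prefix:
        return [0.0, 0.0]
    return [1.0, 0.0] if prefix[-1] == 0 else [0.0, 1.0]

# The factorized probabilities sum to one over all sequences of length 3.
total = sum(math.exp(-autoregressive_nll(list(s), sticky_logits))
            for s in product([0, 1], repeat=3))
print(total)
print(affine_flow_nll(1.0, 2.0, 1.0), gaussian_kl_to_standard([0.0], [2.0]))
```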

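Expectation + Free generator. By the definition (6) of the transport representation $P = G\#\mathbb{P}$,

$$\mathbb{E}_{x \sim P}\big[f(x)\big] = \mathbb{E}_{z \sim \mathbb{P}}\big[f(G(z))\big]$$

for all measurable functions $f$. Then, the classical GAN loss (25) becomes

$$\min_G \max_D \; \mathbb{E}_{x \sim P_*}\big[\log D(x)\big] + \mathbb{E}_{z \sim \mathbb{P}}\big[\log\big(1 - D(G(z))\big)\big]. \qquad (30)$$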

Similarly, the WGAN loss (24) becomes

| (31) |

Regression + Fixed generator. For the case with fully deterministic coupling (10), a target generator $G_*$ is provided by numerical optimal transport, and then fitted by a parametrized function with the regression loss (26). This formulation leads to the generative model of [132]. (Moreover, a few models with some technical variations [4, 5, 98] are related to this category, but for simplicity we do not describe them here.)

For the case with fully random coupling (11), we fit either the score function from (18) or the velocity field from (15) using the regression loss (27). Note that the targets (18, 15) are both defined by expectations and thus the loss (27) cannot be computed directly. Thanks to the linearity of expectation, we can expand the loss to make the computation tractable.
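The expansion relies on a standard fact: for any function $f$, $\mathbb{E}|f(x) - y|^2 = \mathbb{E}|f(x) - \mathbb{E}[y \mid x]|^2 + \mathbb{E}\,\mathrm{Var}(y \mid x)$, so regressing against samples of $y$ has the same minimizer as regressing against the intractable conditional expectation. A minimal discrete sketch, with a hypothetical joint table standing in for the pairs used in the losses below:

```python
# Hypothetical joint distribution of (x, y): x takes two values, y is a noisy target.
joint = {(0, -1.0): 0.2, (0, 1.0): 0.2, (1, 0.0): 0.3, (1, 2.0): 0.3}

def conditional_mean(joint):
    # E[y | x] computed from the joint probability table
    mass, first = {}, {}
    for (x, y), p in joint.items():
        mass[x] = mass.get(x, 0.0) + p
        first[x] = first.get(x, 0.0) + p * y
    return {x: first[x] / mass[x] for x in mass}

def loss_against_mean(f, joint):
    # Intractable form: E |f(x) - E[y | x]|^2
    m = conditional_mean(joint)
    return sum(p * (f[x] - m[x]) ** 2 for (x, _), p in joint.items())

def loss_against_sample(f, joint):
    # Tractable form: E |f(x) - y|^2, which only needs joint samples of (x, y)
    return sum(p * (f[x] - y) ** 2 for (x, y), p in joint.items())

# The two losses differ by the same constant (the expected conditional variance)
# for every f, so they share the minimizer f(x) = E[y | x].
f1 = {0: 0.0, 1: 0.0}
f2 = {0: 3.0, 1: -1.0}
gap1 = loss_against_sample(f1, joint) - loss_against_mean(f1, joint)
gap2 = loss_against_sample(f2, joint) - loss_against_mean(f2, joint)
print(gap1, gap2)
```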

Model the score function by a velocity field and let be a user-specified weight. The regression loss can be written as

Since the conditional distribution is the isotropic Gaussian (17), this loss is straightforward to evaluate. Thus, we obtain the loss of the score-based diffusion models [104, 106, 49, 107]

| (32) |

Similarly, for the velocity field (15), using the definitions (13, 14) of the joint distribution and current density , we can write the regression loss as

| (33) |

Thus, we obtain the loss of normalizing flow with stochastic interpolants [2, 75, 125].

Other classes. Finally, we briefly remark on the rest of the classes in Table 1. For the combination “Density + Fixed generator”, it has been shown by [105] that the regression loss upper bounds the KL divergence. Specifically, if in the loss (32) we set the weight by where is the noise scale in the SDE (16), then given any score function ,

| (34) |

where is the generator defined by the reverse-time SDE (19) with the score . The result also holds for defined by the reverse-time ODE (20) under a self-consistency assumption: let be the reverse-time flow map of (20), then

The combinations “Expectation + Potential” and “Expectation + Fixed generator” are feasible, but we are not aware of representative models. The combinations “Regression + Potential” and “Regression + Free generator” do not seem viable, since there is no clear target for the regression.

Remark 1 (Empirical loss).

As discussed in Section 3.3, the loss is almost always an expectation in the target distribution . Indeed, one can check that all the loss functions introduced in this section can be written in the abstract form

where is the parameter function and depends on the model. Thus, if only a finite sample set of is available, as is usually the case in practice, one can define the empirical loss

| (35) |

where is the empirical distribution.

3.5 Function representation

Having parametrized the distributions and losses by abstract functions, the next step is to parametrize these functions by machine learning models such as neural networks. There is much freedom in this choice: any parametrization used in supervised learning should be applicable to most of the functions we have discussed. These include the generators and discriminators of GANs, the means and variances of the decoder and encoder of VAE, the potential function of the bias potential model, the score function of score-based diffusion models, and the velocity field of normalizing flows with stochastic interpolants. Some interesting applications are given by [27, 92, 58, 96].

One exception is the generator $G$ of the normalizing flows (29), which needs to be invertible with a tractable Jacobian determinant. As mentioned in Section 2, one approach is to parametrize $G$ as a sequence of invertible blocks whose Jacobian determinants have closed-form formulas (example designs can be found in [29, 63, 89, 55]). Another approach is to represent $G$ as a flow, approximate this flow with numerical schemes, and solve for the traces in (29) (examples of numerical schemes are given by [21, 131, 40]).

For the theoretical analysis in the rest of this paper, we need to fix a function representation. Since our focus is on the phenomena that are unique to learning distributions (e.g. memorization), we keep the function representation as simple as possible, while satisfying the minimum requirement of the universal approximation property among distributions (and thus the capacity for memorization). Specifically, we use the random feature functions [93, 35, 126].

Definition 1 (Random feature functions).

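Let $\mathcal{H}(\mathbb{R}^d, \mathbb{R}^k)$ be the space of functions $f: \mathbb{R}^d \to \mathbb{R}^k$ that can be expressed as

$$f(x) = \mathbb{E}_{(w, b) \sim \rho_0}\big[a(w, b)\,\sigma(w \cdot x + b)\big], \qquad (36)$$

where $\rho_0$ is a fixed parameter distribution over $(w, b) \in \mathbb{R}^{d+1}$, $\sigma$ is the activation function, and $a \in L^2(\rho_0; \mathbb{R}^k)$ is a parameter function. For simplicity, we use the notation $\mathcal{H}$ when the input and output dimensions are clear.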
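A random feature function can be approximated by replacing the parameter distribution with finitely many parameters, which yields a 2-layer network with a frozen first layer. The sketch below makes simplifying assumptions of its own: two-dimensional inputs, no bias term, ReLU activation, and a parameter distribution uniform on the unit circle, approximated by equally spaced directions. With $a \equiv 1$ it reproduces the closed form $f(x) = |x|/\pi$ for this toy setting.

```python
import math

def relu(u):
    return max(u, 0.0)

def random_feature_function(x, a, num_features):
    # Approximates f(x) = E_{w ~ rho_0}[a(w) * relu(w . x)] with rho_0 uniform on the unit
    # circle, using num_features equally spaced directions (a frozen first layer of that width).
    total = 0.0
    for j in range(num_features):
        theta = 2 * math.pi * j / num_features
        w = (math.cos(theta), math.sin(theta))
        total += a(w) * relu(w[0] * x[0] + w[1] * x[1])
    return total / num_features

# With a(w) = 1 the exact value is |x| / pi; with a(w) = w_1 and x = (r, 0) it is r / 4.
print(random_feature_function((3.0, 4.0), lambda w: 1.0, 10000), 5.0 / math.pi)
print(random_feature_function((2.0, 0.0), lambda w: w[0], 10000), 0.5)
```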

Definition 2 (RKHS norm).

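For any subset $\Omega \subseteq \mathbb{R}^d$, consider the quotient space

$$\mathcal{H}(\Omega) = L^2(\rho_0) \,/\, \{a : f_a(x) = 0 \text{ for all } x \in \Omega\}$$

with the norm

$$\|f\|_{\mathcal{H}(\Omega)} = \inf\big\{\|a\|_{L^2(\rho_0)} : f_a = f \text{ on } \Omega\big\},$$

where $f_a$ denotes the function (36) with parameter function $a$. We use the notation $\mathcal{H}$ if $\Omega$ is clear from context. By [26, 93], $\mathcal{H}(\Omega)$ is a Hilbert space and $\|\cdot\|_{\mathcal{H}(\Omega)}$ is equal to the norm of the reproducing kernel Hilbert space (RKHS) induced by the kernel

$$k(x, x') = \mathbb{E}_{(w, b) \sim \rho_0}\big[\sigma(w \cdot x + b)\,\sigma(w \cdot x' + b)\big].$$

Furthermore, given any distribution $P$, we can define the following integral operator $K_P$,

$$K_P f(x) = \mathbb{E}_{x' \sim P}\big[k(x, x')\,f(x')\big]. \qquad (37)$$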
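In the same hypothetical two-dimensional, bias-free ReLU setting (our simplification, not the paper's Assumption 3.1), the kernel of Definition 2 has a closed form in terms of the angle $\theta$ between $x$ and $x'$, namely $|x||x'|\big((\pi - \theta)\cos\theta + \sin\theta\big)/(4\pi)$ (our own calculation for this toy setting). The sketch compares it with a quadrature over features and applies the integral operator (37) for a discrete distribution.

```python
import math

def feature_kernel(x, y, num_features=20000):
    # k(x, y) = E_{w ~ Unif(circle)}[relu(w . x) * relu(w . y)], by quadrature over directions
    total = 0.0
    for j in range(num_features):
        t = 2 * math.pi * j / num_features
        wx = math.cos(t) * x[0] + math.sin(t) * x[1]
        wy = math.cos(t) * y[0] + math.sin(t) * y[1]
        total += max(wx, 0.0) * max(wy, 0.0)
    return total / num_features

def arccos_kernel(x, y):
    # Closed form of the same kernel in 2D: |x||y| ((pi - t) cos t + sin t) / (4 pi), t = angle(x, y)
    nx, ny = math.hypot(x[0], x[1]), math.hypot(y[0], y[1])
    cos_t = max(-1.0, min(1.0, (x[0] * y[0] + x[1] * y[1]) / (nx * ny)))
    t = math.acos(cos_t)
    return nx * ny * ((math.pi - t) * cos_t + math.sin(t)) / (4 * math.pi)

def integral_operator(f, points, weights, kernel):
    # (K_P f)(x) = E_{x' ~ P}[k(x, x') f(x')] for P = sum_i weights[i] * delta(points[i])
    return lambda x: sum(w * kernel(x, p) * f(p) for p, w in zip(points, weights))

# Apply K_P to the constant function 1, with P uniform on two orthogonal unit vectors
K_f = integral_operator(lambda p: 1.0, [(1.0, 0.0), (0.0, 1.0)], [0.5, 0.5], arccos_kernel)
print(K_f((1.0, 0.0)), 0.125 + 0.125 / math.pi)
print(feature_kernel((1.0, 0.0), (0.0, 2.0)), arccos_kernel((1.0, 0.0), (0.0, 2.0)))
```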

Definition 3 (Time-dependent random feature function).

Given any , one can define a flow by

Define the flow-induced norm

Our results adopt either of the following settings:

Assumption 3.1.

Assume that the activation is ReLU $\sigma(x) = \max(x, 0)$. Assume that the parameter distribution is supported on the sphere and has a positive and continuous density over this sphere.

Assumption 3.2.

Assume that the activation is sigmoid $\sigma(x) = 1/(1 + e^{-x})$. Assume that the parameter distribution has a positive and continuous density function over and also bounded variance

Given either assumption, the universal approximation theorems [51, 109] imply that the space is dense among the continuous functions with respect to the norm for any compact subset . Also, by Lemma 9.1, is dense in for any distribution .

The random feature functions (36) can be seen as a simplified form of neural networks, e.g. if we replace the parameter distribution by a finite sample set , then (36) becomes a 2-layer network with neurons and frozen first layer weights. Similarly, for the flow in Definition 3, if the ODE is replaced by a forward Euler scheme, then becomes a deep residual network whose layers share similar weights. Beyond the random feature functions, one can extend the analysis to the Barron functions [34, 11] and flow-induced functions [31], which are the continuous representations of 2-layer networks and residual networks.
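The correspondence between the flow of Definition 3 and residual networks can be sketched as follows. Assumptions of ours: the flow runs over the time interval $[0, 1]$, and the velocity field is a generic Python callable rather than a random feature function. Each Euler step is one residual block.

```python
import math

def flow_map_euler(x, velocity, num_steps):
    # Forward Euler for dx/dt = velocity(x, t) on the time interval [0, 1].
    # Each update x <- x + h * velocity(x, t) is one residual block; all blocks share the
    # same velocity field evaluated at nearby times, so neighboring layers have similar weights.
    h = 1.0 / num_steps
    for k in range(num_steps):
        x = x + h * velocity(x, k * h)
    return x

def velocity(x, t):
    # Hypothetical time-dependent velocity field; the exact flow map is x -> x * exp(1/2)
    return t * x

for depth in (10, 100, 10000):
    print(depth, abs(flow_map_euler(1.0, velocity, depth) - math.exp(0.5)))
```

As the number of blocks grows, the residual network approaches the exact flow map, with an error of order $1/\text{depth}$ for this first-order scheme.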

3.6 Training rule

The training of distribution learning models is very similar to that of supervised learning models: one chooses among the many variants of gradient descent and optimizes the function parameters. One exception is the GANs, whose losses are min-max problems of the form (30, 31) and are usually solved by performing gradient descent on the generator and gradient ascent on the discriminator [39].

For the theoretical analysis in this paper, we use the continuous time gradient descent. Specifically, given any loss over for some , we parametrize by the random feature function from Definition 1 and denote the loss by . Given any initialization , we define the trajectory by the dynamics

| (38) |

It follows that the function evolves by

| (39) |

where is the integral operator defined in (37). Similarly, given the empirical loss (35), we define the empirical training trajectory

| (40) |

By default, we use the zero initialization:

$$a_0 \equiv 0, \qquad (41)$$

or equivalently $f_{a_0} \equiv 0$.
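The passage from the parameter dynamics (38) to the function dynamics (39) can be checked numerically when the parameter distribution is replaced by finitely many atoms. In the minimal sketch below (all data and names hypothetical), one gradient step on the parameter function, with the gradient taken in $L^2$ of the uniform measure on the atoms, changes the function by exactly $-\eta K_Q(f - y)$, where $K_Q$ is the integral operator (37) over the data distribution $Q$ and the loss is $\frac{1}{2}\mathbb{E}_Q|f - y|^2$.

```python
import math

# Hypothetical finite parameter set standing in for the parameter distribution:
# six (w, b) pairs on the unit circle with ReLU activation, one-dimensional inputs.
features = [lambda x, c=c: max(math.cos(c) * x + math.sin(c), 0.0)
            for c in (0.3, 1.1, 2.0, 2.9, 4.2, 5.5)]
m = len(features)
xs = [-1.0, 0.0, 0.5, 1.0]        # support of the data distribution Q
qs = [0.25, 0.25, 0.25, 0.25]     # weights of Q
ys = [0.2, -0.1, 0.4, 0.3]        # regression targets; loss L(f) = 0.5 * E_Q |f - y|^2

def f_of(a, x):
    # f_a(x) = (1/m) * sum_j a_j * sigma_j(x)
    return sum(aj * phi(x) for aj, phi in zip(a, features)) / m

def kernel(x, z):
    # k(x, z) = (1/m) * sum_j sigma_j(x) * sigma_j(z)
    return sum(phi(x) * phi(z) for phi in features) / m

def parameter_step(a, lr):
    # One step in parameter space; the L^2 gradient is g_j = E_Q[(f_a(x) - y) * sigma_j(x)]
    res = [f_of(a, x) - y for x, y in zip(xs, ys)]
    g = [sum(q * r * phi(x) for q, r, x in zip(qs, res, xs)) for phi in features]
    return [aj - lr * gj for aj, gj in zip(a, g)]

def kernel_step(a, x, lr):
    # The induced step in function space: f(x) - lr * E_{x' ~ Q}[k(x, x') * (f(x') - y(x'))]
    res = [f_of(a, xp) - y for xp, y in zip(xs, ys)]
    return f_of(a, x) - lr * sum(q * kernel(x, xp) * r for q, xp, r in zip(qs, xs, res))

a0 = [0.5, -1.0, 0.3, 0.8, -0.2, 0.1]
a1 = parameter_step(a0, 0.7)
for x in (-0.7, 0.2, 1.3):
    print(f_of(a1, x), kernel_step(a0, x, 0.7))
```

Because the map from the parameter function to the function is linear, the same identity holds at every explicit Euler step, which is why the continuous-time parameter and function dynamics describe the same trajectory.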

3.7 Test error

For our theoretical analysis, given a modeled distribution and target distribution , we measure the test error by either the Wasserstein metric or KL-divergence . As discussed in Section 1, exhibits the curse of dimensionality, while KL is stronger than . Thus, they are capable of detecting memorization and can distinguish the solutions that generalize well.

In addition, one advantage of the metric is that it can be related to the regression loss.

Proposition 3.2 (Proposition 21 of [127]).

Given any base distribution and any target distribution , for any

So effectively, the test error is the error with the closest target generator.
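The statement that the test error is the regression error with the closest target generator can be checked in a discrete one-dimensional setting: if the base distribution is uniform on $n$ points and the target is uniform on $n$ points, the admissible target generators are the permutations of the target points, and the smallest regression error equals the squared $W_2$ distance, which in 1D is obtained by sorting. The values below are hypothetical.

```python
from itertools import permutations

def w2_squared_sorted(gen_values, target_values):
    # Squared W2 between uniform empirical measures of equal size in 1D: match sorted values
    g, t = sorted(gen_values), sorted(target_values)
    return sum((a - b) ** 2 for a, b in zip(g, t)) / len(g)

def closest_target_generator_error(gen_values, target_values):
    # Smallest regression error E|G - G_*|^2 over target generators G_* that push the uniform
    # base onto the uniform target, i.e. over permutations of the targets (brute force, small n)
    n = len(gen_values)
    return min(sum((g - t) ** 2 for g, t in zip(gen_values, perm)) / n
               for perm in permutations(target_values))

gen_values = [0.3, -1.2, 2.0, 0.9, -0.4]     # hypothetical values G(z_i) at the base points
target_values = [1.0, -0.5, 0.1, 2.5, -2.0]  # hypothetical target sample
print(w2_squared_sorted(gen_values, target_values),
      closest_target_generator_error(gen_values, target_values))
```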

One remark is that these test losses are only applicable to theoretical analysis. In practice, we only have a finite sample set from , and the curse of dimensionality becomes an obstacle to meaningful evaluation.

4 Universal Approximation Theorems

It is not surprising that distribution learning models equipped with neural networks satisfy the universal approximation property in the space of probability distributions. The significance is that the models in general have the capacity for memorization. This section confirms that the universal approximation property holds for all three distribution representations introduced in Section 3.2. Since our results are proved with the random feature functions (Definitions 1 and 3), they hold for more expressive function parametrizations such as 2-layer and deep neural networks.

For the free generator representation, the following result is straightforward.

Proposition 4.1.

In particular, can approximate the empirical distribution .

4.1 Potential representation

Consider the potential-based representation (5). Let be any compact set with positive Lebesgue measure, let be the space of distributions with continuous density functions, and let the base distribution be uniform over .

4.2 Flow-based free generator

For the normalizing flows, we have seen in Sections 3.4 and 3.5 the two common approaches for modeling the generator $G$, i.e. continuous-time flow (2) or concatenation of simple diffeomorphisms. Both approaches have an apparent issue: they do not satisfy the universal approximation property among functions. Since $G$ is always a diffeomorphism, it cannot approximate, for instance, functions that are overlapping (non-injective) or orientation-reversing (such as and ). Hence, the approach of Proposition 4.1 is not applicable.

4.3 Fixed generator

For the fixed generator representation, we analyze the normalizing flow with stochastic interpolants (15) instead of the score-based diffusion models (16), since the former has a simpler formulation. As the target velocity field has been specified, we show a stronger result than Propositions 4.1 and 4.3, in that we can simultaneously bound the test error and the training loss.

Proposition 4.4 (Proposition 3.2 of [125]).

5 Memorization

The previous section has shown that the distribution learning models, from all known distribution representations, satisfy the universal approximation property. In particular, they are capable of approximating the empirical distribution and thus have the potential for memorization. This section confirms that memorization is inevitable for some of the models. Specifically, we survey our results on the universal convergence property, that is, the ability of a model to converge to any given distribution during training. We believe that this property holds for other models as well, and it should be satisfied by any desirable model for learning probability distributions.

5.1 Bias-potential model

Recall that the bias-potential model is parametrized by potential functions (5) and minimizes the loss (28). For any compact set with positive Lebesgue measure, let the base distribution be uniform over . Parametrize by random feature functions , and define the training trajectory by continuous time gradient descent (39) on with any initialization . Denote the modeled distribution by . Similarly, let be the training trajectory on the empirical loss (35) and denote its modeled distribution by .

Proposition 5.1 (Lemma 3.8 of [126]).

Given Assumption 3.1, for any target distribution , if has only one weak limit, then converges weakly to

Corollary 5.2 (Memorization (Proposition 3.7 of [126])).

Given Assumption 3.1, the training trajectory can only converge to the empirical distribution . Moreover, both the test error and the norm of the potential function diverge

In the setting of Proposition 5.1, a limit point always exists, but we have to exclude the possibility of more than one limit point, e.g. the trajectory may converge to a limit cycle. We believe that with a more refined analysis one can prove that such exotic scenarios cannot happen.

5.2 GAN discriminator

As will be demonstrated in Section 7, the training and convergence of models with the free generator representation are in general difficult to analyze. Thus, we consider the simplified GAN model from [127] in which the transport representation is replaced by a density function .

Consider the GAN loss (23), and parametrize the discriminator by . Equivalently, we set the penalty term to be the RKHS norm and the loss (23) becomes

| (42) |

where is the kernel function from Definition 2. This loss is an instance of the maximum mean discrepancy [41]. Model the density as a function in , and define the training trajectory by continuous time gradient descent

| (43) |

where . Let be the nearest point projection from to the convex subset . We measure the test error by .
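The loss (42) is an instance of the maximum mean discrepancy. For a finite set of hypothetical ReLU features on one-dimensional data (our choice, for illustration only), the squared MMD can be computed either from the kernel or from the feature means, and the two agree; the sketch does not fix the constant in front of (42), which depends on the penalty in (23).

```python
import math

def make_features(num):
    # Hypothetical finite feature layer: (w, b) equally spaced on the unit circle, ReLU activation
    angles = [2 * math.pi * (j + 0.5) / num for j in range(num)]
    return [(math.cos(c), math.sin(c)) for c in angles]

def phi(w, b, x):
    return max(w * x + b, 0.0)

def mmd_squared_features(xs, px, ys, py, feats):
    # Squared MMD as the mean over features of (E_P phi_j - E_Q phi_j)^2
    total = 0.0
    for w, b in feats:
        mean_p = sum(p * phi(w, b, x) for x, p in zip(xs, px))
        mean_q = sum(q * phi(w, b, y) for y, q in zip(ys, py))
        total += (mean_p - mean_q) ** 2
    return total / len(feats)

def mmd_squared_kernel(xs, px, ys, py, feats):
    # Same quantity with k(u, v) = mean_j phi_j(u) phi_j(v): E k(x, x') - 2 E k(x, y) + E k(y, y')
    def k(u, v):
        return sum(phi(w, b, u) * phi(w, b, v) for w, b in feats) / len(feats)
    def cross(us, pu, vs, pv):
        return sum(a * c * k(u, v) for u, a in zip(us, pu) for v, c in zip(vs, pv))
    return cross(xs, px, xs, px) - 2 * cross(xs, px, ys, py) + cross(ys, py, ys, py)

feats = make_features(16)
xs, px = [-1.0, 0.0, 1.0], [0.3, 0.4, 0.3]   # hypothetical modeled distribution
ys, py = [-0.5, 1.5], [0.5, 0.5]             # hypothetical target sample
print(mmd_squared_features(xs, px, ys, py, feats), mmd_squared_kernel(xs, px, ys, py, feats))
```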

6 Generalization Error

Although Sections 4 and 5 have demonstrated that distribution learning models have the capacity for memorization, this section shows that solutions with good generalization are still achievable. For the four classes highlighted in Table 1, we show that their models escape from the curse of dimensionality with either early stopping or regularization. Specifically, their generalization errors scale as where are absolute constants, instead of dimension-dependent terms such as .

These results rely on one of two forms of regularization:

• Implicit regularization: As depicted in Figure 3 (Left), the training trajectory comes very close to the hidden target distribution before eventually turning towards the empirical distribution.

• Explicit regularization: Analogous to the above picture, we consider some regularized loss with strength . With an appropriate regularization strength, the minimizer becomes very close to the hidden target .

The mechanism underlying both scenarios is that the function representations of the models are insensitive to the sampling error . Thus, we resolve the seeming paradox between good generalization and the inevitable memorization.

Without a good function representation, this behavior cannot be guaranteed. For instance, as argued in [127], if a distribution is trained by Wasserstein gradient flow (i.e. without any function parametrization) on the empirical loss

and if the initialization is in , then the training trajectory follows the geodesic that connects and . Since the Wasserstein manifold has positive curvature [3], the geodesic in general does not come close to the hidden target , as depicted in Figure 3 (Right).

In the following five subsections, we survey our results on three models that have rigorous proofs, and then analyze two models with heuristic calculations.

6.1 Bias-potential model

We start with the bias-potential model (28) since its loss enjoys the strongest convexity, which makes the arguments the most transparent.

Consider the domain with base distribution . Let be potential functions trained on the population loss (28) and the empirical loss (35) respectively, using continuous time gradient descent (39). Denote their induced distributions (5) by .

Theorem 6.1 (Theorem 3.3 of [126]).

Corollary 6.2.

Given the condition of Theorem 6.1, if we choose an early-stopping time such that

then the test error satisfies

Hence, the generalization error escapes from the curse of dimensionality.

The two terms in the upper bound (44) are the training error and generalization gap. The former is a consequence of convexity, while the latter follows from the observation that the landscapes of and differ very little

where the term is the Rademacher complexity and scales as . Then, remain close during training

which confirms the depiction in Figure 3 (Left).

Meanwhile, the bias-potential model also generalizes well in the explicit regularization setting. Here we consider the Ivanov and Tikhonov regularizations [57, 114, 84].

Proposition 6.3 (Proposition 3.9 of [126]).

Given Assumption 3.1, assume that the target is generated by a potential . Let be the minimizer of the regularized loss

where is any constant such that . For any , with probability over the sampling of , the distribution generated by the potential satisfies

Proposition 6.4.

Given the condition of Proposition 6.3, for any , let be the minimizer of the regularized loss

With probability over the sampling of , the distribution generated by satisfies

6.2 Normalizing flow with stochastic interpolants

Consider the normalizing flow with stochastic interpolants (33), and model the velocity field and generator by Definition 3. Denote by the training trajectories on the population and empirical losses (33, 35) using gradient flow (39, 40). Denote the generated distributions by and .

First, we bound the generalization gap.

Theorem 6.5 (Theorem 3.4 of [125]).

Next, to estimate the generalization error, we need a sharper norm to bound the training error.

Definition 4 (Proposition 2.4 of [125]).

Given any distribution , let be the integral operator (37) over . Given Assumption 3.2, Proposition 2.4 of [125] implies that is a symmetric positive compact operator, and thus has an eigendecomposition with positive eigenvalues and eigenfunctions , which form an orthonormal basis of . Define the subspace with the following norm

where the coefficients are from the decomposition . Furthermore, we have and thus .

Theorem 6.6 (Theorem 3.7 of [125]).

In short, the generalization error scales as

and thus with early-stopping , the model escapes from the curse of dimensionality

The condition may seem strict, so we present the following corollary that holds for general target distributions.

6.3 GAN

Similar to Section 5.2, we consider the simplified GAN model such that the modeled distribution is represented by a density function . We show that the discriminator alone is sufficient to enable good generalization.

With the discriminator modeled by , the GAN loss (23) becomes the maximum mean discrepancy in (42). Consider the training trajectory defined by (43). Similarly, define the empirical loss and empirical training trajectory by

Fix some initialization . We measure the test error by , where is the nearest point projection onto .

Theorem 6.8 (Theorem 2 of [127]).

Given Assumption 3.1, for any target density function such that , with probability over the sampling of ,

It follows that with an early stopping time , the generalization error scales as and escapes from the curse of dimensionality.

Part of this result can be extended to the usual case with a generator. As shown in [127], if we consider the GAN loss , then the generator is insensitive to the difference between the landscapes of the population loss and empirical loss ,

The mechanism is that the sampling error is damped by .

Furthermore, we can model the generator as a random feature function, , and consider the population trajectory and empirical trajectory , which are trained respectively on with continuous time gradient descent (39, 40) and with the same initialization. Assuming that the activation is , the difference grows slowly as

Note that the sampling error is damped twice by both and .

Finally, we remark that the generalization error of GANs has been studied in several related works using other kinds of simplified models, for instance when the generator is a linear map [38], a one-layer network (without hidden layer, of the form for ) [121, 69], or a polynomial with bounded degree [71].

6.4 Score-based diffusion model

As a further demonstration of the techniques from the previous sections, this section presents an informal estimation of the generalization error of the score-based diffusion model (32). By heuristic calculations, we derive a bound in the implicit regularization setting that resembles Theorem 6.6.

Model the score function by . Let be the training trajectories on the population loss (32) and empirical loss (35) using gradient flow (39, 40) with zero initialization . Model the generators by the reverse-time SDE (19) with scores . Denote the generated distributions by and .

For any target distribution , denote the target score function by with given by (18). By inequality (34),

Assume that . Then, by convexity (see for instance [126, Proposition 3.1])

Meanwhile, by the growth rate bound from [125, Proposition 5.3],

Then, using a calculation analogous to the proof of [125, Theorem 3.4]

Combining these inequalities, we obtain

Hence, if we ignore the approximation error due to finite , the generalization error with early stopping scales as and escapes from the curse of dimensionality.

6.5 Normalizing flow

This section presents an informal estimation of the generalization error of the normalizing flow model (29). We conjecture an upper bound in the explicit regularization setting that resembles Proposition 6.3.

Let the velocity field be modeled by , let be the flow map from Definition 3, and define the reverse-time flow map for ,

Let be the population and empirical losses (29, 35). Let the base distribution be the unit Gaussian . For any target distribution such that for some , and for any , consider the problem with explicit regularization:

Let be a minimizer. It follows that

Then,

Denote the two terms by the random variables . Using the techniques of [31, Theorem 2.11] and [46, Theorem 3.3] for bounding the Rademacher complexity of flow-induced functions, one can try to bound the following expectations

Meanwhile, for the random fluctuations , one can apply the extension of McDiarmid’s inequality to sub-Gaussian random variables [67], and try to show that, with probability over the sampling of ,

Combining these inequalities, one can conjecture that the solution satisfies

7 Training

This section studies the training behavior of distribution learning models, and illustrates the differences between the three distribution representations discussed in Section 3.2. On one hand, we survey our results on the global convergence rates of models with the potential representation and fixed generator representation. On the other hand, we present new results on the landscape of models with the free generator representation, and analyze the mode collapse phenomenon of GANs.

7.1 Potential and fixed generator

Models with these two representations are easier to analyze since their losses are usually convex over abstract functions. Specifically, this section considers the bias-potential model (28) and normalizing flow with stochastic interpolants (33):

If we choose a convex function representation for , then the optimization problem becomes convex.

To estimate the rate of convergence, one approach is to bound the test error by a stronger norm. The following toy example shows how to bound the error by the RKHS norm .

Example 1 (Kernel regression).

Fix any base distribution and assume that the activation is bounded. For any target function , consider the regression loss . Parametrize by and train the parameter function by continuous time gradient descent (38) with initialization . Then,

Proof one.

Denote the loss by . Since is bounded, has continuous Fréchet derivative in , so the gradient descent (38) is well-defined. Choose such that and . Define the Lyapunov function

Then,

By convexity, for any ,

Hence, . We conclude that . ∎

Proof two.

Since evolves by (38), evolves by (39):

| (45) |

where is the integral operator (37) over . Since is symmetric, positive semidefinite and compact, there exists an eigendecomposition with non-negative eigenvalues and eigenfunctions that form an orthonormal basis of . Consider the decomposition

It is known that the RKHS norm satisfies [93, 26]

Since by assumption, (45) implies that . Hence,

∎
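The mechanism of Example 1 can be reproduced with a finite stand-in for the parameter distribution (eight hypothetical sigmoid features, so the activation is bounded) and a base distribution uniform on ten points. The sketch runs forward Euler on (45) and checks two properties: the loss never increases, and at time $t$ it is at most $\|a_*\|^2/(2t)$, where $a_*$ is a parameter function representing the target. This is the standard estimate for gradient descent on a convex, smooth loss, the kind of bound a Lyapunov argument as in Proof one delivers; we state it for this discretized setting only.

```python
import math

def sigmoid(u):
    return 1.0 / (1.0 + math.exp(-u))

# Hypothetical finite stand-in for the parameter distribution: eight (w, b) pairs
params = [(3 * math.cos(c), 3 * math.sin(c)) for c in (0.2, 0.9, 1.7, 2.6, 3.4, 4.4, 5.3, 6.0)]
m = len(params)
xs = [i / 9 for i in range(10)]   # base distribution: uniform on ten points
n = len(xs)

# Feature matrix and kernel matrix k(x_i, x_j) = (1/m) sum_l sigma_l(x_i) sigma_l(x_j)
F = [[sigmoid(w * x + b) for w, b in params] for x in xs]
K = [[sum(u * v for u, v in zip(F[i], F[j])) / m for j in range(n)] for i in range(n)]

# Target f* = f_{a*}; its squared parameter norm in L^2 of the uniform measure on the atoms
a_star = [1.0, -2.0, 0.5, 1.5, -1.0, 0.0, 2.0, -0.5]
target = [sum(a * s for a, s in zip(a_star, F[i])) / m for i in range(n)]
norm_sq = sum(a * a for a in a_star) / m

def loss(f):
    # L(f) = 0.5 * E_P |f - f*|^2
    return 0.5 * sum((fi - ti) ** 2 for fi, ti in zip(f, target)) / n

def kernel_gradient_flow(t_end, dt=0.01):
    # Forward Euler for df/dt = -K_P (f - f*), starting from f = 0
    f = [0.0] * n
    history = [loss(f)]
    for _ in range(int(round(t_end / dt))):
        r = [fi - ti for fi, ti in zip(f, target)]
        f = [f[i] - dt * sum(K[i][j] * r[j] for j in range(n)) / n for i in range(n)]
        history.append(loss(f))
    return f, history

t_end = 5.0
f_final, history = kernel_gradient_flow(t_end)
print(history[0], history[-1], norm_sq / (2 * t_end))
```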

Using similar arguments, we have the following bounds on the test error of models trained on the population loss.

Proposition 7.1 (Bias-potential, Proposition 3.1 of [126]).

Given the setting of Theorem 6.1, the distribution generated by the potential satisfies

Proposition 7.2 (NF, Proposition 3.5 of [125]).

Given the setting of Theorem 6.6, the distribution generated by the trajectory satisfies

Corollary 7.3 (NF, Corollary 3.6 of [125]).

It seems probable that the term can be strengthened to , which would imply universal convergence, i.e. convergence to any target distribution.

7.2 Free generator: Landscape

Models with the free generator representation are more difficult to analyze, since the loss is not convex in . For instance, it is straightforward to check that if and are uniform over , then the solution set , or equivalently the set of minimizers of , is an infinite and non-convex subset of , and thus is non-convex.

Despite the non-convexity, there is still hope for establishing global convergence through a careful analysis of the critical points. For instance, one can conjecture that there are no spurious local minima and that the saddle points can be easily avoided. This section offers two results towards this intuition: a characterization of critical points for general loss functions of the form , and a toy example in which global convergence can be determined from the initialization.

In general, no matter how we parametrize the generator, the modeled distribution satisfies the continuity equation during training: Assume that is in and , where is some Hilbert space. Then, given any path that is in , for any smooth test function ,

where the velocity field is defined by

for any test function . Thus, is a weak solution to the continuity equation

In particular, the equation implies that no matter how is parametrized, the “particles” of during training can only move continuously without jumps or teleportation.

For abstraction, it is helpful to consider the Wasserstein gradient flow [101, 3]: For any loss function over and any initialization , define the training trajectory by

where is the first variation, which satisfies

for any bounded and compactly-supported density function .

The Wasserstein gradient flow abstracts away the parametrization of the generator, and thus simplifies the analysis of critical points. To relate to our problem, the following result shows that the Wasserstein gradient flow often shares the same critical points as the parametrized loss , and thus we are allowed to study the former instead.

Proposition 7.4 (Comparison of critical points).

Given any loss over , assume that the first variation exists, is , and for all . Define the set of critical points

Consider any base distribution and any generator parametrized by , where is a Hilbert space. Assume that is Lipschitz in for any , and in for any , and that the gradient at any is a continuous linear operator . Define the set of generated distributions and the set of critical points

Then, . Furthermore, if the parametrization is the random feature functions , and either Assumption 3.1 or 3.2 holds, then

Below is an example use case of this proposition.

Example 2.

Consider the GAN loss in (42) induced by discriminators that are random feature functions (36). Assume that the target distribution is radially symmetric, the parameter distribution in (36) is radially symmetric in conditioned on any , and that the activation is . Consider the point mass . Then,

Thus, for almost all , and . It follows from Proposition 7.4 that is a critical point () for any parametrized generator that can express the constant zero function.

A probability measure is called singular if cannot be expressed as a density function (). The critical points of an expectation-based loss are often singular distributions such as from the previous example. The following toy model shows that the critical points may consist of only global minima and saddle points that are singular.

Consider a one-dimensional setting. Model the generator by any function , where the base distribution is conveniently set to be uniform over . Given any target distribution and any initialization , consider the dynamics

| (46) |

where is the conditional distribution of the optimal transport plan between and . By Brenier’s theorem [117], since is absolutely continuous, the optimal transport plan is unique.

Note that if is also absolutely continuous, then Brenier’s theorem implies that the transport plan is deterministic: for some convex potential . Since the first variation of is exactly the potential [101], the dynamics (46) when restricted to becomes equivalent to the gradient flow on the loss
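In one dimension the optimal transport map is the monotone rearrangement, so a particle version of such dynamics is easy to write down. The sketch below is our own discretization, not the paper's code: the generator is represented by its values at finitely many base points, each particle moves toward the target point of the same rank, and we assume the relevant loss is $\frac{1}{2}W_2^2$. Because the ordering of the particles is preserved, the $W_2$ distance shrinks by exactly a factor $1 - \Delta t$ per step.

```python
import math

def w2_sorted(xs, ys):
    # W2 between uniform empirical measures of equal size in 1D (match sorted values)
    a, b = sorted(xs), sorted(ys)
    return math.sqrt(sum((u - v) ** 2 for u, v in zip(a, b)) / len(a))

def ot_flow_step(particles, targets, dt):
    # Move each particle toward the target of the same rank (the 1D optimal transport map T),
    # i.e. one explicit step of x' = T(x) - x
    order = sorted(range(len(particles)), key=lambda i: particles[i])
    sorted_targets = sorted(targets)
    new = list(particles)
    for rank, i in enumerate(order):
        new[i] = particles[i] + dt * (sorted_targets[rank] - particles[i])
    return new

particles = [0.1, 0.4, 0.2, 0.9, 0.6]    # hypothetical values G(u_i) of the generator
targets = [-1.0, 2.0, 0.5, 1.5, 0.0]     # hypothetical target sample
dt, steps = 0.1, 30
w0 = w2_sorted(particles, targets)
for _ in range(steps):
    particles = ot_flow_step(particles, targets, dt)
print(w2_sorted(particles, targets), (1 - dt) ** steps * w0)
```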

Since the dynamics (46) is not everywhere differentiable, we consider stationary points instead of critical points, and extend the definition of saddle points: A stationary point is a generalized saddle point if for any , there exists a perturbation () such that .

Proposition 7.5.

For any target distribution , the stationary points of the dynamics (46) consist only of global minima and generalized saddle points. If is a generalized saddle point, then is a singular distribution, and there exists such that . Moreover, global convergence holds

if and only if the initialization .

This toy example confirms the intuition that, despite the nonconvexity of the loss, global convergence is still achievable. Moreover, all saddle points have one thing in common: part of the mass has collapsed onto a single point.

7.3 Free generator: Mode collapse

The previous section has presented a general study of the critical points of models with free generator, while this section focuses on a particular training failure that is common to GANs, the mode collapse phenomenon. Mode collapse is characterized as the situation when the generator during training maps a positive amount of mass of onto the same point [100, 66, 77, 90], and is identified as the primary obstacle for GAN convergence. This characterization is analogous to the saddle points from Proposition 7.5 that are also singular distributions. Although in the setting of Proposition 7.5 the singular distributions can be avoided and the toy model enjoys global convergence, how mode collapse occurs in practice remains a mystery.

To provide some insight into the mode collapse phenomenon, we use toy examples to demonstrate two mechanisms that can lead to mode collapse.

Denote by the uniform distribution over the interval . Let the base and target distributions be . Model the generator by , and discriminator by for some to be specified. Consider the following GAN loss based on (23)

where is the strength of regularization. Train by continuous time gradient flow

with initialization and . Mode collapse happens when becomes a singular distribution, i.e. when .

Case one. This example shows that a non-differentiable discriminator can lead to mode collapse. Set . Restricting to the half space , the loss and training dynamics become

Assume that . Then, the unique solution is given by

At time , we have

Thus, if , then the trajectory must hit the line at some , and thus mode collapse happens.

This process is depicted in Figure 4 (Left). In general, one can conjecture that mode collapse may occur at the locations where is discontinuous.
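A one-dimensional caricature of this squeezing effect, chosen by us for illustration rather than taken from Case one, is given by particles following the velocity field $-\mathrm{sign}(x)$, i.e. gradient descent on $|x|$, whose derivative jumps at $0$. Starting from the uniform distribution on $[-1,1]$, every particle with $|x_0|\le t$ has reached $0$ by time $t$, so an atom of mass $t$ forms at the discontinuity. The helper `flow_nonsmooth` is hypothetical and uses the exact solution of this ODE.

```python
import numpy as np


def flow_nonsmooth(x0, t):
    """Exact solution at time t of dx/dt = -sign(x) (gradient flow of |x|).

    Each particle moves toward 0 at unit speed and stays there once it
    arrives, since the velocity field is discontinuous at 0.
    """
    x0 = np.asarray(x0, dtype=float)
    return np.sign(x0) * np.maximum(np.abs(x0) - t, 0.0)


rng = np.random.default_rng(0)
x0 = rng.uniform(-1.0, 1.0, size=100_000)   # absolutely continuous initial law

for t in (0.0, 0.25, 0.5, 1.0):
    xt = flow_nonsmooth(x0, t)
    atom = np.mean(xt == 0.0)               # mass sitting exactly at the point 0
    print(f"t = {t:.2f}: mass collapsed onto 0 = {atom:.3f}")
```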

Case two. This example shows that the accumulation of numerical error due to the finite learning rate can lead to mode collapse. This result is analogous to [79], which shows that if is a point mass, numerical error can cause it to diverge to infinity.

Set . The loss and training dynamics become

Given a learning rate , consider the discretized training dynamics

for every
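The effect of a finite learning rate on oscillatory min-max dynamics is already visible in a hypothetical bilinear game $\min_x\max_y xy$, which is not the loss of Case two. Its continuous-time flow $\dot x=-y,\ \dot y=x$ conserves $x^2+y^2$, whereas simultaneous gradient descent-ascent multiplies the radius by exactly $\sqrt{1+\eta^2}$ per step, because the update matrix [[1, -η], [η, 1]] equals $\sqrt{1+\eta^2}$ times a rotation. The function name `gda_bilinear` is ours.

```python
import math


def gda_bilinear(x, y, lr, steps):
    """Simultaneous gradient descent (in x) and ascent (in y) on f(x, y) = x * y."""
    for _ in range(steps):
        # Both updates read the previous iterate: df/dx = y, df/dy = x.
        x, y = x - lr * y, y + lr * x
    return x, y


lr, steps = 0.1, 100
x, y = gda_bilinear(1.0, 0.0, lr, steps)
radius = math.hypot(x, y)
predicted = (1.0 + lr**2) ** (steps / 2)
print(radius, predicted)   # equal up to rounding; the continuous flow would keep radius 1
```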

In general, given discrete dynamics of the form , one can fit the sequence by the continuous-time solution of an ODE , where for some function . Assume that the two trajectories match at each ,

It follows that

Plugging in , we obtain the following approximate ODE

Define the energy function

Then

Thus, if ,

The energy is strictly convex and its only minimizer is the stationary point where . Assume that the initialization and . Since the energy is non-decreasing, , and thus the trajectory will never enter the set . Since is an open set that contains , there exists such that the open ball . Since , the trajectory satisfies

and thus

Consider the four subsets , , , that partition the space . Then, the trajectory repeatedly moves from to to to and back to . In particular, it crosses the line at a sequence of times tending to infinity. It follows that

Hence, converges to exponentially fast, and then numerical underflow would lead to mode collapse. This process is depicted in Figure 4 (Right).
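The approximate ODE above is an instance of backward error analysis: for the explicit Euler scheme $z_{k+1}=z_k+\eta f(z_k)$, the first-order modified equation is $\dot z=f(z)-\tfrac{\eta}{2}f'(z)f(z)$. The sketch below checks this numerically on a hypothetical linear field $f(z)=Az$, with $A$ the rotation generator of the bilinear game $\min_x\max_y xy$ (not the loss of Case two). Since $A^2=-I$, the modified field is $(A+\tfrac{\eta}{2}I)z$, whose solutions grow like $e^{\eta t/2}$ and track the Euler iterates, while the original flow keeps $|z|$ constant. The helpers `euler` and `rk4` are ours; RK4 with a small step stands in for the exact ODE solution.

```python
import numpy as np

A = np.array([[0.0, -1.0], [1.0, 0.0]])   # f(z) = A z: descent in x, ascent in y for x*y


def euler(z0, lr, steps):
    """Explicit Euler, i.e. simultaneous gradient descent-ascent with step lr."""
    z = np.array(z0, dtype=float)
    for _ in range(steps):
        z = z + lr * (A @ z)
    return z


def rk4(field, z0, t_end, n):
    """Classical Runge-Kutta integration, used here as a near-exact ODE solver."""
    z = np.array(z0, dtype=float)
    h = t_end / n
    for _ in range(n):
        k1 = field(z)
        k2 = field(z + 0.5 * h * k1)
        k3 = field(z + 0.5 * h * k2)
        k4 = field(z + h * k3)
        z = z + (h / 6.0) * (k1 + 2.0 * k2 + 2.0 * k3 + k4)
    return z


lr, steps = 0.05, 400
t_end = lr * steps
z_euler = euler([1.0, 0.0], lr, steps)
z_orig = rk4(lambda z: A @ z, [1.0, 0.0], t_end, 4000)
# First-order modified field f - (lr/2) f' f, with f' = A for a linear field.
z_mod = rk4(lambda z: A @ z - 0.5 * lr * (A @ (A @ z)), [1.0, 0.0], t_end, 4000)

print("Euler        |z| =", np.linalg.norm(z_euler))
print("original ODE |z| =", np.linalg.norm(z_orig))
print("modified ODE |z| =", np.linalg.norm(z_mod))
print("distance to Euler:", np.linalg.norm(z_euler - z_orig), np.linalg.norm(z_euler - z_mod))
```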

In summary, these two examples indicate two possible causes for mode collapse. On the one hand, if the discriminator is non-smooth, then the gradient field may squeeze the distribution at the places where is discontinuous and thus form a singular distribution. On the other hand, the numerical error due to the finite learning rate may amplify the oscillatory training dynamics of and , and if this error is stronger than the regularization on , then the norm of can diverge and lead to collapse.
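The competition between discretization error and regularization can be made explicit on another hypothetical toy, again not the Case two loss: $\min_x\max_y\, xy-\tfrac{\lambda}{2}y^2$, where $\lambda>0$ regularizes the discriminator variable $y$. The continuous flow $\dot x=-y,\ \dot y=x-\lambda y$ always spirals into the origin, but one step of simultaneous gradient descent-ascent with rate $\eta$ is the matrix M = [[1, -η], [η, 1 - ηλ]] with $\det M=1+\eta(\eta-\lambda)$. For $\lambda<2$ its eigenvalues are complex with modulus $\sqrt{\det M}$, so the iterates diverge exactly when $\eta>\lambda$. The helper names are ours.

```python
import numpy as np


def gda_regularized(lr, reg, steps, x=1.0, y=0.0):
    """Simultaneous GDA on L(x, y) = x*y - (reg/2)*y**2 (min over x, max over y)."""
    for _ in range(steps):
        x, y = x - lr * y, y + lr * (x - reg * y)
    return float(np.hypot(x, y))


def spectral_radius(lr, reg):
    """Largest eigenvalue modulus of the one-step GDA update matrix."""
    m = np.array([[1.0, -lr], [lr, 1.0 - lr * reg]])
    return float(np.max(np.abs(np.linalg.eigvals(m))))


for lr, reg in [(0.05, 0.1), (0.1, 0.05)]:
    print(f"lr={lr}, reg={reg}: rho={spectral_radius(lr, reg):.5f}, "
          f"|z| after 2000 steps={gda_regularized(lr, reg, 2000):.3g}")
```

In this toy, shrinking the learning rate below the regularization strength is enough to restore convergence.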

Note, however, that if we consider two-time-scale training such that is always the maximizer, then the loss becomes proportional to or , and training converges to the global minimum as long as .
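For the hypothetical regularized bilinear loss $xy-\tfrac{\lambda}{2}y^2$ (our choice, not the Case two loss), the two-time-scale idea can be sketched by solving the inner maximization exactly, $y^\star=x/\lambda$, which leaves the convex loss $x^2/(2\lambda)$. Gradient descent on it contracts by the factor $1-\eta/\lambda$ per step, so it converges whenever $\eta<2\lambda$, including step sizes with $\lambda<\eta$, where one simultaneous descent-ascent step has determinant $1+\eta(\eta-\lambda)>1$ and spirals outward. The function name `two_time_scale` is ours.

```python
def two_time_scale(x, lr, reg, steps):
    """Gradient descent on x with the discriminator y solved exactly at each step.

    For L(x, y) = x*y - (reg/2)*y**2 the inner maximizer is y* = x/reg, and
    dL/dx at (x, y*) equals y*, the gradient of the reduced loss x**2/(2*reg).
    """
    for _ in range(steps):
        y_star = x / reg          # exact inner maximization
        x = x - lr * y_star       # x <- (1 - lr/reg) * x
    return x


# lr = 0.1 lies between reg = 0.08 and 2*reg = 0.16, so the factor is 1 - 1.25 = -0.25.
print(two_time_scale(1.0, lr=0.1, reg=0.08, steps=50))
```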

8 Discussion

This paper studied three aspects of the machine learning of probability distributions.

First, we proposed a mathematical framework for generative models and density estimators that allows for a unified perspective on these models. Abstractly speaking, the diversity of model designs mainly arises from one factor, the vertical or horizontal way of modeling discussed in Section 3.1. When applied to distribution representation, it leads to the options of potential representation and transport representation, and the latter ramifies into the free generator and fixed generator depending on whether a probabilistic coupling can be fixed. Similarly, when applied to the loss, it leads to three loss types, depending on whether the difference is measured vertically or horizontally, with or without a fixed target. The rest of the design process is then to realize each category in Table 1 while satisfying the various constraints of implementation, for instance, whether to compute the density of directly or indirectly, and which random path is chosen to achieve the product coupling . In this way, all the major models can be derived. By isolating the factors of distribution representation, loss type and function representation, this framework allows for a more transparent study of training and landscape (which depend more on distribution representation and loss type) and generalization error (which depends more on function representation).

Second, we studied the seeming conflict between the memorization phenomenon and the generalization ability of the models, and reviewed our results that resolve this conflict. On the one hand, we confirmed that the models satisfy the universal approximation property (some models even enjoy universal convergence), and thus memorization is inevitable. On the other hand, function representations defined by expectations are insensitive to the sampling error , so that the training trajectory tends to approximate the hidden target distribution before eventually diverging towards memorization. In particular, our results established generalization error bounds of the form with for several models. These rates can likely be improved, but they already show that the models escape the curse of dimensionality. Since this generalization ability is mostly an effect of the function representations, it seems reasonable to expect that good generalization is enjoyed by all models regardless of the choice of distribution representation and loss type.

Third, we discussed the training dynamics and loss landscape. For the potential and fixed generator representations, the convexity of their distribution parametrizations and loss functions enables estimates of the rates of global convergence. For the free generator representation, although the loss is non-convex, we demonstrated that the critical points have tractable forms, and we also identified two general mechanisms common to the min-max training of GANs that can provably lead to mode collapse. It seems worthwhile to devote more effort to the design of models with the fixed generator representation, since they are as expressive as the free generator models while their convexity greatly eases training. It is not yet clear whether the product coupling is the best choice for the fixed generators, or whether the diffusion SDE and linear interpolants are the most effective random paths, so there is much to explore.

To conclude, we list a few interesting topics that have not been covered in this paper:


•

Unstructured data: Our analysis was conducted only in the Euclidean space, whereas most of the applications of distribution learning models involve unstructured data such as images, texts and molecules. One thing of practical importance is that the performance of generative models for unstructured data is judged by human perception or perceptual loss [59], which can greatly differ from the Euclidean metric and thus the metric. To train the model to have higher fidelity, one approach is to use the adversarial loss of GANs such that the hidden features of the discriminators can be seen as an embedding space that captures fidelity. A related approach is to rely on a pretrained feature embedding as in [96, 53].


•

Prior knowledge: For supervised tasks involving unstructured data, it is often helpful to instill prior knowledge from humans into the models through self-supervised pretraining. A well-known example is the approximate invariance of image classification with respect to color distortion and cropping: models that are pretrained to be insensitive to these augmentations can achieve higher test accuracy after training [22, 42, 18]. Distribution learning models could likewise benefit from prior knowledge. For example, in [131] a generative model for sampling a thermodynamic system is designed to respect the spatial symmetry of the system.


•

Conditional generation: In practice, people are more interested in estimating conditional distributions, e.g. generating images conditioned on text descriptions [94, 96]. Incorporating a context variable can be done by simply allowing an additional input variable in the parameter functions [80], but it can also be accomplished with tricks that minimize additional training [107].


•

Factor discovery: For generative models, instead of using as a blackbox for sampling, it could be useful to train so that has semantic meaning, e.g. for image synthesis of faces, one coordinate of may control hair style and another may control head orientation. This unsupervised task is known as factor discovery [110], and some solutions are provided by [23, 48, 62] with application to semantic photo editing [74].


•

Density distillation: The basic setting considered in this paper is to estimate a target distribution given a sample set; yet, another task common to scientific computing is to train a generative model to sample from a distribution given a potential function . One popular approach [70, 131, 82, 17] is to use a modified normalizing flow with the reverse KL-divergence.

9 Proofs

9.1 Loss function

Proof of Proposition 3.1.

For any , the assumption on global minimum implies that

Define the map . Then,

Assume for contradiction that is nonconstant on , then there exist such that . Let be two disjoint hyperrectangles with volumes and . Define as the uniform distribution over , and as

Then the above inequality is violated. It follows that is constant and has the form . Finally, the assumption on global minimum implies that . ∎

9.2 Universal approximation theorems

Proof of Proposition 4.1.

The preceding proof uses the following lemma, which is a slight extension of the classical universal approximation theorem [52, 51] to cases with possibly unbounded base distributions . Such an extension is needed since in practice is usually set to be the standard Gaussian.

Proof.

It suffices to consider the case with output dimension . Denote by to emphasize the choice of the activation .

Given Assumption 3.1, the activation is ReLU. Define

where is a continuous distribution supported in . Since ReLU is homogeneous, can be expressed by (36) with some bounded parameter function , and thus . Similarly, given Assumption 3.2, the activation is sigmoid. Define

Since the parameter distribution is bounded below by some positive constant over the ball , the function can be expressed by (36) with bounded parameter function , and thus .

Hence, we always have and it is integrable (). Define the subspace of functions whose parameter functions are compactly supported. Since , it suffices to show that is dense in . Without loss of generality, we denote by . Since is , its Fourier transform is well-defined. Since is not constant zero, there exists a constant such that . Rescaling if necessary, we may assume that .

It suffices to approximate the subspace , which is dense in . Fix any . Its Fourier transform is integrable. Then, Step 1 of the proof of [52, Theorem 3.1] implies that,

For any , define the signed distribution by

Define the function by

If Assumption 3.2 holds, then define the parameter function by the compactly supported function

Then,

If Assumption 3.1 holds, then define by the following function over the sphere

Then,

Thus, we always have .

The approximation error is bounded by

Note that , and pointwise, and is integrable:

Hence, the dominated convergence theorem implies that , which completes the proof. ∎

Proof of Proposition 4.2.

Proof of Proposition 4.3.

It suffices to approximate the compactly supported distributions, which are dense with respect to the metric. Fix any compactly supported distribution . Choose such that the support is contained in . Assume that the base distribution has full support over . The case without full support will be discussed at the end.

By Brenier's theorem [117, Theorem 2.12], there exists a convex function over such that . For any , we can define the mollified function

where is a mollifier (i.e. is , non-negative, supported in the unit ball, and ). Then, is and convex, and

Thus, without loss of generality, we can assume that is .
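The mollification step can be checked numerically on a simple convex function that is not differentiable, $\phi(x)=|x|$ in one dimension (our example, not the in the proof). Averaging convex functions with non-negative weights preserves convexity, and since $|x|$ is 1-Lipschitz the mollified function stays within $\varepsilon$ of it. The sketch uses the standard bump $\exp(-1/(1-u^2))$ on $(-1,1)$ and approximates the convolution by a normalized Riemann sum, so it is a discretization rather than the exact $\phi*\rho_\varepsilon$; the helper names are ours.

```python
import numpy as np


def bump(u):
    """Unnormalized C-infinity bump function supported in the open interval (-1, 1)."""
    u = np.asarray(u, dtype=float)
    out = np.zeros_like(u)
    inside = np.abs(u) < 1.0
    out[inside] = np.exp(-1.0 / (1.0 - u[inside] ** 2))
    return out


def mollify(f, x, eps, n=2001):
    """Approximate (f * rho_eps)(x) by sum_j w_j f(x - y_j) with y_j in [-eps, eps]."""
    y = np.linspace(-eps, eps, n)
    w = bump(y / eps)
    w = w / w.sum()                           # non-negative weights summing to 1
    return np.array([np.dot(f(xi - y), w) for xi in np.asarray(x, dtype=float)])


eps = 0.1
x = np.linspace(-1.0, 1.0, 401)
phi_eps = mollify(np.abs, x, eps)

print(np.max(np.abs(phi_eps - np.abs(x))) <= eps)   # uniform closeness
print(np.min(np.diff(phi_eps, 2)) >= -1e-12)        # discrete convexity on the grid
print(phi_eps[200])                                 # > 0: the kink at x = 0 is smoothed
```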

Define the time-dependent transport map for

which is in and , and define the distributions , which are known as McCann's displacement interpolation. Then, define the vector field

The Jacobian of is positive definite for

so the inverse function theorem implies that the inverse exists and is over , with