A Learning Framework for -bit Quantized Neural Networks toward FPGAs

Abstract

The quantized neural network (QNN) is an efficient approach for network compression and can be widely used in the implementation of FPGAs. This paper proposes a novel learning framework for -bit QNNs, whose weights are constrained to the power of two. To solve the gradient vanishing problem, we propose a reconstructed gradient function for QNNs in back-propagation algorithm that can directly get the real gradient rather than estimating an approximate gradient of the expected loss. We also propose a novel QNN structure named -BQ-NN, which uses shift operation to replace the multiply operation and is more suitable for the inference on FPGAs. Furthermore, we also design a shift vector processing element (SVPE) array to replace all 16-bit multiplications with SHIFT operations in convolution operation on FPGAs. We also carry out comparable experiments to evaluate our framework. The experimental results show that the quantized models of ResNet, DenseNet and AlexNet through our learning framework can achieve almost the same accuracies with the original full-precision models. Moreover, when using our learning framework to train our -BQ-NN from scratch, it can achieve state-of-the-art results compared with typical low-precision QNNs. Experiments on Xilinx ZCU102 platform show that our -BQ-NN with our SVPE can execute 2.9 times faster than with the vector processing element (VPE) in inference. As the SHIFT operation in our SVPE array will not consume Digital Signal Processings (DSPs) resources on FPGAs, the experiments have shown that the use of SVPE array also reduces average energy consumption to 68.7% of the VPE array with 16-bit.

Index Terms:

Deep learning, quantized neural network (QNN), deep compression, FPGAI Introduction

Deep convolutional neural networks (CNNs) have substantially become the dominant Artificial Intelligence (AI) approach for a variety of computer vision tasks such as image classification [1, 2, 3], face recognition [4, 5], semantic segmentation [6, 7] and object detection [8, 9]. The significant accuracy improvement of CNNs brings with the cost of huge computational complexity, resource, and power consumption as it requires a comprehensive estimation of all the scopes within the feature maps [10, 11]. For example, the AlexNet model is over 200 MB, and the VGG-16 model is over 500 MB [10]. Towards such overwhelming resources and computation pressure, hardware accelerators such as GPUs, FPGAs, and ASICs have been applied to accelerate CNNs. Among these accelerators, FPGAs have emerged as one of the popular solutions when considering both the reprogramability and energy efficiency.

Implementing CNN on FPGAs is not an efficient practice due to limited resources and bandwidth. Thus QNN is a good choice for FPGAs implementation, which simultaneously gives consideration to computational efficiency, resources and classification accuracy in inference. In general, QNNs can be achieved in two ways: An estimator is used to estimate the gradient of the expected loss to solve the problem of gradient vanishing so that QNNs can be trained from scratch with the help of this estimator. Fine-tuning on a pretrained full-precision model obtains QNNs that bypasses the problem of gradient vanishing. Although the first method estimates a gradient, which makes it possible to train QNNs from scratch, the gradient of expected loss obtained by estimators has a noise source compared to the real gradient that causes a gap on classification accuracy between the QNNs and full-precision CNNs. The second method fine-tunes QNNs on a pretrained full-precision model that solves the problem of classification accuracy better, but a challenging factor is that the structure of QNNs is limited by the original structure of the pretrained CNNs model, and the structure of QNNs cannot be flexibly adjusted. Due to the constraints of computational resources and computational efficiency on FPGAs, it is inevitable to adjust the network structure for the hardware environment. In order to transform different CNNs into QNNs that can run efficiently on FPGAs, it is essential for a general learning framework to solve the above two challenges [12, 13, 14, 15].

In this paper, we propose a novel learning framework for -bit QNNs, whose weights are constrained to the power of two . We introduce a reconstructed gradient function in back-propagation algorithm that can directly get the real gradient, rather than the estimated gradient given by estimators. Thus the QNNs trained by our framework will be more accurate. At the same time, QNNs after adjusting the structure can continue to fine-tune with our framework. The learning framework is applied to train our proposed -BQ-NN, which is suitable for efficient implementation on FPGAs. We also evaluate the effectiveness of our approach on state-of-the-art networks such as ResNet [16], DenseNet [17] and AlexNet [1]. The main contributions of this article are summarized as follows:

-

1.

We propose a novel learning framework for -bit QNNs. In this framework, we propose a reconstructed gradient function in back-propagation algorithm, which can overcome the gradient vanishing problem during training the QNNs and can calculate the accurate gradient compared with the estimators based approaches. We achieve state-of-the-art results compared with typically low-precision QNNs.

-

2.

We propose a highly efficient QNN structure called -BQ-NN for FPGAs. Our proposed architecture, which consists entirely of convolutional layers and implements a uniform convolution kernel, can maximize the resource utilization and improve the parallel computational efficiency on FPGAs while preserving the accuracy of QNNs.

-

3.

We propose a novel shift vector processing element (SVPE) array for FPGAs, which replaces the multiplication with the SHIFT operation when calculating convolution operation on FPGAs. The computational efficiency of our SVPE array can achieve a performance of 2.9 times higher than that of the VPE array in the case of the same network structure on FPGAs.

The rest of this paper is organized as follows: Section II summarizes related prior works on QNNs and FPGAs. Our learning framework is presented in Section III. In Sections IV, we demonstrate the effectiveness of our learning framework via comparable experiments. We theoretically analyse and practically test the computational efficiency of our -BQ-NN using our quantization method in Section V. The conclusion is given in Section VI.

II Related work

II-A Learning for QNNs

Since the amount of the model capacity is too large, it is necessary to cut down it to perform CNNs on FPGAs, which is consistent with the purpose of deep compression. In general, deep compression can be divided into three categories, i.e., pruning, Huffman coding, and quantization. The pruning method will simplify the deep neural network by cutting off the network connections with small weights on the normal trained network [18] [19] [20]. The Huffman coding method is an optimal code used for lossless data compression [21] which uses entropy to encode source symbols by variable-length codewords. Han et al. [20] show that 20% - 30% of the network storage will be saved after Huffman coding the non-uniformly distributed values. When considering perform compressed networks on FPGAs, the network after pruning is an asymmetric structure, which is unsuitable for hardware implementation, and the Huffman coding may only be regarded as a post-compression combined with the other two compression methods, so most of the hardware accelerators will focus on the quantization method.

The quantization based method normally employ the low-precision weights, varied from 1 bit to 5 bits, to represent the CNNs [15, 22, 23, 24, 25, 26, 27, 28]. Some studies train QNNs from scratch by estimating the gradient of expected loss based on Straight-Through Estimator [15, 23, 22, 27]. For example, Courbariaux et al. [15] train a classification neural network from scratch with 1-bit weight and activation, which can run 7 times faster than the CNNs. Choi et al. [23] propose a neural quantization scheme called Parameter Clipping Activation, which uses a parameter to find the optimal quantization scale for arbitrary bit width activations. Choi et al. [22] introduce a novel technique called Statistics-Aware Weight Binning, which finds the optimal scaling factor based on statistical characteristics of the distribution of the weights to minimize the quantization error. The QNNs trained by the above quantization methods only accelerate the inference, Zhou et al. [27] propose a DoReFa-Net that can accelerate both training and inference by low bit width weights, activations and gradients respectively. However, these estimator-based methods have a noise compared to the real gradient. Thus these QNNs can’t achieve an ideal classification accuracy, especially on multi-classification datasets such as CIFAR-100.

Some other quantization methods are dedicated to design special strategies to fine-tune QNNs, which will not rely on the backpropagation algorithm and can bypass the problem of gradient vanishing [29, 26, 28]. They can achieve a much better accuracy as they are independent of estimators. For example, Park et al. [29] propose precision highway that has an end-to-end high-precision information flow for ultra-low-precision computation. This linear weight quantization method is based on the assumption that the weight distribution is the Laplace distribution. Recently, Zhou et al. [26] propose an incremental network quantization method, which converts pretrained full-precision CNNs model into a low-precision model where the weights are constrained to the power of two or zero. It has been studies that there will be little loss on the classification accuracies when using 2-5 bits low-precision weight [27, 26]. However, these quantization methods will depend on the pretrained network structure rather than the backpropagation algorithm, which will be difficult to satisfy the network-structure-optimization requirements due to the hardware limitation.

II-B CNNs Implemented by FPGAs

Considering the inference, the CNNs have a highly hierarchical structure of multiple feature maps, whose structure exposes a large amount of parallelism that makes CNNs very suitable for FPGAs implementation. This structure builds on the accumulation of a huge number of convolutions that will consume a huge number of floating-point resources on FPGAs. In addition, the structure of CNNs often contains many convolutional layers. Thus the convolution module with different parameters needs to be executed iteratively during the inference. Frequent execution of data caching and parameter loading will be limited by the bandwidth. Therefore, in many studies, their hardware structures of CNNs are designed mainly for the two bottlenecks of floating-point resources and bandwidth [12, 17, 16, 13].

In terms of optimizing for floating-point resources, Lu et al. [30] design a fast Winograd algorithm, which can decrease the use of floating-point resources on FPGAs and reduces the complexity of convolution dramatically. Simultaneously, they also give the formula for estimating the computational efficiency, which demonstrates that the fast Winograd algorithm is more efficient than conventional convolutional algorithm due to the use of fewer floating-point resources on FPGAs. Meloni et al. [13] present an accelerator configuration for CNNs that reaches more than 97% DSP resource utilization at 150 MHz operating frequency with 16-bit precision. And they show that the floating-point resource utilization is the highest when executing 33 filters on FPGAs.

Other studies have focused on optimizing the data scheduling structure to reduce the impact of the bandwidth. For example, Sankaradas et al. [14] implement a vector processing element (VPE) array coprocessor, which can accelerate the CNNs by optimizing the cache between distributed off-chip memory banks and on-chip computing elements on FPGAs. Peemen et al. [12] show that their scheduler prefers to use only convolutional layers without fully connected layers on FPGAs, which can maximize the efficiency of on-chip memories by reducing the impact of the bandwidth bottleneck.

The crucial issue with the above methods is that they usually only consider the bottleneck at a single level and fail to coordinate these two constraints to improve the computational efficiency of the hardware accelerators. In this paper, we reduce the impact of the above two constraints by introducing the QNNs into FPGAs, which provides a new idea to deal with the above two bottlenecks. Since the weights in our QNNs are quantized to the power of two, the quantized weights directly reduce the bandwidth required to load the weights. In addition, the use of the quantized weights can translate the multiplication into shifting in convolution module, which greatly reduces the use of floating-point resources.

III -BQ-NN

III-A Fundamental Idea of Our -BQ-NN

The main idea of our -BQ-NN is based on Fig.1, which shows the information loss led by the quantization method with the power of can be interpreted as the sampling loss caused by non-uniform sampling. In fact, the weights of CNNs with large absolute values will be dominant to the overall classification accuracy of the networks, although these weights with large values only account for a small ratio among all the weights [11, 31]. For an arbitrary probability density function of the weights in a neural network, denoted as , we can use the blue curve in Fig.1 to represent which meets . In this way, we can calculate the sampling loss which can be represented as the area between two distributions (red and blue curves) in Fig.1. By calculating, the sampling loss is represented as the following recursive formula, where is the quantized bit width of the weights.

| (1) |

It can be seen from the above formula that the sampling loss always decreases as the increasing of quantized bit width of the weights, which indicates that the sampling loss is negatively related to the quantized bit width of weights. However, the bit width is limited and needs to reduce as much as possible in QNNs. Thus, finding the best balance between quantized bit width and the sampling loss is the key to balancing the performance, speed, and resources of QNNs. We define the as the variation between two sampling losses, and , from Eq.1. Then, we can prove that will approach to zero in our quantization method with the power of , which can be ensured by the Theorem 1. Therefore, continuing to increase the quantized bit width of after is not helpful to decrease the sampling loss.

Theorem 1

Proof:

From the hardware perspective, the resource consumption of SHIFT operation is much less than multiplication, so our intention is to use the SHIFT operation instead of multiplication. Considering that the shift right operation will make the weights exceed the constraint range of (,), thus, all SHIFT operations are shift left and every quantized weight is chosen from the entries , where indicates its multiplication can be calculated by and indicates that no operations are required. Our -BQ-NN quantizes the weights to the entries, which are encoded to -bit and suitable for hardware computation. Under such circumstance, the staircase function can be used to describe our -bit quantized weights as Eq.4 (typically, is greater than , and is degraded to if is equal to ), where are full-precision weights.

| (4) |

Here is taken from to in turn, where and is the sign function:

| (5) |

III-B Gradients Computation in -BQ-NN

In order to facilitate the discussion as follows, we need to define some variables first, where represents the weight that connects the -th neuron of the -th layer to the -th neuron of the -th layer, represents the bias of the -th neuron of the -th layer, represents the input of the -th neuron of the -th layer (), represents the output of the -th neuron of the -th layer (), and is activation function.

We also have to add a extra quantized weight so that we can train our -BQ-NN, where the quantized weight is shown as follows,

| (6) |

And the cost function of mini-batch of samples in our -BQ-NN is,

| (7) |

Where is the input sample, is the actual classification, is the prediction output, and is the maximum number of layers in the network.

By defining as the error produced by the -th neuron of the -th layer, we can use the back-propagation algorithm to calculate the gradient and update the parameters according to the following three steps111 represents the Hadamard product that is used for point-to-point product between matrices or vectors..

-

-

Calculating the error of the last layer of the network.

(8) -

-

Calculating the error of each layer of the network from the back to the front.

(9) -

-

Calculating the gradient of weight and bias respectively.

(10) (11)

In the above process of deriving the entire back-propagation, except for the gradient of weight of the last step, the other steps are well-defined. Based on the Eq.11, the gradient of weight can be calculated as follows,

| (12) |

Where is exactly the , which is the derivative of . And this derivative satisfies the conditions of Dirac Delta Function . According to the properties of , can be calculated as follows,

| (13) |

Substituting into Eq.12, we discover that model cannot be trained by back-propagation algorithm due to gradient vanishing.

To resolve the above problem, we reconstruct the quantized weight function as Eq.14 to ensure that the weights can be updated by using the back-propagation algorithm as shown in Fig.2, the blue full line, where is an adjustable parameter in the range of .

| (14) |

By substituting Eq.14 into Eq.11, we can recalculate the gradient of weight as follows again with ,

| (15) |

At this point, we have reconstructed the quantized weight function as Eq.14 to solve the gradient vanishing, but the weights cannot be quantized to the entries directly as Eq.6. However, we can prove that the reconstructed quantized weight function will approximate to the entries after several iterations, which can be ensured by the Theorem 2.

Assumption. Since the algorithm needs to be iterated, our problem needs to be discussed within the framework of the series. We define as an iteration of , is equivalent to , and the value of is chosen from .

Theorem 2

In the framework of the series, will approach to when the number of iterations is sufficient, when is the number of iterations.

Proof:

The general terms of series from to are written as follows based on Eq.14,

| (16) |

We let , then we get the equation as follows,

| (17) | ||||

As the number of iterations increases, will approach . With the guarantee of Theorem 2, the above equation can be rewritten as (namely, ) when the number of iterations is enough () and is in the range of . In the actual algorithm implementation, it is only necessary to iterate through several steps following the training process, and the networks can be quantized completely as Eq.6. ∎

The design of in our reconstructed quantized weight function takes three aspects into consideration: First, the designed function must satisfy the Theorem 2. Second, our reconstructed function indicates that the ratio of between quantized weights and full-precision weights can be used to adjust the information ratio of between quantized weights and full-precision weights in the training process. Third, on the other hand, is the slope of our reconstructed function shown as the blue full line in Fig.2, which can be used to change the gradient descent rate of back-propagation based on Eq.15 during the training.

III-C Posterior-Distribution Adjustment

In the initialization of the networks, the initialization modes MSRA and Xavier [32] which will adjust variance based on the number of inputs are prone to converge than the traditional Gaussian distribution initialization mode with fixed variance in DNNs. Inspiring by this fact, we suspect that adjusting the distribution of quantized weights may make it easier for us to train our -BQ-NN. Here, we consider that full-precision networks are prone to converge than quantized networks; thus, we prefer to keep the distribution of quantized weights consistent with full-precision weights’. Comparing the probability density function before quantization (its corresponding expectation and variance are and , respectively) and the probability density function after quantization (as Eq.4), we make their expectation and variance equal respectively so that their distribution is consistent as follows,

| (18) |

The original full-precision probability density function and the value of quantized weight function are fixed, so we can only adjust the value range of to meet Eq.18.

III-D The Training Algorithm for -BQ-NN

In actual training algorithm for -BQ-NN, the Batch Normalization (BN [31]) is added in our -BQ-NN because it is conducive to reduce the overall impact of the weight scale and accelerate the training. Thus, we will derive the back-propagation algorithm for -BQ-NN with BN and give the training algorithm in this subsection.

First, we define four variables of BN, where represents the variance of all samples of a batch, represents the sample mean, and are the scale variation coefficients. Due to the existence of BN, the bias term can be ignored, so the input of the neuron is re-expressed as , the normalized input of the neuron is , and the output of the neuron is . Then, we can calculate the error and the gradient, based on the discussion of Section III-B, according to the following three steps.

-

-

Counting the mean and variance of the sample, and calculating the gradient of them.

(19) (20) (21) -

-

Calculating the error of the network.

(22) -

-

Calculating the gradient of weight, , and respectively.

(23) (24) (25)

With the foundation of the above formulas, we can propose our training algorithm for -BQ-NN, as indicated in Algorithm 1. This algorithm covers two learning modes: training from scratch and fine-tuning on the pretrained model, where the first mode means the weights are randomly initialized and the second mode means the weights are initialized by the pretrained full-precision network model. The overall quantization process is illustrated as Fig.3. The code for training algorithm is available 222https://github.com/papcjy/n-BQ-NN.

III-E Activation Quantization in -BQ-NN

The above discussion is all about the quantization of weights. To take the integrity of our -BQ-NN and the necessity of subsequent ablation experiments into consideration, we need to discuss the quantization of activations in this subsection. Now, let’s put our eyes back on Section III-B. In the case of the quantized activations, the output of the -th neuron of the -th layer can be rewritten as,

| (26) |

Where is activation function.

At this point, we have encountered the same problem, the error of network becomes zero due to the existence of , when the Eq.26 is substituted into Eq.8 and Eq.9.

Considering the expectation of , the error of network has reappeared, which is guaranteed by Theorem 3.

Theorem 3

Let us define where follows Eq.26 that is chosen from , then, we get a new expression as

| (27) |

Where is the noise source that influences , means the expectation over , and is a constant.

Proof:

Under the Theorem 3, we can re-express the error of network and quantize the activations in our -BQ-NN by rewriting the Eq.8 and Eq.9 as follows,

| (33) |

| (34) | ||||

IV Experiment

| type | patch size/stride | output size |

| conv quantized | 33/1 | 3232128 |

| conv quantized | 33/1 | 3232128 |

| conv quantized | 33/1 | 3232128 |

| pool | 22/2 | 1616128 |

| conv quantized | 33/1 | 1616256 |

| conv quantized | 33/1 | 1616256 |

| conv quantized | 33/1 | 1616256 |

| pool | 22/2 | 88256 |

| conv quantized | 33/1 | 88512 |

| conv quantized | 11/1 | 881024 |

| conv quantized | 11/1 | 8810 (100) |

| pool | 88 | 1110 (100) |

| softmax | classifier | 1110 (100) |

In our experiments, we use three network structures ResNet, DenseNet and AlexNet. The network structure of our -BQ-NN ( can take ) is similar to the architecture of All-CNN [33] that consists solely of convolution layers and Network in Network block [34]. Table I details the parameter settings and our network architecture. In the following experiments, our training algorithm is used to train the model from scratch or fine-tune on the full-precision model in five benchmark datasets MNIST, SVHN, CIFAR-10, CIFAR-100 and ImageNet. We unfold our experiments from 4 dimensions, respectively classification accuracy compared with low-precision QNNs, quantization errors by our training method, compression ratio in different datasets, and convergence speed compared with BNN.

IV-A MNIST

IV-B CIFAR

The two CIFAR datasets [37] consist of natural color images with 3232 pixels, respectively 50,000 training and 10,000 test images, and we hold out 5,000 training images as a validation set from the training set. CIFAR-10 (C10) consists of images organized into 10 classes and CIFAR-100 (C100) into 100 classes. We adopt a standard data augmentation scheme (random corner cropping and random flipping) that is widely used for these two datasets [38, 39, 40, 34, 41, 36, 33, 38]. We normalize the images using the channel means and standard deviations in preprocessing.

IV-C SVHN

The SVHN dataset [42] consists of color images of house numbers collected by Google Street View with 3232 pixels, organized into 10 classes (0 to 9). There are 73,257 images in the training set, 531,131 images for additional training, and 26,032 images in the test set respectively. We divide the pixel values by 255.0 so that they are in the [0,1] range as [43]. Moreover, we do not preprocess the dataset following common practice without data augmentation [44, 39, 34, 36, 45].

IV-D Experiment Results

IV-D1 -bit

| Model | Bit-width | Test error |

| ResNet-110 ref | 16 | 6.61% |

| -BQ-ResNet | 5 | 7.04% |

| -BQ-ResNet | 4 | 7.07% |

| -BQ-ResNet | 3 | 7.15% |

| -BQ-ResNet | 2 | 8.76% |

| -BQ-ResNet | 1 | 10.52% |

As the theoretical analysis in Section III, different quantized bit width brings different sampling loss, and the larger bit width means the less sampling loss. Thus, in this experiment, we evaluate the test error rates of our -BQ-ResNet that is fine-tuned on full-precision ResNet-110 when takes different values on CIFAR-10. The experimental results from Table II are consistent with Eq.1. Therefore, the choice of 3-bit is better because is close to as Eq.3 when considering both the sampling loss and the conciseness of weight representation. Obviously, this result is also experimentally proved by works in [26]. Thus, our -BQ-NN is chosen as T-BQ-NN when in the subsequent experiments.

IV-D2 Accuracy and Capacity

| Test error | ||||||

| Method | Depth | Params | CIFAR-10 | CIFAR-100 | SVHN | MNIST |

| Network in Network [34] | 9 | 1.9M | 8.81% | 35.68% | 2.35% | 0.53% |

| All-CNN [33] | 9 | 1.4M | 7.25% | 33.71% | ∗3.17% | ∗0.63% |

| Highway Network [38] | 19 | 2.3M | 7.72% | 32.39% | % | 0.67% |

| BNN [15] | 9 | 1.7M | 11.40% | % | 2.80% | 0.96% |

| Round Quantization | 9 | 1.2M | 85.88% | 98.90% | 83.72% | 80.55% |

| T-BQ-NN | 9 | 1.2M | 7.59% | 28.90% | 2.29% | 0.50% |

| Network | Depth | Bit-width | Params | Test error(%) | |

| CIFAR-10 | ResNet | 110 | 16 | 1.7M | 6.61 |

| T-BQ-ResNet | 110 | 3 | 0.3M | 7.15 (+0.54) | |

| DenseNet | 100 | 16 | 0.8M | 4.51 | |

| T-BQ-DenseNet | 100 | 3 | 0.15M | 5.31 (+0.80) | |

| CIFAR-100 | ResNet | 110 | 16 | 1.7M | 35.87 |

| T-BQ-ResNet | 110 | 3 | 0.3M | 37.56 (+1.69) | |

| DenseNet | 100 | 16 | 0.8M | 22.27 | |

| T-BQ-DenseNet | 100 | 3 | 0.15M | 24.10 (+1.83) | |

| SVHN | ResNet | 110 | 16 | 1.7M | 3.13 |

| T-BQ-ResNet | 110 | 3 | 0.3M | 3.25 (+0.12) | |

| DenseNet | 100 | 16 | 0.8M | 1.76 | |

| T-BQ-DenseNet | 100 | 3 | 0.15M | 2.10 (+0.34) |

| Method | Encoding bit-width | Compression ratio |

| T-BQ-ResNet on C10 (P+Q) | 3 | 49 |

| T-BQ-ResNet on C10 (P+Q+H) | 2.6 | 57 |

| T-BQ-ResNet on C100 (P+Q) | 3 | 25 |

| T-BQ-ResNet on C100 (P+Q+H) | 2.8 | 27 |

| T-BQ-ResNet on SVHN (P+Q) | 3 | 24 |

| T-BQ-ResNet on SVHN (P+Q+H) | 2.8 | 26 |

| T-BQ-DenseNet on C10 (P+Q) | 3 | 38 |

| T-BQ-DenseNet on C10 (P+Q+H) | 2.5 | 46 |

| T-BQ-DenseNet on C100 (P+Q) | 3 | 15 |

| T-BQ-DenseNet on C100 (P+Q+H) | 2.8 | 16 |

| T-BQ-DenseNet on SVHN (P+Q) | 3 | 133 |

| T-BQ-DenseNet on SVHN (P+Q+H) | 2.1 | 190 |

| -bit Weights | -bit Activations | Inference Operation | AlexNet Top-1 Accuracy | AlexNet Top-5 Accuracy |

| 1 | 1 | XNOR | 0.279 (BNN) | 0.504 (BNN) |

| 1 | 1 | XNOR | 0.348 | 0.601 |

| 1 | 32 (float) | XNOR ADDER | 0.368 (BC) | 0.620 (BC) |

| 1 | 16 (float) | XNOR ADDER | 0.486 | 0.734 |

| 2 | 32 (float) | XNOR ADDER | 0.529 (TWN) | 0.766 (TWN) |

| 2 | 16 (float) | XNOR ADDER | 0.536 | 0.777 |

| 3 | 16 (float) | SHIFT ADDER | 0.560 | 0.795 |

| 8 (float) | 8 (float) | MAC | 0.530 (DoReFa-Net) | 0.768 (DoReFa-Net) |

| 32 (float) | 32 (float) | MAC | 0.566 | 0.802 |

As hardware devices require relatively simple architecture and less number of layers, we have selected some network suited for hardware implementation as our comparative experiment. For example, BNN with binary weights and activations replaces most multiplications by 1-bit XNOR operations. Network in Network utilizes the global average pooling over feature maps in the classification layer, which is less prone to overfitting than the fully connected layers. All-CNN achieves a new architecture that consists solely of convolution layers replacing max-pooling by a convolution layer without loss in accuracy on several benchmarks. Highway Network allows unimpeded information flow across many layers using adaptive gating units to regulate the information flow.

There is a general manifestation that T-BQ-NN performs better than most other network structures, while these network structures have never been quantized except BNN. In the experiment here, T-BQ-NN is trained by our training algorithm from scratch due to the lack of pretrained model, and this model is trained with a mini-batch size of 50, a weight decay of 0.0001. Its test error rates of 7.59 % on CIFAR-10, 28.9 % on CIFAR-100, 2.29 % on SVHN, and 0.5 % on MNIST are lower than the test error rates achieved by Network in Network, Highway Network, and BNN. Particularly, T-BQ-NN makes up for the classification accuracy of BNN on CIFAR-100 to some extent. The best result for all listed datasets is T-BQ-NN except CIFAR-10 is All-CNN, and all results are shown in Table III.

Our model capacity is even more encouraging: the number of parameters of T-BQ-NN is significantly lower than other network structures. Particularly, T-BQ-NN achieves the number of parameters of 1.2M that is even lower than 1.7M of BNN shown in Table III.

IV-D3 Extension

One positive-effect of our training algorithm is universal. We popularize our training method to the better and deeper architectures, not just limited to CNNs, such as ResNet [16] and DenseNet [17]. In the experiment here, T-BQ-ResNet and T-BQ-DenseNet are 3-bit that are fine-tuned by our training algorithm based on the full-precision model of ResNet and DenseNet.

For T-BQ-ResNet, all the multiplications are converted to SHIFT and ADDER operations using 3-bit weights in all convolutional layers and shortcut connections. We use a momentum of 0.9, weight decay of 0.0001 [49, 44], and adopt the weight initialization and BN [31, 32] without dropout [50]. This model is trained with a mini-batch size of 128 and a learning rate of 0.1, divided by 10 at 32k and 38k iterations, and terminates training at 64k iterations. We achieve the test error rates of 7.15% on C10, 37.56% on C100, and 3.25% on SVHN using T-BQ-ResNet, just rises 0.54% on C10, 1.69% on C100, and 0.12% on SVHN compared with ResNet on the basis of Table IV.

For T-BQ-DenseNet, its model consists of Bottleneck layers indicated to BN-ReLU-Conv(1 1)-BN-ReLU-Conv(3 3) and transition layers indicated to BN-ReLU-Conv(1 1)-averpool(2 2), and both of these layers contain 1x1 convolution. We use a weight decay of 0.0001, momentum of 0.9 [51], and adopt the weight initialization and BN without dropout. This model is trained with an initial learning rate of 0.1, divided by 10 at 50% and 75% of the total number of training epochs. And we train using a batch size of 64 for 300 and 40 epochs respectively on CIFAR and SVHN. Comparing between DenseNet and T-BQ-DenseNet, the increasing in error is 0.80% from 4.51% to 5.31% on C10, 1.83% from 22.27% to 24.10% on C100, and 0.34% from 1.76% to 2.10% on SVHN as Table IV.

We attribute this primarily to reduce the number of parameters approximately 5 times from 0.8M to 0.15M on T-BQ-DenseNet, and from 1.7M to 0.3M on T-BQ-ResNet is shown as Table IV. Furthermore, a hybrid network compression solution combined with three different techniques, respectively network pruning [19], quantization and Huffman coding is tested for T-BQ-ResNet and T-BQ-DenseNet in a scene with the same classification accuracy. Comparing with the original full-precision ResNet-110 model, we achieve the compression ratio of 57 on C10, 27 on C100 and 26 on SVHN for T-BQ-ResNet. For T-BQ-DenseNet, the compression ratio is 46 on C10, 16 on C100 and 190 on SVHN shown as Table V.

IV-D4 Convergence Speed

In this experiment, we train our T-BQ-NN and BNN from scratch on C10, C100, and SVHN. The results in Fig.4 indicate that T-BQ-NN not only has better performance on classification accuracy than BNN but also converges much faster. We just only compare our method with BNN, because the weights of other network models in Table III are full-precision and these models are not quantized except BNN and T-BQ-NN. We use the same conditions, including learning rate, batch size, and iterations, to test the error rates of BNN and T-BQ-NN at first epoch. Comparing with BNN, T-BQ-NN reaches the best test error dropping from 500 to 150 epochs on C10, from 1000 to 100 epochs on MNIST, and from 1000 to 180 epochs on C100. As a result, T-BQ-NN can be trained much easier and faster than BNN.

This result may be due to the fact that straight-through estimator used by BNN contains noise, which causes the unexpected deviation, while our training algorithm is based entirely on back-propagation without the effect of noise and weight representation is more abundant.

IV-E ImageNet

We further evaluate our -BQ-NN on ILSVRC2012 [11] image classification dataset that consists of 1.2 million high-resolution natural images where the validation set contains 50k images. These images are organized into 1000 categories of the object for training, which are resized to 224224 pixels before fed into the network. In the next experiments, we report our single-crop evaluation results using top-1 and top-5 accuracy.

AlexNet: This CNN architecture is the first structure that shows to be successful on ImageNet classification task, which consists of 5 convolutional layers and 2 fully-connected layers [1]. We use AlexNet coupled with BN that contains a total of 61M parameters.

In training, images are resized randomly to 256256 pixels, and then a random crop of 224224 is selected for training. We train our -BQ-NN for 50 epochs with batch size of 128/16 based on AlexNet/Vgg-16. We use ADAM optimizer with the learning rate of 1e-4. We replace the Local Contrast Renomalization layer with Batch Normalization layer. At inference, we use the 224224 center crop for forward-propagation.

The ablation experiments are listed in Table VI. The baseline AlexNet model scores 56.6% top-1 accuracy and 80.2% top-5 accuracy that is reported in [52]. For ablation studies, we strictly control the consistency of variables, including network structure, bit width and quantized layers. The only difference is the quantization method. In experiments of “1-1” v.s. “1-1”, “1-16” v.s. “1-32” and “2-16” v.s. “2-32”, our -BQ-NN achieves 6.9%, 11.8% and 0.7% accuracy improvements respectively. For “3-16” v.s. “32-32”, our -BQ-NN only reduces the accuracy by 0.6%.

V Acceleration on FPGA

We evaluate our -BQ-NN on FPGA platform: Xilinx ZCU102, which consists of an UltraScale FPGA, quad ARM Cortex-A53 processors and 500 MB DDR3. To measure the performance of our -BQ-NN running on FPGA, we get the data of run-time, resource utilization, and power by simulating and testing on Vivado-2017 when the operating frequency is 200 MHz. Our -BQ-NN implementation involves a few design parameters, parallelization degree ( and ), filter size (), input feature maps width (), input feature maps height (), input feature channels (), and output feature channels ().

V-A Coprocessor Architecture

Fig.5 shows the block diagram of our system. In the CNN calculation process, the host computer hands off the weights and images to the coprocessor (ZCU102), and collects the predicted classification results. The transmission mode between host computer and ARM CPU can be switched in PCI or UDP. Before the computation, the host computer is responsible for feeding the images and reducing precision. In addition, the ARM CPU needs to complete the calculation of fully-connected layers that is not suitable for FPGA parallel acceleration and the FPGA accelerates the calculation of convolutional layers.

We build the coprocessor with parallel architecture, as shown in Fig.6. The critical part of the coprocessor is SVPE cluster interface that has SVPE clusters, where each SVPE cluster consists of SVPE arrays with a size of . The adders are used to compute partial sums of convolutions while the SVPE arrays compute convolutions. The fetch unit is programmed to fetch images and weights from ARM-based processing system (PS), and the load/store unit is used to load or store intermediate calculation results. The AXI-HP port is used to receive or send the data, and the AXI-GP port is used to receive or send the network structure information and the control signal. A key point to note is 16-bit computational accuracy acts on the data buses to save data bandwidth.

The basic design ideas continue the architecture of -BQ-NN, which converts all 16-bit weights as -bit weights to reduce memory usage and increase the parallelization degree. On FPGAs, due to the shortage of DSPs, this has become a major factor limiting the increase in parallelization degree that directly affects the ability to accelerate calculation for CNNs, because the multiplication in the convolution calculation needs to call DSPs. Instead, we implement the multiplication with SHIFT operation that consumes the Look-Up-Table (LUT) arrays on FPGAs, while the resource of LUTs is more abundant than DSPs’ [30]. In general, our -BQ-NN, which consists of 16-bit activations and -bit weights.

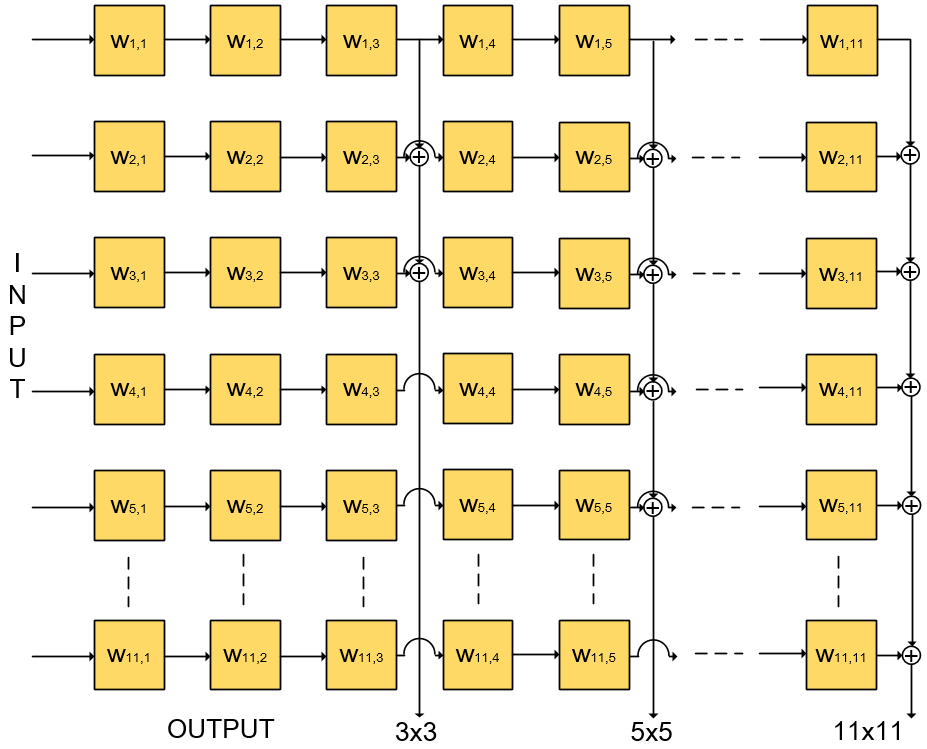

Our architecture of convolution computation is characterized by several key attributes compared with VPEs [14]. First, we organize the architecture as arrays of SVPEs, where the SVPE array is an array of 2D convolvers, each of which consists of connected SHIFT and ADDER units instead of Multiply Accumulate (MAC) units, shown in Fig.7. The weights and feature maps are loaded into each PE alternately by AXI-HP port. Before each calculation, the weights are buffered to the specified areas (the double orange rectangles in Fig.7), and then the pipeline calculation starts with the enablement of feature maps. Modeling the SVPE and VPE arrays, we compare their resource consumption, parallelization degree and power on FPGAs shown as Table VII, and our SVPE array achieves the average energy consumption of 3.81 W at different that is less than VPE array of 5.53 W. Second, we reduce banded off-chip data memory and improve the data movement between the SVPE clusters and the off-chip memory by using -bit weights. Third, all convolvers are homogeneous when is fixed as our primitive operator. We evaluate the improvement of the computational efficiency of -BQ-NN in hardware by the following section.

V-B Computational Efficiency

| Array | SVPE | VPE | |||||

| Precision (n) | 1 | 2 | 3 | 4 | 5 | 16 | |

| Power (W) | Signal | 1.94 | 2.31 | 2.61 | 2.53 | 2.13 | 4.88 |

| Logic | 1.03 | 1.33 | 1.55 | 1.63 | 1.62 | 0.25 | |

| DSPs | 0.08 | 0.09 | 0.09 | 0.08 | 0.06 | 0.40 | |

| Total | 3.05 | 3.73 | 4.25 | 4.24 | 3.81 | 5.53 | |

| Used Resource | LUTs | 353 | 280 | 307 | 334 | 346 | 41 |

| FFs | 220 | 226 | 232 | 238 | 244 | 213 | |

| DSPs | 3 | 3 | 3 | 3 | 3 | 12 | |

| Parallelization degree () | (8,32) | (4,16) | |||||

Since the filter size (33) is fixed for our -BQ-NN, resource utilization will be maximized. Here, we can predict the performance of -BQ-NN on FPGAs by developing an analytical model. In the following, we rely on it to compare computational efficiency between traditional implementation and -BQ-NN on FPGAs.

On the hardware, MAC unit, adder and multiplier will consume DSP. In fact, the number of DSPs only depends on the size of filter and parallelization degree [30, 12] as follows,

| (35) |

We must balance the memory bandwidth between the on-chip and off-chip memory and ensure that the speed of transmission is greater than or equal to the speed of computation for utilizing the resource efficiently. The formula of the time to process input data in the line buffer on FPGA is,

| (36) |

Where is the operating frequency of the FPGA. Together, we have to parallel the speed of transmission between input and output data as follows,

| (37) |

We require that . Therefore, we can get the minimum requirement of bandwidth is,

| (38) |

Where is the bit-width of computation, and we evaluate the performance of hardware acceleration choosing 16 bit-width. We define the as the time to load the first rows of input image and filter needed into on-chip memory as follows,

| (39) |

Where is the bit-width of the weights. The total operations are,

| (40) |

And the total processing time of the convolution is,

| (41) |

Finally, we can compare the computational efficiency of the different models defining the effective performance of convolution as,

| (42) |

We obtain the computational efficiency corresponding to different bit width of the weights where represents the bit width of our -BQ-NN.

| (43) |

Now, given a convolutional layer represented by (,,,), we get the computational efficiency based on design parameters (,,).

The main reason for restricting the computational efficiency of CNNs on FPGA is parallelization degree, which is directly related to DSPs when the setting of bandwidth is reasonable. To speed-up the inference of CNNs on FPGAs, we use our SVPE cluster to replace the traditional VPE cluster by converting the multiplications as the SHIFT and ADDER operations. Since we no longer use the multiplication, the amount of DSPs is reduced as follows,

| (44) |

Since SVPE array consumes much less DSPs than VPE array compared Eq.35 with Eq.44, -BQ-NN with SVPE array can get a larger amount of parallelization degree than CNNs with VPE array when the consumed DSPs are the same. Based on the maximum DSPs number of 2520 as Table VIII and the balanced memory bandwidth, we can design the maximum parallelization degree of (,) and (,) respectively on SVPE and VPE array with filters 33. Thanks to the SVPE array, the parallelization degree increases by 4 times to improve the computational efficiency greatly when the total consumed DSPs is 768. Supposing the color image is 32()32()3() pixels, filter size is 3, DDR bit width is 128, the computational efficiency of our -BQ-NN using SVPE array has improved by about 4.1 times compared with traditional network using VPE array on the basis of Eq.43.

V-C Performance on FPGA

We evaluate our SVPE cluster implementation using AlexNet, where our -BQ-NN contains 16-bit activations and 3-bit weights. Table VIII gives the evaluation results with the comparison of the state-of-the-art FPGA accelerators, where GOP indicates the unit of the number of operations. From the hardware perspective, we prefer to use the computational efficiency to describe the performance of the algorithm. Because the total operations of computing a network is fixed, we can get the execution time (s) by dividing the total operations (GOP) by the computational efficiency (GOP/s). Similar to the structure of Fig.7, a universal SVPE array designed by the largest filter size of AlexNet is proposed in Fig.8. This experiment will use the universal SVPE array that fits the full-size after a slight adjustment. Our array designs to be recycled when calculating the convolutions of different layers, and filter of AlexNet is only used in the first convolutional layer, so most of the array utilization is extremely low. This also confirms the necessity of designing the network architecture with unified filter to improve resource utilization as Table I.

Compared to prior works [53, 30], we improve the average CNN performance to 957.4 GOP/s where the work [30] is implemented by Winograd algorithm. The baseline is to implement the same hardware architecture as our implementation. The only difference is that it uses VPE cluster because its weights and activations are both 16-bit. The computational efficiency of our implementation has improved by 2.9 times compared with the baseline, which is slightly less than 4.1 times based on the theoretical calculations of the Section V-B. On the other hand, Our implementation also improves the energy efficiency to 48.9 GOP/s/W. The better energy efficiency and resource efficiency come from the novel SVPE structure.

| [53] | Baseline | [30] | Our Impl. | |

| Precision | 32bits fixed | 16bits fixed | 16bits fixed | 16bits fixed |

| Device | VX485T | ZCU102 | ZCU102 | ZCU102 |

| Freq(MHz) | 100 | 200 | 200 | 200 |

| Logic cell(K) | 485.7 | 600 | 600 | 600 |

| DSP | 2800 | 2520 | 2520 | 2520 |

| BRAM(Kb) | 206018 | 182418 | 182418 | 182418 |

| conv1(GOP/s) | 27.5 | 227.5 | 409.6 | 410.5 |

| conv2(GOP/s) | 83.8 | 535.8 | 1355.6 | 1744.3 |

| conv3(GOP/s) | 78.8 | 655.9 | 1535.7 | 1680.7 |

| conv4(GOP/s) | 77.9 | 634.4 | 1361.7 | 1739.4 |

| conv5(GOP/s) | 77.6 | 559.5 | 1285.7 | 1456.1 |

| CNN average (GOP/s) | 61.6 | 332.2 | 854.6 | 957.4 |

| Power(W) | 18.6 | 28.7 | 23.6 | 19.6 |

| DSP Efficiency (GOP/s/DSPs) | 0.022 | 0.131 | 0.339 | 0.381 |

| Logic cell Efficiency (GOP/s/cells/K) | 0.127 | 0.553 | 1.424 | 1.596 |

| Energy Efficiency (GOP/s/W) | 3.31 | 11.57 | 36.2 | 48.85 |

| DSP Utilization | 80% | 30% | 63% | 30% |

| LUT Utilization | 61% | 48% | 39% | 73% |

| FF Utilization | 34% | 42% | 33% | 68% |

| BRAM Utilization | 50% | 50% | 43% | 83% |

VI Conclusion and future work

In this paper, we present a novel learning framework to quantize full-precision CNN models into low-precision QNN models whose weights are constrained to the power of two. We solve the problem of gradient vanishing by adding a reconstructed gradient function into back-propagation algorithm. To satisfy the network-structure-optimization requirements for hardware limitation, we propose -BQ-NN, a novel QNN structure, to replace the multiplication with SHIFT operation whose structure is more suitable for the inference on FPGAs. Furthermore, we also design the SVPE array to replace all 16-bit multiplications with SHIFT operations in convolution operation on FPGAs. For proving the validity of our learning framework, we conduct experiments and show that the quantized models of ResNet, DenseNet and AlexNet through our learning framework can achieve almost the same accuracies with the original full-precision models. Moreover, when using our learning framework to train our -BQ-NN from scratch, it can achieve nearly state-of-the-art results compared with typically low-precision QNNs. We also evaluate the computational efficiency and energy consumption by implementing our QNNs models on Xilinx ZCU102 platform. In our hardware experiments, our -BQ-NN with our SVPE can execute 2.9 times faster than with the VPE in inference, and the use of SVPE array also reduces average energy consumption to 68.7% of the VPE array with 16-bit. Our future work should explore how to decrease the accumulated quantization errors further when our learning framework is used on different CNNs structures.

References

- [1] A. Krizhevsky, I. Sutskever, and G. E. Hinton, “Imagenet classification with deep convolutional neural networks,” in International Conference on Neural Information Processing Systems, pp. 1097–1105, 2012.

- [2] C. Szegedy, W. Liu, Y. Jia, P. Sermanet, S. Reed, D. Anguelov, D. Erhan, V. Vanhoucke, and A. Rabinovich, “Going deeper with convolutions,” pp. 1–9, 2014.

- [3] K. Simonyan and A. Zisserman, “Very deep convolutional networks for large-scale image recognition,” CoRR, vol. abs/1409.1556, 2015.

- [4] Y. Taigman, M. Yang, M. Ranzato, and L. Wolf, “Deepface: Closing the gap to human-level performance in face verification,” in Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 1701–1708, 2014.

- [5] Y. Sun, X. Wang, and X. Tang, “Deep learning face representation from predicting 10,000 classes,” in Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 1891–1898, 2014.

- [6] J. Long, E. Shelhamer, and T. Darrell, “Fully convolutional networks for semantic segmentation,” in Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 3431–3440, 2015.

- [7] L.-C. Chen, G. Papandreou, I. Kokkinos, K. Murphy, and A. L. Yuille, “Semantic image segmentation with deep convolutional nets and fully connected crfs,” arXiv preprint arXiv:1412.7062, 2014.

- [8] R. Girshick, “Fast r-cnn,” in Proceedings of the IEEE international conference on computer vision, pp. 1440–1448, 2015.

- [9] S. Ren, K. He, R. Girshick, and J. Sun, “Faster r-cnn: Towards real-time object detection with region proposal networks,” in Advances in neural information processing systems, pp. 91–99, 2015.

- [10] O. Russakovsky, J. Deng, H. Su, J. Krause, S. Satheesh, S. Ma, Z. Huang, A. Karpathy, A. Khosla, M. Bernstein et al., “Imagenet large scale visual recognition challenge,” International Journal of Computer Vision, vol. 115, no. 3, pp. 211–252, 2015.

- [11] J. Deng, W. Dong, R. Socher, L.-J. Li, K. Li, and L. Fei-Fei, “Imagenet: A large-scale hierarchical image database,” in Computer Vision and Pattern Recognition, 2009. CVPR 2009. IEEE Conference on, pp. 248–255. Ieee, 2009.

- [12] M. Peemen, A. A. Setio, B. Mesman, H. Corporaal et al., “Memory-centric accelerator design for convolutional neural networks.” in ICCD, vol. 2013, pp. 13–19, 2013.

- [13] P. Meloni, G. Deriu, F. Conti, I. Loi, L. Raffo, and L. Benini, “A high-efficiency runtime reconfigurable ip for cnn acceleration on a mid-range all-programmable soc,” in 2016 International Conference on ReConFigurable Computing and FPGAs, pp. 1–8. IEEE, 2016.

- [14] M. Sankaradas, V. Jakkula, S. Cadambi, S. Chakradhar, I. Durdanovic, E. Cosatto, and H. P. Graf, “A massively parallel coprocessor for convolutional neural networks,” in Application-specific Systems, Architectures and Processors, 2009. ASAP 2009. 20th IEEE International Conference on, pp. 53–60. IEEE, 2009.

- [15] M. Courbariaux, I. Hubara, D. Soudry, R. El-Yaniv, and Y. Bengio, “Binarized neural networks: Training deep neural networks with weights and activations constrained to +1 or -1,” 2016.

- [16] K. He, X. Zhang, S. Ren, and J. Sun, “Deep residual learning for image recognition,” in Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 770–778, 2016.

- [17] G. Huang, Z. Liu, L. Van Der Maaten, and K. Q. Weinberger, “Densely connected convolutional networks.” in CVPR, vol. 1, no. 2, p. 3, 2017.

- [18] S. Han, J. Pool, J. Tran, and W. Dally, “Learning both weights and connections for efficient neural network,” in Advances in neural information processing systems, pp. 1135–1143, 2015.

- [19] H. Li, A. Kadav, I. Durdanovic, H. Samet, and H. P. Graf, “Pruning filters for efficient convnets,” arXiv preprint arXiv:1608.08710, 2016.

- [20] S. Han, H. Mao, and W. J. Dally, “Deep compression: Compressing deep neural networks with pruning, trained quantization and huffman coding,” arXiv preprint arXiv:1510.00149, 2015.

- [21] J. Van Leeuwen, “On the construction of huffman trees.” in ICALP, pp. 382–410, 1976.

- [22] J. Choi, P. I.-J. Chuang, Z. Wang, S. Venkataramani, V. Srinivasan, and K. Gopalakrishnan, “Bridging the accuracy gap for 2-bit quantized neural networks (qnn),” arXiv preprint arXiv:1807.06964, 2018.

- [23] J. Choi, Z. Wang, S. Venkataramani, P. I.-J. Chuang, V. Srinivasan, and K. Gopalakrishnan, “Pact: Parameterized clipping activation for quantized neural networks,” arXiv preprint arXiv:1805.06085, 2018.

- [24] Z. Liu, B. Wu, W. Luo, X. Yang, W. Liu, and K.-T. Cheng, “Bi-real net: Enhancing the performance of 1-bit cnns with improved representational capability and advanced training algorithm,” in Proceedings of the European Conference on Computer Vision (ECCV), pp. 722–737, 2018.

- [25] B. Zhuang, C. Shen, M. Tan, L. Liu, and I. Reid, “Towards effective low-bitwidth convolutional neural networks,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 7920–7928, 2018.

- [26] A. Zhou, A. Yao, Y. Guo, L. Xu, and Y. Chen, “Incremental network quantization: Towards lossless cnns with low-precision weights,” arXiv preprint arXiv:1702.03044, 2017.

- [27] S. Zhou, Y. Wu, Z. Ni, X. Zhou, H. Wen, and Y. Zou, “Dorefa-net: Training low bitwidth convolutional neural networks with low bitwidth gradients,” arXiv preprint arXiv:1606.06160, 2016.

- [28] C. Zhu, S. Han, H. Mao, and W. J. Dally, “Trained ternary quantization,” arXiv preprint arXiv:1612.01064, 2016.

- [29] E. Park, D. Kim, S. Yoo, and P. Vajda, “Precision highway for ultra low-precision quantization,” arXiv preprint arXiv:1812.09818, 2018.

- [30] L. Lu, Y. Liang, Q. Xiao, and S. Yan, “Evaluating fast algorithms for convolutional neural networks on fpgas,” in Field-Programmable Custom Computing Machines (FCCM), 2017 IEEE 25th Annual International Symposium on, pp. 101–108. IEEE, 2017.

- [31] S. Ioffe and C. Szegedy, “Batch normalization: Accelerating deep network training by reducing internal covariate shift,” arXiv preprint arXiv:1502.03167, 2015.

- [32] K. He, X. Zhang, S. Ren, and J. Sun, “Delving deep into rectifiers: Surpassing human-level performance on imagenet classification,” in Proceedings of the IEEE international conference on computer vision, pp. 1026–1034, 2015.

- [33] J. T. Springenberg, A. Dosovitskiy, T. Brox, and M. Riedmiller, “Striving for simplicity: The all convolutional net,” arXiv preprint arXiv:1412.6806, 2014.

- [34] M. Lin, Q. Chen, and S. Yan, “Network in network,” arXiv preprint arXiv:1312.4400, 2013.

- [35] Y. LeCun, L. Bottou, Y. Bengio, and P. Haffner, “Gradient-based learning applied to document recognition,” Proc. IEEE, vol. 86, no. 11, pp. 2278–2324, 1998.

- [36] C.-Y. Lee, S. Xie, P. Gallagher, Z. Zhang, and Z. Tu, “Deeply-supervised nets,” in Artificial Intelligence and Statistics, pp. 562–570, 2015.

- [37] A. Krizhevsky and G. Hinton, “Learning multiple layers of features from tiny images,” Citeseer, Tech. Rep., 2009.

- [38] R. K. Srivastava, K. Greff, and J. Schmidhuber, “Training very deep networks,” in Advances in neural information processing systems, pp. 2377–2385, 2015.

- [39] G. Huang, Y. Sun, Z. Liu, D. Sedra, and K. Q. Weinberger, “Deep networks with stochastic depth,” in European Conference on Computer Vision, pp. 646–661. Springer, 2016.

- [40] G. Larsson, M. Maire, and G. Shakhnarovich, “Fractalnet: Ultra-deep neural networks without residuals,” arXiv preprint arXiv:1605.07648, 2016.

- [41] A. Romero, N. Ballas, S. E. Kahou, A. Chassang, C. Gatta, and Y. Bengio, “Fitnets: Hints for thin deep nets,” arXiv preprint arXiv:1412.6550, 2014.

- [42] Y. Netzer, T. Wang, A. Coates, A. Bissacco, B. Wu, and A. Y. Ng, “Reading digits in natural images with unsupervised feature learning,” in NIPS workshop on deep learning and unsupervised feature learning, vol. 2011, no. 2, p. 5, 2011.

- [43] S. Zagoruyko and N. Komodakis, “Wide residual networks,” arXiv preprint arXiv:1605.07146, 2016.

- [44] I. J. Goodfellow, D. Warde-Farley, M. Mirza, A. Courville, and Y. Bengio, “Maxout networks,” arXiv preprint arXiv:1302.4389, 2013.

- [45] P. Sermanet, S. Chintala, and Y. LeCun, “Convolutional neural networks applied to house numbers digit classification,” in Pattern Recognition, 2012 21st International Conference on, pp. 3288–3291. IEEE, 2012.

- [46] V. Sze, Y.-H. Chen, T.-J. Yang, and J. S. Emer, “Efficient processing of deep neural networks: A tutorial and survey,” Proc. IEEE, vol. 105, no. 12, pp. 2295–2329, 2017.

- [47] M. Courbariaux, Y. Bengio, and J.-P. David, “Binaryconnect: Training deep neural networks with binary weights during propagations,” in Advances in neural information processing systems, pp. 3123–3131, 2015.

- [48] F. Li, B. Zhang, and B. Liu, “Ternary weight networks,” arXiv preprint arXiv:1605.04711, 2016.

- [49] S. Gross and M. Wilber, “Training and investigating residual nets,” Facebook AI Research, CA.[Online]. Avilable: http://torch. ch/blog/2016/02/04/resnets. html, 2016.

- [50] N. Srivastava, G. Hinton, A. Krizhevsky, I. Sutskever, and R. Salakhutdinov, “Dropout: a simple way to prevent neural networks from overfitting,” The Journal of Machine Learning Research, vol. 15, no. 1, pp. 1929–1958, 2014.

- [51] I. Sutskever, J. Martens, G. Dahl, and G. Hinton, “On the importance of initialization and momentum in deep learning,” in International conference on machine learning, pp. 1139–1147, 2013.

- [52] M. Rastegari, V. Ordonez, J. Redmon, and A. Farhadi, “Xnor-net: Imagenet classification using binary convolutional neural networks,” in European Conference on Computer Vision, pp. 525–542. Springer, 2016.

- [53] C. Zhang, P. Li, G. Sun, Y. Guan, B. Xiao, and J. Cong, “Optimizing fpga-based accelerator design for deep convolutional neural networks,” in Proceedings of the 2015 ACM/SIGDA International Symposium on Field-Programmable Gate Arrays, pp. 161–170. ACM, 2015.