∎

22email: yun, lliu1, [email protected]

A Learned Proximal Alternating Minimization Algorithm and Its Induced Network for a Class of Two-block Nonconvex and Nonsmooth Optimization

Abstract

This work proposes a general learned proximal alternating minimization algorithm, LPAM, for solving learnable two-block nonsmooth and nonconvex optimization problems. We tackle the nonsmoothness by an appropriate smoothing technique with automatic diminishing smoothing effect. For smoothed nonconvex problems we modify the proximal alternating linearized minimization (PALM) scheme by incorporating the residual learning architecture, which has proven to be highly effective in deep network training, and employing the block coordinate decent (BCD) iterates as a safeguard for the convergence of the algorithm. We prove that there is a subsequence of the iterates generated by LPAM, which has at least one accumulation point and each accumulation point is a Clarke stationary point. Our method is widely applicable as one can employ various learning problems formulated as two-block optimizations, and is also easy to be extended for solving multi-block nonsmooth and nonconvex optimization problems. The network, whose architecture follows the LPAM exactly, namely LPAM-net, inherits the convergence properties of the algorithm to make the network interpretable. As an example application of LPAM-net, we present the numerical and theoretical results on the application of LPAM-net for joint multi-modal MRI reconstruction with significantly under-sampled -space data. The experimental results indicate the proposed LPAM-net is parameter-efficient and has favourable performance in comparison with some state-of-the-art methods.

Keywords:

Learned alternating minimization algorithm Nonconvex nonsmooth optimization Deep learning MRI Image reconstructionMSC:

90C26 65K05 65K10 68U101 Acknowledgement

Funding This research was partially supported by NSF grant DMS-2152961 and Simons Foundation Collaboration Grant (Award Number: 584918).

Data Availability The original dataset we used in all experiments are from Multi-modal Brain Tumor Segmentation Challenge 2018.menze2014multimodal

2 Introduction

Recent years have witnessed remarkable success of deep learning across various real-world applications. However, a purely data-driven approach may fail to approximate the desired functions, especially when training data are scarce. It is well known that the scarcity of data leads to overfitting and challenges in interpretation. To mitigate these issues, the unrolling/unfolding neural networks (UNNs) have been developed and have shown promising results in solving inverse problems arising from computer vision and medical imaging. The UNNs are multi-phase neural networks, in which each phase mimics one iteration of the optimization algorithms for solving the inverse problems. However, despite their promising performance in practice, most of the existing UNNs only superficially resemble steps of optimization algorithms. Consequently, their outputs do not really yield solutions to any interpretable variational models. This results is lack of theoretical justifications and convergence guarantees for their outputs.

Recently a novel class of unrolling methods known as learned optimization algorithms (LOA) LDA ; ldct ; wanyuMiccai has been developed. The goal of LOA is to tackle the challenges associated with solving a class of inverse problems arising from various image reconstruction and synthesis problems. These LOAs strategically combine the learned variational model with the prior domain knowledge of underlying physical processes to enhance its interpretability. These methods also utilize optimization to guarantee the convergence.

The optimization scheme induces a highly structured deep network, aligning its architecture precisely with the iterative algorithm. Hence, the network inherits the convergence property of the algorithm, and it outputs an approximation of the solution to the variational model. As a result, it is interpretable and parameter efficient. However, the existing LOAs only consider a single block of variables.

Motivated by a wide range of applications in machine learning that involve multi-modalities or multi-domains problems, such as multi-task learning, transfer learning, multi-modal learning and fusion, dual domain image reconstruction, and image synthesis, in this work we extend the idea of LDA to deal with learned multi-block nonconvex and nonsmooth optimization problems. More specifically, we develop a novel convergent learned proximal alternating minimization (LPAM) algorithm to solve the following class of learnable optimization problems:

| (1) |

where each learnable function is a parameterized function. is the set of learned parameters. Each function in (1) is possibly nonconvex and nonsmooth. We shall also study the convergence and iteration complexity of the algorithm, and provide experimental results on the application on the joint multi-contrast MRI reconstruction with significantly under-sampled data.

3 Related work

3.1 Related work on alternating minimization algorithms:

Alternating minimization (AM) or “two block” coordinate minimization (BCM) algorithm plays an indispensable role in solving a rich class of problems formulated as follows:

| (2) |

The standard approach to solve this problem is the so-called Gauss-Seidel iteration scheme, also known as block coordinate descent (BCD) method. This scheme alternately keeps one block of updated variable fixed and optimize the other block palomar2010convex ; bertsekas2015parallel . With assumptions on convexity and smoothness, several convergence results have been established beck2013convergence ; charbonnier1997deterministic .

Inspired by many practical problems arising from machine learning and image processing, such as non-negative matrix factorization, blind image deconvolution, low rank matrix completion jain2013low ; hardt2014understanding , phase retrieval problem netrapalli2013phase , interference alignmentpeters2009interference , and compressed sensing using synthesis sparsity modelsabolghasemi2012gradient joint multi-modal image reconstructionsroubek2011robust , the AM algorithms for nonconvex (including biconvex) and nonsmooth minimization have attracted great interest. One of the effective approaches to solve nonconvex and nonsmooth problems (2) is the proximal alternating minimization (PAM) algorithms. The PAM algorithm developed in attouch2010proximal can be viewed as a proximal regularization of a two block Gauss-Seidel method for solving (2). Under the assumption that the objective function satisfies Kurdyka- Lojasiewicz (KL) property, the convergence to a critical point of of each bounded sequence generated by the algorithm has been obtained in attouch2013convergence . However, PAM algorithm requires exact minimization of a nonconvex and nonsmooth problem in each step. To overcome this difficulty, the pioneer work bolte2014proximal introduced a proximal alternating linearized minimization (PALM) algorithm, which can be viewed as alternating the steps of the proximal forward-backward scheme. Building on the KL property, it was proved that each bounded sequence generated by PALM globally converges to a critical point. Later, the work pock2016inertial proposed an inertial version of the PALM (iPALM) algorithm motivated from the Heavy Ball method of Polyak polyak1964some . It is also closely related to multi-step algorithms of Nesterov’s accelerated gradient method nesterov2018lectures . The global convergence to a critical point is also obtained for iPALM under the assumption that the objective function satisfies the KL property and the same smoothness conditions as that for PALM.

3.2 Related work on unrolling neural networks inspired by AM algorithms:

In recent years, the unrolling neural networks inspired by AM algorithms unroll the iteration scheme of an AM algorithm into a multi-phase deep neural network. Several unrolling convolutional neural networks (CNN) based on AM algorithms have been developed for solving inverse problems in computer vision including the unrolling neural networks resembling the steps of alternating direction method of multipliers (ADMM) algorithm, and block coodinate decent (BCD) algorithm. The ADMM-net sun2016deep with the architecture derived from ADMM has been applied to compressive sensing MRI reconstruction. The deeply aggregated alternating minimization neural network (DeepAM) kim2017deeply for image restoration uses a data-driven approach in the energy minimization framework. At each iteration, the DeepAM advances the following two steps in the conventional AM algorithm. One step uses a data-driven approach to update the auxiliary variable representing the gradient of the image by a CNN that plays the role of regularization. The other step recovers the image by minimizing the data fidelity and the disparity between the gradient of the image and updated auxiliary variable. Moreover, several versions of BCD-nets that resemble the steps of BCD algorithm have been successfully applied to solve the inverse problems for signal/image recovery/reconstruction aggarwal2018modl ; chun2019bcd ; chun2018deep ; chun2020convolutional ; chun2020momentum .

The common idea of those BCD-nets is combining a denoising CNN in defining the regularization as one module, namely a denoising module, and a model based image reconstruction (MBIR) as the other module into the BCD framework. The MBIR module enhances the data fidelity for the image from the denoising module. For instance, at each iteration of the BCD-net proposed in chun2018deep , it trains an image mapping CNN using identical convolutional kernels in both encoders and decoders as one module in a BCD framework for image recovery. The other module in this BCD-net minimizes an objective function consisting of the data fidelity and penalty between to be updated image and the deniosed image from image mapping CNN. This BCD-Net chun2018deep is modified from chun2019bcd , where a sparsity promoting denoising network is trained in denoising modules and the accelerated proximal gradient method using a majorizer is applied to MBIR modules with statistical CT data-fidelity for low-dose CT reconstruction. Furthermore, to achieve fast and convergent solutions for the inverse problem in image processing, the work in chun2020momentum proposes a Momentum-Net. The Momentum-Net is motivated by applying the block proximal extrapolated gradient method using a Majorizer and convolutional autoencoders to MBIR. Each iteration of Momentum-Net consists of three core modules: image refining, extrapolation and MBIR. The image refining module is a denoising module that trains an image refining CNN to remove iteration-wise artifact. The extrapolation module uses momentum from previous updates to amplify the changes in subsequent iterations and accelerate convergence. The MBIR is non-iterative, since the MBIR problem in consideration of this work has a closed-form solution. Momentum-Net guarantees convergence to a fixed-point for general differentiable nonconvex data-fit terms and convex feasible sets, under the conditions that the sequence of paired refining CNNs is asymptotically nonexpansive and the refined image from the image refining module gives an asymptotically block-coordinate minimizer.

Very recently, ding2023learned presented a learned alternating algorithm aimed at solving a specific variational model for dual-domain sparse-view CT reconstruction. In this model, the joint term is convex and smooth, and the regularization terms are learnable in both the image and sinogram domains, respectively. In this study, we extend their work by considering a broader scenario, where each term in the objective function can be nonconvex and nonsmooth. We provide sufficient conditions for taking smoothing approximations of nonsmooth objective functions. We also provide the analysis of the iteration complexity in addition to the convergence analysis.

4 Contributions and Organization

In this work we propose a novel learned proximal alternating minimization algorithm addressing nonsmooth and nonconvex two-block variational models with provable convergence and iteration complexity. The proposed LPAM algorithm for solving () determines the architecture of the deep neural network, hence the design of the LPAM considers not only the convergence and efficiency, but also the ability to assist the training of the network parameters , i.e., reducing the error in minimizing the loss function.

Our main idea for designing this algorithm is as follows: (i) We tackle the nonsmoothness by an appropriate smoothing technique with automatic diminishing smoothing effect; (ii) We modify the PALM scheme by incorporating the residual learning architecture, which has proven to be highly effective in deep network training he2016deep ; and (iii) When some modified PALM iterates fail to satisfy certain conditions, we employ the block coordinate decent (BCD) iterates as a safeguard to ensure convergence. Moreover, we prove that a subsequence generated by the proposed LPAM has accumulation points and all of them are Clarke stationary points of the nonsmooth nonconvex problem.This algorithm can be readily extended to multi-block nonsmooth and nonconvex optimization.

This algorithm significantly broadens its applicability, as it relaxes the strict convergence conditions of the existing algorithms. The PALM or iPALM algorithm assumes the joint term to be smooth and possibly nonconvex, and the proximal points associated with () are easy to obtain. Their global convergence results are built on KL property of the objective function. These assumptions in general cannot be met by the learnable parameterized functions representing neural networks. The proposed LPAM algorithm will not require these conditions. Each function in (1) could be nonconvex and nonsmooth. Without those restrictions, the LPAM algorithm is flexible and applicable to deep learning problems in various applications. Moreover, the convergence of LPAM can still be established in the sense of sub-sequence convergence to Clarke stationary points (see detail in Section 3). But it cannot have global convergence to a critical point as the PALM or iPALM algorithm.

The convergence result of the BCD-net is based on the assumptions that the paired image refining neural networks are asymptotically nonexpansive, the data fidelity is differentiable, and the MBIR problem is not difficult to solve. The proposed LPAM algorithm does not require these assumptions. The convergence of LPAM is assured by the convergence of the BCD algorithm for smooth optimization and the property of the limit of the gradient of the smoothed objective functions as smoothing factor tends zero. By removing the constraints on the model, the PALM can significantly broaden its range of applications.

The remainder of the paper is organized as follows. In Section 5 we construct the LPAM algorithm motivated by the PALM algorithm and the BCD algorithm, and introduce the three steps that constitute the LPAM algorithm. In Section 6, we prove the convergence of the LPAM algorithm and deduce the iteration complexity of this algorithm. In Section 7, we apply the LPAM algorithm to the joint reconstruction of T1 and T2 MRI images with significantly under-sampled data to show the promising performance of the proposed method. Furthermore, we provide convergence analysis and comparison with several existing methods. All the figures are listed at the end of the article.

Throughout the paper, we use the following notations without further notification.

-

1.

denotes the function of and given a collection of parameters . denotes the smooth approximation of with a smoothing parameter .

-

2.

For a differentiable function , we denote the gradient of with respect to by . The gradient on the domain is represented by .

-

3.

We use to denote the standard norm in Euclidean space, and write for the standard inner product on .

-

4.

.

5 Learned Proximal Alternating Minimization (LPAM) Algorithm

In this section we propose a learned proximal alternating minimization (LPAM) algorithm for solving the problem (1). Since is learned, we will omit it and simply write (1) as (2).

For ,, and we assume

| Each of them is possibly non-convex and non-smooth. | ||

The proposed LPAM algorithm consists of three stages. In the first stage we convert the nonconvex and nonsmooth problem (2) to a nonconvex smooth optimization problem by an appropriate smoothing procedure. The smoothing method is not unique, but we require the smooth approximation of , which is defined as

| (3) |

to satisfy following conditions:

-

•

(C1:) , and are Lipschitz continuous in , and , respectively.

-

•

(C2:) , , for any given in the corresponding domain. Also for any , we assume that when and , .

-

•

(C3:) There is a continuous and non-negative function such that ,

-

•

(C4:) For any and any , , where stands for the Clarke subdifferential of (see Definition 1).

Remark 1

The Lipschitz constants of and may tend to infinity as tends to . The condition is much weaker than the monotonicity of . The condition roughly says that as long as is not too much different from a monotonic function with respect to , our method still leads to convergence. This brings great convenience in application.

In the second stage we solve the smoothed nonconvex problem with a fixed smoothing factor , i.e.

| (4) |

In light of the substantial improvement in practical performance by ResNet he2016deep , we propose a modified PALM that incorporates the architecture of the ResNet for solving (4). With , PALM bolte2014proximal generates a sequence of iterates by

and

where and are step sizes. In some situations, the proximal points and are not easy to compute, so we replace by its linear approximation at together with a proximal term . Similarly, we replace by its linear approximation at together with a proximal term . Thus, we have

| (5) | ||||

where we recall that stands for the gradient for the first variables.

| (6) | ||||

In deep learning approach, the step sizes , , and could be learnable hyper-parameters. The advantage for taking the scheme (5) and (6) to update and is that this scheme is consistent with the architecture of residual learning he-1 ; he-2 , which only learns the correction needed for and avoids gradient vanishing in network training, and thus improves solution quality in practice. However, the convergence of the sequence is not guaranteed. Inspired by the proof of convergence in bolte2014proximal , we propose the following:

If and satisfy

| (7) |

and

| (8) |

for some , we take , .

If one of (7) and (8) is violated, we compute by BCD algorithm with a simple line-search strategy for better step sizes as follows: Let , we compute

| (9) | ||||

| (10) |

The first order optimality condition leads to

| (11) | ||||

If for some ,

| (12) |

we set

otherwise we reduce step sizes by where , and recompute until the condition (12) holds. Then take , .

In the third stage, we check if the -norm of the gradient of the smoothed objective function has been reduced enough, so that we can perform the second stage with a reduced smoothing factor. By gradually reducing the smoothing factor, we obtain a subsequence of the iterates that converges to a Clarke stationary point of the original nonconvex and nonsmooth problem.

The scheme of LPMA is given below (For notational simplicity, we omit all learned network parameters).

We would like to comment here for the LPAM algorithm. Line 2 to line 18 can be viewed as the steps of the inner iteration, which, for a fixed , generates a sequence such that as (see the first statement of Lemma 1 in the next section). This guarantees the reduction of for a fixed . When this value drops below a prefixed value, line 19 decreases , and then we repeat the procedures from line 2 to line 18 to generates a new sequence corresponding to the reduced . We continue these steps until the termination condition in the line 20 is met. The sequence formed by the iterates, in which the reduction criterion for is met, has at least one accumulation point and each accumulation point is a Clarke Stationary Point of the original problem. This will be proved in Theorem 1 in Section 6.

Remark: In order to make the LPMA algorithm match the ResNet architecture, if the function is learnable and and are given, it is better to use the scheme above. If the functions and are learnable and is given, it is better to change the steps 3-6 to the following:

| (13) | |||

| (14) | |||

| (15) | |||

| (16) |

and keep the other steps in Algorithm 1 unchanged.

6 Convergence Analysis

Since we deal with a nonconvex and nonsmooth optimization problem, the Clarke subdifferential, which is based on the concept of generalized directional derivatives, is employed here to characterize the optimality of the solutions to (2).

Definition 1

(Clarke subdifferential). Suppose that is locally Lipschitz. The Clarke subdifferential of at is defined as

where , for , .

Definition 2

(Clarke stationary point) For a locally Lipschitz function defined as in Definition 1, a point is called a Clarke Stationary Point of , if .

Lemma 1

Assume that is a smooth approximation of defined in (3) satisfying the conditions (C1)-(C4). Let , , and be an arbitrary initial condition. Suppose is the sequence generated by repeating Lines 2–18 in Algorithm 1 with . Then we have

-

1.

as .

-

2.

Let be the initial step size for . The maximum number of required line search steps is

where represents the largest integer less than or equal to , and is the sum of the Lipschitz constants for , , and , i.e.

-

3.

For any ,

(17)

Proof of Lemma 1:

Given , in the case that generated by Algorithm 1 satisfies the conditions (7)-(8), we take . From (7)-(8), it is easy to get

| (18) |

If fails to satisfy the condition (7) or (8), the algorithm computes by (11) with a line search strategy. Let be the number of the required line search steps to meet the condition (12), and then we take . This gives the following

| (19) |

Since is -Lipschitz continuous, we have

| (20) |

The combination of (19) and (20) yields

| (21) |

Hence the condition (12) is met if

| (22) |

Hence, for any the maximum line search steps required for (12) satisfies

so we abuse the notation by defining it as

| (23) |

This proves the second statement of this lemma. Moreover, the fact that for any , implies

| (24) |

Next we prove the other two statements. Note that from (19) the condition (12) can be rewritten as:

| (25) |

Now we estimate the last term in (25) in terms of . First by the Lipschitz continuity of we have

Clearly this implies

| (26) |

Here if the right hand side is positive, we will choose to be determined, and take advantage of the following inequality: together with (19) to obtain

| (27) |

Inserting (6) into (25), we have

| (28) | ||||

If , we just choose . Otherwise we set as . In either case the coefficient of is less than . For the coefficient of , in the first case it is less than . In the second case it is less than . Then it is easy to verify that in both cases, by (24) both coefficients are less than Thus we have

| (29) |

where

| (30) |

Consequently,

| (31) |

If the right hand side of (26) is negative, we obtain from (19) that

Consequently,

| (32) |

Then we use (32) to estimate (25) differently:

| (33) | ||||

If , we have

Otherwise

In either case we see that (29) still holds. Note that we used (24) for a lower bound of . Thus we have established (31).

Observing (7), (18), (12) and (31) we see that in either case of or , there are two constants

| (34) |

such that

| (35) |

and

| (36) |

From (35) and C2 we get

| (37) |

Furthermore, for any integer summing up (36) for , we have

| (38) |

We use to denote a uniform lower bound for all : for all . Since is independent of , we see that for any fixed , as . This proves the first statement of Lemma 1. For the third statement of Lemma 1, we set , then we know that for all . Thus from (38) we have

The third statement follows immediately. Lemma 1 is established.

From the first statement of Lemma 1 the reduction criterion for in Line 19 of Algorithm 1 must be satisfied within finitely many iterations for any . Hence will eventually be small enough to terminate the algorithm. Let be the counter of iteration when the criterion in Line 19 of Algorithm 1 is met for the -th time (we set ), then we can partition the iteration counters into segments accordingly, so that in the -th segment and . In the next theorem we will give the length of each segment, which will lead to the iteration complexity of Algorithm 1 for any .

Theorem 6.1

Let and be described as in Lemma 1 and hold for . If , suppose is the sequence generated by Algorithm 1 with arbitrary initial condition . Let be the subsequence of where the reduction criterion for in the algorithm is met for and . Then has at least one accumulation point and each accumulation point is a Clarke Stationary Point of the original problem. Moreover, the number of iterations, , for the segment is bounded by

| (39) |

For any , the total number of iterations for Algorithm 1 to terminate with is bounded by

| (40) |

where is number of reductions and we have , depends only on and .

Remark 2

In application we usually have , which makes the bound in (40) .

Remark 3

The figure below shows the generation of the sequence in Theorem 1. The iterates highlighted in red indicate the selected sequence , where the reduction criterion for is met at for .

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/4891e925-c9a4-4ae9-beae-addede4602e5/x1.png)

Proof of Theorem 6.1: First we claim that the sequence is compact. This is largely based on C3. In the first step, the initial corresponds to . Then we have . After this we may have and then we have and . Here we mention that we don’t have , but by the third requirement of we have

In a similar manner we then prove that is uniformly bounded for all . Since is a positive continuous function and all , after removing the bound for all we have the uniform bound for all .

Next we estimate the iteration times based on the previous lemma. If , to estimate , which is the iteration times for the inner circle, we see that , then in Lemma 1 is , the initial step is . Then

and (39) is justified. Let be the number of reductions. Then . Thus

| (41) |

Based on this we have

which yields the left hand side of (40). If we use as an upper bound of for all , the summation above leads to . Using the estimate of in (41) we see that this bound is . Since as , so if we choose a subsequence of that converges to an equilibrium point , by C4, , then Definition 2 says is a Clarke Stationary Point of . Theorem 6.1 is established.

6.1 Small initial steps

Finally in this subsection we prove that if the initial steps and satisfy

| (42) |

for some , we can improve the estimate of from (30) to for some universal constant .

Since under this assumption we have and , we can estimate the decrease of in terms of using (19),(21) and :

| (43) |

To estimate we use Lipschitz continuity and an elementary inequality to obtain (6) as before. Inserting (6) into (6.1) we have

| (44) |

where

| (45) |

we choose to be

Then

Observing (42) we have

for some independent of . This clearly implies that is bounded by for some universal .

7 Experiments

In this section, we examine the performance of the proposed method for joint MRI T1 and T2 image reconstruction using significantly under-sampled data. We outline the proposed variational model, explore the convergence property of the LPAM algorithm used to solve the model, and demonstrate the efficiency of the LPAM-net.

The content is organized into five subsections. The first subsection introduces the variational model, especially the learned joint feature extractor. Then in the second subsection we verify the assumptions for the convergence of the algorithm. Then in subsection 3, we describe the dataset used in the experiments and the metrics used to evaluate the reconstruction results. Subsection 4 elucidates the complete reconstruction procedure including the initialization network and the LPAM-net. Finally, in the last subsection, we demonstrate the numerical results of the experiments via comparisons with several existing methods.

7.1 Proposed model and the feature extractor

Given the under-sampled k-space data of the modalities T1 and T2, our goal is to jointly reconstruct the corresponding images and . We formulate the T1-T2 joint reconstruction as the following nonconvex and nonsmooth minimization problem:

| (46) |

where , , and . and are the k-space data, and is a learned joint feature extractor mapping from to where is the spatial dimension and is the channel number of the output feature tensor. Here, stands for the discrete Fourier transform and P is the binary matrix representing the k-space mask when acquiring data for and means the image and the image are concatenated. In this model, the first two terms are data fidelity terms and the last term is the regularization term. The regularization term is a learnable common feature extractor for and and it enhances the sparsity of the common features.

More specifically, let denote the vector of the concatenated two images of dimension . Then, the regularization term is represented by . The is the -feature vector of for . Here, represents the pixel number of the image. represents the gradient of as a function of variables. Then,

where is a vanilla l-layer CNN with component-wise activation function .

| (47) |

Here is a smoothed ReLu function defined as:

| (48) |

We denote as for simplicity in the following text and in the convergence analysis.

Let be the smoothed approximation of the regularization term with the parameter as follows:

where , . Then, the gradient of the smoothed term is:

| (49) |

Now the smoothed objective function of is written as

7.2 Convergence Analysis

7.2.1 Theoretical proof

In this subsection, we verify that the proposed variational model (46) and its smoothing approximation satisfy the assumptions required in Lemma 1 and Theorem 1. This verification provides a strong theoretical support for the convergence of T1-T2 joint reconstruction.

Step one: Verification of and :

From the definition of , it is easy to see that both and hold.

Step two: Verification of :

In order to prove C3, We choose the continuous function and . Then, we need to prove the following inequality:

| (50) |

Note that the data fidelity term is independent of smoothing parameter, hence we only need to prove the following inequality:

| (51) |

Recall that is defined as:

| (52) |

The proof of (51) consists of three steps. In the first case, if , it is easy to use the definition of to see that both sides of (51) are equal to . In the second case, we suppose . Then

Note that the last inequality holds due to the simple fact that the function

is nondecreasing for .

In case three: If ,

where the inequality also holds due to the fact that the function as defined above is a nondecreasing function for . In each case, is satisfied.

Step three: Verification of :

Note that satisfies as . For simplicity, we use instead of is the smoothed factor used in the iteration to obtain . Then, we have , and .

Here we claim that converges to a Clarke point. Similar proof has been demonstrated in LDA that

| (53) | |||

where and . is the projection of onto which stands for the column space of . Then for sufficiently large

We use the fact that and to prove the first inequality and we use and the continuity of for all to prove the last inequality. Furthermore we denote

Then, we have

As obviously converges to the three terms in (53). Since and is closed, converges to a Clarke stationary point. We have validated the four conditions required for the proposed algorithm to be convergent in regards of the application of joint reconstruction of T1 and T2 MRI images. Therefore, by Lemma 1 and Theorem 1, this joint reconstruction will provide a convergent solution.

7.3 Experiment settings

The dataset we used in all experiments are from Multi-modal Brain Tumor Segmentation Challenge 2018.menze2014multimodal Both the training set and the validation set in Multi-modal Brain Tumor Segmentation Challenge 2018 contain four modalities (T1,T2, FLAIR and T). The training set is scanned from 285 patients and the validation set is obtained from 66 patients. Each image has a volume size of Each modality has two types of gliomas: 75 volumes of low-grade gliomas(LGG) and 210 volumes of high-grade gliomas(HGG).

In our experiments, we used HGG MRI images from two modalities (T1 and T2). For each modality, we chose images scanned from 50 patients as our training set and we randomly chose images scanned from 6 patients as our testing set. We cropped the 2D image size to be in the center region, resulting in a overall number of 500 images as our training set and 60 images as our testing set.

To obtain the k-space images, We used Matlab to apply 2D fast Fourier transform to every ground truth image in the training set and the testing set. Then, we shifted the zero-frequency components to the central area for each image and we applied 10%, 20% radial mask to every image. After having all the operations on Matlab, we had the k-space under-sampled images, denoted as and .

The Initialization network was implemented in Python using the TensorFlow framework and the LPAM-net was implemented in Python using the PyTorch framework. Our experiments were run on a Linux server with an NVIDIA A100 Tensor Core GPU available on HiPerGator. The batch size was set to be 1 in all experiments due to the consideration of the GPU memory, data volume and the operation speed.

Our reconstruction results are assessed using four evaluation matrices: peak-to-noise ratio (PSNR), structural similarity (SSIM), normalized mean squared error (NMSE) and root mean square error (RMSE). PSNR, SSIM, NMSE and RMSE can be computed using the following equations.

Suppose we have a reconstructed image and a ground truth image Y, each with a dimension of We define the mean square error and peak-to-noise ratio as

| (54) |

where is the maximum possible pixel value of the ground truth image. PSNR measures the quality of a compressed image in comparison to its ground truth image. A higher PSNR indicates a better quality of the compressed or reconstructed image.

where is the pixel mean of and respectively; are the variance of respectively. are two variables to avoid zero denominator and is the dynamic range of the pixel values. The SSIM, ranging from 0 to 1, is used to measure the similarity between two images. A higher SSIM value indicates a greater similarity between the two images.

NMSE measures the mean relative error.

RMSE is utilized to measure the difference between the reconstructed image and the ground truth.

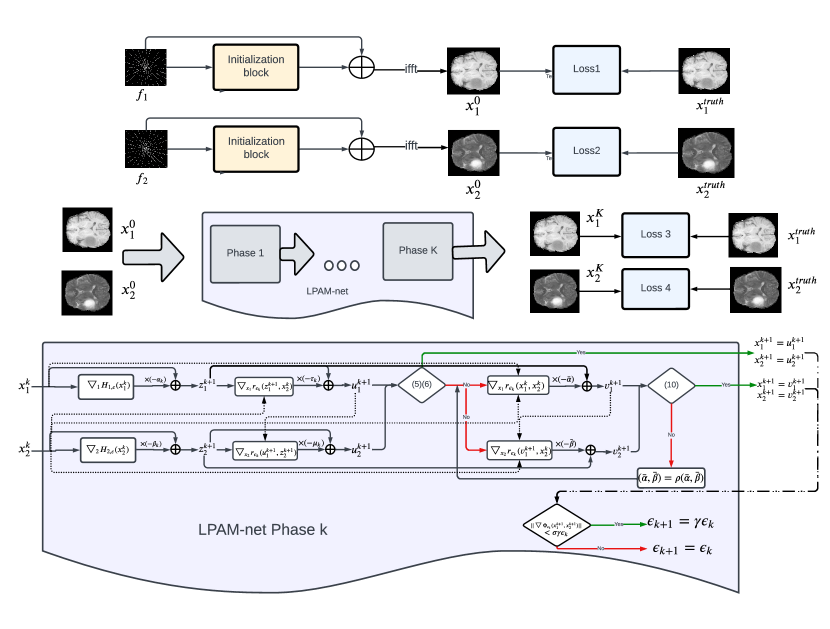

7.4 The architecture of the reconstruction process

The complete reconstruction procedure consists of the Initialization network and the LPAM-net. The architecture of the multi-phase LPAM-net follows the LPAM algorithm exactly in the way that each phase of the network corresponds to each itration of the algorithm. The details are given in the following subsections.

7.4.1 Initialization network

To have a better input for LPAM-net, we construct an initialization network, the major component of which is the initialization blocks. It contains a 4-layer convolutional neural network(CNN) following the residual structure, as outlined in he2016deep . Subsequently, an inverse Fourier transform is applied to the output of the initialization block. The initialization block performs interpolation on the inputs and , which helps to fill up the missing components of the under-sampled images in the k-space domain. We learned the weights of the kernels of the Initialization network by minimizing the following loss function using Adam Optimizer implemented using Tensorflow:

where is the dimension of the image.

A learning rate of was set, complemented by a decay rate of 0.95 for every 100 steps. The Xavier initialization was utilized to initialize the kernels. Table 1 displays the quantitative assessment of the Initialization network for the 60 images in the testing set. This includes the average and the standard deviation for the outputs under noiseless condition, corresponding to radial masks with an under-sampling ratios of 10% and 20%.

| Image | PSNR | SSIM | NMSE |

|---|---|---|---|

| 10% T1 | 23.146 0.66 | 0.617 0.034 | 0.0196 0.0035 |

| 10% T2 | 23.65 1.64 | 0.619 0.04 | 0.063 0.026 |

| 20% T1 | 26.517 0.518 | 0.735 0.031 | 0.009 0.0014 |

| 20% T2 | 27.032 1.633 | 0.735 0.031 | 0.029 0.012 |

7.4.2 LPAM-net for Joint Reconstruction

The architecture of the proposed LPAM-net is shown in the flowchart 1, which follows the LPAM algorithm exactly. The inputs for the LPAM-net are the outputs of the Initialization network, denoted as and . The outputs of the final phase of the LPAM-net are the resulting reconstructed images.

Throughout our experiments, the regularization we used is the -norm of the joint feature extractor, which is described in the Section 7.1. The formula for the regularization term is and the expressions of and are given in 49 and 52, respectively. We denote the feature extractor as or for simplicity in the following text. Regarding the joint feature extractor , we parameterize it as 47 with component-wise activation as 48. The prefixed parameter in the activation function was set to be 0.01. The default configuration of the feature extraction operator was set as follows: it consisted of complex-kernel convolution layers. Every kernel in each layer has a dimension of 3332 (except for the first layer), where 32 is the depth of the kernel. In the first layer, the kernel size was 332, with a depth of 2. For each layer, we had 32 kernels. We set the stride to be 1 and used zero-padding to preserve image size.

For joint reconstruction using the LPAM-net, we need to learn the step sizes and the threshold and the weights of the kernels of the feature extractor . We consolidated all the learned parameters into a unified symbol denoted as . The total number of the phases k is set to be . To enhance the stability and precision of the parameters before increasing the iteration count, we initially trained LPAM-net with phase number K=3 for 100 epochs. After completing the training of the phase K=3, an incremental strategy involving adding 2 more phases each time was employed and for K3, we conducted training of 30 epochs during each iteration. Throughout the training, the batch size was set to be and parameters were updated after the training for every batch.

We updated the parameters by minimizing the loss function using Adam Optimizer implemented by Pytorch with . The learning rate was set at , and the initial step sizes were established as . For and we have three initial step sizes for different phases. For phase 1 to phase 3, ; for phase 4 to phase 12, for phase 13 to phase 15, The Xavier initialization was applied to initialize the kernels. The outputs for the algorithm were denoted as and and the ground truth were represented by and To calculate the loss, we converted the image into a vector of length . Suppose the total pixel number of an image is the loss function we minimized is expressed as where and are defined as follows respectively:

7.5 Convergence behavior of the LPAM-net

In the previous subsection, we proved that there is at least a subsequence of the iterates generated by the LPAM algorithm converges to a Clarke stationary point. It is expected that LPAM would perform stably even beyond the trained phases. To demonstrate its stability empirically, we set the parameters as follows: and the number of phases = 15. We used the -space data of a under-sampling ratio for the joint reconstruction of MR T1 and T2 images. The -norm of the gradient of the objective function value () decreases as the number of phase increases. According to the reduction criteria specified in the line 19 in the algorithm, this leads to a reduction in the smoothing factor from to by phase . During the 15 phases, the smoothing factor steadily decreases as the number of phases increases, indicating that the smoothed objective function is progressively converging to the original problem.

Furthermore, we extend the algorithm beyond 15 phases and observe its performance on the values of the objective function and PSNR for the reconstruction. To this end, after phases, we set and ran the algorithm for up to 5010 iterations with fixed that is learned at the phase. Figure 2 and figure 3 illustrate the changes in the values of PSNR and objective function as the number of iterations increases, respectively. Figure 3 is drawn based on the objective function with 0.0093 as the coefficient of the regularization part. This coefficient does not affect the convergence based on Lemma 1. As we can tell, LPAM-net reduces the objective function value steadily in the first 15 phases. After that, the value of the objective function continue to decrease, but not as fast as the first 15 phases. The PSNR for both T1 and T2 images after the phase maintain stable with slightly decrease in the first a few iterations. The figure 4 visually shows the reconstructed images obtained after 15, 150, 1005, 5010 iterations. We can see that the reconstructed images are almost identical and free of visual artifacts, with very similar PSNR for both T1 and T2 contracts. These evidence shows that LPAM-net maintains stable performance over extended iterations.

|

|

|

|

|

| Reference | Iteration 15 (41.683) | Iteration 150 (41.579) | Iteration 1005 (41.500) | Iteration 5010 (41.499) |

|

|

|

|

|

| Reference | Iteration 15 (43.332) | Iteration 150 (43.368) | Iteration 1005 (43.373) | Iteration 5010 (43.363) |

7.6 Results

In this subsection, we provide numerical results to showcase the enhanced image quality achieved by the proposed LPAM-net and the variational model 46. First, in order to show the advantage of using the joint features as the regularization term in the model, we make comparison between the LPAM-net and the Individual-modality Reconstruction Network. Next, we compare the LPAM-net with the network induced by BCD algorithm, which is equivalent to run only the lines 10-17 and skip the lines 3-9 in LPAM algorithm. Finally, we present the comparison of the LPAM-net with four state-of-art methods for joint reconstruction of MRI T1 and T1 images.

7.6.1 Comparison with Individual-modality Reconstruction Network

This experiment is designed to verify that using the joint features in the regularization term enhances the accuracy and efficiency of the reconstructions of the MRI T1 and T2 images. Since the LPAM-net takes advantage of the common features from both T1 and T2 contracts, the proposed reconstructed images should preserve more details. To validate this advantage, we designed an Individual-modality Reconstruction Network which extracts the individual features of the T1 and T2 contracts separately. The Individual-modality Reconstruction Network precisely adheres the algorithm in LDA for minimizing the following problem:

where , is the under-sampled k-space images and is the individual feature extractor from to and it is designed to be a 4-layer CNN. Unlike the joint reconstruction, in which we learn a joint feature extraction operator , in this individual reconstruction, we learn two feature extraction operators and separately. We learned the weights of the kernels in the CNNs by minimizing the loss function using Adam Optimizer implemented by Pytorch. Suppose the total pixel number of an image is the loss function we minimized is expressed as follows:

where is the output and is the ground truth image.

We compare T1 and T2 image reconstruction results with under-sampling ratio -space noiseless data resulting from LPAM-net and the Individual-modality reconstruction Network, respectively. Figure 5 shows the PSNR value at the phase number of both deep neural networks. Both networks were trained using Adam Optimizer implemented in Pytorch with The learning rate was set at and the number of phase is set at 15. The average PSNR and its standard deviation are depicted in figure 5 for each phase. For phase 3, Individual-modality Reconstruction Network outperforms, but as the phase number increases, the LPAM-net produces better reconstructions. At phase 15, the average PSNR of the LPAM-net improves 0.40 dB for the T1 images and improves 1.49 dB for the T2 images, respectively comparing to the Individual-modality Reconstruction Network. In addition, the standard deviation decreases 0.10 dB for the T1 images and decreases 0.21 dB for the T2 images.

Although PSNR is a crucial measure for comparing the two networks, it is hard for us to visually discern the impact that the LPAM-net has in the reconstruction process. In order to visualize the differences, we added Gaussian white noise to the complex-valued under-sampled k-space images. The real noise and the imaginary noise are both normally distributed with the same mean and the same standard deviation, but they maintain independence from each other. Then, we acquired three groups of T1, T2 images: one set is noiseless; one set has complex-valued noise with a mean of zero and a standard deviation of 3 on both the real part and imaginary part, and the third set has complex-valued noise with a mean of zero and a standard deviation of 7 on both the real part and imaginary part. Next, we trained and tested these three groups of T1,T2 images using both the LPAM-net and the Individual-modality Reconstruction Network. The resulting reconstructed T1 images from the three groups are presented in figure 6 with the ground truth reference. Figure 7 illustrates the pointwise absolute error for each group of the images compared to the ground truth reference. Likewise, the reconstructed T2 images and the associated absolute errors are shown in figure 8 and figure 9.

Figure 6 and figure 8 reveal that, for under-sampled images with any levels of Gaussian white noise, the edges of the tissues in the images reconstructed by the LPAM-net are sharper than the edges of the tissue reconstructed by the Individual-modality Reconstruction Network. Furthermore, the absolute errors are less in the images reconstructed from the LPAM-net in figure 7 and figure 9. Table 2 and table 3 provide a comprehensive summary for the quantitative comparison between the LPAM-net and the Individual-modality Reconstruction Network across various levels of noise. The PSNR, SSIM, NMSE and RMSE are provided for images with each level of noise. We can conclude that the LPAM-net generates more accurate images than the Individual-modality Reconstruction Network regardless of the noise level in the under-sampled images. The results show the advantage of using the joint feature operator as the regularization term in the reconstruction process. Additionally, using joint feature operator demonstrates greater parameter efficiency.

| Method | SD of noise | PSNR | SSIM | NMSE | RMSE | #Par |

|---|---|---|---|---|---|---|

| Individual-modality | 7 | 29.1356 0.9087 | 0.8688 0.0108 | 0.0051 0.0016 | 0.0351 0.0036 | 55903 |

| LPAM-net | 7 | 29.9429 0.7945 | 0.8852 0.0097 | 0.0042 0.0011 | 0.032 0.0028 | 56510 |

| Individual-modality | 3 | 31.2754 1.1333 | 0.9044 0.0098 | 0.0031 0.0011 | 0.0275 0.0034 | 55903 |

| LPAM-net | 3 | 31.994 1.01 | 0.9175 0.0091 | 0.0026 0.0008 | 0.0253 0.0028 | 56510 |

| Individual-modality | none | 33.0252 1.3921 | 0.9251 0.0094 | 0.0021 0.0008 | 0.0226 0.0034 | 55903 |

| LPAM-net | none | 33.3269 1.2853 | 0.927 0.0091 | 0.002 0.0007 | 0.0218 0.003 | 56510 |

| Method | SD of noise | PSNR | SSIM | NMSE | RMSE | #Par |

|---|---|---|---|---|---|---|

| Individual-modality | 7 | 28.6758 1.0697 | 0.8632 0.016 | 0.0193 0.0067 | 0.0371 0.0046 | 55903 |

| LPAM-net | 7 | 29.8219 1.107 | 0.88 0.0178 | 0.015 0.0055 | 0.0325 0.0041 | 56510 |

| Individual-modality | 3 | 30.972 1.2514 | 0.9049 0.0149 | 0.0114 0.0040 | 0.0286 0.0041 | 55903 |

| LPAM-net | 3 | 32.1759 1.0952 | 0.9209 0.0147 | 0.0087 0.0031 | 0.0248 0.0031 | 56510 |

| Individual-modality | none | 32.621 1.6011 | 0.9209 0.0151 | 0.0078 0.0028 | 0.0238 0.0044 | 55903 |

| LPAM-net | none | 34.011 1.3859 | 0.9392 0.0132 | 0.0057 0.0021 | 0.0202 0.0032 | 56510 |

| Individual_7 (29.233) | Individual_3 (31.373) | Individual_non (32.933) | Reference |

| LPAM-net_7 (29.943) | LPAM-net_3 (31.994) | LPAM-net_non (33.327) | Reference |

| Individual_7 (29.233) | Individual_3 (31.373) | Individual_non (32.933) |

| LPAM-net_7 (29.943) | LPAM-net_3 (31.994) | LPAM-net_non (33.327) |

| Individual_7 (28.989) | Individual_3 (31.052) | Individual_non (32.521) | Reference |

| LPAM-net_7 (29.822) | LPAM-net_3 (32.176) | LPAM-net_non (34.011) | Reference |

| Individual_7 (28.989) | Individual_3 (31.052) | Individual_non (32.521) |

| LPAM-net_7 (29.822) | LPAM-net_3 (32.176) | LPAM-net_non (34.011) |

7.6.2 Comparison with the BCD algorithm

This experiment aims to demonstrate the effectiveness of the proposed LPAM algorithm by comparing to the standard BCD algorithm. The LPAM algorithm uses to update the scheme to match the Res-net structure for better training, and as a safeguard to ensure convergence. The standard BCD algorithm is equivalent to remove the iterations for updating and only performing in the LPAM. Although it converges for nonconvex smooth optimization, but the networks induced by BCD algorithm do not have Resnet structure. We conducted experiments comparing these two methods on under-sampled T1 and T2 images using the same variational model 46. Figure 10 and figure 11 illustrate the average PSNR and SSIM versus phase number with respect to the radial mask with a under-sampling ratio of 10% and 20%. As we can tell, both the average PSNR and the average SSIM of the two algorithms ascend, indicating an increase in image quality as phase number rises. For each phase, the values of the PSNR and the SSIM of the LPAM algorithm outperforms the values of the BCD algorithm in each graph, highlighting the efficiency and accuracy of the LPAM algorithm. The compared networks were trained using Adam Optimizer implemented in Pytorch with and the learning rate was set at

7.6.3 Comparison with the State-of-the-Art

We compare the proposed algorithm LPAM-net with five representative methods, including the X-netdo2020reconstruction , the Joint Group Sparsity-based Network (JGSN)guo2023joint , ReconFormerguo2023reconformer and the Joint Cross-Attention Network (jCAN)sun2023joint . X-net, originating from the U-net, introduces the capability to accommodate two inputs and generates two outputs and it is a non model-based method. JGSN, jCAN and ReconFormer are three model-based methods. JGSN unrolls the iterative process of the joint sparsity algorithm. jCAN deploys Vision Transformer and CNN in the image and k-space domains, respectively. ReconFormer designs Recurrent Pyamid Transformer at each architecture unit. Since the original codes for X-net and JGSN are not available, we implemented X-net and JGSN according to their original paper.

Similar to LPAM-net, X-net and JGSN update the two under-sampled modalities jointly. ReconFormer is a single-modality reconstruction network and we reconstructed T1 and T2 images respectively for comparison. jCAN restores the under-sampled target modality with guidance from the full-sampled auxiliary modality; thus, we used full-sampled T1 images to restore under-sampled T2 images and vice versa for comparison. Since some methods incorporate transformers, we adjusted the patch size and the window size to fit our image sizes. To ensure a fair evaluation, we trained each of the state-of-the-art method for 100 epoches and the quantitative analysis of the reconstructed images is presented in the table 4 and table 5. Compared with the other methods, the proposed LPAM-net achieves competitive performance on both T1 and T2 images with a under-sampling ratio of 20%.

p

| Method | PSNR | SSIM | NMSE | RMSE | #Par |

|---|---|---|---|---|---|

| X-net | 32.713 0.93 | 0.93 0.006 | 0.002 0.0006 | 0.024 0.024 | 42.816M |

| JGSN | 38.617 1.22 | 0.972 0.0048 | 0.0006 0.0002 | 0.012 0.0016 | 21192 |

| jCAN | 35.367 1.006 | 0.927 0.0087 | 0.0012 0.0004 | 0.017 0.0019 | 45.1M |

| ReconFormer | 39.124 1.47 | 0.977 0.0047 | 0.0005 0.0002 | 0.011 0.0019 | 1.1M |

| LPAM-net | 40.66 1.508 | 0.983 0.004 | 0.0004 0.0002 | 0.0094 0.0016 | 56510 |

| Method | PSNR | SSIM | NMSE | RMSE | #Par |

|---|---|---|---|---|---|

| X-net | 32.65 1.634 | 0.923 0.0106 | 0.0081 0.0033 | 0.024 0.005 | 42.816M |

| JGSN | 39.3 1.413 | 0.975 0.0046 | 0.0017 0.0006 | 0.011 0.0017 | 21192 |

| jCAN | 37.583 1.513 | 0.964 0.0054 | 0.0025 0.001 | 0.013 0.0023 | 45.1M |

| ReconFormer | 40.58 1.706 | 0.982 0.0051 | 0.0012 0.0004 | 0.0095 0.0018 | 1.1M |

| LPAM-net | 42.536 1.527 | 0.987 0.0043 | 0.0008 0.0003 | 0.0076 0.0013 | 56510 |

8 Declarations

The authors declare that they have no conflict of interest.

References

- (1) Abolghasemi, V., Ferdowsi, S., Sanei, S.: A gradient-based alternating minimization approach for optimization of the measurement matrix in compressive sensing. Signal Processing 92(4), 999–1009 (2012)

- (2) Aggarwal, H.K., Mani, M.P., Jacob, M.: Modl: Model-based deep learning architecture for inverse problems. IEEE transactions on medical imaging 38(2), 394–405 (2018)

- (3) Attouch, H., Bolte, J., Redont, P., Soubeyran, A.: Proximal alternating minimization and projection methods for nonconvex problems: An approach based on the kurdyka-łojasiewicz inequality. Mathematics of operations research 35(2), 438–457 (2010)

- (4) Attouch, H., Bolte, J., Svaiter, B.F.: Convergence of descent methods for semi-algebraic and tame problems: proximal algorithms, forward–backward splitting, and regularized gauss–seidel methods. Mathematical Programming 137(1-2), 91–129 (2013)

- (5) Beck, A., Tetruashvili, L.: On the convergence of block coordinate descent type methods. SIAM journal on Optimization 23(4), 2037–2060 (2013)

- (6) Bertsekas, D., Tsitsiklis, J.: Parallel and distributed computation: numerical methods. Athena Scientific (2015)

- (7) Bian, W., Zhang, Q., Ye, X., Chen, Y.: A learnable variational model for joint multimodal mri reconstruction and synthesis. Lecture Notes in Computer Science 13436, 354–364 (2022). DOI https://doi.org/10.1007/978-3-031-16446-0˙34

- (8) Bolte, J., Sabach, S., Teboulle, M.: Proximal alternating linearized minimization for nonconvex and nonsmooth problems. Mathematical Programming 146(1-2), 459–494 (2014)

- (9) Charbonnier, P., Blanc-Féraud, L., Aubert, G., Barlaud, M.: Deterministic edge-preserving regularization in computed imaging. IEEE Transactions on image processing 6(2), 298–311 (1997)

- (10) Chen, Y., Liu, H., Ye, X., Zhang, Q.: Learnable descent algorithm for nonsmooth nonconvex image reconstruction. SIAM Journal on Imaging Sciences 14(4), 1532–1564 (2021). DOI 10.1137/20M1353368. URL https://doi.org/10.1137/20M1353368

- (11) Chun, I.Y., Fessler, J.A.: Convolutional analysis operator learning: Acceleration and convergence. IEEE Transactions on Image Processing 29, 2108–2122 (2020)

- (12) Chun, I.Y., Huang, Z., Lim, H., Fessler, J.: Momentum-net: Fast and convergent iterative neural network for inverse problems. IEEE transactions on pattern analysis and machine intelligence (2020)

- (13) Chun, I.Y., Zheng, X., Long, Y., Fessler, J.A.: Bcd-net for low-dose ct reconstruction: Acceleration, convergence, and generalization. In: Medical Image Computing and Computer Assisted Intervention–MICCAI 2019: 22nd International Conference, Shenzhen, China, October 13–17, 2019, Proceedings, Part VI 22, pp. 31–40. Springer (2019)

- (14) Chun, Y., Fessler, J.A.: Deep bcd-net using identical encoding-decoding cnn structures for iterative image recovery. In: 2018 IEEE 13th Image, Video, and Multidimensional Signal Processing Workshop (IVMSP), pp. 1–5. IEEE (2018)

- (15) Ding, C., Zhang, Q., Wang, G., Ye, X., Chen, Y.: Learned alternating minimization algorithm for dual-domain sparse-view ct reconstruction. arXiv preprint arXiv:2306.02644 (2023)

- (16) Do, W.J., Seo, S., Han, Y., Ye, J.C., Choi, S.H., Park, S.H.: Reconstruction of multicontrast mr images through deep learning. Medical physics 47(3), 983–997 (2020)

- (17) Guo, D., Zeng, G., Fu, H., Wang, Z., Yang, Y., Qu, X.: A joint group sparsity-based deep learning for multi-contrast mri reconstruction. Journal of Magnetic Resonance 346, 107354 (2023)

- (18) Guo, P., Mei, Y., Zhou, J., Jiang, S., Patel, V.M.: Reconformer: Accelerated mri reconstruction using recurrent transformer. IEEE transactions on medical imaging (2023)

- (19) Hardt, M.: Understanding alternating minimization for matrix completion. In: 2014 IEEE 55th Annual Symposium on Foundations of Computer Science, pp. 651–660. IEEE (2014)

- (20) He, K., Zhang, X., Ren, S., Sun, J.: Deep residual learning for image recognition. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 770–778 (2016)

- (21) Jain, P., Netrapalli, P., Sanghavi, S.: Low-rank matrix completion using alternating minimization. In: Proceedings of the forty-fifth annual ACM symposium on Theory of computing, pp. 665–674 (2013)

- (22) Kaiming He Xiangyu Zhang, S.R., Sun, J.: Deep residual learning for image recognition. 2016 IEEE Conference on Computer Vision and Pattern Recognition p. 770–778 (2016)

- (23) Kaiming He Xiangyu Zhang, S.R., Sun, J.: Deep residual learning for image recognition. European conference on computer vision pp. 630–645 (2016). DOI 10.1007/978-3-319-46493-0˙38

- (24) Kim, Y., Jung, H., Min, D., Sohn, K.: Deeply aggregated alternating minimization for image restoration. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 6419–6427 (2017)

- (25) Menze, B.H., Jakab, A., Bauer, S., Kalpathy-Cramer, J., Farahani, K., Kirby, J., Burren, Y., Porz, N., Slotboom, J., Wiest, R., et al.: The multimodal brain tumor image segmentation benchmark (brats). IEEE transactions on medical imaging 34(10), 1993–2024 (2014)

- (26) Nesterov, Y., et al.: Lectures on convex optimization, vol. 137. Springer (2018)

- (27) Netrapalli, P., Jain, P., Sanghavi, S.: Phase retrieval using alternating minimization. Advances in Neural Information Processing Systems 26 (2013)

- (28) Palomar, D.P., Eldar, Y.C.: Convex optimization in signal processing and communications. Cambridge university press (2010)

- (29) Peters, S.W., Heath, R.W.: Interference alignment via alternating minimization. In: 2009 IEEE International Conference on Acoustics, Speech and Signal Processing, pp. 2445–2448. IEEE (2009)

- (30) Pock, T., Sabach, S.: Inertial proximal alternating linearized minimization (ipalm) for nonconvex and nonsmooth problems. SIAM Journal on Imaging Sciences 9(4), 1756–1787 (2016). DOI 10.1137/16M1064064

- (31) Polyak, B.T.: Some methods of speeding up the convergence of iteration methods. Ussr computational mathematics and mathematical physics 4(5), 1–17 (1964)

- (32) Sroubek, F., Milanfar, P.: Robust multichannel blind deconvolution via fast alternating minimization. IEEE Transactions on Image processing 21(4), 1687–1700 (2011)

- (33) Sun, J., Li, H., Xu, Z., et al.: Deep admm-net for compressive sensing mri. Advances in neural information processing systems 29 (2016)

- (34) Sun, K., Wang, Q., Shen, D.: Joint cross-attention network with deep modality prior for fast mri reconstruction. IEEE Transactions on Medical Imaging (2023)

- (35) Zhang, Q., Alvandipour, M., Xia, W., Zhang, Y., Ye, X., Chen, Y.: Provably convergent learned inexact descent algorithm for low-dose ct reconstruction (2021). DOI 10.48550/ARXIV.2104.12939. URL https://arxiv.org/abs/2104.12939