A Learnable Counter-condition Analysis Framework for Functional Connectivity-based Neurological Disorder Diagnosis

Abstract

To understand the biological characteristics of neurological disorders with functional connectivity (FC), recent studies have widely utilized deep learning-based models to identify the disease and conducted post-hoc analyses via explainable models to discover disease-related biomarkers. Most existing frameworks consist of three stages, namely, feature selection, feature extraction for classification, and analysis, where each stage is implemented separately. However, if the results at each stage lack reliability, it can cause misdiagnosis and incorrect analysis in afterward stages. In this study, we propose a novel unified framework that systemically integrates diagnoses (i.e., feature selection and feature extraction) and explanations. Notably, we devised an adaptive attention network as a feature selection approach to identify individual-specific disease-related connections. We also propose a functional network relational encoder that summarizes the global topological properties of FC by learning the inter-network relations without pre-defined edges between functional networks. Last but not least, our framework provides a novel explanatory power for neuroscientific interpretation, also termed counter-condition analysis. We simulated the FC that reverses the diagnostic information (i.e., counter-condition FC): converting a normal brain to be abnormal and vice versa. We validated the effectiveness of our framework by using two large resting-state functional magnetic resonance imaging (fMRI) datasets, Autism Brain Imaging Data Exchange (ABIDE) and REST-meta-MDD, and demonstrated that our framework outperforms other competing methods for disease identification. Furthermore, we analyzed the disease-related neurological patterns based on counter-condition analysis.

Resting-state fMRI Adaptive feature selection Transformer Prototype learning Explainable AI

1 Introduction

To comprehend the diverse pathological conditions of disorders such as autism spectrum disorder (ASD) and major depressive disorder (MDD), it is imperative to consider their neurological characteristics. In recent years, numerous functional MRI studies have identified neurological biomarkers associated with diseases. Functional connectivity (FC), temporal correlations of resting-state functional magnetic resonance imaging (rs-fMRI) signals among spatially distant brain regions, has been widely used in the literature [1, 2].

Conventional brain disease studies have used voxel- and connection-wise statistical tests (i.e., independent -test) to compare statistical differences between the patient and healthy control groups and determine the pathological regions or connections [3, 4, 5]. Moreover, these statistical methods have been utilized as feature selection methods to improve the performance of the machine/deep learning classification models [6, 7, 8]. Concordantly, many neuroimaging studies also have used machine learning-based methods such as a least absolute shrinkage and selection operator (LASSO) and its variants for feature selection [9, 10, 11]. These methods have the advantage of eliminating features unrelated to the diagnosis. In general, a support vector machine (SVM) classifier has been implemented with the features chosen in the feature selection.

However, independent implementation of feature selection and classifier may result in a suboptimal problem, which fails to provide the best predictive features for the classification model [12]. To address this concern in brain disease diagnosis, a method called recursive feature elimination (RFE) with SVM has been employed for disease diagnosis [13, 14]. This technique iteratively eliminates a subset of features from the input data until the optimal set of features is obtained based on their importance evaluated by SVM weights. Furthermore, several deep models employ a feature selection using a learnable mask. For instance, a binary mask is thresholded by setting a quarter of its elements to one, and the remaining to zero [15]. Edge-mask learning for GCN models used the ReLU activation function to ensure the elements of the mask are non-negative and implemented regularization on the mask for its sparsity [16]. Despite the end-to-end process, these existing feature selection methods [13, 14, 15, 16] primarily aim at identifying the connections that distinguish patients and normal controls. Accordingly, the features selected through these methods are applied across all patients, which may lead to the loss of individual-specific information.

Although the most discriminative disease-related regions or connections are identified for a general understanding of the disease, elucidating the individual-specific difference in neurological patterns is also advantageous in clinical practice [17]. However, it is challenging to consider the individual differences with group-based feature selection due to the substantial heterogeneity of the disease. This heterogeneity results from distinct neurological mechanisms among individuals and results in varied symptoms, even when diagnosed with the same disorder [18]. To handle the heterogeneity of the disease in the feature selection stage, a few recent studies have implemented a personalized ROI selection to capture individual variability in disease diagnosis. [19] devised a personalized ROI selection method by automatically selecting regions of interest (ROIs) via reinforcement learning (RL). Concretely, classification loss is used as the reward signal of RL for individual ROI selection. Furthermore, [20] developed the ROI-based top- pooling to select salient ROIs for each individual during training. Although personalized feature engineering methods can select features at the ROI level, they may not be able to account for the intricate topological properties of FC at the network level [21].

Along with considering personal traits, encoding FC relations for discriminative feature representations is also essential for diagnosis. Recently, many studies have proposed deep learning methods for discovering the topological features of FC [22, 20, 2]. FC is regarded as a graph structure where nodes and edges represent brain regions and their functional connections, respectively. These approaches demonstrated promising results in predicting behavior or cognitive states during the experiments. For example, BrainNetCNN [22], a convolutional neural network (CNN)-based model, encodes each row of FC with 1D convolution operations. Additionally, graph convolutional network (GCN)-based models have garnered attention for FC-based diagnoses. Most GCN-based models define ROIs as nodes and functional connectivities as edges. Node features usually consist of functional time series of ROI or ROI-based networks (each row of FC). One of the GCN-based models, BrainNetGNN [20], employs FC as a node feature and selects the top 10% partial correlations as edges. By utilizing the ROI-aware graph convolutional layers and ROI pooling layer, BrainNetGNN demonstrates significant prediction performance for both disease diagnosis and task stimuli identification. Although these studies exhibited enhanced efficiency in prediction by considering the topological properties of FC, they are limited in their ability to cover the global context of FC owing to their high local dependencies. Specifically, the row-/column-wise 1D convolution of BrainNetCNN only works on connections within each seed-based network. Meanwhile, graph convolution in GCN-based models works on only pre-defined and strongly linked edges. Therefore, devising a model that explores the complex topological patterns of FC from a global perspective is desired.

Most conventional machine and deep learning classification models have adopted post-hoc explainable models such as a saliency map [23], which calculates the importance of input region/connection from the final output of the trained model. However, these methods have limited efficacy and reliability of the analysis [24, 25]. Specifically, additional modeling must be required after training the diagnostic model. Furthermore, these methods are vulnerable to noise[26], and there is a wide diversity of opinions regarding malfunctioning regions[27, 28]. Therefore, validating the involvement of these regions in disease identification and the extent to which they contribute to disease severity is challenging. To address these issues, an end-to-end framework for both classification and analysis that offers verifiable disease-related regions is desirable.

| ASD | TD | -value | |

| Number of subjects | 405 | 468 | - |

| Sex (Male/Female) | 351/54 | 378/90 | 0.6980 |

| Age (Mean/STD) | 17.047.94 | 16.847.23 | 0.1325 |

| IQ (Mean/STD) | 105.8117.06 | 111.0912.06 | <0.0001 |

| MDD | HC | -value | |

| Number of subjects | 830 | 771 | - |

| Sex (Male/Female) | 306/524 | 316/455 | 0.1014 |

| Age (Mean/STD) | 34.3911.57 | 34.5513.14 | 0.8025 |

| Education (Mean/STD) | 11.943.36 | 13.553.42 | <0.0001 |

1.1 Contributions

In this study, we propose a unified framework that selects personal features at the connection level, summarizes the global context of FC for diagnosis, and provides reliable neuroscientific explanations. The four main contributions of this work can thus be summarized as follows: (1) We propose an adaptive attention network that can re-weigh the importance of connection features. (2) We devised a functional network relational learning method that summarizes inter-network functional connectivity as disease-related features. (3) Our model captures group-level disease-related characteristics, also termed prototypes (such as patient and normal control prototypes), and utilizes them for classification and elucidation. (4) Unlike the commonly adopted post-hoc analysis, our model is inherently interpretable. The explanation from our model starts with the question,“Where and how much FC should be changed?.” Notably, our model simulates a counter-condition of FC, i.e., alterations when a healthy individual is affected by the disease or the conditions under which patients with the disease may not be affected by the disease. We termed this approach counter-condition analysis.

A preliminary version of this work [29] was presented at the 2022 International Conference on Medical Image Computing and Computer-Assisted Interventions. This study extends the preliminary version with four main improvements: (1) designing novel adaptive feature selection networks, (2) devising an additional training step and a novel regularization term for a counter-condition explanation, (3) providing comprehensive qualitative and quantitative counter-condition analysis, and (4) demonstrating the applicability of the framework by validating it on an additional dataset, REST-meta-MDD.

2 Dataset and preprocessing

We used rs-fMRI data from two publicly available datasets; Autism Imaging Data Exchange (ABIDE) I and the REST-meta-MDD[30]. ABIDE I dataset provides image-based data of 1,112 individuals from 17 international organizations. We excluded individuals with no files, those who had all zero values in specific ROIs, and those who failed the quality-control test conducted by three experts. Eventually, we used data from 873 individuals, which included 405 ASD patients and 468 individuals with typical development (TD). REST-meta-MDD dataset provides image-based data of 2,428 individuals from 17 hospitals in China. We removed the data by following the standard quality control procedures outlined in [31]. We also excluded data from Site 4, as it was found to be a duplicate of Site 14. Thereby, we used 1,601 individuals, which included 830 MDD patients and 771 healthy control participants. The demographic details of participants from the ABIDE and REST-meta-MDD studies are provided in the Table 1.

In our study, both datasets were preprocessed with a Data Processing Assistant for Resting-State fMRI (DPARSF)111Scan procedure and protocols for ABIDE can be found at http://fcon_1000.projects.nitrc.org/indi/abide/. 222Scan procedure and protocols for REST-meta-MDD can be found at http://rfmri.org/REST-meta-MDD without global signal regression. We also applied ComBat harmonization [32] to control the site-related effects. The brain was parcellated into 200 ROIs using Craddock (CC) atlas [33], a functionally-defined parcellation approach. For both datasets, we calculated Pearson’s correlation coefficients to estimate FC using parcellated time-series fMRI data. Additionally, we initialized the diagonal elements in the FC matrix to zero.

3 Proposed Method

| Notation | Description |

|---|---|

| X | Input FC |

| M | Mask |

| Masked FC | |

| Reconstructed FC | |

| Simulated FC of counter-condition | |

| Prototype-guided (simulated) FC | |

| The output of -th functional network relation encoder | |

| The summary feature | |

| Averaged seed-based network features | |

| Prototype features | |

| c | The class index (i.e., patient and control) |

3.1 Architecture overview

Our framework comprises two learning steps and four main components, as illustrated in Fig. 1. The first step plays a major role in classification, whereas the second step aims to generate simulated FC. Our proposed model comprises an adaptive attention network, functional network relation encoder, prototype-based classifier, and decoder. The adaptive attention network emphasizes the significant connections of diagnosis that are fitted to each individual. Subsequently, the functional network relation encoder learns both local and global representations, that is, intra- and inter-network relations. Given the summary feature containing class-relevant information, the prototype-based classifier calculates the similarity between group-wise (i.e., ASD and TD, MDD and HC) prototypes for diagnosis. Meanwhile, the decoder encourages learning expressive features by reconstruction as well as generates FC in the opposite condition.

3.2 Adaptive Attention Network

Inspired by the scaling attention approach [34], we propose an adaptive attention network to reduce the influence of redundant and insignificant connections on classification. Our adaptive attention network generates different attention masks conditioned on the functional connections of each individual. The attention mask reweights the connections according to the classification task on account of the end-to-end framework.

Given FC , where is the number of ROIs, we vectorize the upper triangular parts of the FC without diagonal elements, . Subsequently, the adaptive attention network encodes functional connections into attention masks m that are the same size as the input, as follows,

| (1) | ||||

where the adaptive attention network has weight and bias parameters , and two activation functions, ReLU and Gumbel-sigmoid. Notably, we used the Gumbel-sigmoid function [35] in the last layer to approximate the sampling process of discrete data. Thus, a subset of significant connections can be obtained. While the sigmoid function outputs values between zero and one, the Gumbel-sigmoid function maps inputs to either zero or one. Using the temperature , we can relax this discrete property to be more deterministic. Unlike the feature selection methods conducted prior to training, our network can choose disease-related connections in a unified framework.

After reshaping to the upper and lower triangular parts of the FC matrix M, we masked the original FC with attention mask M by element-wise multiplication, as follows,

| (2) |

High masking scores maintained existing FC values, whereas low masking scores weakened the values to near zero. In other words, the mask emphasizes class-relevant connections by paying less attention to insignificant and redundant connections. Finally, the scaled FC with the attention mask represents the connection that requires more focus for accurate diagnosis. Unlike the feature selection methods that apply to all individuals equally [36, 37, 38], M varies with the functional properties of individuals, such that each individual has different subsets of attentive features.

3.3 Functional Network Relation Encoder

Our functional network relation encoder is designed to capture both local and global contexts of FC, motivated by [39]. Each row in the FC matrix is termed a seed-based (ROI-based) network, which captures the temporal correlations between a designated seed ROI and other ROIs. Consequently, FC can be considered a set of seed-based networks, consisting of number of seed-based networks. From the perspective of seed-based networks, intra-network relational learning focuses on understanding the relationships within each seed-based network. On the other hand, to learn interactions between seed-based networks is referred to as inter-network relational learning. While intra-network relational learning concentrates on the local aspects of FC, inter-network relational learning encompasses the broader and global aspects of FC.

3.3.1 Intra-network Relational Learning

Given the masked FC as a sequence of seed-based networks, each row of , seed-based network, represents the temporal correlations of -th ROI and the other ROIs (i.e., intra-network). First, we embed the intra-network relations of each masked seed-based network, as follows,

| Z | (3) | |||

where multi-layer perceptron (MLP) layers include two feed-forward layers and a GELU activation function. The MLP is shared across seed-based network features. Layer normalization (LN) [40] is applied to each seed-based network feature before the MLP layers. The Intra-network-encoder is designed such that its output preserves the dimensionality of the input, resulting in .

3.3.2 Inter-network Relational Learning

Before learning inter-network relations between seed-based networks, we first included a learnable vector to the set of seed-based network features, Z. Furthermore, a sinusoidal positional encoding matrix, , was added for relative regional information. Therefore, the inter-network encoder accepts as the input,

| (4) |

The inter-network encoder learns interactions between seed-based networks via multi-head self-attention (MHSA) [41]. Particularly, the self-attention (SA) mechanism is essential for learning inter-network relations:

| (5) |

where , and are referred to as the query, key, and value, respectively. The query, key, and value represent the linear transformation of input with learnable weight parameters , and , thus, , and with bias, respectively. Specifically, the dot product of Q and K measures the similarity between seed-based networks, and the softmax function normalizes these similarities. By multiplying V, the output of self-attention is proportional to the inter-network similarity score. With this module, our proposed network can encode the relations of network pairs to represent an inter-network graph.

Rather than a single SA for single inter-network relations, we jointly encode multiple representations with a multi-head SA to learn various relational patterns,

| (6) | ||||

where is the number of heads. Each single-head SA is calculated by its respective , and . Subsequently, the outputs of the single-head SA are aggregated and linearly transformed by . Therefore, the inter-network encoder is defined as follows:

| (7) |

3.3.3 Functional Network Relation Encoder

Taken together, each encoder block consists of two types of encoders, intra-network encoder and inter-network encoder, as follows,

| (8) |

| (9) |

where represents the number of blocks. The residual connections are implemented after the Inter-Network-Encoder and Intra-Network-Encoder. As the summary vector is employed for classification, it captures diagnostic information by iteratively learning the local and global patterns of FC.

3.4 Prototype-based Classifier

For classification, the similarity is calculated between the summary feature vector in the last layer and learnable class prototypes , where . The prototypes are representative vectors of each class, which are the centroids of the summary features of each class. These were randomly initialized and trained using prototype learning [42]. The probability X is derived as follows:

| (10) |

where represents a class index. We used cosine similarity for the similarity measure and adopted cross-entropy for the classification loss .

The label of the individual summary feature vector is predicted to be the prototype(s) index with the highest similarity. More explicitly, an input instance showing high similarity to the patient prototype is identified as a patient. Simultaneously, the prototypes are trained to be class-representative by increasing their similarity with the assigned summary feature vector. In scenarios with more than two classes, the number of prototypes augments in proportion to the number of classes. Consequently, this prototype-based classifier enables the extension of binary classification to multi-class classification.

| ABIDE | REST-meta-MDD | |||||||

| Methods | AUC | ACC (%) | SEN (%) | SPC (%) | AUC | ACC (%) | SEN (%) | SPC (%) |

| BrainCNN [22] | 0.65360.02* | 61.020.01* | 60.370.03* | 61.590.03* | 0.71290.01* | 65.710.50* | 65.011.63* | 66.461.45* |

| BrainCNN-P [23] | 0.66680.06* | 62.210.08* | 62.451.76* | 62.002.39* | 0.70110.02* | 64.392.38* | 65.044.35* | 63.706.23* |

| GCN [43] | 0.64250.01* | 64.321.12* | 63.221.65* | 65.281.99* | 0.63720.01* | 63.850.86* | 65.381.17* | 62.052.15* |

| GAT [44] | 0.65680.03* | 65.632.56* | 66.453.40 | 64.922.93* | 0.68080.02* | 68.101.40* | 68.211.94 | 67.944.17* |

| GraphSage [45] | 0.64060.01* | 64.111.12* | 63.511.79* | 64.613.18* | 0.65570.02* | 65.571.74* | 65.653.62* | 65.483.12* |

| STCAL [46] | 0.69180.01 | 63.970.80* | 61.442.47* | 65.011.90* | 0.73310.01 | 66.980.70* | 70.241.22 | 63.321.53* |

| STAGIN (GARO) [47] | 0.58510.01* | 55.660.12* | 58.200.38* | 55.930.11* | 0.70960.01* | 66.560.34* | 68.032.21* | 64.573.05* |

| STAGIN (SERO) [47] | 0.60460.01* | 58.810.18* | 57.754.21* | 60.003.96* | 0.69040.01* | 65.290.90* | 64.210.69* | 65.502.00* |

| -test + SVM | 0.68750.01* | 63.931.13* | 60.192.01* | 67.162.05* | 0.67890.01* | 63.150.87* | 65.011.55* | 61.151.95* |

| LASSO + SVM [48] | 0.68160.01* | 64.072.09* | 60.532.91* | 67.051.69* | 0.73540.01 | 67.720.95* | 69.350.67 | 66.021.77* |

| RFE-SVM [49] | 0.68120.10* | 63.951.58* | 59.661.60* | 66.112.65* | 0.72960.01* | 67.810.80* | 70.011.31 | 65.501.57* |

| BrainGNN [20] | 0.61130.13* | 59.971.80* | 54.654.03* | 64.515.71* | 0.61510.01* | 60.360.65* | 62.244.99* | 56.176.32* |

| t-test + P | 0.65790.01* | 63.670.09* | 61.913.29* | 65.202.77* | 0.67360.01* | 65.110.40* | 63.011.89* | 67.192.58* |

| LASSO + P [48] | 0.65390.01* | 63.440.08* | 63.253.80* | 63.553.99* | 0.67290.01* | 65.150.38* | 65.241.21* | 64.771.01* |

| Binary Mask + P [15] | 0.64290.01* | 61.880.58* | 61.942.74* | 61.821.58* | 0.66840.01* | 64.000.44* | 65.894.16* | 61.933.42* |

| Edge Mask + P [16] | 0.64300.01* | 62.570.52* | 60.192.82* | 64.602.54* | 0.67060.01* | 63.360.28* | 63.161.04* | 63.421.17* |

| Preliminary [29] | 0.68690.01* | 65.001.34* | 63.053.61* | 66.613.39* | 0.70530.01* | 68.210.72* | 68.951.47 | 67.082.72* |

| P w/o M | 0.65650.01* | 63.461.98* | 62.824.16* | 65.421.15* | 0.68740.01* | 66.421.23* | 68.001.56* | 65.580.94* |

| P w/o intra-network | 0.69620.02 | 64.121.97* | 62.295.58* | 69.502.55 | 0.71450.01* | 67.800.16* | 69.032.86 | 65.244.11* |

| P w/o prototype | 0.66820.01* | 62.910.94* | 64.022.35 | 61.832.52* | 0.73000.01* | 67.720.98* | 69.340.71 | 62.641.86* |

| Proposed | 0.71500.01 | 67.910.91 | 65.453.93 | 70.413.32 | 0.75620.01 | 70.190.83 | 69.702.69 | 70.682.11 |

3.5 Decoder

We introduced three decoding approaches using the same decoder by varying the input combinations. The decoder was trained by reconstruction (Eq. 11 and Eq. 12) in Step 1 and counter-condition learning (Eq. 10 and Eq. 13) in Step 2. The decoder in Eq. 14 is not involved in training but is used for counter-condition analysis.

3.5.1 Decoder for Reconstruction

We reconstruct the FC in Step 1 as follows,

| (11) |

where is the reconstructed FC and the feature is the average of number of seed-based networks embeddings, , for each instance. The decoder is defined by two fully connected layers with a nonlinear activation function. Considering that the summary feature is utilized for classification, it involves information that distinguishes between patients and normal controls, thereby regarded as a group-level representation. On the other hand, the averaged feature is an embedding of FC, which reflects individual-level characteristics. We utilized the loss for reconstruction:

| (12) |

We reconstructed instead of X only to focus on the connections selected in the adaptive attention network to better fit the prototype-based classifier.

3.5.2 Decoder for Counter-condition Learning

To allow the decoder to generate a counter-condition FC in Step 2, we first replaced the summary feature vector with that of a sample in the other class. Specifically, we shuffled the summary features in each mini-batch to replace a subject’s class information with the opposite class information of others. Consequently, the decoder can generate a masked FC of the opposite class,

| (13) |

where Shuffle denotes a permutation function. Subsequently, the simulated FC was passed through the functional network relational encoder and the prototype-based classifier. Due to the changes in the class attributes, referred to as the counter-condition, it should be classified as belonging to the opposite class in the classification task. To differentiate the classification that utilizes the original FC, the classification with simulated counter-condition FC is termed counter-condition classification.

3.5.3 Decoder for Counter-condition Analysis

Similar to the shuffling of the summary feature vector, we can also substitute the summary feature vector of an individual into a representative prototype vector. Subsequently, we decoded seed-based network features of an individual with the group prototype to produce prototype-guided FC (pFC), , as follows,

| (14) |

where represents a class index, patient or normal control. To maintain the same influence of the summary feature vector, we normalized to the same magnitude as the original summary features.

Consequently, the pFC maintains individual characteristics inherent to the averaged seed-based network features, whereas individual class-related characteristics are replaced with group-representative characteristics. Notably, replacing the summary feature vector of a healthy individual with the patient prototype can help predict the functional degradation of the FC, assuming that the individual is affected by the disease. Meanwhile, for patients with the healthy prototype , we can identify the distinguishing variations of FC as if the individuals follow a normal development or cognitive process. Similarly to , pFC that changes in the class attributes is categorized under counter-condition FC. Our proposed method can simulate counter-condition FC for each individual, which is beneficial for obtaining deeper insights into the functional characteristics of the brain with the disease.

3.5.4 Regularization

Simultaneously, we specifically reduce the influence of seed-based network features as follows,

| (15) |

By minimizing the absolute value of the similarity between and prototypes , seed-based network features become less informed of class characteristics. Without regularization, the residual class information in seed-based network features negatively affects the generation of the FC of the opposite class.

3.6 Overall Objective Function and Training Strategy

Our framework has two steps: Step 1 for classification and reconstruction and Step 2 for counter-condition FC generation. The objective function of each step comprises different losses,

| (16) |

| (17) |

where represents the hyperparameter that controls the balance of each loss. We control the balance between the reconstruction loss and the classification loss in to generate FC that contains information on the counter-condition with while maintaining a subject’s own functional configuration with to avoid losing reconstruction capacity.

Step 1 and Step 2 are trained by the turns of the epoch. The alternation of these two steps in the training process enables disease identification and counter-condition FC generation for analysis in a unified framework. In Step 1, all modules, i.e., adaptive attention network, functional network relational encoder, decoder, and prototype classifier, are trained. However, in Step 2, only the decoder is trained while the other networks are fixed. In this step, the classification loss of is minimized to guide the decoder to generate a counter-condition FC.

4 Results

4.1 Comparative Methods

We demonstrate the efficacy and validity of our proposed method in two aspects: functional network relational learning and feature selection. From the perspective of functional network relational learning, we chose BrainNetCNN (BrainCNN) [22], designed to learn network features with a 1D convolution operation, for comparison. As our model uses a prototype-based classifier, we added a prototype-based BrainNetCNN that predicts the label with prototypes (BrainCNN-P) [23]. We also compared our method with the representative GNN models: GCN [43], GAT [44], GraphSage [45], and BrainGNN [20]. As our functional network relational learning is grounded in Transformer architecture, we compared our model with other Transformer-based models: spatio-temporal attention graph isomorphism network (STAGIN) [47] and spatial-temporal co-attention learning (STCAL) [46].

We additionally adopted three traditional feature selection methods: an independent -test for each connection as a univariate feature selection, a LASSO [48] and a RFE [49] as a multivariate feature selection. These are group-level feature selections in which all samples have the same selected features. We selected significant functional connections via a -test or LASSO and conducted a SVM classifier, a conventional diagnostic framework in neuroimaging. Meanwhile, RFE-SVM recursively ranked the features based on the SVM weights and removed the least informative and redundant features until only the desired number of features remains. Furthermore, we replaced an adaptive attention network with these traditional feature selection methods and trained the functional network relational encoder, decoder, and prototype-based classifier (-test+P and LASSO+P in Table 3). The connections selected via the -test and LASSO are consistent across all individuals, in contrast to our model which selects connections on an individual basis. Consequently, we engaged in a comparison between group-level and individual-level feature selection.

Furthermore, we conducted a comparison between two deep learning-based feature selection methods and our adaptive attention mask: the binary mask method [15] and the edge mask [16]. It is worth noting that these masks are trainable within an end-to-end learning framework. For contrasting ROI-level and network-level feature selection, we selected BrainGNN [20], a notable GNN model, as a comparative model. While our model operates by selecting connections at the individual level, BrainGNN is especially adept at ROI selection at this level, facilitated by its pooling layer. We also included our prior model [29] published in MICCAI (Preliminary), for comparison.

4.2 Experimental Settings

We used the same hyperparameters for both datasets. Our adaptive attention network used two fully connected layers with hidden units . The value of the Gumbel-sigmoid was set to 5.0 for both datasets. Functional network relational learning comprises a two-block (=2) Transformer encoder, where the number of heads was 10 (=10) and the hidden feature dimension of the encoder was 128. The output feature dimension of the encoder was as same as the number of ROIs (=). The decoder was composed of two fully connected layers with hidden sizes of . The cross-entropy loss with a softmax temperature of 0.5 is used for disease identification. All modules were optimized using the AdamW optimizer [50]. The learning rate of Step 1 was 0.0005. In Step 2, only the decoder was trained with a learning rate of 0.0001. The batch size was 32 in all steps. To avoid losing the reconstruction ability of the decoder, the and in Step 2 were set to 1.0 and 0.1, respectively. To avoid overfitting, we applied regularization of 0.0001 and dropout with a drop rate of 0.5.

For competing methods, we followed the same hyperparameter setting as reported in the original manuscript for BrainCNN, BrainCNN-P, and BrainGNN. For GAT and Graphsage, where no fMRI data were used in the original manuscript but used as a comparative method in BrainGNN, the same hyperparameter settings in BrainGNN, except for the learning rate, were used. In addition to the original learning rate setting, we also identified the optimal learning rate among by tuning the validation set. We selected connections with the -value of less than 0.05 as a feature selection method, whereas the for LASSO was selected from . The parameter for SVM and of sparsity term in Edge Mask were chosen from . For RFE-SVM, we predefined the number of features following the previous research [13] for ABIDE and [14] for REST-meta-MDD. We conducted five-fold cross-validation and repeated it five times for the proposed and all competing methods.

4.3 Performance Comparison

| ABIDE | ||||

|---|---|---|---|---|

| AUC | ACC (%) | SEN (%) | SPC (%) | |

| w/o Step 2 | 0.68760.06 | 59.6910.20 | 74.4918.80 | 44.876.81 |

| w/o | 0.90090.06 | 85.0411.73 | 90.166.55 | 82.9916.89 |

| Proposed | 0.94540.06 | 92.847.27 | 88.1911.00 | 96.144.33 |

| REST-meta-MDD | ||||

| AUC | ACC (%) | SEN (%) | SPC (%) | |

| w/o Step 2 | 0.68690.05 | 61.254.56 | 72.729.22 | 49.7812.78 |

| w/o | 0.93130.03 | 91.316.15 | 92.166.71 | 93.133.85 |

| Proposed | 0.97070.02 | 94.611.77 | 98.511.90 | 90.662.67 |

The results are summarized in Table 3. We reported the mean and standard deviation of the classification performance using four metrics: area under the receiver operating characteristic curve (AUC), accuracy (ACC), sensitivity (SEN), and specificity (SPC). Our method achieved the best ACC of 67.91% and a high AUC of 0.7150, SEN of 65.45%, and SPC of 70.41% on the ABIDE dataset. Additionally, our method obtained the best ACC of 70.19%, AUC of 0.7562, and SPC of 70.68% on the REST-meta-MDD dataset. In addition, we performed the Wilcoxon signed-rank test (0.05) to validate the superiority of our method.

Our model achieved significant improvements on two separate datasets in AUC compared with that using the graph-based models. This can be attributed to two factors. First, our model covers the entire brain relations () owing to the self-attention mechanism, compared with that in BrainCNN and BrainCNN-P, which share the weights of 1 convolution for all seed-based networks. Our model can capture subtle and globally distributed information in the FC. Second, compared with GNN-based models that predefine edges by thresholding (such as the top 10% positive or absolute values of FC) prior to feature extraction, our model selects connections using adaptive attention networks within a unified framework. In addition, our functional network relational encoder learns various network relations via a multi-head self-attention operation.

We also performed evaluations in comparison to Transformer-based models, such as STAGIN and STCAL. These methods showed degraded classification performance in ACC and SPC, especially when applied to the ABIDE dataset. Despite their ability to capture both intra-network and inter-network relations of FC, their use of all connections without feature selection could result in a decline in model performance, as it could learn irrelevant or noisy patterns.

The results of our study demonstrate that our proposed method outperforms competing methods that use conventional feature selection (such as -test, LASSO, and RFE-SVM) and optimizable masks (such as Binary Mask and Edge Mask) on both datasets. These findings suggest that our end-to-end approach with personalized feature selection is more effective than group-wise feature selection conducted independently of the classifier. Despite a unified framework for selecting task-relevant ROIs, BrainGNN performed poorly when using rs-fMRI. This finding indicates that the connection-level feature selection approach outperforms the ROI-level feature selection approach.

4.4 Counter-condition Classification

To evaluate the simulated counter-condition FC, we conducted counter-condition classification. The performance of the counter-condition classification is estimated in Table 4. As mentioned in Sec. 3.5.3, we replaced the summary feature with the prototype of the counter-condition and reconstructed the pFC, FC with class information of the opposite condition, denoted as , where represents a class index. Using the pFC as an input, we identified the disease using a trained prototype-based classifier. The label is assigned the same index as that of the prototypes. Only correct test samples in classification were considered in the counter-condition classification.

Counterintuitively, in counter-condition classification, our proposed method exhibited high performance in all metrics. Notably, the counter-condition classification with pFC is not implemented in training phase. As our summary feature is trained by maximizing the similarity between the class prototypes and Step 2 where the decoder is conditioned to produce a counter-condition FC using others’ summary features from the opposite class, these underlying mechanisms contribute to the performance of our method in counter-condition classification.

4.5 Ablation Studies

We investigated which component had an impact on performance. We evaluated the classification performance by conducting ablation studies on model components and training steps. As shown in Table 3, our model without the prototype-based classifier (w/o prototype) and adaptive attention network (w/o M) exhibit degraded performance in all metrics by a large margin. Meanwhile, the performance of our model without intra-network relation encoding is less degraded. Inter-network relational learning appears to play a more critical role in encoding than intra-network learning. Step 2 is designed for counter-condition FC generation, therefore, it has less impact on classification.

As shown in Table 4, we compared the diagnostic performance depending on the factors that affect counter-condition classification. Our method demonstrated high classification performance in counter-condition classification. However, there was a decline in AUC and ACC when training without Step 2 and on both datasets. Specifically, there was a sharp decline in all metrics when training without Step 2. This indicates that the guidance provided by Step 2 is crucial for the successful generation of the simulated FC in the counter-condition. Without applying regularization, the SPC is sharply degraded on ABIDE dataset and there is an increase in the standard deviation of all metrics on the MDD datasets. This illustrates the influence of the residual class information in seed-based network features on the generation of counter-condition FC.

| ABIDE | |||

| Craddock | Corresponding ROIs in AAL | ASD DC | TD DC |

| ROI 52* | Cerebellum 9.L | 0.356 | 0.488 |

| ROI 58* | Precuneus.L/R, Vermis 4,5 | 0.470 | 0.400 |

| ROI 78* | Temporal Pole Mid/Sup.L | 0.310 | 0.510 |

| ROI 149* | Frontal Sup Medial.L/R | 0.467 | 0.672 |

| ROI 185* | Rolandic Oper.R, Temporal Sup.R | 0.382 | 0.555 |

| REST-meta-MDD | |||

| Craddock | Corresponding ROIs in AAL | MDD DC | HC DC |

| ROI 10* | Cerebellum Crus1/6.R | 0.188 | 0.290 |

| ROI 41* | Cerebellum 6.R, Cerebellum 4,5.R | 0.139 | 0.297 |

| ROI 72* | Temporal Mid.L | 0.158 | 0.262 |

| ROI 73* | Precentral.L, Frontal Mid.L | 0.132 | 0.247 |

| ROI 140* | Temporal Mid.R, Temporal Inf.R | 0.123 | 0.180 |

5 Neuroscientific Analysis

5.1 Feature Selection Analysis

To demonstrate that our adaptive attention network generates an individual-specific mask M, we visualized the mean and standard deviation (STD) of the mask, as shown in Fig. 2. The average mask shows the connections that are attentive or irrelevant to the diagnosis. A higher average value indicates that the connection is more attentive. Meanwhile, the STD of the mask represents the connections that are consistently attentive or irrelevant across individuals. Connections with high average and low STD may be considered primary biomarkers associated with the disease. In contrast, connections with high average and high STD can be considered biomarkers associated with the individual characteristics of the disease. This selection of connections can provide valuable insights into the underlying mechanisms of the disease and facilitate the identification of potential biomarkers. It is worth mentioning that our method inherently provides explanations at the connection-level, elaborating on the disruptions to functional network properties in the analysis of brain disorders [21].

We further analyzed these changes of connections at the ROI-level as well. To figure out disease-related ROIs, we calculated the degree centrality of selected connections, which is one of the graph-theoretic concepts required for analyzing the brain connectivity architecture in fMRI studies [51]. Degree centrality in the context of FC provides a way to understand the importance of brain regions as hubs that are highly connected to other regions within the overall network. Subsequently, we performed a group t-test with a significance level of 0.05. We then identified the top five disease-related ROIs that exhibited the highest degree centrality among those that showed statistically significant differences in Table 5.

5.2 Counter-condition Analysis

For quantitative analysis, we considered all individuals in the training, validation, and test datasets and filtered the correctly predicted subjects in both conventional disease and counter-condition classification. For the counter-condition explanations, we assumed two scenarios: (1) What if the patient becomes normal ()? (2) What if a healthy is diagnosed with disease ()? As explained in Sec. 3.5.3, our decoder generates FC from seed-based network features and prototypes to simulate the mechanisms by which functional connections are changed depending on the prototypes of the counter-condition. To calculate the amount of change, we subtracted the counter-condition FC from the reconstructed FC with its own class information. Then, we excluded the extreme 1% connections (i.e., 199 connections for Craddock 200 atlas based on the absolute values for each individual. As a general understanding of the disease, the average changes in functional connectivity are displayed in Fig. 3. The connection patterns of ASD-simulated TD and TD-simulated ASD show opposite tendencies to each other. This suggests that to change from a patient to a healthy state, or vice versa, the disease-related connections should be modified in reverse. Additionally, we calculated and added the actual average differences between patients and healthy controls. The patterns between ASD-TD and ASD-simulated TD are alike, indicating that the simulated FC properly represents the functional connectivity of the opposite condition. In line with previous research [52, 53], we also identified patterns of both functional hypo- and hyper-connectivity in individuals with ASD. To deal with differences in neuropathological abnormalities, we focused on the patients and their counter-condition FC for afterwards analyses.

5.3 Subtype Analysis

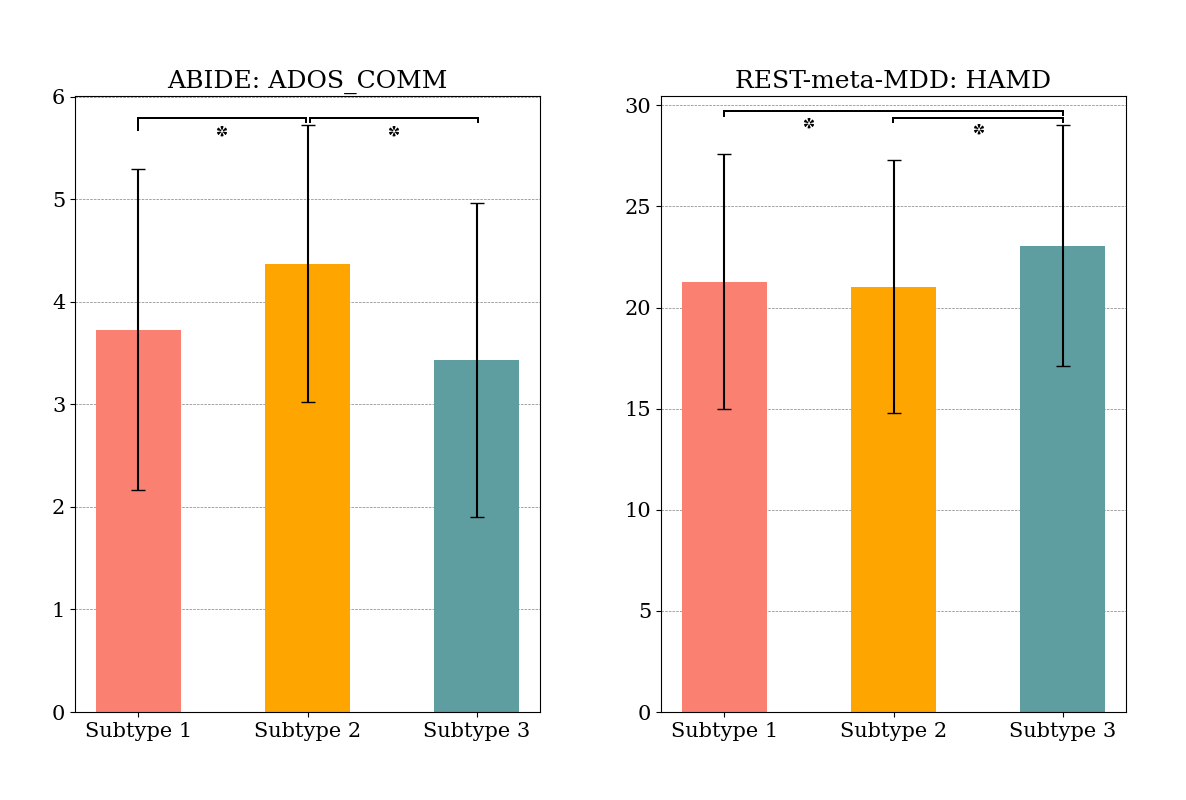

To deal with the diversity of malfunctioning connections, we conducted a clustering analysis of the changes in functional activities in ASD and MDD patients, i.e., differences between patients’ FC and their counter-condition FC. We categorized the patients into subtypes using a Ward linkage-based hierarchical clustering method, widely used in neuroimaging studies [19]. According to previous research that suggested 25 subtypes of disease [27, 54], we empirically set the number of clusters as three.

To analyze neuroscientific differences among subtypes, we found subtype-related ROIs with these functional changes. First, we calculated the degree centrality of selected connections, which is one of the graph-theoretic concepts required for analyzing the brain connectivity architecture in fMRI studies [51, 55]. Then, we conducted ANOVA (0.05) with Bonferroni correction. We reported subtype-related ROIs in Fig. 4.

As depicted in Fig. 5, we also observed that the ADOS communication (COMM) score that measures patients’ verbal and non-verbal communication skills was significantly related (0.05) with subtypes. Specifically, patients belonging to Subtype 2 exhibited higher communication scores compared to those in the other subtypes. For MDD patients, we utilized the Hamilton Depression Rating Scale (HAMD) and found that the three identified subgroups exhibited different average HAMD scores. Subtype 3 shows the highest-averaged score.

6 Conclusion

In this study, we proposed a unified framework that integrates diagnosis and neuroscientific explanation. Our framework has three main advantages: no pre-selected features by the use of adaptive attention networks, no pre-defined ROI relations through the utilization of functional network relation encoder, and no additional training for the explanation thanks to counter-condition learning. In our experiments on the ABIDE and REST-meta-MDD datasets, the proposed method outperformed competing methods. We believe that our counter-condition analysis could facilitate precise diagnoses and effective treatments for the patients.

References

- [1] T.-E. Kam, H. Zhang, Z. Jiao, and D. Shen, “Deep learning of static and dynamic brain functional networks for early MCI detection,” IEEE Transactions on Medical Imaging, vol. 39, no. 2, pp. 478–487, 2019.

- [2] Y. Li, J. Liu, Y. Jiang, Y. Liu, and B. Lei, “Virtual adversarial training-based deep feature aggregation network from dynamic effective connectivity for mci identification,” IEEE Transactions on Medical Imaging, vol. 41, no. 1, pp. 237–251, 2021.

- [3] S.-J. Hong, R. Vos de Wael, R. A. Bethlehem, S. Lariviere, C. Paquola, S. L. Valk, M. P. Milham, A. Di Martino, D. S. Margulies, J. Smallwood et al., “Atypical functional connectome hierarchy in autism,” Nature Communications, vol. 10, no. 1, pp. 1–13, 2019.

- [4] J. Ciarrusta, R. Dimitrova, D. Batalle, J. O’Muircheartaigh, L. Cordero-Grande, A. Price, E. Hughes, J. Kangas, E. Perry, A. Javed et al., “Emerging functional connectivity differences in newborn infants vulnerable to autism spectrum disorders,” Translational Psychiatry, vol. 10, no. 1, pp. 1–10, 2020.

- [5] A. Nair, M. Jolliffe, Y. S. S. Lograsso, and C. E. Bearden, “A review of default mode network connectivity and its association with social cognition in adolescents with autism spectrum disorder and early-onset psychosis,” Frontiers in Psychiatry, vol. 11, p. 614, 2020.

- [6] C.-M. Chen, P. Yang, M.-T. Wu, T.-C. Chuang, and T.-Y. Huang, “Deriving and validating biomarkers associated with autism spectrum disorders from a large-scale resting-state database,” Scientific Reports, vol. 9, no. 1, pp. 1–10, 2019.

- [7] X. Xu, W. Li, J. Mei, M. Tao, X. Wang, Q. Zhao, X. Liang, W. Wu, D. Ding, and P. Wang, “Feature selection and combination of information in the functional brain connectome for discrimination of mild cognitive impairment and analyses of altered brain patterns,” Frontiers in Aging Neuroscience, vol. 12, p. 28, 2020.

- [8] H. S. Nogay and H. Adeli, “Machine learning (ML) for the diagnosis of autism spectrum disorder (ASD) using brain imaging,” Reviews in the Neurosciences, vol. 31, no. 8, pp. 825–841, 2020.

- [9] P. Zille, V. D. Calhoun, J. M. Stephen, T. W. Wilson, and Y.-P. Wang, “Fused estimation of sparse connectivity patterns from rest fMRI—application to comparison of children and adult brains,” IEEE Transactions on Medical Imaging, vol. 37, no. 10, pp. 2165–2175, 2017.

- [10] Y. Li, J. Liu, X. Gao, B. Jie, M. Kim, P.-T. Yap, C.-Y. Wee, and D. Shen, “Multimodal hyper-connectivity of functional networks using functionally-weighted LASSO for MCI classification,” Medical Image Analysis, vol. 52, pp. 80–96, 2019.

- [11] Y. Li, J. Liu, Z. Tang, and B. Lei, “Deep spatial-temporal feature fusion from adaptive dynamic functional connectivity for MCI identification,” IEEE Transactions on Medical Imaging, vol. 39, no. 9, pp. 2818–2830, 2020.

- [12] N. Pudjihartono, T. Fadason, A. W. Kempa-Liehr, and J. M. O’Sullivan, “A review of feature selection methods for machine learning-based disease risk prediction,” Frontiers in Bioinformatics, vol. 2, p. 927312, 2022.

- [13] C. Wang, Z. Xiao, and J. Wu, “Functional connectivity-based classification of autism and control using SVM-RFECV on rs-fMRI data,” Physica Medica, vol. 65, pp. 99–105, 2019.

- [14] P. Dai, T. Xiong, X. Zhou, Y. Ou, Y. Li, X. Kui, Z. Chen, B. Zou, W. Li, Z. Huang et al., “The alterations of brain functional connectivity networks in major depressive disorder detected by machine learning through multisite rs-fMRI data,” Behavioural Brain Research, vol. 435, p. 114058, 2022.

- [15] H. Wen, J. Shi, Y. Zhang, K.-H. Lu, J. Cao, and Z. Liu, “Neural encoding and decoding with deep learning for dynamic natural vision,” Cerebral Cortex, vol. 28, no. 12, pp. 4136–4160, 2018.

- [16] G. Qu, L. Xiao, W. Hu, J. Wang, K. Zhang, V. D. Calhoun, and Y.-P. Wang, “Ensemble manifold regularized multi-modal graph convolutional network for cognitive ability prediction,” IEEE Transactions on Biomedical Engineering, vol. 68, no. 12, pp. 3564–3573, 2021.

- [17] S. Mueller, D. Wang, M. D. Fox, B. T. Yeo, J. Sepulcre, M. R. Sabuncu, R. Shafee, J. Lu, and H. Liu, “Individual variability in functional connectivity architecture of the human brain,” Neuron, vol. 77, no. 3, pp. 586–595, 2013.

- [18] E. Feczko, O. Miranda-Dominguez, M. Marr, A. M. Graham, J. T. Nigg, and D. A. Fair, “The heterogeneity problem: approaches to identify psychiatric subtypes,” Trends in cognitive sciences, vol. 23, no. 7, pp. 584–601, 2019.

- [19] J. Lee, W. Ko, E. Kang, H.-I. Suk, A. D. N. Initiative et al., “A unified framework for personalized regions selection and functional relation modeling for early MCI identification,” NeuroImage, vol. 236, p. 118048, 2021.

- [20] X. Li, Y. Zhou, N. Dvornek, M. Zhang, S. Gao, J. Zhuang, D. Scheinost, L. H. Staib, P. Ventola, and J. S. Duncan, “BrainGNN: Interpretable brain graph neural network for fMRI analysis,” Medical Image Analysis, vol. 74, p. 102233, 2021.

- [21] M. P. van den Heuvel and O. Sporns, “A cross-disorder connectome landscape of brain dysconnectivity,” Nature Reviews Neuroscience, vol. 20, no. 7, pp. 435–446, 2019.

- [22] J. Kawahara, C. J. Brown, S. P. Miller, B. G. Booth, V. Chau, R. E. Grunau, J. G. Zwicker, and G. Hamarneh, “BrainNetCNN: Convolutional neural networks for brain networks; towards predicting neurodevelopment,” NeuroImage, vol. 146, pp. 1038–1049, 2017.

- [23] D. Zhi, V. D. Calhoun, C. Wang, X. Li, X. Ma, L. Lv, W. Yan, D. Yao, S. Qi, R. Jiang et al., “BNCPL: Brain-network-based convolutional prototype learning for discriminating depressive disorders,” in 2021 43rd Annual International Conference of the IEEE Engineering in Medicine & Biology Society, 2021, pp. 1622–1626.

- [24] P.-J. Kindermans, S. Hooker, J. Adebayo, M. Alber, K. T. Schütt, S. Dähne, D. Erhan, and B. Kim, “The (un) reliability of saliency methods,” in Explainable AI: Interpreting, Explaining and Visualizing Deep Learning. Springer, 2019, pp. 267–280.

- [25] N. Arun, N. Gaw, P. Singh, K. Chang, M. Aggarwal, B. Chen, K. Hoebel, S. Gupta, J. Patel, M. Gidwani et al., “Assessing the trustworthiness of saliency maps for localizing abnormalities in medical imaging,” Radiology: Artificial Intelligence, vol. 3, no. 6, 2021.

- [26] J. Adebayo, J. Gilmer, M. Muelly, I. Goodfellow, M. Hardt, and B. Kim, “Sanity checks for saliency maps,” Advances in Neural Information Processing Systems, vol. 31, 2018.

- [27] H. M. Van Loo, P. De Jonge, J.-W. Romeijn, R. C. Kessler, and R. A. Schoevers, “Data-driven subtypes of major depressive disorder: a systematic review,” BMC medicine, vol. 10, pp. 1–12, 2012.

- [28] W. K. Lau, M.-K. Leung, and B. W. Lau, “Resting-state abnormalities in autism spectrum disorders: a meta-analysis,” Scientific Reports, vol. 9, no. 1, pp. 1–8, 2019.

- [29] E. Kang, D.-W. Heo, and H.-I. Suk, “Prototype learning of inter-network connectivity for ASD diagnosis and personalized analysis,” in International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer, 2022, pp. 334–343.

- [30] X. Chen, B. Lu, H.-X. Li, X.-Y. Li, Y.-W. Wang, F. X. Castellanos, L.-P. Cao, N.-X. Chen, W. Chen, Y.-Q. Cheng et al., “The DIRECT consortium and the REST-meta-MDD project: towards neuroimaging biomarkers of major depressive disorder,” Psychoradiology, vol. 2, no. 1, pp. 32–42, 2022.

- [31] C.-G. Yan, X. Chen, L. Li, F. X. Castellanos, T.-J. Bai, Q.-J. Bo, J. Cao, G.-M. Chen, N.-X. Chen, W. Chen et al., “Reduced default mode network functional connectivity in patients with recurrent major depressive disorder,” Proceedings of the National Academy of Sciences, vol. 116, no. 18, pp. 9078–9083, 2019.

- [32] J.-P. Fortin, D. Parker, B. Tunç, T. Watanabe, M. A. Elliott, K. Ruparel, D. R. Roalf, T. D. Satterthwaite, R. C. Gur, R. E. Gur et al., “Harmonization of multi-site diffusion tensor imaging data,” NeuroImage, vol. 161, pp. 149–170, 2017.

- [33] R. C. Craddock, G. A. James, P. E. Holtzheimer III, X. P. Hu, and H. S. Mayberg, “A whole brain fMRI atlas generated via spatially constrained spectral clustering,” Human Brain Mapping, vol. 33, no. 8, pp. 1914–1928, 2012.

- [34] H. Banville, S. U. Wood, C. Aimone, D.-A. Engemann, and A. Gramfort, “Robust learning from corrupted EEG with dynamic spatial filtering,” NeuroImage, vol. 251, p. 118994, 2022.

- [35] X. Geng, L. Wang, X. Wang, B. Qin, T. Liu, and Z. Tu, “How does selective mechanism improve self-attention networks?” in Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, 2020, pp. 2986–2995.

- [36] J. Zhang, F. Feng, T. Han, X. Gong, and F. Duan, “Detection of autism spectrum disorder using fMRI functional connectivity with feature selection and deep learning,” Cognitive Computation, pp. 1–12, 2022.

- [37] A. Abrol, Z. Fu, M. Salman, R. Silva, Y. Du, S. Plis, and V. Calhoun, “Deep learning encodes robust discriminative neuroimaging representations to outperform standard machine learning,” Nature Communications, vol. 12, no. 1, pp. 1–17, 2021.

- [38] Y. Du, Z. Fu, and V. D. Calhoun, “Classification and prediction of brain disorders using functional connectivity: Promising but challenging,” Frontiers in Neuroscience, vol. 12, p. 525, 2018.

- [39] A. Dosovitskiy, L. Beyer, A. Kolesnikov, D. Weissenborn, X. Zhai, T. Unterthiner, M. Dehghani, M. Minderer, G. Heigold, S. Gelly, J. Uszkoreit, and N. Houlsby, “An image is worth 16x16 words: Transformers for image recognition at scale,” in International Conference on Learning Representations, 2021.

- [40] J. L. Ba, J. R. Kiros, and G. E. Hinton, “Layer normalization,” arXiv preprint arXiv:1607.06450, 2016.

- [41] A. Vaswani, N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A. N. Gomez, Ł. Kaiser, and I. Polosukhin, “Attention is all you need,” in Advances in Neural Information Processing Systems, vol. 30, 2017.

- [42] H.-M. Yang, X.-Y. Zhang, F. Yin, and C.-L. Liu, “Robust classification with convolutional prototype learning,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2018, pp. 3474–3482.

- [43] T. N. Kipf and M. Welling, “Semi-supervised classification with graph convolutional networks,” arXiv preprint arXiv:1609.02907, 2016.

- [44] P. Veličković, G. Cucurull, A. Casanova, A. Romero, P. Liò, and Y. Bengio, “Graph attention networks,” in International Conference on Learning Representations, 2018.

- [45] W. Hamilton, Z. Ying, and J. Leskovec, “Inductive representation learning on large graphs,” in Advances in Neural Information Processing Systems, vol. 30, 2017.

- [46] R. Liu, Z.-A. Huang, Y. Hu, Z. Zhu, K.-C. Wong, and K. C. Tan, “Spatial–temporal co-attention learning for diagnosis of mental disorders from resting-state fMRI data,” IEEE Transactions on Neural Networks and Learning Systems, 2023.

- [47] B.-H. Kim, J. C. Ye, and J.-J. Kim, “Learning dynamic graph representation of brain connectome with spatio-temporal attention,” Advances in Neural Information Processing Systems, vol. 34, pp. 4314–4327, 2021.

- [48] R. Tibshirani, “Regression shrinkage and selection via the LASSO,” Journal of the Royal Statistical Society: Series B (Methodological), vol. 58, no. 1, pp. 267–288, 1996.

- [49] I. Guyon, J. Weston, S. Barnhill, and V. Vapnik, “Gene selection for cancer classification using support vector machines,” Machine Learning, vol. 46, pp. 389–422, 2002.

- [50] I. Loshchilov and F. Hutter, “Decoupled weight decay regularization,” in International Conference on Learning Representations, 2019.

- [51] J. Wang, X. Zuo, and Y. He, “Graph-based network analysis of resting-state functional MRI,” Frontiers in Systems Neuroscience, vol. 4, p. 1419, 2010.

- [52] J. S. Nomi and L. Q. Uddin, “Developmental changes in large-scale network connectivity in autism,” NeuroImage: Clinical, vol. 7, pp. 732–741, 2015.

- [53] S. Liang, W. Deng, X. Li, A. J. Greenshaw, Q. Wang, M. Li, X. Ma, T.-J. Bai, Q.-J. Bo, J. Cao et al., “Biotypes of major depressive disorder: Neuroimaging evidence from resting-state default mode network patterns,” NeuroImage: Clinical, vol. 28, p. 102514, 2020.

- [54] A. P. Spencer and M. Goodfellow, “Using deep clustering to improve fMRI dynamic functional connectivity analysis,” NeuroImage, vol. 257, p. 119288, 2022.

- [55] H. Lin, X. Xiang, J. Huang, S. Xiong, H. Ren, and Y. Gao, “Abnormal degree centrality values as a potential imaging biomarker for major depressive disorder: a resting-state functional magnetic resonance imaging study and support vector machine analysis,” Frontiers in Psychiatry, vol. 13, p. 960294, 2022.