A generative model for surrogates of spatial-temporal wildfire nowcasting

Abstract

Recent increase in wildfires worldwide has led to the need for real-time fire nowcasting. Physics-driven models, such as cellular automata and computational fluid dynamics can provide high-fidelity fire spread simulations but they are computationally expensive and time-consuming. Much effort has been put into developing machine learning models for fire prediction. However, these models are often region-specific and require a substantial quantity of simulation data for training purpose. This results in a significant amount of computational effort for different ecoregions. In this work, a generative model is proposed using a three-dimensional Vector-Quantized Variational Autoencoders to generate spatial-temporal sequences of unseen wildfire burned areas in a given ecoregion. The model is tested in the ecoregion of a recent massive wildfire event in California, known as the Chimney fire. Numerical results show that the model succeed in generating coherent and structured fire scenarios, taking into account the impact from geophysical variables, such as vegetation and slope. Generated data are also used to train a surrogate model for predicting wildfire dissemination, which has been tested on both simulation data and the real Chimney fire event.

Index Terms:

Deep Learning; Generative model; Wildfire; Surrogate model; spatial-temporal systemI Introduction

A significant increase of wildfire frequency has been noticed world-widely in the past decades. These unwanted wildland fires can result in losses of lives, huge economic cost and lethal effects of air pollution. Real-time fire nowcasting is crucial for establishing fire fighting strategies and preventing multiple fire related hazards. Much effort has be given to develop fire simulation algorithms, for instance, based on Cellular Automata (CA) [1, 2], Minimum Travel Time (MTT) [3] and computational fluid dynamics (CFD) [4]. These physics-driven modellings are usually built on complex (often probabilistic) mathematical models which take into account a considerable number of geophysical and climate features, such as vegetation distribution, slope elevation and wind directions. As a consequence, running physics-based simulations for large fire events is computationally expensive and time consuming [5]. A key challenge in current wildfire forecasting study consists of developing efficient surrogate models (also known as digital twins) [6, 7, 8], capable of near real-time fire nowcasting.

In recent years, thanks to their effectiveness, machine learning techniques have been widely adapted to wildfire prediction problems [9]. For example, much attention is given in applying Convolutional Neural Networks on terrestrial-based images for fire and smoke detection [10, 11]. Shallow machine learning algorithms, such as Random Forest (RF) and Support Vector Machine (SVM), have been widely adopted in regional fire-susceptibility mapping [12]. The very recent work of [7] proposed a deep learning surrogate model based on Convolutional Autoencoder (CAE) for reduced-order-modelling and Recurrent Neural Network (RNN) for fire dynamic predictions. Their approach is tested in three recent large fire events in California where a CA fire simulator is employed to generate the training dataset for the surrogate model. By learning from the simulated fire spread instances, the online computational time of fire spread forecasting can be significantly reduced from several hours to several seconds. Furthermore, the model is capable of efficiently integrating near real-time observations from satellites via performing data assimilation in the reduced latent space. However, as stated in [7], a main challenge for generalizing their model to different ecoregions stands for the generation of the training data using the CA model. As mentioned in [7], runing the CA model for simulating a massive wildfire event can take up to several days on a High performance computing (HPC) system. In this work, we aim to build a generative model that can produce spatial-temporal consistent and physical realistic wildfire scenarios in a given ecoregion.

Much effort has been given in generating high dimensional dynamical systems that are consistent with physical principles [13, 14, 15, 16, 17]. Typical approaches include Generative Adversarial Networks, Normalizing Flows and Variational Autoencoders. Compared to the other methods, the advantages of VAE are mainly two-folds: (i) VAEs are Likelihood-based methods which are convenient to train and interpret [18]; (ii) VAEs compress data into a low-dimensional latent space, enabling efficient interpolation between data points [19]. The latter is crucial for generating a smooth transition between different data samples. In particular, the Vector Quantized Variational Autoencoder (VQ-VAE) introduced by [20] is an extension of VAE that replaces the continuous latent variables with discrete ones. The main strength of VQ-VAE consists of producing high-fidelity and sharp samples [18], which are vital for spatial-temporal systems. More precisely, by contructing a discrete, finite and trainable latent space (known as ’codebook’), VQ-VAE demonstrates exceptional capability in capturing the underlying structure of the data, enabling more coherent and structured generated samples [20]. Therefore, by implementing 3D convolutional layers (with an additional temporal axis), we apply VAE for generating spatial-temporal fire scenarios in this study.

The proposed approach is tested in a recent massive wildfire event in California, known as the Chimney fire [21], which lasted more than 20 days and had burned out over of land. A CA model [1] is first implemented with random ignition points in the ecoregion of the Chimney fire to generate training data. 500 sequences of burned area evolution (each consists of 16 snapshots, equivalent to 4 days in real time) are generated using 3D VQ-VAE. Qualitative and quantitative analysis is performed to assess the fidelity of the generated samples in relation to established geophysical principles in wildfire spread [1]. Thanks to its great efficiency compared to the CA model, the proposed generative model can provide a fast recognition of ’area at risk’ for fire management.

A Machine Learning (ML) surrogate model, based on Proper Orthogonal Decomposition (POD) and RNN, is also implemented in this study. POD is a method used in the field of dynamical systems to decompose a complex system into a set of uncorrelated modes [22]. These modes are chosen to represent the most important features of the dynamical system, and can be used to reconstruct the original system with a high degree of accuracy [23]. On the other hand, much effort has been given in predicting compressed latent variables via machine learning models, such as RF [24], RNN [25, 7] or Transformers [26]. Since we look for long-term predictions, long short-term memory (LSTM) neural netowk [27], a variant of RNN is chosen to build the surrogate model in this study. LSTM is a type of RNN that is able to process sequential data. LSTMs is able to maintain a ”memory” of previous inputs, allowing them to better understand context and relationships in the data. The surrogate model implemented in this work uses the 500 fire spread sequences generated by VQ-VAE as training data. It significantly outperforms the surrogate model only trained using 40 CA simulations on both synthetic fire events and the observed Chimney fire event. The observation of the burned area of the Chimney fire is processed using the MODIS satellite. The proposed generative model runs xxx times faster than the CA simulation, thus, it can significantly release the computational burden of generating training samples for surrogate models.

The main contribution of the present paper can be summarised as:

-

•

To the best of the author’s knowledge, this is the first reported attempt of generative models applied on wildfire spread dynamics.

- •

-

•

Generated wildfire data are used to train a machine learning surrogate model for fire spread prediction. The prediction accuracy on both the unseen fire simulation data and the real wildfire event can be significantly improved thanks to the generated data.

The rest of this paper is organised as follows. Section II introduces the data source, the study area and the CA fire spread model used in this study. Section III describes the dimension reduction and the surrogate model for fire prediction. Section IV presents the methodology of VQ-VAE in generating spatial-temporal burned area snapshots. Numerical results, together with quantitative and qualitative analysis, are shown in Section V. We finish the paper with a conclusion in Section VI.

II Fire simulation and study area

II-A Study area

We evaluate the performance of the generative and the surrogate models using a recent massive fire event in California, namely the Chimney fire in 2016. The exact ecoregion of this study is shown in Table I. We use active fire data from Moderate Resolution Imaging Spectroradiometer (MODIS) and Visible Infrared Imaging Radiometer Suite (VIIRS) satellites. MODIS provides thermal observations four times a day at a resolution of about 1km ([28]). VIIRS thermal data provides improved fire detection capabilities every 12 hours [29]. In this study, the level 2 VIIRS I-band active fire product (VNP14IMG) with a resolution of 375 m is combined with the MODIS fire products at 1km to derive continuous daily fire perimeters, using the natural neighbour geospatial interpolation method [30].

| Fire (Year) | latitude | longitude | area | ||

| North | South | West | East | ||

| Chimney (2016) | 37.7366 | 37.5093 | -119.9441 | -119.7053 | |

II-B Cellular Automata fire simulation

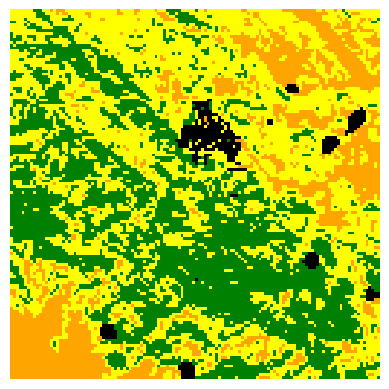

For the training dataset of the generative model and the surrogate model, we make use of an operational CA model [1], which has been tested in the Spetses fire in 1990 in Greece [1] and several recent wildfire events in California [7, 31]. As shown in Figure 1, the model uses square meshes to simulate the random spatial spread of wildfires. The use of regular square meshes reduces the computational cost for large fire events compared to unstructured meshes. Four states are assigned to represent a cell at a discrete time, i.e., unburnable, unburned, burning and burned. When a neighbouring cell is burning, the transition from unburned to burning is random following a probabiliy determined by local geological variables. The fire propagation towards 8 neighbouring cells (as illustrated in Figure 1) at a discrete time follows the probability,

| (1) |

where , , and are related to the local canopy density, canopy cover, landscape slope and wind speed/direction of the receiving cell, respectively [1]. These geological fields for the corresponding study areas are processed using remote sensing images of the MODIS satellite. These data are available at the Interagency Fuel Treatment Decision Support System (IFTDSS) [32]. The simulated burned area states are denoted as where is the time index. The outputs of the CA simulations are used as training data for the generative and the surrogate models in this study.

III Reduced order surrogate modelling for burned area

In this section, we introduce the reduced order surrogate model that is coupled with the generative model in this paper.

III-A Proper orthogonal decomposition for dimension reduction

In this study, a set of state snapshots, from several CA simulations, are represented by a tensor where each column of X represents a flattened burned area state at a given time step, that is,

| (2) |

The empirical covariance of X can be written and decomposed as

| (3) |

where the columns of are the principal components of X and is a diagonal matrix with the associated eigenvalues in a decreasing order,

| (4) |

For a truncation parameter , a projection operator can be constructed by keeping the first columns of . This projection operator can be obtained by a Singular Value Decomposition (SVD) [33] which does not require the computation of the full covariance matrix . For a flattened field of burned area , the compressed latent vector can be written as

| (5) |

which is a reduced rank approximation to the full state vector . in Equation (5) denotes the transpose operator.

The latent variable can be decompressed to a full vector by

| (6) |

The compression rate and the compression accuracy can be defined as:

| (7) |

By reducing the space dimension, the surrogate model becomes more efficient and faster to train, but it may also lead to a loss of information. Therefore, it is important to strike a balance between the dimensionality reduction and the preservation of the useful information.

III-B Predictive model using recurrent neural networks

Once the data compression is performed, we aim to emulate the dynamics of fire spread in the low-dimensional latent space. In this study, we aim to perform sequence-to-sequence predictions with time steps as input and time steps as output. As pointed out by [34], sequence-to-sequence predictions can decrease the online computational time, and more importantly, reduce the accumulation of prediction error. The time steps in LSTM are determined by the CA model introduced in Section II. Given a sequence of encoded variables , the training of LSTM can be performed by shifting the initial time step, i.e.,

| (8) | |||

| (9) | |||

| (10) | |||

Different metrics, such as Mean Square Error (MSE) or Mean Absolute Error (MAE), can be used as training loss functions by measuring the mismatch between predicted and true latent variables. As for the online prediction for a given test sequence , an iterative process can be carried out for long-term forecasting, that is,

| (11) | |||

| (12) | |||

IV VQ-VAE for fire data generation

In this section, we describe how 3D VQ-VAE can be used to generate spatial-temporal consistent fire spread dynamics. The pipeline is illustrated in Figure 2.

VAEs are feed-forward neural networks belonging to unsupervised learning, being widely used for data compression and generation [35]. A typical VAE consists of an encoder which maps the input to a Gaussian distribution in the latent space , where denote the mean and the standard deviation, respectively. A latent vector can then be resampled and a generated vector in the full space can be obtained through a decoder ,

| (13) |

where denotes the identity matrix in the latent space. The encoder and the decoder are then trained jointly through a MSE reconstruction loss which measures the difference between and ,

| (14) |

where denotes the Frobenius norm.

A Kullback–Leibler Divergence (KLD) loss is also constructed with the idea of approximating conditional likelihood distribution by the posterior distribution [35],

| (15) | ||||

| (16) |

where and are the parameterization of the encoder and the decoder , respectively. The KLD loss regularizes the approximate posterior distribution over the latent variables to be similar to the prior distribution [36], ensuring the VAE to generate diverse and meaningful samples.

Despite its great success in image compression and generation [37], it has been noticed that standard VAE is not typically well suited for generating spatial-temporal data [38]. In fact, a standard VAE assumes that the data at each time step is generated independently, leading to poor performance and unrealistic generated samples when being applied to spatial-temporal systems [39]. To overcome these drawbacks, we use the VQ-VAE [20] which compresses the data points into a discretized latent space instead of a continuous one. VQ-VAE can better capture the underlying structure of the data, leading to more coherent and structured generated samples compared to a standard VAE [18]. Furthermore, the discretization in the latent space in VQ-VAE encourages the model to focus on the main features such as objects and background, and implicitly alleviates the overfitting issue from the VAE-based model. In this work, following the idea in [18], we apply the sequential VQ-VAE to encode a sequence of burned area snapshots into a series of latent vectors , i.e.,

| (17) |

The standard VQ-VAE uses Convolutional layer to process images. To enable the VQ-VAE to process the sequential wildfire snapshots, we adopt Convolutional layers in and by building a temporal axis . The wildfire snapshots are concatenated along to form inputs. VQ-VAE then discretize the latent code in a codebook (an ensemble of latent sequences) where each element consists of a sequence of latent vectors of the same dimension as . We then obtain the latent code e of through the nearest neighbour algorithm, that is,

| (18) |

The generated burned area sequences can then be obtained through the decoder,

| (19) |

It is worth mentioning that the codebook in VQ-VAE is also trainable. It is trained jointly with and . Following the idea of [18], three loss functions are used in this study. Similar to VAE, the reconstruction loss measures the mismatch between and . The codebook loss measures the distance between and the closest element in the codebook. A commit loss is also added to stabilize the encoder output . These loss functions are defined respectively as

| (20) | ||||

| (21) | ||||

| (22) |

where denotes the stop-gradient function [40]. It is used in the VQ-VAE loss function to prevent the gradients from flowing through the codebook elements during the backpropagation. is an empirical coefficient that regularizes the weight of . Finally, the total loss of VQ-VAE reads:

| (23) |

Once the VQ-VAE is trained, Gaussian noises (see Equation (13)) are added in the latent to generate a new fire spread scenario . More precisely,

| (24) |

where is a hyperparameter. It is worth mentioning that Equation (24) is only used for data generation. It is not involved in the training process.

V Numerical Results

V-A Experiments set up

In this work, 40 CA simulations are used as the training dataset for the VQ-VAE generative model. The ignition points are randomly chosen in the central zone of the ecoregion to avoid the boundary problem as shown in Figure 3. In this study, the parameters in VQ-VAE are fixed as (in Equation (22)) and (in Equation (24)). The performance of the generative model is evaluated through two aspects, namely the realsticness of the generated fire events regarding geological variables and the improvement of the predictive surrogate model which use the generated fire events as training data. For the latter, 8 CA simulations, different from the training dataset are generated to find out if the surrogate models can correctly emulate the fire spread with unseen ignition points. For a fair comparison, two pipelines are established,

- •

-

•

proposed: 500 wildfire events generated through VQ-VAE and the 40 CA simulations are used to train the surrogate model.

The training and testing processes of the two surrogate models are illustrated in Figure 3. The performance of the two surrogate models is then compared using the test dataset of the CA simulations and satellite data of the Chimney wildfire event. In the training and the test datasets, each CA simulation consists of 16 snapshots where the time interval between two snapshots is equivalent to 6 hours in real time. Since the state is represented by integers in the CA model (see Section II), post-processing is required for generated/predicted values. Here, the threshold is chosen to be 0.4, i.e., the cells with a value higher than 0.4 are considered ’burned’. This threshold is set empirically following the previous study [7]. For simplicity, the burned area state and the geological fields (vegetation and slope) are all resized to pixels. The generative model and the surrogate model are trained on a work station with an NVIDIA RTX A6000 GPU and 48 GB Memory. As for the online generation/simulation, VQ-VAE, CA and MTT are all performed on a same labtop CPU of Intel(R) Core(TM) i7-10810U with 16 GB Memory.

V-B Generated wildfire events

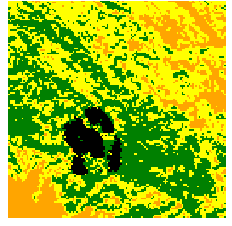

Figure 4 illustrates 8 generated fire events with random ignition points. For each generated fire, 6 snapshots respectively at 12, 24, 36, 48, 60, 72 hours after the fire ignition are shown. The background color map indicates the vegetation distribution where the green color refers to high vegetation density.

The generated data present characteristics that are in alignment with observable phenomena in CA simulations. First, the burned areas (i.e., number of burned pixels) are always increasing in each generated fire event. This observation is quantified in Figure 5 (a) where we show the averaged burned pixels against the hours after fire ignition. Overall the generated (i.e., VQ-VAE) and the simulated data (i.e., CA) demonstrate concurrent patterns despite that generated fire grow slightly slower in the nascent phase. The generated fire events also exhibit a larger standard deviation against propagation time, which is to be expected in this study. The exact burned areas observed from the satellite for the Chimney fire event are slightly larger than the simulated and generated burned areas.

It can also been clearly seen from Figure 4 that the generated fires tend to burn out the cells with high vegetation density which appears to be consistent with the CA propagation function (Equation (1)). To verify this assertion, we plot in Figure 5 (b,c), the final burned area (72 hours after the fire ignition) in number of cells against the averaged vegetation density and the slope elevation around the ignition point. More precisely, the ignition point of generated fire events is obtained by calculating the barycentre of the burned area 12 hours after ignition. The vegetation density and the slope elevation are normalized to the range of . There is conclusive evidence in Figure 5 that the generated fires show similar patterns compared to the CA simulations in the training dataset. Thus, the impact of vegetation and slope on the evolution of the burned area has been well understood by the generative model.

It is worth mentioning that all training data are generated with a single ignition point for each fire scenario. However, as shown in Figure 4, separate burned regions can be found in the synthetic data (especially the last fire sequence). More importantly, the growth of each burned region appears to be consistent with the reality. In fact, multiple ignition points are frequently observed in actual wildfire occurrences. This phenomena demonstrates the capability of VQ-VAE in generalizing and extending CA simulations with realistic scenarios. The averaged computational time of generating/simulating fire spread of 8 days in the ecoregion of the Chimney fire is shown in Table II. VQ-VAE result in a 5 order of magnitude acceleration, compared to the CA and MTT fire simulators [3].

| VQ-VAE | CA | MTT |

| 0.26s | min | min |

In summary, VQ-VAE allows to easily enlarge the simulation datasets with high-fidelity synthetic data. It shows great potential in generating realistic fire scenarios, which can be used to evaluate the area at risk. The efficiency of VQ-VAE can also be crucial for data-demanding downstream tasks, such as surrogate model training.

V-C Surrogate model

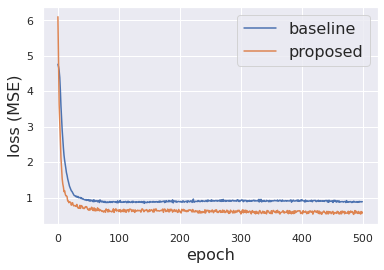

We compare the surrogate model trained on both generated (500 sequences) and simulated (40 sequences) data (i.e., proposed method in Figure 3) and the one trained only on simulation (40 sequences) data (i.e., baseline method in Figure 3). The performance is evaluated on a test dataset consisting of 8 independent CA simulations and the observed snapshots of the Chimney fire event. For each sequences of burned area, the first 4 snapshots, corresponding to 6, 12, 18, 24 hours after fire ignition, are used as input for the predictive surrogate model. The latter then predict the burned area for 30, 36, 42, 48 hours after fire ignition, as introduced in Section IV. For both surrogate models, the validation dataset consists of 8 independent CA simulations, different from the training and the test data.

The evolution of the loss value (MSE) against the number of training epochs is depicted in Figure 6 (a). It can be clearly observed that, despite some oscillations introduced by the generated data, the loss of the proposed approach converges faster than baseline, and reaches a lower stable value. Some quantitative metrics are used to assess the prediction performance as shown in Figure 6 (b). For comparison purpose, we also run the CA from the burned area of 24 hours after ignition. As mentioned in II, CA is a probabilistic model, thus the re-ran simulation will lead to a different burned area as the one in the test dataset. The blue bar stands for the Relative Mean Square Error (RMSE), which represents the mismatch normalized by the exact burned area. The red bar illustrates the online prediction time and the orange line represents the Structural Similarity Index Measure (SSIM), which measures the similarity between the true burned area and the prediction. All metrics are obtained using the average score on the 8 fire sequences in the test dataset. Consistent with our analysis for Figure 6 (a), the proposed approach shows a significant advantage in terms of both RMSE and SSIM compared to the baseline. In fact, the accuracy of the proposed surrogate model is close to a re-implementation of CA with a speed-up of 4 orders of magnitude.

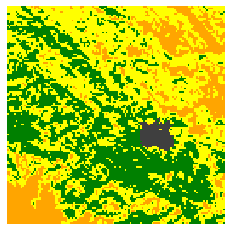

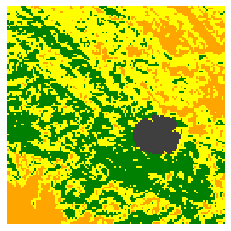

Two fire events in the test data are illustrated in Figure 7 and 8, respectively. In accordance with our findings in Figure 6, the proposed approach succeeds in delivering accurate predictions of the burned area. Only minor difference can be observed against the ground truth. The baseline approach, on the other hand, manages to roughly predict the progression of fire dissemination but fails in specifying the precise geometry of burned area. This is mainly due to the lack of training data. Figure 8 shows a particular case where the vegetation density is small near the ignition point, leading to a deceleration of fire spread. This phenomenon is only well understood by the proposed approach.

We also test the prediction performance of surrogate models applied on the Chimney fire event (Table I), which took place on August 13, 2016. The observed burned area is provided by the MODIS satellite as described in Section II. Since the observation data are on daily basis [7], image interpolation method proposed by [41] is applied to emulate the burned area at intervals of 6 hours. As shown in Figure 9, for both surrogate models larger area of mismatch can be noticed compared to the CA test data (Figure 7, 8). However, a substantial gain of the proposed approach can be found against the baseline. In a consistent manner, the averaged RMSE for proposed and baseline approaches are and , respectively.

These numerical results convincingly demonstrate the strength of including generated data in the surrogate model to improve the robustness and generalizability when predicting unseen scenarios.

VI Conclusion

One of the main challenges in the current wildfire forecasting study is that physics-based simulation processes incur a significant computational cost and demand a substantial allocation of time. In this study, we use 3D VQ-VAE for generating spatial-temporal consistent and physically realistic wildfire scenarios in a given ecoregion. This work is a first attempt to generate sequential fire spread instances via a generative deep learning model. Our approach is tested using data from a recent massive wildfire event in California. The proposed approach manages to generate wildfire spread of 8 days in 0.3 seconds, resulting in a speed-up of 4 orders of magnitude compared to the state-of-the-art CA model. The generated data can be used to assess the likelihood of high fire susceptibility in the given ecoregion. Furthermore, numerical results show that the accuracy and the generalizability of a fire predictive model can be significantly enhanced by including generated fire scenarios in the training dataset.

As a proof of concept, initial fire simulations are produced from CA as an example of physics-based models. A future investigation calls for additional work to incorporate more advanced fire behavior models such as FARSITE [42] and SPARK [43], which take into account, for instance, surface-to-crown fire transition [44] and fire spotting. Future work can also be considered to combine the generative and predictive models in an end-to-end framework.

Acronyms

- NN

- Neural Network

- DNN

- Deep Neural Network

- ML

- Machine Learning

- MAE

- Mean Absolute Error

- LA

- Latent Assimilation

- DA

- Data Assimilation

- PR

- Polynomial Regression

- AE

- Autoencoder

- VAE

- Variational Autoencoder

- CAE

- Convolutional Autoencoder

- VAE

- Variational Autoencoder

- SVM

- Support Vector Machine

- MTT

- Minimum Travel Time

- BLUE

- Best Linear Unbiased Estimator

- 3D-Var

- 3D Variational

- GAN

- Generative Adversarial Network

- RNN

- Recurrent Neural Network

- CNN

- Convolutional Neural Network

- SSIM

- Structural Similarity Index Measure

- LSTM

- long short-term memory

- POD

- Proper Orthogonal Decomposition

- PCA

- Principal Component Analysis

- PC

- principal component

- SVD

- Singular Value Decomposition

- ROM

- reduced-order modelling

- CFD

- computational fluid dynamics

- 1D

- one-dimensional

- 2D

- two-dimensional

- NWP

- numerical weather prediction

- RMSE

- Relative Mean Square Error

- MSE

- Mean Square Error

- S2S

- sequence-to-sequence

- R-RMSE

- relative root mean square error

- BFGS

- Broyden–Fletcher–Goldfarb–Shanno

- LHS

- Latin Hypercube Sampling

- AI

- artificial intelligence

- DL

- Deep Learning

- PIV

- Particle Image Velocimetry

- LIF

- Laser Induced Fluorescence

- KNN

- K-nearest Neighbours

- DT

- Decision Tree

- RF

- Random Forest

- KF

- Kalman filter

- CART

- Classification And Regression Tree

- CA

- Cellular Automata

- MLP

- Multi Layer Percepton

- GLA

- Generalised Latent Assimilation

- 3Dvar

- Three-dimensional variational data assimilation

- 4Dvar

- Four-dimensional variational data assimilation

- KLD

- Kullback–Leibler Divergence

- MODIS

- Moderate Resolution Imaging Spectroradiometer

- VIIRS

- Visible Infrared Imaging Radiometer Suite

Appendix: parameter studies

Here we perform additional numerical tests for parameter studies, regarding the value of and (Equation (22) and (24)), and the number of generated videos used in training the surrogate model

VI-A VQ-VAE parameters: and

The parameter determines the relative weight of while determines the level of additional noise when generating synthetic fire spread videos. A small value of will lead to a generated video that is very similar to the input due to the reconstruction loss. When the value of the parameter exceeds a certain threshold, it engenders a diminution in controllability and an increase in noise levels within the generated video, as shown in Figure 10.

On the other hand, the coefficient balances the weight of the commit loss. When the value is set excessively high, it will additionally introduce unrealistic noises in the generated scenarios, as depicted in Figure 10.

These experiments illustrate the necessity of making thoughtful parameter selections during the training and application of the generative model.

VI-B Number of generated videos in the training set

In order to delve deeper into the influence of the number of generated fire scenarios during the training process, we conducted experiments where the surrogate model was trained using a range of 50 to 500 generated fire videos. Subsequently, we assessed the performance of the trained surrogate model, as depicted in Figure 11.

The results clearly demonstrate that the performance of the surrogate model exhibits a noticeable improvement as the number of generated videos in the training data increases. The performance stabilizes once the number of generated videos exceeds four hundred. Notably, it is important to highlight that employing a small number of generated videos may result in a deterioration of the prediction performance.

Acknowledgements

The authors thank Dr. Yuhang Huang and Prof. Yufang Jin from University of California, Davis for data curation of the Chimney fire burned area. The authors thank Mr. Bo Pang from Imperial College London for runing the MTT fire simulation for comparison. This research is funded by the Leverhulme Centre for Wildfires, Environment and Society through the Leverhulme Trust, grant number RC-2018-023. This work is partially supported by the EP/T000414/1 PREdictive Modelling with QuantIfication of UncERtainty for MultiphasE Systems (PREMIERE).

References

- [1] A. Alexandridis, D. Vakalis, C. Siettos, and G. Bafas, “A cellular automata model for forest fire spread prediction: The case of the wildfire that swept through spetses island in 1990,” Applied Mathematics and Computation, vol. 204, no. 1, pp. 191–201, 2008.

- [2] J. G. Freire and C. C. DaCamara, “Using cellular automata to simulate wildfire propagation and to assist in fire management,” Natural hazards and earth system sciences, vol. 19, no. 1, pp. 169–179, 2019.

- [3] M. A. Finney, “Fire growth using minimum travel time methods,” Canadian Journal of Forest Research, vol. 32, no. 8, pp. 1420–1424, 2002.

- [4] M. M. Valero, L. Jofre, and R. Torres, “Multifidelity prediction in wildfire spread simulation: Modeling, uncertainty quantification and sensitivity analysis,” Environmental Modelling & Software, vol. 141, p. 105050, 2021.

- [5] G. D. Papadopoulos and F.-N. Pavlidou, “A comparative review on wildfire simulators,” IEEE systems Journal, vol. 5, no. 2, pp. 233–243, 2011.

- [6] Q. Zhu, F. Li, W. J. Riley, L. Xu, L. Zhao, K. Yuan, H. Wu, J. Gong, and J. Randerson, “Building a machine learning surrogate model for wildfire activities within a global earth system model,” Geoscientific Model Development, vol. 15, no. 5, pp. 1899–1911, 2022.

- [7] S. Cheng, I. C. Prentice, Y. Huang, Y. Jin, Y.-K. Guo, and R. Arcucci, “Data-driven surrogate model with latent data assimilation: Application to wildfire forecasting,” Journal of Computational Physics, p. 111302, 2022.

- [8] C. Zhong, S. Cheng, M. Kasoar, and R. Arcucci, “Reduced-order digital twin and latent data assimilation for global wildfire prediction,” Natural Hazards and Earth System Sciences, vol. 23, no. 5, pp. 1755–1768, 2023.

- [9] P. Jain, S. C. Coogan, S. G. Subramanian, M. Crowley, S. Taylor, and M. D. Flannigan, “A review of machine learning applications in wildfire science and management,” Environmental Reviews, vol. 28, no. 4, pp. 478–505, 2020.

- [10] S. Saponara, A. Elhanashi, and A. Gagliardi, “Real-time video fire/smoke detection based on cnn in antifire surveillance systems,” Journal of Real-Time Image Processing, vol. 18, no. 3, pp. 889–900, 2021.

- [11] P. Li and W. Zhao, “Image fire detection algorithms based on convolutional neural networks,” Case Studies in Thermal Engineering, vol. 19, p. 100625, 2020.

- [12] A. Achu, J. Thomas, C. Aju, G. Gopinath, S. Kumar, and R. Reghunath, “Machine-learning modelling of fire susceptibility in a forest-agriculture mosaic landscape of southern india,” Ecological Informatics, vol. 64, p. 101348, 2021.

- [13] J. Pan, J. Dong, Y. Liu, J. Zhang, J. Ren, J. Tang, Y.-W. Tai, and M.-H. Yang, “Physics-based generative adversarial models for image restoration and beyond,” IEEE transactions on pattern analysis and machine intelligence, vol. 43, no. 7, pp. 2449–2462, 2020.

- [14] M. Cheng, F. Fang, I. M. Navon, J. Zheng, X. Tang, J. Zhu, and C. Pain, “Spatio-temporal hourly and daily ozone forecasting in china using a hybrid machine learning model: Autoencoder and generative adversarial networks,” Journal of Advances in Modeling Earth Systems, vol. 14, no. 3, p. e2021MS002806, 2022.

- [15] S. Otten, S. Caron, W. de Swart, M. van Beekveld, L. Hendriks, C. van Leeuwen, D. Podareanu, R. Ruiz de Austri, and R. Verheyen, “Event generation and statistical sampling for physics with deep generative models and a density information buffer,” Nature communications, vol. 12, no. 1, p. 2985, 2021.

- [16] M. Cheng, F. Fang, C. Pain, and I. Navon, “An advanced hybrid deep adversarial autoencoder for parameterized nonlinear fluid flow modelling,” Computer Methods in Applied Mechanics and Engineering, vol. 372, p. 113375, 2020.

- [17] L. Chagot, C. Quilodrán-Casas, M. Kalli, N. M. Kovalchuk, M. J. Simmons, O. K. Matar, R. Arcucci, and P. Angeli, “Surfactant-laden droplet size prediction in a flow-focusing microchannel: a data-driven approach,” Lab on a Chip, vol. 22, no. 20, pp. 3848–3859, 2022.

- [18] W. Yan, Y. Zhang, P. Abbeel, and A. Srinivas, “Videogpt: Video generation using vq-vae and transformers,” arXiv preprint arXiv:2104.10157, 2021.

- [19] X. Chen, Y. Duan, R. Houthooft, J. Schulman, I. Sutskever, and P. Abbeel, “Infogan: Interpretable representation learning by information maximizing generative adversarial nets,” Advances in neural information processing systems, vol. 29, 2016.

- [20] A. Van Den Oord, O. Vinyals, et al., “Neural discrete representation learning,” Advances in neural information processing systems, vol. 30, 2017.

- [21] E. D. Kuligowski, E. H. Walpole, R. Lovreglio, and S. McCaffrey, “Modelling evacuation decision-making in the 2016 chimney tops 2 fire in gatlinburg, tn,” International journal of wildland fire, vol. 29, no. 12, pp. 1120–1132, 2020.

- [22] A. Chatterjee, “An introduction to the proper orthogonal decomposition,” Current science, pp. 808–817, 2000.

- [23] D. Xiao, F. Fang, A. G. Buchan, C. C. Pain, I. M. Navon, and A. Muggeridge, “Non-intrusive reduced order modelling of the navier–stokes equations,” Computer Methods in Applied Mechanics and Engineering, vol. 293, pp. 522–541, 2015.

- [24] H. Gong, S. Cheng, Z. Chen, and Q. Li, “Data-enabled physics-informed machine learning for reduced-order modeling digital twin: Application to nuclear reactor physics,” Nuclear Science and Engineering, pp. 1–26, 2022.

- [25] M. Amendola, R. Arcucci, L. Mottet, C. Q. Casas, S. Fan, C. Pain, P. Linden, and Y.-K. Guo, “Data assimilation in the latent space of a neural network,” 2020.

- [26] N. Geneva and N. Zabaras, “Transformers for modeling physical systems,” Neural Networks, vol. 146, pp. 272–289, 2022.

- [27] S. Hochreiter, “The vanishing gradient problem during learning recurrent neural nets and problem solutions,” International Journal of Uncertainty, Fuzziness and Knowledge-Based Systems, vol. 6, no. 02, pp. 107–116, 1998.

- [28] L. Giglio, W. Schroeder, and C. O. Justice, “The collection 6 modis active fire detection algorithm and fire products,” Remote Sensing of Environment, vol. 178, pp. 31–41, 2016.

- [29] W. Schroeder, P. Oliva, L. Giglio, and I. A. Csiszar, “The new viirs 375 m active fire detection data product: Algorithm description and initial assessment,” Remote Sensing of Environment, vol. 143, pp. 85–96, 2014.

- [30] E. Scaduto, B. Chen, and Y. Jin, “Satellite-based fire progression mapping: A comprehensive assessment for large fires in northern california,” IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, vol. 13, pp. 5102–5114, 2020.

- [31] S. Cheng, Y. Jin, S. P. Harrison, C. Quilodrán-Casas, I. C. Prentice, Y.-K. Guo, and R. Arcucci, “Parameter flexible wildfire prediction using machine learning techniques: Forward and inverse modelling,” Remote Sensing, vol. 14, no. 13, p. 3228, 2022.

- [32] S. A. Drury, H. M. Rauscher, E. M. Banwell, S. Huang, and T. L. Lavezzo, “The interagency fuels treatment decision support system: functionality for fuels treatment planning,” Fire Ecology, vol. 12, no. 1, pp. 103–123, 2016.

- [33] K. Baker, “Singular value decomposition tutorial,” The Ohio State University, vol. 24, 2005.

- [34] S. Cheng, J. Chen, C. Anastasiou, P. Angeli, O. K. Matar, Y.-K. Guo, C. C. Pain, and R. Arcucci, “Generalised latent assimilation in heterogeneous reduced spaces with machine learning surrogate models,” arXiv preprint arXiv:2204.03497, 2022.

- [35] D. P. Kingma and M. Welling, “Auto-encoding variational bayes,” arXiv preprint arXiv:1312.6114, 2013.

- [36] J. R. Hershey and P. A. Olsen, “Approximating the kullback leibler divergence between gaussian mixture models,” in 2007 IEEE International Conference on Acoustics, Speech and Signal Processing-ICASSP’07, vol. 4. IEEE, 2007, pp. IV–317.

- [37] D. Bank, N. Koenigstein, and R. Giryes, “Autoencoders,” arXiv preprint arXiv:2003.05991, 2020.

- [38] Z.-S. Liu, W.-C. Siu, and Y.-L. Chan, “Photo-realistic image super-resolution via variational autoencoders,” IEEE Transactions on Circuits and Systems for video Technology, vol. 31, no. 4, pp. 1351–1365, 2020.

- [39] M. Fraccaro, S. K. Sønderby, U. Paquet, and O. Winther, “Sequential neural models with stochastic layers,” Advances in neural information processing systems, vol. 29, 2016.

- [40] X. Chen and K. He, “Exploring simple siamese representation learning,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021, pp. 15 750–15 758.

- [41] A. Schenk, G. Prause, and H.-O. Peitgen, “Efficient semiautomatic segmentation of 3d objects in medical images,” in International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer, 2000, pp. 186–195.

- [42] M. A. Finney, FARSITE, Fire Area Simulator–model development and evaluation. US Department of Agriculture, Forest Service, Rocky Mountain Research Station, 1998, no. 4.

- [43] J. E. Hilton, J. E. Leonard, R. Blanchi, G. J. Newnham, K. Opie, A. Power, C. Rucinski, and W. Swedosh, “Radiant heat flux modelling for wildfires,” Mathematics and Computers in Simulation, vol. 175, pp. 62–80, 2020.

- [44] D. Weise, J. Cobian-Iñiguez, and M. Princevac, “Surface to crown transition,” in S. L. Manzello, ed. Encyclopedia of Wildfires and Wildland-Urban Interface (WUI) Fires. Cham, Switzerland: Springer, Cham. 5 p., 2018.