A framework for massive scale personalized promotion

Abstract.

Technology companies building consumer-facing platforms may have access to massive-scale user population. In recent years, promotion with quantifiable incentive has become a popular approach for increasing active users on such platforms. On one hand, increased user activities can introduce network effect, bring in advertisement audience, and produce other benefits. On the other hand, massive-scale promotion causes massive cost. Therefore making promotion campaigns efficient in terms of return-on-investment (ROI) is of great interest to many companies.

This paper proposes a practical two-stage framework that can optimize the ROI of various massive-scale promotion campaigns. In the first stage, users’ personal promotion-response curves are modeled by machine learning techniques. In the second stage, business objectives and resource constraints are formulated into an optimization problem, the decision variables of which are how much incentive to give to each user. In order to do effective optimization in the second stage, counterfactual prediction and noise-reduction are essential for the first stage. We leverage existing counterfactual prediction techniques to correct treatment bias in data. We also introduce a novel deep neural network (DNN) architecture, the deep-isotonic-promotion-network (DIPN), to reduce noise in the promotion response curves. The DIPN architecture incorporates our prior knowledge of response curve shape, by enforcing isotonicity and smoothness. It out-performed regular DNN and other state-of-the-art shape-constrained models in our experiments.

1. Introduction

Digital platforms nowadays serve various demands of societies, for example, e-commerce, ride sharing, and personal finance. For-profit platforms have strong motivation to grow the sizes of their active user bases, because larger active user bases introduce more network effect, more advertisement audience, more cash deposit, etc.

To convert inactive users to active users, one established way is to use personalized recommendation systems (Covington et al., 2016)(Gomez-Uribe and Hunt, 2015). A platform can infer users’ personal interests from profile information and behavior data, and recommend content accordingly. Recommendation systems rely on good product design, ”big data”, efficient machine learning algorithms(Sarwar et al., 2001), and high-quality engineering systems (Amatriain and Basilico, 2016).

Recently, the approach of using promotion incentive, such as coupon, to convert users has become popular (Christino et al., 2019)(Li et al., 2019). To enjoy the incentive, users are required to finish specified tasks, for example subscribing to a service, purchasing a product, sharing promotional information on a social network, etc. The key decision to make in such promotion campaigns is how much incentive to give to each user.

Our work is in the hypothetical context of Alipay offline payment marketing campaign, though it can be easily generalized to other incentive promotion campaigns. Gaining offline payment market share is a main business objective of Alipay. Ren-chuan-ren-hong-bao (social network based red packet) is the largest marketing campaign hosted by Alipay to achieve this goal. In this campaign, Alipay granted coupons to customers to incentivize them to make mobile payments with the Alipay mobile app. Given its marketing campaign budget, the company needed to determine the value of the coupon given to each user to maximize overall user adoption. We illustrate the marketing campaign in Figure 1.

We propose a two-stage framework for solving the personalized incentive decision problem. In the first stage, we model users’ personal promotion-response curves with machine learning algorithms. In the second stage, we formulate the problem as a linear programming (LP) problem and solve it by established LP algorithms.

In practice, modeling promotion-response curves is challenging due to data sparsity and noise. Real-world promotion-response datasets usually lack samples for certain incentive values, even though the total amount of samples is large. Such sparsity combined with noise causes inaccuracy in response curve modeling and sub-optimal decision in incentives. We introduce a novel isotonic neural network architecture, the deep-isotonic-promotion-network (DIPN), to alleviate this problem. DIPN incorporates our prior knowledge of the response curve shape by enforcing isotonicity and regularizing for smoothness. It out-performed regular DNN and other state-of-the-art shape-constrained models in our experiments. Figure 2 illustrates such an example.

Another well known challenge for response curve modeling is treatment bias in historical data. If data samples are not collected through randomized trials, naively fitting the relationship between incentive and response cannot capture the true causal effect of incentive. Figure 3 illustrates an example on how a biased dataset causes sub-optimal incentive decision. In real-world marketing campaigns, collecting fully randomized incentive samples is cost-ineffective, because it means randomly giving a large amount of users random amount of incentives. We use the inverse propensity score (IPS) (Austin, 2011) technique to correct treatment bias.

2. Related work

2.1. Large-scale or personalized optimization problems based on prediction

Many companies, e.g. Linkedin, Pinterest, Yahoo!, have developed solutions for large-scale optimization based on predictive models. (Xu et al., 2015) focused on online advertisement pacing. The authors developed user response prediction models and optimized for performance goals under budget constraints. (Agarwal et al., 2012) focused on online content recommendation. The authors developed personalized click shaping plans by solving a LP problem in its dual space. (Gupta et al., 2016)(Gupta et al., 2017)(Zhao et al., 2018) study the problem of email volume control. The predicted responses at different levels of email volume served as coefficients of engagement maximization problems. To our knowledge, there are few published studies on large-scale personalized incentive optimization.

2.2. Causal inference and counterfactual prediction

Incentive response modeling should establish causal relationship between incentive and user response. The model is used in a counterfactual way to answer the question ”what if users are given incentive levels different from that is observed”. There is a large volume of literature on counterfactual modeling, e.g. (Bonner and Vasile, 2018)(Hartford et al., 2016)(Swaminathan and Joachims, 2015). We use inverse propensity score (IPS) (Austin, 2011)(Rosenbaum and Rubin, 1983) to weight sample data when the data collection process is not randomized. This is assumed in our framework unless otherwise stated.

2.3. Shape-constrained models

Shape constraints include constraints on monotonicity and convexity (concavity). They are a form of regularization, introducing bias and prior knowledge. Shape constraints are helpful in below scenarios.

-

•

Monotonicity or convexity (concavity) is desired for interpretability. For example, in economy theory, marginal gain on increasing promotion incentive should be positive but diminishing (Kahneman and Tversky, 2013). In our experience, promotion response is non-decreasing, but the marginal gain does not necessarily decrease. We thus propose to apply just monotonicity constraint to promotion response models.

-

•

Prior knowledge on function shapes exists, but training data is too sparse to guarantee such shapes without regularization. In our experience, this is usually true for promotion response modeling, because a reasonable model is required as early as possible after a promotion campaign kicks off, allowing very limited time for training data collection. At the same time, we do know that response rate should monotonically increase with incentive.

The related works section of (Gupta et al., 2018) summarized four categories of shape-constrained models:

-

•

General additive models (GAMs). A GAM is a summation of multiple one dimensional functions. Each of the 1-d function takes one input feature, and is responsible for enforcing the desired shape for that input.

-

•

Max-affine functions, which express piece-wise linear convex functions as the max of a set of affine functions. If the derivative with respect to an input is restricted to be positive/negative, monotonicity can also be enforced.

-

•

Monotonic neural networks. Neural networks can be viewed as recursive functions, with the output of one layer serving as the next layer’s input. For a recursive function to be convex increasing, it is sufficient if its input function and the function itself are convex increasing. For a recursive function to be convex, it is sufficient if its input function is convex and the recursion is convex increasing.

-

•

Lattice networks (You et al., 2017). The simplest form of lattice network is linear interpolation built on a grid of input space. Monotonicity and convexity are enforced as linear constraints on first and second order derivatives when solving for the model. The grid dimension grows exponentially with the input dimension, so the authors ensembled multiple low-dimension lattice networks built on different subsets of inputs to handle high dimensional data.

(Gupta et al., 2018) showed that lattice network has more expressive power than monotonic neural network since the former allows convex and concave inputs to coexist, and that lattice network is at least as good as monotonic neural network in accuracy.

Our work proposes the DIPN architecture (discussed in section 4) that constrains NN to be monotonic for one input i.e. the incentive level. It does not aim to compete with state-of-the-art shape constrained models in terms of expressiveness, but instead aim to provide high accuracy and interpretability for promotion response modeling.

3. The personalized promotion framework

The two steps of our framework for making personalized incentive decisions are (1) incentive-response modeling and (2) user response maximization under incentive budget. As the second step is the decision making step, and the first step prepares coefficients for it, we start section 3 by describing the optimization problem in the second step assuming a incentive-response model is already available, and dive deep into our approach for the incentive-response model in section 4.

Table 1 summarizes mathematical notations necessary for this section.

| symbol | meaning |

|---|---|

| feature vector of user i | |

| incentive for user i | |

| response label for user i | |

| user response prediction function with two inputs: x is the user feature vector, and c is the incentive | |

| user cost prediction function for the k-th resource | |

| the j-th incentive level bin after discretizing the incentive space, | |

| total number of incentive level bins after discretizing the incentive space | |

| decision variable representing the probability of choosing for the i-th user | |

| total campaign budget | |

| budget per capita |

The objective of our formulation is to maximize total future user responses, and the constraint is the limited budget. Here future response can an arbitrarily defined business metric, e.g. click-through rate or long-term user value.

| (1) |

3.1. Solving the optimization problem

Because the number of users is large, and is usually nonlinear and non-convex, Equation 1 can be difficult to solve. We can restrict promotion incentive to a fixed number of levels: , and use assignment probability variables to turn Equation 1 into an LP.

| (2) |

Solution of Equation 2 should be on one of the vertices of its feasible polytope, hence most of the values of should be or . For fractional in solutions, we treat as a probability simplex and sample from it. This is usually good enough for production usage.

Equation 2 can be solved by commercial solvers implementing off-the-shelf algorithms such as primal-dual or simplex. If the number of users is too large, Equation 2 can be solved by dual ascent (Boyd et al., 2011). Note the dual problem of the LP can be decomposed into many user-level optimization problems and solved in parallel. A few specialized large-scale algorithms can also be applied to problems with the structure of Equation 2, e.g. (Zhong et al., 2015)(Zhang et al., 2020).

One advantage of Equation 2 is that is computed before solving the optimization problem. Hence the specific choice of the functional form of does not affect the difficulty of the optimization problem. Whether is a logistic regression model or a DNN model is transparent to Equation 2.

Sometimes promotion campaigns have more than one constraints. A commonly seen example is that overlapped subgroups of users are subject to separate budget constraints. In general, we can model any constraints by predictive modeling, and then formulate a multiple-constraint problem. Suppose for each user there are kinds of costs modeled by:

| (3) |

The multiple-constraint optimization problem is:

| (4) |

A frequently seen alternative to constrain the total budget is to constrain budget per capita . The corresponding formulation is as below.

| (5) |

Equation 4 and Equation 5 are both LPs since and are pre-computed coefficients. They can be solved by the same solvers used for Equation 2.

3.2. Online decision making for new users

Sometimes it is desired to be able to make incentive decisions for a stream of incoming users. It is not possible to put such users together and solve one optimization problem beforehand. However, if we can assume the dual variables is stable over a short period of time, such as one hour, we can solve the optimization problem with users in the previous hour, and reuse the optimal dual variables in the next hour. This assumption is usually not applicable to general optimization problems, but when the amount of users is large and user population is stable, it holds. This approach can also be viewed from the perspective of shadow price (Boyd and Vandenberghe, 2004). The Lagrangian multiplier can be interpreted as the marginal objective gain when one more unit of budget is available. This marginal gain should not change rapidly for a stable user population.

We thus break down the optimization step into a dual variable solving step and a decision making step. The decision making step can make incentive decision for a single user. Consider the dual formulation of Equation 2, and let be the Lagrangian multiplier for the budget constraint:

| (6) |

If is given, Equation 6 can be decomposed into a per user optimization policy:

| (7) |

Equation 7 is applicable to unseen users as long as is known.

3.3. Challenges for two-stage framework

”User’s optimal incentive level” is the lowest level for a certain user to be converted. If we offer less incentive, we will lose a user conversion(situation A). If we offer more incentive, we waste a certain amount of marketing funds.

For illustration, we could relax constraint by supposing that optimal is on its boundary, omit the notation of index and rewrite formula 7 as

| (8) |

where .

Figure 2 illustrates wrong prediction leads to wrong best incentive level. In this section, Figure 4 illustrates two situations of them in more details. Considering is the optimal incentive level for user , according to Equation 7, we hope our prediction function satisfy . If due to lack of data, , incentive level will be chosen, and a wrong decision will be made.

Situation A: non-monotonic

the user response prediction function frequently gives a high prediction at a lower incentive level . And because , user didn’t get a satisfactory incentive, we will lose a customer.

In this situation, we introduce prior knowledge to constrain the response curve’s shape: users’ expected response monotonically increases with the promotion incentive. Therefore, must greater than zero.

Situation B: non-smooth

In other situations, a very high prediction is given at a higher incentive level . And because , marketing funds is wasted.

In situation B, we introduce another prior knowledge to constrain the response curve’s shape: the incentive-response curves are smooth. Therefore, should not be too large.

Based on the discussion of these two situations above, we introduce a novel deep-learning structure DIPN to avoid both situations as far as possible.

4. Deep isotonic promotion network (DIPN)

In practice, promotion events often do not last for long, so there is business interest to serve promotion-response models as soon as possible after a campaign starts. Given limited time accumulating training data, incorporating prior knowledge to facilitate modeling is desirable. We choose to enforce the promotion response to be monotonically increasing with incentive level.

DIPN is a DNN model designed for learning user promotion-response curve. DIPN predicts the response value for a given discretized incentive level and a user’s feature vector. We can get a user’s response curve by enumerating all incentive levels. The response curve learned by DIPN satisfies both monotonicity and smoothness. DIPN achieves this using its isotonic layer (discussed later). Incentive level, which is a one-dimensional discrete scalar, and user features are all inputs to the isotonic layer. While incentive level is inputted to the isotonic layer directly, user features can be transformed by other layers. DIPN consists of bias net and uplift net. The term uplift refers to response increment due to incentive increment. While prediction result of the bias net gives the user’s response estimate for minimum incentive, the uplift net learns the uplift response. The DIPN architecture is shown in Figure 5. In the remaining of section 4, we focus on explaining the isotonic layer and learning process.

4.1. Isotonic Embedding

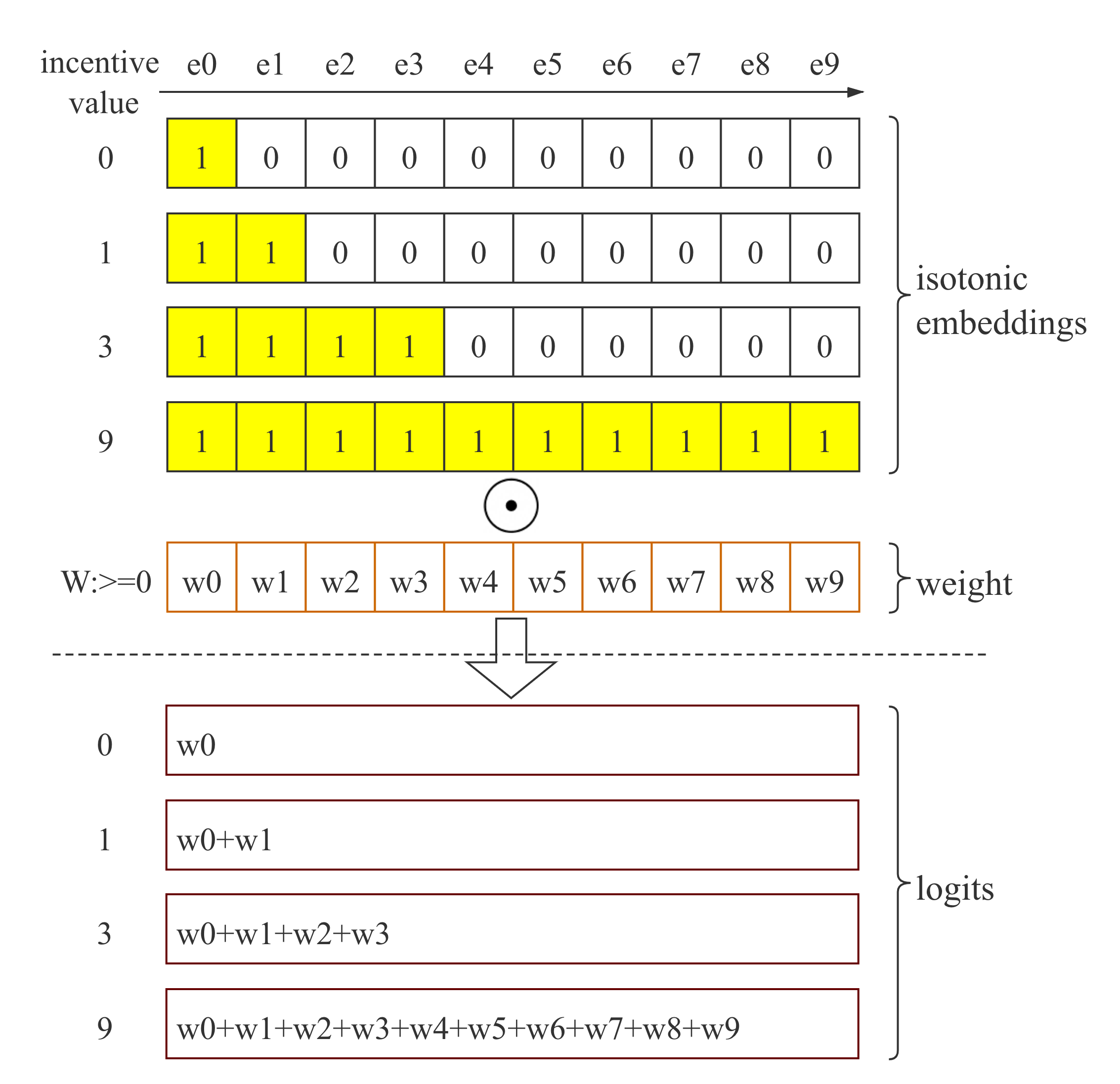

Isotonic embedding transforms an incentive level to a vector of binary values. Each digit in the vector represents one level. All digits representing levels lower than or equal to the input level are ones. We use to denote the -digit isotonic embedding of incentive level , and to denote the incentive level of the digit, thus

| (9) |

If we fit a logistic regression response curve with non-negative weights using isotonic embedding as input, the resulting curve will be monotonically increasing with incentive. Several examples are given in Figure 6.

| (10) |

It is trivial to show the monotonicity of since function is monotonic, and

| (11) |

4.2. Uplift Weight Representation

In DIPN, the non-negative weights are output of DNN layers. These weights are thus personalized. We name the personalized non-negative weight the uplift weight, because it is proportion to the uplift value, defined as the incremental response value corresponding to one unit increase in incentive value.

In a binary classification scenario, the prediction function of DIPN is:

| (12) |

where is the uplift weight representation, and is the isotonic embedding. is learned by a neural network using ReLU activation function in last layer so that is non negative .

For two consecutive incentive levels and , is a uplift measure for the incremental treatment effect of increasing incentive from to . To see this, approximate function by its first order expansion around :

| (13) |

where is function and is its first order derivative, . Hence in a small region surrounding , if can be seen as a constant, is proportional to the uplift value at .

4.3. Smoothness

Smoothness means that response value does not change much as the incentive level varies. Users’ incentive-response curves are usually smooth when fitted with sufficient amount of unbiased data. We therefore add regularization in the DIPN loss function to enforce smoothness.

| (14) |

where is the number of training data points, measures the degree of fitting to training data, measures smoothness of predicted response curve, and balances the two losses.

The definition of and the definition of are given in Equation 15 and Equation 16 respectively. User index in Equation 15 and user feature vector in Equation 16 are omitted for simplicity.

| (15) |

| (16) |

One necessary and sufficient condition of smoothness of the predicted response curve is the uplift values of consecutive incentive levels are as close as enough. As we have proven in subsection 4.2 that the uplift value can be approximated by the uplift weight representation, we want the difference of the uplift weights to be small enough. is added to denominator of to normalize the differences over all incentive levels.

4.4. Two Phases Learning

For stability, the training process is split into two phases-bias net learning phase (BLP) and uplift net learning phase (ULP).

BLP learns the bias net while ULP learns the uplift net. In BLP, only samples with lowest incentive are used for training, and only bias net is activated which is easy to converge.

In ULP, all samples are used and variables in bias net are fixed. The fixed bias net sets up a robust initial boundary for uplift net learning. Bias net’s prediction will be inputted to isotonic layer to enhance uplift net’s capability based on the consumption that users with similar bias are more likely to have similar uplift responses.

Another difference in UWLP is smoothness loss’s weight . will be decaying gradually in UWLP as we observed that larger helped model converging faster at the beginning of training. The logloss will dominates total loss eventually. ’s updating formula is given as follow:

| (17) |

where is initial upper bound of , is final lower bound of , controls decaying speed.

5. Experiment

In experiments, we focus on comparing DIPN with other response models, solving the same optimization problems Equation 2 with the same budget. Specifically, we compare (1) regular DNN, (2) ensemble Lattice network (You et al., 2017), and DIPN. For each model, we search for a good architecture configuration and hyperparameter setting, and calculate below metrics:

-

•

Logloss: Measures the likelihood of the fitted model to explain the observations.

-

•

AUC-ROC: Measures the correctness of the predicted probability order of being positive events.

-

•

Reverse pair rate (RPR): For each observation of user-incentive-response in the dataset, we make counterfactual predictions on the response of the same user given all possible incentives. For any pair of two incentives, if incentive is larger than , and the predicted response of is smaller than that of , we find one reversed pair. For incentives, there are pairs. If there are reversed pairs, the RPR is defined as . RPR can be viewed as the degree of violating the response model monotonicity. To obtain RPR for a population, we average all users’ RPR values.

-

•

Equal pair rate (EPR): Similar to RPR, but instead of counting the pairs of reversed pairs, EPR counts the pairs having equal predicted response. We consider low EPR as a good indicator for monotonicity.

-

•

Max local slope standard deviation (MLSS): Similar to RPR, for each user, we make counterfactual prediction on response for each incentive. For every two consecutive incentives and , assuming their predicted responses are and , we can compute the local slope . Consider a range on incentive , we collect all local slopes inside this range, compute their standard deviation. Across all such incentive ranges, we use the maximum local slope standard deviation as MLSS. This metric reflects smoothness of the response curve. Average of all users MLSS is used as a model’s MLSS.

-

•

Future response: Applying the strategy learned from training data to a new user population, following Equation 7, the average response rates of this population. Higher response rate is better. With synthetic datasets, for which we know the ground truth data generation process, we can use the true response expectation on the promotion given by the strategy to evaluate the response outcome. With real-world datasets, we can search in the holdout dataset for the same type of user with the same promotion given by the strategy, and consider all such users will show the observed response on this promotion. This approach can be viewed as importance sampling.

-

•

Future cost error: Similar to future response, applying the strategy learned from the training data to a holdout population, the user-average cost may exceed the business constraint. We can follow the evaluation method for the future response metric, instead of compute the response rates, compute the response induced cost that exceeds budget.

5.1. Synthetic and Production datasets

We use synthetic dataset, for which we know the ground truth of data generation process, to evaluate our promotion solution. The feature space consists of three 1-in-n categorical variables, with , , and categories, respectively. The joint distribution of the three categories consists of different combinations. For each combination, we randomly generate four parameters , , , and , where is the uniform distribution bounded by and . A curve is then generated as follows:

| (18) |

The discrete version is:

| (19) | |||

| (20) |

The curves of is used as the ground truth of the expected response on different incentive , for each joint category of users. Without loss of generality, we constrain the incentive range to be . It’s easy to see that the curve is monotonically increasing, convex if , and concave if .

To generate pseudo dataset with noise and and sparsity, we first generate a random integer between 1 and 1000 with equal probability, for each feature combination. With being the number of data points for this combination, we randomly choose a promotion integer between 1 and 100 with equal probability for each data point. With promotion and expected response , a label is generated with probability of being 1.

On this dataset, we generated 20000 data points, 5000 training samples, 5000 validation samples and 10000 testing samples.

The evaluation metrics for the response models are shown below tables. Table 2 shows the simulation results on synthetic data with . DIPN showed the highest future response rate and lowest future cost. Table 3 shows the simulation results on synthetic data with . Again, DIPN showed the highest future response rate and lowest future cost. Table 4 shows the results on a real promotion campaign, DIPN showed the lowest future cost and the same future response rate as that of DNN. Overall DIPN consistently outperformed other models in our test.

| DNN | Lattice | DIPN | |

|---|---|---|---|

| LogLoss(lower is better) | 0.5713 | 0.5772 | 0.5770 |

| AUC-ROC | 0.6976 | 0.6931 | 0.6967 |

| RPR(lower is better) | 0.153 | 0 | 0 |

| EPR(lower is better) | 0 | 0.048 | 0.001 |

| MLSS(lower is better) | 0.003 | 0.007 | 0.006 |

| Future response | 0.743 | 0.710 | 0.759 |

| Future cost() | 11.0 | 11.7 | 11.0 |

optimization problem cannot be solved.

| DNN | Lattice | DIPN | |

|---|---|---|---|

| LogLoss | 0.8189 | 0.6267 | 0.5822 |

| AUC-ROC | 0.7582 | 0.7579 | 0.7618 |

| RPR(lower is better) | 0.40 | 0.00 | 0.00 |

| EPR(lower is better) | 0 | 0.05 | 0.00 |

| MLSS(lower is better) | 0.33 | 0.01 | 0.01 |

| Future response | 0.625 | 0.685 | 0.694 |

| Future cost() | 7.5 | 11.0 | 10.9 |

optimization problem cannot be solved.

| DNN | Lattice | DIPN | |

|---|---|---|---|

| LogLoss | 0.2240 | 0.2441 | 0.2189 |

| AUC-ROC | 0.7623 | 0.5000 | 0.7722 |

| RPR(lower is better) | 0.28 | 0.00 | 0.00 |

| EPR(lower is better) | 0.00 | 1.00 | 0.08 |

| MLSS(lower is better) | 0.06 | 0.00 | 0.01 |

| Future response | 0.020 | 0.000 | 0.020 |

| Future cost() | 0.050 | 0.000 | 0.048 |

optimization problem cannot be solved.

5.2. Online Results

We deployed our solution at Alipay and evaluated it in multiple marketing campaigns using A/B tests. Our solution consistently showed better performance than baselines, and was eventually deployed to all users. We show A/B test results for three marketing campaigns for HuaBei. HuaBei is a online micro-loan service launched by Ant Financial. It has about 300 million users according to public data. The incentive is electronic cash voucher, with usability subject to different terms in different campaigns. These campaigns include:

-

•

Preferred Payment: the voucher can be cashed if a user set Huabei as the default payment method in the mobile application of Alipay.

-

•

New User: the voucher can be cashed if a user activates the Huabei service.

-

•

User Churn Intervention: the voucher can be cashed when a user uses Huabei to make a purchase.

All these campaigns have their corresponding business targets strongly correlated with voucher usage, so we use the usage rates as well as the monetary costs as evaluation metrics. Table 5 shows the relative improvements of DIPN compared to DNN model baselines. DIPN models use traffic in each experiment. 95 percentile confidence intervals are shown in square brackets. In all of the experiments, costs were significantly reduced. In two experiments, average voucher use rates were significantly increased.

| Cost (%) | Usage Rate (%) | |

|---|---|---|

| Payment Preferred | -6.05%[-8.63%, -3.47%] | 1.86%[-0.31%, 4.04%] |

| New User Gift | -8.58%[-10.51%, -6.66%] | 5.20%[3.16%, 7.23%] |

| User Churn Intervention | -9.42%[-12.99%, -5.84%] | 8.45%[4.53%, 12.37%] |

6. Conclusion

We focus on the problem of massive-scale personalized promotion in the internet industry. Such promotions typically require maximizing total user responses with limited budget. The decision variables for solving such problems are the incentive associated with the promotion.

We propose a two-step framework for solving such personalized promotion problems. In the first step, each user’s response to each incentive level is predicted, and stored as coefficients for the second step. Predicting all these coefficients for many users is a counterfactual prediction problem. We recommend using randomized promotion policy or appropriate causal inference techniques such as IPS to process training data. To deal with data sparsity and noise, we designed a neural network architecture that incorporate our prior knowledge: (1) users’ expected response monotonically increases with promotion incentive, and (2) the incentive-response curves are smooth. The proposed neural network, DIPN, ensures monotonicity and regularizes the magnitude of the first order derivative change. In the second step, an LP optimization problem can be formulated with the coefficients computed in the first step. We discussed its variants including (1) optimizing with more than one constraints, (2) supporting the constraint on average value, and (3) making decision for unseen users.

In experiments on synthetic datasets, we compared three algorithms: DNN, Deep Lattice Network, and our proposed DIPN. We show that DIPN has better performance in terms of data fitting, constraint violation, and promotion decisions. We also conducted a online experiment in one of Alipay’s promotion campaign, in which user engagement was the desired response. DIPN got 6.05% budget saving without losing user engagement, compared to DNN.

There are many possible extensions to the proposed framework. For example, the promotion response modeling can adapt to any causal inference techniques besides IPS(Dudík et al., 2011), for example causal embedding (Bonner and Vasile, 2018) and instrumental variable(Hartford et al., 2016). Also the optimization formulation can be changed according to business requirements, as long as the computational complexity can be handled.

References

- (1)

- Agarwal et al. (2012) Deepak Agarwal, Bee-Chung Chen, Pradheep Elango, and Xuanhui Wang. 2012. Personalized click shaping through lagrangian duality for online recommendation. In Proceedings of the 35th international ACM SIGIR conference on Research and development in information retrieval. ACM, 485–494.

- Amatriain and Basilico (2016) Xavier Amatriain and Justin Basilico. 2016. System Architectures for Personalization and Recommendation. https://medium.com/netflix-techblog/system-architectures-for-personalization-and-recommendation-e081aa94b5d8

- Austin (2011) Peter C Austin. 2011. An introduction to propensity score methods for reducing the effects of confounding in observational studies. Multivariate behavioral research 46, 3 (2011), 399–424.

- Bonner and Vasile (2018) Stephen Bonner and Flavian Vasile. 2018. Causal embeddings for recommendation. In Proceedings of the 12th ACM Conference on Recommender Systems. ACM, 104–112.

- Boyd et al. (2011) Stephen Boyd, Neal Parikh, Eric Chu, Borja Peleato, Jonathan Eckstein, et al. 2011. Distributed optimization and statistical learning via the alternating direction method of multipliers. Foundations and Trends® in Machine learning 3, 1 (2011), 1–122.

- Boyd and Vandenberghe (2004) Stephen Boyd and Lieven Vandenberghe. 2004. Convex optimization. Cambridge university press.

- Christino et al. (2019) Juliana Maria Magalhães Christino, Thaís Santos Silva, Erico Aurélio Abreu Cardozo, Alexandre de Pádua Carrieri, and Patricia de Paiva Nunes. 2019. Understanding affiliation to cashback programs: An emerging technique in an emerging country. Journal of Retailing and Consumer Services 47 (2019), 78 – 86. https://doi.org/10.1016/j.jretconser.2018.10.009

- Covington et al. (2016) Paul Covington, Jay Adams, and Emre Sargin. 2016. Deep Neural Networks for YouTube Recommendations. In Proceedings of the 10th ACM Conference on Recommender Systems. New York, NY, USA.

- Dudík et al. (2011) Miroslav Dudík, John Langford, and Lihong Li. 2011. Doubly robust policy evaluation and learning. arXiv preprint arXiv:1103.4601 (2011).

- Gomez-Uribe and Hunt (2015) Carlos A. Gomez-Uribe and Neil Hunt. 2015. The Netflix Recommender System: Algorithms, Business Value, and Innovation. ACM Trans. Manage. Inf. Syst. 6, 4, Article 13 (Dec. 2015), 19 pages. https://doi.org/10.1145/2843948

- Gupta et al. (2018) Maya Gupta, Dara Bahri, Andrew Cotter, and Kevin Canini. 2018. Diminishing Returns Shape Constraints for Interpretability and Regularization. In Advances in Neural Information Processing Systems. 6834–6844.

- Gupta et al. (2016) Maya Gupta, Andrew Cotter, Jan Pfeifer, Konstantin Voevodski, Kevin Canini, Alexander Mangylov, Wojciech Moczydlowski, and Alexander Van Esbroeck. 2016. Monotonic Calibrated Interpolated Look-up Tables. J. Mach. Learn. Res. 17, 1 (Jan. 2016), 3790–3836. http://dl.acm.org/citation.cfm?id=2946645.3007062

- Gupta et al. (2017) Rupesh Gupta, Guanfeng Liang, and Romer Rosales. 2017. Optimizing Email Volume For Sitewide Engagement. In Proceedings of the 2017 ACM on Conference on Information and Knowledge Management (CIKM ’17). ACM, New York, NY, USA, 1947–1955. https://doi.org/10.1145/3132847.3132849

- Hartford et al. (2016) Jason Hartford, Greg Lewis, Kevin Leyton-Brown, and Matt Taddy. 2016. Counterfactual prediction with deep instrumental variables networks. arXiv preprint arXiv:1612.09596 (2016).

- Kahneman and Tversky (2013) Daniel Kahneman and Amos Tversky. 2013. Prospect theory: An analysis of decision under risk. In Handbook of the fundamentals of financial decision making: Part I. World Scientific, 99–127.

- Li et al. (2019) Chenchen Li, Xiang Yan, Xiaotie Deng, Yuan Qi, Wei Chu, Le Song, Junlong Qiao, Jianshan He, and Junwu Xiong. 2019. Latent Dirichlet Allocation for Internet Price War. CoRR abs/1808.07621 (2019).

- Rosenbaum and Rubin (1983) Paul R Rosenbaum and Donald B Rubin. 1983. The central role of the propensity score in observational studies for causal effects. Biometrika 70, 1 (1983), 41–55.

- Sarwar et al. (2001) Badrul Sarwar, George Karypis, Joseph Konstan, and John Riedl. 2001. Item-based Collaborative Filtering Recommendation Algorithms. In Proceedings of the 10th International Conference on World Wide Web (WWW ’01). ACM, New York, NY, USA, 285–295. https://doi.org/10.1145/371920.372071

- Swaminathan and Joachims (2015) Adith Swaminathan and Thorsten Joachims. 2015. Counterfactual risk minimization: Learning from logged bandit feedback. In International Conference on Machine Learning. 814–823.

- Xu et al. (2015) Jian Xu, Kuang-chih Lee, Wentong Li, Hang Qi, and Quan Lu. 2015. Smart Pacing for Effective Online Ad Campaign Optimization. In Proceedings of the 21th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD ’15). ACM, New York, NY, USA, 2217–2226. https://doi.org/10.1145/2783258.2788615

- You et al. (2017) Seungil You, David Ding, Kevin Canini, Jan Pfeifer, and Maya R. Gupta. 2017. Deep Lattice Networks and Partial Monotonic Functions. In Proceedings of the 31st International Conference on Neural Information Processing Systems (NIPS’17). Curran Associates Inc., USA, 2985–2993. http://dl.acm.org/citation.cfm?id=3294996.3295058

- Zhang et al. (2020) Xingwen Zhang, Feng Qi, Zhigang Hua, and Shuang Yang. 2020. Solving Billion-Scale Knapsack Problems. arXiv preprint arXiv:2002.00352 (2020).

- Zhao et al. (2018) Bo Zhao, Koichiro Narita, Burkay Orten, and John Egan. 2018. Notification Volume Control and Optimization System at Pinterest. In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining (KDD ’18). ACM, New York, NY, USA, 1012–1020. https://doi.org/10.1145/3219819.3219906

- Zhong et al. (2015) Wenliang Zhong, Rong Jin, Cheng Yang, Xiaowei Yan, Qi Zhang, and Qiang Li. 2015. Stock constrained recommendation in tmall. In Proceedings of the 21th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. 2287–2296.