A Framework for High-throughput Sequence Alignment using Real Processing-in-Memory Systems

Abstract.

Sequence alignment is a memory bound computation whose performance in modern systems is limited by the memory bandwidth bottleneck. Processing-in-memory architectures alleviate this bottleneck by providing the memory with computing competencies. We propose Alignment-in-Memory (AIM), a framework for high-throughput sequence alignment using processing-in-memory, and evaluate it on UPMEM, the first publicly-available general-purpose programmable processing-in-memory system.

Our evaluation shows that a real processing-in-memory system can substantially outperform server-grade multi-threaded CPU systems running at full-scale when performing sequence alignment for a variety of algorithms, read lengths, and edit distance thresholds. We hope that our findings inspire more work on creating and accelerating bioinformatics algorithms for such real processing-in-memory systems.

Our code is available at https://github.com/safaad/aim.

1. Introduction

One of the most fundamental computational steps in most genomic analyses is sequence alignment. This step is formulated as an approximate string matching (ASM) problem (navarro2001guided, ), which typically uses a dynamic programming (DP) algorithm to optimally calculate the type, location, and number of differences in one of the two given genomic sequences. Such sequence alignment information is typically needed for DNA sequence alignment, gene expression analysis, taxonomy profiling of a multi-species metagenomic sample, rapid surveillance of disease outbreaks, and many other important genomic applications.

DP-based alignment algorithms, such as Needleman-Wunsch (NW) (needleman1970general, ) and Smith-Waterman-Gotoh (SWG) (gotoh1982improved, ), are computationally-expensive as they have quadratic time and space complexity (i.e., O() for a sequence length of ). It is mathematically proven that subquadratic alignment algorithm cannot exist (backurs2015edit, ). Recent attempts for improving sequence alignment tend to follow one of three key directions: (1) accelerating the DP algorithms using hardware accelerators, (2) accelerating the DP algorithms using heuristics and limited functionality, and (3) reducing the workload for alignment by filtering out highly dissimilar sequences using pre-alignment filtering algorithms. Comprehensive surveys have been done on these existing attempts (alser2020accelerating, ; alser2020technology, ; alser2022going, ).

The first direction accelerates exact sequence alignment using existing hardware devices, such as SIMD-capable multi-core CPUs, GPUs, and FPGAs, or using to-be-manufactured devices, such as application-specific integrated circuits (ASICs). One of the most recent alignment algorithms, the wavefront algorithm (WFA) (marco2020fast, ), indeed benefits from acceleration via SIMD (marco2020fast, ; marco2022optimal, ), GPUs (aguado2022wfa, ), and FPGAs (haghi2021fpga, ). Parasail (daily2016parasail, ), BWA-MEM2 (vasimuddin2019efficient, ), and mm2-fast (kalikar2022accelerating, ) all exploit SIMD-capable and multi-core CPUs to accelerate sequence alignment in read mapping. SillaX (fujiki2018genax, ) provides an order of magnitude of acceleration through specialization, but requires fabricating their architecture designs into real hardware, which is costly and performed at a semiconductor fabrication facility.

The second direction includes limiting the functionality of sequence alignment to performing only edit distance calculation, as in Edlib (vsovsic2017edlib, ), or limiting the number of calculated entries in the DP table, as in windowing/tiling the DP table (turakhia2018darwin, ; rizk2010gassst, ; arlazarov1970economical, ) and the X-drop algorithm (zhang2000greedy, ) implemented in one of the versions of KSW2 (li2018minimap2, ). This second direction is not mutually exclusive from the first and can also benefit from acceleration via SIMD (li2018minimap2, ), GPUs (ahmed2020gpu, ), and FPGAs (banerjee2018asap, ).

The third direction is to early and quickly detect any two dissimilar genomic sequences, which differ by more than a user-defined edit distance threshold, and exclude them from being aligned as their alignment result is not useful. Pre-alignment filtering usually saves a significant amount of time by avoiding DP-based alignment without sacrificing alignment accuracy or limiting the algorithm functionality as demonstrated when using even basic CPU implementations (rasmussen2006efficient, ; xin2013accelerating, ; rizk2010gassst, ; alser2020sneakysnake, ). Pre-alignment filtering can also benefit from hardware acceleration (xin2015shifted, ; alser2017gatekeeper, ; alser2017magnet1, ; kim2018grim, ; alser2019shouji, ; alser2020sneakysnake, ; cali2020genasm, ).

Regardless of which of these three directions are followed to improve performance, sequence alignment remains a fundamentally memory-bounded computation with a low data reuse ratio (gupta2019rapid, ; 10.1145/3383669.3398279, ; cali2020genasm, ; lavenier2020variant, ). Sequence alignment implementations suffer from wasted execution cycles due to the memory bandwidth bottleneck faced when moving data between the memory units and the computing units (e.g., CPUs, FPGAs, GPUs). This bottleneck exists because of the large disparity in performance between the compute units and memory units in modern computing systems. Following Moore’s law, the number of transistors in processors has been doubling about every two years, leading to an exponential increase in the power of the processor cores (moore1998cramming, ). However, memory performance did not scale comparably which has made the cost of transferring data between the main memory and the CPU more expensive than the computations to be performed by a CPU instruction on the data (mutlu2019processing, ; mutlu2020modern, ).

Processing-in-memory (PIM) architectures alleviate the data movement bottleneck of modern computing systems by providing the memory with computing competencies (mutlu2019processing, ; mutlu2020modern, ; wen2017rebooting, ; hajinazar2021simdram, ; hajinazar2021simdram1, ; ferreira2022pluto, ; mansouri2022genstore, ; puma, ; panther, ; huang2021mixed, ). PIM has been used to improve the performance of a wide variety of memory-bound computations, including sequence alignment as is the case in RAPID (gupta2019rapid, ), BioSEAL (10.1145/3383669.3398279, ), GenASM (cali2020genasm, ), and SeGraM (cali2022segram, ). However, these PIM-based sequence alignment solutions rely on emerging technologies that require either major changes to existing hardware or fabricating new hardware chips that are specially designed for the subject algorithm. These limitations pose a critical barrier to the adoption of PIM in sequence alignment.

UPMEM is the first publicly-available programmable PIM system in the market (devaux2019true, ). The UPMEM architecture integrates conventional DRAM arrays and general-purpose cores called DPUs into the same chip. This architecture allows computations to be performed near where the data resides, which reduces the latency imposed by data movement. UPMEM systems have been used to accelerate memory-bound applications such as database index search, compression/decompression, image reconstruction, genomics, and many others (DBLP:journals/corr/abs-2105-03814, ; gomez2021benchmarking, ; gomez2022benchmarking, ; giannoula2022sparsep, ; lavenier2016mapping, ; lavenier2016blast, ; lavenier2016dna, ; diab2022high, ; lavenier2020variant, ; nider2021case, ; zois2018massively, ).

Our goal is to evaluate the suitability of real PIM systems for accelerating sequence alignment algorithms. To this end, we introduce Alignment-in-Memory (AIM), a framework for PIM-based sequence alignment that targets the UPMEM system. AIM dispatches a large number of sequence pairs across different memory modules and aligns each pair using compute cores within the memory module where the pair resides. AIM supports multiple alignment algorithms including NW, SWG, GenASM, WFA, and WFA-adaptive. Each algorithm has alternate implementations that manage the UPMEM memory hierarchy differently and are suitable for different read lengths.

We evaluate AIM on a real UPMEM system and compare the throughput it can achieve with that achieved by server-grade multi-threaded CPU systems running at full scale. Our evaluation shows that a real PIM system can substantially outperform CPU systems for a wide variety of algorithms, read lengths, and edit distance thresholds. For example, for WFA-adaptive, the state-of-the-art sequence alignment algorithm, AIM achieves a speedup of up to 2.56 when the data transfer time between the CPU and DPUs is included, and up to 28.14 when that data transfer time is not included. These speedups to sequence alignment can translate into substantial performance improvements for widely used bioinformatics tools such as minimap2, where alignment can consume up to 76% of the time (kalikar2022accelerating, ), or BWA-MEM, where alignment can consume up to 47.2% of the time (vasimuddin2019efficient, ). Our results demonstrate that emerging real PIM systems are promising platforms for accelerating sequence alignment. We hope that our findings inspire more work on creating and accelerating bioinformatics algorithms for real PIM systems.

2. System and Methods

In this section, we provide an overview of PIM and the UPMEM PIM architecture, (Section 2.1). We then describe the overall workflow of our PIM-based sequence alignment framework (Section 2.2), how we manage data within the UPMEM PIM memory hierarchy (Section 2.3), and how each of the different sequence alignment algorithms are supported within our framework (Section 2.4).

2.1. PIM and the UPMEM PIM Architecture

Fig. 1 compares the organization of conventional CPU processing systems to PIM systems. In conventional systems, illustrated in Fig. 1(a), data resides in dynamic random access memory (DRAM) which is typically organized into multiple DRAM banks. This data is transferred to the CPU cores where computations are performed on the data. If the same data is reused for many computations, the cost of moving that data from memory to the CPU cores is amortized. However, if the data is not reused, the cost of moving the data is much higher than the cost of the computations on that data. In this case, the CPU cores will be idle most of the time waiting for memory accesses to complete, and the movement of data between the CPU cores and the memory becomes a major performance bottleneck.

In a PIM system, illustrated in Fig. 1(b), small PIM cores are placed in the memory chip near the memory banks. These PIM cores are typically much less powerful than CPU cores at performing computations, however they are much faster at accessing data from memory because of their proximity. Hence, if only a few computations are performed on the data, it is cheaper to perform these computations in the PIM cores than to move the data all the way to the CPU cores. In this case, programmers would load their code onto the PIM cores and execute it there where the data can be fetched quickly, instead of executing it on the CPU cores. Sequence alignment algorithms are well-suited for such a system because there are usually a few computations performed for each data element accessed from the intermediate data structures (e.g., entries of the DP table in NW).

The UPMEM PIM architecture (devaux2019true, ) is the first publicly-available general-purpose programmable (https://sdk.upmem.com) processing-in-DRAM architecture. An UPMEM system consists of a set of UPMEM DIMM modules plugged alongside main memory (standard DDR4 DIMMs) and acting as parallel co-processors to the host CPU. An UPMEM module is a DDR4-2400 DIMM with 16 PIM-enabled chips, where each chip consists of eight general-purpose processing PIM cores called DRAM Processing Units (DPUs). Each DPU is coupled with a 64MB DRAM bank called main RAM (MRAM). A current UPMEM system supports up to 20 UPMEM modules, which is equivalent to 2560 DPUs and 160GB of memory. A DPU is a 32-bit RISC processor with a proprietary Instruction Set Architecture (ISA), which can potentially run at 500 Mhz. Each DPU has 24 hardware threads that share a 24KB instruction memory (IRAM) and a 64KB scratchpad memory which is also called a working RAM (WRAM). The threads also share the 64MB MRAM bank coupled with the DPU. DPUs cannot communicate with other DPUs or access data outside their own MRAM bank. The host CPU transfers data between the main memory and the MRAM banks, and coordinates communication and synchronization across DPUs if needed.

2.2. Overall Workflow

Fig. 2 illustrates our framework’s overall workflow. In Step (1), we read the sequence pairs from an input file on disk and store them to the main memory. In Step (2), we transfer the sequence pairs from the main memory to the UPMEM DIMMs, distributing them evenly across the MRAM banks of the different DPUs. We use parallel transfers so that the sequence pairs are written to multiple MRAM banks simultaneously, thereby optimizing the transfer latency.

Next, we launch the DPU kernels and have each thread in each DPU work independently to align a set of sequence pairs. This parallelization scheme avoids inter-DPU and inter-thread synchronization, which can be expensive in the UPMEM system (DBLP:journals/corr/abs-2105-03814, ). In Step (3), each DPU thread performs a DMA transfer to fetch a sequence pair from MRAM and store it in WRAM. In Step (4), the DPU thread aligns the sequence pair and extracts the alignment operations using traceback. Our framework supports five different alignment algorithms: NW, SWG, GenASM, WFA, and WFA-adaptive. In Step (5), the DPU thread performs a DMA transfer to write the alignment score and operations to MRAM. The thread then moves on to process the next sequence pair, repeating Steps (3), (4), and (5) until all sequence pairs have been processed.

Finally, once all DPUs finish execution, we retrieve and save the alignment results. In Step (6), we transfer the alignment score and operations of each sequence pair from the UPMEM DIMMs to main memory using parallel transfers. In Step (7), we load the results from main memory and write them to an output file on disk.

2.3. Data Management

One important aspect of implementing alignment algorithms on the UPMEM PIM architecture efficiently is data management. Recall from Section 2.1 that an UPMEM DPU has access to two memory spaces for data: a 64KB WRAM and a 64MB MRAM. WRAM is fast and is accessed via loads and stores, whereas MRAM is slow and is accessed via DMA transfers to and from WRAM. Our framework always places the full sequence pair to be aligned and the full alignment result in WRAM for fast access because these data items are small. However, the intermediate data structures used by the alignment algorithms are relatively large. For this reason, it may not be possible to fit the entire intermediate data structure for each DPU thread in WRAM while supporting a large enough number of DPU threads to efficiently utilize the DPU pipeline. In this case, the constrained WRAM capacity can act as a limiting factor to parallelism.

To tackle this trade-off, we provide two alternative implementations of each alignment algorithm. This first implementation, illustrated in Fig. 3 (top), places the entire intermediate data structure for each DPU thread in WRAM, thereby prioritizing fast load/store access to the intermediate data structures. However, as the aligned sequences get larger, the WRAM capacity begins to constrain the number of DPU threads that can be launched, which causes the DPU pipeline to be underutilized. The second implementation, illustrated in Fig. 3 (bottom) places the intermediate data structure for each DPU thread in MRAM and transfers the parts of the data structure that need to be accessed to WRAM on-demand. With the WRAM capacity less of an issue, this approach enables using a larger number of DPU threads to better utilize the DPU pipeline. However, it incurs longer access latency to the intermediate data structures. The user of our framework can easily select which implementation they would like to use, and our framework automatically adapts the number of DPU threads launched depending on the implementation selected as well as the choice of alignment algorithm, read length, and error rate. We evaluate the trade-off between these two implementations in Section 3.5.

Another important aspect of data management is performing dynamic memory allocation efficiently. NW, SWG, and GenASM have fixed-size data structures that are allocated in WRAM or MRAM up-front. However, WFA and WFA-adaptive rely on dynamic memory allocation which is performed frequently as the algorithms run. Dynamic memory allocation is needed for allocating the wavefront components, which vary in size at run time depending on the read length and similarity. The UPMEM SDK has an incremental dynamic memory allocator which allocates memory incrementally from beginning to end and then frees it all at once. However, it is not suitable for our purpose because it is shared by all threads so it requires synchronization across threads to perform allocation, which degrades performance. To overcome this issue, we provide our own per-thread custom memory allocator to perform low-overhead dynamic memory allocation in the WFA and WFA-adaptive algorithms. The allocator also ensures that allocations are properly aligned such that they can be used in DMA transfers between WRAM and MRAM.

2.4. Supported Alignment Algorithms

2.4.1. Needleman-Wunsch (NW)

NW (needleman1970general, ) computes the alignment of sequences using a DP-table. In the implementation that only uses WRAM for intermediate alignment data structures, we have each DPU thread allocate its entire DP-table in WRAM, fill it, perform the traceback, and send the alignment result to MRAM. The size of the DP-table is where and are the lengths of the two aligned sequences. The data type of the DP-cells is . Therefore, the WRAM memory consumption per thread consists of the DP-table (), the sequence pair, and the traceback operations. In this case, the 64KB WRAM capacity limits the maximum read length to 175bp, where one DPU thread is executed consuming 61KB of WRAM. On the other hand, in the implementation that uses MRAM for intermediate alignment data structures, we store the DP-table of each DPU thread in MRAM and use WRAM to store the neighboring DP-table cells of the current cell being computed. In this case, the 64MB MRAM capacity limits the maximum read length to around 4Kbp. NW uses the linear gap model to compute the alignment score. In our evaluation, we set the scoring parameters to (match cost), (mismatch cost), and (deletion/insertion cost).

2.4.2. Smith-Waterman-Gotoh (SWG)

SWG (gotoh1982improved, ) resembles NW, but uses an affine gap model which treats opening new gaps and extending existing gaps differently. To do so, SWG uses three DP-tables – Matching (M), Insertion (I), and Deletion (D) – each of size . In the implementation that only uses WRAM for intermediate alignment data structures, we have each DPU thread store all three DP-tables in WRAM. Since the memory usage of SWG is higher than that of NW, the maximum read length that can be used in this case is only 100bp. On the other hand, in the implementation that uses MRAM for intermediate alignment data structures, we store the three DP-tables of each DPU thread in MRAM and use WRAM to store the neighboring DP-table cells of the current cell being computed. In this case, the maximum read length that can be used is around 2.5Kbp. We physically store the three tables as a single table where each cell has three consecutive values. By doing so, we can transfer cells from all three tables with the same DMA transfer, thereby amortizing the cost of transferring data from MRAM over fewer DMA transfers. In our evaluation, we set the scoring parameters to (match cost), (mismatch cost), (deletion/insertion opening cost), and (deletion/insertion extension cost).

2.4.3. GenASM

GenASM (cali2020genasm, ) is a recently proposed alignment algorithm that modifies and adds a traceback method to the bitap algorithm (10.1145/135239.135243, ; wu1992fast, ). It uses the affine gap model and takes as an input the maximum number of edit distances () allowed while computing the alignment. In the implementation that only uses WRAM for intermediate alignment data structures, we have each DPU thread use WRAM to store the pattern bit-mask for each character in the alphabet, two status bit-vectors to hold the partial alignment between subsequences of the sequences in the pair, and four intermediate bit-vectors for each edit case (matching, substitution, deletion, and insertion). On the other hand, in the implementation that uses MRAM for intermediate alignment data structures, we store the pattern bit-mask and the two status bit-vectors in MRAM and transfer parts of them to WRAM as needed. However, the four intermediate bit vectors are still allocated in WRAM since they are small in size. In our evaluation, we set according to the read length and error rate used.

2.4.4. Wavefront Algorithm (WFA)

WFA (marco2020fast, ) is the state-of-the-art affine gap alignment algorithm that computes exact pairwise alignments efficiently using wavefronts in the DP-table. Each wavefront represents an alignment score, and the algorithm finds successive wavefronts (i.e., computes increasing-score partial alignments) until reaching the optimal alignment. Hence, the complexity of WFA is , where is the alignment score. As the alignment score increases, WFA takes longer to execute and consumes more memory because it spans more diagonals. For this reason, WFA-adaptive (marco2020fast, ), a heuristic variant of WFA, reduces the number of the spanned diagonals by eliminating outer diagonals that are unlikely to lead to an optimal alignment. Note that both WFA and WFA-adaptive have linear space complexity, which makes them representative of other linear space sequence alignment algorithms (myers1988optimal, ; durbin1998biological, ) that could also benefit from our framework.

In our framework, we provide implementations of both WFA and WFA-adaptive. In the implementations that only use WRAM for intermediate alignment data structures, we have each DPU thread use WRAM to store all the wavefront components. On the other hand, in the implementations that use MRAM for intermediate alignment data structures, we store all the wavefront components in MRAM, and keep the addresses of the components in WRAM so they can be found when needed. To compute a new wavefront component , a DPU thread transfers from MRAM to WRAM only the components it needs. After computing , the DPU thread transfers the result from WRAM to MRAM. In our evaluation, we set the scoring parameters to , , , and .

3. Evaluation

3.1. Experimental Setup

We compare the performance of our proposed framework to a mutli-threaded CPU baseline that uses OpenMP to align multiple sequence pairs in parallel. The baseline CPU implementations of NW, SWG, WFA, and WFA-adaptive are taken from the original WFA repository (marco2020fast, ), and the baseline CPU implementation of GenASM is taken from the GenASM repository (cali2020genasm, ).

We evaluate our PIM implementations on an UPMEM system with 2560 DPUs (20 UPMEM-DIMMs) running at 425MHz. We evaluate the CPU implementations on three different server-grade CPU systems shown in Table 1. We use execution time as the basis for comparison, which includes the wall clock time for performing data transfer, alignment, backtrace, and CIGAR string generation.

| System | 1 | 2 | 3 |

|---|---|---|---|

| CPU | Intel Xeon Silver 4215 | Intel Xeon Gold 5120 | Intel Xeon E5-2697 v2 |

| Process node | 14 nm | 14 nm | 22 nm |

| Sockets | 2 | 2 | 2 |

| Cores | 16 | 28 | 24 |

| Threads | 32 | 56 | 48 |

| Frequency | 2.50 GHz | 2.20 GHz | 2.70 GHz |

| L3 cache | 22 MB | 38 MB | 60 MB |

| Memory | 256 GB | 64 GB | 32 GB |

| CPU TDP | 170 W | 210 W | 260 W |

We use real and synthetic datasets to evaluate our proposed framework, as shown in Table 2. The real datasets are short read-reference pairs generated using minimap2 (10.1093/bioinformatics/bty191, ) by mapping the datasets (https://www.ebi.ac.uk/ena/browser/view) mentioned in Table 2 to the human reference genome GRCh37 (church2011modernizing, ). The synthetic datasets are long sequence pairs simulated using the synthetic data generator provided in the WFA repository (marco2020fast, ).

| Read Lengths | Edit Distances | Description |

|---|---|---|

| 100 | 0-5% | Real, Accession# ERR240727 |

| 150 | 0-5% | Real, Accession# SRR826460 |

| 250 | 0-5% | Real, Accession# SRR826471 |

| 500, 1000, 5000, 10000 | 0-5% | Synthetic (marco2020fast, ) |

3.2. CPU Performance

Fig. 4(a) shows how the execution times of the CPU implementations scale with the number of CPU threads while aligning five million sequence pairs using different alignment algorithms and read lengths, and an edit distance of 1%. The results are reported for the Xeon E5 CPU system, which is the best performing CPU system as we show in Section 3.3. Our key observation is that the CPU implementations face limited performance improvement as the number of CPU threads grows. To investigate the cause of this limited performance improvement, we profile the application using perf. Fig. 4(b) shows that as the number of CPU threads increases, the instructions executed per cycle by each thread decreases. Hence, the CPU is increasingly underutilized when more threads are added. To further understand what is causing the CPU to be underutilized, Fig. 4(c) shows that as the number of CPU threads increases, the cycles spent by the CPU stalling while waiting for memory requests increases. Hence, the limited performance improvement is caused by the inability of the memory to serve memory requests quickly enough. These results demonstrate the importance of using PIM to overcome the memory bandwidth bottleneck faced by sequence alignment applications.

3.3. PIM Performance versus CPU Performance

Fig. 5 shows the execution time of our framework aligning five million sequence pairs using different alignment algorithms, read lengths, and edit distances. We report the execution time both with and without the data transfer time between main memory and the UPMEM DIMMs. Based on these results, we make four key observations.

The first observation is that among the three CPU baselines, the best performing baseline in the majority of cases is the Xeon E5 CPU system. Despite not having the largest number of threads, this baseline has the largest L3 cache. This result demonstrates the memory-boundedness of the sequence alignment problem, where having a larger L3 cache is favored over more having more threads.

The second observation is that our framework running on the UPMEM system outperforms the CPU baselines in the majority of cases, even when data transfer time is included. The speedup achieved over the best CPU baseline is up to 4.06 in the case of SWG. For the state-of-the-art algorithms, WFA and WFA-adaptive, the speedups achieved over the best CPU baseline are up to 1.83 and 2.56, respectively. These results demonstrate the effectiveness of PIM at accelerating memory-bound sequence alignment workloads.

Here, we would like to reiterate that our CPU baselines are powerful server-grade dual-socket systems running at full scale. CPU hardware and software have been developed and optimized for decades by large teams of engineers, whereas the UPMEM PIM system has been developed over a few years by a small team. We expect that the relative advantage of PIM systems will be more pronounced as PIM hardware and software matures. We also note that in our current system, the DPUs are running at 425 MHz, however they are expected to run at 500 MHz in future systems which would further improve performance.

The third observation is that, when the data transfer time is not included, our framework achieves a speedup over the best CPU baseline of up to 28.14 in the case of WFA-adaptive (25.93 for WFA). The results without transfer time are important for two reasons. The first reason is that in the current UPMEM system, the UPMEM DIMMs cannot be used as regular main memory. For this reason, the CPU must read the data from disk to main memory then transfer it from main memory to the UPMEM DIMMs. However, in future systems where a PIM module could also be used as main memory, the CPU could potentially read data from disk to the PIM module directly and then perform the alignment in the PIM module without the additional transfer. The second reason is that in the current UPMEM system, one cannot overlap writing to MRAM by the CPU and execution by the DPUs. However, in future systems where CPU access to a PIM module may be overlapped with execution in the PIM cores, such overlapping can hide some of the latency of writing to the PIM module. Therefore, in light of the two aforementioned reasons, the results without the transfer time demonstrate the potential that future PIM systems have for accelerating memory-bound sequence alignment workloads.

The fourth observation is that our framework does not outperform the best CPU baseline for NW and SWG at small read lengths. NW and SWG have more regular memory access patterns than the other alignment algorithms, and when the read lengths are small, the intermediate data structures fit into the CPU cache. The combination of these two factors makes the memory bandwidth bottleneck less pronounced, which diminishes the advantage of PIM. However, for other algorithms where the memory access pattern is less regular, and for larger read lengths where the sizes of the intermediate data structures outgrow the size of the CPU cache, the computation becomes more memory bound causing our framework to achieve large speedups over the CPU baseline.

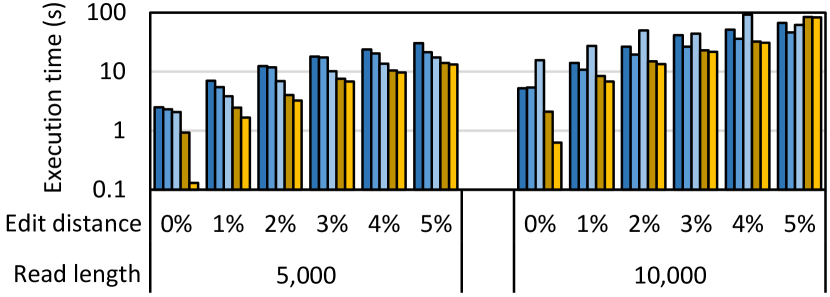

To further study the scalability of our framework, we evaluate the state-of-the-art algorithm, WFA-adaptive, on large sequence lengths of 5Kbp and 10Kbp. NW and SWG have difficulty scaling to such large read lengths because their quadratic space complexity causes them to exceed the MRAM capacity even for a single alignment. However, WFA and WFA-adaptive are not limited by MRAM capacity due to their linear space complexity. The execution time of WFA-adaptive for large read lengths while aligning one million sequence pairs is shown in Fig. 6. We observe that our framework executing on the UPMEM system continues to outperform all CPU baselines. The only exception is for read length 10,000 with an edit distance threshold of 5%. In this case, the WRAM capacity is only sufficient to support a single DPU thread per DPU which underutilizes the DPU pipelines. Although the algorithm is not limited by the 64MB MRAM for storing all the wavefront components, it is limited by the 64KB WRAM for storing the select wavefront components needed for computing a new wavefront component. Hence, our current framework executing on the current hardware cannot scale to read lengths far beyond 10,000 for WFA and WFA-adaptive due to WRAM capacity constraints. In some bioinformatics applications (such as minimap2), sequence alignment is typically performed only between every two seed chains to avoid long execution time and peak memory that could result from performing sequence alignment on the complete read sequence. Thus, we believe that our framework is useful for a wide range of applications despite the sequence length limitation. However, if sequence lengths far beyond 10,000 are a concern, the current limitation can be mitigated by streaming partial wavefront components from MRAM to WRAM in order to reduce the WRAM footprint and support aligning more sequence pairs of larger length simultaneously, or by using multiple DPU threads to align a single sequence pair. These improvements are the subject of our future work. We also expect the supportable read length and the performance for large read lengths to improve in future systems as the hardware and software mature, as the clock frequency of the DPUs increases, and as future systems may have larger WRAM capacity.

3.4. PIM Performance versus GPU Performance

Table 3 compares the throughput of our PIM implementations of WFA-adaptive (the fastest algorithm) to a recent GPU implementation of WFA-adaptive by (aguado2022wfa, ) using the results reported in that work. It is clear that our PIM implementation outperforms the GPU implementation in the majority of cases. This result shows that PIM is a promising technology for accelerating sequence alignment, even when compared to mature and widely used accelerators such as GPUs.

| Sequence | Edit | Throughput (alignments per second) | Throughput | |

|---|---|---|---|---|

| length | distance | WFA-GPU | UPMEM (with transfer) | improvement |

| 150 | 2% | 9.09M | 12.97M | 1.42 |

| 5% | 5.56M | 7.03M | 1.27 | |

| 1,000 | 2% | 1.43M | 1.10M | 0.77 |

| 5% | 370K | 434K | 1.17 | |

| 10,000 | 2% | 25.0K | 66.9K | 2.68 |

| 5% | 5.56K | 11.81K | 2.12 | |

3.5. Using WRAM Only or WRAM and MRAM

Recall from Section 2.3 that our framework provides two implementations of each algorithm: one that only uses WRAM for intermediate alignment data structures, and another that uses MRAM for these data structures and transfers data currently being accessed from these data structures to WRAM as needed. Fig. 7 compares the execution time and scalability of these two implementations for each algorithm and read length with edit distance 1% and 5%. Based on these results, we make three key observations.

The first observation is that for the algorithms that use a large amount of memory for the intermediate alignment data structures (i.e., NW and SWG), the implementations that use both WRAM and MRAM scale better with the read length than those that only use WRAM. In the case of NW for small read lengths, the implementation that uses WRAM only is up to 1.70 faster. However, for the remaining cases, this implementation is slower or cannot even execute. The reason is that the implementations that use WRAM only can only support a small number of DPU threads due to the constrained WRAM capacity, which prevents them from utilizing the DPU pipeline well. In contrast, the implementations that use both WRAM and MRAM can support a larger number of DPU threads, causing them to perform better for long read lengths despite incurring higher memory access latency. Note that the trends for these two algorithms are independent of the edit distance since their operations and memory consumption are independent of the alignment score.

The second observation is that for the algorithm that uses a small amount of memory for the intermediate alignment data structures (i.e., GenASM), the implementation that only uses WRAM is usually faster (up to 2.76 for edit distance 1%). The reason is that the WRAM only implementation can support a large enough number of DPU threads to utilize the DPU pipeline well. We note, however, that for larger edit distances that require more memory, the implementation that uses WRAM and MRAM becomes more favorable for large reads. For example, for read length 1,000 and edit distance 5%, the implementation that uses WRAM and MRAM together is 1.91 faster.

The third observation is that for the implementations that use a moderate amount of memory for the intermediate alignment data structures (i.e., WFA and WFA-adaptive), the implementations that use WRAM only are faster for shorter reads and low edit distance (up to 1.17 for WFA and 1.12 for WFA-adaptive at an edit distance of 1%). On the other hand, the implementations that use both WRAM and MRAM are faster for longer reads (up to 2.70 for WFA and 1.25 for WFA-adaptive at an edit distance of 1%). The reason is that the number of DPU threads that can be used decreases as the read length gets larger, which favors the implementation that can use more threads over the one with lower access latency. Note that the difference between the two implementations grows as the edit distance grows because the memory consumption of WFA and WFA-adaptive is highly sensitive to the edit distance. For example, for edit distance 5%, the implementation that uses WRAM and MRAM together is up to 6.15 faster.

The three observations presented in this section demonstrate the importance of our framework supporting both implementations of each algorithm, those that use WRAM only and those that use WRAM and MRAM for intermediate alignment data structures. In either case, our framework can automatically identify the maximum number of threads that can be used to alleviate this burden from the user.

4. Conclusion

We present a framework for high-throughput pairwise sequence alignment that overcomes the memory bandwidth bottleneck by using real processing-in-memory systems. Our framework targets UPMEM, the first publicly-available general-purpose programmable PIM architecture. It supports multiple alignment algorithms and includes two implementations of each algorithm that manage the UPMEM memory hierarchy differently and are suitable for different read lengths. Our evaluation shows that our framework executing on an UPMEM PIM system substantially outperforms parallel CPU implementations executing at full-scale on dual-socket server-grade CPU systems. Our results demonstrate that PIM systems provide a promising alternative for accelerating sequence alignment. We expect even larger improvements from future incarnations of PIM systems.

Acknowledgements and Funding

We thank Fabrice Devaux and Rémy Cimadomo for their valuable support, including insightful feedback and access to UPMEM hardware. This work has been supported by the University Research Board of the American University of Beirut [URB-AUB-104107-26306]. We also acknowledge the generous gifts provided by the SAFARI Research Group’s industrial partners, including ASML, Facebook, Google, Huawei, Intel, Microsoft, VMware, and Xilinx, as well as the support from the Semiconductor Research Corporation, the ETH Future Computing Laboratory, and the European Union’s Horizon programme for research and innovation under grant agreement No 101047160, project BioPIM (Processing-in-memory architectures and programming libraries for bioinformatics algorithms).

References

- (1) G. Navarro, “A guided tour to approximate string matching,” ACM computing surveys (CSUR), vol. 33, no. 1, pp. 31–88, 2001.

- (2) S. B. Needleman and C. D. Wunsch, “A general method applicable to the search for similarities in the amino acid sequence of two proteins,” Journal of molecular biology, vol. 48, no. 3, pp. 443–453, 1970.

- (3) O. Gotoh, “An improved algorithm for matching biological sequences,” Journal of molecular biology, vol. 162, no. 3, pp. 705–708, 1982.

- (4) A. Backurs and P. Indyk, “Edit distance cannot be computed in strongly subquadratic time (unless seth is false),” in Proceedings of the Annual ACM Symposium on Theory of Computing, 2015, pp. 51–58.

- (5) M. Alser, Z. Bingöl, D. S. Cali, J. Kim, S. Ghose, C. Alkan, and O. Mutlu, “Accelerating genome analysis: A primer on an ongoing journey,” IEEE Micro, vol. 40, no. 5, pp. 65–75, 2020.

- (6) M. Alser, J. Rotman, K. Taraszka, H. Shi, P. I. Baykal, H. T. Yang, V. Xue, S. Knyazev, B. D. Singer, B. Balliu et al., “Technology dictates algorithms: Recent developments in read alignment,” arXiv preprint arXiv:2003.00110, 2020.

- (7) M. Alser, J. Lindegger, C. Firtina, N. Almadhoun, H. Mao, G. Singh, J. Gomez-Luna, and O. Mutlu, “Going from molecules to genomic variations to scientific discovery: Intelligent algorithms and architectures for intelligent genome analysis,” arXiv preprint arXiv:2205.07957, 2022.

- (8) S. Marco-Sola, J. C. Moure López, M. Moreto Planas, and A. Espinosa Morales, “Fast gap-affine pairwise alignment using the wavefront algorithm,” Bioinformatics, no. btaa777, pp. 1–8, 2020.

- (9) S. Marco-Sola, J. M. Eizenga, A. Guarracino, B. Paten, E. Garrison, and M. Moreto, “Optimal gap-affine alignment in o (s) space,” bioRxiv, 2022.

- (10) Q. Aguado-Puig, S. Marco-Sola, J. C. Moure, C. Matzoros, D. Castells-Rufas, A. Espinosa, and M. Moreto, “WFA-GPU: Gap-affine pairwise alignment using GPUs,” bioRxiv, 2022.

- (11) A. Haghi, S. Marco-Sola, L. Alvarez, D. Diamantopoulos, C. Hagleitner, and M. Moreto, “An FPGA accelerator of the wavefront algorithm for genomics pairwise alignment,” in 2021 31st International Conference on Field-Programmable Logic and Applications (FPL), 2021, pp. 151–159.

- (12) J. Daily, “Parasail: SIMD C library for global, semi-global, and local pairwise sequence alignments,” BMC bioinformatics, vol. 17, no. 1, pp. 1–11, 2016.

- (13) M. Vasimuddin, S. Misra, H. Li, and S. Aluru, “Efficient architecture-aware acceleration of BWA-MEM for multicore systems,” in 2019 IEEE International Parallel and Distributed Processing Symposium (IPDPS). IEEE, 2019, pp. 314–324.

- (14) S. Kalikar, C. Jain, M. Vasimuddin, and S. Misra, “Accelerating minimap2 for long-read sequencing applications on modern CPUs,” Nature Computational Science, vol. 2, no. 2, pp. 78–83, 2022.

- (15) D. Fujiki, A. Subramaniyan, T. Zhang, Y. Zeng, R. Das, D. Blaauw, and S. Narayanasamy, “Genax: A genome sequencing accelerator,” in 2018 ACM/IEEE 45th Annual International Symposium on Computer Architecture (ISCA). IEEE, 2018, pp. 69–82.

- (16) M. Šošić and M. Šikić, “Edlib: a C/C++ library for fast, exact sequence alignment using edit distance,” Bioinformatics, vol. 33, no. 9, pp. 1394–1395, 2017.

- (17) Y. Turakhia, G. Bejerano, and W. J. Dally, “Darwin: A genomics co-processor provides up to 15,000 x acceleration on long read assembly,” ACM SIGPLAN Notices, vol. 53, no. 2, pp. 199–213, 2018.

- (18) G. Rizk and D. Lavenier, “GASSST: global alignment short sequence search tool,” Bioinformatics, vol. 26, no. 20, pp. 2534–2540, 2010.

- (19) V. L. Arlazarov, Y. A. Dinitz, M. Kronrod, and I. Faradzhev, “On economical construction of the transitive closure of an oriented graph,” in Doklady Akademii Nauk, vol. 194, no. 3. Russian Academy of Sciences, 1970, pp. 487–488.

- (20) Z. Zhang, S. Schwartz, L. Wagner, and W. Miller, “A greedy algorithm for aligning DNA sequences,” Journal of Computational biology, vol. 7, no. 1-2, pp. 203–214, 2000.

- (21) H. Li, “Minimap2: pairwise alignment for nucleotide sequences,” Bioinformatics, vol. 34, no. 18, pp. 3094–3100, 2018.

- (22) N. Ahmed, T. D. Qiu, K. Bertels, and Z. Al-Ars, “GPU acceleration of Darwin read overlapper for de novo assembly of long DNA reads,” BMC bioinformatics, vol. 21, no. 13, 2020.

- (23) S. S. Banerjee, M. El-Hadedy, J. B. Lim, Z. T. Kalbarczyk, D. Chen, S. S. Lumetta, and R. K. Iyer, “ASAP: accelerated short-read alignment on programmable hardware,” IEEE Transactions on Computers, vol. 68, no. 3, pp. 331–346, 2018.

- (24) K. R. Rasmussen, J. Stoye, and E. W. Myers, “Efficient q-gram filters for finding all -matches over a given length,” Journal of Computational Biology, vol. 13, no. 2, pp. 296–308, 2006.

- (25) H. Xin, D. Lee, F. Hormozdiari, S. Yedkar, O. Mutlu, and C. Alkan, “Accelerating read mapping with fasthash,” in BMC genomics, vol. 14. Springer, 2013, pp. 1–13.

- (26) M. Alser, T. Shahroodi, J. Gómez-Luna, C. Alkan, and O. Mutlu, “SneakySnake: a fast and accurate universal genome pre-alignment filter for CPUs, GPUs and FPGAs,” Bioinformatics, vol. 36, no. 22-23, pp. 5282–5290, 2020.

- (27) H. Xin, J. Greth, J. Emmons, G. Pekhimenko, C. Kingsford, C. Alkan, and O. Mutlu, “Shifted Hamming distance: a fast and accurate SIMD-friendly filter to accelerate alignment verification in read mapping,” Bioinformatics, vol. 31, no. 10, pp. 1553–1560, 2015.

- (28) M. Alser, H. Hassan, H. Xin, O. Ergin, O. Mutlu, and C. Alkan, “GateKeeper: a new hardware architecture for accelerating pre-alignment in DNA short read mapping,” Bioinformatics, vol. 33, no. 21, pp. 3355–3363, 2017.

- (29) M. Alser, O. Mutlu, and C. Alkan, “MAGNET: Understanding and improving the accuracy of genome pre-alignment filtering,” Transactions on Internet Research, vol. 13, no. 2, pp. 33–42, 2017.

- (30) J. S. Kim, D. S. Cali, H. Xin, D. Lee, S. Ghose, M. Alser, H. Hassan, O. Ergin, C. Alkan, and O. Mutlu, “GRIM-Filter: Fast seed location filtering in DNA read mapping using processing-in-memory technologies,” BMC genomics, vol. 19, no. 2, pp. 23–40, 2018.

- (31) M. Alser, H. Hassan, A. Kumar, O. Mutlu, and C. Alkan, “Shouji: a fast and efficient pre-alignment filter for sequence alignment,” Bioinformatics, vol. 35, no. 21, pp. 4255–4263, 2019.

- (32) D. S. Cali, G. S. Kalsi, Z. Bingöl, C. Firtina, L. Subramanian, J. S. Kim, R. Ausavarungnirun, M. Alser, J. Gomez-Luna, A. Boroumand et al., “GenASM: A high-performance, low-power approximate string matching acceleration framework for genome sequence analysis,” in 2020 53rd Annual IEEE/ACM International Symposium on Microarchitecture (MICRO). IEEE, 2020, pp. 951–966.

- (33) S. Gupta, M. Imani, B. Khaleghi, V. Kumar, and T. Rosing, “RAPID: A ReRAM processing in-memory architecture for DNA sequence alignment,” in IEEE/ACM International Symposium on Low Power Electronics and Design. IEEE, 2019.

- (34) R. Kaplan, L. Yavits, and R. Ginosasr, “BioSEAL: In-memory biological sequence alignment accelerator for large-scale genomic data,” in 13th ACM International Systems and Storage Conference, 2020, p. 36–48.

- (35) D. Lavenier, R. Jodin, and R. Cimadomo, “Variant calling parallelization on processor-in-memory architecture,” bioRxiv, 2020.

- (36) G. E. Moore, “Cramming more components onto integrated circuits,” Proceedings of the IEEE, vol. 86, no. 1, pp. 82–85, 1998.

- (37) O. Mutlu, S. Ghose, J. Gómez-Luna, and R. Ausavarungnirun, “Processing data where it makes sense: Enabling in-memory computation,” Microprocessors and Microsystems, vol. 67, pp. 28–41, 2019.

- (38) ——, “A modern primer on processing in memory,” arXiv preprint arXiv:2012.03112, 2020.

- (39) W.-m. Hwu, I. El Hajj, S. G. De Gonzalo, C. Pearson, N. S. Kim, D. Chen, J. Xiong, and Z. Sura, “Rebooting the data access hierarchy of computing systems,” in 2017 IEEE International Conference on Rebooting Computing (ICRC). IEEE, 2017.

- (40) N. Hajinazar, G. F. Oliveira, S. Gregorio, J. Ferreira, N. M. Ghiasi, M. Patel, M. Alser, S. Ghose, J. G. Luna, and O. Mutlu, “SIMDRAM: An end-to-end framework for bit-serial SIMD computing in DRAM,” arXiv preprint arXiv:2105.12839, 2021.

- (41) N. Hajinazar, G. F. Oliveira, S. Gregorio, J. D. Ferreira, N. M. Ghiasi, M. Patel, M. Alser, S. Ghose, J. Gómez-Luna, and O. Mutlu, “SIMDRAM: a framework for bit-serial SIMD processing using DRAM,” in Proceedings of the 26th ACM International Conference on Architectural Support for Programming Languages and Operating Systems, 2021, pp. 329–345.

- (42) J. D. Ferreira, G. Falcao, J. Gómez-Luna, M. Alser, L. Orosa, M. Sadrosadati, J. S. Kim, G. F. Oliveira, T. Shahroodi, A. Nori et al., “pluto: Enabling massively parallel computation in dram via lookup tables,” in 2022 55th IEEE/ACM International Symposium on Microarchitecture (MICRO). IEEE, 2022, pp. 900–919.

- (43) N. Mansouri Ghiasi, J. Park, H. Mustafa, J. Kim, A. Olgun, A. Gollwitzer, D. Senol Cali, C. Firtina, H. Mao, N. Almadhoun Alserr et al., “Genstore: a high-performance in-storage processing system for genome sequence analysis,” in Proceedings of the 27th ACM International Conference on Architectural Support for Programming Languages and Operating Systems, 2022, pp. 635–654.

- (44) A. Ankit, I. El Hajj, S. R. Chalamalasetti, G. Ndu, M. Foltin, R. S. Williams, P. Faraboschi, W.-m. W. Hwu, J. P. Strachan, K. Roy, and D. S. Milojicic, “PUMA: A programmable ultra-efficient memristor-based accelerator for machine learning inference,” in Proceedings of the Twenty-Fourth International Conference on Architectural Support for Programming Languages and Operating Systems, 2019, p. 715–731.

- (45) A. Ankit, I. El Hajj, S. r. Chalamalasetti, S. Agarwal, M. Marinella, M. Foltin, J. P. Strachan, D. Milojicic, W.-m. Hwu, and K. Roy, “PANTHER: A programmable architecture for neural network training harnessing energy-efficient reram,” IEEE Transactions on Computers, vol. PP, 05 2020.

- (46) S. Huang, A. Ankit, P. Silveira, R. Antunes, S. R. Chalamalasetti, I. El Hajj, D. E. Kim, G. Aguiar, P. Bruel, S. Serebryakov et al., “Mixed precision quantization for reram-based dnn inference accelerators,” in 2021 26th Asia and South Pacific Design Automation Conference (ASP-DAC). IEEE, 2021, pp. 372–377.

- (47) D. S. Cali, K. Kanellopoulos, J. Lindegger, Z. Bingöl, G. S. Kalsi, Z. Zuo, C. Firtina, M. B. Cavlak, J. Kim, N. M. Ghiasi et al., “Segram: A universal hardware accelerator for genomic sequence-to-graph and sequence-to-sequence mapping,” in Proceedings of the 49th Annual International Symposium on Computer Architecture, 2022, pp. 638–655.

- (48) F. Devaux, “The true processing in memory accelerator,” in 2019 IEEE Hot Chips 31 Symposium (HCS). IEEE Computer Society, 2019.

- (49) J. Gómez-Luna, I. El Hajj, I. Fernandez, C. Giannoula, G. F. Oliveira, and O. Mutlu, “Benchmarking a new paradigm: An experimental analysis of a real processing-in-memory architecture,” arXiv preprint arXiv:2105.03814, 2021.

- (50) ——, “Benchmarking memory-centric computing systems: Analysis of real processing-in-memory hardware,” in 12th International Green and Sustainable Computing Conference (IGSC), 2021.

- (51) ——, “Benchmarking a new paradigm: Experimental analysis and characterization of a real processing-in-memory system,” IEEE Access, vol. 10, pp. 52 565–52 608, 2022.

- (52) C. Giannoula, I. Fernandez, J. G. Luna, N. Koziris, G. Goumas, and O. Mutlu, “Sparsep: Towards efficient sparse matrix vector multiplication on real processing-in-memory architectures,” Proceedings of the ACM on Measurement and Analysis of Computing Systems, vol. 6, no. 1, pp. 1–49, 2022.

- (53) D. Lavenier, C. Deltel, D. Furodet, and J.-F. Roy, “MAPPING on UPMEM,” Ph.D. dissertation, INRIA, 2016.

- (54) ——, “BLAST on UPMEM,” Ph.D. dissertation, INRIA Rennes-Bretagne Atlantique, 2016.

- (55) D. Lavenier, J.-F. Roy, and D. Furodet, “DNA mapping using processor-in-memory architecture,” in 2016 IEEE International Conference on Bioinformatics and Biomedicine (BIBM). IEEE, 2016, pp. 1429–1435.

- (56) S. Diab, A. Nassereldine, M. Alser, J. G. Luna, O. Mutlu, and I. El Hajj, “High-throughput pairwise alignment with the wavefront algorithm using processing-in-memory,” arXiv:2204.02085, 2022.

- (57) J. Nider, C. Mustard, A. Zoltan, J. Ramsden, L. Liu, J. Grossbard, M. Dashti, R. Jodin, A. Ghiti, J. Chauzi et al., “A case study of processing-in-memory in off-the-shelf systems,” in USENIX Annual Technical Conference, 2021, pp. 117–130.

- (58) V. Zois, D. Gupta, V. J. Tsotras, W. A. Najjar, and J.-F. Roy, “Massively parallel skyline computation for processing-in-memory architectures,” in 27th International Conference on Parallel Architectures and Compilation Techniques, 2018.

- (59) R. Baeza-Yates and G. H. Gonnet, “A new approach to text searching,” Commun. ACM, vol. 35, no. 10, p. 74–82, oct 1992.

- (60) S. Wu and U. Manber, “Fast text searching: allowing errors,” Communications of the ACM, vol. 35, no. 10, pp. 83–91, 1992.

- (61) E. W. Myers and W. Miller, “Optimal alignments in linear space,” Bioinformatics, vol. 4, no. 1, pp. 11–17, 1988.

- (62) R. Durbin, S. R. Eddy, A. Krogh, and G. Mitchison, Biological sequence analysis: probabilistic models of proteins and nucleic acids. Cambridge university press, 1998.

- (63) H. Li, “Minimap2: pairwise alignment for nucleotide sequences,” Bioinformatics, vol. 34, no. 18, pp. 3094–3100, 05 2018.

- (64) D. M. Church, V. A. Schneider, T. Graves, K. Auger, F. Cunningham, N. Bouk, H.-C. Chen, R. Agarwala, W. M. McLaren, G. R. Ritchie et al., “Modernizing reference genome assemblies,” PLoS biology, vol. 9, no. 7, p. e1001091, 2011.