A Flexible Lossy Depth Video Coding Scheme Based on Low-rank Tensor Modelling & HEVC Intra Prediction for Free Viewpoint Video

Abstract

The compression quality losses of depth sequences determine quality of view synthesis in free-viewpoint video. The depth map intra prediction in 3D extensions of the HEVC applies intra modes with auxiliary depth modeling modes (DMMs) to better preserve depth edges and handle motion discontinuities. Although such modes enable high efficiency compression, but at the cost of very high encoding complexity. Skipping conventional intra coding modes and DMMs in depth coding limits practical applicability of the HEVC for 3D display applications. In this paper, we introduce a novel low-complexity scheme for depth video compression based on low-rank tensor decomposition and HEVC intra coding. The proposed scheme leverages spatial and temporal redundancy by compactly representing the depth sequence as a high-order tensor. Tensor factorization into a set of factor matrices following CANDECOMP/PARAFAC (CP) decomposition via alternating least squares give a low-rank approximation of the scene geometry. Further, compression of factor matrices with HEVC intra prediction support arbitrary target accuracy by flexible adjustment of bitrate, varying tensor decomposition ranks and quantization parameters. The results demonstrate proposed approach achieves significant rate gains by efficiently compressing depth planes in low-rank approximated representation. The proposed algorithm is applied to encode depth maps of benchmark “Ballet” and “Breakdancing” sequences. The decoded depth sequences are used for view synthesis in a multi-view video system, maintaining appropriate rendering quality.

Index Terms:

Depth video coding, 3D video representation, tensor decomposition, low-rank approximation, 3D displays, 3D-HEVC, depth intra mode, view synthesis, rate distortion optimization.I Introduction

The market of 3D videos will continue to flourish in upcoming days. The strongest demand of 3D contents currently comes from industries in the creative economy and immersive media such as Gaming, Virtual Reality (VR) or Augmented Reality (AR) platforms, Displays, Film Entertainment, Imaging Systems and Retail. More pervasive applications of 3D videos, we will find in industries as diverse as healthcare, education, military, and the real estate over time. The advancement of affordable Time-of-flight (ToF) sensing or 3D depth cameras, further, promote application of 3D videos in academia and industry. There exist a variety of 3D video content formats in the user mass market to support 3D applications [1]. This includes conventional stereo video (CSV), mixed resolution stereo (MRS), hybrid mono-stereo, video plus depth (V+D), multiview video (MVV), multiview video plus depth (MVD), and layered depth video (LDV). These formats are specifically used for rendering in 3D and VR head mounted displays [2, 3]. Moving Picture Experts Group (MPEG) standardized MVD and LDV representations and depth-image-based rendering (DIBR) for flexible display adaptation of current 3D display technology. Latest call for 3D video standardization support depth enhanced stereo (DES) as a generic backward compatible 3D video format that would support extended functionality like baseline adaptation, post production, multi-view rendering, specific to display types and sizes [4, 5]. The objective is to decouple content creation from the display system requirement. The key functionality in this context is identified as manipulation of the depth composition of a scene via view synthesis [6, 7]. Owing to the constraints in transmission and broadcasting channels, only limited texture views and their corresponding depth maps are acquired, compressed, and transmitted for 3D video applications. At decoder side, with the aid of depth information, DIBR is employed to synthesize an arbitrary number of views to support head motion parallax viewing and baseline adaptation within practical limits of different multiview display types. The perceptual quality of synthesized novel views is mainly determined by the quality of RGB images and depth maps as well as DIBR algorithm [8, 9]. Predominately, rendered view quality is sensitive to the compression distortion in depth images.

A depth map is basically stored as a gray-level image. It describes the relative distance from recording camera to actual objects in the 3D space [10]. The characteristics of depth maps are fairly different from RGB texture images. Typically, a depth map consists of smooth regions and sharp edges around the object’s boundaries [11]. The compression distortion of sharp edges causes visual artifacts such as ringing effect at object’s boundaries in synthesized views using DIBR algorithm [8, 9]. Thus, preserving sharp edges is a critical task for depth video coding and high-quality view synthesis. The conventional 2D block matching based video coding algorithms are suboptimal for depth image sequences [11, 12, 13]. Such approaches generally divide large homogeneous regions in depth maps into small blocks. The sharp discontinuities around object boundaries can be placed within the same block. Block matching with arbitrary motion prediction is fairly demanding. This leads to significant coding artifacts around the depth edges in decoded depth images at high compression ratios [14, 15, 16, 17].

Joint Collaborative Team on Video Coding (JCT-VC) introduced a new generation of coding standards for 3D video such as High Efficiency Video Coding (HEVC) [18], 3D-HEVC [19], MV-HEVC [20], etc. New tools have been introduced such as depth modeling mode (DMM) [21], segment-based depth coding (SDC) [22], and view synthesis optimization (VSO) [21, 23, 24] to explicitly handle depth video coding with consideration of preserving sharp edges. DMM intra mode of depth maps in 3D-HEVC offers flexibility in representing the sharp edges by following a non-rectangle partition approach based on wedgelet [25] or contour [26] based segmentation. It can save about 5% of the transmitted bitrate maintaining acceptable view quality. The SDC approach chooses an alternative coding path and compresses the residual signal with constant pixel value, instead of following conventional transformed and quantization coefficients. The VSO mode selects different coding modes and the unit partition to trade off rate-distortion (RD) optimization and synthesized view distortion. In VSO evaluation, all combinations of block sizes need to be checked, considering DMM intra mode with and without SDC. This improves the coding efficiency, but at the expense of high computational complexity. This issue cannot be solved even adopting complex HEVC quadtree coding structure in 3D-HEVC [27].

Several other research efforts are made to remove temporal or intra/inter-view redundancies in depth video images considering the specific properties of depth images [28, 29]. The basis of such schemes is motion estimation considering 2D block matching algorithms. Most of the algorithms exploit correlation between the RGB color and depth frames. Grewatsch and Miiller [28] adopts a conventional block matching approach and determine motion vectors (MVs) using the RGB video. The estimated MVs from texture information are then considered for encoding both the texture and depth sequences. Contrary, Daribo et al. [29] considered the motion of a block at the same coordinates in both texture and depth videos and adopted a joint distortion criterion to estimate common MVs for both texture and depth frames. Such approaches remove temporal or inter-view redundancies if accurate estimation of motion can be determined. Thus, these algorithms work well for coding the depth images acquired from cameras that remain in a fixed position. The case becomes inevitably much more complicated when moving cameras are involved, such as handheld RGB-D capturing. Unlike static camera acquisition, the same objects depth values change in successive depth frames as the distance between a mobile camera and the objects in a scene change across the time. Therefore, motion compensation based depth coding schemes output very inaccurate results with mobile RGB-D cameras [16, 17, 30, 14].

The above mentioned depth video codecs/methods usually can only work for a specific bitrate. This restriction of one system per bps (bits per second) limits the generality and flexibility of existing codecs/methods for practical 3D video rendering applications. This paper proposed a novel coding scheme which flexibly performs depth video compression at multiple bitrates, accomplishing varying quality levels. The idea of lossy compression is inspired from low-rank modelling by tensor approximation that represents the high-dimensional depth data more compactly. A simple yet effective CANDECOMP/PARAFAC (CP) tensor decomposition based scheme is developed, where there is a tensor modelling block to approximate a low-rank representation of depth video data into a set of factor matrices. By differing the rank of factor matrices in tensor decomposition and quantization parameters of the HEVC intra coding block, one can flexibly adjust the bitrate within a single compact system. The proposed scheme applied to coding depth maps used for view synthesis in a multi-view video coding system. It demonstrates significant bitrate savings on average, maintaining state-of-the-art performance in terms of both PSNR and SSIM indices of rendered views. Withstanding their demonstrated success, the proposed scheme benefits in three major ways:

-

•

Different from systems which generally employ a series of cascaded modules to compress the depth data, our proposed scheme does not introduce cumulative errors because there are few interactions between low-rank modelling and HEVC encoding modules.

-

•

The mathematical multi-linear tensor decomposition model for processing depth data is compact and the procedure is not handcrafted, which can efficiently represent the various complex structures in depth maps.

-

•

The performance of proposed tensor approximation based scheme is effective for compression with a low bitrate and does not get much affected with visual artifacts in rendering views from decoded depth maps (e.g., blocky artifacts, blurs, and ringings).

II Related Work

Several schemes have been introduced that give natural extension to existing video codec for depth compression [31, 32, 33, 34, 35, 36]. Belyaev et al. [31] proposed method to compress 16 bit depth infrared images via 8 bit depth codecs. They utilize JPEG and H.264/AVC codecs with 8 bits per pixel input format. They compare rate-distortion performance with JPEG 2000, JPEG-XT and H.265/HEVC codecs. Their approach with two 8 bit H.264/AVC codecs achieve similar results as 16 bit HEVC codec. In [32, 33], Hamout and Elyousfi improved the 3D-HEVC depth map intra prediction model for practical 3D applications. Their approach classified depth video regions as a homogenous or non-homogenous region. Further, they allow conventional intra coding and depth modelling modes step to be skipped. This intuitive approach applies automatic merging possibilistic clustering for region classification based on tensor feature extraction and data analysis. This leads to fast depth map intra coding. Fu et al. [37] introduced a Depth Intra Skip (DIS) mode that allows early determination for intra mode decision in depth map coding. Pece et al. [34] developed codec for depth streaming by converting single channel depth images of Microsoft Kinect to standard 8 bit three channel images and using existing codecs such as VP8 or H.264. Besides, Pajak et al. [35] extends H.264 codec for depth compression by capturing a relation between depth perception and contrast in luminance and improve decompressed visual quality of depth for rendering content. Contrary to above mentioned approaches, Liu et al. [36] research suggests that coding scheme based on hybrid lossless-lossy methods provide a better tradeoff between quality and compression ratio for the real-time compression of multiple depth streams. They suggested using x264 for lossy run length encoding to keep the highest bits of 12 bit depth images.

II-A Edge-preserving Depth-map Coding

Zhang et al. [10, 38] presented a detailed analysis on the computational requirement of depth intra-mode decision of 3D-HEVC. They presented two fast algorithms aiming at speeding up the most time-consuming processes in depth intra-mode. The first technique is based on statistical characteristics of variance distributions in 3D-HEVC depth modelling mode. The technique introduces an efficient criterion based on the squared Euclidean distance of variances to evaluate rate-distortion costs. Second, a probability-based scheme is proposed to early determine depth intra-mode decision for using segment-wise depth coding based on the low-complexity rate-distortion cost. These proposed approaches provide time saving with less effect in the coding performance compared with the existing coders.

Kim et al. [39] represents depth video as a graphical signal. The graph is generated avoiding crossing the depth edges. The scheme employs spectral representation of graph signal and transform kernels to find the eigenvectors of Laplacian matrix of the graph. The scheme requires additional information, i.e., an edge map or an optimal adjacency matrix, into a bitstream for regenerating the exact signal. Their encoder applies context-based adaptive binary arithmetic coding. The scheme is applied to coding depth maps and used for view synthesis in a multi-view video system. Overall, the scheme provides 14% bit rate savings on average.

Nguyen et al. [40] proposed techniques to compress a depth video considering coding artifacts, spatial resolution, and dynamic range of the depth data. The coding artifacts around object boundaries are suppressed by weighted mode filtering. The filtering process is also adaptive to reconstruct depth video from the reduced spatial resolution and low dynamic range. Fabian Jager [41] achieves coding quality by adaptive computation of suitable contour lines, segmenting the depth image. The segments can be approximated with a piecewise-linear function to gain high coding efficiency. Gao and Smolic [42] dynamic programming based approach jointly optimize rate-distortion for occlusion-inducing pixels and minimize depth distortion in view synthesis.

Fu et al. [30] explicitly take special characteristics of Kinect-like depth data in coding scheme. Their idea is to first reform depth image by suppressing the depth spatial noises using divisive normalized bilateral filter (DNBL), and then utilize the uniqueness of depth contents for better bit allocation. Their depth measurement error model based on spatial DNBL filtering distinguish the inherent depth edges from the normalized error. This distinction combined with depth padding rebuild the inner depth block continuity and improve efficient block-based coding. The approach saves more than 55% bitrate with a significant reduction in the coding complexity. Andrew D. Wilson [43] presented a lossless image compression method for 16-bit single channel images typical of Kinect depth cameras. The algorithm is faster than existing lossless techniques. Its performance is demonstrated for a network of eight Kinect v2 cameras.

| HEVC | HEVC | ||||||

| Ballet (Camera 3) | Ballet (Camera 5) | ||||||

| QP | Bytes | Depth Bitrate (kbps) | Y-PSNR | QP | Bytes | Depth Bitrate (kbps) | Y-PSNR |

| 2 | 4200792 | 1680.317 | 62.4805 | 2 | 3978203 | 1591.281 | 62.9252 |

| 6 | 2696080 | 1078.432 | 58.4358 | 6 | 2548177 | 1019.271 | 58.8076 |

| 10 | 1728360 | 691.344 | 55.6816 | 10 | 1618184 | 647.274 | 56.0112 |

| 14 | 1178418 | 471.367 | 53.5304 | 14 | 1087004 | 434.802 | 53.8004 |

| 20 | 703521 | 281.408 | 50.1811 | 20 | 634659 | 253.864 | 50.4475 |

| 26 | 376222 | 150.489 | 45.7444 | 26 | 338075 | 135.23 | 46.0462 |

| 38 | 92549 | 37.02 | 36.425 | 38 | 83245 | 33.298 | 36.8471 |

| Proposed | Proposed | ||||||

| Ballet (Camera 3) | Ballet (Camera 5) | ||||||

| RANK | QP | Bytes | Depth Bitrate (kbps) | Y-PSNR | Bytes | Depth Bitrate (kbps) | Y-PSNR |

| RANK 1 | 2 | 2093323 | 837.329 | 62.4673 | 2129651 | 851.86 | 62.3348 |

| 6 | 935321 | 374.128 | 59.2998 | 958034 | 383.214 | 59.1788 | |

| 10 | 475741 | 190.296 | 57.31 | 490424 | 196.17 | 57.2048 | |

| 14 | 253160 | 101.264 | 55.6207 | 263657 | 105.463 | 55.4672 | |

| 20 | 107432 | 42.973 | 53.2944 | 112378 | 44.951 | 53.1227 | |

| 26 | 46086 | 18.434 | 50.4296 | 47853 | 19.141 | 50.2065 | |

| 38 | 11648 | 4.659 | 43.227 | 12482 | 4.993 | 43.234 | |

| RANK 5 | 2 | 2890680 | 1156.272 | 61.1024 | 2410855 | 964.342 | 62.1882 |

| 6 | 1479537 | 591.815 | 58.0589 | 1239664 | 495.866 | 59.0484 | |

| 10 | 839854 | 335.942 | 56.0635 | 688043 | 275.217 | 56.9578 | |

| 14 | 496024 | 198.41 | 54.3667 | 391928 | 156.771 | 55.1635 | |

| 20 | 235276 | 94.11 | 51.9565 | 176794 | 70.718 | 52.67 | |

| 26 | 107465 | 42.986 | 48.8446 | 76305 | 30.522 | 49.6478 | |

| 38 | 26363 | 10.545 | 41.4941 | 17550 | 7.02 | 42.4727 | |

| RANK 10 | 2 | 3494243 | 1397.697 | 60.4022 | 2666111 | 1066.444 | 61.7884 |

| 6 | 1915949 | 766.38 | 57.4036 | 1465273 | 586.109 | 58.7244 | |

| 10 | 1151363 | 460.545 | 55.3769 | 866271 | 346.508 | 56.6165 | |

| 14 | 721940 | 288.776 | 53.6757 | 526263 | 210.505 | 54.8219 | |

| 20 | 371092 | 148.437 | 51.1087 | 259444 | 103.778 | 52.2219 | |

| 26 | 176086 | 70.434 | 47.626 | 119809 | 47.924 | 48.9273 | |

| 38 | 44154 | 17.662 | 40.104 | 29028 | 11.611 | 41.4843 | |

| RANK 15 | 2 | 3967986 | 1587.194 | 60.0746 | 3119810 | 1247.924 | 61.2036 |

| 6 | 2232791 | 893.116 | 57.0258 | 1755918 | 702.367 | 58.1392 | |

| 10 | 1365342 | 546.137 | 54.9812 | 1051988 | 420.795 | 56.0157 | |

| 14 | 873014 | 349.206 | 53.2572 | 652055 | 260.822 | 54.223 | |

| 20 | 454838 | 181.935 | 50.6177 | 325014 | 130.006 | 51.565 | |

| 26 | 215147 | 86.059 | 47.0147 | 147255 | 58.902 | 48.1186 | |

| 38 | 50911 | 20.364 | 39.5581 | 33911 | 13.564 | 40.8367 | |

| RANK 20 | 2 | 4503567 | 1801.427 | 59.7568 | 3481704 | 1392.682 | 60.9215 |

| 6 | 2593111 | 1037.244 | 56.6565 | 1980722 | 792.289 | 57.8009 | |

| 10 | 1598926 | 639.57 | 54.5595 | 1193309 | 477.324 | 55.6532 | |

| 14 | 1026445 | 410.578 | 52.7944 | 745125 | 298.05 | 53.8574 | |

| 20 | 535315 | 214.126 | 50.0534 | 373015 | 149.206 | 51.1535 | |

| 26 | 249138 | 99.655 | 46.3509 | 165915 | 66.366 | 47.5866 | |

| 38 | 55239 | 22.096 | 38.9104 | 36043 | 14.417 | 40.4755 | |

| HEVC | HEVC | ||||||

| Breakdancing (Camera 3) | Breakdancing (Camera 5) | ||||||

| QP | Bytes | Depth Bitrate (kbps) | Y-PSNR | QP | Bytes | Depth Bitrate (kbps) | Y-PSNR |

| 2 | 4926087 | 1970.435 | 62.1439 | 2 | 5071386 | 2028.554 | 62.2019 |

| 6 | 3187503 | 1275.001 | 57.7865 | 6 | 3323876 | 1329.55 | 57.7723 |

| 10 | 2007349 | 802.94 | 54.928 | 10 | 2112154 | 844.862 | 54.8452 |

| 14 | 1353745 | 541.498 | 52.8257 | 14 | 1427821 | 571.128 | 52.6807 |

| 20 | 828067 | 331.227 | 49.6795 | 20 | 870996 | 348.398 | 49.4617 |

| 26 | 428770 | 171.508 | 44.7678 | 26 | 446056 | 178.422 | 44.4987 |

| 38 | 81779 | 32.712 | 36.4129 | 38 | 83009 | 33.204 | 36.3369 |

II-B Segmentation Based Depth Coding

Ionut Schiopu and Ioan Tabus [44, 45] developed a method for generating sequences of lossy versions of depth image. The sequences are created either by successively merging constant regions of the input depth image, or by iteratively splitting regions from a created lossy depth image employing horizontal or vertical line segments. Their greedy rate-distortion slope optimization algorithms take merge and split decisions greedily, depending on the best slope direction in the rate-distortion curve. They applied suitable entropy coder for compressing these sequences by coding region contours and the optimal depth values of each created lossy image. The obtained results compare favorably over the full range of bitrates with the existing lossy methods. Duch et al. [46] jointly encode depth and color images in their region-based coding technique. They considered a global 3D scene representation, where segmented color and depth images are organized in a coherent hierarchy. This 3D planar decomposition of the scene allows to combine color and depth partitions to obtain the final coding partitions (i.e., segmented regions), without encoding all the depth edges. Their rate-distortion methodology demonstrates competitive results with HEVC. Liu and Kim [47] followed a quad-tree decomposition approach to partition a depth frame into smooth and edge blocks for encoding.

II-C Motion Compensation Based Depth Video Coding

Wang et al. [14] presented a 3D image warping-based depth video compression algorithm for mobile RGB-D sensors. Their 3D IW-DVC coding framework include motion compensation scheme, designed to exploit the unique characteristics of depth images. The combined egomotion estimation and 3D image warping techniques in their lossless coding scheme adapt depth data with a high dynamic range.

Zhang et al. [48] depth coding approach analysed high correlation between depth and the corresponding texture video using motion vector and prediction mode. They proposed three efficient, low-complexity approaches based on this correlation for early termination mode decision, adaptive search range motion estimation, and fast disparity estimation. The result outperforms original 3D-HEVC encoder with reduction about 66% computational complexity and negligible rate-distortion performance loss.

A number of techniques determine MVs according to the texture video and used this information for depth coding. Shahriyar et al. [49] edge-preserving depth map coding scheme used texture MVs to avoid distortion on the edges. Fan et al. [50] proposed a motion estimation method that corrects the depth values in each block using MVs information determined from the color video. Similarly, Lee and Huang [51] extends 2D block matching algorithm with a 3D one, considering horizontal, vertical, and depth dimensions to suppress the coding artifacts. Lei et al. [52] develop a nonsequential coding method for depth maps. Their statistical method suggests that the skip-coding mode and its associated motion vectors in the coded texture can be used for depth coding. It saves bitrate at the cost of a little increase of distortion.

II-D Compression Methods Based on Tensor Decomposition

Most of the compression algorithms based on tensor decomposition rely on data dependent bases such as factor matrices, instead of choosing the pre-determined ones. Principal component analysis (PCA) as well as Tucker decomposition based approaches adhere to this idea. The Tucker model strives to improve sparsity of transform domain at the cost to reserve the learned bases, which comparatively incline for a three or more dimensions. Some visual data coding algorithms based on Tucker approaches are presented in [53, 54]. Recently, progressive truncation based approaches for tensor rank reduction demonstrated usefulness in analysing features and structural details in volume data at different scales [55]. Besides, tensor compression algorithms are explored in the context of 3D displays [56], volume rendering and visualization [57, 58], and multi-dimensional signal processing applications [59, 60]. Tensor decompositions and in particular the Tucker model is primarily employed for higher-order compression and dimensionality reduction in the graphics and visualization fields. Ballester-Ripoll and Pajarola [61] investigated 3D scalar field compression employing Tucker transform coefficients. The study concluded that coefficient thresholding outperforms conventional rank truncation-based methods in terms of quality vs. compression performance. In their algorithm, they combined Tucker core hard thresholding with factor matrix quantization and achieve a better compression rate than slice-wise truncating the core. This approach inspired TAMRESH [62] and TTHRESH [61] compression algorithms for multidimensional volume data. TAMRESH large-scale renderer handles input volume by partitioning in small multi-resolution cubic bricks and compressing each brick as a separate higher-order singular value decomposition (HOSVD) core. TTHRESH [61] performs lossy compression of multidimensional medical data over regular grids. They leverage HOSVD together with the bit-plane, run-length and arithmetic coding in encoding the HOSVD transform coefficients generated in core tensor. The arbitrary target accuracy is supported in their approach via bit-plane coding by greedily compressing bit planes of progressively less importance. The data reduction in their HOSVD-driven approach is achieved by keeping all ranks, however, following attentive lossless compression of all bit planes up to a certain threshold. They also apply this bit-plane based strategy on the factor matrices to encode the data.

In the last several years, convolutional neural networks (CNNs) based lossy image compression (LIC) algorithms have been proposed. The end-to-end training in such LIC systems adaptively learns an encoder-decoder pair or adopt specific loss functions to retain image structures and perceptual quality of the decomposed image. Despite such advantages, CNN-based depth video coding algorithms impose challenges. First, CNN-based compressors are only adjusting the bitrate by changing the number of latent feature maps and/or quantized values. The network could be trained specifically for a particular bitrate once at a time. This limits the applicability of CNN-based compressors for practical 3D video rendering systems. Second, updating quantizer in network architecture is hard because of the nondifferentiable property of discrete operation, during the end-to-end training. Cia et al. [62] CNN-based LIC approach removes these limitations by proposing an effective Tucker Decomposition Network (TDNet), which can adjust multiple bits-per-pixel rates of latent image representation within a single CNN. Their Tucker decomposition layer decomposes a latent image representation into a set of matrices and one small core tensor for lossy coding. However, Cha et al. [62] TDNet Tucker decomposition layer can not withstand computational complexity of the large amount of 3D video data.

In this paper, we leverage an efficient CANDECOMP/PARAFAC (CP) tensor decomposition algorithm based on alternating least squares (ALS) for depth video coding. Usually, for regular CP-ALS, the cost of decomposition is dominated by the tensor contractions required to solve the quadratic optimization subproblems. Instead, we investigated an efficient adaptation of pairwise perturbation with sparse depth tensors, suggested by Ma and Solomonik [63]. The approximation of tensor with factor matrices could be cost effective if perturbative corrections are employed rather than recomputing the tensor contractions to solve the optimization subproblems. In our proposed formulation, we benefit pairwise perturbation to speed up the decomposition procedure as suggested by [63]. We observed that approximation to model depth tensor problems with the pairwise perturbation algorithm is accurate as ALS with faster converge to minima with fewer operations.

III Proposed Scheme for Depth Video Coding

An overview of proposed lossy depth video compression scheme is illustrated in Fig. 1. The proposed system contains mainly three modules: 1) an encoder module, which consists of two subblocks: first, a tensor decomposition block, which approximates varying low-rank representation of depth video data, and the second HEVC intra encoding subblock, which encodes approximated depth data with varying quantization parameters, 2) a corresponding decoder module, which performs reverse decoding operations, i.e., dequantization and tensor reconstruction, and 3) 3D rendering module, where decoded depth maps are used for novel view synthesis.

In the first module, the system performs factorization of scene geometry into a set of factor matrices following CP decomposition via alternating least squares algorithm. The representation of the depth data into a high-dimensional sparse tensor domain provides an economic solution to 3D video storage and transmission. Further, performing lossy quantization in the second module with HEVC intra modes on the tensor components of low-rank approximated scene geometry effectively encodes depth data at multiple bitrates. Thus, the scheme overcomes decisive bottlenecks of memory and network bandwidth when handling high-resolution depth frames. The proposed scheme considers a range of rank values for factorizing tensor. Further, encoding with HEVC adopts a variable quantizer to allocate different quantization parameters to the factor matrices. As an indispensable step in multi-view 3D video system, this is a critical step in preserving the major texture and edges in spatially variant depth frames and mitigate rendering artifacts or sampling issues in synthesized views from decoded data. The flexibility proposed scheme offers for changing the rank of factor matrices in tensor decomposition and its quantization levels, facilitates to easily adjust the bitrate and quality levels of reconstructed depth maps within a compact system. Thus, a single system could enable display adaptation with different 3D devices at reduce storage space, while maintaining backward compatibility and extended functionality such as N-view synthesis without sacrificing much the rendered view quality for baseline adaptation under multiple bitrates.

Different components of our proposed depth video compression pipeline are described in the following sections.

III-A Tensor Low Rank Approximation

In module I shown in Fig. 1, we performed low-rank approximation of depth video sequences. A CP tensor decomposition model is adopted with an alternating least square procedure [63]. The CP tensor decomposition which is a higher-order generalization of the matrix SVD that approximates tensor stack of depth video frames by a sum of tensor products of vectors. Here, denotes the decomposition rank. Let us denote tensor created by stacking depth video frames as an order tensor . The CP decomposition is represented by

| (1) |

where, . Writing (1) by a sum of tensor products of vectors serves to approximate as

| (2) |

The conventional CP-ALS alternates among quadratic optimization problems for each of the factor matrices . This requires to solve linear least squares problems for each row,

| (3) |

The matrix is formed by Khatri-Rao products of the other factor matrices

| (4) |

where, . The linear least squares problems (3) are usually solved following the pairwise perturbation method with normal equations

| (5) |

and computing as

| (6) |

with each . The equations (5) could be solved by formulating the problem as an unconstrained minimization of the nonlinear objective function,

| (7) |

with gradient component computed as

| (8) |

This CP decomposition works if ascertain convergence of Frobenius norm of the components of the overall gradient. To fasten the computation, we adopted Ma et al. [64] pairwise perturbation approach which overcomes the main computational bottleneck of CP-ALS caused by Matricized Tensor Times Khatri-Rao Product estimation. The ALS procedure for CP decomposition is accelerated to approximate . Let can be expressed as

| (9) |

where, denotes the computed with a standard ALS step at some (i.e., ) number of steps preceding to the present one. Thus, at the current step can be expressed as

| (10) |

The pairwise perturbation algorithm of Ma et al. [63] effectively approximates as

| (11) |

where,

| (12) |

and

| (13) |

The is defined as contracted with for . Given and , the is computed for all efficiently in small number of operations. We adopted dimension trees based approach [64, 65] for the computation of pairwise perturbation operators and .

III-B HEVC Coding for Low Rank Depth Video

We encode the low-rank approximated tensor components of depth video (i.e. factor matrices) via HEVC intra coding tools in module II, as shown in Fig. 2. High Efficiency Video Coding (HEVC) compression standard is designed under the collaborative standardization project of ITU-T VCEG and ISO/IEC MPEG [66]. It is developed as part of the MPEG-H project, which is a successor of widely used Advanced Video Coding (H.264, or MPEG-4 Part 10) standard. The detail description of the changes relative to H.264/MPEG-4 AVC is given in [67].

In HEVC, coding tree blocks (CTBs) based partition approach is adopted in encoding a view. The encoder selects the size of CTBs according to architectural characteristics and application requirements such as delay and memory constraints. A coding tree unit (CTU) processes the luma CTB and the two chroma CTBs. Typically, samples of the luma component and the corresponding samples of two chroma components are signaled inside the bitstream. The CTU is defined as the basic processing unit, which identify the decoding process in the standard. The luma and chroma blocks in CTBs can be partitioned further into multiple coding blocks (CBs). The quadtree syntax of CTU allows splitting into variable size blocks taken into account the attributes of the region covered by the CTB. The CB size is specified by the decoder syntax. It could be of minimum size for luma samples or larger. For a coding unit (CU), where luma and the chroma CBs are processed, a prediction mode is signaled. The prediction mode can be either an intra or inter mode. In intra prediction, thirty five prediction modes are specified for the luma CB. A single intra prediction mode is signaled for both chroma CBs. The same prediction mode is specified for luma or a horizontal, vertical, planar, left-downward diagonal or DCT (discrete cosine transform) prediction mode [68]. The intra mode is applied independently for each transform block. In the inter-mode coding units, the luma and chroma coding blocks are associated with one, two, or four luma and chroma prediction blocks. The inter-mode determine one or two motion vectors for each prediction unit following unipredictive or bipredictive coding. Asymmetric motion partitioning and splitting is applied appropriately to chroma coding blocks.

HEVC supports several common features related to H.264/MPEG-4 AVC. It facilities quarter sample precision motion vectors, weighted prediction and multiple reference pictures. The concepts of I, P, and B slices are similar to H.264/MPEG-4 AVC. We used latest HEVC reference software HM-16.6 in the proposed formulation. The advanced HEVC version supports so called merge mode, which substantially improved the coding of motion parameters compared to earlier standards. In merge mode, no motion parameters are coded. Alternatively, a candidate list of motion parameters are derived which includes motion parameters of spatially neighboring blocks and temporally predicted motion parameters for the corresponding prediction unit. This information is derived considering motion data of a co-located block in a reference image. This advanced mode for motion compensation addresses the challenges of large block sizes and consistently displaced image regions, e.g., due to the object’s motion.

HEVC supports residual quadtree (RQT) split of luma and chroma CBs for coding the inter or intra prediction residual signal. In RQT, either CB is represented as sole luma transform block or four equal-sized luma transform blocks. In split mode of RQT, each resulting luma transform block can be further broken into four smaller luma transform blocks. Similarly, split applies to the chroma CB. The associated syntax of RQT with the luma and chroma transform blocks form a transform unit (TU) where a 2D transform (i.e. DCT approximation) is applied to luma and chroma samples.

Besides, HEVC like H.264/MPEG-4 AVC supports entropy coded using CABAC, improved sophisticated context selection scheme for transform coefficient coding, and sample-adaptive coding for efficient motion compensation that reduces the distortion in encoding and decoding samples. The HEVC Main profile (MP) supports coding of 8-bit-per-sample video. Two additional profiles are specified in the later HEVC Drafts 9-10 specification: the one Main 10 profile (for 10-bit-per-sample video) and the second Main Still Picture profile for coding still images employing only intra-coding tools.

III-C View Synthesis

In module III shown in Fig. 1, we performed depth-image-based free-viewpoint rendering using the decoded depth map of the left and right cameras. DIBR method proposed by Sharma et al. [9] is used for virtual view synthesis. The imperfections of depth images cause unrealistic artifacts and affect rendered view quality in DIBR systems. Thus, it is more appropriate to consider the effect of decoded depth maps on synthesized view quality, instead of observing the distortion in compressed depth data. The PSNR and SSIM metrics are used to estimate the rendered view distortion caused by the errors in decoded depth images compressed using the proposed coding scheme and HEVC codec. For a fair evaluation of depth coding, we have not used the captured original reference video at the virtual camera position to measure the quality. This is to prevent distortion incorporated in quality measurement caused by camera parameters and lighting variation among multi-view video data. PSNR and SSIM scores are computed by comparing the synthesized video without compression of depth videos as the reference and the synthesized video using the decoded depth videos [69].

IV Experimental Results

A series of experiments are conducted to assess the performance of the proposed depth compression scheme. We tested our model on “Ballet” and “Breakdancing” sequences provided by Microsoft Research. The multi-camera data is acquired by eight cameras located along a 1D arc covering about from one end to the other. The color and depth videos are provided with resolutions of [70].

We encode depth video of each camera sequence using the proposed coding model and High Efficiency Video Coding (HEVC) reference software HM-16.0 [71]. The color and depth video of left and right cameras are encoded considering various quantization parameters (QPs). The QP values of , , , , , , are used to encode the depth videos.

The experimental model to test the performance of proposed scheme analysed the quality of synthesized intermediate view. We selected reference cameras and among eight cameras of “Ballet” and “Breakdancing” multi-camera data. The intermediate views are synthesized at virtual camera using free-viewpoint rendering algorithm developed by Sharma et al. [9]. The objective quality assessment is performed by measuring Peak Signal-to-Noise ratio (PSNR) and structural similarity index measure (SSIM). The PSNR is measured by comparing the synthesized video rendered using original depth maps as the reference and the synthesized video using the decoded depth maps. It is critical to note that decoded video is used for virtual view synthesis to measure the distortion caused by depth map compression. The rate distortion (RD) curves are plotted considering the total bitrate required to encode depth video of both reference cameras and PSNR/SSIM scores of the synthesized virtual video.

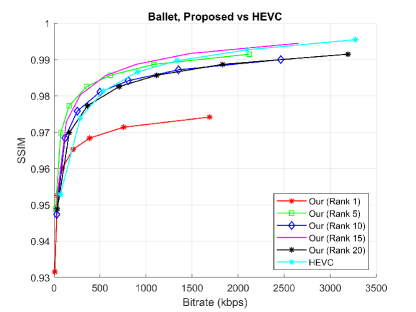

Fig. 2 shows the “Bitrate vs PSNR” and “Bitrate vs SSIM” comparison graphs between the proposed method and HEVC codec. The x-axis plots total bitrate required to encode left (camera 3) and right camera (camera 5) depth sequence. The y-axis plots PSNR/SSIM measures of the synthesized intermediate camera view (camera 4). We achieve significant bitrate reduction using proposed coding scheme compared to directly encode the depth video of left and right camera using HEVC codec, while maintaining appropriate quality of synthesized virtual camera views in terms of PSNR and SSIM measures. The encoder results obtained by applying proposed scheme and HEVC in compressing “Ballet” and “Breakdancing” depth sequences are tabulated in Table I-IV. Total number of bytes required to encode left (camera 3) and right (camera 5) depth sequences are summarized in Table VI-VIII. The Bjontegaard metric calculation results demonstrate the coding efficiency of proposed scheme compared to HEVC codec [72]. The Bjontegaard delta bitrate (BDBR) reduction results considering different ranks and quantization values are given in Table V. The BDBR measures are computed by considering the total bitrate needed to encode left and right camera depth video and PSNR scores of the synthesized intermediate camera video. Note that minus sign indicates bitrate reduction. Compared to HEVC, our scheme achieves 62.8143%, 35.7449%, 43.1331%, 21.5080%, 30.7221% rate gains on average with “Ballet” data, considering tensor ranks , , , , respectively. On “Breakdancing” data, we obtain 70.7924%, 73.3179%, 49.1670%, 26.6356%, 16.2384% rate gains, considering tensor ranks , , , , respectively. This is a substantial improvement over HEVC for directly encoding depth videos with the same quantization parameters and configuration. It can also be observed in Fig 2 that for higher ranks, particularly large PSNR gains are obtained using the proposed model even for low bitrates, comparable to HEVC. The SSIM scores demonstrate that the perceived quality of synthesized views is also appropriate for different bitrates, obtained considering varying tensor decomposition ranks and quantization parameters in our scheme. This explains significant redundancies can be removed, which lowers the overhead in transmission for bitrate coding without affecting much the perceived change in structural information of rendered views quality. Another critical advantage of our scheme is to allow scalable depth video coding. Since the proposed scheme can render virtual views of varying quality at the decoder considering different ranks and QP values. This flexibility could be preferable in 3D video transmission and broadcasting scenarios as it provides additional levels of scalability.

| Proposed | Proposed | ||||||

| Breakdancing (Camera 3) | Breakdancing (Camera 5) | ||||||

| RANK | QP | Bytes | Depth Bitrate (kbps) | Y-PSNR | Bytes | Depth Bitrate (kbps) | Y-PSNR |

| RANK 1 | 2 | 2367817 | 947.127 | 61.8849 | 2351345 | 940.538 | 61.7692 |

| 6 | 1130354 | 452.142 | 58.8111 | 1133670 | 453.468 | 58.7342 | |

| 10 | 607790 | 243.116 | 56.8681 | 613464 | 245.386 | 56.8112 | |

| 14 | 341031 | 136.412 | 55.2676 | 347297 | 138.919 | 55.2019 | |

| 20 | 156412 | 62.565 | 53.0796 | 160576 | 64.23 | 53.0383 | |

| 26 | 70544 | 28.218 | 50.1954 | 73637 | 29.455 | 50.244 | |

| 38 | 19246 | 7.698 | 42.2817 | 20801 | 8.32 | 42.5151 | |

| RANK 5 | 2 | 2868655 | 1147.462 | 60.6944 | 2921655 | 1168.662 | 60.6136 |

| 6 | 1489113 | 595.645 | 57.8249 | 1512204 | 604.882 | 57.7453 | |

| 10 | 874402 | 349.761 | 55.8803 | 890661 | 356.264 | 55.8043 | |

| 14 | 534396 | 213.758 | 54.2632 | 542109 | 216.844 | 54.1774 | |

| 20 | 266753 | 106.701 | 51.9505 | 268900 | 107.56 | 51.8714 | |

| 26 | 129442 | 51.777 | 48.7986 | 128559 | 51.424 | 48.7618 | |

| 38 | 35465 | 14.186 | 41.1126 | 34696 | 13.878 | 41.2135 | |

| RANK 10 | 2 | 3424073 | 1369.629 | 59.9562 | 3499848 | 1399.939 | 59.8421 |

| 6 | 1859422 | 743.769 | 57.1562 | 1912219 | 764.888 | 57.0479 | |

| 10 | 1150623 | 460.249 | 55.2579 | 1194034 | 477.614 | 55.1332 | |

| 14 | 731822 | 292.729 | 53.6425 | 760978 | 304.391 | 53.4965 | |

| 20 | 379396 | 151.758 | 51.1748 | 393172 | 157.269 | 51.0177 | |

| 26 | 186746 | 74.698 | 47.7901 | 190911 | 76.364 | 47.6202 | |

| 38 | 50172 | 20.069 | 40.1731 | 50211 | 20.084 | 40.1083 | |

| RANK 15 | 2 | 3790115 | 1516.046 | 59.6792 | 3939703 | 1575.881 | 59.532 |

| 6 | 2104438 | 841.775 | 56.8484 | 2197956 | 879.182 | 56.6962 | |

| 10 | 1309412 | 523.765 | 54.9319 | 1382325 | 552.93 | 54.7757 | |

| 14 | 841605 | 336.642 | 53.3062 | 891563 | 356.625 | 53.137 | |

| 20 | 438737 | 175.495 | 50.7645 | 463942 | 185.577 | 50.5765 | |

| 26 | 212934 | 85.174 | 47.2708 | 223156 | 89.262 | 47.0653 | |

| 38 | 54525 | 21.81 | 39.648 | 56255 | 22.502 | 39.5446 | |

| RANK 20 | 2 | 4143552 | 1657.421 | 59.4377 | 4306444 | 1722.578 | 59.2869 |

| 6 | 2331086 | 932.434 | 56.5539 | 2445676 | 978.27 | 56.4193 | |

| 10 | 1462222 | 584.889 | 54.6463 | 1544937 | 617.975 | 54.4916 | |

| 14 | 943868 | 377.547 | 53.0033 | 1001271 | 400.508 | 52.8347 | |

| 20 | 493315 | 197.326 | 50.4118 | 522700 | 209.08 | 50.2065 | |

| 26 | 236580 | 94.632 | 46.8621 | 248178 | 99.271 | 46.6374 | |

| 38 | 58277 | 23.311 | 39.3249 | 60115 | 24.046 | 39.1823 | |

| Ballet | Breakdancing | ||

|---|---|---|---|

| RANK | QP | BDBR (%) | BDBR (%) |

| RANK 1 | 2 | -91.7644 | -91.4810 |

| 6 | -87.563 | -88.1485 | |

| 10 | -82.5545 | -84.3392 | |

| 14 | -74.6781 | -78.3987 | |

| 20 | -56.571 | -64.9441 | |

| 26 | -28.9834 | -49.3263 | |

| 38 | -17.586 | -38.9087 | |

| Average | -62.8143 | -70.7924 | |

| RANK 5 | 2 | -61.2031 | -87.2847 |

| 6 | -50.9729 | -83.9632 | |

| 10 | -42.1304 | -81.3858 | |

| 14 | -29.3045 | -77.3789 | |

| 20 | -2.9364 | -68.0988 | |

| 26 | -22.4574 | -59.3702 | |

| 38 | -41.2093 | -55.7434 | |

| Average | -35.7449 | -73.3179 | |

| RANK 10 | 2 | -66.6627 | -66.2222 |

| 6 | -60.9403 | -59.6894 | |

| 10 | -56.9193 | -57.4577 | |

| 14 | -50.9719 | -52.7528 | |

| 20 | -38.9249 | -40.1808 | |

| 26 | -23.6252 | -30.8459 | |

| 38 | -3.8872 | -37.0202 | |

| Average | -43.1331 | -49.1670 | |

| RANK 15 | 2 | -37.6335 | -46.5526 |

| 6 | -28.1844 | -37.5994 | |

| 10 | -22.922 | -35.5160 | |

| 14 | -15.2211 | -30.5218 | |

| 20 | -1.1148 | -15.0541 | |

| 26 | -17.0494 | -4.1785 | |

| 38 | -28.4309 | -17.0270 | |

| Average | -21.5080 | -26.6356 | |

| RANK 20 | 2 | -49.8622 | -33.7813 |

| 6 | -43.92 | -23.8671 | |

| 10 | -40.5368 | -22.4193 | |

| 14 | -35.388 | -17.5907 | |

| 20 | -23.2538 | -1.0055 | |

| 26 | -5.8719 | -9.8350 | |

| 38 | -16.2222 | -5.1702 | |

| Average | -30.7221 | -16.2384 |

| SCENE | QP | HEVC Codec | ||

|---|---|---|---|---|

| Camera 3 | Camera 5 | Total Bytes | ||

| Ballet | 2 | 4200792 | 3978203 | 8178995 |

| 6 | 2696080 | 2548177 | 5244257 | |

| 10 | 1728360 | 1618184 | 3346544 | |

| 14 | 1178418 | 1087004 | 2265422 | |

| 20 | 703521 | 634659 | 1338180 | |

| 26 | 376222 | 338075 | 714297 | |

| 38 | 92549 | 83245 | 175794 | |

| Breakdancing | 2 | 4926087 | 5071386 | 9997473 |

| 6 | 3187503 | 3323876 | 6511379 | |

| 10 | 2007349 | 2112154 | 4119503 | |

| 14 | 1353745 | 1427821 | 2781566 | |

| 20 | 828067 | 870996 | 1699063 | |

| 26 | 428770 | 446056 | 874826 | |

| 38 | 81779 | 83009 | 164788 | |

| RANK | QP | Camera 3 | Camera 5 | Total Bytes |

| RANK 1 | 2 | 2093323 | 2129651 | 4222974 |

| 6 | 935321 | 958034 | 1893355 | |

| 10 | 475741 | 490424 | 966165 | |

| 14 | 253160 | 263657 | 516817 | |

| 20 | 107432 | 112378 | 219810 | |

| 26 | 46086 | 47853 | 93939 | |

| 38 | 11648 | 12482 | 24130 | |

| RANK 5 | 2 | 2890680 | 2410855 | 5301535 |

| 6 | 1479537 | 1239664 | 2719201 | |

| 10 | 839854 | 688043 | 1527897 | |

| 14 | 496024 | 391928 | 887952 | |

| 20 | 235276 | 176794 | 412070 | |

| 26 | 107465 | 76305 | 183770 | |

| 38 | 26363 | 17550 | 43913 | |

| RANK 10 | 2 | 3494243 | 2666111 | 6160354 |

| 6 | 1915949 | 1465273 | 3381222 | |

| 10 | 1151363 | 866271 | 2017634 | |

| 14 | 721940 | 526263 | 1248203 | |

| 20 | 371092 | 259444 | 630536 | |

| 26 | 176086 | 119809 | 295895 | |

| 38 | 44154 | 29028 | 73182 | |

| RANK 15 | 2 | 3967986 | 3119810 | 7087796 |

| 6 | 2232791 | 1755918 | 3988709 | |

| 10 | 1365342 | 1051988 | 2417330 | |

| 14 | 873014 | 652055 | 1525069 | |

| 20 | 454838 | 325014 | 779852 | |

| 26 | 215147 | 147255 | 362402 | |

| 38 | 50911 | 33911 | 84822 | |

| RANK 20 | 2 | 4503567 | 3481704 | 7985271 |

| 6 | 2593111 | 1980722 | 4573833 | |

| 10 | 1598926 | 1193309 | 2792235 | |

| 14 | 1026445 | 745125 | 1771570 | |

| 20 | 535315 | 373015 | 908330 | |

| 26 | 249138 | 165915 | 415053 | |

| 38 | 55239 | 36043 | 91282 | |

| RANK | QP | Camera 3 | Camera 5 | Total Bytes |

|---|---|---|---|---|

| RANK 1 | 2 | 2367817 | 2351345 | 4719162 |

| 6 | 1130354 | 1133670 | 2264024 | |

| 10 | 607790 | 613464 | 1221254 | |

| 14 | 341031 | 347297 | 688328 | |

| 20 | 156412 | 160576 | 316988 | |

| 26 | 70544 | 73637 | 144181 | |

| 38 | 19246 | 20801 | 40047 | |

| RANK 5 | 2 | 2868655 | 2921655 | 5790310 |

| 6 | 1489113 | 1512204 | 3001317 | |

| 10 | 874402 | 890661 | 1765063 | |

| 14 | 534396 | 542109 | 1076505 | |

| 20 | 266753 | 268900 | 535653 | |

| 26 | 129442 | 128559 | 258001 | |

| 38 | 35465 | 34696 | 70161 | |

| RANK 10 | 2 | 3424073 | 3499848 | 6923921 |

| 6 | 1859422 | 1912219 | 3771641 | |

| 10 | 1150623 | 1194034 | 2344657 | |

| 14 | 731822 | 760978 | 1492800 | |

| 20 | 379396 | 393172 | 772568 | |

| 26 | 186746 | 190911 | 377657 | |

| 38 | 50172 | 50211 | 100383 | |

| RANK 15 | 2 | 3790115 | 3939703 | 7729818 |

| 6 | 2104438 | 2197956 | 4302394 | |

| 10 | 1309412 | 1382325 | 2691737 | |

| 14 | 841605 | 891563 | 1733168 | |

| 20 | 438737 | 463942 | 902679 | |

| 26 | 212934 | 223156 | 436090 | |

| 38 | 54525 | 56255 | 110780 | |

| RANK 20 | 2 | 4143552 | 4306444 | 8449996 |

| 6 | 2331086 | 2445676 | 4776762 | |

| 10 | 1462222 | 1544937 | 3007159 | |

| 14 | 943868 | 1001271 | 1945139 | |

| 20 | 493315 | 522700 | 1016015 | |

| 26 | 236580 | 248178 | 484758 | |

| 38 | 58277 | 60115 | 118392 | |

V Conclusion

In this paper, we presented a simple yet effective depth video codec as an extension of the HEVC standard for stereoscopic and autostereoscopic multi-view displays. The proposed mathematical scheme is developed on tensor modelling of higher-order information in visual depth data. Our system decomposes a tensor representation of scene geometry into a set of factor matrices following CP decomposition via alternating least squares for lossy depth compression. By varying the rank of factor matrices and its quantization levels, we could smoothly adapt the bitrate in coding the depth content. Thus, appropriately achieved the goal of using a single system to cover a range of bit rates and quality levels. The scheme offers scalable and flexible encoder for coding of depth content, its delivery and display on a variety of 3D device in terms of supported spatial and temporal resolution and decoder complexity.

Our depth coding scheme is applicable to be used together with other hybrid coding architectures such as, e.g., MPEG-4 Visual, H.264/MPEG-4 AVC, MV-HEVC, 3D-HEVC, H.263, and H.262/MPEG-2, etc. Low-rank decomposition by pairwise perturbation retain the multi-dimensional dependencies in activation tensors of depth data and results in significant bitrate savings. This encoder control of the depth data and view synthesis quality, guarantees display adaptation via intermediate view generation at the decoder. Using a subset of the coded depth components, 2D video, stereo video or full MVD can be reconstructed from the 3D decoded depth bitstreams and reference texture data. The objective results shown in Table V show significant bitrate reduction (BDBR) in comparison to HEVC, while retaining the almost similar synthesis quality. The PSNR and SSIM measurements demonstrate satisfactory performance and not much obtrusion due to geometrical distortions in the synthesis coming from compression along depth discontinuities. In the future, we analyse view quality considering different factors such as camera baseline variation, scene characteristics, artifacts caused by inherent visibility, disocclusion, and resampling problems in DIBR [9, 73, 74, 75]. This enables a more precise estimate of synthesize view quality which helps to gain an optimal RD performance, choosing different parameters and mode selection in low-rank tensor based coding algorithms for 3D display applications.

Other avenues for future research include exploration of different tensor models in revealing latent correlations residing in high dimensional spaces of 3D video data and remove redundancies using optimized linear/multilinear algebra. A generic higher-order tensor representation in the context of an efficient HEVC extension for MVD coding is critical. While matrices based coding methods are limited to a single mode of variation, however, to naturally accommodate different modes of variation, tensors could be used in MVD video compression. We would develop an adaptive control mechanism that would allow for efficient representation of the MVD content description along each mode by a factor matrix and analyze different grounds of the decomposition with modes interaction by a core coefficient tensor for model reduction applications. We believe preliminary coding results with CP decomposition reported in this paper could motivate further investigation of tensor-based methods as a crucial mathematical object for representation and standardization of improved coding tools for candidate extensions of HEVC and its variant for 3D display applications. This could particularly benefit for further space savings for low bit rates, higher-resolution 3D content and low-delay application encodings. We would also explore tensor regression approaches for achieving higher coding gains.

References

- [1] Y. Chan, C. Fu, H. Chen, and S. Tsang, “Overview of current development in depth map coding of 3d video and its future,” in IET Signal Processing, vol. 14, no. 1, pp. 1–14, 2020.

- [2] C. Zhu, Y. Zhao, L. Yu, and M. Tanimoto, 3DTV System with Depth-Image-Based Rendering: Architectures, Techniques and Challenges. New York: Springer, 2013.

- [3] L. Fink, N. Hensel, D. Markov-Vetter, C. Weber, O. Staadt, and M. Stamminger, “Hybrid mono-stereo rendering in virtual reality,” in on Virtual Reality and 3D User Interfaces (VR), (I. Conference, ed.), (Japan), pp. 88–96, Osaka, 2019.

- [4] Y. Gil, S. Elmalem, H. Haim, E. Marom, and R. Giryes, “Monster: Awakening the mono in stereo.” 2019.

- [5] J. Combier, B. Vandeportaele, and P. Danès, “Towards an augmented reality head mounted display system providing stereoscopic wide field of view for indoor and outdoor environments with interaction through the gaze direction,” in International Conference on Computer Vision Theory and Applications, 2018.

- [6] A. S. al., “Three-dimensional video postproduction and processing,” in Proceedings of the IEEE, vol. 99, no. 4, pp. 607–625, 2011.

- [7] B. B. al., “Display-independent 3d-tv production and delivery using the layered depth video format,” in IEEE Transactions on Broadcasting, vol. 57, no. 2, pp. 477–490, 2011.

- [8] X. Gao, K. Li, W. Chen, Z. Yang, W. Wei, and Y. Cai, “Free viewpoint video synthesis based on dibr,” in on Multimedia Information Processing and Retrieval, Shenzhen, (I. Conference, ed.), (China), pp. 275–278, Guangdong, 2020.

- [9] M. Sharma, S. Chaudhury, B. Lall, and M. S. Venkatesh, “A flexible architecture for multi-view 3dtv based on uncalibrated cameras,” Journal of Visual Communication and Image Representation, vol. 25, no. 4, pp. 599–621, 2014.

- [10] H. Zhang, C. Fu, Y. Chan, S. Tsang, and W. Siu, “Probability-based depth intra-mode skipping strategy and novel vso metric for dmm decision in 3d-hevc,” in IEEE Transactions on Circuits and Systems for Video Technology, vol. 28, no. 2, pp. 513–527, 2018.

- [11] O. Stankiewicz, K. Wegner, and M. Domański, “Study of 3d video compression using nonlinear depth representation,” in IEEE Access, vol. 7, pp. 31110–31122, 2019.

- [12] Y. Liu and J. Kim, “Variable block-size compressed sensing for depth map coding,” Multimedia Tools and Applications, vol. 79, pp. 8825–8839, 2020.

- [13] B. Kamolrat, W. A. C. Fernando, and M. Mrak, “Adaptive motion-estimation-mode selection for depth video coding,” in Speech and Signal Processing, (I. I. C. on Acoustics, ed.), (TX), pp. 702–705, Dallas, 2010.

- [14] X. Wang, Y. A. Şekercioğlu, T. Drummond, E. Natalizio, I. Fantoni, and V. Frémont, “Fast depth video compression for mobile rgb-d sensors,” in IEEE Transactions on Circuits and Systems for Video Technology, vol. 26, no. 4, pp. 673–686, 2016.

- [15] M. Paul, S. Chakraborty, M. Murshed, and P. K. Podder, “Joint texture and depth coding using cuboid data compression,” in International Conference on Computer and Information Technology, pp. 138–143, 2015.

- [16] I. Daribo, D. Florencio, and G. Cheung, “Arbitrarily shaped motion prediction for depth video compression using arithmetic edge coding,” in IEEE Transactions on Image Processing, vol. 23, no. 11, pp. 4696–4708, 2014.

- [17] S. kak Kwon and D. seok Lee, “Zoom motion estimation for color and depth videos using depth information,” J. Image Video Proc., vol. 11, 2020.

- [18] J. Ohm, G. J. Sullivan, H. Schwarz, T. K. Tan, and T. Wiegand, “Comparison of the coding efficiency of video coding standards—including high efficiency video coding,” in IEEE Transactions on Circuits and Systems for Video Technology, vol. 22, no. 12, pp. 1669–1684, 2012.

- [19] J.-L. Lin, Y.-W. Chen, Y.-L. Chang, J. An, K. Zhang, Y.-W. Huang, and S. Lei, “Advanced texture and depth coding in 3d-hevc,” Journal of Visual Communication and Image Representation,”, vol. 50, pp. 83–92, 2018.

- [20] G. Tech, Y. Chen, K. M”uller, J. Ohm, A. Vetro, and Y. Wang, “Overview of the multiview and 3d extensions of high efficiency video coding,” in IEEE Transactions on Circuits and Systems for Video Technology, vol. 26, no. 1, pp. 35–49, 2016.

- [21] K. M”uller, P. Merkle, G. Tech, and T. Wiegand, “3d video coding with depth modeling modes and view synthesis optimization,” in Proceedings of the 2012 Asia Pacific Signal and Information Processing Association Annual Summit and Conference (C. A. Hollywood, ed.), pp. 1–4, 2012.

- [22] F. J”ager, “Simplified depth map intra coding with an optional depth lookup table,” in International Conference on 3D Imaging, pp. 1–4, 2012.

- [23] H. Dou, Y. Chan, K. Jia, and W. Siu, “View synthesis optimization based on texture smoothness for 3d-hevc,” in Speech and Signal Processing, (I. I. C. on Acoustics, ed.), (QLD), pp. 1443–1447, Brisbane, 2015.

- [24] H. Dou, Y. Chan, K. Jia, W. Siu, P. Liu, and Q. Wu, “An adaptive segment-based view synthesis optimization method for 3d-hevc,” Asia-Pacific Signal and Information Processing Association Annual Summit and Conference, Hong Kong, pp, pp. 297–302, 2015.

- [25] P. Merkle, K. M”uller, and T. Wiegand, “Coding of depth signals for 3d video using wedgelet block segmentation with residual adaptation,” in IEEE International Conference on Multimedia and Expo, pp. 1–6, 2013.

- [26] I. Daribo, G. Cheung, and D. Florencio, “Arithmetic edge coding for arbitrarily shaped sub-block motion prediction in depth video compression,” in Conference on Image Processing, (I. International, ed.), (FL), pp. 1541–1544, Orlando, 2012.

- [27] H. Hamout and A. Elyousfi, “Fast depth map intra-mode selection for 3d-hevc intra-coding,” Signal, Image and Video Processing, vol. 14, pp. 1301–1308, 2020.

- [28] S. Grewatsch and E. Miiller, “Sharing of motion vectors in 3d video coding,” in International Conference on Image Processing, pp. 3271–3274, vol. 5, 2004.

- [29] I. Daribo, C. Tillier, and B. Pesquet-Popescu, “Motion vector sharing and bitrate allocation for 3d video-plus-depth coding,” EURASIP J. Adv Signal Process 20, vol. 2589, 2009.

- [30] J. Fu, D. Miao, W. Yu, S. Wang, Y. Lu, and S. Li, “Kinect-like depth data compression,” in IEEE Transactions on Multimedia, vol. 15, no. 6, pp. 1340–1352, 2013.

- [31] E. Belyaev, C. Mantel, and S. Forchhammer, “High bit depth infrared image compression via low bit depth codecs,” in Proc. SPIE 10403 (I. R. Sensing and I. Xxv, eds.), 104030A, 2017.

- [32] H. Hamout and A. Elyousfi, “Fast depth map intra coding for 3d video compression based tensor feature extraction and data analysis,” in IEEE Transactions on Circuits and Systems for Video Technology, vol. 30, pp. 1933–1945, July 2020.

- [33] H. Hamout and A. Elyousfi, “Fast 3d-hevc pu size decision algorithm for depth map intra-video coding,” J Real-Time Image Proc, vol. 17, pp. 1285–1299, 2020.

- [34] F. Pece, J. Kautz, and T. Weyrich, “Adapting standard video codecs for depth streaming,” in Proceedings of the 17th Eurographics conference on Virtual Environments & Third Joint Virtual Reality, pp. 59–66, 2011.

- [35] D. Pajak, R. Herzog, R. Mantiuk, P. Didyk, E. Eisemann, K. Myszkowski, and K. Pulli, “Perceptual depth compression for stereo applications,” Comput. Graph, vol. 33, pp. 195–204, 2014.

- [36] Y. Liu, S. Beck, R. Wang, J. Li, H. Xu, S. Yao, X. Tong, and B. Froehlich, “Hybrid lossless-lossy compression for real-time depth-sensor streams in 3d telepresence applications,” in Pacific Rim Conference on Multimedia, pp. 442–452, 2015.

- [37] C.-H. Fu, H. Chen, Y.-L. Chan, S.-H. Tsang, and X. Zhu, “Early termination for fast intra mode decision in depth map coding using dis-inheritance,” Signal Processing: Image Communication, vol. 80, 2020.

- [38] Q. Zhang, Y. Wang, T. Wei, B. Jian, and Y. Gan, “A complexity reduction scheme for depth coding in 3d-hevc,” Information, vol. 10, no. 5, p. 164, 2019.

- [39] W. Kim, S. K. Narang, and A. Ortega, “Graph based transforms for depth video coding,” in Speech and Signal (I. I. C. on Acoustics, ed.), (Kyoto), pp. 813–816, Processing, 2012.

- [40] V. Nguyen, D. Min, and M. N. Do, “Efficient techniques for depth video compression using weighted mode filtering,” in IEEE Transactions on Circuits and Systems for Video Technology, vol. 23, no. 2, pp. 189–202, 2013.

- [41] F. J”ager, “Contour-based segmentation and coding for depth map compression,” VCIP, Tainan pp, pp. 1–4, 2011.

- [42] P. Gao and A. Smolic, “Occlusion-aware depth map coding optimization using allowable depth map distortions,” in IEEE Transactions on Image Processing, vol. 28, no. 11, pp. 5266–5280, 2019.

- [43] A. D. Wilson, “Fast lossless depth image compression,” in Proceedings of theACM International Conference on Interactive Surfaces and Spaces, (New York, NY, USA), pp. 100–105, Association for Computing Machinery, 2017.

- [44] I. Schiopu and I. Tabus, “Lossy depth image compression using greedy rate-distortion slope optimization,” in IEEE Signal Processing Letters, vol. 20, no. 11, pp. 1066–1069, 2013.

- [45] I. Schiopu and I. Tabus, “Lossy and near-lossless compression of depth images using segmentation into constrained regions,” European Signal Processing Conference, Bucharest pp, pp. 1099–1103, 2012.

- [46] M. M. Duch, D. Varas, J. R. M. Rubio, J. Ruiz-Hidalgo, and F. Marques, “3d hierarchical optimization for multi-view depth map coding,” Multimed Tools Appl, vol. 77, pp. 19869–19894, 2018.

- [47] Y. Liu and J. Kim, “A depth map into smooth and edge blocks of variable sizes via rate-distortion optimized quad-tree decomposition,” Multimedia Tools and Applications, vol. 79, pp. 8825–8839, 2020.

- [48] Q. Zhang, M. Chen, X. Huang, N. Li, and Y. Gan, “Low-complexity depth map compression in hevc-based 3d video coding,” EURASIP Journal on Image and Video Processing, vol. 2, 2015.

- [49] S. Shahriyar, M. Murshed, M. Ali, and M. Paul, “A novel depth motion vector coding exploiting spatial and inter-component clustering tendency,” Visual Communications and Image Processing, Singapore, pp, pp. 1–4, 2015.

- [50] Y. Fan, S. Wu, and B. Lin, “Three-dimensional depth map motion estimation and compensation for 3d video compression,” in IEEE Transactions on Magnetics, vol. 47, no. 3, pp. 691–695, 2011.

- [51] P. Lee and X. Huang, “3d motion estimation algorithm in 3d video coding,” in Proceedings 2011 International Conference on System Science and Engineering, pp. 338–341, Macao, 2011.

- [52] J. Lei, S. Li, C. Zhu, M. Sun, and C. Hou, “Depth coding based on depth-texture motion and structure similarities,” in IEEE Transactions on Circuits and Systems for Video Technology, vol. 25, no. 2, pp. 275–286, 2015.

- [53] H. Wang and N. Ahuja, “Compact representation of multidimensional data using tensor rank-one decomposition,” in Proceedings of the 17th International Conference on Pattern Recognition, pp. 44–47, vol. 1, 2004.

- [54] Q. Wu, T. Xia, and Y. Yu, “Hierarchical tensor approximation of multidimensional images,” in Conference on Image Processing, San (I. International, ed.), (TX), Antonio, 2007.

- [55] S. Suter, C. Zollikofer, and R. Pajarola, Application of Tensor Approximation to Multiscale Volume Feature Vision Representations Modeling, and Visualization. 2010.

- [56] G. Wetzstein, D. Lanman, M. Hirsch, and R. Raskar, “Tensor displays: Compressive light field synthesis using multilayer displays with directional backlighting,” ACM Trans. Graph, vol. 31, p. 11, July 2012.

- [57] S. K. Suter, J. A. I. Guitian, F. Marton, M. Agus, A. Elsener, C. P. Zollikofer, M. Gopi, E. Gobbetti, and R. Pajarola, “Interactive multiscale tensor reconstruction for multiresolution volume visualization,” IEEE Transactions on Visualization and Computer Graphics, vol. 17, no. 12, pp. 2135–2143, 2011.

- [58] S. K. Suter, M. Makhinya, and R. Pajarola, “Tamresh: Tensor approximation multiresolution hierarchy for interactive volume visualization,” in Proceedings of the 15th Eurographics Conference on Visualization (EuroVis’13, pp. 151–160.

- [59] R. Ballester-Ripoll, S. K. Suter, and R. Pajarola, “Analysis of tensor approximation for compression-domain volume visualization,” Computers and Graphics, vol. 47, pp. 34–47, 2015.

- [60] R. Ballester-Ripoll and R. Pajarola, “Lossy volume compression using tucker truncation and thresholding,” The Visual Computer, pp, pp. 1–14, 2015.

- [61] R. Ballester-Ripoll, P. Lindstrom, and R. Pajarola, “Tthresh: Tensor compression for multidimensional visual data,” in IEEE Transactions on Visualization and Computer Graphics, vol. 26, no. 9, pp. 2891–2903, 2020.

- [62] J. Cai, Z. Cao, and L. Zhang, “Learning a single tucker decomposition network for lossy image compression with multiple bits-per-pixel rates.” 2018.

- [63] L. Ma and E. Solomonik, “Accelerating alternating least squares for tensor decomposition by pairwise perturbation,” arXiv, vol. 1811, p. 10573, 2020.

- [64] O. Kaya and B. Uçar, “Parallel candecomp/parafac decomposition of sparse tensors using dimension trees,” SIAM Journal on Scientific Computing, Society for Industrial and Applied Mathematics, vol. 40, no. 1, pp. C99–C130, 2018.

- [65] A. Phan, P. Tichavský, and A. Cichocki, “Fast alternating ls algorithms for high order candecomp/parafac tensor factorizations,” in IEEE Transactions on Signal Processing, vol. 61, no. 19, pp. 4834–4846, 2013.

- [66] B. Bross, W. J. Han, J. R. Ohm, G. J. Sullivan, and T. Wiegand, “High efficiency video coding (hevc) text specification draft 9,” Joint Collaborative Team on Video Coding (JCT-VC) of ITU-T SG WP 3 and ISO/IEC JTC 1/SC 29/WG 11, document JCTVC-K1003, Shanghai, China, vol. 16, 2012.

- [67] T. W. al., “Special section on the joint call for proposals on high efficiency video coding (hevc) standardization,” in IEEE Transactions on Circuits and Systems for Video Technology, vol. 20, no. 12, pp. 1661–1666, 2010.

- [68] G. Pastuszak, “Hardware architectures for the h.265/hevc discrete cosine transform,” in IET Image Processing, vol. 9, no. 6, p. 6, 2015.

- [69] H. Schwarz, D. Marpe, and T. Wiegand, “Description of exploration experiments in 3d video coding,” ISO/IEC JTC/SC29/WG11 MPEG2010/N11274, Dresden, Germany, vol. 1, 2010.

- [70] C. L. Zitnick, S. B. Kang, M. Uyttendaele, S. Winder, and R. Szeliski, “High-quality video view interpolation using a layered representation,” ACM Trans. Graph, vol. 23, no. 3, pp. 600–608, 2004.

- [71] J. Collaborative, “Team on video coding (jctvc),” HEVC reference software, HM version, vol. 16, p. 8.

- [72] G. Bjontegaard, “Calculation of average psnr differences between rd-curves,” in ITU-T VCEG Meeting, Austin, Texas, USA, Tech. Rep, 2001.

- [73] M. Sharma, S. Chaudhury, and B. Lall, “3dtv view generation with virtual pan/tilt/zoom functionality,” in In Proceedings of the Eighth Indian Conference on Computer Vision, Graphics and Image Processing, Article 41, pp. 1–8.

- [74] M. Sharma and G. Ragavan, “A novel image fusion scheme for ftv view synthesis based on layered depth scene representation & scale periodic transform,” in International Conference on 3D Immersion (IC3D) (B. Brussels, ed.), pp. 1–8, 2019.

- [75] M. Sharma, G. Ragavan, and B. Arathi, “A novel algebaric variety based model for high quality free-viewpoint view synthesis on a krylov subspace,” in International Conference on 3D Immersion (IC3D) (B. Brussels, ed.), pp. 1–8, 2019.