A Fast and Effective Method of Macula Automatic Detection for Retina Images

Abstract

Retina image processing is one of the crucial and popular topics in medical image processing. The macular fovea is responsible for sharp central vision, which is necessary for activities where visual detail is of primary importance, such as reading, writing, and driving. This paper proposes a novel method that locates the macula through a series of morphological operations. While maintaining high accuracy, our approach is simpler and faster than existing methods. Furthermore, our method detects the macula robustly on real images from a hospital.

Keywords— Macula Detection, Diabetic Retinopathy, Morphology

1 Introduction

Diabetic retinopathy (DR) is the leading cause of blindness in the working-age population of the developed world [9, 12]. Moreover, the number of people with DR is expected to rise to 191 million by 2030 [7, 15, 8]. However, vision loss due to DR can be prevented if DR is diagnosed in its early stages [8]. DR diagnosis requires detecting the macular fovea on the retina, which is performed by visual examination of eye fundus images. The macular fovea is responsible for sharp central vision, which is necessary for activities where visual detail is of primary importance, such as reading, writing, and driving. In addition, macular degeneration damages vision irreversibly, so identifying the macular area is important.

A large number of image processing techniques and algorithms have been proposed to detect the macula. Most existing fovea detection methods consist of two sequential stages. In the first stage, the optic disc (OD) center is detected, and a region of interest is defined using the known average distance between the fovea and the OD, which are separated by approximately 2.5 OD diameters. In the second stage, the fovea is located by exploiting its visual appearance within the region extracted in the first stage [3]. Li and Chutatape [10] locate the macula according to the OD location and the vascular arches. Many methods use the vascular arches and the OD to find a region of interest that contains the fovea. Yu et al. [16] locate the macula by selecting the lowest response of template matching. Gegundez-Arias et al. [4] detect the macula by defining a region of interest with respect to the OD location and the vascular tree. Chin et al. [2] use the OD location, the arched blood vessels, and the vascular density to locate the macula. Dashtbozorg et al. [3] present an automatic OD and fovea detection technique using an innovative super-elliptical filter.

There are also machine learning methods to recognize the macula, which generally consist of three stages: candidate exudate detection, feature extraction, and classification. In the method proposed by Li et al. [11], candidate exudate regions are detected using background subtraction and morphological techniques, and the basic properties of the exudates are extracted as features for classification by a support vector machine (SVM) classifier. A recent paper on macula detection [14] also uses machine learning, in that case to analyze age-related macular degeneration.

To improve detection accuracy, the above methods can be combined. The method presented by Aquino [1] uses both visual and anatomical features for fovea detection and refines the fovea center estimate when the fovea is detectable in the image.

However, these methods have drawbacks. They are time-consuming because they combine many different techniques. Moreover, most of them detect the macula using information about the OD, so if OD localization fails, the estimated macula location will also be wrong. Zheng et al. [17] propose a method that detects the macula first, before OD localization or vessel detection, using a directional local contrast filter and a local vessel density feature. Kamble et al. [6] present an approach for fast and accurate localization of the OD and fovea using one-dimensional scanned intensity profile analysis.

In this paper, we propose a fast method that locates the macula through a series of morphological operations. On a common modern laptop equipped with an Intel i5 processor and 4 GB of RAM, our algorithm completes the whole detection procedure within half a second on average, which is faster than most current methods. Beyond its speed, our approach achieves desirable accuracy on a dataset of real retina images. Moreover, because the detection process involves no complicated computation, it does not require high-performance computing or large memory, which makes it accessible to most hospitals. The flow chart of the processing is shown below.

2 Macula Detection Approach

2.1 Image Preprocessing

2.1.1 Grayscale Conversion

First, the three color channels (red, green, and blue) of the fundus image are extracted. The green channel shows the best contrast between the vessels and the background and is usually used for vessel segmentation; it also contains most of the information about the macula. The red channel also shows the macula, with less information about the vessels. Therefore, most articles use the red or green channel of the fundus image.

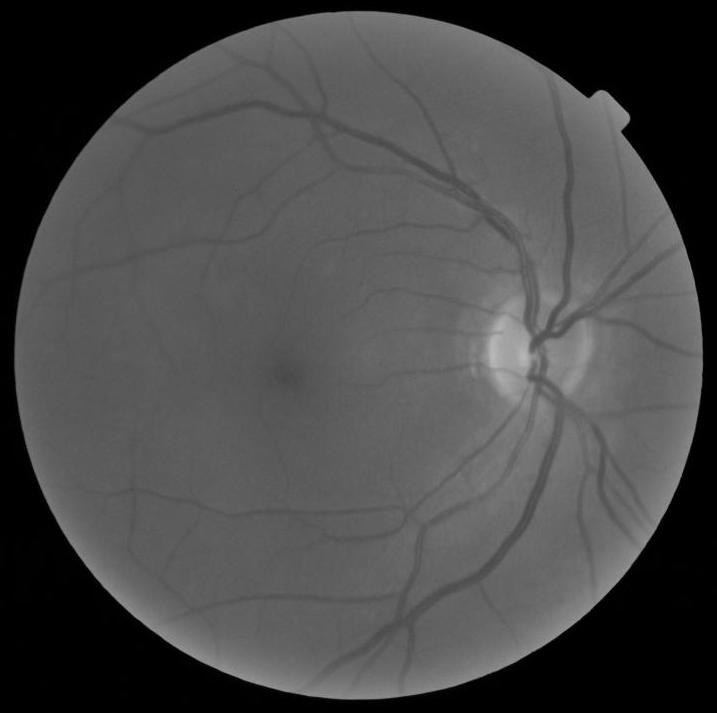

Figure 1 shows that every channel contains at least some macular information, and the location of the macula can also be seen in the original image. We therefore combine the three channels by converting the RGB image to a grayscale image, on which all subsequent processing is based. The conversion is given by

$$\mathrm{Gray} = 0.299\,R + 0.587\,G + 0.114\,B \qquad (1)$$

Grayscale can be regarded as a quantification of luminance, whereas RGB values correspond to three wavelength bands. The conversion must therefore account for the different sensitivity of the human eye to each wavelength, which determines the coefficients of the three channels in (1).
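The channel extraction and grayscale conversion can be sketched in NumPy as follows. This is a minimal illustration, assuming an H × W × 3 array in RGB order and the ITU-R BT.601 luma weights (0.299, 0.587, 0.114) as the coefficients; the helper names `split_channels` and `to_grayscale` are hypothetical, not from the paper.

```python
import numpy as np

# Assumed luminance weights (ITU-R BT.601 luma); the paper's own coefficients are those of Eq. (1).
WEIGHTS = np.array([0.299, 0.587, 0.114])

def split_channels(rgb: np.ndarray):
    """Return the red, green and blue channels of an H x W x 3 RGB image."""
    return rgb[..., 0], rgb[..., 1], rgb[..., 2]

def to_grayscale(rgb: np.ndarray) -> np.ndarray:
    """Weighted sum of R, G and B; returns a float image in the input's value range."""
    # (H, W, 3) @ (3,) contracts the channel axis and yields an (H, W) array
    return np.asarray(rgb, dtype=float) @ WEIGHTS
```

For a uint8 fundus image, `to_grayscale(img)` returns float values in [0, 255], and `split_channels(img)[1]` gives the green channel discussed above.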

In the following, we use two images as examples to describe every step of macula detection from beginning to end.

2.1.2 Morphological Transformation

Morphology is a broad set of image processing operations that process images based on shapes. In most articles about macula detection, the morphological transformation is an indispensable operation, and this article is no exception.

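Grayscale structuring elements are also functions of the same format as the image, called “structuring functions”. Let $x$ and $y$ represent pixel coordinates. Denoting an image by $f(x)$ and the structuring function by $b(x)$, the grayscale dilation of $f$ by $b$ is given by

$$(f \oplus b)(x) = \sup_{y \in E} \left[ f(y) + b(x - y) \right], \qquad (2)$$

where “sup” denotes the supremum and $E$ is the image domain.

Similarly, the erosion of $f$ by $b$ is given by

$$(f \ominus b)(x) = \inf_{y \in E} \left[ f(y) - b(y - x) \right], \qquad (3)$$

where “inf” denotes the infimum.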
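Equations (2) and (3) can be transcribed almost literally. The sketch below assumes the structuring function $b$ is stored as a small $(2r+1)\times(2r+1)$ NumPy array indexed by the offset between pixels, with `-inf` marking offsets outside its support, and treats pixels outside the image domain as absent; `grey_dilate` and `grey_erode` are hypothetical helper names.

```python
import numpy as np

def grey_dilate(f: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Eq. (2): (f ⊕ b)(x) = sup_y [f(y) + b(x - y)].
    b[d + r] holds b(d) for offset d = x - y; -inf entries are outside its support."""
    r = b.shape[0] // 2
    H, W = f.shape
    # pad with -inf so pixels outside the image never contribute to the supremum
    padded = np.pad(f.astype(float), r, mode="constant", constant_values=-np.inf)
    out = np.full((H, W), -np.inf)
    for di in range(-r, r + 1):
        for dj in range(-r, r + 1):
            w = b[di + r, dj + r]
            if np.isneginf(w):
                continue
            f_y = padded[r - di:r - di + H, r - dj:r - dj + W]  # f(x - d) for every x
            out = np.maximum(out, f_y + w)
    return out

def grey_erode(f: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Eq. (3): (f ⊖ b)(x) = inf_y [f(y) - b(y - x)], with d = y - x."""
    r = b.shape[0] // 2
    H, W = f.shape
    # pad with +inf so pixels outside the image never contribute to the infimum
    padded = np.pad(f.astype(float), r, mode="constant", constant_values=np.inf)
    out = np.full((H, W), np.inf)
    for di in range(-r, r + 1):
        for dj in range(-r, r + 1):
            w = b[di + r, dj + r]
            if np.isneginf(w):
                continue
            f_y = padded[r + di:r + di + H, r + dj:r + dj + W]  # f(x + d) for every x
            out = np.minimum(out, f_y - w)
    return out
```

With a flat $b$ (all zeros on its support), dilation reduces to a moving maximum and erosion to a moving minimum; a non-flat $b$ adds or subtracts its height at each offset.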

Just like in binary morphology, the opening and closing are given respectively by

$$f \circ b = (f \ominus b) \oplus b \qquad (4)$$

and

$$f \bullet b = (f \oplus b) \ominus b. \qquad (5)$$

Dilation expands an image and erosion shrinks it. Opening generally smooths the contour of an object, breaks narrow isthmuses, and eliminates thin protrusions. The closing also tends to smooth sections of contours, but, as opposed to opening, it generally fuses narrow breaks and long thin gulfs, eliminates small holes, and fills gaps in the contour [5].

Moreover, the top-hat transform of $f$ is given by

$$T_w(f) = f - f \circ b, \qquad (6)$$

where $\circ$ denotes the opening operation.

This transformation is useful for enhancing detail in the presence of shading. The bottom-hat transform of $f$ is given by

$$T_b(f) = f \bullet b - f, \qquad (7)$$

where $\bullet$ is the closing operation.

In the actual image processing, we use the following algorithm:

| (8) |

where the structuring element is a disk with a radius of 15 pixels. The two example images after this processing are shown in Figure 3.
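The sketch below implements the opening, closing, top-hat and bottom-hat transforms of (4) to (7) with a flat disk-shaped structuring element of radius 15 pixels, using plain NumPy. The `enhance` function combines them as $f + T_w(f) - T_b(f)$, a common top-hat/bottom-hat contrast enhancement; this combination and all function names are assumptions for illustration and are not necessarily the paper's Equation (8).

```python
import numpy as np

def disk(radius: int) -> np.ndarray:
    """Flat disk-shaped footprint: True where x^2 + y^2 <= radius^2."""
    y, x = np.ogrid[-radius:radius + 1, -radius:radius + 1]
    return x * x + y * y <= radius * radius

def _flat(f: np.ndarray, footprint: np.ndarray, reduce, pad_value: float) -> np.ndarray:
    """Running max (dilation) or min (erosion) over a symmetric flat footprint.
    Pixels outside the image are padded with pad_value so they never win."""
    r = footprint.shape[0] // 2
    H, W = f.shape
    padded = np.pad(f.astype(float), r, mode="constant", constant_values=pad_value)
    out = np.full((H, W), pad_value)
    for di, dj in zip(*np.nonzero(footprint)):
        out = reduce(out, padded[di:di + H, dj:dj + W])
    return out

def dilate(f, se):
    return _flat(f, se, np.maximum, -np.inf)

def erode(f, se):
    return _flat(f, se, np.minimum, np.inf)

def opening(f, se):     # Eq. (4)
    return dilate(erode(f, se), se)

def closing(f, se):     # Eq. (5)
    return erode(dilate(f, se), se)

def top_hat(f, se):     # Eq. (6): bright details narrower than the disk
    return f - opening(f, se)

def bottom_hat(f, se):  # Eq. (7): dark details narrower than the disk
    return closing(f, se) - f

def enhance(f, radius=15):
    """Assumed combination f + top-hat - bottom-hat (not necessarily Eq. (8))."""
    se = disk(radius)
    return f + top_hat(f, se) - bottom_hat(f, se)
```

Because the macula and vessels are dark, the bottom-hat term highlights them, and subtracting it darkens such structures further relative to the background.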

2.1.3 Adaptive Histogram Equalization

To further enhance the detail of the macular area, we apply adaptive histogram equalization (AHE) for contrast enhancement. After enhancement, dark regions such as the vessels and the macular area become dominant. Histogram equalization spreads out the most frequent intensity values so that intensities are better distributed over the histogram, which allows areas of lower contrast to gain higher contrast. Ordinary histogram equalization does this for the whole image and thus increases global contrast, whereas AHE computes the mapping from the histograms of local regions, so it enhances local contrast. Equalization proceeds in three steps: first, count the occurrences of each gray level to form the histogram; second, accumulate the normalized histogram; and finally, calculate the new pixel values from the accumulated histogram. The two images after adaptive histogram equalization are shown in Figure 4.
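The three equalization steps can be sketched in NumPy as follows, assuming an 8-bit integer image. `tiled_ahe` is a deliberately simplified, hypothetical AHE that equalizes each tile independently; practical implementations such as MATLAB's `adapthisteq` or scikit-image's `skimage.exposure.equalize_adapthist` (both CLAHE) additionally clip the histogram and interpolate between tiles to avoid block artifacts.

```python
import numpy as np

def equalize(img: np.ndarray, levels: int = 256) -> np.ndarray:
    """Histogram equalization of a non-negative integer image with values < levels."""
    hist = np.bincount(img.ravel(), minlength=levels)     # step 1: count each gray level
    cdf = np.cumsum(hist) / img.size                      # step 2: accumulate the normalized histogram
    lut = np.round(cdf * (levels - 1)).astype(img.dtype)  # step 3: new value for every gray level
    return lut[img]

def tiled_ahe(img: np.ndarray, tile: int = 64, levels: int = 256) -> np.ndarray:
    """Simplified adaptive equalization: equalize each tile on its own histogram.
    No clip limit and no interpolation between tiles, unlike CLAHE."""
    out = np.empty_like(img)
    H, W = img.shape
    for i in range(0, H, tile):
        for j in range(0, W, tile):
            out[i:i + tile, j:j + tile] = equalize(img[i:i + tile, j:j + tile], levels)
    return out
```

For example, an image whose four gray levels 0 to 3 each occupy a quarter of the pixels is mapped to 64, 128, 191 and 255, spreading the values over the full 8-bit range.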

To eliminate the remaining noise, we then erode and dilate the images.

2.2 Otsu’s method

Next, to find the macular area, we use Otsu’s method (the maximum between-class variance method), which is based on the idea of clustering. The method divides the pixels of a grayscale image into two classes by gray level and uses the variance between the classes to find a suitable dividing gray level, so it can select the binarization threshold automatically. Otsu’s algorithm is widely used for threshold selection in image segmentation; its computation is simple, and it is relatively insensitive to image brightness and contrast.

The algorithm assumes that the image contains two classes of pixels following a bimodal histogram (foreground pixels and background pixels). It then calculates the optimum threshold separating the two classes so that their combined spread (intra-class variance) is minimal, or equivalently (because the sum of pairwise squared distances is constant) so that their inter-class variance is maximal [13].

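Assume that the gray levels of the original image are $0, 1, \ldots, L-1$, the total number of pixels is $N$, and the number of pixels with gray level $i$ is $n_i$.

First, we normalize the gray-level histogram:

$$p_i = \frac{n_i}{N}, \qquad \sum_{i=0}^{L-1} p_i = 1,$$

and set the segmentation threshold to $t$. The gray levels are divided into two classes, $C_0 = \{0, 1, \ldots, t\}$ and $C_1 = \{t+1, \ldots, L-1\}$, with probabilities

$$\omega_0(t) = \sum_{i=0}^{t} p_i, \qquad \omega_1(t) = \sum_{i=t+1}^{L-1} p_i,$$

where $\omega_0(t) + \omega_1(t) = 1$.

The mean gray levels of the two classes are

$$\mu_0(t) = \frac{1}{\omega_0(t)} \sum_{i=0}^{t} i\, p_i, \qquad \mu_1(t) = \frac{1}{\omega_1(t)} \sum_{i=t+1}^{L-1} i\, p_i,$$

and the overall mean gray level is

$$\mu_T = \sum_{i=0}^{L-1} i\, p_i = \omega_0(t)\,\mu_0(t) + \omega_1(t)\,\mu_1(t).$$

Then, we exhaustively search for the threshold that maximizes the between-class variance

$$\sigma_B^2(t) = \omega_0(t)\,[\mu_0(t) - \mu_T]^2 + \omega_1(t)\,[\mu_1(t) - \mu_T]^2 = \omega_0(t)\,\omega_1(t)\,[\mu_0(t) - \mu_1(t)]^2. \qquad (9)$$

Maximizing $\sigma_B^2$ is equivalent to minimizing the intra-class variance, defined as the weighted sum of the variances of the two classes.

Finally, we step through all possible thresholds $t$ and select the one that maximizes $\sigma_B^2(t)$. Because of the particular appearance of the macula, we subtract 0.2 from the resulting threshold (normalized to $[0, 1]$) to obtain better results. If the adjusted threshold is larger than 1, we take 1 (white), and if it is less than 0, we take 0 (black). Figure 6 shows the results after Otsu’s method.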
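The threshold search and the 0.2 adjustment can be sketched as follows, assuming an 8-bit grayscale image. The closed form $\sigma_B^2(t) = (\mu_T\,\omega_0(t) - \mu(t))^2 / (\omega_0(t)\,\omega_1(t))$, with $\mu(t) = \sum_{i \le t} i\,p_i$, is the standard equivalent of the between-class variance and lets all thresholds be evaluated at once. Treating pixels above the adjusted threshold as white follows the usual binarization convention; `otsu_threshold` and `binarize_macula` are illustrative names.

```python
import numpy as np

def otsu_threshold(gray: np.ndarray, levels: int = 256) -> int:
    """Return the gray level t maximizing the between-class variance.
    Class C0 holds levels 0..t and class C1 holds levels t+1..levels-1."""
    p = np.bincount(gray.ravel(), minlength=levels) / gray.size  # normalized histogram p_i
    omega0 = np.cumsum(p)                                        # omega_0(t)
    omega1 = 1.0 - omega0                                        # omega_1(t)
    mu = np.cumsum(np.arange(levels) * p)                        # sum_{i<=t} i * p_i
    mu_T = mu[-1]                                                # overall mean gray level
    sigma_b2 = np.zeros(levels)
    valid = (omega0 > 1e-12) & (omega1 > 1e-12)                  # both classes non-empty
    sigma_b2[valid] = (mu_T * omega0[valid] - mu[valid]) ** 2 / (omega0[valid] * omega1[valid])
    return int(np.argmax(sigma_b2))

def binarize_macula(gray: np.ndarray, offset: float = 0.2, levels: int = 256) -> np.ndarray:
    """Binarize with the Otsu threshold (normalized to [0, 1]) minus `offset`, clipped to [0, 1].
    True (white) marks pixels above the adjusted threshold."""
    t = otsu_threshold(gray, levels) / (levels - 1)
    t = min(max(t - offset, 0.0), 1.0)
    return gray / (levels - 1) > t
```

For example, an image whose pixels take the values 10, 100 and 250 in proportions 1:1:2 gives an Otsu threshold of 100; after subtracting 0.2 (about 51 gray levels), only the pixels at 10 fall below the adjusted threshold, so lowering the threshold keeps only the darkest region on the dark side.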

2.3 Area Selection

We select the final region by computing the area of each connected component and retaining the components whose area is greater than 400 and less than 5000 pixels. Because the macular area is usually approximately circular, we judge whether a component is the macula by its circularity, computed as

$$C = \frac{4A}{\pi L_{\max}^2}, \qquad (10)$$

where $A$ is the area of the connected component and $L_{\max}$ is its longest length. $C$ lies between 0 and 1, and the closer it is to 1, the more circular the component. A connected component is regarded as circular only if $C$ is sufficiently close to 1.
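As a quick check on this measure, assume circularity is computed as $C = 4A/(\pi L_{\max}^2)$ with $L_{\max}$ the longest length of the region. A disk and a square then give:

$$\text{disk of diameter } d:\quad A = \frac{\pi d^2}{4},\quad L_{\max} = d \ \Rightarrow\ C = \frac{4 \cdot \pi d^2 / 4}{\pi d^2} = 1,$$

$$\text{square of side } s:\quad A = s^2,\quad L_{\max} = \sqrt{2}\,s \ \Rightarrow\ C = \frac{4 s^2}{2 \pi s^2} = \frac{2}{\pi} \approx 0.64.$$

For a $w \times h$ rectangle with $h \gg w$, $L_{\max} \approx h$ and $C \approx 4w/(\pi h)$, which tends to 0, so elongated regions score low.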

Finally, we select the largest remaining connected component and take its center as the location of the fovea. In addition, to improve recognition accuracy, for images in which the OD is easy to identify, the OD location can be used to help determine the location of the macula.
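The area and circularity filtering can be sketched as below, assuming the binary mask from the previous step marks macula candidates as `True`. The choice of 8-connectivity and the cutoff `min_circularity=0.5` are hypothetical, since the text does not specify them, and the longest length is taken as the largest distance between two pixel centres. The optional use of OD information is not included.

```python
import numpy as np
from collections import deque

# 8-connectivity is an assumption; the text does not state which connectivity is used.
NEIGHBOURS = [(di, dj) for di in (-1, 0, 1) for dj in (-1, 0, 1) if (di, dj) != (0, 0)]

def connected_components(mask):
    """Label 8-connected True regions by BFS; return a list of (row, col) pixel lists."""
    H, W = mask.shape
    seen = np.zeros((H, W), dtype=bool)
    components = []
    for si, sj in zip(*np.nonzero(mask)):
        si, sj = int(si), int(sj)
        if seen[si, sj]:
            continue
        seen[si, sj] = True
        queue, pixels = deque([(si, sj)]), []
        while queue:
            i, j = queue.popleft()
            pixels.append((i, j))
            for di, dj in NEIGHBOURS:
                ni, nj = i + di, j + dj
                if 0 <= ni < H and 0 <= nj < W and mask[ni, nj] and not seen[ni, nj]:
                    seen[ni, nj] = True
                    queue.append((ni, nj))
        components.append(pixels)
    return components

def longest_length(pixels):
    """Largest distance between two pixel centres. The farthest pair are convex-hull
    vertices, which always lie on the region border, so only border pixels are compared."""
    pts = set(pixels)
    border = np.array([(i, j) for i, j in pixels
                       if any((i + di, j + dj) not in pts
                              for di, dj in ((1, 0), (-1, 0), (0, 1), (0, -1)))], dtype=float)
    diff = border[:, None, :] - border[None, :, :]
    return float(np.sqrt((diff ** 2).sum(axis=-1)).max())

def circularity(pixels):
    """C = 4A / (pi * L^2); about 1 for a disk (digital disks can slightly exceed 1)."""
    l_max = longest_length(pixels)
    return 4.0 * len(pixels) / (np.pi * l_max ** 2) if l_max > 0 else 0.0

def locate_fovea(mask, min_area=400, max_area=5000, min_circularity=0.5):
    """Return the (row, col) centre of the largest circular component, or None.
    min_circularity is a hypothetical cutoff, not a value from the paper."""
    candidates = [c for c in connected_components(mask)
                  if min_area < len(c) < max_area and circularity(c) >= min_circularity]
    if not candidates:
        return None
    best = max(candidates, key=len)
    row, col = np.mean(np.array(best, dtype=float), axis=0)
    return float(row), float(col)
```

Comparing only border pixels keeps the longest-length computation cheap even for components close to the 5000-pixel limit.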

3 Real Data

In this section, we apply the proposed algorithm to 254 real retina images collected by the Image Reading Center of Zhongshan Ophthalmic Center, Sun Yat-sen University. Of these, 247 images contain the macula. The images were stretched by .

Figure 8 shows some typical macula detection results of the proposed algorithm, in which the location of the macula is successfully detected.

We also evaluate the classification accuracy of our method. The detection results were assessed by two experienced image readers, and the recognition accuracy is shown in Table 1.

Table 1: Detection results on the 254 real retina images (0: no macula, 1: macula).

| | Actual 0 | Actual 1 |
|---|---|---|
| Predicted 0 | 7 | 8 |
| Predicted 1 | 0 | 239 |

Table 1 shows that every macula identified by our method in this dataset is a true macula; that is, the false positive rate is 0%. The sensitivity is 96.8%, and the specificity is 100%.
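The reported rates follow directly from the counts in Table 1, taking 1 as "macula present" and the image readers' judgement as ground truth:

```python
# Counts from Table 1 (1 = macula present, 0 = absent)
tp, fn = 239, 8   # actual 1: predicted 1 / predicted 0
tn, fp = 7, 0     # actual 0: predicted 0 / predicted 1

sensitivity = tp / (tp + fn)          # true positive rate
specificity = tn / (tn + fp)          # true negative rate
false_positive_rate = fp / (fp + tn)  # equals 1 - specificity
precision = tp / (tp + fp)            # share of detected maculae that are real

print(f"sensitivity={sensitivity:.1%} specificity={specificity:.1%} "
      f"FPR={false_positive_rate:.1%} precision={precision:.1%}")
# prints: sensitivity=96.8% specificity=100.0% FPR=0.0% precision=100.0%
```

Because only seven images lack a macula, the 100% specificity rests on a small number of negative cases.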

4 Conclusion

This paper proposes a simple method that locates the macula through a series of morphological operations. While maintaining high accuracy, our approach is simpler and faster than others and does not rely on the location of the blood vessels, the optic disc, or other anatomical structures. On real hospital images, our method also detects the macula automatically and robustly.

References

- Aquino [2014] A Aquino. Establishing the macular grading grid by means of fovea centre detection using anatomical-based and visual-based features. Computers in Biology & Medicine, 55:61, 2014.

- Chin et al. [2013] Khai Sing Chin, Emanuele Trucco, Lailing Tan, and Peter J. Wilson. Automatic fovea location in retinal images using anatomical priors and vessel density. Pattern Recognition Letters, 34(10):1152–1158, 2013.

- Dashtbozorg et al. [2016] Behdad Dashtbozorg, Jiong Zhang, Fan Huang, and Bart M. Ter Haar Romeny. Automatic Optic Disc and Fovea Detection in Retinal Images Using Super-Elliptical Convergence Index Filters. Springer International Publishing, 2016.

- Gegundez-Arias et al. [2013] Manuel E. Gegundez-Arias, Diego Marin, Jose M. Bravo, and Angel Suero. Locating the fovea center position in digital fundus images using thresholding and feature extraction techniques. Computerized Medical Imaging & Graphics the Official Journal of the Computerized Medical Imaging Society, 37(5-6):386, 2013.

- Gonzalez and Wintz [2010] Rafael C Gonzalez and Paul Wintz. Digital image processing. PUBLISHING HOUSE OF ELECTRONICS INDUSTRY, 2010.

- Kamble et al. [2017] Ravi Kamble, Manesh Kokare, Girish Deshmukh, Fawnizu Azmadi Hussin, and Fabrice Mériaudeau. Localization of optic disc and fovea in retinal images using intensity based line scanning analysis. Computers in biology and medicine, 87:382–396, 2017.

- Kaur and Mittal [2018] Jaskirat Kaur and Deepti Mittal. A generalized method for the segmentation of exudates from pathological retinal fundus images. Biocybernetics and Biomedical Engineering, 38(1):27–53, 2018.

- Khojasteh et al. [2018] Parham Khojasteh, Behzad Aliahmad, and Dinesh K Kumar. Fundus images analysis using deep features for detection of exudates, hemorrhages and microaneurysms. BMC ophthalmology, 18(1):288, 2018.

- Leontidis et al. [2017] Georgios Leontidis, Bashir Al-Diri, and Andrew Hunter. A new unified framework for the early detection of the progression to diabetic retinopathy from fundus images. Computers in biology and medicine, 90:98–115, 2017.

- Li and Chutatape [2004] H. Li and O Chutatape. Automated feature extraction in color retinal images by a model based approach. IEEE transactions on bio-medical engineering, 51(2):246–54, 2004.

- Li et al. [2015] Wen Li, Yide Ma, and Ting Yu. Automatic detection of exudates and fovea for grading of diabetic macular edema in fundus image. 2015.

- Mookiah et al. [2013] Muthu Rama Krishnan Mookiah, U Rajendra Acharya, Chua Kuang Chua, Choo Min Lim, EYK Ng, and Augustinus Laude. Computer-aided diagnosis of diabetic retinopathy: A review. Computers in biology and medicine, 43(12):2136–2155, 2013.

- Otsu [1979] Nobuyuki Otsu. A threshold selection method from gray-level histograms. IEEE Transactions on Systems, Man, and Cybernetics, 9(1):62–66, 1979.

- PC Bhosale [2017] S Bobde PC Bhosale, P Kulkarni. Automatic detection of age related macular degeneration using retinal colour images. International Journal on Emerging Trends in Technology, 4:8078–8080, 2017.

- Shaw et al. [2010] Jonathan E Shaw, Richard A Sicree, and Paul Z Zimmet. Global estimates of the prevalence of diabetes for 2010 and 2030. Diabetes research and clinical practice, 87(1):4–14, 2010.

- Yu et al. [2011] H. Yu, S. Barriga, C. Agurto, S. Echegaray, M. Pattichis, G. Zamora, W. Bauman, and P. Soliz. Fast localization of optic disc and fovea in retinal images for eye disease screening. In Medical Imaging: Computer-aided Diagnosis, 2011.

- Zheng et al. [2014] Shao Hua Zheng, Chen Jian, Pan Lin, Guo Jian, and Yu Lun. A novel method of macula fovea and optic disk automatic detection for retinal images. Journal of Electronics & Information Technology, 36(11):2586–2592, 2014.