A Diffusive Data Augmentation Framework for Reconstruction of Complex Network Evolutionary History

Abstract

The evolutionary processes of complex systems contain critical information regarding their functional characteristics. The generation time of edges provides insights into the historical evolution of various networked complex systems, such as protein-protein interaction networks, ecosystems, and social networks. Recovering these evolutionary processes holds significant scientific value, including aiding in the interpretation of the evolution of protein-protein interaction networks. However, existing methods are capable of predicting the generation times of remaining edges given a partial temporal network but often perform poorly in cross-network prediction tasks. These methods frequently fail in edge generation time recovery tasks for static networks that lack timestamps. In this work, we adopt a comparative paradigm-based framework that fuses multiple networks for training, enabling cross-network learning of the relationship between network structure and edge generation times. Compared to separate training, this approach yields an average accuracy improvement of 16.98%. Furthermore, given the difficulty in collecting temporal networks, we propose a novel diffusion-model-based generation method to produce a large number of temporal networks. By combining real temporal networks with generated ones for training, we achieve an additional average accuracy improvement of 5.46% through joint training.

Index Terms:

Complex network, Evolution, Data Augmentation, Diffusion model.I Introduction

The core challenge in reconstructing the evolution of complex networks is inferring the order in which edges are formed in dynamic graphs, thus reproducing the network’s evolutionary process ([1, 2]). This task is intrinsically tied to the dependency between network structure and edge formation sequence, as accurately inferring this order can reveal the underlying dynamic mechanisms of the network, as illustrated in Fig. 1. Successfully reconstructing network evolution has significant applications across various domains, such as social network analysis, traffic system optimization, and biological network research ([3, 4, 5]). These fields require a deep understanding of how networks evolve over time to predict future trends and implement effective interventions or optimizations.

Recent work published in Nature Communications 2024 [6] introduces a novel framework that defines and addresses the important problem of complex network evolution prediction. This approach predicts the generation times of network edges by leveraging the structural features of the network. Specifically, it utilizes the generation times of a subset of edges to infer the temporal information for the remaining edges, effectively linking network structural characteristics with edge generation times. While this represents a significant advancement, many real-world applications involve networks where no temporal information is available, presenting an even more challenging problem. Many networks are observed as static snapshots without temporal information. For example, in ecological predator-prey networks (as shown in Fig. 1), current interactions are easy to capture, but reconstructing evolutionary trajectories over millennia is costly and challenging. Similarly, in social and protein-protein interaction networks, while current structures are observable, tracing their step-by-step evolution remains difficult.

These scenarios highlight a common challenge in real-world applications: inferring the evolutionary history of a network solely from its static structure. While the [6] framework is effective in scenarios where partial temporal information is available, it is not well-suited for tasks where the network is entirely static, with no prior temporal data to leverage. This limitation underscores the necessity of developing novel methodologies capable of reconstructing network evolution directly from static structures, addressing a problem of critical importance across diverse domains.

To address the limitations of existing methods, we reformulate the task of predicting edge generation times into an equivalent task: predicting the relative temporal order between any two edges within the network. This reformulation simplifies the problem and transforms the previously non-transferable task into one that enables cross-network learning of the relationship between network features and edge generation times.

Unlike prior models that are typically trained on a single temporal network, our approach leverages multiple temporal networks for training, allowing the model to learn generalized associations between network features and edge generation times across networks. As a result, the model trained on multiple temporal networks demonstrates superior and consistent performance when applied to previously unseen static networks.

Furthermore, to enhance the model’s performance and address the scarcity of real-world temporal network data, we employ a diffusion model to generate an arbitrary number of augmented temporal networks based on existing temporal networks. This augmentation process provides a large and diverse dataset comprising both real and generated temporal networks. By training on this enriched dataset, the model achieves even better performance, particularly in scenarios involving unseen static networks, demonstrating its robustness and effectiveness.

In summary, the key contributions of our work are as follows:

-

•

Cross-Network Framework for Edge Generation Time Prediction: We introduce a novel framework capable of learning the relationship between network features and edge generation times across multiple temporal networks. This approach enables the model trained on multiple temporal networks to generalize effectively to unseen static networks for edge generation time prediction.

-

•

Diffusion-Based Edge Timestamp Generation: We propose a diffusion model-based method for generating edge timestamps from network features, achieving exceptional performance in predicting edge generation times and demonstrating robustness and effectiveness.

-

•

Integration of Real and Augmented Temporal Networks: By integrating real temporal networks with diffusion model-augmented networks, we train a stable, high-performing model that outperforms existing methods in predicting edge generation times in static networks.

II RELATED WORK

II-A Temporal Networks in Complex Networks

Recent advancements in temporal networks have significantly enriched complex network analysis [7, 8, 9, 10, 11]. Early models such as Erdős-Rényi and Barabási-Albert ([12, 13]) mainly addressed static network properties, offering limited applicability to dynamic systems. [14] provided foundational concepts like temporal paths, establishing a basis for subsequent research. [15] highlighted bursty interaction patterns in real-world networks, emphasizing temporal heterogeneity. [16] extended random walk theory to temporal networks, crucial for understanding diffusion processes. [17] introduced methods for detecting communities in dynamic structures, while [18] integrated predictive models for forecasting interactions. [19] reviewed evolving community detection approaches, showcasing the need to address both temporal and topological dynamics in analysis. [6] introduced the problem and method for predicting network evolution based on network structures. By utilizing the timestamps of a subset of network edges, the method effectively predicts and reconstructs the remaining edges, achieving promising results. Furthermore, this work demonstrated that the reconstructed edge timestamps could also enhance performance in link prediction tasks, further underscoring the significance of studying network evolution.

II-B Dynamic Graphs and Temporal Network Generation

Recent developments in temporal network generation have significantly advanced the field, focusing on capturing both the structural and temporal dynamics of evolving networks. [20] improved temporal modeling by transforming temporal interactions into static graphs, while [21] utilized motif-based approaches for generating time-dependent structures, though it assumed fixed rates and lacked adaptability to real-world temporal fluctuations. [22] introduced TIGGER leveraging temporal point processes, combining both inductive and transductive learning to handle large-scale, complex networks with superior efficiency and accuracy. This progression highlights the growing ability of temporal models to replicate the nuanced evolution of real-world dynamic systems.

II-C Data Augmentation via Graph Diffusion Models

Employing generative methods such as generative adversarial networks (GANs) to learn a distribution based on a small sample of data and subsequently conduct sampling to obtain a large quantity of training samples is a commonly adopted method [23, 24, 25, 26]. Diffusion models have recently shown significant advantages in performance, and have done well on graph generation tasks [27, 28, 29, 30], making them suitable for solving the problem of small-sample learning in reconstructing network evolutions. Specifically, [28] first propose to introduce the score-based diffusion model to solve the graph generation problem. The Gaussian noise is adopted to construct the diffusion process, leading to poor sparsity generated graphs. [29] explore jointly modeling the nodes and edges with semantic features. [27] use multinomial noise to make the diffusion process operating in discrete space, which greatly improves the performance. Therefore, we can see that using graph diffusion models to generate sufficient samples to enhance edge order prediction performance is promising.

III Preliminaries

III-A Reformulating the Edge Generation Prediction Task

In the study of network evolution, predicting the exact generation time of edges represents a significant challenge. Directly learning the relationship between network features and edge generation times proves to be difficult due to the inherent complexity and variability of these relationships across different networks. Moreover, such a task lacks generalizability; the features learned in one network often fail to generalize effectively to another, rendering the task unsuitable for cross-network transferability. Thus, learning to predict edge generation times within the same network—where partial timestamps are available for predicting the remaining ones—is appropriate. However, if the goal is to generalize across networks and learn the relationship between network features and edge generation times, the equivalence transformation becomes crucial.

To address this limitation, we reformulate the original task of predicting edge generation times into an equivalent task: predicting the relative temporal order of generation between any two edges in the network. This reformulation simplifies the prediction problem into a pairwise classification task, which is more robust and transferable across networks.

We rigorously prove the mathematical equivalence between these two tasks, with the final equivalence result expressed as:

| (1) |

where represents the pairwise prediction accuracy, and denotes the total number of edges in the network. The detailed proof is provided in Appendix -A.

Furthermore, as illustrated in Fig. 2, experimental results validate this equivalence in real-world networks. These results demonstrate that improving pairwise prediction accuracy directly contributes to reducing the overall prediction error in edge generation times. Equation 1 reveals that the overall sequence error scales inversely with the square root of the edge count, favoring networks with many edges.

This equivalence also yields an additional benefit: achieving a low RMSE in predicting edge generation times does not necessitate exceedingly high accuracy in pairwise temporal order predictions. This insight reduces the performance requirements for the underlying pairwise prediction model, enhancing the practical applicability of the approach.

To infer the complete temporal order of edge generation from pairwise relationships, we adopt the Borda algorithm. This algorithm aggregates pairwise predictions to construct a global sequence of edge generation times while ensuring consistency across predictions. A detailed explanation of the Borda algorithm, including its derivation and implementation, is provided in the Appendix -B.

III-B Mathematical Definition of the Task

In the study of complex networks, the generation time of edges is a critical factor influencing network evolution. Our prediction task aims to learn the relationship between network structure and edge generation time, given a temporal network. Specifically, we focus on how to infer the generation order of edges in the absence of time labels.

Our ultimate goal is to predict the generation time of each edge for a pure network structure. To simplify the task, we specify it as follows: for any two edges and in the network, we need to predict their generation order, determining which edge is generated first and which is generated later. By inferring the order of all edges, we can construct the overall generation sequence of edges in the network, thereby gaining a comprehensive understanding of the network’s evolutionary process.

1. Temporal Network Representation: Let the temporal network consist of a set of nodes and a set of edges . Each edge represents a connection between a pair of nodes and has an associated generation time .

2. Edge Generation Order: For any two edges and , we define the generation order relation as follows:

Here, indicates that edge is generated before edge , while indicates that the generation time of edge is later than or equal to that of edge .

3. Edge Generation Time Prediction: Our task can be expressed as a binary relation prediction problem, where the objective is to predict the edge generation order by learning the structural features of the network :

In this context, is the predicted generation order, and the function represents the model used to infer the generation order based on network structural features.

4. Construction of Edge Generation Sequence: Once the generation order for all edges is predicted, we can obtain the overall edge generation sequence , expressed as:

Here, are the indices of edges arranged according to the predicted generation order.

Through the above description and mathematical definitions, our task clearly demonstrates how to infer edge generation times based solely on structural information in the absence of time labels, thus providing robust support for the evolutionary prediction of complex networks.

III-C Framework for Comparative Paradigm-based Neural Network

The framework for predicting the order of two edges in a network is structured as follows. Initially, we utilize an embedding representation learning method to obtain a representation vector for each edge in the network. These embedding vectors are derived from 12 network features, which include the degree, clustering coefficient, betweenness centrality, common neighbors, random walk probability, minimum spanning tree connection, Jaccard coefficient, resource allocation index, Adamic-Adar index, shortest path length, PageRank, and average clustering coefficient [31, 32, 33]. These features are calculated based on the network’s adjacency matrix and provide a comprehensive structural description of each edge. Let us denote the edges as and , with their respective embedding vectors represented as and .

Each embedding vector is subsequently fed into a fully connected neural network comprising three layers. The architecture is defined as follows:

Input Layer: The first layer receives the input vectors and , which are concatenated to form a single input vector , where is the dimension of the embedding vectors.

Hidden Layer: The second layer is a hidden layer with a dimensionality of . This layer applies a linear transformation followed by a ReLU activation function, defined as:

where and are the weight matrix and bias vector for the hidden layer, respectively.

Output Layer: The output layer consists of two neurons that produce a scalar output and :

where and are the weights and biases for the output layer.

After obtaining the outputs and , we apply the softmax function to convert these into a probability distribution representing the likelihood that each edge was generated first:

These probabilities reflect the generation times of the edges, normalized to ensure that they sum to one.

The loss function is defined as the categorical cross-entropy loss between the predicted probabilities and the true labels , where indicates that occurs before :

Additionally, we incorporate regularization to mitigate overfitting. The regularization term is defined as:

where is the regularization strength. Thus, the final loss function to be minimized is:

III-D Limitations of Existing Method

Current methods for network evolution prediction rely on partial edge timestamps to train models and predict the temporal order of remaining edges. For instance, the Nature Communications [6] approach performs well on synthetic networks by learning from one fully timestamped network and predicting on another. However, its effectiveness diminishes on real-world networks due to the structural homogeneity of synthetic networks (e.g., Barabási–Albert [34], Popularity-similarity-optimization model [35], Fitness model [36]), which share scale-free properties. In contrast, real-world networks exhibit diverse and complex generative mechanisms, making learned models difficult to generalize across networks.

A more critical limitation arises in scenarios where no temporal information is available. In practice, collecting edge timestamps is often infeasible, leaving only the network’s topological structure. Existing methods, which depend on partial temporal annotations, fail to address the challenge of predicting temporal dynamics in completely untimestamped networks.

IV Method

IV-A Joint Training for Improved Transferability

To address the limitations of existing methods, we propose a joint training approach that combines multiple networks to train a single model, exposing it to diverse network types and improving its ability to generalize to unseen networks.

In Section V-I, we demonstrate why traditional methods struggle with transferability when trained on a single network type. Structural differences across networks lead to significant performance gaps, making these models unreliable on unseen data. Reformulating the prediction task—from predicting edge generation times to predicting pairwise edge order—mitigates this issue. Furthermore, Section V-C1 highlights the superior performance of our jointly trained model, which achieves consistent results on unseen networks.

Fig. 4 illustrates our approach. Solid circles represent existing networks, and current methods often exhibit instability, as gaps between networks hinder generalization. Joint training reduces these gaps by incorporating a diverse range of networks, enabling the model to learn more generalizable patterns.

To further enhance the model’s transferability, we generate augmented networks using diffusion models, represented by the small solid circles in Figure 4. These augmented networks expand the range of each original network, increasing coverage across network types and reducing gaps. The broader state space allows the model to encounter more diverse network structures during training, improving its robustness on unseen networks. The dashed circles in Figure 4 represent the combined sets of original and augmented networks, showcasing the scalability and adaptability of our approach.

In Section IV-B1, we detail the diffusion-based augmentation process and demonstrate its effectiveness in further improving the model’s performance.

IV-B Enhancing Network Evolution Prediction with Diffusion Model-Based Augmentation

As shown in Fig. 5, we propose an enhanced framework for network evolution prediction based on diffusion models. The core idea is that we have a limited number of temporal networks. By performing extensive sampling on each temporal network, we input the samples into the diffusion model to learn the underlying generation mechanism of the temporal networks. The diffusion model then generates an arbitrary number of augmented samples. Using a combination of a small number of real temporal networks and a large number of diffusion-augmented temporal networks, we input them into a comparative paradigm-based neural network (CPNN) for training. This approach results in a model with better transferability and stability. In practical applications, when a static network is input into the trained CPNN, we can predict the temporal order of pairs of edges. Further, by applying the Borda count algorithm, we can derive the overall edge order of the entire network, enabling the prediction of edge evolution processes in static networks.

IV-B1 Construction of Training Dataset for the Diffusion Model

The construction of the training dataset for our diffusion model is a crucial step in enhancing the edge order prediction task. Our approach begins with a labeled temporal network, which serves as the foundational dataset. The methodology can be outlined as follows:

Sampling Strategy: We employ a systematic sampling technique to generate multiple training network samples from the original labeled temporal network. Specifically, we start by sampling a subset of edges, beginning with 50% of the total edges and progressively increasing to include all edges in the network. This approach allows us to explore various network densities and their impacts on the learning process.

Creation of Diverse Samples: For each sampling iteration, we construct a new training network by retaining the sampled edges and discarding the remaining edges. This results in 100 distinct training network samples, each representing a unique configuration of the original temporal network. As shown in Fig. 5, we sampled networks. By varying the percentage of edges retained, we ensure a diverse representation of network structures, which is essential for robust model training.

IV-B2 Graph Denoising Diffusion Model

Based on the training data sampled from the real temporal network, we utilize the graph diffusion models to learn the distribution of the topology evolutions conditioned on the final topology structure. We refer to our model as the Diffusion Model for Generating Topology Evolution History, abbreviated as TopoEvoDiff. The graph diffusion models are adapted to ordered edges diffusion based on the final topology structure, where the structural features are encoded into the node features, such as node embeddings learned through structural learning.

The graph diffusion model consists of two main procedures: the forward diffusion process and the reverse denoising process . In the forward diffusion process, the network orders are disrupted by Gaussian noise step by step and reach to pure noise. The forward diffusion process is utilized to construct the training data for the denoising networks used in the reverse denoising process. The computation is defined as: should mean the timestamps and should mean the indices of the edges

| (2) |

where represents the diffusion steps, denotes the normal distribution, indicates the noise level at step , and refers to the identity matrix. The reverse denoising process is to recover the origin edge orders from the pure noise, with the guidance of the final topology structure. In each step, the noised to be removed from the noisy data is predicted by the denoising networks. The denoising networks used in the reverse process are graph transformers [37]. The construction of the prediction model during the denoising process is shown in Fig. 6. In the denoising process, node features serve as guidance to direct the data generation, remaining unchanged throughout. In each step of the denoising process, the noisy edges and the node features used as guidance are fed into the graph transformer as the node inputs and edge inputs, and the small noise that needs to be removed from the noisy edges in a single step is output. The computation of the reverse denoising process is defined as:

| (3) |

where

| (4) |

, and . Here, is the noise predicted by based on the noisy state , diffusion step and the spatial characteristics of the topology of the node

The denoising networks are trained to minimize the reconstruction loss between the predicted noise and the true noise. The loss function we adopted is the same as denoising diffusion probabilistic models (DDPMs) [38], which is the mean squared error between the predicted noise and the true noise. The loss function is defined as:

| (5) |

where denotes the norm.

When the model is trained, we can sample the temporal networks with similar distribution as the original dataset by the graph diffusion model. First, a pure Gaussian noise is sampled and then the denoising networks iteratively predict the noise to be removed, and the ordered edges will be obtained from the weights of the sampled network gradually. The generated temporal networks are used to augment the original dataset for the edge order prediction model.

V Experiments

V-A Datasets

This study employs 10 real-world datasets from five distinct categories: Protein-Protein Interaction (PPI) Networks, World Trade Web (WTW), Collaboration Networks, Animal Networks, and Transportation Networks. These datasets, covering diverse domains, provide a solid foundation for evaluating the proposed methods. The details of the datasets are summarized in Table I, and the descriptions are as follows:

| Network type | Network name | N | M | S | Refs | ||

| Protein-Protein Interaction (PPI) | Fungi | 2,144 | 6,000 | 3,132,599 | 0.174 | 3 | [39] |

| Human | 1,891 | 2,840 | 1,467,231 | 0.364 | 3 | ||

| Fruit | 461 | 598 | 58,768 | 0.329 | 3 | ||

| Worm | 485 | 438 | 57,980 | 0.606 | 4 | ||

| Bacteria | 873 | 2,321 | 357,730 | 0.133 | 2 | ||

| World Trade Web | WTW | 187 | 3,249 | 1,799,895 | 0.341 | 17 | [40] |

| Collaboration | Thermody | 158 | 228 | 25,668 | 0.992 | 131 | [41] |

| Animal | Weaver | 445 | 1,332 | 762,058 | 0.860 | 8 | [42] |

| Transportation | Airplane | 48 | 125 | 1,291 | 0.167 | 5 | [43] |

| Coach | 1,874 | 2,666 | 84,495 | 0.024 | 4 |

-

•

PPI Networks: This category includes five networks: Fungi, Human, Fruit, Worm, and Bacteria. Each node represents a protein, while edges denote interactions between proteins. The datasets capture the first occurrence of each edge to distinguish the order in which interactions are established. These networks are particularly valuable for studying temporal evolution in biological systems.

-

•

WTW: The WTW dataset represents global trade relations between countries from 1997 to 2013. Nodes correspond to countries, and edges signify bilateral trade relationships. The generation time of an edge reflects when trade between two countries was first recorded. This dataset highlights the evolving dynamics of global trade.

-

•

Collaboration Networks: We use the Thermody collaboration network, constructed from publication data of the American Physical Society. Nodes represent researchers, and edges denote coauthorship relationships. The earliest collaboration between two authors determines the generation time of an edge. This dataset provides insight into academic collaboration patterns.

-

•

Animal Networks: The Weaver network is based on 10 months of social interaction data from colonies of weaver birds in South Africa. Nodes represent individual birds, and edges denote interactions. To improve data density and reduce sparsity, the raw data is aggregated into 8 snapshots, capturing the temporal dynamics of social behavior.

-

•

Transportation Networks: Two transportation networks are used: Airplane and Coach. In the Airplane network, nodes are airports, and edges represent flight connections, while in the Coach network, nodes are coach stations, and edges denote transport links. The generation time of an edge is the earliest recorded connection between two nodes. The data is aggregated into daily snapshots, resulting in 5 snapshots for Airplane and 4 snapshots for Coach.

These datasets, spanning diverse domains such as biology, economics, collaboration, animal, and transportation, provide comprehensive support for the experiments in this study.

V-B Baseline Models

To evaluate the effectiveness of our proposed method, we compare it against a set of state-of-the-art baseline models. These models are designed to explore the relationship between node, edge, and network features and the edge weights within a network. The baseline models fall into three categories and are described as follows:

-

•

Random Forest (RF) [44]: A tree-based machine learning algorithm that uses node and edge-level features to predict edge weights. Its robustness and ability to handle non-linear feature interactions make it a strong benchmark.

-

•

TIGGER [22]: A temporal generative model that combines synthetic network generation with node embeddings to capture dynamic relationships and predict edge weights over time.

-

•

NetGAN [45]: A generative adversarial network (GAN)-based model designed to recreate realistic network structures by learning the patterns of walks in the graph. The model is adapted here to generate networks with weighted edges.

-

•

Digress [46]: A state-of-the-art method for network generation, utilizing a discrete denoising diffusion model to directly operate on graph structures while preserving their sparsity and topology. By employing a Markovian noise process and a graph transformer for reconstruction, Digress sets a new benchmark in generating large-scale graphs.

These baselines encompass a wide range of methodologies, from classical physics-based models to advanced deep learning and generative approaches, providing a comprehensive basis for comparison. The detailed parameter settings for these models are presented in the supplementary material.

| Train / Test | Airplane | Fruit | Thermody | Weaver | Worm | Bacteria | Coach | Fungi | Human | WTW | Average |

| Average (Split) | 0.7641 | 0.7245 | 0.5513 | 0.6696 | 0.6514 | 0.7264 | 0.8462 | 0.7104 | 0.6902 | 0.6417 | 0.6978 |

| 0.9828 | 0.8495 | 0.4957 | 0.8201 | 0.7020 | 0.9197 | 0.8663 | 0.8938 | 0.7438 | 0.8245 | 0.8098 | |

| 0.9761 | 0.8290 | 0.7420 | 0.7536 | 0.6119 | 0.8940 | 0.8971 | 0.8246 | 0.7235 | 0.7880 | 0.8030 | |

| 0.9772 | 0.8112 | 0.7218 | 0.8350 | 0.6579 | 0.8205 | 0.8034 | 0.8573 | 0.7711 | 0.8098 | 0.8065 | |

| 0.9775 | 0.8336 | 0.4924 | 0.8452 | 0.7352 | 0.7656 | 0.9124 | 0.8654 | 0.7602 | 0.7729 | 0.7960 | |

| 0.9772 | 0.8119 | 0.7030 | 0.8346 | 0.7329 | 0.8642 | 0.9133 | 0.8655 | 0.7551 | 0.8133 | 0.8271 |

| Train / Test | Bacteria | Coach | Fungi | Human | WTW | Average |

| 0.8642 (18.97+%) | 0.9133 (7.93+%) | 0.8655 (+21.83%) | 0.7551 (+9.40%) | 0.8133 (+26.74%) | +16.98% | |

| + | 0.8873 (+22.15%) | 0.9416 (+11.27%) | 0.8956 (+26.07%) | 0.7823 (+13.34%) | 0.8172 (+27.35%) | +20.04% |

| + | 0.9213 (+26.83%) | 0.9554 (+12.90%) | 0.9128 (+28.49%) | 0.7875 (+14.10%) | 0.8211 (+27.96%) | +22.06% |

| + | 0.9336 (+28.52%) | 0.9538 (+12.72%) | 0.9145 (+28.73%) | 0.7845 (+13.66%) | 0.8249 (+28.55%) | +22.44% |

| + | 0.9360 (+28.85%) | 0.9609 (+13.55%) | 0.9116 (+28.32%) | 0.7837 (+13.55%) | 0.7895 (+23.03%) | +21.46% |

| + | 0.9138 (+25.80%) | 0.9610 (+13.57%) | 0.9101 (+28.11%) | 0.7882 (+14.20%) | 0.8158 (+27.13%) | +21.76% |

| + | 0.9063 (+24.77%) | 0.9596 (+13.40%) | 0.9071 (+27.69%) | 0.7953 (+15.23%) | 0.8159 (+27.15%) | +21.65% |

V-C Enhancing Model Performance through Joint Training and Data Augmentation

V-C1 Impact of Joint Training

Table II provides a detailed comparison of model performance under different training scenarios, revealing the benefits of joint training. The first row of the table, labeled as Average (Split), shows the average accuracy of models trained on each individual network and tested across all networks. This serves as a baseline for understanding how single-network training performs in isolation.

Rows labeled through represent models trained on progressively larger combinations of networks. , , , , and represent networks Airplane, Fruit, Thermody, Weaver, and Worm, respectively. denotes the union of networks Airplane and Fruit. As more networks are incorporated, the model’s performance becomes increasingly stable, with the worst-case accuracy improving significantly. For instance, the worst accuracy of is 0.4957, whereas achieves 0.7030. This indicates that adding networks reduces variability and enhances robustness across test scenarios. On average, the fused training achieves 0.8133, demonstrating an improvement over the Average (Split) of 0.6978 from separate training.

Interestingly, the addition of seemingly unrelated networks, such as Thermody, not only improves accuracy but also stabilizes the model. As shown in Table V, when Thermody is trained in isolation, its predictions on other networks consistently exhibit poor performance, with all accuracies below 0.6. However, comparing the results of and , incorporating Thermody into the training process enhances predictions on Bacteria and WTW. This highlights the complementary value of diverse networks, demonstrating that even those with poor standalone transferability can contribute critical information to improve both model stability and overall performance when included in joint training.

Overall, joint training proves to be a powerful approach, leveraging network diversity to achieve robust and accurate predictions. This strategy mitigates the limitations of single-network training and underscores the importance of comprehensive data integration in predictive tasks.

V-C2 Benefits of Data Augmentation

While joint training has proven to enhance model accuracy, particularly on unseen networks, we sought to explore further improvements through the use of additional training samples. However, obtaining networks with edge generation timestamps is a labor-intensive and resource-consuming process. To overcome this limitation, we employed generative methods to create synthetic networks, enabling us to generate an arbitrary number of samples without the acquisition difficulties associated with real-world data.

The experimental results, as shown in Fig. 7, reveal that adding generated networks does not improve performance when predicting the withheld parts of the training networks themselves. This suggests that when sufficient real edges are already present, adding synthetic edges provides no additional advantage. Importantly, the performance does not degrade either, remaining consistent despite the augmented training data. In Table II, the results on the temporal networks Airplane, Fruit, Thermody, Weaver, and Worm, which are included in the training set, are consistent with those of the . Therefore, we just provide a detailed presentation of the performance on unseen static networks. Each training network generates 100 corresponding augmented networks. During integrated training, only the edges absent in the original training network are retained for training.

The benefits of data augmentation become apparent when evaluating the model’s accuracy on unseen networks. Models trained with augmented samples from Airplane, Fruit, and Worm demonstrated significant accuracy improvements on previously unseen networks. However, augmenting samples from Thermody did not yield similar gains. This is likely due to the inherent structural dissimilarity between Thermody and other networks, which may cause the model to deviate from generalizable patterns and overfit to the unique characteristics of Thermody.

To further leverage the advantages of augmentation, we combined the augmented samples from Airplane, Fruit, and Worm for training. The results, shown in Fig. 8, indicate that combining augmented samples leads to further performance improvements. Specifically, combining Airplane and Fruit, Fruit and Worm, and all three networks together, consistently improved accuracy. The improvement magnitudes are detailed in Fig. 8. The specific numerical results are presented in Table II, demonstrating the effectiveness of augmentation. Notably, as shown in Table II, fused training achieves a 16.98% improvement, while data augmentation provides an additional 5.46% boost, resulting in a total improvement of 22.44%. Additionally, to further validate the effectiveness of our augmentation strategy, we conducted experiments not only under the fused training model but also with single-network training, where one original network was paired with an augmented network. For details, please refer to Appendix -C.

These findings demonstrate the potential of data augmentation to enhance model performance, particularly on unseen networks, while highlighting the importance of selecting networks with complementary structures for augmentation to maximize its effectiveness.

V-D Performance of Baseline Models with Augmentation.

| Model / Network | Bacteria | Coach | Fungi | Human | WTW |

| 0.8642 | 0.9133 | 0.8655 | 0.7551 | 0.8133 | |

| RF | 0.8409 | 0.9203 | 0.8745 | 0.7645 | 0.8199 |

| TIGGER | 0.9051 | 0.9402 | 0.8977 | 0.7745 | 0.8215 |

| NetGAN | 0.8945 | 0.9121 | 0.8883 | 0.7708 | 0.8202 |

| Digress | 0.9089 | 0.9345 | 0.9047 | 0.7754 | 0.8205 |

| TopoEvoDiff | 0.9336 | 0.9538 | 0.9145 | 0.7845 | 0.8249 |

To further validate the effectiveness of our augmentation strategy, we applied it to networks generated using alternative baseline methods and compared the results. Specifically, synthetic networks were generated through baseline approaches, and these augmented samples were then combined with the original training data for joint training. The results revealed that, although the augmentation increased the diversity of the training samples, the performance improvements achieved by the baseline methods were limited compared to those observed with our proposed approach.

In this experiment, five real networks were used to train the generation models, and each trained model generated 100 augmented networks. To maximize the performance gains, we selected the augmented network set from the five generated options that brought the most significant improvement when used for augmentation. Among these, the Worm network generated by our method led to the largest performance enhancement. The augmented baseline models showed some performance improvement compared to their non-augmented counterparts. However, the magnitude of the improvement was notably smaller than that achieved with our proposed method. Across all test networks, the accuracy showed modest gains, indicating that while the additional synthetic samples did contribute to enhancing the predictive capabilities of the baseline models, the improvements were not as significant as those observed with our augmentation strategy. This suggests that, although the baseline methods benefited from augmentation, they were less effective in capturing the structural and temporal dynamics of the networks, resulting in smaller performance gains compared to our approach.

V-E Comparison of Generated Network Quality

| Model / Network | Airplane | Fruit | Thermody | Weaver | Worm |

| RF | 0.6547 | 0.6521 | 0.5901 | 0.7504 | 0.6179 |

| TIGGER | 0.6398 | 0.6348 | 0.5674 | 0.7311 | 0.6039 |

| NetGAN | 0.6478 | 0.6413 | 0.5802 | 0.7402 | 0.6085 |

| Digress | 0.6403 | 0.6294 | 0.5758 | 0.7258 | 0.6038 |

| TopoEvoDiff | 0.5814 (+9.13%) | 0.5408 (+14.07%) | 0.5540 (+2.37%) | 0.6863 (+5.44%) | 0.5605 (+7.17%) |

To evaluate the effectiveness of our augmentation method compared to baseline approaches, we analyzed the discrepancy between generated networks and their corresponding real networks. The primary metric used for this evaluation was the Normalized Root Mean Square Error (NRMSE), which measures the difference between the generated edge generation times and the real timestamps.

Table IV presents the NRMSE results for networks generated using our method and the baseline approaches. Our method, which learns directly from real networks, consistently achieves lower NRMSE values compared to the baselines. This indicates that the generated networks more accurately replicate the temporal dynamics of edge generation observed in the real data. Baseline methods exhibit higher NRMSE values, suggesting a lack of fidelity in capturing the nuances of real network evolution. The inability to closely match the temporal properties of real networks limits the utility of baseline-generated samples in enhancing model performance.

V-F Limited Transferability of Single-Network Trained Models

| Train / Test | Airplane | Fruit | Thermody | Weaver | Worm | Bacteria | Coach | Fungi | Human | WTW | Average |

| Airplane | 0.9915 | 0.8034 | 0.5029 | 0.8149 | 0.7217 | 0.8619 | 0.9773 | 0.9011 | 0.7793 | 0.8498 | 0.8180 |

| Fruit | 0.7249 | 0.8589 | 0.5066 | 0.6293 | 0.5369 | 0.8777 | 0.8947 | 0.7374 | 0.7374 | 0.5827 | 0.7437 |

| Thermody | 0.5524 | 0.4787 | 0.7921 | 0.4827 | 0.5529 | 0.2632 | 0.5968 | 0.4298 | 0.4838 | 0.5469 | 0.5179 |

| Weaver | 0.9427 | 0.7992 | 0.4909 | 0.8704 | 0.6930 | 0.9017 | 0.9420 | 0.8718 | 0.7794 | 0.8135 | 0.8115 |

| Worm | 0.6090 | 0.6824 | 0.4643 | 0.5509 | 0.7529 | 0.7276 | 0.8207 | 0.6288 | 0.6712 | 0.4159 | 0.6326 |

| Average (Split) | 0.7641 | 0.7245 | 0.5513 | 0.6696 | 0.6514 | 0.7264 | 0.8462 | 0.7104 | 0.6902 | 0.6417 | / |

Table V illustrates the performance of models trained on one network and tested on others. The results highlight two key findings: First, models perform well on the same network they are trained on. Diagonal entries, such as Airplane (0.9915) and Fruit (0.8589), demonstrate the ability to accurately capture the relationship between network features and edge generation times within the same network. Second, the models often fail when transferred to different networks. As seen in the underlined results, such as Thermody on Fruit (0.4787) and Fruit on Worm (0.5369), accuracies below 0.6 indicate poor transfer performance in binary classification tasks. This inconsistency reflects the complexity of real-world network mechanisms and the challenge of determining whether two networks are compatible for transfer.

In summary, models trained on a single network are unstable when applied to new networks. While some networks show decent transfer performance, others exhibit significant failures, highlighting the limitations of single-network training for generalizable predictions.

V-G Similarity Between Augmented and Original Networks

This section evaluates the structural and dynamic similarity between augmented and the original networks. We employ various metrics, including degree distribution, eigenvector centrality, and clustering coefficient distribution, to assess similarity. The analysis reveals that the degree distributions of the networks generated by TopoEvoDiff closely align with those of the original networks. This indicates a strong preservation of structural characteristics. Furthermore, the eigenvector centrality measures show a robust correlation between nodes’ importance in both networks, confirming the retention of critical structural roles. Lastly, clustering coefficient distributions closely match between the generated and original networks, reflecting consistent local clustering tendencies.

Fig. 9 highlights the effectiveness of our approach, showing that the enhanced networks generated by TopoEvoDiff exhibit structures more similar to the original networks compared to those produced by the representative TIGGER method. The top graphs present results for the Airplane network, while the bottom graphs correspond to the Thermody network. This demonstrates the ability of our method to generate augmented networks with greater structural fidelity.

V-H Effect of Training Sample Size on Prediction Accuracy

The results of predicting edge generation timestamps within a single network reveal an important finding: high prediction accuracy can be achieved using only a small subset of the network’s edges. As shown in Fig. 10, utilizing just 20% of a network’s edges for training yields strong performance, and increasing the number of training edges does not lead to significant improvements. This indicates that beyond a certain threshold, the additional training samples do not provide substantial value for improving accuracy within the same network.

This observation further supports the uniqueness of our augmentation strategy. The improvement in model performance on unseen networks is not merely a result of increasing the number of training samples. Instead, it demonstrates that the augmented samples generated through our method contribute meaningful and diverse information, enabling the model to generalize effectively across different networks. This contrasts with the plateauing effect observed when merely increasing training edges from the same network, highlighting the distinctive value of our augmentation approach.

V-I Benefits of Pairwise Relationship Prediction

Pairwise relationship prediction addresses the challenges of network heterogeneity and facilitates cross-network generalization by normalizing relationships across networks. This approach is crucial for transferring learned models between networks with diverse structural and temporal characteristics, a task that direct prediction often fails to achieve.

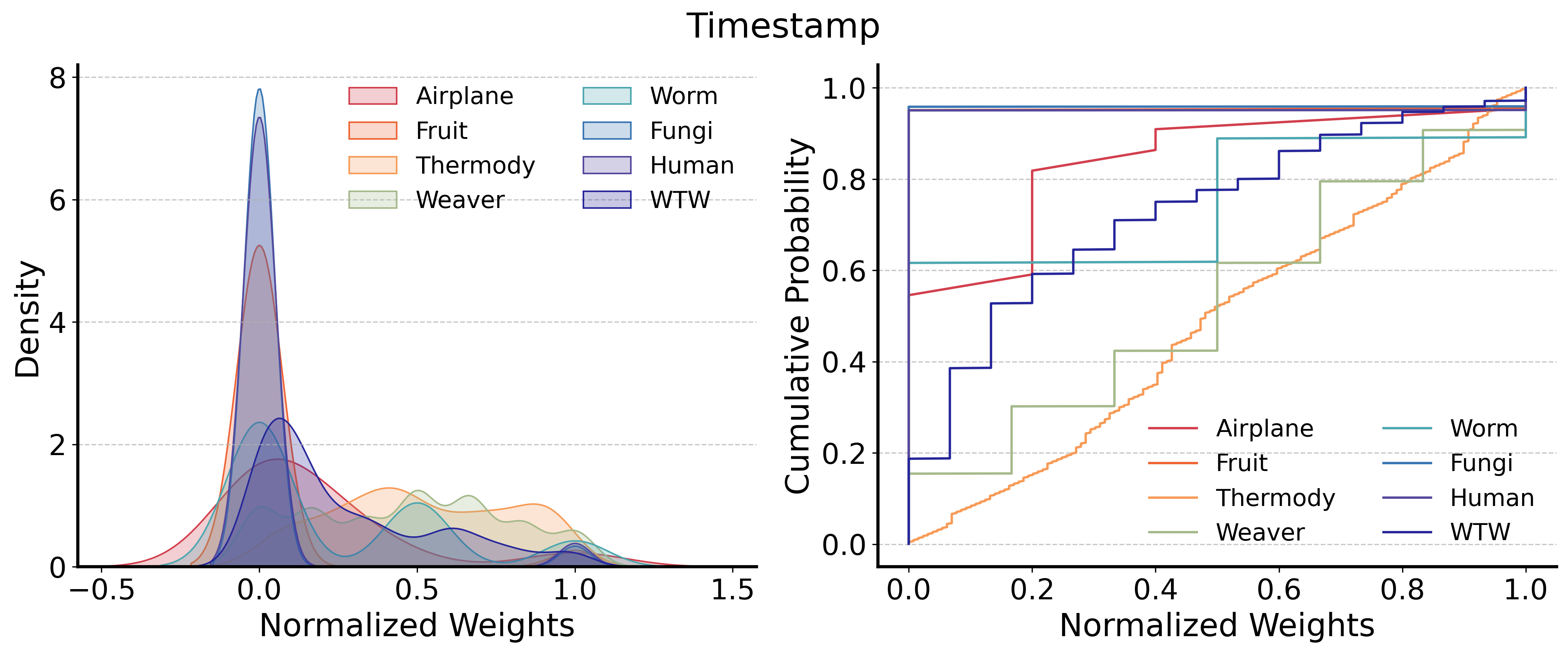

Direct prediction struggles due to significant differences in edge timestamp distributions and feature characteristics across networks. For instance, as shown in Fig. 11, edge timestamps in Thermody are evenly distributed, whereas other networks exhibit skewed distributions. Similarly, clustering coefficient distributions vary greatly, hindering generalization.

Some network features are more transferable. For example, the common neighbor count exhibits similar distributions across networks, as demonstrated in Fig. 11. This aligns with our experimental findings, where common neighbor count proves highly effective for learning the relationship between network features and edge generation timestamps. Furthermore, by shifting to pairwise learning of feature-edge relationships, normalization is achieved within the network itself, ensuring that the models learned through this method are inherently transferable across networks.

VI Conclusion

Our work focuses on predicting edge generation times from given network structures, which aids in understanding network evolution and forecasting future developmental trends. Existing methods are capable of predicting the edge generation sequence for the remaining edges of a temporal network when part of the edges’ generation process is known. However, these methods often fail when applied to static networks. In real-world scenarios, a significant number of static networks, which lack timestamps, need to have their evolutionary processes reconstructed. To address this issue, we use the CPNN framework, which leverages the fusion of multiple temporal networks to train a model that can associate network structure with edge generation times across different networks. This approach achieves stable and improved accuracy in the prediction of evolution for unseen static networks. Furthermore, to further enhance the model’s stability and performance, we adopt the TopoEvoDiff generation model to produce additional temporal networks. By integrating real temporal networks with the generated ones for training, we achieve further improvements in the model’s prediction accuracy.

References

- [1] S. Boccaletti, V. Latora, Y. Moreno, M. Chavez, and D.-U. Hwang, “Complex networks: Structure and dynamics,” Physics reports, vol. 424, no. 4-5, pp. 175–308, 2006.

- [2] H. Liao, M. S. Mariani, M. Medo, Y.-C. Zhang, and M.-Y. Zhou, “Ranking in evolving complex networks,” Physics Reports, vol. 689, pp. 1–54, 2017.

- [3] A.-L. Barabâsi, H. Jeong, Z. Néda, E. Ravasz, A. Schubert, and T. Vicsek, “Evolution of the social network of scientific collaborations,” Physica A: Statistical mechanics and its applications, vol. 311, no. 3-4, pp. 590–614, 2002.

- [4] B. Wang, Y. Zhang, X. Wang, P. Wang, Z. Zhou, L. Bai, and Y. Wang, “Pattern expansion and consolidation on evolving graphs for continual traffic prediction,” in Proceedings of the 29th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, 2023, pp. 2223–2232.

- [5] Z. Seferbekova, A. Lomakin, L. R. Yates, and M. Gerstung, “Spatial biology of cancer evolution,” Nature Reviews Genetics, vol. 24, no. 5, pp. 295–313, 2023.

- [6] J. Wang, Y.-J. Zhang, C. Xu, J. Li, J. Sun, J. Xie, L. Feng, T. Zhou, and Y. Hu, “Reconstructing the evolution history of networked complex systems,” Nature Communications, vol. 15, no. 1, p. 2849, 2024.

- [7] J. Ding, C. Liu, Y. Zheng, Y. Zhang, Z. Yu, R. Li, H. Chen, J. Piao, H. Wang, J. Liu et al., “Artificial intelligence for complex network: Potential, methodology and application,” arXiv preprint arXiv:2402.16887, 2024.

- [8] C. Liu, F. Xu, C. Gao, Z. Wang, Y. Li, and J. Gao, “Deep learning resilience inference for complex networked systems,” Nature Communications, vol. 15, no. 1, p. 9203, 2024.

- [9] B. Jin, G. Liu, C. Han, M. Jiang, H. Ji, and J. Han, “Large language models on graphs: A comprehensive survey,” IEEE Transactions on Knowledge and Data Engineering, 2024.

- [10] S. Zhang, Z. Hu, A. Subramonian, and Y. Sun, “Motif-driven contrastive learning of graph representations,” IEEE Transactions on Knowledge and Data Engineering, 2024.

- [11] H. Li, C. Li, K. Feng, Y. Yuan, G. Wang, and H. Zha, “Robust knowledge adaptation for dynamic graph neural networks,” IEEE Transactions on Knowledge and Data Engineering, 2024.

- [12] M. Karoński and A. Ruciński, “The origins of the theory of random graphs,” The Mathematics of Paul Erdös I, pp. 311–336, 1997.

- [13] D. J. Watts and S. H. Strogatz, “Collective dynamics of ‘small-world’networks,” nature, vol. 393, no. 6684, pp. 440–442, 1998.

- [14] P. Holme and J. Saramäki, “Temporal networks,” Physics reports, vol. 519, no. 3, pp. 97–125, 2012.

- [15] M. Karsai, K. Kaski, A.-L. Barabási, and J. Kertész, “Universal features of correlated bursty behaviour,” Scientific reports, vol. 2, no. 1, p. 397, 2012.

- [16] N. Masuda, M. A. Porter, and R. Lambiotte, “Random walks and diffusion on networks,” Physics reports, vol. 716, pp. 1–58, 2017.

- [17] P. J. Mucha, T. Richardson, K. Macon, M. A. Porter, and J.-P. Onnela, “Community structure in time-dependent, multiscale, and multiplex networks,” science, vol. 328, no. 5980, pp. 876–878, 2010.

- [18] B. Millidge, M. Tang, M. Osanlouy, N. S. Harper, and R. Bogacz, “Predictive coding networks for temporal prediction,” PLOS Computational Biology, vol. 20, no. 4, p. e1011183, 2024.

- [19] G. Rossetti and R. Cazabet, “Community discovery in dynamic networks: a survey,” ACM computing surveys (CSUR), vol. 51, no. 2, pp. 1–37, 2018.

- [20] D. Zhou, L. Zheng, J. Han, and J. He, “A data-driven graph generative model for temporal interaction networks,” in Proceedings of the 26th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, 2020, pp. 401–411.

- [21] G. Zeno, T. La Fond, and J. Neville, “Dymond: Dynamic motif-nodes network generative model,” in Proceedings of the Web Conference 2021, 2021, pp. 718–729.

- [22] S. Gupta, S. Manchanda, S. Bedathur, and S. Ranu, “Tigger: Scalable generative modelling for temporal interaction graphs,” in Proceedings of the AAAI Conference on Artificial Intelligence, vol. 36, no. 6, 2022, pp. 6819–6828.

- [23] K. Zhang, Q. Chen, J. Chen, S. He, F. Li, and Z. Zhou, “A multi-module generative adversarial network augmented with adaptive decoupling strategy for intelligent fault diagnosis of machines with small sample,” Knowledge-Based Systems, vol. 239, p. 107980, 2022.

- [24] M. Abdollahzadeh, T. Malekzadeh, C. T. Teo, K. Chandrasegaran, G. Liu, and N.-M. Cheung, “A survey on generative modeling with limited data, few shots, and zero shot,” arXiv preprint arXiv:2307.14397, 2023.

- [25] R. Zhang, T. Che, Z. Ghahramani, Y. Bengio, and Y. Song, “Metagan: An adversarial approach to few-shot learning,” Advances in neural information processing systems, vol. 31, 2018.

- [26] Y. Fu, C. Chen, Y. Qiao, and Y. Yu, “Dreamda: Generative data augmentation with diffusion models,” arXiv preprint arXiv:2403.12803, 2024.

- [27] C. Vignac, I. Krawczuk, A. Siraudin, B. Wang, V. Cevher, and P. Frossard, “Digress: Discrete denoising diffusion for graph generation,” arXiv preprint arXiv:2209.14734, 2022.

- [28] C. Niu, Y. Song, J. Song, S. Zhao, A. Grover, and S. Ermon, “Permutation invariant graph generation via score-based generative modeling,” in International Conference on Artificial Intelligence and Statistics. PMLR, 2020, pp. 4474–4484.

- [29] J. Jo, S. Lee, and S. J. Hwang, “Score-based generative modeling of graphs via the system of stochastic differential equations,” in International Conference on Machine Learning. PMLR, 2022, pp. 10 362–10 383.

- [30] C. Liu, J. Ding, Y. Song, and Y. Li, “Tdnetgen: Empowering complex network resilience prediction with generative augmentation of topology and dynamics,” in Proceedings of the 30th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, 2024, pp. 1875–1886.

- [31] L. Lü and T. Zhou, “Link prediction in complex networks: A survey,” Physica A: statistical mechanics and its applications, vol. 390, no. 6, pp. 1150–1170, 2011.

- [32] S. Hesamipour and M. A. Balafar, “A new method for detecting communities and their centers using the adamic/adar index and game theory,” Physica A: Statistical Mechanics and its Applications, vol. 535, p. 122354, 2019.

- [33] M. Bianchini, M. Gori, and F. Scarselli, “Inside pagerank,” ACM Transactions on Internet Technology (TOIT), vol. 5, no. 1, pp. 92–128, 2005.

- [34] A.-L. Barabási, R. Albert, and H. Jeong, “Mean-field theory for scale-free random networks,” Physica A: Statistical Mechanics and its Applications, vol. 272, no. 1-2, pp. 173–187, 1999.

- [35] F. Papadopoulos, C. Psomas, and D. Krioukov, “Network mapping by replaying hyperbolic growth,” IEEE/ACM Transactions on Networking, vol. 23, no. 1, pp. 198–211, 2014.

- [36] G. Bianconi and A.-L. Barabási, “Competition and multiscaling in evolving networks,” Europhysics letters, vol. 54, no. 4, p. 436, 2001.

- [37] V. P. Dwivedi and X. Bresson, “A generalization of transformer networks to graphs,” arXiv preprint arXiv:2012.09699, 2020.

- [38] J. Ho, A. Jain, and P. Abbeel, “Denoising diffusion probabilistic models,” Advances in neural information processing systems, vol. 33, pp. 6840–6851, 2020.

- [39] Y. Jin, D. Turaev, T. Weinmaier, T. Rattei, and H. A. Makse, “The evolutionary dynamics of protein-protein interaction networks inferred from the reconstruction of ancient networks,” PloS one, vol. 8, no. 3, p. e58134, 2013.

- [40] G. García-Pérez, M. Boguñá, A. Allard, and M. Serrano, “The hidden hyperbolic geometry of international trade: World trade atlas 1870–2013,” Scientific reports, vol. 6, no. 1, pp. 1–10, 2016.

- [41] Y. Hu, S. Havlin, and H. A. Makse, “Conditions for viral influence spreading through multiplex correlated social networks,” Physical Review X, vol. 4, no. 2, p. 021031, 2014.

- [42] R. Rossi and N. Ahmed, “The network data repository with interactive graph analytics and visualization,” in Proceedings of the AAAI conference on artificial intelligence, vol. 29, no. 1, 2015.

- [43] R. Gallotti and M. Barthelemy, “The multilayer temporal network of public transport in great britain,” Scientific data, vol. 2, no. 1, pp. 1–8, 2015.

- [44] N. Pourebrahim, S. Sultana, A. Niakanlahiji, and J.-C. Thill, “Trip distribution modeling with twitter data,” Computers, Environment and Urban Systems, vol. 77, p. 101354, 2019.

- [45] A. Bojchevski, O. Shchur, D. Zügner, and S. Günnemann, “Netgan: Generating graphs via random walks,” in International conference on machine learning. PMLR, 2018, pp. 610–619.

- [46] C. Vignac, I. Krawczuk, A. Siraudin, B. Wang, V. Cevher, and P. Frossard, “Digress: Discrete denoising diffusion for graph generation,” in Proceedings of the 11th International Conference on Learning Representations, 2023.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/78331bed-e8c5-469d-9900-6c9b6e0526e0/EnXU.jpg) |

En Xu received the BS and PhD degrees in Computer Science and Technology from Northwestern Polytechnical University, Xi’an, China, in 2018 and 2023, respectively. He was a postdoctoral research fellow at Hong Kong Baptist University from 2023 to 2024 and is currently a postdoctoral research fellow with the Department of Electronic Engineering, Tsinghua University, Beijing, China. His research interests include the predictability of time series and complex networks, with a current focus on AI for complex networks. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/78331bed-e8c5-469d-9900-6c9b6e0526e0/CanRong.jpg) |

Can Rong received the MEng degree in software engineering from Peking University in 2017. He is currently working toward the PhD degree with the Department of Electronic Engineering of Tsinghua University, advised by Prof. Yong Li. His research areas include urban computing and spatio-temporal data mining. He has recently been working on how to apply graph neural networks to model the interactive characteristics of regions in cities. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/78331bed-e8c5-469d-9900-6c9b6e0526e0/JingtaoDing.jpg) |

Jingtao Ding received the BS degrees in electronic engineering and the PhD degree in electronic engineering from Tsinghua University, Beijing, China, in 2015 and 2020, respectively. He is currently a postdoctoral research fellow with the Department of Electronic Engineering, Tsinghua University. His research interests include mobile computing, spatiotemporal data mining and user behavior modeling. He has more than 60 publications in journals and conferences such as IEEE Transactions on Knowledge and Data Engineering, ACM Transactions on Information Systems, KDD, NeurIPS, WWW, ICLR, SIGIR, IJCAI, etc. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/78331bed-e8c5-469d-9900-6c9b6e0526e0/YongLi.png) |

Yong Li (Senior Member, IEEE) received the PhD degree in electronic engineering from Tsinghua University in 2012. He is currently a tenured professor with the Department of Electronic Engineering, Tsinghua University. He has authored or coauthored more than 100 papers on first-tier international conferences and journals, including KDD, WWW, UbiComp, SIGIR, AAAI, IEEE Transactions on Knowledge and Data Engineering, IEEE Transactions on Mobile Computing and his papers have total citations more than 28,000. His research interests include machine learning and big data mining, particularly, automatic machine learning and spatial-temporal data mining for urban computing, recommender systems, and knowledge graphs. He was the general chair, the TPC chair, an SPC or a TPC member for several international workshops and conferences. He is on the editorial board of two IEEE journals. Among them, 10 are ESI Highly Cited Papers in computer science, and five were the recipient of conference Best Paper (run-up) awards. |

-A Equivalence Between Predicting Edge Generation Times and Pairwise Orderings

In this section, we demonstrate the equivalence between predicting the exact generation times of network edges and predicting their pairwise order of generation. Consider a network with edges generated sequentially. The normalized generation time sequence is denoted as , where represents the normalized position of edge in the sequence, with larger values indicating later generation times. The goal is to reconstruct the sequence , either by directly estimating or by predicting the pairwise order of edges and deriving from these comparisons.

For a pair of edges and , the pairwise score is defined as:

where denotes the probability of correctly predicting the order of a pair of edges. The expected value and variance of are given by:

Using the pairwise scores, the total score for edge is calculated as:

The expected score of edge is determined by summing its comparisons with all other edges. Specifically, comparisons with the first edges contribute , while comparisons with the remaining edges contribute . The total expected score is therefore:

This expression shows that is a linear function of the edge index . The scores are evenly distributed over the interval , allowing to serve as a mean-field approximation for ranking edges. The normalized position of edge in the reconstructed sequence can be calculated as:

The variance of can be derived as:

and the standard deviation is:

The expected value of matches the true value , demonstrating that the reconstructed sequence is unbiased:

The overall theoretical error in reconstructing the sequence is equivalent to the standard deviation of the position estimates:

This theoretical error decreases as the pairwise accuracy approaches 1 or as the number of edges increases. However, the error diverges as approaches 0.5, emphasizing the importance of achieving reliable pairwise predictions. This equivalence highlights that predicting pairwise order is sufficient to reconstruct the temporal sequence of edges, offering a simpler yet equally effective alternative to directly predicting generation times.

-B Reconstructing Edge Generation Order

The following algorithm reconstructs the global edge generation order from pairwise comparison results. The pairwise comparison matrix contains the probabilities , where indicates the likelihood that edge was generated before edge . The algorithm computes a score for each edge based on these pairwise probabilities and then sorts the edges by their scores to derive the global order.

-C Validation of Augmentation Strategy in Separate Training

This section evaluates the effectiveness of combining a single original network with an augmented network in predicting edge generation times for other networks. The experiments were conducted on three types of generated datasets, and the detailed descriptions of these datasets are as follows:

-

•

Barabási–Albert (BA) model: The BA model generates networks through preferential attachment. Starting with a small fully connected graph, each new node added to the network connects to an existing node with probability , where is the degree (number of connections) of the existing node. This model reflects the power-law degree distribution found in many natural networks, where a few nodes dominate with many connections.

-

•

Popularity-similarity-optimization (PSO) model: The PSO model introduces a similarity space for nodes, where the connection probability between new and existing nodes depends on both the degree of the target node and their similarity. This model is well-suited for simulating social networks, where users (nodes) connect based on both popularity and shared interests.

-

•

Fitness model: In the Fitness model, each node is assigned a fitness value , representing its inherent attractiveness or importance. The probability of a new node connecting to an existing node is proportional to both the degree and fitness of the target node, . This model captures networks with strong competitive dynamics, such as academic citation networks or business networks.

The network types , , , and represent different network models, where the subscript indicates the number of nodes in the network. TrainingNet refers to the network used for standalone training, based on which enhanced samples are generated. Subsequently, the combination of this network and one enhanced sample is used to predict the target network (TargetNet). For the methods, NatureC is the baseline method presented in Nature Communications 2024 [6]. TIGGER combines NatureC with enhanced networks generated by TIGGER, while TopoEvoDiff integrates NatureC with enhanced networks generated by TopoEvoDiff. The improvements achieved through augmentation are summarized in Table VI, which demonstrates the effectiveness of our augmentation strategy in enhancing prediction performance.

| TrainingNet | TargetNet | NatureC | TIGGER | TopoEvoDiff |

| 0.5189 | 0.5368 (+3.4%) | 0.5478 (+5.6%) | ||

| 0.5148 | 0.5254 (+2.1%) | 0.5448 (+5.8%) | ||

| 0.6103 | 0.6267 (+2.7%) | 0.6447 (+5.6%) | ||

| 0.5562 | 0.5778 (+3.9%) | 0.6281 (+12.9%) |