A Diffusion-based Method for Multi-turn Compositional Image Generation

Abstract

Multi-turn compositional image generation (M-CIG) is a challenging task that aims to iteratively manipulate a reference image given a modification text. While most of the existing methods for M-CIG are based on generative adversarial networks (GANs), recent advances in image generation have demonstrated the superiority of diffusion models over GANs. In this paper, we propose a diffusion-based method for M-CIG named conditional denoising diffusion with image compositional matching (CDD-ICM). We leverage CLIP as the backbone of image and text encoders, and incorporate a gated fusion mechanism, originally proposed for question answering, to compositionally fuse the reference image and the modification text at each turn of M-CIG. We introduce a conditioning scheme to generate the target image based on the fusion results. To prioritize the semantic quality of the generated target image, we learn an auxiliary image compositional match (ICM) objective, along with the conditional denoising diffusion (CDD) objective in a multi-task learning framework. Additionally, we also perform ICM guidance and classifier-free guidance to improve performance. Experimental results show that CDD-ICM achieves state-of-the-art results on two benchmark datasets for M-CIG, i.e., CoDraw and i-CLEVR.

1 Introduction

Image generation is a hot topic in computer vision, which has many applications in a wide range of areas, such as art, education, and entertainment. The generation of an image often needs to follow a text prompt. Additionally, sometimes the generation also needs to be based on an existing image rather than starting from scratch. Combining the above two requirements brings about compositional image generation (CIG), which is to generate a target image by changing a reference image according to a modification text. Addressing this cross-modal task is useful in computer-aided design (CAD), as it enables a computer system to generate images given verbal instructions from users.

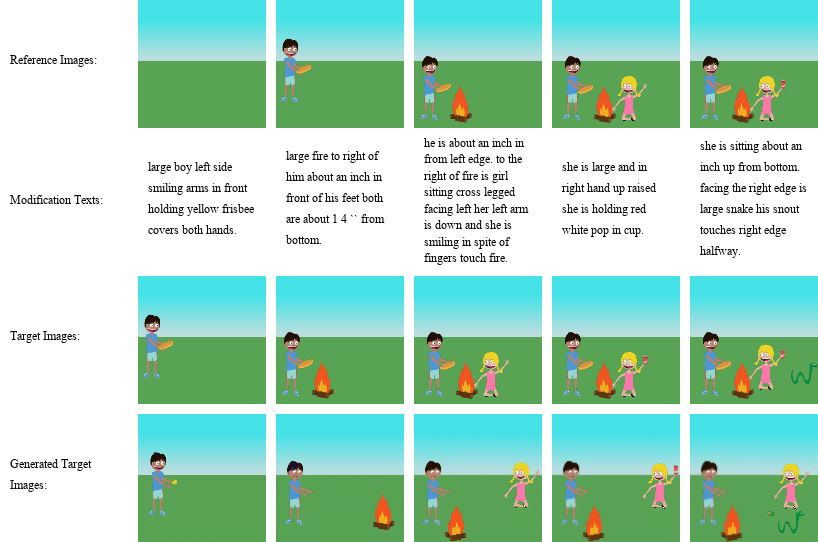

In this paper, we focus on multi-turn compositional image generation (M-CIG), which is to perform CIG in an iterative manner. As shown in Figure 1, M-CIG can be described as a sequence of CIG turns, where the initial reference image is a background canvas, and the target image generated at each turn will be used as the reference image at the next turn. Compared with CIG, M-CIG is more challenging due to the iterative setting. Meanwhile, M-CIG is also more practical than CIG, as in the real world, a user usually needs to go through a series of incremental interactions with a computer system before achieving a final goal.

To the best of our knowledge, the existing methods for M-CIG [12, 13, 34] are mostly based on generative adversarial networks (GANs) [15], which are currently the dominant family of techniques in image generation. According to some theoretical and empirical studies [54, 2, 6, 36, 4], although GANs can generate high-quality images, they are usually difficult to train, and the diversity of the generated images is also limited. Recently, diffusion models [58, 62, 16, 59], which are another family of generative modeling techniques, have gained great popularity in image generation. Compared with GANs, diffusion models are easier to train due to the straightforward definition of objectives, and can also generate more diverse images due to the explicit modeling of data distribution. As for the quality of the generated images, it has been demonstrated that diffusion models are comparable to or even better than GANs [63, 40, 8, 17]. Therefore, we apply diffusion models to M-CIG.

Diffusion models rely on a denoising diffusion mechanism [16] to generate images, which can be conditional so that only desired images are generated. Therefore, the key to addressing M-CIG using diffusion models is to learn conditional denoising diffusion (CDD), where the condition for generating the target image at each turn comes from the reference image and the modification text. However, this raises the following two problems:

The lack of an appropriate conditioning scheme. Although many conditioning schemes have been proposed for diffusion models, most of these works only deal with uni-modal cases, where the condition comes from either an image [51, 53, 49] or a text [39, 47, 52]. The conditioning scheme proposed by [22] is aimed at a multi-modal case, where the condition comes from an image-text pair, but this work assumes that the text just describes the semantics of the image rather than the desired change to it. In a word, the above conditioning schemes cannot support the application of diffusion models to M-CIG.

The concern about the semantic quality of the generated target image. For the generated target image, we are concerned not only with its visual quality but also with its semantic quality, which refers to whether it contains the desired objects and whether the contained objects constitute the desired topology. In fact, we believe that semantic quality deserves more attention than visual quality, for two reasons. On the one hand, a high semantic quality implies a high visual quality, but the reverse is not true. On the other hand, due to the iterative nature of M-CIG, semantic mistakes are likely to accumulate from turn to turn, which may corrupt the later turns.

To solve these problems, we propose a diffusion-based method for M-CIG named conditional denoising diffusion with image compositional matching (CDD-ICM), which features a novel conditioning scheme equipped with a multi-task learning framework. Specifically, we use CLIP [45] as the backbone to encode images and texts. On this basis, we borrow a gated fusion mechanism from a question answering (QA) method [67] to perform compositional fusion between the reference image and the modification text at each turn of M-CIG, and use the result as the condition of the denoising diffusion mechanism to generate the target image. To guarantee the semantic quality of the generated target image, we learn image compositional matching (ICM) as an auxiliary objective of CDD to explicitly enhance the condition, where the compositional fusion result is aligned with the representation of the target image through contrastive learning. Moreover, we also perform ICM guidance and classifier-free guidance [18] to boost performance. Experimental results show that CDD-ICM achieves state-of-the-art (SOTA) performance on two benchmark datasets for M-CIG, namely CoDraw [24] and i-CLEVR [12].

The contribution of this paper is three-fold. First, we creatively apply diffusion models to M-CIG, where a novel conditioning scheme is developed to handle a compositional image-text pair, integrating the denoising diffusion mechanism with CLIP and a gated fusion mechanism. Second, to prioritize the semantic quality of the generated target image, we establish a multi-task learning framework for the conditioning scheme, where ICM serves as an auxiliary objective of CDD to explicitly enhance the condition. Third, our diffusion-based method outperforms the existing GAN-based methods on two M-CIG benchmark datasets.

2 Method

In this section, we elaborate CDD-ICM, which is our diffusion-based method for M-CIG. We begin by providing a task formulation of M-CIG, which is followed by a detailed introduction to the design of CDD-ICM.

2.1 Task Formulation

Given an initial reference image, which is a background canvas, and a sequence of modification texts, which describe the desired changes to be successively made to it, M-CIG is an iterative multi-turn process with one turn per modification text. At each turn, it is required to generate a target image by changing the current reference image according to the current modification text, and unless the turn is the last one, the generated target image will be used as the reference image of the next turn.
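The iterative process described above can be sketched as a simple loop. This is only an illustration of the task formulation: `run_m_cig`, `generate`, and `toy_generate` are hypothetical names, and the toy "image" is a tuple of strings rather than pixels.

```python
from typing import Callable, List, TypeVar

Image = TypeVar("Image")


def run_m_cig(canvas: Image, texts: List[str],
              generate: Callable[[Image, str], Image]) -> List[Image]:
    """Run one CIG turn per modification text, feeding each output back in."""
    reference = canvas
    targets = []
    for text in texts:
        target = generate(reference, text)  # one CIG turn
        targets.append(target)
        reference = target  # the generated target becomes the next reference
    return targets


# Toy stand-in for a generator: each turn appends the instruction to the image.
def toy_generate(image, text):
    return image + (text,)


outputs = run_m_cig((), ["add a tree", "add a boy"], toy_generate)
```

Because each turn consumes the previous output, any mistake made by `generate` at one turn is carried into all later turns, which is the error accumulation that the iterative setting introduces.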

2.2 Encoding

Considering the cross-modal nature of M-CIG, we map images and texts into a joint representation space through encoding. As shown in Figure 2, we include an image encoder and a text encoder in CDD-ICM, where the former is used to encode reference images and target images, and the latter is used to encode modification texts. Since M-CIG is a vision-and-language (V&L) task, we take advantage of large-scale V&L pre-training by using CLIP, which is pre-trained on 400M image-text pairs, as the backbone of both encoders. Specifically, we use the vision part of CLIP, which is a vision transformer (ViT) [11], as the backbone of the image encoder, and use the language part of CLIP, which is a GPT-like [46] auto-regressive language model, as the backbone of the text encoder. In each encoder, we append a linear projection layer after the backbone, which finally yields the representations. For both linear projection layers, we set the output dimensionality to the same value, which is a hyper-parameter denoting the dimensionality of the joint representation space.

Additionally, as shown in Figure 2, we also include a noisy image encoder in CDD-ICM, which is used to encode the noisy target images obtained in the denoising diffusion mechanism. It has the same structure as the image encoder, but holds different trainable parameters.

2.3 Compositional Fusion

At each turn of M-CIG, to extract clues for the generation of the target image, we perform compositional fusion between the reference image and the modification text. As shown in Figure 2, we include a fusion module in CDD-ICM, which fuses the representation of the reference image with that of the modification text. Actually, we can interpret each turn of M-CIG from the perspective of QA. Specifically, we can regard the reference image as a context, the modification text as a relevant question, and the target image as the corresponding answer. In this way, to implement the fusion module, we borrow the following gated fusion mechanism from a QA method [67]:

| (1) |

where and are trainable weight matrices, and are trainable bias vectors, denotes element-wise multiplication, and denotes vector concatenation. In the fusion module, we set to the representation of the reference image, set to that of the modification text, and thereby obtain as the compositional fusion result.
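As a rough illustration of what a gated fusion of two vectors looks like, the sketch below uses one common form: a candidate vector and a gate are both computed from the concatenation of the two inputs, and the gate interpolates element-wise between the candidate and the first input. This exact form, the function names, and the random weights are assumptions made for illustration; the mechanism in [67] may feed a different concatenation to the two linear maps.

```python
import math
import random


def linear(W, b, v):
    """Affine map W v + b for a list-of-lists matrix W."""
    return [sum(w * a for w, a in zip(row, v)) + bias for row, bias in zip(W, b)]


def gated_fusion(x, y, W_h, b_h, W_g, b_g):
    """Fuse x (reference image) with y (modification text); assumed form."""
    z = x + y  # list concatenation plays the role of vector concatenation
    h = [math.tanh(a) for a in linear(W_h, b_h, z)]               # candidate
    g = [1.0 / (1.0 + math.exp(-a)) for a in linear(W_g, b_g, z)]  # gate
    # Element-wise interpolation between the candidate and the original x.
    return [gi * hi + (1.0 - gi) * xi for gi, hi, xi in zip(g, h, x)]


rng = random.Random(0)
d = 4


def rand_matrix(rows, cols):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


x = [rng.uniform(-1.0, 1.0) for _ in range(d)]
y = [rng.uniform(-1.0, 1.0) for _ in range(d)]
W_h, b_h = rand_matrix(d, 2 * d), [0.0] * d
W_g, b_g = rand_matrix(d, 2 * d), [0.0] * d
fused = gated_fusion(x, y, W_h, b_h, W_g, b_g)
```

With this form, a gate close to zero leaves the reference-image representation untouched, so the text only changes the dimensions that the gate opens.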

2.4 Conditional Denoising Diffusion

To generate the target image at each turn of M-CIG, we learn conditional denoising diffusion (CDD), which is to perform the generation using a denoising diffusion mechanism conditioned on the compositional fusion result between the reference image and the modification text. As proposed by [16], the denoising diffusion mechanism consists of a forward diffusion process, which gradually injects noises to the target image, and a reverse denoising process, which gradually erases the injected noises.

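With the target image denoted as $x_0$, the forward diffusion process is a pre-defined Markov chain of $T$ time steps $1, \dots, T$, where the state transfers from $x_0$ all the way to $x_T$. Specifically, at each time step $t$, we obtain $x_t$ by injecting a Gaussian noise $\epsilon_t \sim \mathcal{N}(0, I)$ to $x_{t-1}$:

$$x_t = \sqrt{1 - \beta_t}\, x_{t-1} + \sqrt{\beta_t}\, \epsilon_t \tag{2}$$

where $\beta_t \in (0, 1)$ is a hyper-parameter used to control the noise scale. It is easy to derive that with $\prod_{s=1}^{t} (1 - \beta_s)$ denoted as $\bar{\alpha}_t$, we can actually obtain $x_t$ by directly injecting $\epsilon \sim \mathcal{N}(0, I)$ to $x_0$:

$$x_t = \sqrt{\bar{\alpha}_t}\, x_0 + \sqrt{1 - \bar{\alpha}_t}\, \epsilon \tag{3}$$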
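The step from the per-step noise injection to the closed form can be made explicit. The derivation below uses the standard notation of [16], which is assumed here: $\beta_t$ is the per-step noise scale, $\alpha_t = 1 - \beta_t$, $\bar{\alpha}_t = \prod_{s=1}^{t} \alpha_s$, and all $\epsilon$ terms are independent standard Gaussian noises.

```latex
\begin{aligned}
x_t &= \sqrt{\alpha_t}\, x_{t-1} + \sqrt{1 - \alpha_t}\, \epsilon_t \\
    &= \sqrt{\alpha_t \alpha_{t-1}}\, x_{t-2}
       + \sqrt{\alpha_t (1 - \alpha_{t-1})}\, \epsilon_{t-1}
       + \sqrt{1 - \alpha_t}\, \epsilon_t \\
    &= \sqrt{\alpha_t \alpha_{t-1}}\, x_{t-2}
       + \sqrt{1 - \alpha_t \alpha_{t-1}}\, \bar{\epsilon}
       \qquad \text{since } \alpha_t (1 - \alpha_{t-1}) + (1 - \alpha_t) = 1 - \alpha_t \alpha_{t-1} \\
    &\;\;\vdots \\
    &= \sqrt{\bar{\alpha}_t}\, x_0 + \sqrt{1 - \bar{\alpha}_t}\, \epsilon
\end{aligned}
```

The third line merges two independent zero-mean Gaussian terms into one whose variance is the sum of their variances, and repeating this merge down to $x_0$ gives the last line.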

As shown in Figure 2, we include a noise injector in CDD-ICM, which executes Equation 3 at an arbitrary time step to obtain as a noisy target image. To implement the noise injector, we set each in the following way proposed by [40]:

| (4) |
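A minimal sketch of the noise injector follows, assuming the cosine noise schedule of [40] with its commonly used offset of 0.008 and an upper clip of 0.999 on each per-step noise scale. Those two constants, the function names, and the use of 1000 time steps are assumptions for illustration, not values taken from this paper.

```python
import math
import random


def cosine_betas(T, s=0.008, max_beta=0.999):
    """Per-step noise scales from a cosine schedule (assumed constants)."""
    def f(t):
        return math.cos((t / T + s) / (1 + s) * math.pi / 2) ** 2
    return [min(1.0 - f(t) / f(t - 1), max_beta) for t in range(1, T + 1)]


def alpha_bars(betas):
    """Cumulative products of (1 - beta) up to each time step."""
    out, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        out.append(prod)
    return out


def inject_noise(x0, t, abar, rng):
    """Sample the noisy image at 1-indexed step t directly from x0."""
    a = abar[t - 1]
    return [math.sqrt(a) * v + math.sqrt(1.0 - a) * rng.gauss(0.0, 1.0)
            for v in x0]


betas = cosine_betas(1000)
abar = alpha_bars(betas)
x_t = inject_noise([0.5, -0.2, 0.1], 500, abar, random.Random(0))
```

The cumulative product starts close to one and ends close to zero, so early steps barely perturb the target image while the final step is almost pure Gaussian noise.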

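With the compositional fusion result between the reference image and the modification text denoted as $c$, the reverse denoising process is a parameterized Markov chain of $T$ time steps $T, \dots, 1$, where the state transfers from $x_T$ all the way back to $x_0$ conditioned on $c$. Specifically, at each time step $t$, given $x_t$ and the condition $c$, we obtain $x_{t-1}$ using a neural network $p_\theta$:

$$p_\theta(x_{t-1} \mid x_t, c) = \mathcal{N}\big(x_{t-1};\, \mu_\theta(x_t, t, c),\, \Sigma_\theta(x_t, t, c)\big) \tag{5}$$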

where . According to [16], we can learn by minimizing the following variational lower-bound (VLB) loss:

| (6) |

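Using Bayes' theorem, it can be derived that with $1 - \beta_t$ denoted as $\alpha_t$, $q(x_{t-1} \mid x_t, x_0)$ is the following Gaussian distribution:

$$q(x_{t-1} \mid x_t, x_0) = \mathcal{N}\big(x_{t-1};\, \tilde{\mu}_t(x_t, x_0),\, \tilde{\beta}_t I\big), \quad \tilde{\mu}_t(x_t, x_0) = \frac{\sqrt{\bar{\alpha}_{t-1}}\, \beta_t}{1 - \bar{\alpha}_t}\, x_0 + \frac{\sqrt{\alpha_t}\, (1 - \bar{\alpha}_{t-1})}{1 - \bar{\alpha}_t}\, x_t, \quad \tilde{\beta}_t = \frac{1 - \bar{\alpha}_{t-1}}{1 - \bar{\alpha}_t}\, \beta_t \tag{7}$$

Based on Equation 3 and Equation 7, we parameterize $\mu_\theta$ in the following way proposed by [16]:

$$\mu_\theta(x_t, t, c) = \frac{1}{\sqrt{\alpha_t}} \left( x_t - \frac{\beta_t}{\sqrt{1 - \bar{\alpha}_t}}\, \epsilon_\theta(x_t, t, c) \right) \tag{8}$$

where $\epsilon_\theta(x_t, t, c)$ is a prediction of $\epsilon$. Besides, we also parameterize $\Sigma_\theta$ in the following way proposed by [40]:

$$\Sigma_\theta(x_t, t, c) = \exp\big( v \log \beta_t + (1 - v) \log \tilde{\beta}_t \big) \tag{9}$$

where $v$ is a fraction used to interpolate between $\beta_t$ and $\tilde{\beta}_t$. As shown in Figure 2, we include a denoising U-Net in CDD-ICM, which performs the above parameterizations and thus can be seen as $p_\theta$. To implement the denoising U-Net, we make three changes to the U-Net structure used by [8]. First, we replace the class embedding with the condition $c$. Second, we concatenate $x_t$ with the reference image along the channel dimension, and use the result as the input. Third, we concatenate the patch representations of the reference image with the token representations of the modification text, and use the result to augment each attention layer as suggested by [39]. The output of the denoising U-Net is divided into two parts along the channel dimension, which are separately used as $\epsilon_\theta(x_t, t, c)$ and $v$.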

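According to [16], to learn the above reverse denoising process, instead of minimizing $L_{\text{vlb}}$, we can actually minimize the following mean squared error (MSE) loss:

$$L_{\text{mse}} = \mathbb{E}_{t, x_0, \epsilon} \left[ \left\| \epsilon - \epsilon_\theta(x_t, t, c) \right\|^2 \right] \tag{10}$$

Compared with $L_{\text{vlb}}$, $L_{\text{mse}}$ is not only simpler but also more effective. However, minimizing $L_{\text{mse}}$ cannot bring any learning signal to $\Sigma_\theta$. To benefit from learning $\Sigma_\theta$, we combine $L_{\text{mse}}$ with $L_{\text{vlb}}$ as suggested by [40]. Specifically, we minimize a CDD loss $L_{\text{cdd}}$, which is calculated as follows:

$$L_{\text{cdd}} = L_{\text{mse}} + \lambda L_{\text{vlb}} \tag{11}$$

where $\lambda$ is a hyper-parameter used to control the weight of $L_{\text{vlb}}$. Additionally, we also stop the gradients of $L_{\text{vlb}}$ from flowing to $\mu_\theta$.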

2.5 Image Compositional Matching

At each turn of M-CIG, the semantic quality of the generated target image depends on the condition of the denoising diffusion mechanism, which is the compositional fusion result between the reference image and the modification text. Although learning CDD ensures that the condition is learned, this effect is implicit. To explicitly enhance the condition so that it embodies more clues about the target image, we learn image compositional matching (ICM) as an auxiliary objective of CDD, which is to align the compositional fusion result with the representation of the target image.

To learn ICM, we adopt the InfoNCE loss [41] used in the contrastive pre-training of CLIP, and apply it to individual turns constituting M-CIG samples. Specifically, given a mini-batch of (reference image, modification text, target image) triples , each of which denotes an individual turn picked from an M-CIG sample, we treat them as positive samples, and generate negative samples by replacing the target image in each positive sample separately with the other target images . For each of the positive and negative samples, suppose that we have already obtained the compositional fusion result between the reference image and the modification text and the representation of the target image, then we calculate the cosine similarity between them. As a result, we construct a similarity matrix , where the element at the -th row and the -th column corresponds to the sample . It is easy to see that the diagonal elements in correspond to the positive samples, while the other elements correspond to the negative samples. Based on , we minimize an ICM loss , which is calculated as follows:

| (12) |

where is a trainable temperature scalar, denotes calculating matrix trace, and is calculated along the row dimension. In this way, the compositional fusion result between a (reference image, modification text) pair will be close to the representation of the real target image, while apart from those of the fake ones.
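The contrastive objective described above can be sketched in a few lines. The version below divides the cosine similarities by a fixed temperature, takes a row-wise log-softmax, and averages the negated diagonal; the fixed temperature of 0.07, the division (rather than multiplication by a learned scale), the averaging over the batch, and the name `icm_loss` are assumptions for illustration.

```python
import math


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def icm_loss(fused, targets, tau=0.07):
    """Row-wise InfoNCE: row i is fusion result i, column j is target j."""
    n = len(fused)
    total = 0.0
    for i in range(n):
        logits = [cosine(fused[i], targets[j]) / tau for j in range(n)]
        m = max(logits)  # subtract the max for a stable log-sum-exp
        log_z = m + math.log(sum(math.exp(l - m) for l in logits))
        total += logits[i] - log_z  # diagonal entry of the log-softmax
    return -total / n


aligned = icm_loss([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]])
shuffled = icm_loss([[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]])
```

In this toy batch, the loss is near zero when each fusion result points at its own target and large when the targets are swapped, which is the behavior the objective is meant to reward and penalize.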

Besides, to enable ICM guidance, which will be introduced later, we also learn noise-aware image compositional matching (N-ICM). Specifically, we replace the above target images with their noisy variants, which are obtained using the noise injector, and encode these noisy target images using the noisy image encoder. On this basis, we minimize an N-ICM loss , which is calculated in the same way as we calculate .

2.6 Training and Inference

For the training, we disassemble M-CIG samples into individual turns and thereby apply teacher forcing. On this basis, we divide the training into three stages, where in each stage, we minimize a different loss through mini-batch gradient descent to update the corresponding trainable components of CDD-ICM. Specifically, in the first stage, we minimize to update the image encoder, the text encoder, and the fusion module. In the second stage, we minimize the following joint loss, which is a combination of and , to update the image encoder, the text encoder, the fusion module, and the denoising U-Net:

| (13) |

where is a hyper-parameter used to control the weight of . In the third stage, we freeze the image encoder, the text encoder, and the fusion module, and minimize to update the noisy image encoder. To effectively fine-tune CLIP, we set the backbone learning rate as a product of the global learning rate and a backbone activity ratio , which is a hyper-parameter. From the perspective of transfer learning, controls the trade-off between the knowledge transferred from CLIP and that embodied in the training data.

For the inference, at each turn of M-CIG, we use the image encoder to encode the reference image, use the text encoder to encode the modification text, use the fusion module to perform compositional fusion based on the encoding results, and use the denoising U-Net to iteratively execute Equation 5 from the time step until the time step , where the condition is set to the compositional fusion result. From Equation 3 and Equation 4, it can be derived that if is large enough, then , thus we sample from at the time step . To accelerate the inference, we traverse only a part of the time steps, which are uniformly distributed among all of them, and make this process deterministic as suggested by [60]. Finally, we obtain as the generated target image at the time step .
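The accelerated inference can be illustrated with two small helpers: one that picks an evenly spaced subset of the time steps, and one deterministic update between two selected steps. The update shown is the standard deterministic DDIM-style step, which is assumed to be what the deterministic sampling of [60] refers to; the function names and the list-based "images" are hypothetical.

```python
import math


def strided_timesteps(T, k):
    """Pick k of the T time steps (1-indexed), evenly spaced, descending."""
    if not 1 <= k <= T:
        raise ValueError("k must satisfy 1 <= k <= T")
    return [round(T - i * T / k) for i in range(k)]


def ddim_step(x_t, eps_pred, abar_t, abar_prev):
    """Deterministic move from noise level abar_t to noise level abar_prev."""
    # Estimate the clean image implied by the predicted noise.
    x0_pred = [(x - math.sqrt(1.0 - abar_t) * e) / math.sqrt(abar_t)
               for x, e in zip(x_t, eps_pred)]
    # Re-noise the estimate to the earlier step without drawing fresh noise.
    return [math.sqrt(abar_prev) * x0 + math.sqrt(1.0 - abar_prev) * e
            for x0, e in zip(x0_pred, eps_pred)]


steps = strided_timesteps(1000, 10)

# Sanity check: with a perfect noise prediction, one step to a noise-free
# level (cumulative product equal to 1) recovers the clean image.
x0 = [0.5, -1.0]
eps = [0.2, 0.7]
x_noisy = [math.sqrt(0.3) * a + math.sqrt(0.7) * e for a, e in zip(x0, eps)]
recovered = ddim_step(x_noisy, eps, 0.3, 1.0)
```

Traversing only the selected steps reduces the number of U-Net evaluations per generated image from the full chain length to the size of the subset.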

Moreover, we also perform ICM guidance and classifier-free guidance to boost performance. Our ICM guidance is similar to the CLIP guidance of [39]. Specifically, suppose that we have minimized in the training, then in the inference, instead of using in Equation 5, we use , which is obtained by perturbing using the gradient of with respect to :

| (14) |

where is a hyper-parameter used to control the perturbation scale. Our classifier-free guidance is similar to that of [39]. Specifically, in the training, when calculating in Equation 10, we set the condition to with a probability of , which is a hyper-parameter. On this basis, in the inference, instead of using in Equation 8, we use , which is obtained by perturbing using :

| (15) |

where is a hyper-parameter used to control the perturbation scale.
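Classifier-free guidance has two parts: randomly dropping the condition during training, and combining a conditional and an unconditional noise prediction during inference. The sketch below uses the combination rule popularized by [39], in which the unconditional prediction is pushed toward the conditional one by a guidance scale; whether the paper uses exactly this parameterization is an assumption, as are the function names and the `"<null>"` placeholder.

```python
import random


def drop_condition(cond, p_uncond, null_cond, rng):
    """Training side: replace the condition by a null one with prob. p_uncond."""
    return null_cond if rng.random() < p_uncond else cond


def guided_eps(eps_cond, eps_uncond, scale):
    """Inference side: extrapolate from the unconditional prediction."""
    return [u + scale * (c - u) for c, u in zip(eps_cond, eps_uncond)]


rng = random.Random(0)
kept = drop_condition("fusion-result", 0.0, "<null>", rng)
dropped = drop_condition("fusion-result", 1.0, "<null>", rng)
eps_hat = guided_eps([1.0, 2.0], [0.0, 1.0], 3.0)
```

With this rule, a scale of one reproduces the conditional prediction, and larger scales amplify whatever the condition contributes beyond the unconditional prediction.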

3 Related Works

3.1 Image Manipulation

The goal of image manipulation is to modify specific attributes of an image while avoiding unintended changes or generating a completely new image. Existing works can be split into two main categories: image-to-image translation and text-conditioned image manipulation.

Image-to-Image Translation. The image-to-image translation aims to generate an output image only conditioning on an input image, i.e., uni-modal condition. Image inpainting and image super-resolution are two typical image-to-image translation tasks. In recent years, deep learning has achieved great success in image inpainting. Context Encoders [44] first explores to utilize conditional GANs. Multiple variants [69, 72, 73, 29] of U-Net [50] have been proposed for image inpainting. Some works explore multi-stage generation by taking object edges [38], structures [48], or semantic segmentation maps [64] as intermediate clues. In terms of super-resolution, most early works are regression-based and trained with MSE loss [9, 10, 70, 23]. Auto-regressive models [7, 42] and GAN-based methods [19, 26, 68, 35, 32] have also shown high quality results.

Text-Conditioned Image Manipulation. The text-conditioned image manipulation targets generating an output image conditioned on both the input image and text, i.e., multi-modal condition. The input text can be a caption-like description of the target image, and the editing is usually single-turn. TAGAN [37] employs word-level local discriminators to preserve text-irrelevant content. ManiGAN [28] first selects image regions and then correlates the regions with semantic words. DiffusionCLIP [22] is a robust framework that utilizes the pre-trained diffusion models and CLIP loss for image manipulation.

The input text can also be user-provided text instructions that describe desired modifications, such as adding, changing, or removing the objects in images. Generating an image based on provided instructions and an input image is referred to as the compositional image generation (CIG) task in this paper. [1] achieve great performance on the benchmarks CSS [65] and Fashion Synthesis [75] by designing an improved image & text composition layer and a multi-modal similarity module. [74] propose a GAN-based method to locally modify image features and show remarkable results on both CSS and Abstract Scene [76]. The M-CIG task presents a more challenging setting than the above single-turn CIG task. [12] first propose the M-CIG task, known as the Generative Neural Visual Artist (GeNeVA) task, which requires iteratively generating an image according to ongoing linguistic input. [14] introduce the self-supervised counterfactual reasoning (SSCR) framework to tackle the data scarcity problem. LatteGAN [34] improves desired object generation by introducing a Latte module and a text-conditioned U-Net discriminator. Our work targets the M-CIG task. Following [12], we conduct experiments on CoDraw [25] and i-CLEVR [12].

3.2 Diffusion Models

Diffusion models (DMs) [58], which formulate the data sampling process as an iterative denoising procedure, are closely related to a large family of methods for learning generative models as transition operators of Markov chains [3, 58, 56, 61, 27]. Many research works concentrate on improving the diffusion process of DMs. [62] propose to estimate the gradients of data distribution via score matching and produce samples via Langevin dynamics. Denoising diffusion probabilistic models (DDPMs) [16], which optimize a variational lower bound to the log-likelihood, can achieve comparable sample quality as GANs [5, 20]. Denoising diffusion implicit models (DDIMs) [59] speed up the sampling process while enabling near-perfect inversion [8]. The improved DDPM [40] introduces several modifications to achieve competitive likelihoods without sacrificing sample quality. The latent diffusion [49] model is applied in latent space instead of pixel space to enable an efficient diffusion process. Although GANs have achieved plausible results in image synthesis, they are usually difficult to train and tend to limit the diversity of the generated images [57, 66]. DMs are more stable during training and demonstrate comparable or even better performance for image synthesis [8, 63, 17].

Motivated by the progress in developing DMs, some research works explore text or image conditional diffusion mechanisms. While certain diffusion models solely utilize an input image for conditioning, such as PALETTE [51] and SR3 [53], those that condition on both an input image and text are more pertinent to our work. GLIDE [39] is a text-guided diffusion model, where classifier-free guidance yields higher-quality images than CLIP guidance. DALL-E 2 [47] initially generates a CLIP [45] image embedding given a text caption and then generates an image conditioned on the image embedding. Imagen [52] exhibits a deep level of language understanding which enables high-fidelity image generation. Stable Diffusion [49] is an efficient latent diffusion model and has achieved superior image synthesis performance. [30] compose pre-trained text-guided diffusion models to improve structured generalization for image generation. However, the textual input in the aforementioned models is a caption-like description, rather than iterative instructions on image manipulations. Designing diffusion-based methods for the M-CIG task remains relatively underexplored, and it presents challenges in iteratively modifying images with instructions and in conditioning the denoising diffusion mechanism on multiple modalities. To address this gap in the literature, we propose a diffusion-based approach coupled with auxiliary ICM objectives to enhance the visual and semantic fidelity of generated images in the M-CIG task.

4 Experiments

| Method | CoDraw Precision | CoDraw Recall | CoDraw F1 | CoDraw RSIM | i-CLEVR Precision | i-CLEVR Recall | i-CLEVR F1 | i-CLEVR RSIM |
|---|---|---|---|---|---|---|---|---|
| GeNeVA-GAN [12] | 66.64 | 52.66 | 58.83 | 35.41 | 92.39 | 84.72 | 88.39 | 74.02 |
| SSCR [13] | 58.17 | 56.61 | 57.38 | 39.11 | 73.75 | 46.39 | 56.96 | 34.54 |
| TIRG [21] | 76.56 | 73.40 | 72.40 | 46.64 | 94.30 | 92.96 | 93.71 | 77.55 |
| LatteGAN [34] | 81.50 | 78.37 | 77.51 | 54.16 | 97.72 | 96.93 | 97.26 | 83.21 |
| CDD-ICM (ours) | 90.61 | 87.55 | 89.05 | 57.39 | 99.99 | 99.94 | 99.96 | 85.66 |

4.1 Datasets

To verify the effectiveness of CDD-ICM, we conduct experiments on the following two M-CIG benchmark datasets:

CoDraw. CoDraw contains K M-CIG samples for training, K for validation, and K for test. The number of turns per M-CIG sample varies between and with an average of . The reference images and the target images contain classes of clip-art-style objects, such as boys, girls, and trees. The modification texts are conversations between a teller and a drawer.

i-CLEVR. i-CLEVR contains K M-CIG samples for training, K for validation, and K for test. The number of turns per M-CIG sample is always . The reference images and the target images contain classes of colored geometric objects, such as red spheres, yellow cubes, and blue cylinders. The modification texts are sentences indicating the addition of a new object.

Both datasets adopt four metrics to evaluate the semantic quality of the generated target images: precision, recall, F1 score, and relational similarity (RSIM). Specifically, each dataset comes with an object detector, which is trained on the dataset by [12]. In the evaluation, the object detector is applied to both the generated target images and the ground-truth target images. On this basis, precision, recall, and F1 score are calculated for each turn of M-CIG by comparing the object presence in the generated target image with that in the ground-truth target image:

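$$\text{precision} = \frac{|O_{\text{gen}} \cap O_{\text{gt}}|}{|O_{\text{gen}}|}, \qquad \text{recall} = \frac{|O_{\text{gen}} \cap O_{\text{gt}}|}{|O_{\text{gt}}|}, \qquad \text{F1} = \frac{2 \cdot \text{precision} \cdot \text{recall}}{\text{precision} + \text{recall}} \tag{16}$$

where $O_{\text{gen}}$ and $O_{\text{gt}}$ denote the objects in the generated target image and the ground-truth target image, respectively. RSIM is calculated on the last turn of M-CIG by comparing the object topology in the generated target image with that in the ground-truth target image:

$$\text{RSIM} = \text{recall} \times \frac{|E_{\text{gen}} \cap E_{\text{gt}}|}{|E_{\text{gt}}|} \tag{17}$$

where $E_{\text{gen}}$ and $E_{\text{gt}}$ denote the edges interconnecting $O_{\text{gen}} \cap O_{\text{gt}}$ in the generated target image and the ground-truth target image, respectively.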
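The four metrics can be sketched on plain Python sets. The definitions below follow the GeNeVA evaluation protocol of [12] as commonly stated: object presence is compared as sets, and RSIM multiplies recall by the fraction of ground-truth edges that also appear in the generated image. The exact edge encoding, the handling of empty sets, and the function names are assumptions for illustration.

```python
def presence_scores(gen_objects, gt_objects):
    """Precision, recall, and F1 of detected objects for one turn."""
    gen, gt = set(gen_objects), set(gt_objects)
    common = gen & gt
    precision = len(common) / len(gen) if gen else 0.0
    recall = len(common) / len(gt) if gt else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1


def rsim(gen_objects, gt_objects, gen_edges, gt_edges):
    """Relational similarity; edges are (object, object, relation) triples
    defined over the objects common to both images."""
    _, recall, _ = presence_scores(gen_objects, gt_objects)
    gt_e = set(gt_edges)
    if not gt_e:
        return 0.0  # assumed convention when there is nothing to compare
    return recall * len(set(gen_edges) & gt_e) / len(gt_e)


gen = {"boy", "tree", "sun"}
gt = {"boy", "tree", "girl", "ball"}
p, r, f1 = presence_scores(gen, gt)
score = rsim(gen, gt, {("boy", "tree", "left")}, {("boy", "tree", "left")})
```

In this toy example the generated image has the right topology among the shared objects, yet RSIM is still capped by recall because two ground-truth objects are missing.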

4.2 Implementation Details

We use PyTorch [43] to implement CDD-ICM, and use HuggingFace’s Transformers [71] to load CLIP. In the three CLIP-based encoders, we adopt the basic version of CLIP (i.e. CLIP-ViT-B/32) as the backbone, set the backbone activity ratio to , and set the output dimensionality of all the linear projection layers to . In the denoising diffusion mechanism, we use time steps for the training (i.e. ), and use the time steps uniformly distributed among them for the inference. For ICM guidance, we set to . For classifier-free guidance, we set the value of to and to . To calculate , we set to . To calculate and , we initialize to . To calculate in the second training stage, we set to . For the optimization in each training stage, we apply an AdamW optimizer [31] with an initial learning rate of and a weight decay factor of . We perform the optimization on NVIDIA V100 GB GPUs in parallel, and set the mini-batch size on each GPU to . We calculate the average loss on the validation subset after every epochs. If the resulting number is reduced, then we save the current CDD-ICM model, otherwise we restore the CDD-ICM model to the previous saved version. We decay the learning rate by after each restoration, and terminate the optimization after the th restoration.
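The validation-driven control flow described above (save on improvement, otherwise restore and decay the learning rate, stop after a fixed number of restorations) can be sketched independently of the model. The function name and all numeric values in the usage lines are hypothetical placeholders, not the settings used in the experiments.

```python
def run_schedule(val_losses, lr, decay, max_restores):
    """Replay a sequence of validation losses and log each decision."""
    best = float("inf")
    restores = 0
    history = []
    for loss in val_losses:
        if loss < best:
            best = loss  # improvement: keep this checkpoint
            history.append(("save", lr))
        else:
            restores += 1  # no improvement: roll back and slow down
            lr *= decay
            history.append(("restore", lr))
            if restores >= max_restores:
                break  # terminate after the last allowed restoration
    return history


# Hypothetical validation curve and settings, for illustration only.
history = run_schedule([1.0, 0.8, 0.9, 0.7, 0.75, 0.74],
                       lr=1e-4, decay=0.5, max_restores=2)
actions = [action for action, _ in history]
```

Because every restoration returns to the last saved model, comparing against the best saved loss is equivalent to comparing against the previous evaluation of the model being trained.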

4.3 Experimental Results

On each dataset, we train a CDD-ICM model by using the training set for optimization and using the validation set for model selection. To compare CDD-ICM with the existing M-CIG methods, we use the test set for evaluation, and finally report the precision, recall, F1 score, and RSIM over all the M-CIG samples in the test set. As shown in Table 1, we achieve SOTA performance on both datasets. Specifically, on CoDraw, CDD-ICM outperforms the existing M-CIG methods by a large margin, where the advantage in precision, recall, and F1 score is relatively larger than that in RSIM. On i-CLEVR, although the existing M-CIG methods did not leave too much room for improvement in precision, recall, and F1 score, CDD-ICM is still better than them in these metrics, reaching almost perfect numbers, and also outperforms them in RSIM.

From all the M-CIG methods in Table 1, we observe two regularities. On the one hand, the performance of these methods on CoDraw is generally worse than that on i-CLEVR. By comparing CoDraw with i-CLEVR, we speculate that this is mainly because the modification texts in CoDraw are commonly longer and more complicated than those in i-CLEVR, which makes it more difficult to generate the desired target images. On the other hand, the performance of these methods in object presence, which is reflected by precision, recall, and F1 score, is generally better than that in object topology, which is reflected by RSIM. By investigating both datasets, we speculate that this is mainly because good performance in object presence just requires correctly identifying the names and attributes of objects from the modification texts, while that in object topology usually requires comprehensively understanding the modification texts.

4.4 Case Study

To visually demonstrate the capability of CDD-ICM, we select several representative M-CIG samples from the test set of both datasets, and use the inference results of CDD-ICM on them as demo cases. A demo case from CoDraw and another one from i-CLEVR are shown in Figure 3, and more demo cases are available in the appendix. In the demo cases from CoDraw, the generated target images contain most of the desired objects, but there are still some extra and missing objects. Besides, it also shows that on CoDraw, CDD-ICM struggles with accurately positioning and orienting objects. In the demo cases from i-CLEVR, the generated target images are very similar to the ground-truth target images, where only a few objects are misaligned.

4.5 Ablation Study

| Method | CoDraw F1 | CoDraw RSIM | i-CLEVR F1 | i-CLEVR RSIM |
|---|---|---|---|---|
| CDD-ICM | 89.05 | 57.39 | 99.96 | 85.66 |
| w/o ICM | 75.86 | 50.58 | 89.92 | 74.49 |
| w/o ICM Guidance | 87.69 | 56.94 | 96.27 | 81.32 |
| w/o Classifier-free Guidance | 86.34 | 56.11 | 94.91 | 80.28 |
| Fine-tuning of CLIP: Frozen | 80.63 | 54.89 | 87.24 | 72.44 |
| Fine-tuning of CLIP: Fully-Trainable | 58.52 | 39.97 | 65.83 | 44.76 |
| w/o Iterative Setting | 92.51 | 75.93 | 100.00 | 96.20 |

To probe the performance contribution from each design point of CDD-ICM, we conduct the following five ablation experiments. As shown in Table 2, in each ablation experiment, we change a corresponding design point, and report the resulting F1 score and RSIM on each dataset.

ICM. We disable ICM by skipping the first training stage and setting to when calculating in the second training stage. As a result, we observe a significant drop in F1 score and RSIM. This verifies the effectiveness of learning ICM as an auxiliary objective of CDD. Besides, we also observe a significant drop in the converging speed of the second training stage. This further verifies that learning ICM is beneficial for learning CDD.

ICM Guidance. We disable ICM guidance by skipping the third training stage and setting to . As a result, we observe a drop in F1 score and RSIM. This verifies the effectiveness of ICM guidance.

Classifier-free Guidance. We disable classifier-free guidance by setting to and setting to . As a result, we observe a drop in F1 score and RSIM. This verifies the effectiveness of classifier-free guidance.
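For readers unfamiliar with classifier-free guidance, the sketch below shows the standard combination rule from Ho and Salimans [18], in which a conditional and an unconditional noise prediction are mixed with a guidance weight. It is an illustration of the general technique, not a reproduction of the exact conditioning scheme of CDD-ICM; the names `eps_cond`, `eps_uncond`, and `weight` are assumptions introduced here.

```python
import numpy as np


def classifier_free_guidance(eps_cond, eps_uncond, weight):
    """Mix conditional and unconditional noise predictions.

    Standard form: (1 + w) * eps_cond - w * eps_uncond.
    With weight == 0 the result is just the conditional prediction,
    i.e. guidance has no effect.
    """
    eps_cond = np.asarray(eps_cond, dtype=float)
    eps_uncond = np.asarray(eps_uncond, dtype=float)
    return (1.0 + weight) * eps_cond - weight * eps_uncond


# Toy predictions standing in for the outputs of a denoising network.
guided = classifier_free_guidance([1.0, 2.0], [0.5, 1.0], weight=2.0)
unguided = classifier_free_guidance([1.0, 2.0], [0.5, 1.0], weight=0.0)
```

A larger weight pushes the prediction further away from the unconditional one, which typically trades sample diversity for closer adherence to the condition.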

Fine-tuning of CLIP. For the fine-tuning of CLIP, which is controlled by the backbone activity ratio , we examine two extreme cases. On the one hand, we make CLIP frozen by setting to . On the other hand, we make CLIP fully-trainable by setting to . As a result, we observe a significant drop in F1 score and RSIM in both cases. This verifies the necessity of applying .

Iterative Setting. During evaluation, we disable the iterative setting by using the ground-truth target image at each turn of M-CIG as the reference image of the next turn, which effectively reduces M-CIG to CIG. As a result, we observe a significant rise in F1 score and RSIM. This verifies that M-CIG is more challenging than CIG.
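The difference between the two evaluation settings can be sketched as a short rollout loop. This is a simplified illustration under stated assumptions: `generate` is a hypothetical callable standing in for one turn of inference, and images are represented by plain strings so that the example is runnable without a model.

```python
def rollout(generate, initial, texts, ground_truth, iterative=True):
    """Run a multi-turn episode and return the image generated at each turn.

    iterative=True : each turn's output becomes the next reference (M-CIG).
    iterative=False: the ground-truth target of each turn becomes the next
                     reference, so every turn is an independent CIG problem.
    """
    reference = initial
    outputs = []
    for turn, text in enumerate(texts):
        output = generate(reference, text)
        outputs.append(output)
        reference = output if iterative else ground_truth[turn]
    return outputs


# Toy generator (an assumption): appends the upper-cased instruction.
toy_generate = lambda ref, text: ref + text.upper()
with_iteration = rollout(toy_generate, "", ["a", "b"], ["a", "ab"], iterative=True)
without_iteration = rollout(toy_generate, "", ["a", "b"], ["a", "ab"], iterative=False)
```

In the iterative rollout, any error made at an earlier turn is carried into all later references, which is consistent with the lower scores observed in the iterative setting.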

5 Conclusion and Limitation

In this paper, we focus on M-CIG, which is a challenging and practical image generation task, and propose a diffusion-based method named CDD-ICM, which achieves SOTA performance on CoDraw and i-CLEVR. The limitation of CDD-ICM mainly lies in its inference efficiency. Although we have accelerated the inference of CDD-ICM by traversing only a part of the time steps in a deterministic manner, it still takes GPU seconds for CDD-ICM to generate a target image, which is much slower than the GAN-based methods. In the future, we plan to further accelerate the inference of CDD-ICM by applying latent diffusion models [49] and knowledge distillation methods [33, 55].

References

- [1] Kenan E. Ak, Ying Sun, and Joo-Hwee Lim. Learning cross-modal representations for language-based image manipulation. In 2020 IEEE International Conference on Image Processing (ICIP), pages 1601–1605, 2020.

- [2] Martin Arjovsky and Leon Bottou. Towards principled methods for training generative adversarial networks. In ICLR, 2017.

- [3] Yoshua Bengio, Eric Laufer, Guillaume Alain, and Jason Yosinski. Deep generative stochastic networks trainable by backprop. In ICML, pages 226–234. PMLR, 2014.

- [4] Andrew Brock, Jeff Donahue, and Karen Simonyan. Large scale GAN training for high fidelity natural image synthesis. In ICLR, 2019.

- [5] Andrew Brock, Jeff Donahue, and Karen Simonyan. Large scale GAN training for high fidelity natural image synthesis. In ICLR, 2019.

- [6] Andrew Brock, Theodore Lim, James M Ritchie, and Nick Weston. Neural photo editing with introspective adversarial networks. In ICLR, 2017.

- [7] Ryan Dahl, Mohammad Norouzi, and Jonathon Shlens. Pixel recursive super resolution. In ICCV, pages 5439–5448, 2017.

- [8] Prafulla Dhariwal and Alexander Nichol. Diffusion models beat GANs on image synthesis. In NeurIPS, 2021.

- [9] Chao Dong, Chen Change Loy, Kaiming He, and Xiaoou Tang. Learning a deep convolutional network for image super-resolution. In ECCV, pages 184–199. Springer, 2014.

- [10] Chao Dong, Chen Change Loy, Kaiming He, and Xiaoou Tang. Image super-resolution using deep convolutional networks. IEEE transactions on pattern analysis and machine intelligence, 38(2):295–307, 2015.

- [11] Alexey Dosovitskiy, Lucas Beyer, Alexander Kolesnikov, Dirk Weissenborn, Xiaohua Zhai, Thomas Unterthiner, Mostafa Dehghani, Matthias Minderer, Georg Heigold, Sylvain Gelly, et al. An image is worth 16x16 words: Transformers for image recognition at scale. In ICLR, 2021.

- [12] Alaaeldin El-Nouby, Shikhar Sharma, Hannes Schulz, Devon Hjelm, Layla El Asri, Samira Ebrahimi Kahou, Yoshua Bengio, and Graham W Taylor. Tell, draw, and repeat: Generating and modifying images based on continual linguistic instruction. In ICCV, 2019.

- [13] Tsu-Jui Fu, Xin Wang, Scott Grafton, Miguel Eckstein, and William Yang Wang. SSCR: Iterative language-based image editing via self-supervised counterfactual reasoning. In EMNLP, 2020.

- [14] Tsu-Jui Fu, Xin Wang, Scott Grafton, Miguel Eckstein, and William Yang Wang. SSCR: Iterative language-based image editing via self-supervised counterfactual reasoning. In EMNLP, pages 4413–4422, 2020.

- [15] Ian Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, and Yoshua Bengio. Generative adversarial nets. In NeurIPS, 2014.

- [16] Jonathan Ho, Ajay Jain, and Pieter Abbeel. Denoising diffusion probabilistic models. In NeurIPS, 2020.

- [17] Jonathan Ho, Chitwan Saharia, William Chan, David J Fleet, Mohammad Norouzi, and Tim Salimans. Cascaded diffusion models for high fidelity image generation. Journal of Machine Learning Research, 2022.

- [18] Jonathan Ho and Tim Salimans. Classifier-free diffusion guidance. arXiv preprint arXiv:2207.12598, 2022.

- [19] Tero Karras, Timo Aila, Samuli Laine, and Jaakko Lehtinen. Progressive growing of GANs for improved quality, stability, and variation. In ICLR, 2018.

- [20] Tero Karras, Samuli Laine, and Timo Aila. A style-based generator architecture for generative adversarial networks. In CVPR, pages 4401–4410, 2019.

- [21] Kenan E. Ak, Ying Sun, and Joo-Hwee Lim. Learning cross-modal representations for language-based image manipulation. In 2020 IEEE International Conference on Image Processing (ICIP), 2020.

- [22] Gwanghyun Kim, Taesung Kwon, and Jong Chul Ye. Diffusionclip: Text-guided diffusion models for robust image manipulation. In CVPR, 2022.

- [23] Jiwon Kim, Jung Kwon Lee, and Kyoung Mu Lee. Accurate image super-resolution using very deep convolutional networks. In CVPR, pages 1646–1654, 2016.

- [24] Jin-Hwa Kim, Nikita Kitaev, Xinlei Chen, Marcus Rohrbach, Byoung-Tak Zhang, Yuandong Tian, Dhruv Batra, and Devi Parikh. CoDraw: Collaborative drawing as a testbed for grounded goal-driven communication. In ACL, 2019.

- [25] Jin-Hwa Kim, Nikita Kitaev, Xinlei Chen, Marcus Rohrbach, Byoung-Tak Zhang, Yuandong Tian, Dhruv Batra, and Devi Parikh. CoDraw: Collaborative drawing as a testbed for grounded goal-driven communication. In ACL, pages 6495–6513, 2019.

- [26] Christian Ledig, Lucas Theis, Ferenc Huszár, Jose Caballero, Andrew Cunningham, Alejandro Acosta, Andrew Aitken, Alykhan Tejani, Johannes Totz, Zehan Wang, et al. Photo-realistic single image super-resolution using a generative adversarial network. In CVPR, pages 4681–4690, 2017.

- [27] Daniel Levy, Matthew D. Hoffman, and Jascha Sohl-Dickstein. Generalizing hamiltonian monte carlo with neural networks. In ICLR, 2018.

- [28] Bowen Li, Xiaojuan Qi, Thomas Lukasiewicz, and Philip HS Torr. Manigan: Text-guided image manipulation. In CVPR, pages 7880–7889, 2020.

- [29] Hongyu Liu, Bin Jiang, Yibing Song, Wei Huang, and Chao Yang. Rethinking image inpainting via a mutual encoder-decoder with feature equalizations. In ECCV, pages 725–741. Springer, 2020.

- [30] Nan Liu, Shuang Li, Yilun Du, Antonio Torralba, and Joshua B Tenenbaum. Compositional visual generation with composable diffusion models. In ECCV, pages 423–439. Springer, 2022.

- [31] Ilya Loshchilov and Frank Hutter. Decoupled weight decay regularization. In ICLR, 2019.

- [32] Andreas Lugmayr, Martin Danelljan, Luc Van Gool, and Radu Timofte. Srflow: Learning the super-resolution space with normalizing flow. In ECCV, pages 715–732. Springer, 2020.

- [33] Eric Luhman and Troy Luhman. Knowledge distillation in iterative generative models for improved sampling speed. arXiv preprint arXiv:2101.02388, 2021.

- [34] Shoya Matsumori, Yuki Abe, Kosuke Shingyouchi, Komei Sugiura, and Michita Imai. Lattegan: Visually guided language attention for multi-turn text-conditioned image manipulation. IEEE Access, 2021.

- [35] Sachit Menon, Alexandru Damian, Shijia Hu, Nikhil Ravi, and Cynthia Rudin. Pulse: Self-supervised photo upsampling via latent space exploration of generative models. In CVPR, pages 2437–2445, 2020.

- [36] Takeru Miyato, Toshiki Kataoka, Masanori Koyama, and Yuichi Yoshida. Spectral normalization for generative adversarial networks. In ICLR, 2018.

- [37] Seonghyeon Nam, Yunji Kim, and Seon Joo Kim. Text-adaptive generative adversarial networks: manipulating images with natural language. In NeurIPS, 2018.

- [38] Kamyar Nazeri, Eric Ng, Tony Joseph, Faisal Z Qureshi, and Mehran Ebrahimi. Edgeconnect: Generative image inpainting with adversarial edge learning. arXiv preprint arXiv:1901.00212, 2019.

- [39] Alex Nichol, Prafulla Dhariwal, Aditya Ramesh, Pranav Shyam, Pamela Mishkin, Bob McGrew, Ilya Sutskever, and Mark Chen. Glide: Towards photorealistic image generation and editing with text-guided diffusion models. In ICML, 2022.

- [40] Alexander Quinn Nichol and Prafulla Dhariwal. Improved denoising diffusion probabilistic models. In ICML, 2021.

- [41] Aaron van den Oord, Yazhe Li, and Oriol Vinyals. Representation learning with contrastive predictive coding. arXiv preprint arXiv:1807.03748, 2018.

- [42] Niki Parmar, Ashish Vaswani, Jakob Uszkoreit, Lukasz Kaiser, Noam Shazeer, Alexander Ku, and Dustin Tran. Image transformer. In ICML, pages 4055–4064. PMLR, 2018.

- [43] Adam Paszke, Sam Gross, Francisco Massa, Adam Lerer, James Bradbury, Gregory Chanan, Trevor Killeen, Zeming Lin, Natalia Gimelshein, Luca Antiga, et al. Pytorch: An imperative style, high-performance deep learning library. In NeurIPS, 2019.

- [44] Deepak Pathak, Philipp Krahenbuhl, Jeff Donahue, Trevor Darrell, and Alexei A Efros. Context encoders: Feature learning by inpainting. In CVPR, pages 2536–2544, 2016.

- [45] Alec Radford, Jong Wook Kim, Chris Hallacy, Aditya Ramesh, Gabriel Goh, Sandhini Agarwal, Girish Sastry, Amanda Askell, Pamela Mishkin, Jack Clark, et al. Learning transferable visual models from natural language supervision. In ICML, 2021.

- [46] Alec Radford, Karthik Narasimhan, Tim Salimans, and Ilya Sutskever. Improving language understanding by generative pre-training. OpenAI, 2018.

- [47] Aditya Ramesh, Prafulla Dhariwal, Alex Nichol, Casey Chu, and Mark Chen. Hierarchical text-conditional image generation with clip latents. arXiv preprint arXiv:2204.06125, 2022.

- [48] Yurui Ren, Xiaoming Yu, Ruonan Zhang, Thomas H Li, Shan Liu, and Ge Li. Structureflow: Image inpainting via structure-aware appearance flow. In ICCV, pages 181–190, 2019.

- [49] Robin Rombach, Andreas Blattmann, Dominik Lorenz, Patrick Esser, and Björn Ommer. High-resolution image synthesis with latent diffusion models. In CVPR, 2022.

- [50] Olaf Ronneberger, Philipp Fischer, and Thomas Brox. U-net: Convolutional networks for biomedical image segmentation. In Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, October 5-9, 2015, Proceedings, Part III 18, pages 234–241. Springer, 2015.

- [51] Chitwan Saharia, William Chan, Huiwen Chang, Chris Lee, Jonathan Ho, Tim Salimans, David Fleet, and Mohammad Norouzi. Palette: Image-to-image diffusion models. In ACM SIGGRAPH 2022 Conference Proceedings, 2022.

- [52] Chitwan Saharia, William Chan, Saurabh Saxena, Lala Li, Jay Whang, Emily Denton, Seyed Kamyar Seyed Ghasemipour, Burcu Karagol Ayan, S Sara Mahdavi, Rapha Gontijo Lopes, et al. Photorealistic text-to-image diffusion models with deep language understanding. arXiv preprint arXiv:2205.11487, 2022.

- [53] Chitwan Saharia, Jonathan Ho, William Chan, Tim Salimans, David J Fleet, and Mohammad Norouzi. Image super-resolution via iterative refinement. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2022.

- [54] Tim Salimans, Ian Goodfellow, Wojciech Zaremba, Vicki Cheung, Alec Radford, and Xi Chen. Improved techniques for training gans. In NeurIPS, 2016.

- [55] Tim Salimans and Jonathan Ho. Progressive distillation for fast sampling of diffusion models. In ICLR, 2022.

- [56] Tim Salimans, Diederik Kingma, and Max Welling. Markov chain monte carlo and variational inference: Bridging the gap. In ICML, pages 1218–1226. PMLR, 2015.

- [57] Konstantin Shmelkov, Cordelia Schmid, and Karteek Alahari. How good is my GAN? In ECCV, pages 213–229, 2018.

- [58] Jascha Sohl-Dickstein, Eric Weiss, Niru Maheswaranathan, and Surya Ganguli. Deep unsupervised learning using nonequilibrium thermodynamics. In ICML, 2015.

- [59] Jiaming Song, Chenlin Meng, and Stefano Ermon. Denoising diffusion implicit models. In ICLR, 2021.

- [60] Jiaming Song, Chenlin Meng, and Stefano Ermon. Denoising diffusion implicit models. In ICLR, 2021.

- [61] Jiaming Song, Shengjia Zhao, and Stefano Ermon. A-NICE-MC: Adversarial training for MCMC. In NeurIPS, 2017.

- [62] Yang Song and Stefano Ermon. Generative modeling by estimating gradients of the data distribution. In NeurIPS, 2019.

- [63] Yang Song, Jascha Sohl-Dickstein, Diederik P Kingma, Abhishek Kumar, Stefano Ermon, and Ben Poole. Score-based generative modeling through stochastic differential equations. In ICLR, 2021.

- [64] Yuhang Song, Chao Yang, Yeji Shen, Peng Wang, Qin Huang, and C.-C. Jay Kuo. Spg-net: Segmentation prediction and guidance network for image inpainting. In British Machine Vision Conference 2018, BMVC 2018, Newcastle, UK, September 3-6, 2018, page 97. BMVA Press, 2018.

- [65] Nam Vo, Lu Jiang, Chen Sun, Kevin Murphy, Li-Jia Li, Li Fei-Fei, and James Hays. Composing text and image for image retrieval-an empirical odyssey. In CVPR, pages 6439–6448, 2019.

- [66] Hao Wang, Guosheng Lin, Steven CH Hoi, and Chunyan Miao. Cycle-consistent inverse gan for text-to-image synthesis. In Proceedings of the 29th ACM International Conference on Multimedia, pages 630–638, 2021.

- [67] Wei Wang, Ming Yan, and Chen Wu. Multi-granularity hierarchical attention fusion networks for reading comprehension and question answering. In ACL, 2018.

- [68] Xintao Wang, Ke Yu, Shixiang Wu, Jinjin Gu, Yihao Liu, Chao Dong, Yu Qiao, and Chen Change Loy. ESRGAN: Enhanced super-resolution generative adversarial networks. In Proceedings of the European Conference on Computer Vision (ECCV) Workshops, 2018.

- [69] Yi Wang, Xin Tao, Xiaojuan Qi, Xiaoyong Shen, and Jiaya Jia. Image inpainting via generative multi-column convolutional neural networks. In NeurIPS, 2018.

- [70] Zhaowen Wang, Ding Liu, Jianchao Yang, Wei Han, and Thomas Huang. Deep networks for image super-resolution with sparse prior. In ICCV, pages 370–378, 2015.

- [71] Thomas Wolf, Lysandre Debut, Victor Sanh, Julien Chaumond, Clement Delangue, Anthony Moi, Pierric Cistac, Tim Rault, Rémi Louf, Morgan Funtowicz, Joe Davison, Sam Shleifer, Patrick von Platen, Clara Ma, Yacine Jernite, Julien Plu, Canwen Xu, Teven Le Scao, Sylvain Gugger, Mariama Drame, Quentin Lhoest, and Alexander M. Rush. Huggingface’s transformers: State-of-the-art natural language processing. ArXiv, 2019.

- [72] Zhaoyi Yan, Xiaoming Li, Mu Li, Wangmeng Zuo, and Shiguang Shan. Shift-net: Image inpainting via deep feature rearrangement. In ECCV, pages 1–17, 2018.

- [73] Yanhong Zeng, Jianlong Fu, Hongyang Chao, and Baining Guo. Learning pyramid-context encoder network for high-quality image inpainting. In CVPR, pages 1486–1494, 2019.

- [74] Tianhao Zhang, Hung-Yu Tseng, Lu Jiang, Weilong Yang, Honglak Lee, and Irfan Essa. Text as neural operator: Image manipulation by text instruction. In Proceedings of the 29th ACM International Conference on Multimedia, pages 1893–1902, 2021.

- [75] Shizhan Zhu, Raquel Urtasun, Sanja Fidler, Dahua Lin, and Chen Change Loy. Be your own prada: Fashion synthesis with structural coherence. In ICCV, pages 1680–1688, 2017.

- [76] C Lawrence Zitnick and Devi Parikh. Bringing semantics into focus using visual abstraction. In CVPR, pages 3009–3016, 2013.

Appendix A Demo Cases from CoDraw

Appendix B Demo Cases from i-CLEVR