A Differentially Private Framework for Deep Learning with Convexified Loss Functions

Abstract

Differential privacy (DP) has been applied in deep learning for preserving privacy of the underlying training sets. Existing DP practice falls into three categories [1] – objective perturbation (injecting DP noise into the objective function), gradient perturbation (injecting DP noise into the process of gradient descent) and output perturbation (injecting DP noise into the trained neural networks, scaled by the global sensitivity of the trained model parameters). They suffer from three main problems. First, conditions on objective functions limit objective perturbation in general deep learning tasks. Second, gradient perturbation does not achieve a satisfactory privacy-utility trade-off due to over-injected noise in each epoch. Third, high utility of the output perturbation method is not guaranteed because of the loose upper bound on the global sensitivity of the trained model parameters as the noise scale parameter. To address these problems, we analyse a tighter upper bound on the global sensitivity of the model parameters. Under a black-box setting, based on this global sensitivity, to control the overall noise injection, we propose a novel output perturbation framework by injecting DP noise into a randomly sampled neuron (via the exponential mechanism) at the output layer of a baseline non-private neural network trained with a convexified loss function. We empirically compare the privacy-utility trade-off, measured by accuracy loss to baseline non-private models and the privacy leakage against black-box membership inference (MI) attacks [2], between our framework and the open-source differentially private stochastic gradient descent (DP-SGD) approaches on six commonly used real-world datasets. The experimental evaluations show that, when the baseline models have observable privacy leakage under MI attacks, our framework achieves a better privacy-utility trade-off than existing DP-SGD implementations, given an overall privacy budget for a large number of queries.

Index Terms:

Differential privacy, Membership inference attack, Neural networks, Convexified loss function.1 Introduction

Membership Inference (MI) attacks pose serious privacy risks to Machine Learning-as-a-Service (MLaaS). In a nutshell, MI attacks apply a binary classifier on the prediction vectors (outputs) of data samples obtained from a non-private machine learning (ML) model to infer whether the data samples are members of the training set or not. In this paper, we assume the baseline ML model is a deep neural network (DNN). There are two general types of MI attacks, black-box MI attacks [2] and white-box MI attacks [3], depending on the adversary’s access to the target ML model trained on some training set. Compared to the white-box MI attacks, the black-box attacks require less [3] (or even no [4]) auxiliary information about the online MLaaS, which does not release model details but only the queried prediction output. Our focus is on providing privacy against black-box MI attacks.

To prevent membership disclosure, differential privacy (DP) [5] is a promising technique, which (informally) shields the existence of any arbitrary data sample in a dataset, thereby preserving membership privacy for each member in the training set. There are three broad categories of applying DP into deep learning – objective perturbation, gradient perturbation and output perturbation – according to Jayaraman et al. [1]. In particular, objective perturbation injects DP noise into the objective function of a learning task [6]; gradient perturbation injects DP noise into each epoch during gradient descent [7]; output perturbation injects DP noise into the elements (edges or nodes) of a trained non-private neural network [8, 9] or into the prediction results following the sample-and-aggregate mechanism of DP [10]. Due to the non-convexity of the loss function, applying objective perturbation and output perturbation mainly rely on convexification techniques. For example, Phan et al. [11, 12] convexify the loss function of convolutional deep belief networks [13], then inject DP noise into the coefficients via the functional mechanism (originally for non-deep learning tasks) [6]. More generally, we could implement output perturbation by training a baseline non-private neural network with a universal convexified loss function [14, 15], then injecting DP noise into the elements of the trained network.

However, there are some weaknesses that limit the application and performance of existing DP approaches to deep learning. Objective perturbation approaches (combining convexified loss function [11, 12] and DP objective function [6]) only work for a specified learning task – convolutional deep belief network [13], which makes it hard to apply it to general deep learning tasks. The gradient perturbation approaches (including using different DP variants and composition theorems) suffer from over-injected noise, mainly since the overall noise injection depends on the number of training epochs, which are usually large in deep learning [16]. PATE [10], the only work implementing sample and aggregate mechanism of DP for output perturbation, works in a special configuration requiring additional publicly available data to assist differentially private aggregation of the distributed learning outputs. The output perturbation framework (universal convexification plus DP noise injection) relies on tight upper bound on the DP noise scale parameter (the global sensitivity). Existing theoretical results [8, 9] provide loose upper bounds assuming convexity of the loss function. To tighten their results, more conditions should be introduced in addition to convex loss function, such as normalised training sets with binary classes [8] or smooth loss functions with a decreasing step size during the process of stochastic gradient descent (SGD) [9]. These conditions on existing upper bounds are shown in Table I.

(-Lipschitz loss functions, -strongly convex regularisers, : constant SGD step, : number of classes,

: maximum norm of a data sample in the training data space, : size of the training set.)

Contributions. To address the problems in existing DP practice in deep learning, we propose a novel DP framework in the black-box setting. We summarise our main contributions below.

-

1.

We mathematically analyse a tighter upper bound on the global sensitivity of the trained model parameters, which, like existing upper bounds, assumes a convex loss function, but without other conditions (see Table I for a brief comparison). In contrast to existing works, we bound the maximum change of the model parameters by analysing the stability [17] of a trained model after removing an arbitrary data sample from a training set.

-

2.

We propose a novel framework in the black-box setting, where DP noise is injected into an individual neuron at the output layer of a trained neural network. Specifically, at the training stage (for a baseline non-private model), we apply a universal convexification of the loss function [14, 15] to meet the convexity condition. At the prediction stage (for a differentially private prediction probability vector), we first sample a neuron with the exponential mechanism of DP (Exp-DP) [18] at the output layer, and then inject DP noise into the sampled neuron, where we scale the noise to the global sensitivity of an individual neuron (bounded by the global sensitivity of the trained model parameters).

-

3.

We empirically compare the privacy-utility trade-off of our framework with existing open-source differentially private stochastic gradient descent (DP-SGD) approaches for classification on six commonly used real-world datasets, following the same experimental configurations as existing studies [2, 1]. The experimental results show that, when the baseline non-private models have observable privacy leakage under MI attacks, our framework achieves a better privacy-utility trade-off than existing DP-SGD implementations, given an overall privacy budget for a large number of queries, which is likely to be the size we expect in practice.

2 Related Work

Differentially private deep learning can be mainly categorised into objective perturbation, gradient perturbation and output perturbation [1]. The difference between the three categories is at the point where we inject DP noise. Objective perturbation injects DP noise into the objective function; gradient perturbation injects DP noise into the process of gradient descent; output perturbation injects DP noise into the output, i.e., the elements of a trained neural network.

There are two works for differentially private deep learning using objective perturbation [1]. Phan et al. [11, 12] replace the non-convex loss function with polynomial approximations via Taylor expansions, then inject DP noise into the coefficients of the approximate function via the functional mechanism [6]. However, this framework only works on a specific machine learning task – convolutional deep belief network [13], which makes it hard to apply it to general deep learning tasks. Additionally, it is hard to find the optimal polynomial degree to achieve an acceptable trade-off between utility and privacy in practice.

Gradient perturbation is the most used DP technique in deep learning (DP-SGD) [7]), including an open-source Python library provided by Google’s TensorFlow Privacy [19]. DP-SGD perturbs the minibatch stochastic optimisation process, i.e., injecting DP noise into the gradients of each epoch during the training process. Specifically, in each epoch, DP-SGD bounds the global sensitivity of the gradients (the maximum change of the gradients by an individual data samples) by norm clipping. However, since DP-SGD injects noise into all the epochs, the overall injected noise is highly depending on the number of training epochs. If this number is large, which it usually is, DP-SGD would finally inject too much noise.

For output perturbation, there are two main streams of techniques. One line of works applies the sample-and-aggregate mechanism of DP to produce differentially private prediction probability vectors. For example, Papernot et al. [10] propose a framework for differentially private aggregate machine learning, called Private Aggregation of Teacher Ensembles (PATE). Specifically, PATE splits the private dataset into several (at least 100 reported in [10]) disjoint subsets which are inputs for training sub-models/teacher models. Those teacher models predict the labels of a publicly available data (following the same distribution as the original dataset). Then PATE injects DP noise into the count of each predicted label (vote histogram), takes the label having maximum noisy count as the label. Such noisy labelled data are then used to train a student model, which provides differentially private prediction (MLaaS) for new observations. However, PATE may not always be realistic in real-world applications [16]. First, data curators may not have a large amount of private dataset to split, such that each subset is large enough to train a model. Second, data curators may not have any publicly accessible dataset to train the student model. Third, in case there is no such publicly accessible dataset, data curators may use Generative Adversarial Network (GAN) [20] to generate artificial datasets; however, this further introduces additional computational costs, as well as potential impact on utility.

The other line of work in output perturbation is to inject DP noise into the baseline non-private neural networks trained with a convexified loss function. To do so, we should analyse the upper bound on the global sensitivity of the trained model parameters for noise injection. There are two theoretical results [8, 9] for this upper bound, subject to the convexity of the loss function. To tighten these bounds, more conditions need to be introduced, such as normalised training data for binary classification tasks [8] or smooth loss function with decreasing step size during the SGD process [9]. However, these additional conditions may not always fit real-world machine learning tasks.

Based on the above analyses, we conclude that existing DP approaches in objective perturbation, gradient perturbation and output perturbation suffer from three problems. First, conditions on objective functions limit the objective perturbation in general deep learning tasks. Second, gradient perturbation does not achieve a satisfactory privacy-utility trade-off due to over-injected noise in each epoch. Third, the utility of output perturbation is not guaranteed because of loose upper bounds on the global sensitivity of the trained model parameters. Therefore, in this paper, we aim to provide a DP framework of output perturbation to control the overall noise injection with a tighter upper bound on global sensitivity of model parameters, achieving a better trade-off between utility and privacy, in general deep learning tasks.

3 Preliminaries

A dataset is a collection of rows: , where each is from some fixed data domain. Two datasets and are called neighbouring datasets, denoted , if one is obtained from the other by adding or removing a single row. Given a dataset , represents the dataset , i.e., the neighbouring dataset of with an arbitrary row removed. Similarly, denotes the dataset , i.e., the row replaced with an arbitrary row . Note that the Hamming distance between and , and datasets and are not neighbouring datasets according to our definition.

3.1 Differential Privacy

Informally, differential privacy (DP) [5] ensures that two outputs, produced by a randomised algorithm on two neighbouring datasets, are almost indistinguishable.

Definition 1 (-DP [5]).

A randomised mechanism is -differentially private if for all neighbouring datasets and , , and for all outputs , satisfies:

| (1) |

where is the privacy budget.

Two prototypical -DP mechanisms considered in this paper are the Laplace mechanism (Lap-DP) [5] and the exponential mechanism (Exp-DP) [18]. The former adds additive noise drawn from the Laplace distribution of scale to the numeric computation , where is the global sensitivity of . The global sensitivity of is:

| (2) |

where is the -norm. For simplicity, in this paper, denotes the global sensitivity.

The Exp-DP mechanism is a weighted sampling scheme to generate the output from a set of (arbitrary) candidates, and is well-suited for non-numeric computations. The mechanism simply outputs a given candidate from a set of candidates computed over a dataset , with probability proportional to , where is the score/quality function of the candidate , and is the maximum possible change in over all neighbouring datasets.

An important property of differential privacy is that the mechanisms compose [21]:

Theorem 1 (Sequential Composition [21]).

Let provide -DP. Then the sequence of provides -DP.

The recently proposed -Gaussian DP (-GDP) [22], injecting noise following a Gaussian distribution , is a relaxation of traditional -DP. We have

Theorem 2.

[22] A mechanism is -GDP if and only if it is -DP, , where , is the cumulative distribution function of .

The composition of GDP is as follows.

Theorem 3 (Composition of GDP [22]).

The -fold composition of -GDP is -GDP, where each -GDP injects Gaussian noise following .

3.2 Machine Learning Basics

Let be a learning algorithm, be a training set drawn from a distribution (), be the output of the learning algorithm on the training set , i.e., trained model parameters, where is the number of model parameters. Once the model parameters are set after training, we predict the class label of an observation as , where , is the learnt hypothesis and is the number of classes.

We use a loss function on an observation to measure the empirical error of on . Note that each is a composition of a set of features and an accompanying class label. Accordingly, we have the following equation to measure the training error of the learning algorithm on the dataset . The learning algorithm aims to minimise the training error.

| (3) |

Measurement for overfitting. A fundamental issue to avoid in machine learning is overfitting of a learning algorithm. One way to measure overfitting of a learning algorithm is the notion of On-Average-Replace-One stability of a model [17]. That is, replacing a single data sample does not result in a large difference on the value of the loss function.

Definition 2 (On-Average-Replace-One Stability [17]).

Let be a monotonically decreasing function. We say that a learning algorithm is on-average-replace-one stable with rate if for every distribution ,

| (4) |

where , and is the uniform distribution over .

We extend On-Average-Replace-One stability to On-Average-Remove-One stability to fit the neighbouring dataset requirement of Definition 1.

Definition 3 (On-Average-Remove-One (OARO) Stability).

Given a monotonically decreasing function , an arbitrary distribution , then a learning algorithm is on-average-remove-one stable with rate if

| (5) |

where is the uniform distribution over .

Based on Inequality (5), a less indicates higher OARO stability.

Topology of deep neural networks. A deep neural network is a graph, , formed by connecting layers. A layer is a set of disconnected vertices, . Each vertex is a neuron in the neural network, one of the neurons serves as the bias term. The vertices set is a union of disjoint layers, and the edges set is the set of weighted edges connecting vertices in two adjacent layers. For example, an edge (with a weight ) connects and . We call input layer, hidden layers and output layer. Note that , where is the dimension of a data sample, , where is the number of classes, is the number of model parameters.

When using a trained neural network (trained model parameters/weights and given topology) to make prediction for a given data sample , we calculate the output of each vertex/neuron, at layer as follows.

| (6) |

where is an activation function for the neurons, and is also known as the bias term. At the output layer, the final prediction probability vector of the given data sample would be , where . In our experiments in Section 5, is Tanh for , is Softmax for .

Furthermore, we also use the concepts of convex set, convex function, -strong convexity and -Lipschitzness in this paper. We follow the standard definitions of these concepts.

Definition 4 (Convex set [17]).

is a convex set if and only if for any two vector , and an , we have .

Definition 5 (Convex function [17]).

A function is convex if is convex and for any two vectors and in , we have

| (7) |

Definition 6 (-Strong convexity [17]).

A convex function is -strongly convex if for any two vectors and in and , we have

| (8) |

Definition 7 (-Lipschitzness [17]).

A function is -Lipschitz over if for any two vectors and in X, we have

| (9) |

3.3 Membership Inference Attacks

The goal of Membership Inference (MI) Attacks is to infer the membership of a given data sample by the prediction output of the data sample. In this paper we specify MI attacks as black-box MI attacks implemented via the shadow model technique by Shokri et al. [2]. That is, the MI adversaries only have access to the distribution of the target/private dataset and the prediction output of a given data sample.

For simplicity, a black-box MI attack via shadow models is a function . The adversary performs a shadow models-based MI attack in three steps. Firstly, the adversary trains shadow models with shadow datasets , , mimicking the behaviour of the target model trained with the target/private dataset . Then the adversary uses the shadow models to make predictions for training data (members) and test data (non-members). These prediction vectors will then be the training data to train an attack model (a binary classifier). Finally, for a given data sample , the adversary queries the prediction probability vector of from the target model, then feeds to the trained attack model to predict the membership () of .

Table II summarises the notations used in this paper.

| Notation | Description |

|---|---|

| Learning algorithm | |

| Number of classes | |

| Data distribution | |

| Privacy budget | |

| Edge connecting and | |

| Neural network | |

| Strongly convex constant | |

| Loss function on | |

| Training error on | |

| Dimension of the training set | |

| , | Size of the training set |

| Lipschitzness constant | |

| Uniform distribution over | |

| Neurons set at layer | |

| , | Model parameters trained on |

| global sensitivity of weights vector | |

| global sensitivity of an individual weight | |

| Weight on edge | |

| th neuron at th layer in | |

| Training set | |

| Neighbouring dataset of | |

| Data sample (vector) of | |

| drawn from distribution | |

| global sensitivity of a neuron (output layer) | |

| distance between and | |

| Cardinality of a set |

4 Differential Privacy via Output Perturbation and Theoretical Results

In this section, we show our differentially private algorithm, then mathematically analyse the properties of the proposed approach including global sensitivity of model parameters and the effect of differential privacy against membership inference attacks.

4.1 Idea Overview

Based on our discussion in the related works section (Section 2), an output perturbation-based DP solution has one potential benefit over gradient and input perturbation-based approaches. In the latter two approaches, the entire privacy budget is consumed for model training. Any subsequent use of the trained model does not further consume the privacy budget due to the post-processing property of differential privacy. This, however, means that the utility offered is fixed for any number of queries; whether we ask 10 queries or 10,000 queries (a query means one data sample given as input to the model; the model in turn returns the corresponding probability vector). On the other hand, the output perturbation approach depletes budget per query to the trained model. This means that if the trained model is used for a fixed number of queries (as opposed to an unlimited number of queries), then we can offer better utility by virtue of allocating larger chunks of the budget per query. This motivates our focus on output perturbation.

In this section, we propose an output perturbation-based DP algorithm for general deep learning tasks. To provide a better privacy-utility trade-off, we inject DP noise into a randomly sampled neuron at the output layer. Figure 1 depicts our general idea, where neuron is the randomly sampled neuron and the DP noise follows the distribution . We shall use both the Laplace and the Gaussian mechanisms as examples of this distribution in our experiments, providing -DP and -GDP (or -DP) guarantees, respectively.

Specifically, our DP algorithm works in three steps. Firstly, for a given data sample, we feed this data sample to a trained non-private neural network to calculate the values of the neurons at the output layer. Next, we inject DP noise into a randomly sampled neuron at the output layer. After noise injection, we apply Softmax function on the noisy neuron vector to produce a differentially private probability vector. Algorithm 1 shows the implementation details, where Line 1 to Line 7 are the first step, Line 8 to Line 10 are the second step, and Line 11 to Line 13 are the third step. Note that, Algorithm 1 only introduces marginally more time than the non-private neural network: Lines 9 and 10 to the non-private neural networks. Sampling (Line 9) is efficiently implementable even with a large number of classes. The injected noise does not depend on the number of classes and the number of training data samples.

Also, since noise is not injected into the rest of the neurons in the neural network, the output perturbation technique is useful only in the black-box setting, where model parameters are not released. The key point of implementing Algorithm 1 is to find a tight upper bounds on for deep learning models. In the following sections, we show our upper bound on by analysing the upper bound on the global sensitivity of the model parameters, (which is tighter than existing results [8, 9] subject to the convexity of the loss function). We then show the DP guarantee of Algorithm 1 and the effect of DP against the black-box MI attacks.

4.2 Analysis of the Upper Bound on Global Sensitivity

This section analyses the upper bounds on the global sensitivity of trained model parameters and the global sensitivity of an individual neuron at the output layer. We analyse these upper bounds based on the fundamental properties of convex and strongly convex functions.

Lemma 1.

[17] The -norm regulariser is -strongly convex.

Lemma 2.

[17] Let function be convex, function be -strongly convex. Their linear composition is -strongly convex.

Lemma 3.

[17] Let be a minimiser of a -strongly convex function (), then for any , we have

| (10) |

Based on Lemma 1, Lemma 2 (when is the loss function and is the -norm regulariser) and Lemma 3, we have the following theorem to find the upper bound on the On-Average-Remove-One (OARO) stability (Definition 2) of the loss function and the global sensitivity of the model parameters, where we measure the global sensitivity of the model parameters by -norm (a.k.a. -sensitivity [21]).

Theorem 4.

Given a convex and -Lipschitz loss function and a -strongly convex regulariser, the upper bound on the global sensitivity of the model parameters (of the neural network) is ; the On-Average-Remove-One stability of the loss function is bounded by .

Proof.

Based on Lemma 1, Lemma 2 and Lemma 3, we have

| (11) | ||||

| (12) | ||||

| (13) | ||||

| (14) |

where Inequality (11) is based on Lemma 3; we change the measurement domain for the training error in Equation (12), such that one more term is added for each training error; since is the minimiser of , we have Inequality (13); Inequality (14), is a result of -Lipschitz loss function. Because , , we immediately have

| (15) |

Furthermore, since the loss function is -Lipschitz, we have

| (16) |

Since this inequality holds for any and (), we then have

| (17) |

We note that Chaudhuri et al. [8] provide a similar result to Theorem 4. However, their upper bound on the global sensitivity of the model parameters is under the condition of a binary classification task and a normalised training set (). In addition, Wu et al. [9] also provide the same upper bound on the global sensitivity of the model parameters as ours. However, their result needs the loss function to be -smooth with a decreasing step size during the process of stochastic gradient descent. Once we remove these additional conditions, the two upper bounds become loose. Table I in Section 1 shows a brief comparison between these upper bonds and their conditions. Compared to [8] and [9], when achieving the same tight bound, our result only relies on the convexity of the loss function but not the additional conditions introduced in [8, 9].

Since is the global sensitivity, we have , where is the number of edges in a deep neural network. Since each has the same upper bound , we have the global sensitivity (-norm) of an individual model parameter

| (18) |

Once we have the global sensitivity of each model parameter/weight, we can further analyse the upper bound on the global sensitivity of the neuron (input to the Softmax function) at the output layer.

Corollary 1.

Given the global sensitivity of an individual model parameter , a fully connected -layer neural network, , where the output layer has no activation function, the activation function at the hidden layers is bounded by , the global sensitivity of a neuron at the output layer is bounded by .

Proof.

Let be the value of an arbitrary neuron at the output layer , then we have , where is the incoming degree of in a fully connected neural network. Since the activation function is bounded by . We achieve the maximum difference between and when and , , that is

| (19) |

as required. ∎

In practice, some commonly used activation functions, such as, Tanh, Sigmoid, Binary step and Gaussian functions, provide . In our experiments (Section 5), we use Tanh function as the activation function in the hidden layers, following existing works [2, 4, 23, 1] in MI attacks.

Corollary 2.

Given the global sensitivity of an individual neuron at the output layer of a neural network , the upper bound on the global sensitivity of , , is , where is the prediction probability vector provided by the Softmax function.

Proof.

For an arbitrary neuron at the output layer, we have , where is the number of classes. Since , the global sensitivity of is

| (20) |

Since both and are less than , we have to conclude this corollary. ∎

Note that, when , becomes trivial, i.e., , which is the maximum change for a probability of neuron at the output layer). However, based on the way the Exp-DP behaves (in Algorithm 1), even if the bound on is trivial, the highest probability neuron will still have a relatively high probability of being sampled. That is, Algorithm 1 still guarantees the quality of the sampled neuron. Moreover, in Table VI in Section 5.4.3, we show that there are datasets where this upper bound on is non-trivial, and we therefore get even better utility.

4.3 Lipschitz Constant of the Cross-entropy Loss Function

In this section, we show how to calculate the upper bound on the Lipschitz constant , which is important when computing the upper bound on global sensitivities , and (see Equation (18)).

Lemma 4.

[24, 25] Given a fully connected neural network containing layers, , where the hidden layers apply -Lipschitz activation functions (e.g., ReLU, Tanh and Sigmoid), the output layer applies Softmax function, and is the values of neurons at Layer , the Lipschitz constant (with respect to the model parameters) of is bounded by .

Lemma 5.

[26] For a one-layer neural network with cross-entropy loss function, the Lipschitz constant of the cross-entropy loss function (with respect to the model parameters) is , where is the number of classes, is the number of the neurons at the input layer and is a given data sample.

Based on Lemma 4 and Lemma 5, we have Proposition 1 to calculate the upper bound on the Lipschitz constant of the cross-entropy loss function, which is used in our experiments and also a commonly used loss function in existing works [2, 4, 23, 1], for a -layer neural network.

Proposition 1.

Using cross entropy function as the loss function of the aforementioned -layer neural network, the upper bound on Lipschitz constant (with respect to the model parameters) is

| (21) |

where is the number of classes, is the number of neurons at layer and is the maximum absolute value of all the neurons at layer .

Based on Equation (21), the Lipschitz constant grows exponentially with the number of layers. However, when calculating the global sensitivities of the parameters , and , this exponential growth (in the number of layers and the maximum value of neuron per layer), is somewhat compensated by the terms and (the number of data samples in the training set), as can be seen by putting the value of from Equation (21) into Equation (18). This is also empirically demonstrated in Table VI in Section 5.4.3.

4.4 Convexified Loss Function

In our proofs of the upper bounds on the global sensitivities, a key assumption is that the objective function of the learning algorithm is a combination of a convex loss function and a -strongly convex regulariser. Lemma 1 guarantees the strong convexity for an -norm regulariser. However, loss functions of deep learning models are non-convex due to a large number of model parameters [27]. To circumvent this, we follow existing results in multi-layer (more than one hidden layers) neural networks convexification [14, 15] by risk-averse optimisation to convexify the non-convex loss functions.

Theorem 5.

So we have

| (23) |

where can be conventional/commonly used non-convex loss functions, such as quadratic loss and cross-entropy loss. We use the convexified loss function rather than the traditional non-convex loss function in the experimental evaluations.

Intuitively, the convexification constant might impact the trade-off between privacy and utility of the non-private model against MI attacks (since it might impact the performance/overfitting of the convexified loss function [23]). In this paper, we take as another hyper-parameter to the machine learning model; hence, studying the relationship between and the privacy-utility trade-off is left as future work.

4.5 Effect of Differential Privacy

In this section, we study the effect of differential privacy, including the DP guarantee of Algorithm 1 and the OARO stability of a DP neural network. In the -DP proof of the algorithm, we assume the Laplace mechanism as an instance of . But this guarantee can be converted into an -GDP guarantee by invoking the advanced composition theorem of -DP and subsequently applying Theorem 2. Thus, the result holds for both the Laplace and the Gaussian mechanisms.

Theorem 6.

The prediction probability vector of a given observation , produced by Algorithm 1 (injecting Laplace noise) is -differentially private, where is the number of classes.

Proof.

Let the activation function at the output layer be Softmax and the values of the neurons prior to feeding Softmax be , then we have the prediction probability vector , where and is the number of classes.

Consider two sets of model parameters and trained from two neighbouring dataset and , respectively. We run Algorithm 1 on and with the same privacy budget, the same data sample for prediction and the same topology of the neural network. Based on Algorithm 1, we analyse the DP guarantee for the weighted sampling step and the noise injection step below.

Let the score function of the Exp-DP for sampling the neuron be . Based on Exp-DP and Lemma 2, the sampling weights of a neuron is , where . Following the standard proof of the Exp-DP [18], we have

| (24) |

For a neuron where noise has been injected and an arbitrary , we have the worst-case upper bound on the probability ratio for ( is the noisy prediction probability vector) as follows.

| (25) |

For an arbitrary neuron where noise has not been injected (we inject noise into a neuron ) and an arbitrary , we have the worst-case upper bound on the probability ratio for ( is the noisy prediction probability vector) under the condition of and .

| (26) |

Then we give the upper bound on the privacy guarantee for the final prediction result.

| (27) |

This concludes the proof. ∎

Corollary 3.

A differentially private neural network is more On-Average-Remove-One stable than a non-private neural network.

Proof.

Injecting DP noise, either by objective perturbation [11, 12] or gradient perturbation (as DP-SGD [7]) or output perturbation (as Algorithm 1), could be modelled as modifying the model parameters to be . Note that is not a minimiser of , replacing in Inequality (11) by decreases the value of Inequality (11) and Equation (12). Following the same proof as Theorem 4, we have a tight upper bound for , say, with a , . Since we assume the loss function is -Lipschitz, we have

| (28) |

Since this is valid for any and , , , we immediately have

| (29) |

Since and the upper bound on is (Theorem 4), injecting DP noise makes the model more On-Average-Remove-One stable. ∎

5 Experimental Evaluation

In this section, we show experimental evaluations of the proposed algorithm. We start with a description of the datasets, the performance metrics, experimental configurations and finally evaluation results and analyses. We are providing an open-source implementation of our algorithm to aid future research.111See https://github.com/suluz/dp_ml_API

5.1 Datasets

In the experiments, we use the same datasets as Shokri et al. [2] and Jayaraman et al. [1], i.e., US Adult (Income)222http://archive.ics.uci.edu/ml/datasets/Adult, MNIST333http://yann.lecun.com/exdb/mnist/, Location (Bangkok restaurants check-ins)444https://sites.google.com/site/yangdingqi/home/foursquare-dataset, Purchases555https://www.kaggle.com/c/acquire-valued-shoppers-challenge/data, CIFAR666https://www.cs.toronto.edu/~kriz/cifar.html and Texas Hospital777https://www.dshs.texas.gov/THCIC/Hospitals/Download.shtm. Since these datasets are commonly used in the field of machine learning and MI attacks, we only show the statistics of them in Table III, where #Rec. is the number of records (randomly sampled from the raw datasets) and #Feat. is the number of features/attributes in training sets. For the details of these datasets, please refer to Section IV-a of [2] and Section 4.1 of [1].

| Dataset | #Rec. | #Feat. | #Classes | #Shadow Models |

|---|---|---|---|---|

| US Adult | 10,000 | 14 | 2 | 20 |

| MNIST | 10,000 | 784 | 10 | 50 |

| Location | 1,200 | 446 | 30 | 30 |

| Purchase-2 | 10,000 | 600 | 2 | 20 |

| Purchase-10 | 10,000 | 600 | 10 | 20 |

| Purchase-20 | 10,000 | 600 | 20 | 20 |

| Purchase-50 | 10,000 | 600 | 50 | 20 |

| Purchase-100 | 10,000 | 600 | 100 | 20 |

| CIFAR-10 | 10,000 | 3,072 | 10 | 100 |

| CIFAR-100 | 10,000 | 3,072 | 100 | 100 |

| Texas Hospital | 10,000 | 6,169 | 100 | 10 |

5.2 Performance Metrics

Metrics for overfitting of the baseline non-private models. In our experiments, we use the upper bound on the On-Average-Remove-One (OARO) Stability (Definition 3 and Equation (17) in Theorem 4) to measure the overfitting of baseline non-private models, that is,

| (30) |

where is the maximum Lipschitz constant on a given training set and a given neural networks, is the strongly convex constant shown in Lemma 1 and is the size of the training set. In practice, for a given training set and a given neural network, , and () are fixed and pre-determined. We further take the maximum value of at each layer to calculate the upper bound on Lipschitz constant by Equation (21) in Proposition 1. We then use this empirical maximum to calculate the upper bound on the global sensitivities. Note that, a tight upper bound on Inequality (30) indicates more OARO stable.

Metrics for DP models. Following existing studies [23, 1] on measuring DP performance against MI attacks, we use the same metrics in this paper, i.e., accuracy loss, a DP model’s accuracy loss on the test set with respect to the baseline non-private model and privacy leakage, the difference between the true positive rate and the false positive rate of the MI attacks (as binary classifiers). They are defined as follows.

| (31) | |||

| (32) |

where Baseline (S) indicates the baseline non-private model trained on the Surrogate loss function, and are the true positive and false positive of the MI attacks (specifying as the black-box shadow model-based approach from Shokri et al. [2] in this paper), P and N are positive/member and negative/non-member labels of a test data sample. Note that, based on Equation (31) and Equation (32) both Acc_Loss and Priv_Leak are in . indicates that a DP model achieved the maximum prediction accuracy, i.e., the same as the baseline model and indicates no prediction utility of a DP model. indicates no privacy leakage of a given model and indicates that a given model leaks the maximum privacy under MI attacks.

5.3 Experimental Configurations

Since existing upper bounds on the global sensitivity of the trained model parameters are looser than ours under the same condition – convexity of the loss function – our upper bound is expected to outperform existing upper bounds given the same output perturbation-based algorithm. Hence we do not perform experimental comparison between our upper bound and existing upper bounds. In our experiments, we compare the performance achieved by Algorithm 1 and Google’s open-source implementation [19] of DP-SGD approaches on the real-world datasets.

| Non-Private/DP-SGD/MI Shadow Models | ||||||

| #hidden neurons | reg. | learning rate | #hidden layer | optimiser | batch size | #epoch |

| 128 | 0.001 | 0.001 | 1 | ADMA | 100 | 100 |

| activation func. | loss func. | DP Implementation | ||||

| Tanh, Softmax | cross-entropy | [0.01, 1000] | RDP, zCDP, AC, NC | |||

| Convexification Constant ( in Equation (4.4)) | ||||||

| : Location, MNIST, US Adult, Purchase-2, CIFAR-100 | : CIFAR-10 | |||||

| : Texas Hospital | : Purchase-100, Purchase-50, Purchase-20, Purchase-10 | |||||

| MI Attack Models | ||||||

| #hidden neurons | reg. | learning rate | Other Configurations | |||

| 64 | 0.01 | The Same as MI Shadow Models | ||||

Hyper-parameters. In general, we mainly follow the configurations in existing studies on MI attacks (the shadow model-based approach from Shokri et al.) [2] and DP-SGD [1] but train baseline non-private models, MI shadow models and DP models with a convexified loss function. Specifically, for training baseline non-private models and MI shadow models [2], we keep the same configurations as Shokri et al. [2]; to implement the DP-SGD (injecting Gaussian noise) approaches, we call Google’s open-source tensorflow-privacy package following the same configurations as Jayaraman et al. [1]. We also follow the same computation as Jayaraman et al. [1] to plot the theoretical upper bound on the privacy leakage of -DP, which is . When plotting it, we bound it by the privacy leakage of the baseline non-private model. We empirically search the value of convexification constant ( in Equation (4.4)) in with a step of to ensure the convexified loss function achieves (almost) the same training and test accuracy as the original (non-convex) loss function. Table IV shows the detailed configurations, where RDP, zCDP, AC and NC represent Rényi DP [28], zero-Concentrated DP [29], DP with advanced composition [21] and DP with naïve composition [30], respectively, is the size of the training set.

Privacy budget. In our experiments, we report the average performance of all DP models with the same privacy budget answering a single query (the prediction vector of a single data sample). This is mostly for ease of presentation. See Section 5.4.5 for a discussion of how this can be expanded to multiple queries. We apply both Laplace and Gaussian mechanisms to generate DP noise for Algorithm 1. In particular, when applying Laplace distribution, according to the sequential composition of privacy budget (Theorem 1), we split the overall privacy budget for a single query as Theorem 6, that is, . When applying Gaussian distribution, according to the relationship between -GDP and -DP (Theorem 3) and the composition theorem proposed in Gaussian differential privacy (Theorem 3), we split the overall privacy budget as . When implementing DP-SGD, we use the source code of Jayaraman et al. [1] to split into each epoch. The privacy budget values in this paper are the same as Jayaraman et al. [1], i.e., .

Experimental setup. To evaluate the DP models (Algorithm 1 and DP-SGD models) on a given dataset with a privacy budget for a single data sample for prediction, we first randomly sample two disjoint sets having the same size from the dataset to be the training set and the test set. Using the training set, we train the baseline non-private model and the -DP-SGD models. We then implement Algorithm 1 with on the test set. We calculate the accuracy loss of each DP model based on the test accuracy obtained from the test set. Then we perform the MI attack via shadow models [2] to attack the baseline non-private model and the DP models to calculate the privacy leakage. Finally, we repeat the training and attacking process 10 times to report the average accuracy loss and privacy leakage.

5.4 Experimental Results and Analysis

In this section, we empirically study the non-private model and DP models on real-world datasets. We first report the performance, including training and test accuracy, OARO stability and privacy leakage, of the baseline non-private model using the convexified loss function. Then we present the comparison between Algorithm 1 and DP-SGD on accuracy loss and privacy leakage. Finally we analyse the experimental results (accuracy loss and privacy leakage) of the DP models (Algorithm 1 and existing DP-SGD) obtained on the real-world datasets.

5.4.1 Performance of the Convexified Loss Function

We compare the performance of models trained on the original non-convex loss function and on the convexified loss function with the same hyper-parameters and the same training and training sets. Table V shows the average training accuracy and the average test accuracy achieved on the real-world datasets of experiments.

(sorted in decreasing order of Acc. Gap/Priv. Leak. of overfitting and fitting models).

| Dataset | Original Loss Func. | Convexified Loss Func. | Priv. Leak. | OARO Stability | ||||

|---|---|---|---|---|---|---|---|---|

| Training Acc. | Test Acc. | Acc. Gap | Training Acc. | Test Acc. | Acc. Gap | |||

| Location | 1.0000 | 0.6068 | 0.3932 | 0.9916 | 0.6484 | 0.3432 | 0.3600 | 10.8532 |

| Texas Hospital | 0.7990 | 0.5689 | 0.2301 | 0.8770 | 0.5364 | 0.3406 | 0.2295 | 18.8945 |

| Purchase-100 | 0.9992 | 0.7985 | 0.2007 | 0.9942 | 0.7723 | 0.2219 | 0.1664 | 1.8193 |

| Purchase-50 | 0.9994 | 0.8636 | 0.1358 | 0.9985 | 0.8315 | 0.1670 | 0.1236 | 1.7827 |

| Purchase-20 | 0.9986 | 0.9022 | 0.0964 | 0.9796 | 0.8759 | 0.1037 | 0.0727 | 1.6750 |

| Purchase-10 | 0.9973 | 0.9203 | 0.0770 | 0.9735 | 0.8903 | 0.0832 | 0.0292 | 1.5036 |

| Purchase-2 | 0.9963 | 0.9642 | 0.0321 | 0.9951 | 0.9642 | 0.0309 | 0.0073 | 0.4641 |

| MNIST | 0.9863 | 0.9528 | 0.0335 | 0.9494 | 0.9297 | 0.0197 | 0.0035 | 1.9845 |

| US Adult | 0.8310 | 0.8300 | 0.0010 | 0.8260 | 0.8262 | 0.0002 | 0.0023 | 0.0109 |

| CIFAR-10 | 0.6198 | 0.4453 | 0.1745 | 0.5731 | 0.4236 | 0.1495 | 0.0111 | 7.7760 |

| CIFAR-100 | 0.3224 | 0.1677 | 0.1647 | 0.2026 | 0.1389 | 0.0637 | 0.0099 | 9.4090 |

As shown in Table V, the convexified loss function provides approximately the same model performance (training accuracy and test accuracy) as the original non-convex loss function. Such a result is also confirmed by the experimental analysis in [15]. Therefore, we can use the convexified loss function to provide reliable baseline non-private models to further analyse the performance of the DP models.

5.4.2 Performance of the On-Average-Remove-One Stability on Measuring Overfitting

In this section, we examine the performance of the OARO stability on measuring overfitting via checking the relationship between the OARO stability and two empirical rules of detecting overfitting [23], i.e., accuracy gap between the training accuracy and the test accuracy and privacy leakage under the MI attacks.

Table V and Figure 2 show that the OARO stability is significantly correlated to both the accuracy gap and the privacy leakage, when the training accuracy of a baseline non-private model is acceptable (test accuracy is greater than ). Specifically, in Figure 2a, the Pearson Correlation Coefficient is 0.8277 (p-value = 0.0059). In Figure 2b, the Pearson Correlation Coefficient is 0.7412 (p-value = 0.0223). In both Figures 2a and 2b, the Spearman’s rank Correlation Coefficient is 0.7333 (p-value = 0.0246), the Kendall’s rank Correlation Coefficient is 0.6667 (p-value = 0.0127). Therefore, we conclude that the OARO stability can estimate the overfitting of a model when the model has a high training accuracy, since we calculate the OARO stability during the training process. This could be a potential benefit to detect overfitting of a model when there are not enough data to prepare training sets to compare the accuracy gap.

vs. Acc. Gap

vs. Priv. Leak.

vs. Acc. Gap

5.4.3 Performance of the Differentially Private Models

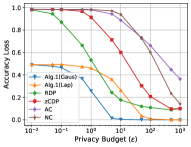

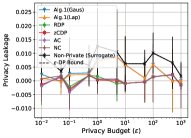

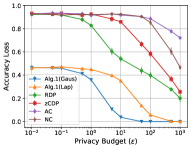

Figures 3 to 13 depict the accuracy loss and privacy leakage on the six real-world datasets, where Alg. 1 (Gaus) and Alg. 1 (Lap) represent Algorithm 1 implemented with Gaussian noise and Laplace noise, respectively. Table VI shows the Lipschitz constant and the global sensitivities we calculated and used in our experiments based on our theoretical results. We give our key findings below.

Finding 1: Avoiding overfitting is still the rule of thumb to mitigate the effect of MI attacks (via shadow models). Based on the observed accuracy gap and privacy leakage of the non-private models on different datasets (Figure 2c, where the Pearson correlation coefficient is 0.9592, Spearman’s rank and Kendall’s rank correlation coefficients are 1, the p-value of all the three correlation coefficient is 0), we have the same conclusion as existing works [23, 31, 32]. That is, when a model is not overfitting, the privacy leakage of the non-private model would be rather marginal (almost zero privacy leakage) against MI attacks.

Finding 2: The accuracy loss of Algorithm 1 follows a stable monotonic decreasing curve when tuning the privacy budget. This property gives us a predictable accuracy loss when configuring a specific value of the privacy budget to generate DP prediction. Such a stability is in two-fold. First, on a single query, the maximum accuracy loss of Algorithm 1 is about , which is much smaller than existing DP-SGD approaches. Second, the accuracy loss of Algorithm 1 (Gaussian noise) significantly decreases from its maximum value to when , which is the commonly used range for tuning the privacy budget in practice (for Laplace noise, ). We observe exceptions on Location (Figure 3) and Purchase-2 (Figure 9) datasets, where such ranges of the privacy budget are in and , respectively. Whereas, we cannot observe such a stable curve in existing DP-SGD approaches in any aspect, such as the maximum accuracy loss (varying from to on different datasets), range of privacy budget for decreasing the accuracy loss (varying from to on different datasets).

Finding 3: Algorithm 1 achieves a good privacy-utility trade-off when the privacy leakage of the baseline model does not approximately equal to 0. Specifically, in most datasets (except the two most fitted models on MNIST and US Adult datasets, Figures 10 and 11, where the privacy leakage is less than ), for a given privacy budget, Algorithm 1 (Gaussian noise and Laplace noise) provides the least accuracy loss and achieves the closest privacy leakage to the theoretical bound on -DP for a single query. When tuning the privacy budget to large values, say over (Gaussian noise) or (Laplace noise), Algorithm 1 could converge to the same privacy leakage as the non-private models in most of the datasets, which is expected as a DP algorithm.

Finding 4: Algorithm 1 (Laplace noise) achieves similar accuracy loss curve as Algorithm 1 (Gaussian noise) for the binary classes datasets. In the two binary classes datasets, Purchase-2 (Figure 9) and US Adult (Figure 11), the performance of injecting Gaussian noise and injecting Laplace noise is close to each other. Whereas, on the multi-class datasets, injecting Gaussian noise always outperforms Laplace noise injection on the privacy-utility trade-off.

Finding 5: For a given neural network topology, the upper bounds on the global sensitivities are highly depending to the number of classes and the number of records of the training set, rather than the Lipschitz constant. From the results reported in Table VI, the values of Lipschitz constant varies over different datasets; whereas the global sensitivities and remain relatively stable. Exceptions on the global sensitivities are US Adult, Purchase-2 and Location datasets, where the former two are binary classes datasets (where the global sensitivities are about half of the global sensitivities of the datasets having the same training set size) and the latter contains 1200 records (whose global sensitivities are about 10 times more than the global sensitivities of datasets having multiple classes).

| Dataset | () | |||

|---|---|---|---|---|

| US Adult | 0.1654 | 0.0015 | 0.1871 | 0.4538 (0.4538) |

| MNIST | 2.2274 | 0.0028 | 0.3577 | 1.0000 (1.0450) |

| Location | 1.8044 | 0.0244 | 3.1190 | 1.0000 (510.8338) |

| Purchase-2 | 1.0771 | 0.0016 | 0.2000 | 0.4918 (0.4918) |

| Purchase-10 | 1.9388 | 0.0028 | 0.3570 | 1.0000 (1.0421) |

| Purchase-20 | 2.0465 | 0.0029 | 0.3738 | 1.0000 (1.1119) |

| Purchase-50 | 2.1111 | 0.0029 | 0.3765 | 1.0000 (1.1234) |

| Purchase-100 | 2.1327 | 0.0029 | 0.3664 | 1.0000 (1.0809) |

| CIFAR-10 | 4.4091 | 0.0028 | 0.3594 | 1.0000 (1.0520) |

| CIFAR-100 | 4.8500 | 0.0030 | 0.3897 | 1.0000 (1.1802) |

| Texas Hospital | 6.8729 | 0.0031 | 0.3928 | 1.0000 (1.1937) |

5.4.4 Analysis of Experimental Results

The observations in Section 5.4.1 and Section 5.4.2, reflect the theoretical properties of the convexified loss function shown in [14, 15] and the OARO stability in Definition 3 and Corollary 3.

Findings 1, 2, 3, 4 and 5 in Section 5.4.3 are supported by the noise injection scheme of Algorithm 1, i.e., applying Exp-DP to sample an individual neuron for noise injection with a tight upper bound on the global sensitivity could provide a better privacy-utility trade-off for an output perturbation-based DP algorithm.

First, based on the measurement for model’s accuracy, injecting large positive noise into the neuron representing the accurate prediction class (or injecting small negative noise into the neurons representing the inaccurate prediction classes) would not impact the test accuracy of Algorithm 1. When , the sampling probabilities (applying Exp-DP) of all neurons at the output layer are similar. In this case, Algorithm 1, injecting noise following Laplace distribution or Gaussian distribution, gives the same (or similar) prediction as the baseline model half the time. Hence, we observe and from Algorithm 1 for . Once having a relatively greater privacy budget, the neuron representing the accurate prediction class would have much higher probability to be sampled, together with the tight upper bound on the global sensitivities, Algorithm 1 ensures a better privacy-utility trade-off for a single query.

Second, in the binary-class datasets, since there are only two neurons at the output layer, with high probability, the Exp-DP would sample the neuron representing the accurate prediction class. In this case, the amount of noise would not impact the final prediction outcome. Hence, we cannot observe significant differences between Gaussian noise and Laplace noise in binary-class datasets.

Third, as discussed in Section 4.3, roughly is the factor impacting the global sensitivities, where is the number of classes and is the number of training records. is a monotonically increasing function for and a monotonically decreasing function for . When (binary datasets) we get . On the other hand, when , we get . This implies that the global sensitivities obtained in US Adult and Purchase-2 datasets (binary class datasets) are about half of that in other datasets (, ) as can be seen in Table VI. On the other hand, the Location dataset is the only dataset having records, as compared to records for all other datasets (see Table III). As a result, the global sensitivities obtained in Location dataset are about 10 times than that in other datasets (, ).

5.4.5 Privacy Budget Consumption for Multiple Queries.

Since Algorithm 1 is based on output perturbation, it consumes privacy budget for each query (a single data sample for prediction), and hence can only be used for a fixed number of queries before exhausting the privacy budget. On the other hand, DP-SGD, as a gradient perturbation, consumes privacy budget during the training process, so it could accept an unlimited number of queries without further privacy budget consumption. However, we can always scale the privacy budget according to the number of queries we are willing to answer.

Based on the experimental results observed in Figures 3 to 6, we conclude that when the privacy leakage of a non-private model is no less than , given an overall privacy budget for multiple queries, Algorithm 1 (Gaussian) could answer a large number of queries (which is likely to be the size we expect in practice), while still outperforming DP-SGD in the privacy-utility trade-off. Note that while we report these results for single queries, we can use the same results assuming a larger number of queries for a fixed budget. For instance, consider the results on the Location dataset in Figure 3. If we have , and we would like to answer 1,000 queries, then we can look at the accuracy loss (privacy leakage) at in the figure, for the accuracy loss (privacy leakage) per query.

Thus, again on the Location dataset (Figure 3), given for queries, based on the sequential composition of privacy budget (Theorem 1), each single query consumes . At , Algorithm 1 (Gaussian) has about accuracy loss and privacy leakage, whereas the best DP-SGD (RDP) at (since DP-SGD does not consume privacy budget during the test/prediction phase) has about accuracy loss and privacy leakage. That is, Algorithm 1 has less accuracy loss and more privacy leakage, hence having a better privacy-utility trade-off in this example. For a larger number of queries (1,000 or 10,000) the privacy-utility loss is comparable to DP-SGD. Based on the analysis of Finding 2, for a single query, when , Algorithm 1 should still have and , then we could extend the number of queries answered by Algorithm 1 to be a large number in practice. Thus, the advantage of our method is that we can provide higher accuracy if the model is required to answer a small number of queries, which is not the case with input perturbation or gradient perturbation-based methods such as DP-SGD.

6 Conclusion

Concluding Remarks. In this paper we propose a framework that provides differentially private prediction probability vector for general deep learning tasks. Our approach only injects DP noise into one neuron (sampled with Exponential mechanism of differential privacy) at the output layer of a given neural network. To implement our approach, we mathematically analyse the upper bound on global sensitivity of an individual neuron via analysing a tighter upper bound on global sensitivity of the trained model parameters (than existing results). Our empirical studies show that our approach achieves a better trade-off between utility and privacy than existing DP-SGD approaches on six commonly used real-world datasets, given an overall privacy budget for a large number of queries.

Limitations and Future Work. Our approach only provides DP predictions before reaching a pre-defined number of queries, since we consume privacy budget per query. For an output perturbation-based solution, to answer an unlimited number of queries while guaranteeing DP, it requires injecting DP noise directly into all the trained model parameters. However, due to the complexity of the topology of neural networks, this would result in over-injected noise adversely impacting utility of prediction. Additionally, a tight upper bound on global sensitivity of the trained model parameters does not always give us a tight upper bound on global sensitivity of an individual neuron as shown in Section 4.2. Instead, experimental results show trivial upper bounds in most datasets (Table VI) having no greater than training data samples. To improve our results, i.e., answering more queries, we should consume less privacy budget per query by either exploring other noise injection schemes having better privacy budget composition or further tightening our upper bounds on the global sensitivity. This is an open question for future work.

Acknowledgements

We thank Bargav Jayaraman for clarifications on the use of their implementation of DP-SGD. This work was conducted with funding received from the Macquarie University Cyber Security Hub, in partnership with the Defence Science & Technology Group and Data61-CSIRO, through the Next Generation Technologies Fund. The experiments of this work was partially supported by the Australasian Leadership Computing Grants scheme, with computational resources provided by NCI Australia, an NCRIS enabled capability supported by the Australian Government. Hassan Jameel Asghar is the corresponding author.

References

- [1] B. Jayaraman and D. Evans, “Evaluating differentially private machine learning in practice,” in 28th USENIX Security Symposium (USENIX Security 19), 2019, pp. 1895–1912.

- [2] R. Shokri, M. Stronati, C. Song, and V. Shmatikov, “Membership inference attacks against machine learning models,” in 2017 IEEE Symposium on Security and Privacy (SP). IEEE, 2017, pp. 3–18.

- [3] M. Nasr, R. Shokri, and A. Houmansadr, “Comprehensive privacy analysis of deep learning: Stand-alone and federated learning under passive and active white-box inference attacks,” arXiv preprint arXiv:1812.00910, 2018.

- [4] A. Salem, Y. Zhang, M. Humbert, P. Berrang, M. Fritz, and M. Backes, “Ml-leaks: Model and data independent membership inference attacks and defenses on machine learning models,” arXiv preprint arXiv:1806.01246, 2018.

- [5] C. Dwork, F. McSherry, K. Nissim, and A. Smith, “Calibrating noise to sensitivity in private data analysis,” in Theory of cryptography. Springer, 2006, pp. 265–284.

- [6] J. Zhang, Z. Zhang, X. Xiao, Y. Yang, and M. Winslett, “Functional mechanism: Regression analysis under differential privacy,” Proceedings of the VLDB Endowment, vol. 5, no. 11, 2012.

- [7] M. Abadi, A. Chu, I. Goodfellow, H. B. McMahan, I. Mironov, K. Talwar, and L. Zhang, “Deep learning with differential privacy,” in Proceedings of the 2016 ACM SIGSAC conference on computer and communications security, 2016, pp. 308–318.

- [8] K. Chaudhuri, C. Monteleoni, and A. D. Sarwate, “Differentially private empirical risk minimization.” Journal of Machine Learning Research, vol. 12, no. 3, 2011.

- [9] X. Wu, F. Li, A. Kumar, K. Chaudhuri, S. Jha, and J. Naughton, “Bolt-on differential privacy for scalable stochastic gradient descent-based analytics,” in Proceedings of the 2017 ACM International Conference on Management of Data, 2017, pp. 1307–1322.

- [10] N. Papernot, S. Song, I. Mironov, A. Raghunathan, K. Talwar, and U. Erlingsson, “Scalable private learning with pate,” in International Conference on Learning Representations, 2018.

- [11] N. Phan, Y. Wang, X. Wu, and D. Dou, “Differential privacy preservation for deep auto-encoders: an application of human behavior prediction,” in Proceedings of the AAAI Conference on Artificial Intelligence, vol. 30, no. 1, 2016.

- [12] N. Phan, X. Wu, and D. Dou, “Preserving differential privacy in convolutional deep belief networks,” Machine learning, vol. 106, no. 9, pp. 1681–1704, 2017.

- [13] H. Lee, R. Grosse, R. Ranganath, and A. Y. Ng, “Convolutional deep belief networks for scalable unsupervised learning of hierarchical representations,” in Proceedings of the 26th annual international conference on machine learning, 2009, pp. 609–616.

- [14] J. T.-H. Lo, Y. Gui, and Y. Peng, “Overcoming the local-minimum problem in training multilayer perceptrons with the nrae training method,” in International Symposium on Neural Networks. Springer, 2012, pp. 440–447.

- [15] K. Dvijotham, M. Fazel, and E. Todorov, “Universal convexification via risk-aversion,” in Proceedings of the Thirtieth Conference on Uncertainty in Artificial Intelligence. AUAI Press, 2014, pp. 162––171.

- [16] F. Harder, M. Bauer, and M. Park, “Interpretable and differentially private predictions,” in Proceedings of the AAAI Conference on Artificial Intelligence, vol. 34, no. 04, 2020, pp. 4083–4090.

- [17] S. Shalev-Shwartz and S. Ben-David, Understanding machine learning: From theory to algorithms. Cambridge university press, 2014.

- [18] F. McSherry and K. Talwar, “Mechanism design via differential privacy,” in Foundations of Computer Science, 2007. 48th Annual IEEE Symposium on. IEEE, 2007, pp. 94–103.

- [19] G. Andrew, S. Chien, N. Papernot, and Anonymous_Contributors, “Tensorflow privacy,” https://github.com/tensorflow/privacy, 2020.

- [20] I. Goodfellow, J. Pouget-Abadie, M. Mirza, B. Xu, D. Warde-Farley, S. Ozair, A. Courville, and Y. Bengio, “Generative adversarial nets,” in Advances in neural information processing systems, 2014, pp. 2672–2680.

- [21] C. Dwork, A. Roth et al., “The algorithmic foundations of differential privacy.” Foundations and Trends in Theoretical Computer Science, vol. 9, no. 3-4, pp. 211–407, 2014.

- [22] J. Dong, A. Roth, and W. J. Su, “Gaussian differential privacy,” arXiv preprint arXiv:1905.02383, 2019.

- [23] S. Yeom, I. Giacomelli, M. Fredrikson, and S. Jha, “Privacy risk in machine learning: Analyzing the connection to overfitting,” in 2018 IEEE 31st Computer Security Foundations Symposium (CSF). IEEE, 2018, pp. 268–282.

- [24] A. Virmaux and K. Scaman, “Lipschitz regularity of deep neural networks: analysis and efficient estimation,” in Advances in Neural Information Processing Systems, vol. 31. Curran Associates, Inc., 2018, pp. 3835–3844.

- [25] H. Gouk, E. Frank, B. Pfahringer, and M. J. Cree, “Regularisation of neural networks by enforcing lipschitz continuity,” Machine Learning, pp. 1–24, 2020.

- [26] R. Yedida, “Finding a good learning rate,” https://beginningwithml.wordpress.com/2019/01/07/2-2-finding-a-good-learning-rate/, 2019.

- [27] Y. Bengio, N. L. Roux, P. Vincent, O. Delalleau, and P. Marcotte, “Convex neural networks,” in Advances in neural information processing systems, 2006, pp. 123–130.

- [28] I. Mironov, “Rényi differential privacy,” in 2017 IEEE 30th Computer Security Foundations Symposium (CSF). IEEE, 2017, pp. 263–275.

- [29] M. Bun and T. Steinke, “Concentrated differential privacy: Simplifications, extensions, and lower bounds,” in Theory of Cryptography Conference. Springer, 2016, pp. 635–658.

- [30] C. Dwork, K. Kenthapadi, F. McSherry, I. Mironov, and M. Naor, “Our data, ourselves: Privacy via distributed noise generation,” in Annual International Conference on the Theory and Applications of Cryptographic Techniques. Springer, 2006, pp. 486–503.

- [31] S. Truex, L. Liu, M. E. Gursoy, W. Wei, and L. Yu, “Effects of differential privacy and data skewness on membership inference vulnerability,” arXiv preprint arXiv:1911.09777, 2019.

- [32] S. M. Tonni, F. Farokhi, D. Vatsalan, D. Kaafar, Z. Lu, and G. Tangari, “Data and model dependencies of membership inference attack,” arXiv preprint arXiv:2002.06856, 2020.