∎

22email: [email protected] 33institutetext: Qiu DI 44institutetext: Google Research

44email: [email protected] 55institutetext: Lok Ming LUI 66institutetext: The Chinese University of Hong Kong

66email: [email protected]

A deep neural network framework for dynamic multi-valued mapping estimation and its applications ††thanks: This work is supported by HKRGC GRF (Project ID: 14305919).

Abstract

This paper addresses the problem of modeling and estimating dynamic multi-valued mappings. While most mathematical models provide a unique solution for a given input, real-world applications often lack deterministic solutions. In such scenarios, estimating dynamic multi-valued mappings is necessary to suggest different reasonable solutions for each input. This paper introduces a deep neural network framework incorporating a generative network and a classification component. The objective is to model the dynamic multi-valued mapping between the input and output by providing a reliable uncertainty measurement. Generating multiple solutions for a given input involves utilizing a discrete codebook comprising finite variables. These variables are fed into a generative network along with the input, producing various output possibilities. The discreteness of the codebook enables efficient estimation of the output’s conditional probability distribution for any given input using a classifier. By jointly optimizing the discrete codebook and its uncertainty estimation during training using a specially designed loss function, a highly accurate approximation is achieved. The effectiveness of our proposed framework is demonstrated through its application to various imaging problems, using both synthetic and real imaging data. Experimental results show that our framework accurately estimates the dynamic multi-valued mapping with uncertainty estimation.

Keywords:

dynamic multi-valued mapping, deep neural network framework, uncertainty estimation1 Introduction

Uncertainty is a significant challenge that arises when making predictions for various tasks, including pose estimation, action prediction, and clinical diagnosis. In many cases, obtaining an accurate and definitive solution is difficult due to missing information or noise. There can be multiple possible interpretations or solutions. Moreover, in complex real-world scenarios, the relationship between inputs and solutions becomes even more intricate. Different inputs may correspond to different numbers of potential solutions. For example, in natural language processing, a sentence may have two different meanings if contextual information is lacking, while another sentence may have three meanings. Similarly, in diagnosing lung lesions, different medical professionals may provide varying diagnoses for a patient’s CT scan showing potential lung damage. This variation in diagnosis can be attributed to incomplete information and the inherent uncertainties in medical imaging. If we consider all the diagnoses provided by different medical professionals as potential solutions, each scan can have a varying number of potential diagnoses (see Fig 1). Therefore, there is a need to develop an effective framework that can address the challenge of uncertainty estimation in real-life scenarios.

Mathematically, the aforementioned problems can be described as follows: Let and represent the input space and output space, respectively. We are provided with a collection of paired datasets , where is a possible output corresponding to . These paired datasets consist of unorganized pairs, allowing for flexibility as each collection may contain repetitions. Our objective is to find a suitable mapping that fits this dataset. However, the required mapping for this type of dataset is non-standard. For each , , where depends on and ’s are distinct for different . In other words, the number of plausible outputs associated different may vary, and hence is dynamic depending on . We refer to this type of mapping as a dynamic multi-valued mapping (DMM). To find an optimal DMM that fits the given dataset, we need to solve the following mapping problem:

| (1) |

Here, is a suitable loss function, such as the fidelity data loss, that depends on the datasets and applications. In addition to fitting the dataset, it is also desirable to estimate the probability of each plausible output. The likelihood is dependent on the occurrence of an output in the input dataset. However, an immediate challenge we face is how to mathematically model a DMM. Directly implementing such a mapping can be mathematically challenging, particularly when dealing with the diversity of . Motivated by this challenge, we are interested in developing a numerical framework to solve the dynamic multivariate mapping problem described above.

In many scenarios, the number of possible outputs is bounded by a fixed number . In other words, for each input , the number of potential outputs is always less than or equal to . In this case, a DMM can be viewed as a one-to- mapping with uncertainty estimation. To measure the likelihood of each plausible output, we introduce probability measure for each output while disregarding those with zero probabilities. This approach allows us to obtain a sequence of results with a dynamic number of elements , which depends on the input . Although the size of is constrained by a predefined value , we can effectively handle most real-world problems by selecting an appropriate value for . To model a one-to- mapping with uncertainty estimation, we can utilize a dictionary , also known as a codebook, which consists of a collection of finite variables. Thus, a DMM can be described by two bivariate functions: and . For each , represents a potential output associated with input , while represents the probability of generating such an output . Outputs with a probability of are discarded. This formulation allows us to effectively represent a DMM as two bivariate functions. In this work, our numerical framework to solve the DMM problem is based on this construction. For ease of computation, the bivariate functions and are parameterized by deep neural networks. Different parameterizations of the deep neural networks result in different and functions. Consequently, the optimal DMM can be obtained by optimizing the parameters of the deep neural networks.

Numerous studies have explored the use of auto-encoders to model multi-valued mappings by sampling and decoding latent codes from the latent space. Conditional generation has shown promise in producing multiple outcomes for various tasks. Noteworthy examples include the works of Zhu et al. zhu2017toward and Huang et al. huang2018multimodal , which focus on the task of multi-modal image-to-image translation. Additionally, Zheng et al. zheng2019pluralistic introduced a specialized approach for image inpainting. These methods demonstrate the capability to generate a variety of plausible outputs. However, these methods often lack uncertainty estimation for each plausible output, making them inadequate for solving the DMM problem. One notable exception is the Probabilistic U-Net proposed by Kohl et al. kohl2018probabilistic . Built upon the conditional VAE framework sohn2015learning , the Probabilistic U-Net allows for quantitative performance evaluation thanks to its application and associated datasets. Moreover, the Probabilistic U-Net outperforms many other methods in calibrated uncertainty estimation, including the Image2Image VAE of zhu2017toward . However, it inherits the Gaussian latent representation from CVAE sohn2015learning , which leads to the drawback of posterior collapse, resulting in wrong outputs in the generated samples. In our previous conference paper qiu2021modal , we proposed a preliminary method to address the challenges faced by auto-encoders in modeling multi-valued mappings. By utilizing a discrete representation space to approximate the multi-modal distribution of the output space, our preliminary method aimed to overcome the issue of posterior collapse and provide rough estimates of the conditional probability associated with each output. However, further analysis revealed several drawbacks. Firstly, the preliminary method exhibited repetitions in outputs, where the same output corresponded to different modes, rendering it unsuitable for representing a DMM. Secondly, the performance of the preliminary method suffered when dealing with imbalanced datasets, as the accuracy of the uncertainty estimation associated with different modes was often inaccurate. Lastly, the output results of the preliminary method were found to be inaccurate when dealing with imbalanced and unorganized dataset. These drawbacks make it challenging to use the preliminary method to represent a DMM.

In this work, building upon our previous preliminary model qiu2021modal , we develop a deep neural network framework that is capable of addressing the DMM problem. The bivariate functions and are parameterized by a deep generative network and a classification network , respectively. The generative network is responsible for generating multiple results as a one-to- mapping , while the classification network predicts the probabilities associated with these multiple results. The multiple outcomes of a given input are associated with a codebook, which is a set of discrete variables . Specifically, we utilize the generative network to generate multiple results based on the discrete variables . Simultaneously, our classification network predicts the probability for each variable , which serves as the probability for each outcome . There are two key challenges that need to be addressed in the proposed framework. Firstly, to ensure that the deep neural network framework accurately represents a DMM, it is essential that the outputs of the generative network differ for different code values . In other words, for each input , the mapping must be injective, guaranteeing the generation of a unique output for each code and preventing duplication. Additionally, another significant challenge is to enrich the codebook with essential information, allowing the generative network to produce diverse and accurate results, while also accurately predicting the probabilities associated with each outcome. To overcome these challenges, we propose a specialized loss function that incorporates the covariance loss and the ETF cross-entropy loss. This loss function enables the generation of diverse, plausible, and unique outputs for each code, as well as giving an accurate uncertainty estimation. Consequently, our framework effectively parameterizes a DMM with a dynamic range of plausible outputs for each input. Even when dealing with imbalanced data, our proposed framework can identify an optimal DMM that fits the given dataset. We evaluate the performance of our proposed framework on various imaging problems, including both synthetic and real images. Experimental results demonstrate the effectiveness of our model in solving dynamic multi-valued mapping problems across a range of imaging applications.

The rest of the paper is organized as follows. The section 2 outlines the primary contributions of this work. We introduce our general framework and model details in 3. In 4, real and synthetic datasets are used in model experiments, and some details are introduced. Finally, we conclude the paper in 5.

2 Contribution

The main contributions of this paper are listed as follows.

-

1.

We propose a notion of dynamic multi-valued mapping (DMM) and formulate a general optimization problem for DMM to address the challenge of computing multiple plausible solutions with uncertainty estimation in real-life scenarios.

-

2.

By considering a DMM by a -to- mapping with uncertainty estimation, a DMM is represented by two bi-variate functions, which are parameterized by deep neural networks. A specialized loss function based on the covariance loss and the incorporation of the ETF cross-entropy loss is proposed to train the deep neural networks. This enables the framework to be capable of representing a DMM, with dynamic number of plausible outputs.

-

3.

We apply the proposed framework to various practical imaging problems, demonstrating its efficacy and effectiveness in solving real-world challenges.

3 Proposed model

In this section, we will describe our proposed deep neural network framework for computing multiple plausible solutions with uncertainty estimation in real-life scenarios.

3.1 Mathematical formulation of dynamic multi-valued mapping problem

In this subsection, we will first provide a mathematical formulation of our proposed problem. Our objective is to develop a framework that suggests multiple plausible outputs and estimates their likelihood based on a given dataset. Let and represent the input space and output space, respectively. Suppose we are given a collection of paired datasets . Here, represents a plausible solution associated with the input . In practice, the datasets can be imbalanced and unorganized. For each , may be repeated for different values of . For example, when labeling the lesion position for ambiguous medical images, different medical experts may provide the same predictions. Consequently, this results in duplicated outputs within . On the other hand, quantifying the likelihood of each suggested output can be challenging. The extent of repetition in can provide valuable information for estimating the probability associated with each plausible output.

In this context, our proposed problem can be mathematically formulated as finding an appropriate mapping to fit the given dataset . The main challenge arises from the fact that each input can be associated with multiple plausible outputs. Furthermore, the number of plausible outputs corresponding to different inputs can vary, making it dynamic and dependent on . Traditional mappings between and are inadequate for fitting . To address this unique dataset, we introduce the concept of a dynamic multi-valued mapping (DMM) defined as follows:

Definition 1

Let and be two metric spaces. A dynamic multi-valued mapping (DMM) between and is a mapping , where denotes the power set of and

In other words, for each , a DMM maps to a non-empty and finite subset of . We assume that the number of plausible outputs for each input is finite, and that each input must be associated with at least one plausible output. These assumptions generally hold true in most imaging problems.

Our objective is to find an optimal DMM that accurately represents the dataset . Specifically, for each input , we aim to find an optimal such that is a subset containing all plausible outputs , ,…, . It is important to note that for each , the same plausible output may appear multiple times for different values of . Consequently, the cardinality of the subset is always less than or equal to . Additionally, it is desirable to estimate the likelihood of each plausible output by considering the extent of repetition of each output in . Next, we will discuss how we can mathematically formulate this problem.

For this purpose, the first task is to mathematically model a DMM. In most real-world scenarios, the number of plausible output can be bounded by a fixed number for all . That means the number of possible outputs for each input is at most . In this case, a DMM can be represented by a one-to- mapping or for , together with an uncertainty estimation . measures the probaility or likelihood of the solution . If , the output is discarded and we can simply set . The probability measure helps us to model the dynamic nature of . More specifically,

where is the cardinality of a set. Also, we require that if , and . This requirement is necessary to ensure that can effectively represent a DMM.

Under this setup, we can formulate our problem of fitting as an optimization problem over the space of DMMs that minimizes:

| (2) | ||||

| such that | ||||

where is the -th item of , is the data fitting term, and is the distance functions for index choice. is a fixed parameter. The objective aims to encourage the suggested solutions to closely match the given dataset by minimizing the discrepancy between the mapped outputs and the true values. Moreover, minimizing promotes larger values of when the mapped outputs appear more frequently in the paired dataset . This allows to capture the repetitive patterns in the dataset.

To ensure the capability of to represent a DMM, we impose a constraint on that for each , such that if , and both and . In order to effectively enforce this property, we leverage the concept of a codebook in formulating and . Let be a codebook, where each . We can now express and as bivariate functions:

By formulating and in this manner, we can optimize the codebook to control the properties of and , ensuring their suitability for representing a DMM. Our optimization can now be rewritten as finding two bivariate functions and by minimizing:

| (3) | ||||

| such that | ||||

Note that obtaining the code index from the codebook by traversing all the output results can introduce a significant computational burden, especially when dealing with complex output spaces. Additionally, the choice of distance function, denoted as , defined on the output space can greatly impact the performance of our model. If the structure of the output space is excessively complex or overly simplistic, it may lead to suboptimal results when using certain distance functions. To address these challenges, we introduce the concept of cluster mapping, denoted as . This mapping allows us to bypass the need for traversing the entire output space and instead focus on finding the most suitable distance metric within the discrete codebook, leading to improved efficiency and effectiveness. The problem of obtaining the index of code can then be formulated as follows:

| (4) |

Additionally, the codebook can also be simultaneously optimized with a suitable regularization to obtain the best collection of codes to capture multiple outputs. The final optimization problem can now be written as finding optimal , , and , which minimizes:

| (5) | ||||

| such that | ||||

where are weight parameters. is a regularization term to restrict . is the regularization term to control the property of .

The primary challenge lies in effectively modeling , , and in a way that enables simultaneous optimization of the multi-valued mapping and uncertainty estimation. To address this challenge, we adopt a strategy of formulating the problem using a deep neural network, whereby the optimization problem is solved through training the network parameters. The specific details of this approach will be elaborated upon in the subsequent subsection.

3.2 Deep neural network framework for DMM problem

As outlined in the previous subsection, the problem at hand can be formulated as an optimization problem involving three bi-variate mappings: , , and . In this work, we propose to parameterize these mappings using deep neural networks. In this subsection, we will provide a comprehensive explanation of our proposed deep neural network framework for the dynamic multi-valued mapping (DMM) problem.

3.2.1 Overall Network structure

Our objective is to develop a deep neural network framework to solve the optimization problem 5. To achieve this, we parameterize the mappings , , and using deep neural networks and respectively. The network structures are illustrated in the Fig. 2. The framework for formulating the DMM is shown in Fig. 3.

The deep neural network represents the bi-variate mapping . In other words, we have . It is depicted within the region bounded by the red dotted boundary in Fig. 2. The input is passed through an embedding network, which generates a latent representation . This latent representation captures the meaningful features of . Subsequently, the latent representation, along with the codebook , is fed into another deep generative network that produces plausible outputs . Here, we assume that the discrete codebook captures shared label information across different . It is worth noting that different parameters of the deep neural network result in different one-to- mappings. Within this framework, we can effectively search for the optimal by optimizing .

Similarly, the deep neural network represents the bi-variate mapping . In other words, . It is shown within the region bounded by the green dotted boundary in Fig. 2. The input is first passed through an embedding network, which produces a latent vector . By performing a matrix multiplication and applying a softmax operation, we obtain a probability vector . The output of estimates the probability for each plausible output . The choice of the fixed matrix is crucial for accurately predicting uncertainty estimation, and we will discuss this in detail later. In addition, we fuse the embedding networks for and as one named . This reduces the parameters of the model and improves the efficiency of training. For simplification, we abbreviate and to and respectively.

To solve the optimization problem 5, we introduce the cluster mapping . The cluster mapping takes and an associated plausible output from the dataset as the input and output a vector, which is of the same dimension of the code in the codebook. To parameterize , we utilize another deep neural network . In other words, . As shown within the region bounded by the blue dotted boundary in Fig. 2, and are fed into an embedding network to output a vector . With , we can find the code in closest to that solves 4.

Under this setting, all mappings , , and to be optimized are parameterized using deep neural networks. Therefore, they can be optimized by training the deep neural networks to obtain the optimal parameters that minimize a loss function defined by the energy functional in our optimization problem.

3.2.2 Optimization of discrete codebook

A crucial component of our proposed framework is the use of a discrete codebook to represent a one-to- mapping. For each , we can obtain plausible outputs: , , …, . Additionally, we can estimate the corresponding probabilities associated with each output: , , …, .

It is important to highlight that for our framework to effectively represent a DMM, the following condition must hold for any : if both and are non-zero, then . In other words, each code in the codebook should be associated with a distinct output for every . The choice of the codebook is therefore crucial in enforcing this requirement.

In our framework, for every , a code corresponds to a plausible output . It is important to note that , where usually the dimension of is significantly smaller than the dimension of the output space . Consequently, the generator produces plausible outputs that have a much higher dimension. The separability of the codes encourages the separability of the corresponding outputs . Conversely, if two codes and are close to each other in the codebook , the plausible outputs and will also be close to each other. This can hinder the capability of our framework to effectively represent a DMM.

In practice, although the discrete codebook inherently lends itself to modeling multi-modal label data , the occurrence of similar codes within the codebook during continuous updates can lead to highly similar results. To prevent this repetition and ensure diversity in the generated outcomes, it becomes crucial to maximize the separation between each code in the codebook .

To maximize the separation between each code in the codebook, our strategy is to reduce the mutual correlation among vectors in the codebook. For this purpose, we introduce the following covariance loss.

Definition 2

Let be a finite subset of with . The covariance loss with a threshold is defined as

| (6) |

where is the Frobenius norm, given by:

| (7) |

where and is the -th row -th column entry of . Thus, Also, , where is the number of non-zero entries of .

To understand the meaning of the covariance loss, observe that all diagonal entries of are equal to 1. Thus, has 0 on its diagonal. Each non-diagonal entry of represents the inner product between two distinct data points in . By applying a threshold to , we retain only the entries that exceed . These entries signify pairs of data points that are close to each other, as their inner product surpasses the threshold. The covariance loss measures the mean squared sum of all non-zero entries in . Minimizing the covariance loss aims to encourage a greater separation between the data points in . By this loss function, we are only concerned with those vectors that are not almost orthogonal, i.e., inner products greater than the threshold. It can help us focus on preventing the occurrence of similar codes within the selected codes. In particular, when , it implies that the inner product between any pair of data points in is below the threshold . This indicates that the separability of each pair of data points in satisfies a set tolerance, promoting greater distinctiveness among the data points.

Using , we can encourage the separation of the codes in the codebook . To achieve this, we first normalize the codes in the codebook to . By minimizing the covariance loss , we can effectively separate the normalized codes in the codebook . This, in turn, promotes the separability of the corresponding plausible outputs in the output space , ensuring a diverse set of generated results for each input .

Furthermore, choosing suitable parameters for the size of the codebook and the dimensionality of the codes is also important. For a set of unit vectors in , if , it is possible for their inner products to be all equal to zero, ensuring perfect orthogonality. However, in practical applications, the hyperparameters and of the codebook may require a broader range of choices to adapt to different datasets. It is not always the case that . In situations where , we require the variables in the codebook to be ”almost orthogonal.” The Kabatjanskii-Levenstein bound for almost orthogonal vectors tao2013almostorthogonal helps us to decide on appropriate choices for the values of , , and the threshold in such cases. This theorem provides theoretical guidance on the trade-offs between the codebook size , the code dimensionality , and the degree of orthogonality required, allowing us to configure these parameters effectively for different datasets.

Theorem 3.1

Let be unit vectors in such that for all distinct , , then we have for some absolute constant .

For the special case when the hyperparameter , we have the following theorem tao2013almostorthogonal :

Theorem 3.2

Let be unit vectors in such that for all distinct . Then, .

Therefore, in our case, we set the hyperparameter . Then, the threshold is chosen as . As a condition on the hyperparameter choices, we must have , where is the size of the codebook and is the dimensionality of the codes. In our work, we carefully select the values of and such that the separability tolerance can be achieved using this threshold. With these parameters, we will incorporate the thresholded covariance loss in the overall loss function for training the deep neural network to solve the optimization problem in 5. Our experimental results demonstrate the powerful impact of the covariance loss in improving the separability and diversity of the generated outputs.

In practice, the number of codes is often larger than the actual number of plausible outputs. This means that for all , resulting in some codes being unused and left idle. Initially, the codes are configured such that the inner products between distinct pairs are less than the threshold. However, when updating the codes that have been used, they tend to accumulate in similar positions, reducing their separability. Minimizing the covariance loss plays a crucial role in addressing this issue. When minimizing the covariance loss, only the active codes are modified, while the inner products involving non-active codes remain at 0 even after applying the threshold function . This ensures that the focus is on adjusting the positions of the codes that are actually contributing to the plausible outputs, enhancing their separability and promoting diversity in the output space.

Another important challenge to address is that when solving the optimization problem 5, the following condition 4 has to be considered:

During the training process, a data-label pair is randomly sampled from the unorganized dataset , and fed into the embedding network . Subsequently, the nearest code is selected from the codebook for the corresponding feature , as follows:

| (8) |

Note that the selected code replaces the original feature as the input for the generative network . However, this approach presents a potential challenge: there is no direct gradient of from the data fidelity term in the backward propagation process. To address this problem, we use a simple gradient approximation method, following the approach of van2017neural . The key idea is to copy the gradient of the selected code and assign it to the feature so that the parameters of the embedding network can be updated using the gradient information from the loss function. Specifically, in the forward pass, we directly input the feature representation to the generator . In the backward computation, we directly assign the gradient to the embedding network . To ensure that this gradient approximation is meaningful, we need to make the output as close as possible to the selected code . To achieve this, we add an extra regularization loss for to the overall loss function, given by:

where and sg is the stop-gradient operation (identity in the forward pass, zero derivative in the backward pass, and easily implemented in neural network algorithms). This regularization loss encourages the embedding network to output features that are close to the selected codes, without backpropagating gradients to the codebook itself.

The rationale behind this approach is that a learnable codebook, while exhibiting a slower learning capability, is required to capture more information from the data. Therefore, we propose to update the codebook using a dictionary learning algorithm, Vector Quantization (VQ) van2017neural , which computes the exponential moving average of the corresponding embedding network’s outputs. Our ablation analysis 10 has shown that a learnable codebook outperforms a fixed codebook.

3.2.3 Probability prediction

Another crucial component in our framework is the estimation of the probability associated with each plausible output. In practice, the ground truth probability of each plausible output associated with an input is not known. Our goal is to estimate these probabilities from the sample training dataset . For each input , recall that the collection of sampled plausible outputs can contain repeated values. For example, when labeling lesions of a medical image, different medical experts might provide the same label, resulting in repeated labels in the dataset. The more repetitions of a particular label, the higher the estimated probability associated with that plausible output. The intuition behind this approach is that the frequency of a plausible output in the sample dataset can serve as a proxy for its true probability. The more often a plausible output appears, the more likely it is to be the correct label for the given input. By leveraging this idea, we can estimate the probability distribution over the plausible outputs for each input, even though the ground truth probabilities are not known.

In our framework, the probability is parameterized by a deep neural network . Given an input from the training dataset, it is fed into the network to obtain a feature vector . Next, we compute a vector as , where is a suitable matrix. We then pass through a softmax operation to obtain the probability vector , where:

| (9) |

Here, represents the probability of the plausible output . If , the output is considered meaningless and can be ignored.

The choice of the matrix is crucial in this setup. The value of is related to the inner product between the -th row of and the vector . Specifically, will be larger if is closer to the -th row of . One possible approach is to learn the matrix during the training process, allowing the network to discover the optimal projection that captures the relationship between the input features and the plausible output probabilities.

However, when training on an imbalanced dataset, the learnable vectors of the minority classes may collapse, a phenomenon known as “minority collapse”. To alleviate this issue, we define using the simplex equiangular tight frame (ETF) papyan2020prevalence . This results in a fixed ETF classifier, as proposed in yang2022we .

The simplex equiangular tight frame (ETF) is formally defined as follows:

Definition 3

(Simplex Equiangular Tight Frame) A collector of vectors , is said to be a simplex equiangular tight frame if:

where , allows a rotation and satisfies , is the identity matrix, and is an all-ones vector.

All vectors in a simplex ETF have an equal norm and the same pair-wise angle, i.e.: where equals 1 when and 0 otherwise. The pair-wise angle is the maximal equiangular separation of vectors in papyan2020prevalence .

We can then define whose -th row is given by . To find the optimal , we optimize the parameters of given a training dataset to minimize in our optimization problem 5. In particular, the first term in involves :

| (10) | ||||

Therefore, can be optimized by minimizing the following cross-entropy loss:

where is the fixed ETF classifier generated by Definition 3.

3.2.4 Loss Function

To solve the optimization problem 5, our framework is reduced to finding optimal parameters , and , which minimizes . More specifically, can now be written as follows:

| (11) | ||||

As discussed in the previous subsections, the regularization term for is chosen as . Also, the regularization for is chosen as . Hence,

| (12) |

where

| (13) |

The choice of distance function depends on datasets and tasks. The overall loss function to train the deep neural network to solve the optimization problem 5 can now be summarized as follows.

| (14) |

The parameters of the deep neural network can then be optimized by stochastic gradient descent through backward propagation.

3.2.5 Numerical algorithm

We describe the numerical algorithms in detail. Several techniques, such as simple gradient approximation, stop-gradient operation, and exponential moving average, are used in our numerical algorithms.

Firstly, the simple gradient approximation is used during the update of . Since the encoder receives no gradient from the reconstruction loss, we assign it the gradient of the code , abbreviated as here. Thus, with the input data pair , we have:

| (15) | ||||

Here, the first equation approximates the gradient of the loss function with respect to the encoder parameters . The second equation computes the gradient with respect to the generator parameters , which includes both the reconstruction loss and the cross-entropy loss. The third equation computes the gradient with respect to the code matrix .

Note that the selected codes receive no gradient from the reconstruction loss and regularization loss, but instead from the covariance loss. In addition, the codes are also updated by the exponential moving average van2017neural as follows:

| (16) | ||||

where is a set of the embedding features that are closest to code . is the decay coefficient.

During the testing process, the probabilities predicted by are not exactly equal but very close to 0. A small threshold is set to eliminate results with extremely low probability.

The details of the numerical algorithms for the training and testing processes are described in Algorithms 1 and 2, respectively.

4 Experiments

To evaluate the effectiveness of our proposed framework, we conducted experiments on both synthetic examples and real-world imaging problems. We also performed ablation studies to analyze the key components of the framework. In this section, the experimental results will be reported.

4.1 Experimental setup

We utilize convolutional neural networks (CNNs) for both the embedding networks and the generative module, resembling the U-Net architecture as in ronneberger2015u . To be more precise, both our embedding networks and consist of a sequence of downsampling residual blocks, while the generative module is comprised of a sequence of upsampling residual blocks. Additionally, the generative network incorporates feature information from the embedding network at each resolution level. There are four downsampling or upsampling blocks in the sequence of each module. The downsampling and upsampling operations use bilinear interpolation. Each residual block comprises three convolution layers, utilizing kernels and ReLU activation. The two embedding networks have the same architecture with output channel dimension . The 1×1 convolution and global average pool follow the network of the data-label pair to obtain the feature of the same dimension as the code in the codebook. However, a fixed ETF classifier as a probability network follows the embedding network to output the probability prediction of all codes in the codebook. The categories of the classifier are the same size as the codebook. We incorporate the selected code and the features of the generative network to the model’s last layers with convolutions and finally activated by softmax. Note that the code can incorporate any skip connection to the generative module for different tasks. Since the code selected from the codebook is a vector, it is impossible to directly incorporate the code into a generation network with spatial dimensions. We repeatedly extend each value in the code to the spatial size of the corresponding features, and then concatenate them. We initialize the codebook as a matrix of i.i.d random rotation matrices. Each column of the codebook represents an individual code with a norm of 1. During the training, we utilized the binary cross-entropy loss as label reconstruction loss and set the batch size to . Additionally, we employed a learning rate schedule with values of at epochs . The penalization weight was fixed at , another weight of covariance constraint is , and we utilized the Adam optimizer kingma2014adam with its default settings for all of our experiments. Specifically, we train the first epochs without the cross-entropy loss to avoid the impact of violent fluctuations in early code selection on the learning of probability network parameters. For the Probabilistic U-Net, we followed the parameter in its released version and the suggested hyperparameters for segmentation tasks in kohl2018probabilistic .

4.2 Synthetic examples: Shape reconstruction

To rigorously evaluate the capabilities of our proposed framework, we first conducted experiments on a synthetic dataset for the task of shape reconstruction. We generated a dataset of shape data-label pairs, where the data consisted of a randomly generated triangle image of size (160, 160), and the labels represented four distinct shapes derived from the properties of the input triangle.

Specifically, the dataset generation process was as follows. First, we randomly created a triangle image to serve as the input data. We then synthesized four corresponding label images, each representing a shape related to, but distinct from, the original triangle:

-

1.

The first label was a smaller triangle created by cropping a random portion of the input triangle.

-

2.

The second label was the original input triangle.

-

3.

The third label was a pentagon shape formed by cutting a random triangle from the upper-right corner of the parallelogram constructed from the input triangle.

-

4.

The fourth label was a complete parallelogram shape generated by extending the parallelogram formed by the input triangle.

So the label shapes were not simply transformations of the input triangle, but rather new shapes that were algorithmically derived from the properties and geometry of the original triangle. This allowed us to evaluate how well our model could capture the underlying relationships between the input triangle and these related shape outputs.

Using this procedure, we constructed a synthetic dataset consisting of 2,000 data-label pairs for the training set and 200 pairs for the test set. Our goal is to find an optimal DMM that fits the training dataset. Given an input triangle, the optimal DMM should predict all four shapes, along with an estimation of the probability associated with each output shape.

In the training process, we repeatedly randomly sample a triangle from the training set and one of its four labels as . Since the four labels are different, random sampling ensures that the distribution of the label space consists of four modes with the same probability equal to 0.25.

In the testing phase, we sample a triangle from the testing data and record its most likely outputs . Fig. 4 shows the results of two input triangles. In each case, the leftmost image in the first row shows the input sample. The other four images show the 4 labeled shapes associated with the input triangle. Note that the input and output pairs in the testing have not been used in the training process.

The second row of Fig. 4 shows the predictions by the optimal DMM. Observe that the generated plausible outputs closely resemble the 4 ground truth shapes. This demonstrates the efficacy of our proposed framework to make multi-modal predictions. The value in the top left corner of each output shows the probability associated with each plausible output generated by the DMM. All values are very close to the ground truth probability of 0.25. This demonstrates that our framework is successful in obtaining accurate uncertainty estimates corresponding to each output.

Fig. 5 shows the results of 8 more input shapes. Again, the optimal DMM accurately predicts the 4 labeled shapes and their associated probabilities. Overall, these results on the synthetic dataset validate the ability of the optimal DMM to not only generate the diverse set of shapes derived from the input triangle, but also provide reliable probability estimates for each predicted output.

4.3 Lung segmentation with pulmonary opacity

We next test our proposed framework for lung segmentation of chest radiographs with pulmonary opacities. Lung segmentation of chest radiographs with pulmonary opacities is a challenging task. The presence of pulmonary opacities, such as infections, masses, or consolidations, can introduce significant uncertainty in accurately delineating the lung boundaries. The opacities can obscure the true lung margins, making it difficult to determine the exact extent of the lungs. Due to the ambiguity introduced by the pulmonary opacities, different radiologists or experts may provide varying lung segmentation labels for the same chest radiograph. There can be disagreement on where to draw the precise lung boundaries, especially in regions with opacity.

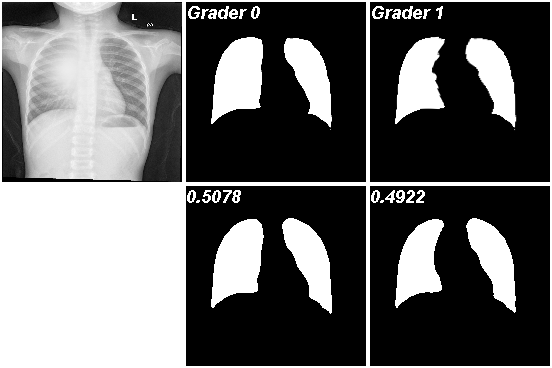

In this experiment, a collection of chest radiographs with pulmonary opacities are synthesized based on the lung X-ray dataset candemir2013lung ; jaeger2013automatic which comprises 640 data-label pairs for training and 63 pairs for testing with the size of . To simulate real-world scenarios, we introduce random intensity occlusions to intact the radiographs. The two corresponding segmentation masks labeled by two different experts are generated under different rules. The first-kind label is a segmentation mask from the original dataset, indicating the complete region of the lungs. The second kind differs from the original segmentation by randomly removing part of the opacity area to stimulate the doctors’ empirical annotations of the lung masks. Using our proposed framework, our goal is to obtain an optimal DMM that fits the dataset with the labeled segmentation mask, as well as predicts the probability associated with each plausible segmentation result.

In the training process, just as the shape reconstruction task, we still randomly sample a chest radiograph with pulmonary opacities from the training set and randomly sample one of its two labels as . Then the distribution of the label space consists of two modes with the same probability of .

To show the test results, we output predictions of chest radiograph from the testing set with explicit probability estimates () annotated on the upper-left corner in the second row in Fig. 6. More results are in Fig. 11. Obviously, the two outputs are very similar to the corresponding labels and have a probability close to . We also trained the Probabilistic U-Net model on this dataset with its results shown in Fig. 13, 14 and 15. Since it cannot directly predict the probability of the output, we respectively do samples, samples, and samples to estimate the results. For each mode’s probability, we count the proportion of predictions similar to that label in all sampling results as the probability of that label. For example, in the results of doing samplings, if both results are similar to the first-kind label, then the probability of the first-kind label is 1, and the probability of the other label is 0. This means that it only predicts one mode. If the two results are similar to the two labels respectively, then the probability of both labels is 0.5, which is the correct prediction. The probabilities’ distributions are shown in Fig. 8(a) and Table 1. It shows our model achieves excellent results in both segmentation and probabilistic prediction accuracy.

| model | Ours | Prob. U-net 2 | Prob. U-net 4 | Prob. U-net 16 |

| Grader 0 mean | 0.5040 | 0.4921 | 0.5238 | 0.5496 |

| Grader 1 mean | 0.4960 | 0.5079 | 0.4762 | 0.4504 |

| std | 0.0262 | 0.3391 | 0.2345 | 0.1482 |

4.4 Real applications

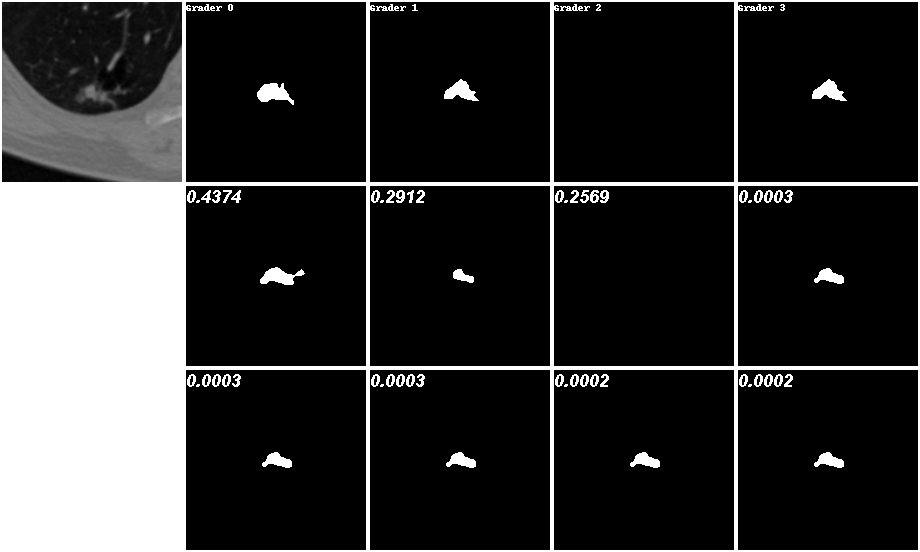

To evaluate the performance of our model on more complex real-world datasets, we work on the lesion segmentation task of ambiguous lung CT scans. In this experiment, the LIDC-IDRI dataset is provided by armato2011lung ; clark2013cancer , which contains 1018 lung CT scans from 1010 patients. It is an unorganized multi-modal distributed dataset. Each scan has four (out of twelve) medical experts labeling it a mask. And experts independently judged the location and shape of existing lesions based on their respective knowledge and experience. This does not mean that the label set is a balanced 4-modal discrete distribution. In actual situations, for the same scan, the masks given by experts may be the same, but when it comes to another scan, the masks they give will become different. The first rows of Fig. 7 that are sampled from the testing set can prove this. They even have objections to whether a lesion exists in the scan. Our task is to learn these imbalanced multi-modal distributions and make accurate uncertainty estimates while outputting possible outcomes.

During the training process, we randomly sample a CT scan from the training set and one of its four segmentations as . Fig. 7 shows some examples from the testing set with high precision. The first row is the lesion scan and its four labels. The last two rows (We have reserved eight positions for the results in the picture.) are our top predictions, where the probability (larger than ) associated with each prediction is annotated on the upper-left corner. More results are shown in Fig. 12. It’s obvious that our method effectively captures the uncertainty present in the segmentation labels, as evidenced by significant probability scores. The probabilities of predictions are almost equal to the probabilities of the true distribution. The no lesion inference on the left is especially approximated at , and on the right is close to .

Unlike synthetic datasets, there is no ground truth distribution for the LIDC-IDRI dataset. In order to evaluate the model performance, we adopt the generalized energy distance metric found in bellemare2017cramer ; szekely2013energy , which only access the samples from the distributions that models induce. It is used to evaluate the performance of the Probabilistic U-net. However, the Probabilistic U-net model cannot generate dynamic result sets and corresponding uncertainty estimates. Thus, we rewrite this metric according to the type of output we get as follows: given the label set and the prediction set which are corresponding to the data , the general energy distance metric of them is

where is the metric for evaluating the similarity of masks. is the probability prediction for the output and is the probability for the ground truth . In case the ground truth is not available like LIDC-IDRI, we use , where denotes the cardinality of . In particular, on the LIDC-IDRI task. For the Probabilistic U-Net, there are no probability predictions for each output. We set if we have samples from the model.

The quantity results of our model and Probabilistic U-net model are shown in Fig. 8(b) and Table 2. Lower values demonstrate the performance superiority of our model. More test predictions from Probabilistic U-Net are shown in Fig. 16 and Fig. 17.

| model | Ours | Prob. U-net 4 | Prob. U-net 16 |

|---|---|---|---|

| mean | 0.3058 | 0.4552 | 0.3253 |

| std | 0.2761 | 0.3375 | 0.2743 |

4.5 ABLATION ANALYSIS

In this subsection, we explore some tricks applied in the model and some terms in the loss function in order to verify how they promote the model.

To test the performance of covariance loss, we train our model on the LIDC dataset by setting the weight of covariance loss as and , respectively. Given the same initial codebook, we count the number of codes called in each epoch and their average pairwise inner products in the training process. The results are shown in 9. We can see that covariance can help us separate the codes so that the features of the data can be concentrated on codes with a small number. On the contrary, without the balance of covariance loss, the frequency of use of codes will fluctuate significantly, and the codes will be more similar to each other.

In addition, we explore the performances of the renewal of the codebook and fixed ETF classifier by training our model on the LIDC dataset. We introduce two variations to our original approach: the Fixed codebook and the Learnable classifier. The Fixed codebook approach involves no longer updating the codes once the codebook space is initialized. On the other hand, the Learnable classifier replaces the fixed ETF classifier with a commonly used linear classifier that can be learned to predict probabilities. To evaluate the performance of these variations, we measure the metric on the test dataset and analyze the usage of codes during training. The results, as shown in Fig. 10, indicate that our original approach outperforms the variation approaches. We observe that not updating the codebook results in worse scores and leads to unstable code utilization. This is because the covariance loss no longer functions concurrently when the codebook is fixed. Furthermore, Fig. 10 demonstrates the advantages of the fixed ETF classifier over the learnable linear classifier. In the absence of explicit data distribution for learning in the LIDC dataset, the fixed ETF classifier provided more accurate distribution predictions with fewer classes. In summary, our findings highlight the superiority of our original approach, which incorporates both the renewal of the codebook and the fixed ETF classifier. This approach yields better performance in terms of accurate distribution prediction and stable code utilization.

5 Conclusion

We have adopted dynamic multi-valued mapping to describe the uncertainty in practice. It is a multi-valued mapping with a probability measure for each output. A data-driven optimization problem is further designed to solve dynamic multi-valued mapping. We proposed a general deep neural network framework to achieve this. Meanwhile, a codebook is introduced to explain the corresponding relationship between input and output in multi-value mapping. It also includes an effective evaluation of prediction probabilities to capture uncertainty in the dataset. Through extensive validation of synthetic and realistic tasks, we have demonstrated the superior performance of our method compared to state-of-the-art approaches.

Acknowledgements.

L.M. Lui is supported by HKRGC GRF (Project ID: ).References

- (1) Armato III, S.G., McLennan, G., Bidaut, L., McNitt-Gray, M.F., Meyer, C.R., Reeves, A.P., Zhao, B., Aberle, D.R., Henschke, C.I., Hoffman, E.A., et al.: The lung image database consortium (lidc) and image database resource initiative (idri): a completed reference database of lung nodules on ct scans. Medical physics 38(2), 915–931 (2011)

- (2) Bellemare, M.G., Danihelka, I., Dabney, W., Mohamed, S., Lakshminarayanan, B., Hoyer, S., Munos, R.: The cramer distance as a solution to biased wasserstein gradients. arXiv preprint arXiv:1705.10743 (2017)

- (3) Candemir, S., Jaeger, S., Palaniappan, K., Musco, J.P., Singh, R.K., Xue, Z., Karargyris, A., Antani, S., Thoma, G., McDonald, C.J.: Lung segmentation in chest radiographs using anatomical atlases with nonrigid registration. IEEE transactions on medical imaging 33(2), 577–590 (2013)

- (4) Clark, K., Vendt, B., Smith, K., Freymann, J., Kirby, J., Koppel, P., Moore, S., Phillips, S., Maffitt, D., Pringle, M., et al.: The cancer imaging archive (tcia): maintaining and operating a public information repository. Journal of digital imaging 26, 1045–1057 (2013)

- (5) Huang, X., Liu, M.Y., Belongie, S., Kautz, J.: Multimodal unsupervised image-to-image translation. In: Proceedings of the European conference on computer vision (ECCV), pp. 172–189 (2018)

- (6) Jaeger, S., Karargyris, A., Candemir, S., Folio, L., Siegelman, J., Callaghan, F., Xue, Z., Palaniappan, K., Singh, R.K., Antani, S., et al.: Automatic tuberculosis screening using chest radiographs. IEEE transactions on medical imaging 33(2), 233–245 (2013)

- (7) Kingma, D.P., Ba, J.: Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980 (2014)

- (8) Kohl, S., Romera-Paredes, B., Meyer, C., De Fauw, J., Ledsam, J.R., Maier-Hein, K., Eslami, S., Jimenez Rezende, D., Ronneberger, O.: A probabilistic u-net for segmentation of ambiguous images. Advances in neural information processing systems 31 (2018)

- (9) Papyan, V., Han, X., Donoho, D.L.: Prevalence of neural collapse during the terminal phase of deep learning training. Proceedings of the National Academy of Sciences 117(40), 24,652–24,663 (2020)

- (10) Qiu, D., Lui, L.M.: Modal uncertainty estimation for medical imaging based diagnosis. In: Uncertainty for Safe Utilization of Machine Learning in Medical Imaging, and Perinatal Imaging, Placental and Preterm Image Analysis: 3rd International Workshop, UNSURE 2021, and 6th International Workshop, PIPPI 2021, Held in Conjunction with MICCAI 2021, Strasbourg, France, October 1, 2021, Proceedings 3, pp. 3–13. Springer (2021)

- (11) Ronneberger, O., Fischer, P., Brox, T.: U-net: Convolutional networks for biomedical image segmentation. In: Medical image computing and computer-assisted intervention–MICCAI 2015: 18th international conference, Munich, Germany, October 5-9, 2015, proceedings, part III 18, pp. 234–241. Springer (2015)

- (12) Sohn, K., Lee, H., Yan, X.: Learning structured output representation using deep conditional generative models. Advances in neural information processing systems 28 (2015)

- (13) Székely, G.J., Rizzo, M.L.: Energy statistics: A class of statistics based on distances. Journal of statistical planning and inference 143(8), 1249–1272 (2013)

- (14) Tao, T.: A cheap version of the kabatjanskii-levenstein bound for almost orthogonal vectors. https://terrytao.wordpress.com/2013/07/18/ (2013)

- (15) Van Den Oord, A., Vinyals, O., et al.: Neural discrete representation learning. Advances in neural information processing systems 30 (2017)

- (16) Yang, Y., Xie, L., Chen, S., Li, X., Lin, Z., Tao, D.: Do we really need a learnable classifier at the end of deep neural network? arXiv e-prints pp. arXiv–2203 (2022)

- (17) Zheng, C., Cham, T.J., Cai, J.: Pluralistic image completion. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 1438–1447 (2019)

- (18) Zhu, J.Y., Zhang, R., Pathak, D., Darrell, T., Efros, A.A., Wang, O., Shechtman, E.: Toward multimodal image-to-image translation. Advances in neural information processing systems 30 (2017)