A Deep Learning Approach to Detect Lean Blowout in Combustion Systems

Abstract

Lean combustion is environment friendly with low emissions and also provides better fuel efficiency in a combustion system. However, approaching towards lean combustion can make engines more susceptible to lean blowout. Lean blowout (LBO) is an undesirable phenomenon that can cause sudden flame extinction leading to sudden loss of power. During the design stage, it is quite challenging for the scientists to accurately determine the optimal operating limits to avoid sudden LBO occurrence. Therefore, it is crucial to develop accurate and computationally tractable frameworks for online LBO detection in low emission engines. To the best of our knowledge, for the first time, we propose a deep learning approach to detect lean blowout in combustion systems. In this work, we utilize a laboratory-scale combustor to collect data for different protocols. We start far from LBO for each protocol and gradually move towards the LBO regime, capturing a quasi-static time series dataset at each condition. Using one of the protocols in our dataset as the reference protocol and with conditions annotated by domain experts, we find a transition state metric for our trained deep learning model to detect LBO in the other test protocols. We find that our proposed approach is more accurate and computationally faster than other baseline models to detect the transitions to LBO. Therefore, we recommend this method for real-time performance monitoring in lean combustion engines.

keywords:

Deep Learning , LSTM , Detection of Lean Blowout , Transition to LBO , Confusion Matrix1 Introduction

Nowadays, one of the primary technological problems of fuel-rich combustors in industrial and rocket propulsion applications is emissions, which are influenced by local high flame temperatures [1]. The necessity to have low emissions motivates scientists to move towards lean combustion [2, 3, 4, 5]. Lean combustion not only reduces emissions but also enhances combustion efficiency with better fuel utilization. Therefore, such an approach is aimed towards a cleaner and better environment. However, during such ultra-lean operation regimes, the fuel-air ratio becomes very low, making the flame unstable, and the flame can even get extinguished by blowing out of the combustion chamber. Such an unwanted event in the operation of combustion systems is referred to as lean blowout (LBO) [6, 7, 8, 9]. The chances of LBO get more pronounced when the unburnt mixture velocity gets higher than the reacting speed [4].

LBO may occur during scenarios like low fuel flow, highly turbulent combustion, and large variations in fuel flow. The concept of LBO in illustrated in Fig. 1. Low fuel flow scenarios may occur during rapid deceleration when sudden changes in the throttle rapidly reduce the fuel flow rate while the rate of change of air flow rate is slower [10]. Extreme low fuel conditions can also arise during the adoption of an ultra-lean premixed mode of combustion to avoid formation. On the other hand, to keep the power input constant, there can be a continuous increment of air flow rate leading to highly turbulent combustion and, thereafter, LBO [11, 12]. LBO can also be caused by large variations in the fuel flow, which can occur when there are sudden changes in power requirement [13].

As the lean combustors are used in a broad spectrum of industrial applications such as ground-based engines [14], aero-engines [15, 16] and other gas turbine engines [17], the possibility of LBO can have a significant impact on many such systems. For the ground-based engines in the power sector, LBO can cause unexpected stoppage of the engine, collapsing the entire power network [13]. Such disruptions of engine activity affect productivity and can cause significantly higher maintenance costs. On the other hand, for aviation, the risk of LBO is even higher when there is a low fuel flow scenario [10], and it can be challenging to recover from such a sudden flame loss during the running condition. Thus, the study of LBO has become of great importance to the science community when engineers want to deal with ”clean energy” technology (e.g., lean combustion). Nevertheless, it is difficult to determine the LBO regimes during the design phase, and therefore, developing an online detection tool for LBO can be an important contribution to different applications.

Previous works in the context of LBO detection are mostly concentrated on the premixed type of combustion (i.e., fixed degree of air-fuel mixing) [6, 18, 19, 20, 21]. In aero-engines, fuel is injected close to the reaction chamber, and therefore, LBO detection is also crucial for partially premixed combustion processes, where the degree of fuel-air mixing varies [22]. There has been a recent focus on LBO prediction in partially premixed combustion [22, 8, 9] and researchers have proposed different statistical [23, 19], flame emissions based [24, 8], nonlinear dynamics [25, 9] and data-driven techniques [26, 27, 28, 11, 29]. However, the online LBO detection frameworks should be accurate and computationally fast simultaneously. Some studies have focused on the computational time aspect of LBO [9, 11, 29]. But, further exploration is essential to simultaneously consider the aspects of detection accuracy, computation time, and robustness. While the application of deep learning models to detect combustion instability has started recently [30, 31, 32, 33, 34, 35], there has been no research work till now on LBO detection using deep learning.

In the present work, we propose a deep learning approach to detect LBO in a robust manner. We formulate the problem in such a way that detection performance can be tested for robustness. We compare the performance of the proposed framework against three baseline methods in terms of detection accuracy and computation time. The results demonstrate that the proposed framework is the most accurate, computationally fastest and robust to generalize well for test datasets from different protocols.

We summarize the contributions of this work as follows:

-

•

To the best of our knowledge, this is the first work using deep learning to detect lean blowout in combustion systems.

-

•

Our proposed LBO detection framework is dependent on pressure time-series data, which is a much convenient way of collecting data from real world combustors. Our training scheme is facilitated by the use of domain knowledge based labeling technique, which is based on flame images.

-

•

To show the robustness of our model we test in different conditions near the lean blowout regime and we use three different baseline models for comparison in terms of accuracy and computation time.

-

•

Our proposed deep learning approach is more accurate, computationally faster and captures the transition to lean blowout regime better than the baseline methods. It shows the potential to be implemented as an online detector to prevent the adverse effects of lean blowout in combustion systems.

2 Methods

In this portion, we describe the details of the experimental setup (Section 2.1), dataset (Section 2.2), and the domain knowledge-based label annotation technique (Section 2.3). The notations and problem formulation are described. Thereafter, we present the details of our proposed deep model architecture (Sections 2.4 2.5) and discuss the baseline methods (Section 2.6) used for comparison.

2.1 Details of the Model Combustor

The model combustor used in this study is the same as that has been used in [22, 8, 9]. An illustration of the experimental setup is provided in Fig. 2. The experimental setup primarily consists of three major components: premixing chamber, combustion chamber, and exhaust chamber or outlet. The premixing chamber is constructed to control the quality of oxidizer-fuel mixing [22], and the chemical reaction takes place in the combustion chamber.

The premixing chamber, shown in Fig. 2(a), has the bottommost entry point for the oxidizer (air is used), and the other entry points from upstream to downstream of the premixer are used for the fuel. Therefore, the premixing chamber allows the mixing of fuel and oxidizer at different mixing lengths [22]. At each entry point, there is a provision of four inlets (90o apart from each other) to reduce the asymmetry in the flow. After the topmost entry point, a swirler (swirl number = 1.26, the calculation has been shown in an earlier study [22]) is provided to improve the mixing, thereby enhancing the stability of combustion [36]. The vane angle of the swirler is 60o to the axial direction. After the swirler, the sudden expansion in the flow area of the dump plane (Fig.2(b)) slows down the flow velocity and generates re-circulation regions, ensuring continuous feedback of heat radicals to the incoming mixture. To provide optical accessibility, the combustion chamber (Fig. 2(c)) is surrounded by a quartz tube of length 200 mm and an outer diameter of 65 mm. The burnt gases come out of the combustion chamber through the exhaust chamber.

2.2 Dataset Collection

We have multiple protocols for dataset collection. The air flow rate is kept constant for each protocol, and the fuel flow rate is decreased to approach lean blowout. We vary the equivalence ratio () from the stoichiometric condition (where = 1) towards the blowout. We measure the fuel and air flow rates using mass flow controllers (Alicat Scientific, MCR series, range of MFCs: 0-250 SLPM for fuel and 0-1500 SLPM for air). For each equivalence ratio, an audio reader (sampling frequency of 48 kHz) is used to record the acoustic time series, which captures the pressure fluctuations in the system. We also capture flame images utilized to understand the combustion behavior while annotating the acoustic time series for each experiment.

We collect data for five protocols with different air flow rates (70 SLPM, 75 SLPM, 80 SLPM, 85 SLPM, and 90 SLPM). We use the third fuel entry point (towards the downstream of the air entry point) as the fuel port, ensuring partial premixing of the fuel and oxidizer. For each of the five protocols, we keep decreasing the fuel flow rate and record acoustic pressure time series for different fuel flow rates. Therefore, there is a set of quasi-static datasets for each protocol. The equivalence ratio () where lean blowout occurs is referred to as . We represent each quasi-static dataset by ratio where lean blowout implies and higher values of indicate regimes away from lean blowout.

2.3 Labels Annotated Using Domain Knowledge

The stability of a combustion process generally depends on factors like hydrodynamics of the flow [37], acoustic pressure fluctuations [38] and heat release rate oscillations (influenced by chemical kinetics or combustion reaction rates) [22]. A discrepancy in these factors may lead to unusual behavior in the combustion zone. Domain experts can understand such transition of the combustion regime from the flame images, from which they can annotate each as healthy or unhealthy. In Fig. 2, sample flame images at different are shown to describe the annotation procedure. Annotation ‘healthy’ refers to conditions away from the LBO regime with values higher than the transition . The conditions with lower than the transition are annotated ‘unhealthy’ and are therefore near the LBO regime.

From the sample set of flame images shown in Fig. 2, near stoichiometric condition (at = 1.76), the combustion process looks stable as the flame adheres to the dump plane. This is observed when there is a strong equilibrium between the flame speed and the upcoming unburnt mixture [4]. Therefore, flame exists at the inlet of the combustion chamber in the presence of high incoming flow (). The attachment exists till . A significant variation in combustion dynamics is observed at , where flame exists at a standoff distance from the chamber inlet. With the air flow rate fixed, as the fuel flow rate is decreased to lower values, the chemical reaction rate also decelerates which in turn reduces the heat release rate of combustion [4]. As a result of this, the flame speed decreases impacting the attachment between the flame base and combustion inlet. The bifurcation in the flame behavior at can be attributed as transition [22, 9]. Therefore, the flame behavior for can be referred to as ‘healthy’. With further reduction in , the flame dynamics gets sufficiently weak and irregularities in behavior are noticed in and as demonstrated in Fig. 2. As the condition approaches lean blowout, the rapid fluctuations start in the flame base with local extinction and re-ignition events [22, 8] ultimately leading to LBO, represented as . The flame behaviors at are referred to as ‘unhealthy’ states. The transition from healthy to unhealthy state can occur rapidly in real combustors and the probability of avoiding LBO becomes low once the flame enters into the critical regime (very close to LBO) [9]. Therefore, it is preferable to predict the occurrence of LBO in early stage so that sufficient lead time is available to adopt precautionary measures.

2.4 Training, Reference and Test Datasets

We keep one protocol (air flow rate = 90 SLPM) as the reference protocol and use the other four protocols as the test protocols.

For the reference protocol, the details of the quasi-static datasets in terms of are provided below:

-

•

90 SLPM: = [1, 1.142, 1.214, 1.285, 1.357, 1.428, 1.500, 1.571, 1.642, 1.714, 1.785]

The reason of choosing a protocol as ‘reference’ is that we can find a transition state metric from the corresponding transition state which is already annotated. And, then, during testing, for any particular case, the computed metric can be compared against this transition state metric to directly predict that state as ‘healthy’ or ‘unhealthy’.

The details of the quasi-static datasets in test protocols are:

-

•

70 SLPM: = [1, 1.076, 1.153, 1.23, 1.307, 1.384, 1.461, 1.538, 1.615, 1.692, 1.769]

-

•

75 SLPM: = [1, 1.071, 1.143, 1.214, 1.285, 1.357, 1.428, 1.5, 1.571, 1.643, 1.714, 1.785]

-

•

80 SLPM: = [1, 1.066, 1.133, 1.2, 1.266, 1.33, 1.4, 1.466, 1.533, 1.6, 1.67]

-

•

85 SLPM: = [1, 1.071, 1.143, 1.214, 1.285, 1.357, 1.428, 1.5, 1.571, 1.643, 1.714, 1.785]

2.5 LSTM Based Deep Learning Model

We formulate the problem after introducing the notations that we use in the work. We denote the univariate time series input as , where denotes the total length of the input time series. We divide the entire dataset into consecutive time windows - each window of length . The consecutive windows can be defined as: . We denote the -th time window as . This pre-processing technique is followed for reference set and the test sets. With a slight abuse of notation, the time series of the -th time window can be denoted by .

Recurrent Neural Network (RNN) based models have proved to be effective in different applications including machine translation [39], speech recognition [40], performance monitoring of engineering systems [32], financial markets [41] and healthcare [42]. Recurrent Neural Networks (RNNs) are efficient in learning the temporal dependencies from time series data for accurate forecasting [43]. RNNs are trained using the error backpropagation algorithm in which the error gradients are propagated through the unrolled temporal layers. In an RNN, at each time-step, the input is combined with the hidden state vector using a learned function to produce a new state vector. The architecture of a RNN allows the information from the previous time-steps to persist by passing the hidden state from one step of the network to the other. To overcome the problem of vanishing gradients of RNNs for long sequences [44, 45], an effective RNN architecture is Long Short Term Memory (LSTM) [46]. In an LSTM block, the input, output, and forget gates regulate the addition of any information to the cell state.

In this work, we develop an LSTM based deep learning model illustrated in Fig. 3. The first LSTM layer of the model reads the input sequence in order from to to compute the hidden states . This sequence of hidden states acts as the input to the second LSTM layer which generates the hidden states . The state , capturing the summarized temporal information of the input time series, is passed through two fully connected layers to predict .

We train the LSTM model on the dataset from the reference protocol using a training size of 90%. Next, we randomly split the training set into training and validation sets with a validation size of 20%. For each time series, the inputs are scaled to (0, 1). We perform experiments with different values of hyper-parameters to finalize the optimal set of hyper-parameters based on the validation set performance. We use Adam optimizer ([47]) with a learning rate of 0.001. We train the model on an NVIDIA Titan RTX GPU for 50 epochs with a batch size of 512.

2.6 Baseline Methods

Recurrent Neural Network (RNN): We use a deep learning baseline model based on RNN. To develop this model, we replace the LSTM units in Fig. 3 with RNN units keeping the architecture same. The RNN model is shown in supplementary materials. The details of the RNN baseline model are provided in supplementary materials. We train the RNN model in a similar way as the LSTM model.

Hidden Markov Model (HMM): We use a machine learning modeling approach involving the Hidden Markov Model as one of the baseline methods. Recently, Hidden Markov Models (HMMs) have been utilized for early prediction of a lean blowout from chemiluminescence time series data [11]. HMMs, probabilistic frameworks for recognizing patterns in stochastic processes, have proved to be successful in speech recognition problems [48]. In an HMM, a sequence of observations is generated from a sequence of internal states. The likelihood of the observations depends on the states which are hidden. It is assumed that the transitions between the hidden states follow a first-order Markov chain. An HMM is defined by the start probability vector , transition probability matrix , and emission probability of an observation .

In the training process, we utilize the dataset from the reference protocol using the same training size (90%) as used in the case of the LSTM model. The number of states which give the least Bayesian Information Criterion is chosen as the optimal number of states. After getting the trained optimal HMM, we divide the training dataset into consecutive time windows of length to compute the log-likelihood for each window.

To perform prediction for a test time window , we first predict the log-likelihood using the trained HMM and then compare that with the log-likelihoods of the training set windows to find the closest window . That window can be considered most similar to the test time window . The predicted change is computed as and then it is added to to get .

Translational Error: As another baseline method, we use an existing and well-known method - translational error [49]. Translational error has been proposed as a suitable candidate for prediction and control of lean blowout [49]. It is a nonlinear dynamic tool capable of identifying the dissimilarity in the directions of neighboring trajectories. Mathematically, it is expressed as:

| (1) |

where, . Here, ). In Eq.1, indicates the number of neighbouring points of . The values of is considered here as 5 as suggested in the previous study [49].

The median of values is estimated for 100 randomly chosen phase space vectors, . Therefore, the computation of translational error has a randomness factor in it, for which we use three independent computations in the case of the test dataset to report the mean and standard deviation. And to compare the computation time with other methods, we use the mean computation time from the three computations. There are two other important parameters used in constructing the phase space vectors: time delay and optimum embedding dimension. These are derived after experiments with the training dataset from the reference protocol.

3 Results

3.1 Transition State Error From Reference Set

We utilize the reference protocol (90 SLPM) to get the transition state metric. As a metric, we use root mean square error (RMSE), which is computed between the predicted output and target output of the test set samples. The reference protocol results for the LSTM model are shown in Fig. 4.

We have the model trained at and test the model on the unseen 10% of the dataset to get the test RMSE at . Next, we test the model on the quasi-static datasets at . For time series at each ratio, we get a corresponding test RMSE.

From Fig. 4, as the model has been trained on , we get the lowest test RMSE at and with increasing , the test RMSE increases. The transition state has been annotated at by the domain experts, and the corresponding transition state RMSE is 0.0793. This information will be used to predict ‘healthy’/‘unhealthy’ labels while testing the LSTM model. Test RMSE values higher than 0.0793 can be labeled as ‘healthy’ or away from the LBO regime, and test RMSE values lower than 0.0793 can be predicted as ‘unhealthy’ or near the LBO regime.

The reference protocol results for the RNN model, HMM model and translational error method are provided in supplementary materials. For the translational error method, we perform three independent computations as the method has a randomness factor and compute the mean and standard deviation.

3.2 Performance on the Test Protocols

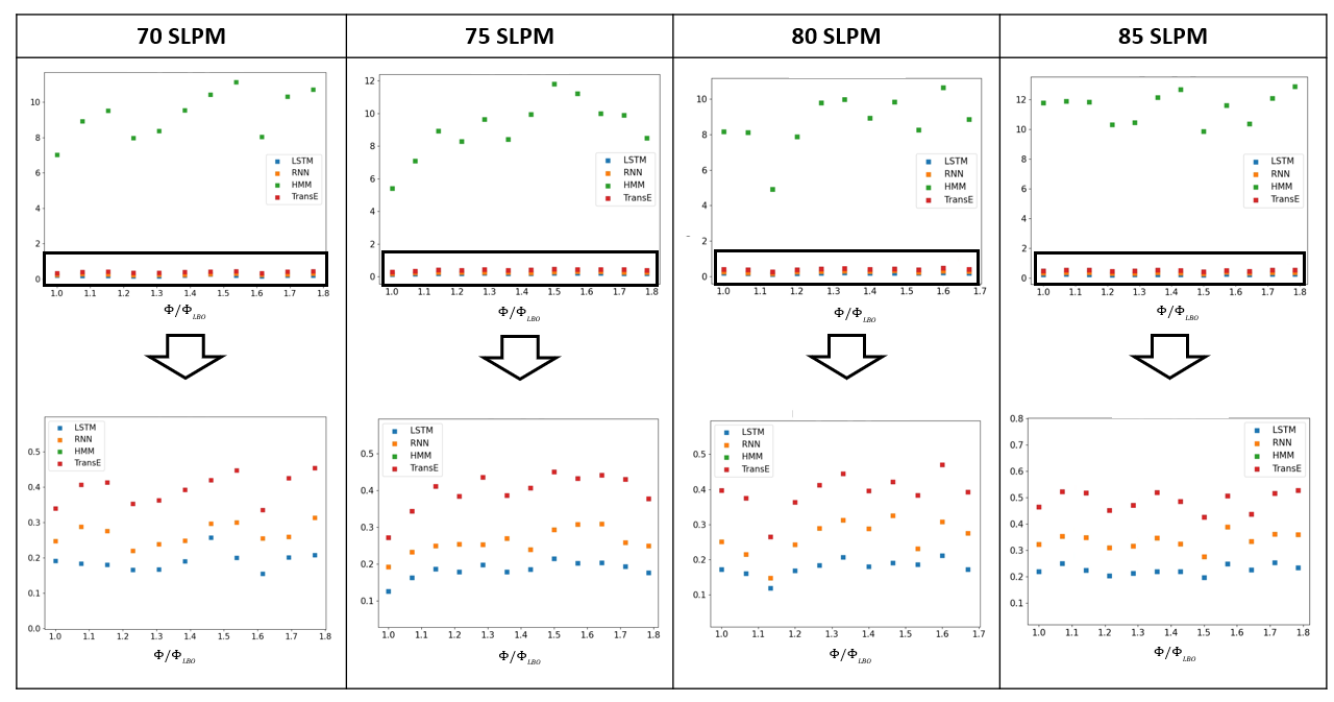

We have four test protocols - 70 SLPM, 75 SLPM, 80 SLPM, 85 SLPM, and each test protocol consists of a set of quasi-static datasets. For each quasi-static dataset in these protocols, the trained model is used to predict RMSE. The predicted label is ‘healthy’ or ‘unhealthy’ depending on whether the RMSE value is higher or lower than the transition state RMSE. Predicted labels are compared against actual labels for each test protocol to compute the confusion matrix.

Results for the test protocols using the LSTM model are shown in Fig. 5. We have marked the transition state RMSE (0.0793) with a red line in each RMSE plot. The black dotted line in each plot denotes the actual transition state annotated by domain experts. The plots show a decreasing trend as we move closer to lean blowout, which occurs at . The LSTM model-based detection framework shows 100% accuracy for all four protocols, as illustrated in the confusion matrices.

The test results for RNN model, HMM model and translational error method are provided in the supplementary materials. Like the LSTM model, the RNN model also demonstrates 100% accuracy. Therefore, both the deep learning models can accurately detect the transition to lean blowout in combustion systems. The HMM is not as effective as the deep learning models in capturing the transitions with changing conditions. The major disadvantage of the HMM model lies in false alarms, which can impact decision-making in real-time detection and control of LBO. The randomness is one of the major disadvantages of using translational error as a detection tool. Also, there are a lot of false negatives. False-negative refers to falsely predicting negative or ‘healthy’ while it is actually positive or ‘unhealthy.’ False negatives can be a serious issue in engine operation and lead to incorrect decision-making.

3.3 Performance Comparison in Terms of Detection Accuracy and Computation Time

To summarize the comparative performance considering all the test protocols, we demonstrate the overall confusion matrix for each method. The model-wise confusion matrices are shown in Fig. 6.

For both the LSTM and RNN models, the accuracy is 100% for ‘healthy’ and ‘unhealthy’ datasets. From Fig. 6, HMM can detect the ‘unhealthy’ datasets correctly, but it predicts most of the ‘healthy’ datasets as ‘unhealthy,’ leading to a lot of false positives. False positives refer to falsely predicting ‘healthy’ conditions as ‘unhealthy’ or positive. Out of 16 ‘healthy’ conditions, it predicts 9 conditions as ‘healthy’ and the remaining 17 as ‘unhealthy.’ Using the method of translational error, the detection accuracy for ‘healthy’ is 100%. But, it falsely predicts half of the ‘unhealthy’ conditions as ‘healthy,’ resulting in false negatives. It is highly important to have a detection framework with low false negatives to prevent the adverse effects of a lean blowout in combustion systems. Overall, the deep learning frameworks (LSTM, RNN) outperform Hidden Markov Model and Translational Error.

Next, we compare the computation time of the methods for each in the test protocols. We use the same computation (CPU) resources for an unbiased comparison, and the results are shown in Fig. 7. We observe that computation times of HMM are much higher than that of LSTM, RNN, and Translational Error. The computation time is the least for our proposed LSTM-based deep learning framework, followed by the RNN model and translational error. Therefore, in terms of both detection accuracy and computation time, our proposed LSTM model outperforms the baseline methods and can therefore has the potential to be utilized for real-time detection and control of lean blowout.

4 Conclusion

The adaption of lean combustion technology helps to reduce the emissions, although it often leads to the occurrence of lean blowout due to the extreme leanness of the fuel-air mixture. Such an extinction of flame can lead to sudden loss of power in combustion systems causing unwanted adverse events. Therefore, It is essential to develop effective and feasible frameworks for real-time accurate detection and control of LBO. With this motivation, in this paper, we develop a deep learning-based framework for detecting lean blowout in combustion systems.

In the present work, we take root mean square error (RMSE) as a metric to identify the combustion state (healthy or unhealthy). The proposed framework based on Long Short Term Memory is robust enough to detect the transition to the LBO regime in unseen test protocols. Further, we similarly compute metric using three baseline models: RNN, HMM, and Translational error. We find that our proposed framework is more accurate and computationally faster than these baseline methods across different test protocols. As the first deep learning work on LBO detection, it can be used for real-time performance monitoring in engines. A similar modeling approach can be adopted to develop control mechanisms of other cyber-physical systems using time series as a sensing modality.

Supplementary Material

See the supplementary material for reference protocol results of the baseline methods RNN, HMM and Translational Error.

Authors’ Contributions

A.M., S.Sen. and S.D. conceived the combustion experiment(s). S.D. conducted the combustion experiment(s) and collected the data. T.G. and S.S. have conceived the machine learning models. T.G. built the machine learning models and generated the machine learning results. T.G. and S.D. performed the analysis. T.G., S.D. and Q.L. generated the translational error results. T.G., S.D., A.M., S.Sen. and S.S. interpreted the results and contributed in writing the manuscript. All authors have contributed in reviewing the manuscript.

Acknowledgements

This work has been supported in part by NSF grants CNS1954556 and CNS 1932033.

References

- Zeldvich [1946] Y. B. Zeldvich, The oxidation of nitrogen in combustion and explosions, J. Acta Physicochimica 21 (1946) 577.

- Correa [1993] S. M. Correa, A review of nox formation under gas-turbine combustion conditions, Combustion science and technology 87 (1993) 329–362.

- Mongia [1998] H. Mongia, Aero-thermal design and analysis of gas turbine combustion systems-current status and future direction, in: 34th AIAA/ASME/SAE/ASEE Joint Propulsion Conference and Exhibit, 1998, p. 3982.

- Turns et al. [1996] S. R. Turns, et al., Introduction to combustion, volume 287, McGraw-Hill Companies, 1996.

- Mukhopadhyay and Sen [2019] A. Mukhopadhyay, S. Sen, Fundamentals of combustion engineering, CRC Press, 2019.

- Muruganandam et al. [2005] T. Muruganandam, S. Nair, D. Scarborough, Y. Neumeier, J. Jagoda, T. Lieuwen, J. Seitzman, B. Zinn, Active control of lean blowout for turbine engine combustors, Journal of Propulsion and Power 21 (2005) 807–814.

- Gupta et al. [2019] S. Gupta, P. Malte, S. L. Brunton, I. Novosselov, Prevention of lean flame blowout using a predictive chemical reactor network control, Fuel 236 (2019) 583–588.

- De et al. [2020a] S. De, A. Biswas, A. Bhattacharya, A. Mukhopadhyay, S. Sen, Use of flame color and chemiluminescence for early detection of lean blowout in gas turbine combustors at different levels of fuel–air premixing, Combustion Science and Technology 192 (2020a) 933–957.

- De et al. [2020b] S. De, A. Bhattacharya, S. Mondal, A. Mukhopadhyay, S. Sen, Application of recurrence quantification analysis for early detection of lean blowout in a swirl-stabilized dump combustor, Chaos: An Interdisciplinary Journal of Nonlinear Science 30 (2020b) 043115.

- Rosfjord and Cohen [1995] T. J. Rosfjord, J. M. Cohen, Evaluation of the transient operation of advanced gas turbine combustors, Journal of Propulsion and Power 11 (1995) 497–504.

- Mondal et al. [2022] S. Mondal, S. De, A. Mukhopadhyay, S. Sen, A. Ray, Early prediction of lean blowout from chemiluminescence time series data, Combustion Science and Technology 194 (2022) 1108–1135.

- Thampi and Sujith [2015] G. Thampi, R. Sujith, Intermittent burst oscillations: Signature prior to flame blowout in a turbulent swirl-stabilized combustor, Journal of Propulsion and Power 31 (2015) 1661–1671.

- Meegahapola and Flynn [2015] L. Meegahapola, D. Flynn, Characterization of gas turbine lean blowout during frequency excursions in power networks, IEEE Transactions on Power Systems 30 (2015) 1877–1887. doi:10.1109/TPWRS.2014.2356336.

- McDonell and Klein [2013] V. McDonell, M. Klein, Ground based gas turbine combustion: Metrics, constraints, and system interactions, Gas Turbine Emissions 38 (2013) 24.

- MULARZ [1979] E. MULARZ, Lean, premixed, prevaporized combustion for aircraft gas turbine engines, in: 15th Joint Propulsion Conference, 1979, p. 1318.

- Palies et al. [2019] P. Palies, R. Acharya, A. Hoffie, M. Thomas, Lean fully premixed injection for commercial jet engines: An initial design study, in: Turbo Expo: Power for Land, Sea, and Air, volume 58622, American Society of Mechanical Engineers, 2019, p. V04BT04A039.

- Bahlmann and Visser [1994] F. C. Bahlmann, B. M. Visser, Development of a lean-premixed two-stage annular combustor for gas turbine engines, in: Turbo Expo: Power for Land, Sea, and Air, volume 78859, American Society of Mechanical Engineers, 1994, p. V003T06A034.

- Prakash [2007] S. Prakash, Lean blowout mitigation in swirl stabilized premixed flames, Ph.D. thesis, Georgia Institute of Technology, 2007.

- Muruganandam [2006] T. Muruganandam, Sensing and dynamics of lean blowout in a swirl dump combustor, PhD diss., Georgia Institute of Technology 18 (2006) 950–960.

- Nair and Lieuwen [2005] S. Nair, T. Lieuwen, Acoustic detection of blowout in premixed flames, Journal of Propulsion and Power 21 (2005) 32–39.

- De et al. [2020] S. De, A. Bhattacharya, S. Mondal, A. Mukhopadhyay, S. Sen, Identification and early prediction of lean blowout in premixed flames, Sadhana 45 (2020) 1–12.

- De et al. [2019] S. De, A. Bhattacharya, S. Mondal, A. Mukhopadhyay, S. Sen, Investigation of flame behavior and dynamics prior to lean blowout in a combustor with varying mixedness of reactants for the early detection of lean blowout, International Journal of Spray and Combustion Dynamics 11 (2019) 1756827718812519.

- Chaudhari [2011] R. Chaudhari, Investigation of thermoacoustic instabilities and lean blowout in a model gas turbine combustor, Doctor of Philosophy, Jadavpur University, Kolkata, India (2011).

- Chaudhari et al. [2013] R. R. Chaudhari, R. P. Sahu, S. Ghosh, A. Mukhopadhyay, S. Sen, Flame color as a lean blowout predictor, International Journal of Spray and Combustion Dynamics 5 (2013) 49–65.

- Unni and Sujith [2016] V. R. Unni, R. I. Sujith, Precursors to blowout in a turbulent combustor based on recurrence quantification, in: 52nd AIAA/SAE/ASEE Joint Propulsion Conference, 2016, p. 4649.

- Mukhopadhyay et al. [2013] A. Mukhopadhyay, R. R. Chaudhari, T. Paul, S. Sen, A. Ray, Lean blow-out prediction in gas turbine combustors using symbolic time series analysis, Journal of Propulsion and Power 29 (2013) 950–960.

- Sarkar et al. [2015] S. Sarkar, A. Ray, A. Mukhopadhyay, S. Sen, Dynamic data-driven prediction of lean blowout in a swirl-stabilized combustor, International Journal of Spray and Combustion Dynamics 7 (2015) 209–241.

- Dey et al. [2015] D. Dey, R. R. Chaudhari, A. Mukhopadhyay, S. Sen, S. Chakravorti, A cross-wavelet transform aided rule based approach for early prediction of lean blow-out in swirl-stabilized dump combustor, International Journal of Spray and Combustion Dynamics 7 (2015) 69–90.

- De et al. [2021] S. De, A. Bhattacharya, A. Mukhopadhyay, S. Sen, Early detection of lean blowout in a combustor using symbolic analysis of colour images, Measurement 186 (2021) 110113.

- Sarkar et al. [2015] S. Sarkar, K. G. Lore, S. Sarkar, Early detection of combustion instability by neural-symbolic analysis on hi-speed video., in: CoCo@ NIPS, 2015.

- Akintayo et al. [2016] A. Akintayo, K. G. Lore, S. Sarkar, S. Sarkar, Prognostics of combustion instabilities from hi-speed flame video using a deep convolutional selective autoencoder, International Journal of Prognostics and Health Management 7 (2016) 1–14.

- Gangopadhyay et al. [2020a] T. Gangopadhyay, A. Locurto, J. B. Michael, S. Sarkar, Deep learning algorithms for detecting combustion instabilities, in: Dynamics and Control of Energy Systems, Springer, 2020a, pp. 283–300.

- Gangopadhyay et al. [2020b] T. Gangopadhyay, S. Y. Tan, A. LoCurto, J. B. Michael, S. Sarkar, Interpretable deep learning for monitoring combustion instability, IFAC-PapersOnLine 53 (2020b) 832–837.

- Gangopadhyay et al. [2021a] T. Gangopadhyay, V. Ramanan, A. Akintayo, P. K. Boor, S. Sarkar, S. R. Chakravarthy, S. Sarkar, 3d convolutional selective autoencoder for instability detection in combustion systems, Energy and AI 4 (2021a) 100067.

- Gangopadhyay et al. [2021b] T. Gangopadhyay, V. Ramanan, S. R. Chakravarthy, S. Sarkar, Cross-modal virtual sensing for combustion instability monitoring, arXiv preprint arXiv:2110.01659 (2021b).

- Schefer et al. [2002] R. W. Schefer, D. Wicksall, A. Agrawal, Combustion of hydrogen-enriched methane in a lean premixed swirl-stabilized burner, Proceedings of the combustion institute 29 (2002) 843–851.

- Chaudhuri and Cetegen [2008] S. Chaudhuri, B. M. Cetegen, Blowoff characteristics of bluff-body stabilized conical premixed flames with upstream spatial mixture gradients and velocity oscillations, Combustion and flame 153 (2008) 616–633.

- Domen et al. [2015] S. Domen, H. Gotoda, T. Kuriyama, Y. Okuno, S. Tachibana, Detection and prevention of blowout in a lean premixed gas-turbine model combustor using the concept of dynamical system theory, Proceedings of the Combustion Institute 35 (2015) 3245–3253.

- Cho et al. [2014] K. Cho, B. Van Merriënboer, C. Gulcehre, D. Bahdanau, F. Bougares, H. Schwenk, Y. Bengio, Learning phrase representations using rnn encoder-decoder for statistical machine translation, arXiv preprint arXiv:1406.1078 (2014).

- Miao et al. [2015] Y. Miao, M. Gowayyed, F. Metze, Eesen: End-to-end speech recognition using deep rnn models and wfst-based decoding, in: 2015 IEEE Workshop on Automatic Speech Recognition and Understanding (ASRU), IEEE, 2015, pp. 167–174.

- Selvin et al. [2017] S. Selvin, R. Vinayakumar, E. Gopalakrishnan, V. K. Menon, K. Soman, Stock price prediction using lstm, rnn and cnn-sliding window model, in: 2017 international conference on advances in computing, communications and informatics (icacci), IEEE, 2017, pp. 1643–1647.

- Jagannatha and Yu [2016] A. N. Jagannatha, H. Yu, Bidirectional rnn for medical event detection in electronic health records, in: Proceedings of the conference. Association for Computational Linguistics. North American Chapter. Meeting, volume 2016, NIH Public Access, 2016, p. 473.

- Hewamalage et al. [2020] H. Hewamalage, C. Bergmeir, K. Bandara, Recurrent neural networks for time series forecasting: Current status and future directions, International Journal of Forecasting (2020).

- Bengio et al. [1994] Y. Bengio, P. Simard, P. Frasconi, et al., Learning long-term dependencies with gradient descent is difficult, IEEE transactions on neural networks 5 (1994) 157–166.

- Gers et al. [1999] F. A. Gers, J. Schmidhuber, F. Cummins, Learning to forget: Continual prediction with lstm (1999).

- Hochreiter and Schmidhuber [1997] S. Hochreiter, J. Schmidhuber, Long short-term memory, Neural computation 9 (1997) 1735–1780.

- Kingma and Ba [2014] D. P. Kingma, J. Ba, Adam: A method for stochastic optimization, arXiv preprint arXiv:1412.6980 (2014).

- Rabiner [1989] L. R. Rabiner, A tutorial on hidden markov models and selected applications in speech recognition, Proceedings of the IEEE 77 (1989) 257–286.

- Gotoda et al. [2014] H. Gotoda, Y. Shinoda, M. Kobayashi, Y. Okuno, S. Tachibana, Detection and control of combustion instability based on the concept of dynamical system theory, Physical Review E 89 (2014) 022910.

Supplementary Material

Recurrent Neural Network (RNN) Baseline Model

We use a deep learning baseline model based on RNN, shown in Fig. 8. While describing the RNN based baseline model, to avoid clutter, we denote the input at time-step for a particular window as instead of . Therefore, for an univariate time-series, the input at is .

The model comprises two stacked RNN layers and two fully connected layers. We denote the hidden state corresponding to the first RNN layer with , where indicates the time-step. Similarly, the hidden state of the second layer of RNN is denoted as . The hidden state of the first RNN layer at time-step is .

At time-step , the input and previous hidden state is passed to the RNN block, which computes using a non-linear mapping as follows:

| (2) |

where with the previous hidden state and the input for the -th time step. The parameters to learn are and .

The updated state is passed onto the next time-step. After reading the input sequence in order from to , the first RNN layer returns a sequence of hidden states , where . This sequence acts as input to the second RNN layer.

For the second RNN layer, the hidden state at time-step is denoted as . At time-step , the inputs to the RNN block are and . The updated hidden state is computed as:

| (3) |

where . The learnable parameters are and .

The output from the last time-step of the second RNN layer is considered as the compressed information of the input sequence. Thereafter, one fully connected layer is used:

| (4) |

The learnable parameters are and .

The prediction is computed after passing through a fully connected layer.

| (5) |

where the parameters to be learned are and .

Results

Transition State Error From Reference Set

We demonstrate the reference protocol results for the RNN model in Fig. 9. We observe a similar trend as we observed for the LSTM model - with increasing the test RMSE increases, and the RMSE corresponding to the transition state is denoted as 0.0786.

The reference protocol results for the HMM are shown in Fig. 10. Unlike the deep learning results of RNN and LSTM, we observe that the trend is not smooth in the case of the HMM. From Fig. 10, the transition state RMSE is 0.1348.

To get the reference protocol results for the method translational error, we perform three independent computations as the method has a randomness factor. We present the mean and standard deviation with error bars in Fig. 11. We observe an increasing value of translational error as we go towards the lean blowout. The highest value of translational error is observed at . The mean translational error corresponding to the transition state is 0.1119. This error value will be used to predict the labels for the quasi-static datasets in the test protocols.

Performance on the Test Protocols

We present the performance of the RNN model for the test protocols in Fig. 12. For each quasi-static dataset in the test protocols, the trained model predicts an RMSE, and the RMSE values are plotted with varying . The confusion matrices are computed using the actual labels to evaluate the performance. Like the LSTM model, the RNN model also demonstrates 100% accuracy. Therefore, both the deep learning models can accurately detect the transition to lean blowout in combustion systems.

Test results using the Hidden Markov Model are shown in Fig. 13. The RMSE plots show that HMM is not as effective as the deep learning models in capturing the transitions with changing conditions. For all the test protocols, the confusion matrices show a lot of false positives, with the highest number of false positives for 75 SLPM, where it can only correctly identify one ‘healthy’ condition. Therefore, the major disadvantage of the HMM model lies in false alarms, which can impact decision-making in real-time detection and control of LBO.

We demonstrate the test results using the translational error method in Fig. 14. While the mean and standard deviation of the error have been shown in the plot for each , the confusion matrix computation is based on the mean translational error value. From the plots, we observe that the standard deviation values are pretty high for some datasets. This randomness is one of the major disadvantages of using translational error as a detection tool. While it can be a useful tool for offline analysis of lean blowout, the deep learning models outperform this method, as evident from the confusion matrices of Fig. 14. Except for 80 SLPM, for all the other test protocols, there are a lot of false negatives. False-negative refers to falsely predicting negative or ‘healthy’ while it is actually positive or ‘unhealthy.’ False negatives can be a serious issue in engine operation and lead to incorrect decision-making.