A Data-driven Hierarchical Control Structure for Systems with Uncertainty

Abstract

The paper introduces a Data-driven Hierarchical Control (DHC) structure to improve performance of systems operating under the effect of system and/or environment uncertainty. The proposed hierarchical approach consists of two parts: 1) A data-driven model identification component to learn a linear approximation between reference signals given to an existing lower-level controller and uncertain time-varying plant outputs. 2) A higher-level controller component that utilizes the identified approximation and wraps around the existing controller for the system to handle modeling errors and environment uncertainties during system deployment. We derive loose and tight bounds for the identified approximation’s sensitivity to noisy data. Further, we show that adding the higher-level controller maintains the original system’s stability. A benefit of the proposed approach is that it requires only a small number of observations of states and inputs and thus works online, which makes it appealing for robotics applications where real-time operation is critical. The efficacy of the DHC structure is demonstrated in simulation and is validated experimentally using aerial robots that have approximately known mass and moment of inertia parameters and operate under the influence of ground effect.

I Introduction

As robots increasingly venture outside of the lab, the effect of uncertainty within the system and/or at the robot-environment interactions becomes more pronounced. Motivating examples include the influence of surface effects for Unmanned Aerial Vehicles (UAVs), unknown flow dynamics for marine robots, and inherently uncertain leg-ground contacts for legged robots.

Central to exploiting system and robot-environment uncertainty is the ability to quickly identify deviations from nominal behaviors based on data collected during robot deployment. Pre-deployment model identification and calibration tools (e.g., [1, 2, 3]) can help improve model-based control. However, even though such models can be obtained with high precision, uncertain, time-varying disturbances during deployment may invalidate the utilized model to the extent that an otherwise well-tuned controller fails [4]. One way to tackle this is to incorporate Uncertainty Quantification (UQ) into control design.

Methods using UQ, like a-priori estimation, rely heavily on the employed model structure. Generated models are usually complex and hard to incorporate into controller design in practice [5, 6]. When prior information about the environment is limited, methods based on principles of adaptive control [7] can apply. An appealing feature of these methods is that they can provide some form of performance and safety guarantees (e.g., regarding system stability). Despite their overall effectiveness, such methods can still be challenged in two key ways. First, when the intensity of the uncertainty affecting system behavior violates the underlying controller assumptions. Second, when modeling errors [8], such as unmodeled dynamics, render the model invalid [4]. To address the problem of unmodeled dynamics, data-driven control techniques have been proposed.

Machine learning methods, such as Gaussian Processes [9, 10] or Deep Neural Networks [11], can be used to either model the system [12, 13] or determine control inputs [14, 15] following a black-box input-output training procedure. However, as state dimensionality and system structure complexity increase (e.g., by using ‘deeper’ neural networks), these methods may become difficult to run in real time on robotic systems (e.g., [16, 17, 18]). One way to address this issue is to adopt a hierarchical structure that involves deep neural networks in control design. Instead of designing new controllers directly, reference trajectories are generated to deal with uncertainty through learning [19, 20]. Unfortunately, certain limitations still exist even when adopting a hierarchical structure. Neural-network-based approaches require a large body of data to train well [21]. At the same time, they remain limited in terms of offering some form of performance and safety guarantees, although this is currently an active research topic (e.g., [22, 20, 23]).

Besides neural-network-based approaches, dimensionality reduction and dynamics decomposition approaches can play an important role in data-driven algorithms. Methods like DMD (Dynamic Mode Decomposition [24]), EDMD (Extended DMD [25]), POD (Proper Orthogonal Decomposition [26, 27]) and their various kernel [28] and tensor [29] extensions have been successfully applied across many areas. A benefit of decomposition algorithms is that they can significantly reduce the amount of data required for approximating a system’s model (e.g., [30, 31, 32]).

Fueled by the potential of hierarchical methods and dimensionality-reduction approaches, this paper presents a new Data-driven Hierarchical Control (DHC) structure to handle uncertainty. The approach hinges on DMD with Control (DMDc [33]) to learn a higher-level controller and then generate a refined reference to improve an existing lower-level controller’s performance (Fig. 1). Our approach can be particularly appropriate in practice when a low-level, high-rate, pre-tuned controller is already in place, and a second higher-level controller is wrapped around the lower-level one to allow for the system to operate under uncertainty. The quadrotor UAV is one illustrative case, where it is now common practice to employ a hierarchical control structure [34, 35]. The low-level controller is responsible for controlling the attitude of the robot, while the high-level controller determines its position.

Succinctly, the paper’s contribution is twofold.

- We propose a hierarchical control structure that refines a reference signal sent to a lower-level controller to deal with uncertainty. The structure builds on top of a model-identification block based on DMDc, and is shown to retain the stability of the underlying controller.

- We analyze the sensitivity of DMDc to noisy data, and provide loose and tight bounds for the model identification component of our method.

Furthermore, we evaluate and validate the methodology using aerial robots both in simulation and experimentally. In turn, this effort can help design controllers able to learn how to harness uncertain aerodynamics, such as ground effect. A supplementary video can be found at https://youtu.be/OznDCskVnJU.

II Technical Background

Dynamic Mode Decomposition (DMD) can characterize nonlinear dynamics through analysis of an approximated linear system [36]. Most relevant to our work here, DMD with control (DMDc), an extension of the original DMD [37], aims to incorporate the effect of external inputs [33].

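The goal of DMDc is to analyze the relation between a future system measurement $x_{k+1}$, a current measurement $x_k$, and the current input $u_k$. For each triplet of measurement data $(x_{k+1}, x_k, u_k)$, a pair of linear operators, $A$ and $B$, is determined to approximate

$$x_{k+1} \approx A x_k + B u_k. \qquad (1)$$

Operators $A$ and $B$ are selected as the best-fit solution for all triplets of available data. Given observations and inputs up to time instant $k$ arranged in matrices $X = [x_1, \dots, x_{k-1}]$, $X' = [x_2, \dots, x_k]$, and $\Upsilon = [u_1, \dots, u_{k-1}]$, approximation (1) can be rewritten compactly as

$$X' \approx A X + B \Upsilon = \begin{bmatrix} A & B \end{bmatrix} \begin{bmatrix} X \\ \Upsilon \end{bmatrix}. \qquad (2)$$

The best-fit solution is then sought as

$$\begin{bmatrix} A & B \end{bmatrix} = X' \begin{bmatrix} X \\ \Upsilon \end{bmatrix}^{\dagger},$$

where $\dagger$ denotes the pseudo-inverse. The problem can be solved directly by Singular Value Decomposition (SVD) or QR decomposition, among others [38, 39].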
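As an illustration of the best-fit step, the following minimal sketch assumes the standard DMDc form of [33], in which the shifted snapshots are approximated by linear operators acting on the stacked snapshots and inputs. The function name `dmdc_fit`, the variable names, and the two-state test system are assumptions made for this example only; they are not taken from the paper.

```python
import numpy as np

def dmdc_fit(X, Xp, U):
    """Least-squares estimate of (A, B) such that Xp ~ A @ X + B @ U.

    X  : (n, m) array of snapshots x_1 ... x_m
    Xp : (n, m) array of shifted snapshots x_2 ... x_{m+1}
    U  : (l, m) array of inputs u_1 ... u_m
    """
    n = X.shape[0]
    omega = np.vstack([X, U])        # stacked data matrix [X; U]
    G = Xp @ np.linalg.pinv(omega)   # [A B], using the SVD-based pseudo-inverse
    return G[:, :n], G[:, n:]

# Hypothetical 2-state, 1-input system, used only to exercise the function.
rng = np.random.default_rng(0)
A_true = np.array([[0.9, 0.1], [0.0, 0.8]])
B_true = np.array([[0.0], [1.0]])
m = 20
U = rng.standard_normal((1, m))
X = np.zeros((2, m + 1))
X[:, 0] = [1.0, -1.0]
for k in range(m):
    X[:, k + 1] = A_true @ X[:, k] + B_true @ U[:, k]

A_hat, B_hat = dmdc_fit(X[:, :-1], X[:, 1:], U)
```

With noise-free data and sufficiently rich inputs, the estimated operators coincide with the generating ones up to numerical precision; with noisy data they are only a best fit in the least-squares sense.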

III Data-driven Hierarchical Control (DHC)

III-A Controller Structure

Given an existing low-level controller for a plant of interest, the proposed DHC structure adds a model identification block and a higher-level (“outer”) controller to extract uncertainties and compensate for them in real time, respectively (Fig. 1). The low-level controller and the original plant are combined into a new plant. The model identification block is built from DMDc and estimates linear operators and from new plant output and reference signals. The identified operators are then used by the higher-level controller to refine the reference signal sent to the new plant.

III-B Control Procedure

At timestep , the outer controller refines the original reference given to the lower-level controller as per . To estimate and using DMDc, we treat the measured state as the “input” and the refined references and as the evolving “output.” The reason for doing so is that the controller operates on the reference signal, which in turn needs to be “learned” based on observed system state. Then, DMDc operates on the linear approximation . Note that to avoid singularities, we check the rank of the measurement matrices used to approximate models. After an initialization for steps (see Footnote 1), the identification process repeats to refine the estimated model until the control task is finished (Algorithm 1).

Footnote 1: From a general standpoint, (2) can be solved when linearly independent measurements are available [33]. Thus, a lower bound for is . In this work we set .
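The rank check mentioned above can be sketched as a guard around the least-squares solve. This is an assumed implementation, not the paper's Algorithm 1: the function name `identify_if_well_posed`, its argument names, and the tolerance are hypothetical, and the roles of "input" and "output" data are left generic.

```python
import numpy as np

def identify_if_well_posed(outputs_next, outputs_now, inputs, tol=1e-9):
    """Return (A, B), or None when the stacked data matrix is rank deficient.

    outputs_next : (n, m) array of shifted "output" snapshots
    outputs_now  : (n, m) array of current "output" snapshots
    inputs       : (l, m) array of "input" snapshots
    """
    n = outputs_now.shape[0]
    omega = np.vstack([outputs_now, inputs])
    # A singular (or nearly singular) data matrix gives no unique best fit,
    # so the caller can keep its previous estimate instead.
    if np.linalg.matrix_rank(omega, tol=tol) < omega.shape[0]:
        return None
    G = outputs_next @ np.linalg.pinv(omega)
    return G[:, :n], G[:, n:]

# Well-posed case: independent random data (hypothetical).
rng = np.random.default_rng(1)
good = identify_if_well_posed(rng.standard_normal((1, 6)),
                              rng.standard_normal((1, 6)),
                              rng.standard_normal((1, 6)))

# Degenerate case: the two rows of the stacked matrix are proportional.
bad = identify_if_well_posed(np.ones((1, 6)),
                             np.ones((1, 6)),
                             2.0 * np.ones((1, 6)))
```

Returning `None` rather than raising lets an online loop skip the update for that step and continue with the last valid model, which is one possible design choice among several.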

III-C Stability Analysis

Let the underlying low-level controller be stable, and consider the origin as the reference, i.e. . Given the Lyapunov function , we calculate . When , we have . Then,

Thus, decays over time and satisfies . From the standard Lyapunov argument, the equilibrium is then stable. Hence, wrapping the outer controller around the underlying plant respects the stability properties of the original lower-level controller.

IV Sensitivity to Noisy Data

The model identification block must extract dynamics even when measurement data are noisy. Below we analyze the sensitivity to noisy data and derive two estimation error bounds.

Lemma 2.1. Consider the system

| (3) |

The sensitivity of system (3) to perturbations in s and is

| (4) |

where is the condition number of linear operator H, and

Proof of Lemma 2.1: Start with the normal equations

The first-order perturbation relation is

which we re-arrange to get

Define , and let be singular values of . Then,

Dividing both sides by and after some algebraic manipulation so that and only appear in ratios of and , as , we get

Then with

we arrive at (4).

Corollary 2.2. The estimation error of problem (2) is bounded by the measurements’ noise intensity, i.e.

| (5) |

where is the condition number of observation matrix , and

Proof of Corollary 2.2: The proof follows directly from Lemma 2.1 by setting , , and .
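Since the bound depends on the condition number of the observation matrix, it can help to see how that quantity behaves for well-spread versus nearly collinear data. The sketch below computes the 2-norm condition number from singular values; the helper name `condition_number` and both example matrices are assumptions for illustration and do not appear in the paper.

```python
import numpy as np

def condition_number(M):
    """2-norm condition number: largest over smallest singular value."""
    s = np.linalg.svd(M, compute_uv=False)  # singular values, descending
    return s[0] / s[-1]

# Hypothetical data matrices with two rows and three snapshots each.
well_spread = np.array([[1.0, 0.0, 1.0],
                        [0.0, 1.0, 1.0]])
nearly_collinear = np.array([[1.0, 1.0, 1.0],
                             [1.0, 1.0, 1.001]])

kappa_good = condition_number(well_spread)
kappa_bad = condition_number(nearly_collinear)
```

A large condition number indicates that small perturbations in the data can be strongly amplified in the estimated operators, which is the qualitative behavior the bounds in this section quantify.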

Lemma 2.3 [A tighter bound]. Let . In the case that , the sensitivity of (3) is reduced to

| (6) |

Proof of Lemma 2.3: From system (3), we have

and it follows that

Then,

Setting yields

which concludes the proof.

Corollary 2.4. When the disturbance occurs in observed states, i.e. a tighter error bound is

| (7) |

Proof of Corollary 2.4: The proof follows directly from Lemma 2.3 by setting , , and .

Toy Example: Consider the simple linear system

| (8) |

We add zero-mean white noise to corrupt state observations, i.e. , with in increments. The initial condition is set at . We perform a Monte Carlo simulation for trials to produce the average error. With reference to Fig. 2, the effect of the disturbance increases as variance increases (as expected). The general bound given in (5) expands at higher rates than the tighter one we obtain via (7) as the noise variance increases.
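A Monte Carlo study of this kind can be sketched as follows. The system matrices, noise levels, trial count, and horizon below are hypothetical stand-ins (they are not the values of system (8) or of the experiment above), and the error metric, a relative Frobenius norm of the operator mismatch, is likewise an assumption.

```python
import numpy as np

def mean_identification_error(sigma, trials=200, steps=30, seed=0):
    """Average relative error of the identified [A B] under observation noise."""
    rng = np.random.default_rng(seed)
    A_true = np.array([[0.9, 0.2], [-0.1, 0.7]])  # hypothetical stable system
    B_true = np.array([[0.0], [1.0]])
    G_true = np.hstack([A_true, B_true])
    errors = []
    for _ in range(trials):
        U = rng.standard_normal((1, steps))
        X = np.zeros((2, steps + 1))
        X[:, 0] = [1.0, 1.0]
        for k in range(steps):
            X[:, k + 1] = A_true @ X[:, k] + B_true @ U[:, k]
        # Zero-mean white noise corrupts the state observations only.
        Y = X + sigma * rng.standard_normal(X.shape)
        omega = np.vstack([Y[:, :-1], U])
        G_hat = Y[:, 1:] @ np.linalg.pinv(omega)
        errors.append(np.linalg.norm(G_hat - G_true) / np.linalg.norm(G_true))
    return float(np.mean(errors))

sigmas = [0.0, 0.05, 0.2]
avg_errors = [mean_identification_error(s) for s in sigmas]
```

Using the same seed for every noise level means each level sees the same random draws scaled by a different standard deviation, so differences in the average error can be attributed to the noise intensity alone.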

V Control Structure Experiments

We demonstrate the utility and evaluate the performance of DHC in simulation with a planar quadrotor under different noise intensities, and then test it experimentally with a quadrotor flying under the influence of ground effect.

V-A Simulation in a Planar Quadrotor

While quadrotor dynamics is well understood, there is a certain limit on the degree of variations in parameters like mass and moment of inertia that model-based controllers can handle. Such variations make the utilized model inaccurate, which in turn may render the controller unstable. Online adjustment can help quantify model errors and inform the controller so as to maintain stable operation.

V-A1 System Description

V-A2 Controller Design

Besides our proposed DHC structure, we design and compare against a State Feedback Controller (SFC), a linear Model Predictive Controller (MPC), and a Model Reference Adaptive Controller (MRAC).

- State Feedback Controller (SFC): With the closed-loop system poles set at [0.9;0.8;-0.9;-0.8;0.95;-0.95], we get

- Model Predictive Control (MPC): A linear MPC is designed directly with model (9) at hand. The prediction and control horizon is set to be and , and the pitch angle is constrained in the interval .

- Model Reference Adaptive Control (MRAC): A parameter adaptation rule is used together with the previously described MPC to adjust the controller parameter so that the output of the plant tracks the output of the reference model having the same reference input. The adaptation rule is designed based on [42] as

  where the weights were selected empirically to and . To tune the weights, we first chose their order to be consistent with the physical quantity they relate to. Then, we solved a sequential optimization problem to identify those parameter values that maximize MRAC’s performance.

- DHC: Our method also uses the linear MPC as the underlying controller. Then, we follow the steps in Algorithm 1 to simulate the system.

V-A3 Simulation

The quadrotor’s task is to reach position from initial condition . We analyze the performance of each controller in two cases: when parameters are uncertain with low noise intensity (c1) where and , and with high noise intensity (c2) where and . Perturbations in model parameters are generated at initialization of each simulated trial by sampling from normal distributions. Distribution means are the nominal values for and . Distribution variances are selected as above. Once the perturbation is sampled, it remains constant for the duration of the trial (see Footnote 2). For every different condition we perform trials.

Footnote 2: The specific selection of constant perturbations in model parameters emulates the case of inaccurate measurements of mass and inertia of the vehicle; these values may be approximately known (or estimated), but do not change during operation. The robustness properties of the proposed control structure to general perturbations are analyzed in Section IV.

| Case | Controller | Settling time (s) | Total control effort to stabilize | Steady state error | Overshoot |
| --- | --- | --- | --- | --- | --- |
| c1 | SFC | 1.95 | 1.2453 | [0;0] | 2.5828 |
| c1 | MPC | 0.04 | 0.6020 | [0.6846;0.7740] | 0 |
| c1 | MRAC | 0.72 | 11.1852 | [0.2986;0.7038] | 1.4575 |
| c1 | DHC | 0.38 | 4.0847 | [0;0.0011] | 0 |
| c2 | SFC | Unstable | | | |
| c2 | MPC | 0.04 | 0.6032 | [1.6951;1.1906] | 0 |
| c2 | MRAC | 0.92 | 11.0816 | [1.0351;1.0357] | 0.9718 |
| c2 | DHC | 0.38 | 3.6052 | [0;0.0085] | 0 |

V-A4 Results and Discussion

Simulation results suggest that all controllers can stabilize the system under low noise intensity (see Table I). However, as noise intensity increases, SFC fails. Linear MPC converges faster than all other methods, but produces increasingly large steady-state error. MRAC can reduce such steady-state errors, at the expense of longer settling time (within 2%) and larger control effort, and it can cause a large overshoot, as SFC does. Despite its effectiveness shown here, tuning MRAC can be tedious, and its performance depends strongly on the tuned weights. On the other hand, our proposed DHC structure keeps updating the model that implicitly includes noise. As such, it can quickly extract refined dynamics from uncertain data and, compared to MRAC, stabilizes the system faster, with less control effort and smaller steady-state error. Further, as the disturbance gets embedded in the learned model, the performance of the controller does not deteriorate significantly as noise intensity increases.
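The response metrics discussed above can be computed from a sampled trajectory along the lines of the sketch below. The definitions used here (a 2% band around the target for settling time, peak excursion above the target for overshoot, final-sample deviation for steady-state error) are common conventions assumed for illustration; the exact definitions behind the tabulated values may differ. The function name `step_metrics` and the first-order test response are hypothetical.

```python
import numpy as np

def step_metrics(t, y, target, band=0.02):
    """Settling time, overshoot, and steady-state error of one output channel."""
    t = np.asarray(t, dtype=float)
    y = np.asarray(y, dtype=float)
    outside = np.abs(y - target) > band * abs(target)
    if outside[-1]:
        settling_time = None                 # never settles within the band
    elif not outside.any():
        settling_time = float(t[0])          # inside the band from the start
    else:
        last_outside = np.flatnonzero(outside)[-1]
        settling_time = float(t[last_outside + 1])
    overshoot = max(0.0, float(np.max(y)) - target)   # assumes a positive step
    steady_state_error = abs(float(y[-1]) - target)
    return settling_time, overshoot, steady_state_error

# Hypothetical first-order response toward a unit target.
t = np.arange(0.0, 5.0, 0.01)
y = 1.0 - np.exp(-2.0 * t)
settling_time, overshoot, sse = step_metrics(t, y, target=1.0)
```

For multi-state systems such as the planar quadrotor, the same function can be applied per channel, which is consistent with steady-state error being reported as a vector.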

V-B Controlling a Crazyflie Quadrotor Under Ground Effect

We experimentally evaluate our DHC structure on a Crazyflie quadrotor operating under the influence of ground effect over sustained periods of time. Ground effect [43] manifests itself as an increase in the generated rotor thrust given constant input rotor power [44] when operating close to the ground or over other surfaces. When operating in ground effect, the dynamics of the robot-environment interaction change to a degree that an underlying model tuned for mid-air operation may no longer be valid [4, 27]. In turn, model mismatches caused by varying robot-environment interaction (i.e. a form of uncertainty) degrade the robot’s performance, both in terms of stability [45] and control. As we show below, controllers unaware of ground effect will tend to raise the height of the robot when the latter flies sufficiently close over an obstacle. This behavior may lead to crashes when operating in confined and cluttered environments. Wrapping DHC around such a controller can help the robot adjust to the impact of ground effect without any specific model of it.

V-B1 Controller Design

We follow the controller structure shown in Fig. 1 (as in simulation). We test using two distinct lower-level controllers: a nonlinear geometric controller [46], and a PID controller. Controller gains are those pre-tuned by the manufacturer. Hence, note that the controllers have been tuned for operation in mid-air, not in near-ground operation. The controllers are different from those in simulation since 1) they are often used in practice, and 2) their underlying performance in simulation would depend on quality of tuning (especially for PID) and would thus not add significant value beyond the controllers already tested in simulation.

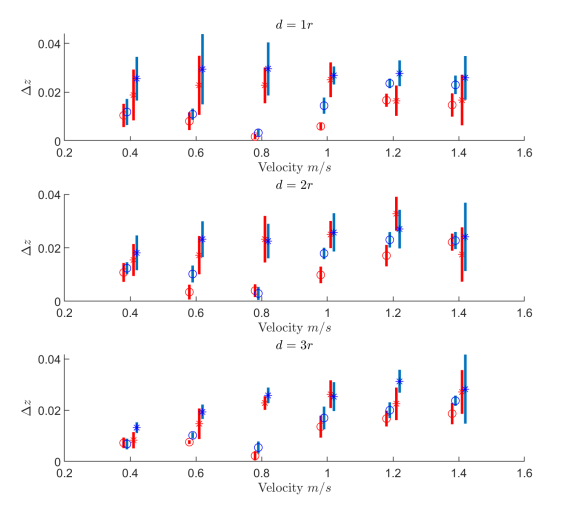

We evaluate the performance of both low-level controllers with and without DHC wrapped around them. The task is to fly at a constant height between an initial and a goal position (Fig. 4). During part of the trajectory, the robot flies over an obstacle which creates sustained ground effect and influences robot behavior. Performance is quantified in terms of deviating from the desired height due to the ground effect.

V-B2 Experiment

The robot is commanded to follow m straight-line constant-height trajectories. The height of the obstacle is m. The robot propeller radius is m. We collect data at three distinct heights , and while flying at six distinct forward speeds m/s. Experiments are run with the four aforementioned control structures. This leads to a total of 3 × 6 × 4 = 72 distinct case studies. For each case study, we collect data from repeated trials. To minimize the effect of battery depletion, a fully charged battery is used at the beginning of each -trial experimental session. Position data are collected via motion capture (VICON).

V-B3 Results and Discussion

Experimental results (Figs. 5 and 6) reveal that wrapping the proposed hierarchical structure around a low-level controller (red curves in figures) can help keep the quadrotor closer to the desired height, on average, when compared to the standalone original controller (blue curves). This indicates that incorporating model identification and controller adaptation modules through DHC helps the overall control structure adjust better and faster to uncertain perturbations such as, in this case, ground effect.

Furthermore, model/controller adaptation appears to perform better at low distances from the ground and as the forward velocity increases. This observation is in line with aerodynamics suggesting that 1) ground effect starts diminishing when the flying height above the obstacle increases to , and 2) as forward speed increases both ground effect and drag affect rotorcraft flight in still not well-understood ways [44]. Hence, through this experiment we show how DHC can be used to render a controller adaptive to environment changes that excite unmodeled dynamics.

The hierarchical structure may still remain bound to the low-level controller’s possible limitations, as shown in simulation. In the experiments, this is observed as forward speed increases. After clearing the obstacle, the controller’s apparent steady-state error seems to increase at higher speeds (cf. bottom two rows of Fig. 6). While this may be an artefact of the limited experimental volume, the settling time of the controller with and without DHC still increases. Future work will investigate the interplay between the lower-level controller and DHC, and consider different hierarchical adaptive control structures to change an underlying controller’s behavior more drastically when needed.

VI Conclusions

The paper introduced a Data-driven Hierarchical Control (DHC) structure with two key functions. 1) DHC utilizes an online data-driven approach to learn a model relating input references to true outputs. 2) DHC utilizes the derived model to keep refining a reference signal given to an underlying low-level controller to ensure system performance in the presence of system and/or system-environment uncertainty. We showed that DHC retains the stability properties of the underlying lower-level controller, and further derived loose and tight bounds for our method’s sensitivity to noisy data.

The utility and performance of the proposed approach are tested in simulation and experimentally with a quadrotor. The test cases were designed to excite specific types of uncertainty that are common in practice: 1) constant model parameter errors in simulation; and 2) variable uncertain robot-environment interaction experimentally through operation under ground effect. Results suggest that DHC can successfully be wrapped around existing controllers in practice, to improve their performance by discovering and harnessing unmodeled dynamics during deployment.

References

- [1] S. Zarovy, M. Costello, A. Mehta, G. Gremillion, D. Miller, B. Ranganathan, J. S. Humbert, and P. Samuel, “Experimental study of gust effects on micro air vehicles,” AIAA Atmospheric Flight Mechanics Conference, p. 7818, 2010.

- [2] G. S. Aoude, B. D. Luders, J. M. Joseph, N. Roy, and J. P. How, “Probabilistically safe motion planning to avoid dynamic obstacles with uncertain motion patterns,” Autonomous Robots, pp. 51–76, 2013.

- [3] C. Powers, D. Mellinger, A. Kushleyev, B. Kothmann, and V. S. A. Kumar, “Influence of aerodynamics and proximity effects in quadrotor flight,” Int. Symp. on Experimental Robotics (ISER), 2012.

- [4] K. Karydis, I. Poulakakis, J. Sun, and H. G. Tanner, “Probabilistically valid stochastic extensions of deterministic models for systems with uncertainty,” The International Journal of Robotics Research, vol. 34, no. 10, pp. 1278–1295, 2015.

- [5] H. Yoon, D. B. Hart, and S. A. McKenna, “Parameter estimation and predictive uncertainty in stochastic inverse modeling of groundwater flow: Comparing null-space monte carlo and multiple starting point methods,” Water Resources Research, pp. 536–553, 2013.

- [6] M. L. Rodríguez-Arévalo, J. Neira, and J. A. Castellanos, “On the importance of uncertainty representation in active slam,” IEEE Transactions on Robotics, vol. 34, no. 3, pp. 829–834, 2018.

- [7] I. D. Landau, R. Lozano, and M. M’Saad, Adaptive Control, J. W. Modestino, A. Fettweis, J. L. Massey, M. Thoma, E. D. Sontag, and B. W. Dickinson, Eds. Berlin, Heidelberg: Springer-Verlag, 1998.

- [8] D. Q. Mayne, J. B. Rawlings, C. V. Rao, and P. O. Scokaert, “Constrained model predictive control: Stability and optimality,” Automatica, vol. 36, no. 6, pp. 789–814, 2000.

- [9] D. Piga, M. Forgione, S. Formentin, and A. Bemporad, “Performance-oriented model learning for data-driven mpc design,” IEEE Control Systems Letters, vol. 3, no. 3, pp. 577–582, 2019.

- [10] K. Pereida and A. P. Schoellig, “Adaptive model predictive control for high-accuracy trajectory tracking in changing conditions,” CoRR, 2018.

- [11] G. Shi, X. Shi, M. O’Connell, R. Yu, K. Azizzadenesheli, A. Anandkumar, Y. Yue, and S. Chung, “Neural lander: Stable drone landing control using learned dynamics,” CoRR, 2018.

- [12] C. D. McKinnon and A. P. Schoellig, “Experience-based model selection to enable long-term, safe control for repetitive tasks under changing conditions,” IEEE/RSJ Int. Conf. on Intelligent Robots and Systems (IROS), pp. 2977–2984, 2018.

- [13] T. Wang, H. Gao, and J. Qiu, “A combined adaptive neural network and nonlinear model predictive control for multirate networked industrial process control,” IEEE Transactions on Neural Networks and Learning Systems, vol. 27, no. 2, pp. 416–425, 2015.

- [14] M. T. Frye and R. S. Provence, “Direct inverse control using an artificial neural network for the autonomous hover of a helicopter,” IEEE Int. Conf. on Systems, Man, and Cybernetics (SMC), pp. 4121–4122, 2014.

- [15] H. Suprijono and B. Kusumoputro, “Direct inverse control based on neural network for unmanned small helicopter attitude and altitude control,” Journal of Telecommunication, Electronic and Computer Engineering (JTEC), pp. 99–102, 2017.

- [16] S. A. Nivison and P. P. Khargonekar, “Development of a robust deep recurrent neural network controller for flight applications,” in American Control Conf. (ACC). IEEE, 2017, pp. 5336–5342.

- [17] B. J. Emran and H. Najjaran, “Adaptive neural network control of quadrotor system under the presence of actuator constraints,” in IEEE International Conference on Systems, Man, and Cybernetics (SMC), 2017, pp. 2619–2624.

- [18] S. Bansal, A. K. Akametalu, F. J. Jiang, F. Laine, and C. J. Tomlin, “Learning quadrotor dynamics using neural network for flight control,” in IEEE Conf. on Decision and Control (CDC), 2016, pp. 4653–4660.

- [19] Q. Li, J. Qian, Z. Zhu, X. Bao, M. K. Helwa, and A. P. Schoellig, “Deep neural networks for improved, impromptu trajectory tracking of quadrotors,” IEEE Int. Conf. on Robotics and Automation (ICRA), pp. 5183–5189, 2017.

- [20] S. Zhou, M. K. Helwa, and A. P. Schoellig, “Design of deep neural networks as add-on blocks for improving impromptu trajectory tracking,” IEEE Conf. on Decision and Control (CDC), pp. 5201–5207, 2017.

- [21] M. K. Helwa, A. Heins, and A. P. Schoellig, “Provably robust learning-based approach for high-accuracy tracking control of lagrangian systems,” IEEE Robotics and Automation Letters, vol. 4, no. 2, pp. 1587–1594, 2019.

- [22] T. Westenbroek, D. Fridovich-Keil, E. Mazumdar, S. Arora, V. Prabhu, S. S. Sastry, and C. J. Tomlin, “Feedback linearization for unknown systems via reinforcement learning,” arXiv preprint:1910.13272, 2019.

- [23] M.-B. Radac, R.-E. Precup, E.-L. Hedrea, and I.-C. Mituletu, “Data-driven model-free model-reference nonlinear virtual state feedback control from input-output data,” in 26th Mediterranean Conf. on Control and Automation (MED). IEEE, 2018, pp. 1–7.

- [24] J. H. Tu, C. W. Rowley, D. M. Luchtenburg, S. L. Brunton, and J. N. Kutz, “On dynamic mode decomposition: theory and applications,” Journal of Computational Dynamics, 2014.

- [25] M. O. Williams, I. G. Kevrekidis, and C. W. Rowley, “A data–driven approximation of the koopman operator: Extending dynamic mode decomposition,” Journal of Nonlinear Science, vol. 25, no. 6, pp. 1307–1346, 2015.

- [26] A. Chatterjee, “An introduction to the proper orthogonal decomposition,” Current science, pp. 808–817, 2000.

- [27] K. Karydis and M. A. Hsieh, “Uncertainty quantification for small robots using principal orthogonal decomposition,” in Int. Symp. on Experimental Robotics. Springer, 2016, pp. 33–42.

- [28] I. Kevrekidis, C. Rowley, and M. Williams, “A kernel-based method for data-driven koopman spectral analysis,” Journal of Computational Dynamics, vol. 2, no. 2, pp. 247–265, 2015.

- [29] P. Gelß, S. Klus, J. Eisert, and C. Schütte, “Multidimensional approximation of nonlinear dynamical systems,” Journal of Computational and Nonlinear Dynamics, vol. 14, no. 6, p. 061006, 2019.

- [30] I. Abraham and T. D. Murphey, “Active learning of dynamics for data-driven control using koopman operators,” arXiv preprint:1906.05194, 2019.

- [31] S. L. Brunton, B. W. Brunton, J. L. Proctor, and J. N. Kutz, “Koopman invariant subspaces and finite linear representations of nonlinear dynamical systems for control,” PloS one, vol. 11, no. 2, p. e0150171, 2016.

- [32] M. Haseli and J. Cortés, “Approximating the koopman operator using noisy data: noise-resilient extended dynamic mode decomposition,” in American Control Conf. (ACC). IEEE, 2019, pp. 5499–5504.

- [33] J. L. Proctor, S. L. Brunton, and J. N. Kutz, “Dynamic mode decomposition with control,” SIAM Journal on Applied Dynamical Systems, vol. 15, no. 1, pp. 142–161, 2016.

- [34] V. Kumar and N. Michael, “Opportunities and challenges with autonomous micro aerial vehicles,” The International Journal of Robotics Research, vol. 31, no. 11, pp. 1279–1291, 2012.

- [35] N. Michael, D. Mellinger, Q. Lindsey, and V. Kumar, “The grasp multiple micro-uav testbed,” IEEE Robotics & Automation Magazine, vol. 17, no. 3, pp. 56–65, 2010.

- [36] I. Mezić, “Analysis of fluid flows via spectral properties of the koopman operator,” Annual Review of Fluid Mechanics, vol. 45, pp. 357–378, 2013.

- [37] P. J. Schmid, “Dynamic mode decomposition of numerical and experimental data,” Journal of fluid mechanics, vol. 656, pp. 5–28, 2010.

- [38] G. Strang, Introduction to Linear Algebra. Wellesley, MA: Wellesley-Cambridge Press, 2009.

- [39] C. L. Lawson and R. J. Hanson, Solving least squares problems, ser. Classics in Applied Mathematics. Society for Industrial and Applied Mathematics (SIAM), 1995.

- [40] G. H. Golub and C. F. Van Loan, Matrix Computations, 3rd ed. The Johns Hopkins University Press, 1996.

- [41] F. Sabatino, “Quadrotor control: modeling, nonlinear control design, and simulation,” 2015.

- [42] R. Ibarra, S. Florida, W. Rodríguez, G. Romero, D. Lara, and I. Pérez, “Attitude control of a quadrocopter using adaptive control technique,” in Applied Mechanics and Materials, vol. 598. Trans Tech Publ, 2014, pp. 551–556.

- [43] W. Johnson, Rotorcraft Aeromechanics, ser. Cambridge Aerospace Series. Cambridge University Press, 2013.

- [44] X. Kan, J. Thomas, H. Teng, H. G. Tanner, V. Kumar, and K. Karydis, “Analysis of ground effect for small-scale uavs in forward flight,” IEEE Robotics and Automation Letters, vol. 4, no. 4, pp. 3860–3867, 2019.

- [45] S. Aich, C. Ahuja, T. Gupta, and P. Arulmozhivarman, “Analysis of ground effect on multi-rotors,” Int. Conf. on Electronics, Communication and Computational Engineering (ICECCE), pp. 236–241, 2014.

- [46] T. Lee, M. Leok, and N. H. McClamroch, “Geometric tracking control of a quadrotor uav on se(3),” IEEE Conf. on Decision and Control (CDC), pp. 5420–5425, 2010.