A Computer Vision Method for Estimating Velocity from Jumps

Abstract

Athletes routinely undergo fitness evaluations to evaluate their training progress. Typically, these evaluations require a trained professional who utilizes specialized equipment like force plates. For the assessment, athletes perform drop and squat jumps, and key variables are measured, e.g. velocity, flight time, and time to stabilization, to name a few. However, amateur athletes may not have access to professionals or equipment that can provide these assessments. Here, we investigate the feasibility of estimating key variables using video recordings. We focus on jump velocity as a starting point because it is highly correlated with other key variables and is important for determining posture and lower-limb capacity. We find that velocity can be estimated with a high degree of precision across a range of athletes, with an average R-value of 0.71 (SD = 0.06).

1 Introduction

Athletes benefit from feedback from professional evaluations to maximize performance and minimize injury risk. Often, these evaluations require athletes to perform key movements that facilitate evaluation, e.g. drop or squat jumps, with the use of specialized equipment like force plates. However, not all athletes have access to this equipment, nor to trained professionals to help them understand the variables measured by these movements and how they should adjust their training in response. Here, we establish that key variables from these jumps can be estimated using from videos of athletes performing both squat and drop jumps.

We use force plate data as the ground truth for our variables. The accuracy of force plates has been well established. Garcia-Ramos et al. [4] show that the force plate variables like velocity and power are highly reliable when evaluating a countermovement jump for an athlete’s performance. Velocity is an important variable when determining posture [3] or to represent the maximum lower-limb capacity of an athlete [10], which is helpful in creating individual and prioritized training profiles for athletes.

The usefulness of force plates is limited to laboratory settings [6], but it is substantially more feasible to record a video outside of such settings and utilize a computer vision model to estimate these key variables. However, for this to be realistic, the computer vision model has to be sufficiently reliable. Prior work has examined the feasibility of identifying when an athlete incorrectly performs a drop or squat jump [9], but thus far no works have estimated the key variables themselves.

Prior work has also focused on alternate solutions for athlete performance evaluation, useful in the absence of standard force plate data. Johnson et al. [6] provide a method to analyze athlete ground reaction forces and moments (GRF/Ms) in real time using eight marker motion capture trajectories, allowing for a field-based study in identifying early injury risk; they [7] also use the motion capture data to estimate Knee Joint Movements (KJM). Goldacre et al. [5] utilized preprocessed marker motion capture data and compared a Least Squares Estimator method for estimating GRF with a convolutional neural network (CNN) estimator and found the CNN-best method was more reliable and consistent. All of these works showcase the feasibility of estimating a key metric, GRF, but none have considered other essential metrics like velocity. Further, none of these works have attempted to estimate variables using raw video data. Here, we train a custom Swin Transformer architecture to estimate key metrics like velocity using the dataset from Blanchard et al. [1].

We propose an automatic force plate variable estimation tool that uses athlete motion videos — performing evaluative jumps such as countermovement and drop jumps — as input, which can be run on any handy smart device such as a mobile phone. The tool is trained on the videos directly with minimal preprocessing steps, allowing faster inference during its execution. In the absence of a force plate, our method can provide a good approximation of the desired force plate variables, which can further be used to investigate the performance of an athlete.

In summary, this work has several novel contributions:

-

1.

This is the first work to showcase that key variables for assessing athlete performance can be estimated using video alone.

-

2.

We create a custom variant on the Swin Transformer architecture (see Figure 1) and achieve state-of-the-art performance across 86 athletes.

-

3.

Our solution is generalized across two different kinds of jumps: the squat jump and the drop jump. We did not create separate models for each kind of jump.

2 Methods

2.1 Data

Blanchard et al. [1] collected a dataset which consisted of 582 RGB videos of 89 athletes performing multiple countermovement and drop jumps [1]. Each jump was recorded from three different angles: a left, right, and center perspective. Jumps were performed on force plate data, which recorded a multitude of variables associated with the jump (181 for countermovement jumps and 58 for drop jumps). Only 86 athletes’ force plate data (545 videos) were available, so we only utilized this subset of the data.

We divided the dataset into three separate folds. No participant from one fold was present in any other fold. The first two folds included 29 unique participants each, and the third fold included the remaining 28 participants. There were 180, 178, and 161 videos in each fold, respectively.

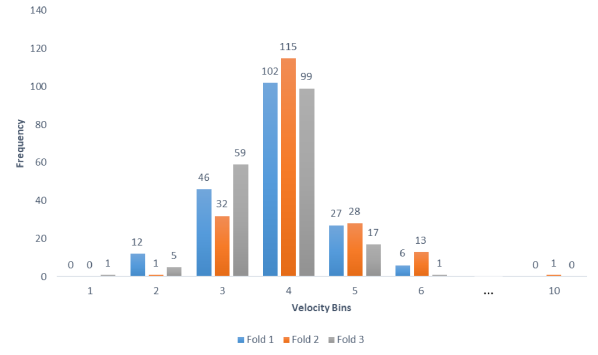

We focused on training a model to estimate concentric peak velocity. Figure 2 displays the distribution of concentric peak velocity (hereafter, velocity) across the three folds of our dataset. In our experiments, models used for evaluation were trained on each individual view, with an additional model trained on the combined input of the three views.

2.2 Model Architectures

Estimating velocity, as well as other metrics from the force plate data, is a regression problem. We experimented with three different methods to evaluate the feasibility of estimating velocity.

Baseline Models We evaluated two different baseline methods for estimating the participant’s velocity using video data in order to measure athletic performance without a force plate setup. First, we used a ResNet-50 architecture to extract features from all video frames. For our first baseline, we trained a support vector machine (SVM) using these features. For our second, we added a regression dense layer to the ResNet-50.

In the first baseline method, we use the SVM regression method for velocity estimation, with the kernel set to ’rbf’, degree to 3, coef0 to 0.0, tol to 0.0001, C to 1.0 and epsilon to 0.01. The second baseline method uses a dense layer for final score estimation.

Swin Transformer Architecture We hypothesized a transformer architecture [2] would be better suited for video data than our baseline methods. We modified the Swin Transformer [8] to extract features from video frames and utilized a dense layer to predict velocity. The first layer of the Swin Transformer divided the image into smaller patches with equal window sizes using the window multi-attention head (W-MSA), as shown in Equation 1. The second layer repeated this, but shifted the starting position using shifted window multi-attention head (SW-MSA), as shown in Equation 2. This change in how window patches are made across layers produced cross-connections that let the model identify the relevant image features. As illustrated in Equations 3 and 4, self attention was conducted in the local window patches before the window patches were sent via a multi-layer perceptron (MLP). Finally, the adjacent patches were combined. The two successive layers were part of a Swin Transformer block; we used four such Swin Transformer blocks to generate the features with respect to each video frame.

| (1) |

| (2) |

| (3) |

| (4) |

where are the output features of window-based multihead self-attention layers () and shifted window-based multihead self-attention layers () for block respectively.

The architecture with the Swin Transformer backbone is as shown in Figure 1. The inputs to the model are video frames from each view with respect to a single participant. Each of the video frames are processed through the Swin Transformer Block [8].

The mean of the frames from each view after flattening these features are calculated. Each view is passed through a dense layer before concatenating them all together. The combined features are then processed through another dense layer to obtain the final features.

2.3 Training and Evaluation Details

Velocity scores followed a Gaussian distribution, with the majority of data ranging between 0.4 and 0.6, as seen in Figure 2. We exploited this distribution during training by using a balanced sampler to create mini batches, thus allowing the model to accurately learn the embedding space of jumps across all velocities.

All of our models were trained on a Titan V GPU with an Intel(R) Xeon(R) E5-1650 v4 processor and 16GB RAM. We used L1 loss for training and kept a fixed learning rate of 1e-3. The models were trained for 100 epochs using the Adam Optimizer.

All models were trained and evaluated following a 3-fold cross validation paradigm. No participants present in the training data were present in the test data.

We evaluated models using two metrics: Pearson’s R and Mean Absolute Error (MAE). All reported results are the mean and standard deviation of each metric across all folds.

3 Results

| Method | MAE () | R () |

|---|---|---|

| Baseline method with SVM | 0.19 ±0.017 | 0.12 ±0.06 |

| Baseline method with Dense Layer | 0.28 ±0.017 | 0.16 ±0.05 |

| Our method (Combined view) | 0.21 ±0.015 | 0.71 ±0.06 |

Table 1 shows the performance of our baseline estimators and our transformer method. Although these methods appear similar when considering only MAE, our transformer-based model performs substantially better when evaluated by Pearson’s R. In Figure 3, we showcase how our estimated velocity compares with the actual velocity for each fold — our models slightly underestimated velocity, but they were extremely consistent with their estimations across folds. The low R values of the baseline methods indicate the estimations from those models were extremely inconsistent.

3.1 Ablation

We investigated how influential each component of the data was for our method’s prediction. Table 2 showcases the results of this experiment — video from the center perspective was more important for accurately estimating velocity, exhibiting an R value 0.21 higher than either the left or right perspective (the left and right perspectives exhibited similar performances). This contrasts the findings of [9], which found the left and right perspectives were more influential for estimating when a jump was performed incorrectly. This indicates that different perspectives may be essential to fully estimating all components of a jump. Further, data from all three perspectives culminated in the best model — combining all perspectives increased the R value by 0.15 over the center view alone.

| Video capture view | MAE () | R () |

|---|---|---|

| Left | 0.16 ±0.016 | 0.35 ±0.06 |

| Right | 0.20 ±0.014 | 0.34 ±0.04 |

| Center | 0.19 ±0.014 | 0.56 ±0.06 |

| Combined View | 0.21 ±0.015 | 0.71 ±0.06 |

4 Conclusion and Future Work

Velocity is a key evaluation metric for evaluating an athlete’s performance. To our knowledge, this is the first work that estimates velocity from RGB video alone. Our novel solution is generalized across participants and two kinds of evaluative jumps: the squat jump and the drop jump. This work also provides insights into what kinds of data are important for estimating key metrics like velocity. In the future, we plan to expand our architecture to estimate a multitude of other essential variables for athlete performance following a multi-task regression paradigm.

References

- [1] Nathaniel Blanchard, Kyle Skinner, Aden Kemp, Walter Scheirer, and Patrick Flynn. “Keep me in, coach!”: A computer vision perspective on assessing ACL injury risk in female athletes. In 2019 IEEE/CVF Winter Conference on Applications of Computer Vision, pages 1366–1374. IEEE, 2019.

- [2] Alexey Dosovitskiy, Lucas Beyer, Alexander Kolesnikov, Dirk Weissenborn, Xiaohua Zhai, Thomas Unterthiner, Mostafa Dehghani, Matthias Minderer, Georg Heigold, Sylvain Gelly, et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv preprint arXiv:2010.11929, 2020.

- [3] Marcos Duarte and Sandra MSF Freitas. Revision of posturography based on force plate for balance evaluation. Brazilian Journal of physical therapy, 14:183–192, 2010.

- [4] Amador García-Ramos, Slobodan Jaric, Alejandro Pérez-Castilla, Paulino Padial, and Belén Feriche. Reliability and magnitude of mechanical variables assessed from unconstrained and constrained loaded countermovement jumps. Sports Biomechanics, 16(4):514–526, 2017.

- [5] Molly Goldacre, Amar El-Sallam, Hannah Wyatt, William Johnson, Jian Liu, Ajmal Mian, and Jacqueline Alderson. Predicting ground reaction forces from 2d video: Bridging the lab to field nexus. ISBS Proceedings Archive, 39(1):9, 2021.

- [6] William R Johnson, Ajmal Mian, Cyril J Donnelly, David Lloyd, and Jacqueline Alderson. Predicting athlete ground reaction forces and moments from motion capture. Medical & biological engineering & computing, 56(10):1781–1792, 2018.

- [7] William R Johnson, Ajmal Mian, David G Lloyd, and Jacqueline A Alderson. On-field player workload exposure and knee injury risk monitoring via deep learning. Journal of biomechanics, 93:185–193, 2019.

- [8] Ze Liu, Yutong Lin, Yue Cao, Han Hu, Yixuan Wei, Zheng Zhang, Stephen Lin, and Baining Guo. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 10012–10022, 2021.

- [9] Chaitanya Roygaga, Dhruva Patil, Michael Boyle, William Pickard, Raoul Reiser, Aparna Bharati, and Nathaniel Blanchard. Ape-v: Athlete performance evaluation using video. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, pages 691–700, 2022.

- [10] Pierre Samozino, P Edouard, S Sangnier, M Brughelli, P Gimenez, and J-B Morin. Force-velocity profile: imbalance determination and effect on lower limb ballistic performance. International journal of sports medicine, 35(06):505–510, 2014.