A Comparison of Reward Functions in Q-Learning Applied to a Cart Position Problem

Abstract

Growing advancements in reinforcement learning has led to advancements in control theory. Reinforcement learning has effectively solved the inverted pendulum problem [1] and more recently the double inverted pendulum problem [2]. In reinforcement learning, our agents learn by interacting with the control system with the goal of maximizing rewards. In this paper, we explore three such reward functions in the cart position problem. This paper concludes that a discontinuous reward function that gives non-zero rewards to agents only if they are within a given distance from the desired position gives the best results.

Keywords: cart position problem, control theory, reinforcement learning, reward function

1 Introduction

Reinforcement Learning (RL) is a branch of Machine Learning that deals with agents that learn from interacting with an environment. This is inspired by the trial-and-error method of learning dealt with in psychology [1]. The goal of any RL problem is to maximize the total reward, and the reward is calculated using a reward function. At any point, the goal of an RL agent is to choose an action that maximizes not only its immediate reward, but also the total reward it expects to get if it follows a certain sequence of actions. The RL algorithm used in this paper is Q-Learning. It is a basic algorithm used in RL, and the math used in it is easy to understand for a reader that does not have much machine learning background. It also comes with the merit that training a Q-Learning algorithm is significantly faster compared to other commonly used RL algorithms.

The cart position problem is a toy problem that will be explored in this paper. The goal is to move a cart from a position to a position . This will be done by adjusting the voltage of the cart at every time step. Currently there exists theoretical input functions that solve the cart position problem. It will be interesting to see how RL compares with these functions.

The objective of this paper is to compare three different reward functions based on the performance of the RL agent. The comparison is done in three ways. First, we see which RL agent reaches steady-state motion the earliest. Second, we see which RL agent has the lowest variability in its steady-state motion. Third, we see which RL agent is the closest to in its steady-state motion.

This paper is organized as follows. In section 2, we describe the cart position problem. In section 3, we present the RL algorithm and the reward functions we intend to use. In section 4, the theoretical solution to the cart position problem is explained. In section 5, we present the results of the RL algorithm and compare them. In section 6, we discuss the applicability of the RL algorithm in real life situations.

2 Cart Position Problem

This paper concerns the control of the position of a cart by a rotary motor. Let be the position of a cart at time and the motor voltage at time . is the gearbox efficiency, the gearbox gear ratio, the motor efficiency, the motor torque constant, the motor pinion radius, the back-EMF constant, the motor armature resistance, the coefficient of viscous friction, and the mass of the cart. These are all constants. The equation for this control system is a second-order differential equation shown in equation 1 below:

| (1) |

Throughout the course of this paper, we can define constants and as:

This simplifies our governing equation to equation 2 below:

| (2) |

Where and . In the absence of any control input , the solution to the differential equation is:

In this model, , so . The steady-state position is . The goal of the input function is to change the steady-state position to a position of our choice.

We can express our governing equation as a system of first order differential equations. Let represent the velocity of the cart. The system of first order equations is expressed below:

Let . This system of first order equations can be expressed in matrix form, as shown below:

We also know that the objective of our control problem is to drive the cart to a particular position. For this reason, we want our output to be the position . To express this in a matrix form involving , this can be written as:

The state-space form of our equation is:

| (3a) | |||

| (3b) |

Where is the state, is the input, is the output. The objective of the cart position problem is to find an input function so that the steady-state value of is . And this will be done using RL.

3 Use of RL in the Cart Position Problem

The initial conditions used in the control system are: . In this paper, we will let . The solution to Equation 3b is computed numerically using Euler’s method with a step-size of s.

At each time step, the RL agent takes the output of the control system, which is the position of the cart, and returns the voltage that should be used as the input to the control system at that time step. The voltage is an integer between and . In order to ensure that the cart does not go too far from , the bounds of has been set to .

The training process involves running samples. Each sample has rounds. When a round starts, the cart is at position . The round ends if the cart goes out of bounds (i.e. ) or time steps have passed since the start of the round. This means the training process of the RL agent involves observing at most time steps.

The objective of the RL agent is to find a sequence of voltage inputs such that the total reward is maximized.

3.1 Q-Learning

The RL algorithm used in this paper is Q-Learning. Q-Learning was first coined by Watkins (1989) in his PhD thesis [3]. It finds an optimal policy where is the state and is the action. This optimal policy maximizes the total reward in the system. can be thought of a table of values for all states and actions.

At first, is initialized to for all . At every time step , the action is determined from the state through an -greedy process. In order to find the best action from , we must first use a random process to figure out the result of the action. When the training of the agent starts, we let . At every time step, we take a random sample from the distribution. If the random sample is greater than , then . Otherwise, is a randomly selected action.

Let be the policy after updates. When is calculated using and , then is updated using the following equation:

| (4) |

Where is the reward, is the learning rate. is a measure of the guess of the future reward, and is a measure of the weight we give to future rewards. and are hyperparameters to this agent. The hyperparameters used in this paper are:

In , the state refers to the position of the cart, and the action refers to the voltage of the cart.

The Q-Learning code used in this paper is taken from Lin’s (2018) GitHub repository. This code was originally used to solve the Blackjack problem using Q-Learning. The equation for updating after every time step according to Lin’s code is given by the equation below [4]:

| (5) |

Where is the number of time steps used to train the agent.

3.2 Reward Functions

As shown previously, reward functions are used to update the policy function at each time step with the intention that the RL agent maximizes the total reward. This paper will explore three reward functions. The first reward function takes the negative of the square of the distance between and with the intention that the RL agent will be rewarded better if it is closer to .

| (6) |

The second reward function is piece-wise and is always positive. This function is linear instead of quadratic.

| (7) |

The third reward function is discontinuous. It only rewards a if the cart is a distance of less than unit away from and it rewards a if the cart is a distance of less than units away from .

| (8) |

To reproduce our results, we provided the code used for this problem [7].

4 Theoretical solution

Consider the following voltage function:

| (9) |

Where . Substituting the voltage function into equation 2 gives us the following:

| (10) |

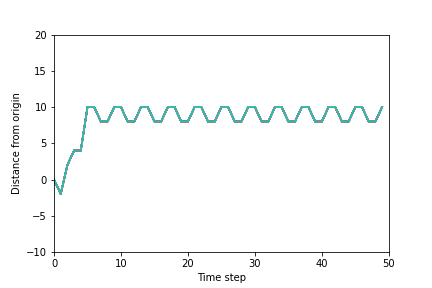

This equation gives us a solution whose steady-state position is [6]. The proof is given in Appendix A. Numerical simulations of Equation 10 have been done using Euler’s method with and . The plot of the two trajectories are given below:

With , the position of the cart is within after time steps (or seconds). With , the position of the cart is within after time steps (or seconds).

This theoretical solution will be compared to the solution derived from Q-Learning.

5 Results of Q-Learning

In this section, we train three RL agents using each of the reward functions provided in section 3.2. We then compare the results of each of our RL agents.

5.1 Reward Function 1

The first reward function takes the negative of the square of the distance between and with the intention that the RL agent will be rewarded better if it is closer to , as shown in Equation 6. Figure 3 below shows how the average reward varies with each sample. The average reward is calculated by taking the mean of the total reward of each of the trajectories in the sample.

It is clear that, after training for samples, the training is complete and the average reward is approximately . Figure 4 shows the trajectory of each cart in sample 1. These carts follow a random motion. All trajectories of carts in later samples will be compared to this plot.

In this graph, most of the carts go out of bounds within the first 10 time steps. During the first sample, the value of used in the -greedy algorithm is almost , which is why the voltage applied at each time step is picked randomly. Thus, the trajectory that each cart takes is random.

At samples to , the average reward plot in Figure 3 shows an increasing trend. Figure 5 shows the trajectory of each cart at sample .

In this plot, clearly the RL agent has learned that the cart needs to move towards during the start of the round. The trajectories are less likely to move out of bounds. Several trajectories appear to move back and forth . It shows a significant improvement compared to Figure 4.

Figure 6 shows the trajectory of each cart in sample .

This graph shows that the cart reaches a steady-state motion after time steps (or seconds). The position alters between and and the voltage alters between and . The total reward in this trajectory is , which is significantly high compared to the average rewards in the first few samples shown in Figure 3.

The steady-state position is within of . If at , then the cart is expected to drift past , which is why is the best action to take here.

5.2 Reward Function 2

The second function gives a positive reward only if the position of the cart is in the range , as shown in Equation 7. Contrary to reward function 1 and 3, the step-size used here is s because it leads to better results, and the number of time steps in a round is still . Figure 7 below shows how the average reward varies with each sample.

It is clear that, after training for samples, each trajectory of the cart has a total reward of . Figure 8 shows the trajectory of each cart in sample 1. These carts follow a random motion. All trajectories of carts in later samples will be compared to this plot. The distance from origin is the value at time and the time step is .

In this graph, most of the carts go out of bounds within the first 40 time steps. This makes sense since the time step size used here is half the step size used for the first reward function. For this reason, the trajectories shown in this graph are different from the trajectories shown in Figure 4. The trajectory that each cart takes is random because the voltage applied at each time step is picked randomly.

At samples to , the average reward in Figure 7 shows an increasing trend. Figure 9 shows the trajectory of each cart in sample 25.

In this plot, it is clear the cart has learned to move towards during the start of the round. Several trajectories appear to stay near and the cart is less likely to go out of bounds. It shows a significant improvement compared to Figure 8.

Figure 10 shows the trajectory of each cart in sample 100.

This graph shows that the cart reaches a steady-state motion after time steps (or seconds). The validation plot is the same as this plot. The position alters between , and and the voltage alters between , and . The total reward in this trajectory is , which is significantly high compared to the average rewards in the first few samples shown in Figure 7.

However, the variation in the position during the steady-state motion is too big. The steady-state position is within of . And is not in the range of the positions in the steady-state motion. This is because the agent has learned that a positive reward comes if the cart is in the range . Thus, there is less of an incentive to stay closer to since the current trajectory already significantly maximizes the total reward. This shows that the second reward function is not as reliable as the first reward function.

5.3 Reward Function 3

As a measure of ensuring that the variability of the steady-state position is minimized, the third reward function gives a positive reward only if the position of the cart is in the range , as shown in Equation 8. Figure 11 shows how the average reward varies with each sample.

It is clear that, after training for samples, each trajectory of the cart has a total reward of . Figure 12 shows the trajectory of each cart in sample 1. These carts follow a random motion. All trajectories of carts in later samples will be compared to this plot.

In this graph, most of the carts go out of bounds within the first 10 time steps. This graph shows similar trends to Figure 4. The direction each cart goes at the start of the round is random as the voltage applied at each time step is picked randomly.

At samples to , the average reward in Figure 11 shows an increasing trend. Figure 13 shows the trajectory of each cart in sample .

In this plot, it is clear that the agent has learned to move the cart towards during the start of the round. Trajectories are less likely to go out of bounds. In some trajectories, the cart moves back and forth the interval multiple times in order to increase its total reward.

Figure 14 shows the trajectory of each cart in sample 100.

This graph shows that the cart reaches exactly after time steps ( seconds) and stays there. The validation plot is the same as this plot. The steady-state motion has no variability compared to the first and second reward function. On the other hand, a drawback of using this reward function is that training this agent takes more samples compared to training RL agents that use the first or second reward function, as shown in Figure 11.

We first compare which RL agent reaches steady-state motion the earliest. For the first and second reward function, the Q-Learning agents take equally as long to reach steady-state motion (s). The Q-Learning agent using the third reward function reaches steady-state motion the quickest (s).

We then compare which RL agent has the lowest variability in its steady-state motion. In the first reward function, the steady-state motion alternates between positions and , thus having a width of . In the second reward function, the steady-state motion alternates between positions and , thus having a width of . In the third reward function, the steady-state motion just takes values of , thus having a width of . This shows that, while the first reward function leads to a smaller width compared to the second reward function, the third reward function has the smallest width.

Lastly, we compare which RL agent is closest to in its steady-state motion. Clearly, the third reward function performs the best since it’s steady state position is exactly . And the second reward function performs the worst since is not in its set of steady-state positions.

Thus, the third reward function gives the best results.

6 Discussion

While the previous section discussed the steady-state behaviour of the Q-Learning agent for each of the reward functions, this section will discuss the practicality of Q-Learning agents on real carts based on the results of the third reward function.

We first compare the trajectory of the Q-Learning agent shown in Figure 14 with the trajectories of the theoretical solutions shown in Figure 1 and 2. It is clear that the Q-Learning agent shows better performance compared to the theoretical solutions as the Q-Learning agent reached steady-state motion within seconds and the theoretical solutions reached a position within of within seconds and seconds.

6.1 Applicability of Q-Learning on real carts

In this paper, the cart position problem is thought of as a toy problem where Q-Learning may be useful. Suppose a real cart follows the model shown in Equation 1 and its goal is to get from a position to . This means the position is updated continuously as opposed to time steps shown in Euler’s method. In Q-Learning, the policy function picks actions based on trial-and-error. The set of states in are finite and discrete. Thus, Q-Learning is impractical on a real cart where the position can be any real number. In this situation, the theoretical solution is more effective as it adjusts the voltage based on a continuous set of positions.

Having a discrete set of actions can also be undesirable in this problem. In the situation with reward function 1 (Figure 6), it is clear that has to alter between and in order to maintain a steady-state motion. If at , then the cart will drift away from since its velocity is non-zero. Thus, further areas of exploration involve adding values of with a smaller magnitude (i.e. ) into the range of actions the Q-learning agent could take.

Further areas of exploration also involve using Deep Q learning. Artificial neural networks (ANNs) are trained by modifying weights rather than entries in a table, thus, ANNs may be more reliable in problems involving continuous states or continuous actions.

Consider the trajectory of the Q-Learning agent shown in Figure 14. Between time and , the velocity of the cart increases from to . This means the acceleration is approximately . In a real cart, this could be dangerously high, given that cars that have an acceleration of approximately to are considered one of the fastest accelerating cars [5]. This shows that the Q-Learning agent is impractical on real carts.

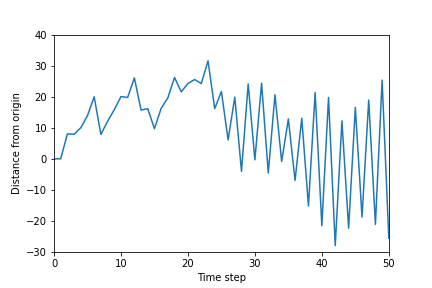

Lastly, in real world carts, there is always an error associated with measuring the mass of the cart, the coefficient of friction, or any of the constants described in Equation 1. This means there can be an error associated with measuring or . Suppose we trained a Q-Learning agent with and we intend to test it on a cart with . Figure 15 shows what the trajectory will look like.

If we use a cart with , we get the trajectory shown in Figure 14. If we use a cart with , we get the trajectory shown in Figure 15. This shows that a small error in the measurement of can lead to a significant change in the trajectory of the cart. This occurs because the set of states in are finite and discrete. This is why a small change in means that the cart will reach positions that are either not in the set of states in , or have a different action associated with it. This again shows that Q-Learning is not reliable for real carts.

7 Conclusion

This paper compared three different reward functions based on the performance of the RL agent if we intend to use Q-learning to solve the cart position problem. In conclusion, a discontinuous reward function that rewards the RL agent only if the position of the cart lies in gives the best results. Through this analysis, we also created a RL agent that outperforms theoretical solutions.

Acknowledgements

The author gratefully acknowledges Jun Liu and Milad Farsi for their continued support, feedback and suggestions on this paper.

Appendix A: Proof of the theoretical solution

Consider the following input function:

Substituting the voltage function into equation 2, gives us the following:

Re-arranging this gives us a non-homogenous second order differential equation:

| (11) |

The goal of this subsection is to find the steady-state position . This involves finding a general solution to equation 11. This will be done by finding the general solution homogenous version of the equation, and by finding a particular solution to the non-homogenous version of the equation, .

A.1: Solve the homogenous equation

Writing this in matrix form with gives the following equation:

The solution to this equation is:

The eigenvalues of are:

Since , this means:

And since , this means:

This shows that is Hurwitz, and by extension:

Thus, the steady-state value of the homogenous equation is: .

A.2: Find a particular solution to the non-homogenous equation

Consider:

Substituting it into equation 2 gives:

Thus, is a particular solution to the non-homogenous equation. The steady-state value of is .

A.3: Steady-state value of equation 11

All steady-state values of the homogenous equation are and the steady-state solution to the particular solution of the non-homogenous equation is . This means the steady-state value of equation 11 is .

This shows that using the input function is effective in moving the cart from position to position .

References

- [1] Sutton RS, Barto AG. (1998) Reinforcement Learning: an Introduction . MIT Press.

- [2] Zheng Y, Luo S,Lv Z. (2006). Control Double Inverted Pendulum by Reinforcement Learning with Double CMAC Network. Proceedings - International Conference on Pattern Recognition. 4. 639 - 642. 10.1109/ICPR.2006.416.

- [3] Watkins, C.J.C.H. (1989), Learning from Delayed Rewards (Ph.D. thesis), Cambridge University

- [4] Lin C (2018), Blackjack–Reinforcement-Learning, GitHub Repository https://github.com/ml874/Blackjack–Reinforcement-Learning

- [5] Autocar (2020), The top fastest-accelerating cars in the world 2021, https://www.autocar.co.uk/car-news/best-cars/top-fastest-accelerating-cars-world

- [6] Farsi, M (2021), Lab 1: Cart Position, AMATH 455/655, University of Waterloo

- [7] Mukherjee, A (2021), Q Learning Cart Problem, GitHub Repository https://github.com/amartyamukherjee/ReinforcementLearningCartPosition